Abstract

Purpose

To evaluate feasibility and impact of evidence-based medicine (EBM) educational prescriptions (EPs) in medical student clerkships.

Methods

Students answered clinical questions during clerkships using EPs, which guide learners through the “four As” of EBM. Epidemiology fellows graded EPs using a rubric. Feasibility was assessed using descriptive statistics and student and fellow end-of-study questionnaires, which also measured impact. In addition, for each EP, students reported patient impact. Impact on EBM skills was assessed by change in EP scores over time and scores on an EBM objective structured clinical exam (OSCE) that were compared to controls from the prior year.

Results

117 students completed 402 EPs evaluated by 24 fellows. Average score was 7.34/9.00 (SD 1.58). 69 students (59%) and 21 fellows (88%) completed questionnaires. Most students thought EPs improved “Acquiring” and “Appraising.” Almost half thought EPs improved “Asking” and “Applying.” Fellows did not value grading EPs. For 18% of EPs, students reported a “change” or “potential change” in treatment. 56% “confirmed” treatment. EP scores increased by 1.27 (95% CI: 0.81–1.72). There were no differences in OSCE scores between cohorts.

Conclusions

Integrating EPs into clerkships is feasible and has impact, yet OSCEs were unchanged, and research fellows had limitations as evaluators.

Keywords: Information technology, evidence-based medicine, medical education

INTRODUCTION

To provide the highest quality care to patients in the ever-changing field of medicine, physicians must be trained in lifelong learning. These skills include the four As to practicing evidence-based medicine (EBM), namely the ability to: 1) Ask answerable clinical questions, 2) Acquire evidence to answer those questions, 3) Appraise evidence, and 4) Apply evidence to patient care (Straus 2005). Studies suggest that although medical students’ self-perceived competence in these areas is high, their actual performance is poor (Caspi 2006). These knowledge gaps have led the Association of American Medical Colleges (AAMC) to explicitly state in their Learning Objectives for Medical Student Education that students must be able to apply the principles of EBM to patient care (2010).

There is no clear evidence about the best method to teach EBM to medical students (Ilic 2014). Studies suggest that one brief training session can improve searching skills (Gruppen 2005), that EBM training embedded in a clerkship can improve EBM skills as measured by self-assessments (Dorsch 2004), and that EBM knowledge and skills can be equally improved with computer- or lecture-based instruction (Davis 2008). But these studies are limited in that they were not able to evaluate all four As of practicing EBM. Instead, they assessed individual components, such as Acquiring or Appraising. These studies as well as other reviews (Oude Rengerink 2013 and Shaneyfelt 2006) also highlight the lack of validated tools to test the four As of practicing EBM. For example, although Shaneyfelt describes two tools that have been assessed for validity in evaluating EBM skills, these tools are not designed for use in the care of actual patients (Shaneyfelt 2006), so they do not allow for the evaluation of all EBM steps, because they do not include an assessment of the application of evidence to patient care.

A model for teaching EBM that was recently proposed suggests integrating formal EBM training across both pre-clerkship and clerkship instruction to optimize the transfer of EBM skills (Maggio 2015). One tool that can enable this, and evaluate all four steps of practicing EBM, is the EBM educational prescription (EP), a novel web-based tool that offers formal EBM training in the context of clinical care by guiding learners through the four steps of EBM to address clinical questions in real time. The EBM EP website (http://ebm.wisc.edu/ep/) and grading criteria were initially designed to evaluate the EBM competency of medical residents, and have been tested for validity as an evaluation tool at multiple residency programs in the US (Feldstein 2009 and 2011). In our study, we evaluate the feasibility of incorporating the web-based EBM EP into medical student clerkships and the impact on student EBM skills and patient care.

METHODS

Overview

At the University of Pennsylvania Perelman School of Medicine, the basic science blocks occupy the first year and a half of the medical school curriculum, and the clinical clerkships occupy the second semester of the second year and the first semester of the third year (January to December). At the time of this study, students’ EBM curriculum included an Introduction to Epidemiology course that provided lectures and small group sessions on study design, bias, confounding, chance, and diagnostic testing, and occurred during the first semester of their first year. They then had a Clinical Decision Making course in the second semester of their first year that provided lectures on EBM, decision analysis, prediction rules, and cost analysis, and small group sessions alongside a year-long series of pathophysiology blocks that occupied the second semester of their first year and the first semester of their second year. In these small group sessions, they practiced their critical appraisal and decision making skills using clinical content from their pathophysiology blocks.

During the intervention period, medical students were required to complete at least one EBM EP per clerkship for clinical questions arising on their Internal Medicine, Family Medicine and Pediatrics clerkship blocks. Prior to performing EPs, students received an information sheet about the EPs and the study from their clerkship directors. The students were also asked to complete an online orientation for the EP.

Graders of the EPs were physician fellows enrolled in the University of Pennsylvania’s Master of Science in Clinical Epidemiology (MSCE) program (2015). Evaluation of EPs was a mandatory part of the MSCE fellows’ first year “Professional Development” curriculum. All second semester first year fellows and first semester second year fellows participated. Prior to their evaluations of the student EPs, the fellows received online training on evaluating EPs, as well as an orientation lecture on the EPs and practicing EBM. Graders also received an information sheet, and introductory and follow-up emails regarding the EP.

The study received expedited approval and a HIPAA waiver from our Institutional Review Board.

EBM Educational Prescription

The EBM EP is a web-based tool that guides learners through the four As of EBM (Supplement 1). We made minor changes (e.g., changing the term “resident” to “learner”) to adapt it for medical students. Learners use the EP to define a clinical question (“Ask”), document a search strategy (“Acquire”), “Appraise” the identified evidence, report the results, and describe the “Application” of the evidence to their particular patient. The EP also contains a question about whether the evidence changed patient care: “Did (performing the EBM EP) change your patient’s management?” The answer choices included “No Effect on Plan”, “Reinforced/Confirmed Plan”, “Would Have Changed Plan if Had Information Earlier”, or “Changed Plan”. Questions about the time required to complete the EP, as well as the usefulness of the EP (from “Extremely Useful” to “Not at All”) and learner satisfaction with the EP process (from “Extremely Satisfied” to “Not at All”) were also included at the end of each EP. The EP website has built-in EBM resources to help learners complete their EPs, including resources to help appraise and interpret studies. Learners can view all of their completed EPs.

The EP grading form is a web-based tool that allows graders to evaluate learners’ EPs (Supplements 2A and 2B). EPs are evaluated in five areas: question formation; searching; evidence appraisal; application to patients; and overall competence. A built-in grading rubric provides anchors for grading, using a scale from 1 (not yet competent) to 9 (superior). The EP grading form has the same built-in resources as the learner pages. Fellows entered the time required to grade each EP and were also asked to provide written feedback to students about their EPs.

Study Population

All medical students at the University of Pennsylvania Perelman School of Medicine who completed their clinical clerkships from January to December 2010 were included in the intervention group. Students who did not complete all core clerkships within the calendar year (e.g. MD-PhD students) were excluded. In addition, all medical students who completed their clerkships from January 2009 to December 2009 were included in the study as historic controls for a comparison of performance on an end-of-year EBM objective structured clinical exam (OSCE). The EBM OSCE directly followed the students’ end-of-year Clinical Skills Inventory evaluation, and took place in the same location. Students had 25 minutes to complete the EBM OSCE. The historic controls completed the EBM OSCE in Spring 2010, while those in the intervention group completed the exam in Spring 2011. The intervention group and historic controls received similar EBM educations during their basic science blocks, except the intervention cohort received an introductory lecture on the EBM EP just prior to their clerkships.

Other Data Sources

Baseline characteristics of the two classes of medical students (2009 and 2010) taking the end-of-year EBM exam, as well as of the fellows, were obtained from administrative databases. Baseline data for the medical students included age, gender, race, participation in a dual degree program, and grades in the clerkships in which they performed EPs. The grades from the three clerkships were averaged to create a “gradepoint average”. Baseline data for the fellows included age, gender, race, graduate degrees, and clinical specialty.

Students and fellows also completed an end-of-year questionnaire through SurveyMonkey (SurveyMonkey Inc., Palo Alto, CA, USA). Questionnaires included quantitative questions based on a Likert scale and free text, and asked about their attitudes toward the EP, barriers to using the EP, impact of the EP on student EBM skills, and suggestions for improvement (Supplements 3 and 4).

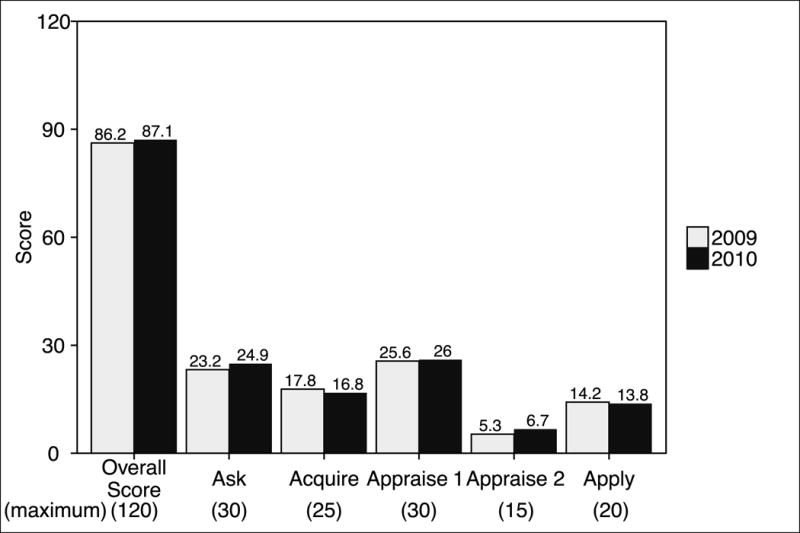

The end-of-year EBM exam was based on a previously validated OSCE (Frohna 2006). The OSCE tested the four As of EBM, and had a maximum score of 120, with 30 points for correctly Asking answerable questions, 25 points for correctly Acquiring evidence, 30 points for correctly Appraising studies (Appraise 1), 15 points for correctly calculating a number needed to treat (Appraise 2), and 20 points for correctly Applying evidence to patient care (Frohna 2006 and Fliegel 2002; Supplement 5). The OSCE and scoring rubric were modified for this study by adding the final 20-point section on applying evidence to patient care. Each EBM OSCE was graded by two independent evaluators. Inter-rater reliability of OSCE scoring was measured using quadratic weighted kappa scores.
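For readers unfamiliar with the statistic, the following is a minimal sketch of how a quadratic weighted kappa for two raters can be computed. It uses the standard textbook definition; the function name, the integer rating scale, and the example ratings are illustrative assumptions and are not taken from the study's data or analysis code.

```python
def quadratic_weighted_kappa(rater_a, rater_b, min_rating, max_rating):
    """Quadratic weighted kappa for two raters scoring the same items.

    Ratings are assumed to be integers in [min_rating, max_rating].
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")
    k = max_rating - min_rating + 1  # number of rating categories
    if k < 2:
        raise ValueError("at least two rating categories are required")
    n = len(rater_a)

    # Observed agreement matrix: rows are rater A, columns are rater B.
    observed = [[0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        observed[a - min_rating][b - min_rating] += 1

    # Marginal totals for each rater.
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(k):
        for j in range(k):
            # Disagreement weight grows with the squared distance between ratings.
            weight = (i - j) ** 2 / (k - 1) ** 2
            expected = hist_a[i] * hist_b[j] / n  # count expected by chance
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * expected

    if weighted_expected == 0:
        # Both raters used one identical category for every item.
        return 1.0
    return 1.0 - weighted_observed / weighted_expected


# Hypothetical ratings on a 1-3 scale (not study data).
same = quadratic_weighted_kappa([1, 2, 3], [1, 2, 3], 1, 3)
opposite = quadratic_weighted_kappa([1, 2, 3], [3, 2, 1], 1, 3)
chance = quadratic_weighted_kappa([1, 1, 2, 2], [1, 2, 1, 2], 1, 2)
```

Values near 1 indicate close agreement, values near 0 indicate agreement no better than chance, and negative values indicate systematic disagreement. The quadratic weights penalize large scoring discrepancies more heavily than near misses.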

Analysis of EP Feasibility, Impact, and Predictive Validity (Table 1)

Table 1.

Types of Outcomes and Specific Measures Evaluated and Data Sources Used

| Outcome Type | Specific Measure | Data Source(s) |
|---|---|---|
| Feasibility | Number of EBM EPs performed | EBM EP |
| | Average time to complete EPs | EBM EP |
| | Number of EBM EPs graded | EBM EP |
| | Average time to grade EPs | EBM EP |
| | EBM EP scores | EBM EP |
| | Student satisfaction | EBM EP, Questionnaire |
| | Fellow satisfaction | EBM EP, Questionnaire |
| Impact | Student reported impact | EBM EP, Questionnaire |
| | Fellow reported impact | Questionnaire |
| | Change in EBM EP score over time | EBM EP |
| | Change in OSCE score | OSCE |
| | Association between number of EPs performed and OSCE scores | EBM EP, OSCE |
| Predictive Validity | Association between EBM EP scores and OSCE scores | EBM EP, OSCE |
| | Association between EBM EP scores and gradepoint averages for clerkships where EP was used | EBM EP, Administrative database |

Abbreviations: EBM, evidence-based medicine; EP, educational prescription; OSCE, objective structured clinical exam.

Feasibility was evaluated by examining the number of EPs performed per student, average self-reported time to complete EPs, average number of EPs rated by graders, average self-reported time to evaluate EPs, and average competency rating for each EP category. Descriptive statistics were also used to analyze student and grader questionnaire responses to understand their attitudes toward the EP, barriers to using the EP, and suggestions for improvement.

Impact of performing EPs was evaluated by examining changes in individual students’ EP competency ratings over time and comparing EBM OSCE scores of the intervention and control cohorts. Changes in students’ competency ratings were estimated using a mixed-model regression controlling for within-person effects. Differences in mean total OSCE scores as well as scores for the individual components of the test were analyzed using t-tests. The association between number of EPs performed and overall OSCE scores was examined, controlling for student performance on their last EP. Descriptive statistics were used to analyze student and grader questionnaire responses about the impact of the EP on student EBM skills and to estimate the percentage of EPs that caused a change in management and the types of changes that occurred.
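The cohort comparison of mean OSCE scores can be illustrated with a two-sample t statistic. The sketch below assumes an equal-variance (pooled) Student's t-test, which the text does not specify. The function name and the scores are hypothetical and are not study data.

```python
import math
from statistics import mean, variance


def pooled_t_statistic(group_a, group_b):
    """Return (t, degrees_of_freedom) for a two-sample pooled-variance t-test."""
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    df = n_a + n_b - 2
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    if pooled_var == 0:
        raise ValueError("t statistic is undefined when both groups have zero variance")
    standard_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (mean(group_a) - mean(group_b)) / standard_error
    return t, df


# Hypothetical OSCE totals for two small cohorts (illustration only).
intervention = [1.0, 2.0, 3.0]
control = [2.0, 3.0, 4.0]
t_value, dof = pooled_t_statistic(intervention, control)
```

The resulting statistic would then be compared against a t distribution with the returned degrees of freedom to obtain a P value.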

Predictive validity of the EP was evaluated by comparing students’ average and most recent EP competency ratings with their EBM OSCE scores and mean gradepoint average across their Internal Medicine, Family Medicine and Pediatrics clerkships.

RESULTS

One hundred seventeen students had 402 EPs evaluated by fellows (mean 3.4 per student). Five students completed 2 EPs during the year, 57 completed 3 EPs, 54 completed 4 EPs, and 1 student completed 5 EPs. Three hundred seventy-seven EPs (93.8%) addressed foreground questions, which traditionally ask for specific information to directly inform a clinical decision, and define the Population, Intervention, Comparator, and Outcome (i.e. the PICO) of interest. Twenty-five EPs (6.2%) addressed background questions, which traditionally ask for general information, such as the pathophysiology of a disorder, or the mechanism of action or dosing of a drug. The majority (300, 81.5%) involved a question about therapy. Thirty-four (9.2%) examined a diagnostic question.
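As a quick consistency check, the reported distribution of EPs per student can be tallied to confirm the totals and the mean. The sketch below uses only the counts stated above; the variable names are illustrative.

```python
# Number of students (value) who completed each number of EPs (key), as reported.
eps_per_student = {2: 5, 3: 57, 4: 54, 5: 1}

n_students = sum(eps_per_student.values())
n_eps = sum(count * students for count, students in eps_per_student.items())
mean_eps = n_eps / n_students

print(n_students, n_eps, round(mean_eps, 1))  # 117 402 3.4
```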

Twenty-four fellows graded the 402 EPs (mean 16.8 EPs per fellow). The specialties of those fellows included: 14 (58.3%) internal medicine subspecialties, 4 (16.7%) pediatrics subspecialties, 2 (8.3%) obstetrics/gynecology, and 1 fellow each (4.2%) from the Departments of Emergency Medicine, Otolaryngology, Pathology, and Neurology. The fellows’ median age was 33, and 12 (50%) were female.

Students’ average overall competency score was 7.34 (standard deviation [SD] 1.58) out of 9. Students took a median of 90 minutes (interquartile range [IQR] 60-120) to complete EPs and fellows took a median of 20 minutes (IQR 15-30) to grade EPs. Students reported that the EP changed the plan, or would have changed it had the information been available sooner, for 18% of EPs, and that it confirmed the plan for 56%. The change in overall competency score over one year was significant at 1.27 points (95% confidence interval [CI]: 0.81 – 1.72).

Sixty-nine students (59%) completed end-of-study questionnaires. Ten students (15%) reported they did not receive adequate instruction on using the EP, 37 (54%) reported “sometimes”, “often” or “always” using the EP in topic presentations to their team, 38 (55%) reported “sometimes”, “often” or “always” receiving helpful feedback from evaluators, and 48 (69%) reported that this feedback was “sometimes”, “often” or “always” timely.

In general, students perceived the EPs to favorably impact their ability to search databases, and find and appraise evidence (Supplementary Figure 1). Approximately 40% thought the EP also improved their ability to ask clinical questions and apply evidence in practice, and viewed the EP as valuable. Students’ most frequently cited barriers to using the EP included time (33%), personal attitude toward EBM (26%), faculty knowledge of EBM (20%), and their own comfort with appraising evidence (19%).

Twenty-one fellows (88%) completed questionnaires. Although most fellows believed students’ EPs improved, and over one third believed that EPs strengthened learners’ EBM skills and were a valuable tool for evaluating those skills, the majority did not believe that evaluating EPs was a valuable experience for them, nor would they recommend that the experience continue as part of their clinical epidemiology training (Supplementary Figure 2). The most commonly cited barrier by fellows (86%) was the time required to grade EPs.

One hundred eighteen students met eligibility criteria for the control cohort and had OSCEs available for grading. There were no meaningful demographic differences between the intervention and control cohorts (Table 2), nor were there differences in mean overall OSCE scores (means of 87.1 and 86.2 out of 120, respectively, P = 0.66) (Appendix 3). There were also no differences in the mean scores on the individual components of the OSCE. The inter-rater reliability of the OSCE graders was excellent, with a quadratic weighted kappa of 0.93.

Table 2.

Demographics of Students Eligible for the Study

| Cohort | 2009 | 2010 |
|---|---|---|
| N | 118 | 117 |
| Median Age (Interquartile Range) | 26 (25-27) | 26 (25-28) |
| Race* | | |
| Non-Hispanic White | 71% | 62% |
| Asian or Pacific Islander | 14% | 15% |
| Non-Hispanic Black | 12% | 9% |
| Hispanic | 3% | 7% |
| Non-Hispanic Other | NA | 9% |
| Female | 53% | 53% |
| Dual Degree | 19% | 19% |
| Median Clerkship Grade (Interquartile Range) | 3.33 (3.33 - 3.67) | 3.33 (3.00 - 3.67) |

*Race was categorized differently in 2010 compared with 2009.

There were no significant associations between number of EPs performed and overall OSCE scores. There were also no significant associations between EP scores and OSCE scores or clerkship “gradepoint averages.”

DISCUSSION

To our knowledge, this is the first study to evaluate the use of a web-based EBM educational prescription integrated into a medical student clerkship. We found that it was feasible to incorporate the EP into clerkships, and students reported impact on patient care and EBM skills. However, scores did not increase on an EBM OSCE for those who used the EP throughout their clerkships, and using fellows who were not involved in the clinical care of patients cared for by the medical students had limitations.

Our findings are similar to those of earlier evaluations of EBM EPs used in the context of Internal Medicine residencies. In a pilot using paper EPs, housestaff reported that the EPs improved their EBM skills, but that time was a barrier to performing EPs (Feldstein 2009). In a later study examining web-based EPs across five Internal Medicine residency sites, housestaff took half the time of our medical students to complete their EPs, yet scored almost a full point lower on average than our students (Feldstein 2011). Housestaff in that study reported an impact of the EPs on their care plans similar to what our medical students reported, except a smaller percentage of the students believed the EP changed the plan, suggesting that students in general may believe they have less decision making authority or impact on the plan compared to housestaff. Examples from EPs in which students noted an impact on the patient’s management include: adding spironolactone to the medication regimen of a patient with systolic dysfunction; discontinuing a proton pump inhibitor (PPI) in a patient with recurrent pneumonia and no clear indication for a PPI; prescribing a high dose statin for secondary stroke prevention; and recommending watchful waiting instead of antibiotics in a child with uncomplicated acute otitis media.

More than 70% of housestaff thought the EP was a valuable experience, which is much higher than our student ratings, and may be related to the perceived impact of the EPs on patient care. Housestaff and student perceptions about barriers were similar, with time and comfort with evidence resources being the most cited, although housestaff perceived time as a greater barrier, and comfort with resources as a lesser barrier, than students did. The findings about barriers have been described elsewhere (Green 2005).

Both students and fellows expressed concern about the approach of the EBM EP curriculum. Although both were supportive of the use of EPs in real time to increase EBM skills and improve patient care, both were concerned that the fellows evaluating the EPs were not involved in the care of patients whom the EPs were targeting. This disconnect between the evaluators and clinical care may have led to delays in evaluator feedback, and lower scores from students when evaluating the impact of the EP on their ability to use evidence in practice, and the impact of the EP on their future approach to patient care. Overall, the fellows did not value the experience, and recommended that it not be continued as part of their training in subsequent years. As a result, the EP curriculum adopted a new approach in calendar year 2012. Students’ EPs were evaluated by clinicians responsible for the medical students’ training during their clerkships, including clerkship directors and teaching attendings. For example, in some clerkships, students presented their completed EPs to their attendings, who used EP score cards with the grading rubric in real time to assess the students’ competency. This approach has consistently received favorable ratings over the years (Appendix 1), and has been adopted by other clinical services.

Our study had limitations. First, we were unable to assess whether the EBM EP curriculum was associated with more long term behaviors of students, including asking more clinical questions, performing more literature searches, or practicing EBM more consistently. Second, the significant improvement in EP scores over time identified by the study may have been the result of maturation bias, rather than the result of performing EPs over time. In addition, because inter-rater reliability was not calculated for the fellows who graded the EPs, and because EPs were graded as they were performed and fellows were not blinded to when during the year the EPs were submitted, fellows may have been biased in their assessment of the EPs, providing higher scores over time despite unchanged performance on the EPs. The availability of a grading rubric to anchor grading likely minimized such bias. Third, the end-of-year EBM exam that we used in this study was based on a previously validated EBM OSCE, but was not validated itself. However, there were no significant differences in the overall exam scores or the scores on the individual domains, even when we examined only those domains that were part of the original EBM OSCE. Finally, the study was conducted in a single medical student class at a single institution, thus the generalizability may be limited. However, the program was implemented in a usual teaching setting during inpatient and outpatient clerkships, which may improve its generalizability. Further studies in other medical schools would be needed to estimate the feasibility and impact of this program with more certainty, and to understand how to best integrate the approach into different teaching programs.

CONCLUSION

Integrating an EBM EP into medical student clerkships is feasible, and students reported impact on patient care and EBM skills. Medical schools might consider this as one method to teach and evaluate EBM as required by the AAMC. These results may also be generalizable to other medical students nationally and internationally, but the program would need to be evaluated in additional settings to better estimate its feasibility and impact.

Supplementary Material

Supplement 1. Screenshot of Learner EBM Educational Prescription with Fields Completed

Supplement 2A. Screenshot of Grader EBM Educational Prescription

Supplement 2B. Screenshot of Grader EBM Educational Prescription with the Rollover Scoring Rubric Visible

Supplement 3. Learner End-of-Study Questionnaire

Supplement 4. Grader End-of-Study Questionnaire

Supplement 5. EBM Objective Structured Clinical Exam (OSCE)

Supplementary Figure 1. Student Responses to End-of-Study Questionnaire

Supplementary Figure 2. Fellow Responses to End-of-Study Questionnaire

FIGURE 1.

Mean Scores on Evidence-based Medicine Objective Structured Clinical Exam

Practice Points.

To provide quality care, physicians must be lifelong learners, trained in the practice of evidence-based medicine (EBM).

No clear evidence exists about how best to teach medical students EBM.

We examined the integration of a web-based EBM educational prescription (EP) into clerkships.

Integrating EPs was feasible and has impact. Research fellows had limitations as EP evaluators.

Acknowledgments

The authors wish to thank web developer Rob Lauer at the University of Wisconsin, as well as our University of Pennsylvania colleagues Justin Bittner, Anna Delaney, Stephanie Dunbar, Jennifer R Kogan, Jennifer Kuklinski, Denise LaMarra, Anne McCarthy, Elizabeth O’Grady, Judy Shea, and Katie Thomas.

Dr. Umscheid’s contribution to this project was supported in part by the National Center for Research Resources, Grant UL1RR024134, which is now at the National Center for Advancing Translational Sciences, Grant UL1TR000003. Dr. Feldstein’s salary was supported by the Clinical and Translational Science Award (CTSA) program, through the NIH National Center for Advancing Translational Sciences, grant UL1TR000427 and grant KL2TR000428. The content of this paper is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

David A. Feldstein is the developer of the EBM Educational Prescription website (http://ebm.wisc.edu/ep/). The copyright is owned by the University of Wisconsin School of Medicine and Public Health and the website is available for use by subscription.

Appendix 1. Student Evaluations of EBM Educational Prescription Activities in the Pediatrics Clerkship, by Calendar Year

| Evaluation item | CY2012 (n=151) | CY2013 (n=151) | CY2014 (n=155) |
|---|---|---|---|
| Rate your learning of how to apply scientific evidence to patient care decisions in this clerkship Mean (standard deviation) (n) | 3.62 (1.09) (n=149) | 3.63 (0.99) (n=151) | 3.42 (0.98) (n=155) |
| Usefulness of the EBM EP system in helping you to formulate your integration of scientific research into clinical care Mean (standard deviation) (n) | 3.36 (1.21) (n=149) | 3.51 (1.04) (n=150) | 3.19 (1.08) (n=154) |
| Helpfulness of clerkship faculty in this process Mean (standard deviation) (n) | 3.60 (1.25) (n=129) | 3.73 (1.05) (n=134) | 3.45 (1.24) (n=140) |
| Helpfulness of clerkship residents in this process Mean (standard deviation) (n) | 3.62 (1.27) (n=102) | 3.78 (0.98) (n=97) | 3.31 (1.17) (n=99) |

Abbreviations: CY, calendar year; EBM, evidence-based medicine; EP, educational prescription.

Ratings are on a scale where 1=poor, 2=fair, 3=good, 4=very good, 5=excellent.

Footnotes

This manuscript has not been previously published and is not under consideration in the same or substantially similar form in any other journal. All those listed as authors are qualified for authorship and all who are qualified to be authors are listed as authors on the byline.

NOTES ON CONTRIBUTORS

Dr. Umscheid directs the Penn Medicine Center for Evidence-based Practice, Philadelphia, PA.

Dr. Maenner is a Fellow, University of Wisconsin, Madison, WI.

Dr. Mull is the Assistant Director of the Penn Medicine Center for Evidence-based Practice, Philadelphia, PA.

Dr. Veesenmeyer is a Fellow, University of Utah, Salt Lake City, Utah.

Dr. Farrar directs Undergraduate Medical Education in Epidemiology, and is Deputy Director of the Master of Science in Clinical Epidemiology Program, University of Pennsylvania, Philadelphia, PA.

Dr. Goldfarb is Associate Dean for Clinical Education, University of Pennsylvania, Philadelphia, PA.

Dr. Morrison is Senior Vice Dean for Education, University of Pennsylvania, Philadelphia, PA.

Dr. Albanese is Emeritus Faculty, University of Wisconsin, Madison, WI.

Dr. Frohna is Director of the Pediatric Residency Program and Vice Chair for Education, University of Wisconsin, Madison, WI.

Dr. Feldstein is Faculty, University of Wisconsin, Madison, WI.

DECLARATIONS OF INTEREST

The authors report no other potential financial conflicts of interest relevant to this article.

ETHICAL APPROVAL

The study received expedited approval and a HIPAA waiver from our Institutional Review Board.

PREVIOUS PRESENTATIONS

This study was previously presented as a poster at the Society of General Internal Medicine Annual Meeting in Orlando, Florida in May 2012, and as an oral presentation at the 52nd Annual Research in Medical Education (RIME) Conference in Philadelphia, PA in November 2013.

References

- Caspi O, McKnight P, Kruse L, Cunningham V, Figueredo AJ, Sechrest L. Evidence-based medicine: discrepancy between perceived competence and actual performance among graduating medical students. Med Teach. 2006;28(4):318–325. doi: 10.1080/01421590600624422.

- Davis J, Crabb S, Rogers E, Zamora J, Khan K. Computer-based teaching is as good as face to face lecture-based teaching of evidence based medicine: a randomized controlled trial. Med Teach. 2008;30(3):302–307. doi: 10.1080/01421590701784349.

- Dorsch JL, Aiyer MK, Meyer LE. Impact of an evidence-based medicine curriculum on medical students’ attitudes and skills. J Med Lib Assoc. 2004;92(4):397–406.

- Feldstein DA, Mead S, Manwell LB. Feasibility of an evidence-based medicine educational prescription. Med Educ. 2009;43:1005–1106. doi: 10.1111/j.1365-2923.2009.03492.x.

- Feldstein D, Maenner M, Allen E, Baumann Kreuziger LM, Kaatz S, Nicholson LJ, Beth Smith ME, Albanese M. A web-based EBM educational prescription to evaluate resident EBM competency. J Gen Int Med. 2011;26(Supplement 1):S136.

- Fliegel JE, Frohna JG, Mangrulkar RS. A computer-based OSCE station to measure competence in evidence-based medicine skills in medical students. Acad Med. 2002;77(11):1157–1158. doi: 10.1097/00001888-200211000-00022.

- Frohna JG, Gruppen LD, Fliegel JE, Mangrulkar RS. Development of an evaluation of medical student competence in evidence-based medicine using a computer-based OSCE station. Teach Learn Med. 2006;18(3):267–272. doi: 10.1207/s15328015tlm1803_13.

- Green ML, Ruff TR. Why do residents fail to answer their clinical questions? A qualitative study of barriers to practicing evidence-based medicine. Acad Med. 2005;80(2):176–182. doi: 10.1097/00001888-200502000-00016.

- Gruppen LD, Rana GK, Arndt TS. A controlled comparison study of the efficacy of training medical students in evidence-based medicine literature searching skills. Acad Med. 2005;80(10):940–944. doi: 10.1097/00001888-200510000-00014.

- Ilic D, Maloney S. Methods to teach medical trainees EBM: a systematic review. Med Educ. 2014;48:124–135. doi: 10.1111/medu.12288.

- Learning Objectives for Medical Student Education: Guidelines for Medical Schools. AAMC Website. Retrieved May 31, 2010. Available from https://services.aamc.org/publications.

- Maggio LA, Cate OT, Irby DM, O’Brien BC. Designing evidence-based medicine training to optimize the transfer of skills from the classroom to clinical practice: applying the four component instructional design model. Acad Med. 2015 doi: 10.1097/ACM.0000000000000769.

- Master of Science in Clinical Epidemiology. Center for Clinical Epidemiology and Biostatistics. University of Pennsylvania Perelman School of Medicine. Retrieved April 29, 2015. Available from http://www.med.upenn.edu/cceb/epi/edu/msce/index.shtml

- Oude Rengerink K, Zwolsman SE, Ubbink DT, Mol BWJ, van Dijk N, Vermeulen H. Tools to assess evidence-based practice behaviour among healthcare professionals. Evid Based Med. 2013;18(4):129–138. doi: 10.1136/eb-2012-100969.

- Shaneyfelt T, Baum KD, Bell D, Feldstein D, Houston TK, Kaatz S, Whelan C, Green M. Instruments for evaluating education in evidence-based practice: a systematic review. JAMA. 2006;296(9):1116–1127. doi: 10.1001/jama.296.9.1116.

- Straus SE, Richardson WS, Glasziou P, Haynes RB. Evidence-based Medicine: How to Practice and Teach EBM. 3rd ed. Elsevier; Philadelphia, USA: 2005.

- SurveyMonkey Website. Retrieved February 20, 2016. Available from www.surveymonkey.com
