Abstract

Objectives

To investigate the association between hospital safety culture and 30‐day risk‐adjusted mortality for Medicare patients with acute myocardial infarction (AMI) in a large, diverse hospital cohort.

Subjects

The final analytic cohort consisted of 19,357 Medicare AMI discharges (MedPAR data) linked to 257 AHRQ Hospital Survey on Patient Safety Culture surveys from 171 hospitals between 2008 and 2013.

Study Design

Observational, cross‐sectional study using hierarchical logistic models to estimate the association between hospital safety culture scores and 30‐day risk‐adjusted patient mortality. We estimated odds ratios of 30‐day all‐cause mortality associated with several types of safety culture scores (composite, average, and overall), adjusting for patient covariates and hospital characteristics (size and teaching status).

Principal Findings

No significant association was found between any measure of hospital safety culture and adjusted AMI mortality.

Conclusions

In a large cross‐sectional study from a diverse hospital cohort, AHRQ safety culture scores were not associated with AMI mortality. Our study adds to a growing body of investigations that have failed to conclusively demonstrate a safety culture–outcome association in health care, at least with widely used national survey instruments.

Keywords: Safety culture, outcomes, mortality

Organizational culture and its association with performance in business and manufacturing have been studied for decades, using a variety of methods, including surveys (Kotter and Heskett 1992; Schein 2010; Waterson 2014). Culture is multidimensional, but one element receiving particular attention is safety culture (Zohar 1980; Guldenmund 2000; Hansen, Williams, and Singer 2011; Waterson 2014), the “knowledge, beliefs, and attitudes that reflect the role of safety in the organization” (Zohar 1980; Hansen, Williams, and Singer 2011). A related concept is safety climate, the “policies, procedures, and practices, which can be more easily measured through workforce perceptions” (Singer et al. 2009a). We employ these two terms interchangeably, although safety culture is more commonly used in current practice (Waterson 2014).

While early research focused on improving safety culture or climate in order to reduce industrial accidents, the concept has since expanded. Many of the same attitudes and behaviors that create a safer environment for workers (management support, communication openness, feedback and nonpunitive responses to error, a continuous learning environment, and teamwork) are also features of high reliability organizations that produce consistently higher quality products and services (Reason 2000; Weick and Sutcliffe 2007; Chassin and Loeb 2013).

Because health care has not achieved the degree of safe, error‐free, and consistent results achieved by other high‐stakes enterprises such as commercial and military aviation and nuclear power (Singer et al. 2010), considerable attention is now focused on the measurement and improvement of safety culture in hospitals. Theoretically, this might identify areas of organizational vulnerability which, if addressed, would improve quality and safety for patients. However, the association of organizational culture with health care safety and quality outcomes has proven difficult to demonstrate, despite evidence of such a relationship from other fields (Zohar 1980, 2000; Reason 1997; Clarke 2006).

We sought to characterize the association of safety culture and patient outcomes using Agency for Healthcare Research and Quality (AHRQ) survey data from a large, diverse sample of US hospitals, and corresponding risk‐adjusted mortality outcomes from these hospitals for elderly patients with acute myocardial infarction (AMI).

Methods

Data Sources

The AHRQ Hospital Survey on Patient Safety Culture (HSOPS; AHRQ 2017) includes 42 items measuring 12 patient safety culture domains, as well as a summary grade for overall patient safety. Westat (Rockville, MD), an AHRQ vendor for HSOPS, distributed our research request to all participating hospitals, seeking permission to use each institution's de‐identified, aggregate HSOPS safety culture data. Ultimately, 264 hospitals granted permission, accounting for 384 surveys between 2008 and 2013. To our knowledge, this is the largest number of hospital‐level AHRQ HSOPS observations that have been available to study the association of safety culture and outcomes. Westat also provided hospital characteristics (teaching status, bed size, and geographic region).

We obtained data from the Medicare Provider Analysis and Review (MedPAR) file, including beneficiary demographic characteristics; principal and secondary diagnoses and procedures; and dates of admission, discharge, and death. Patient enrollment information (e.g., fee‐for‐service and Medicare Part A/B enrollment) was obtained from the CMS Master Beneficiary Summary File.

Using the hospital Medicare provider ID, we merged HSOPS data from the 264 hospitals providing safety culture information with MedPAR patient‐level data for AMI discharges from the same institutions.

Patient Population

We included Medicare beneficiaries age 65 or older discharged with a principal diagnosis of AMI between September 2007 and November 2013 and excluded children's hospitals, government hospitals, rehabilitation and psychiatric hospitals, two women's hospitals that did not provide heart services, and hospitals that could not be matched with MedPAR data using their Medicare Provider ID. For 11 hospitals with multiple sites under the same Medicare Provider ID, we combined HSOPS surveys from the same year from different sites and averaged their composite scores to represent hospital group performance. In most instances, overall and domain‐specific safety culture scores did not vary substantially between different sites under the same Medicare Provider ID, suggesting that there were common behaviors and practices across sites within a system.

In the final study cohort, 257 surveys from 171 hospitals were analyzed. Among these 171 hospitals, 44 administered multiple surveys during the study period, and these multiple surveys were included as separate observations.

We used the survey date as the midpoint and linked each survey to AMI discharges that occurred within 180 days before or after the survey date, a time frame during which neither safety culture nor AMI performance would likely change substantially. For nine hospitals with two overlapping surveys administered less than 12 months apart (range, 6–11 months), we assigned each AMI discharge to the closer survey to avoid double counting.
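The linkage rule can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the `Survey` record, the `link_discharge` function, and the provider IDs are hypothetical, and ties between two equally distant surveys (which the text does not address) are broken here by list order.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional

# Discharges within +/-180 days of a survey date are linked to that survey.
WINDOW_DAYS = 180


@dataclass(frozen=True)
class Survey:
    """Hypothetical survey record: one HSOPS administration at one hospital."""
    survey_id: str
    provider_id: str  # Medicare provider ID used for the merge
    survey_date: date


def link_discharge(provider_id: str, discharge_date: date,
                   surveys: List[Survey]) -> Optional[str]:
    """Return the survey_id a discharge is linked to, or None if no survey
    from the same hospital falls within the window.

    If two overlapping surveys both cover the discharge, the closer survey
    date wins, so each discharge is counted only once.
    """
    candidates = [
        s for s in surveys
        if s.provider_id == provider_id
        and abs((discharge_date - s.survey_date).days) <= WINDOW_DAYS
    ]
    if not candidates:
        return None
    # min() keeps the first candidate on ties (tie handling is an assumption).
    closest = min(candidates,
                  key=lambda s: abs((discharge_date - s.survey_date).days))
    return closest.survey_id


if __name__ == "__main__":
    surveys = [
        Survey("A-2010a", "100001", date(2010, 3, 1)),
        Survey("A-2010b", "100001", date(2010, 11, 1)),  # 8 months later: windows overlap
    ]
    print(link_discharge("100001", date(2010, 6, 1), surveys))   # A-2010a (92 vs 153 days)
    print(link_discharge("100001", date(2010, 8, 15), surveys))  # A-2010b (167 vs 78 days)
```

A discharge on 15 August 2010 lies inside both windows, so it is attributed to the November survey, which is closer.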

We initially matched 34,665 discharges from September 2007 to November 2013 with HSOPS‐participating hospitals. Further exclusions were then applied using criteria derived from the CMS AMI 30‐day mortality models (Yale New Haven Health Services Corporation/Center for Outcomes Research and Evaluation 2013). Using the CMS Master Beneficiary Summary File, we only included patients who were enrolled in Medicare Part A fee‐for‐service for at least 12 months prior to the current admission (for patients discharged in 2007, 8–11 months of continuous enrollment). We excluded patients discharged alive within 1 day after admission and not transferred to another hospital (likely coding errors or misdiagnosis), patients who left against medical advice, and patients enrolled in hospice during the previous 12 months.

Management of transfer patients merits particular attention. CMS assigns transfer patients to the first hospital in a referral chain, even if most of their care was rendered at the second hospital. In contrast, for the purposes of this study we assigned AMI patients to the receiving hospital, where they presumably received most of their care (often PCI or CABG), because the safety culture of this hospital is most likely related to their subsequent outcomes. If safety culture data were unavailable for the receiving hospital, these patients were excluded from the study (N = 1,609). Finally, if a patient had multiple discharges linked to the same survey, we randomly selected one discharge per survey.
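The transfer attribution and de‐duplication steps can be sketched as below, assuming each stay is represented as a simple tuple. The function names, tuple layouts, and fixed random seed are illustrative assumptions, not details reported for the study.

```python
import random
from collections import defaultdict
from typing import Dict, List, Optional, Set, Tuple

# (patient_id, first_hospital, receiving_hospital or None if not transferred)
Stay = Tuple[str, str, Optional[str]]
# (discharge_id, patient_id, survey_id)
LinkedDischarge = Tuple[str, str, str]


def attribute_stays(stays: List[Stay], surveyed: Set[str]) -> List[Tuple[str, str]]:
    """Attribute each stay to the receiving hospital if the patient was
    transferred (unlike CMS, which uses the first hospital), and drop stays
    whose attributed hospital has no safety culture data."""
    kept = []
    for patient_id, first_hosp, receiving_hosp in stays:
        hospital = receiving_hosp if receiving_hosp is not None else first_hosp
        if hospital in surveyed:
            kept.append((patient_id, hospital))
    return kept


def one_discharge_per_survey(discharges: List[LinkedDischarge],
                             seed: int = 0) -> List[str]:
    """Randomly keep one discharge per (patient, survey) pair."""
    rng = random.Random(seed)  # seeded so the selection is reproducible
    groups: Dict[Tuple[str, str], List[str]] = defaultdict(list)
    for discharge_id, patient_id, survey_id in discharges:
        groups[(patient_id, survey_id)].append(discharge_id)
    return sorted(rng.choice(ids) for ids in groups.values())
```

In this sketch, a patient transferred to a hospital without HSOPS data simply drops out, mirroring the exclusion of the 1,609 patients described above.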

Figure S1 provides a flow diagram of our cohort selection process.

Safety Culture Scores

Each HSOPS domain contains three to four items scored on a 5‐point Likert scale (e.g., from “strongly disagree” to “strongly agree”, or from “never” to “always”). The percent positive responses for each item were defined as number of positive responses (e.g., “agree” or “strongly agree” for positively worded questions; “disagree” or “strongly disagree” for negatively worded questions) divided by number of respondents to this item within a hospital. All safety scores were represented as a fraction (range, 0–1). Three different methods were used to characterize safety culture scores in the subsequent analyses. First, the percent positive scores for each item within a domain were averaged to create a composite score for each individual domain. Next, we estimated an average composite score by calculating the mean percent positive scores across the 12 composite domains. Finally, for the overall safety grade (a specific question on the AHRQ survey), the score was the percent of respondents who answered “excellent” or “very good.”
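The three scoring approaches can be illustrated with a short sketch. It assumes responses are coded 1 to 5, with 4 and 5 counted as positive for positively worded items and 1 and 2 counted as positive for negatively worded items; the function names and numeric coding are illustrative assumptions, not the AHRQ scoring software.

```python
from statistics import mean
from typing import Dict, Optional, Sequence

POSITIVE_IF_POS_WORDED = {4, 5}  # e.g., "agree"/"strongly agree"
POSITIVE_IF_NEG_WORDED = {1, 2}  # e.g., "strongly disagree"/"disagree"
POSITIVE_GRADES = {"Excellent", "Very good"}


def item_percent_positive(responses: Sequence[Optional[int]],
                          negatively_worded: bool) -> float:
    """Positive responses divided by respondents to this item (missing excluded)."""
    answered = [r for r in responses if r is not None]
    positive = POSITIVE_IF_NEG_WORDED if negatively_worded else POSITIVE_IF_POS_WORDED
    return sum(r in positive for r in answered) / len(answered)


def domain_composite(item_scores: Sequence[float]) -> float:
    """Composite score: mean percent positive across the items in one domain."""
    return mean(item_scores)


def average_composite(domain_scores: Dict[str, float]) -> float:
    """Average composite: mean of the domain composites (12 in HSOPS)."""
    return mean(domain_scores.values())


def overall_grade_positive(grades: Sequence[str]) -> float:
    """Overall safety grade: share answering "Excellent" or "Very good"."""
    return sum(g in POSITIVE_GRADES for g in grades) / len(grades)
```

For example, a positively worded item answered [5, 4, 3, 2, 1] scores 2/5 = 0.4, a negatively worded item answered [1, 2, 2, 5] scores 3/4 = 0.75, and a domain made of these two items has a composite of 0.575.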

Primary Endpoint and Covariates

The primary endpoint was 30‐day all‐cause mortality, measured from the first day of admission. Patient date of death was obtained from MedPAR files. Patient covariates such as age, gender, medical history, and comorbidities were selected based on published CMS risk‐adjusted 30‐day mortality models, and discharges of the same patient in the preceding 12 months (8–11 months for 2007 discharges) were used to determine whether a specific condition was likely present on admission (e.g., a comorbidity) rather than a complication of hospitalization.

Hospital teaching status and bed size were included as covariates in the multivariable models. Survey year was coded as a continuous variable starting from 0 and was included in the models. We also included a dummy variable that was set to 1 if the hospital had multiple surveys during the study period and 0 otherwise.

Statistical Analyses

Descriptive analyses were performed for patient characteristics. Safety culture percent positive scores were compared with data from the 2011 AHRQ User Comparative Database Report (AHRQ 2011) to determine whether our results, derived from a subset of all hospitals surveyed nationally, differed significantly from overall national data, at least at the midpoint of our study timeframe.

We also compared hospital characteristics among our study hospitals, all CMS hospitals with at least one AMI discharge in 2011, and all hospitals participating in the 2011 AHRQ User Comparative Database Report. We used the 2011 AHA hospital survey to ascertain study hospital characteristics in 2011 (study midpoint); we also linked the 2011 hospital survey to 2011 CMS MedPAR data to determine hospital characteristics for all CMS hospitals with at least one AMI discharge.

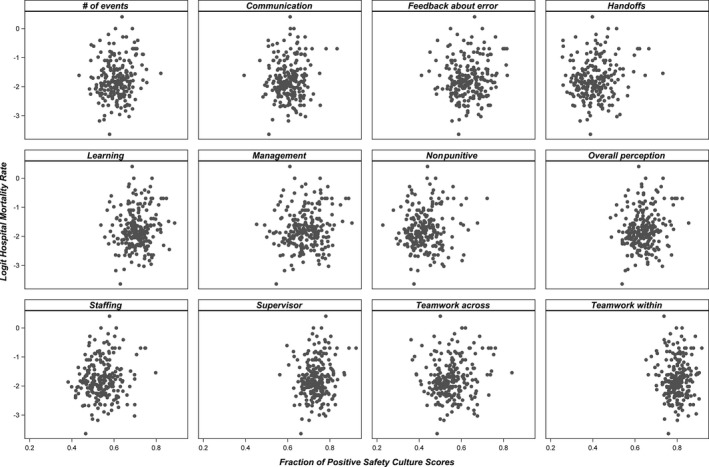

We first estimated each study hospital's unadjusted 30‐day mortality rate and created a scatter plot displaying the association between the logit of each hospital's mortality rate and its positive safety culture scores for the 12 safety domains. Next, hierarchical logistic models with hospital‐specific random effects were used to characterize the association between safety culture and adjusted 30‐day AMI mortality. Because of potential collinearity among the 12 safety culture domains, we performed these analyses using each of the three scoring approaches described above: separate models for each of the 12 domains, the average of the domain scores, and the single overall safety score.
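The logit transformation used for the unadjusted scatter plot is undefined when a survey's mortality rate is exactly 0 or 1, so such surveys cannot be plotted. A minimal sketch of this step follows; the function names and the (score, deaths, discharges) row layout are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple


def logit_mortality(deaths: int, discharges: int) -> Optional[float]:
    """Logit of an unadjusted 30-day mortality rate.

    Returns None when the rate is 0 or 1, because log(p / (1 - p)) is then
    undefined; such surveys cannot be placed on a logit-scale plot.
    """
    if discharges <= 0:
        raise ValueError("discharges must be positive")
    p = deaths / discharges
    if p in (0.0, 1.0):
        return None
    return math.log(p / (1.0 - p))


def scatter_points(rows: Sequence[Tuple[float, int, int]]) -> List[Tuple[float, float]]:
    """rows: (domain score, deaths, discharges) per survey.
    Returns (score, logit mortality) pairs, dropping undefined logits."""
    points = []
    for score, deaths, n in rows:
        y = logit_mortality(deaths, n)
        if y is not None:
            points.append((score, y))
    return points
```

Low‐volume surveys are the ones most likely to have 0 or 100 percent mortality, which is why this exclusion falls mainly on hospitals with only a handful of matched discharges.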

To reduce the number of covariates in the hierarchical model and improve computational efficiency, we developed a summary risk score for each patient. This score was estimated with logistic regression using a population of Medicare AMI patients from 2011 that excluded patients from the hospitals in our main study cohort. Twenty‐seven patient covariates from the CMS AMI 30‐day mortality model were entered into this new model, and coefficients were estimated for each patient variable. These coefficients were then used to calculate a summary score for our study patients, thereby reducing the number of patient‐level variables in our final hierarchical model from 27 to 1. For each patient, we subtracted from their summary score the mean score of all AMI patients treated at the hospital during the time period relevant to a specific survey. To address potential case mix confounding by hospital, we also included a centered hospital summary score (difference between the mean risk of all patients within a survey and the grand mean for the 257 surveys) in the hierarchical models.
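The construction of the summary risk score and the two centered terms can be sketched as below. We assume the summary score is the linear predictor (log‐odds) of the externally fitted logistic model, which is consistent with the reported mean of −2.09 (a log‐odds of −2.09 corresponds to a risk of about 11 percent), and we assume the grand mean is the unweighted mean of the survey‐level means. Function names are hypothetical; in practice the intercept and the 27 coefficients would come from the separate 2011 fit.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Sequence, Tuple


def summary_score(intercept: float, coefs: Sequence[float],
                  covariates: Sequence[float]) -> float:
    """Linear predictor (log-odds) from an externally fitted logistic model."""
    return intercept + sum(b * x for b, x in zip(coefs, covariates))


def center_scores(records: List[Tuple[str, float]]) -> List[Tuple[str, float, float]]:
    """records: (survey_id, patient summary score).

    Returns (survey_id, patient-centered score, hospital-centered score):
    the patient score minus its survey mean, and the survey mean minus the
    grand mean (assumed here to be the mean of the survey means).
    """
    by_survey: Dict[str, List[float]] = defaultdict(list)
    for survey_id, score in records:
        by_survey[survey_id].append(score)
    survey_means = {s: mean(v) for s, v in by_survey.items()}
    grand_mean = mean(survey_means.values())
    return [(s, score - survey_means[s], survey_means[s] - grand_mean)
            for s, score in records]
```

Separating the within‐survey and between‐survey components in this way lets the hierarchical model adjust for individual risk while also accounting for differences in average case mix between surveys.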

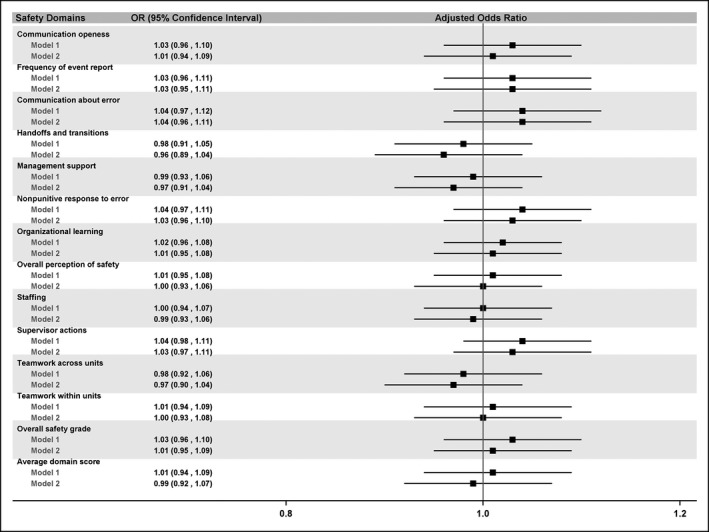

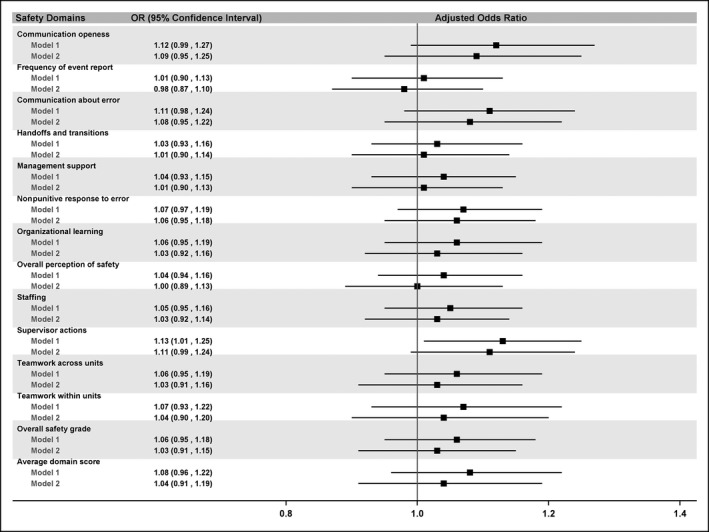

Subsequently, three separate modeling strategies were used. The first set of models included only the centered patient and hospital summary risk scores, the safety culture domain score, year of survey, and an indicator for multiple surveys. The second set of models included all the covariates in the first model plus hospital teaching status and bed size. The final set of models added interaction terms between the safety culture score and hospital characteristics to the second model to test whether the hospital factors moderated the relationship between safety score and mortality. Because our primary interest was the association between the safety culture score and mortality, adjusted for patient risk and other factors, we computed adjusted odds ratios for a one standard deviation increase in safety culture scores across the 257 surveys.
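Rescaling a model coefficient to a one standard deviation contrast is simple arithmetic. If β is the log‐odds coefficient per unit of safety score (scores range 0–1) and SD is the standard deviation of that score across surveys, the odds ratio per SD is exp(β × SD), and the confidence limits scale the same way. The sketch below assumes a Wald interval and the sample standard deviation; the function name is hypothetical.

```python
import math
from statistics import NormalDist, stdev
from typing import Sequence, Tuple


def or_per_sd(beta: float, se: float, scores: Sequence[float],
              level: float = 0.95) -> Tuple[float, float, float]:
    """Odds ratio and Wald CI for a one-SD increase in a safety culture score.

    beta, se: log-odds coefficient and its standard error per 1.0 unit of score.
    scores: the survey-level scores used to compute the SD.
    """
    sd = stdev(scores)  # sample SD across surveys (an assumption)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return (math.exp(beta * sd),
            math.exp((beta - z * se) * sd),
            math.exp((beta + z * se) * sd))
```

As a purely hypothetical illustration, with an SD of about 0.06 (similar to the domain SDs in Table 2), a coefficient of −0.5 per unit of score would correspond to an odds ratio of exp(−0.03), or about 0.97 per SD.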

We also performed various sensitivity analyses: (1) eliminated surveys with fewer than 10 matched discharges in our linked population; (2) excluded surveys with less than 50 percent overall survey response rate; (3) randomly selected one survey if a hospital had multiple surveys in the study; (4) restricted our analyses to hospitals with the highest and lowest 10 percent of safety culture scores, and compared risk‐adjusted AMI mortality between high and low safety score hospitals; and (5) weighted the safety culture scores by survey response rate using logistic regression models.
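Sensitivity subsets (1) through (4) can be built with simple filters on a survey‐level table, as sketched below; analysis (5) is a weighting step and is not shown. The record layout and function names are hypothetical, and the decile cutoff rounding and tie handling are assumptions not specified in the text.

```python
import random
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class SurveyRecord:
    """Hypothetical survey-level record used to build sensitivity subsets."""
    survey_id: str
    hospital_id: str
    n_discharges: int      # matched AMI discharges
    response_rate: float   # fraction, 0-1
    safety_score: float    # percent positive, 0-1


def min_discharges(surveys: List[SurveyRecord], k: int = 10) -> List[SurveyRecord]:
    """(1) Drop surveys with fewer than k matched discharges."""
    return [s for s in surveys if s.n_discharges >= k]


def min_response(surveys: List[SurveyRecord], r: float = 0.5) -> List[SurveyRecord]:
    """(2) Drop surveys with a response rate below r."""
    return [s for s in surveys if s.response_rate >= r]


def one_survey_per_hospital(surveys: List[SurveyRecord],
                            seed: int = 0) -> List[SurveyRecord]:
    """(3) Randomly keep one survey per hospital."""
    rng = random.Random(seed)
    by_hospital: Dict[str, List[SurveyRecord]] = defaultdict(list)
    for s in surveys:
        by_hospital[s.hospital_id].append(s)
    return [rng.choice(group) for group in by_hospital.values()]


def extreme_groups(surveys: List[SurveyRecord], frac: float = 0.10
                   ) -> Tuple[List[SurveyRecord], List[SurveyRecord]]:
    """(4) Lowest and highest `frac` of surveys by safety score."""
    ranked = sorted(surveys, key=lambda s: s.safety_score)
    k = max(1, int(len(ranked) * frac))  # 25 of 257 surveys with frac = 0.10
    return ranked[:k], ranked[-k:]
```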

A forest plot was used to display the adjusted odds ratios (95% confidence intervals) from the analyses of mortality and hospital safety culture scores for AMI discharges.

Most statistical analyses were performed using SAS software, version 9.4 (SAS Institute, Inc., Cary, NC); statistical significance was defined as P < 0.05. PASS (NCSS Statistical Software, LLC, Kaysville, UT) was used for power calculations.

This study was approved by the Partners IRB.

Results

In the final analytic cohort, 19,357 AMI discharges were linked to 257 surveys from 171 hospitals. Among these 257 surveys, 41 (16.0 percent) had fewer than 10 discharges to which they could be linked, but all surveys were included in the primary analysis.

Mean patient age was 79.0 years (SD 8.6), and 52 percent of patients were male. The mean summary score of patient risk factors was −2.09 (SD 0.78). Teaching hospitals contributed 62.4 percent of the discharges, and 64.1 percent of discharges were from hospitals with more than 300 beds. Overall unadjusted 30‐day mortality was 12.2 percent at the patient level (Table 1), and 16 percent averaged across 257 unique hospital‐survey combinations (Table 2).
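The two mortality figures answer different questions. The patient‐level figure pools all deaths over all discharges, whereas the survey‐level figure averages each hospital‐survey combination's own rate with equal weight, so low‐volume surveys, where a single death can produce a very high rate, count as much as high‐volume ones. This is offered as a plausible explanation for the survey‐level mean exceeding the pooled rate, not one stated by the authors; the counts below are hypothetical.

```python
from typing import Sequence, Tuple

# Each pair is (deaths, discharges) for one hospital-survey combination.
Counts = Sequence[Tuple[int, int]]


def pooled_rate(counts: Counts) -> float:
    """Patient-level rate: total deaths / total discharges."""
    deaths = sum(d for d, _ in counts)
    discharges = sum(n for _, n in counts)
    return deaths / discharges


def mean_of_rates(counts: Counts) -> float:
    """Survey-level rate: unweighted mean of each survey's own rate."""
    return sum(d / n for d, n in counts) / len(counts)


if __name__ == "__main__":
    example = [(12, 100), (1, 2)]  # hypothetical: one large and one tiny survey
    print(round(pooled_rate(example), 3))    # 0.127 (13 / 102)
    print(round(mean_of_rates(example), 3))  # 0.31 ((0.12 + 0.5) / 2)
```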

Table 1.

Patient Characteristics (n = 19,357 AMI Discharges)

| Characteristic | N (%) |
|---|---|
| Patient characteristics | |
| Age, years, mean (std) | 79.0 (8.6) |
| Male | 10,099 (52.2%) |
| Cardiovascular history | |
| History of PCI | 2,115 (10.9%) |
| History of CABG | 1,662 (8.6%) |
| Heart failure | 3,691 (19.1%) |
| Myocardial infarction | 2,722 (14.1%) |
| Anterior MI | 1,765 (9.1%) |
| Inferior/lateral/posterior MI | 2,727 (14.1%) |
| Unstable angina | 942 (4.9%) |
| Chronic atherosclerosis | 14,781 (76.4%) |
| Respiratory failure | 1,349 (7.0%) |
| Valvular heart disease | 1,123 (5.8%) |
| Coexisting conditions | |
| Hypertension | 13,455 (69.5%) |
| Stroke | 411 (2.1%) |
| Cerebrovascular disease | 885 (4.6%) |
| Renal failure | 3,283 (17.0%) |
| COPD | 4,027 (20.8%) |
| Pneumonia | 1,627 (8.4%) |
| Diabetes | 6,668 (34.4%) |
| Protein‐calorie malnutrition | 942 (4.9%) |
| Dementia | 1,616 (8.4%) |
| Functional disability | 570 (2.9%) |
| Peripheral vascular | 1,610 (8.3%) |
| Metastatic cancer | 463 (2.4%) |
| Trauma in last year | 918 (4.7%) |
| Major psychiatric disorder | 397 (2.1%) |
| Chronic liver disease | 113 (0.6%) |
| Summary score, mean (std) | −2.09 (0.78) |
| Survey year associated with discharge | |
| 2008 | 2,443 (12.6%) |
| 2009 | 3,215 (16.6%) |
| 2010 | 3,405 (17.6%) |
| 2011 | 389 (2.0%) |
| 2012 | 6,128 (31.7%) |
| 2013 | 3,777 (19.5%) |
| Teaching status | |
| Teaching hospital | 12,084 (62.4%) |
| Nonteaching hospital | 7,273 (37.6%) |
| Bed size | |
| 1: 6–24 beds | 2 (0.01%) |
| 2: 25–49 beds | 125 (0.7%) |
| 3: 50–99 beds | 814 (4.2%) |
| 4: 100–199 beds | 1,757 (9.1%) |
| 5: 200–299 beds | 4,258 (22.0%) |
| 6: 300–399 beds | 2,816 (14.6%) |
| 7: 400–499 beds | 3,505 (18.1%) |
| 8: 500 or more beds | 6,086 (31.4%) |
| 30‐day mortality (patient level) | 2,366 (12.2%) |

Table 2.

Comparison of Hospital Characteristics and Safety Culture Survey Scores among Study Hospitals, Hospitals with at Least One CMS AMI Discharge between 2007 and 2013, and Hospitals in the 2011 AHRQ SOPS User Comparative Database

| | CMS Hospitals with ≥1 AMI Discharge | Hospitals in 2011 AHRQ Database | Study Hospitals |
|---|---|---|---|
| Hospital characteristics | | | |
| No. of hospitals | 3,268 hospitals | 1,032 hospitals | 171 hospitals |
| Data source of characteristics | 2011 AHA survey | AHRQ website | 2011 AHA survey |
| Teaching status | | | |
| Teaching | 815 (24.94%) | 351 (34%) | 57 (33.33%) |
| Nonteaching | 2,453 (75.06%) | 681 (66%) | 114 (66.67%) |
| Bed size | | | |
| 1: 6–24 beds | 75 (2.29%) | 69 (7%) | 1 (0.58%) |
| 2: 25–49 beds | 341 (10.43%) | 163 (16%) | 10 (5.85%) |
| 3: 50–99 beds | 583 (17.84%) | 185 (18%) | 31 (18.13%) |
| 4: 100–199 beds | 925 (28.30%) | 231 (22%) | 36 (21.05%) |
| 5: 200–299 beds | 550 (16.83%) | 170 (16%) | 38 (22.22%) |
| 6: 300–399 beds | 342 (10.47%) | 82 (8%) | 19 (11.11%) |
| 7: 400–499 beds | 181 (5.54%) | 60 (6%) | 16 (9.36%) |
| 8: 500 or more beds | 271 (8.29%) | 72 (7%) | 20 (11.70%) |
| Region | | | |
| 1: New England | 139 (4.25%) | 69 (7%) | 4 (2.34%) |
| 2: Mid‐Atlantic | 369 (11.29%) | 26 (3%) | 20 (11.70%) |
| 3: South Atlantic | 575 (17.59%) | 185 (18%) | 21 (12.28%) |
| 4: Central | 1,523 (46.60%) | 573 (56%) | 108 (63.16%) |
| 5: Mountain | 217 (6.64%) | 73 (7%) | 6 (3.51%) |
| 6: Pacific and associated territories | 445 (13.62%) | 106 (10%) | 12 (7.02%) |
| Safety culture survey | | Mean | Mean (SD, Min, Max) |
| No. of surveys | n/a | 1,032 surveys | 257 surveys |
| Data source | n/a | AHRQ website | Westat data |
| Communication openness | n/a | 0.62 | 0.61 (0.06, 0.39, 0.83) |
| Frequency of events reported | n/a | 0.63 | 0.62 (0.06, 0.43, 0.82) |
| Feedback and communication about error | n/a | 0.64 | 0.64 (0.07, 0.41, 0.82) |
| Handoffs and transitions | n/a | 0.45 | 0.42 (0.08, 0.26, 0.73) |
| Management support for patient safety | n/a | 0.72 | 0.69 (0.08, 0.43, 0.90) |
| Nonpunitive response to error | n/a | 0.44 | 0.43 (0.07, 0.23, 0.72) |
| Organizational learning | n/a | 0.72 | 0.71 (0.06, 0.54, 0.89) |
| Overall perception of patient safety | n/a | 0.66 | 0.65 (0.06, 0.48, 0.85) |
| Staffing | n/a | 0.57 | 0.55 (0.07, 0.38, 0.80) |
| Supervisor actions promoting safety | n/a | 0.75 | 0.74 (0.05, 0.56, 0.92) |
| Teamwork across units | n/a | 0.58 | 0.56 (0.08, 0.36, 0.84) |
| Teamwork within units | n/a | 0.80 | 0.80 (0.05, 0.65, 0.92) |
| Overall Safety Grade | n/a | 0.75 | 0.73 (0.08, 0.52, 0.94) |
| Average domain scores | n/a | 0.63 | 0.62 (0.06, 0.45, 0.82) |
| Average 30‐day mortality rate across 257 unique hospital‐survey combinations, mean (std) | n/a | n/a | 0.16 (0.13) |

Among these 257 surveys, the average number of staff surveyed was 1,984 (min 85, max 28,950), the average number of staff respondents was 834 (min 43, max 7,806), and the average response rate was 54 percent (min 5 percent, max 100 percent). This average response rate was slightly higher than the national average (52 percent) in the 2011 AHRQ HSOPS database; 75 percent of surveys in our sample had response rates greater than 35 percent, and only two surveys had response rates below 10 percent. The highest‐scoring safety domain was teamwork within units (mean 0.80; min 0.65, max 0.92); the lowest‐scoring domain was handoffs and transitions of care (mean 0.42; min 0.26, max 0.73).

The domain scores in our study cohort were generally similar to national averages from the 2011 AHRQ SOPS Comparative Database (Table 2). Compared with all CMS hospitals that had at least one AMI discharge in 2011, and with all hospitals in the 2011 SOPS Comparative Database, our study cohort included proportionally fewer small hospitals (<50 beds) and more hospitals from the Central region.

Preliminary examination of the bivariate associations between positive safety culture domain scores and unadjusted 30‐day mortality (Figure 1) suggested no or weak correlations. After adjusting for patient and hospital factors, no statistically significant relationships were found (Figure 2) between risk‐adjusted 30‐day mortality and any measure of safety culture (individual domain scores, average safety scores across domains, or overall safety score) in any of the three sets of models. Because no interaction term was statistically significant, we only show the results from the first two models described previously.

Figure 1.

- Note: The logit of mortality could not be defined for 23 hospitals (8.9 percent) with no deaths and 2 hospitals (0.8 percent) with 100 percent mortality, and these hospitals/surveys were excluded from the scatter plot. These hospitals had low volume and were matched with only a few eligible discharges (range, 1–12).

Figure 2.

- Note: Model 1 only included the patient and hospital summary scores, safety culture domain score, year of survey, and a multiple survey indicator; model 2 included all the covariates in model 1 plus hospital teaching status and bed size.

When we restricted our analyses to surveys with greater than 50 percent response rates, higher performance on supervisor actions promoting safety was paradoxically associated with increased mortality (OR 1.13; 95% CI, 1.01–1.25). However, when we further adjusted for hospital characteristics and added interaction terms, this association was no longer significant (Figure 3). The other sensitivity analyses similarly revealed no significant associations.

Figure 3.

- Note: Model 1 only included the patient and hospital summary scores, safety culture domain score, year of survey, and a multiple survey indicator; model 2 included all the covariates in model 1 plus hospital teaching status and bed size.

A post hoc power calculation indicated that we had more than 90 percent power to detect an odds ratio of 0.90 (or smaller) associated with a one standard deviation increase in the safety score.
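The order of magnitude of such a calculation can be checked without PASS using a common normal approximation for a single standardized covariate in logistic regression: power ≈ Φ(√(n·p(1 − p))·|ln OR| − z_{1−α/2}), where p is the overall event rate and OR is the odds ratio per SD. Because safety culture is measured at the survey level, the sketch divides n by a design effect. This is not the calculation the authors ran in PASS, and the design effect values used below are assumptions for illustration, not study estimates.

```python
import math
from statistics import NormalDist


def approx_power(n: int, event_rate: float, odds_ratio_per_sd: float,
                 alpha: float = 0.05, design_effect: float = 1.0) -> float:
    """Approximate two-sided power to detect a given odds ratio per SD of a
    standardized covariate in logistic regression (normal approximation).

    design_effect deflates n to an effective sample size to reflect
    clustering of patients within surveys (its value is an assumption).
    """
    nd = NormalDist()
    n_eff = n / design_effect
    z_alpha = nd.inv_cdf(1 - alpha / 2)
    log_or = abs(math.log(odds_ratio_per_sd))
    z = math.sqrt(n_eff * event_rate * (1 - event_rate)) * log_or - z_alpha
    return nd.cdf(z)
```

With n = 19,357 and p = 0.122, this approximation gives power above 0.99 for an OR of 0.90 with no design effect and roughly 0.92 with an assumed design effect of 2; larger assumed design effects lower it further.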

Discussion

The Potential Impact of Safety Culture in Health Care

Health care experiences higher rates of adverse safety events compared with most high‐reliability industries and professions, and consequently, patients may not achieve their optimal quality outcomes. There is substantial interest in studying those attitudes, structures, and processes that may facilitate improvements in this critical area, including the role played by safety culture (Zohar 1980; Gaba 2000; Singer et al. 2010; Hansen, Williams, and Singer 2011; Chassin and Loeb 2013; Waterson 2014).

In other industries, safety culture has correlated with worker safety, satisfaction, and stress, and with some objective outcomes such as industrial accidents. In a meta‐analysis of 32 studies, mostly outside of health care, Clarke (2006) found that safety culture correlated with better compliance and staff engagement; the correlation of better safety culture with reduced accident rates was weaker and was observed mostly in prospective studies. If the safety culture of a health care institution were conclusively shown to affect not just worker safety but patient outcomes, then the results of safety culture surveys would allow hospitals to assess areas of strength and weakness, and to implement initiatives that would improve both safety culture and the quality of care delivered to patients (Halligan and Zecevic 2011; Morello et al. 2013; Singer and Vogus 2013). Survey results would also provide additional information to patients and the general public seeking care at “safer” institutions.

Health Care Safety Culture Surveys

Standardized national safety culture surveys are the major tools used by health care institutions to assess safety culture and climate. Numerous instruments have been developed including the AHRQ HSOPS; the Safety Attitudes Questionnaire (Sexton et al. 2006); and the Patient Safety Culture in Healthcare Organizations Survey (Singer et al. 2009b). These surveys are used to measure existing safety culture institutionally and within specific units and role groups, to benchmark results against national data, and to focus subsequent improvement initiatives.

The Association of Safety Culture Scores and Patient Outcomes

Given the potential implications of safety culture survey results, considerable research has been devoted to investigating associations between health care safety culture and objective indicators of staff and patient safety. Results have been mixed despite a variety of endpoints studied, including patient experience of care (Hofmann and Mark 2006; Sorra et al. 2012); nursing sensitive indicators (Brown and Wolosin 2013; DiCuccio 2015); complications of bariatric surgery (Birkmeyer et al. 2013); AHRQ Patient Safety Indicators (Singer et al. 2009a; Mardon et al. 2010; Rosen et al. 2010); treatment errors (Katz‐Navon, Naveh, and Stern 2005); 30‐day readmissions after hospitalizations for acute myocardial infarction, heart failure, or pneumonia (Hansen, Williams, and Singer 2011); surgical site infections after colon surgery (Fan et al. 2016); ICU mortality, medication errors, and length of stay (Pronovost et al. 2005; Huang et al. 2010); use of safe practices (Zohar et al. 2007); medication errors (Hofmann and Mark 2006; Vogus and Sutcliffe 2007; Zohar et al. 2007); urinary tract infections (Hofmann and Mark 2006); surgical morbidity and mortality among hospitals participating in the National Surgical Quality Improvement Program (Davenport et al. 2007); staff‐reported adverse events (Smits et al. 2012); adverse events identified using the Global Trigger Tool (Farup 2015; Najjar et al. 2015); adherence to care guidelines in primary care teams (Hann et al. 2007); staff satisfaction and turnover (MacDavitt, Chou, and Stone 2007); and nurse back injuries (Hofmann and Mark 2006). In aggregate, current evidence regarding the association between health care safety culture and patient outcomes is modest, inconsistent, and sometimes even counterintuitive (Scott et al. 2003; Hoff et al. 2004; Weingart et al. 2004; MacDavitt, Chou, and Stone 2007). 
In a 2011 review of 23 studies, the Health Foundation (2011) found “limited evidence” to support a simplistic, linear association between culture and outcomes; there was stronger evidence for linkages with staff outcomes than with patient outcomes.

Numerous explanations have been invoked to explain the seemingly weak and inconsistent associations between safety culture and patient outcomes. For example, the determination of relevant adverse outcomes can be problematic. Staff‐reported adverse events may not accurately or completely capture all relevant safety outcomes, and some errors resulting from weak safety culture may have no impact on outcomes (Hoff et al. 2004). Also, high event rates could characterize hospitals with poor safety cultures, or they might conversely indicate a strong and transparent safety culture where event reporting is encouraged (Sorra and Dyer 2010). Certain endpoints used in some studies are especially problematic, such as the AHRQ Patient Safety Indicators, or PSIs (Rosen et al. 2012; Rajaram, Barnard, and Bilimoria 2015). The apparent association between safety culture and outcomes also varies by work area and discipline, and it appears stronger when assessed by frontline staff, whose evaluation of culture may be more realistic than that of higher level managers and leaders (Singer et al. 2009b; Rosen et al. 2010; Hansen, Williams, and Singer 2011). Some studies describe response strength, characterized by the consistency of scores across different respondents and role groups, which is thought to reflect the institutional pervasiveness of safety culture. All these variations in study design may help explain the inconsistent findings in many culture–outcomes studies.

Specific methodological concerns with culture–outcomes studies include inadequately adjusted aggregate hospital results (Mardon et al. 2010; Fan et al. 2016), quantitative versus qualitative methodologies (Mannion, Davies, and Marshall 2005), insufficient sample size and power, measurement error, and lack of generalizability when studies are conducted at just one or two hospitals. Culture–outcomes associations may exist, but their impact may be obscured by unmeasured confounders, patient complexity, and external forces such as production pressure (Smits et al. 2012). Finally, because the impact of safety climate on safety motivation, participation, and accidents may be lagged by up to several years, simultaneous measurement of safety culture and outcomes may not be optimal (Neal and Griffin 2006). Most studies, including ours, are cross‐sectional in design, with only a few documenting the longitudinal association of positive changes in safety culture with concomitant improvements in objective outcomes (Timmel et al. 2010; Berry et al. 2016).

AMI‐Focused Studies of Safety Culture, Adverse Event Rates, and Outcomes

Although our study focused on the widely used, generic AHRQ HSOPS survey instrument, several safety culture studies targeting AMI outcomes have been performed using customized survey tools. Bradley and colleagues conducted two surveys (Bradley et al. 2012, 2014), in 2010 and 2013, administered to more than 500 hospitals, to identify strategies for improving AMI care and to determine the associations between these hospital strategies and risk‐adjusted AMI mortality rates. In the original survey (Bradley et al. 2012), most of the survey items were AMI‐focused and related to structural organization at the hospital level (e.g., CPOE, computer alerts and prompts, assignment of case managers, on‐site cardiology coverage, and the roles of pharmacists and ED physicians), and several culture‐related questions were included. Regarding the latter, in univariate analyses all four AMI communication and coordination questions, and several questions on problem solving for AMI care, were significantly associated with risk‐adjusted mortality. In multivariable analyses, among the culture variables most similar to the AHRQ HSOPS survey, regular meetings with EMS providers (which arguably reflect coordination and communication) and creative problem solving were statistically significant. In their longitudinal follow‐up study based on 2013 data (Bradley et al. 2014), three of the four organizational culture questions had lower scores in 2013 compared with 2010, as did the question regarding regular meetings with EMS providers. These studies do, however, raise the question of whether associations between culture and outcomes might exist for specific conditions but not be captured by more generic surveys such as AHRQ HSOPS.

Adverse event rates might also serve as an indicator of hospital safety culture. Wang and colleagues (Wang et al. 2016) used 2009–2013 data from 793 acute care hospitals to study the association between 21 adverse event rates (e.g., central‐line‐associated bloodstream infection, catheter‐associated urinary tract infection, pressure ulcers, Clostridium difficile infection, medication‐related events, ventilator‐associated pneumonia) and risk‐adjusted AMI mortality rates. A 1‐percentage‐point change in the risk‐standardized adverse event rate was associated with an average change in the mortality rate of 4.86 percentage points. The authors consider several potential explanations for this association, one of which is that adverse event rates reflect underlying hospital safety culture, which in turn may affect care for AMI and other conditions. Given this possible link between adverse events, safety culture, and outcomes, as well as the findings of Bradley and colleagues (Bradley et al. 2012, 2014), generic safety culture measures such as the AHRQ HSOPS scores we studied may not adequately capture this latent construct.

Contributions of the Current Study

Our study addresses many of the methodological limitations of previous investigations. First, we used a large national sample of hospitals, to our knowledge the largest in the published literature using the AHRQ HSOPS instrument. Access to these AHRQ results required each hospital's individual consent before release to us. All geographic regions, hospital sizes, and levels of teaching intensity are represented, and the distributions of most characteristics are reasonably similar to those of the overall Medicare hospital population and of the hospitals participating in the AHRQ national survey. We had access to patient‐level AMI data from these hospitals, as submitted to the federal government. Our power calculations showed that our sample sizes were adequate to detect meaningful associations.

Second, we used a risk‐adjusted endpoint (30‐day AMI mortality) based on peer‐reviewed, published methodologies, which has been a core component of the Hospital Compare program since its inception. AMI patients are seen with reasonable frequency at most institutions, and mortality rates are high enough to yield an adequate number of outcome events. AMI patients often require complex care in multiple hospital settings, receive numerous medications, and are cared for by many different providers, including attending and resident physicians, nurses, and therapists. Thus, there are ample opportunities for various domains of safety culture to affect patient survival, including teamwork within and between units, staffing, and handoffs and transitions. If safety culture has a major impact on outcomes, it seems reasonable to expect that impact to be reflected in mortality rates for this index condition.

Third, we explored a variety of safety culture measures (individual domain, average domain score, and overall safety score) and performed sensitivity analyses, including the addition of potential hospital confounders. Despite these varied analyses, we could not demonstrate an association between any individual domain, average, or overall safety culture score and patient‐level, risk‐adjusted mortality for AMI.

Limitations

In addition to its cross‐sectional rather than longitudinal design, as discussed above, another limitation of our study is the measurement of safety culture at the hospital level. Safety culture varies across units within hospitals (Singer et al. 2009b), so it is possible that our aggregate scores missed important within‐hospital differences, especially in units where AMI patients were cared for. However, attributing AMI care to specific units would be difficult as the care location of such patients varies across hospitals. Sample sizes for both surveys and mortality outcomes are also small at the unit level, which would make analyses problematic (Waterson 2014). Because we could not attribute care to specific units within the hospital, we had no option but to use the overall hospital safety culture scores.

There is currently no consistent approach to survey administration across hospitals (e.g., timing and duration of the survey period, numbers and roles of staff surveyed). These hospital‐level sampling differences are not well documented but may impact aggregate results (Waterson 2014).

Our study focused on only one patient outcome, mortality. Safety culture could be associated with other outcomes, or with staff satisfaction, as demonstrated in some other studies (MacDavitt, Chou, and Stone 2007; Timmel et al. 2010; The Health Foundation 2011; Daugherty Biddison et al. 2016). These other outcomes were beyond the scope of our study. It is also possible that safety culture is in fact related to outcomes, but that the AHRQ survey does not adequately or accurately capture the underlying safety culture.

Our study spanned 6 years, during which mortality declined secularly; however, each safety culture survey was linked to AMI mortality data from the same period. Furthermore, results in our own study cohort were relatively stable (results available upon request).

For 11 hospitals with multiple sites but one Medicare Provider Number, we averaged scores across sites, as we assumed common approaches to safety across these systems. Some error could be introduced by this assumption. However, we performed a sensitivity analysis excluding discharges from hospitals with multiple sites, and no significant changes were found in any results.
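The site‐averaging step and the accompanying sensitivity analysis can be sketched as follows. This is a minimal illustration under stated assumptions, not our analysis code: it assumes an unweighted mean of site‐level scores within each Medicare Provider Number (the text does not specify any weighting), and the record layout, provider numbers, site labels, and scores are all hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical site-level record: (Medicare Provider Number, site label, safety score).
# Layout and values are illustrative only; they are not study data.
SiteScore = tuple[str, str, float]


def provider_scores(records: list[SiteScore]) -> dict[str, float]:
    """Average site-level scores within each provider number (unweighted mean, an assumption)."""
    by_provider: dict[str, list[float]] = defaultdict(list)
    for provider, _site, score in records:
        by_provider[provider].append(score)
    return {provider: mean(scores) for provider, scores in by_provider.items()}


def single_site_providers(records: list[SiteScore]) -> set[str]:
    """Provider numbers with exactly one site; keeping only these mimics a
    sensitivity analysis that excludes multi-site hospitals."""
    sites: dict[str, set[str]] = defaultdict(set)
    for provider, site, _score in records:
        sites[provider].add(site)
    return {provider for provider, s in sites.items() if len(s) == 1}


records = [
    ("P001", "main", 3.8),
    ("P002", "north", 3.5),
    ("P002", "south", 4.0),
]
print(provider_scores(records))        # {'P001': 3.8, 'P002': 3.75}
print(single_site_providers(records))  # {'P001'}
```

An unweighted mean treats every site equally regardless of how many staff responded there; weighting by respondent counts would be a reasonable alternative, but the source does not say which approach was used.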

Because of the number of statistical tests performed, multiple comparisons, which inflate the chance of false‐positive findings, are a theoretical concern. However, we found no significant associations except for one domain in one sensitivity analysis (in which some patient and hospital characteristics of the sensitivity population differed substantially from the rest of the original population), which suggests that this was not a practical problem. Our primary interest was the association between safety culture scores and outcomes; the sensitivity analyses were of secondary importance.
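To illustrate why a single nominally significant result among many tests carries little weight, the sketch below applies the Holm step‐down correction to a set of p‐values. The text does not report applying this correction; the 12 p‐values are hypothetical, chosen only to mirror the pattern of one nominal hit among otherwise null results, and the family‐wise error figure assumes independent tests.

```python
def holm_reject(p_values: list[float], alpha: float = 0.05) -> list[bool]:
    """Holm step-down procedure: flag which tests remain significant while
    controlling the family-wise error rate at `alpha`."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest p first
    reject = [False] * m
    for rank, i in enumerate(order):
        # The k-th smallest p-value (k = rank + 1) is compared with alpha / (m - k + 1).
        if p_values[i] <= alpha / (m - rank):
            reject[i] = True
        else:
            break  # stop at the first non-rejection; all larger p-values are retained
    return reject


# Hypothetical p-values for 12 tests: one below 0.05, the rest clearly null.
p = [0.03, 0.21, 0.35, 0.48, 0.52, 0.61, 0.66, 0.72, 0.80, 0.88, 0.91, 0.97]

nominal_hits = sum(x < 0.05 for x in p)
# Chance of at least one false positive across 12 independent tests at alpha = 0.05.
fwer_uncorrected = 1 - 0.95 ** len(p)

print(nominal_hits)                # 1
print(round(fwer_uncorrected, 2))  # 0.46
print(any(holm_reject(p)))         # False
```

Under these assumptions, 12 uncorrected tests carry roughly a coin‐flip chance of at least one false positive. After Holm adjustment, the smallest hypothetical p‐value (0.03) exceeds its threshold of 0.05/12 ≈ 0.0042, so no test is retained.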

As noted above, our study focused on the association between AMI outcomes and scores from the commonly used, generic AHRQ safety culture survey instrument. More AMI‐focused survey instruments (Bradley et al. 2012, 2014) and other proxy indicators of safety culture such as adverse event rates (Wang et al. 2016) have demonstrated associations not found in our study.

Finally, our safety culture data were obtained from a subset of hospitals that voluntarily agreed to release their results for our study. Although generally comparable to national data overall, these self‐selected hospitals may be more safety conscious and have higher safety scores or lower mortality rates than other US hospitals, which could make significant associations harder to detect. This self‐selection could also limit the generalizability of our findings. Nevertheless, our analyses included the largest and most diverse group of hospitals of any similar study of which we are aware.

Conclusion

Based on safety culture survey data from a large, geographically diverse sample of hospitals, and using CMS risk models for AMI mortality, our analyses could not detect any association between safety culture and 30‐day risk‐adjusted mortality. These findings are relevant because hospitals devote considerable time, effort, and money to administering surveys, analyzing the results, communicating them to staff, and designing programs to address low‐scoring areas, all in an effort to improve safety. If the culture–outcome association is not robust, these resources might be better used in other ways.

It is possible that our fundamental understanding of the culture–outcomes association is flawed or inadequate. Rather than being simple, unidirectional, and causal, these associations may be complex and bidirectional; processes and performance may sometimes shape culture rather than the converse (The Health Foundation 2011). It is also conceivable that health care safety culture, as currently measured, has no significant association with patient outcomes, but that an association would emerge if other survey instruments, safety culture indicators, or outcomes were used. Additional research is needed to determine whether continued reliance on existing, widely used safety culture surveys to guide safety improvement efforts is justified.

Supporting information

Appendix SA1: Author Matrix.

Figure S1: Inclusion Exclusion Flow Chart.

Acknowledgments

Joint Acknowledgment/Disclosure Statement: This project was internally funded (MGH Center for Quality and Safety).

Disclosure: None.

Disclaimer: None.

References

- AHRQ. 2011. “2011 User Comparative Database Report: Hospital Survey on Patient Safety Culture” [accessed on April 12, 2011]. Available at http://www.ahrq.gov/professionals/quality-patient-safety/patientsafetyculture/hospital/2011/index.html

- AHRQ. 2017. “AHRQ Hospital Survey on Patient Safety Culture” [accessed on April 12, 2017]. Available at https://www.ahrq.gov/professionals/quality-patient-safety/patientsafetyculture/hospital/index.html

- Berry, J. C., Davis J. T., Bartman T., Hafer C. C., Lieb L. M., Khan N., and Brilli R. J. 2016. “Improved Safety Culture and Teamwork Climate Are Associated with Decreases in Patient Harm and Hospital Mortality across a Hospital System.” Journal of Patient Safety [epub ahead of print]. http://doi.org/10.1097/PTS.0000000000000251

- Birkmeyer, N. J., Finks J. F., Greenberg C. K., McVeigh A., English W. J., Carlin A., Hawasli A., Share D., and Birkmeyer J. D. 2013. “Safety Culture and Complications after Bariatric Surgery.” Annals of Surgery 257 (2): 260–5.

- Bradley, E. H., Curry L. A., Spatz E. S., Herrin J., Cherlin E. J., Curtis J. P., Thompson J. W., Ting H. H., Wang Y., and Krumholz H. M. 2012. “Hospital Strategies for Reducing Risk‐Standardized Mortality Rates in Acute Myocardial Infarction.” Annals of Internal Medicine 156 (9): 618–26.

- Bradley, E. H., Sipsma H., Brewster A. L., Krumholz H. M., and Curry L. 2014. “Strategies to Reduce Hospital 30‐day Risk‐Standardized Mortality Rates for Patients with Acute Myocardial Infarction: A Cross‐Sectional and Longitudinal Survey.” BMC Cardiovascular Disorders 14: 126.

- Brown, D. S., and Wolosin R. 2013. “Safety Culture Relationships with Hospital Nursing Sensitive Metrics.” Journal for Healthcare Quality 35 (4): 61–74.

- Chassin, M. R., and Loeb J. M. 2013. “High‐Reliability Health Care: Getting There from Here.” Milbank Quarterly 91 (3): 459–90.

- Clarke, S. 2006. “The Relationship between Safety Climate and Safety Performance: A Meta‐Analytic Review.” Journal of Occupational Health Psychology 11 (4): 315–27.

- Daugherty Biddison, E. L., Paine L., Murakami P., Herzke C., and Weaver S. J. 2016. “Associations between Safety Culture and Employee Engagement over Time: A Retrospective Analysis.” BMJ Quality & Safety 25 (1): 31–7.

- Davenport, D. L., Henderson W. G., Mosca C. L., Khuri S. F., and Mentzer R. M. Jr. 2007. “Risk‐Adjusted Morbidity in Teaching Hospitals Correlates with Reported Levels of Communication and Collaboration on Surgical Teams but Not with Scale Measures of Teamwork Climate, Safety Climate, or Working Conditions.” Journal of the American College of Surgeons 205 (6): 778–84.

- DiCuccio, M. H. 2015. “The Relationship between Patient Safety Culture and Patient Outcomes: A Systematic Review.” Journal of Patient Safety 11 (3): 135–42.

- Fan, C. J., Pawlik T. M., Daniels T., Vernon N., Banks K., Westby P., Wick E. C., Sexton J. B., and Makary M. A. 2016. “Association of Safety Culture with Surgical Site Infection Outcomes.” Journal of the American College of Surgeons 222 (2): 122–8.

- Farup, P. G. 2015. “Are Measurements of Patient Safety Culture and Adverse Events Valid and Reliable? Results from a Cross Sectional Study.” BMC Health Services Research 15: 186.

- Gaba, D. M. 2000. “Structural and Organizational Issues in Patient Safety: A Comparison of Health Care to Other High‐Hazard Industries.” California Management Review 43 (1): 83–102.

- Guldenmund, F. 2000. “The Nature of Safety Culture: A Review of Theory and Research.” Safety Science 34: 215–57.

- Halligan, M., and Zecevic A. 2011. “Safety Culture in Healthcare: A Review of Concepts, Dimensions, Measures and Progress.” BMJ Quality & Safety 20 (4): 338–43.

- Hann, M., Bower P., Campbell S., Marshall M., and Reeves D. 2007. “The Association between Culture, Climate and Quality of Care in Primary Health Care Teams.” Family Practice 24 (4): 323–9.

- Hansen, L. O., Williams M. V., and Singer S. J. 2011. “Perceptions of Hospital Safety Climate and Incidence of Readmission.” Health Services Research 46 (2): 596–616.

- The Health Foundation. 2011. “Evidence Scan: Does Improving Safety Culture Affect Patient Outcomes?” [accessed on May 3, 2017]. Available at http://www.health.org.uk/sites/health/files/DoesImprovingSafetyCultureAffectPatientOutcomes.pdf

- Hoff, T., Jameson L., Hannan E., and Flink E. 2004. “A Review of the Literature Examining Linkages between Organizational Factors, Medical Errors, and Patient Safety.” Medical Care Research and Review: MCRR 61 (1): 3–37.

- Hofmann, D. A., and Mark B. 2006. “An Investigation of the Relationship between Safety Climate and Medication Errors as Well as Other Nurse and Patient Outcomes.” Personnel Psychology 59 (4): 847–69.

- Huang, D. T., Clermont G., Kong L., Weissfeld L. A., Sexton J. B., Rowan K. M., and Angus D. C. 2010. “Intensive Care Unit Safety Culture and Outcomes: A US Multicenter Study.” International Journal for Quality in Health Care 22 (3): 151–61.

- Katz‐Navon, T., Naveh E., and Stern Z. 2005. “Safety Climate in Health Care Organizations: A Multidimensional Approach.” Academy of Management Journal 48 (6): 1075–89.

- Kotter, J., and Heskett J. 1992. Corporate Culture and Performance. New York: The Free Press.

- MacDavitt, K., Chou S. S., and Stone P. W. 2007. “Organizational Climate and Health Care Outcomes.” Joint Commission Journal on Quality and Patient Safety 33 (11 suppl): 45–56.

- Mannion, R., Davies H. T., and Marshall M. N. 2005. “Cultural Characteristics of ‘High’ and ‘Low’ Performing Hospitals.” Journal of Health Organization and Management 19 (6): 431–9.

- Mardon, R. E., Khanna K., Sorra J., Dyer N., and Famolaro T. 2010. “Exploring Relationships between Hospital Patient Safety Culture and Adverse Events.” Journal of Patient Safety 6 (4): 226–32.

- Morello, R. T., Lowthian J. A., Barker A. L., McGinnes R., Dunt D., and Brand C. 2013. “Strategies for Improving Patient Safety Culture in Hospitals: A Systematic Review.” BMJ Quality & Safety 22 (1): 11–8.

- Najjar, S., Nafouri N., Vanhaecht K., and Euwema M. 2015. “The Relationship between Patient Safety Culture and Adverse Events: A Study in Palestinian Hospitals.” Safety in Health 1 (16): 1–9. http://doi.org/10.1186/s40886-015-0008-z

- Neal, A., and Griffin M. A. 2006. “A Study of the Lagged Relationships among Safety Climate, Safety Motivation, Safety Behavior, and Accidents at the Individual and Group Levels.” Journal of Applied Psychology 91 (4): 946–53.

- Pronovost, P., Weast B., Rosenstein B., Sexton J. B., Holzmueller C. G., Paine L., Davis R., and Rubin H. R. 2005. “Implementing and Validating a Comprehensive Unit‐Based Safety Program.” Journal of Patient Safety 1 (1): 33–40.

- Rajaram, R., Barnard C., and Bilimoria K. Y. 2015. “Concerns about Using the Patient Safety Indicator‐90 Composite in Pay‐for‐Performance Programs.” Journal of the American Medical Association 313 (9): 897–8.

- Reason, J. T. 1997. Managing the Risks of Organizational Accidents. Burlington, VT: Ashgate.

- Reason, J. 2000. “Human Error: Models and Management.” British Medical Journal 320 (7237): 768–70.

- Rosen, A. K., Singer S., Shibei Z., Shokeen P., Meterko M., and Gaba D. 2010. “Hospital Safety Climate and Safety Outcomes: Is There a Relationship in the VA?” Medical Care Research and Review: MCRR 67 (5): 590–608.

- Rosen, A. K., Itani K. M., Cevasco M., Kaafarani H. M., Hanchate A., Shin M., Shwartz M., Loveland S., Chen Q., and Borzecki A. 2012. “Validating the Patient Safety Indicators in the Veterans Health Administration: Do They Accurately Identify True Safety Events?” Medical Care 50 (1): 74–85.

- Schein, E. H. 2010. Organizational Culture and Leadership. San Francisco: Jossey‐Bass.

- Scott, T., Mannion R., Marshall M., and Davies H. 2003. “Does Organisational Culture Influence Health Care Performance? A Review of the Evidence.” Journal of Health Services Research & Policy 8 (2): 105–17.

- Sexton, J. B., Helmreich R. L., Neilands T. B., Rowan K., Vella K., Boyden J., Roberts P. R., and Thomas E. J. 2006. “The Safety Attitudes Questionnaire: Psychometric Properties, Benchmarking Data, and Emerging Research.” BMC Health Services Research 6: 44.

- Singer, S., Lin S., Falwell A., Gaba D., and Baker L. 2009a. “Relationship of Safety Climate and Safety Performance in Hospitals.” Health Services Research 44 (2 Pt 1): 399–421.

- Singer, S. J., Gaba D. M., Falwell A., Lin S., Hayes J., and Baker L. 2009b. “Patient Safety Climate in 92 US Hospitals: Differences by Work Area and Discipline.” Medical Care 47 (1): 23–31.

- Singer, S. J., Rosen A., Zhao S., Ciavarelli A. P., and Gaba D. M. 2010. “Comparing Safety Climate in Naval Aviation and Hospitals: Implications for Improving Patient Safety.” Health Care Management Review 35 (2): 134–46.

- Singer, S. J., and Vogus T. J. 2013. “Reducing Hospital Errors: Interventions That Build Safety Culture.” Annual Review of Public Health 34: 373–96.

- Smits, M., Wagner C., Spreeuwenberg P., Timmermans D. R., van der Wal G., and Groenewegen P. P. 2012. “The Role of Patient Safety Culture in the Causation of Unintended Events in Hospitals.” Journal of Clinical Nursing 21 (23–24): 3392–401.

- Sorra, J. S., and Dyer N. 2010. “Multilevel Psychometric Properties of the AHRQ Hospital Survey on Patient Safety Culture.” BMC Health Services Research 10: 199.

- Sorra, J., Khanna K., Dyer N., Mardon R., and Famolaro T. 2012. “Exploring Relationships between Patient Safety Culture and Patients’ Assessments of Hospital Care.” Journal of Patient Safety 8 (3): 131–9.

- Timmel, J., Kent P. S., Holzmueller C. G., Paine L., Schulick R. D., and Pronovost P. J. 2010. “Impact of the Comprehensive Unit‐Based Safety Program (CUSP) on Safety Culture in a Surgical Inpatient Unit.” Joint Commission Journal on Quality and Patient Safety 36 (6): 252–60.

- Vogus, T. J., and Sutcliffe K. M. 2007. “The Impact of Safety Organizing, Trusted Leadership, and Care Pathways on Reported Medication Errors in Hospital Nursing Units.” Medical Care 45 (10): 997–1002.

- Wang, Y., Eldridge N., Metersky M. L., Sonnenfeld N., Fine J. M., Pandolfi M. M., Eckenrode S., Bakullari A., Galusha D. H., Jaser L., Verzier N. R., Nuti S. V., Hunt D., Normand S. L., and Krumholz H. M. 2016. “Association between Hospital Performance on Patient Safety and 30‐Day Mortality and Unplanned Readmission for Medicare Fee‐for‐Service Patients with Acute Myocardial Infarction.” Journal of the American Heart Association 5 (7). http://doi.org/10.1161/JAHA.116.003731

- Waterson, P. 2014. Patient Safety Culture: Theory, Methods and Application. Surrey, England: Ashgate.

- Weick, K., and Sutcliffe K. M. 2007. Managing the Unexpected: Resilient Performance in an Age of Uncertainty. San Francisco: John Wiley & Sons.

- Weingart, S. N., Farbstein K., Davis R. B., and Phillips R. S. 2004. “Using a Multihospital Survey to Examine the Safety Culture.” Joint Commission Journal on Quality and Safety 30 (3): 125–32.

- Yale New Haven Health Services Corporation/Center for Outcomes Research and Evaluation. 2013. “2013 Measures Updates and Specifications: Acute Myocardial Infarction, Heart Failure, and Pneumonia 30‐Day Risk‐Standardized Mortality Measure (Version 7.0): Yale New Haven Health Services Corporation/Center for Outcomes Research and Evaluation (YNHHSC/CORE)” [accessed on April 12, 2013]. Available at http://sepas.srhs.com/wp-content/uploads/2014/01/Mort-AMI-HF-PN_MeasUpdtRept_v7.pdf

- Zohar, D. 1980. “Safety Climate in Industrial Organizations: Theoretical and Applied Implications.” Journal of Applied Psychology 65 (1): 96–102.

- Zohar, D. 2000. “A Group‐Level Model of Safety Climate: Testing the Effect of Group Climate on Microaccidents in Manufacturing Jobs.” Journal of Applied Psychology 85 (4): 587–96.

- Zohar, D., Livne Y., Tenne‐Gazit O., Admi H., and Donchin Y. 2007. “Healthcare Climate: A Framework for Measuring and Improving Patient Safety.” Critical Care Medicine 35 (5): 1312–7.
