Abstract

Research suggests that infants progress from discrimination to recognition of emotions in faces during the first half year of life. It is unknown whether the perception of emotions from bodies develops in a similar manner. In the current study, when presented with happy and angry body videos and voices, 5-month-olds looked longer at the matching video when they were presented upright but not when they were inverted. In contrast, 3.5-month-olds failed to match even with upright videos. Thus, 5-month-olds but not 3.5-month-olds exhibited evidence of recognition of emotions from bodies by demonstrating intermodal matching. In a subsequent experiment, younger infants did discriminate between body emotion videos but failed to exhibit an inversion effect, suggesting that discrimination may be based on low-level stimulus features. These results document a developmental change from discrimination based on non-emotional information at 3.5 months to recognition of body emotions at 5 months. This pattern of development is similar to face emotion knowledge development and suggests that both the face and body emotion perception systems develop rapidly during the first half year of life.

Keywords: Emotion recognition, Intermodal emotion perception, Body emotion, Infant emotion perception, Body knowledge development

Both faces and bodies convey emotional information. For survival purposes, being able to glean emotional information from bodies may be as important as, or more important than, gathering that information from faces (de Gelder, 2006); for example, one may be able to discern cues that signal a threat in the environment from a greater distance in bodies than in faces. Moreover, adults are more accurate in recognizing peak emotions from bodies than from faces (Aviezer, Trope, & Todorov, 2012a). However, most research on emotion perception in infancy and beyond has been limited to facial expressions. Therefore, the purpose of the current study was to examine the nature of the development of body emotion knowledge. Specifically, we examined recognition of body emotions as assessed by intermodal matching of emotional bodies to vocal emotions and discrimination between emotional body movements during the first half year of life.

The theoretical framework for this research is the model of emotion knowledge development proposed by Walker-Andrews (1997). According to this model, there are several levels of emotion knowledge in infancy, with more sophisticated abilities building upon the more basic levels and coming online later in development. First is the ability to simply detect or sense emotional information in the environment (e.g., can the infant physically see or hear the stimulus?). The next level of development involves the capacity to discriminate among emotional stimuli (e.g., can the infant detect differences between two body postures or vocalizations?). Finally, there is the “recognition” of emotional information, which requires that the infant be able to interpret emotional expressions and exhibit at least some level of understanding of the underlying affect (Walker-Andrews, 1997; 1998). According to Walker-Andrews (1997; also see Walker, 1982), intermodal matching is a reflection of emotion recognition. This is because, in order to match emotional expressions in physically different modalities (e.g., in faces and in voices), infants must recognize at least to some extent that the information portrayed in these modalities depicts the same underlying affect.

Prior research suggests that the development of emotion processing from faces is consistent with the model proposed by Walker-Andrews (1997) described above. In particular, infants around 3-4 months of age appear to be able to discriminate among facial emotional expressions and vocal emotional expressions by around 5-7 months (Flom & Bahrick, 2007), and it takes longer (5-7 months) before infants begin to exhibit evidence of facial emotion recognition by demonstrating intermodal matching (Vaillant-Molina, Bahrick, & Flom, 2013; Walker-Andrews, 1997; 1998). The current study examined whether this model also applies to the development of body emotion knowledge. It is important to study the nature of development of sensitivity to body emotions because, as reviewed briefly below, body and face emotions work in concert in adulthood, and in some cases, emotional information is more accurately derived from bodies than from faces. Moreover, some researchers have argued that knowledge about bodies is slower to develop than knowledge about faces (Slaughter, Heron-Delaney, & Christie, 2012), while others have suggested a similar trajectory of development in both cases (Bhatt, Hock, White, Jubran, & Galati, 2016; Marshall & Meltzoff, 2015; Meltzoff, 2011). The analysis of the development of body emotion knowledge will provide a contrast between these approaches by examining whether the development of sensitivity to body emotions is similar to that of face emotion knowledge.

Children’s and adults’ perception of body emotions

Although still lagging behind research conducted on facial expressions, adults’ and children’s perception of body emotions has been measured in a variety of tasks, encompassing both behavioral and physiological measures (for reviews, see de Gelder, 2006; de Gelder, de Borst, & Watson, 2015; for select studies on children, see Geangu, Quadrelli, Conte, Croci, & Turati, 2016; Peterson, Slaughter, & Brownell, 2015; Tuminello & Davidson, 2011).

Several studies have found that, like facial expressions, body emotion is highly recognizable for adults (Atkinson, Dittrich, Gemmell, & Young, 2004; de Gelder & Van den Stock, 2011) and even preschool-aged children (Nelson & Russell, 2011; Ross, Polson, & Grosbras, 2012). However, adult-like emotion knowledge, including emotion recognition, may not fully develop until adolescence or even later (Ross et al., 2012). For example, Ross and colleagues (2012) found that even older teenagers (16-17 years) were significantly less accurate than adults when identifying body emotions (happy, sad, scared, angry) with blurred faces in a forced-choice task.

Several studies have also investigated how information from other sources (e.g., faces, voices) influences perception of body emotion. For example, Stienen, Tanaka, and de Gelder (2011) found that adults’ emotion categorization of body expressions in a masking paradigm was influenced by concurrently presented vocalizations that were either emotionally congruent or incongruent to the body and vice versa. Similarly, Aviezer, Trope, and Todorov (2012a; 2012b) found that when faces and bodies both express emotions, adults’ perception of the facial emotion is often strongly influenced by the body emotion. Moreover, discrimination among peak emotions is more accurate from isolated bodies than from faces (Aviezer et al., 2012a).

Taken together, these studies suggest that body emotion works with other sources of emotion in an integrated manner and is at least as important as other sources of emotion information (and perhaps more important in some instances; see Aviezer et al., 2012a). However, the way in which this skill develops and the time course of its development are still unclear. Therefore, it is important to further investigate this ability, as it is clearly an influential source of socioemotional information.

Infants’ perception of body emotions

Based on the Walker-Andrews (1997) model described previously, two of the early markers of emotion perception relate to the ability to detect and discriminate between emotions in the environment. One set of studies examining infants’ detection and discrimination of body emotion using neurological methods comes from Missana and colleagues. When 4- and 8-month-olds viewed happy and fearful body expressions as dynamic, point-light displays (Missana, Atkinson, & Grossmann, 2015; Missana & Grossmann, 2015), only 8-month-olds exhibited significant differences in their Pc (late-latency component) response to happy and fearful bodies, and the magnitude of their Pb (early-latency component) response was larger when the displays were inverted (Missana et al., 2015). Likewise, upright happy and fearful displays elicited differential electroencephalogram (EEG) patterns only in 8-month-olds (Missana & Grossmann, 2015). Finally, 8-month-olds exhibited significantly more negative N290 and Nc responses to static, full-light displays of upright fearful bodies compared to happy bodies, but not when the bodies were inverted (Missana, Rajhans, Atkinson, & Grossmann, 2014). Taken together, these studies by Missana and colleagues indicate that 8-month-olds, but not 4-month-olds, rapidly discriminate between happy and fearful body emotion. However, across their studies, Missana and colleagues only tested 4-month-olds on point-light displays, so it is uncertain whether younger infants can distinguish between full-light displays of body emotion, which would arguably provide more information than the comparatively impoverished point-light displays.

As noted earlier, according to Walker-Andrews’ (1997) model, a more advanced skill related to emotion perception is emotion recognition. Prior research shows that infants recognize facial emotions in the sense proposed by Walker-Andrews (1997) by demonstrating that they match vocal emotions to corresponding facial emotions (Kahana-Kalman & Walker-Andrews, 2001; Vaillant-Molina et al., 2013; Walker, 1982). For example, Vaillant-Molina and colleagues (2013) found that 5-month-olds, but not 3.5-month-olds, match other infants’ vocal emotions to the corresponding dynamic facial expression in an intermodal matching procedure.

Extending this research on intermodal matching to body emotion, Zieber and colleagues found that infants successfully match happy and angry emotional vocalizations to the corresponding body emotion by 6.5 months when the bodies are upright but not inverted (Zieber, Kangas, Hock, & Bhatt, 2014a; 2014b). Furthermore, they matched when viewing both dynamic and static upright bodies. However, 3.5-month-olds failed to do so (Zieber et al., 2014b). Therefore, we know that this skill must develop between these two ages, but it is not clear at what specific point infants are consistently able to match across modalities. Given that 5-month-olds in Vaillant-Molina et al. (2013) matched facial emotions to vocal emotions, infants appear to recognize emotions in faces by this age. It remains unclear, however, whether they are similarly able to recognize emotions in bodies. Therefore, in the current study, we tested 5-month-olds on the intermodal matching task previously used by Zieber and colleagues with 6.5- and 3.5-month-olds (Zieber et al., 2014a, 2014b).

In addition to testing 5-month-olds on the intermodal matching test, we also tested 3.5-month-olds. As previously described, 3.5-month-olds did not match emotional vocalizations to the body displaying the same emotion in a previous study (Zieber et al., 2014b). It is unclear why they were unable to do so. It is possible that infants at this age are capable of matching, but only under certain circumstances. Previous studies have shown that younger infants require more processing time than older infants to encode the same amount of information (see Fagan, 1990; Rose, Futterweit, & Jankowski, 1999; Rose, Jankowski, & Feldman, 2002), and processing time requirements increase with task complexity (Fagan, 1990). Consequently, giving 3.5-month-olds additional time to process the correspondence between body and auditory emotion may result in successful matching. Thus, in the current study, both 3.5- and 5-month-olds were exposed to stimuli for twice the duration used in Zieber et al. (2014a, 2014b) to see if this enables young infants to match emotions across bodies and sounds.

It is also possible that 3.5-month-olds’ failure to match across bodies and voices in Zieber et al. (2014b) is due to an inability to “recognize” emotions in body actions in the sense proposed by Walker-Andrews (1997). That is, infants this age may be able to discriminate between body emotions but they may not have access to the common affective information in body actions and vocal emotions. If this is true, then infants may succeed in a discrimination task, but fail in the intermodal matching task. Conversely, 3.5-month-olds may not even be capable of discriminating between different body emotions, as may be expected based on the lack of discrimination of point-light emotion bodies for the 4-month-olds, as documented by Missana and colleagues (2015). We tested these possibilities in the current study. To summarize, the primary goal of the current study was to document the trajectory of the development of body emotion perception. Experiments 1 and 2 addressed emotion recognition by 3.5- and 5-month-olds by examining intermodal perception of body and vocal emotions. Experiment 3 examined a potential reason for young infants’ failure to match, specifically the inability to discriminate between videos of body emotions.

Experiment 1

As previously described, Vaillant-Molina et al. (2013; also see Walker, 1982) found that 5-month-olds match emotions across faces and voices, indicating that infants this age are sensitive to affective information in faces and voices (Walker-Andrews, 1997). In contrast, 3.5-month-olds failed to match facial and vocal emotions, indicating a developmental change from 3.5 months to 5 months of age. To examine whether a similar developmental change is evident in the domain of body emotion perception, in Experiment 1, we tested groups of 3.5- and 5-month-olds on the intermodal preference technique (Spelke, 1976) employed by Zieber and colleagues (2014a, 2014b), in which a happy and an angry video were presented side by side while either a happy or an angry vocalization played simultaneously.

In Zieber et al. (2014a, 2014b), infants were tested on two 15-s trials for their ability to match bodily and vocal emotions. As noted earlier, while 6.5-month-olds exhibited matching, 3.5-month-olds did not. One possible reason for young infants’ failure is that the time provided to process information may have been insufficient. Thus, in Experiment 1, we doubled the duration of testing, as it is possible that young infants may benefit from additional processing time (Fagan, 1990; Rose et al., 1999; Rose et al., 2002). If both 3.5-month-olds and 5-month-olds match across modalities with this additional time, then it would indicate that, starting early in life, infants are sensitive to the commonalities in affective information displayed in different modalities. Given that Vaillant-Molina et al. (2013) found intermodal matching across faces and voices at 5 months but not at 3.5 months, if 3.5-month-olds in the current experiment fail to match but 5-month-olds do match, then it would suggest that the pattern of development of body emotion knowledge is similar to that of face emotion knowledge, with body emotion recognition available by 5 months but not at 3.5 months. If neither 3.5-month-olds nor 5-month-olds match body emotions to vocal emotions, then it would suggest that body emotion knowledge takes longer to develop than face emotion knowledge.

Method

Participants

Twenty 3.5-month-old (14 males; Mage = 101.05 days, SD = 7.21) and 16 5-month-old infants (8 males; Mage = 148.00 days, SD = 4.69) from predominately middle-class, Caucasian families participated in Experiment 1. Infants were recruited from a local university hospital and from birth announcements in the newspaper. Data from six additional 3.5-month-olds and three additional 5-month-olds were excluded due to side bias (n = 5), failure to examine both test stimuli (n = 3), or program error (n = 1).

Visual and auditory stimuli

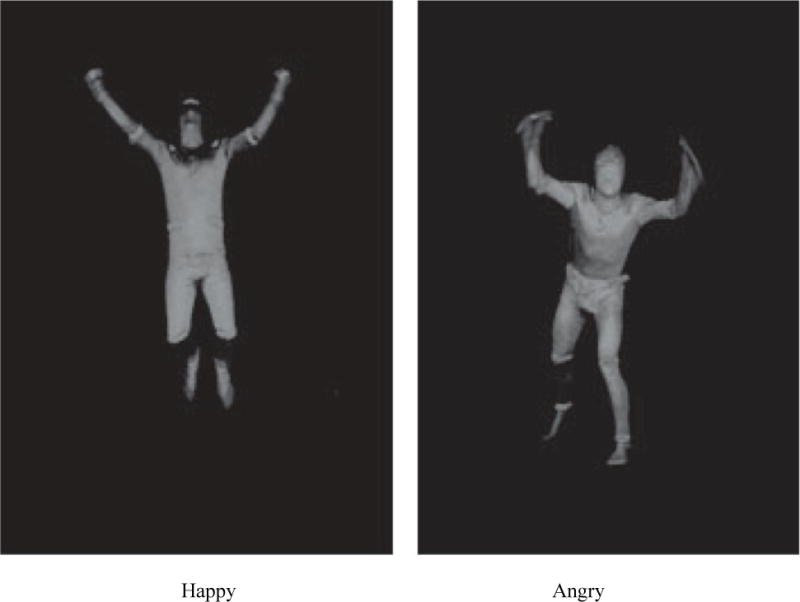

The videos used in Experiment 1 were dynamic displays of happy and angry body expressions that were obtained from Atkinson and colleagues (2004; see Figure 1) and previously used by Zieber et al. (2014a; 2014b). In these videos, male and female actors wore a suit that covered their faces and bodies. Each video clip lasted for 3 seconds and was presented in gray scale. Regardless of emotion, the initial and final positions of the actors were in a forward-facing neutral stance, with the arms down at their sides and feet standing shoulder width apart. Adult raters classified each stimulus as belonging to one of five emotion categories (happy, angry, sad, disgust, and fear), with high levels of accuracy (>85%, with chance being 20%; see Atkinson et al., 2004). Two male and two female pairs were chosen from the set for this experiment, which were the same body pairs and vocalizations used by Zieber and colleagues (2014a; 2014b).

Figure 1.

Examples of the emotions displayed in the videos used in Experiments 1-3 depicting happy and angry body movements. In Experiments 1-2, infants viewed a pair of happy and angry bodies while hearing either a happy or angry vocalization simultaneously. In Experiment 3, 3.5-month-olds first saw two identical bodies (either angry or happy) side-by-side during familiarization, and later saw a happy and an angry video during the test trials, but there were no vocalizations at any time. Infants in the upright conditions viewed stimuli upright, whereas those in the inverted condition saw the same stimuli rotated 180 degrees.

The happy and angry nonverbal vocalizations used in this study were adapted from Sauter, Eisner, Calder, and Scott (2010) and further validated by adult raters across different cultures (Sauter, Eisner, Ekman, & Scott, 2010). Two happy and two angry vocalizations were selected to pair with the body videos. The happy vocalizations had an average intensity of 73.60 dB, duration of 2.10 s, fundamental frequency of 100.30 Hz, and pitch of 284.55 Hz. The angry vocalizations had an average intensity of 77.84 dB, duration of 1.27 s, fundamental frequency of 78.52 Hz, and pitch of 221.93 Hz. Like the body pairs, the vocalizations used in this study were the same as those previously used by Zieber and colleagues (2014a; 2014b). Importantly, the vocalizations and body movements were selected from separate databases, so the body movements and vocalizations were asynchronous (i.e., variations in intensity or pitch in the vocalization did not correspond to variations in the intensity or speed of body movement); therefore, any successful matching would not be due to a synchrony in the timing of the vocalizations with the body movements but rather a recognition that both modalities were expressing the same affect.

Apparatus and Procedure

Infants were seated on their parents’ laps approximately 45 cm from a 50-cm computer monitor in a darkened chamber. Parents wore opaque glasses to prevent them from being able to view the monitor and potentially influencing infants’ performance on the task. A video camera located above the monitor and a DVD recorder were used to record infants’ behavioral looking for offline coding. Infants were first presented with a red flashing star in the center of the monitor to focus their attention to the center of the screen. The experimenter began each trial by pushing a key once the infant was judged to be looking in the center of the screen at the attention getter.

As in Zieber et al. (2014a; 2014b), each infant was tested on a single pair of happy-angry videos and with a single accompanying vocalization (happy or angry). Across infants in each age group, there was a total of eight body-voice pairings, with each pair of happy-angry videos being equally often accompanied by a happy or angry vocalization. Each body movement and vocalization pairing was repeated five times per trial, resulting in 15-s trials.

Infants were tested on four 15-s trials using one of the four body pairs, which were counterbalanced across infants. As previously stated, it is possible that the 3.5-month-olds in Zieber et al. (2014b) failed to match due to an insufficient amount of time to process the information during the two test trials. Using four trials instead would therefore give infants double the amount of processing time. Four-trial performance has also been assessed in similar intermodal perception studies (e.g., Kahana-Kalman & Walker-Andrews, 2001). Each infant heard only one emotion vocalization type (happy or angry). The matching sound was counterbalanced across infants, such that for half of the infants the happy body was the congruent emotion, while for the other half the angry body was the congruent emotion. Additionally, the left-right position of the body pairs was counterbalanced within each sequence and across participants. As in Zieber et al. (2014a, 2014b), the dependent measure was the percent preference for the congruent (matching) video. Every infant in the sample contributed data for each of the four trials. Therefore, the dependent measure in Experiment 1 was the percent preference for the matching body emotion across all four trials. This was calculated by dividing the total looking to the congruent body across all four trials by the total looking to both the congruent and incongruent bodies across all four trials; this ratio was multiplied by 100. A mean preference score that is significantly greater than chance performance (50%) would suggest that infants were looking preferentially toward the matching video.
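The preference score described above can be sketched in a few lines of Python. This is only an illustration of the stated formula (total looking to the congruent video divided by total looking to both videos, times 100); the function name `percent_preference` and the looking times in the example are hypothetical and are not data from the study.

```python
from typing import Sequence

CHANCE_LEVEL = 50.0  # percent; equal looking to the two videos


def percent_preference(target_looks: Sequence[float],
                       other_looks: Sequence[float]) -> float:
    """Percent of total looking directed at the target video, pooled over trials.

    target_looks: per-trial looking times (s) to the congruent (matching) video.
    other_looks:  per-trial looking times (s) to the incongruent video.
    """
    if len(target_looks) != len(other_looks):
        raise ValueError("need one looking time per video for every trial")
    total_target = sum(target_looks)
    total_both = total_target + sum(other_looks)
    if total_both == 0:
        raise ValueError("infant did not look at either video")
    return 100.0 * total_target / total_both


# Hypothetical looking times (seconds) for one infant across four 15-s trials.
matching = [2.0, 1.5, 2.5, 2.0]
nonmatching = [1.0, 1.5, 0.5, 1.0]
score = percent_preference(matching, nonmatching)
print(f"preference = {score:.2f}% (chance = {CHANCE_LEVEL:.0f}%)")
```

Because looking times are pooled across trials before dividing, a trial with little looking contributes less to the score than it would if per-trial percentages were averaged.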

Coding of the infants’ behavior was conducted offline by a naïve coder who was unaware of the left-right location of the matching video. The DVD player was slowed to 25% of the normal speed during playback. Coding reliability for infants in Experiment 1 was verified by a second naïve coder for 25% of the infants at each age group (3.5-mo: Pearson’s r = 0.998; 5-mo: Pearson’s r = 0.983).

Results and Discussion

Table 1 displays the raw look durations and matching preference scores. In the case of 3.5-month-olds, attention to the congruent body did not differ from 50% chance (M = 47.17%, SE = 4.55), t(19) = −0.62, p = .54, d = 0.14. In contrast, 5-month-olds exhibited a significant preference for the matching body video as compared to chance (M = 59.52%, SE = 3.70), t(15) = 2.57, p = .02, d = 0.64. Additionally, 5-month-olds’ matching preference score was significantly greater than 3.5-month-olds’ score, F(1,34) = 4.13, p = .05, d = 0.68. This indicates that 5-month-olds, but not 3.5-month-olds, successfully match a vocalized emotion to the corresponding body emotion.
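As a rough arithmetic check, the test statistics above can be recovered from the reported means, standard errors, and sample sizes. The sketch below assumes that SD = SE × √n, that Cohen's d for the chance comparison is the absolute mean difference divided by the SD, and that the age comparison is a two-group test with a pooled variance (so F = t²). These are assumptions about how the values relate, not a description of the authors' analysis, which was presumably run on the raw scores; the function names are hypothetical.

```python
import math


def one_sample_vs_chance(mean, se, n, chance=50.0):
    """Return (t, d) for a one-sample comparison against a chance level."""
    t = (mean - chance) / se
    sd = se * math.sqrt(n)          # recover the SD from the standard error
    d = abs(mean - chance) / sd     # effect size magnitude
    return t, d


def two_group_comparison(m1, se1, n1, m2, se2, n2):
    """Return (F, d) for two independent groups using a pooled variance."""
    var1 = se1 ** 2 * n1
    var2 = se2 ** 2 * n2
    pooled_var = ((n1 - 1) * var1 + (n2 - 1) * var2) / (n1 + n2 - 2)
    t = (m1 - m2) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    d = (m1 - m2) / math.sqrt(pooled_var)
    return t ** 2, d                # with two groups, F(1, df) equals t squared


# Summary values reported for Experiment 1 (percent preference, SE, n).
t_young, d_young = one_sample_vs_chance(47.17, 4.55, 20)
t_old, d_old = one_sample_vs_chance(59.52, 3.70, 16)
f_age, d_age = two_group_comparison(59.52, 3.70, 16, 47.17, 4.55, 20)

print(f"3.5-month-olds: t(19) = {t_young:.2f}, d = {d_young:.2f}")
print(f"5-month-olds:   t(15) = {t_old:.2f}, d = {d_old:.2f}")
print(f"Age comparison: F(1,34) = {f_age:.2f}, d = {d_age:.2f}")
```

Under these assumptions the rounded outputs agree with the values reported in the text; small differences would be expected in general because the inputs are themselves rounded.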

Table 1.

3.5- and 5-month-old infants’ mean look durations (in seconds) to the matching and nonmatching body postures and percentage preferences for the matching stimuli in Experiment 1 (both age groups) and Experiment 2 (5-month-olds only).

| Orientation | n | Matching [M (SE)] | Nonmatching [M (SE)] | Percent preference [M (SE)] | tᵃ | pᵃ |
|---|---|---|---|---|---|---|
| Upright (Experiment 1) | | | | | | |
| 3.5-month-olds | 20 | 6.29 (0.61) | 7.25 (0.69) | 47.17 (4.55) | −0.62 | .54 |
| 5-month-olds | 16 | 7.86 (0.63) | 5.19 (0.46) | 59.52 (3.70) | 2.57 | .02* |
| Inverted (Experiment 2) | | | | | | |
| 5-month-olds | 16 | 6.53 (0.69) | 5.99 (0.66) | 51.98 (4.51) | 0.44 | .67 |

Note. Standard errors are presented in parentheses.

ᵃ Comparison of mean percentage preference score against the chance level of 50%.

\*p < .05 (two-tailed).

These findings demonstrate that the period between 3.5 and 5 months is one of transition for emotion recognition, in that 5-month-olds, but not 3.5-month-olds, demonstrate evidence of the ability to match emotion across modalities. Furthermore, even when given extra exposure to stimuli as compared to the previous study by Zieber and colleagues, 3.5-month-olds still failed to match across bodies and voices. Therefore, there is a developmental change in this ability that occurs between 3.5 and 5 months of age, which is also in line with the developmental timeline documented by Vaillant-Molina et al. (2013) for intermodal matching of faces and voices. Based on the model of the development of emotion perception proposed by Walker-Andrews (1997), these findings suggest that 5-month-olds recognize emotion in bodies and voices but 3.5-month-olds do not. However, it is possible that 5-month-olds were matching on the basis of some low-level stimulus correspondence between body movements and voices rather than information pertaining to emotions. We examined this possibility in Experiment 2.

Experiment 2

In order to examine whether 5-month-olds in Experiment 1 were responding to emotion information in the videos rather than some low-level feature (e.g., greater amount or speed of movement), we tested a group of 5-month-old infants using inverted body videos. Inversion as a control manipulation has been used in many previous studies to examine infants’ responses to social stimuli, such as faces and bodies. Inversion disrupts the configural processing of social stimuli (Bertin & Bhatt, 2004; Bhatt, Bertin, Hayden, & Reed, 2005; Heck, Hock, White, Jubran, & Bhatt, 2017; Maurer, Le Grand, & Mondloch, 2002; Missana & Grossmann, 2015; Zieber, Kangas, Hock, & Bhatt, 2015); however, any low-level features (such as movement) that may be present in the upright stimuli are maintained in the inverted condition. Therefore, if infants no longer match across modalities when the bodies are inverted, this would suggest that performance in the upright condition of Experiment 1 was not based on low-level features and that inversion disrupted 5-month-olds’ processing of emotion information present in the bodies. In contrast, if infants still match even with inverted body videos, then it would be unclear if infants in the upright condition were responding solely on the basis of emotion information or some low-level stimulus information.

Method

Participants

Sixteen 5-month-old infants (6 males; Mage = 153.00 days, SD = 6.10) from predominately middle-class Caucasian families participated in Experiment 2. Infants were recruited from a local university hospital and from birth announcements in the newspaper. Data from two additional infants were excluded for side bias.

Visual and auditory stimuli

The inverted stimuli were the same body videos used in Experiment 1, which were rotated 180° and paired with the identical corresponding nonverbal vocalizations from Experiment 1.

Apparatus and Procedure

The apparatus and procedure were identical to those used in Experiment 1. Each infant was assigned to one of the inverted body pairs described previously. As in Experiment 1, the left-right position of the emotion displays was counterbalanced within each sequence and across participants. The dependent measure was the percent preference for the matching video across the four trials and was calculated in the same way as in Experiment 1.

Coding of the infants’ behavior was also conducted in the same manner as in Experiment 1. Coding reliability was verified by a second observer for 25% of the infants (Pearson’s r = .987).

Results and Discussion

Five-month-old infants tested with inverted stimuli failed to exhibit a preference for the congruent video when compared to chance (M = 51.98%, SE = 4.51), t(15) = 0.44, p = .67, d = 0.11 (refer to Table 1 for raw look durations and matching preference scores). This finding is in contrast to the significant matching exhibited by 5-month-olds tested with upright stimuli in Experiment 1. This inversion effect indicates that 5-month-olds in the upright condition of Experiment 1 were not simply matching based on low-level features (such as differences in movement). This finding suggests that 5-month-olds have attained knowledge about body emotion at least to some extent, as they must extract emotion information from multiple modalities (visual, auditory) in order to succeed at the task. The current findings also document a younger age at which infants demonstrate knowledge about body emotion than has been found previously by Zieber and colleagues (2014b), as well as Missana and colleagues (Missana et al., 2015; Missana & Grossmann, 2015). Significantly, the age at which infants exhibit matching in the current study, 5 months, is also the age at which infants in the Vaillant-Molina et al. (2013) study exhibited matching of emotional faces to voices, suggesting a correspondence in the development of face and body knowledge. However, it is still unclear why 3.5-month-olds were unable to match in Experiment 1 and in Zieber et al. (2014b). This question was addressed in Experiment 3.

Experiment 3

Consistent with the findings by Zieber and colleagues (2014b), 3.5-month-olds in Experiment 1 failed to match across modalities despite being given double the processing time. It is possible that 3.5-month-olds’ failure was due to a lack of knowledge about body emotions or, alternatively, due to an inability to match across modalities. That is, it is possible that 3.5-month-olds are sensitive to and can discriminate between body emotions but are unable to correlate information from bodies with vocal emotions. We addressed this issue in Experiment 3.

We used a familiarization/novelty preference procedure in Experiment 3 to examine whether 3.5-month-olds can even discriminate happy from angry bodies. Infants were familiarized to a happy or angry body video and tested for their preference between the familiar video and a novel video exhibiting the opposite emotion. If infants exhibit a novelty preference, then it would indicate that infants discriminate between videos depicting happy versus angry body movements.

However, discrimination alone may not necessarily indicate body emotion knowledge as infants could be distinguishing among body videos based on low-level features (e.g., differences in the nature of movement). For example, in the face emotion literature, infants have been found to discriminate among emotions based on the “toothiness” of certain emotions (e.g., happy, angry), but not the emotions themselves (Caron, Caron, & Myers, 1985). In order to increase our confidence that discrimination in this procedure is based on body emotion information rather than some low-level feature, we also included an inverted control condition. As previously outlined, inversion is frequently used to control for performance based on low-level features (Bertin & Bhatt, 2004; Bhatt et al., 2005; Heck et al., 2017; Maurer et al., 2002; Missana & Grossmann, 2015; Zieber et al., 2015). Therefore, if infants in the upright condition discriminate by showing a preference for the novel body emotion but those in the inverted condition do not, this would indicate that 3.5-month-olds do have some basic level of body emotion knowledge. In contrast, if they fail to discriminate even in the upright condition or if they discriminate in the inverted condition as well, then it would indicate that they likely are not responding to emotion-specific information in the bodies.

Method

Participants

Forty 3.5-month-olds (23 males; Mage = 105.08 days, SD = 8.76) from predominately middle-class, Caucasian households participated in Experiment 3. Infants were recruited from a local university hospital and from birth announcements in the newspaper. Data from nine additional infants were excluded for side bias (n = 4), failure to examine both test stimuli (n = 1), failure to look for at least 20% of the study duration (n = 1), fussiness (n = 2) or experimenter error (n = 1).

Stimuli

The upright and inverted videos were identical to those used in Experiments 1 and 2. However, unlike the previous two experiments, no vocalizations were provided.

Apparatus and Procedure

The apparatus was identical to that of the previous experiments. Each infant was assigned to one of the body pairs described previously. Infants were assigned to only one of the conditions (upright or inverted) and one emotion familiarization condition (happy or angry).

During each of four 15-s familiarization trials, two identical videos (happy or angry) were presented on the left and right side of the screen. A colorful attention-getter preceded each trial to ensure that infants focused on the center of the screen. Each infant saw a single video (angry or happy) during the four familiarization trials. Following familiarization to this video, infants were tested on two 15-s test trials for their preference between this video and a novel video from the opposite emotion. The two videos were presented side-by-side during the test trials. The attention-getter also preceded each of the test trials. The left-right location of the novel video was counterbalanced across infants in each of the upright and inverted groups; moreover, the location was switched from the first to the second test trial. Infants in the upright condition were familiarized and tested with upright videos whereas those in the inverted condition were familiarized and tested with inverted videos. The dependent measure was the percent preference for the novel body video across both test trials. This was calculated by dividing the total looking time to the novel video across both test trials by the total looking time to both the novel and familiar videos across both test trials. This ratio was then multiplied by 100.

Coding of infants’ behavior was conducted offline by a naïve coder who was unaware of the left-right location of the novel body video. The DVD player was slowed to 25% of the normal speed during playback. Coding reliability was verified by a second observer for 25% of the infants in each condition (upright: Pearson’s r = 0.989; inverted: Pearson’s r = 0.994).

Results and Discussion

Raw look durations and novelty preference scores are presented in Table 2. Infants in the upright condition exhibited a mean novelty preference score (M = 59.23%, SE = 3.95) that was significantly different from chance, t(19) = 2.34, p = .03, d = 0.52. Infants tested with inverted stimuli also exhibited a preference score (M = 58.74%, SE = 4.15) that was significantly greater than chance, t(19) = 2.11, p = .05, d = 0.47. Moreover, the difference in the scores of the two groups was not statistically significant, t(38) = .08, p = .93, d = 0.03. Thus, in both the upright and inverted conditions, 3.5-month-olds discriminated the novel emotion video from the familiar video. These results indicate that 3.5-month-olds are able to discriminate between videos that depict happy and angry body movements. However, the lack of an inversion effect means that this discrimination may not necessarily indicate knowledge of body emotions. Rather, these infants could be responding to features of these videos that correlate with emotions such as differences in movement characteristics rather than the emotions themselves. This finding is similar to reports of young infants responding to features associated with face emotions (e.g., toothiness associated with happiness; see Caron et al., 1985). Thus, 3.5-month-olds are sensitive to features associated with body emotions but they have not developed enough to exhibit an inversion effect in a body emotion discrimination task (Experiment 3) or to match body emotions to vocal emotions (Experiment 1).
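The test statistics reported above can be approximately recovered from the published means and standard errors. The sketch below assumes one-sample t-tests against the 50% chance level, an equal-n independent-samples t-test for the group comparison, and Cohen's d computed from standard deviations derived as SE × √n. The source does not state the formula used for d, so that part is an assumption, and the function names are hypothetical.

```python
import math


def one_sample_from_summary(mean, se, n, chance=50.0):
    """t and Cohen's d for a mean tested against a chance value."""
    t = (mean - chance) / se   # t = (M - chance) / SE
    d = t / math.sqrt(n)       # equivalent to (M - chance) / SD, with SD = SE * sqrt(n)
    return t, d


def two_sample_from_summary(m1, se1, m2, se2, n):
    """t and Cohen's d for two independent groups of equal size n."""
    # With equal n, the pooled standard error of the difference
    # reduces to sqrt(se1^2 + se2^2).
    t = (m1 - m2) / math.sqrt(se1 ** 2 + se2 ** 2)
    # Pooled SD from the two group variances (each variance is n * SE^2).
    pooled_sd = math.sqrt(n * (se1 ** 2 + se2 ** 2) / 2)
    d = (m1 - m2) / pooled_sd
    return t, d


upright = one_sample_from_summary(59.23, 3.95, 20)
inverted = one_sample_from_summary(58.74, 4.15, 20)
between = two_sample_from_summary(59.23, 3.95, 58.74, 4.15, 20)
print(upright, inverted, between)
```

Under these assumptions the one-sample values round to the reported t(19) = 2.34, d = 0.52 and t(19) = 2.11, d = 0.47. The between-group t comes out slightly above the reported .08, presumably because the published means and standard errors are themselves rounded.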

Table 2.

3.5-month-old infants’ mean look durations (in seconds) to the novel and familiar body emotions and percentage preferences for the novel body stimuli in Experiment 3.

| Orientation | n | Novel [M (SE)] | Familiar [M (SE)] | Percent preference [M (SE)] | tᵃ | pᵃ |
|---|---|---|---|---|---|---|
| Upright | 20 | 7.02 (0.48) | 5.30 (0.39) | 59.23 (3.95) | 2.34 | .03* |
| Inverted | 20 | 6.37 (0.61) | 4.76 (0.46) | 58.74 (4.15) | 2.11 | .05* |

Note. Standard errors are presented in parentheses.

ᵃ Comparison of mean percentage preference score against the chance level of 50%.

*p ≤ .05 (two-tailed).

General Discussion

The current study examined the development of sensitivity to body emotions early in life. When presented with happy and angry vocalizations and body movement videos, 5-month-olds preferentially viewed the emotionally congruent body when videos were presented upright but not when they were inverted. In contrast, 3.5-month-olds did not show evidence of intermodal matching even with upright bodies. Thus, 5-month-olds, but not 3.5-month-olds, exhibited evidence of body emotion recognition in the sense proposed by Walker-Andrews (1997). Experiment 3 revealed that lack of sensitivity to emotional information in body movements might account for younger infants’ failure to match across modalities. In this experiment, 3.5-month-olds discriminated between videos depicting different body emotions, but they discriminated even when the videos were inverted, suggesting that performance may be based on low-level stimulus features rather than emotion information. These findings indicate a significant developmental change from 3.5 to 5 months of age in sensitivity to body emotion and parallel the development of sensitivity to face emotion documented in prior studies.

According to Walker-Andrews (1997; also see Walker, 1982), successful intermodal matching indicates emotion “recognition,” a higher level of emotion perception ability than simple discrimination between emotions, as infants have to respond to the commonalities in the affective information in the different modalities in order to match. This requires some level of sensitivity to the meaning and function of emotions, rather than just to the stimulus features associated with emotions. The fact that 5-month-olds exhibited intermodal matching with upright body movements in the current study thus suggests sensitivity to emotional content of body movements by this age. This finding is consistent with other reports of 5-month-olds’ sensitivity to affective information. For example, 5-month-olds in Bornstein and Arterberry (2003) exhibited categorical perception of happy and fearful expressions. Moreover, 5-month-olds in Walker (1982) and in Vaillant-Molina et al. (2013) exhibited intermodal matching of facial emotions to vocal emotions. Infants this age also differentially attend to different facial emotions when faces are presented in competition with other stimuli (Heck, Hock, White, Jubran, & Bhatt, 2016). Thus, the current study adds to a body of literature indicating that the foundation for later knowledge of emotions is set during the first half year of life.

Please note that when we suggest that 5-month-olds recognized body emotions in Experiment 1, we are not claiming that infants’ response to emotions is like that of adults. Adults’ experience of emotions is complex and includes understanding of the internal states and motivations of individuals. Experiment 1 did not address that level of understanding of emotions. It only examined whether infants are sensitive to the correlation between affective information in bodies and voices to the extent of exhibiting intermodal perception.

The fact that 5-month-olds but not 3.5-month-olds in the current experiments exhibited intermodal matching coincides with the age range during which recognition of facial emotions also develops. For example, Vaillant-Molina et al. (2013) found that 5-month-olds but not 3.5-month-olds match emotional faces to voices. The parallel nature of the development of emotion perception in the facial and body domains is significant because, as noted earlier, there are various views in the literature concerning the relative development of knowledge about faces versus bodies. Some researchers (e.g., Slaughter et al., 2012) have suggested that body knowledge is slow to develop compared to face knowledge. According to this view, facial knowledge is either innately specified or develops rapidly, driven by a dedicated learning mechanism; in contrast, body knowledge is slow to develop because it relies on general learning mechanisms. Other researchers do not envision a large gap in the development of body versus face knowledge, with some suggesting that a general social development mechanism is responsive to cues from different modalities such as faces, bodies and voices, and drives the development of social cognition capabilities that are not necessarily modality specific (Bhatt et al., 2016; Simion, Di Giorgio, Leo, & Bardi, 2011). The finding in the current study that the developmental trajectory of body emotion knowledge is similar to that of face emotion knowledge is more consistent with the latter approach.

However, it would be premature to conclude that the development of body emotion knowledge has the exact same trajectory as face emotion knowledge because there are many aspects of emotion knowledge development that are yet to be examined. The importance of contextual factors in early emotion processing is evident in the finding by Rajhans, Jessen, Missana, and Grossmann (2016) that body cues play a key role in the perception of facial emotions at 8 months of age. Furthermore, in a peek-a-boo paradigm using familiar individuals, Kahana-Kalman and Walker-Andrews (2001) found intermodal matching of facial and vocal emotions even at 3.5 months of age. In other words, stimulus familiarity led to evidence of face emotion recognition earlier in life. To our knowledge, no study has explored the effects of familiarity on body emotion knowledge. Thus, it is unclear whether familiarity will lower the age at which infants recognize body emotions, as it does with face emotions.

In addition to examining contextual factors, such as familiarity, future work should also seek to examine the mechanisms driving the developmental change in emotion perception documented in this study. The failure of 3.5-month-olds in the intermodal matching task is a replication of the failure documented in Zieber et al. (2014b). This clearly establishes that, ordinarily, development beyond this age is necessary for emotion recognition. The results of Experiment 3 of the current study indicate one potential reason for young infants’ failure to match. In that experiment, 3.5-month-olds discriminated between videos of emotional body movements; however, they exhibited discrimination between videos in both upright and inverted orientations. Although the lack of an inversion effect does not necessarily imply that infants are not sensitive to affect, it does not rule out the possibility that infants are responding to low-level features of the videos. Thus, in our opinion, it is prudent to conclude that there is no evidence to suggest that young infants are sensitive to emotional information in body movements. This conclusion is consistent with previous research by Missana and colleagues (Missana et al., 2015; Missana & Grossmann, 2015) who found no physiological evidence that 4-month-olds distinguish point-light displays of happy and fearful bodies. It is also in line with studies that have failed to find evidence of sensitivity to facial emotions at 3 months of age (e.g., Flom & Bahrick, 2007). The lack of sensitivity to emotional information may have prevented 3.5-month-olds from matching across voices and bodies in Zieber et al. (2014b) and in Experiment 1 of the current study.

Overall, based on the findings from the current experiments and those of prior studies, it appears that the time between 3.5 and 5 months is one of transition for infants’ perception of body emotion. However, the developmental mechanisms behind that shift are as yet unknown. In previous studies on face emotion perception, advancements in emotion-related skills have been linked to other developmental changes such as the onset of self-locomotion and the subsequent alterations in parent-infant interactions that may result from this increasing independence of movement. While 5-month-old infants may be too young to crawl, it is possible that they exhibit other forms of independent movement, such as reaching and sitting, which could also lead to increases in potentially precarious situations (e.g., reaching for a sharp object) and changes in parents’ reactions. The resulting experience could facilitate the development of emotion perception. Support for this possibility comes from studies that show that changes in motor behavior during the first half year of life affect social information processing such as face perception (e.g., Cashon, Ha, Allen, & Barna, 2013; Libertus & Needham, 2011). In contrast, motor development by 3.5 months may not be advanced enough to trigger experience with various emotions, both in terms of infants experiencing the emotions themselves and in terms of observing others express certain emotions.

However, as discussed earlier, there is evidence in the face emotion recognition literature that even infants as young as 3.5 months of age recognize emotions if the emotions are portrayed by familiar people, such as their mothers (Kahana-Kalman & Walker-Andrews, 2001; Montague & Walker-Andrews, 2002). It is thus possible that the developmental change documented in the current study is not absolute or totally correlated with motor development milestones.

One limitation of the current study is that each infant was presented with only one pair of emotional bodies and voices (across infants, four different sets of stimuli were used). The inversion effect found in Experiment 2 indicates that it is unlikely that there is some low-level feature specific to particular pairs that enabled 5-month-olds’ matching in Experiment 1. Nonetheless, it would be fruitful to further investigate infants’ body emotion knowledge using additional body pairs in order to strengthen the conclusion of body emotion recognition at 5 months.

In conclusion, the findings from the current study indicate that infants’ body emotion knowledge undergoes a transition between 3.5 months and 5 months: infants initially discriminate between body emotion videos, but not on the basis of emotional content, and later exhibit evidence of emotion recognition through successful intermodal matching across bodies and voices. This trajectory of body emotion knowledge development matches that of face emotion knowledge development. In both instances, rapid development early in life appears to lead to fairly sophisticated processing of emotional information including matching of affective information across modalities.

Highlights.

Infants progress from discrimination to recognition of emotion in faces during the first half year of life

3.5-month-olds in the current study discriminated between body emotions

But, 3.5-month-olds failed to recognize emotions as measured by an intermodal matching task

5-month-olds matched body emotions to vocal emotions

Development of body emotion perception is similar to development of face emotion perception

Acknowledgments

Author Note

This research was supported by a grant from the National Institute of Child Health and Human Development (HD075829). The authors would like to thank the infants and parents who participated in this study.


References

- Atkinson AP, Dittrich WH, Gemmell AJ, Young AW. Emotion perception from dynamic and static body expressions in point-light and full-light displays. Perception. 2004;33(6):717–746. doi: 10.1068/p5096.

- Aviezer H, Trope Y, Todorov A. Body cues, not facial expressions, discriminate between intense positive and negative emotions. Science. 2012a;338:1225–1229. doi: 10.1126/science.1224313.

- Aviezer H, Trope Y, Todorov A. Holistic person processing: Faces with bodies tell the whole story. Journal of Personality and Social Psychology. 2012b;103(1):20–37. doi: 10.1037/a0027411.

- Bahrick LE, Hernandez-Reif M, Flom R. The development of infant learning about specific face-voice relations. Developmental Psychology. 2005;41(3):541–552. doi: 10.1037/0012-1649.41.3.541.

- Bertin E, Bhatt RS. The Thatcher illusion and face processing in infancy. Developmental Science. 2004;7:431–436. doi: 10.1111/j.1467-7687.2004.00363.x.

- Bhatt RS, Bertin E, Hayden A, Reed A. Face processing in infancy: Developmental changes in the use of different kinds of relational information. Child Development. 2005;76:169–181. doi: 10.1111/j.1467-8624.2005.00837.x.

- Bhatt RS, Hock A, White H, Jubran R, Galati A. The development of body structure knowledge in infancy. Child Development Perspectives. 2016;10(1):45–52. doi: 10.1111/cdep.12162.

- Bornstein MH, Arterberry ME. Recognition, discrimination and categorization of smiling by 5-month-old infants. Developmental Science. 2003;6(5):585–599. doi: 10.1111/1467-7687.00314.

- Caron RF, Caron AJ, Myers RS. Do infants see emotional expressions in static faces? Child Development. 1985;56(6):1552–1560. doi: 10.2307/1130474.

- Cashon CH, Ha O, Allen CL, Barna AC. A U-shaped relation between sitting ability and upright face processing in infants. Child Development. 2013;84(3):802–809. doi: 10.1111/cdev.12024.

- de Gelder B. Towards the neurobiology of emotional body language. Nature Reviews Neuroscience. 2006;7(3):242–249. doi: 10.1038/nrn1872.

- de Gelder B, de Borst AW, Watson R. The perception of emotion in body expressions. WIREs Cognitive Science. 2015;6(2):149–158. doi: 10.1002/wcs.1335.

- de Gelder B, Van den Stock J. The bodily expressive action stimulus test (BEAST). Construction and validation of a stimulus basis for measuring perception of whole body expressions of emotions. Frontiers in Psychology. 2011;2(181):1–6. doi: 10.3389/fpsyg.2011.00181.

- Fagan J. The paired-comparison paradigm and infant intelligence. Annals of the New York Academy of Science. 1990;608:337–364. doi: 10.1111/j.1749-6632.1990.tb48902.x.

- Flom R, Bahrick LE. The development of infant discrimination of affect in multimodal and unimodal stimulation: The role of intersensory redundancy. Developmental Psychology. 2007;43(1):238–252. doi: 10.1037/0012-1649.43.1.238.

- Geangu E, Quadrelli E, Conte S, Croci E, Turati C. Three-year-olds’ rapid facial electromyographic responses to emotional facial expressions and body postures. Journal of Experimental Child Psychology. 2016;144:1–14. doi: 10.1016/j.jecp.2015.11.001.

- Heck A, Hock A, White H, Jubran R, Bhatt RS. The development of attention to dynamic facial emotions. Journal of Experimental Child Psychology. 2016;147:100–110. doi: 10.1016/j.jecp.2016.03.005.

- Heck A, Hock A, White H, Jubran R, Bhatt RS. Further evidence of early development of attention to dynamic facial emotions: Reply to Grossmann and Jessen. Journal of Experimental Child Psychology. 2017;153:155–162. doi: 10.1016/j.jecp.2016.08.006.

- Kahana-Kalman R, Walker-Andrews AS. The role of person familiarity in young infants’ perception of emotion expressions. Child Development. 2001;72(2):352–369. doi: 10.1111/1467-8624.00283.

- Libertus K, Needham A. Reaching experience increases face preference in 3-month-old infants. Developmental Science. 2011;14(6):1355–1364. doi: 10.1111/j.1467-7687.2011.01084.x.

- Marshall PJ, Meltzoff AN. Body maps in the infant brain. Trends in Cognitive Sciences. 2015;19(9):499–505. doi: 10.1016/j.tics.2015.06.012.

- Maurer D, Le Grand R, Mondloch CJ. The many faces of configural processing. Trends in Cognitive Sciences. 2002;6:255–260. doi: 10.1016/S1364-6613(02)01903-4.

- Meltzoff AN. Social cognition and the origins of imitation, empathy, and theory of mind. The Wiley-Blackwell handbook of childhood cognitive development. 2011;1:49–75.

- Missana M, Atkinson AP, Grossmann T. Tuning the developing brain to emotional body expressions. Developmental Science. 2015;18(2):243–253. doi: 10.1111/desc.12209.

- Missana M, Grossmann T. Infants’ emerging sensitivity to emotional body expressions: Insights from asymmetrical frontal brain activity. Developmental Psychology. 2015;51(2):151–160. doi: 10.1037/a0038469.

- Missana M, Rajhans P, Atkinson AP, Grossmann T. Discrimination of fearful and happy body postures in 8-month-old infants: An event-related potential study. Frontiers in Human Neuroscience. 2014;9(531):1–7. doi: 10.3389/fnhum.2014.00531.

- Montague DPF, Walker-Andrews AS. Mothers, fathers, and infants: The role of person familiarity and parental involvement in infants’ perception of emotion expressions. Child Development. 2002;73(5):1339–1352. doi: 10.1111/1467-8624.00475.

- Nelson NL, Russell JA. Preschoolers’ use of dynamic facial, bodily, and vocal cues to emotion. Journal of Experimental Child Psychology. 2011;110(1):52–61. doi: 10.1016/j.jecp.2011.03.014.

- Peterson CC, Slaughter V, Brownell C. Children with autism spectrum disorder are skilled at reading emotion body language. Journal of Experimental Child Psychology. 2015;139:35–50. doi: 10.1016/j.jecp.2015.04.012.

- Rajhans P, Jessen S, Missana M, Grossmann T. Putting the face in context: Body expressions impact facial emotion processing in human infants. Developmental Cognitive Neuroscience. 2016;19:115–121. doi: 10.1016/j.dcn.2016.01.004.

- Rose SA, Futterweit LR, Jankowski JJ. The relation of affect to attention and learning in infancy. Child Development. 1999;70(3):549–559. doi: 10.1111/1467-8624.00040.

- Rose SA, Jankowski JJ, Feldman JF. Speed of processing and face recognition at 7 and 12 months. Infancy. 2002;3(4):435–455. doi: 10.1207/S15327078IN0304_02.

- Ross PD, Polson L, Grosbras M. Developmental changes in emotion recognition from full-light and point-light displays of body movement. PLoS ONE. 2012;7(9):e44815. doi: 10.1371/journal.pone.0044815.

- Sauter DA, Eisner F, Calder AJ, Scott SK. Perceptual cues in nonverbal vocal expressions of emotion. The Quarterly Journal of Experimental Psychology. 2010;63(11):2251–2272. doi: 10.1080/17470211003721642.

- Sauter DA, Eisner F, Ekman P, Scott SK. Cross-cultural recognition of basic emotions through nonverbal emotional vocalizations. PNAS. 2010;107(6):2408–2412. doi: 10.1073/pnas.0908239106.

- Simion F, Di Giorgio E, Leo I, Bardi L. The processing of social stimuli in early infancy: From faces to biological motion perception. Progress in Brain Research. 2011;189:173–193. doi: 10.1016/B978-0-444-53884-0.00024-5.

- Slaughter V, Heron-Delaney M, Christie T. Developing expertise in human body perception. In: Slaughter V, Brownell CA, editors. Early development of body representations. New York, NY, US: Cambridge University Press; 2012. pp. 81–100.

- Slaughter V, Stone VE, Reed C. Perception of faces and bodies. Similar or different? Current Directions in Psychological Science. 2004;13(6):219–223. http://www.jstor.org/stable/20182961.

- Spelke ES. Infants’ intermodal perception of events. Cognitive Psychology. 1976;8(4):553–560. doi: 10.1016/0010-0285(76)90018-9.

- Stienen BMC, Tanaka A, de Gelder B. Emotional voice and emotional body postures influence each other independently of visual awareness. PLoS ONE. 2011;6(10):e25517. doi: 10.1371/journal.pone.0025517.

- Tuminello ER, Davidson D. What the face and body reveal: In-group emotion effects and stereotyping of emotion in African American and European American children. Journal of Experimental Child Psychology. 2011;110(2):258–274. doi: 10.1016/j.jecp.2011.02.016.

- Vaillant-Molina M, Bahrick LE, Flom R. Young infants match facial and vocal emotional expressions of other infants. Infancy. 2013;18(S1):E97–E111. doi: 10.1111/infa.12017.

- Walker AS. Intermodal perception of expressive behaviors by human infants. Journal of Experimental Child Psychology. 1982;33:514–535. doi: 10.1016/0022-0965(82)90063-7.

- Walker-Andrews AS. Infants’ perception of expressive behaviors: Differentiation of multimodal information. Psychological Bulletin. 1997;121(3):437–456. doi: 10.1037/0033-2909.121.3.437.

- Walker-Andrews AS. Emotions and social development: Infants’ recognition of emotions in others. Pediatrics. 1998;102(5):1268–1271.

- Zieber N, Kangas A, Hock A, Bhatt RS. Infants’ perception of emotion from body movements. Child Development. 2014a;85(2):675–684. doi: 10.1111/cdev.12134.

- Zieber N, Kangas A, Hock A, Bhatt RS. The development of intermodal emotion perception from bodies and voices. Journal of Experimental Child Psychology. 2014b;126:68–79. doi: 10.1016/j.jecp.2014.03.005.

- Zieber N, Kangas A, Hock A, Bhatt RS. Body structure perception in infancy. Infancy. 2015;20:1–17. doi: 10.1111/infa.12064.