Abstract

Background

Optimal visualization of the operative field and methods that additionally provide supportive optical information form the basis for target-directed and successful surgery. This article strives to give an overview of current enhanced visualization techniques in visceral surgery and to highlight future developments.

Methods

The article was written as a comprehensive review on this topic and is based on a MEDLINE search and ongoing research from our own group and from other working groups.

Results

Various techniques for enhanced visualization are described, comprising augmented reality, unspecific and targeted staining methods, and optical modalities such as narrow-band imaging. All of them facilitate surgical performance; however, because randomized controlled trials are lacking for most of the innovations reported on, the available evidence is low.

Conclusion

Many new visualization technologies are emerging with the aim of improving our perception of the surgical field, leading to less invasive, target-oriented, and elegant treatment forms that are of significant benefit to our patients.

Keywords: Enhanced visualization, Augmented reality, Optical biopsy, Narrow-band imaging, Fluorescence, Image-guided surgery, Trauma reduction

Introduction

When performing surgery, the main sensory input is derived from vision, as palpation can only identify larger structures such as organs, vessels, or solid tumors. Only vision allows the surgeon to differentiate well-perfused from ischemic bowel loops, to identify distinct anatomic regions, and to preserve small vessels and veins, and it immediately alerts the surgeon when a cut made too deeply results in acute bleeding. This holds true for conventional open surgery and is even more important in laparoscopic surgery, where palpatory feedback is dramatically reduced. This side effect of trauma reduction, the increasing dependence on visual guidance, is an attribute of all atraumatic modalities (including interventional radiology and endoscopy) and will play a decisive role in the impending dissemination of robotic surgery.

However, it is not only trauma reduction that contributes to the rising significance of vision in visceral surgery but also the general development in medicine, and in oncology in particular. For example, in the majority of patients, neoadjuvant treatment regimens transform the well-palpable tumor mass of an esophageal or gastric cancer into a tiny scar whose location can only be estimated visually on the basis of preoperative imaging and anatomical landmarks. In addition, screening programs and general public health awareness result in an increasing percentage of small, impalpable, early tumors being detected. The concept of ‘no-touch isolation’ has become a paradigm in oncologic surgery, and cancer is nowadays treated by wide excision following germ layers and related anatomical compartments, while tactile exploration is almost entirely omitted. This is best seen in mesorectal excision for rectal cancer, where surgery follows the well-defined thin layer of Waldeyer's fascia. Lastly, we have learned that cytoreductive surgery and resection of microscopically small tumor seeds can improve the survival of patients with metastatic cancer, and here again only visual assessment of the tissue is sensitive enough to identify tumor spots.

As the borders of surgery are pushed towards greater radicality while complication rates and overall trauma are reduced, enhanced visualization support plays a central, if not pivotal, role. This article gives insight into current developments and methods for enhanced visualization in surgery and distinguishes between the following topics:

i) augmented reality (AR) that provides preoperative imaging in the operating room (OR);

ii) staining techniques that change the appearance of tissue on the basis of biological or immunohistopathological characteristics;

iii) imaging methods that use optical technologies for tissue differentiation in the absence of any markers.

Methods

A MEDLINE search was performed by applying the following search terms: ‘surgery’, ‘enhanced visualization’, ‘augmented reality’, ‘fluorescence’, ‘tissue differentiation’, ‘image-guided’, ‘visualization techniques’, ‘optical methods’, ‘optical navigation’.

Relevant articles from this search were selected by 2 independent reviewers after careful screening for significance and currency and are presented in this article. As most articles were case reports or technical reports on a variety of heterogeneous techniques, a systematic review on this topic was deemed unwarranted.

This article does not strive for a complete review of the current literature but intends to provide a comprehensive overview of relevant topics.

Augmented Reality

Since the very beginning of medical imaging, surgeons have asked for preoperative data to be available for intraoperative guidance. The ultimate wish of surgeons was no longer to be compelled to mentally fuse all available information with the actual OR scenario but simply to blend these data over the operative field, thus augmenting the real world with computer-based knowledge (fig. 1). Especially in liver and brain surgery and in fields with adjacent vulnerable structures such as vessels and nerves, AR was expected to reduce the complication rate and offer an advanced surgical approach. AR was established to highlight vulnerable subsurface structures in order to make surgery safer and more goal-directed. To this end, preoperative image data are displayed with relevant anatomical structures segmented and accentuated (e.g. by coloring or adaptation of thresholds). These processed data are then superimposed onto the operative field in correct anatomical alignment to provide additional information for the surgeon. Another goal of AR is the inclusion of preoperative planning data, such as cutting trajectories, virtual tumor models, and access routes (e.g. optimized trocar sites), to guide the surgeon through the operation [1].

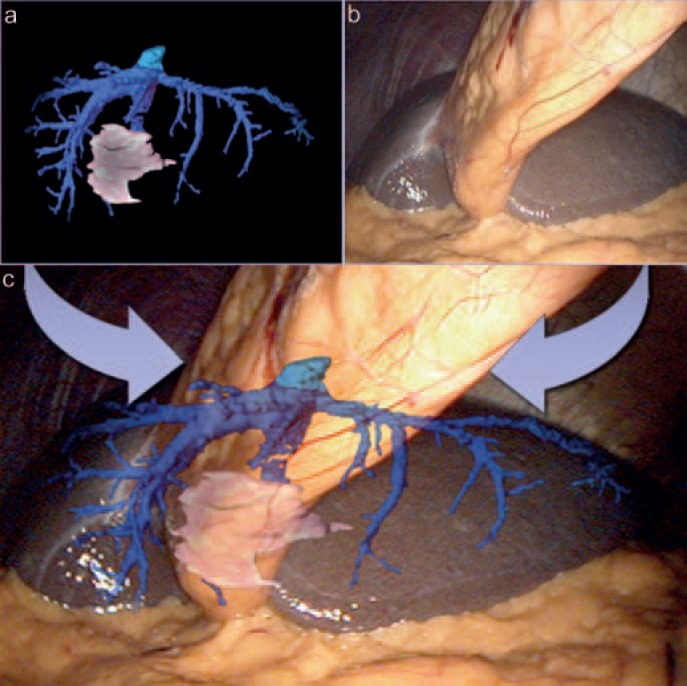

Fig. 1.

a In augmented reality, preoperative imaging is processed (segmented, volume rendered, etc.) and b superimposed onto the current operative field. c The resulting image eases the identification and preservation of hidden structures (MITI, Technical University, Munich, Germany).
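The superimposition step in panel b can be illustrated, under simplifying assumptions, as per-pixel alpha compositing: wherever a segmented structure has already been mapped into the camera image, its color is mixed with the video pixel. The Python sketch below is a minimal illustration, not the method of any system cited here; the function names, RGB values, and opacity are hypothetical, and the registration that produces the mask is assumed to have been done beforehand.

```python
def blend_pixel(video_rgb, overlay_rgb, alpha):
    """Alpha-composite one overlay color onto one video pixel (alpha = overlay opacity)."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return tuple(round((1.0 - alpha) * v + alpha * o)
                 for v, o in zip(video_rgb, overlay_rgb))


def augment_frame(frame, mask, color, alpha=0.4):
    """Tint pixels where the (already registered) segmentation mask is True; keep the rest."""
    return [[blend_pixel(px, color, alpha) if inside else px
             for px, inside in zip(row, mask_row)]
            for row, mask_row in zip(frame, mask)]


# Hypothetical 1x2 frame: only the first pixel lies over a hidden vessel in the model.
frame = [[(200, 120, 110), (190, 115, 105)]]
mask = [[True, False]]
print(augment_frame(frame, mask, color=(0, 0, 255)))
```

A lower `alpha` keeps more of the real tissue visible; a fixed opacity is simply the most basic choice.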

For visualization in AR, different display technologies can be used to augment the surgical field, of which a separate video screen is the most frequently used solution. Although easy to install, it requires the surgeon to alternate his viewing direction between this screen and the surgical monitor [2]. In laparoscopic surgery, the surgical monitor itself or a head-mounted display allows for fused visualization that can be instantly activated and adapted to the surgeon's needs. A more ergonomic option was described by Gavaghan et al. [3], who introduced a projection system that blends the computer data directly onto the surgical field. More recently, navigated tablets have been developed that can be moved freely over the region of interest to augment the scene with relevant information, as shown by Kenngott et al. [4].

To provide intuitive guidance, AR requires alignment of the actual operative situation with the preoperative imaging, which is achieved by registration of representative landmarks and subsequent calibration of the data. Different registration techniques have been described to date, including point markers, (organ) surface registration, volume-based techniques, and simple manual registration. Depending on the registration technique applied and its accuracy, errors ranging from 2 to 8 mm can be incurred [5]. In contrast to ‘solid organ surgery’ (e.g. ear/nose/throat (ENT) surgery, neurosurgery), organ deformation and displacement caused by manipulation and breathing in visceral surgery impair the accuracy of this registration and hinder broad application of the approach. Accordingly, AR in visceral surgery is to date used only in select institutions and requires repeated recalibration of the registration as well as remodeling of the applied data.
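Point-based registration, the first technique listed above, can be sketched as a least-squares fit of a rotation and a translation that maps landmark positions from the preoperative images onto the same landmarks located intraoperatively. The Python sketch below is a simplification that assumes paired landmarks in a 2D plane and a rigid (non-deforming) organ; clinical systems solve the 3D problem (e.g. with a singular value decomposition), and the function names and coordinates here are hypothetical. The residual it reports, the fiducial registration error, describes the fit at the landmarks only and is not the same as the error at a target such as a tumor.

```python
import math


def register_rigid_2d(source, target):
    """Least-squares rotation angle and translation mapping source points onto target points."""
    n = len(source)
    if n != len(target) or n < 2:
        raise ValueError("need at least 2 paired landmarks")
    # Centroids of both landmark sets.
    sx = sum(p[0] for p in source) / n
    sy = sum(p[1] for p in source) / n
    tx = sum(q[0] for q in target) / n
    ty = sum(q[1] for q in target) / n
    # Sum dot and cross products of the centred pairs; optimal angle = atan2(cross, dot).
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(source, target):
        ax, ay = px - sx, py - sy
        bx, by = qx - tx, qy - ty
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated source centroid onto the target centroid.
    translation = (tx - (c * sx - s * sy), ty - (s * sx + c * sy))
    return theta, translation


def transform(theta, translation, point):
    """Apply the rigid transform to one 2D point."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = point
    return (c * x - s * y + translation[0], s * x + c * y + translation[1])


def fiducial_registration_error(theta, translation, source, target):
    """Root-mean-square distance between mapped source landmarks and target landmarks."""
    sq = [math.dist(transform(theta, translation, p), q) ** 2
          for p, q in zip(source, target)]
    return math.sqrt(sum(sq) / len(sq))


# Hypothetical landmarks (mm): preoperative CT vs. intraoperative positions,
# rotated by 90 degrees and shifted by (10, 5); the last set adds a 1 mm localization error.
ct_points = [(0.0, 0.0), (10.0, 0.0), (0.0, 20.0)]
or_points = [(10.0, 5.0), (10.0, 16.0), (-10.0, 5.0)]
theta, shift = register_rigid_2d(ct_points, or_points)
print(math.degrees(theta), shift)
print(fiducial_registration_error(theta, shift, ct_points, or_points))
```

Because the model is rigid, any organ deformation between imaging and surgery shows up as residual error, which is why repeated recalibration is needed in visceral surgery.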

To enable such recalibration, additional imaging modalities, especially ultrasonography, are applied to continuously update the existing data according to the current situation. In 2012, for example, Kleemann et al. [6] published a case report on navigated liver surgery in which the orientation of the liver was registered by laparoscopic ultrasound, facilitating repeated recalibration of the system during the intervention (fig. 2) [6, 7].
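In a navigated ultrasound setup of this kind, a point seen in the ultrasound image is commonly related to the preoperative images through a chain of coordinate transforms: probe calibration (image to probe), tracking (probe to tracker), and patient registration (tracker to preoperative images). The Python sketch below composes such a chain with 4×4 homogeneous matrices; the frame names and all numerical values are hypothetical and are not taken from the Lübeck system, and pixel-to-millimetre scaling is omitted. In this picture, recalibration during the intervention corresponds to re-estimating the last transform in the chain.

```python
import math


def matmul(a, b):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(dx, dy, dz):
    return [[1.0, 0.0, 0.0, dx],
            [0.0, 1.0, 0.0, dy],
            [0.0, 0.0, 1.0, dz],
            [0.0, 0.0, 0.0, 1.0]]


def rotation_z(degrees):
    c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
    return [[c, -s, 0.0, 0.0],
            [s, c, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def apply(m, point):
    """Map a 3D point with a homogeneous 4x4 transform."""
    v = (*point, 1.0)
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(3))


# Hypothetical transforms in mm, named T_<to>_<from>.
T_probe_image = translation(0.0, 0.0, 12.0)                                  # probe calibration
T_tracker_probe = matmul(translation(150.0, -40.0, 300.0), rotation_z(90))   # tracker reading, per frame
T_ct_tracker = translation(-100.0, 20.0, -250.0)                             # registration, redone on recalibration

# Compose right to left: image -> probe -> tracker -> CT.
T_ct_image = matmul(T_ct_tracker, matmul(T_tracker_probe, T_probe_image))
print(apply(T_ct_image, (5.0, 30.0, 0.0)))
```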

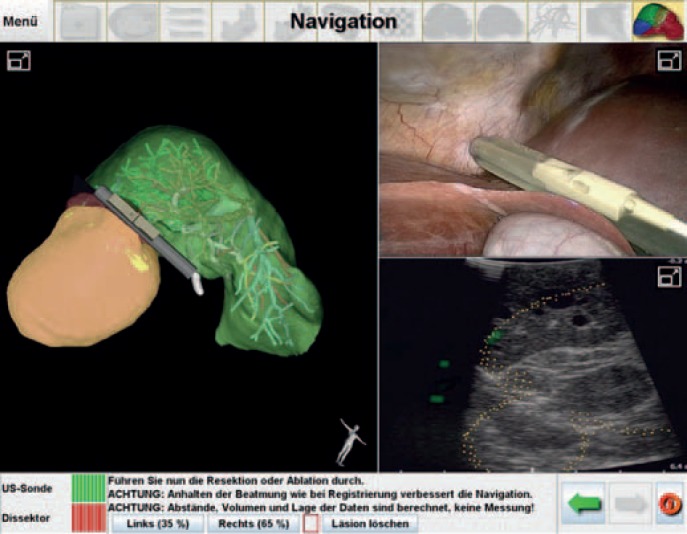

Fig. 2.

Navigated liver surgery with ultrasonic guidance as performed at the University of Lübeck. The right upper image displays the laparoscopic view with the ultrasound probe and the right lower image the respective ultrasound image. By means of a navigation system, the probe is integrated in the preoperative imaging for augmented reality (left image) (Prof. Dr. M. Kleemann, University of Lübeck, Germany).

Continuous registration of organ surfaces by laser scanning technologies [8] or time-of-flight cameras [9] represents a more sophisticated approach to updating both the location and the shape of visceral organs. These modalities could overcome current limitations of AR in visceral surgery, as they allow for ergonomic, automated, and continuous real-time registration that does not alter the workflow.
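Many continuous-wave time-of-flight cameras estimate distance from the phase shift between emitted amplitude-modulated light and its reflection; because the light travels to the surface and back, the distance is d = c·Δφ/(4π·f_mod). The Python sketch below evaluates this relation. The modulation frequency and phase value in the usage lines are hypothetical, and real cameras add corrections for noise, multipath reflections, and phase unwrapping that are omitted here.

```python
import math

C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def tof_distance_m(phase_shift_rad, f_mod_hz):
    """Distance (m) from the measured phase shift of amplitude-modulated light."""
    # Round trip: the light covers 2*d, hence d = c * phi / (4 * pi * f_mod).
    return C_M_PER_S * phase_shift_rad / (4.0 * math.pi * f_mod_hz)


def unambiguous_range_m(f_mod_hz):
    """Largest distance measurable before the phase wraps around 2*pi."""
    return C_M_PER_S / (2.0 * f_mod_hz)


# Usage with a hypothetical 20 MHz modulation frequency.
f_mod = 20e6
print(unambiguous_range_m(f_mod))
print(tof_distance_m(0.05, f_mod))
```

With such a modulation frequency the unambiguous range (about 7.5 m) is far larger than the working distances inside the abdomen, so phase wrapping is not the limiting factor in this setting.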

Besides the aforementioned techniques, different 3-dimensional (3D) surface reconstruction methods are available today [10] that can be used not only to establish AR but also to supply surgeons with depth information, which in turn can be used to navigate mechatronic devices and prevent them from colliding. One of the most promising techniques in this field is structured light: patterns projected onto the operative field reveal the outline of an organ and, subsequently, its 3D shape (fig. 3).

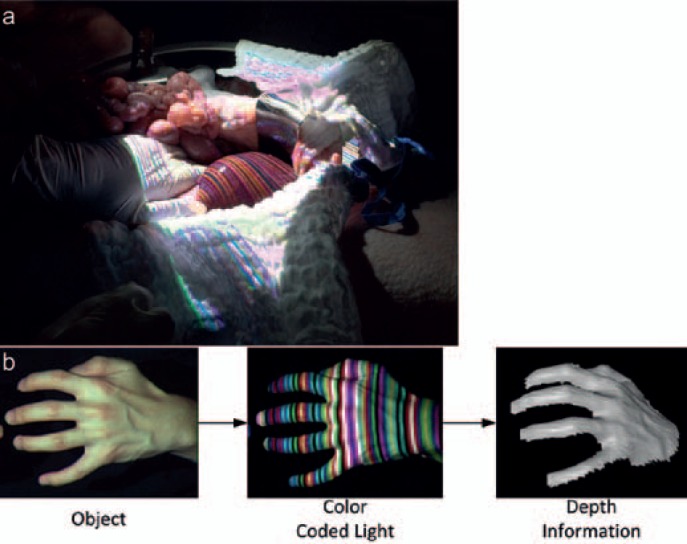

Fig. 3.

Structured light is a technology used to register depth information about a scenario. Colored stripe patterns are used to identify the outline of a 3D object. By projecting the respective patterns for short instances onto the operative field, the depth information is gained unperceived by the surgeon. a Upper image shows intraoperative application; b bottom pictures display the principle of structured light with an object in white light (left), during registration with structured light (middle), and the reconstruction (right) (MITI, Technical University, Munich, Germany).
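The depth recovery described in the caption can be sketched for the simplest case: a calibrated, rectified projector-camera pair, in which each projected stripe is identified by a binary code and depth follows from triangulation as z = f·b/d (focal length f in pixels, baseline b, disparity d in pixels). The Python sketch below uses Gray-code stripe patterns, one common coding scheme, rather than the colored stripes shown in the figure; the stripe width, focal length, baseline, pixel positions, and the disparity sign convention are hypothetical.

```python
def gray_to_index(bits):
    """Decode a Gray-code bit sequence (most significant bit first) to a stripe index."""
    index = 0
    binary_bit = 0
    for g in bits:
        binary_bit ^= g              # each binary bit is the XOR of all Gray bits so far
        index = (index << 1) | binary_bit
    return index


def depth_mm(focal_px, baseline_mm, disparity_px):
    """Triangulated depth for a rectified projector-camera pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_mm / disparity_px


# Hypothetical observation: camera column 104 saw the on/off sequence [0, 1, 1, 0]
# over four projected patterns.
STRIPE_WIDTH_PX = 16                          # projector columns per stripe
stripe = gray_to_index([0, 1, 1, 0])          # -> stripe 4
projector_col = stripe * STRIPE_WIDTH_PX      # -> column 64
disparity = 104 - projector_col               # -> 40 px
print(depth_mm(focal_px=800, baseline_mm=5.0, disparity_px=disparity))
```

Gray codes are a frequent choice because neighboring stripes differ in only one bit, so a decoding error at a stripe boundary shifts the result by at most one stripe.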

Until these technologies become available, intraoperative imaging (computed tomography/magnetic resonance imaging (MRI)/fluoroscopy) with registration of firmly attached optical markers can be applied as an alternative and is ideally implemented in a hybrid OR. Acquiring intraoperative data increases the accuracy of the imaging data, as it can overcome errors caused by patient positioning and can be scheduled for a key step of an intervention (e.g. after mobilization of an organ and partial resection). However, scanners available for intraoperative imaging are of limited quality and, in the case of MRI, require suitable equipment. Furthermore, preoperative planning and segmentation of volume-rendered data are not available for these intraoperative data sets, lowering the level of aid provided.

Not only imaging data are of interest for AR in surgery but also any other data source such as patient parameters (e.g. heart rate, blood pressure) or surgery-related data (e.g. transmitted forces [11]) that can compensate for the lost tactile feedback.

Clinical Application of AR

Clinical use of AR originated predominantly in neurosurgery, where it proved superior in guiding interventions: in a preclinical study, Marcus et al. [12] compared different virtual navigation systems in 50 novices and showed that the neurosurgical task was completed significantly faster with AR and always-on wire-mesh support. Further evidence comes from Meola et al. [13], who summarized the current literature on AR-guided neurosurgery, including 18 studies with a total of 195 successfully resected lesions. The authors found AR to be a reliable and versatile tool; however, prospective randomized trials are lacking, and the small patient numbers reported do not allow for a reliable assessment.

In visceral surgery, available reports focus on hepatobiliary interventions, such as the publication by Okamoto et al. [14], who reported on 19 patients operated on for pancreatic and liver diseases in whom AR was applied to define the resection line and identify hidden vessels. The authors concluded that AR was feasible but required substantial time for preparation (image segmentation) and set-up. Besides registration accuracy, they reported difficulty in defining suitable outcome parameters to validate AR, especially as it was preferentially applied in complex operations. Pessaux et al. [15] operated on 3 patients with liver tumors using a robotic system and successfully performed AR-guided anatomical resection. In all patients, AR allowed for correct dissection of the tumor with precise and safe recognition of all major vascular structures and was easily implemented; no adverse events were registered. A comparable technique in open surgery was published by Tang et al. [16] for the resection of a hilar cholangiocarcinoma, using AR to identify the hilar structures and guide surgery. Further reports come from other surgical fields, mainly ENT, craniofacial surgery, and urology [17], stating that AR is likewise applicable in these fields; however, neither randomized trials nor large clinical studies exist to prove the superiority of AR over standard techniques. Moreover, accuracy, user-friendliness, and the time needed to prepare and implement the virtual data are still criticized, and addressing them will demand intelligent analytical tools such as random forests and Bayesian networks.

Tissue Staining

Marking relevant regions by staining is a simple and reproducible approach to tissue differentiation and is already well established in visceral surgery. In this context, gastroenterologists have for years injected different kinds of dye (methylene blue, India ink, etc.) into the intestinal wall to visualize the location of tumors, and surgeons inject pigments into the skin to identify sentinel lymph nodes [18]. Advantageously, no alignment of preoperative data with the intraoperative situs is necessary, as the demarcated site simply appears stained. Although easy to perform, this technique has several drawbacks, such as pigment spillage (overstaining), non-localizability of markings due to injection into secluded regions (e.g. the mesentery), bleaching effects, and dependence on correct injection [19].

To overcome these problems, researchers worldwide strive to develop new markers that provide superior marking quality (optical stability and contrast, tissue adherence, preservation of the unmarked tissue) and/or higher tissue specificity. The main focus here is on fluorescent dyes.

Unspecific (Non-Targeted) Markers

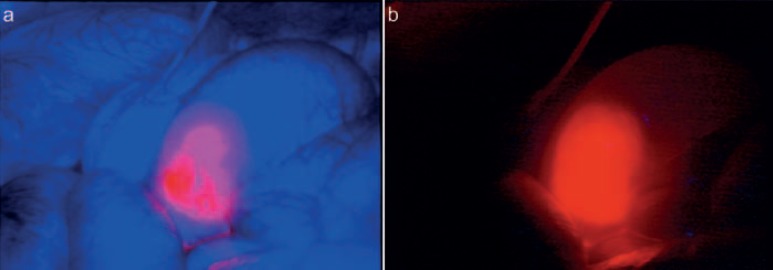

Indocyanine green (ICG) is the most popular marker, not only because it is already approved for clinical application but also because of its favorable characteristics. ICG is a fluorophore that emits light in the so-called near-infrared window (700–930 nm), a range of the light spectrum in which absorption by blood, water, and oxygen is lowest and the fluorescence signal is therefore strongest. To obtain a signal, ICG has to be excited with light of a wavelength within its absorption range, and the emitted signal must be registered by dedicated camera systems [20]. ICG can be injected intravenously and is suitable for visualizing tissue perfusion, e.g. of gastrointestinal (GI) anastomoses or transplants (fig. 4). In a recent publication, Boni et al. [21] evaluated ICG perfusion analysis of low rectal anastomoses and compared a group of 42 patients to a case-matched historical control group. Although the reduction in the leakage rate was not significant, the authors concluded that this technique is effective in reducing leakage after intestinal anastomosis. A systematic review of perfusion analysis by Degett et al. [22] summarizes the current literature on this topic and concludes that perfusion analysis is a promising tool to reduce anastomotic complications; however, the evidence was low owing to the lack of prospective comparative trials. Of note, even in the group of patients with assessed perfusion, almost 4% of patients developed an anastomotic leak. In plastic surgery, too, the quality of ICG perfusion correlated well with postoperative healing and survival of pedicled flaps [23, 24]. ICG is excreted via the liver and can visualize the bile ducts with high sensitivity, even in obese patients [25, 26]. As liver tumors often affect intrahepatic bile ducts, accumulation of ICG in liver tumors can be used to identify metastases and facilitate resection, as shown by van der Vorst et al. [27] (so-called ‘enhanced permeability and retention effect’ [28]). With an adequate interval between application of ICG and intraoperative assessment, they found a fluorescent rim surrounding colorectal liver metastases. ICG can also be injected locally or into separate vessels to demarcate anatomical liver segments and support their image-guided resection [29, 30]. Other applications of ICG angiography concern lymph vessels and nodes during sentinel lymph node mapping or the surgical treatment of lymphedema, for which several studies have shown the applicability of this approach in principle [31]. Generally, owing to its accumulation in certain tissues, ICG fluorescence imaging also allows for improved identification of tumor seeds in peritoneal carcinomatosis, improving surgical therapy [32].

Fig. 4.

a, b Bowel perfusion analysis by indocyanine green injection. The fluorescent signal indicates good perfusion of the anastomosis, a prerequisite for its subsequent healing (MITI, Technical University Munich, Germany).
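Perfusion assessment as described above is largely visual. One way to make it less observer-dependent, assumed here for illustration rather than taken from the cited studies, is to extract simple parameters from the fluorescence intensity curve of a region of interest, such as the time from inflow onset to peak and the steepest rise. In the Python sketch below, the onset threshold (10% of the amplitude above baseline), the parameter names, and the sample curve are hypothetical, and no clinical cut-off values are implied.

```python
def perfusion_parameters(times_s, intensity, onset_fraction=0.1):
    """Simple descriptors of a fluorescence intensity-time curve for one region of interest."""
    if len(times_s) != len(intensity) or len(times_s) < 2:
        raise ValueError("need two equally long series with at least 2 samples")
    baseline = intensity[0]                  # assume the first sample precedes dye arrival
    peak_idx = max(range(len(intensity)), key=intensity.__getitem__)
    amplitude = intensity[peak_idx] - baseline
    if amplitude <= 0:
        raise ValueError("no fluorescence inflow detected")
    # Inflow onset: first sample exceeding baseline by onset_fraction of the amplitude.
    threshold = baseline + onset_fraction * amplitude
    onset_idx = next(i for i, v in enumerate(intensity) if v > threshold)
    # Steepest rise between consecutive samples (intensity units per second).
    slopes = [(intensity[i + 1] - intensity[i]) / (times_s[i + 1] - times_s[i])
              for i in range(len(times_s) - 1)]
    return {
        "time_to_peak_s": times_s[peak_idx] - times_s[onset_idx],
        "max_slope_per_s": max(slopes),
        "amplitude": amplitude,
    }


# Hypothetical curve sampled every 5 s after intravenous ICG injection.
t = [0, 5, 10, 15, 20, 25, 30]
signal = [2, 3, 10, 40, 70, 75, 72]
print(perfusion_parameters(t, signal))
```

Absolute intensities depend on camera distance, gain, and dose, which is why time-based parameters are used in this sketch rather than raw brightness.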

A disadvantage is that the excitation and emission spectra of ICG lie close together, which requires sharp filters to isolate the signal and results in a weak signal. Especially if the object of interest is covered by surrounding tissue (e.g. fat), this weak signal can impede its identification, as it cannot penetrate tissue thicker than 8–10 mm [28, 33, 34].
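The depth limitation can be illustrated with a deliberately simplified model in which excitation light travelling in and fluorescence travelling out are each attenuated exponentially with depth, so that the detected fraction is exp(-(mu_ex + mu_em)·z). The Python sketch below solves this for the depth at which the signal falls to a detection threshold. The attenuation coefficients and the 1% threshold are hypothetical values, chosen only to show how a limit in the range quoted above can arise; the model ignores scattering geometry and tissue heterogeneity.

```python
import math


def relative_signal(depth_mm, mu_ex_per_mm, mu_em_per_mm):
    """Fraction of the surface signal detected from a fluorescent source at depth_mm."""
    # Excitation is attenuated on the way in, emission on the way out.
    return math.exp(-(mu_ex_per_mm + mu_em_per_mm) * depth_mm)


def max_detectable_depth_mm(detection_fraction, mu_ex_per_mm, mu_em_per_mm):
    """Depth at which the relative signal falls to detection_fraction."""
    if not 0.0 < detection_fraction < 1.0:
        raise ValueError("detection_fraction must lie strictly between 0 and 1")
    return math.log(1.0 / detection_fraction) / (mu_ex_per_mm + mu_em_per_mm)


# Hypothetical effective attenuation of 0.25 /mm at both wavelengths and a
# camera that needs at least 1% of the surface signal.
z_max = max_detectable_depth_mm(0.01, 0.25, 0.25)
print(f"detectable down to about {z_max:.1f} mm")
```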

Besides ICG, a small number of alternative fluorescent markers are approved for clinical application but have remained in their niche. Methylene blue, for example, is useful in the detection of insulinomas of the pancreas but, apart from this affinity, has inferior characteristics compared to ICG [35]. Fluorescein, which is well known in ophthalmology, can also be applied for the identification of tumor deposits but is used almost exclusively in neurosurgery [36]. This also applies to 5-ALA, which additionally has some indications in diagnostic laparoscopy, especially in gastric cancer [37].

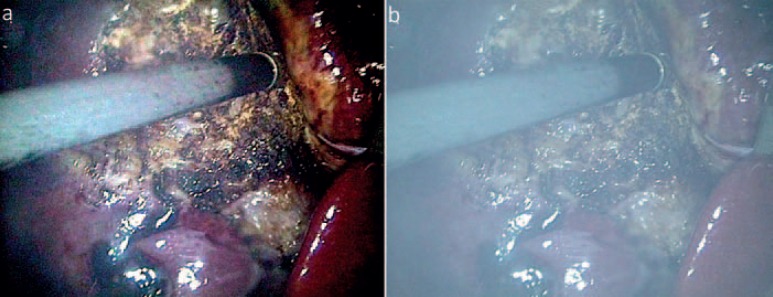

Besides these traditional, mostly approved markers, several fluorescent dyes have been described in recent years but have not yet entered clinical practice. In this context, the group of cyanine dyes (e.g. Cy-5.5, Cy-7) and CW800 deserve mention [38], as do nanoparticles that offer superior optical characteristics and other favorable features (fig. 5) [39]. However, the pending approval for clinical application as well as remaining skepticism concerning biocompatibility (toxic components, gene alteration, etc.) currently hamper their further development [20].

Fig. 5.

a, b As compared to traditional fluorophores such as indocyanine green or fluorescein, fluorescent nanoparticles (QDOTS) offer favorable attributes, e.g. higher quantum yield, high tissue contrast, and no bleaching. In the future, they could be used for marking intestinal lesions (MITI, Technical University, Munich, Germany).

All the above-mentioned dyes share a certain nonspecificity: they demarcate tissue according to their pharmacokinetics rather than by targeting specific tissue characteristics. This results in low signal contrast and interfering background noise (staining of all perfused tissue), which lowers the overall sensitivity. A usable signal can only be achieved by taking into account the distribution time of the respective marker.
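Why the timing matters can be illustrated with a toy model in which marker concentration in tumor and in background tissue both decline mono-exponentially, the background faster than the tumor. The tumor-to-background ratio (TBR) then rises over time while the absolute signal falls, so imaging is useful only within a window. The Python sketch below scans a time grid for that window; the amplitudes, rate constants, thresholds, and grid are all hypothetical, and real pharmacokinetics are considerably more complex.

```python
import math


def concentration(t_h, amplitude, k_per_h):
    """Mono-exponential washout (a simplifying assumption)."""
    return amplitude * math.exp(-k_per_h * t_h)


def imaging_window(a_tumor, k_tumor, a_bg, k_bg, min_tbr, min_signal,
                   t_max_h=72.0, step_h=0.5):
    """First and last grid time (h) with TBR >= min_tbr and tumor signal >= min_signal."""
    valid = []
    for i in range(int(t_max_h / step_h) + 1):
        t = i * step_h
        tumor = concentration(t, a_tumor, k_tumor)
        background = concentration(t, a_bg, k_bg)
        if tumor >= min_signal and tumor / background >= min_tbr:
            valid.append(t)
    # With mono-exponential curves both conditions are monotonic in t,
    # so the valid times form one contiguous window.
    return (valid[0], valid[-1]) if valid else None


# Hypothetical marker: background clears four times faster than tumor.
print(imaging_window(a_tumor=1.0, k_tumor=0.05, a_bg=1.0, k_bg=0.2,
                     min_tbr=2.0, min_signal=0.1))
```

If the background cleared more slowly than the tumor, the ratio would never rise and the function would return `None`, which mirrors the low contrast of purely pharmacokinetic staining.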

Specific (Targeted) Markers

The problem of nonspecificity disappears when the (fluorescent) marker is linked to a specific antibody so that the dye binds to the respective epitope. This makes it easy to differentiate healthy from tumorous tissue, allowing for so-called ‘image-guided surgery’, which facilitates complete tumor resection while preserving most of the surrounding healthy tissue. This principle has attracted worldwide attention since its first application in humans in 2011, when van Dam et al. [40] used folate-labelled fluorescein isothiocyanate (FITC) for image-guided surgery of peritoneal carcinomatosis from ovarian cancer. Several conjugates have been described so far that adjust the intracorporeal distribution of dyes to specific needs or that allow for specific demarcation of molecular cell surface properties [41]. However, all available markers have so far only been applied in small studies or animal models [42], although various human trials have been initiated [28]. As disease-specific contrast agents must first overcome regulatory hurdles, simple modifications of current fluorophores that also increase specificity might be a valuable alternative. In this context, conjugating ICG to polyethylene glycol (PEG) improved the identification of lymphatic drainage and enabled Proulx et al. [43] to identify dysfunctional lymph vessels in the case of metastatic tumor spread. Alternatively, tissue-inherent enzymes can activate modified fluorescent probes and thereby allow for specific marking, as demonstrated by Wunderbaldinger et al. [44]. Targeting tumor-specific antigens with formulated dyes is currently the main focus of research and addresses all kinds of epitopes, from carcinoembryonic antigen (CEA) to vascular endothelial growth factor. As early as 2008, Kaushal et al. [45] were able to identify residual tumor deposits of colorectal and pancreatic cancer using CEA-labelled fluorophores; similarly, Sano et al. [46] used epidermal growth factor receptor/trastuzumab-labelled dyes to localize breast cancer cells. A list of ongoing studies is given in a consensus report published by Rosenthal et al. [47] in 2016. Like [20], this article also addresses the problem of approval of these new dyes and discusses potential solutions and recommendations.

Optical Methods

While in open surgery vision is gained directly through the surgeon's eyes, and enhancement is more or less limited to aspects such as illumination of the surgical field or the use of magnifying glasses, several promising modifications for visualization are available in minimally invasive surgery.

Nowadays, state-of-the-art laparoscopic optics provide high resolution and 3D vision, although their benefit is still debated and difficult to estimate. Nevertheless, Pierre et al. [48] found in 2009 that high-definition (HD) resolution improved surgical performance and eased the identification of fine structures as well as 3D spatial positioning. For 3D visualization, current publications almost exclusively demonstrate its superiority over standard view. In a comprehensive evaluation, our group compared a state-of-the-art HD monitor with a comparable 3D system, an autostereoscopic 3D display, and an artificial display that offered 3D vision of a quality almost comparable to real view [49]. Interestingly, not only novices but also experts profited from stereoscopic view, with an improvement in task performance of almost 20%. Our investigation also addressed workload and objective parameters such as instrument-handling economy. 3D vision consistently showed advantages over standard view; however, it could also be demonstrated that real, not camera-mediated view is still better and that there is room for further improvement. Our results are in line with other publications in this field, such as those by Storz et al. [50] and Sorensen et al. [51], and correct the outdated assumption, derived from older publications, that 3D vision is inferior [52].

Interestingly, and in contrast to the available ‘in-vitro’ evidence, studies comparing 3D vision to standard view during real-life operations generally fail to demonstrate an advantage. For example, Curro et al. [53] evaluated 3D vision during laparoscopic cholecystectomy and found no significant advantage, and a case-control study of laparoscopic adrenalectomy by Agrusa et al. [54] likewise showed no benefit of 3D vision. This may be because the stable laboratory setting facilitates adjustment to 3D working conditions; moreover, the procedures investigated (cholecystectomy and adrenalectomy) are less demanding, and most studies were undertaken with experienced surgeons well trained in 2D vision.

Spectral imaging is another feature that can potentially enhance laparoscopic vision. By selecting or filtering specific wavelengths of visible light, a modified false-color image of the operative field is generated that eases the recognition of different structures such as vessels or tumor deposits. Although widely applied in GI endoscopy for the identification of occult polyps [55], spectral imaging is at present used in surgery only in the GI field, and even there its benefit is questionable. While Schnelldorfer et al. [56] found no advantage of spectral imaging for the detection of tumor deposits, Kikuchi et al. [57] at least observed that dilated vessels were more easily differentiated and hypothesized that this could support tumor diagnosis. In combination with fluorescent markers and/or with better integration into the workflow, however, spectral imaging might offer additional support in the future.
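The band-selection principle can be sketched numerically. Narrow-band imaging systems are commonly described as using bands around 415 nm and 540 nm, where hemoglobin absorbs strongly, so vessels appear dark against the surrounding mucosa. The Python sketch below compares vessel-to-background contrast inside such bands with that in a red band; the reflectance spectra, sampling wavelengths, and band half-width are hypothetical values, not measured data.

```python
def band_mean(spectrum, center_nm, half_width_nm):
    """Mean reflectance of all spectral samples within center_nm +/- half_width_nm."""
    values = [r for wl, r in spectrum.items() if abs(wl - center_nm) <= half_width_nm]
    if not values:
        raise ValueError("no spectral samples inside the band")
    return sum(values) / len(values)


def vessel_contrast(vessel, background, center_nm, half_width_nm=15):
    """Relative darkening of a vessel against background tissue within one band."""
    r_vessel = band_mean(vessel, center_nm, half_width_nm)
    r_background = band_mean(background, center_nm, half_width_nm)
    return (r_background - r_vessel) / r_background


# Hypothetical reflectance spectra (wavelength in nm -> reflectance 0..1).
vessel = {410: 0.05, 420: 0.05, 530: 0.20, 540: 0.18, 550: 0.20, 650: 0.60}
mucosa = {410: 0.20, 420: 0.20, 530: 0.40, 540: 0.40, 550: 0.40, 650: 0.60}

for center in (415, 540, 650):
    print(center, round(vessel_contrast(vessel, mucosa, center), 2))
```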

Better known from photography and the press, high dynamic range (HDR) imaging offers higher contrast and a more realistic reproduction of a scene than standard view. Applications in medicine are scarce; however, our group has shown that HDR imaging can also be used to remove fog and dust generated by cutting devices and to reduce glare effects (reflections, light) (fig. 6) [58].

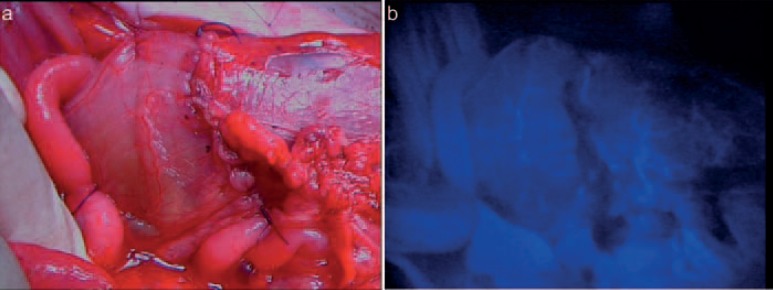

Fig. 6.

a, b High dynamic range imaging is not only useful for extending the dynamic range but can also be supportive by erasing/filtering disturbing image elements such as mist or glare effects (MITI, Technical University, Munich, Germany).
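The basic merging step of HDR imaging can be sketched for a single pixel: several exposures of the same scene are combined, each value divided by its exposure time and weighted so that under- and over-exposed readings count little. The Python sketch below follows this weighted-average idea, as popularized by Debevec and Malik, under the simplifying assumption of a linear 8-bit camera response; it does not reproduce the mist and glare filtering reported in [58], and the pixel values and exposure times are hypothetical.

```python
def hat_weight(z, z_max=255):
    """Weight mid-range pixel values most; values near 0 or z_max count little."""
    return min(z, z_max - z)


def merge_pixel(values, exposure_times_s, z_max=255):
    """Weighted estimate of relative scene radiance for one pixel.

    Assumes a linear camera response, so value / exposure time is proportional to radiance.
    """
    numerator = denominator = 0.0
    for z, t in zip(values, exposure_times_s):
        w = hat_weight(z, z_max)
        numerator += w * z / t
        denominator += w
    if denominator == 0:
        raise ValueError("all exposures are black or saturated")
    return numerator / denominator


# Hypothetical bracketed exposures of one pixel; the longest one is saturated (255).
print(merge_pixel([50, 200, 255], [1.0, 4.0, 16.0]))
```

The saturated reading receives zero weight, so the estimate relies on the two exposures that still carry information.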

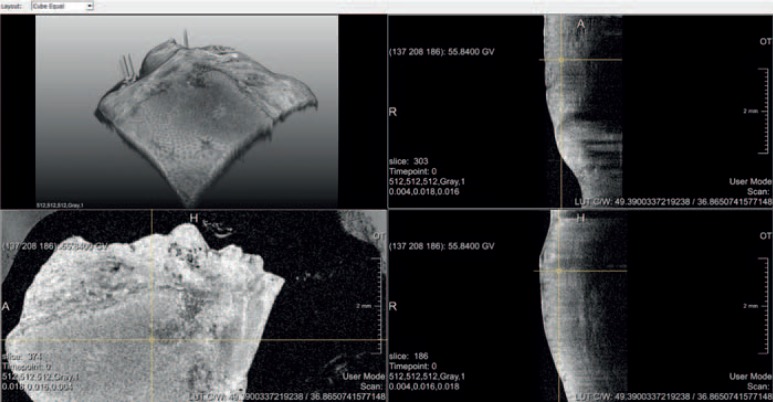

Another field currently in development that may affect surgery in the future is that of microscopic viewing technologies such as confocal laser microscopy (CLM), optoacoustic tomography, and optical coherence tomography (OCT). While in CLM a narrow, laser-illuminated portion of tissue is scanned with a confocal microscope, OCT analyzes the interference between a measuring beam and a reference beam of low-coherence light, the measuring beam being reflected from the tissue of interest. Both CLM and OCT allow for the identification of cellular structures in vivo and thus for optical biopsies, but they will require intensive work and computer support before clinical application. While CLM is preferentially applied in flexible endoscopy to differentiate between benign and malignant alterations [59, 60], OCT is currently an established imaging modality in ophthalmology [60]. In surgery, too, there is a need for a near-microscopic imaging modality applicable in vivo, and we have demonstrated that OCT can differentiate between benign and malignant tissue (fig. 7) [61].

Fig. 7.

Optical coherence tomography (OCT) imaging of resected colon tissue in 2D (left lower and right images) and 3D view (left upper). While the 2D sections allow identification of cellular structures and intestinal layers, a clear differentiation between benign and malignant tissue was possible only by 3D reconstruction (MITI, Technical University, Munich, Germany and Fraunhofer IPT, Aachen, Germany).
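The interference principle described above also sets the axial resolution of OCT: a signal only appears where the path lengths of the measuring and reference beams match within the coherence length of the light, so a broader source spectrum gives finer depth resolution. For a Gaussian spectrum, the standard expression is Δz = (2 ln 2/π)·λ0²/Δλ in air, divided by the group refractive index in tissue. The Python sketch below evaluates it; the center wavelength, bandwidth, and tissue index are hypothetical example values, not the parameters of the system used for fig. 7.

```python
import math


def oct_axial_resolution_um(center_wavelength_nm, bandwidth_nm, group_index=1.0):
    """Coherence-limited axial resolution (FWHM, micrometres) for a Gaussian source spectrum."""
    if bandwidth_nm <= 0 or group_index <= 0:
        raise ValueError("bandwidth and group index must be positive")
    dz_nm = (2.0 * math.log(2.0) / math.pi) * center_wavelength_nm ** 2 / bandwidth_nm
    return dz_nm / group_index / 1000.0


# Hypothetical source: 1300 nm center wavelength, 100 nm bandwidth.
print(oct_axial_resolution_um(1300, 100))                   # in air
print(oct_axial_resolution_um(1300, 100, group_index=1.4))  # assumed tissue group index
```

For these assumed values the resolution is about 7.5 µm in air, i.e. in the range of single cells, which is why OCT is discussed as a candidate for optical biopsy.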

Discussion

Sight is one of our most important senses and the most significant one when performing minimally invasive surgery. It is therefore no surprise that improvements in the visual reproduction of the OR scenario correlate with improvements in surgical performance. With 3D and HD resolution, current laparoscopic devices can now reproduce the operative field in a quality almost equal to real vision.

Notwithstanding, and as shown in this article, beyond realistic and lifelike reproduction, the domain of enhanced visualization and AR has emerged, which provides additional information to the surgeon by highlighting structures of interest, pointing out vulnerable structures, and guiding the surgeon through an intervention. As these new technologies are more easily integrated in laparoscopic surgery, where camera systems have always been part of the armamentarium, we see a chance for this field to evolve further and to turn a former drawback into an advantage. At the same time, every new development should also be transferred to open surgery, albeit with considerably more effort.

While some enhanced visualization modalities such as narrow-band and HDR imaging are easily implemented, the application of new dyes and fluorescent markers is hindered by regulatory aspects that will delay clinical introduction and may prevent some promising developments from ever being used in humans. Surgeons are accordingly called upon to voice their need for enhanced visualization techniques, as these are key to further reducing interventional trauma and improving our performance. As suggested by Tummers et al. [20], Rosenthal et al. [28], and de Boer et al. [47], we believe it permissible to approve new surgical markers on the basis of criteria different from those applied to diagnostic markers, as they are used in only a small number of well-indicated instances and will allow for new forms of therapy that may improve the chances of curing cancer. Nevertheless, the resulting advantage must always be balanced against the potential risks when new approaches are examined.

In conclusion, many new visualization technologies are emerging that promise to improve our perception of the surgical field, leading to less invasive, target-oriented, and elegant treatment forms that are of significant benefit to our patients.

Disclosure Statement

The authors did not provide a conflict of interest statement.

References

1. Bernhardt S, Nicolau SA, Soler L, Doignon C. The status of augmented reality in laparoscopic surgery as of 2016. Med Image Anal. 2017;37:66–90. doi: 10.1016/j.media.2017.01.007.

2. Nicolau S, Soler L, Mutter D, Marescaux J. Augmented reality in laparoscopic surgical oncology. Surg Oncol. 2011;20:189–201. doi: 10.1016/j.suronc.2011.07.002.

3. Gavaghan KA, Peterhans M, Oliveira-Santos T, Weber S. A portable image overlay projection device for computer-aided open liver surgery. IEEE Trans Biomed Eng. 2011;58:1855–1864. doi: 10.1109/TBME.2011.2126572.

4. Kenngott HG, Wagner M, Nickel F, et al. Computer-assisted abdominal surgery: new technologies. Langenbecks Arch Surg. 2015;400:273–281. doi: 10.1007/s00423-015-1289-8.

5. Najmaei N, Mostafavi K, Shahbazi S, Azizian M. Image-guided techniques in renal and hepatic interventions. Int J Med Robot. 2013;9:379–395. doi: 10.1002/rcs.1443.

6. Kleemann M, Deichmann S, Esnaashari H, et al. Laparoscopic navigated liver resection: technical aspects and clinical practice in benign liver tumors. Case Rep Surg. 2012;2012:265918. doi: 10.1155/2012/265918.

7. Shahin O, Beširević A, Kleemann M, Schlaefer A. Ultrasound-based tumor movement compensation during navigated laparoscopic liver interventions. Surg Endosc. 2014;28:1734–1741. doi: 10.1007/s00464-013-3374-9.

8. Raabe A, Krishnan R, Seifert V. Actual aspects of image-guided surgery. Surg Technol Int. 2003;11:314–319.

9. Haase S, Bauer S, Wasza J, et al. 3-D operation situs reconstruction with time-of-flight satellite cameras using photogeometric data fusion. Med Image Comput Comput Assist Interv. 2013;16:356–363. doi: 10.1007/978-3-642-40811-3_45.

10. Maier-Hein L, Mountney P, Bartoli A, et al. Optical techniques for 3D surface reconstruction in computer-assisted laparoscopic surgery. Med Image Anal. 2013;17:974–996. doi: 10.1016/j.media.2013.04.003.

11. Horeman T, Rodrigues SP, van den Dobbelsteen JJ, et al. Visual force feedback in laparoscopic training. Surg Endosc. 2012;26:242–248. doi: 10.1007/s00464-011-1861-4.

12. Marcus HJ, Pratt P, Hughes-Hallett A, et al. Comparative effectiveness and safety of image guidance systems in surgery: a preclinical randomised study. Lancet. 2015;385(suppl 1):S64. doi: 10.1016/S0140-6736(15)60379-8.

13. Meola A, Cutolo F, Carbone M, et al. Augmented reality in neurosurgery: a systematic review. Neurosurg Rev. 2017;40:537–548. doi: 10.1007/s10143-016-0732-9.

14. Okamoto T, Onda S, Yanaga K, et al. Clinical application of navigation surgery using augmented reality in the abdominal field. Surg Today. 2015;45:397–406. doi: 10.1007/s00595-014-0946-9.

- 15.Pessaux P, Diana M, Soler L, et al. Towards cybernetic surgery: robotic and augmented reality-assisted liver segmentectomy. Langenbecks Arch Surg. 2015;400:381–385. doi: 10.1007/s00423-014-1256-9. [DOI] [PubMed] [Google Scholar]

- 16.Tang R, Ma L, Xiang C, et al. Augmented reality navigation in open surgery for hilar cholangiocarcinoma resection with hemihepatectomy using video-based in situ three-dimensional anatomical modeling: a case report. Medicine (Baltimore) 2017;96:e8083. doi: 10.1097/MD.0000000000008083. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Nakamoto M, Ukimura O, Faber K, Gill IS. Current progress on augmented reality visualization in endoscopic surgery. Curr Opin Urol. 2012;22:121–126. doi: 10.1097/MOU.0b013e3283501774. [DOI] [PubMed] [Google Scholar]

- 18.Yeung JM, Maxwell-Armstrong C, Acheson AG. Colonic tattooing in laparoscopic surgery - making the mark? Colorectal Dis. 2009;11:527–530. doi: 10.1111/j.1463-1318.2008.01706.x. [DOI] [PubMed] [Google Scholar]

- 19.Cho YB, Lee WY, Yun HR, et al. Tumor localization for laparoscopic colorectal surgery. World J Surg. 2007;31:1491–1495. doi: 10.1007/s00268-007-9082-7. [DOI] [PubMed] [Google Scholar]

- 20.Tummers WS, Warram JM, Tipirneni KE, et al. Regulatory aspects of optical methods and exogenous targets for cancer detection. Cancer Res. 2017;77:2197–2206. doi: 10.1158/0008-5472.CAN-16-3217. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Boni L, Fingerhut A, Marzorati A, et al. Indocyanine green fluorescence angiography during laparoscopic low anterior resection: results of a case-matched study. Surg Endosc. 2017;31:1836–1840. doi: 10.1007/s00464-016-5181-6. [DOI] [PubMed] [Google Scholar]

- 22.Degett TH, Andersen HS, Gogenur I. Indocyanine green fluorescence angiography for intraoperative assessment of gastrointestinal anastomotic perfusion: a systematic review of clinical trials. Langenbecks Arch Surg. 2016;401:767–775. doi: 10.1007/s00423-016-1400-9. [DOI] [PubMed] [Google Scholar]

- 23.Burnier P, Niddam J, Bosc R, et al. Indocyanine green applications in plastic surgery: a review of the literature. J Plast Reconstr Aesthet Surg. 2017;70:814–827. doi: 10.1016/j.bjps.2017.01.020. [DOI] [PubMed] [Google Scholar]

- 24.Holm C, Mayr M, Höfter E, et al. Intraoperative evaluation of skin-flap viability using laser-induced fluorescence of indocyanine green. Br J Plast Surg. 2002;55:635–644. doi: 10.1054/bjps.2002.3969. [DOI] [PubMed] [Google Scholar]

- 25.Boogerd LSF, Handgraaf HJM, Huurman VAL, et al. The best approach for laparoscopic fluorescence cholangiography: overview of the literature and optimization of dose and dosing time. Surg Innov. 2017;24:386–396. doi: 10.1177/1553350617702311. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Dip F, Nguyen D, Montorfano L, et al. Accuracy of near infrared-guided surgery in morbidly obese subjects undergoing laparoscopic cholecystectomy. Obes Surg. 2016;26:525–530. doi: 10.1007/s11695-015-1781-9. [DOI] [PubMed] [Google Scholar]

- 27.Van der Vorst JR, Schaafsma BE, Hutteman M, et al. Near-infrared fluorescence-guided resection of colorectal liver metastases. Cancer. 2013;119:3411–3418. doi: 10.1002/cncr.28203. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.De Boer E, Harlaar NJ, Taruttis A, et al. Optical innovations in surgery. Br J Surg. 2015;102:e56–72. doi: 10.1002/bjs.9713. [DOI] [PubMed] [Google Scholar]

- 29.Aoki T, Yasuda D, Shimizu Y, et al. Image-guided liver mapping using fluorescence navigation system with indocyanine green for anatomical hepatic resection. World J Surg. 2008;32:1763–1767. doi: 10.1007/s00268-008-9620-y. [DOI] [PubMed] [Google Scholar]

- 30.Diana M, Liu YY, Pop R, et al. Superselective intra-arterial hepatic injection of indocyanine green (ICG) for fluorescence image-guided segmental positive staining: experimental proof of the concept. Surg Endosc. 2017;31:1451–1460. doi: 10.1007/s00464-016-5136-y. [DOI] [PubMed] [Google Scholar]

- 31.Cahill RA, Anderson M, Wang LM, et al. Near-infrared (NIR) laparoscopy for intraoperative lymphatic road-mapping and sentinel node identification during definitive surgical resection of early-stage colorectal neoplasia. Surg Endosc. 2012;26:197–204. doi: 10.1007/s00464-011-1854-3. [DOI] [PubMed] [Google Scholar]

- 32.Liberale G, Bourgeois P, Larsimont D, et al. Indocyanine green fluorescence-guided surgery after IV injection in metastatic colorectal cancer: a systematic review. Eur J Surg Oncol. 2017;43:1656–1667. doi: 10.1016/j.ejso.2017.04.015. [DOI] [PubMed] [Google Scholar]

- 33.Ishizawa T, Kaneko J, Inoue Y, et al. Application of fluorescent cholangiography to single-incision laparoscopic cholecystectomy. Surg Endosc. 2011;25:2631–2636. doi: 10.1007/s00464-011-1616-2. [DOI] [PubMed] [Google Scholar]

- 34.Ntziachristos V. Fluorescence molecular imaging. Annu Rev Biomed Eng. 2006;8:1–33. doi: 10.1146/annurev.bioeng.8.061505.095831. [DOI] [PubMed] [Google Scholar]

- 35.Winer JH, Choi HS, Gibbs-Strauss SL, et al. Intraoperative localization of insulinoma and normal pancreas using invisible near-infrared fluorescent light. Ann Surg Oncol. 2010;17:1094–1100. doi: 10.1245/s10434-009-0868-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Li Y, Rey-Dios R, Roberts DW, et al. Intraoperative fluorescence-guided resection of high-grade gliomas: a comparison of the present techniques and evolution of future strategies. World Neurosurg. 2014;82:175–185. doi: 10.1016/j.wneu.2013.06.014. [DOI] [PubMed] [Google Scholar]

- 37.Kishi K, Fujiwara Y, Yano M, et al. Usefulness of diagnostic laparoscopy with 5-aminolevulinic acid (ALA)-mediated photodynamic diagnosis for the detection of peritoneal micrometastasis in advanced gastric cancer after chemotherapy. Surg Today. 2016;46:1427–1434. doi: 10.1007/s00595-016-1328-2. [DOI] [PubMed] [Google Scholar]

- 38.Korb ML, Huh WK, Boone JD, et al. Laparoscopic fluorescent visualization of the ureter with intravenous IRDye800CW. J Minim Invasive Gynecol. 2015;22:799–806. doi: 10.1016/j.jmig.2015.03.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Hill TK, Mohs AM. Image-guided tumor surgery: will there be a role for fluorescent nanoparticles? Wiley Interdiscip Rev Nanomed Nanobiotechnol. 2016;8:498–511. doi: 10.1002/wnan.1381. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Van Dam GM, Themelis G, Crane LM, et al. Intraoperative tumor-specific fluorescence imaging in ovarian cancer by folate receptor-alpha targeting: first in-human results. Nat Med. 2011;17:1315–1319. doi: 10.1038/nm.2472. [DOI] [PubMed] [Google Scholar]

- 41.Keereweer S, Kerrebijn JD, van Driel PB, et al. Optical image-guided surgery - where do we stand? Mol Imaging Biol. 2011;13:199–207. doi: 10.1007/s11307-010-0373-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Terwisscha van Scheltinga AG, van Dam GM, Nagengast WB, et al. Intraoperative near-infrared fluorescence tumor imaging with vascular endothelial growth factor and human epidermal growth factor receptor 2 targeting antibodies. J Nucl Med. 2011;52:1778–1785. doi: 10.2967/jnumed.111.092833. [DOI] [PubMed] [Google Scholar]

- 43.Proulx ST, Luciani P, Christiansen A, et al. Use of a PEG-conjugated bright near-infrared dye for functional imaging of rerouting of tumor lymphatic drainage after sentinel lymph node metastasis. Biomaterials. 2013;34:5128–5137. doi: 10.1016/j.biomaterials.2013.03.034. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Wunderbaldinger P, Turetschek K, Bremer C. Near-infrared fluorescence imaging of lymph nodes using a new enzyme sensing activatable macromolecular optical probe. Eur Radiol. 2003;13:2206–2211. doi: 10.1007/s00330-003-1932-6. [DOI] [PubMed] [Google Scholar]

- 45.Kaushal S, McElroy MK, Luiken GA, et al. Fluorophore-conjugated anti-CEA antibody for the intraoperative imaging of pancreatic and colorectal cancer. J Gastrointest Surg. 2008;12:1938–1950. doi: 10.1007/s11605-008-0581-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Sano K, Mitsunaga M, Nakajima T, et al. In vivo breast cancer characterization imaging using two monoclonal antibodies activatably labeled with near infrared fluorophores. Breast Cancer Res. 2012;14:R61. doi: 10.1186/bcr3167. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Rosenthal EL, Warram JM, de Boer E, et al. Successful translation of fluorescence navigation during oncologic surgery: a consensus report. J Nucl Med. 2016;57:144–150. doi: 10.2967/jnumed.115.158915. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Pierre SA, Ferrandino MN, Simmons WN, et al. High definition laparoscopy: objective assessment of performance characteristics and comparison with standard laparoscopy. J Endourol. 2009;23:523–528. doi: 10.1089/end.2008.0277. [DOI] [PubMed] [Google Scholar]

- 49.Wilhelm D, Reiser S, Kohn N, et al. Comparative evaluation of HD 2D/3D laparoscopic monitors and benchmarking to a theoretically ideal 3D pseudodisplay: even well-experienced laparoscopists perform better with 3D. Surg Endosc. 2014;28:2387–2397. doi: 10.1007/s00464-014-3487-9. [DOI] [PubMed] [Google Scholar]

- 50.Storz P, Buess GF, Kunert W, Kirschniak A. 3D HD versus 2D HD: surgical task efficiency in standardised phantom tasks. Surg Endosc. 2012;26:1454–1460. doi: 10.1007/s00464-011-2055-9. [DOI] [PubMed] [Google Scholar]

- 51.Sorensen SM, Savran MM, Konge L, Bjerrum F. Three-dimensional versus two-dimensional vision in laparoscopy: a systematic review. Surg Endosc. 2016;30:11–23. doi: 10.1007/s00464-015-4189-7. [DOI] [PubMed] [Google Scholar]

- 52.Hanna GB, Shimi SM, Cuschieri A. Randomised study of influence of two-dimensional versus three-dimensional imaging on performance of laparoscopic cholecystectomy. Lancet. 1998;351:248–251. doi: 10.1016/S0140-6736(97)08005-7. [DOI] [PubMed] [Google Scholar]

- 53.Curro G, La Malfa G, Lazzara S, et al. Three-dimensional versus two-dimensional laparoscopic cholecystectomy: is surgeon experience relevant? J Laparoendosc Adv Surg Tech A. 2015;25:566–570. doi: 10.1089/lap.2014.0641. [DOI] [PubMed] [Google Scholar]

- 54.Agrusa A, di Buono G, Chianetta D, et al. Three-dimensional (3D) versus two-dimensional (2D) laparoscopic adrenalectomy: a case-control study. Int J Surg. 2016;28((suppl 1)):S114–117. doi: 10.1016/j.ijsu.2015.12.055. [DOI] [PubMed] [Google Scholar]

- 55.Singh R, Lee SY, Vijay N, et al. Update on narrow band imaging in disorders of the upper gastrointestinal tract. Dig Endosc. 2014;26:144–153. doi: 10.1111/den.12207. [DOI] [PubMed] [Google Scholar]

- 56.Schnelldorfer T, Jenkins RL, Birkett DH, Georgakoudi I. From shadow to light: visualization of extrahepatic bile ducts using image-enhanced laparoscopy. Surg Innov. 2015;22:194–200. doi: 10.1177/1553350614531661. [DOI] [PubMed] [Google Scholar]

- 57.Kikuchi H, Kamiya K, Hiramatsu Y, et al. Laparoscopic narrow-band imaging for the diagnosis of peritoneal metastasis in gastric cancer. Ann Surg Oncol. 2014;21:3954–3962. doi: 10.1245/s10434-014-3781-8. [DOI] [PubMed] [Google Scholar]

- 58.Falkinger M, Kranzfelder M, Wilhelm D, et al. Design of a test system for the development of advanced video chips and software algorithms. Surg Innov. 2015;22:155–162. doi: 10.1177/1553350614537563. [DOI] [PubMed] [Google Scholar]

- 59.Nakai Y, Isayama H, Shinoura S, et al. Confocal laser endomicroscopy in gastrointestinal and pancreatobiliary diseases. Dig Endosc. 2014;26((suppl 1)):86–94. doi: 10.1111/den.12152. [DOI] [PubMed] [Google Scholar]

- 60.Kuiper T, van den Broek FJ, van Eeden S, et al. New classification for probe-based confocal laser endomicroscopy in the colon. Endoscopy. 2011;43:1076–1081. doi: 10.1055/s-0030-1256767. [DOI] [PubMed] [Google Scholar]

- 61.Rehberger M, Wilhelm D, Janssen K-P, et al. Optical coherence tomography (OCT): the missing link in gastrointestinal imaging? Int J CARS. 2016;11((suppl 1)):32–33. [Google Scholar]