Abstract

Background:

Advances in image analysis and computational techniques have facilitated automatic detection of critical features in histopathology images. Detection of nuclei is critical for squamous epithelium cervical intraepithelial neoplasia (CIN) classification into normal, CIN1, CIN2, and CIN3 grades.

Methods:

In this study, a deep learning (DL)-based nuclei segmentation approach is investigated based on gathering localized information through the generation of superpixels using a simple linear iterative clustering algorithm and training with a convolutional neural network.

Results:

The proposed approach was evaluated on a dataset of 133 digitized histology images and achieved an overall nuclei detection (object-based) accuracy of 95.97%, with demonstrated improvement over imaging-based and clustering-based benchmark techniques.

Conclusions:

The proposed DL-based nuclei segmentation method with superpixel analysis has shown improved segmentation results in comparison to state-of-the-art methods.

Keywords: Cervical cancer, cervical intraepithelial neoplasia, convolutional neural network, deep learning, image processing, segmentation, superpixels

INTRODUCTION

The reconstruction of medical images into digital form has propelled the fields of medical research and clinical practice.[1] Image processing for histopathology image applications still has numerous challenges to overcome, especially in accurate nuclei detection.

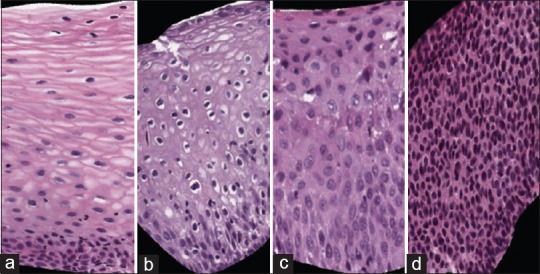

Cervical cancer is the fourth most prevalent female cancer globally.[2] Over 500,000 new cases of this cancer are reported annually, especially in Africa; more than half of these cases result in death.[2] Cervical cancer is curable if it is detected early. The gold standard for early cervical cancer diagnosis is the microscopic evaluation of histopathology images by a qualified pathologist.[3,4,5,6] The severity of cervical cancer increases as the immature atypical cells in the epithelium region increase. Based on this observation, cancer affecting squamous epithelium is classified as normal or as one of three increasingly premalignant grades of cervical intraepithelial neoplasia (CIN): CIN1, CIN2, and CIN3[4,5,6] [Figure 1]. Normal means there is no CIN; CIN1 corresponds to mild dysplasia (abnormal change); CIN2 denotes moderate dysplasia; and CIN3 corresponds to severe dysplasia.

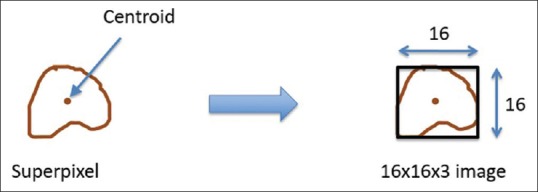

Figure 1.

Cervical intraepithelial neoplasia grades. Left to right: (a) Normal, (b) cervical intraepithelial neoplasia 1, (c) cervical intraepithelial neoplasia 2, (d) cervical intraepithelial neoplasia 3

With increasing CIN grade, the epithelium has been observed to show delayed maturation with an increase in immature atypical cells from bottom (basal membrane) to top of the epithelium region.[6,7,8,9,10] This can be observed from Figure 1. Atypical immature cells are most dense in the bottom region of the epithelium for CIN1 [Figure 1b]. For CIN2, two-thirds of the bottom region is affected by the atypical immature cells [Figure 1c]. Finally, for CIN3, the atypical immature cells are densely spread over the whole epithelium region [Figure 1d].

At present, cervical tissue is analyzed manually by pathologists with significant experience with cervical cancer. These pathology specialists are few, and it takes considerable time to scan the tissue slides. This calls for automatic histology image classification, which could relieve the demand on scarce professional resources for image classification, particularly in developing countries where the burden of cervical cancer is greatest. A critical challenge for automatic classification is the accurate identification of nuclei, the small dark structures which undergo changes as the CIN progresses [Figure 1].

Epithelial nuclei provide critical features needed to classify cervical images. Although CIN grade classification can be done by applying deep learning (DL) techniques directly on the image data without the use of nuclei-based features, the accuracy of the classification can be further improved by fusing feature-based trained neural network models with the DL model. The classification based on the features extracted from the histology images has shown good results in previous studies.[11,12] Hence, the detection of nuclei is crucial for correct results. Detection accuracy can be limited by variations in tissue and nuclei staining, image contrast, noisy stain blobs, overlapping nuclei, and variation in nuclei size and shape, with the latter being more prominent with higher CIN grades.

In recent years, various algorithms have been proposed to segment nuclei and to extract the nuclei features from digitized medical images. The accuracy of algorithms to identify nuclei may be measured in two ways. The first measure is called nucleus detection or object-based detection. This nucleus-based scoring counts whether a ground-truth nucleus is detected or not. The second method is called nucleus segmentation; nuclei segmentation is pixel-based scoring which counts accuracy pixel by pixel. Recent reviews by Xing and Yang[13] and Irshad et al.[14] summarized techniques in this fast-evolving field for both nuclei detection and segmentation. The review by Irshad et al. provided additional material on nuclear features; the review by Xing and Yang included additional recent studies; both reviews gave detailed descriptions of methods and results for the nuclei detection for many types of histopathology images including brain, breast, cervix, prostate, muscle, skin, and leukocyte.[7,13] In the following, we summarize selected recent methods to find nuclei in histopathology images in general, followed by specific methods to find nuclei in cervical images.

For the general domain of histopathology images, recent studies have employed conventional techniques, various DL techniques, and techniques combining both methods. A graph-cut technique was followed by multiscale Laplacian-of-Gaussian (LoG) filtering, adaptive scale selection, and a second graph-cut operation.[15] Generalized LoG filters were used to detect elliptical blob centers; watershed segmentation was used to split touching nuclei.[16] The generalized LoG filter technique was modified using directional LoG filters followed by adaptive thresholding and mean-shift clustering.[17] A convolutional neural network (CNN) nuclear detection model called “deep voting” used voting based on location of patches and weights based on confidence in the patches to produce final nuclei locations.[18] Stacked sparse autoencoder (SSAE) DL was used for nuclei detection and compared to other DL techniques using CNN variations.[19] SSAE sensitivity was similar to that obtained for the optimal CNN; specificity compared favorably to CNN.[19] Another voting approach to overcome variable nuclear staining exploited nuclear symmetry.[20] An additional voting approach used adaptive thresholding for seed finding followed by elliptical modeling and watershed technique.[21] Canny edge detection was followed by multi-pass directional voting; results surpassed those of the SSAE. A CNN was combined with region merging and a sparse shape and local repulsive deformable model[22] with good results.

In the domain of cervical cytology and histopathology, automated localization of the cervical nuclei used the converging squares algorithm.[23] The Hough transform was implemented to detect the nuclei based on shape features.[24] Cervical cells were classified using co-occurrence matrix textural feature extraction and morphological transforms.[25] Analysis of cell nuclei segmentation was performed through Bayesian interpretation after segmentation by a Viterbi search-based active contour method. Segmentation was also accomplished by a region grid algorithm through contour detection around the nuclei boundary.[26] Nuclei were segmented using level-set active contour methods.[27,28] Intensity and color information were used for nuclei enhancement and segmentation.[29] A DL framework was used for segmentation of cytoplasm and nuclei.[30] K-means clustering was used for nuclei feature extraction followed by classification based on fusion.[12] A multi-scale CNN followed by graph partitioning was used for nuclei detection in cervical cytology images.[31] Transfer learning to recognize cervical cytology nuclei using the CaffeNet architecture was trained first on ImageNet then, using the trained network, retrained on cervical slide images, containing one cell per slide.[32]

Semantic pixel-wise labeling[33] for the detection of nuclei is computationally expensive since every pixel is individually labeled through a series of encoder and decoder stacks. U-Net[34] utilizes an up-sampling approach with deconvolution layers and 23 convolutional layers, which makes the network use more memory and more computations. The nuclei segmentation research here employs DL to extract nuclei patches, a simple linear iterative clustering (SLIC) model, and a CNN to classify the obtained superpixel data. A group of similar pixels (superpixels) is classified, requiring less memory than the pixel-wise approach and reducing the number of parameters to be tuned. Scoring in the current study, object-based detection, is based upon whether nuclei are correctly detected or not.

METHODS

Biologically inspired CNNs operate upon a digital image, convolving image arrays with the image, producing feature vectors serving as parameters to the CNN. The automatically determined feature vectors serve as weights; these are modified with each iteration as the network learns by training.

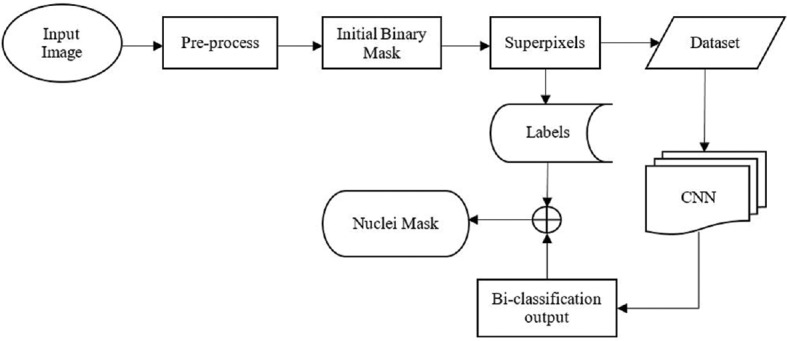

The primary goal of this paper is to segment the nuclei in the epithelium region of cervical cancer histology images by considering local features instead of features from the whole image. This local information is used to classify whether the segment contains nuclei or background. The CNNs use image vectors as inputs and learn different feature vectors, which ultimately solve the classification problem. The proposed methodology is depicted in Figure 2.

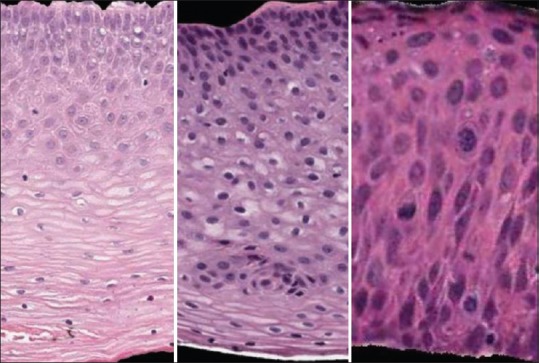

Figure 2.

Proposed methodology

To make use of localized information, small image patches are obtained from the original image using a superpixel extraction method. Superpixel algorithms are devised to group pixels with similar properties into regions to form clusters. Optimal superpixels avoid oversegmentation without information gain, which is present at the pixel level, and undersegmentation with information loss, if superpixels are too large. The SLIC algorithm is chosen as it generates superpixels based on color (intensity) and distance proximities with respect to each pixel.

Preprocessing

Before extracting superpixels, the original image is preprocessed using a Gaussian smoothing filter to smooth the input image to reduce Gaussian and impulse valued noises, which are mainly generated during image capture from the slides and digitization process.[35] The results of oversegmented images through superpixel generation also indicate the importance of smoothing the images. The filter's impulse response is the Gaussian function, which decays rapidly, so as to select narrow windows to avoid the loss of image information. This function divides the image into its respective windows and applies the cost function. The two-dimensional Gaussian function is applied on the input image using a built-in MATLAB® function.

The standard deviation can be user-defined; here, we use the default value of two. The Gaussian filter is applied instead of a trimmed mean filter because the Gaussian filter processes our images 3184.16 times faster than the trimmed mean filter. When the outputs of the algorithms were compared, the output using the Gaussian filter gave a better superpixel result than the output obtained using the trimmed mean filter. The darker nuclei are in general surrounded by red-stained cytoplasm inside a cell, and the background region is not stained. Hence, the red, green, blue (RGB) color space of the image is converted to CIE LAB color space[31,36] to improve the contrast between nuclei, cytoplasm, and background. The contrast is further enhanced using a linear transformation, increasing the scale of pixel intensity from [rmin, rmax] to [0, 255]. A morphological closing operation is applied on the luminance plane (L component) of the resultant CIE LAB color image to remove any small holes and to smooth boundaries. The L component represents the perceived brightness, which further increases image contrast. These operations produce the initial binary nuclei mask to aid in extracting superpixels from the image. The generated binary mask reduces computational load and mitigates challenges due to noise and other variations in cervical histopathology images, such as variable staining in cervical tissue, and serves as an overlay to guide the next step, superpixel generation.
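The linear contrast transformation described above can be sketched as follows. This is a minimal illustration in Python with NumPy, not the MATLAB implementation used in the study; the function name `stretch_contrast` and the choice of taking rmin and rmax as the minimum and maximum of the input plane are assumptions.

```python
import numpy as np


def stretch_contrast(plane):
    """Linearly map intensities from [rmin, rmax] to [0, 255].

    Assumption: rmin and rmax are the minimum and maximum of the input plane.
    """
    plane = np.asarray(plane, dtype=np.float64)
    rmin, rmax = plane.min(), plane.max()
    if rmax == rmin:
        # A constant plane has no contrast to stretch; return zeros.
        return np.zeros(plane.shape, dtype=np.uint8)
    scaled = (plane - rmin) / (rmax - rmin) * 255.0
    return np.round(scaled).astype(np.uint8)


# Hypothetical 2 x 2 intensity plane used only for illustration.
demo = np.array([[50, 100], [150, 200]])
stretched = stretch_contrast(demo)
```

The darkest input value maps to 0 and the brightest to 255, so nuclei and background occupy the full intensity range before the morphological closing step.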

Superpixel extraction

Superpixels are generated automatically for the test images. An SLIC algorithm[37] is used to extract superpixels rather than other state-of-the-art methods[38,39,40,41] because it is faster, is more memory efficient, has better adherence to boundaries, and improves segmentation performance. Furthermore, it considers both color and distance properties which are appropriate with color orientation of the nuclei around a small region.
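To illustrate how SLIC weighs color against spatial proximity, the sketch below computes the combined distance from the original SLIC formulation, D = sqrt(d_c² + (d_s / S)² m²), where d_c is the color distance in CIE LAB space, d_s is the spatial distance, S is the grid interval between cluster centers, and m is the compactness weight. The function name and the sample values are illustrative assumptions, not parameters reported in this study.

```python
import math


def slic_distance(lab_a, xy_a, lab_b, xy_b, grid_interval, compactness):
    """Combined SLIC distance between a pixel and a cluster center."""
    d_color = math.dist(lab_a, lab_b)   # Euclidean distance in (L, a, b)
    d_space = math.dist(xy_a, xy_b)     # Euclidean distance in (x, y)
    # Spatial distance is normalized by the grid interval S and weighted by m.
    return math.sqrt(d_color ** 2 + (d_space / grid_interval) ** 2 * compactness ** 2)


# Hypothetical pixel and cluster center: color distance 5, spatial distance 10.
d = slic_distance((50, 10, 10), (0, 0), (53, 14, 10), (6, 8),
                  grid_interval=10, compactness=12)
```

A larger compactness value makes spatial proximity dominate, giving more regular superpixels; a smaller value lets superpixels follow color boundaries such as nuclei edges more closely.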

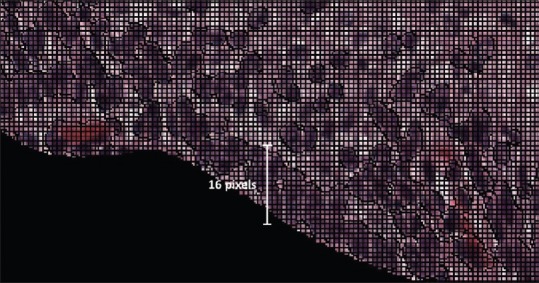

A labeled matrix, with size equal to that of the original image, is obtained as an output from the SLIC function. A manually generated epithelium mask, which is verified by an expert pathologist (RZ), is then applied on the labeled matrix to remove the unwanted region. The resultant matrix is again relabeled. The minimum size for superpixels, 200 pixels, is chosen to be larger than the largest nucleus and smaller than the patch size (256 pixels). The patch width and height (16 pixels) are chosen to contain all superpixels and all nuclei, as shown in Figure 3, so that the whole superpixel region is covered while creating a 16 × 16 × 3 RGB patch image dataset for training the CNN.

Figure 3.

A portion of original image with superpixels. Nuclei do not exceed 16 pixels in height or width or 200 pixels in area

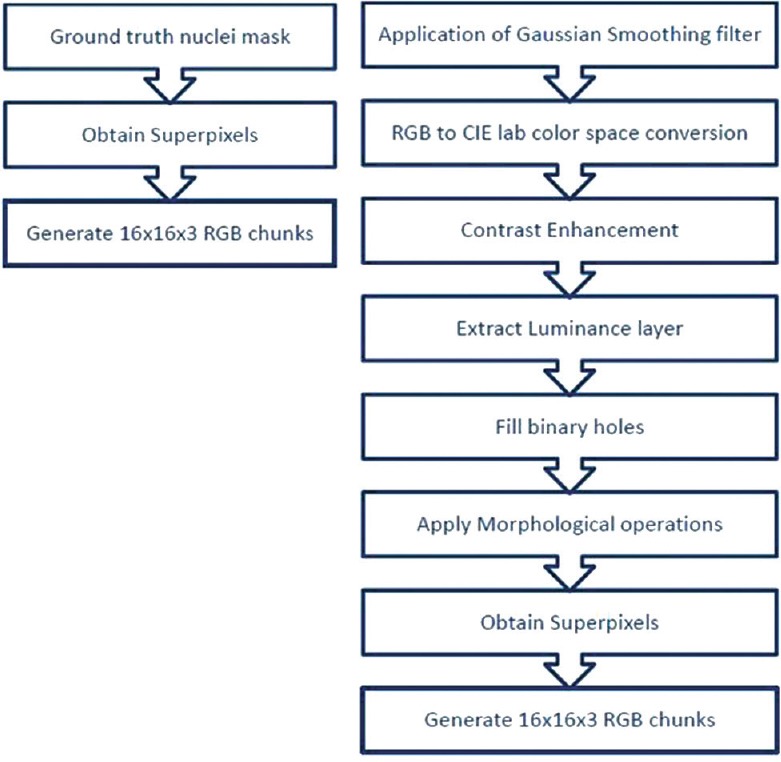

The centroid of each superpixel is computed. With respect to that centroid, a 16 × 16 × 3 image patch is formed as shown in Figure 4. A patch is said to be a part of the nuclei region if nuclei comprise at least 10% of its area. The nuclei region is given the highest priority as compared to the cytoplasm and background. The problem of generating 16 × 16 × 3 patches from superpixels at the edges of the image is solved by mirroring the image.
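The patch formation and labeling rule above can be sketched as follows. This is a hedged illustration, not the authors' code: the helper names, the use of NumPy's `symmetric` padding mode as the mirroring scheme, and the tiny synthetic image and mask are all assumptions.

```python
import numpy as np

PATCH_SIZE = 16             # patch width and height stated in the text
MIN_NUCLEI_FRACTION = 0.10  # a patch counts as nuclei if nuclei cover at least 10%


def extract_patch(image, centroid, size=PATCH_SIZE):
    """Cut a size x size window around (row, col), mirroring the image at its borders."""
    half = size // 2
    # Pad only the two spatial axes; leave any channel axis untouched.
    pad_width = [(half, half), (half, half)] + [(0, 0)] * (image.ndim - 2)
    padded = np.pad(image, pad_width, mode="symmetric")  # assumed mirroring mode
    row, col = int(round(centroid[0])), int(round(centroid[1]))
    # Original pixel (row, col) sits at (row + half, col + half) after padding,
    # so this slice covers original rows row-half .. row+half-1 (same for columns).
    return padded[row:row + size, col:col + size]


def is_nuclei_patch(mask_patch, min_fraction=MIN_NUCLEI_FRACTION):
    """Apply the 10% area rule to a binary nuclei-mask patch."""
    return bool(np.mean(mask_patch) >= min_fraction)


rgb = np.zeros((20, 20, 3), dtype=np.uint8)  # hypothetical tiny RGB image
mask = np.zeros((20, 20), dtype=bool)        # hypothetical binary nuclei mask
mask[0:4, 0:8] = True                        # 32 nuclei pixels

corner_patch = extract_patch(rgb, (0, 0))    # requires mirroring at the image corner
mask_patch = extract_patch(mask, (8, 8))     # covers rows and columns 0..15
```

In this example the mask patch holds 32 nuclei pixels out of 256 (12.5%), so it would be labeled as a nuclei patch under the 10% rule.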

Figure 4.

Generation of 16 × 16 × 3 red, green, blue image from superpixel

Finally, 16 × 16 × 3 RGB input images are obtained from the superpixels of the original image. As DL benefits from more examples, data augmentation is performed by rotating the original image by 180° and extracting 16 × 16 patches.
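The 180° rotation used for augmentation can be sketched in NumPy as below. The function name and the small synthetic array are assumptions for illustration; patches would then be extracted from both the original and the rotated image.

```python
import numpy as np


def augment_with_rotation(image):
    """Return the original image and a copy rotated by 180 degrees."""
    # Two successive 90-degree rotations in the (row, column) plane.
    rotated = np.rot90(image, k=2, axes=(0, 1))
    return [image, rotated]


# Hypothetical 2 x 2 RGB image used only to show the pixel correspondence.
tiny = np.arange(12).reshape(2, 2, 3)
original, rotated = augment_with_rotation(tiny)
```

The top-left pixel of the rotated image is the bottom-right pixel of the original, while the channel order within each pixel is unchanged.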

Data generation

Data generation is done carefully to prepare both training and test image datasets. For our experiment, a total of 12 images, six images each from the 71-image dataset and 62-image dataset, are used for training the network. The remaining 121 images are used in the testing phase. Thus, the training and test sets used for generating results reported in this study are disjoint. Nuclei segmentation has been investigated in the previous studies using the 71-image[22] and 62-image[25] datasets, providing benchmarks for this study. Training images are carefully chosen so that the network learns to handle different kinds of images. Observation of images from the datasets discloses three types of images: images with light nuclei and light cytoplasm, images with darker nuclei and moderate cytoplasm, and images with darker nuclei and thicker cytoplasm, as shown in Figure 5. To balance the training set for the CNN, two images of each of the three image types are selected from each dataset, giving six images per dataset and 12 images in total.

Figure 5.

Images with lighter nuclei (left), darker nuclei with lighter cytoplasm (center), darker nuclei with thicker cytoplasm (right)

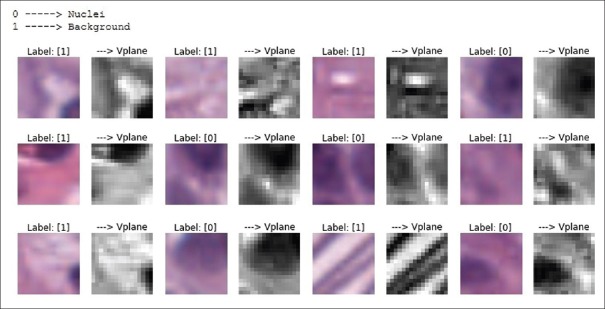

Classifying whether nuclei are present or not in the 16 × 16 × 3 patch is a binary classification problem. The patch target label is obtained from the binary nuclei masks that are already available in the database. Some of the portions of the nuclei masks are modified so that the target labels represent exact ground truth values. The extracted 16 × 16 × 3 patches are as shown in Figure 6. The label “0” denotes nuclei and the label “1” denotes background. A total of 377,012 patches are obtained using preprocessing steps as shown in Figure 7 (left) for 12 original images comprising both nuclei and background.

Figure 6.

Sample 16 × 16 × 3 red, green, blue images and their 16 × 16 V-plane images

Figure 7.

Generation of training dataset (left) and test dataset (right)

The test data are generated by preprocessing the image [Figure 7, right]. The luminance plane is used to generate superpixels, and then, 16 × 16 × 3 image patches are formed for each individual original image.

Convolutional neural network

As a prestep to train the CNN, all small image patches are converted to the HSV color plane and then the value plane (V-plane) is extracted. Before selecting the V-plane, various color planes are observed manually and are also used to train the network. The V-plane and the L-plane (luminance plane) gave promising results. The V-plane is considered for this experiment as shown in Figure 6. The V component indicates the quantity of light reflected and is useful for extraction from the patches because the nuclei are typically blue-black and reflect only a small amount of light.
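In the HSV model, the value component of a pixel is the maximum of its red, green, and blue components, so the V-plane of a patch can be obtained directly from the RGB data. The sketch below assumes 8-bit RGB patches stored as NumPy arrays; the function name and pixel values are hypothetical.

```python
import numpy as np


def value_plane(rgb_patch):
    """Return the HSV value (V) plane: the per-pixel maximum over R, G, and B."""
    return np.asarray(rgb_patch).max(axis=-1)


# Hypothetical 16 x 16 x 3 patch: bright background with one dark, bluish pixel.
patch = np.full((16, 16, 3), (230, 220, 240), dtype=np.uint8)
patch[8, 8] = (30, 20, 60)
v_plane = value_plane(patch)
```

Dark nuclei pixels yield low V values while unstained background stays bright, which is consistent with the rationale given above for choosing this plane.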

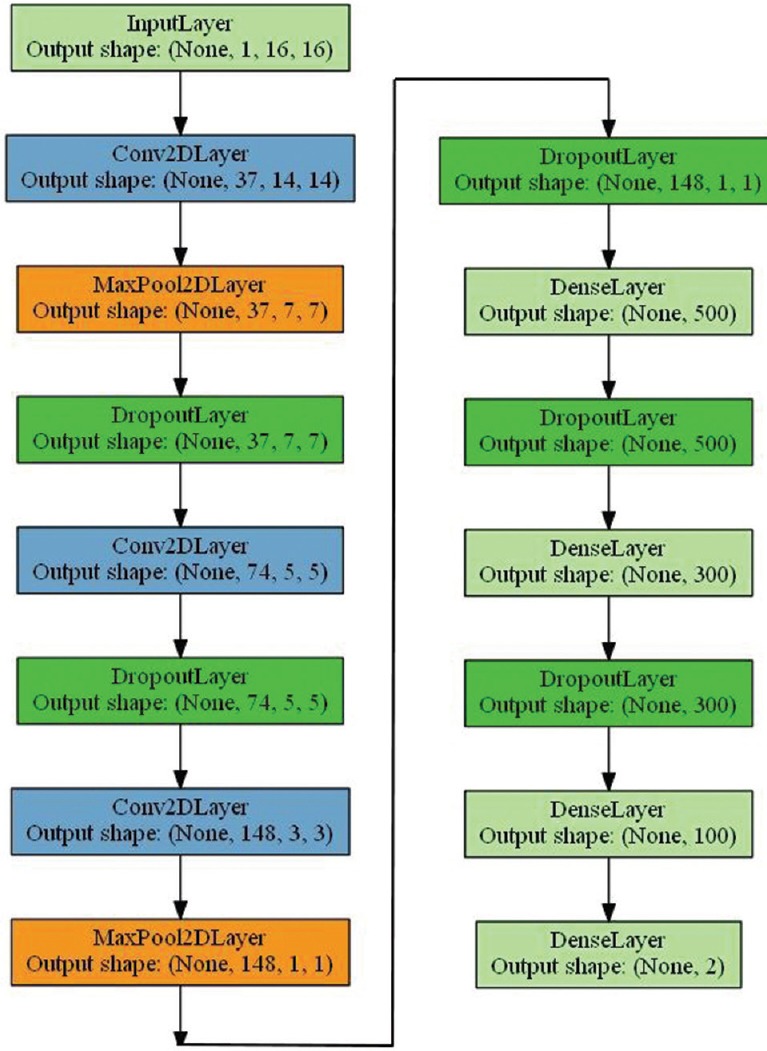

To classify the presence of nuclei, the CNN is trained with the features that were generated by convolutional layers using raw pixel input data. The first stage was a shallow CNN with one convolutional layer followed by a max pooling layer. A total of 36,478 image patches (extracted from two images) were processed for a quick quality check. A remarkable improvement in the validation accuracy was observed when a deep CNN architecture [modified LeNet-5[42] model with varied layers and hyper-parameters as shown in Figure 8] was considered with multiple convolutional, max pooling, and dropout layers at the beginning of the network and three dense layers (convolution and dense layers with a nonlinear ReLU activation function[43]) at the end of the network. The two neurons in the output layer are activated with a SoftMax function.

Figure 8.

Convolutional neural network architecture

This produced 98.1% validation accuracy on two input images. Later, 10 more images were included to make the network learn to classify nuclei in different environments as shown in Figure 5. Upon training with 377,012 patches of 16 × 16 size (extracted from 12 full-size images), a validation accuracy of 95.70% is achieved.

The obtained dataset of inputs and target labels is used to train CNNs with different architectures, and the following architecture [Figure 8] gave best results with higher validation accuracy on test images that were part of the training data.

The training dataset is used to fit the CNN model. A validation dataset, consisting of 20% of the training dataset, is helpful to estimate the prediction error for best model selection. Categorical accuracy (Lμ) is computed between targets ($t_{i,c}$) and predictions ($p_{i,c}$) produced from the validation dataset.
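A common definition of categorical accuracy, assumed here because the exact equation appears only in the figure, is the fraction of samples whose highest-scoring predicted class matches the class of the one-hot target. The sketch below uses that definition; the function name and the four sample predictions are hypothetical.

```python
import numpy as np


def categorical_accuracy(predictions, targets):
    """Fraction of samples where argmax of the prediction equals argmax of the target."""
    predictions = np.asarray(predictions)
    targets = np.asarray(targets)
    matches = predictions.argmax(axis=1) == targets.argmax(axis=1)
    return float(matches.mean())


# Hypothetical SoftMax outputs for four patches and their one-hot targets.
preds = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4], [0.3, 0.7]]
truth = [[1, 0], [0, 1], [0, 1], [0, 1]]
acc = categorical_accuracy(preds, truth)
```

Three of the four hypothetical patches are classified correctly, so the accuracy here is 0.75.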

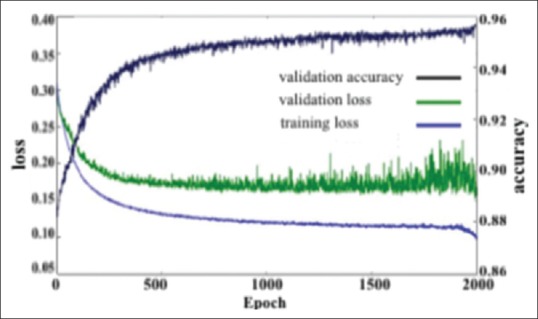

The weights are initialized randomly using Glorot weight initialization.[44] An adaptable learning rate in the range (0.0001, 0.03) and a momentum in the range (0.9, 0.999) are applied to the network while training for 2000 epochs. The architecture produced a validation accuracy of 95.70% at the end of the 2000th epoch. The network is trained for 2000 epochs since further training appears not to decrease validation loss [Figure 9].

Figure 9.

Training loss, validation accuracy, and validation loss versus epochs

The error on the training set is denoted as training loss. Validation loss is the error that results from running the validation set through the previously trained CNN. Figure 9 shows a drop in training and validation error as the number of epochs increases. This is a clear indication that the network is learning from the data given as input to the network.

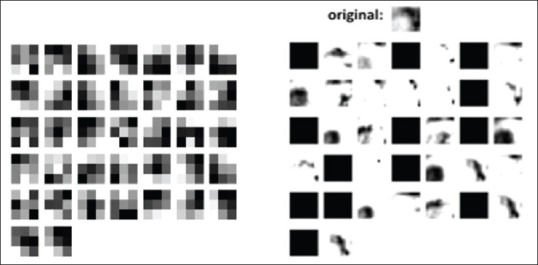

Figure 10 (left) shows all 32 × 3 × 3 first-layer convolutional feature vectors obtained from the trained network. The initial layer of the convolutional network mainly focuses learning on the edge and curve features of the input image. Figure 10 (right) represents the result of the convolution of the feature vectors with the 16 × 16 image producing a 32 × 14 × 14 image.
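The 14 × 14 output size follows from the standard convolution arithmetic, assuming a stride of 1 and no padding (an assumption consistent with the reported dimensions, since 16 − 3 + 1 = 14). A short sketch, with a hypothetical helper name:

```python
def conv_output_size(input_size, kernel_size, stride=1, padding=0):
    """Spatial output size of a convolution along one dimension."""
    return (input_size + 2 * padding - kernel_size) // stride + 1


# 16 x 16 input patch, 3 x 3 filters, 32 filters as in the Figure 10 caption.
side = conv_output_size(16, 3)
first_layer_shape = (32, side, side)
```

Each of the 32 filters therefore produces one 14 × 14 feature map from a single 16 × 16 V-plane patch.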

Figure 10.

32 × 3 × 3 convolutional neural network filters and 32 × 14 × 14 convolved output in first layer

The trained network model is saved along with the weights and filter coefficients. This saved model is loaded back to test on the remaining images of the 71-image and 62-image datasets (121 images) by classifying individual patches generated from each image to assess nuclei detection accuracy. The location of every superpixel extracted from the original image is saved as a labeled image. The results of classification are mapped with the labeled image to finally obtain a binary nuclei mask from the corresponding original image. The nuclei detection rate on the test images is then calculated by manually counting all 108,635 original ground truth nuclei truly detected and those falsely detected by the algorithm.
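Mapping the patch classifications back onto the labeled superpixel image can be sketched as follows. The function name, the dictionary of per-superpixel predictions, and the tiny label matrix are assumptions for illustration; the class convention (0 for nuclei, 1 for background) follows the labels given in the data generation section.

```python
import numpy as np

NUCLEI_CLASS = 0  # the text assigns label "0" to nuclei and "1" to background


def build_nuclei_mask(superpixel_labels, predicted_class):
    """Mark every pixel whose superpixel was classified as nuclei.

    predicted_class maps a superpixel id to the class predicted for its patch.
    """
    nuclei_ids = [sp for sp, cls in predicted_class.items() if cls == NUCLEI_CLASS]
    return np.isin(superpixel_labels, nuclei_ids)


# Hypothetical labeled image with three superpixels and their predicted classes.
labels = np.array([[1, 1, 2],
                   [3, 3, 2]])
predictions = {1: 1, 2: 0, 3: 1}
nuclei_mask = build_nuclei_mask(labels, predictions)
```

Only superpixel 2 is predicted as nuclei in this example, so the resulting binary mask is true in the right-hand column and false elsewhere.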

Experimental results and analysis

Experimental results

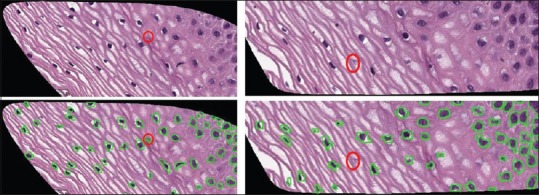

The proposed algorithm is applied on both 71-image and 62-image datasets, using six images from each of the datasets for training the CNN. The remaining images are used for testing the trained model. The training set and test set are disjoint. Figure 11 depicts the nuclei mask generated with nuclei mask boundaries marked in green.

Figure 11.

Nuclei masks (green) superimposed on the original image

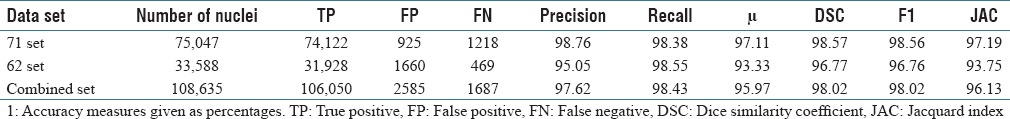

The DL algorithm applied to both the 71-image dataset and the 62-image dataset shows overall segmentation accuracy of 97.11% and 93.33%, respectively. Finally, the overall segmentation accuracy of the combined set is 95.97%.

The accuracy of nuclei detection is calculated on a per-nucleus basis by manually recording the true positives (TP) (i.e., the number of nuclei successfully detected), false negatives (FN) (i.e., the number of nuclei not detected), and false positives (FP) (i.e., the number of nonnuclei objects found). Using the TP, FP, and FN totals, accuracy measures are calculated,[25] including precision, recall, accuracy (μ), Dice similarity coefficient (DSC), harmonic mean of precision and recall (F1), and Jaccard index (Equations 1–6). Table 1 shows these accuracy measures for the 62-image, 71-image, and combined datasets.
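As an illustration of how such object-based measures follow from the recorded counts, the sketch below uses the standard definitions of precision, recall, F1, Dice similarity coefficient, and Jaccard index. The function name and the counts are hypothetical and are not results from this study; the accuracy measure μ is omitted because its exact definition is given only in Equations 1–6.

```python
def detection_metrics(tp, fp, fn):
    """Standard object-based detection measures from TP, FP, and FN counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    dice = 2 * tp / (2 * tp + fp + fn)
    jaccard = tp / (tp + fp + fn)
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "dice": dice,
        "jaccard": jaccard,
    }


# Hypothetical counts for illustration only.
metrics = detection_metrics(tp=90, fp=10, fn=5)
```

With object counts, the Dice coefficient and F1 score coincide under these definitions, and the Jaccard index is always the smaller of the two overlap measures.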

Table 1.

Nuclei detection results using the deep learning superpixel approach

It is observed that if smaller size superpixels are considered, that is, if finer localization is done, the final nuclei masks are better. In addition, a deeper CNN shows improved classification results when compared to a shallow CNN.

Analysis of results

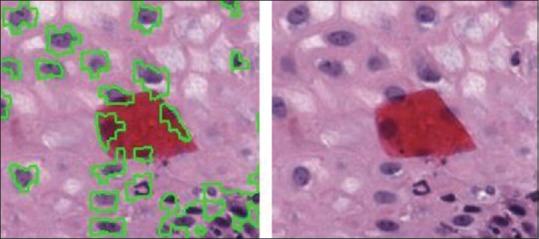

Here, the results obtained above are compared with the results from benchmark algorithms. The following images represent the FP and FN cases. Figure 12 (left) represents an FP condition where false nuclei detection is observed. The circled portion shows a region where no nucleus is present in the original image but one is detected, with a green contour around the FP object boundary. Figure 12 (right) shows a nucleus misclassified as background. The undetected nucleus is marked in the original image, but there is no contour around the marked nucleus. Both FP and FN cases lower overall object-based detection accuracy.

Figure 12.

Examples of false positive (left) and false negative (right) results. Note variable staining

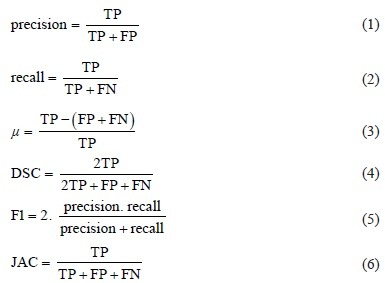

Equations 1-6. Nuclei detection accuracy given TP and TN.

The presence of red stains on the image samples always poses challenges in nuclei detection as the stains are falsely detected as nuclei by various algorithms, yet some nuclei may lie under red stains. The proposed algorithm has overcome this challenge by detecting the nuclei even under the red stains [Figure 13]. The training process of the CNN allows learning about this feature from the ground truth images.

Figure 13.

Nuclei detected even under red stains

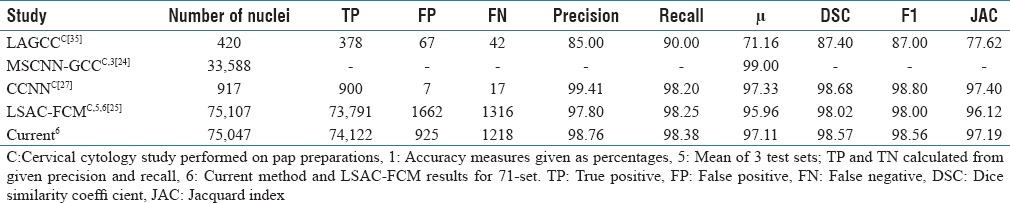

Comparison of results

This article presents a DL-based nuclei segmentation approach, using superpixel extraction followed by a CNN classifier. The algorithm has achieved an overall accuracy μ of 96.0% on the combined set, with 97.11% accuracy achieved on the 71-image dataset [Table 1], outperforming previous cervical histopathology nuclei detection approaches. Previously, segmentation based on K-means clustering followed by mathematical morphology operations[12] produced an overall recall estimated at 89.5% on the 62-image dataset. The level set method and fuzzy C-means clustering[28] approach on the 71-image dataset achieved 96.47% accuracy in comparison to the current 97.11% accuracy. Some recent results in cervical cytology nuclei detection have produced very high nuclei detection rates (object-based results) [Table 2].[30,45] Nuclei detection in cervical cytology images is not comparable to nuclear detection in histopathology images. As Irshad et al. noted, nuclei segmentation “is particularly difficult on pathology images.”[14] Cervical cytology images have “well-separated nuclei and the absence of complicated tissue structures,” while most nuclei in histopathology images are “often part of structures presenting complex and irregular visual aspects.”[14] In addition, we have found that cytology images have a greater contrast and fewer nuclear mimics.

Table 2.

Cervical nuclear detection versus current deep learning superpixel approach

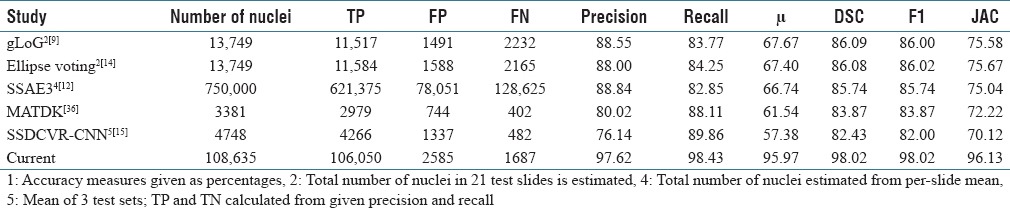

Table 2 compares the current DL superpixel nuclei results with previous cervical nuclei detection studies, with results for all studies using object-based scoring. The current method outperforms the previous cervical histopathology study. Table 3 compares the current study with recent histopathology nuclei detection studies reported for various tissues, using object-based scoring. This object identification accuracy, in comparison to pixel-based nuclear outline accuracy, may be the better of the two measures, because once a nucleus is known with high assurance, then outlines, texture, and other characteristics can be scored. The current method for nuclei object detection outperforms all previous approaches.

Table 3.

General nuclei detection results versus current deep learning superpixel approach

There has been a noticeable trend recently in the number of studies using DL for nuclei detection. DL is a powerful technique for nuclei detection; with sufficient numbers of nuclei, DL yields superior performance.[13] Yet, the general enthusiasm about DL techniques should be tempered with the reality that datasets often have insufficient samples to allow learning of nuclei characteristics that vary significantly; besides nuclei size, shape, and internal features, nuclear staining varies widely.[20] Since pathologist time is a scarce resource, the number of pathologist-marked nuclei in databases remains over two orders of magnitude lower than the numbers of test nuclei in large test sets; nuclei detection results are often estimated from samples of marked nuclei.[12] In some recent studies, detection accuracy for conventional techniques, which included incorporation of higher level knowledge, e.g., nuclear edge symmetry, surpassed DL results [Table 3].[9,12,14,20,46]

Other studies in histopathology have surpassed DL results by combining conventional techniques with DL techniques. Zhong et al. fused information from supervised and DL approaches. In comparing multiple machine-learning strategies, it was found that the combination of supervised cellular morphology features and predictive sparse decomposition DL features provided the best separation of benign and malignant histology sections.[47] Wang et al. were able to detect mitosis in breast cancer histopathology images using the combined manually tuned cellular morphology data and convolutional neural net features.[48] Arevalo et al. added an interpretable layer they called “digital staining,” to improve their DL approach to classification of basal cell carcinoma.[49] Of interest, the handcrafted layer finds the area of interest, reproducing the high-level search strategy of the expert pathologist.

Additional higher level knowledge has been used to separate nuclei which touch or overlap in multiple studies. However, the higher-level knowledge which pathology specialists use most extensively is the overall architecture present in the arrangement of cells and nuclei in the histopathology image. Thus, certain patterns, such as the gradient of nuclear atypia from basal layer to surface layer in carcinoma in situ, changes as the CIN grade increases, and different patterns of a certain type of cancer, can all provide critical diagnostic information. There is an interaction between these higher-level patterns and nuclei detection; not all nuclei are of equal importance in contributing to the diagnosis. Future studies could incorporate higher-level architectural patterns in the detection of critical cellular components such as nuclei. Thus, higher-level architectural knowledge such as nuclear distribution obtained by conventional image processing techniques fused with DL techniques will be used to advantage in automated diagnosis in the future. Since much higher-level histopathology knowledge is domain-specific, the longstanding goal of applying a single method to multiple histopathology domains remains elusive.

CONCLUSION

The proposed method of DL-based nuclei segmentation with superpixel analysis has shown improved segmentation results in comparison to state-of-the-art methods. The proposed method, oversegmenting the original image by generating superpixels, allows the CNN to learn the localized features better in the training phase. The trained model is finally applied on a larger dataset. Future work includes the application of other CNN architectures as well as fusion of higher-level knowledge with the CNN classifier. Features obtained from the detected nuclei will be used in automatic CIN classification.

Financial support and sponsorship

This research was supported (in part) by the Intramural Research Program of the National Institutes of Health, National Library of Medicine, and Lister Hill National Center for Biomedical Communications.

Conflicts of interest

There are no conflicts of interest.

Acknowledgments

We gratefully acknowledge the medical expertise and collaboration of Dr. Mark Schiffman and Dr. Nicolas Wentzensen, both of the National Cancer Institute's Division of Cancer Epidemiology and Genetics.

Footnotes

Available FREE in open access from: http://www.jpathinformatics.org/text.asp?2018/9/1/5/226565

REFERENCES

- 1.McAuliffe MJ, Lalonde FM, McGarry D, Gandler W, Csaky K, Trus BL. Medical image processing, analysis and visualization in clinical research. Proceedings of the 14th IEEE Symposium on Computer-Based Medical Systems. Bethesda, MD. 2001:381–6.

- 2.Ferlay J, Soerjomataram I, Dikshit R, Eser S, Mathers C, Rebelo M, et al. Cancer incidence and mortality worldwide: Sources, methods and major patterns in GLOBOCAN 2012. Int J Cancer. 2015;136:E359–86. doi: 10.1002/ijc.29210.

- 3.He L, Long LR, Antani S, Thoma GR. Histology image analysis for carcinoma detection and grading. Comput Methods Programs Biomed. 2012;107:538–56. doi: 10.1016/j.cmpb.2011.12.007.

- 4.Kumar V, Abba A, Fausto N, Aster J. 9th ed. Philadelphia, PA: Elsevier; 2014. The female genital tract. In: Robbins and Cotran Pathologic Basis of Disease. Ch 22.

- 5.He L, Long LR, Antani S, Thoma GR. Vol. 15. Hong Kong: iConcept Press; 2011. Computer assisted diagnosis in histopathology. In: Sequence and Genome Analysis: Methods and Applications; pp. 271–87.

- 6.Wang Y, Crookes D, Eldin OS, Wang S, Hamilton P, Diamond J. Assisted diagnosis of cervical intraepithelial neoplasia (CIN). IEEE J Sel Top Signal Process. 2009;3:112–21.

- 7.Egner JR. AJCC cancer staging manual. JAMA. 2010;304:1726.

- 8.He L, Long LR, Antani S, Thoma GR. Hong Kong: iConcept Press; 2011. Computer assisted diagnosis in histopathology. In: ZhaoF Z. (Ed.) Sequence and Genome Analysis: Methods and Applications; pp. 271–87.

- 9.McCluggage WG, Walsh MY, Thornton CM, Hamilton PW, Date A, Caughley LM, et al. Inter- and intra-observer variation in the histopathological reporting of cervical squamous intraepithelial lesions using a modified Bethesda grading system. Br J Obstet Gynaecol. 1998;105:206–10. doi: 10.1111/j.1471-0528.1998.tb10054.x.

- 10.Ismail SM, Colclough AB, Dinnen JS, Eakins D, Evans DM, Gradwell E, et al. Reporting cervical intra-epithelial neoplasia (CIN): Intra- and interpathologist variation and factors associated with disagreement. Histopathology. 1990;16:371–6. doi: 10.1111/j.1365-2559.1990.tb01141.x.

- 11.De S, Stanley RJ, Lu C, Long R, Antani S, Thoma G, et al. A fusion-based approach for uterine cervical cancer histology image classification. Comput Med Imaging Graph. 2013;37:475–87. doi: 10.1016/j.compmedimag.2013.08.001.

- 12.Guo P, Banerjee K, Joe Stanley R, Long R, Antani S, Thoma G, et al. Nuclei-based features for uterine cervical cancer histology image analysis with fusion-based classification. IEEE J Biomed Health Inform. 2016;20:1595–607. doi: 10.1109/JBHI.2015.2483318.

- 13.Xing F, Yang L. Robust nucleus/cell detection and segmentation in digital pathology and microscopy images: A comprehensive review. IEEE Rev Biomed Eng. 2016;9:234–63. doi: 10.1109/RBME.2016.2515127.

- 14.Irshad H, Veillard A, Roux L, Racoceanu D. Methods for nuclei detection, segmentation, and classification in digital histopathology: A review-current status and future potential. IEEE Rev Biomed Eng. 2014;7:97–114. doi: 10.1109/RBME.2013.2295804.

- 15.Al-Kofahi Y, Lassoued W, Lee W, Roysam B. Improved automatic detection and segmentation of cell nuclei in histopathology images. IEEE Trans Biomed Eng. 2010;57:841–52. doi: 10.1109/TBME.2009.2035102.

- 16.Kong H, Akakin HC, Sarma SE. A generalized Laplacian of Gaussian filter for blob detection and its applications. IEEE Trans Cybern. 2013;43:1719–33. doi: 10.1109/TSMCB.2012.2228639.

- 17.Xu H, Lu C, Berendt R, Jha N, Mandal M. Automatic nuclei detection based on generalized Laplacian of Gaussian filters. IEEE J Biomed Health Inform. 2017;21:826–37. doi: 10.1109/JBHI.2016.2544245.

- 18.Xie Y, Kong X, Xing F, Liu F, Su H, Yang L. Deep voting: A robust approach toward nucleus localization in microscopy images. In: Navab N, Hornegger J, Wells WM, Frangi AF, editors. Proceedings of the 18th International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI 2015), Part III. Munich, Germany. Berlin: Springer; 2015. pp. 374–82.

- 19.Xu J, Xiang L, Liu Q, Gilmore H, Wu J, Tang J, et al. Stacked sparse autoencoder (SSAE) for nuclei detection on breast cancer histopathology images. IEEE Trans Med Imaging. 2016;35:119–30. doi: 10.1109/TMI.2015.2458702.

- 20.Lu C, Xu H, Xu J, Gilmore H, Mandal M, Madabhushi A, et al. Multi-pass adaptive voting for nuclei detection in histopathological images. Sci Rep. 2016;6:33985. doi: 10.1038/srep33985.

- 21.Lu C, Mandal M. An efficient technique for nuclei segmentation in histopathological images based on morphological reconstructions and region adaptive threshold. Pattern Recognit Lett. 2014;18:1729–41.

- 22.Xing F, Xie Y, Yang L. An automatic learning-based framework for robust nucleus segmentation. IEEE Trans Med Imaging. 2016;35:550–66. doi: 10.1109/TMI.2015.2481436.

- 23.Ricketts IW, Banda-Gamboa H, Cairns AY, Hussein K, Hipkiss W, McKenna S, et al. London, UK: IEE Colloquium; Towards the automated prescreening of cervical smears. In: Applications of Image Processing in Mass Health Screening; pp. 7/1–7/4.

- 24.Thomas AD, Davies T, Luxmoore AR. The Hough transform for locating cell nuclei. Anal Quant Cytol Histol. 1992;14:347–53.

- 25.Walker RF, Jackway P, Lovell B, Longstaff ID. Brisbane, Australia: ANZIIS; 1994. Classification of cervical cell nuclei using morphological segmentation and textural feature extraction. Proceedings Australia New Zealand Conference on Intelligent Information Systems; pp. 297–301.

- 26.Krishnan N, Nandini Sujatha SN. A fast geometric active contour model with automatic region grid. Coimbatore, India: IEEE International Conference on Computational Intelligence and Computing Research (ICCIC) 2010:361–8.

- 27.Fan J, Wang R, Li S, Zhang C. 2012. Automated cervical cell image segmentation using level set based active contour model. 12th International Conference on Control, Automation, Robotics and Vision (ICARCV), Guangzhou, China; pp. 877–82.

- 28.Edulapuram R, Stanley RJ, Long R, Antani S, Thoma G, Zuna R, et al. Porto, Portugal: 2017. Nuclei segmentation using a level set active contour method and spatial fuzzy c-means clustering. Proceedings 12th International Conference on Computer Vision Theory and Applications VISIGRAPP; pp. 195–202.

- 29.Guan T, Zhou D, Fan W, Peng K, Xu C, Cai X. Bali, Indonesia: IEEE ROBIO; 2014. Nuclei enhancement and segmentation in color cervical smear images. 2014 IEEE International Conference on Robotics and Biomimetics; pp. 107–12.

- 30.Song Y, Zhang L, Chen S, Ni D, Li B, Zhou Y, et al. Chicago, IL: 2014. A deep learning based framework for accurate segmentation of cervical cytoplasm and nuclei. Conference Proceedings 35th Annual International Conference IEEE Engineering in Medicine and Biology Society; pp. 2903–6.

- 31.Song Y, Zhang L, Chen S, Ni D, Lei B, Wang T, et al. Accurate segmentation of cervical cytoplasm and nuclei based on multiscale convolutional network and graph partitioning. IEEE Trans Biomed Eng. 2015;62:2421–33. doi: 10.1109/TBME.2015.2430895.

- 32.Zhang L, Lu L, Nogues I, Summers RM, Liu S, Yao J, et al. DeepPap: Deep convolutional networks for cervical cell classification. IEEE J Biomed Health Inform. 2017;21:1633–43. doi: 10.1109/JBHI.2017.2705583.

- 33.Badrinarayanan V, Handa A, Cipolla R. SegNet: A deep convolutional encoder-decoder architecture for robust semantic pixel-wise labelling. arXiv:1505.07293v1. 2015. [Last accessed on 2018 Jan 15]. Available from: https://www.arxiv.org/pdf/1505.07293.pdf .

- 34.Ronneberger O, Fischer P, Brox T. U-Net: Convolutional networks for biomedical image segmentation. In: Navab N, Hornegger J, Wells WM, Frangi AF, editors. Proceedings of the 18th International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI 2015), Part III. Munich, Germany. Cham: Springer International Publishing; 2015. pp. 234–41.

- 35.Boyat AK, Joshi BK. A review paper: Noise models in digital image processing. Signals Image Process. 2015;6:63–75. [Last accessed on 2018 Jan 16]. Available from: https://www.arxiv.org/ftp/arxiv/papers/1505/1505.03489.pdf .

- 36.Hill B, Roger T, Vorhagen FW. Comparative analysis of the quantization of color spaces on the basis of the CIELAB color-difference formula. ACM Trans Graph. 1997;16:109–54.

- 37.Achanta R, Shaji A, Smith K, Lucchi A, Fua P, Süsstrunk S, et al. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans Pattern Anal Mach Intell. 2012;34:2274–82. doi: 10.1109/TPAMI.2012.120.

- 38.Boykov YY, Jolly MP. Vancouver, Canada: ICCV; 2001. Interactive graph cuts for optimal boundary and region segmentation of objects in N-D images. Proceedings of the 8th IEEE International Conference on Computer Vision; pp. 105–12.

- 39.Shi J, Malik J. Normalized cuts and image segmentation. IEEE Trans Pattern Anal Mach Intell. 2000;22:888–905.

- 40.Comaniciu D, Meer P. Mean shift: A robust approach toward feature space analysis. IEEE Trans Pattern Anal Mach Intell. 2002;24:603–19.

- 41.Felzenszwalb PF, Huttenlocher DP. Efficient graph-based image segmentation. Int J Comput Vis. 2004;59:167–81.

- 42.LeCun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proc IEEE. 1998;86:2278–324.

- 43.Nair V, Hinton GE. Haifa, Israel: Omnipress; 2010. Rectified linear units improve restricted Boltzmann machines. Proceedings of the 27th International Conference on Machine Learning; pp. 21–4.

- 44.Glorot X, Bengio Y. Vol. 9. Sardinia, Italy: 2010. Understanding the difficulty of training deep feedforward neural networks. Proceedings of the 13th International Conference on Artificial Intelligence and Statistics; pp. 249–56.

- 45.Zhang L, Kong H, Chin CT, Liu S, Chen Z, Wang T, et al. Segmentation of cytoplasm and nuclei of abnormal cells in cervical cytology using global and local graph cuts. Comput Med Imaging Graph. 2014;38:369–80. doi: 10.1016/j.compmedimag.2014.02.001.

- 46.Lu C, Mahmood M, Jha N, Mandal M. A robust automatic nuclei segmentation technique for quantitative histopathological image analysis. Anal Quant Cytopathol Histpathol. 2012;34:296–308.

- 47.Zhong C, Han J, Borowsky A, Parvin B, Wang Y, Chang H, et al. When machine vision meets histology: A comparative evaluation of model architecture for classification of histology sections. Med Image Anal. 2017;35:530–43. doi: 10.1016/j.media.2016.08.010.

- 48.Wang H, Cruz-Roa A, Basavanhally A, Gilmore H, Shih N, Feldman M, et al. Mitosis detection in breast cancer pathology images by combining handcrafted and convolutional neural network features. J Med Imaging (Bellingham) 2014;1:034003. doi: 10.1117/1.JMI.1.3.034003.

- 49.Arevalo J, Cruz-Roa A, Arias V, Romero E, González FA. An unsupervised feature learning framework for basal cell carcinoma image analysis. Artif Intell Med. 2015;64:131–45. doi: 10.1016/j.artmed.2015.04.004.