Abstract

The number of publications on learning analytics (LA) in the field of medical education is still markedly low, despite recognition of the value of the discipline in the medical education literature and the exponential growth of publications in other fields. This necessitates raising awareness of the research methods and potential benefits of LA. The aim of this paper was to offer a methodological systematic review of empirical LA research in the field of medical education and a general overview of the common methods used in the field. A search was done in the Medline database using the term “learning analytics.” Inclusion criteria included empirical original research articles investigating LA using qualitative, quantitative, or mixed methodologies. Articles were also required to be written in English, published in a scholarly peer-reviewed journal, and have dedicated sections for methods and results. The Medline search resulted in only six articles fulfilling the inclusion criteria for this review. Most of the studies collected data about learners from learning management systems or online learning resources. Analysis used mostly quantitative methods, including descriptive statistics and correlation tests, with regression models in two studies. Patterns of online behavior and usage of digital resources, as well as prediction of achievement, were the outcomes most studies investigated. Research about LA in the field of medical education is still in its infancy, with more questions than answers. The early studies are encouraging and showed that patterns of online learning can be easily revealed and that students’ performance can be predicted.

Keywords: Learning analytics, medical education, review

Introduction

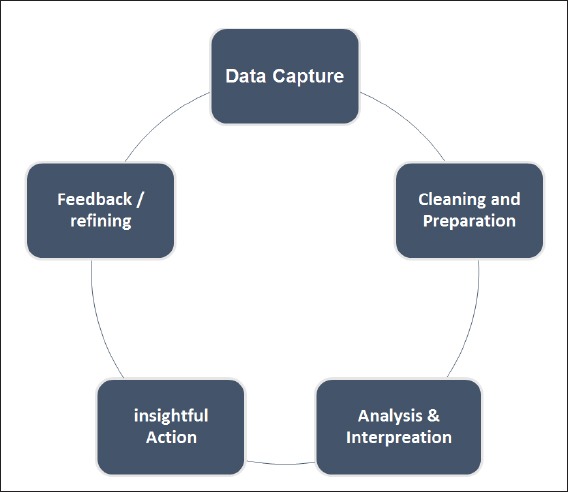

Learning analytics (LA) is a rapidly evolving research discipline that aims at the “measurement, collection, analysis, and reporting of data about learners and their contexts, for purposes of understanding and optimizing learning and the environments in which it occurs.”[1] The field was formally recognized after this first widely known definition was presented at the first International Conference on Learning Analytics and Knowledge (LAK, 2011).[1] The process of LA can be conceptualized as a cycle, as shown in Figure 1. The first stage involves capturing data produced by learners from various sources, mainly the virtual learning environment or learning management system (LMS), registration systems, assessment platforms, and library systems. The second stage involves data preparation, analysis, and interpretation. The third stage is to create meaningful interventions using the insights generated from data analysis, such as proactive intervention, personalized support, or adaptive content. The final stage is to evaluate the intervention; the feedback is then used to improve the whole process.[1-3]

Figure 1. The learning analytics cycle

A General Overview of LA Research Methodology: Data Collection

Both quantitative and qualitative data collection methods are used in empirical LA research. Nonetheless, the majority of studies favor the quantitative approach. In quantitative studies, the most common data captured about learners are those generated by the LMS, such as time on task, the number of logins, and participation in assessments, assignments, chats, or forum discussions.[4-8] To increase the scope of data collected and improve the accuracy of prediction, demographic data stored in registration systems or student information systems, such as age, gender, and socioeconomic status, were added.[9-14] To account for the social dimension of online activity, some researchers added social network analysis of online interactions to the demographic and LMS data.[8,15] For a more in-depth analysis of online forums beyond simple counts of replies and posts, Romero et al.[16] used qualitative, quantitative, and social network analysis to predict student performance. While not exactly fitting the strict definition of LA, Tempelaar et al.[14] extended the scope of data collected to include self-reported surveys about learning styles, learning motivation, and engagement, along with data from registration systems and the LMS, and so did Dawson et al. by including motivation survey results.[15]
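As an illustration of the kind of indicators described above, the following minimal Python sketch aggregates raw LMS events into per-student counts of logins, forum posts, and assessment attempts, plus total time on task. The event format, field names, and values are assumptions made for this example; they do not come from any particular LMS or from the studies cited.

```python
from collections import defaultdict

# Hypothetical LMS event log: (student_id, event_type, minutes_spent).
# Field names and values are illustrative only.
events = [
    ("s01", "login", 0),
    ("s01", "resource_view", 12),
    ("s01", "forum_post", 5),
    ("s02", "login", 0),
    ("s02", "quiz_attempt", 20),
    ("s01", "login", 0),
    ("s01", "quiz_attempt", 15),
]


def summarize(event_log):
    """Aggregate raw events into per-student activity indicators."""
    summary = defaultdict(lambda: {
        "logins": 0,
        "forum_posts": 0,
        "quiz_attempts": 0,
        "minutes_on_task": 0,
    })
    for student_id, event_type, minutes in event_log:
        row = summary[student_id]
        if event_type == "login":
            row["logins"] += 1
        elif event_type == "forum_post":
            row["forum_posts"] += 1
        elif event_type == "quiz_attempt":
            row["quiz_attempts"] += 1
        # Time on task is summed over every event type.
        row["minutes_on_task"] += minutes
    return dict(summary)


indicators = summarize(events)
for student_id, row in sorted(indicators.items()):
    print(student_id, row)
```

In practice, such per-student rows would be joined with demographic or survey data before any modeling step.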

Data collected using qualitative methods included open-ended questions about students’ opinions of the usefulness of LA visualizations and how they can be used to support learning,[17] and semi-structured interviews about students’ perception of LA and their understanding of the different metrics.[18] They also included interviews with registered students to understand the way they use the LMS,[19] focus group interviews to evaluate the design of a tool,[20] and semi-structured focus group interviews with instructors about patterns of LA data usage.[21]

Quantitative data collection methods capture, for example, the number of times a student used a certain learning module or how long they used an online tool. However, they fall short of answering particular questions, such as why one learning module was used and others were not, what the motive behind usage was, and what the user experience was like. Furthermore, implementing LA requires collecting data from stakeholders such as students, teachers, administrators, and technicians. This necessitates collecting data about how they perceive the technology, what their feedback on the implementation is, and what suggestions they have for improving the current setup.

Whereas qualitative methods enable researchers and administrators alike to gain a deep understanding of the practice and process of LA and to answer the “why” question, they are difficult to verify objectively, require skillful interviewers, and are resource-intensive in terms of time and effort. These shortcomings can be a serious hurdle in a field like LA that is built around the premise of automated data processing and recommendations. This problem might be solvable with qualitative data mining techniques.

There is wide variation in what each study considers LA data. However, the common approach is that the nature of the instructional conditions largely dictates which data are collected. In that sense, it would be relevant to include social network analysis for courses offering interactive discussions, financial data for paid colleges, and library access for courses requiring research duties.[13] Relatedly, qualitative methods may be used in studies evaluating the implementation of LA or proposing a new framework for assessing the feasibility of a tool.

Data analysis

As with data collection, studies vary widely in their approach to data analysis and in the outcome they are trying to achieve. Early studies used simple bivariate correlations to predict students’ performance[4,5] and were able to find cues to how students perform. Mazza et al.[6] pioneered the idea of using visual dashboards that show a summary of students’ activity. They created a tool, “CourseVis,” which was intended to help teachers discover students in need of attention. Its evaluation included a focus group discussion with five teachers and a semi-structured interview with another three teachers who used the improved version. Visual dashboards later became widely used to give feedback and information to teachers and students.[9,11,12]
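The bivariate correlations mentioned above can be illustrated with a short, self-contained Python sketch that computes Pearson's correlation coefficient between an activity measure and a grade. The function name `pearson_r` and the sample values are assumptions for illustration; they are not data from the cited studies.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products and sums of squared deviations.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# Hypothetical data: number of logins and final grade for five students.
logins = [5, 12, 8, 20, 15]
grades = [55, 70, 62, 88, 75]

r = pearson_r(logins, grades)
print(f"r = {r:.3f}")
```

A coefficient close to 1 or -1 indicates a strong linear association; it does not by itself establish that the activity causes the outcome.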

Ramos et al.[7] used a stepwise multiple regression model to predict the final outcome of students. To further validate the findings, they performed cross-validation, using the equation generated from the “screening sample” to predict outcomes in a second sample, the “calibration sample.” Different regression methods were subsequently adopted and became the most widely used approach to analysis, although the goal shifted to identifying students who are at risk of failing rather than just predicting the final grade.[8,11,13,14,16] Logistic regression (LR) models are among the most widely used methods for predicting a dichotomous outcome. LR has gained popularity in LA because its assumptions are easy to fulfill and because powerful software is available to perform the test and comprehensively analyze the performance of the technique.[22]
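To make the idea of predicting a dichotomous outcome concrete, here is a minimal sketch of logistic regression fitted by plain gradient descent in pure Python. It is not the procedure used in any of the cited studies, which typically relied on statistical software; the feature names, the toy data, and the parameters `step_size` and `epochs` are assumptions chosen for illustration.

```python
from math import exp


def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + exp(-z))
    ez = exp(z)
    return ez / (1.0 + ez)


def predict_proba(x, weights, bias):
    """Predicted probability that the outcome is 1 (here: at risk)."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


def fit_logistic(rows, labels, step_size=0.5, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log-loss."""
    n = len(rows)
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            error = predict_proba(x, weights, bias) - y
            for j in range(n_features):
                grad_w[j] += error * x[j]
            grad_b += error
        weights = [w - step_size * g / n for w, g in zip(weights, grad_w)]
        bias -= step_size * grad_b / n
    return weights, bias


# Hypothetical features scaled to [0, 1]: (login activity, formative score).
# Label 1 means the student failed the course; 0 means the student passed.
rows = [[0.1, 0.2], [0.2, 0.1], [0.3, 0.4], [0.2, 0.3],
        [0.7, 0.8], [0.8, 0.6], [0.9, 0.9], [0.6, 0.7]]
labels = [1, 1, 1, 1, 0, 0, 0, 0]

weights, bias = fit_logistic(rows, labels)
risk_low_activity = predict_proba([0.15, 0.2], weights, bias)
risk_high_activity = predict_proba([0.85, 0.8], weights, bias)
print(risk_low_activity > 0.5, risk_high_activity < 0.5)
```

A student would be flagged as at risk when the predicted probability exceeds a chosen threshold, commonly 0.5; the threshold is itself a design choice that trades missed cases against false alarms.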

Exploring new analytical models that might prove more effective is common practice in emergent LA research. Examples include the proprietary Course Signals algorithm (a predictive student success algorithm), used to identify students in need of support;[9] the random forest algorithms used by Spoon et al.[23] to identify which group of students might need a supplementary module; and the classifier algorithm used by Romero et al.,[16] who argued that student success or failure is a classification problem. Wolff et al.,[10] from the Open University (one of the world’s largest distance education institutions, with interesting and probably well-developed work in analytics), used the General Unary Hypotheses Automaton (GUHA) method for data analysis. The main hypothesis was that identifying failing students would be more accurate if they were compared with peers in similar conditions or with their own previous levels of activity. Their work later resulted in a more mature and probably more complex system called “OU Analyse,” reported by Kuzilek.[11] OU Analyse uses four predictive models: a Bayesian classifier for finding the most significant LMS resources, k-Nearest Neighbors (k-NN) and a classification and regression tree for the analysis of demographic/static data, and k-NN with LMS data. Together, these models are used to predict which students are at risk and to identify which kind of support they might need.
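Of the techniques listed above, k-NN is the simplest to sketch. The following pure-Python example classifies a student by majority vote among the k most similar students in a labeled training set. It is a generic illustration only and does not reproduce OU Analyse; the features, labels, and function name `knn_predict` are assumptions.

```python
from collections import Counter
from math import dist


def knn_predict(train_rows, train_labels, query, k=3):
    """Label a query point by majority vote among its k nearest neighbors."""
    # Sort training points by Euclidean distance to the query.
    ranked = sorted(zip(train_rows, train_labels),
                    key=lambda pair: dist(pair[0], query))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical features scaled to [0, 1]: (LMS activity, assessment score).
train_rows = [(0.1, 0.2), (0.2, 0.1), (0.3, 0.3),
              (0.8, 0.9), (0.9, 0.7), (0.7, 0.8)]
train_labels = ["at_risk", "at_risk", "at_risk",
                "on_track", "on_track", "on_track"]

print(knn_predict(train_rows, train_labels, (0.25, 0.2)))
print(knn_predict(train_rows, train_labels, (0.85, 0.8)))
```

Because k-NN relies on distances, features measured on different scales are usually normalized first, which is why the toy features here are already scaled to the same range.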

Quantitative analysis methods enable researchers to show correlations, predictions, patterns, or trends of usage. Nevertheless, the addition of qualitative data analysis boosts the robustness of the research and highlights different sides of the problem, such as the “how” and the “why.” Analysis of interviews about visualization enabled Silius et al.[17] to understand why certain visualizations are better than others from the students’ point of view. Students suggested that visualizations need to be clearer, with more hints for easy interpretation. Ott et al.[20] used interviews to get feedback about the infographic LA tool they created; the interviews helped them design a better final version. Wise et al.[18] used structured interviews with students to assess motivations for LA usage; they found that students used LA to set goals for participation and to monitor their behavior, using themselves and others as a reference and yardstick for comparison. Similarly, Mtebe et al.[19] used qualitative methods to address the “how” question, conducting interviews with students to find out how they used LA.

It is apparent from the aforementioned studies that there is no unified approach to data analysis; each approach has its advantages and disadvantages. However, some general conclusions can be drawn. Comparing students to their peers in the same course has proven to be the most efficient way to build an accurate predictive model,[9,10,13] which is why many predictive models were applied on a course-by-course basis.[4,5,8,14] This might be because each course is structurally different, uses distinct LMS features, and imposes a different load on learners.[10,13] A mixed methods approach that gathers and integrates insights from various data sources and stakeholders would have a significant role in the evolution of LA, the tools we use, and the frameworks we work within.[24]

Validity and reliability

Most studies did not use the usual validation methods to test the performance of the predictive models across multiple courses.[10,13] Nonetheless, 10-fold cross-validation was used in two studies.[10,16] Ten-fold cross-validation is a split-sample technique: the dataset is divided into 10 equal parts, nine of which are used as a training dataset and one as a test set, and the procedure is repeated so that each part serves once as the test set. In summary, evidence is growing in favor of individualizing prediction models for each context and against using a one-size-fits-all prediction model.[13]
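The splitting step of 10-fold cross-validation can be sketched in a few lines of Python. The generator below only produces the train/test index sets; fitting and scoring a model on each split is left out. The function name `k_fold_indices`, the sample size of 50, and the fixed random seed are assumptions for illustration.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    # Shuffle once so that folds do not follow the original record order.
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of (nearly) equal size.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test_idx = folds[i]
        train_idx = [idx for j, fold in enumerate(folds) if j != i
                     for idx in fold]
        yield train_idx, test_idx


splits = list(k_fold_indices(50, k=10))
for train_idx, test_idx in splits:
    print(len(train_idx), len(test_idx))
```

Each record appears in exactly one test fold, so averaging the ten test scores gives a performance estimate that uses every record for testing once.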

Objectives of LA research

Predicting students’ performance,[4,5] or identifying students who are at risk of underachieving or failing a course, is the most common objective of studies exploring the potential of LA.[4,7-13,16] For example, Macfadyen and Dawson[8] were able to identify at-risk students with 70.3% accuracy using only LMS data, and the Open University GUHA analysis predicted failure with 88% confidence using both LMS and demographic data.[10] Results from OU Analyse (which included LMS, demographic, and financial data) indicated that identifying at-risk students was possible with an accuracy of 50% at the beginning of the semester; accuracy increased to over 90% by the end of the semester.[11]

The predictors of students’ success varied greatly among studies; some studies found that hits or clicking patterns are the main predictors of success.[5,7,10] Others have emphasized the role of forum postings and social network analysis.[8,16] In one study, formative assessment seemed to be the most important predictor,[14] and in another, indicators of student motivation were reported to play a role.[15] The variation in predictor importance among courses was also evident in studies investigating more than one course at the same time; these studies found that course-specific predictors are much more accurate than generalized predictors applied to all courses.[10,13] This variability in predictor performance is not a sign of inconsistency; rather, it shows the importance of considering teaching methods, instructional design, and the way each LMS feature is used. It also underscores the need to tailor methodologies to specific contexts.[13]

While most studies have investigated the predictors of students’ success for research purposes, some have built “early warning systems” that present results to students and teachers, so that teachers can create the appropriate intervention.[9-12] Alerting students, along with custom interventions, has been shown to improve retention and students’ success rates.[9-11]

LA in medical education

The number of publications on LA in the field of medical education is still markedly low,[25] despite recognition of the value of the discipline in the medical education literature[26,27] and the exponential growth of publications in other fields.[28] The reasons LA in medical education lags behind other educational fields include implementation issues that require advanced technical and analytical skills, ethical and privacy issues, and a lack of awareness of the potential and benefits LA can bring.[25,26,29] A review of LA in medical education does not yet exist; thus, the aim of this paper is to offer a systematic methodological review of empirical LA research in medical education, to shed light on an emerging discipline for medical educators. The hope is that more researchers may appreciate the merits of the field and contribute to the existing volume of research.

Methods

The search was done in the Medline database through the PubMed search engine, using the search term “learning analytics.” The inclusion criteria included empirical original research articles investigating LA using qualitative, quantitative, or mixed methodologies. Articles were also required to be written in English, published in a scholarly peer-reviewed journal, and have dedicated sections for methods and results. The following types of articles were excluded:

- Theoretical discussion of a framework, a concept, or a suggested model without empirically testing the concept.
- Articles that discussed ethical, privacy, policy, or data handling issues.
- Editorials, reviews, opinions, reports, or commentary articles.
- Abstracts of conference papers where no full text is published.

The research questions for this overview are:

- What are the types of data commonly captured about learners?
- What are the types of analytical approaches taken?
- What are the objectives and outcomes of research papers?

Results

A Medline search for the term “learning analytics,” performed on September 13, 2017, resulted in 26 abstracts. One article was excluded as a duplicate publication, leaving 25 unique abstracts. All 25 articles were retrieved and carefully reviewed. Nineteen were excluded: five were opinion articles, four discussed a possible framework or methodology but did not assess it empirically, seven were not relevant to the topic of the study, two discussed the ethics of LA, and one assessed attitudes toward LA.
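The screening counts reported above can be checked with simple arithmetic. The short Python snippet below recomputes the number of included articles from the reported figures; the variable names and category labels are paraphrases introduced for this example.

```python
# Counts as reported in the text above.
abstracts_retrieved = 26
duplicates = 1
excluded = {
    "opinion articles": 5,
    "framework or methodology without empirical assessment": 4,
    "not relevant to the topic": 7,
    "ethics of LA": 2,
    "attitudes toward LA": 1,
}

unique_abstracts = abstracts_retrieved - duplicates
total_excluded = sum(excluded.values())
included = unique_abstracts - total_excluded

print(unique_abstracts, total_excluded, included)
```

The result agrees with the six included studies described in the next paragraph.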

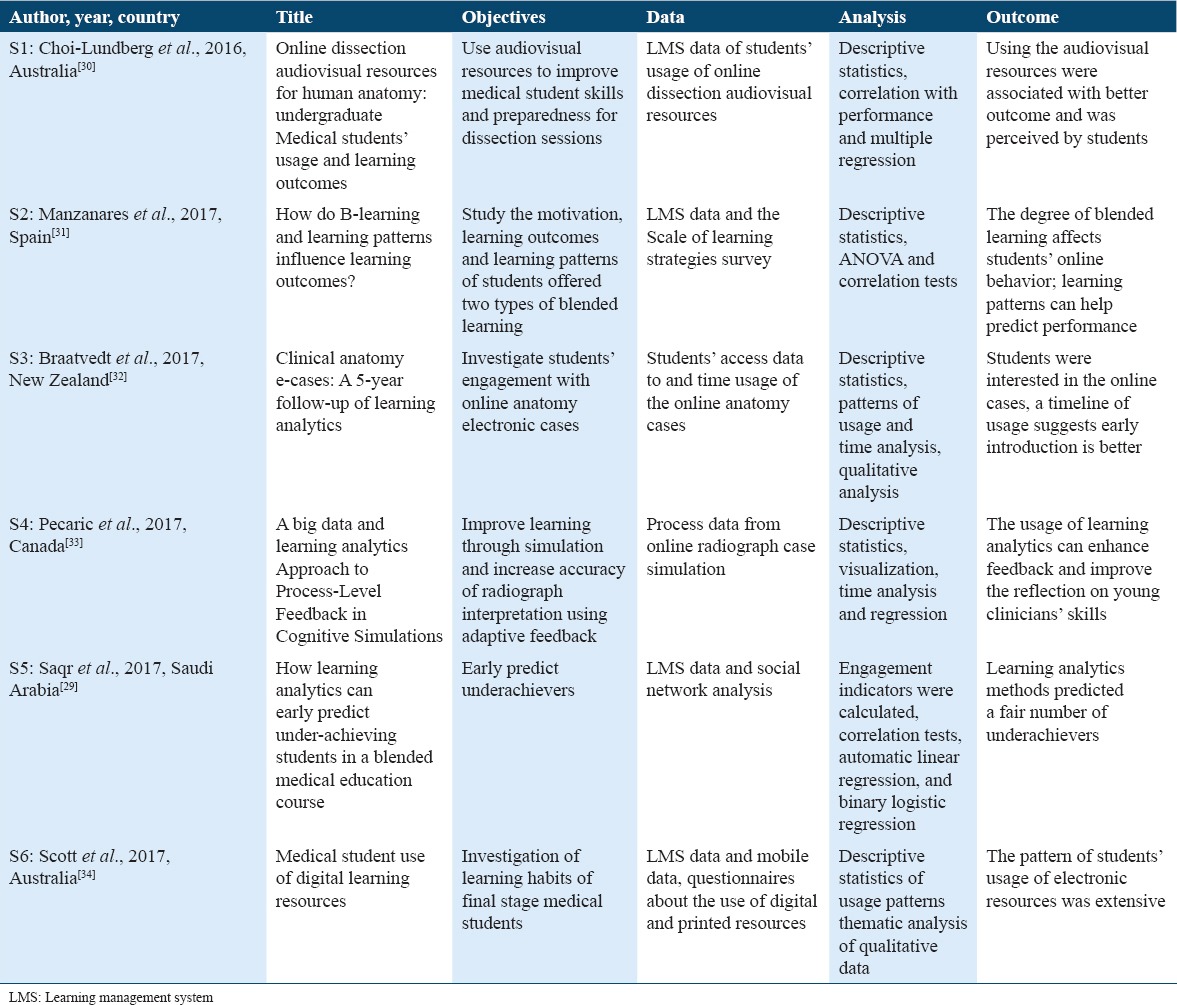

Only six articles were found to be empirical research articles fulfilling the inclusion criteria for this review, as shown in Table 1. These articles were labeled S1 to S6: S1 by Choi-Lundberg et al., 2016, Australia;[30] S2 by Manzanares et al., 2017, Spain;[31] S3 by Braatvedt et al., 2017, New Zealand;[32] S4 by Pecaric et al., 2017, Canada;[33] S5 by Saqr et al., 2017, Saudi Arabia;[29] and S6 by Scott et al., 2017, Australia.[34] All of these articles were published in the past two years: one study (S1) was published in 2016,[30] and the other five (S2, S3, S4, S5, and S6) were published in 2017.[29,31-34]

Table 1. Tabulation of all studies and their methodologies

The objectives of LA research were quite diverse. The aim of S1 was to improve students’ preparedness for dissection sessions; S2 studied the pattern of students’ usage of blended learning; S3 studied the pattern of usage of, and engagement with, anatomy e-cases; S4 aimed to improve feedback and diagnostic skills in radiology simulation; S5 aimed to predict underachievers; and S6 investigated the learning habits and patterns of final-stage clinical students. Regarding data collected, most studies (S1, S2, S5, and S6) collected LMS log data on student access, clicks, and time of usage of online learning resources. The other two studies were more limited in scope, as they collected usage data from a single online resource (anatomy e-cases in S3 and radiograph case simulation in S4). Given that most studies aimed to study the patterns of usage of online resources, the analysis methods were basic in most studies, including descriptive statistics and time pattern analysis; this was the prevalent analysis method in S1, S2, S3, and S6. S4 and S5 included more advanced regression analysis and predictive techniques. Most of the studies included only quantitative methods, except for S3, which included thematic qualitative analysis.

The outcomes of these studies were not far from the objectives they sought. S1 was able to show students’ engagement with the e-cases and that e-cases were associated with better dissection skills. S2 was able to show a correlation between the degree of blending in e-learning and students’ online behavior, and that patterns of online learning can be used to predict performance. S3 reported the pattern of usage of, and engagement with, the online anatomy cases. Similarly, S6 reported the patterns of usage of online resources. S4 was able to use the insights generated by LA to improve feedback given to radiology trainees. Finally, S5 used LA to predict underachieving students at the end of the course and at mid-course.

Discussion

Methodologically, progress in the field of LA can be categorized into three main areas: the scope of data collected, data analysis methods, and the objectives of the research. In data capture, the scope of data collected has advanced considerably, with data gathered across multiple systems and domains to increase predictability, namely the LMS, registration data, library data, demographics, and self-reported surveys. Regarding data analysis, the field has achieved considerable development and understanding of the different models that have proven successful and could be replicated in future research, especially regression models and classifiers. Regarding objectives, several studies have achieved fairly acceptable accuracy in predicting under-achieving students: between 80% and 90% by the end of the course and 50–60% at mid-course. There are also some early warning systems available, and others are being built and improved. Frameworks for intervention are being developed and tested for efficacy across multiple institutions.

The literature reviewed here highlights the idea that research in LA is still exploratory in nature. Some areas need a lot of work. A standard mechanism for data collection and reporting is needed; the mechanism needs to be LMS-independent and use a standard set of metrics that can be shared across multiple systems. An accepted framework for analytics and for classification of outcomes is also required, so that data and results can be shared or compared across institutions. Unexplored areas include the use of artificial intelligence, the role of semantic data mining in adding to predictive accuracy, and data collection beyond computer logs.

For medical education, this review is a testimony that we have yet to scratch the surface. There are many avenues and uncharted territories that have not been explored, despite the considerable potential benefits. The list of unanswered questions is long. Examples include the factors that best predict students’ achievement in health-care education, the correlation between online and offline learning, the role of simulation in students’ learning, the modules that have the most cost-efficient impact on students’ learning, the competencies that students consistently miss, the areas that students find most challenging, and the areas that need to be redesigned to help students perform better.

Conclusions

This paper aimed to offer a systematic methodological review of empirical LA research in the field of medical education and a general overview of the common methods used in the field. A Medline search resulted in only six articles fulfilling the inclusion criteria for this review. Most of the studies collected data about learners from LMSs or online learning resources. The analysis used mostly quantitative methods, including descriptive statistics and correlation tests, with regression in two studies. Patterns of online behavior and usage of digital resources, as well as prediction of achievement, were the outcomes most studies investigated. Research about LA in the field of medical education is apparently still in its infancy, with more questions than answers. The early studies are encouraging and showed that patterns of online learning can be easily revealed and that students’ performance can be predicted.

References

- 1. Siemens G, Latour B. Learning analytics: The emergence of a discipline. Am Behav Sci. 2015;57:1380–400.

- 2. Clow D. The Learning Analytics Cycle: Closing the Loop Effectively. Proceedings of the 2nd International Conference on Learning Analytics and Knowledge. ACM. 2012.

- 3. Ferguson R. Learning analytics: Drivers, developments and challenges. Int J Technol Enhanc Learn. 2012;4:304–17.

- 4. Wang AY, Newlin MH. Predictors of performance in the virtual classroom: Identifying and helping at-risk cyber-students. Journal. 2002;29:21.

- 5. Wang AY, Newlin MH. Predictors of web-student performance: The role of self-efficacy and reasons for taking an on-line class. Comput Hum Behav. 2002;18:151–63.

- 6. Mazza R, Dimitrova V. Visualising Student Tracking Data to Support Instructors in Web-Based Distance Education. New York, USA: ACM Press; 2004.

- 7. Ramos C, Yudko E. “Hits” (not “Discussion Posts”) predict student success in online courses: A double cross-validation study. Comput Educ. 2008;50:1174–82.

- 8. Macfadyen LP, Dawson S. Mining LMS data to develop an “early warning system” for educators: A proof of concept. Comput Educ. 2010;54:588–99.

- 9. Arnold KE, Pistilli MD. Course Signals at Purdue. Proceedings of the 2nd International Conference on Learning Analytics and Knowledge-LAK'12. 2012:267.

- 10. Wolff A, Zdrahal Z, Nikolov A, Pantucek M. Improving retention: Predicting at-risk students by analysing clicking behaviour in a virtual learning environment. LAK'13 Proceedings of the Third International Conference on Learning Analytics and Knowledge. 2013:145–9.

- 11. Kuzilek J, Annika W. LAK15 Case Study 1: OU Analyse: Analysing At-Risk Students at The Open University. Learn Anal Rev. 2015 Mar;18:1–6.

- 12. Rienties B, Boroowa A, Cross S, Kubiak C, Mayles K, Murphy S. Analytics4action evaluation framework: A review of evidence-based learning analytics interventions at the Open University UK. J Interact Media Educ. 2016;1:1–11.

- 13. Gašević D, Dawson S, Rogers T, Gasevic D. Learning analytics should not promote one size fits all: The effects of instructional conditions in predicting academic success. Internet High Educ. 2016;28:68–84.

- 14. Tempelaar DT, Rienties B, Giesbers B. In search for the most informative data for feedback generation: Learning analytics in a data-rich context. Comput Hum Behav. 2015;47:157–67.

- 15. Dawson SP, Macfadyen L, Lockyer L. Learning or Performance: Predicting Drivers of Student Motivation. Proceedings of the Ascilite Conference in Auckland. 2009:184–93.

- 16. Romero C, López MI, Luna JM, Ventura S. Predicting students' final performance from participation in on-line discussion forums. Comput Educ. 2013;68:458–72.

- 17. Silius K, Tervakari AM, Kailanto M. Visualizations of user data in a social media enhanced web-based environment in higher education. Int J Emerg Technol Learn (iJET). 2013;8:13.

- 18. Wise A, Zhao Y, Hausknecht S. Learning Analytics for Online Discussions: Embedded and Extracted Approaches. J Learn Anal. 2014;1:48–71.

- 19. Mtebe JS. Using Learning Analytics to Predict Students' Performance in Moodle Learning Management System: A Case of Mbeya University of Science and Technology Imani. Electron J Inf Syst Dev Ctries. 2017;79(1):1–13.

- 20. Ott C, Robins A, Haden P, Shephard K. Illustrating performance indicators and course characteristics to support students' self-regulated learning in CS1. Comput Sci Educ. 2015;25:174–98.

- 21. Knight DB, Brozina C, Novoselich B. An investigation of first-year engineering student and instructor perspectives of learning analytics approaches. J Learn Anal. 2016;3:215–38.

- 22. Peng CY, Lee KL, Ingersoll GM. An introduction to logistic regression analysis and reporting. J Educ Res. 2002;2:3–14.

- 23. Spoon K, Beemer J, Whitmer JC, Fan J, Frazee JP, Stronach J, et al. Random Forests for Evaluating Pedagogy and Informing Personalized Learning. J Educ Data Mining. 2016;8:20–50.

- 24. Chatti MA, Dyckhoff AL, Schroeder U, Thüs H. A Reference Model for Learning Analytics. Int J Technol Enhanc Learn. 2012;4:318–31.

- 25. Saqr M. Learning analytics and medical education. Int J Health Sci (Qassim). 2015;9:V–VI. doi: 10.12816/0031225.

- 26. Ellaway RH, Pusic MV, Galbraith RM, Cameron T. Developing the role of big data and analytics in health professional education. Med Teach. 2014;36:216–22. doi: 10.3109/0142159X.2014.874553.

- 27. Doherty I, Sharma N, Harbutt D. Contemporary and future eLearning trends in medical education. Med Teach. 2015;37:1–3. doi: 10.3109/0142159X.2014.947925.

- 28. Gasevic D, Dawson S, Mirriahi N, Long PD. Learning analytics - A growing field and community engagement. J Learn Anal. 2015;2:1–6.

- 29. Saqr M, Fors U, Tedre M. How learning analytics can early predict under-achieving students in a blended medical education course. Med Teach. 2017;39:757–67. doi: 10.1080/0142159X.2017.1309376.

- 30. Choi-Lundberg DL, Cuellar WA, Williams AM. Online dissection audio-visual resources for human anatomy: Undergraduate medical students' usage and learning outcomes. Anat Sci Educ. 2016;9:545–54. doi: 10.1002/ase.1607.

- 31. Sáiz Manzanares MC, Marticorena Sánchez R, García Osorio CI, Díez-Pastor JF. How do B-learning and learning patterns influence learning outcomes? Front Psychol. 2017;8:745. doi: 10.3389/fpsyg.2017.00745.

- 32. Braatvedt G, Tekiteki A, Britton H, Wallace J, Khanolkar M. Clinical anatomy e-cases: A five-year follow-up of learning analytics. N Z Med J. 2017;130:16–24.

- 33. Pecaric M, Boutis K, Beckstead J, Pusic M. A big data and learning analytics approach to process-level feedback in cognitive simulations. Acad Med. 2017;92:175–84. doi: 10.1097/ACM.0000000000001234.

- 34. Scott K, Morris A, Marais B. Medical student use of digital learning resources. Clin Teach. 2017. doi: 10.1111/tct.12630.