Abstract

A social-functional approach to face processing comes with a number of assumptions. First, given that humans possess limited cognitive resources, it assumes that we naturally allocate attention to processing and integrating the most adaptively relevant social cues. Second, from these cues, we make behavioral forecasts about others in order to respond in an efficient and adaptive manner. This assumption aligns with broader ecological accounts of vision that highlight a direct action-perception link, even for nonsocial vision. Third, humans are naturally predisposed to process faces in this functionally adaptive manner. This last contention is implied by our attraction to dynamic aspects of the face, including looking behavior and facial expressions, from which we tend to overgeneralize inferences, even when forming impressions of stable traits. The functional approach helps to address how and why observers are able to integrate functionally related compound social cues in a manner that is ecologically relevant and thus adaptive.

Keywords: facial appearance, facial expression, eye gaze, emotion overgeneralization, compound social cues

When we perceive a face, we perceive a person’s mind, including all its behavioral intentions and their potential consequences for us. No other stimulus conveys such rich information in so finite a space, making our ability to effortlessly draw meaning from it, and all its multiple interacting cues, an exquisite feat. The notion of social vision described here rests on the fundamental assumption that the interpretation of others’ behavioral intent is a vital computational demand, one that likely exerted a defining influence on the evolution of the human brain. In this way, social vision implies a social brain.

The need to make sense of social visual information is proposed to have been a major driving force in the evolution of the human neocortex, a part of the brain widely considered to be critical in the cognitive capacity to reason, particularly about others (Dunbar, 1998). In support of the social brain hypothesis, Dunbar found a positive association between various primate species’ average neocortical volume and their average social-network size, with humans having the largest of both. This finding was hailed as evidence that the evolution of the human neocortex was largely socially driven.

Presumably, as the human social brain evolved, so too did the human face, becoming a more versatile and complex mechanism for social exchange. Evidence for this includes the fact that terrestrial Old World monkeys, which live in environments that give them high visual access to one another, possess far greater facial expressivity than do arboreal New World monkeys, which live among branches and foliage that allow them much less visual access (Redican, 1982). Extreme examples include Platyrrhine “night monkeys,” which are both arboreal and nocturnal and exhibit virtually no capacity for facial expression, and humans, who have extensive visual access to one another, large social networks, and the extraordinary ability to produce over 7,000 distinct facial muscle configurations.

In humans, some expressions are thought to have evolved specifically to exploit preexisting perceptual mechanisms in the brain. One example is the human fear expression, argued to have evolved its current form to resemble a babyish appearance, thereby eliciting a functional caretaking response from others (Marsh, Adams, & Kleck, 2005). Likewise, happy facial expressions are argued to have evolved because they efficiently defuse perceivers’ impressions of threat from a distance; happy expressions also benefit from a preattentive recognition advantage that emerges in as little as 27 milliseconds, presumably exploiting older, more basic perceptual mechanisms (Becker & Srinivasan, 2014).

Evolutionary insights like these shed important light on our understanding of human face perception. They suggest that to fully understand the mechanisms underlying face perception, and the evolution of the face itself, we must first consider the social functions they evolved to perform. Such functions include the ability to determine whether others may pose a threat or present a benefit to us. Perceiving meaning from dynamic facial cues (i.e., expression and gaze) is critical to this ability. In combination, these cues signal not only the behavioral intentions of others but the sources and targets of their intentions. An angry face looking directly at you signals a threat to you, whereas an averted gaze on a fearful face can signal where in the environment to beware of threat.

A functional approach to face perception helps explain why humans are naturally attracted to and derive such rich meaning from expressive aspects of faces. Below, we review evidence for the primacy of both eye behavior and facial expression in social perception. We then address how the functional approach to understanding face processing has helped to guide research examining the integration of multiple interrelated social cues—an area where the social dynamics of vision are most evident.

The Eyes Have It

Despite their small size and subtlety of movement, the eyes represent the most dynamic and richly informative social stimulus we encounter in our daily lives (Adams & Nelson, 2016). We are more sensitive to eye movements than to head movements or postural behaviors. Gaze direction is highly correlated with overt attention shifts, and following someone’s eye-movement patterns (whether expressed as saccades, which rapidly jump between fixation points, smooth pursuit, which tracks moving objects, or even vergence, in which the eyes turn in toward or away from one another to accommodate views of objects that are nearer or farther away) reveals a lot about the person’s mental state and social attention. Thus, the eyes produce some of the most genuine, dynamic, and socially relevant signals we use to perceive those around us.

Perhaps not surprisingly, then, our attraction to the eyes appears to be innate. From birth, even minimalistic eye-like stimuli grab our visual attention. For instance, infants will naturally attend to the presence of two large, horizontally arranged eyespots, ignoring similar single- and triple-spot schematic arrays (E. H. Hess, 1975). It has even been documented that newborns as young as 1 day old respond more when an adult is looking at them than when that adult is looking away, and newborns as young as 2 days old follow the gaze of an adult (Farroni, Massaccesi, Pividori, & Johnson, 2004). This attraction to eye gaze is thought to be mediated by a primitive, but early-maturing, subcortical pathway, rather than the more detailed but late-maturing cortical visual pathway (Johnson, Senju, & Tomalski, 2015).

As adults, we use eye gaze to determine where in the environment someone is attending, and from that we naturally infer thoughts and emotions associated with the object of their gaze. If you see someone looking at you, you will conclude that his or her thoughts and intentions are directed at you, for good or bad. If you see someone looking at the last cupcake on a table, however, you will be likely to infer that he or she intends to eat it. If you missed lunch, this prediction may motivate you to get the cupcake first! The propositional nature of this example highlights why gaze perception has long been considered critical to the development of theory of mind (Baron-Cohen, Jolliffe, Mortimore, & Robertson, 1997), which is generally defined as the ability to reason about others’ states and intentions, particularly in relation to our own.

The region around the eyes is also highly dynamic and expressive. The same structures that surround and protect our eyes (lids, brows, conjunctiva, lachrymal glands) are prominently engaged in social signaling (Ekman & Friesen, 1975). The eye region alone can convey basic emotions (e.g., sadness, fear, and anger) and complex mental states (Baron-Cohen et al., 1997), and eye movements are enough to elicit expressive mimicry from others (U. Hess & Fischer, 2014).

The Primacy of Facial Expression in Social Perception

We are so tuned to processing expressions that seeing a face completely devoid of expressive capacity can be unnerving. Infants often cry when their mothers’ faces are still and unresponsive (Tronick, Als, Adamson, Wise, & Brazelton, 1978), and adults frequently find faces on mindless entities, such as dolls and robots, quite disturbing (Mori, 1970). This is arguably because expressions serve as an external readout of behavioral intentions, a contention with which most existing emotion theories can agree despite having varying perspectives on other aspects of the nature of emotion and expression (e.g., Ekman, 1973; Fridlund, 1994; Frijda & Tcherkassof, 1997; Lazarus, 1991). In this way, various theories of emotion-expression perception fundamentally align with basic assumptions of an ecological approach to visual perception, which highlights a direct action-perception link.

The ecological approach to vision argues that inherent to visual perception are behavioral affordances, defined as opportunities to act on or be acted upon by a visual stimulus (see Gibson, 1979/2015). As an example, Gibson would say that part of seeing a glass of water is its command of “drink me.” Gibson also argued that affordances are influenced by an organism’s attunements to its environment. He defined attunements as perceivers’ sensitivities to stimulus features associated with affordances. Although certain perceiver affordances are thought to be innate, attunements can vary within and across individuals and situations in meaningful ways. For instance, after you run a long race, a glass of water may seem to scream “drink me!”

Our attunement to the behavioral signals derived from the face holds such primacy in our perception that we are prone to form stable trait impressions based just on transient expressions (Knutson, 1996), which can even override information from facial appearance (Todorov & Porter, 2014). Humans are so tuned to read expressive information from the face that we do so from neutral faces devoid of expression. To explain this tendency, researchers have applied the ecological approach to vision, arguing that because we are biologically predisposed to respond to expressive cues, we respond even to cues that only resemble expressions of emotion, an effect referred to as emotion overgeneralization (Zebrowitz, Kikuchi, & Fellous, 2010). For example, a neutral expression on a face with naturally low-set brows and thin lips will be perceived as less approachable and more aggressive. Interestingly, even prosopagnosics (individuals who are “face blind”) will derive affective information from neutral faces to form lasting impressions that are comparable to those formed by healthy controls (Todorov & Duchaine, 2008).

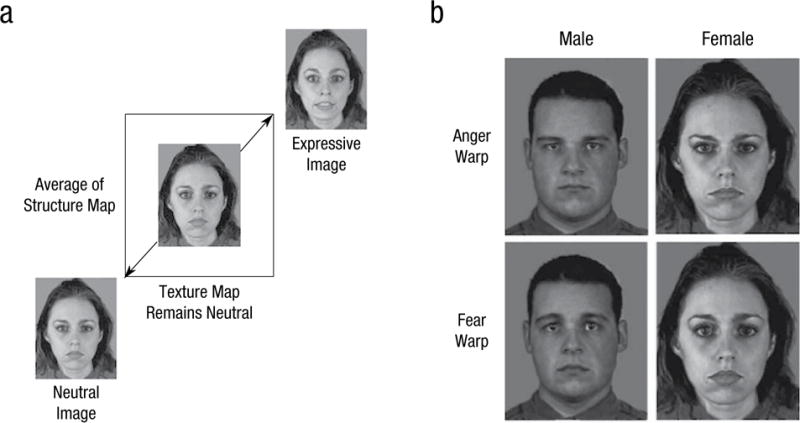

It has also been demonstrated that overt cues of emotion in the face are embedded in our mental representations of the social categories we assign to one another. For instance, androgynous faces that show angry expressions are perceived as male, whereas fearful and happy expressions lead to their being perceived as female (U. Hess, Thibault, Adams, & Kleck, 2010). The role of emotion in social categorization is particularly apparent for perceptually ambiguous social categories, such as political affiliation and sexual orientation. For example, Tskhay and Rule (2015) found that happy cues in the face led to increased perceptions of target faces as gay and liberal versus straight and conservative and facilitated the accurate perception of individuals who self-identified as belonging to these social categories. Some cues, such as gender and facial maturity, are perceptually confounded with emotion expression in a way that directly influences impressions. “Babyish” features (e.g., large round eyes, full lips) are more typical in women and share attributes with fearful facial expressions, whereas “mature” features (e.g., square jaw, pronounced brow) are more typical in men and appear angrier (Adams, Hess, & Kleck, 2015). Unsurprisingly, then, these cues give rise to corresponding perceptions of interpersonal intentions, particularly those associated with dominance and affiliation (see Fig. 1 for an example of how faces whose structures are manipulated to vary in their resemblance to emotion expressions also vary in their masculinity/femininity and maturity cues).

Fig. 1.

An illustration of face-image manipulations used by Adams et al. (2012). Panel (a) illustrates the warping procedure, which averaged a neutral face structure with a target expression’s structure to create a 50/50 average of the structural components of each while maintaining the neutral texture map. Panel (b) shows example stimuli for fear and anger warps of male and female faces. Compared with fear-warped faces, anger-warped faces were rated as more masculine and mature, higher on dominant traits, and lower on affiliative traits. (Face stimuli were drawn from the Montreal Set of Facial Displays of Emotion; Beaupré & Hess, 2005.)
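The averaging step described for panel (a) can be sketched numerically. The sketch below assumes that face structure is represented as a list of 2-D landmark coordinates; that representation, the function name `blend_structure`, and the brow coordinates are all hypothetical, since the caption does not specify the software or data format used. Only the structural average is shown; rendering the neutral texture map onto the blended shape is omitted.

```python
def blend_structure(neutral, expression, weight=0.5):
    """Blend two landmark sets point by point.

    weight=0.5 gives a 50/50 average of the neutral structure and the
    expression structure. The landmark representation is an assumption
    made for illustration, not the published procedure.
    """
    if len(neutral) != len(expression):
        raise ValueError("landmark sets must have the same length")
    return [
        ((1 - weight) * nx + weight * ex, (1 - weight) * ny + weight * ey)
        for (nx, ny), (ex, ey) in zip(neutral, expression)
    ]


# Hypothetical (x, y) brow landmarks, invented for this example.
neutral_brow = [(10.0, 20.0), (20.0, 18.0), (30.0, 20.0)]
anger_brow = [(10.0, 24.0), (20.0, 26.0), (30.0, 24.0)]

# Each warped point lies halfway between its neutral and anger positions.
warped_brow = blend_structure(neutral_brow, anger_brow)
print(warped_brow)
```

With these made-up coordinates, every warped point sits at the midpoint of its neutral and expressive positions, which is what a 50/50 structural average means.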

The Combinatorial Nature of Face Perception

A particularly compelling example of the functional nature of face perception is apparent in the integration of multiple functionally related social cues conveyed by the face, body, voice, scene, motion, and more. These unified percepts guide our impressions of and responses to others. Notably, until recently, various facial cues such as eye gaze, emotion, race, gender, and appearance were studied largely independently. When they were studied together, they were generally argued not to be functionally integrated in perception, at least in a bottom-up manner (e.g., Le Gal & Bruce, 2002).

Contemporary visual neuroscience has now revealed that top-down processing, sensitive to meaningful contextual cues, unfolds very quickly (i.e., within 100–200 milliseconds), organizing even low-level visual processing in a functionally meaningful way (Kveraga et al., 2011), thereby underscoring how tightly bound bottom-up and top-down processes can be. In this way, visual context has been shown to help resolve whether a blurry object in a pictorial scene is recognized, for instance, as a hair dryer or a drill (Bar, 2004). This “tunable” quality has also been shown in face perception. For example, when danger is made salient, observers’ attention becomes more tuned to the faces of out-group males, who might signal a potential threat (Maner & Miller, 2013).

Recently, a burgeoning field of research has revealed numerous mutually facilitative influences of various social cues in the face. For instance, combinations of cues such as gender, facial maturity, and emotion all influence the processing fluency of one another (Adams et al., 2015). Admittedly, these particular effects could be due to the visually confounded nature of these cues. Critically, facilitative effects have also been found for emotionally congruent versus incongruent pairings of facial expressions with body language, visual scenes, and vocal cues, even at the earliest stages of face processing (Meeren, van Heijnsbergen, & de Gelder, 2005) and across conscious and nonconscious processing routes (e.g., de Gelder, Morris, & Dolan, 2005).

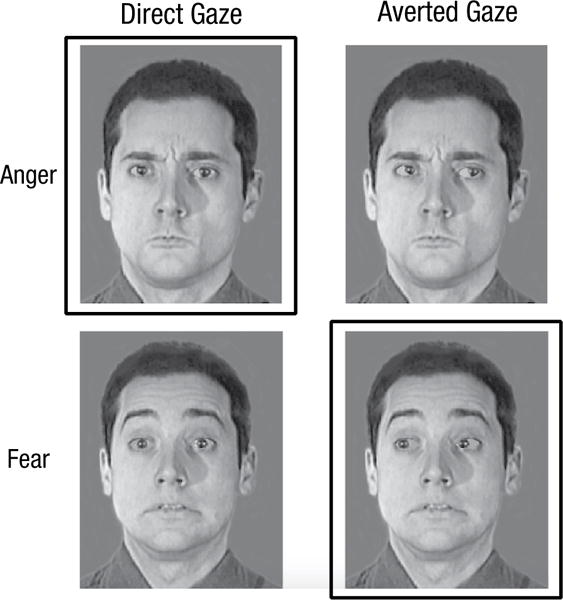

Facilitative interactions have likewise been found in the processing of eye gaze and emotion (also perceptually non-overlapping). In a test of the shared signal hypothesis, functionally congruent pairings, such as angry expressions with direct gazes (both approach signals) and fearful expressions with averted gazes (both avoidance signals), were found to be perceived as more intense and were recognized more quickly and accurately than incongruent pairings (Adams & Kleck, 2003; see Fig. 2). These effects have also been found preattentively using an attentional blink paradigm (Milders, Hietanen, Leppänen, & Braun, 2011) and in amygdalar responses to subliminally presented expression-gaze pairings (Adams et al., 2011). In a related set of studies designed to directly test the action-perception link, Adams and colleagues generated stimuli that appeared to move away from or toward the observer. In one study, they found that participants were quicker at identifying faces as approaching versus withdrawing when those faces showed angry expressions (Adams, Ambady, Macrae, & Kleck, 2006). Similarly, participants were faster at labeling angry versus fearful expressions in faces that appeared to pop out toward them versus fall away, respectively (Nelson, Adams, Stevenson, Weisbuch, & Norton, 2013). Taken together, these findings underscore the primacy of behavioral forecasts in emotion-expression perception.

Fig. 2.

Examples of angry and fearful face images from Adams and Kleck (2003) in which the gaze has been manipulated to illustrate the combinatorial nature of eye gaze with expression. The outlined images represent congruent pairings of eye gaze and expression (i.e., anger and a direct gaze signal approach, whereas fear and an averted gaze signal avoidance). (Face stimuli were drawn from the Montreal Set of Facial Displays of Emotion; Beaupré & Hess, 2005.)
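The congruency logic behind the shared signal hypothesis can be written out as a small lookup. This is only an illustrative sketch: the names `Motivation`, `EXPRESSION_SIGNAL`, `GAZE_SIGNAL`, and `is_congruent` are hypothetical, and the mapping covers just the two expressions and two gaze directions discussed above.

```python
from enum import Enum


class Motivation(Enum):
    APPROACH = "approach"
    AVOIDANCE = "avoidance"


# Behavioral motivation signaled by each cue, as described in the text.
EXPRESSION_SIGNAL = {"anger": Motivation.APPROACH, "fear": Motivation.AVOIDANCE}
GAZE_SIGNAL = {"direct": Motivation.APPROACH, "averted": Motivation.AVOIDANCE}


def is_congruent(expression, gaze):
    """A pairing is congruent when both cues signal the same motivation."""
    return EXPRESSION_SIGNAL[expression] is GAZE_SIGNAL[gaze]


# Enumerate the 2 x 2 design of expression-gaze pairings.
design = {
    (expression, gaze): is_congruent(expression, gaze)
    for expression in EXPRESSION_SIGNAL
    for gaze in GAZE_SIGNAL
}

for (expression, gaze), congruent in design.items():
    label = "congruent" if congruent else "incongruent"
    print(f"{expression} + {gaze} gaze: {label}")
```

The two congruent cells (anger with direct gaze, fear with averted gaze) correspond to the outlined images in the figure.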

In sum, facial expressions and cues such as eye gaze, body, voice, scene, and perceived motion, which do not overlap in form, nonetheless perceptually combine in an ecologically relevant manner, beginning at the earliest stages in visual processing. This reveals that the visual system is tuned not only to visual coherence but also, fundamentally, to social coherence.

Summary and Conclusions

For many years, face-processing theories argued that functionally distinct sources of facial information (e.g., expression vs. identity) engage doubly dissociable, and presumably non-interacting, processing routes (e.g., Bruce & Young, 1986; Le Gal & Bruce, 2002). Likewise, within the domain of emotion perception, prominent evolutionary theories argued that humans evolved distinct universal affect programs: biologically predetermined guides that govern the experience, expression, and perception of emotions (Keltner, Ekman, Gonzaga, & Beer, 2003). The assumed encapsulated nature of these processes left little room for considering contextual and integrative effects that are at the intersection of face and expression perception.

Taking a social-functional approach to face perception can help explain and predict complex perceptual interactions across a wide variety of facial cues, as well as contextual influences from different visual channels (e.g., body) and perceptual modalities (e.g., voice). It also helps explain why, despite our best efforts not to “judge a book by its cover,” we nonetheless derive rich meaning from facial cues, even in the absence of overt expression and even when we know those impressions are likely not diagnostic of a person’s true character.

At its most fundamental level, face perception begins as a visual process. Applying the functional approach described herein helps to elucidate the action-perception link as it unfolds, beginning at the level of the stimulus itself and progressing through the earliest stages of visual processing. Unlike models that focus on differentiating the sources of information (e.g., expression, gaze, appearance), central to the functional approach are the underlying behavioral forecasts conveyed by these cues and their combined ecological relevance to the observer. This conceptual framework, therefore, holds the promise of offering new insight into the origin and adaptive purpose of face processing and clarifying the cognitive, cultural, and biological underpinnings of socio-emotional perception.

Footnotes

Declaration of Conflicting Interests

The authors declared that they had no conflicts of interest with respect to their authorship or the publication of this article.

References

- Adams RB, Jr, Ambady N, Nakayama K, Shimojo S, editors. The science of social vision. New York, NY: Oxford University Press; 2010. An edited volume showcasing work across disciplines that exemplifies a functional approach to social vision.

- Adams RB, Jr, Ambady N, Macrae CN, Kleck RE. Emotional expressions forecast approach-avoidance behavior. Motivation & Emotion. 2006;30:179–188.

- Adams RB, Jr, Franklin RG, Jr, Kveraga K, Ambady N, Kleck RE, Whalen PJ, Nelson AJ. Amygdala responses to averted versus direct gaze fear vary as a function of presentation speed. Social Cognitive and Affective Neuroscience. 2012;7:568–577. doi: 10.1093/scan/nsr038.

- Adams RB, Jr, Franklin RG, Jr, Nelson AJ, Gordon HL, Kleck RE, Whalen PJ, Ambady N. Differentially tuned responses to restricted versus prolonged awareness of threat: A preliminary fMRI investigation. Brain and Cognition. 2011;77:113–119. doi: 10.1016/j.bandc.2011.05.001.

- Adams RB, Jr, Hess U, Kleck RE. The intersection of gender-related facial appearance and facial displays of emotion. Emotion Review. 2015;7:5–13.

- Adams RB, Jr, Kleck RE. Perceived gaze direction and the processing of facial displays of emotion. Psychological Science. 2003;14:644–647. doi: 10.1046/j.0956-7976.2003.psci_1479.x. To our knowledge, the first empirical evidence for functional interactions in perception across distinct facial cues.

- Adams RB, Jr, Nelson AJ. Eye behavior and gaze. In: Matsumoto D, Hwang HC, Frank MG, editors. The handbook of nonverbal communication. Washington, DC: American Psychological Association; 2016. pp. 335–386.

- Bar M. Visual objects in context. Nature Reviews Neuroscience. 2004;5:617–629. doi: 10.1038/nrn1476.

- Baron-Cohen S, Jolliffe T, Mortimore C, Robertson M. Another advanced test of theory of mind: Evidence from very high functioning adults with autism or Asperger syndrome. Journal of Child Psychology and Psychiatry. 1997;38:813–822. doi: 10.1111/j.1469-7610.1997.tb01599.x.

- Beaupré MG, Hess U. Cross-cultural emotion recognition among Canadian ethnic groups. Journal of Cross-Cultural Psychology. 2005;36:355–370.

- Becker DV, Srinivasan N. The vividness of the happy face. Current Directions in Psychological Science. 2014;23:189–194.

- Bruce V, Young A. Understanding face recognition. British Journal of Psychology. 1986;77:305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x.

- de Gelder B, Morris JS, Dolan RJ. Unconscious fear influences emotional awareness of faces and voices. Proceedings of the National Academy of Sciences, USA. 2005;102:18682–18687. doi: 10.1073/pnas.0509179102.

- Dunbar RIM. The social brain hypothesis. Evolutionary Anthropology. 1998;6:178–190. A classic study on primates highlighting social influences on the evolution of the brain.

- Ekman P. Cross-cultural studies of facial expression. In: Ekman P, editor. Darwin and facial expression: A century of research in review. Cambridge, MA: Academic Press; 1973. pp. 169–222.

- Ekman P, Friesen WV. Unmasking the face: A guide to recognizing emotions from facial cues. Upper Saddle River, NJ: Prentice-Hall; 1975.

- Farroni T, Massaccesi S, Pividori D, Johnson MH. Gaze following in newborns. Infancy. 2004;5:39–60.

- Fridlund AJ. Human facial expression: An evolutionary view. San Diego, CA: Academic Press; 1994.

- Frijda NH, Tcherkassof A. Facial expressions as modes of action readiness. In: Russell JA, Fernández-Dols JM, editors. The psychology of facial expression. Cambridge, United Kingdom: Cambridge University Press; 1997. pp. 78–102.

- Gibson JJ. The ecological approach to visual perception. New York, NY: Psychology Press; 2015. (Original work published 1979). A classic theoretical treatise on the functional approach to basic vision perception.

- Hess EH. The tell-tale eye: How your eyes reveal hidden thoughts and emotions. New York, NY: Van Nostrand Reinhold; 1975.

- Hess U, Fischer AH. Emotional mimicry: Why and when we mimic emotions. Social & Personality Psychology Compass. 2014;8:45–57.

- Hess U, Thibault P, Adams RB, Kleck RE. The influence of gender, social roles, and facial appearance on perceived emotionality. European Journal of Social Psychology. 2010;40:1310–1317.

- Johnson MH, Senju A, Tomalski P. The two-process theory of face processing: Modifications based on two decades of data from infants and adults. Neuroscience & Biobehavioral Reviews. 2015;50:169–179. doi: 10.1016/j.neubiorev.2014.10.009.

- Keltner D, Ekman P, Gonzaga GC, Beer J. Facial expression of emotion. In: Davidson RJ, Scherer KR, Goldsmith HH, editors. Handbook of affective sciences. New York, NY: Oxford University Press; 2003. pp. 415–431.

- Knutson B. Facial expressions of emotion influence interpersonal trait inferences. Journal of Nonverbal Behavior. 1996;20:165–182.

- Kveraga K, Ghuman Singh A, Kassam KS, Aminoff EA, Hamalainen MS, Chaumon M, Bar M. Early onset of neural synchronization in the contextual associations network. Proceedings of the National Academy of Sciences, USA. 2011;108:3389–3394. doi: 10.1073/pnas.1013760108.

- Lazarus RS. Emotion and adaptation. New York, NY: Oxford University Press; 1991.

- Le Gal PM, Bruce V. Evaluating the independence of sex and expression in judgments of faces. Perception and Psychophysics. 2002;64:230–243. doi: 10.3758/bf03195789.

- Maner JK, Miller SL. Adaptive attentional attunement: Perceptions of danger and attention to outgroup men. Social Cognition. 2013;31:733–744.

- Marsh AA, Adams RB, Jr, Kleck RE. Why do fear and anger look the way they do? Form and social function in facial expressions. Personality and Social Psychology Bulletin. 2005;31(1):73–86. doi: 10.1177/0146167204271306.

- Meeren HJM, van Heijnsbergen CCRJ, de Gelder B. Rapid perceptual integration of facial expression and emotional body language. Proceedings of the National Academy of Sciences, USA. 2005;102:16518–16523. doi: 10.1073/pnas.0507650102.

- Milders M, Hietanen JK, Leppänen JM, Braun M. Detection of emotional faces is modulated by the direction of eye gaze. Emotion. 2011;11:1456. doi: 10.1037/a0022901.

- Mori M. Bukimi no tani [The uncanny valley]. Energy. 1970;7:33–35.

- Nelson AJ, Adams RB, Jr, Stevenson MT, Weisbuch M, Norton MI. Approach-avoidance oriented movements influence the decoding of threat-related facial expressions. Social Cognition. 2013;31:745–757.

- Redican WK. An evolutionary perspective on human facial displays. In: Ekman P, editor. Emotion in the human face. 2nd ed. Cambridge, England: Cambridge University Press; 1982. pp. 212–280.

- Todorov A, Duchaine B. Reading trustworthiness in faces without recognizing faces. Cognitive Neuropsychology. 2008;25:395–410. doi: 10.1080/02643290802044996.

- Todorov A, Porter JM. Misleading first impressions different for different facial images of the same person. Psychological Science. 2014;25:1404–1417. doi: 10.1177/0956797614532474.

- Tronick E, Als H, Adamson L, Wise S, Brazelton TB. The infant’s response to entrapment between contradictory messages in face-to-face interaction. Journal of the American Academy of Child Psychiatry. 1978;17:1–13. doi: 10.1016/s0002-7138(09)62273-1.

- Tskhay KO, Rule NO. Emotions facilitate the communication of ambiguous group memberships. Emotion. 2015;15:812–826. doi: 10.1037/emo0000077.

- Zebrowitz LA, Kikuchi M, Fellous JM. Facial resemblance to emotions: Group differences, impression effects, and race stereotypes. Journal of Personality and Social Psychology. 2010;98:175. doi: 10.1037/a0017990. A set of studies that applied the ecological approach to facial perception and demonstrated perceptual overlap across expressive and appearance cues using a connectionist model.