Abstract

Many facets of an image acquisition workflow leave a digital footprint, making workflow analysis amenable to an informatics-based solution. This paper describes a detailed framework for analyzing workflow and uses acute stroke response timeliness in CT as a practical demonstration. We review methods for accessing the digital footprints resulting from common technologist/device interactions; this overview lays a foundation for obtaining data for workflow analysis. We demonstrate the method by analyzing CT imaging efficiency in the setting of acute stroke, successfully using the digital footprints left by CT technologists to analyze their workflow. We also present an overview of other digital footprints, including but not limited to those from contrast administration, patient positioning, billing, reformat creation, and scheduling. The framework for analyzing image acquisition workflow is transferable to any modality, as the key steps of image acquisition, image reconstruction, image post processing, and image transfer to PACS are common to every imaging modality in diagnostic radiology.

Keywords: Quality control, Informatics, Radiology workflow, Compliance, Stroke, CT

Background

All tomographic imaging modalities in radiology require a complex technologist workflow. In a 2008 publication, Boland charted 34 unique tasks required for a CT technologist to process a single patient through a CT scanner [1]. In CT, for example, the technologist must perform multiple irradiation events and create multiple reformats and axial image reconstructions to satisfy even a simple order. Such an exam might consist of the acquisition of 1–2 CT localizer radiographs, a bolus tracking reference image and subsequent cine bolus tracking images, and then the actual volume CT acquisition. In addition to interfacing with the CT scanner, the CT technologist must also load intravenous contrast agent into a power injector, log the intravenous and/or oral contrast into a billing interface, program the power injector before the exam, and interact with it during the exam. After the CT acquisition, the CT technologist must create reformatted images, often in multiple planes and slice thicknesses, and then send all of these images to the picture archiving and communication system (PACS) for radiologist review. The technologist may also need to confirm that the images were successfully sent to PACS and mark the study as reviewed and ready for radiologist interpretation. Other duties might include interacting with the hospital scheduling system to note exam completion and update the patient’s location within the hospital. Such a complex workflow is prone to problems. For example, the radiologist may want to see the axial images immediately, before the CT technologist works on the reformats, to assess the need for additional imaging series; or the CT technologist may forget to send one or more image series. For routine, non-urgent single phase exams, such issues will not typically affect the patient’s care.
For urgent cases, however, such as trauma or acute stroke, delays in image acquisition and/or in sending images to radiologists for review will affect patient care. Furthermore, for routine imaging, unneeded delays caused by inefficient workflows may indicate revenue lost to scanner underutilization [2, 3].

Almost all of the steps outlined above leave a digital footprint. However, these footprints are often stored in separate databases, making a complete analysis difficult. Given the volume of imaging exams performed and the hurdles to recording timing information manually, a digital approach is the only logistically viable way to analyze global workflow.

Previous studies have measured how CT technologists, devices, scanners, and IT infrastructure affect timeliness and error rates. A large body of literature describes how the transition to a fully digital workflow decreases errors and the time from door to interpretation [4–14]. For example, Humphrey and colleagues compared the time between exposure and presentation of results to an ordering physician [15]; their analysis included technologist, device, transfer, and radiologist workflow elements. In the work presented here, we focus on patient/exam preparation, image acquisition, reconstruction, and transfer, which include no elements of radiologist or physician interpretation. Therefore, while analyzing only a subset of the workflow studied by previous authors such as Humphrey et al., we aim to provide much more detailed information about the individual series-level steps that make up the tasks needed to provide images to radiologists for review. Some imaging exams involve physician input during the exam or physician approval before the patient is released; in this work, we do not consider how one could digitally capture such physician instruction. Reading room workflows and task switching have been studied previously and will not be addressed in this work [16–20].

To demonstrate the value of the workflow analysis framework presented here, we describe its application to efficiency monitoring in acute stroke. Comprehensive Stroke Center Certification by The Joint Commission requires evaluation of performance measures to improve stroke treatment [21]. An essential quality measure of stroke response is the timeliness of image acquisition and interpretation, and time-efficient CT imaging in acute stroke can significantly contribute to improved patient outcomes [22]. Fast imaging and expedited access to imaging data for interpreting radiologists, even before exam completion, are vital for rapid decision-making and patient management. Reiner et al. define the goal of workflow analysis as “to improve productivity while reducing cycle time,” which captures exactly the goal of our efforts in applying workflow analysis to acute stroke imaging in CT [23]. The objective of this work is to introduce readily available digital data sources for workflow analysis and to present a framework for applying them to evaluate the efficiency of CT imaging in acute stroke response.

Methods

Review of Digital Footprints for Common Imaging Acquisitions

Any imaging acquisition in diagnostic radiology can be broken into the following facets: patient preparation, scanner/device preparation, image acquisition, image reconstruction, image post processing, image networking, and billing/scheduling tasks. These facets and the digital footprints they leave behind form the basis of what we call a framework for analysis of image acquisition workflow. Image interpretation can occur during or after the preceding steps of an imaging acquisition sequence, but workflow steps related to image interpretation will not be considered here. In workflow analysis, the available data are the foundation on which the analysis rests; for this reason, we refer to an outline of all the data available for such an analysis as a framework. Figure 1 shows the main facets of image acquisition in diagnostic radiology and the typical temporal relationships between them. Figure 2 shows an example workflow in finer detail for a two phase abdomen exam in CT. Tables 1, 2, 3, and 4 list workflow steps and their best corresponding digital footprints (corresponding to the first four steps shown in Fig. 1). In diagnostic radiology, digital footprints come mainly from the radiology information system (RIS), the electronic medical record (EMR), DICOM metadata, and the PACS [24, 25]. We also describe the use of a dose monitoring database for this purpose [26, 27], but the information within that database is largely redundant with information already available in a PACS. Scheduling and billing tasks can be performed without the patient in the imaging environment. They require a finite amount of time and represent “unproductive time,” as productivity in medical imaging is defined as the ratio of time spent acquiring images to total time [23].
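The productivity ratio of Reiner et al. [23] is simple to compute once acquisition intervals are known. The Python sketch below is a minimal illustration, not the authors' implementation: it assumes each acquisition is available as a hypothetical (start, end) pair in seconds, which DICOM does not provide directly for CT (Acquisition Time records a single time point per event), and it merges overlapping intervals so concurrent events are not double counted.

```python
from typing import List, Tuple

Interval = Tuple[float, float]

def merge_intervals(intervals: List[Interval]) -> List[Interval]:
    """Merge overlapping (start, end) intervals so shared time is counted once."""
    merged: List[Interval] = []
    for start, end in sorted(intervals):
        if merged and start <= merged[-1][1]:
            # Overlaps the previous interval: extend it.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged

def productivity_ratio(acquisitions: List[Interval],
                       window_start: float, window_end: float) -> float:
    """Fraction of the observation window spent acquiring images."""
    total = window_end - window_start
    if total <= 0:
        raise ValueError("window must have positive length")
    acquiring = 0.0
    for start, end in merge_intervals(acquisitions):
        # Clip each merged interval to the observation window.
        lo, hi = max(start, window_start), min(end, window_end)
        if hi > lo:
            acquiring += hi - lo
    return acquiring / total

# Hypothetical example: three acquisitions in a one-hour window; the first two overlap.
print(productivity_ratio([(0, 600), (300, 900), (1800, 2100)], 0, 3600))
```

Everything outside the merged acquisition intervals (scheduling, billing, patient transfer) counts as unproductive time under this definition.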

Fig. 1.

Overview of the temporal relationship between the major steps involved with imaging exam workflow. Time progresses as one moves from left to right. Boxes overlapping in the vertical direction represent workflow tasks that may occur at the same point in time

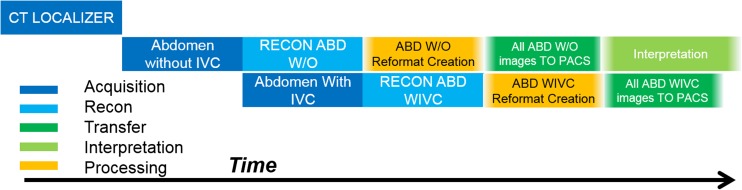

Fig. 2.

Overview of the temporal relationship between the major steps involved with just the imaging acquisition, reconstruction and post processing, and networking facets of diagnostic medical imaging workflow. This example is for a two phase abdomen exam with axial reconstructions and reformats for both the with and without intravenous contrast (IVC) series. Time progresses as one moves from left to right. Boxes overlapping in the vertical direction represent workflow tasks that may occur at the same point in time. The order, time between, and duration of the events in this figure all provide temporal efficiency information on both the operator and scanner/hardware/software

Table 1.

Example workflow steps for patient preparation and their typical corresponding digital record in the diagnostic radiology imaging environment. EMR, electronic medical record (i.e., electronic health record); RIS, radiology information system

| Workflow step | Digital record (i.e., “digital footprint”) |
|---|---|
| Registering the patient’s exam as in progress | RIS audit log |
| Checking the patient’s creatinine levels | EMR audit log |
| Checking/updating whether the patient has undergone sufficient MRI safety checks | RIS audit log |
| Checking/updating the patient’s pregnancy test result | EMR audit log |
| Checking/updating the patient’s insulin pump, pacemaker, or other implanted medical device history/status (modality dependent) | EMR/RIS audit log |
| Reviewing protocol documentation | Protocol management system log |

Table 2.

Example workflow steps for scanner/device preparation and image acquisition and their typical corresponding digital record in the diagnostic radiology imaging environment

| Workflow step | Digital record (i.e., “digital footprint”) |
|---|---|
| Loading power injector with contrast/chaser | Power injector log files (e.g., “Certegra database” for a MedRad Bayer power injector) |
| Exam start on scanner (i.e., picking a patient from the DICOM modality worklist and choosing an imaging protocol) | DICOM tag 0008,0030 Study Time |
| Scan acquisition time | DICOM tag 0008,0032 Acquisition Time: acquisition time for each irradiation event in CT/radiography, acquisition timestamp for each ultrasound image in US, and acquisition timestamp for each MR scan |
| Exam end time | Exam end time in a DICOM tag (i.e., when the exam was closed on the scanner), which marks when the technologist decided the scanner was done with image acquisition, though not always with post processing and networking. The RIS study completion/scheduling/patient status time is usually reserved for when the patient has left radiology, all tasks associated with that patient are complete, and the scanner/technologist are free to start a new exam |
| Power injector summary (i.e., time of injection, volume, pressure, concentration) for each phase and injected material (i.e., contrast agent and optional chaser) | Power injector log files (e.g., “Certegra database” for a MedRad Bayer power injector), power injector screen save DICOM object |
| Power injector test injection | Power injector log files (e.g., “Certegra database” for a MedRad Bayer power injector), power injector screen save DICOM object |

Table 3.

Example workflow steps for image reconstruction, post processing, and networking times and their typical corresponding digital record in the diagnostic radiology imaging environment. Image reconstruction time can be calculated by subtracting the scan acquisition time (Table 2) from the image creation time. Post processing times can be calculated by subtracting the image creation time of the post processing input images from the creation time of the post processed images. Networking times, in the context of quantifying how long a technologist waits before sending a set of images to PACS for review, can be quantified by subtracting the image creation time from the PACS/VNA file creation timestamp

| Workflow step | Digital record (i.e., “digital footprint”) |
|---|---|
| Marking a study as reviewed in PACS | PACS system database |
| Image creation time | DICOM tag 0008,0033 (image pixel data creation time or waveform formation time) |
| Networking time (i.e., the time point when a scanner or technologist transmits an image from the imaging device to another processing step or to a physician for interpretation) | Not a standard DICOM tag. It can be stored by object storage technologies (e.g., hyper-converged infrastructures), interrogated from the PACS/VNA database system file creation time, or obtained from an interface engine that stores file transfer times (e.g., Mirth, Nextgen Healthcare, Irvine, CA) |
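The subtractions described in the Table 3 caption are straightforward once timestamps are parsed. The Python sketch below (not the authors' MATLAB code) assumes the timestamps are already `datetime` objects; the function names and example values are hypothetical and chosen only for illustration.

```python
from datetime import datetime, timedelta

def reconstruction_time(acquisition: datetime, image_creation: datetime) -> timedelta:
    """Image creation time (0008,0033) minus scan acquisition time (0008,0032)."""
    return image_creation - acquisition

def post_processing_time(input_creation: datetime, output_creation: datetime) -> timedelta:
    """Creation time of post-processed images minus creation time of their input images."""
    return output_creation - input_creation

def networking_time(image_creation: datetime, archive_file_created: datetime) -> timedelta:
    """PACS/VNA file creation timestamp minus image creation time."""
    return archive_file_created - image_creation

# Invented example times for one series.
acq = datetime(2016, 3, 1, 14, 2, 10)       # acquisition of a scan phase
axial = datetime(2016, 3, 1, 14, 2, 40)     # scanner-reconstructed axial images
reformat = datetime(2016, 3, 1, 14, 6, 5)   # technologist-created reformat
in_pacs = datetime(2016, 3, 1, 14, 4, 0)    # axial file written to PACS storage

print(reconstruction_time(acq, axial).total_seconds())         # 30.0
print(post_processing_time(axial, reformat).total_seconds())   # 205.0
print(networking_time(axial, in_pacs).total_seconds())         # 80.0
```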

Table 4.

Example workflow steps for billing and scheduling tasks and their typical corresponding digital record in the diagnostic radiology imaging environment

| Workflow step | Digital record (i.e., “digital footprint”) |
|---|---|
| Medication administration record data entry | EHR (medication administration record) |
| End patient exam on scanner | Obtainable from scanner system logs or, on modalities that print exam summaries, from the image creation time of the summary DICOM object (e.g., dose screen save or SR dose object in CT) |
| End patient exam on schedule | RIS |

A patient may be logged as “waiting” hours before actually being scanned so that they appear on the worklist and/or scheduling template within radiology. The time between a patient being marked as “in progress” and the first acquisition of images may vary by many minutes: some technologists mark the exam as “in progress” and then leave to get the patient from the waiting room, while others mark it as “in progress” only once the patient is present in the exam room. Other patient needs (transfer, positioning, tracheostomy care, bathroom needs, etc.) can also affect the time between the exam being noted as “in progress” and the start of image acquisition. Such differences in time point definitions must be understood before differences between time points can be compared meaningfully. If only the time spent actually being imaged is of interest, a useful exam start time is the time of the first image acquisition. This is especially useful for exams in ultrasound or MRI, where the total exam time can be tens of minutes and is composed of many unique imaging events. Such acquisition-based exam start time points vary by modality. For CT and PET/CT, the relevant time is that of the CT localizer radiographs (i.e., scouts, topograms, surviews, scanograms). For MRI, relevant exam start times are the localizer acquisitions or calibration pre-scans. Similarly, for ultrasound exams, the first image creation time is likely the most dependable indicator of when the technologist actually began the exam.
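A minimal sketch of an acquisition-based exam start, under stated assumptions: each image is represented as a hypothetical (series description, acquisition datetime) pair, and the keyword table identifying localizer or calibration series is illustrative only, since series descriptions are site and vendor specific. For modalities without a keyword entry (e.g., ultrasound), the earliest image is used, as suggested above.

```python
from datetime import datetime
from typing import Iterable, Optional, Tuple

# Hypothetical keywords identifying each modality's "start" acquisitions;
# real series descriptions are site and vendor specific.
START_SERIES_KEYWORDS = {
    "CT": ("SCOUT", "TOPOGRAM", "SURVIEW", "LOCALIZER"),
    "MR": ("LOCALIZER", "CALIBRATION"),
}

def acquisition_start(modality: str,
                      images: Iterable[Tuple[str, datetime]]) -> Optional[datetime]:
    """Earliest acquisition among start series; for other modalities, the earliest image."""
    images = list(images)
    keywords = START_SERIES_KEYWORDS.get(modality)
    if keywords:
        images = [(d, t) for d, t in images if any(k in d.upper() for k in keywords)]
    return min((t for _, t in images), default=None)

def in_progress_lag_minutes(in_progress: datetime, first_acquisition: datetime) -> float:
    """Minutes between the RIS "in progress" mark and the first acquisition."""
    return (first_acquisition - in_progress).total_seconds() / 60.0

images = [("HEAD W/O", datetime(2016, 3, 1, 14, 3, 0)),
          ("SCOUT", datetime(2016, 3, 1, 14, 1, 30))]
start = acquisition_start("CT", images)
print(start)                                                            # 2016-03-01 14:01:30
print(in_progress_lag_minutes(datetime(2016, 3, 1, 13, 52, 0), start))  # 9.5
```

Comparing this lag across technologists would expose the differing “in progress” habits described above.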

Documenting patient and device/scanner preparation times is important for workflow analysis. For example, consider a technologist who anticipates a CT with contrast or CTA on the schedule at 3 PM and is able to load the injector based on the exam indication. Assume also that the scanner is free for 30 min before the scan’s scheduled start time. If the technologist waits until the patient is marked as exam in progress before loading the injector (i.e., loads the injector with the patient in the room), they may have underutilized the preceding 30 min of scanner idle time during which the injector could have been loaded.
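The injector example above could be checked automatically if three timestamps are available: the end of the preceding exam, the RIS “in progress” mark, and the injector load time from the power injector log. The sketch below is hypothetical (function names and example times are illustrative) and assumes the clocks of the scanner, RIS, and injector are synchronized.

```python
from datetime import datetime

def injector_loaded_during_idle(prev_exam_end: datetime,
                                in_progress: datetime,
                                injector_loaded: datetime) -> bool:
    """True if the injector was loaded while the scanner sat idle before the exam."""
    return prev_exam_end <= injector_loaded < in_progress

def idle_minutes(prev_exam_end: datetime, in_progress: datetime) -> float:
    """Scanner idle time before the exam was marked in progress, in minutes."""
    return max(0.0, (in_progress - prev_exam_end).total_seconds() / 60.0)

# Invented times matching the 3 PM scenario in the text.
prev_end = datetime(2016, 3, 1, 14, 30)
marked = datetime(2016, 3, 1, 15, 0)
loaded = datetime(2016, 3, 1, 15, 4)   # loaded with the patient already in the room

print(idle_minutes(prev_end, marked))                          # 30.0
print(injector_loaded_during_idle(prev_end, marked, loaded))   # False
```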

CT Acute Stroke Timeliness

An informatics-based solution was constructed to analyze CT imaging workflow in the setting of acute stroke. In this section, we describe how workflow influencers related to the CT technologist, CT scanner, and PACS can be analyzed using a subset of the data shown in Tables 1, 2, 3, and 4.

The specific DICOM fields we collected for our acute stroke analysis are listed in Table 5. For any modality, one must define search criteria specifying which indications and/or protocols are included in the analysis. For example, comparing exam completion times across both non-contrast head CTs and multiphase acute stroke head CTs may not allow clinically meaningful discernment of the factors that influence scan efficiency, because the number of phases and post processing steps varies greatly between these two exam types. To select only those exams in which a patient received a CT for acute stroke, we used our dose monitoring database (DoseWatch, GE Healthcare, Chicago, IL), which provides 97 different fields. Table 5 lists the fields we used and, where available, their corresponding DICOM names.

Table 5.

List of data sources used for analyzing technologist workflow. *These names will be vendor specific and may not be available from all dose monitoring solutions

| Parameter name | Dose database name* | DICOM tag/name | Used for |
|---|---|---|---|
| Accession number | ACCESSION_NUMBER | 0008,0050 Accession Number | Linking studies between the dose and PACS databases |
| Study date | STUDY_DATE | 0008,0020 Study Date combined with 0008,0030 Study Time | Narrowing our analysis to a specific date range to observe changes over time |
| Scanner | AE_AET | 0008,1010 Station Name | Narrowing our analysis to scanners performing acute stroke scans |
| Manufacturer | SDM_MANUFACTURER | 0008,0070 Manufacturer | Distinguishing between post processing methods |
| Orderable | STUDY_DESCRIPTION | 0008,1030 Study Description | Narrowing our analysis to acute stroke scans |
| Protocol name | SERIES_PROTOCOL_NAME | 0018,1030 ACQ Protocol Name | Narrowing our analysis to acute stroke scans |
| Series description | SERIES_DESCRIPTION | 3711,1103 Series Description | Mapping images to specific scan phases |
| PACS database timestamp | Not in the dose database (file system file creation date and time) | n/a | Calculating the time it took to send an image to PACS |
| Acquisition time | Not in the dose database (dose database had only study-level time) | 0008,0032 Acquisition Time | Calculating the time between phases |
| Image reconstruction time | Not in the dose database (dose database had only study-level time) | 0008,0033 Image Time | Calculating the time it took for the scanner to create original series and/or reformats, and for CT technologists to create reformats and perform post processing (e.g., perfusion maps) |
| Study time | STUDY_TIME | 0008,0030 Study Time | Sorting exams by time of day |
| Technologist name | OPERATOR_LAST_NAME and OPERATOR_FIRST_NAME | 0008,1070 Operator’s Name | Analyzing data by individual technologist |
| Series number | Not in the dose database (dose database had only the total number of series) | 0020,0011 Series Number | Distinguishing original acquired series from reformatted and post processed series |

Given site-specific ordering systems, physician ordering differences, departmental ordering differences (i.e., ED vs radiology vs neurology, etc.), and technologist billing/labelling differences, exam filtering can be quite challenging and should be considered carefully so that meaningful results can be extracted. We analyzed results from four CT scanners within our institution on which acute stroke exams are performed, including exams performed between June 2015 and December 2016. We have multiple protocols for a routine head with or without contrast and multiple protocols for a head CTA and perfusion. Therefore, a CT technologist may scan a non-urgent patient using our acute stroke protocol (i.e., we have non-urgent indications requiring the perfusion phase contained within our acute stroke protocol), and an acute stroke patient may be scanned with a protocol other than our acute stroke protocol for some of the phases making up a complete acute stroke exam. In our experience, a CT technologist may also acquire the CT localizer radiograph with a protocol not suitable for the acute stroke order and then change to the proper protocol before the tomographic portion of the exam. We therefore selected patients for inclusion in the analysis if the irradiation event data stored by our dose monitoring system met the following requirements: (1) the protocol name used on the scanner corresponded to the acute stroke protocol and (2) the study description used for the patient contained the word “stroke.”

To identify individual scanners at our institution, both the dose database “AE_AET” (i.e., AE title) and “AE_NAME” fields mapped one to one to individual scanners, so either value could have been used to select a specific scanner. Our dose database reported two values for protocol name, one labelled “STUDY_PROTOCOL_NAME” and one labelled “SERIES_PROTOCOL_NAME.” The study level name is populated by the first irradiation event, which is usually the CT localizer radiograph, and is the same for all images with the same accession number. The series level name reflects the protocol used for each series and can therefore change within a study if multiple scanner protocols were used. We therefore used the series level protocol name in our analysis.
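The two inclusion criteria can be sketched as a filter over dose database rows, using the field names from Table 5. This is a hedged illustration: the protocol name string is hypothetical, rows are assumed to be dictionaries, and an accession is assumed to qualify when at least one irradiation event used the series level acute stroke protocol, so that a localizer acquired under another protocol does not exclude the exam.

```python
from typing import Dict, Iterable, Set

# Hypothetical series-level protocol name(s) for the acute stroke protocol.
STROKE_PROTOCOLS = {"ACUTE STROKE"}

def select_stroke_accessions(events: Iterable[Dict[str, str]]) -> Set[str]:
    """Accession numbers whose study description mentions stroke and in which at
    least one irradiation event used the acute stroke series-level protocol."""
    selected: Set[str] = set()
    for ev in events:
        description = (ev.get("STUDY_DESCRIPTION") or "").lower()
        protocol = (ev.get("SERIES_PROTOCOL_NAME") or "").strip().upper()
        if "stroke" in description and protocol in STROKE_PROTOCOLS:
            selected.add(ev["ACCESSION_NUMBER"])
    return selected

events = [
    # Localizer acquired under a routine head protocol, then switched to the stroke protocol.
    {"ACCESSION_NUMBER": "A1", "STUDY_DESCRIPTION": "CT STROKE PROTOCOL", "SERIES_PROTOCOL_NAME": "ROUTINE HEAD"},
    {"ACCESSION_NUMBER": "A1", "STUDY_DESCRIPTION": "CT STROKE PROTOCOL", "SERIES_PROTOCOL_NAME": "Acute Stroke"},
    # Stroke protocol used for an order that does not mention stroke.
    {"ACCESSION_NUMBER": "A2", "STUDY_DESCRIPTION": "CT HEAD PERFUSION", "SERIES_PROTOCOL_NAME": "ACUTE STROKE"},
]
print(select_stroke_accessions(events))  # {'A1'}
```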

Once accession numbers for acute stroke patients were identified by filtering on orderable and protocol name, we located the images in our PACS storage (McKesson Radiology Station 12.1.1, McKesson Corporation, San Francisco, CA) and copied them directly from the storage location for analysis, preserving the image file creation timestamps. Because a typical acute stroke scan at our institution includes five scan phases (CT localizers, non-contrast head, CTA head and neck, perfusion of the brain, and a with contrast head), each with multiple reconstructions and reformats, we wrote a program to sort all of the images and store their DICOM header metadata in a structure. We interrogated the image headers using MATLAB (MathWorks, Natick, MA), which has a DICOM reader and dictionary.
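The authors sorted images in MATLAB; the following Python sketch shows one way to build a comparable nested structure, assuming each header has already been read into a dictionary keyed by DICOM keyword (for example, with a DICOM library such as pydicom). The function names and key layout are hypothetical.

```python
from collections import defaultdict
from typing import Any, Dict, List, Optional

Header = Dict[str, Any]

def _as_int(value: Optional[Any], default: int) -> int:
    """DICOM IS values may arrive as strings; a missing value falls back to a default."""
    return int(value) if value is not None else default

def group_by_series(headers: List[Header]) -> Dict[str, Dict[int, List[Header]]]:
    """Nest header dictionaries as {accession number: {series number: [headers]}}."""
    tree: Dict[str, Dict[int, List[Header]]] = defaultdict(lambda: defaultdict(list))
    for h in headers:
        accession = str(h.get("AccessionNumber", ""))
        series = _as_int(h.get("SeriesNumber"), -1)  # -1 marks a missing series number
        tree[accession][series].append(h)
    # Order images within each series by instance number when it is present.
    for series_map in tree.values():
        for images in series_map.values():
            images.sort(key=lambda h: _as_int(h.get("InstanceNumber"), 0))
    # Return plain dicts so lookups of absent keys raise instead of creating entries.
    return {acc: dict(series_map) for acc, series_map in tree.items()}

headers = [
    {"AccessionNumber": "A1", "SeriesNumber": "2", "InstanceNumber": "2"},
    {"AccessionNumber": "A1", "SeriesNumber": "2", "InstanceNumber": "1"},
    {"AccessionNumber": "A1", "SeriesNumber": 100, "InstanceNumber": 1},
]
tree = group_by_series(headers)
print(sorted(tree["A1"]))                                 # [2, 100]
print([h["InstanceNumber"] for h in tree["A1"][2]])       # ['1', '2']
```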

There are many time tags associated with images in the DICOM standard. For our analysis, we wanted three primary time points: (1) when a CT technologist acquired a scan phase, (2) when a CT technologist or the scanner reconstructed images from a scan phase, and (3) when images were available in PACS for radiologist interpretation. The DICOM tag “Acquisition Time” (0008,0032) was used for the time of scanning, and “Image Time” (0008,0033) for the time of image creation by either the technologist or the CT scanner (some reconstructions can be set up to run on the scanner without technologist interaction). For reconstructions set to run automatically, the difference between acquisition and creation time is usually on the order of seconds to tens of seconds, depending on the number of slices, reconstruction options, and vendor/software version. For technologist-initiated image reconstruction (e.g., a MIP, reformat, or volume rendered image), however, the times can vary greatly depending on individual technologist workflow. The time when a CT scanner sends an image to PACS (or any network destination) is not a standard DICOM field; we obtained image transfer/networking times from the file creation times of the images in the PACS data storage.
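DICOM stores dates (DA) as `YYYYMMDD` and times (TM) as `HHMMSS.FFFFFF`, where trailing components and the fraction may be omitted. Pairing each time with its date (e.g., Acquisition Date 0008,0022 with Acquisition Time, and Content Date 0008,0023 with Image/Content Time) keeps intervals correct across midnight. A minimal parser sketch, assuming values arrive as strings; the function name is hypothetical.

```python
from datetime import datetime, timedelta

def parse_dicom_datetime(da: str, tm: str) -> datetime:
    """Combine a DICOM DA (YYYYMMDD) and TM (HHMMSS.FFFFFF) value into a datetime.

    TM values may omit trailing components (HH, HHMM, HHMMSS) and the fraction.
    Colons from older ACR-NEMA-style times are tolerated.
    """
    date = datetime.strptime(da.strip(), "%Y%m%d")
    tm = tm.strip().replace(":", "")
    whole, _, frac = tm.partition(".")
    hours = int(whole[0:2])
    minutes = int(whole[2:4]) if len(whole) >= 4 else 0
    seconds = int(whole[4:6]) if len(whole) >= 6 else 0
    micro = int(frac.ljust(6, "0")[:6]) if frac else 0
    return date + timedelta(hours=hours, minutes=minutes,
                            seconds=seconds, microseconds=micro)

acq = parse_dicom_datetime("20160301", "140210.500000")   # Acquisition Time
img = parse_dicom_datetime("20160301", "140232")          # Image (Content) Time
print((img - acq).total_seconds())                        # 21.5
```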

In the setting of acute stroke, the time from hospital door to treatment is critical. To better understand how CT imaging contributes to this time period, we analyzed the time required to complete the five CT series in our stroke protocol. As the exam start time point, we used the acquisition time of the first CT localizer radiograph image. We did not use study time to indicate “exam start” because it records when a technologist opens the exam on the imaging workstation (i.e., selects the patient from the DICOM modality worklist), and we found that our CT technologists commonly open the exam before the patient arrives at the CT scanner. Particularly in the setting of acute stroke, study time was therefore not appropriate for benchmarking the time a patient spends in the CT suite.
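With the first localizer acquisition as time zero, per-phase offsets and the total time in the CT suite follow directly. This sketch assumes acquisition datetimes have already been grouped by phase under hypothetical phase labels; the example times are invented.

```python
from datetime import datetime
from typing import Dict, List

def minutes_from_localizer(phase_times: Dict[str, List[datetime]],
                           localizer: str = "SCOUT") -> Dict[str, float]:
    """Minutes from the first localizer acquisition to the first acquisition of each phase."""
    t0 = min(phase_times[localizer])
    return {phase: (min(times) - t0).total_seconds() / 60.0
            for phase, times in phase_times.items() if times}

def ct_suite_minutes(phase_times: Dict[str, List[datetime]],
                     localizer: str = "SCOUT") -> float:
    """Minutes from the first localizer acquisition to the last acquisition of any phase."""
    t0 = min(phase_times[localizer])
    last = max(t for times in phase_times.values() for t in times)
    return (last - t0).total_seconds() / 60.0

times = {  # invented acquisition times for one exam
    "SCOUT": [datetime(2016, 3, 1, 14, 0, 0), datetime(2016, 3, 1, 14, 0, 20)],
    "HEAD W/O": [datetime(2016, 3, 1, 14, 1, 0)],
    "CTA": [datetime(2016, 3, 1, 14, 4, 30)],
    "PERFUSION": [datetime(2016, 3, 1, 14, 6, 0), datetime(2016, 3, 1, 14, 7, 0)],
    "HEAD W IVC": [datetime(2016, 3, 1, 14, 9, 0)],
}
print(minutes_from_localizer(times)["CTA"])  # 4.5
print(ct_suite_minutes(times))               # 9.0
```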

We filtered the data returned from PACS to ensure that only comprehensive acute stroke exams were analyzed. An exam was considered comprehensive if it contained images mapping to each of the following classifications: CT localizer radiograph, non-contrast head, CTA, perfusion, with contrast head, and perfusion map. Other DICOM objects were present, including dose sheets, reformats, and power injector screen saves. To classify images from specific series, two parameters were used: the series number and series description tags had to match values we predefined in a lookup table. For example, all perfusion maps had series numbers over 99 and series descriptions containing the phrase “RAPID” or “PERFUSION.” The series description is something a CT technologist can change at scan time [28], but it has a default preset value determined by our CT protocol optimization team. The series descriptions in our clinic map one to one to the five phases we expect an acute stroke exam to contain. Example series descriptions used at our institution include “SCOUT” for the CT localizer radiograph, “HEAD W/O” or “AX ST” for the non-contrast axial head images, “CTA” for the CTA head and neck, “PERFUSION” for the axial cine perfusion images, and “HEAD W IVC” or “AX ST WIVC” for the head with contrast.
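The lookup-table classification and the comprehensiveness check can be sketched as ordered rules. Only the perfusion-map rule (series number over 99, description containing “RAPID” or “PERFUSION”) and the example descriptions come from the text; the other series-number ranges and the first-match ordering are assumptions. Order matters because “AX ST WIVC” contains the substring “AX ST”.

```python
from typing import Iterable, Optional, Set, Tuple

# (class label, min series number, max series number, description keywords).
# First match wins. Only the perfusion-map rule comes from the text; the other
# series-number ranges are hypothetical. "with contrast head" is tested before
# "non-contrast head" because "AX ST WIVC" contains "AX ST".
RULES = [
    ("perfusion map", 100, 10**9, ("RAPID", "PERFUSION")),
    ("with contrast head", 1, 99, ("HEAD W IVC", "AX ST WIVC")),
    ("non-contrast head", 1, 99, ("HEAD W/O", "AX ST")),
    ("CTA", 1, 99, ("CTA",)),
    ("perfusion", 1, 99, ("PERFUSION",)),
    ("localizer", 1, 99, ("SCOUT",)),
]
REQUIRED: Set[str] = {rule[0] for rule in RULES}

def classify(series_number: int, description: str) -> Optional[str]:
    """Map one series to a class label, or None for objects outside the lookup table."""
    desc = description.upper()
    for label, lo, hi, keywords in RULES:
        if lo <= series_number <= hi and any(k in desc for k in keywords):
            return label
    return None  # e.g., dose sheets, injector screen saves, extra reformats

def is_comprehensive(series: Iterable[Tuple[int, str]]) -> bool:
    """True if every required class is represented at least once."""
    found: Set[str] = set()
    for number, description in series:
        label = classify(number, description)
        if label is not None:
            found.add(label)
    return REQUIRED.issubset(found)

exam = [(1, "SCOUT"), (2, "HEAD W/O"), (3, "CTA HEAD NECK"), (4, "PERFUSION"),
        (5, "HEAD W IVC"), (101, "RAPID CBF"), (900, "DOSE INFO")]
print(is_comprehensive(exam))       # True
print(is_comprehensive(exam[:-2]))  # False: no perfusion map
```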

The statistical and other analysis methods applied to the data collected as described above will be described in a clinical companion paper, in press at the time of this writing with the Journal of the American College of Radiology (JACR).

Results and Discussion

Here, we go through each component of our methodology and discuss some of the challenges we faced in implementation, as well as alternative pathways. Our hope is that others can adopt such an informatics-based approach to monitoring imaging workflow for CT as well as other modalities, as these data are available at any institution through common workflow tools (EHR, RIS, DICOM files, and PACS).

We used a dose database to obtain the accession numbers used to pull studies from our PACS database. The key fields used to filter to a specific indication were the study description and protocol name fields. Many other databases in the hospital/radiology environment could have provided the same data. For example, the system proposed by Langer et al. could have been used not only to filter to acute stroke exams but also to obtain DICOM timestamps and other metadata [29, 30]. A dictation database, the RIS, or the PACS database itself could also have been used to perform the filtering steps needed to produce a list of acute stroke studies. For any of these selections, there will be a balance between sensitivity and specificity for the intended purposes of the analysis. For example, had we selected all studies using our institution’s comprehensive acute stroke protocol, we would have returned many non-acute stroke exams because, as previously mentioned, a CT technologist may use multiple scanner protocols to satisfy a single order. We found that combining the orderable and series level protocol filters allowed us to avoid including non-acute and/or non-stroke studies.

Our acute stroke demonstration did not consider scheduling, patient preparation, or billing workflow steps. These steps, as detailed in Tables 1 and 4, require access to one’s RIS database. Because auditing of the EMR/RIS is mandated by federal regulation [31, 32], user, time, content, and duration information is already straightforward to retrieve. For our stroke analysis demonstration, the billing and scheduling steps requiring RIS/EMR log access were not considered especially clinically important for acute stroke, and we chose not to analyze them. For other indications, it may be more clinically important to ensure that technologists review the appropriate patient information outlined in Table 1. For example, renal function should be checked before contrast agent is administered, so the data listed in Table 1 could be used to confirm that a technologist actually reviewed the needed lab results before administering contrast.
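Confirming that lab results were reviewed before contrast administration amounts to comparing audit log entries with the injection time from the power injector log. The sketch below is hypothetical: the audit event structure, the action label, and the 12 hour lookback window are illustrative assumptions, not a regulatory or institutional standard.

```python
from datetime import datetime, timedelta
from typing import Iterable, NamedTuple

class AuditEvent(NamedTuple):
    user: str
    timestamp: datetime
    action: str  # hypothetical action label, e.g. "VIEW_CREATININE"

def reviewed_before_injection(events: Iterable[AuditEvent], technologist: str,
                              injection: datetime,
                              lookback: timedelta = timedelta(hours=12),
                              action: str = "VIEW_CREATININE") -> bool:
    """True if the technologist viewed the lab result within `lookback` before injection."""
    return any(e.user == technologist and e.action == action
               and injection - lookback <= e.timestamp <= injection
               for e in events)

log = [AuditEvent("tech1", datetime(2016, 3, 1, 9, 40), "VIEW_CREATININE")]
print(reviewed_before_injection(log, "tech1", datetime(2016, 3, 1, 9, 55)))  # True
print(reviewed_before_injection(log, "tech2", datetime(2016, 3, 1, 9, 55)))  # False
```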

The code written to interrogate the DICOM header metadata and PACS database timestamps had to handle a multitude of different DICOM objects, because the same DICOM tags are not present in all of the objects in a typical CT exam. For example, DICOM tag 0008,1070 (Operators’ Name) was present in source images but not in perfusion maps. Any code written to interrogate such a mix of DICOM objects must therefore not assume that a particular tag exists in every object.
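A defensive extraction pattern is to request every field and record `None` when a tag is absent, rather than indexing directly. In this sketch headers are plain dictionaries keyed by DICOM keyword (an assumption); pydicom's `Dataset.get(keyword, default)` behaves similarly if files are read with that library. The field list and function names are illustrative.

```python
from typing import Any, Dict, Iterable, Mapping, Optional, Sequence

# Illustrative field list, by DICOM keyword. Any of these may be missing from a
# given object (e.g., Operators' Name from perfusion maps, as noted above).
FIELDS: Sequence[str] = ("AccessionNumber", "SeriesNumber", "SeriesDescription",
                         "AcquisitionTime", "ContentTime", "OperatorsName")

def extract_row(header: Mapping[str, Any],
                fields: Sequence[str] = FIELDS) -> Dict[str, Optional[Any]]:
    """Return every requested field, with None where the tag is absent."""
    return {name: header.get(name) for name in fields}

def missing_counts(headers: Iterable[Mapping[str, Any]],
                   fields: Sequence[str] = FIELDS) -> Dict[str, int]:
    """Count, per field, how many objects lack that tag (useful for auditing)."""
    counts = {name: 0 for name in fields}
    for header in headers:
        for name in fields:
            if header.get(name) is None:
                counts[name] += 1
    return counts

source_image = {"AccessionNumber": "A1", "SeriesNumber": 2, "OperatorsName": "DOE^JANE"}
perfusion_map = {"AccessionNumber": "A1", "SeriesNumber": 101}
print(extract_row(perfusion_map)["OperatorsName"])                     # None
print(missing_counts([source_image, perfusion_map])["OperatorsName"])  # 1
```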

Because it is not a DICOM tag, the networking time proved the most difficult piece of data to gather. Our method of interrogating the file creation time directly from the file system was effective but cumbersome. The file creation timestamp was only a surrogate for the network transfer time, as there could be latency between when a technologist transfers a study and when the scanner sends it, and/or between when PACS receives a study and when it writes the study to storage. Our institution has since adopted an object storage technology (i.e., hyper-converged infrastructure) and is migrating medical data storage from our other systems (i.e., the vendor neutral archive supporting our PACS) to it. An object storage solution could have removed our need to use two different pathways to collect the required DICOM and image transfer time data fields.
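When the transfer time must be inferred from the archive's file system, as described above, the sketch below reads the best available file timestamp. It assumes direct file access to the archive storage and naive local times on both the scanner and the file server; a negative lag would suggest the two clocks are not synchronized. `st_birthtime` is only exposed on some platforms (e.g., macOS and BSD, and Windows from Python 3.12), so the code falls back to the modification time, which approximates the write time only if the file was not modified afterwards.

```python
import os
from datetime import datetime

def file_timestamp(path: str) -> datetime:
    """Best available creation time of a stored file, as a naive local datetime.

    st_birthtime is exposed on macOS/BSD and on Windows from Python 3.12; most
    Linux builds do not provide it, so fall back to the modification time.
    """
    st = os.stat(path)
    created = getattr(st, "st_birthtime", None)
    return datetime.fromtimestamp(created if created is not None else st.st_mtime)

def transfer_lag_seconds(image_created: datetime, path: str) -> float:
    """Archive file timestamp minus DICOM image creation time.

    A negative value suggests the scanner and archive clocks are not synchronized.
    """
    return (file_timestamp(path) - image_created).total_seconds()

# Hypothetical usage; the path and creation time are placeholders:
# lag = transfer_lag_seconds(datetime(2016, 3, 1, 14, 2, 40), "/path/to/archived/image.dcm")
```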

There is a recently published IHE profile [33] and DICOM supplement [34] to facilitate protocol management. Such protocol management systems would allow auditing of the user, time, content, and duration spent reviewing a protocol before or during an examination, another digital footprint not previously discussed in the current work. Monitoring technologist use of protocol management systems could identify technologists who need more training, or could be correlated with instances of technologist non-compliance with imaging protocols [35, 36]. Protocol management systems are available from some vendors, and institution-specific solutions exist [37, 38].

The workflow steps outlined here cover the main facets of image acquisition and preparation. Boland [1] maps out a similar technologist/scanner workflow and goes into greater depth on the tasks performed by a technologist. Boland’s synopsis does not categorize the technologist/scanner workflow into the facets we propose here (i.e., patient preparation, scanner/device preparation, image acquisition, image reconstruction, image post processing, image networking, and billing/scheduling tasks), but it does contain workflow steps/processes related to each of our categories. Given these similarities, the revenue model reported by Boland, which relies on high throughput, could likely be monitored using the approaches described in the present work. The methods described here could also be used to confirm the throughput-increasing and workflow-changing effect of having multiple technologists work together versus a single technologist [39].

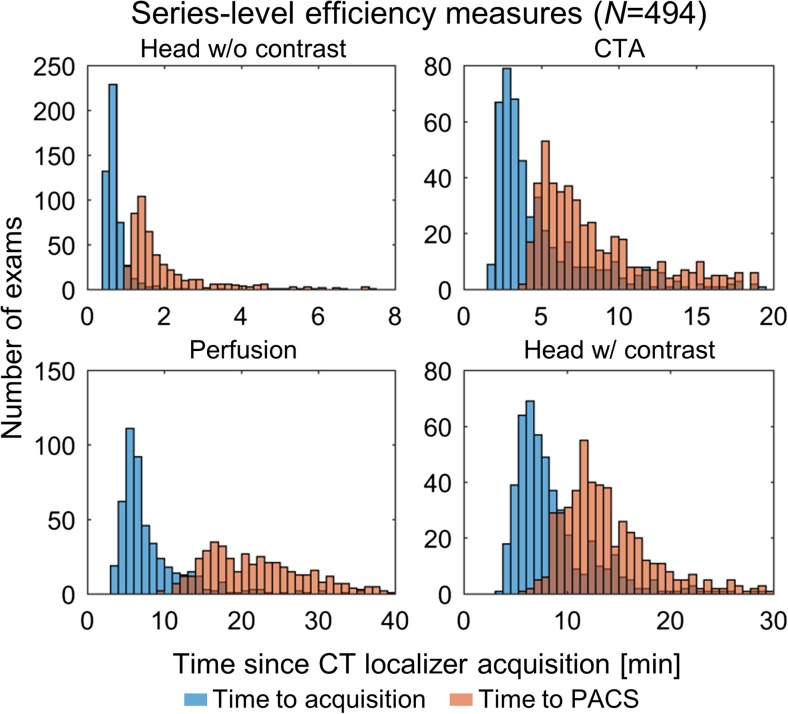

In the clinical companion to this paper, we will present clinical results from a fully automated informatics-based solution for monitoring individual CT workflow steps in the setting of acute stroke. Fig. 3 shows the distributions of image creation times on the scanner and image file timestamps in PACS for the four series that make up our acute stroke protocol, referenced to the CT localizer image acquisition time. In all cases, the image transfer time lags the image creation time, as expected. The non-contrast head images were acquired rapidly and were available in PACS within minutes for initial radiologist interpretation. This temporal analysis also allowed us to evaluate policy changes to our acute stroke protocol, such as a change in acquisition instructions and a change in perfusion processing software. CT is only a single step in our acute stroke workflow. Data from 2016 from our neurological endovascular surgery stroke committee showed a median door-to-CTA-completion time of 18 min and a median door-to-groin-puncture time of 56 min. The widths of the CTA distributions in Fig. 3 are roughly equal to the total door-to-CTA time and about 1/3 of the door-to-groin time. Therefore, optimizing the time spent in CT alone should have a large impact on our overall door-to-treatment times.

Fig. 3.

Representative histograms from the institution-level analysis. Histograms show distributions of time to series acquisition (blue) and to availability in PACS (orange) for 494 patients over approximately 1.5 years. All PACS times refer to the first reconstruction (not reformats), except for perfusion, where they refer to the perfusion maps. All times are referenced to the time of CT localizer radiograph acquisition.
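The distributions in Fig. 3 reduce to per-exam delays referenced to the localizer time. A minimal sketch of that computation follows. It assumes that the localizer, series acquisition, and PACS arrival times have already been extracted as datetime values for each exam. The paper does not define the "width" of a distribution, so this sketch uses the 5th to 95th percentile range as an assumed definition. All function names are hypothetical.

```python
from datetime import datetime
from statistics import median, quantiles

def minutes_after(reference_times: list[datetime], event_times: list[datetime]) -> list[float]:
    """Per-exam delay, in minutes, from the CT localizer time to a later event."""
    return [(ev - ref).total_seconds() / 60.0 for ref, ev in zip(reference_times, event_times)]

def summarize(delays: list[float]) -> dict:
    """Median and 5th-95th percentile spread of a delay distribution (requires >= 2 values)."""
    cuts = quantiles(delays, n=20)  # 19 cut points: cuts[0] is the 5th, cuts[-1] the 95th percentile
    return {"median": median(delays), "p5": cuts[0], "p95": cuts[-1], "width": cuts[-1] - cuts[0]}

def pacs_lags_acquisition(acquired: list[datetime], in_pacs: list[datetime]) -> bool:
    """True if every series reached PACS no earlier than it was acquired."""
    return all(p >= a for a, p in zip(acquired, in_pacs))
```

As an arithmetic check on the committee figures, 18 min / 56 min ≈ 0.32, which matches the roughly 1/3 ratio between the CTA distribution width and the door-to-groin time.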

Conclusion

This paper describes the richness of workflow-related digital footprints in the imaging environment. These footprints include steps performed by both imaging equipment and operators. We outlined the major workflow steps that have digital footprints and highlighted some possible clinically/administratively meaningful metrics that can be derived from them. We examined one such metric in detail in the setting of acute stroke. In the acute stroke example, we demonstrate that while the high-level steps of image acquisition, reconstruction, processing, and transfer are common to all sites, site-specific differences in vendor and in how digital footprints are stored necessitate site-specific implementation of workflow analysis. This observation supports the statement made by Reiner and colleagues in their overview of workflow optimization in medical imaging: “One must realize, however, that workflow redesign must be customized to the unique and idiosyncratic nature of each individual organization” [23].

Funding

This work was supported by a research grant from GE Healthcare.

Compliance with Ethical Standards

Conflict of Interest

All authors are involved in a collaborative project that supplies CT protocols to GE Healthcare. TPS is also a GE consultant and the founder of protocolshare.org. RB is co-founder and officer of ImageMoverMD, and GW is on the MAB of McKesson and HealthMyne, a stockholder in HealthMyne, and co-founder of WITS(MD). AF receives research support from GE Healthcare. WP is a stockholder in GE Healthcare.

References

1. Boland GW. Enhancing CT productivity: strategies for increasing capacity. American Journal of Roentgenology. 2008;191(1):3–10. doi: 10.2214/AJR.07.3208.

2. Gunn ML, Maki JH, Hall C, Bhargava P, Andre JB, Carnell J, ... Beauchamp NJ. Improving MRI scanner utilization using modality log files. Journal of the American College of Radiology. 2017;14(6):783–786.

3. Flug J, Nagy P. The lean concept of waste in radiology. Journal of the American College of Radiology. 2011;8(6):443–445. doi: 10.1016/j.jacr.2011.02.017.

4. Hirschorn DS, Hinrichs CR, Gor DM, Shah K, Visvikis G. Impact of a diagnostic workstation on workflow in the emergency department at a level I trauma center. Journal of Digital Imaging. 2001;14:199–201. doi: 10.1007/BF03190338.

5. Reiner BI, Siegel EL, Hooper FJ, Pomerantz S, Dahlke A, Rallis D. Radiologists’ productivity in the interpretation of CT scans: a comparison of PACS with conventional film. American Journal of Roentgenology. 2001;176(4):861–864. doi: 10.2214/ajr.176.4.1760861.

6. Reiner BI, Siegel EL, Hooper FJ, Glasser D. Effect of film-based versus filmless operation on the productivity of CT technologists. Radiology. 1998;207(2):481–485. doi: 10.1148/radiology.207.2.9577498.

7. Kato H, Kubota G, Kojima K, Hayashi N, Nishihara E, Kura H, Aizawa M. Preliminary time-flow study: comparison of interpretation times between PACS workstations and films. Computerized Medical Imaging and Graphics. 1995;19(3):261–265. doi: 10.1016/0895-6111(95)00010-N.

8. Redfern RO, Horii SC, Feingold E, Kundel HL. Radiology workflow and patient volume: effect of picture archiving and communication systems on technologists and radiologists. Journal of Digital Imaging. 2000;13:97–100. doi: 10.1007/BF03167635.

9. Siegel E, Reiner B. Work flow redesign: the key to success when using PACS. American Journal of Roentgenology. 2002;178(3):563–566. doi: 10.2214/ajr.178.3.1780563.

10. Reiner B, Siegel E, Scanlon M. Changes in technologist productivity with implementation of an enterprisewide PACS. Journal of Digital Imaging. 2002;15(1):22–26. doi: 10.1007/s10278-002-0999-y.

11. Reiner BI, Siegel EL. Technologists’ productivity when using PACS: comparison of film-based versus filmless radiography. American Journal of Roentgenology. 2002;179(1):33–37. doi: 10.2214/ajr.179.1.1790033.

12. Siegel EL, Reiner BI, Siddiqui KM. Ten filmless years and ten lessons: a 10th-anniversary retrospective from the Baltimore VA Medical Center. Journal of the American College of Radiology. 2004;1(11):824–833. doi: 10.1016/j.jacr.2004.06.002.

13. Lepanto L, Paré G, Aubry D, Robillard P, Lesage J. Impact of PACS on dictation turnaround time and productivity. Journal of Digital Imaging. 2006;19(1):92. doi: 10.1007/s10278-005-9245-8.

14. Wideman C, Gallet J. Analog to digital workflow improvement: a quantitative study. Journal of Digital Imaging. 2006;19(1):29–34. doi: 10.1007/s10278-006-0770-x.

15. Humphrey LM, Fitzpatrick K, Atallah N, et al. Time comparison of intensive care units with and without digital viewing systems. Journal of Digital Imaging. 1993;6:37–41. doi: 10.1007/BF03168416.

16. Schemmel A, Lee M, Hanley T, Pooler BD, Kennedy T, Field A, ... John-Paul JY. Radiology workflow disruptors: a detailed analysis. Journal of the American College of Radiology. 2016;13(10):1210–1214.

17. Dhanoa D, Dhesi TS, Burton KR, Nicolaou S, Liang T. The evolving role of the radiologist: the Vancouver workload utilization evaluation study. Journal of the American College of Radiology. 2013;10(10):764–769. doi: 10.1016/j.jacr.2013.04.001.

18. John-Paul JY, Kansagra AP, Mongan J. The radiologist's workflow environment: evaluation of disruptors and potential implications. Journal of the American College of Radiology. 2014;11(6):589–593. doi: 10.1016/j.jacr.2013.12.026.

19. Gay SB, Sobel AH, Young LQ, Dwyer SJ. Processes involved in reading imaging studies: workflow analysis and implications for workstation development. Journal of Digital Imaging. 1997;10(1):40–45. doi: 10.1007/BF03168549.

20. Beard D. Designing a radiology workstation: a focus on navigation during the interpretation task. Journal of Digital Imaging. 1990;3(3):152–163. doi: 10.1007/BF03167601.

21. TJC. Specifications Manual for Joint Commission National Quality Measures, Version 2017A. 2017. https://manual.jointcommission.org/releases/TJC2017A/rsrc56/Manual/TableOfContentsTJC/TJC_v2017A.pdf

22. Saver JL. Time is brain—quantified. Stroke. 2006;37(1):263–266. doi: 10.1161/01.STR.0000196957.55928.ab.

23. Reiner B, Siegel E, Carrino JA. Workflow optimization: current trends and future directions. Journal of Digital Imaging. 2002;15(3):141–152. doi: 10.1007/s10278-002-0022-7.

24. Branstetter BF IV. Basics of imaging informatics: Part 1. Radiology. 2007;243(3):656–667. doi: 10.1148/radiol.2433060243.

25. Branstetter BF IV. Basics of imaging informatics: Part 2. Radiology. 2007;244(1):78–84. doi: 10.1148/radiol.2441060995.

26. Cook TS, Zimmerman SL, Steingall SR, Maidment AD, Kim W, Boonn WW. Informatics in radiology: RADIANCE: an automated, enterprise-wide solution for archiving and reporting CT radiation dose estimates. Radiographics. 2011;31(7):1833–1846. doi: 10.1148/rg.317115048.

27. Gress DA, Dickinson RL, Erwin WD, Jordan DW, Kobistek RJ, Stevens DM, ... Fairobent LA. AAPM medical physics practice guideline 6.a.: Performance characteristics of radiation dose index monitoring systems. Journal of Applied Clinical Medical Physics. 2017.

28. Grimes J, Leng S, Zhang Y, Vrieze T, McCollough C. Implementation and evaluation of a protocol management system for automated review of CT protocols. Journal of Applied Clinical Medical Physics. 2016;17(5):1–11. doi: 10.1120/jacmp.v17i5.6164.

29. Langer SG. A flexible database architecture for mining DICOM objects: the DICOM data warehouse. Journal of Digital Imaging. 2012;25(2):206–212. doi: 10.1007/s10278-011-9434-6.

30. Langer SG. DICOM data warehouse: Part 2. Journal of Digital Imaging. 2016;29(3):309–313. doi: 10.1007/s10278-015-9830-4.

31. US Department of Health and Human Services. Security standards: General rules. 45 CFR section 164.308(a)-(c).

32. US Department of Health and Human Services. Technical safeguards. 45 CFR section 164.312(b).

33. Integrating the Healthcare Enterprise. “Management of Acquisition Protocols (MAP)” profile. Published 2017-07-14. Downloaded on 8/25/2017 from http://ihe.net/Technical_Frameworks/#radiology

34. Digital Imaging and Communications in Medicine (DICOM). “Supplement 121: CT Procedure Plan and Protocol Storage SOP Class.” Downloaded on 8/25/2017 from http://dicom.nema.org/Dicom/News/oct2013/docs_oct2013/sup121_pc.pdf

35. Szczykutowicz TP, Siegelman J. On the same page—physicist and radiologist perspectives on protocol management and review. Journal of the American College of Radiology. 2015;12(8):808–814. doi: 10.1016/j.jacr.2015.03.042.

36. Szczykutowicz TP, Malkus A, Ciano A, Pozniak M. Tracking patterns of nonadherence to prescribed CT protocol parameters. Journal of the American College of Radiology. 2017;14(2):224–230. doi: 10.1016/j.jacr.2016.08.029.

37. Szczykutowicz TP, Rubert N, Belden D, Ciano A, Duplissis A, Hermanns A, Monette S, JanssenSaldivar E. A wiki based solution to managing your institutions imaging protocols. Journal of the American College of Radiology. 2016.

38. Szczykutowicz TP, Rubert N, Belden D, Ciano A, Duplissis A, Hermanns A, Monette S, JanssenSaldivar E. A wiki based CT protocol management system. Radiology Management. 2015.

39. Boland GW, Houghton MP, Marchione DG, McCormick W. Maximizing outpatient computed tomography productivity using multiple technologists. Journal of the American College of Radiology. 2008;5(2):119–125. doi: 10.1016/j.jacr.2007.07.009.