Summary

Where one looks within their environment constrains one’s visual experiences, directly affects cognitive, emotional, and social processing [1–4], influences learning opportunities [5], and ultimately shapes one’s developmental path. While there is a high degree of similarity across individuals with regard to which features of a scene are fixated [6–8], large individual differences are also present, especially in disorders of development [9–13], and clarifying the origins of these differences is essential to understanding the processes by which individuals develop within the complex environments in which they exist and interact. Toward this end, a recent paper [14] found that ‘social visual engagement’ – namely, gaze to eyes and mouths of faces – is strongly influenced by genetic factors. However, whether genetic factors influence gaze to complex visual scenes more broadly, impacting how both social and non-social scene content are fixated, as well as general visual exploration strategies, has yet to be determined. Using a behavioral genetic approach and eye tracking data from a large sample of 11-year-old human twins (233 same-sex twin pairs; 51% monozygotic/49% dizygotic), we demonstrate that genetic factors do indeed contribute strongly to eye movement patterns, influencing both one’s general tendency for visual exploration of scene content, as well as the precise moment-to-moment spatiotemporal pattern of fixations during viewing of complex social and non-social scenes alike. This study adds to a now growing set of results that together illustrate how genetics may broadly influence the process by which individuals actively shape and create their own visual experiences.

Keywords: eye tracking, development, behavioral genetics, eye gaze, gene environment correlation, dynamic systems, selective attention, evocative effects, autism, neurodevelopmental disorders

Results

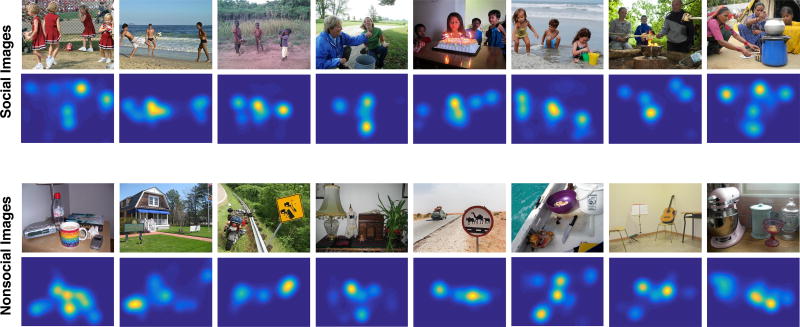

Eighty complex and naturalistic images were each shown once, for 3 seconds, to each participant [n = 466; 119 monozygotic (MZ) pairs and 114 same-sex dizygotic (DZ) pairs] while their eye movements were recorded. Gaze heatmaps were first created by combining data from all individuals for each image (Figure 1), as well as for each individual and each image separately (Figure 2), and were used to quantify gaze similarities and differences between pairs of individuals (see STAR Methods and Figure S1). As an initial test of the heritability of gaze, we examined whether twins exhibited similar visual exploration tendencies when looking at the images, operationalized as the degree of gaze dispersion quantified using Shannon entropy (see STAR Methods). Indeed, MZ twins exhibited more similar exploration tendencies (rMZ = 0.42) than DZ twins (rDZ = 0.19), implying a moderate heritability (h2 = 0.38 [0.25–0.51]; for details, see STAR Methods and Table S1).

Figure 1. Examples of social and non-social stimuli, along with their corresponding gaze heatmaps.

Each heatmap represents the spatial pattern of gaze aggregated across all participants (n = 466). The heatmaps are 2D histograms in which the x and y axes correspond to the horizontal and vertical dimensions of the stimulus, respectively, and color represents the amount of gaze data aggregated at each location within the stimulus. See also Figure S3.

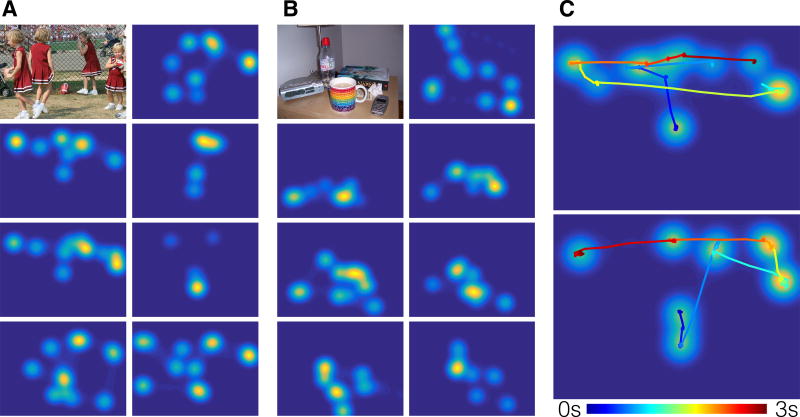

Figure 2. Examples of individual differences in scanpaths.

a) & b) Examples of spatial gaze patterns across different participants for two stimuli (displayed in top left panels), showing substantial individual differences in looking patterns. c) Heatmaps from two individuals with the temporal trace superimposed (colored line). Even though the overall spatial pattern may be similar, the temporal order of gaze across individuals may differ, revealing important individual differences in prioritization of scene content. See also Figure S1 and Movies S1 and S2.

Next, we sought to directly assess the extent to which individuals look at the same locations in the scenes by comparing each twin’s gaze directly with that of their co-twin. As hypothesized, MZ twins exhibited the highest degree of similarity (mean r (±SD) = 0.60 ± 0.07), followed by DZ twins (mean r = 0.56 ± 0.08), and then by unrelated individuals (mean r = 0.54 ± 0.07; 2000 random non-twin (NT) pairings derived from a bootstrapping procedure; see STAR Methods). Both MZ and DZ twins exhibited greater similarity in gaze patterns than unrelated individuals [t118 = 10.53, p = 1.11 × 10−18, Cohen’s d = 0.99; t113 = 3.61, p = 4.54 × 10−4, d = 0.38, respectively; calculated using one-sample t-tests, although essentially identical results were obtained with two-sample t-tests], and MZ twins exhibited greater similarity than DZ twins [t231 = 4.29, p = 2.59 × 10−5, d = 0.56, independent-samples t-test] (see Figure S2). Note that the correlation values reported above (means of correlations) are not equivalent to the intraclass correlations typically used in quantitative behavioral genetic analyses (see also STAR Methods), so we caution the reader against simply applying Falconer’s formula to them to estimate heritability.

Repeating the analysis above using a mean-referenced similarity metric (which, unlike the above analysis, allows for quantitative behavioral genetic analysis; see STAR Methods), we again confirmed that MZ twins were more similar to one another than DZ or NT pairs (rMZ = 0.30, rDZ = 0.10, rNT = 0.00, respectively), and behavioral genetic modeling indicated a moderate heritable component to spatial patterns of gaze measured on short (3 second) timescales (h2 = 0.28 [0.12–0.43]; for details, see STAR Methods and Table S1).

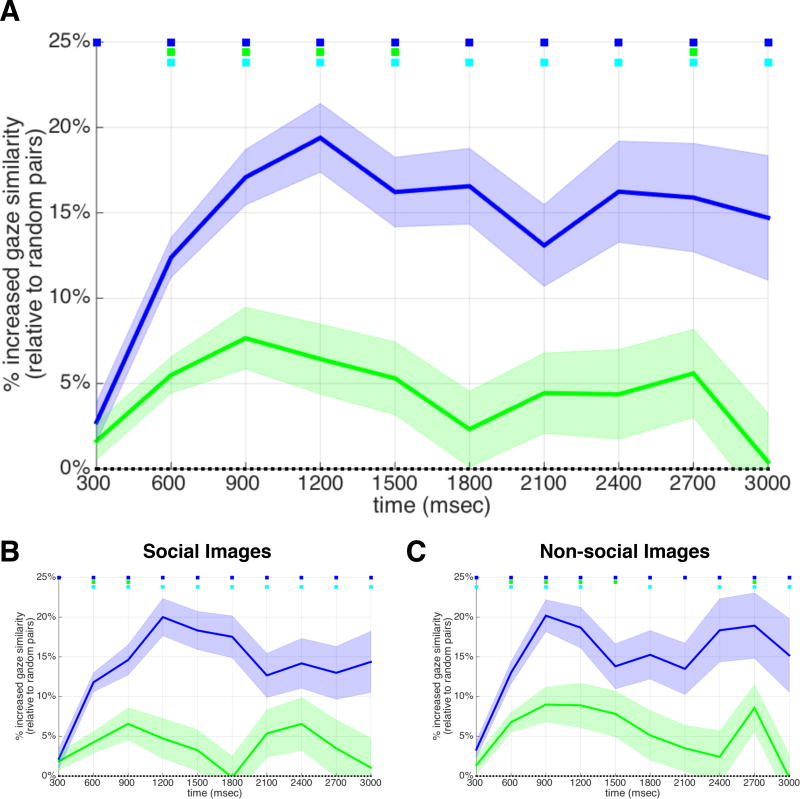

We next examined the effects of twin status on gaze at even shorter timescales. Immediately following image onset (bin 1: 1–300 msec), MZ, DZ, and NT pairs exhibited largely comparable levels of gaze similarity. This was expected: participants were instructed to look at the central fixation cross preceding each stimulus onset, which resulted in very little variance in initial fixation location. Over the course of the trial, however, gaze locations became progressively more divergent across participants (r2 = 0.978, β = −0.675; power function fitted to the averaged data from the 2000 NT pairs across the 10 time bins). Greater gaze similarity in MZ pairs relative to both DZ and NT pairs was maintained across all subsequent time bins (see Figure 3). As illustrated by Movie S1 and Movie S2, MZ twins in some cases showed remarkably high spatiotemporal gaze similarity. Note that these particular videos were selected because they clearly illustrate the phenomenon being discussed, not because they are representative; the correlations shown in the videos are among the highest observed.
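The power-function fit mentioned above can be sketched as follows. This is a minimal illustration rather than the authors' MATLAB code: it assumes the fit y = a·x^β was obtained by ordinary least squares on log-transformed bin indices and similarity values (a nonlinear least-squares fit would give slightly different parameters), and the function name `fit_power_function` is hypothetical.

```python
import numpy as np

def fit_power_function(x, y):
    """Fit y = a * x**beta by least squares in log-log space (illustrative sketch).

    Assumes all x and y values are strictly positive (e.g., time-bin index 1..10
    and mean NT similarity per bin). Returns (a, beta, r2), with r2 computed on
    the log scale, where the power law is a straight line.
    """
    log_x = np.log(np.asarray(x, dtype=float))
    log_y = np.log(np.asarray(y, dtype=float))
    beta, log_a = np.polyfit(log_x, log_y, 1)  # returns [slope, intercept]
    predicted = log_a + beta * log_x
    ss_res = np.sum((log_y - predicted) ** 2)
    ss_tot = np.sum((log_y - log_y.mean()) ** 2)
    return float(np.exp(log_a)), float(beta), float(1.0 - ss_res / ss_tot)
```

Taking logs turns the power law into a line, so a degree-1 polynomial fit suffices; the price is that residuals (and hence r2) are measured on the log scale rather than on the original similarity scale.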

Figure 3. Spatiotemporal similarity across monozygotic twins and dizygotic twins relative to non-twin pairs.

a) MZ twins (blue) and DZ twins (green) exhibited more similar gaze patterns than pairs of unrelated individuals. Solid blue and green lines represent the mean correlation between heatmaps of MZ and DZ twins at each time bin (each 300 msec), plotted as a percent relative to non-twin pairs (shaded area = s.e.m.). Except for the very first time bin, gaze between MZ twins was significantly more similar than gaze between DZ twins, demonstrating a genetic contribution to spatiotemporal gaze patterns on strikingly short timescales. Colored squares (top) indicate significant differences (blue = MZ vs. NT; green = DZ vs. NT; cyan = MZ vs. DZ). b) & c) The same overall pattern of MZ > DZ > NT was preserved when analyzing social and non-social images separately. See also Figure S2 and Movies S1 and S2.

To test whether the effects described above were driven by particular sub-classes or characteristics of stimuli, we performed two additional analyses. First, we ran the above temporal heatmap analysis separately for social and non-social images. Across both content domains, similar patterns were observed, with MZ twins exhibiting higher levels of gaze similarity than DZ and NT pairs (Figure 3b,c). Second, we investigated how generalizable the results were across different levels of gaze complexity (operationally defined as the mean entropy of gaze across all participants for each image; see STAR Methods). As shown in Figure S3, the same pattern of gaze similarity across groups (MZ > DZ > NT) was apparent across all levels of entropy (slope/β = −0.1214, −0.1197, and −0.1249, respectively; r2 = 0.804, 0.801, and 0.798, respectively).

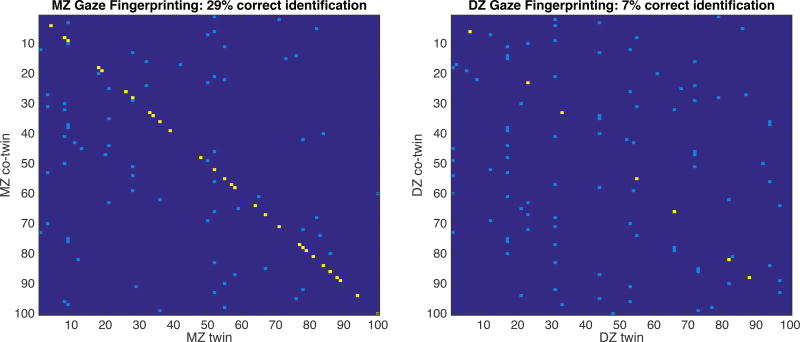

Finally, using a new statistical method that seeks to identify co-twins based on their patterns of gaze alone, termed cross-subject gaze fingerprinting (see Figure 4 and STAR Methods for further details), we found higher identification accuracy for MZ pairs than for DZ or NT pairs. Across 1000 bootstraps, this procedure resulted in 27.9% (±2.9) accuracy for MZ twins, 6.7% (±1.7) accuracy for DZ twins, and 0.84% (±0.9) accuracy for NT pairs (chance would be expected to be 1%). The distributions of MZ and DZ accuracies were entirely non-overlapping, and both differed strongly from chance (i.e., NT pairs; all ps < 0.00001; see Figure S4).

Figure 4. Cross-Subject Gaze Fingerprinting for monozygotic and dizygotic twins.

An individual’s pattern of gaze can be used to accurately identify their co-twin from among a pool of 100 individuals. In each panel, 100 randomly selected individuals are shown on the x-axes along with their 100 co-twins on the y-axes. For each individual on the x-axis (column), the individual with the highest gaze similarity on the y-axis (row) is marked, where yellow indicates that co-twin identification was achieved and light blue indicates that co-twin identification was not achieved. Across 1000 bootstrapped iterations of this procedure, accuracy of gaze fingerprinting is 27.9% for MZ twins and 6.7% for DZ twins. Both MZ and DZ identification rates are significantly different from chance accuracy (~1%) and significantly different from each other (all ps < 0.00001; MZ > DZ > NT), further demonstrating a high degree of gaze similarity among MZ twins. Note that this figure illustrates the results of one run of the bootstrapping procedure; the statistics in the text reflect the mean result across 1000 runs. See also Figure S4.

Discussion

The current study demonstrates a genetic influence on spatiotemporal eye movement patterns during free viewing of complex and highly unconstrained visual environments. We observed these effects even when splitting the analysis into very short time bins, showing that genetics contribute to the precise temporal pattern of eye movements during free viewing in later childhood. The strength of this genetic effect is particularly evident in the cross-subject gaze fingerprinting analysis, wherein we found that patterns of gaze to the scenes contained enough unique information shared among twins to correctly match individuals with their co-twins from among a pool of 100 individuals. This effect was most pronounced in MZ twins (~28% accuracy), but was also well above chance in DZ twins (DZ accuracy = ~7%; chance = ~1%).

Our findings serve as a robust initial replication and important extension of recently published work identifying heritable effects on gaze to social videos in toddlers [14]; importantly, we do so using different analytical methods, different populations (24- and 36-month-olds vs. later childhood), and qualitatively different stimuli (dynamic social videos with sound vs. static images of highly varied complex scenes, both social and non-social). Our results extend these initial findings by demonstrating that genes still influence human gaze well beyond infancy and toddlerhood, continuing at least into later childhood and likely beyond, and that they influence viewing of highly varied environments, both social and non-social. Furthermore, we find that even the more stimulus-independent exploration tendencies one exhibits when viewing complex scenes (i.e., either highly exploratory or more restricted in scope) have a genetic influence.

Where one looks within complex scenes is influenced by multiple simultaneously interacting factors, including both bottom-up and top-down processes. Bottom-up image properties include low-level (contrast, orientation, color), mid-level (object size, object complexity), and/or higher-level semantic attributes (faces, eye gaze cues, text, etc.) [6–8, 15]. Top-down cognitive processes also have measurable influences on gaze, including specific task demands [16], as well as numerous other trait- and state-level factors (e.g., visual exploration tendencies, as examined here, but also arousal, anxiety, motivation, drowsiness, and so on). This multivariate and multicausal context makes it all the more remarkable that a significant portion of the variance in eye movements across individuals can be explained by genetic factors.

Critically, gaze not only reflects one’s bottom-up and top-down attentional tendencies, but also affords subsequent information processing and influences social interaction [1, 4, 17–19]. For example, children who spend more time looking at other people – in the family, in school – will create a very different learning environment for themselves compared to children who tend to look elsewhere. Indeed, even subtle initial differences in looking behavior, which may be influenced by one’s genetic makeup, may over time contribute to divergent developmental trajectories [3, 10]. Importantly, this is not a deterministic perspective, in that we do not believe that genes define an outcome (in this case, spatiotemporal patterns of gaze to complex scenes), but rather that genes contribute (in a probabilistic sense) to a developmental process that gives rise to particular gaze behavior as a consequence of a continually and reciprocally reinforcing biology-environment interaction. Infants gain control over their eye movements earlier than most other exploratory actions (e.g., effective reaching for or crawling towards objects) [20, 21], suggesting that a genetic influence on eye movements may play a particularly important role early in life [14]. Furthermore, not only do these results have implications for our understanding of the emergence of individual differences within the range of typical development, but also for understanding atypical development. Autism Spectrum Disorder, for instance, is both heritable [22–24] and associated with altered looking patterns beginning in infancy and persisting well into adulthood [2, 9, 10, 14, 25].

The present results may also have implications for understanding the emergence of particular gene-environment correlations, a phenomenon whereby aspects of one’s environment are influenced by one’s genes [26–31]. For example, people who have a genetic predisposition to shyness [32] are likely to select environments that fit their socially diffident personality (i.e., an active gene-environment correlation process), and their behavior tends to elicit particular reactions in other people (i.e., an evocative gene-environment correlation process) [33]. However, unlike this example, gene-environment correlations related to eye movements would occur at a completely different temporal and spatial scale: instead of directly affecting one’s macro-level environment (e.g., the job one chooses, the social events one attends, the people one associates with), they would operate at a micro-level, namely the immediate, momentary sub-selection of visual information within one’s current environment. In other words, because eye movements allow one to select one’s visual experiences (and also influence other people within social contexts), establishing that genetic factors influence eye movements by definition means that eye movements serve as mechanisms of active (and evocative) gene-environment correlations; that is, genes influence the micro-level environments individuals create for themselves. Because exposure to different visual environments, even micro-level environments such as fixating a specific part of a face [1], entails differential access to information, learning opportunities, and subsequent cognitive processing, such gene-environment correlations may influence one’s behavior in significant ways [29], especially when considered over development. Demonstrating a causal link between eye movements and subsequent behavior and cognition would be an important next step.

An important limitation of the present study should also be addressed. As with any twin study, the heritability estimates rely on the equal environments assumption (i.e., that MZ and DZ co-twins share their environments to a similar degree). It is possible that the environments of MZ twins are more similar to one another than those of DZ twins and that those environments influence eye gaze patterns, thus resulting in misattributed variance and an overestimation of the heritability of the phenotype [29]. It should also be noted that similarity in gaze to unconstrained complex scenes, where one is free to fixate anywhere within a 2-dimensional scene and each gaze location is treated as a unique and singular event (i.e., not averaged across any other instances), is a particularly unforgiving measure, with a near-infinite number of ways for gaze to differ between two people (including measurement error) but only one way for it to be similar. Thus, one could also make the case that the heritability estimates may be underestimates.

A second limitation is that because of the multivariate and multicausal nature of eye movements, together with the design of the experiment, we cannot disentangle the specific genetic and environmental contributions of each of the many bottom-up and top-down factors. In free viewing of naturalistic scenes, some of these factors may be inextricably linked together and only separable through specifically designed experimental manipulations. At the same time, it is possible (and perhaps likely) that there will not be a perfectly even level of heritability across each of these various bottom-up and top-down processes, making such questions ripe for future investigation. Indeed, there is much interest in the use of eye movements as relatively inexpensive and easily measurable quantitative endophenotypes (e.g., [9–11, 34]), so parsing the heritability of gaze behavior further into its component processes is a worthwhile pursuit. Yet, even without such parsing, our results highlight the possibility of using eye movements even to complex scenes in highly unconstrained task contexts as quantitative endophenotypes in future investigations of behavioral and cognitive traits and psychopathology (see also [14]).

Finally, our finding that genetic factors influence the temporal order of gaze location (on the order of hundreds of milliseconds) to static scenes is consistent with the recent finding by Constantino and colleagues [14] that genetic factors also influence viewing of dynamic scene content. The demonstration of these spatiotemporal effects is particularly important when considering gaze in the real world: when viewing static scenes, it is possible to look back to a previously ignored location at a later time and still acquire that visual input. However, in everyday life, some significant events (like a quick glance, a subtle facial expression, or a car unexpectedly merging into your lane) can be quite fleeting, and there may never be a second chance to acquire this information [35]. Thus, where we direct our eyes on a moment-to-moment basis reflects a continuous and interactive process in which we actively create, constrain, and shape our worlds. By demonstrating a genetic contribution to precise spatiotemporal gaze patterns to complex visual scenes in human children, this study adds to a now growing set of results that together provide a new developmental perspective on the emergence of individual differences in cognition and behavior.

STAR Methods

Contact for Reagent and Resource Sharing

Further information and requests for resources should be directed to and will be fulfilled by the Lead Contact, Dr. Dan Kennedy (dpk@indiana.edu).

Experimental Model and Subject Details

130 monozygotic (MZ) twin pairs and 130 same-sex dizygotic (DZ) twin pairs participated in the study (n = 520 individuals total). After excluding 33 individuals based on insufficient data (see below for details), and then removing their corresponding co-twins, the final sample consisted of 119 MZ twin pairs and 114 same-sex DZ twin pairs (n = 466 individuals). The participants (mean age = 11.4 ± 1.3 years; range = 9.2–14.1 years) were recruited from a population-based twin study in Sweden [36] and were living in the larger Stockholm area. The study was approved by the Regional Ethics Review Board in Stockholm, and written informed consent was obtained from parents. Gift vouchers were given to the children as an incentive for participation ($35 for each child).

The results described here comprise one specific experiment that was conducted in the context of a larger, approximately 50-minute-long eye tracking session, which was preceded or followed by an additional 30 min of behavioral and cognitive testing. Zygosity was confirmed by molecular genetic analyses or, in cases where DNA samples were not available, by a highly accurate classifier based on five questions of twin similarity (see [36] for full details). The proportion of boys was similar in MZ and DZ groups (43.7% and 45.6%, respectively; χ2(1, N = 466) = .17, p = .68, chi-square test), and age did not differ between the two groups of twins (t231 = 1.10, p = .27). According to parent report, no child had uncorrected impairments in vision or hearing.

Method Details

Stimuli

The stimuli consisted of 80 different complex scenes spanning a wide range of naturalistic non-social and social snapshots of visual environments that people may encounter in everyday life (from ref [8]; retrieved from http://www-users.cs.umn.edu/~qzhao/predicting.html; Figure 1). Forty of these images included two or more people (“social images”) and the other 40 did not include any people (“non-social images”). Each image was displayed for 3 sec, with a 1 sec inter-trial interval during which a central fixation point was displayed. Stimuli subtended 29.3° × 22.7° (width × height) of visual angle on a screen subtending 29.3° × 24.2°. Participants were instructed to simply look at the images for the total duration of the experiment (5 min, 20 sec). The presentation order of the 80 images was randomized for each individual (including within twin pairs).

Eye tracking data collection

Eye tracking data were recorded using a Tobii T120 eye tracker, which samples gaze location at 120 Hz. Immediately prior to stimulus presentation, participants completed a 9-point calibration followed by a validation procedure. Data quality was then evaluated, and calibration/validation was repeated if necessary. Excluding the initial calibration/validation time, the entire task lasted 5 min 20 sec. All analyses were carried out using in-house code written in MATLAB (version 2014b; MathWorks, Natick, MA), and all statistical tests were two-tailed.

Quantification and Statistical Analysis

Eye tracking processing and analyses

Minimal preprocessing of eye tracking data was carried out. Binocular gaze data (acquired at 120 Hz, i.e., every 8.3 msec) were analyzed in raw form. The eye tracker (Tobii T120; Tobii Technologies, Danderyd, Sweden) outputs a validity code corresponding to measurement confidence for each gaze sample and each eye (ranging from 0 to 4), and only gaze samples in which both eyes were confidently identified by the software (i.e., the highest validity code of 0) were included. We excluded any individual with excessive missing data, defined as less than 50% usable data on more than 50% of trials (n = 33 individuals, resulting in 11 MZ pairs and 16 DZ pairs being excluded); the results remain essentially unchanged with both stricter and more liberal inclusion criteria.
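The sample-validity filter and the exclusion rule can be sketched as below. This assumes, hypothetically, that each participant's validity codes are stored as one integer array per eye with shape (trials × samples); the function names and array layout are illustrative, not taken from the authors' MATLAB code.

```python
import numpy as np

def usable_fraction_per_trial(validity_left, validity_right):
    """Fraction of samples per trial in which both eyes have validity code 0.

    validity_left, validity_right: integer arrays of shape (n_trials, n_samples)
    holding per-sample validity codes (0 = highest confidence, 4 = lowest).
    """
    usable = (validity_left == 0) & (validity_right == 0)
    return usable.mean(axis=1)

def exclude_participant(validity_left, validity_right,
                        min_usable=0.5, max_bad_trials=0.5):
    """True if more than `max_bad_trials` of the trials have < `min_usable` usable samples."""
    frac = usable_fraction_per_trial(validity_left, validity_right)
    bad_trials = frac < min_usable
    return bool(bad_trials.mean() > max_bad_trials)
```

With 3 s trials at 120 Hz, each trial row would hold about 360 samples; the thresholds are exposed as parameters so that stricter or more liberal criteria can be checked.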

To assess gaze similarity between pairs of individuals, we next created three distinct types of gaze heatmaps: (1) mean heatmaps that combined gaze data from all 466 participants for each image (resulting in 80 heatmaps total; see Figure 1); (2) individual heatmaps for each participant and image (resulting in 80 heatmaps/participant; see Figure 2a,b); (3) temporally binned heatmaps that divided gaze data from each 3 second stimulus presentation into 10 equally spaced 300 msec bins for each participant and image (resulting in 10 heatmaps/image/participant). Heatmaps were spatially smoothed with a Gaussian kernel of 2° full width at half maximum (FWHM).
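A minimal NumPy-only sketch of an individual heatmap follows. The relation σ = FWHM / (2√(2 ln 2)) is the standard one for a Gaussian; the pixels-per-degree factor depends on display geometry and is a hypothetical parameter here, as are the function names.

```python
import numpy as np

def fwhm_to_sigma(fwhm):
    """Convert a Gaussian full width at half maximum to its standard deviation."""
    return fwhm / (2.0 * np.sqrt(2.0 * np.log(2.0)))

def gaze_heatmap(x_px, y_px, width_px, height_px, px_per_degree, fwhm_deg=2.0):
    """2D histogram of gaze samples smoothed with a separable Gaussian kernel.

    Samples falling outside the stimulus are dropped by np.histogram2d.
    The kernel must be shorter than both image dimensions so that
    mode="same" preserves the image size.
    """
    hist, _, _ = np.histogram2d(y_px, x_px, bins=[height_px, width_px],
                                range=[[0, height_px], [0, width_px]])
    sigma = fwhm_to_sigma(fwhm_deg * px_per_degree)  # kernel width in pixels
    radius = int(np.ceil(3 * sigma))
    offsets = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (offsets / sigma) ** 2)
    kernel /= kernel.sum()  # normalized so smoothing preserves total counts
    # Separable convolution: along rows, then along columns.
    smoothed = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="same"), 1, hist)
    smoothed = np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="same"), 0, smoothed)
    return smoothed
```

A separable 1D kernel applied twice is equivalent to a 2D isotropic Gaussian and avoids any dependency beyond NumPy.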

Similarities in gaze patterns were then assessed by comparing (i.e., correlating) heatmaps either directly between two individuals (pairwise analyses) or indirectly, by comparing each of the two individuals to the average heatmap (mean-referenced analyses). To calculate correlation coefficients between heatmaps, heatmaps were vectorized (i.e., converted to a single column of values), and the Pearson correlation between these vectors was then calculated [35]. As shown in Figure S1, Pearson correlations are a straightforward way to capture similarity and dissimilarity, and they match closely with human intuition. To account for the non-normal sampling distribution of correlation coefficients, statistical tests were performed on Fisher z-transformed correlation coefficients rather than on the correlation coefficients directly.
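The vectorize-then-correlate step and the Fisher transform can be expressed in a few lines of NumPy; this is an illustrative sketch with hypothetical function names.

```python
import numpy as np

def heatmap_correlation(h1, h2):
    """Pearson correlation between two equally sized heatmaps, flattened to vectors."""
    return float(np.corrcoef(h1.ravel(), h2.ravel())[0, 1])

def fisher_z(r):
    """Fisher r-to-z transform (arctanh), applied before averaging or testing."""
    return np.arctanh(r)
```

Because the correlation is computed on flattened pixel values, it is insensitive to overall scaling and offset of either heatmap, so heatmaps built from different numbers of valid samples remain comparable.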

Establishing a baseline of gaze similarity

In order to define a baseline level of gaze similarity between unrelated individuals (Non-Twin pairs; NT), we randomly selected two unrelated individuals and calculated Pearson correlations between those two individuals’ heatmaps for each of the 80 stimuli. This sampling procedure was repeated 2000 times, with replacement, to provide a robust baseline measure of pairwise similarity between two unrelated individuals.
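The non-twin baseline can be sketched as follows. The array layout (people × images × height × width), the `twin_pair_ids` convention, and the seed are assumptions for illustration; each iteration draws a fresh random pair, so the same NT pair can recur across iterations (sampling with replacement).

```python
import numpy as np

def nt_baseline(heatmaps, twin_pair_ids, n_boot=2000, seed=0):
    """Bootstrap baseline similarity between unrelated (non-twin) individuals.

    heatmaps: array of shape (n_people, n_images, H, W).
    twin_pair_ids: length-n_people array; co-twins share the same id.
    Returns n_boot values, each the mean correlation across images for one
    randomly drawn pair of unrelated individuals.
    """
    rng = np.random.default_rng(seed)
    n_people, n_images = heatmaps.shape[:2]
    flat = heatmaps.reshape(n_people, n_images, -1)
    means = np.empty(n_boot)
    for b in range(n_boot):
        # Redraw until the two individuals are distinct and not co-twins.
        while True:
            i, j = rng.choice(n_people, size=2, replace=False)
            if twin_pair_ids[i] != twin_pair_ids[j]:
                break
        rs = [np.corrcoef(flat[i, k], flat[j, k])[0, 1] for k in range(n_images)]
        means[b] = np.mean(rs)
    return means
```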

Assessing similarity in visual exploration

For each participant, their 80 image heatmaps were averaged together to produce a single mean heatmap, on which Shannon entropy was then calculated. Entropy provides a measure of statistical randomness, such that spatially diffuse gaze to many different areas across the images (i.e., fewer peaks) results in higher entropy values, and tightly focused gaze to only certain parts of the images (e.g., the center) results in lower entropy values, as in ref [8]. Within-pair correlations of entropy values were then calculated separately for MZ and DZ pairs. Ten twin pairs (5 MZ, 5 DZ) in which either twin exhibited an entropy value more than 3 SD from the mean were excluded to produce a more normal distribution, but results were essentially unchanged with their inclusion. Heritability was calculated as described in Behavior Genetic Analyses, below.
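A sketch of the exploration measure follows, assuming the heatmap is normalized to sum to 1 and entropy is expressed in bits (log base 2). The base is not stated above; changing it rescales every value by the same constant and therefore leaves twin correlations unchanged. Function names are hypothetical.

```python
import numpy as np

def heatmap_entropy(heatmap):
    """Shannon entropy (bits) of a heatmap treated as a probability distribution."""
    p = np.asarray(heatmap, dtype=float).ravel()
    p = p / p.sum()
    p = p[p > 0]  # terms with p = 0 contribute 0 (limit of p * log p)
    return float(-(p * np.log2(p)).sum())

def exploration_score(image_heatmaps):
    """Entropy of the average of one participant's per-image heatmaps.

    image_heatmaps: array of shape (n_images, H, W).
    """
    return heatmap_entropy(np.mean(image_heatmaps, axis=0))
```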

Assessing similarity in gaze location

While analysis of entropy can determine whether heritable factors contribute to general strategies of how individuals look at these images, it does not directly assess the extent to which individuals look at the same locations in the scenes. To address this issue, we calculated the pairwise similarity (Pearson correlation [37]) between heatmaps for each of the 80 images and for each twin pair (e.g., the correlation between twins A and A’ for image 1, the correlation between twins A and A’ for image 2, and so on). A single value was then derived for each twin or NT pair by calculating the mean of the correlations across all 80 images. Mean similarity was then calculated for the MZ, DZ, and NT groups.
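Per-pair similarity can be sketched as follows, assuming (hypothetically) that each person's heatmaps are stacked in a fixed image order rather than in presentation order, which was randomized. The sketch returns both the mean correlation described above and the mean Fisher z used for statistical testing.

```python
import numpy as np

def pair_similarity(maps_a, maps_b):
    """Similarity between two people's heatmaps, summarized across images.

    maps_a, maps_b: arrays of shape (n_images, H, W) in the same image order.
    Returns (mean Pearson r across images, mean Fisher z across images).
    """
    rs = np.array([np.corrcoef(a.ravel(), b.ravel())[0, 1]
                   for a, b in zip(maps_a, maps_b)])
    return float(rs.mean()), float(np.arctanh(rs).mean())
```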

Heritability of gaze location

Given the relative pairwise nature of the above measure of gaze similarity (i.e., only a single value was derived from each twin pair, as opposed to the analysis of entropy, where an absolute measure was obtained for each individual twin), a heritability estimate could not be readily calculated using standard quantitative behavioral genetic models. Therefore, in order to derive a heritability estimate, we repeated the analysis by comparing each individual participant heatmap to the overall mean heatmap for that image (i.e., mean-referenced analysis). For example, for image 1, we calculated the similarity (Pearson correlation) between participant A and the mean heatmap for image 1, the similarity between participant A’ and the mean heatmap for image 1, the similarity between participant B and the mean heatmap for image 1, and so on. In this way, a mean-referenced value for each image and individual could be obtained, indicating the degree of similarity to a common reference. The r-to-z transformed values for each of the 80 images were then averaged together for each participant, yielding a single value. Twin correlations of these values were then calculated separately for MZ, DZ, and NT pairs, and heritability could then be readily calculated using standard quantitative behavioral genetic analyses (see Behavior Genetic Analyses, below). Note that this mean-referenced approach is a slightly less direct method for approximating gaze similarity between twins, but results obtained from this method are consistent with the more sensitive pairwise analysis.
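A sketch of the mean-referenced score and a subsequent twin correlation is given below. The double-entry correlation (each pair entered twice, in both orders) is one common way to make a twin correlation independent of which twin is listed first; it is an illustrative choice here, not necessarily the statistic computed in Mplus. Array layout and function names are assumptions.

```python
import numpy as np

def mean_referenced_scores(heatmaps):
    """Per-person similarity to the group-mean heatmap, averaged over images.

    heatmaps: array of shape (n_people, n_images, H, W).
    Returns one value per person: the mean Fisher z of the correlation between
    that person's heatmap and the group mean heatmap (which includes that person).
    """
    n_people, n_images = heatmaps.shape[:2]
    flat = heatmaps.reshape(n_people, n_images, -1)
    group_mean = flat.mean(axis=0)  # shape (n_images, H * W)
    z = np.empty((n_people, n_images))
    for p in range(n_people):
        for k in range(n_images):
            z[p, k] = np.arctanh(np.corrcoef(flat[p, k], group_mean[k])[0, 1])
    return z.mean(axis=1)

def twin_correlation(scores_twin1, scores_twin2):
    """Double-entry Pearson correlation, so the result does not depend on twin order."""
    x = np.concatenate([scores_twin1, scores_twin2])
    y = np.concatenate([scores_twin2, scores_twin1])
    return float(np.corrcoef(x, y)[0, 1])
```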

Quantifying image complexity

To further assess whether observed effects were consistently observed across individual images (and their particular image characteristics), we operationally defined image complexity as the Shannon entropy of the mean heatmap across all participants for each image separately (see Figure 1 and Figure S3; high entropy values = more broadly distributed gaze to the scene and fewer peaks), as in ref [8]. Note that, similar to the earlier analysis assessing exploration strategy, this analysis also utilizes entropy. However, it is quite distinct and has a different meaning here: rather than characterizing an individual’s pattern of gaze dispersion across all images (as in the previous analysis), it uses mean gaze dispersion across individuals simply as a proxy for image characteristics (e.g., are there a few dominant objects that most individuals gaze at, or is gaze more broadly distributed across individuals?). More “complex” images are those for which gaze is more dispersed across participants. We then assessed pairwise similarity between MZ, DZ, and NT pairs for each image as a function of image complexity (Figure S3).

Temporal similarity analyses

Although the analyses described above are based on eye movements occurring over short stimulus durations (i.e., 3 sec), they nonetheless still average over more nuanced spatiotemporal aspects of eye movements that occur on even shorter sub-second timescales (see Figure 2c). To address this, for each image and participant, we divided gaze data into 10 equally spaced 300 msec bins spanning the entire 3-sec stimulus presentation and derived heatmaps for each bin separately. As above, we then calculated the pairwise Pearson correlations for each MZ, DZ, or NT pair and image, but this time separately for each 300 msec bin (Figure 3a). Then, for each bin separately, we averaged these Fisher r-to-z transformed values across the 80 images and across twins within each group (MZ, DZ, NT). This analysis was carried out for all 80 images combined (Figure 3a), as well as separately for the 40 social and 40 non-social images (Figure 3b,c).
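The temporal binning and per-bin comparison can be sketched as follows (hypothetical names; sample times are assumed to be in milliseconds since image onset). A bin in which a participant has no samples yields a constant heatmap, for which the Pearson correlation is undefined (NaN); how such bins were handled is not specified above, so the sketch leaves them as NaN.

```python
import numpy as np

def bin_samples_by_time(t_ms, n_bins=10, bin_ms=300.0):
    """Assign each gaze sample to a 300 ms bin (0 <= t < 300 is bin 0, etc.).

    Returns integer bin indices 0..n_bins-1, or -1 for samples outside the window.
    """
    idx = np.floor(np.asarray(t_ms, dtype=float) / bin_ms).astype(int)
    idx[(idx < 0) | (idx >= n_bins)] = -1
    return idx

def binned_pair_similarity(maps_a, maps_b):
    """Mean Fisher z across images, separately for each time bin.

    maps_a, maps_b: arrays of shape (n_images, n_bins, H, W).
    Returns an array of length n_bins.
    """
    n_images, n_bins = maps_a.shape[:2]
    z = np.empty((n_images, n_bins))
    for k in range(n_images):
        for b in range(n_bins):
            r = np.corrcoef(maps_a[k, b].ravel(), maps_b[k, b].ravel())[0, 1]
            z[k, b] = np.arctanh(r)
    return z.mean(axis=0)
```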

Behavior Genetic Analyses

We calculated twin correlations and fit standard behavior genetic twin models to estimate the degree to which genetic factors influence eye gaze patterns using Mplus (Los Angeles, CA: Muthén & Muthén). These results are provided in Table S1. Given the sample sizes and twin correlations, the best fitting model (highlighted) included only two variance components: additive genetic and non-shared environmental factors. Any twin pair in which either twin had a value more than three standard deviations above or below the mean was removed from the analyses (visual exploration analysis: 5 MZ and 5 DZ pairs; gaze similarity analysis: 1 MZ and 5 DZ pairs), though we obtained comparable results when they were included.
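As a rough cross-check that is not part of the reported analyses, Falconer's classical approximations can be applied to the visual exploration twin correlations reported in the Results (rMZ = 0.42, rDZ = 0.19), where h2, c2, and e2 denote additive genetic, shared environmental, and non-shared environmental variance proportions:

```latex
\begin{aligned}
h^2 &\approx 2\,(r_{MZ} - r_{DZ}) = 2\,(0.42 - 0.19) = 0.46\\
c^2 &\approx 2\,r_{DZ} - r_{MZ} = 0.38 - 0.42 = -0.04\\
e^2 &\approx 1 - r_{MZ} = 1 - 0.42 = 0.58
\end{aligned}
```

The slightly negative shared-environment term is consistent with a model without a shared-environment component (additive genetic plus non-shared environment) fitting best. The moment-based h2 of 0.46 differs from the model-fitted value reported in the Results (h2 = 0.38), which remains the estimate of record.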

Cross-subject gaze fingerprinting

Finally, to further convey the similarity of gaze between MZ and DZ twin pairs, we adapted a recent analytic approach from functional neuroimaging known as "functional connectome fingerprinting" [38]. Using what we call cross-subject gaze fingerprinting, we ask whether, given one person's gaze data, we can accurately identify their co-twin from among a pool of 100 individuals (see Figure 4 and Figure S4 for further details). Chance performance is expected to be 1% (1 in 100) and can also be determined empirically.

In the analyses reported in the main text, Figure 4, and Figure S4, twin-to-co-twin identification performance was tested within a pool of individuals of the same zygosity (i.e., a pool of MZ twins for MZ pair identification and a pool of DZ twins for DZ pair identification). To verify that the use of different comparison pools did not confound the results, we re-ran the analysis using a common pool of both MZ and DZ individuals. This procedure yielded essentially identical results (MZ accuracy = 27.2%; DZ accuracy = 6.9%; NT accuracy = 0.8%).
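The identification step can be sketched as follows, under several assumptions: each individual's gaze is summarized as a single vector (for example, heatmaps for all images concatenated), similarity is Pearson correlation, and each pool contains the co-twin plus randomly drawn individuals from other pairs. The sampling scheme, the `extra_distractors` parameter, and the `non_twin_pairs` helper are hypothetical and are not taken from the study's code.

```python
import math
import random

def similarity(a, b):
    """Pearson correlation of two gaze vectors; -inf if either is constant, so it is never the best match."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return -math.inf
    return cov / math.sqrt(va * vb)

def fingerprint_accuracy(twin_pairs, pool_size=100, extra_distractors=(), seed=0):
    """Fraction of individuals whose co-twin is their best-matching candidate in the pool.

    twin_pairs: list of (vec_a, vec_b) gaze vectors, one entry per twin pair.
    Each pool holds the co-twin plus pool_size - 1 distractors sampled from the other
    pairs and from extra_distractors (e.g., individuals of the other zygosity).
    """
    rng = random.Random(seed)
    hits = trials = 0
    for i, (a, b) in enumerate(twin_pairs):
        others = [v for j, pair in enumerate(twin_pairs) if j != i for v in pair]
        others += list(extra_distractors)
        for target, co_twin in ((a, b), (b, a)):
            pool = rng.sample(others, min(pool_size - 1, len(others)))
            pos = rng.randrange(len(pool) + 1)
            pool.insert(pos, co_twin)  # random slot, so ties cannot systematically favor the co-twin
            scores = [similarity(target, c) for c in pool]
            best = max(range(len(pool)), key=scores.__getitem__)
            hits += best == pos
            trials += 1
    return hits / trials

def non_twin_pairs(twin_pairs):
    """Pair each first twin with the second twin of the next pair (a simple derangement)."""
    n = len(twin_pairs)
    return [(twin_pairs[i][0], twin_pairs[(i + 1) % n][1]) for i in range(n)]
```

Scoring `non_twin_pairs(pairs)` gives an empirical chance baseline, and passing, say, DZ individuals as `extra_distractors` when scoring MZ pairs roughly mimics the common MZ and DZ pool described above.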

Data and Software Availability

Data and software can be obtained from the Lead Contact on request.

Supplementary Material

Movie S1. Illustrative example #1 of highly similar gaze in MZ twin pairs. The spatiotemporal similarity of gaze within a twin pair can be contrasted with the lower similarity across twin pairs by comparing this movie with Movie S2. Also related to Figure 2 and Figure 3.

Movie S2. Illustrative example #2 of highly similar gaze in MZ twin pairs. The spatiotemporal similarity of gaze within a twin pair can be contrasted with the lower similarity across twin pairs by comparing this movie with Movie S1. Also related to Figure 2 and Figure 3.

KEY RESOURCES TABLE

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Experimental Models: Organisms/Strains | | |
| Monozygotic and Dizygotic Twins | Recruited from the Stockholm, Sweden area | N/A |
| Software and Algorithms | | |
| MATLAB | Version 2014b; MathWorks, Natick, MA | https://www.mathworks.com/products/new_products/release2014b.html |
| Tobii Studio | Tobii Technologies, Danderyd, Sweden | Discontinued; see https://www.tobiipro.com/product-listing/tobii-pro-studio/ |
| Custom MATLAB scripts | Dan Kennedy | dpk@indiana.edu |
| Other | | |
| Image Stimuli | Ref [8] | http://www-users.cs.umn.edu/~qzhao/predicting.html |
| Tobii T120 Eye Tracker | Tobii Technologies, Danderyd, Sweden | https://www.tobiipro.com/product-listing/tobii-t60-and-t120/ |
| Eye tracking data | Dan Kennedy | dpk@indiana.edu |

Highlights

- We recorded eye movements in a large sample of identical and fraternal twins.
- We find that eye movements to complex social and nonsocial scenes are heritable.
- The influence of genes manifests even in the precise temporal order of fixations.
- This suggests that genes influence the experiences individuals create for themselves.

Acknowledgments

Funding and Acknowledgements: This study was supported by seed funding from Indiana University to D.P.K. and B.M.D., the NIH (R00MH094409 and R01MH110630 to D.P.K.; K99DA040727 to P.D.Q.), grants to T.F.-Y. from Stiftelsen Riksbankens Jubileumsfond (NHS14-1802:1; Pro Futura Scientia [in collaboration with SCAS]), the Swedish Research Council (2015–03670), EU (MSC ITN 642996), the Strategic Research Area Neuroscience at Karolinska Institutet (StratNeuro) and Sällskapet Barnavård, grants to S.B. from the Swedish Research Council (523-2009-7054) and grants to P.L. from the Swedish Research Council for Health, Working Life and Welfare (project 2012-1678) and the Swedish Research Council (2011–2492). We thank Viktor Persson, Fanny Engman, Clara Holmberg, Anna Sahlström, Sigrid Elfström, Ida Hensler, Anton Gezelius, Helena Nizic, Ronja Runnström Brandt, Mathilda Eriksson, Lotta Sjöberg and Linnea Adolfsson for invaluable help with the data collection, and Ralf Kuja-Halkola, Martin Rickert, Lisa Byrge, and Lindon Eaves for valuable comments on various aspects of the analyses. We also thank Qi Zhao (National University of Singapore) for making the stimuli available for use in this study.

Sven Bölte receives royalties for textbooks and psychodiagnostic instruments from Hogrefe, Huber, Kohlhammer and UTB publishers. Bölte has in the last 3 years acted as a consultant or lecturer for Shire, Medice, Roche, Eli Lilly, Prima Psychiatry, GLGroup, System Analytic, Kompetento, Expo Medica, and Prophase. Paul Lichtenstein has served as a speaker for Medice.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Author Contributions: D.P.K. and T.F.-Y. developed the study concept and study design with support from B.M.D., S.B., and P.L. D.P.K. analyzed the eye tracking data, and P.D.Q. performed the behavioral genetic analyses. D.P.K. and T.F.-Y. drafted the initial manuscript. D.P.K., B.M.D., P.D.Q., S.B., P.L., and T.F.-Y. revised the manuscript critically and approved the final version. D.P.K. and T.F.-Y. had full access to all the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis.

Conflicts of Interest: There are no conflicts of interest related to this article.

References

1. Adolphs R, Gosselin F, Buchanan TW, Tranel D, Schyns P, Damasio AR. A mechanism for impaired fear recognition after amygdala damage. Nature. 2005;433:68–72. doi: 10.1038/nature03086.
2. Klin A, Jones W, Schultz R, Volkmar F. The enactive mind, or from actions to cognition: lessons from autism. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 2003;358:345–360. doi: 10.1098/rstb.2002.1202.
3. Young GS, Merin N, Rogers SJ, Ozonoff S. Gaze behavior and affect at 6 months: predicting clinical outcomes and language development in typically developing infants and infants at risk for autism. Developmental Science. 2009;12:798–814. doi: 10.1111/j.1467-7687.2009.00833.x.
4. Bush JC, Pantelis PC, Duchesne XM, Kagemann SA, Kennedy DP. Viewing Complex, Dynamic Scenes "Through the Eyes" of Another Person: The Gaze-Replay Paradigm. PLoS ONE. 2015;10:e0134347. doi: 10.1371/journal.pone.0134347.
5. Yu C, Smith LB. What you learn is what you see: using eye movements to study infant cross-situational word learning. Developmental Science. 2011;14:165–180. doi: 10.1111/j.1467-7687.2010.00958.x.
6. Itti L, Koch C. Computational modelling of visual attention. Nature Reviews Neuroscience. 2001;2:194–203. doi: 10.1038/35058500.
7. Cerf M, Frady EP, Koch C. Faces and text attract gaze independent of the task: experimental data and computer model. Journal of Vision. 2009;9(12):10, 1–15. doi: 10.1167/9.12.10.
8. Xu J, Jiang M, Wang S, Kankanhalli MS, Zhao Q. Predicting human gaze beyond pixels. Journal of Vision. 2014;14(1):28. doi: 10.1167/14.1.28.
9. Wang S, Jiang M, Duchesne XM, Laugeson EA, Kennedy DP, Adolphs R, Zhao Q. Atypical Visual Saliency in Autism Spectrum Disorder Quantified through Model-Based Eye Tracking. Neuron. 2015;88:604–616. doi: 10.1016/j.neuron.2015.09.042.
10. Jones W, Klin A. Attention to eyes is present but in decline in 2–6-month-old infants later diagnosed with autism. Nature. 2013;504:427–431. doi: 10.1038/nature12715.
11. Tseng PH, Cameron IG, Pari G, Reynolds JN, Munoz DP, Itti L. High-throughput classification of clinical populations from natural viewing eye movements. Journal of Neurology. 2013;260:275–284. doi: 10.1007/s00415-012-6631-2.
12. Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Archives of General Psychiatry. 2002;59:809–816. doi: 10.1001/archpsyc.59.9.809.
13. Sasson NJ, Pinkham AE, Weittenhiller LP, Faso DJ, Simpson C. Context Effects on Facial Affect Recognition in Schizophrenia and Autism: Behavioral and Eye-Tracking Evidence. Schizophrenia Bulletin. 2016;42:675–683. doi: 10.1093/schbul/sbv176.
14. Constantino JN, Kennon-McGill S, Weichselbaum C, Marrus N, Haider A, Glowinski AL, Gillespie S, Klaiman C, Klin A, Jones W. Infant viewing of social scenes is under genetic control and is atypical in autism. Nature. 2017;547:340–344. doi: 10.1038/nature22999.
15. Borji A, Parks D, Itti L. Complementary effects of gaze direction and early saliency in guiding fixations during free viewing. Journal of Vision. 2014;14(13):3. doi: 10.1167/14.13.3.
16. Borji A, Itti L. Defending Yarbus: eye movements reveal observers' task. Journal of Vision. 2014;14(3):29. doi: 10.1167/14.3.29.
17. Emery NJ. The eyes have it: the neuroethology, function and evolution of social gaze. Neuroscience and Biobehavioral Reviews. 2000;24:581–604. doi: 10.1016/s0149-7634(00)00025-7.
18. Senju A, Csibra G. Gaze following in human infants depends on communicative signals. Current Biology. 2008;18:668. doi: 10.1016/j.cub.2008.03.059.
19. Falck-Ytter T, Carlström C, Johansson M. Eye contact modulates cognitive processing differently in children with autism. Child Development. 2015;86. doi: 10.1111/cdev.12273.
20. von Hofsten C, Rosander K. Development of smooth pursuit tracking in young infants. Vision Research. 1997;37:1799–1810. doi: 10.1016/s0042-6989(96)00332-x.
21. Gredebäck G, Örnkloo H, von Hofsten C. The development of reactive saccade latencies. Experimental Brain Research. 2006;173:159–164. doi: 10.1007/s00221-006-0376-z.
22. Bailey A, Le Couteur A, Gottesman I, Bolton P, Simonoff E, Yuzda E, Rutter M. Autism as a strongly genetic disorder: evidence from a British twin study. Psychological Medicine. 1995;25:63–77. doi: 10.1017/s0033291700028099.
23. Sandin S, Lichtenstein P, Kuja-Halkola R, Larsson H, Hultman CM, Reichenberg A. The Familial Risk of Autism. JAMA. 2014;311:1770–1777. doi: 10.1001/jama.2014.4144.
24. Tick B, Bolton P, Happé F, Rutter M, Rijsdijk F. Heritability of autism spectrum disorders: a meta-analysis of twin studies. Journal of Child Psychology and Psychiatry, and Allied Disciplines. 2016;57:585–595. doi: 10.1111/jcpp.12499.
25. Pierce K, Conant D, Hazin R, Stoner R, Desmond J. Preference for Geometric Patterns Early in Life As a Risk Factor for Autism. Archives of General Psychiatry. 2011;68:101–109. doi: 10.1001/archgenpsychiatry.2010.113.
26. Kendler KS, Baker JH. Genetic influences on measures of the environment: a systematic review. Psychological Medicine. 2007;37:615–626. doi: 10.1017/S0033291706009524.
27. Polderman TJ, Benyamin B, De Leeuw CA, Sullivan PF, Van Bochoven A, Visscher PM, Posthuma D. Meta-analysis of the heritability of human traits based on fifty years of twin studies. Nature Genetics. 2015. doi: 10.1038/ng.3285.
28. Turkheimer E. Three Laws of Behavior Genetics and What They Mean. Current Directions in Psychological Science. 2000;9:160–164.
29. Plomin R, DeFries JC, Knopik VS, Neiderhiser J. Behavioral genetics. Palgrave Macmillan; 2013.
30. Plomin R, DeFries JC, Loehlin JC. Genotype-environment interaction and correlation in the analysis of human behavior. Psychological Bulletin. 1977;84:309.
31. Roberts R. Some concepts and methods in quantitative genetics. In: Behavior-genetic analysis. New York: McGraw-Hill; 1967. pp. 214–257.
32. Eley TC, Bolton D, O'Connor TG, Perrin S, Smith P, Plomin R. A twin study of anxiety-related behaviours in pre-school children. Journal of Child Psychology and Psychiatry. 2003;44:945–960. doi: 10.1111/1469-7610.00179.
33. Cheek JM, Buss AH. Shyness and sociability. Journal of Personality and Social Psychology. 1981;41:330–339.
34. Itti L. New Eye-Tracking Techniques May Revolutionize Mental Health Screening. Neuron. 2015;88:442–444. doi: 10.1016/j.neuron.2015.10.033.
35. Falck-Ytter T, von Hofsten C, Gillberg C, Fernell E. Visualization and Analysis of Eye Movement Data from Children with Typical and Atypical Development. Journal of Autism and Developmental Disorders. 2013;43:2249–2258. doi: 10.1007/s10803-013-1776-0.
36. Anckarsäter H, Lundström S, Kollberg L, Kerekes N, Palm C, Carlström E, Långström N, Magnusson PKE, Halldner L, Bölte S, et al. The Child and Adolescent Twin Study in Sweden (CATSS). Twin Research and Human Genetics. 2011;14:495–508. doi: 10.1375/twin.14.6.495.
37. Holmqvist K, Nyström M, Andersson R, Dewhurst R, Jarodzka H, van de Weijer J. Eye Tracking: A comprehensive guide to methods and measures. Oxford, UK: Oxford University Press; 2011.
38. Finn ES, Shen X, Scheinost D, Rosenberg MD, Huang J, Chun MM, Papademetris X, Constable RT. Functional connectome fingerprinting: identifying individuals using patterns of brain connectivity. Nature Neuroscience. 2015. doi: 10.1038/nn.4135.
