Key Points

Question

Do the practice patterns and clinical outcomes of general surgeons differ by type of residency training?

Findings

In this cohort study of 3638 general surgeons trained at nonuniversity- and university-based residency programs, significant differences were noted in the types of procedures performed and in the proportion performed in the inpatient vs outpatient setting, but no statistically significant differences in clinical outcomes were observed among surgeons with similar practice patterns operating within the same hospital.

Meaning

Surgeons trained in nonuniversity- and university-based residency programs have distinct practice patterns. When compared within the same clinical setting, surgeons from both training backgrounds achieve similar clinical outcomes.

Abstract

Importance

Important metrics of residency program success include the clinical outcomes achieved by trainees after transitioning to practice. Previous studies have shown significant differences in reported training experiences of general surgery residents at nonuniversity-based residency (NUBR) and university-based residency (UBR) programs.

Objective

To examine the differences in practice patterns and clinical outcomes between surgeons trained in NUBR and those trained in UBR programs.

Design, Setting, and Participants

This observational cohort study linked the claims data of patients who underwent general surgery procedures in New York, Florida, and Pennsylvania between January 1, 2012, and December 31, 2013, to demographic and training information of surgeons in the American Medical Association Physician Masterfile. Patients who underwent a qualifying procedure were grouped by surgeon. Practice pattern analysis was performed on 3638 surgeons and 1 237 621 patients, representing 214 residency programs. Clinical outcomes analysis was performed on 2301 surgeons and 312 584 patients. Data analysis was conducted from February 1, 2017, to July 31, 2017.

Exposures

NUBR or UBR training status.

Main Outcomes and Measures

Inpatient mortality, complications, and prolonged length of stay.

Results

No significant differences were observed between the NUBR-trained surgeons and UBR-trained surgeons in age (mean, 53.3 years vs 53.7 years), sex (female, 18.2% vs 16.9%), or years of clinical experience (mean, 16.5 years vs 16.5 years). Overall, NUBR-trained surgeons performed more procedures than UBR-trained surgeons (median [interquartile range (IQR)], 328 [93-661] vs 164 [49-444]; P < .001) and performed a greater proportion of procedures in the outpatient setting (risk difference, 6.5%; 95% CI, 6.4 to 6.7; P < .001). Before matching, the mean proportion of patients with documented inpatient mortality was lower for NUBR-trained surgeons than for UBR-trained surgeons (risk difference, −1.01%; 95% CI, −1.41 to −0.61; P < .001). The mean proportions of patients with complications (risk difference, −3.17%; 95% CI, −4.21 to −2.13; P < .001) and prolonged length of stay (risk difference, −1.89%; 95% CI, −2.79 to −0.98; P < .001) were also lower for NUBR-trained surgeons. After matching, no significant differences in patient mortality, complications, or prolonged length of stay were found between NUBR- and UBR-trained surgeons.

Conclusions and Relevance

Surgeons trained in NUBR and UBR programs have distinct practice patterns. After controlling for patient, procedure, and hospital factors, no differences were observed in the inpatient outcomes between the 2 groups.

This cohort study analyzes the claims data of patients who underwent general surgery and the practice and training information of surgeons in the American Medical Association Physician Masterfile to determine the association of surgical residency training with patient outcomes.

Introduction

Over the past decade, the increased national focus on safety and quality in medicine has had a substantial influence on graduate medical education. The introduction of the Accreditation Council for Graduate Medical Education Next Accreditation System and the Institute of Medicine’s 2014 call for implementing pay-for-performance methods in graduate medical education funding highlight the need for new methods to define and measure the success of medical training programs. In recent years, researchers have started closely examining the association of provider training with patient outcomes.1,2,3

Previous studies have found differences in the experiences of trainees between university-based residency (UBR) and nonuniversity-based residency (NUBR) programs (also called hospital-based or independent training programs). A cross-sectional national survey of categorical general surgery residents found that trainees in NUBR programs were more satisfied with their operative experience during training than residents in UBR programs. Trainees in NUBR programs also reported a more supportive clinical learning environment.4 Additional surveys found that graduating chief residents of NUBR programs were more likely to enter general surgery practice rather than pursue fellowship training.5,6

Despite these documented differences in training experience, little is known about the association of training setting with future practice patterns or patient outcomes. Our goal in this study was to examine the association of training in NUBR vs UBR programs with practice patterns and outcomes achieved by program graduates. We matched NUBR- to UBR-trained surgeons within the same hospitals to remove hospital influences on outcomes.

Methods

We conducted a retrospective observational study using a data set linking all-payer claims from New York,7 Florida,8 and Pennsylvania9 between January 1, 2012, and December 31, 2013, to physician demographic and training information from the American Medical Association (AMA) Physician Masterfile.10 These states were selected because their claims data include identifiers that allow patient claims to be linked to hospital and physician information. This study was deemed exempt from continuing review by the institutional review board of the University of Pennsylvania. Data analysis was conducted from February 1, 2017, to July 31, 2017.

General surgery residency programs were classified as either UBR or NUBR. Assignment was based on review of the sponsoring institution listed on the Accreditation Council for Graduate Medical Education website11 and was supplemented with previously collected data on program affiliations, program size, and geographic region.12 Community-based programs with affiliations to universities were classified as NUBR. Surgeons were classified as NUBR- or UBR-trained on the basis of their residency program listed in the AMA Masterfile. Surgeon age, sex, and year of training completion were also abstracted. Surgeon experience was defined as year of training completion subtracted from year of operation.

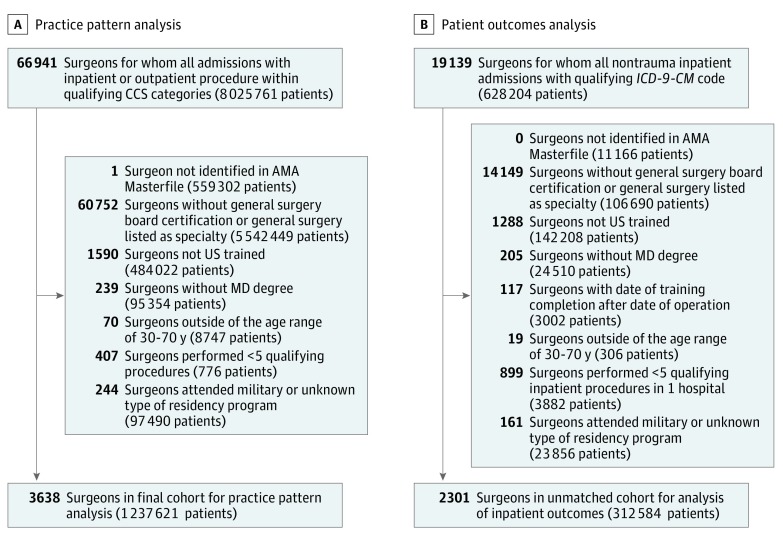

Surgeons were excluded if they could not be identified in the AMA Masterfile, did not meet the criteria for general surgery training, did not attend an allopathic program identified as an NUBR or a UBR, did not train within the United States, or did not perform at least 5 operations within the 2-year study time frame. Overall practice patterns of surgeons trained at UBR and NUBR programs were compared using both inpatient and outpatient procedures. Patient outcomes were then analyzed using a set of inpatient operations, because complications in the outpatient setting are difficult to capture in administrative data. The Figure details the exclusion criteria for the overall and inpatient cohorts. See eAppendix 1 in the Supplement for a list of all codes used to define the cohort and complications.

Figure. Flow Diagram for Surgeon and Patient Exclusion Criteria.

Inclusion and exclusion criteria for practice pattern analysis (A) and patient outcome analysis (B). AMA indicates American Medical Association; CCS, Clinical Classifications Software; ICD-9-CM, International Classification of Diseases, Ninth Revision, Clinical Modification; MD, doctor of medicine.

Practice Pattern Analysis

To examine practice patterns, we selected patients who underwent a general surgery procedure and grouped them by surgeon. Procedures representing the scope of general surgery13 were defined using Agency for Healthcare Research and Quality Clinical Classifications Software (CCS) categories, which map both International Classification of Diseases, Ninth Revision, Clinical Modification procedure codes and Current Procedural Terminology (CPT) codes.14

We compared the characteristics of NUBR- and UBR-trained surgeons. For each type of surgeon, we calculated the contribution of each CCS category to overall practice, and we compared the proportions of procedures performed in the outpatient and inpatient settings, both overall and within each CCS category, over the study time frame.
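The two per-category quantities described above (a category's share of a group's practice and the outpatient fraction within that category) can be sketched as follows. This is a minimal illustration, assuming each CCS category is summarized as a hypothetical (outpatient, inpatient) count pair; the function name `practice_breakdown` is ours, not the authors'. With the NUBR counts from Table 2, it reproduces the published dialysis access and colonoscopy values.

```python
def practice_breakdown(category_counts, total_patients):
    """Share of a surgeon group's practice in each CCS category and the
    outpatient fraction within that category (both as percentages).

    category_counts: dict mapping a CCS category name to a hypothetical
    (outpatient_count, inpatient_count) tuple.
    total_patients: all patients treated by that surgeon group.
    """
    breakdown = {}
    for category, (outpatient, inpatient) in category_counts.items():
        n = outpatient + inpatient
        breakdown[category] = {
            # contribution of this category to the group's overall practice
            "share_pct": 100 * n / total_patients,
            # proportion of this category's procedures done as outpatient cases
            "outpatient_pct": 100 * outpatient / n,
        }
    return breakdown


# NUBR-trained surgeons, counts taken from Table 2
nubr = practice_breakdown(
    {
        "Dialysis access": (4528, 1955),
        "Colonoscopy or proctoscopy and biopsy": (88076, 6456),
    },
    total_patients=273691 + 116291,
)
print(round(nubr["Dialysis access"]["share_pct"], 2))       # 1.66
print(round(nubr["Dialysis access"]["outpatient_pct"], 1))  # 69.8
```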

Outcomes Analysis

To examine clinical outcomes, we selected patients using the International Classification of Diseases, Ninth Revision, Clinical Modification codes that mapped to a narrower set of operations typically performed by general surgeons in the inpatient setting. Patients were classified as having undergone 1 of 44 general surgical operations during a nontrauma inpatient admission. Operations were categorized as complex or essential on the basis of the Surgical Council on Resident Education Curriculum Outline.15

Patient sociodemographic and clinical characteristics were abstracted from the claims data. Comorbidities were defined using Elixhauser indices.16 Patients were classified by admission type (emergent, urgent, or elective) and grouped by surgeon. Surgeon volume was calculated as total operations, complex operations, and essential operations.

The primary outcomes were inpatient mortality, postoperative complications, and prolonged length of stay (PLOS). Complications were identified using International Classification of Diseases, Ninth Revision, Clinical Modification diagnosis codes and collapsed into a binary variable indicating 1 or more complications. Prolonged length of stay was defined as a length of stay greater than the 75th percentile for the same operation at the same hospital. PLOS is a well-defined measure that captures inefficiencies in care and complications that result in prolonged hospitalization.17,18
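To make the hospital- and operation-specific PLOS definition concrete, here is a minimal sketch. The record keys (`hospital`, `operation`, `los`) are hypothetical, and the percentile definition (Python's `statistics.quantiles` with `method="inclusive"`) is an assumption, since the source does not say how the 75th percentile was computed.

```python
from collections import defaultdict
from statistics import quantiles


def flag_plos(stays):
    """Return True for each stay whose length of stay (LOS) exceeds the 75th
    percentile of LOS for the same operation at the same hospital.

    stays: list of dicts with hypothetical keys 'hospital', 'operation', 'los'.
    """
    groups = defaultdict(list)
    for stay in stays:
        groups[(stay["hospital"], stay["operation"])].append(stay["los"])

    thresholds = {}
    for key, values in groups.items():
        if len(values) < 2:
            # A single stay cannot exceed its own group's percentile.
            thresholds[key] = values[0]
        else:
            # 'inclusive' interpolates within the observed range (an assumption;
            # the source does not state which percentile definition was used).
            thresholds[key] = quantiles(values, n=4, method="inclusive")[2]

    return [stay["los"] > thresholds[(stay["hospital"], stay["operation"])]
            for stay in stays]


stays = [
    {"hospital": "A", "operation": "colectomy", "los": 2},
    {"hospital": "A", "operation": "colectomy", "los": 3},
    {"hospital": "A", "operation": "colectomy", "los": 4},
    {"hospital": "A", "operation": "colectomy", "los": 10},
    {"hospital": "B", "operation": "colectomy", "los": 5},
]
print(flag_plos(stays))  # [False, False, False, True, False]
```

The 5-day stay at hospital B is not flagged even though it exceeds hospital A's threshold (5.5 days), which is the point of a hospital-specific cutoff.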

For each surgeon, we calculated the mean of every patient-level measure, including outcomes, to obtain surgeon-level covariates and outcomes. Because patients treated by the same surgeon are not independent observations, aggregating patient data to the surgeon level ensured that the precision of our estimates was not overstated.19
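The aggregation step can be sketched as follows: each surgeon becomes a single observation whose values are the means of that surgeon's patient records, so binary outcomes become per-surgeon proportions. The `surgeon_id` key and field names are hypothetical.

```python
from collections import defaultdict


def surgeon_level_means(patients, fields):
    """Collapse patient records to one row per surgeon by averaging each field.

    patients: iterable of dicts with a hypothetical 'surgeon_id' key plus
    numeric fields (binary outcomes such as 'died' become proportions).
    """
    totals = defaultdict(lambda: defaultdict(float))
    counts = defaultdict(int)
    for patient in patients:
        sid = patient["surgeon_id"]
        counts[sid] += 1
        for field in fields:
            totals[sid][field] += patient[field]
    return {sid: {field: totals[sid][field] / counts[sid] for field in fields}
            for sid in counts}


patients = [
    {"surgeon_id": "s1", "died": 0, "complication": 1},
    {"surgeon_id": "s1", "died": 1, "complication": 1},
    {"surgeon_id": "s2", "died": 0, "complication": 0},
]
print(surgeon_level_means(patients, ["died", "complication"]))
# {'s1': {'died': 0.5, 'complication': 1.0}, 's2': {'died': 0.0, 'complication': 0.0}}
```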

Matching Strategy

We used matching to compare clinical outcomes of NUBR- and UBR-trained surgeons. We chose matching over hierarchical modeling because it allowed us to verify directly that the exposed group (NUBR) and the control group (UBR) were balanced on measured covariates while comparing outcomes of NUBR- and UBR-trained surgeons within the same hospital. Exact matching of NUBR- to UBR-trained surgeons within hospitals ensured high comparability between the 2 groups by restricting the analytic sample to surgeons with different residency types practicing in the same clinical environment. Moreover, exact matching within hospitals controlled for both measured and unmeasured hospital factors that may contribute to patient-level outcomes. Surgeons who operated in multiple hospitals were assigned to the hospital in which they performed the most operations. Finally, matching separated the design stage from the outcome analysis stage, reducing the risk of problems from multiple testing.
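Two consequences of the within-hospital design can be sketched directly: each surgeon is assigned to a single primary hospital, and only hospitals with both NUBR- and UBR-trained surgeons can contribute pairs. The sketch below is a hypothetical illustration, not the authors' code; the tie-breaking rule in `primary_hospital` is our assumption. The pair count it returns is only an upper bound, because cardinality matching also imposes balance constraints that can drop further pairs.

```python
from collections import Counter


def primary_hospital(operation_hospitals):
    """Hospital where a surgeon performed the most operations.

    operation_hospitals: one hospital id per operation. Ties go to the
    hospital seen first (an assumption; the source does not say).
    """
    return Counter(operation_hospitals).most_common(1)[0][0]


def max_within_hospital_pairs(surgeons):
    """Upper bound on 1:1 NUBR-UBR pairs when both members must share a hospital.

    surgeons: iterable of (hospital_id, training_type) tuples, with
    training_type 'NUBR' or 'UBR'.
    """
    counts = Counter(surgeons)
    hospitals = {hospital for hospital, _ in counts}
    return sum(min(counts[(h, "NUBR")], counts[(h, "UBR")]) for h in hospitals)


print(primary_hospital(["H2", "H1", "H2"]))  # H2
surgeons = [("H1", "NUBR"), ("H1", "UBR"), ("H1", "UBR"),
            ("H2", "UBR"),                       # no NUBR peer: cannot be matched
            ("H3", "NUBR"), ("H3", "NUBR"), ("H3", "UBR")]
print(max_within_hospital_pairs(surgeons))  # 2
```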

We used optimal cardinality matching to pair surgeons who were most similar on the basis of more than 100 covariates. Cardinality matching returns the largest matched sample that satisfies prespecified covariate balance constraints.20 Surgeon-level covariates included sex, age, years of experience, and operative volume (essential, complex, and total). Patient-level covariates (demographics, comorbidities, and procedure types) were calculated at the surgeon level as the mean value for patients cared for by that surgeon or as the percentage of that surgeon's patients with a given characteristic. We used fine balance to achieve nearly exact balance on years of experience, primary surgical specialty, and secondary surgical specialty.21 See eAppendix 2 in the Supplement for the complete matching algorithm.
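Fine balance constrains the marginal distribution of a nominal covariate in the matched groups rather than requiring agreement within each pair. A minimal sketch of how that property can be checked, using hypothetical specialty labels: the deviation is 0 under exact fine balance even when individual pairs disagree.

```python
from collections import Counter


def fine_balance_deviation(treated_values, control_values):
    """Total absolute difference in category counts of a nominal covariate
    between the matched treated (NUBR) and control (UBR) groups.
    0 means exact fine balance; small values mean near-fine balance.
    """
    treated, control = Counter(treated_values), Counter(control_values)
    return sum(abs(treated[k] - control[k]) for k in treated.keys() | control.keys())


# Hypothetical matched pairs: (NUBR surgeon's specialty, UBR surgeon's specialty)
pairs = [("general", "vascular"), ("vascular", "general"), ("general", "general")]
nubr_side = [p[0] for p in pairs]
ubr_side = [p[1] for p in pairs]
print(fine_balance_deviation(nubr_side, ubr_side))  # 0
```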

A planned sensitivity analysis was performed to assess the magnitude of bias from unmeasured confounders that would need to be present to alter the conclusions.22,23 In the context of the sensitivity analysis, we used secondary board-certification status as a proxy for fellowship training. Secondary board certification was defined as surgeons with both general surgery and any other board certification listed in the AMA Masterfile.

Statistical Analysis

To assess the quality of the matches, we computed the standardized difference for each covariate, defined as the difference in means between the matched groups divided by the pooled SD before matching.24,25,26 We allowed a maximum absolute standardized difference of 0.10 (one-tenth of an SD) in the matched cohorts.24,25,26,27
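A minimal sketch of the standardized difference, assuming the pooled SD is the square root of the average of the 2 group variances (a common convention; the source does not state the formula, although this one reproduces the surgeon age entries in Table 3). Note that the before-matching SDs are used as the denominator in both the before and after comparisons.

```python
from math import sqrt


def standardized_difference(mean_nubr, mean_ubr, sd_nubr_before, sd_ubr_before):
    """Difference in group means divided by the pooled SD before matching.
    Pooled SD taken as sqrt((s1^2 + s0^2) / 2), an assumed convention."""
    pooled_sd = sqrt((sd_nubr_before ** 2 + sd_ubr_before ** 2) / 2)
    return (mean_nubr - mean_ubr) / pooled_sd


# Surgeon age from Table 3; the before-matching SDs are used in both cases.
before = standardized_difference(52.63, 53.27, 9.18, 9.57)
after = standardized_difference(52.43, 52.86, 9.18, 9.57)
print(round(before, 2), round(after, 2))  # -0.07 -0.05
print(abs(after) <= 0.10)                  # True: meets the balance threshold
```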

Descriptive comparisons were made using the unpaired 2-tailed t test, the 2-sample proportion test, and the Wilcoxon rank test. We tested for significant differences in outcomes using the Wilcoxon rank test. All hypothesis tests were 2-sided, with significance set at P < .05. Statistical analyses were conducted using Stata/IC, version 13.1 (StataCorp LLC) and R software (R Foundation for Statistical Computing). All matching analyses were conducted using the Designmatch package28 in R, version 3.3.2.

Results

Analysis of Practice Patterns

We identified 3638 surgeons who operated on 1 237 621 patients with a qualifying inpatient or outpatient procedure between January 1, 2012, and December 31, 2013, in New York, Florida, and Pennsylvania. Of these surgeons, 885 (24.3%) trained in NUBR programs. NUBR-trained and UBR-trained surgeons did not differ significantly in age (mean, 53.3 years vs 53.7 years; mean difference, −0.37 years; 95% CI, −1.12 to 0.38), sex (female, 18.2% vs 16.9%; risk difference, 0.01; 95% CI, −0.02 to 0.04), or years of clinical experience (mean, 16.5 years vs 16.5 years; mean difference, 0.05 years; 95% CI, −0.75 to 0.85). A total of 214 residency programs were represented in our sample, of which 98 (45.7%) were NUBR. Table 1 displays the geographic and size distributions of the training programs.

Table 1. Characteristics of University- and Nonuniversity-Based Residency Programs Included in Practice Pattern Analysis.

| Variable | Nonuniversity-Based Residency: Programs, No. (%) | Nonuniversity-Based Residency: Trained Surgeons, No. (%) | University-Based Residency: Programs, No. (%) | University-Based Residency: Trained Surgeons, No. (%) |
|---|---|---|---|---|
| Total | 98 | 885 | 116 | 2753 |
| Region | | | | |
| Northeast | 36 (36.7) | 406 (45.8) | 35 (30.1) | 1596 (57.9) |
| South | 25 (25.5) | 280 (31.6) | 44 (37.9) | 702 (25.4) |
| Midwest | 26 (26.5) | 166 (18.7) | 24 (20.6) | 357 (12.9) |
| West | 11 (11.2) | 33 (3.7) | 13 (11.2) | 98 (3.5) |
| Size | | | | |
| ≤3 | 62 (63.2) | 365 (41.2) | 15 (12.9) | 176 (6.4) |
| 4-6 | 34 (34.6) | 454 (51.2) | 85 (73.2) | 2000 (72.6) |
| ≥7 | 2 (2.0) | 66 (7.4) | 16 (13.7) | 577 (20.9) |

Overall practice patterns by surgeon training type and the breakdown of inpatient vs outpatient practice by procedure category are shown in Table 2. Surgeons trained in NUBR programs performed a greater proportion of procedures in the outpatient setting (risk difference, 6.5%; 95% CI, 6.4-6.7; P < .001). The contribution of each CCS category to overall practice differed significantly between NUBR-trained and UBR-trained surgeons for 21 of 23 CCS categories. The largest difference was in colonoscopy or proctoscopy and biopsy (risk difference, 11.6; 95% CI, 11.4-11.8). NUBR-trained surgeons performed more procedures per surgeon than UBR-trained surgeons overall (median [interquartile range (IQR)], 328 [93-661] vs 164 [49-444]; P < .001), in the outpatient setting (median [IQR], 195.5 [41-482] vs 80 [16-296]; P < .001), and in the inpatient setting (median [IQR], 106.5 [38-192.5] vs 75 [22-169.5]; P < .001).
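As a rough reproduction check of the outpatient risk difference, the sketch below applies a standard Wald (normal-approximation) interval to the "Total patients treated" row of Table 2. This is an assumption on our part: the source does not name the interval method, and this calculation treats procedures as independent, ignoring clustering by surgeon. It nonetheless recovers the reported 6.5 (6.4 to 6.7).

```python
from math import sqrt


def risk_difference_wald(x1, n1, x0, n0, z=1.96):
    """Risk difference p1 - p0 with a Wald (normal-approximation) 95% CI."""
    p1, p0 = x1 / n1, x0 / n0
    diff = p1 - p0
    se = sqrt(p1 * (1 - p1) / n1 + p0 * (1 - p0) / n0)
    return diff, diff - z * se, diff + z * se


# Outpatient procedures / all procedures, "Total patients treated" row of Table 2
rd, lo, hi = risk_difference_wald(273691, 273691 + 116291, 539375, 539375 + 308264)
print(f"{100 * rd:.1f} ({100 * lo:.1f} to {100 * hi:.1f})")  # 6.5 (6.4 to 6.7)
```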

Table 2. Practice Patterns of Nonuniversity- vs University-Based Residency Trained Surgeons.

| CCS Procedure Category | NUBR-Trained Surgeons: Outpatient, No. (%) | NUBR-Trained Surgeons: Inpatient, No. (%) | NUBR-Trained Surgeons: Practice Breakdown by CCS Procedure, % (95% CI) | UBR-Trained Surgeons: Outpatient, No. (%) | UBR-Trained Surgeons: Inpatient, No. (%) | UBR-Trained Surgeons: Practice Breakdown by CCS Procedure, % (95% CI) |
|---|---|---|---|---|---|---|
| Total patients treated | 273 691 (70.2) | 116 291 (29.8) | 100 | 539 375 (63.6) | 308 264 (36.4) | 100 |
| Thyroidectomy and other endocrine procedures | 4277 (73.5) | 1545 (26.5) | 1.49 (1.45-1.53) | 18 009 (68.4) | 8316 (31.6) | 3.11 (3.07-3.14) |
| Dialysis access | 4528 (69.8) | 1955 (30.2) | 1.66 (1.62-1.70) | 28 932 (79.0) | 7686 (21.0) | 4.32 (4.28-4.36) |
| Spleen procedures | 41 (4.7) | 833 (95.3) | 0.22 (0.21-0.24) | 36 (1.1) | 3313 (98.9) | 0.4 (0.38-0.41) |
| Hemic and lymphatic procedures | 12 665 (71.0) | 5179 (29.0) | 4.58 (4.51-4.64) | 38 721 (59.1) | 26 814 (40.9) | 7.73 (7.67-7.79) |
| Upper GI endoscopy | 21 705 (72.9) | 8089 (27.1) | 7.64 (7.56-7.72) | 27 795 (55.4) | 22 340 (44.6) | 5.91 (5.86-5.96) |
| Other enterostomy procedures | 808 (10.2) | 7098 (89.8) | 2.03 (1.98-2.07) | 1800 (9.0) | 18 120 (91.0) | 2.35 (2.32-2.38) |
| Gastrectomy (partial and total) | 183 (4.4) | 3933 (95.6) | 1.06 (1.02-1.09) | 242 (1.9) | 12 170 (98.1) | 1.46 (1.44-1.49) |
| Small bowel resection | 21 (0.5) | 4244 (99.5) | 1.09 (1.06-1.13) | 25 (0.2) | 10 566 (99.8) | 1.25 (1.23-1.27) |
| Colonoscopy or proctoscopy and biopsy | 88 076 (93.2) | 6456 (6.8) | 24.24 (24.11-24.37) | 94 009 (87.7) | 13 190 (12.3) | 12.65 (12.58-12.72) |
| Colorectal resection | 1218 (6.5) | 17 452 (93.5) | 4.79 (4.72-4.85) | 1481 (4.2) | 33 752 (95.8) | 4.16 (4.11-4.20) |
| Appendectomy | 3759 (26.9) | 10 224 (73.1) | 3.59 (3.53-3.64) | 8282 (26.1) | 23 457 (73.9) | 3.74 (3.70-3.78) |
| Hemorrhoid procedures | 8971 (94.8) | 493 (5.2) | 2.43 (2.38-2.48) | 11 617 (92.7) | 910 (7.3) | 1.48 (1.45-1.5) |
| Cholecystectomy and common duct exploration | 30 704 (63.1) | 17 962 (36.9) | 12.48 (12.38-12.58) | 61 793 (58.6) | 43 672 (41.4) | 12.44 (12.37-12.51) |
| Inguinal and femoral hernia repair | 24 156 (88.5) | 3153 (11.5) | 7.00 (6.92-7.08) | 55 776 (88.2) | 7480 (11.8) | 7.46 (7.41-7.52) |
| Other hernia repair | 23 907 (59.9) | 15 996 (40.1) | 10.23 (10.14-10.33) | 47 936 (54.6) | 39 869 (45.4) | 10.36 (10.29-10.42) |
| Laparoscopy (GI) | 2416 (37.1) | 4103 (62.9) | 1.67 (1.63-1.71) | 6148 (36.2) | 10 813 (63.8) | 2.00 (1.97-2.03) |
| Exploratory laparotomy | 46 (2.4) | 1859 (97.6) | 0.49 (0.47-0.51) | 99 (1.5) | 6302 (98.5) | 0.76 (0.74-0.77) |
| Excision; lysis peritoneal adhesions | 657 (5.6) | 11 136 (94.4) | 3.02 (2.97-3.08) | 1244 (4.5) | 26 124 (95.5) | 3.23 (3.19-3.27) |
| Other operative GI therapeutic procedures | 15 070 (29.6) | 35 808 (70.4) | 13.05 (12.94-13.15) | 23 605 (20.4) | 91 852 (79.6) | 13.62 (13.55-13.69) |
| Procedures on breast | 25 660 (87.1) | 3809 (12.9) | 7.56 (7.47-7.64) | 73 578 (85.5) | 12 457 (14.5) | 10.15 (10.09-10.21) |
| Skin and subcutaneous incision and drainage | 2136 (33.1) | 4313 (66.9) | 1.65 (1.61-1.69) | 5476 (32.3) | 11 498 (67.7) | 2.00 (1.97-2.03) |
| Debridement of wound, infection, and burn | 6262 (59.9) | 4191 (40.1) | 2.68 (2.63-2.73) | 23 448 (60.7) | 15 163 (39.3) | 4.56 (4.51-4.60) |
| Excision of skin lesion | 26 355 (96.0) | 1088 (4.0) | 7.04 (6.96-7.12) | 66 290 (95.1) | 3437 (4.9) | 8.23 (8.17-8.28) |
| | Outpatient | Inpatient | Total | Outpatient | Inpatient | Total |
| Patients per surgeon, median (IQR) | 195.5 (41-482) | 106.5 (38-192.5) | 328 (93-661) | 80 (16-296) | 75 (22-169.5) | 164 (49-444) |

Abbreviations: CCS, Clinical Classifications Software; GI, gastrointestinal; IQR, interquartile range; NUBR, nonuniversity-based residency; UBR, university-based residency.

Analysis of Inpatient Outcomes

A total of 2301 surgeons and 312 584 patients were identified for analysis of inpatient outcomes. Of these surgeons, 662 (28.7%) were trained at NUBR programs, representing 95 134 patients (30.4%). A total of 209 residency programs were represented, 96 (45.9%) of which were NUBR.

Representative characteristics of NUBR- and UBR-trained surgeons and their associated hospitals and patients are displayed in Table 3. Before matching, several differences in surgeon and patient characteristics were noted, as indicated by a standardized difference greater than 0.10. These differences included number of essential operations performed per surgeon, number of patient comorbidities, proportion of elective admissions, and proportion of commercial insurance. Hospital setting and size characteristics varied between the 2 groups. Differences in distribution of operation type were also seen prior to matching (eTable 1 in the Supplement).

Table 3. Selected Surgeon and Patient Characteristics Before and After Matching^a

| Characteristic | Before Matching: NUBR | Before Matching: UBR | Before Matching: Standardized Difference^b | After Matching: NUBR | After Matching: UBR | After Matching: Standardized Difference^b |
|---|---|---|---|---|---|---|
| Surgeon, No. | 662 | 1639 | | 494 | 494 | |
| Age, y | 52.63 (9.18) | 53.27 (9.57) | −0.07 | 52.43 (9.4) | 52.86 (9.31) | −0.05 |
| Clinical experience, y | 16.1 (9.56) | 16.42 (10.17) | −0.03 | 15.84 (9.86) | 16.09 (9.73) | −0.02 |
| All operations, No. | 145.23 (126.37) | 133.92 (131) | 0.09 | 144.12 (123.11) | 147.5 (136.27) | −0.03 |
| Complex operations, No. | 30.94 (64.07) | 33.29 (73.9) | −0.03 | 31.01 (62.88) | 31.58 (63.19) | −0.01 |
| Essential operations, No. | 121.96 (104.01) | 109.52 (105.47) | 0.12 | 120.77 (101) | 123.21 (114.01) | −0.02 |
| Female sex, No. (proportion) | 124 (0.19) | 312 (0.19) | −0.01 | 100 (0.2) | 92 (0.19) | 0.04 |
| Patient^c | | | | | | |
| Age, y | 56.43 (6.31) | 56.07 (7.34) | 0.05 | 56.35 (6.33) | 56.35 (6.36) | 0 |
| Comorbidities, No. | 2.12 (0.64) | 2.24 (0.74) | −0.17 | 2.16 (0.65) | 2.17 (0.64) | −0.01 |
| Median income, US$ | 52 449 (12 031) | 53 550 (11 629) | −0.09 | 53 115.63 (12 143.61) | 53 228.09 (12 340.47) | −0.01 |
| Proportion of patients per surgeon | | | | | | |
| Male sex | 0.40 (0.14) | 0.40 (0.18) | 0.01 | 0.4 (0.15) | 0.4 (0.15) | −0.01 |
| White race/ethnicity | 0.79 (0.22) | 0.74 (0.25) | 0.21 | 0.77 (0.23) | 0.76 (0.23) | 0.04 |
| Hispanic race/ethnicity | 0.12 (0.18) | 0.10 (0.15) | 0.10 | 0.1 (0.15) | 0.11 (0.15) | −0.01 |
| Admission type | | | | | | |
| Elective | 0.42 (0.30) | 0.47 (0.33) | −0.17 | 0.43 (0.31) | 0.43 (0.31) | −0.01 |
| Urgent | 0.09 (0.16) | 0.08 (0.15) | 0.06 | 0.09 (0.16) | 0.09 (0.15) | 0.01 |
| Emergent | 0.49 (0.3) | 0.44 (0.32) | 0.14 | 0.48 (0.3) | 0.48 (0.31) | 0.01 |
| Insurance type | | | | | | |
| Commercial | 0.42 (0.17) | 0.45 (0.19) | −0.18 | 0.43 (0.17) | 0.43 (0.18) | −0.01 |
| Medicare | 0.38 (0.15) | 0.37 (0.17) | 0.06 | 0.38 (0.16) | 0.37 (0.16) | 0.03 |
| Medicaid | 0.11 (0.1) | 0.10 (0.1) | 0.10 | 0.10 (0.09) | 0.11 (0.11) | −0.05 |
| Self-pay | 0.05 (0.08) | 0.05 (0.09) | 0.03 | 0.05 (0.09) | 0.05 (0.09) | −0.01 |
| Other | 0.04 (0.06) | 0.03 (0.05) | 0.18 | 0.04 (0.06) | 0.03 (0.05) | 0.03 |
| Hospital^c | | | | | | |
| Setting, No. (proportion) | | | | | | |
| Not-for-profit, urban | 345 (0.80) | 1053 (0.87) | −0.19 | 283 (0.85) | 283 (0.85) | 0 |
| Not-for-profit, rural | 26 (0.06) | 39 (0.03) | 0.13 | 16 (0.05) | 16 (0.05) | 0 |
| Investor owned | 60 (0.14) | 118 (0.10) | 0.13 | 33 (0.10) | 33 (0.10) | 0 |
| Size, No. (proportion) | | | | | | |
| Small | 49 (0.07) | 64 (0.04) | 0.15 | 21 (0.04) | 21 (0.04) | 0 |
| Medium | 236 (0.36) | 522 (0.32) | 0.08 | 168 (0.34) | 168 (0.34) | 0 |
| Large | 375 (0.57) | 1048 (0.64) | −0.15 | 304 (0.62) | 304 (0.62) | 0 |

Abbreviations: NUBR, nonuniversity-based residency; UBR, university-based residency.

^a Data are mean (SD) values unless otherwise indicated.

^b In units of standard deviation.

^c Because data in this table are presented at the surgeon level, the number of patients in each cohort and the number of hospitals were not included.

Before matching, all outcomes differed significantly by residency training type (Table 4). The mean proportion of patients who died was lower for NUBR-trained surgeons (risk difference, −1.01%; 95% CI, −1.41 to −0.61; P < .001). The mean proportion of patients who experienced a complication was lower for NUBR-trained surgeons (risk difference, −3.17%; 95% CI, −4.21 to −2.13; P < .001), as was the mean proportion of patients who experienced PLOS (risk difference, −1.89%; 95% CI, −2.79 to −0.98; P < .001).

Table 4. Outcomes at Surgeon Level Before and After Matching.

| Outcome | NUBR, Mean (SD) | UBR, Mean (SD) | Mean Risk Difference (95% CI) | P Value | Median Risk Difference (95% CI) | P Value |
|---|---|---|---|---|---|---|
| Before matching | | | | | | |
| Complication | 14.15 (10.20) | 17.32 (14.82) | −3.17 (−4.21 to −2.13) | <.001 | −1.52 (−2.32 to −0.72) | <.001 |
| PLOS | 19.54 (8.97) | 21.43 (12.78) | −1.89 (−2.79 to −0.98) | <.001 | −0.87 (−1.56 to −0.17) | .01 |
| Mortality | 1.80 (3.32) | 2.80 (6.54) | −1.01 (−1.41 to −0.61) | <.001 | −0.002 (−0.005 to 0.002) | .93 |
| After matching | | | | | | |
| Complication | 14.69 (10.35) | 14.50 (9.68) | 0.20 (−1.04 to 1.43) | .76 | 0.02 (−1.09 to 1.11) | .98 |
| PLOS | 20.02 (8.94) | 19.61 (9.03) | 0.40 (−1.76 to 1.57) | .50 | 0.30 (−0.68 to 1.30) | .55 |
| Mortality | 1.94 (3.63) | 1.81 (2.71) | 0.13 (−0.28 to 0.53) | .53 | 0.07 (−0.17 to 0.32) | .55 |

Abbreviations: NUBR, nonuniversity-based residency; PLOS, prolonged length of stay; UBR, university-based residency.

We then used an optimal 1:1 match to identify 494 pairs of NUBR-trained and UBR-trained surgeons in 216 hospitals. Both surgeons in each matched pair operated within the same hospital. The matched sample included 70 404 patients operated on by NUBR-trained surgeons and 72 010 patients operated on by UBR-trained surgeons. After matching, all surgeon characteristics were well balanced, with every standardized difference below 0.10 (Table 3). Marginal distributions of primary and secondary surgical specialty were nearly identical. Distributions of patient comorbidities and operation types were also highly comparable (eTable 1 in the Supplement).

After matching, clinically equivalent outcomes were seen between surgeons trained in NUBR and UBR programs. No significant difference was observed in the mean proportion of patients who died (risk difference, 0.13%; 95% CI, −0.28 to 0.53; P = .53), had a complication (risk difference, 0.20%; 95% CI, −1.04 to 1.43; P = .76), or experienced PLOS (risk difference, 0.40%; 95% CI, −1.76 to 1.57; P = .50).

Next, we performed a sensitivity analysis to explore whether an unobserved confounder could have masked a stronger association than the one observed in the matched sample.29 For complications and PLOS, we tested whether the matched difference in rates was significantly smaller than a difference of 5%; for mortality, we tested whether the difference in proportions was significantly smaller than 2.5%. The sensitivity analysis indicated that the test of equivalence for mortality would remain statistically significant even in the presence of a confounder that increased the odds of having surgery performed by an NUBR-trained surgeon by 601%. This finding indicates that the observed null result is unlikely to be a product of unobserved confounding. For the 2 other outcomes, the level of confounding required to mask an association was approximately one-third of that for mortality. (See eAppendix 3 in the Supplement for a detailed explanation of the sensitivity analysis.)
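The authors' equivalence-test sensitivity analysis is described in eAppendix 3 and is not reproduced here. To illustrate what the sensitivity parameter means, the sketch below computes the simplest Rosenbaum-type bound, for a sign test in matched pairs: if an unobserved confounder can multiply the within-pair odds of treatment by at most Γ (a 601% increase in odds corresponds to Γ = 7.01), the worst-case chance that a discordant pair favors the treated member rises from 1/2 to Γ/(1 + Γ), and the P value is bounded by the corresponding binomial tail. The function name and example counts are hypothetical.

```python
from math import comb


def sign_test_upper_p(t, n, gamma):
    """Rosenbaum upper bound on the one-sided P value of a sign test.

    t: number of discordant matched pairs favoring the treated member.
    n: total number of discordant pairs.
    gamma: maximum factor by which an unobserved confounder can multiply
    the within-pair odds of treatment (gamma = 1 means no hidden bias).
    """
    p_plus = gamma / (1 + gamma)  # worst-case chance a pair favors treated
    return sum(comb(n, k) * p_plus ** k * (1 - p_plus) ** (n - k)
               for k in range(t, n + 1))


gamma_from_text = 1 + 601 / 100  # a 601% increase in odds -> gamma = 7.01
print(round(gamma_from_text, 2))  # 7.01
print(sign_test_upper_p(9, 10, 1.0))  # 0.0107421875 (ordinary sign test)
print(sign_test_upper_p(9, 10, 2.0) > sign_test_upper_p(9, 10, 1.0))  # True
```

Larger Γ values always produce a larger (less favorable) bound; a conclusion is called insensitive up to the largest Γ at which the bound stays below the significance level.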

As a proxy for fellowship status, we examined the association between the training type and additional board certification, allowing us to use sensitivity analysis to determine if confounding due to differences in additional training beyond residency would have changed our findings. This test was not significant: The proportion of NUBR-trained surgeons with additional certification was 16.9%, compared with the 18.2% proportion of UBR-trained surgeons (risk difference, 1.3%; 95% CI, −2.1 to 4.7). The difference in additional board certification would correspond to a 10% change in odds of being operated on by an NUBR-trained surgeon, a percentage that is much less than the threshold of the sensitivity analysis.
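One way to see where a figure of about 10% can come from (our reading; the authors' calculation is in eAppendix 3): the odds ratio is symmetric, so the odds ratio relating additional certification to training type equals the odds ratio relating training type to certification. Applied to the reported certification rates, this gives an odds ratio of about 1.09.

```python
def odds(p):
    """Convert a proportion to odds."""
    return p / (1 - p)


# Additional board certification: 18.2% (UBR) vs 16.9% (NUBR)
odds_ratio = odds(0.182) / odds(0.169)
print(round(odds_ratio, 2))  # 1.09, i.e., roughly a 9%-10% change in odds
print(odds_ratio < 1 + 601 / 100)  # True: far below the mortality threshold
```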

Discussion

As the training system continues to adapt to the changing surgical needs of the population, additional tools are necessary to evaluate the quality of residency programs and performance of the overall graduate medical education system. Evaluating surgical training programs using patient outcomes after trainees transition to independent practice is 1 proposed method. Our study examined the association of training in UBR or NUBR programs with overall practice patterns and inpatient outcomes using matching techniques to control for patient and hospital factors.

Previous studies have shown differences in the experiences reported by trainees in NUBR and UBR programs. Our results extend this work by examining the performance of graduates from each type of training program. We found differences in the distribution of procedures performed by surgeons trained in each type of program: NUBR-trained surgeons performed a higher volume of cases and devoted a greater proportion of their case mix to outpatient procedures and to endoscopy.

General surgery residency programs in the United States are currently facing the challenge of producing enough qualified graduates to meet a predicted national shortage of general surgeons,30 a shortage compounded by increasing subspecialization throughout the field.31,32 Due to the increasing complexity of procedures and patients as well as the aging population, the health care system needs both general surgeons and those with specialized areas of focus.33 Our results support the existing literature on the important role that surgeons trained in both NUBR and UBR programs play in our health care system. Both NUBR and UBR programs train surgeons to perform essential and complex operations, supporting the proposal for NUBR programs to serve as a repository of clinical educators skilled in the breadth of general surgery and as a source of future general surgeons.34

Before matching, we noted differences in the types of inpatient procedures performed, the practice settings, and the types of patients treated by NUBR- and UBR-trained surgeons. For example, a greater proportion of NUBR-trained surgeons practiced in small hospitals, not-for-profit rural hospitals, and investor-owned hospitals. Patients treated by NUBR-trained surgeons had fewer comorbidities, and a greater proportion of these patients were white.

After controlling for the differences in practice patterns among NUBR- and UBR-trained surgeons, we found that, when operating in the same clinical environment, both types of surgeons have clinically equivalent patient outcomes across a wide range of procedures. To our knowledge, no other studies have examined the clinical outcomes achieved by NUBR- and UBR-trained surgeons after transitioning to independent practice. The outcome rates observed in this study for both groups are consistent with those reported in other studies examining clinical outcomes after transition to practice.2

By adjusting for observed confounders using matching rather than regression, we were able to better isolate the association of training setting with outcomes. These results support the effectiveness of a surgical training system built within different practice settings. Based on the study findings, both pathways produce surgeons who can perform essential and complex operations on a variety of patient types while achieving similar clinical results.

Limitations

Our study has some limitations, including its retrospective design and the limitations inherent to observational studies. The practice pattern analysis of NUBR- and UBR-trained surgeons was conducted at the level of the individual surgeon and does not account for possible clustering. It is possible that we failed to detect a statistically significant difference in outcomes because of the study sample size. However, given the small magnitude of the differences observed in the matched outcomes across all measures, the study findings remain clinically relevant. As in other observational studies that use matching, our findings may not generalize to other settings. By limiting our analysis of inpatient outcomes to paired surgeons practicing within the same hospital, we excluded surgeons practicing in hospitals with only 1 surgeon or with surgeons from only 1 type of training background. This study was not intended to assess outcomes at the individual resident or program level but rather to evaluate the overall success of the training system in producing surgeons who deliver high-quality care. We were able to examine the clinical results only of surgeons who completed training and transitioned to independent practice. Surgeons were excluded if their training type could not be determined. Fellowship data were not available, but our analysis of additional board certification indicated that the different certification rates observed between the 2 groups were not likely to mask a true difference in outcomes. Finally, our statistical methods controlled only for observed covariates. However, the overall sensitivity analysis indicated that our results were unlikely to be caused by an unobserved confounder.
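A sensitivity analysis of this kind asks how strong an unobserved confounder would have to be to change the conclusions. The following minimal Python sketch shows one standard version, Rosenbaum's bounds for the sign (McNemar) test in matched pairs with a binary outcome. It is offered only as an illustration of the idea: the study's own analysis (eAppendix 3; references 22 and 29) addresses equivalence as well as difference and may differ in detail, and the counts below are illustrative, not study data. Here Γ (`gamma`) bounds how much 2 matched units may differ in their odds of exposure because of an unobserved confounder; Γ = 1 means no hidden bias.

```python
from math import comb


def binom_upper_tail(n: int, k: int, p: float) -> float:
    """Return P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k, n + 1))


def rosenbaum_sign_test_bounds(t: int, d: int, gamma: float) -> tuple[float, float]:
    """Lower and upper bounds on the one-sided sign-test (McNemar) p-value.

    t     -- discordant pairs in which the exposed unit (here, hypothetically,
             the NUBR-trained surgeon's side of the pair) had the adverse event
    d     -- total number of discordant pairs
    gamma -- sensitivity parameter (>= 1) bounding hidden bias within a pair
    """
    if gamma < 1:
        raise ValueError("gamma must be >= 1")
    if not 0 <= t <= d:
        raise ValueError("t must satisfy 0 <= t <= d")
    # Under the null of no effect, each discordant pair assigns the event to the
    # exposed unit with probability between 1/(1+gamma) and gamma/(1+gamma).
    p_low, p_high = 1 / (1 + gamma), gamma / (1 + gamma)
    return binom_upper_tail(d, t, p_low), binom_upper_tail(d, t, p_high)


# Illustrative counts only (not study data): event on the exposed side in 3 of 4 discordant pairs
for gamma in (1.0, 3.0):
    low, high = rosenbaum_sign_test_bounds(t=3, d=4, gamma=gamma)
    print(f"gamma={gamma}: p-value between {low:.4f} and {high:.4f}")
```

At Γ = 1 the 2 bounds coincide with the ordinary sign-test p-value, and they spread apart as Γ grows. For a finding of no difference, the lower bound is the informative one: if it stays above the significance threshold at a given Γ, hidden bias of that size could not have masked a statistically significant difference in this test.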

Conclusions

Surgeons trained in NUBR and UBR programs play distinct roles in the delivery of surgical care and, when operating in the same hospitals, achieve similar clinical outcomes. This finding is encouraging in the face of ongoing discussions regarding the best training system and setting to produce highly competent, independent general surgeons who can meet the evolving surgical needs of the population.

Supplementary Materials

eAppendix 1. Diagnosis and Procedure Codes Used in Analysis

eAppendix 2. Complete Matching Algorithm

eAppendix 3. Sensitivity Analysis

eTable 1. Complete Results of Match

eFigure 1. Distribution of Procedures per Surgeon in Practice Pattern Analysis

eFigure 2. Distribution of Procedures per Surgeon in Outcomes Analysis

References

1. Asch DA, Nicholson S, Srinivas S, Herrin J, Epstein AJ. Evaluating obstetrical residency programs using patient outcomes. JAMA. 2009;302(12):1277-1283.

2. Bansal N, Simmons KD, Epstein AJ, Morris JB, Kelz RR. Using patient outcomes to evaluate general surgery residency program performance. JAMA Surg. 2016;151(2):111-119.

3. Zaheer S, Pimentel SD, Simmons KD, et al. Comparing international and United States undergraduate medical education and surgical outcomes using a refined balance matching methodology. Ann Surg. 2017;265(5):916-922.

4. Sullivan MC, Sue G, Bucholz E, et al. Effect of program type on the training experiences of 248 university, community, and US military-based general surgery residencies. J Am Coll Surg. 2012;214(1):53-60.

5. Adra SW, Trickey AW, Crosby ME, Kurtzman SH, Friedell ML, Reines HD. General surgery vs fellowship: the role of the independent academic medical center. J Surg Educ. 2012;69(6):740-745.

6. Friedell ML, VanderMeer TJ, Cheatham ML, et al. Perceptions of graduating general surgery chief residents: are they confident in their training? J Am Coll Surg. 2014;218(4):695-703.

7. Bureau of Health Informatics Office of Quality and Health Safety. Statewide Planning and Research Cooperative System (SPARCS). New York State Department of Health. https://www.health.ny.gov/statistics/sparcs/. Published 2016. Accessed April 10, 2017.

8. Agency for Health Care Administration. Hospital Inpatient Discharge Data, Florida Center for Health Information and Policy Analysis. http://www.floridahealthfinder.gov/researchers/researchers.aspx. Accessed April 10, 2017.

9. Pennsylvania Health Care Cost Containment Council. Pennsylvania uniform claims and billing form reporting manual. http://www.phc4.org/dept/dc/adobe/ub04inpatient.pdf. Published April 2007. Accessed April 4, 2017.

10. American Medical Association. AMA Physician Masterfile. http://www.ama-assn.org/ama/pub/about-ama/physician-data-resources/physician-masterfile.page. Published 2015. Accessed April 10, 2017.

11. Accreditation Council for Graduate Medical Education. Accreditation Data System (ADS). https://apps.acgme.org/ads/Public/Programs/Search. Published 2017. Accessed May 5, 2017.

12. Sellers MM, Fordham M, Miller CW, Ko CY, Kelz RR. The quality in-training initiative: giving residents data to learn clinical effectiveness. J Surg Educ. 2017;(July):S1931-7204(17)30300-8.

13. Decker MR, Dodgion CM, Kwok AC, et al. Specialization and the current practices of general surgeons. J Am Coll Surg. 2014;218(1):8-15.

14. Healthcare Cost and Utilization Project Clinical Classifications Software. Clinical Classifications Software (CCS) for ICD-9-CM. http://www.hcup-us.ahrq.gov/toolssoftware/ccs/ccs.jsp. Published March 2017. Accessed April 10, 2017.

15. Surgical Council on Resident Education. SCORE Curriculum Outline for General Surgery 2013–2014. Philadelphia, PA: Surgical Council on Resident Education; 2014.

16. Elixhauser A, Steiner C, Harris DR, Coffey RM. Comorbidity measures for use with administrative data. Med Care. 1998;36(1):8-27.

17. Silber JH, Rosenbaum PR, Koziol LF, Sutaria N, Marsh RR, Even-Shoshan O. Conditional length of stay. Health Serv Res. 1999;34(1, pt 2):349-363.

18. Silber JH, Rosenbaum PR, Even-Shoshan O, et al. Length of stay, conditional length of stay, and prolonged stay in pediatric asthma. Health Serv Res. 2003;38(3):867-886.

19. Angrist JD, Pischke J-S. Mostly Harmless Econometrics: An Empiricist’s Companion. Princeton, NJ: Princeton University Press; 2009.

20. Zubizarreta JR, Paredes RD, Rosenbaum PR. Matching for balance, pairing for heterogeneity in an observational study of the effectiveness of for-profit and not-for-profit high schools in Chile. Ann Appl Stat. 2014;8(1):204-231. doi:10.1214/13-AOAS713

21. Rosenbaum PR, Ross RN, Silber JH. Minimum distance matched sampling with fine balance in an observational study of treatment for ovarian cancer. J Am Stat Assoc. 2007;102(477):75-83. doi:10.1198/016214506000001059

22. Rosenbaum PR. Sensitivity analysis for m-estimates, tests, and confidence intervals in matched observational studies. Biometrics. 2007;63(2):456-464.

23. Heller R, Rosenbaum PR, Small DS. Split samples and design sensitivity in observational studies. J Am Stat Assoc. 2009;104(487):1090-1101. doi:10.1198/jasa.2009.tm08338

24. Silber JH, Rosenbaum PR, Trudeau ME, et al. Multivariate matching and bias reduction in the surgical outcomes study. Med Care. 2001;39(10):1048-1064.

25. Rosenbaum PR, Rubin DB. Constructing a control group using multivariate matched sampling methods. Am Stat. 1985;39(1):33-38. doi:10.2307/2683903

26. Cochran WG, Rubin DB. Controlling bias in observational studies: a review. Sankhya: Indian J Stat Ser A. 1973;35(4):417-446.

27. Rosenbaum PR. Design of Observational Studies. New York, NY: Springer; 2010.

28. Zubizarreta JR, Kilcioglu C. Designmatch: construction of optimally matched samples for randomized experiments and observational studies that are balanced by design. R package version 0.1.1 [computer software]. https://CRAN.R-project.org/package=designmatch. Accessed February 1, 2016.

29. Rosenbaum PR, Silber JH. Sensitivity analysis for equivalence and difference in an observational study of neonatal intensive care units. J Am Stat Assoc. 2009;104(486):501-511. doi:10.1198/jasa.2009.0016

30. Are C. Workforce needs and demands in surgery. Surg Clin North Am. 2016;96(1):95-113.

31. Bruns SD, Davis BR, Demirjian AN, et al; Society for Surgery of the Alimentary Tract Resident Education Committee. The subspecialization of surgery: a paradigm shift. J Gastrointest Surg. 2014;18(8):1523-1531.

32. Stitzenberg KB, Sheldon GF. Progressive specialization within general surgery: adding to the complexity of workforce planning. J Am Coll Surg. 2005;201(6):925-932.

33. Decker MR, Bronson NW, Greenberg CC, Dolan JP, Kent KC, Hunter JG. The general surgery job market: analysis of current demand for general surgeons and their specialized skills. J Am Coll Surg. 2013;217(6):1133-1139.

34. Joshi ART, Trickey AW, Kallies K, Jarman B, Dort J, Sidwell R. Characteristics of independent academic medical center faculty. J Surg Educ. 2016;73(6):e48-e53.
