Abstract

Tracking accuracy for the CyberKnife's Synchrony system is commonly evaluated using a film‐based verification method. We have evaluated a verification system that uses a webcam and a printed calibrated grid to verify tracking accuracy over three different motion patterns. A box with an attached printed calibrated grid and four fiducial markers was attached to the motion phantom. A target marker was positioned at the grid's center. The box was set up using the other three markers. Target tracking accuracy was evaluated under three conditions: 1) stationary; 2) sinusoidal motion with different amplitudes of 5, 10, 15, and 20 mm for the same cycle of 4 s and different cycles of 2, 4, 6, and 8 s with the same amplitude of 15 mm; and 3) irregular breathing patterns in six human volunteers breathing normally. Infrared markers were placed on the volunteers’ abdomens, and their trajectories were used to simulate the target motion. All tests were performed with one‐dimensional motion in craniocaudal direction. The webcam captured the grid's motion and a laser beam was used to simulate the CyberKnife's beam. Tracking error was defined as the difference between the grid's center and the laser beam. With a stationary target, mean tracking error was measured at 0.4 mm. For sinusoidal motion, tracking error was less than 2 mm for any amplitude and breathing cycle. For the volunteers’ breathing patterns, the mean tracking error range was 0.78‐1.67 mm. Therefore, accurate lesion targeting requires individual quality assurance for each patient.

PACS number(s): 87.55.D‐, 87.55.km, 87.55.Qr, 87.56.Fc

Keywords: CyberKnife, synchrony, motion tracking, quality assurance, verification system

I. INTRODUCTION

Respiratory motion varies substantially between patients, (1) and the consequent tumor motion during radiotherapy can compromise the accuracy of dose delivery and produce interplay and blurring effects. 2 , 3 Thus, various methods have been devised to compensate for motion, including dynamic multileaf collimator (DMLC) tracking (4) and the use of a robotic arm with six axes. (5) The CyberKnife system (Accuray Inc., Sunnyvale, CA) uses image guidance with two pairs of X‐ray tubes and a flat‐panel detector to detect bone anatomy, fiducial marker position, and tumor position according to image densities. (6) Although the overall system‐targeting precision is less than 1 mm 7 , 8 the accuracy of the robotic system is highly variable, as reflected by the precision of production and the efficacy of control.

The American Association of Physicists in Medicine Task Group 135 introduced tolerance values and quality assurance (QA) intervals for motion tracking using film. (9) Accordingly, the company Accuray provided a QA phantom, which moves in a sinusoidal pattern, and Nioutsikou et al. (10) evaluated the tracking accuracy of the CyberKnife system using film and gamma analyses during lung treatments. In addition, Chan et al. (11) evaluated the accuracy and sensitivity of four‐dimensional dose calculations in the treatment planning system of CyberKnife, and made comparisons with measured film data from tracking irradiation. Although tracking system accuracy with film irradiation may be achievable as an overall tracking result, tracking errors of each beam remain difficult to determine. Hence, motion phantoms that can move in all directions to simulate tumor motion are used to predict motion‐tracking accuracy. Moreover, several previous studies report detection of irradiation targets motions using charge‐coupled device (CCD) cameras. 12 , 13 However, this method requires attachment of the camera to coaxial fixation jigs, which are not commercially available. (13) Thus, to determine the precision of the CyberKnife system, Wong et al. (14) attached LED markers to the CyberKnife head and to the target, and simultaneously evaluated precision during irradiation.

The aim of the present study was to evaluate tracking accuracy of the CyberKnife system using a webcam and a printed calibrated grid over various motion patterns, including those of the stationary position, sinusoidal motion, and human respiratory motion.

II. MATERIALS AND METHODS

The CyberKnife system is a 6 MV linear accelerator that is mounted on a robotic arm. The system includes the Synchrony Respiratory Tracking System v.9.6, which makes real‐time adjustments to the robotic arm position to follow the moving target. 15 , 16 , 17 The target locating system (TLS) is composed of two ceiling‐mounted diagnostic X‐ray sources paired with amorphous silicon detectors, and acquires live digital radiographic images of the target, bony anatomy, and fiducial markers. The Synchrony system enables three‐dimensional real‐time tracking of tumors that move with respiration. (18) The system uses infrared markers that are continually tracked by three flash‐point cameras, which generate 26 images per second. The TLS acquires two orthogonal X‐ray images within a preset interval to locate three‐dimensional positions of fiducial markers, which are implanted into, or are present close to, the tumor. The Synchrony system then creates correlation models between positions of external infrared markers and fiducial markers, and tracks the tumor according to predictions that are based on infrared marker positions. This system allows tumors that are subject to respiratory motion to be treated with an accuracy of 2 mm or less during normal respiration. 19 , 20

A. Phantom configuration

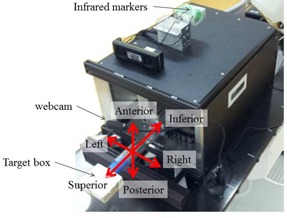

We used the motion phantom from Enomoto BeA Co., Ltd. (Gifu, Japan), which simulates respiratory motion by moving in axial, sagittal, and coronal planes, and in oblique axes (Fig. 1). (21) Infrared markers were placed on the phantom, and a webcam (BSW20KM14BK, Buffalo Inc., Aichi, Japan) was used to capture video signals in AVI file format at 30 frames/s. The webcam produces a pixel matrix of , and was placed on the treatment couch to afford a field of view that was unobstructed by the CyberKnife instrument. The target comprised a box covered with a printed calibrated grid, and was attached to the end of the motion phantom. The box had four fiducial markers (Fig. 2(a)), three of which were used to align the motion phantom with translations and rotations, which were detected using the fiducial tracking method in the Synchrony system. The tolerance for alignment of the motion phantom was 0.1 mm for translation and 0.1° for rotation. The other fiducial marker was used as a target.

Figure 1.

Motion phantom movable in all directions. A webcam on a flexible arm was placed on the treatment couch and the target box was attached to the end of the motion phantom.

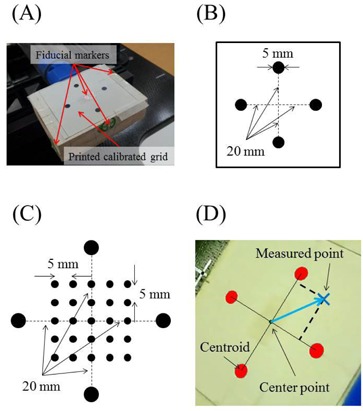

Figure 2.

A printed calibrated grid on the target box (a). Four fiducial markers were attached to the box on the top, the two sides, and in the center of four black solid circles. (b) Four black solid circles were printed on white paper. Circles of 5 mm in diameter were placed 40 mm from the other circle centers. (c) A printed calibrated grid was used to verify coordinate recognition accuracy. Four large solid black circles and 25 small solid black circles were printed on a sheet. The four large circles were used to create the coordinate system on the sheet. The 25 small circles were used to verify detection accuracy. (d) Four solid red circles were detected by the software.

B. Validation of coordinate recognition accuracy

The software was developed using Delphi2007 (Borland Software Corporation, Austin, TX) to analyze tracking accuracy and to measure the detection accuracy of printed markers on the calibrated grid. Four marker points that were identical to the outer circles in Fig. 2(b) surrounded 25 smaller calibration points on the sheet (Fig. 2(c)), and were evenly spaced with 5 mm intervals. Centroids for each of the four black solid circles were calculated in pixels on the captured image using the software, and distances from the centroid of each of the four black solid circles to the center point were calculated in pixels. The center point was defined as the origin of the coordinate system (Fig. 2(d)). Distance in mm was divided by distance in pixels, giving a pixel size of 0.24. The centroids of the four black solid circles were considered vectors from the center point, and locations were calculated for all 25 marker points. Distances between these and expected locations were recorded as software calibration errors.

C. Treatment planning using the fiducial tracking method

Planning CT scans of the motion phantom were acquired with a slice thickness of 1.25 mm, and phantom CT images were imported into the treatment planning system (TPS; Multiplan 4.6.0, Accuray, Inc., Sunnyvale, CA) via DICOM. The four fiducial markers were then delineated using the TPS, the beam isocenter was localized at the base of the designated fiducial marker, and an isocentric irradiation plan was devised. To record tracking using the webcam, irradiation beams to the target from the anterior direction for irradiation were chosen in the TPS. The vertical position of the source was less than 800 mm in accordance with the International Electrotechnical Commission coordinate system. The red laser was used as a substitute for the actual beam, and was hence available to visualize the red laser and the printed calibrated grid from the webcam. Incidence angles from the beam to the target ranged between 31° and 54°. A total of 20 fields were used and planned beams at the time of each field were changed to 240 monitor units (MUs) to maximize tracking times.

D. Checking tracking accuracy for different breathing patterns

Baseline data for tracking accuracy were obtained in the motionless state. Subsequently, sinusoidal motion patterns were evaluated in the superior‐inferior (SI) direction, and were assessed at amplitudes of 5, 10, 15, and 20 mm over 4 s with cycles of 2, 4, 6, and 8 s at an amplitude of 15 mm. Subsequently, six volunteers from cases (A) to (F) were recruited under appropriate institutional guidance from our department for evaluations of tracking accuracy during normal breathing. It was not possible to capture target motion using implanted fiducial markers because the volunteers were not patients. Thus, infrared markers were positioned on volunteers’ abdomens and positions were recorded using the Synchrony system. The largest degree of motion of the infrared marker in the anterior‐posterior (AP) direction was recorded and tumor trajectory in the SI direction was assumed equal. Hence, tumor trajectories were not identical to real tumor motion. Similarly, to estimate the abdominal motion of the phantom, the trajectory in the SI direction was also assumed in the AP direction, and the infrared markers moved with the tumor. Volunteers’ breathing patterns (Fig. 3) were recorded for approximately 3 min. Although the CyberKnife system performs motion tracking during longer treatment times, warranting the use of longer wave forms, these may limit the motion trajectory for the actual treatment. Accordingly, the motion of the phantom was limited and data for the upper motion limit were imported. Mean amplitudes and cycles of breathing patterns were recorded for all volunteers (Table 1).

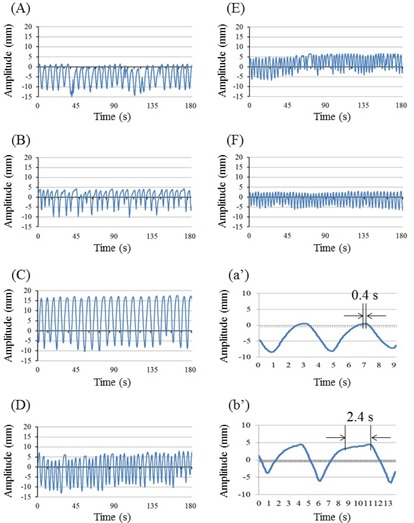

Figure 3.

Breathing patterns of the six volunteers (A‐F). The y‐axis represents the breathing motion amplitude in millimeters. The expiratory and inspiratory phases increase and decrease Y coordinates, respectively. The x‐axis represents the recording time in seconds. Cases (A‐F) correspond with respective cases in Table 1, and (a’) and (b’) depict detailed patterns of (A) and (B), respectively, over two cycles. The distance between the two black arrows is a motionless pause; (a’) negligible pause between phases; (b’) measurable pause between phases.

Table 1.

Mean amplitudes and breathing cycles of six volunteer cases

| Amplitude (mm) | Breathing cycle (s) | |||

|---|---|---|---|---|

| Case |

|

|

||

| A |

|

|

||

| B |

|

|

||

| C |

|

|

||

| D |

|

|

||

| E |

|

|

||

| F |

|

|

The center point of the four solid black coordinate markers (Fig. 2(d)) was used as the target, and the CyberKnife red laser beam was used to represent the radiation beam center. The coincidence of laser beam and radiation beam centers in monthly QA tests was . To calculate tracking accuracy, deviations between the red laser point and the center point were calculated by the software. These measurements were performed for stationary, sinusoidal motion, and breathing patterns of the six volunteers. Tracking errors were recorded at 16 frames/s in log files in text format. During detection of the red laser point by the software, the laser remained on for 1 to 2 s after the actual beam was turned off. Thus, to avoid false detection of tracking errors in motion cases, errors for the last 2 s of each beam were excluded from analyses. Cumulative histograms of the tracking errors of the motion pattern were calculated using log file data, and tracking errors were subdivided into magnitudes of 1, 2, 3, 4, and 5 mm and were presented in histograms.

III. RESULTS

A. Validation of coordinate recognition accuracy by the software

The software located the 25 points on the printed calibrated grid and coordinate recognition accuracy was verified. When a region of was defined to evaluate tracking errors, deviations between the expected point and the measured point were (, ), which was sufficient to evaluate tracking errors with the present software. Maximum deviations occurred at the four corners.

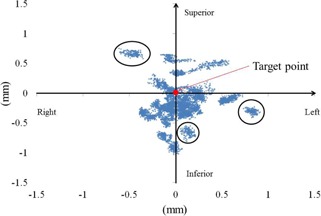

B. Tracking accuracy: stationary

In these experiments, laser points were aimed at a stationary target (Fig. 4) and tracking errors in left‐right (LR) and SI directions were determined using the software. These increased with decreasing angles of incidence of the laser beam to the target plane, and of LR and SI errors were and , respectively. The mean radial error was .

Figure 4.

Laser point distributions around the target point for a stationary case. Blue marks represent laser points detected by the software. The target is shown as a solid red circle. Three black circles present laser point distributions at a certain beam. Although the robot aimed at the target point, deviations of distance were increased with incident beam angles.

C. Tracking accuracy: sinusoidal motion

Tracking errors were measured during various breathing patterns against the intended data, and root mean square errors (RMSEs) were calculated. RMSEs were 0.49, 0.58, 0.67, and 0.76 mm for amplitudes of 5, 10, 15, and 20 mm, respectively, and were 1.01, 0.63, 0.53, and 0.49 mm for 2, 4, 6, and 8 s cycles, respectively. Magnitudes of tracking errors were correlated positively with amplitude and were negatively correlated with cycle lengths .

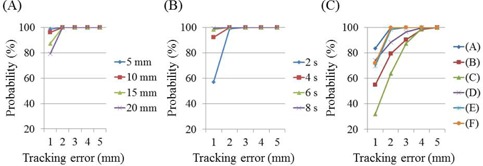

Probabilities of irradiation for various tracking errors are shown in Figs. 5(a) and (b) using a cumulative distribution function (CDF). Probability was defined as the ratio of the time taken by the CyberKnife to track the target point with 1 to 5 mm tracking errors against the . The time was calculated as the number of frames divided by 16 frames/s.

Figure 5.

Probabilities of webcam‐detected tracking errors at different amplitudes (a), cycles (b), and in six volunteers (c).

D. Tracking accuracy: breathing patterns of six volunteers

RMSEs of webcam‐detected tracking errors in breathing patterns were 0.73, 1.62, 1.96, 1.20, 0.93, and 0.86 mm for cases (A), (B), (C), (D), (E), and (F), respectively. Probabilities of tracking errors (Fig. 5(c)) were calculated according to a CDF, and CyberKnife tracked the target point with errors of with probabilities of 99.9%, 98.3%, and 99.8% for cases (A), (E), and (F), respectively. This probability gradually increased in the other volunteers over the tracking error range of 2‐5 mm, and was for tracking errors of 4 mm in all cases.

IV. DISCUSSION

According to the manufacturer, (19) the tracking error of the CyberKnife using the Synchrony system during respiratory motion is less than 1.5 mm. However, this was measured using a vendor phantom that moves in a sinusoidal pattern, and breathing patterns of patients are generally not sinusoidal. Accordingly, Lujan et al. (20) proposed a formula with an adjustable trajectory profile to better mimic patient breathing. Although the formula can be used to simulate patient breathing on the motion phantom, we assessed real breathing patterns and evaluated tracking accuracy under in situ conditions. To determine tracking accuracy of CyberKnife during irradiation with attention to time resolution, video webcam‐based analyses were performed. Inoue et al. (13) detected tracking errors using a coaxial CCD camera attached to the beam exit. The CyberKnife tracks a colored ball that is controlled by the motion phantom in three dimensions and, when motion tracking is successful, the ball appears motionless on the video of the CCD camera. The present webcam acquires 30 frames/s with a field of view. Although pixel‐scaling factors for the four directions depend on the field of view, 0.24 mm / pixel was achieved and was adequate for validation of coordinate recognition accuracy by the software. Pixel‐scaling factors increased with the distance of the measured point from the center. The same pixel scaling factor value was used in each axis and the required 0.3 mm / pixel tolerance was achieved. Although the printed calibrated grid was used for QA of tracking errors, use of the grid on volunteer abdomens may lead to deformations and rotations of the grid. However, upon detection of the four black solid circles, the software could calibrate according to the coordination of the grid during detection without changing the configuration of the printed calibrated grid, warranting further investigation in future studies.

In the present study, only 20 beams were used, whereas CyberKnife has 120‐140 beams for use in actual treatments. Thus, the overall accuracy of the CyberKnife tracking system cannot be assessed, and tracking accuracy requires further investigation over longer times. Nonetheless, the beam‐on time with 240 MUs irradiated by 800 MU / min was 18 s for each beam in the present study, and covered the longest breathing cycle length of 8.2 s, case (C) (Table 1).

In experiments with stationary targets, the mean tracking error using the fiducial tracking method was approximately 0.4 mm. However, errors increased with decreasing incident angles of the laser beam to the plane of the target. Incidence angles were calculated using the plan file in XML format, which describes source and target positions of each beam, and ranged between 31° and 54°. In light of the 0.3 mm targeting error in the AP direction, the incidence angle of 31° led to a tracking error of 0.5 mm. In contrast, Inoue et al. (13) reported no influence of the incidence angle of each beam on the detection method, because the target shape was a circle. Moreover, Antypas et al. (22) reported a motionless tracking accuracy of 0.19 mm using a film method, whereas the tracking error in the present detection method was approximately 0.4 mm. Hence, tracking errors may be reduced with the distance between the webcam and the plane of target, resulting in higher resolution analyses. Under conditions of larger numbers of beam directions and different DeltaMan settings, CyberKnife can only be calibrated to an accuracy of 0.5 mm. However, this error is strongly influenced by slice thicknesses of the phantoms for end‐to‐end test, even in the presence of coincident laser and actual beams, and by the accuracy of fiducial markers in the TPS. Hence, the present system inaccuracy of is less than that shown in previous measurements. Moreover, according to Ho et al., (23) CT slice thicknesses in the range of 1‐1.5 mm did not affect tracking accuracy, and we used a slice thickness of 1.25 mm. Nonetheless, because thickness affects the resolution of digitally reconstructed radiography for assessments of registration accuracy, further studies using finer slice thicknesses are planned.

To verify tracking errors of moving targets, all tests of sinusoidal and irregular motions were performed according to one‐dimensional motions in the SI direction. We assumed that the motion of a lung tumor occurs in this direction, and the infrared marker on the abdomen was used to estimate tumor motion. Because the major axis of tumor motion inside the patient is in the SI direction rather than in AP or LR directions, the synchrony system reduces tracking errors. (16) However, directions and amplitudes of the motion of normal tumors in the lung or liver differ in absolute terms from those of the chest and abdomen. Thus, although SI motions are sufficient for initial evaluations, future real tumor motion assessments from log files or from fluoroscopy analyses will be required to estimate 3D motions. During tracking of sinusoidal motions, tracking errors gradually increased with the amplitude of the same cycle, and with shorter cycles of the same amplitude. Thus, faster target movements gave greater tracking errors. Nonetheless, the Synchrony system tracked the target within a tracking error of 2 mm, and this was consistent with a previous study, (9) but was greater than that reported by Inoue et al., (13) who demonstrated a median tracking error of 1 mm with a probability of for 10 beams.

In the present study, we assessed breathing patterns in six volunteers over approximately 3 minutes. Because the Synchrony system can be used to track tumors during irradiation, evaluations of tracking errors are required for extended times. Accordingly, Ernst et al. 24 , 25 evaluated respiratory motion traces for an average duration of 71 min. However, previous assumptions of periodic breathing motions have not necessarily included variations in breathing patterns, such as different amplitudes and cycles. 22 , 26 , 27 In this study, we generated a correlation model between fiducial and infrared markers, but did not update the model. Malinowski et al. (28)) suggested that the mean time to alarm for the CyberKnife Synchrony system was 1.1 min without updating the prediction model, and showed increasing model errors over time for the tumor‐surrogate relationship. However, this effect was abolished by updating the model during the treatment fraction. (29) Because patient breathing motion can change completely over 10 to 15 min, (30) future studies with real patient data should be performed with longer treatment times and with model updates. In actual treatments, the placement of infrared markers on the abdominal surface is critical for accurate correlations between external infrared markers and internal fiducial markers, (31) and phase shifts are an important consideration. (32) In the present analyses, we assumed that there were no phase shifts between external and internal markers in sinusoidal and irregular motion cases. Thus, further studies are required to evaluate changes in tracking accuracy with phase shifts using this verification system. However, assuming the absence of such phase shifts, tracking errors and standard deviations varied among the present six volunteers, with mean differences of 0.64‐1.67 mm. Thus, the Synchrony system tracked the target with an accuracy of over 95% within a tracking error tolerance of 4 mm. Inoue et al. (13) showed that median tracking errors with a probability of ranged between 1.0 and 3.5 mm. Similarly, the present tracking errors were between 1.4 and 3.7 mm.

The typical breathing patterns presented in Figs. 3(a') and (b') correspond to (a) and (b) in Fig. 3, but show more details of the two respiratory cycles. Specifically, in the absence of a pause between expiration and inspiration (Fig. 3(a’)), CyberKnife tracked the target accurately on the basis of the prediction model. However, in the presence of an appreciable pause between the end of expiration and beginning of inspiration and no motion of the target (Fig. 3(b’)), CyberKnife predictions were inaccurate and future target positions lost accuracy. Hence, according to investigations of prediction latency by Ernst et al., (25) the prediction models require further modification.

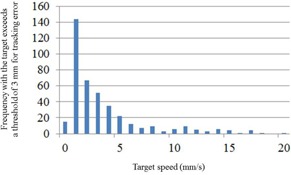

Figures 3(b) and (c) show the same breathing patterns, potentially reflecting the large tracking errors of over 3 mm at the end of the expiratory phase in cases (B) and (C). Thus, we examined correlations between target speed and the frequency with which the target exceeded a threshold of 3 mm in case (B) (Fig. 6). These data were sampled and calculations were made under assumptions of normal distribution because the duration of tracking and the sampling frequency were sufficiently large, with target speeds of 0‐1 mm/s.

Figure 6.

Correlation histograms between target speeds and frequencies of tracking errors for case (B). The vertical axis indicates the frequency with which the target exceeds a tracking‐error threshold of 3 mm.

Estimating appropriate tracking accuracy requires performance of QA on a patient‐by‐patient basis. Specifically, pauses between the end of expiration and the beginning of inspiration could be eliminated by breath coaching, thereby reducing tracking error.

The present study was limited by isocentric performance of the irradiation technique and two‐dimensional assessments of tracking accuracy. Because the Synchrony tracking system can track tumors in three dimensions, the proposed webcam system should be considered in all three translational directions. In particular, the AP direction of the tumor has not been evaluated due to difficulty detecting changes in sizes of the four solid black circles, which would be used to calculate the magnitude of movements in the AP direction. Therefore, the webcam system requires further improvement, and use of a real radiation beam instead of the laser in combination with three‐dimensional dosimeters, such as plastic scintillation detectors or plenoptic cameras, would be useful. (33) Moreover, the CyberKnife system commonly accommodates nonspherical tumors with nonisocentric beams from a semispherical space. Therefore, the verification system needs to include three‐dimensional tracking accuracy and adaptations for nonisocentric techniques.

V. CONCLUSIONS

We evaluated a beam‐by‐beam verification system for tracking errors of the CyberKnife Synchrony system using a webcam and a printed calibrated grid, and calculated an overall treatment accuracy of 1‐2 mm for sinusoidal beam motions at various amplitudes and cycle lengths. However, varied median tracking errors for the six volunteers indicate the necessity of patient‐specific treatment margins.

COPYRIGHT

This work is licensed under a Creative Commons Attribution 4.0 International License.

Supporting information

Supplementary Material Files

Supplementary Material Files

Supplementary Material Files

Supplementary Material Files

REFERENCES

- 1. Maxim PG, Loo BW Jr, Shirazi H, Thorndyke B, Luxton G, Le QT. Quantification of motion of different thoracic locations using four‐dimensional computed tomography: implications for radiotherapy planning. Int J Radiat Oncol Biol Phys. 2007;69(5):1395–401. [DOI] [PubMed] [Google Scholar]

- 2. Bortfeld T, Jiang SB, Rietzel E. Effects of motion on the total dose distribution. Semin Radiat Oncol. 2004;14(1):41–51. [DOI] [PubMed] [Google Scholar]

- 3. Li X, Yang Y, Li T, Fallon K, Heron DE, Huq MS. Dosimetric effect of respiratory motion on volumetric‐modulated arc therapy‐based lung SBRT treatment delivered by TrueBeam machine with flattening filter‐free beam. J Appl Clin Med Phys. 2013;14(6):195–204. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4. Keall PJ, Joshi S, Vedam SS Siebers JV, Kini VR, Mohan R. Four‐dimensional radiotherapy planning for DMLC‐based respiratory motion tracking. Med Phys. 2005;32(4):942–51. [DOI] [PubMed] [Google Scholar]

- 5. Ozhasoglu C, Saw CB, Chen H, et al. Synchrony‐CyberKnife respiratory compensation technology. Med Dosim. 2008;33(2):117–23. [DOI] [PubMed] [Google Scholar]

- 6. Adler JR Jr., Murphy MJ, Chang SD, Hancock SL. Image‐guided robotic radiosurgery. Neurosurgery. 1999;44(6):1299–306. [PubMed] [Google Scholar]

- 7. Drexler C and Fürweger C. Quality assurance of a robotic, image guided radiosurgery system. World Congress on Medical Physics and Biomedical Engineering. IFMBE Proceedings. 2009;25(1):492–95. [Google Scholar]

- 8. Murphy MJ and Cox RS. The accuracy of dose localization for an image‐guided frameless radiosurgery system. Med Phys. 1996;23(12):2043–49. [DOI] [PubMed] [Google Scholar]

- 9. Dieterich S, Cavedon C, Chuang CF, et al. Report of AAPM TG 135: quality assurance for robotic radiosurgery. Med Phys. 2011;38(6):2914–36. [DOI] [PubMed] [Google Scholar]

- 10. Nioutsikou E, Seppenwoolde Y, Symonds‐Tayler JR, Heijmen B, Evans P, Webb S. Dosimetric investigation of lung tumor motion compensation with a robotic respiratory tracking system: an experimental study. Med Phys. 2008;35(4):1232–40. [DOI] [PubMed] [Google Scholar]

- 11. Chan MK, Kwong DL, Ng SC, Tong AS, Tam EK. Accuracy and sensitivity of four‐dimensional dose calculation to systematic motion variability in stereotactic body radiotherapy (SBRT) for lung cancer. J Appl Clin Med Phys. 2012;13(6):303–17. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12. Sawant A, Venkat R, Srivastava V, et al. Management of three‐dimensional intrafraction motion through real‐time DMLC tracking. Med Phys. 2008;35(5):2050–61. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Inoue M, Shiomi H, Iwata H, et al. Development of system using beam's eye view images to measure respiratory motion tracking errors in image‐guided robotic radiosurgery system. J Appl Clin Med Phys. 2015;16(1):100–11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Wong KH, Dieterich S, Tang J, Cleary K. Quantitative measurement of CyberKnife robotic arm steering. Technol Cancer Res Treat. 2007;6(6):589–94. [DOI] [PubMed] [Google Scholar]

- 15. Seppenwoolde Y, Berbeco RI, Nishioka S, Shirato H, Heijmen B. Accuracy of tumor motion compensation algorithm from a robotic respiratory tracking system: a simulation study. Med Phys. 2007;34(7):2774–84. [DOI] [PubMed] [Google Scholar]

- 16. Hoogeman M, Prevost JB, Nuyttens J, Pöll J, Levendag P, Heijmen B. Clinical accuracy of the respiratory tumor tracking system of the cyberknife: assessment by analysis of log files. Int J Radiat Oncol Biol Phys. 2009;74(1):297–303. [DOI] [PubMed] [Google Scholar]

- 17. Pepin EW, Wu H, Zhang Y, Lord B. Correlation and prediction uncertainties in the cyberknife synchrony respiratory tracking system. Med Phys. 2011;38(7):4036–44. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18. Nuyttens JJ and van de Pol M. The CyberKnife radiosurgery system for lung cancer. Expert Rev Med Devices. 2012;9(5):465–75. [DOI] [PubMed] [Google Scholar]

- 19. Dieterich S, Taylor D, Chuang C, et al. The CyberKnife synchrony respiratory tracking system: evaluation of systematic targeting uncertainty. Sunnyvale, CA: Accuray Inc.; 2004. [Google Scholar]

- 20. Lujan AE, Larsen EW, Balter JM, Ten Haken RK. A method for incorporating organ motion due to breathing into 3D dose calculations. Med Phys. 1999;26(5):715–20. [DOI] [PubMed] [Google Scholar]

- 21. Casamassima F, Cavedon C, Francescon P, et al. Use of motion tracking in stereotactic body radiotherapy: evaluation of uncertainty in off‐target dose distribution and optimization strategies. Acta Oncol. 2006;45(7):943–47. [DOI] [PubMed] [Google Scholar]

- 22. Antypas C and Pantelis E. Performance evaluation of a CyberKnife G4 image‐guided robotic stereotactic radiosurgery system. Phys Med Biol. 2008;53(17):4697–718. [DOI] [PubMed] [Google Scholar]

- 23. Ho AK, Fu D, Cotrutz C, et al. A study of the accuracy of cyberknife spinal radiosurgery using skeletal structure tracking. Neurosurgery. 2007;60(2 Suppl 1):147–56. [DOI] [PubMed] [Google Scholar]

- 24. Ernst F, Schlaefer A, Schweikard A. Predicting the outcome of respiratory motion prediction. Med Phys. 2011;38(10):5569–81. [DOI] [PubMed] [Google Scholar]

- 25. Ernst F, Dürichen R, Schlaefer A, Schweikard A. Evaluating and comparing algorithms for respiratory motion prediction. Phys Med Biol. 2013;58(11):3911–29. [DOI] [PubMed] [Google Scholar]

- 26. Ozhasoglu C and Murphy MJ. Issues in respiratory motion compensation during external‐beam radiotherapy. Int J Radiat Oncol Biol Phys. 2002;52(5):1389–99. [DOI] [PubMed] [Google Scholar]

- 27. Hoisak JD, Sixel KE, Tirona R, Cheung PC, Pignol JP. Correlation of lung tumor motion with external surrogate indicators of respiration. Int J Radiat Oncol Biol Phys. 2004;60(4):1298–306. [DOI] [PubMed] [Google Scholar]

- 28. Malinowski K, McAvoy TJ, George R, Dieterich S, D'Souza WD. Online monitoring and error detection of real‐time tumor displacement prediction accuracy using control limits on respiratory surrogate statistics. Med Phys. 2012;39(4):2042–48. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29. Malinowski K, McAvoy TJ, George R, Dieterich S, D'Souza WD. Mitigating errors in external respiratory surrogate‐based models of tumor position. Int J Radiat Oncol Biol Phys. 2012;82(5):e709–16. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30. Malinowski K, McAvoy TJ, George R, Dieterich S, D'Souza WD. Incidence of changes in respiration‐induced tumor motion and its relationship with respiratory surrogates during individual treatment fractions. Int J Radiat Oncol Biol Phys. 2012;82(5):1665–73. [DOI] [PubMed] [Google Scholar]

- 31. Fayad H, Pan T, Clement JF, Visvikis D. Technical note: Correlation of respiratory motion between external patient surface and internal anatomical landmarks. Med Phys. 2011;38(6):3157–64. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32. Tsunashima Y, Sakae T, Shioyama Y, et al. Correlation between the respiratory waveform measured using a respiratory sensor and 3D tumor motion in gated radiotherapy. Int J Radiat Oncol Biol Phys. 2004;60(3):951–58. [DOI] [PubMed] [Google Scholar]

- 33. Goulet M, Rilling M, Gingras L, Beddar S, Beaulieu L, Archambault L. Novel, full 3D scintillation dosimetry using a static plenoptic camera. Med Phys. 2014;41(8):082101‐1–082101‐113. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Supplementary Material Files

Supplementary Material Files

Supplementary Material Files

Supplementary Material Files