Abstract

In this paper we present a new non-parametric calibration method called ensemble of near isotonic regression (ENIR). The method can be considered as an extension of BBQ (Pakdaman Naeini, Cooper and Hauskrecht, 2015b), a recently proposed calibration method, as well as the commonly used calibration method based on isotonic regression (IsoRegC) (Zadrozny and Elkan, 2002). ENIR is designed to address the key limitation of IsoRegC which is the monotonicity assumption of the predictions. Similar to BBQ, the method post-processes the output of a binary classifier to obtain calibrated probabilities. Thus it can be used with many existing classification models to generate accurate probabilistic predictions.

We demonstrate the performance of ENIR on synthetic and real datasets for commonly applied binary classification models. Experimental results show that the method outperforms several common binary classifier calibration methods. In particular, on the real data we evaluated, ENIR commonly performs statistically significantly better than the other methods, and never worse. It is able to improve the calibration power of classifiers, while retaining their discrimination power. The method is also computationally tractable for large scale datasets, as it is O(N logN) time, where N is the number of samples.

Keywords: classifier calibration, accurate probability, ensemble of near isotonic regression, ensemble of linear trend estimation, ENIR, ELiTE

1. Introduction

In many real world data mining applications, intelligent agents often must make decisions under considerable uncertainty due to noisy observations, physical randomness, incomplete data, and incomplete knowledge. Decision theory provides a normative basis for intelligent agents to make rational decisions under such uncertainty. To do so, decision theory combines utilities and probabilities to determine the optimal actions that maximize expected utility (Russell and Norvig, 2010). The output in many of the machine learning models that are used in data mining applications is designed to discriminate the patterns in data. However, such output should also provide accurate (calibrated) probabilities in order to be practically useful for rational decision making in many real world applications (Bahnsen, Stojanovic, Aouada and Ottersten, 2014; Dong, Gabrilovich, Heitz, Horn, Lao, Murphy, Strohmann, Sun and Zhang, 2014; Gronat, Obozinski, Sivic and Pajdla, 2013; Wallace and Dahabreh, 2014; Zadrozny and Elkan, 2001a).

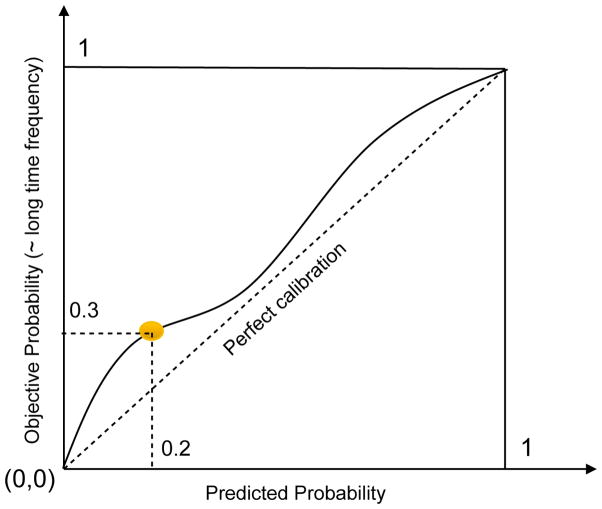

This paper focuses on developing a new non-parametric calibration method for post-processing the output of commonly used binary classification models to generate accurate probabilities. Informally, we say that a classification model is well-calibrated if events predicted to occur with probability p do occur about p fraction of the time, for all p. This concept applies to binary as well as multiclass classification problems (Takahashi, Takamura and Okumura, 2009). Figure 1 illustrates the binary calibration problem using a reliability curve (DeGroot and Fienberg, 1983; Niculescu-Mizil and Caruana, 2005). The curve shows the probability predicted by the classification model versus the actual fraction of positive outcomes for a hypothetical binary classification problem, where Z is the binary event being predicted. For example, when the model predicts Z = 1 to have probability 0.2, the outcome Z = 1 occurs about 0.3 of the time. The curve shows that the model is fairly well calibrated, but it tends to underestimate the actual probabilities. In general, the straight dashed line connecting (0, 0) to (1, 1) represents a perfectly calibrated model. The closer a calibration curve is to this line, the better calibrated the associated prediction model. Deviations from perfect calibration are very common in practice and may vary widely depending on the binary classification model that is used (Pakdaman Naeini et al., 2015b).

Fig. 1.

The solid line shows a calibration (reliability) curve for predicting Z = 1. The dotted line is the ideal calibration curve.

Producing well-calibrated probabilistic predictions is critical in many areas of science (e.g., determining which experiments to perform), medicine (e.g., deciding which therapy to give a patient), business (e.g., making investment decisions), and many others. In data mining problems, obtaining a well-calibrated classification model is crucial not only for decision-making, but also for combining the outputs of different classification models (Bella, Ferri, Hernández-Orallo and Ramírez-Quintana, 2013; Robnik-Šikonja and Kononenko, 2008; Whalen and Pandey, 2013). It is also useful when we aim to use the output of a classifier not only to discriminate the instances but also to rank them (Hashemi, Yazdani, Shakery and Naeini, 2010; Jiang, Zhang and Su, 2005; Zhang and Su, 2004). Learning well-calibrated models has not been explored in the data mining literature as extensively as, for example, learning models that have high discrimination (e.g., high accuracy).

There are two main approaches to obtaining well-calibrated classification models. The first approach is to build a classification model that is intrinsically well-calibrated ab initio. This approach restricts the designer of the data mining model by requiring major changes in the objective function (e.g., using a different type of loss function) and could potentially increase the complexity and computational cost of the associated optimization program to learn the model. The other approach is to rely on the existing discriminative data mining models and then calibrate their output using post-processing methods. This approach has the advantage that it is general and flexible, and it frees the designer of a data mining algorithm from modifying the learning procedure and the associated optimization method (Pakdaman Naeini and Cooper, 2016b). However, this approach has the potential to decrease discrimination while increasing calibration, if care is not taken. The method we describe in this paper is shown empirically to improve calibration of different types of classifiers (e.g., LR, SVM, and NB) while maintaining their discrimination performance.

Existing post-processing binary classifier calibration methods include Platt scaling (Platt, 1999), histogram binning (Zadrozny and Elkan, 2001b), isotonic regression (Zadrozny and Elkan, 2002), and a recently proposed method BBQ which is a Bayesian extension of histogram binning (Pakdaman Naeini et al., 2015b). In all these methods, the post-processing step can be seen as a function that maps the outputs of a prediction model to probabilities that are intended to be well-calibrated. Figure 1 shows an example of such a mapping.

In general, there are two main applications of post-processing calibration methods. First, they can be used to convert the outputs of discriminative classification methods with no apparent probabilistic interpretation to posterior class probabilities (Platt, 1999; Robnik-Šikonja and Kononenko, 2008; Wallace and Dahabreh, 2014). An example is an SVM model that learns a discriminative model that does not have a direct probabilistic interpretation. In this paper, we show this use of calibration to map SVM outputs to well-calibrated probabilities. Second, calibration methods can be applied to improve the calibration of predictions of a probabilistic model that is miscalibrated. For example, a naïve Bayes (NB) model is a probabilistic model, but its class posteriors are often miscalibrated due to unrealistic independence assumptions (Niculescu-Mizil and Caruana, 2005). The method we describe is shown empirically to improve the calibration of NB models without reducing their discrimination. The method can also work well on calibrating models that are less egregiously miscalibrated than are NB models.

2. Related work

Existing post-processing binary classifier calibration models can be divided into parametric and non-parametric methods. Platt’s method is an example of the former; it uses a sigmoid transformation to map the output of a classifier into a calibrated probability (Platt, 1999). The two parameters of the sigmoid function are learned in a maximum-likelihood framework using a model-trust minimization algorithm (Gill, Murray and Wright, 1981). The method was originally developed to transform the output of an SVM model into calibrated probabilities. It has also been used to calibrate other types of classifiers (Niculescu-Mizil and Caruana, 2005). The method runs in O(1) at test time, and thus, it is fast. Its key disadvantage is the restrictive shape of the sigmoid function, which rarely fits the true distribution of the predictions (Jiang, Osl, Kim and Ohno-Machado, 2012).
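
To make the parametric approach concrete, the following is a minimal sketch of sigmoid calibration, not Platt's original procedure. It assumes the parameterization p = 1 / (1 + exp(-(a·s + b))), and it substitutes plain gradient descent on the log loss for the model-trust minimization algorithm cited above. The function names, the learning rate, and the iteration count are hypothetical choices for illustration, and the fixed step size assumes that the scores are on a moderate scale.

```python
import numpy as np

def fit_sigmoid(scores, labels, lr=0.1, n_iter=5000):
    """Fit p = 1 / (1 + exp(-(a * s + b))) by gradient descent on the log loss."""
    s = np.asarray(scores, dtype=float)
    z = np.asarray(labels, dtype=float)
    a, b = 0.0, 0.0
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(a * s + b)))
        # Gradient of the mean negative log-likelihood with respect to a and b.
        a -= lr * np.mean((p - z) * s)
        b -= lr * np.mean(p - z)
    return a, b

def apply_sigmoid(params, scores):
    """Map raw classifier scores to probabilities; O(1) work per test score."""
    a, b = params
    return 1.0 / (1.0 + np.exp(-(a * np.asarray(scores, dtype=float) + b)))
```

Because only two parameters are learned, the fitted map is always a monotone S-shaped curve, which is the shape restriction discussed above.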

A popular non-parametric calibration method is the equal frequency histogram binning model which is also known as quantile binning (Zadrozny and Elkan, 2001b). In quantile binning, predictions are partitioned into B equal frequency bins. For each new prediction y that falls into a specific bin, the associated frequency of observed positive instances will be used as the calibrated estimate for P(z = 1|y), where z is the true label of an instance that is either 0 or 1. Histogram binning can be implemented in a way that allows it to be applied to large scale data mining problems. Its limitations include (1) bins inherently pigeonhole calibrated probabilities into only B possibilities, (2) bin boundaries remain fixed over all predictions, and (3) there is uncertainty in the optimal number of the bins to use (Zadrozny and Elkan, 2002).
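
The quantile binning procedure described above can be sketched as follows. This is an illustrative implementation under our own assumptions: bin boundaries are placed at the midpoint between neighbouring bins, and the function names are hypothetical rather than taken from the cited work.

```python
import numpy as np

def fit_quantile_binning(y, z, n_bins=10):
    """Fit equal-frequency histogram binning on scores y and 0/1 labels z."""
    y = np.asarray(y, dtype=float)
    z = np.asarray(z, dtype=float)
    order = np.argsort(y, kind="stable")
    # Split the sorted instances into n_bins groups of (almost) equal size.
    groups = [g for g in np.array_split(order, n_bins) if len(g) > 0]
    # Boundary between two neighbouring bins: midpoint of the adjacent scores.
    edges = np.array([(y[a[-1]] + y[b[0]]) / 2.0
                      for a, b in zip(groups[:-1], groups[1:])])
    # Calibrated estimate of each bin: observed frequency of positives.
    rates = np.array([z[g].mean() for g in groups])
    return edges, rates

def predict_quantile_binning(model, y_new):
    """Return the positive frequency of the bin each new score falls into."""
    edges, rates = model
    idx = np.searchsorted(edges, np.asarray(y_new, dtype=float), side="right")
    return rates[idx]
```

The sketch makes limitation (1) visible: every prediction is mapped to one of at most `n_bins` values stored in `rates`.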

The most commonly used non-parametric classifier calibration method in machine learning and data mining applications is the isotonic regression based calibration (IsoRegC) model (Zadrozny and Elkan, 2002). To build a mapping from the uncalibrated output of a classifier to the calibrated probability, IsoRegC assumes the mapping is an isotonic (monotonic) mapping following the ranking imposed by the base classifier. The commonly used algorithm for isotonic regression is the Pool Adjacent Violators Algorithm (PAVA), which is linear in the number of training instances (Barlow, Bartholomew, Bremner and Brunk, 1972). An IsoRegC model based on PAVA can be viewed as a histogram binning model (Zadrozny and Elkan, 2002) where the positions of the boundaries are selected by fitting the best monotone approximation to the training data according to the ordering imposed by the classifier. There is also a variation of the isotonic-regression-based calibration method for predicting accurate probabilities with a ranking loss (Menon, Jiang, Vembu, Elkan and Ohno-Machado, 2012). In addition, an extension to IsoRegC combines the outputs generated by multiple binary classifiers to obtain calibrated probabilities (Zhong and Kwok, 2013). While IsoRegC can perform well on some real datasets, the monotonicity assumption it makes can fail in real data mining applications. This can specifically occur when we encounter large scale data mining problems in which we have to make simplifying assumptions to build the classification models. Thus, there is a need to relax the assumption, which is the focus of the current paper.

Adaptive calibration of predictions (ACP) is another extension to histogram binning (Jiang et al., 2012). ACP requires the derivation of a 95% statistical confidence interval around each individual prediction to build the bins. It then sets the calibrated estimate to the observed frequency of the instances with positive class among all the predictions that fall within the bin. To date, ACP has been developed and evaluated using only logistic regression as the base classifier (Jiang et al., 2012).

Recently, a new non-parametric calibration model called BBQ was proposed which is a refinement of the histogram-binning calibration method (Pakdaman Naeini et al., 2015b). BBQ addresses the main drawbacks of the histogram binning model by considering multiple different equal frequency histogram binning models and their combination using a Bayesian scoring function (Heckerman, Geiger and Chickering, 1995). However, BBQ has two disadvantages. First, as a post-processing calibration method, it does not take advantage of the fact that in real world applications a classifier with poor discrimination performance (e.g., low area under the ROC curve) will seldom be used. Thus, BBQ will usually be applied to calibrate classifiers with at least fair discrimination performance. Second, BBQ still selects the position and boundary of the bins by considering only equal frequency histogram binning models. A Bayesian non-parametric method called ABB addresses the latter problem by considering Bayesian averaging over all possible binning models induced by the training instances (Pakdaman Naeini, Cooper and Hauskrecht, 2015a). The main drawback of ABB is that it is computationally intractable for most real world applications, as it requires O(N²) computations for learning the model as well as O(N²) computations for computing the calibrated estimate for each of the test instances1.

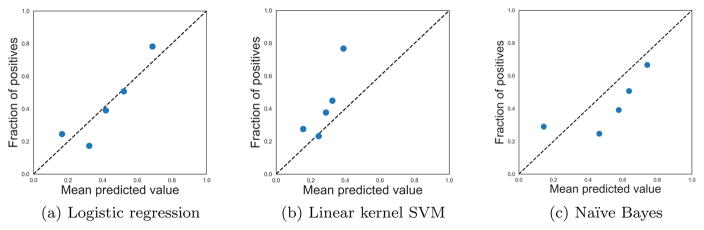

This paper presents a new binary classifier calibration method called ensemble of near isotonic regression (ENIR) that can post process the output generated by a wide variety of classification models. The essential idea in ENIR is to use prior knowledge that the scores to be calibrated are in fact generated by a well-performing classifier in terms of discrimination. IsoRegC also uses such prior knowledge; however, it is biased by constraining the calibrated scores to obey the ranking imposed by the classifier. In the limit, this is equivalent to presuming the classifier has AUC equal to 1, which rarely happens in real world applications. In contrast, BBQ does not make any assumptions about the correctness of classifier rankings. ENIR provides a balanced approach that spans between IsoRegC and BBQ. In particular, ENIR assumes that the mapping from uncalibrated scores to calibrated probabilities is a near isotonic (monotonic) mapping; it allows violations of the ordering imposed by the classifier and then penalizes them through the use of a regularization term. Figure 2 shows the calibration curve of three commonly used binary classifiers trained on the liver-disorder UCI dataset. The dataset consists of 345 total instances and the final AUC is equal to 0.73. The figure shows that the isotonicity assumption made by IsoRegC is violated when we compare the frequency of observations in the first and the second bins.

Fig. 2.

Calibration curves based on 5 equal frequency bins when we use logistic regression, SVM, and naïve Bayes classification models for the binary classification task in the liver-disorder UCI dataset. Considering the frequency of observations in the first and the second bin, we notice the violation of the isotonicity assumption that is made by IsoRegC in all the classification models.

ENIR utilizes the modified pool adjacent violators algorithm (mPAVA), a path algorithm that can find the solution path to a near isotonic regression problem in O(N logN), where N is the number of training instances (Tibshirani, Hoefling and Tibshirani, 2011). Finally, it uses the BIC scoring measure to combine the predictions made by the models along this path to yield more robust calibrated predictions.

We perform an extensive set of experiments on a large suite of real datasets to show that ENIR outperforms both IsoRegC and BBQ. Our experiments show that the near-isotonic assumption made by ENIR is a realistic assumption about the output of classifiers, and unlike the isotonicity assumption that is made by IsoRegC, it is not biased. Moreover, our experiments show that by post processing the output of classifiers using ENIR, we can gain high calibration improvement, without losing any statistically meaningful discrimination performance. Finally, we also compare the performance of ENIR with our other newly introduced binary classifier calibration method, ELiTE (Pakdaman Naeini and Cooper, 2016a).

The remainder of this paper is organized as follows. Section 3 introduces the ENIR method. Section 4 describes a set of experiments that we performed to evaluate ENIR and other calibration methods. Section 5 briefly describes our other newly introduced calibration model, which is based on an ensemble of linear trend filtering models, and compares its performance with ENIR. Finally, Section 6 states conclusions and describes several areas for future work.

3. Method

In this section we introduce the ensemble of near isotonic regression (ENIR) calibration method. ENIR utilizes the near isotonic regression method that seeks a nearly monotone approximation for a sequence of data y1, …, yn (Tibshirani et al., 2011). The proposed calibration method extends the commonly used isotonic regression-based calibration by an approximate selective Bayesian averaging of a set of nearly isotonic regression models. The set includes the isotonic regression model as an extreme member. From another viewpoint, ENIR can be considered as an extension to a recently introduced calibration model BBQ (Pakdaman Naeini et al., 2015b) by relaxing the assumption that probability estimates are independent inside the bins and finding the boundary of the bins automatically through an optimization algorithm.

Before getting into the details of the method, we define some notation. Let yi and zi define respectively an uncalibrated classifier prediction and the true class of the i’th instance. In this paper, we focus on calibrating a binary classifier’s output2, and thus, zi ∈ {0, 1} and yi ∈ [0, 1]. Let 𝒟 define the set of all training instances (yi, zi). Without loss of generality, we can assume that the instances are sorted based on the classifier scores yi, so we have y1 ≤ y2 ≤ … ≤ yN, where N is the total number of samples in the training data.

The standard isotonic regression-based calibration model finds the calibrated probability estimates by solving the following optimization problem:

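$$
\hat{p}^{iso} = \underset{p \in \mathbb{R}^N}{\arg\min} \; \frac{1}{2} \sum_{i=1}^{N} (p_i - z_i)^2
\quad \text{s.t.} \quad p_1 \le p_2 \le \dots \le p_N, \qquad 0 \le p_i \le 1 \;\; \forall i \in \{1, \dots, N\}
\tag{1}
$$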

where p̂iso is the vector of calibrated probability estimates. The rationale behind this model is to assume that the base classifier ranks the instances correctly. To find the calibrated probability estimates, it seeks the best fit of the data that is consistent with the classifier’s ranking. A unique solution to the above convex optimization program exists and can be obtained by an iterative algorithm called pool adjacent violators algorithm (PAVA) that runs in O(N). Note, however, that isotonic regression calibration still needs O(N logN) computations due to the fact that instances are required to be sorted based on the classifier scores yi. PAVA iteratively groups the consecutive instances that violate the ranking constraint and uses their average over z (frequency of positive instances) as the calibrated estimate for all the instances within the group. We define the set of these consecutive instances that are located in the same group and attain the same predicted calibrated estimate as a bin. Therefore, an isotonic regression-based calibration can be viewed as a histogram binning method (Zadrozny and Elkan, 2002) where the position of boundaries are selected by fitting the best monotone approximation to the training data according to the ranking imposed by the classifier.
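
The pooling step of PAVA can be illustrated with a short sketch. It assumes that the labels are already sorted by classifier score, and it is a plain list-based version written for readability, not the implementation used in the experiments.

```python
def pava(z):
    """Pool adjacent violators: least-squares non-decreasing fit to z.

    z holds the 0/1 labels ordered by increasing classifier score.
    """
    # Each block stores [sum of labels, number of instances].
    blocks = []
    for value in z:
        blocks.append([float(value), 1])
        # Merge while the mean of the previous block exceeds the mean of the
        # last block (compared by cross-multiplication to avoid division).
        while (len(blocks) > 1
               and blocks[-2][0] * blocks[-1][1] > blocks[-1][0] * blocks[-2][1]):
            total, count = blocks.pop()
            blocks[-1][0] += total
            blocks[-1][1] += count
    # Every instance in a block (bin) receives the block's positive frequency.
    fitted = []
    for total, count in blocks:
        fitted.extend([total / count] * count)
    return fitted
```

Each surviving block is a bin in the sense defined above, so the number of distinct calibrated values equals the number of blocks.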

One can show that the second constraint in the optimization given by Equation 1 is redundant, and it is possible to rewrite the equation in the following equivalent form:

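$$
\hat{p}^{iso} = \underset{p \in \mathbb{R}^N}{\arg\min} \; \frac{1}{2} \sum_{i=1}^{N} (p_i - z_i)^2
\quad \text{s.t.} \quad \sum_{i=1}^{N-1} \nu_i \, (p_i - p_{i+1}) = 0
\tag{2}
$$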

where νi = 𝟙(pi > pi+1) is the indicator function of ranking violation. Relaxing the equality constraint in the above optimization program leads to a new convex optimization program as follows:

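$$
\hat{p}_{\lambda} = \underset{p \in \mathbb{R}^N}{\arg\min} \; \frac{1}{2} \sum_{i=1}^{N} (p_i - z_i)^2 + \lambda \sum_{i=1}^{N-1} \nu_i \, (p_i - p_{i+1})
\tag{3}
$$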

where λ is a positive real number that regulates the trade-off between the monotonicity of the calibrated estimates and the goodness of fit by penalizing adjacent pairs that violate the ordering imposed by the base classifier. The above optimization problem is known as the near-isotonic regression problem (Tibshirani et al., 2011). It yields a unique solution p̂λ, where the use of the subscript λ emphasizes the dependency of the final solution on the value of λ.
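
The trade-off controlled by λ can be examined numerically with a small sketch. It assumes the near-isotonic objective in the form used by Tibshirani et al. (2011): half the squared error plus λ times the total size of the ordering violations between adjacent estimates. The function name is hypothetical.

```python
import numpy as np

def near_isotonic_loss(p, z, lam):
    """Half squared error plus lam times the sum of adjacent ordering violations."""
    p = np.asarray(p, dtype=float)
    z = np.asarray(z, dtype=float)
    fit = 0.5 * np.sum((p - z) ** 2)
    # Only pairs with p[i] > p[i + 1] contribute to the penalty.
    penalty = lam * np.sum(np.maximum(p[:-1] - p[1:], 0.0))
    return float(fit + penalty)
```

For the two labels z = (1, 0), the saturated fit p = z has zero loss at λ = 0 but pays the full penalty as λ grows, whereas the pooled fit p = (0.5, 0.5) has a loss of 0.25 regardless of λ.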

The entire path of solutions for any value of λ of the near isotonic regression problem can be found using an algorithm similar to PAVA, which is called the modified pool adjacent violators algorithm (mPAVA) (Tibshirani et al., 2011). mPAVA finds the whole solution path in O(N logN), and needs O(N) memory space. Briefly, the algorithm works as follows. It starts by constructing N bins, each bin containing a single instance of the training data. Next, it finds the solution path by starting from the saturated fit pi = zi, which corresponds to setting λ = 0, and then increasing λ iteratively. As λ increases, the calibrated probability estimate p̂λ,i of each bin changes linearly with respect to λ until the calibrated probability estimates of two consecutive bins attain equal value. At this stage, mPAVA merges the two bins that have the same calibrated estimate to build a larger bin, and it updates their corresponding estimate to a common value. The process continues until there is no change in the solution for a large enough value of λ, which corresponds to finding the standard isotonic regression solution. The essential idea of mPAVA is based on a theorem stating that if two adjacent bins are merged at some value of λ to construct a larger bin, then the new bin will never split for all larger values of λ (Tibshirani et al., 2011).

mPAVA yields a collection of nearly isotonic calibration models, with the overfitted calibration model at one end (p̂λ=0 = z) and the isotonic regression solution at the other (p̂λ=λ∞ = p̂iso), where λ∞ is a large positive real number. Each of these models can be considered as a histogram binning model in which the positions of the boundaries and the sizes of the bins are selected according to how well the model trades off goodness of fit against preservation of the ranking generated by the classifier. This trade-off is governed by the value of λ: as λ increases, the model gives more weight to preserving the original ranking of the classifier, while for small λ it prioritizes goodness of fit.

ENIR employs the approach just described to generate a collection of models (one for each value of λ). It then uses the Bayesian Information Criterion (BIC) to score each of the models3. Assume mPAVA yields the binning models M1, M2, …, MT, where T is the total number of models generated by mPAVA. For any new classifier output y, the calibrated prediction in the ENIR model is defined using selective Bayesian model averaging (Hoeting, Madigan, Raftery and Volinsky, 1999):

$$
P(z = 1 \mid y) = \sum_{i=1}^{T} \frac{\mathrm{Score}(M_i)}{\sum_{j=1}^{T} \mathrm{Score}(M_j)} \, P(z = 1 \mid y, M_i)
$$

where P(z = 1|y, Mi) is the probability estimate obtained using the binning model Mi for the uncalibrated classifier output y. Also, Score(Mi) is defined using the BIC scoring function4 (Schwarz et al., 1978).
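
The averaging step can be sketched as follows. The exact form of Score(Mi) is not restated here, so the sketch rests on loudly labeled assumptions: BIC is taken as -2·log-likelihood + k·log N, the number of bins k is used as the number of free parameters, and the score is taken as exp(-BIC/2). All function names are hypothetical.

```python
import math

def bic_score_weights(log_likelihoods, n_bins, n_samples):
    """Normalized model weights under the assumed score exp(-BIC / 2)."""
    # Assumed BIC: -2 * log-likelihood + (number of bins) * log(N).
    bics = [-2.0 * ll + k * math.log(n_samples)
            for ll, k in zip(log_likelihoods, n_bins)]
    best = min(bics)
    # Subtract the best BIC before exponentiating for numerical stability.
    raw = [math.exp(-(b - best) / 2.0) for b in bics]
    total = sum(raw)
    return [r / total for r in raw]

def averaged_prediction(weights, per_model_probs):
    """Weighted average of the per-model estimates P(z = 1 | y, M_i)."""
    return sum(w * p for w, p in zip(weights, per_model_probs))
```

Subtracting the smallest BIC leaves the normalized weights unchanged, since the common factor cancels in the ratio.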

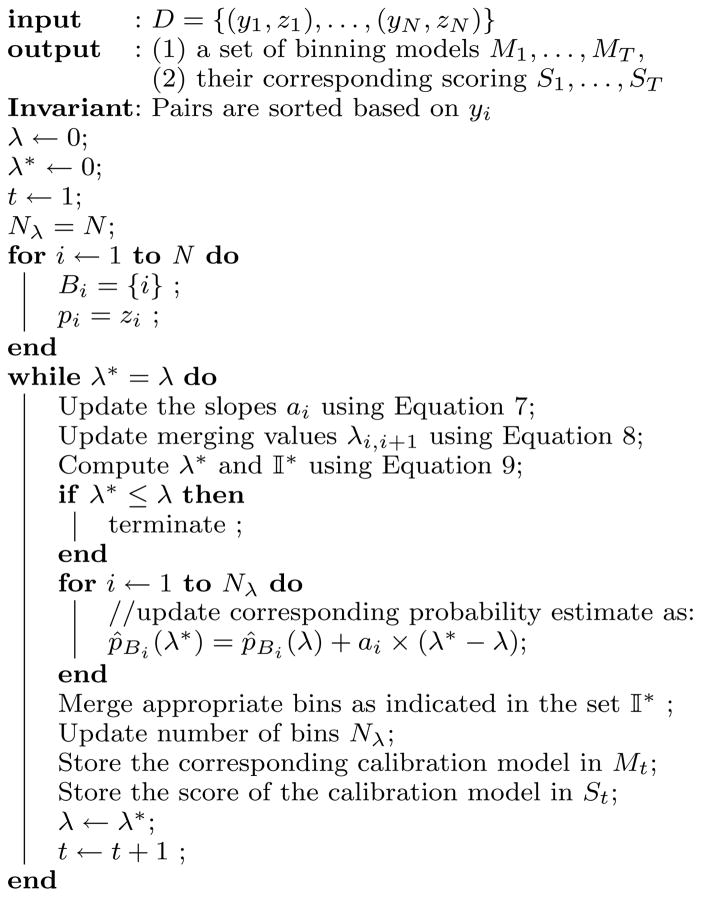

Next, for the sake of completeness, we briefly describe the mPAVA algorithm; more detailed information about the algorithm and the derivations can be found in (Tibshirani et al., 2011).

3.1. The modified PAV algorithm

Suppose at a value of λ we have Nλ bins, B1, B2, …, BNλ. We can represent the unconstrained optimization program given by Equation 3 as the following loss function that we seek to minimize:

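$$
\mathcal{L}_{B,\lambda} = \frac{1}{2} \sum_{i=1}^{N_\lambda} \sum_{j \in B_i} (p_{B_i} - z_j)^2 + \lambda \sum_{i=1}^{N_\lambda - 1} \nu_i \, (p_{B_i} - p_{B_{i+1}})
\tag{4}
$$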

where pBi defines the common estimated value for all the instances located at the bin Bi. The loss function ℒB,λ is always differentiable with respect to pBi unless two calibrated probabilities are just being joined (which only happens if pBi = pBi+1 for some i). Assuming that p̂Bi (λ) is optimal, the partial derivative of ℒB,λ has to be 0 at p̂Bi (λ), which implies:

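$$
\sum_{j \in B_i} \left( \hat{p}_{B_i}(\lambda) - z_j \right) + \lambda \, (\nu_i - \nu_{i-1}) = 0
\tag{5}
$$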

Rewriting the above equation, the optimum predicted value for each bin can be calculated as:

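$$
\hat{p}_{B_i}(\lambda) = \frac{\sum_{j \in B_i} z_j - \lambda \nu_i + \lambda \nu_{i-1}}{|B_i|}
\tag{6}
$$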

While PAVA uses the frequency of instances in each bin as the calibrated estimate, Equation 6 shows that mPAVA uses a shrunken version of the frequencies by considering the estimates that are not following the ranking imposed by the base classifier. In Equation 5, taking derivatives with respect to λ yields:

$$
\frac{\partial \hat{p}_{B_i}}{\partial \lambda} = \frac{\nu_{i-1} - \nu_i}{|B_i|}
\tag{7}
$$

where we set ν0 = νN = 0 for notational convenience. As we noted above, it has been proven that the optimal values of the instances located in the same bin are tied together and the only way that they can change is to merge two bins as they can never split apart as λ increases (Tibshirani et al., 2011). Therefore, as we make changes in λ, the bins Bi, and hence the values νi, remain constant. This implies the term $(\nu_{i-1} - \nu_i)/|B_i|$ is a constant in Equation 7. Consequently, the solution path remains piecewise linear as λ increases, and the breakpoints happen when two bins merge together. Now, using the piecewise linearity of the solution path and assuming that the two bins Bi and Bi+1 are the first two bins to merge by increasing λ, the value of λi,i+1 at which the two bins Bi and Bi+1 will merge is calculated as:

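$$
\lambda_{i,i+1} = \frac{\hat{p}_{B_i}(\lambda) - \hat{p}_{B_{i+1}}(\lambda)}{a_{i+1} - a_i} + \lambda
\tag{8}
$$

where $a_i = \partial \hat{p}_{B_i} / \partial \lambda$ is the slope of the changes of p̂Bi with respect to λ according to Equation 7. Using the above identity, the λ at which the next breakpoint occurs is obtained using the following equation: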

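$$
\lambda^{*} = \min_{i} \lambda_{i,i+1}, \qquad \mathbb{I}^{*} = \{\, i \mid \lambda_{i,i+1} = \lambda^{*} \,\}
\tag{9}
$$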

where 𝕀* indicates the set of the indexes of the bins that will be merged with their consecutive bins when λ is changed5. If λ* ≤ λ then the algorithm will terminate since it has obtained the standard isotonic regression solution, and by increasing λ none of the existing bins will ever merge. Having the solutions of the near isotonic regression problem in Equation 3 at the breakpoints, and using the piecewise linearity property of the solution path, it is possible to recover the solution for any value of λ through interpolation. However, the current implementation of ENIR only uses the near isotonic regression based calibration models that correspond to the values of λ at the breakpoints. The sketch of the algorithm is shown as Algorithm 1.
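
As a small illustrative check of the breakpoint computation, consider two single-instance bins with labels z = (1, 0) at λ = 0. The worked example below assumes that the per-bin estimate has the form (Σ zj + λ(νi−1 − νi))/|Bi|, so that each slope is (νi−1 − νi)/|Bi|; the numbers are ours and serve only to illustrate the derivation.

```latex
\begin{align*}
&B_1 = \{z_1 = 1\},\quad B_2 = \{z_2 = 0\},\quad \nu_0 = \nu_2 = 0,\quad \nu_1 = \mathbb{1}(1 > 0) = 1 \\
&\hat{p}_{B_1}(\lambda) = \frac{1 + \lambda(\nu_0 - \nu_1)}{1} = 1 - \lambda,
\qquad
\hat{p}_{B_2}(\lambda) = \frac{0 + \lambda(\nu_1 - \nu_2)}{1} = \lambda \\
&a_1 = -1,\qquad a_2 = +1 \\
&\lambda_{1,2} = \frac{\hat{p}_{B_1}(0) - \hat{p}_{B_2}(0)}{a_2 - a_1} + 0 = \frac{1 - 0}{1 - (-1)} = \frac{1}{2} \\
&\hat{p}_{B_1}(1/2) = \hat{p}_{B_2}(1/2) = \frac{1}{2}
\end{align*}
```

The two bins therefore merge at λ = 1/2 with the common value 1/2, which is the frequency of positives that PAVA would assign to the pooled bin.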

Algorithm 1.

The modified pool adjacent violators algorithm (mPAVA) that yields a set of near-isotonic-regression-based calibration models M1, …, MT
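
The path algorithm described in this section can be sketched in a few lines. The version below is a simplified illustration, not the O(N logN) implementation: it rescans all bins at every breakpoint, so it costs O(N²) in the worst case. It assumes that the labels are sorted by classifier score and that each bin's slope is (νi−1 − νi)/|Bi|. The function names and the tolerance `tol` are hypothetical.

```python
import math

def mpava_path(z, tol=1e-12):
    """Return [(lambda, bin_values, bin_sizes), ...] at the path breakpoints."""
    vals = [float(v) for v in z]   # current estimate of each bin
    sizes = [1] * len(vals)        # number of instances in each bin
    lam = 0.0
    path = [(lam, list(vals), list(sizes))]   # saturated fit at lambda = 0
    while len(vals) > 1:
        k = len(vals)
        # nu[j] flags a ranking violation between bins j-1 and j; nu[0] = nu[k] = 0.
        nu = ([0.0]
              + [1.0 if vals[j - 1] > vals[j] + tol else 0.0 for j in range(1, k)]
              + [0.0])
        # Slope of each bin's estimate with respect to lambda.
        slopes = [(nu[b] - nu[b + 1]) / sizes[b] for b in range(k)]
        # Value of lambda at which each pair of adjacent bins would meet.
        times = []
        for b in range(k - 1):
            denom = slopes[b] - slopes[b + 1]
            if abs(denom) <= tol:
                times.append(math.inf)   # parallel paths never meet
            else:
                times.append(lam + max((vals[b + 1] - vals[b]) / denom, 0.0))
        lam_next = min(times)
        if math.isinf(lam_next):
            break   # no further merges: the current fit is the isotonic solution
        # Move every bin linearly to the next breakpoint.
        vals = [v + a * (lam_next - lam) for v, a in zip(vals, slopes)]
        lam = lam_next
        # Group every run of adjacent bins that meet at this breakpoint.
        groups = [[0]]
        for b in range(1, k):
            if times[b - 1] <= lam + tol:
                groups[-1].append(b)
            else:
                groups.append([b])
        new_sizes = [sum(sizes[b] for b in g) for g in groups]
        vals = [sum(vals[b] * sizes[b] for b in g) / n
                for g, n in zip(groups, new_sizes)]
        sizes = new_sizes
        path.append((lam, list(vals), list(sizes)))
    return path

def expand(vals, sizes):
    """Repeat each bin value once per instance in the bin."""
    return [v for v, n in zip(vals, sizes) for _ in range(n)]
```

Each tuple in the returned list plays the role of one binning model Mi. Adjacent bins that start with equal values and would otherwise cross are merged at λ = 0, so the first few entries may share the same λ.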

4. Empirical Results

This section describes the set of experiments that we performed to evaluate the performance of ENIR in comparison to Isotonic Regression based Calibration (IsoRegC) (Zadrozny and Elkan, 2002), and a recently introduced binary classifier calibration method called BBQ (Pakdaman Naeini et al., 2015b). We use IsoRegC because it is one of the most commonly used calibration models showing promising performance on real world applications (Niculescu-Mizil and Caruana, 2005; Zadrozny and Elkan, 2002). Moreover, ENIR is an extension of IsoRegC, and we are interested in evaluating whether it performs better than IsoRegC. We also include BBQ as a state-of-the-art binary classifier calibration model, which is a Bayesian extension of the simple histogram binning model (Pakdaman Naeini et al., 2015b). We did not include Platt’s method since it is a simple and restricted parametric model and there are prior works showing that IsoRegC and BBQ outperform Platt’s method (Niculescu-Mizil and Caruana, 2005; Zadrozny and Elkan, 2002; Pakdaman Naeini et al., 2015b). We also did not include the ACP method since it requires not only probabilistic predictions, but also a statistical confidence interval (CI) around each of those predictions, which makes it tailored to specific classifiers, such as LR (Jiang et al., 2012); this is counter to our goal of developing post-processing methods that can be used with any existing classification models. Finally, we did not include ABB in our experiments mainly because it is not computationally tractable for real datasets that have more than a couple of thousand instances. Moreover, even for small datasets, we have observed that ABB performs similarly to BBQ.

4.1. Evaluation Measures

In order to evaluate the performance of the calibration models, we use 5 different evaluation measures. We use Accuracy (ACC) and area under ROC curve (AUC) to evaluate how well the methods discriminate the positive and negative instances in the feature space. We also utilize three measures of calibration, namely, root mean square error (RMSE)6, maximum calibration error (MCE), and expected calibration error (ECE) (Pakdaman Naeini et al., 2015b; Pakdaman Naeini et al., 2015a). MCE and ECE are two simple statistics of the reliability curve (Figure 1 shows a hypothetical example of such curve) computed by partitioning the output space of the binary classifier, which is the interval [0, 1], into K fixed number of bins (K = 10 in our experiments). The estimated probability for each instance will be located in one of the bins. For each bin we can define the associated calibration error as the absolute difference between the expected value of predictions and the actual observed frequency of positive instances. The MCE calculates the maximum calibration error among the bins, and ECE calculates expected calibration error over the bins, using empirical estimates as follows:

$$
\mathrm{MCE} = \max_{k \in \{1, \dots, K\}} \left| o_k - e_k \right|, \qquad
\mathrm{ECE} = \sum_{k=1}^{K} P(k) \, \left| o_k - e_k \right|
$$

where P(k) is the empirical probability or the fraction of all instances that fall into bin k, ek is the mean of the estimated probabilities for the instances in bin k, and ok is the observed fraction of positive instances in bin k. The lower the values of MCE and ECE, the better is the calibration of a model.
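
The two statistics can be computed with a short sketch. It assumes K equal-width bins on [0, 1], which is our reading of the "fixed number of bins" above, and it skips empty bins; the function name is hypothetical.

```python
import numpy as np

def calibration_errors(probs, labels, n_bins=10):
    """Return (ECE, MCE) using equal-width bins on the interval [0, 1]."""
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels, dtype=float)
    # Bin index of each prediction; a prediction of exactly 1.0 goes to the last bin.
    idx = np.minimum((probs * n_bins).astype(int), n_bins - 1)
    ece, mce = 0.0, 0.0
    for k in range(n_bins):
        mask = idx == k
        if not mask.any():
            continue   # empty bins contribute nothing
        # |o_k - e_k|: observed positive fraction versus mean predicted probability.
        gap = abs(labels[mask].mean() - probs[mask].mean())
        ece += mask.mean() * gap   # mask.mean() is P(k)
        mce = max(mce, gap)
    return float(ece), float(mce)
```

For example, predictions (0.25, 0.25, 0.25, 0.75) with labels (0, 0, 1, 1) occupy two bins with gaps of 1/12 and 1/4, giving ECE = 0.125 and MCE = 0.25 when K = 10.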

4.2. Simulated Data

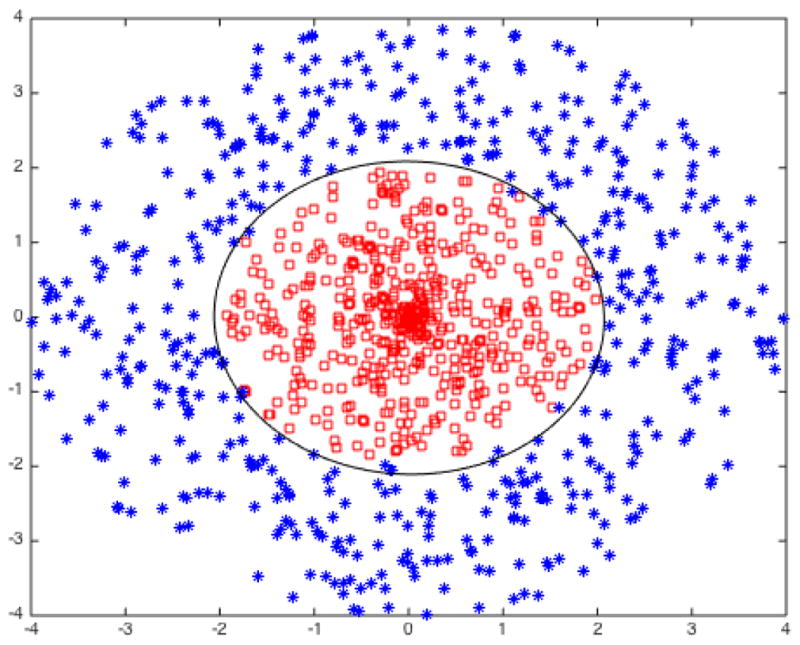

For the simulated data experiments, we used a binary classification dataset in which the outcomes were not linearly separable. The scatter plot of the simulated dataset is shown in Figure 3. We developed this classification problem to illustrate how IsoRegC can suffer from a violation of the isotonicity assumption, and to compare the performance of IsoRegC with our new calibration method that assumes approximate isotonicity. In our experiments, the data are divided into 1000 instances for training and calibrating the prediction model, and 1000 instances for testing the models. We report the average results of 10 random runs for the simulated dataset. To conduct the experiments with the simulated data, we used two extreme classifiers: support vector machines (SVM) with linear and quadratic kernels. The choice of SVM with a linear kernel allows us to see how ENIR performs when the classification model makes an oversimplifying (linear) assumption. Also, to achieve good discrimination on the circular configuration data in Figure 3, SVM with a quadratic kernel is a reasonable choice (as is also evidenced qualitatively in Figure 3 and quantitatively in Table 1b). So, the experiment using quadratic kernel SVM allows us to see how well ENIR performs when we use models that should discriminate well.

Fig. 3.

Scatter plot of the simulated data. The two classes of the binary classification task are indicated by the red squares and blue stars. The black oval indicates the decision boundary found using SVM with a quadratic kernel.

Table 1.

Experimental Results on a simulated dataset

(a) SVM Linear Kernel

| | SVM | IsoRegC | BBQ | ENIR |
|---|---|---|---|---|
| AUC | 0.52 | 0.65 | 0.85 | 0.85 |
| ACC | 0.64 | 0.64 | 0.78 | 0.79 |
| RMSE | 0.52 | 0.46 | 0.39 | 0.38 |
| ECE | 0.28 | 0.35 | 0.05 | 0.05 |
| MCE | 0.78 | 0.60 | 0.13 | 0.12 |

(b) SVM Quadratic Kernel

| | SVM | IsoRegC | BBQ | ENIR |
|---|---|---|---|---|
| AUC | 1.00 | 1.00 | 1.00 | 1.00 |
| ACC | 0.99 | 0.99 | 0.99 | 0.99 |
| RMSE | 0.21 | 0.09 | 0.10 | 0.09 |
| ECE | 0.14 | 0.01 | 0.01 | 0.00 |
| MCE | 0.36 | 0.04 | 0.05 | 0.03 |

As seen in Table 1, ENIR generally outperforms IsoRegC on the simulation dataset, especially when the linear SVM method is used as the base learner. This is due to the monotonicity assumption of IsoRegC, which presumes the best calibrated estimates will match the ordering imposed by the base classifier. When we use SVM with a linear kernel, this assumption is violated due to the non-linearity of the data. Consequently, IsoRegC only provides limited improvement of the calibration and discrimination performance of the base classifier. ENIR performs very well in this case since it uses the ranking information of the base classifier, but it is not anchored to it. The violation of the monotonicity assumption can happen in real data as well, especially in large scale data mining problems in which we use simple classification models due to computational constraints. As shown in Table 1b, even when we apply a highly appropriate SVM classifier to classify the instances, for which IsoRegC is expected to perform well (and indeed does so), ENIR performs as well as or better than IsoRegC.

4.3. Real Data

We ran two sets of experiments on 40 randomly selected baseline datasets from the UCI and LibSVM repositories⁷ (Lichman, 2013; Chang and Lin, 2011). Five summary statistics of the size of the datasets and the percentage of the minority class are shown in Table 2. We used three common classifiers, logistic regression (LR), support vector machines (SVM), and naïve Bayes (NB), to evaluate the performance of the proposed calibration method. In both sets of experiments on real data, we used 10 random runs of 10-fold cross validation, and we always used the training data for calibrating the models.

Table 2.

Summary statistics of the size of the real datasets and the percentage of the minority class. Q1 and Q3 denote the first and third quartiles, respectively.

| | Min | Q1 | Median | Q3 | Max |
|---|---|---|---|---|---|
| Size | 42 | 683 | 1861 | 8973 | 581012 |
| Minority class (fraction) | 0.009 | 0.076 | 0.340 | 0.443 | 0.500 |

In the first set of experiments on real data, we were interested in evaluating whether there is experimental support for using ENIR as a post-processing calibration method. Table 3 shows the 95% confidence interval for the mean of the random variable X, which is defined as the percentage of the gain (or loss) of ENIR with respect to the base classifier:

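$$X = \frac{\mathrm{measure}_{\mathrm{ENIR}} - \mathrm{measure}_{\mathrm{method}}}{\mathrm{measure}_{\mathrm{method}}} \qquad (10)$$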

where measure is one of the evaluation measures AUC, ACC, ECE, MCE, or RMSE, and method denotes the base classifier, namely LR, SVM, or NB. For instance, Table 3 shows that post-processing the output of SVM with ENIR yields, at a 95% confidence level, an average improvement in RMSE of between 17.6% and 31%. This could be a significant improvement, depending on the application, particularly since the 95% CI for AUC shows that by using ENIR we are 95% confident of losing no more than 1% of the SVM discrimination power in terms of AUC. (Note, however, that this CI includes zero, which indicates that there is no statistically significant difference between the performance of SVM and ENIR in terms of AUC.)
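As a small worked example of the quantity X, the sketch below computes the relative change of ENIR with respect to a base classifier and an interval for its mean. Two things are assumptions of this sketch rather than statements from the text: X is read as (ENIR − base) / base, which matches the sign convention of Table 3, and the interval uses a normal approximation, since the exact interval construction is not specified here. The RMSE pairs are made up.

```python
from statistics import NormalDist, mean, stdev


def relative_gain(measure_enir, measure_base):
    """Relative change of ENIR with respect to the base classifier (assumed form of X)."""
    return (measure_enir - measure_base) / measure_base


def mean_confidence_interval(values, level=0.95):
    """Normal-approximation interval for the mean (an assumption of this sketch)."""
    z = NormalDist().inv_cdf(0.5 + level / 2.0)
    half_width = z * stdev(values) / len(values) ** 0.5
    centre = mean(values)
    return centre - half_width, centre + half_width


# Hypothetical (base RMSE, ENIR RMSE) pairs on four datasets.
rmse_pairs = [(0.40, 0.30), (0.50, 0.40), (0.20, 0.15), (0.30, 0.24)]
gains = [relative_gain(enir, base) for base, enir in rmse_pairs]
low, high = mean_confidence_interval(gains)
```

For an error measure such as RMSE, an interval that lies entirely below zero would be read as an improvement, in the same way as the negative entries of Table 3.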

Table 3.

The 95% confidence interval for the average percentage of improvement over the base classifiers (LR, SVM, NB) obtained by using the ENIR method for post-processing. Positive entries for AUC and ACC mean that ENIR on average provides better discrimination than the base classifiers. Negative entries for RMSE, ECE, and MCE mean that ENIR on average provides better calibration than the base classifiers.

| | LR | SVM | NB |
|---|---|---|---|
| AUC | [−0.008, 0.003] | [−0.010, 0.003] | [−0.010, 0.000] |
| ACC | [0.002, 0.016] | [−0.001, 0.010] | [0.012, 0.068] |
| RMSE | [−0.124, −0.016] | [−0.310, −0.176] | [−0.196, −0.100] |
| ECE | [−0.389, −0.153] | [−0.768, −0.591] | [−0.514, −0.274] |
| MCE | [−0.313, −0.064] | [−0.591, −0.340] | [−0.552, −0.305] |

Overall, the results in Table 3 show that there is not a statistically meaningful difference between the performance of ENIR and the base classifiers in terms of AUC. The results support at a 95% confidence level that ENIR improves the performance of LR and NB in terms of ACC. Furthermore, the results in Table 3 show that by post-processing the output of LR, SVM, and NB using ENIR, we can obtain dramatic improvements in terms of calibration measured by RMSE, ECE, and MCE. For instance, the results indicate that at a 95% confidence level, ENIR improved the average performance of NB in terms of MCE anywhere from 30.5% to 55.2%, which could be practically significant in many decision-making and data mining applications.

In the second set of experiments on real data, we were interested in evaluating the performance of ENIR compared with other calibration methods. To evaluate the performance of the models, we used the statistical test procedure recommended by Demšar (2006). More specifically, we used the non-parametric testing method based on the F_F test statistic (Iman and Davenport, 1980), which is an improved version of the Friedman non-parametric hypothesis testing method (Friedman, 1937), followed by Holm’s step-down procedure (Holm, 1979) to evaluate the performance of ENIR in comparison with the other methods across the 40 baseline datasets.
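The test statistic mentioned above can be sketched as follows, using the formulas given by Demšar (2006): the Friedman statistic is computed from the average ranks of k methods over N datasets, and the Iman and Davenport correction turns it into F_F, which is compared against an F distribution with k − 1 and (k − 1)(N − 1) degrees of freedom. The function names and the small RMSE matrix are hypothetical, and the p-value lookup is left out because it needs an F distribution routine.

```python
def average_ranks(scores, lower_is_better=True):
    """Average rank of each method over datasets; ties share the mean rank."""
    n_methods = len(scores[0])
    rank_sums = [0.0] * n_methods
    for row in scores:
        keyed = [s if lower_is_better else -s for s in row]
        for j, value in enumerate(keyed):
            smaller = sum(1 for other in keyed if other < value)
            equal = sum(1 for other in keyed if other == value)
            rank_sums[j] += smaller + (equal + 1) / 2.0
    return [total / len(scores) for total in rank_sums]


def iman_davenport(avg_ranks, n_datasets):
    """Friedman chi-square and the Iman-Davenport F_F statistic."""
    k = len(avg_ranks)
    chi2 = (12.0 * n_datasets / (k * (k + 1))) * (
        sum(r * r for r in avg_ranks) - k * (k + 1) ** 2 / 4.0
    )
    # Undefined when chi2 equals N(k - 1), i.e. when all datasets agree perfectly.
    f_f = (n_datasets - 1) * chi2 / (n_datasets * (k - 1) - chi2)
    return chi2, f_f


# Hypothetical RMSE of three calibration methods (columns) on four datasets (rows).
rmse = [
    [0.10, 0.12, 0.09],
    [0.20, 0.25, 0.18],
    [0.15, 0.14, 0.13],
    [0.30, 0.33, 0.30],
]
ranks = average_ranks(rmse)
chi2, f_f = iman_davenport(ranks, n_datasets=len(rmse))
```

For measures where larger is better, such as AUC and ACC, `lower_is_better=False` ranks the highest value first.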

Tables 4, 5, and 6 show the performance of ENIR in comparison with IsoRegC and BBQ. In these tables, we show the average rank of each method across the baseline datasets, where boldface indicates the best performing method. The marker */⊛ indicates whether ENIR is statistically superior/inferior to the compared method using the improved Friedman test followed by Holm’s step-down procedure at a 0.05 significance level. For instance, Table 5 shows the performance of the calibration models when we use SVM as the base classifier; the results show that ENIR achieves the best performance in terms of RMSE, with an average rank of 1.675 across the 40 baseline datasets. The result indicates that in terms of RMSE, ENIR is statistically superior to BBQ; however, its performance is not statistically different from that of IsoRegC.
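Holm’s step-down procedure, used for the markers in these tables, can be sketched as below. The p-values are sorted in increasing order and the i-th smallest is compared with α / (m − i + 1); testing stops at the first hypothesis that is retained. The function name and the two p-values are hypothetical and are chosen only to mirror the pattern described for RMSE in Table 5.

```python
def holm_step_down(p_values, alpha=0.05):
    """Return a dict mapping each comparison name to True if it is rejected."""
    ordered = sorted(p_values.items(), key=lambda item: item[1])
    m = len(ordered)
    rejected = {name: False for name in p_values}
    for i, (name, p) in enumerate(ordered):
        if p <= alpha / (m - i):
            rejected[name] = True
        else:
            break  # once one hypothesis is retained, all larger p-values are too
    return rejected


# Hypothetical p-values for ENIR versus each compared method.
decisions = holm_step_down({"IsoRegC": 0.20, "BBQ": 0.004})
```

With these made-up inputs the comparison with BBQ is declared significant (0.004 ≤ 0.05 / 2), while the comparison with IsoRegC is not (0.20 > 0.05).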

Table 4.

Average rank of the calibration methods on the benchmark datasets using LR as the base classifier. Marker */⊛ indicates whether ENIR is statistically superior/inferior to the compared method (using an improved Friedman test followed by Holm’s step-down procedure at a 0.05 significance level).

| | IsoRegC | BBQ | ENIR |
|---|---|---|---|
| AUC | 1.963 | 2.225 | 1.813 |
| ACC | 1.675 | 2.663* | 1.663 |
| RMSE | 1.925* | 2.625* | 1.450 |
| ECE | 2.125 | 1.975 | 1.900 |
| MCE | 2.475* | 1.750 | 1.775 |

Table 5.

Average rank of the calibration methods on the benchmark datasets using SVM as the base classifier. Marker */⊛ indicates whether ENIR is statistically superior/inferior to the compared method (using an improved Friedman test followed by Holm’s step-down procedure at a 0.05 significance level).

| | IsoRegC | BBQ | ENIR |
|---|---|---|---|
| AUC | 1.988 | 2.025 | 1.988 |
| ACC | 2.000 | 2.150 | 1.850 |
| RMSE | 1.850 | 2.475* | 1.675 |
| ECE | 2.075 | 2.025 | 1.900 |
| MCE | 2.550* | 1.625 | 1.825 |

Table 6.

Average rank of the calibration methods on the benchmark datasets using NB as the base classifier. Marker */⊛ indicates whether ENIR is statistically superior/inferior to the compared method (using an improved Friedman test followed by Holm’s step-down procedure at a 0.05 significance level).

| | IsoRegC | BBQ | ENIR |
|---|---|---|---|
| AUC | 2.150 | 1.925 | 1.925 |
| ACC | 1.963 | 2.375* | 1.663 |
| RMSE | 2.200* | 2.375* | 1.425 |
| ECE | 2.475* | 2.075* | 1.450 |
| MCE | 2.563* | 1.850 | 1.588 |

Table 4 shows the results of comparison when we use LR as the base classifier. As shown, the performance of ENIR is always superior to BBQ and IsoRegC except for MCE in which BBQ is superior to ENIR; however, this difference is not statistically significant. The results show that in terms of discrimination based on AUC, there is not a statistically significant difference between the performance of ENIR compared with BBQ and IsoRegC. However, ENIR performs statistically better than BBQ in terms of ACC. In terms of calibration measures, ENIR is statistically superior to both IsoRegC and BBQ in terms of RMSE. In terms of MCE, ENIR is statistically superior to IsoRegC.

Table 5 shows the results when we use SVM as the base classifier. As shown, ENIR ranks at least as well as BBQ and IsoRegC on every measure (tying with IsoRegC on AUC) except MCE, for which BBQ performs better than ENIR; however, the difference for MCE is not statistically significant. The results show that although ENIR ranks at least as well as IsoRegC and BBQ in terms of the discrimination measures AUC and ACC, the difference is not statistically significant. In terms of calibration measures, ENIR is statistically superior to BBQ in terms of RMSE, and it is statistically superior to IsoRegC in terms of MCE.

Table 6 shows the results of the comparison when we use NB as the base classifier. As shown, ENIR ranks at least as well as BBQ and IsoRegC on every measure (tying with BBQ on AUC). In terms of discrimination, for AUC there is not a statistically significant difference between the performance of ENIR and that of BBQ or IsoRegC; however, in terms of ACC, ENIR is statistically superior to BBQ. In terms of calibration measures, ENIR is always statistically superior to IsoRegC. ENIR is also statistically superior to BBQ in terms of ECE and RMSE.

Overall, in terms of discrimination measured by AUC and ACC, the results show that the proposed calibration method either outperforms IsoRegC and BBQ, or has a performance that is not statistically significantly different. In terms of calibration measured by ECE, MCE, and RMSE, ENIR either outperforms other calibration methods, or it has a statistically equivalent performance to IsoRegC and BBQ.

Finally, Table 7 shows a summary of the time complexity of different binary classifier calibration methods in learning for N training instances and the test time for only one instance.

Table 7.

Note that N and B are the size of the training set and the number of bins found by the method, respectively. T is the number of iterations required for convergence of the Platt method, and M is the total number of models used in the associated ensemble model.

| | Training Time | Testing Time |
|---|---|---|
| Platt | O(NT) | O(1) |
| Hist | O(N logN) | O(logB) |
| IsoRegC | O(N logN) | O(logB) |
| ACP | O(N logN) | O(N) |
| ABB | O(N²) | O(N²) |
| BBQ | O(N logN) | O(M logN) |
| ENIR | O(N logN) | O(M logB) |
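The O(logB) and O(M logB) test times in Table 7 follow from a binary search over sorted bin boundaries. The sketch below illustrates this for a piecewise constant mapping and for a weighted ensemble of such mappings. The function names, bin boundaries, bin values, and weights are all hypothetical; in particular, the weights stand in for whatever model scores an ensemble method would supply.

```python
from bisect import bisect_right


def calibrate_piecewise_constant(score, boundaries, bin_values):
    """Look up the calibrated probability of `score` in O(log B).

    `boundaries` holds the B - 1 sorted cut points between adjacent bins and
    `bin_values` holds the B calibrated probabilities, one per bin.
    """
    return bin_values[bisect_right(boundaries, score)]


def ensemble_estimate(score, models, weights):
    """Weighted average over M binning models, hence O(M log B) per instance."""
    total = sum(weights)
    return sum(
        w * calibrate_piecewise_constant(score, b, v)
        for w, (b, v) in zip(weights, models)
    ) / total


# Two hypothetical models with hand-picked bins and weights.
models = [([0.5], [0.2, 0.8]), ([0.3, 0.7], [0.1, 0.5, 0.9])]
weights = [0.25, 0.75]
estimate = ensemble_estimate(0.6, models, weights)
```

Here the score 0.6 falls in the second bin of both models, so the estimate is 0.25 × 0.8 + 0.75 × 0.5 = 0.575.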

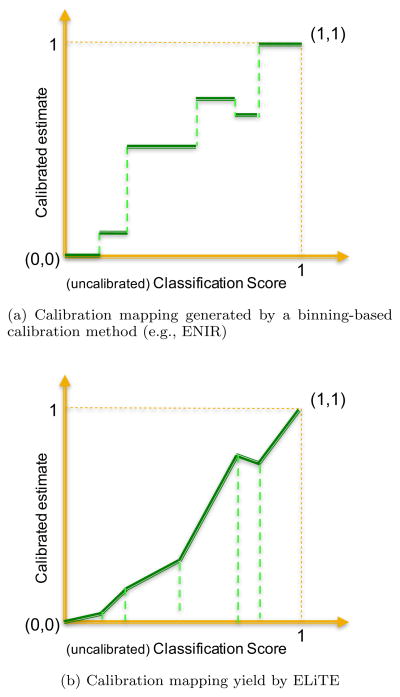

5. An Extension to ENIR

This section briefly describes an extension to the ENIR model called the Ensemble of Linear Trend Estimation (ELiTE) (Pakdaman Naeini and Cooper, 2016a). Figure 4 shows the main idea behind ELiTE. As shown, in all of the histogram binning-based calibration models (including quantile binning, i.e., equal frequency histogram binning; IsoRegC; Bayesian extensions to histogram binning such as BBQ and ABB; and also ENIR) the generated mapping function is a piecewise constant function. The main idea of ELiTE is to extend ENIR and other binning-based calibration methods by using an ensemble of piecewise linear functions⁸, as shown in Figure 4b.

Fig. 4.

The figure shows how ELiTE extends binning-based calibration methods (e.g., ENIR) by using a piecewise linear calibration mapping instead of a piecewise constant mapping.

Recall that z_i and y_i are the true class of the i’th training instance and its corresponding classification score, respectively. Without loss of generality, we assume the training instances to be sorted in increasing order of their associated classification scores y_i. Borrowing the term “bin” from the histogram binning literature, we define each bin as the largest interval over the training data with a uniform slope of change (e.g., Figure 4b indicates that there are 6 bins in the calibration mapping). ELiTE uses the ℓ1 (linear) trend filtering signal approximation method (Kim, Koh, Boyd and Gorinevsky, 2009) and poses the problem of finding a piecewise linear calibration mapping as the following optimization program:

| (11) |

where p̂ = (p̂_1, …, p̂_N) is the vector of calibrated probability estimates and the vector v ∈ ℝ^(N−2) is the second-order finite difference vector associated with the training data⁹. Also, λ is a penalization parameter that regulates the trade-off between the goodness of fit and the complexity of the model by penalizing the total variation in the slope of the resulting calibration mapping. Kim et al. (2009) used the shrinkage property of the ℓ1 norm to show that the solution p̂ of the above optimization program is a continuous piecewise linear function whose kink points occur at training data points.
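The role of v can be illustrated with a short sketch that computes the change in slope at each interior training point and counts the resulting bins. The exact definition of v used by ELiTE is not reproduced here; this version, which divides by the gaps between consecutive scores, is an assumption chosen so that an entry is zero exactly when the slope does not change. The function names and the six toy points are hypothetical, and the scores are assumed to be distinct.

```python
def slope_changes(scores, probs):
    """Change in slope at each interior training point (length N - 2).

    Assumes `scores` is sorted and contains no repeated values.
    """
    slopes = [
        (probs[i + 1] - probs[i]) / (scores[i + 1] - scores[i])
        for i in range(len(scores) - 1)
    ]
    return [slopes[i + 1] - slopes[i] for i in range(len(slopes) - 1)]


def count_bins(v, tol=1e-9):
    """Number of maximal constant-slope intervals: one more than the kinks."""
    return 1 + sum(1 for change in v if abs(change) > tol)


# Hypothetical sorted scores and a piecewise linear mapping with two kinks.
scores = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]
probs = [0.0, 0.1, 0.2, 0.4, 0.6, 0.7]
v = slope_changes(scores, probs)
l1_penalty = sum(abs(change) for change in v)  # the quantity scaled by lambda
n_bins = count_bins(v)
```

In this toy mapping the slope goes from 1 to 2 and back to 1, so v has two non-zero entries and the mapping has three bins. A larger λ pushes more entries of v to zero and therefore yields fewer bins.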

ELiTE uses a specialized ADMM algorithm proposed by Ramdas and Tibshirani (2016) together with a warm-start procedure that iterates over values of λ equally spaced in log space from λmax to λmax × 10⁻⁴. Here λmax is the value of λ that gives the best affine approximation of the calibration mapping; it can be computed in O(N) time, where N is the number of training instances (Kim et al., 2009). ELiTE uses the Akaike information criterion with a correction for finite sample sizes (AICc) (Cavanaugh, 1997) to score each of the piecewise linear calibration models associated with the various values of λ. Finally, for a new test instance, ELiTE uses the AICc scores to perform selective Bayesian averaging and compute the final calibrated estimate (Pakdaman Naeini and Cooper, 2016a).
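The regularization path and the scoring step can be sketched as follows. The grid spans four decades below λmax as described above, but the number of grid points is an assumption. The AICc formula is the standard one (AIC plus the finite-sample correction). The weighting by exp(−AICc / 2) is a common way to turn information criterion scores into model weights and is used here only for illustration; the selection rule and weights in ELiTE may differ. All function names are hypothetical.

```python
import math


def lambda_grid(lambda_max, n_values=20, decades=4):
    """Values equally spaced in log space from lambda_max down to lambda_max * 10**-decades."""
    step = decades / (n_values - 1)
    return [lambda_max * 10.0 ** (-step * i) for i in range(n_values)]


def aicc(log_likelihood, n_params, n_samples):
    """AIC plus the finite-sample correction term."""
    aic = 2.0 * n_params - 2.0 * log_likelihood
    return aic + 2.0 * n_params * (n_params + 1) / (n_samples - n_params - 1)


def relative_weights(scores):
    """Weights proportional to exp(-score / 2), normalised to sum to one."""
    best = min(scores)  # subtracting the minimum avoids underflow
    raw = [math.exp(-(s - best) / 2.0) for s in scores]
    total = sum(raw)
    return [r / total for r in raw]


grid = lambda_grid(5.0, n_values=5)
# Hypothetical (log-likelihood, number of parameters) pairs for three models.
scores = [aicc(ll, k, n_samples=100) for ll, k in [(-50.0, 3), (-48.0, 6), (-47.5, 12)]]
weights = relative_weights(scores)
```

Iterating from the largest λ downwards suits a warm start, because each solution is a natural initial point for the next, slightly less regularized problem.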

Similar to ENIR, ELiTE has been shown to outperform commonly used binary classifier calibration methods, including Platt’s method, isotonic regression, and BBQ (Pakdaman Naeini and Cooper, 2016a). In this section, we compare the performance of these two new calibration methods in terms of their discrimination and calibration capability. Table 8 shows the results of a comparison between ENIR and ELiTE over the 40 real datasets used in our previous experiments. A Wilcoxon signed rank test is used to assess the statistical significance of the performance difference between ELiTE and ENIR. The median difference in performance between ELiTE and ENIR over the baseline datasets is reported along with the corresponding p-value of the test, which is given in parentheses. The results show that there are some cases in which ELiTE is statistically superior to ENIR. However, ELiTE runs more than eight times slower than ENIR on a MacBook Pro with a 2.5 GHz Intel Core i7 CPU and 16 GB of RAM, even though its running time complexity is O(N logN) (Ramdas and Tibshirani, 2016; Pakdaman Naeini and Cooper, 2016a). Also, note that the median of the difference between the performance of ELiTE and ENIR is always very small (i.e., less than 0.01 in all cases).
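For readers unfamiliar with the test, the following is a minimal sketch of a two-sided Wilcoxon signed rank test on paired differences. It uses the normal approximation without tie or continuity corrections, which is a simplification and may differ from the routine used to produce Table 8. The function name and the ten differences are hypothetical.

```python
from statistics import NormalDist


def wilcoxon_signed_rank(differences):
    """Two-sided Wilcoxon signed rank test using the normal approximation.

    Zero differences are dropped and tied magnitudes share their mean rank.
    No tie or continuity correction is applied to the variance.
    """
    nonzero = [d for d in differences if d != 0]
    n = len(nonzero)
    magnitudes = sorted(abs(d) for d in nonzero)
    # Mean rank for each distinct magnitude.
    rank_of = {}
    i = 0
    while i < n:
        j = i
        while j < n and magnitudes[j] == magnitudes[i]:
            j += 1
        rank_of[magnitudes[i]] = (i + 1 + j) / 2.0
        i = j
    w_plus = sum(rank_of[abs(d)] for d in nonzero if d > 0)
    mean_w = n * (n + 1) / 4.0
    sd_w = (n * (n + 1) * (2 * n + 1) / 24.0) ** 0.5
    z = (w_plus - mean_w) / sd_w
    p_value = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    return w_plus, p_value


# Hypothetical per-dataset differences in AUC between two calibration methods.
differences = [0.003, 0.002, -0.001, 0.004, 0.005, 0.0, 0.006, -0.002, 0.007, 0.008]
w_plus, p_value = wilcoxon_signed_rank(differences)
```

The normal approximation is rough for very small samples; with the 40 datasets used here it is more reasonable, although an exact or tie-corrected test would be preferable in practice.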

Table 8.

Median of the difference between the performance of ELiTE and ENIR on the 40 baseline datasets. Boldface indicates the differences that are statistically significant, based on a Wilcoxon signed rank test at a 0.05 significance level. The numbers inside parentheses indicate the corresponding p-value of the test. The bottom row of the table shows the overall Running Time (RT) of ENIR versus ELiTE in minutes over the 10-fold cross validation experiments on the real datasets, using a single core of a MacBook Pro with a 2.5 GHz Intel Core i7 CPU and 16 GB of RAM.

| | LR | SVM | NB |
|---|---|---|---|
| AUC | 0.003 (0.001) | 0.002 (0.009) | 0.002 (0.001) |
| ACC | 0.001 (0.032) | 0.001 (0.348) | 0.001 (0.806) |
| RMSE | −0.001 (0.018) | −0.001 (0.105) | −0.001 (0.0619) |
| ECE | −0.001 (0.055) | −0.001 (0.077) | −0.001 (0.295) |
| MCE | −0.009 (0.001) | −0.005 (0.001) | −0.006 (0.001) |
| Run time (ENIR/ELiTE, minutes) | 226/1808 | 225/2082 | 235/2056 |

6. Conclusion

In this paper, we presented a new non-parametric binary classifier calibration method called ensemble of near isotonic regression (ENIR) to build accurate probabilistic prediction models. The method generalizes the isotonic regression-based calibration method (IsoRegC) (Zadrozny and Elkan, 2002) in two ways. First, ENIR makes a more realistic assumption than IsoRegC by assuming that the transformation from the uncalibrated output of a classifier to calibrated probability estimates is approximately (but not necessarily exactly) a monotonic function. Second, ENIR is an ensemble model that uses the BIC scoring function to perform selective model averaging over a set of near isotonic regression models that includes IsoRegC as an extreme member. The method is computationally tractable, as it runs in O(N logN) time for N training instances. It can be used to calibrate many different types of binary classifiers, including logistic regression, support vector machines, naïve Bayes, and others. Our experiments show that by post-processing the output of classifiers using ENIR, we can obtain large calibration improvements in terms of RMSE, ECE, and MCE, without losing any statistically meaningful discrimination performance. Moreover, our experimental evaluation on a broad range of real datasets showed that ENIR outperforms IsoRegC and BBQ (i.e., a state-of-the-art binary classifier calibration method (Pakdaman Naeini et al., 2015b)).

We also evaluated the performance of ENIR in comparison to a newly introduced binary classifier calibration method called ELiTE¹⁰. Our experiments show that even though ENIR is slightly inferior to ELiTE in terms of the AUC and MCE measures, it runs overall (training plus testing time) at least eight times faster than ELiTE over all of our experimental datasets. Note that it is possible to combine the near-isotonicity constraints and the piecewise-linear constraints to build a calibration mapping that is both near-isotonic and piecewise linear. This can be done by adding the near-isotonic constraint to the ADMM constrained optimization program of ELiTE (Ramdas and Tibshirani, 2016; Pakdaman Naeini and Cooper, 2016a). We leave this extension as future work.

An important advantage of ENIR over Bayesian binning models (e.g., BBQ and ABB) is that it can be extended to multi-class and multi-label calibration models, similar to what has been done for the standard IsoRegC method (Zadrozny and Elkan, 2002). This is an area of our current research. We also plan to investigate theoretical properties of ENIR. In particular, we are interested in investigating theoretical guarantees regarding the discrimination and calibration performance of these calibration methods, similar to what has been proved for the AUC guarantees of IsoRegC (Fawcett and Niculescu-Mizil, 2007).

Acknowledgments

We thank anonymous reviewers for their very useful comments and suggestions. Research reported in this publication was supported by grant U54HG008540 awarded by the National Human Genome Research Institute through funds provided by the trans-NIH Big Data to Knowledge (BD2K) initiative. It was also supported in part by NIH grants R01GM088224 and R01LM012095. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH. This research was also supported by grant #4100070287 from the Pennsylvania Department of Health. The Department specifically disclaims responsibility for any analyses, interpretations, or conclusions.

Biographies

Gregory F. Cooper, M.D., Ph.D. is Professor of Biomedical Informatics and of Intelligent Systems at the University of Pittsburgh. His research focuses on the development and application of methods for probabilistic modeling, machine learning, Bayesian statistics, and artificial intelligence to address biomedical informatics problems. He is Director of the Center for Causal Discovery, which is an NIH-funded Big Data to Knowledge (BD2K) Center of Excellence. He is an elected member of the American College of Medical Informatics and of the Association for the Advancement of Artificial Intelligence.

Mahdi Pakdaman Naeini, Ph.D. is a postdoctoral fellow at the Paulson School of Engineering and Applied Sciences and the Department of Biomedical Informatics at Harvard University. He received his Ph.D. from the University of Pittsburgh in 2016. His research focuses on statistical machine learning, causal discovery, and their applications in biomedical informatics.

Footnotes

1. Note that the running time for the test instance can be reduced to O(1) in any post-processing calibration model by using a simple caching technique that reduces calibration precision in order to decrease calibration time (Pakdaman Naeini et al., 2015a).

2. For classifiers that output scores that are not in the unit interval (e.g., SVM), we use a simple sigmoid transformation to map the scores into the unit interval.

3. Note that we exclude the highly overfitted model that corresponds to λ = 0 from the set of models in ENIR.

4. Note that, as recommended in (Tibshirani et al., 2011), we use the expected degrees of freedom of the nearly isotonic regression models, which is equivalent to the number of bins, as the number of parameters in the BIC scoring function.

5. Note that there could be more than one bin achieving the minimum in Equation 9, so they should all be merged with the bins that are located next to them.

6. Note that, to be more precise, RMSE evaluates both calibration and refinement of the predicted probabilities. Refinement accounts for the usefulness of the probabilities by favoring those that are close to either 0 or 1 (DeGroot and Fienberg, 1983; Cohen and Goldszmidt, 2004).

7. The datasets used were as follows: spect, adult, breast, pageblocks, pendigits, ad, mamography, satimage, australian, code rna, colon cancer, covtype, letter unbalanced, letter balanced, diabetes, duke, fourclass, german numer, gisette scale, heart, ijcnn1, ionosphere scale, liver disorders, mushrooms, sonar scale, splice, svmguide1, svmguide3, coil2000, balance, breast cancer, leu, w1a, thyroid sick, scene, uscrime, solar, car34, car4, protein homology.

8. It is possible to generalize ELiTE to obtain piecewise polynomial calibration functions; however, we have observed inferior results when using piecewise polynomials of degree higher than 1, and we hypothesize that this is because of overfitting to the training data.

9. Note that an element of v is zero if and only if there is no change in the slope between two successively predicted points.

10. An R implementation of ENIR and ELiTE can be found at the following address: https://github.com/pakdaman/calibration.git

References

- Bahnsen AC, Stojanovic A, Aouada D, Ottersten B. Improving credit card fraud detection with calibrated probabilities. Proceedings of the 2014 SIAM International Conference on Data Mining. 2014.

- Barlow RE, Bartholomew DJ, Bremner J, Brunk HD. Statistical Inference Under Order Restrictions: Theory and Application of Isotonic Regression. Wiley; New York: 1972.

- Bella A, Ferri C, Hernández-Orallo J, Ramírez-Quintana MJ. On the effect of calibration in classifier combination. Applied Intelligence. 2013;38(4):566–585.

- Cavanaugh JE. Unifying the derivations for the Akaike and corrected Akaike information criteria. Statistics & Probability Letters. 1997;33(2):201–208.

- Chang CC, Lin CJ. LIBSVM: A library for support vector machines. ACM Transactions on Intelligent Systems and Technology (TIST). 2011;2(3):27.

- Cohen I, Goldszmidt M. Properties and benefits of calibrated classifiers. Proceedings of the European Conference on Principles of Data Mining and Knowledge Discovery; Springer; 2004. pp. 125–136.

- DeGroot M, Fienberg S. The comparison and evaluation of forecasters. The Statistician. 1983:12–22.

- Demšar J. Statistical comparisons of classifiers over multiple data sets. The Journal of Machine Learning Research. 2006;7:1–30.

- Dong X, Gabrilovich E, Heitz G, Horn W, Lao N, Murphy K, Strohmann T, Sun S, Zhang W. Knowledge vault: A web-scale approach to probabilistic knowledge fusion. Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining; ACM; 2014. pp. 601–610.

- Fawcett T, Niculescu-Mizil A. PAV and the ROC convex hull. Machine Learning. 2007;68(1):97–106.

- Friedman M. The use of ranks to avoid the assumption of normality implicit in the analysis of variance. Journal of the American Statistical Association. 1937;32(200):675–701.

- Gill PE, Murray W, Wright MH. Practical Optimization. Academic Press; London: 1981.

- Gronat P, Obozinski G, Sivic J, Pajdla T. Learning and calibrating per-location classifiers for visual place recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; 2013. pp. 907–914.

- Hashemi HB, Yazdani N, Shakery A, Naeini MP. Application of ensemble models in web ranking. Proceedings of 5th International Symposium on Telecommunications (IST); IEEE; 2010. pp. 726–731.

- Heckerman D, Geiger D, Chickering D. Learning Bayesian networks: The combination of knowledge and statistical data. Machine Learning. 1995;20(3):197–243.

- Hoeting JA, Madigan D, Raftery AE, Volinsky CT. Bayesian model averaging: a tutorial. Statistical Science. 1999:382–401.

- Holm S. A simple sequentially rejective multiple test procedure. Scandinavian Journal of Statistics. 1979:65–70.

- Iman RL, Davenport JM. Approximations of the critical region of the Friedman statistic. Communications in Statistics-Theory and Methods. 1980;9(6):571–595.

- Jiang L, Zhang H, Su J. Learning k-nearest neighbor naïve Bayes for ranking. Proceedings of the Advanced Data Mining and Applications; Springer; 2005. pp. 175–185.

- Jiang X, Osl M, Kim J, Ohno-Machado L. Calibrating predictive model estimates to support personalized medicine. Journal of the American Medical Informatics Association. 2012;19(2):263–274. doi: 10.1136/amiajnl-2011-000291.

- Kim SJ, Koh K, Boyd S, Gorinevsky D. ℓ1 trend filtering. SIAM Review. 2009;51(2):339–360.

- Lichman M. UCI machine learning repository. 2013. http://archive.ics.uci.edu/ml.

- Menon A, Jiang X, Vembu S, Elkan C, Ohno-Machado L. Predicting accurate probabilities with a ranking loss. Proceedings of the International Conference on Machine Learning; 2012. pp. 703–710.

- Niculescu-Mizil A, Caruana R. Predicting good probabilities with supervised learning. Proceedings of the International Conference on Machine Learning; 2005. pp. 625–632.

- Pakdaman Naeini M, Cooper GF. Binary classifier calibration using an ensemble of linear trend estimation. Proceedings of the 2016 SIAM International Conference on Data Mining; SIAM; 2016a. pp. 261–269.

- Pakdaman Naeini M, Cooper GF. Binary classifier calibration using an ensemble of near isotonic regression models. Data Mining (ICDM), 2016 IEEE 16th International Conference on; IEEE; 2016b. pp. 360–369.

- Pakdaman Naeini M, Cooper GF, Hauskrecht M. Binary classifier calibration using a Bayesian non-parametric approach. Proceedings of the SIAM Data Mining (SDM) Conference; 2015a.

- Pakdaman Naeini M, Cooper G, Hauskrecht M. Obtaining well calibrated probabilities using Bayesian binning. Proceedings of Twenty-Ninth AAAI Conference on Artificial Intelligence; 2015b.

- Platt JC. Probabilistic outputs for support vector machines and comparisons to regularized likelihood methods. Advances in Large Margin Classifiers. 1999;10(3):61–74.

- Ramdas A, Tibshirani RJ. Fast and flexible ADMM algorithms for trend filtering. Journal of Computational and Graphical Statistics. 2016;25(3):839–858.

- Robnik-Šikonja M, Kononenko I. Explaining classifications for individual instances. IEEE Transactions on Knowledge and Data Engineering. 2008;20(5):589–600.

- Russell S, Norvig P. Artificial Intelligence: a Modern Approach. Prentice Hall; Englewood Cliffs: 2010.

- Schwarz G, et al. Estimating the dimension of a model. The Annals of Statistics. 1978;6(2):461–464.

- Takahashi K, Takamura H, Okumura M. Direct estimation of class membership probabilities for multiclass classification using multiple scores. Knowledge and Information Systems. 2009;19(2):185–210.

- Tibshirani RJ, Hoefling H, Tibshirani R. Nearly-isotonic regression. Technometrics. 2011;53(1):54–61.

- Wallace BC, Dahabreh IJ. Improving class probability estimates for imbalanced data. Knowledge and Information Systems. 2014;41(1):33–52.

- Whalen S, Pandey G. A comparative analysis of ensemble classifiers: case studies in genomics. Data Mining (ICDM), 2013 IEEE 13th International Conference on; IEEE; 2013. pp. 807–816.

- Zadrozny B, Elkan C. Learning and making decisions when costs and probabilities are both unknown. Proceedings of the Seventh ACM SIGKDD International Conference on Knowledge Discovery and Data Mining; ACM; 2001a. pp. 204–213.

- Zadrozny B, Elkan C. Obtaining calibrated probability estimates from decision trees and naïve Bayesian classifiers. Proceedings of the International Conference on Machine Learning; 2001b. pp. 609–616.

- Zadrozny B, Elkan C. Transforming classifier scores into accurate multiclass probability estimates. Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining; 2002. pp. 694–699.

- Zhang H, Su J. Naïve Bayesian classifiers for ranking. Proceedings of the European Conference on Machine Learning (ECML); Springer; 2004. pp. 501–512.

- Zhong LW, Kwok JT. Accurate probability calibration for multiple classifiers. Proceedings of the Twenty-Third International Joint Conference on Artificial Intelligence; AAAI Press; 2013. pp. 1939–1945.