Highlights

- Infants use intersensory redundancy provided by social touch to learn auditory patterns.
- There is wide variation in the frequency of different patterns of touch from caregivers.
- Less frequent patterns of touch may be more likely to enhance attention and learning.
- The findings suggest that infants track patterns of touch in naturalistic input from caregivers.

Keywords: Social touch, Auditory learning, Statistical learning, Intersensory redundancy

Abstract

Infants’ experiences are defined by the presence of concurrent streams of perceptual information in social environments. Touch from caregivers is an especially pervasive feature of early development. Using three lab experiments and a corpus of naturalistic caregiver-infant interactions, we examined the relevance of touch in supporting infants’ learning of structure in an altogether different modality: audition. In each experiment, infants listened to sequences of sine-wave tones following the same abstract pattern (e.g., ABA or ABB) while receiving time-locked touch sequences from an experimenter that provided either informative or uninformative cues to the pattern (e.g., knee-elbow-knee or knee-elbow-elbow). Results showed that intersensorily redundant touch supported infants’ learning of tone patterns, but learning varied depending on the typicality of touch sequences in infants’ lives. These findings suggest that infants track touch sequences from moment to moment and in aggregate from their caregivers, and use the intersensory redundancy provided by touch to discover patterns in their environment.

1. Introduction

Infants learn in environments filled with social-communicative signals. The pervasiveness of other human beings in the lives of infants has been described as a feature of infancy itself: “There is no such thing as a baby – meaning that if you set out to describe a baby, you will find you are describing a baby and someone” (Winnicott, 1964, p. 88). Given this human presence, infants are exposed to constant streams of interconnected signals from these humans, including speech, manual gesture, eye gaze, and – most notable for the investigation here – touch (Abu-Zhaya et al., 2016, Frith and Frith, 2007). Although there may be differences across cultures in the extent to which these signals are directed to infants vs. merely observable in infants’ perceptual environments (Pye, 1986, Schieffelin and Ochs, 1986, Shneidman and Goldin-Meadow, 2012), every culture offers extensive opportunities for infants to learn in the context of social-communicative signals (Tomasello and Carpenter, 2007, Tomasello et al., 1994).

Decades of research suggest that human infants have a propensity for taking advantage of these opportunities for learning (Bandura, 1971, Csibra and Gergely, 2006, Tomasello et al., 1993, Vygotsky, 1962). Social-communicative signals not only engage infants’ attention (Farroni et al., 2004, Krentz and Corina, 2008, Vouloumanos and Werker, 2007), but also, via a suite of social-cognitive capacities (Herrmann et al., 2007), facilitate efficient learning in social and pedagogical contexts (Csibra and Gergely, 2009, Ferguson and Waxman, 2016, Over and Carpenter, 2012, Tomasello, 2000, Yoon et al., 2008). In rare cases where infants are raised outside of socially enriching environments (e.g., in understaffed orphanages), they demonstrate deficits not only in capacities underlying social interaction but also in fundamental cognitive capacities, such as pattern learning, similarity matching, memory (Nelson et al., 2007, Sheridan et al., 2012; Windsor et al., 2011), as well as language (Beverly et al., 2008, Hough and Kaczmarek, 2011, Schoenbrodt et al., 2007).

One component of social-communication is touch. Touch is prominent in infant-caregiver dyadic interactions (e.g. Feldman et al., 2010, Ferber et al., 2008, Herrera et al., 2004), and has been shown to play a role in directing infants’ attention, regulating arousal levels, and reducing distress (Hertenstein, 2002, Jean and Stack, 2012, Stack and Muir, 1990). The use of touch within dyadic interactions also reflects a mother’s well-being (Ferber et al., 2008) and sensitivity to her infant (Jean and Stack, 2009). Infants may be deprived of touch in cases of maternal depression (Ferber, 2004) or low birthweight, which does not allow for full body contact between the newborns and their caregivers at the beginning of life (Beck et al., 2010). Interestingly, low-birthweight infants who receive regular tactile stimulation show a decrease in behavioral distress cues and an increase in quiet sleep (Modrcin-Talbott et al., 2003), as well as increased cognitive scores and growth relative to those who are not regularly touched (e.g., Aliabadi and Askary, 2013, Field et al., 1986, Weiss et al., 2004). The benefits of tactile stimulation in early infancy have been observed following the implementation of the Kangaroo-care intervention, which provides intensive mother-newborn skin-to-skin contact, over both short and long time scales (Feldman et al., 2002, Feldman et al., 2014). Further, maternal touch has been shown to reduce infants’ cortisol levels (Feldman et al., 2010, Mooncey et al., 1997), which may, in turn, aid learning.

Other social-communicative signals have been shown to support infants’ learning about their environments. One component of early auditory and visual learning is the ability to find regularities in patterned input, often referred to as ‘statistical learning’ or ‘rule learning.’ According to seminal studies of early language learning, the mechanisms underlying the discovery of patterns like those found in natural language are evolutionarily tuned to speech (Marcus et al., 2007, Marcus et al., 1999). In several studies, 7-month-olds successfully extracted rules or patterns from sequences of speech (e.g., syllable triads following an ABA or ABB pattern, such as ‘ga ti ga’ or ‘ga ti ti’). However, they repeatedly failed to do so for non-speech sounds, such as sine-wave tones, animal sounds, and musical timbres. That is, infants’ learning and subsequent generalization of patterns were inconsistent across different kinds of sounds. Marcus et al. (2007) concluded that speech initiates infants’ machinery for learning and generalizing patterns, perhaps resulting from speech-specific adaptations that evolved with our capacity for language. Importantly, other studies indicate that such pattern learning is possible from a range of non-speech signals (Rabagliati et al., 2012, Saffran et al., 2007), but that the ability to do so changes during the first year of life (Dawson and Gerken, 2009).

Subsequent studies examining the learning of speech and non-speech auditory sequences proposed an alternative account for the observed advantage of speech in rule or pattern learning. Ferguson and Lew-Williams (2016) reasoned that infants’ massive experience watching people use speech to communicate could be responsible for their expertise in processing speech, suggesting that the advantage for speech is learned through social exposure to it. In two experiments designed to test this idea, infants were introduced to sine-wave tones as if they could be used to communicate with other people. For example, infants watched a short video in which two individuals communicated with each other. One individual communicated using speech while the other communicated exclusively using sine-wave tones, but the two “talkers” appeared to understand one another. With this evidence that tones could be used as a communicative signal, infants were then familiarized with tones following ABA or ABB patterns, and they succeeded in learning this structure – unlike previous work by Marcus et al. (2007), which did not contain this social exposure. In two control experiments, infants failed to learn the very same patterns when familiarized with tones outside of this communicative exchange. Thus, infants’ learning of patterns can be engaged by social-communicative cues in general, rather than speech per se. These findings suggest that it is infants’ attention to social agents and communicative contexts that facilitates the discovery of structure in the input.

Conversational turn-taking is one type of cue that engages infants, but what is the range of social signals that could promote infants’ learning? Can any social-communicative interaction facilitate infants’ learning of auditory patterns, even across perceptual systems? Based on the findings of Ferguson and Lew-Williams (2016), we hypothesized that touch delivered in a social context could engage infants’ attention as a social-communicative signal. Touch is not usually examined in relation to language learning (though see Seidl et al., 2015 as an exception), yet it would be difficult to find a child, in a typical caregiving setting, who has not experienced touch coupled with language on a regular basis (e.g., during diaper changing: Nomikou and Rohlfing, 2011; or book-reading interactions: Abu-Zhaya et al., 2016). And from the caregiving perspective, it would be nearly impossible for caregivers to avoid touching while using language – for example, changing an infant’s diaper or feeding an infant requires contact and is facilitated by both touch and speech. Thus, it is imperative to explore the impact that touch may have on infants’ attention to and use of auditory signals and whether touch might function to mark a signal as human-related and communicative.

In this paper, we examined the impact of touch on pattern learning for three reasons. First, touch is a pervasive social signal in infants’ lives (Frith and Frith, 2007), yet we do not understand how it interacts with learning from the acoustic signal. Because pattern learning may be socially driven, we predicted better pattern learning with the presence of concurrent touch. Second, touch can be delivered such that it provides redundant, cross-modal cues to auditory information without requiring attentional control by the infant (unlike visual cues). Because redundancies in general have been shown to enhance pattern learning (Bahrick et al., 2004, Frank et al., 2009, Thiessen, 2012), we predicted that touch would enhance auditory pattern learning. Finally, touch has been shown to promote the learning of patterns of syllable sequences from speech in infants as young as 4 months (Seidl et al., 2015). Following from this finding, we predicted that touch would support pattern learning from a different and more challenging auditory signal: tones.

In a series of experiments, we explored whether touch – a ubiquitous feature of infants’ environments (Stack and Muir, 1990) – can interact with infants’ abilities to learn auditory patterns. The experiments were designed to ask not only whether touch promotes pattern learning from tones, but also, in light of prior findings, whether touch does so because of its status as a social cue or as an informative cross-modal cue. In the first experiment, we examined how redundant/informative vs. non-redundant/uninformative touch cues influence infants’ learning of tone patterns.

2. Experiment 1

In Experiment 1, 7-month-old infants were familiarized with sequences of sine-wave tones following either an ABA or ABB pattern while experiencing simultaneous touches from an experimenter, the locations of which were manipulated across two between-subjects conditions. In the Informative condition, touches followed the same pattern as and were temporally aligned with the tones played to infants (e.g., knee-elbow-knee for the ABA tone pattern), thus providing cross-modal cues that supported the auditorily presented pattern. In the Uninformative condition, touches were temporally aligned with tones, but did not follow the same pattern as the acoustic stimuli. Instead, all three touches within a sequence occurred in a single location, and the location alternated from one sequence to the next (e.g., knee-knee-knee for one ABA tone sequence and elbow-elbow-elbow for the next ABA tone sequence). After familiarization to auditory and tactile stimuli, infants were tested using the Headturn Preference Procedure (Jusczyk and Aslin, 1995). We measured infants’ listening times to new tone sequences that followed either the familiarized pattern (e.g., ABA if they heard that pattern during familiarization) or a novel pattern (e.g., ABB if they heard ABA during familiarization). If touches enhance pattern learning only via their status as a social cue, we predicted that infants would show a preference for novel sequences in both the Informative and Uninformative conditions. If touches serve not only as a social cue but also as an informative cross-modal cue that supports learning of auditory patterns, we predicted that infants would show a preference for novel sequences in the Informative condition only.

2.1. Method

2.1.1. Participants

Forty monolingual English-learning, full-term, typically developing 7-month-olds with no known history of hearing or language impairments were tested (M = 7.40 months; range = 6.94–8.19; 18 males). Following exclusion criteria from prior pattern-learning experiments (Ferguson and Lew-Williams, 2016), additional infants were tested but excluded for fussing or crying (n = 7), providing mean looking times that were more than 2 standard deviations from the mean (n = 2), or looking for the maximum possible duration on 8+ trials (n = 5). Informed consent was obtained for each participant in Experiments 1–4, and infants were given a book or toy for participating.
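The two looking-time exclusion rules can be illustrated with a short sketch. This is not the authors' code: the function name, the data layout, and the choice to compute the group mean and standard deviation over all tested infants are assumptions made for illustration, and the example values are invented.

```python
from statistics import mean, stdev

def flag_exclusions(mean_looks, maxed_trials, sd_cutoff=2.0, max_trial_cutoff=8):
    """Return {infant_id: reason} for infants meeting a looking-time exclusion rule.

    mean_looks:   infant_id -> mean looking time in seconds
    maxed_trials: infant_id -> number of trials at the maximum possible duration
    Assumption: the group mean and SD are computed over every infant passed in.
    """
    values = list(mean_looks.values())
    group_mean = mean(values)
    group_sd = stdev(values)
    excluded = {}
    for infant, value in mean_looks.items():
        if maxed_trials.get(infant, 0) >= max_trial_cutoff:
            excluded[infant] = "maximum looking on 8+ trials"
        elif abs(value - group_mean) > sd_cutoff * group_sd:
            excluded[infant] = "mean looking time beyond 2 SD"
    return excluded

# Invented example data (seconds); not values from the study.
looks = {"s01": 6.0, "s02": 6.5, "s03": 7.0, "s04": 6.2,
         "s05": 6.8, "s06": 6.4, "s07": 6.6, "s08": 15.0}
maxed = {"s03": 9}
print(flag_exclusions(looks, maxed))
```

In this invented sample, one infant is flagged for ceiling looking and one for an outlying mean; fussiness exclusions are a judgment made during testing and are not modeled here.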

2.1.2. Stimuli

Two-channel auditory stimuli were created in which one set of pure tones was played to the infant (on one channel) and another set of pure tones was played to the experimenter (on the other channel). In both channels, tones were organized into triadic sequences. Each tone was 300 milliseconds in length, with 250 ms between tones and 1000 ms between sequences. Prior to the first sequence beginning in the infant’s channel, the experimenter heard a single sequence that acted as a ‘warning’ to begin the touching procedure and primed the rhythmic timing for touches.
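As a check on the timing parameters, the following sketch computes tone onsets and block duration from the stated values (300 ms tones, 250 ms between tones, 1000 ms between sequences). The figure of 16 sequences per block comes from the stimulus description for the familiarization blocks; the function names are illustrative, and the assumption that no pause follows the final sequence of a block is ours.

```python
TONE_MS = 300            # duration of each tone
GAP_MS = 250             # silence between tones within a sequence
PAUSE_MS = 1000          # silence between sequences
TONES_PER_SEQUENCE = 3   # triadic sequences

def sequence_duration_ms():
    """Length of one triad from first tone onset to last tone offset."""
    return TONES_PER_SEQUENCE * TONE_MS + (TONES_PER_SEQUENCE - 1) * GAP_MS

def tone_onsets_ms(n_sequences):
    """Onset time (ms) of every tone in a run of n_sequences triads."""
    onsets = []
    for s in range(n_sequences):
        start = s * (sequence_duration_ms() + PAUSE_MS)
        for t in range(TONES_PER_SEQUENCE):
            onsets.append(start + t * (TONE_MS + GAP_MS))
    return onsets

def block_duration_ms(n_sequences):
    """Block length, assuming no pause after the final sequence."""
    return n_sequences * sequence_duration_ms() + (n_sequences - 1) * PAUSE_MS

print(tone_onsets_ms(2))             # onsets for the first two triads
print(block_duration_ms(16) / 1000)  # seconds per 16-sequence block
```

Under these assumptions a 16-sequence block lasts 37.4 s, which is consistent with the 37-s blocks and the roughly 2.5 min of familiarization (four blocks) reported for the experiment.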

Infants were randomly assigned to one of two between-subjects conditions: Informative (n = 20) or Uninformative (n = 20). Ten infants within each condition heard ABA rules during familiarization, and 10 heard ABB rules. During the familiarization in both conditions, tone sequences were constructed using notes C, C#, D, Eb, E, F, F#, and G, with intervals between any two notes in a sequence ranging from 1 (e.g., C#–C#–D) to 5 semitones (e.g., C–C–F).

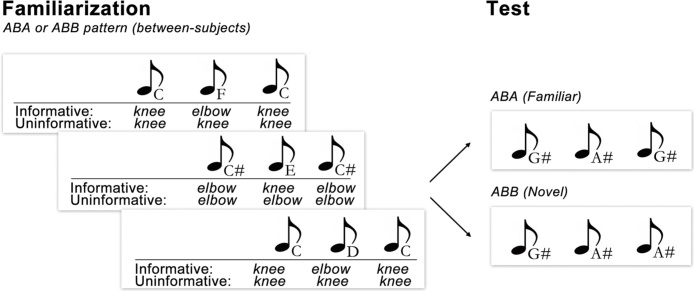

In the Informative condition, the audio track included 16 distinct sequences that were randomly arranged into 37-s blocks and looped four times. Each sequence followed either an ABB (e.g., C–F–F) or ABA pattern (e.g., C–F–C) on both channels. In the Uninformative condition, however, these 16 sequences followed either the ABB or ABA pattern on the infant’s channel, but always followed an AAA (e.g., C–C–C) alternating with BBB (e.g., F–F–F) pattern on the experimenter’s channel (see Fig. 1).

Fig. 1.

Design of the Informative and Uninformative conditions in Experiment 1 for infants who were familiarized to the ABA touch/tone pattern. In the Informative condition, touches provided information that was redundant with the tonal pattern. In the Uninformative condition, touches did not provide information that was redundant with the tonal pattern.

Touch stimuli were delivered by the experimenter who was trained to begin her touches at the onset of tone sequences played over her headphones, and to end her touches at the offset of those tones. All touches in this experiment were taps, one of several naturalistic touch types observed in everyday dyadic interactions between caregivers and infants (book-reading: Abu-Zhaya et al., 2016; free play: Jean et al., 2009; diaper-changing: Nomikou and Rohlfing, 2011). Because the experimenter heard a warning tone before each touch onset, she was able to accurately align her touch onsets with the onsets of the target tones played over her headphones. All touches were of equal duration and matched the duration of tones played to the infant (300 ms). Touch location was controlled by the second channel played to the experimenter, such that a low warning tone followed by a low tone indicated that she should touch the infant’s knee and a high warning tone followed by a high tone indicated that she should touch the infant’s elbow.

The test phase of the experiment involved no experimenter touches, only auditory stimuli. Test stimuli contained one channel, and used tone frequencies that were not heard during familiarization. Tone sequences followed the ABB and ABA pattern, thus, in order to demonstrate learning, the infant would need to generalize the familiarized pattern to these new tones. The test sequences consisted of notes G#, A, Bb, and B (not previously heard during familiarization), arranged into sequences with whole tone intervals, e.g., G#–Bb–Bb (ABB pattern) and B–A–B (ABA pattern).
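The construction of pattern-following triads can be sketched as follows. The note sets and interval limits are taken from the text; the enumeration itself is only an illustration, since the specific 16 familiarization sequences are not listed here, and the function names are hypothetical.

```python
from itertools import permutations

FAMILIARIZATION_NOTES = ["C", "C#", "D", "Eb", "E", "F", "F#", "G"]
TEST_NOTES = ["G#", "A", "Bb", "B"]
# The two sets together form one ascending chromatic octave.
CHROMATIC = FAMILIARIZATION_NOTES + TEST_NOTES

def semitones(a, b):
    """Absolute distance in semitones between two notes of the octave."""
    return abs(CHROMATIC.index(a) - CHROMATIC.index(b))

def triads(notes, pattern, min_interval, max_interval):
    """All ABA or ABB triads whose A-B interval lies within the bounds."""
    if pattern not in ("ABA", "ABB"):
        raise ValueError("pattern must be 'ABA' or 'ABB'")
    result = []
    for a, b in permutations(notes, 2):
        if min_interval <= semitones(a, b) <= max_interval:
            result.append((a, b, a) if pattern == "ABA" else (a, b, b))
    return result

# Candidate pool for familiarization (intervals of 1 to 5 semitones).
print(len(triads(FAMILIARIZATION_NOTES, "ABA", 1, 5)))
# Test items use whole-tone (2-semitone) intervals on unheard notes.
print(triads(TEST_NOTES, "ABB", 2, 2))
```

Because the test notes do not overlap with the familiarization notes, any preference at test has to reflect the abstract pattern rather than memory for particular tones.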

2.1.3. Procedure

Infants and their caregivers were brought into the lab and provided informed consent before the onset of the experiment. After consent, infants were brought into the soundbooth that housed the Headturn Preference Procedure for both familiarization and testing. In the familiarization phase, they sat comfortably on their caregivers' laps across from an experimenter who was wearing headphones and who could easily reach out and touch the infants' arms and legs. While seated, infants were familiarized with a series of tones displaying one of two abstract patterns (ABB, ABA) for 2.5 min. During this time, infants also received a series of precisely timed touches to their elbows and knees. Infants listened to 4 blocks of 16 distinct tone sequences that followed one of the two patterns while being provided with simultaneous touches from an experimenter, the locations of which (elbow, knee) were manipulated between conditions and orders, as shown in Table 1.

Table 1.

The orders and conditions used during the familiarization phases of Experiments 1, 2, and 4 (k = knee, e = elbow).

| Order | Tone Pattern | Touch Pattern | Condition | Type of Touch |
|---|---|---|---|---|
| Experiment 1 | | | | |
| 1 | ABA | e-k-e | Informative | Tap |
| 2 | ABA | k-e-k | Informative | Tap |
| 3 | ABB | k-e-e | Informative | Tap |
| 4 | ABB | e-k-k | Informative | Tap |
| 1 | ABA | k-k-k, e-e-e | Uninformative | Tap |
| 2 | ABA | e-e-e, k-k-k | Uninformative | Tap |
| 3 | ABB | k-k-k, e-e-e | Uninformative | Tap |
| 4 | ABB | e-e-e, k-k-k | Uninformative | Tap |
| Experiment 2 | | | | |
| 1 | ABB | e-k-e | Uninformative | Tap |
| 2 | ABB | k-e-k | Uninformative | Tap |
| Experiment 4 | | | | |
| 1 | ABB | k-e-e | Informative | Poke |
| 2 | ABB | e-k-k | Informative | Poke |

Touch sequence timing commands were delivered to the experimenter via headphones connected to a channel splitter so that her touches could be precisely timed to align with the infants' acoustic input stream. Specific tones on the experimenter's channel were associated with specific body parts (knee, elbow). In the Informative condition, touch locations followed the same pattern as the tones played to the infant; in the Uninformative condition, each sequence consisted of three touches to a single body part, and the body part strictly alternated from one sequence to the next. For example, if an infant in the Informative condition heard the ABA tone pattern, she would be touched following the same pattern (knee-elbow-knee or vice-versa, with body part order counterbalanced between participants), and if an infant in this same condition heard the ABB pattern she would be touched following that same pattern (e.g., knee-elbow-elbow or vice-versa).
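The mapping from condition and counterbalancing order to touch locations in Experiment 1 can be written out as a small sketch. The function and argument names are hypothetical; the logic follows the Experiment 1 rows of Table 1.

```python
def touch_sequences(tone_pattern, condition, first_location, n_sequences):
    """Touch locations for each tone triad during familiarization (Experiment 1).

    first_location is the counterbalanced starting body part ('knee' or 'elbow').
    """
    if first_location not in ("knee", "elbow"):
        raise ValueError("first_location must be 'knee' or 'elbow'")
    if tone_pattern not in ("ABA", "ABB"):
        raise ValueError("tone_pattern must be 'ABA' or 'ABB'")
    other = "elbow" if first_location == "knee" else "knee"
    if condition == "Informative":
        # Touches mirror the tone pattern on every sequence.
        a, b = first_location, other
        triad = (a, b, a) if tone_pattern == "ABA" else (a, b, b)
        return [triad] * n_sequences
    if condition == "Uninformative":
        # Three touches to one body part, alternating body parts across sequences,
        # regardless of the tone pattern.
        return [(first_location,) * 3 if i % 2 == 0 else (other,) * 3
                for i in range(n_sequences)]
    raise ValueError("condition must be 'Informative' or 'Uninformative'")

print(touch_sequences("ABA", "Informative", "elbow", 2))
print(touch_sequences("ABA", "Uninformative", "knee", 4))
```

Note that the total number of touches is the same in both conditions; only the relation between touch location and tone identity differs.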

After familiarization, the experimenter left the room and removed her chair, at which point infants were tested for their learning of these patterns in the Headturn Preference Procedure (Jusczyk and Aslin, 1995). Specifically, each infant was seated on a caregiver’s lap in the middle of a single-walled structure inside a sound booth. The experimenter, who now sat outside of the booth, observed the infant through a monitor connected to an in-booth video camera which recorded the infant’s head orientation during the experiment. The caregiver wore a set of headphones (Peltor™ Aviation headset 7050) which played continuous music and white noise designed to mask the stimuli played to the infant. The booth was quiet and comfortable, and consisted of three panels: a center panel with a green light and two side panels each with a red light. An overhead light was dimmed to make the panel lights more salient. Each trial began with the blinking of the green light on the center panel. When the infant looked at the green light, the light was extinguished and one of the two red lights would begin to blink. A computer program randomly chose which red light to trigger. When the infant oriented at least 30° in the direction of the red light, the stimuli for that trial began to play. The stimuli played until either the infant looked away for 2 consecutive seconds or the sound file was complete. At this point, the red light was extinguished and the sound stopped. Then, the center green light began to blink in preparation for the next trial. The computer recorded the amount of time the infant oriented to the red light while the stimuli played on each trial. Looking time was defined as the amount of time the infant spent looking at the red light. If the infant turned away from the target by 30° for less than two seconds, that time was not included in the looking time calculation, although the light did not extinguish and the sound did not terminate.
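The looking-time rule described above can be sketched as a scoring function. This is an illustration rather than the software used in the study: the input format (a chronological list of looking and look-away segments) and the `max_duration` parameter, which stands in for the unspecified length of the sound file, are assumptions.

```python
def score_trial(segments, max_duration, lookaway_limit=2.0):
    """Return (looking_time, trial_end) in seconds for one trial.

    segments: chronological (duration_s, is_looking) pairs starting at stimulus onset.
    The trial ends when a look-away reaches lookaway_limit or the sound file ends.
    Look-aways shorter than the limit do not end the trial and are not counted
    as looking time.
    """
    elapsed = 0.0
    looking_time = 0.0
    for duration, is_looking in segments:
        remaining = max_duration - elapsed
        if remaining <= 0:
            break  # sound file finished
        if is_looking:
            used = min(duration, remaining)
            looking_time += used
            elapsed += used
        elif duration >= lookaway_limit and lookaway_limit <= remaining:
            # Criterion look-away: the trial terminates once the limit is reached.
            return looking_time, elapsed + lookaway_limit
        else:
            elapsed += min(duration, remaining)
    return looking_time, elapsed

# Invented example: a 1-s glance away is tolerated, a 2.5-s look-away ends the trial.
print(score_trial([(3.0, True), (1.0, False), (4.0, True), (2.5, False), (5.0, True)],
                  max_duration=20.0))
```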

During the test phase, infants were tested with 12 trials of ABB and ABA tonal stimuli. The test trials were blocked in groups of 4 so that each pattern occurred 2 times per block. Each infant received 3 blocks of test trials with familiar and novel patterns randomized within a block. At test, the dependent measure was the average looking time across trials to each stimulus type (familiar, novel). A Macintosh computer controlled the presentation of the stimuli and recorded the experimenter’s coding of the infant’s orientation via a button box. The audio output was fed to two Cambridge SoundWorks Ensemble II speakers. As in past work (e.g., Ferguson and Lew-Williams, 2016), we predicted that if infants learned these patterns, then they should listen longer to forms which violated the familiarized pattern (novel) than to forms which followed the familiarized patterns (familiar; see design in Fig. 1).
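The blocked randomization of test trials can be sketched as follows, assuming a simple shuffle within each block; the function name and the optional seed are illustrative and not taken from the study's software.

```python
import random

def test_trial_order(n_blocks=3, per_type_per_block=2, seed=None):
    """Order of test trials: each block has equal numbers of familiar and
    novel trials, shuffled independently within the block."""
    rng = random.Random(seed)
    order = []
    for _ in range(n_blocks):
        block = ["familiar", "novel"] * per_type_per_block
        rng.shuffle(block)
        order.extend(block)
    return order

print(test_trial_order(seed=1))  # 12 trials in 3 blocks of 4
```

Blocking in this way keeps the two trial types balanced across the session, so the overall decline in looking over trials affects familiar and novel trials about equally.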

2.1.4. Analysis

We used a hierarchical linear model to predict infants’ looking times (in seconds) trial-by-trial. Each model included fixed effects of Condition (Informative, Uninformative), Trial Type (Familiar, Novel), Familiarized Pattern (ABB, ABA), and Trial (1–12), using the lme4 package (Bates et al., 2015) in R (R, Version 3.3.0). All fixed effects were sum-coded and centered prior to model fitting so that independent fixed effect estimates controlled for other fixed effects (holding them at their average value) and represented the mean difference between conditions, trial types, familiarized patterns, and trials, respectively. We also included by-subject random intercepts and random slopes for Trial. P-values were calculated for each model parameter using –2 log-likelihood ratio tests, yielding a Chi-squared value that, when combined with the degrees of freedom (representing the difference in number of parameters between a model with and without this parameter), yielded a p-value.
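The arithmetic of the likelihood ratio test can be illustrated for the single-parameter case (1 degree of freedom). The models themselves were fitted with lme4 in R; the sketch below only shows how a pair of log-likelihoods maps to a Chi-squared statistic and a p-value, using the identity that a Chi-squared variable with 1 degree of freedom is the square of a standard normal variable. The function names and the example log-likelihoods are invented.

```python
import math

def lrt_statistic(loglik_reduced, loglik_full):
    """-2 times the log-likelihood ratio of the reduced to the full model."""
    return -2.0 * (loglik_reduced - loglik_full)

def chi2_pvalue_df1(statistic):
    """Upper-tail probability of a Chi-squared(1) variable.

    For 1 df, P(X >= x) = P(|Z| >= sqrt(x)) = erfc(sqrt(x / 2)).
    """
    return math.erfc(math.sqrt(statistic / 2.0))

# Invented log-likelihoods for a model without and with one fixed effect.
stat = lrt_statistic(-105.0, -102.5)
print(stat, round(chi2_pvalue_df1(stat), 4))
```

As a plausibility check, applying `chi2_pvalue_df1` to a statistic of 5.37 gives a p-value of about 0.02.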

To follow up on significant effects, we performed linear contrasts of critical estimates (e.g., Trial Type slope) between groups of interest (e.g., Condition) using the lsmeans R package (Lenth, 2016). These comparisons yielded a mean effect estimate for each group and a 95% confidence interval indicating the reliability of this estimate.

2.2. Results and discussion

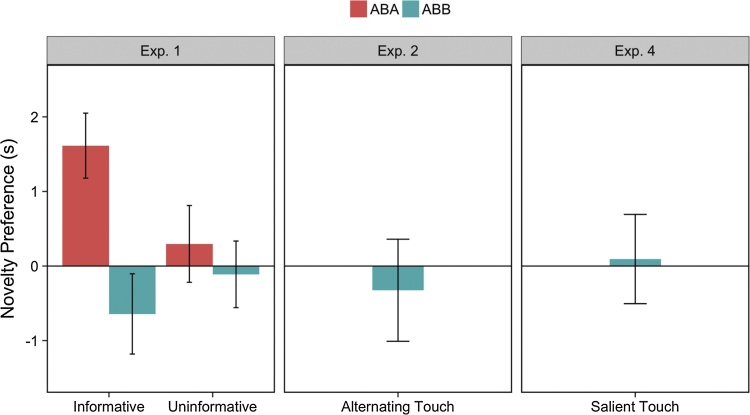

As expected, we observed a significant decline in infants’ looking over trials (β = −0.45, SE = 0.048, χ²(1) = 50.38, p < 0.001). We also observed a main effect of Condition: infants in the Informative condition looked for significantly less time on each trial compared to infants in the Uninformative condition (β = −1.11, SE = 0.49, χ²(1) = 5.37, p = 0.020). Finally, although we did not observe an overall significant effect of Trial Type (β = 0.10, SE = 0.32, χ²(1) = 0.11, p = 0.74), we observed a Trial Type by Familiarized Pattern interaction indicating that infants familiarized to ABA patterns responded differently to novel and familiar trials than infants familiarized to ABB patterns (β = 1.40, SE = 0.64, χ²(1) = 4.94, p = 0.027; see Fig. 2).

Fig. 2.

Infants’ novelty preferences during the test phases of Experiments 1, 2, and 4. The y-axis shows infants’ novelty preference in each experiment, calculated by subtracting looking times on familiar trials from looking times on novel trials. Positive values correspond to a novelty preference. In Experiment 1, infants who were familiarized to the ABA pattern in the Informative condition showed a preference for novel tone patterns over familiar tone patterns (also see Supplementary material). Infants did not show a novelty or familiarity preference in any other conditions or experiments. Error bars represent +/− 1 SEM (between subjects).
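The novelty preference score plotted in Fig. 2 can be computed as in the sketch below, which assumes per-infant lists of test trials labeled by trial type. The data layout and function names are illustrative, and the example looking times are invented.

```python
from math import sqrt
from statistics import mean, stdev

def novelty_preference(trials):
    """Mean looking on novel trials minus mean looking on familiar trials.

    trials: list of (trial_type, looking_time_s) pairs for one infant.
    Positive values indicate a novelty preference.
    """
    novel = [t for kind, t in trials if kind == "novel"]
    familiar = [t for kind, t in trials if kind == "familiar"]
    return mean(novel) - mean(familiar)

def mean_and_sem(scores):
    """Group mean and between-subjects standard error of the mean."""
    return mean(scores), stdev(scores) / sqrt(len(scores))

# Invented looking times (seconds) for one infant.
infant = [("novel", 8.0), ("familiar", 6.0), ("novel", 10.0), ("familiar", 7.0)]
print(novelty_preference(infant))
print(mean_and_sem([1.0, 2.0, 3.0]))
```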

We next performed post-hoc analyses to determine whether or not infants discriminated the test stimuli within each condition (also see Supplementary material). To do so, we used linear contrasts to compare the Trial Type slope estimates between Familiarized Patterns and Conditions. These contrasts revealed that infants in the Informative condition familiarized to the ABA pattern looked significantly longer on novel trials than familiar trials (M = 1.45, SE = 0.64, 95% CI [0.19, 2.70]). Contrasts in each of the other groups revealed that looking times did not significantly differ between Trial Types (all 95% CIs included zero). See Supplementary material for analyses with higher power for Experiment 1 (as well as Experiments 2 and 4).
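The logic of reading significance from a confidence interval can be illustrated with a normal-approximation sketch. This is an assumption for illustration only: the reported intervals come from the lsmeans contrasts, which may use a t-based critical value, so the numbers below need not match the reported bounds exactly.

```python
def normal_ci(estimate, standard_error, z=1.96):
    """Approximate 95% confidence interval: estimate +/- z * SE."""
    half_width = z * standard_error
    return estimate - half_width, estimate + half_width

lower, upper = normal_ci(1.45, 0.64)
print(round(lower, 2), round(upper, 2))
print(lower > 0)  # an interval that excludes zero indicates a reliable difference
```

With the reported estimate and standard error, the approximate interval lies entirely above zero, in line with the reported novelty preference for this group.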

The results of Experiment 1 reveal that infants in the Uninformative condition did not learn tone patterns, but that infants in the Informative condition who were familiarized to the ABA pattern, but not the ABB pattern, did show evidence of learning. We do not attribute this ABA-ABB difference to ease of learning, because this asymmetry only occurred in the Informative condition and has not been observed in previous studies (in cases where asymmetries have occurred, ABB patterns are typically learned more easily than ABA patterns; e.g., Johnson et al., 2009). Nonetheless, we cannot rule out the possibility that ABA patterns are easier to learn than ABB with the addition of touch, or in contexts with intersensory redundancy involving touch. The findings are also not likely to be attributable to ABA tone sequences being more interesting or pleasant than ABB tone sequences, because if that were the case we would have observed a preference for ABA items at test regardless of condition. What, then, can account for this asymmetry between the two familiarized patterns? One possibility is that the ABA touches, relative to ABB touches, were somehow more salient or arousing, because they alternated between two body regions, or alternated two times in the sequence instead of just once in the sequence.

3. Experiment 2

In Experiment 2, we sought to explore whether the effect in the Informative condition only for the ABA pattern was the result of the presence of 2 alternating touches, or whether it was due to the coupling of ABA touches with ABA tones. Thus, infants were familiarized with an ABB pattern, but received ABA touches (e.g., elbow-knee-elbow). Touches were temporally aligned with the tones, but did not provide a redundant cue to the tonal pattern. If infants’ successful learning of the ABA pattern in the Informative condition of Experiment 1 occurred because touches alternated between two locations (perhaps because touches of this kind are rare and/or more arousing), then infants in this experiment should similarly show learning of the pattern. If, however, intersensory matching between the tonal pattern and touch pattern is necessary to promote learning, then infants in Experiment 2 should fail to learn the familiarized pattern even in the presence of alternating touches. If the latter, then there must be a different reason for the observed ABA-ABB asymmetry in the Informative condition of Experiment 1.

3.1. Method

3.1.1. Participants

Fourteen monolingual English-learning, full-term, typically developing 7-month-olds with no known history of hearing or language impairments were tested (M = 7.39 months; range = 6.94–7.99; 9 males). Additional infants were tested but excluded from analyses for looking for the maximum possible duration on 8+ test trials (n = 2) or for failing to complete the study due to fussiness (n = 1).

3.1.2. Stimuli

Test stimuli were identical to those used in Experiment 1. Familiarization stimuli on channel one (the channel delivered to the infant) were identical to the ABB condition from Experiment 1. Stimuli on the second channel were distinct from Experiment 1 in that touch commands to the experimenter followed an ABA pattern. Thus, while temporally aligned with the tones to the infant, experimenter touches did not match the ABB tone pattern.

3.1.3. Procedure

The familiarization phase of Experiment 2 was nearly identical to the ABB condition of Experiment 1, except that in the familiarization phase of Experiment 2, touches were temporally aligned with the auditory signal but did not provide tactile information that was redundant with the ABB tone pattern. Importantly, this experiment had the same number of touches and touch locations as in Experiment 1.

3.1.4. Analysis

We used a hierarchical linear model to predict infants’ looking times (in seconds) trial-by-trial using fixed effects of Trial Type (Familiar, Novel) and Trial (1–12) and by-subject random intercepts and random slopes for Trial.

3.2. Results and discussion

Once again, we observed a significant decline in infants’ looking over trials (β = −0.46, SE = 0.08, χ²(1) = 20.04, p < 0.001). However, we found no evidence for a significant effect of Trial Type; infants looked equally long on novel and familiar test trials (all ps > 0.19).

The results of this experiment allow us to rule out the possibility that ABA touching alone accounted for the performance of infants in the ABA pattern of the Informative condition from Experiment 1, but do not satisfactorily explain why successful learning was only observed for this pattern in the Informative condition. To address this question, we turned to natural caregiver-infant interactions to understand why it might be that a redundant ABA pattern of tones and touches helps infants learn, but a redundant ABB pattern of tones and touches does not.

4. Experiment 3

In this experiment, we used naturalistic data from mother-infant dyadic interactions during book-reading to explore why infants in Experiment 1 only learned from the ABA tone + ABA touch pattern, but not from the ABB tone + ABB touch pattern, even though both provided infants with intersensory redundancy which should aid in learning (Bahrick and Lickliter, 2000). We used a corpus of naturalistic caregiver-infant dyadic interactions to examine how caregivers typically touch their infants. Specifically, we explored whether caregivers ever touch in ABA or ABB three-touch sequences and, if so, which patterns are more vs. less frequent. We predicted that if the ABA touch pattern was less frequent than the ABB pattern in infants’ daily lives, then it may be more attention-grabbing, which could potentially explain the impact of touch on the learning of the ABA pattern in the Informative condition of Experiment 1. Similar effects of salience on pattern learning and generalization have been reported in previous studies (Gerken et al., 2014). The aim of these natural corpus analyses was also to inform an additional experiment investigating whether salient (vs. less salient) touch types might support infants’ learning from intersensory redundancy.

4.1. Method

4.1.1. Participants

Twenty-four monolingual English-learning, full-term, typically developing 5-month-olds with no known history of hearing or language impairments, and their mothers, were tested (M = 5.33 months; range = 4.34–5.82; 12 females). Ten additional dyads were excluded due to non-compliance with instructions (e.g., reading the books more than twice; n = 6) or poor video quality that made annotating maternal behaviors impossible (e.g., the video was too blurry; n = 4).

4.1.2. Stimuli

Stimuli consisted of books that were created specifically for this task. We created 8 books, targeting two semantic categories: animals and body parts. Each dyad was randomly assigned to read one book about animals and one book about body parts. The books were constructed in an identical manner differing only in the target words. For example, in one of the books about body parts, we targeted the words belly, nose, chin and leg, using the following text (accompanied with pictures): “Do you see the belly? Where is the belly? Here is the belly.” The same text, with the replacement of the target word, was repeated throughout the book for each target word, as well as across all other books (animals and body parts).

4.1.3. Procedure

Infants were seated in a high-chair facing their mothers in a sound-attenuated booth. Mothers were asked to read each of the two books twice and to interact with their infants as they would normally do at home. No reference was made to our interest in observing the use of touch. Interactions were videotaped to allow detailed micro-genetic annotation of maternal touches.

4.1.4. Analysis

Videos were annotated by trained research assistants using ELAN (Brugman and Russel, 2004). A template was created to ensure unified annotation of all videos. Research assistants worked in pairs and annotated each intentional maternal touch event on the infant’s body after reaching consensus on all features of that event. Each touch event was annotated for three features: location of the touch, type of touch, and number of beats of the touch. Beats were defined as consecutive touches either with or without a pause between each instance (e.g., three squeezes to the belly with or without separation in time), or as touches separated in motion trajectory. To promote research assistants’ precision in coding touch events, each type of touch was defined in detail. For example, a brush was defined as a subtle motion on the infant’s skin, either with the whole hand, one finger, or several fingers; a single beat for a brush event was defined as a continuous movement in one direction, regardless of its length, while a switch in direction was considered the beginning of a new beat. A squeeze was defined as a motion in which the mother’s whole hand was stretched out before squeezing the specific body part, and then stretched out again at the end of the squeeze; a single beat of a squeezing event consisted of this whole cycle. More details about the annotation of touch events can be found in Abu-Zhaya et al. (2016). Upon completing the annotation of touch events, a Praat (Boersma and Weenink, 2013) textgrid file was exported from ELAN for each dyad. These textgrids were analyzed using R to detect the frequency and location of each touch type, with a focus on how frequently caregivers change locations in their touch sequences.
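The tallying step described above can be illustrated with a minimal sketch. The original analysis was run in R on textgrids exported from ELAN; the Python below is not that pipeline. It assumes, purely for illustration, that each annotated touch event has already been reduced to a hypothetical record of dyad, location, touch type, and number of beats, and the records themselves are invented.

```python
from collections import Counter

# Hypothetical annotation records (NOT data from the study).
# Each tuple: (dyad_id, location, touch_type, n_beats), in temporal order.
events = [
    ("d01", "belly", "squeeze", 3),
    ("d01", "belly", "tap", 1),
    ("d01", "leg", "brush", 2),
    ("d02", "nose", "poke", 1),
    ("d02", "nose", "poke", 3),
    ("d02", "chin", "tap", 3),
]


def summarize(events):
    """Tally touch types, locations, beat counts, and location changes."""
    type_counts = Counter(touch_type for _, _, touch_type, _ in events)
    location_counts = Counter(location for _, location, _, _ in events)
    # Only multi-beat events count as beat sequences (single touches excluded).
    beat_counts = Counter(n for _, _, _, n in events if n > 1)

    # Count how often consecutive touches within a dyad switch location.
    transitions = 0
    location_changes = 0
    previous_location = {}
    for dyad, location, _, _ in events:
        if dyad in previous_location:
            transitions += 1
            if previous_location[dyad] != location:
                location_changes += 1
        previous_location[dyad] = location

    return {
        "type_counts": type_counts,
        "location_counts": location_counts,
        "beat_counts": beat_counts,
        "transitions": transitions,
        "location_changes": location_changes,
    }


summary = summarize(events)
modal_beats = summary["beat_counts"].most_common(1)[0][0]
```

Keeping location changes separate from the per-type tallies mirrors the stated focus on how often caregivers switch locations, independently of which touch type they use.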

4.2. Results and discussion

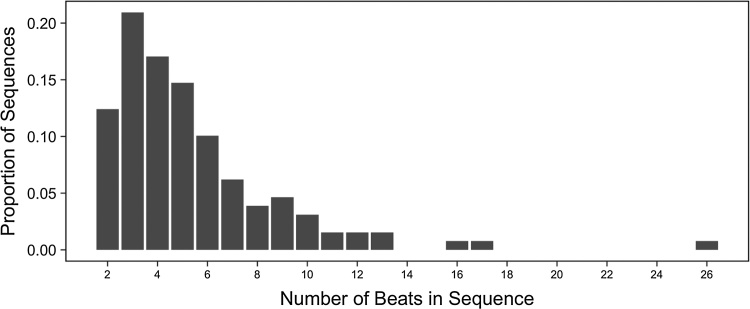

Data from the naturalistic touch corpus yielded several new insights into the nature of touch interactions in mother-infant dyadic interactions. The first major observation was that, overall, it was quite common for mothers to touch infants using beat gestures (see Fig. 3) in a way comparable to the beat gestures used in the experiments.

Fig. 3.

Number of beats in touch sequences from the naturalistic corpus of touch behaviors in mother-infant dyads. By definition, single touches are not included.

Specifically, of 463 instances of maternal touch, 28% were classified as beats involving repetitive squeezing, brushing, tapping, poking, moving, or pinching. Moreover, the modal number of beats in these touches – observed in 21% of all beats – was 3, corresponding to the number of touches used in the triad sequences in our experiments. These two observations converge to suggest that the experimenter’s touches in our experiments were not atypical of infants’ natural social interactions.

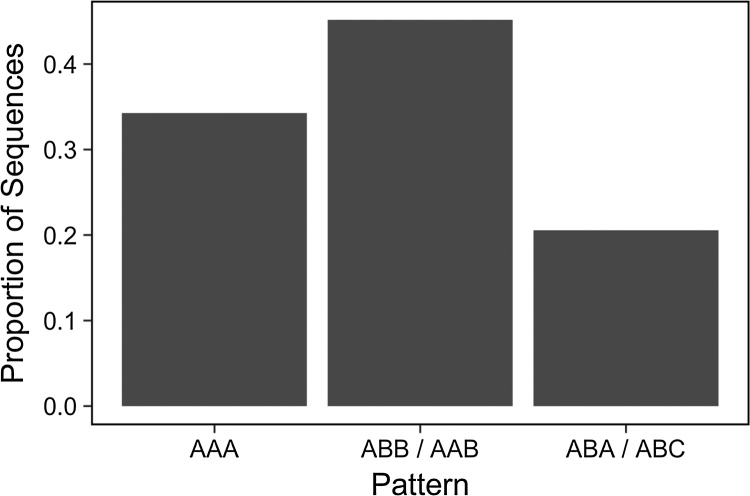

We next asked how the patterns of locations in touch sequences compared to the ABB and ABA patterns used in our experiments. The critical difference between these patterns is that, in the ABB pattern, a single touch location is repeated consecutively while, in the ABA pattern, each touch is in a different location from the previous touch (e.g., a brush gesture that moves from the hand to the foot and back to the hand). To assess the prevalence of touch patterns in parent-infant dyadic interactions with and without beats, we examined each 3-touch sequence for each dyad and classified it as including (1) three identical, consecutive touch locations (AAA), (2) two identical, consecutive touch locations and one other location (ABB or AAB), or (3) no immediately repeated touch locations (ABA or ABC). We found that 34% of all 3-touch sequences matched pattern 1, 45% matched pattern 2, and only 21% matched pattern 3 (see Fig. 4). The ABA pattern was particularly rare, accounting for only 3% of the 3-touch sequences in the corpus.

Fig. 4.

Proportion of 3-beat touches, separated by pattern type. Patterns are defined by touch location(s), such that AAA corresponds to sequences with three identical, consecutive touch locations (e.g., elbow-elbow-elbow); ABB (e.g., elbow-knee-knee) corresponds to sequences with identical, consecutive repetition of the second touch; and so on.
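The three-way classification of touch-location sequences described above can be sketched as follows. This is an illustrative reconstruction rather than the authors' code: it assumes the 3-touch sequences have already been extracted as triples of location labels, and the example triples are invented, so the resulting proportions are not the corpus values.

```python
from collections import Counter


def classify_triple(first, second, third):
    """Label three consecutive touch locations with an abstract pattern."""
    if first == second == third:
        return "AAA"
    if second == third:
        return "ABB"
    if first == second:
        return "AAB"
    if first == third:
        return "ABA"
    return "ABC"


# The three groups used in the text: (1) AAA, (2) ABB or AAB, (3) ABA or ABC.
GROUP_OF = {"AAA": 1, "ABB": 2, "AAB": 2, "ABA": 3, "ABC": 3}

# Hypothetical triples for illustration only (NOT corpus data).
triples = [
    ("belly", "belly", "belly"),
    ("elbow", "knee", "knee"),
    ("nose", "nose", "chin"),
    ("hand", "foot", "hand"),
    ("leg", "belly", "chin"),
    ("belly", "belly", "belly"),
]

labels = [classify_triple(*triple) for triple in triples]
group_counts = Counter(GROUP_OF[label] for label in labels)
proportions = {g: group_counts[g] / len(triples) for g in sorted(group_counts)}
```

The order of the checks matters: the AAA test must come first, because a triple of identical locations would otherwise also satisfy the ABB, AAB, and ABA conditions.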

Thus, data from this corpus of touch patterns in parent-infant interactions suggest that the ABA sequences used in Experiment 1 are rare in infants’ natural experience.¹ Such ABA touches might therefore be experienced by the infant as perceptually salient relative to ABB touch sequences. This offers a plausible explanation for our finding that ABA touches supported infants’ learning of ABA tonal patterns, whereas ABB touches did not support infants’ learning of ABB tonal patterns. Specifically, the rare ABA touch sequences may garner more attention or arousal, while the more common ABB touches may be ignored or perceived as less informative.

5. Experiment 4

We reasoned that if ABA touches are rare in infants’ natural interactions with their caregivers compared to ABB touches, as suggested in Experiment 3, then infants might learn from intersensorily redundant ABB auditory stimuli if we could elevate the salience of ABB touches, thereby rendering them more noticeable for the infant. Thus, in Experiment 4, we attempted to amplify the salience of ABB touches aligned with ABB tonal patterns. Experiment 4 was essentially a replication of the ABB pattern from the Informative condition of Experiment 1, but with amplified touches. Specifically, the experimenter provided pokes (instead of taps) corresponding to the tone pattern, e.g., ABB tones with pokes in the sequence knee-elbow-elbow. Pokes – another naturalistic touch type found in dyadic interactions (Abu-Zhaya et al., 2016) – were well suited for amplifying the salience of touches because they have a clear onset and offset, thereby increasing the likelihood that infants would segregate the second and third touches in the sequence ABB. We predicted that two consecutive, exaggerated pokes on the same location would highlight the reduplicated location in ABB touch sequences for infants.

5.1. Method

5.1.1. Participants

Ten monolingual English-learning, full-term, typically developing 7-month-olds with no known history of hearing or language impairments were tested (M = 7.31 months; range = 7.07–7.86; 6 males). One infant was excluded due to inattentiveness during the test phase.

5.1.2. Stimuli

All familiarization and test stimuli were identical to the ABB condition of Experiment 1. The only difference between this experiment and the ABB condition of Experiment 1 was that we attempted to increase the tactile segmentability of repeated touches on infants’ knees and elbows. To do this, the experimenter used exaggerated hand motions and pokes (rather than taps, as in Experiment 1), such that each poke in a sequence would be unlikely to blend together with other pokes.

5.1.3. Procedure

The familiarization phase of Experiment 4 was identical to that of the Informative condition of Experiment 1 in which infants were familiarized to the ABB pattern, except that touches were amplified as described above.

5.1.4. Analysis

We used a hierarchical linear model to predict infants’ looking times (in seconds) trial-by-trial using fixed effects of Trial Type (Familiar, Novel) and Trial (1–12) and by-subject random intercepts and random slopes for Trial.

5.2. Results and discussion

As in prior experiments, we observed a significant decline in infants’ looking over trials (β = −0.44, SE = 0.09, χ²(1) = 13.67, p < 0.001). However, we found no evidence of a significant effect of Trial Type; infants looked equally long on novel and familiar trials (p = 0.96).
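As a quick check on the reported statistics, the sketch below converts a χ² value with one degree of freedom into its upper-tail p-value. It assumes the reported χ²(1) values are ordinary one-degree-of-freedom test statistics (for example, from comparing nested models); this section does not spell out how they were obtained, so the helper name and that framing are assumptions. The identity used is standard: for one degree of freedom, P(χ² > x) = erfc(√(x/2)).

```python
import math


def chi2_sf_1df(x):
    """Upper-tail probability P(X > x) for a chi-square variable with 1 df."""
    return math.erfc(math.sqrt(x / 2.0))


# Chi-square values reported for the effect of Trial in the text.
p_trial_exp2 = chi2_sf_1df(20.04)  # Experiment 2
p_trial_exp4 = chi2_sf_1df(13.67)  # Experiment 4

# Both should fall below the reported threshold of 0.001.
both_below_threshold = p_trial_exp2 < 0.001 and p_trial_exp4 < 0.001
```

The function relies only on the standard library, which is enough for the one-degree-of-freedom case; tests with more degrees of freedom would need a general chi-square distribution function.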

Rather than broadly promoting auditory pattern learning as either a social signal or cross-modal cue, the effect observed here – restricted to infants’ learning of the ABA pattern in the Informative condition of Experiment 1 – suggests that touches may only promote pattern learning when applied sequentially to distinct locations. Results from Experiment 4 suggest that infants’ failure in Experiment 1 to learn the ABB pattern with informative touches did not result from difficulty perceiving reduplicated touches. That is, in Experiment 4, the use of pokes – which had clear onsets and offsets – did not facilitate infants’ learning of ABB touch/tone sequences. To confirm this result, future work will need to explore other salient touch types, perhaps drawing from the moment-to-moment and aggregate patterns in our corpus of mother-infant touch. These data are also unlikely to be attributable to the ABB pattern merely being more difficult to learn than ABA, as many experiments show this pattern to be learnable (e.g., Marcus et al., 2007). Further, the results are not fully explained by the salience of ABA touches, as infants who heard ABB tones but were touched in ABA patterns (Experiment 2) failed to learn the ABB patterns under such conditions. Thus, the difficulty in learning the ABB tone pattern with informative ABB touches likely stems from the interaction of touches and sounds, as ABA tone patterns are learnable with informative touches (see Experiment 1 and replications in Supplementary material).

Why, then, is the ABB pattern not learnable from tones even with informative and amplified touches? We speculate that the reason for this finding stems from the nuances of touch input that infants receive in everyday interactions. As shown in Experiment 3, caregivers do produce three-beat touch gestures, but they very rarely do so in alternating patterns. Patterns with at least two identical, consecutive touches were much more common. Thus, we suspect that the much less familiar ABA touch pattern gave rise to the learning observed among infants receiving intersensorily informative touch sequences while listening to ABA tone patterns in Experiment 1. If so, this is the first finding to suggest that infants track touch patterns in their input just as they track patterns in other modalities, such as vision and audition.

6. General discussion

Processing in the real world is replete with multisensory information (sight, sound, touch, smell, taste) that engages infants’ attention. Some of these sensory channels operate in tandem in naturalistic environments; for example, infant-directed speech is often produced both with visual object motion (Brand et al., 2002) and touches (Abu-Zhaya et al., 2016, Nomikou and Rohlfing, 2011). A question that follows from these findings is whether multisensory cues, such as touch and speech, are useful to the infant, or whether they complicate learning processes by derailing the detection of structure over time. The intersensory redundancy hypothesis (Bahrick and Lickliter, 2014) favors the former possibility, suggesting that events which are intersensorily redundant will ‘pop out’ for the infant, and thereby draw the infant’s attention to perceptually salient information. Supporting evidence from the literature shows that intersensorily redundant events appear to be processed in a privileged manner (Bahrick and Lickliter, 2000, Bahrick et al., 2004). For example, 5-month-olds show better rhythm discrimination when presented with intersensorily redundant stimuli than with unimodal stimuli or asynchronous bimodal stimuli (Bahrick and Lickliter, 2009). However, this boost in arousal and learning from stimulation of two senses vs. one sense might not apply equally to all types of sensory input: some senses might be privileged over others in multisensory learning. Touch, in particular, is redundant across senses because the person being touched can often see (and sometimes even hear) the touch, and in infancy, touch often co-occurs with other sensory input (Frith and Frith, 2007). If infants are sensitive to intersensory redundancy, and not accustomed to it to the point of disinterest, then they should be able to use social touch as a cue for learning.

Using a combination of three experiments and analyses of a naturalistic corpus, we show that intersensory redundancy can support the learning of auditory patterns in infancy, and specifically that touch to an infant’s body – a pervasive, natural social-communicative cue in developmental contexts – can enhance the learnability of abstract patterns even in non-speech auditory stimuli. Specifically, we found that touch + tone stimuli that were redundant across modalities induced learning of an ABA tonal pattern, but this was not the case in the absence of such redundancy across modalities (i.e., the Uninformative conditions). Thus, social touch may go beyond arousal by impacting the learning of structure in incoming streams of information.

Why, however, does intersensorily redundant touch facilitate infants’ learning of tone patterns? We propose that touch calls attention even to non-speech sounds and shifts them toward a more social signal. With this boost in salience and relevance, spotlighted tone patterns become learnable. Related findings have been observed in the domain of speech, an inherently social signal (aside from self-directed speech, which is the exception rather than the norm). In previous research, infants readily learned auditory patterns from speech stimuli both because infants have ample experience learning patterns from speech and because social stimuli engage their attention (Ferguson and Lew-Williams, 2016, Marcus et al., 2007). Caregiver-provided touch is similarly social and commonly experienced by infants. In fact, it is so frequent that it is nearly impossible to imagine a caregiver providing touch that is not social in nature. Thus, by aligning a social signal (touch) with a non-social/non-human signal (pure tones), infants’ attention may have been engaged in a way that allowed them to process both modalities as socially relevant signals, which in turn supported their learning of patterns. However, there are important qualifications for this interpretation. First, we do not have access to the infant’s perspective on the social nature of caregiver-provided touch. Second, many mechanisms factor into attentional biases, including but not limited to the abundance vs. rarity of perceptual stimuli in the input. Third, there is likely to be a complex interaction between touch, attention, and learning in different contexts. Touch could trigger social and/or attentional processes, which in turn support learning separately or in combination.

It is important to note that a purely social account of touch cannot explain our results, because in Experiment 1, infants learned the ABA pattern more successfully than the ABB pattern even though social touch was informative in both cases. An additional consideration emerged from our corpus analyses in Experiment 3: the frequency of particular touch patterns in infants’ everyday interactions. While we found that three-beat touch sequences are very common, ABA sequences were rare relative to ABB sequences in our corpus. Infants, then, may have succeeded in learning the informative ABA pattern in Experiment 1 because ABA touches were particularly noticeable and arousing. Studies on encoding of novel stimuli suggest that a series of neural responses directs attention to salient events and, in doing so, enhances memory for those stimuli (Corbetta and Shulman, 2002, Ranganath and Rainer, 2003). Conversely, ABB touches (or touch sequences with any reduplication) may be so common that infants attend less to them – an idea supported by proposals that infants do not allocate attention to overly predictable stimuli (Kidd et al., 2012). This lesser degree of attention devoted to ABB touches may block their potential contribution to cross-modal learning. This raises an additional and novel insight provided by the interaction of our experiments and corpus analyses: that infants track touch patterns across time in their natural interactions with caregivers. Thus, while a purely social account is not compelling, it is possible that infants are sensitive to variations in the frequency of different patterns of social touch, both in the real world and in the lab. Future work exploring physiological effects of the two different patterns of touch, i.e., ABA and ABB, will help to adjudicate between a predominantly social account vs. a more broadly attention-driven account (or a combination thereof).

Finally, we would like to call attention to what we see as a gap in previous literature, which we have taken a first step in filling here: Despite the fact that caregiver touch and language have both been shown to contribute separately to infant development (e.g., language: Hart and Risley, 1995; touch: Feldman et al., 2014), and both are key social cues that engage infants’ attention, the relationship between caregiver touch and caregiver language use has been largely ignored. We have little understanding of how touch might contribute to the learning of the speech signal, which is exceptionally rich in co-occurrence information. Here, we began to address this gap by asking whether touch can boost the learnability of an otherwise difficult-to-learn auditory pattern. The fact that touch made an unlearnable auditory pattern learnable has implications for how touch (and other sources of intersensorily redundant experiences) might support the learning of important environmental patterns among young children with language delays and impairments.

Conflict of Interest

None.

Acknowledgments

We are very grateful to the participating families. This work was generously supported by a grant from the National Institute of Child Health and Human Development to CLW (R03HD079779), a SSHRC Doctoral Fellowship to BF, and a grant from Collaboration in Research (CTR) Indiana (CTSI) to AS.

Footnotes

¹ The context of our corpus – a book-reading situation with repeated references to body parts – may have artificially encouraged same-location touch sequences. We cannot rule out that this resulted in the low incidence of ABA touches, as we do not currently have data on caregiver touch during natural play.

Supplementary data associated with this article can be found, in the online version, at https://doi.org/10.1016/j.dcn.2017.09.006.

Contributor Information

Casey Lew-Williams, Email: caseylw@princeton.edu.

Brock Ferguson, Email: brock.ferguson@gmail.com.

Rana Abu-Zhaya, Email: rabuzhay@purdue.edu.

Amanda Seidl, Email: aseidl@purdue.edu.


References

- Abu-Zhaya R., Seidl A., Cristia A. Multimodal infant-directed communication: how caregivers combine tactile and linguistic cues. J. Child Lang. 2016;44:1088–1116. doi: 10.1017/S0305000916000416. [DOI] [PubMed] [Google Scholar]

- Aliabadi F., Askary R.K. Effects of tactile–kinesthetic stimulation on low birth weight neonates. Iran. J. Pediatr. 2013;23:289–294. [PMC free article] [PubMed] [Google Scholar]

- Bahrick L.E., Lickliter R. Intersensory redundancy guides attentional selectivity and perceptual learning in infancy. Dev. Psychol. 2000;36:190–201. doi: 10.1037//0012-1649.36.2.190. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bahrick L.E., Lickliter R. Perceptual development: intermodal perception. In: Goldstein B., editor. vol. 2. Sage Publishers; Newbury Park, CA: 2009. pp. 753–756. (Encyclopedia of Perception). [Google Scholar]

- Bahrick L.E., Lickliter R. Learning to attend selectively: the dual role of intersensory redundancy. Curr. Dir. Psychol. Sci. 2014;23:414–420. doi: 10.1177/0963721414549187. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bahrick L.E., Lickliter R., Flom R. Intersensory redundancy guides the development of selective attention, perception and cognition in infancy. Curr. Dir. Psychol. Sci. 2004;13:99–102. [Google Scholar]

- Bandura A. General Learning Press; New York: 1971. Social Learning Theory. [Google Scholar]

- Bates D., Mächler M., Bolker B., Walker S. Fitting linear mixed-effects models using lme4. J. Stat. Softw. 2015;67:1–48. [Google Scholar]

- Beck S., Wojdyla D., Say L., Betran A.P., Merialdi M., Requejo J.H., Rubens C., Menon R., Van Look P.F. The worldwide incidence of preterm birth: a systematic review of maternal mortality and morbidity. Bull. World Health Organ. 2010;88:31–38. doi: 10.2471/BLT.08.062554. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Beverly B.L., McGuinness T.M., Blanton D.J. Communication and academic challenges in early adolescence for children who have been adopted from the former Soviet Union. Lang. Speech Hear. Serv. Sch. 2008;39:303–313. doi: 10.1044/0161-1461(2008/029). [DOI] [PubMed] [Google Scholar]

- Boersma P., Weenink D. 2013. Praat: Doing Phonetics by Computer [Computer Program], Version 5.3.45.http://www.praat.org/ Retrieved 30 December 2013 from. [Google Scholar]

- Brand R.J., Baldwin D.A., Ashburn L.A. Evidence for ‘motionese’: modifications in mothers’ infant-directed action. Dev. Sci. 2002;5:72–83. [Google Scholar]

- Brugman H., Russel A. Annotating multimedia/multi-modal resources with ELAN. Proceedings of LREC Fourth International Conference on Language Resources and Evaluation. 2004 [Google Scholar]

- Corbetta M., Shulman G.L. Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 2002;3:201–215. doi: 10.1038/nrn755. [DOI] [PubMed] [Google Scholar]

- Csibra G., Gergely G. Social learning and social cognition: the case for pedagogy. In: Munakata Y., Johnson M.H., editors. Processes of Change in Brain and Cognitive Development. Attention and Performance XXI. Oxford University Press; Oxford: 2006. pp. 249–274. [Google Scholar]

- Csibra G., Gergely G. Natural pedagogy. Trends Cogn. Sci. 2009;13:148–153. doi: 10.1016/j.tics.2009.01.005. [DOI] [PubMed] [Google Scholar]

- Dawson C., Gerken L. From domain-generality to domain-sensitivity: 4-month-olds learn an abstract repetition rule in music that 7-month-olds do not. Cognition. 2009;111:378–382. doi: 10.1016/j.cognition.2009.02.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Farroni T., Massaccesi S., Pividori D., Johnson M.H. Gaze following in newborns. Infancy. 2004;5:39–60. [Google Scholar]

- Feldman R., Eidelman A.I., Sirota L., Weller A. Comparison of skin-to-skin (kangaroo) and traditional care: parenting outcomes and preterm infant development. Pediatrics. 2002;110:16–26. doi: 10.1542/peds.110.1.16. [DOI] [PubMed] [Google Scholar]

- Feldman R., Singer M., Zagoory O. Touch attenuates infants’ physiological reactivity to stress. Dev. Sci. 2010;13:271–278. doi: 10.1111/j.1467-7687.2009.00890.x. [DOI] [PubMed] [Google Scholar]

- Feldman R., Rosenthal Z., Eidelman A.I. Maternal-preterm skin-to-skin contact enhances child physiologic organization and cognitive control across the first 10 years of life. Biol. Psychiatry. 2014;75:56–64. doi: 10.1016/j.biopsych.2013.08.012. [DOI] [PubMed] [Google Scholar]

- Ferber S.G., Feldman R., Makhoul I.R. The development of maternal touch across the first year of life. Early Hum. Dev. 2008;84:363–370. doi: 10.1016/j.earlhumdev.2007.09.019. [DOI] [PubMed] [Google Scholar]

- Ferber S.G. The nature of touch in mothers experiencing maternity blues: the contribution of parity. Early Hum. Dev. 2004;79:65–75. doi: 10.1016/j.earlhumdev.2004.04.011. [DOI] [PubMed] [Google Scholar]

- Ferguson B., Lew-Williams C. Communicative signals support abstract rule learning by 7-month-old infants. Sci. Rep. 2016;6:1–7. doi: 10.1038/srep25434. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ferguson B., Waxman S.R. What the [beep]? Six-month-olds link novel communicative signals to meaning. Cognition. 2016;146:185–189. doi: 10.1016/j.cognition.2015.09.020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Field T.M., Schanberg S.M., Scafidi F., Bauer C.R., Vega-Lahr N., Garcia R., Nystrom J., Kuhn C.M. Tactile/kinesthetic stimulation effects on preterm neonates. Pediatrics. 1986;77:654–658. [PubMed] [Google Scholar]

- Frank M.C., Slemmer J.A., Marcus G.F., Johnson S.P. Information from multiple modalities helps 5-month-olds learn abstract rules. Dev. Sci. 2009;12:504–509. doi: 10.1111/j.1467-7687.2008.00794.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Frith C.D., Frith U. Social cognition in humans. Curr. Biol. 2007;17:R724–R732. doi: 10.1016/j.cub.2007.05.068. [DOI] [PubMed] [Google Scholar]

- Gerken L., Dawson C., Chatila R., Tenenbaum J.B. Surprise! Infants consider possible bases of generalization for a single input example. Dev. Sci. 2014;18:80–89. doi: 10.1111/desc.12183. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hart B., Risley T. Paul H. Brookes Publishing; Baltimore: 1995. Meaningful Differences in the Everyday Experience of Young American Children. [Google Scholar]

- Herrera E., Reissland N., Shepherd J. Maternal touch and maternal child-directed speech: effects of depressed mood in the postnatal period. J. Affect. Disord. 2004;81:29–39. doi: 10.1016/j.jad.2003.07.001. [DOI] [PubMed] [Google Scholar]

- Herrmann E., Call J., Hernandez-Lloreda M.V., Hare B., Tomasello M. Humans have evolved specialized skills of social cognition: the cultural intelligence hypothesis. Science. 2007;317:1360–1366. doi: 10.1126/science.1146282. [DOI] [PubMed] [Google Scholar]

- Hertenstein M.J. Touch: its communicative functions in infancy. Hum. Dev. 2002;45:70–94. [Google Scholar]

- Hough S.D., Kaczmarek L. Language and reading outcomes in young children adopted from Eastern European orphanages. J. Early Interv. 2011;33:51–74. [Google Scholar]

- Jean A.D., Stack D.M. Functions of maternal touch and infants’ affect during face-to-face interactions: new directions for the still-face. Infant Behav. Dev. 2009;32:123–128. doi: 10.1016/j.infbeh.2008.09.008. [DOI] [PubMed] [Google Scholar]

- Jean A.D., Stack D.M. Full-term and very-low-birth-weight preterm infants’ self-regulating behaviors during a still-face interaction: influences of maternal touch. Infant Behav. Dev. 2012;35:779–791. doi: 10.1016/j.infbeh.2012.07.023. [DOI] [PubMed] [Google Scholar]

- Jean A.D., Stack D.M., Fogel A. A longitudinal investigation of maternal touching across the first 6 months of life: age and context effects. Infant Behav. Dev. 2009;32:344–349. doi: 10.1016/j.infbeh.2009.04.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Johnson S.P., Fernandes K.J., Frank M.C., Kirkham N., Marcus G., Rabagliati H., Slemmer J.A. Abstract rule learning for visual sequences in 8- and 11-month-olds. Infancy. 2009;14:2–18. doi: 10.1080/15250000802569611. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jusczyk P.W., Aslin R.N. Infants′ detection of the sound patterns of words in fluent speech. Cognit. Psychol. 1995;29:1–23. doi: 10.1006/cogp.1995.1010. [DOI] [PubMed] [Google Scholar]

- Kidd C., Piantadosi S.T., Aslin R.N. The goldilocks effect: human infants allocate attention to visual sequences that are neither too simple nor too complex. PLoS One. 2012;7:e36399. doi: 10.1371/journal.pone.0036399. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Krentz U.C., Corina D.P. Preference for language in early infancy: the human language bias is not speech specific. Dev. Sci. 2008;11:1–9. doi: 10.1111/j.1467-7687.2007.00652.x. [DOI] [PubMed] [Google Scholar]

- Lenth R.V. Least-squares means: the r package lsmeans. J. Stat. Softw. 2016;69:1–33. [Google Scholar]

- Marcus G.F., Vijayan S., Rao S.B., Vishton P.M. Rule learning by seven-month-old infants. Science. 1999;283:77–80. doi: 10.1126/science.283.5398.77. [DOI] [PubMed] [Google Scholar]

- Marcus G.F., Fernandes K.J., Johnson S.P. Infant rule learning facilitated by speech. Psychol. Sci. 2007;18:387–391. doi: 10.1111/j.1467-9280.2007.01910.x. [DOI] [PubMed] [Google Scholar]

- Modrcin-Talbott M.A., Harrison L.L., Groer M.W., Younger M.S. The biobehavioral effects of gentle human touch on preterm infants. Nurs. Sci. Q. 2003;16:60–67. doi: 10.1177/0894318402239068. [DOI] [PubMed] [Google Scholar]

- Mooncey S., Giannakoulopoulos X., Glover V., Acolet D., Modi N. The effect of mother-infant skin-to-skin contact on plasma cortisol and β-endorphin concentrations in preterm newborns. Infant Behav. Dev. 1997;20:553–557. [Google Scholar]

- Nelson C.A., Zeanah C.H., Fox N.A., Marshall P.J., Smyke A.T., Guthrie D. Cognitive recovery in socially deprived young children: the Bucharest early intervention project. Science. 2007;318:1937–1940. doi: 10.1126/science.1143921. [DOI] [PubMed] [Google Scholar]

- Nomikou I., Rohlfing K.J. Language does something: body action and language in maternal input to three-month-olds. IEEE Trans. Auton. Ment. Dev. 2011;3:113–128. [Google Scholar]

- Over H., Carpenter M. The social side of imitation. Child Dev. Perspect. 2012;7:6–11. [Google Scholar]

- Pye C. Quiché Mayan speech to children. J. Child Lang. 1986;13:85–100. doi: 10.1017/s0305000900000313. [DOI] [PubMed] [Google Scholar]

- Rabagliati H., Senghas A., Johnson S.P., Marcus G.F. Infant rule learning: advantage language, or advantage speech? PLoS One. 2012;7:e40517. doi: 10.1371/journal.pone.0040517. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ranganath C., Rainer G. Neural mechanisms for detecting and remembering novel events. Nat. Rev. Neurosci. 2003;4:193–202. doi: 10.1038/nrn1052. [DOI] [PubMed] [Google Scholar]

- Saffran J.R., Pollak S.D., Seibel R.L., Shkolnik A. Dog is a dog is a dog: infant rule learning is not specific to language. Cognition. 2007;105:669–680. doi: 10.1016/j.cognition.2006.11.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Schieffelin B.B., Ochs E. Cambridge University Press; Cambridge: 1986. Language Socialization Across Cultures. [Google Scholar]

- Schoenbrodt L.A., Carran D.T., Preis J. A study to evaluate the language development of post-institutionalised children adopted from Eastern European countries. Lang. Cult. Curric. 2007;20:52–69. [Google Scholar]

- Seidl A., Tincoff R., Baker C., Cristia A. Why the body comes first: effects of experimenter touch on infants' word finding. Dev. Sci. 2015;18:155–164. doi: 10.1111/desc.12182. [DOI] [PubMed] [Google Scholar]

- Sheridan M.A., Fox N.A., Zeanah C.H. Variation in neural development as a result of exposure to institutionalization early in childhood. Proceedings of the 29th Annual Conference of the Cognitive Science Society. 2012 doi: 10.1073/pnas.1200041109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Shneidman L.A., Goldin-Meadow S. Language input and acquisition in a Mayan village: how important is directed speech? Dev. Sci. 2012;15:659–673. doi: 10.1111/j.1467-7687.2012.01168.x.

- Stack D.M., Muir D.W. Tactile stimulation as a component of social interchange: new interpretations for the still-face effect. Br. J. Dev. Psychol. 1990;8:131–145.

- Thiessen E.D. Effects of inter- and intra-modal redundancy on infants' rule learning. Lang. Learn. Dev. 2012;8:197–214.

- Tomasello M., Carpenter M. Shared intentionality. Dev. Sci. 2007;10:121–125. doi: 10.1111/j.1467-7687.2007.00573.x.

- Tomasello M., Kruger A.C., Ratner H.H. Cultural learning. Behav. Brain Sci. 1993;16:495–552.

- Tomasello M., Call J., Nagell K., Olguin R., Carpenter M. The learning and use of gestural signals by young chimpanzees: a trans-generational study. Primates. 1994;35:137–154.

- Tomasello M. Harvard University Press; Cambridge, MA: 2000. The Cultural Origins of Human Cognition.

- Vouloumanos A., Werker J.F. Listening to language at birth: evidence for a bias for speech in neonates. Dev. Sci. 2007;10:159–164. doi: 10.1111/j.1467-7687.2007.00549.x.

- Vygotsky L.S. MIT Press; Cambridge, MA: 1962. Thought and Language.

- Weiss S.J., Wilson P., Morrison D. Maternal tactile stimulation and the neurodevelopment of low birth weight infants. Infancy. 2004;5:85–107.

- Windsor J., Benigno J.P., Wing C.A., Carroll P.J., Koga S.F., Nelson C.A., III. Effect of foster care on young children's language learning. Child Dev. 2011;82:1040–1046. doi: 10.1111/j.1467-8624.2011.01604.x.

- Winnicott D.W. Perseus Publishing; Cambridge, MA: 1964. The Child, the Family, and the Outside World.

- Yoon J.M.D., Johnson M.H., Csibra G. Communication-induced memory biases in preverbal infants. Proc. Natl. Acad. Sci. U. S. A. 2008;105:13690–13695. doi: 10.1073/pnas.0804388105.

Associated Data
