Abstract

Cochlear implant (CI) users find it extremely difficult to discriminate between talkers, which may partially explain why they struggle to understand speech in multi-talker environments. Recent studies of postlingually deafened CI users suggest that these difficulties may stem from limited use of vocal-tract length (VTL) cues, owing to the degraded spectral resolution transmitted by the CI device. The aim of the present study was to assess the ability of adult CI users with no prior acoustic experience, i.e., prelingually deafened adults, to discriminate between resynthesized “talkers” based on fundamental frequency (F0) cues, VTL cues, or both. Performance was compared with that of individuals with normal hearing (NH) listening to either degraded stimuli (processed with a noise-excited channel vocoder) or non-degraded stimuli. Results show that (a) age at implantation was associated with discrimination between talkers based on VTL cues but not on F0 cues, with better discrimination for those implanted at an earlier age; (b) for the CI users, VTL discrimination was positively related to speech recognition scores in quiet and in noise, but not to frequency discrimination or cognitive abilities; (c) early-implanted CI users showed voice discrimination ability similar to that of NH adults listening to vocoded stimuli. These data support the notion that voice discrimination is limited by the speech processing of the CI device. However, they also suggest that early implantation may facilitate sensory-driven tonotopicity and/or improve higher-order auditory functions, enabling better perception of VTL spectral cues for voice discrimination.

Keywords: cochlear implant, voice discrimination, talker discrimination, VTL, vocal-tract length, early implantation

Introduction

Tracking the voice of a specific talker of interest has high ecological and social relevance, as the voice carries important information about the speaker's sex, physical characteristics, and affect (Belin et al. 2004). Identifying the voice characteristics of a specific talker can also be crucial for segregating talkers in a multi-talker environment, an ability that has been found to improve speech perception in noise (Bronkhorst 2015). These characteristics include the fundamental frequency (F0) and the formant frequencies, which provide cues to the vocal-tract length (VTL) of a specific speaker (Başkent and Gaudrain 2016; Darwin et al. 2003; Mackersie et al. 2011; Vestergaard et al. 2009, 2011). In adults with normal hearing (NH), for example, differences in F0 between two simultaneous talkers produced systematic improvements in speech perception when greater than two semitones, and differences in VTL produced systematic improvements when greater than 1.3 semitones (Darwin et al. 2003). The largest gains in performance, however, followed a combined change in both F0 and VTL, suggesting that NH listeners rely strongly on both cues to differentiate between talkers (Darwin et al. 2003), as has also been reported for gender categorization (Fuller et al. 2014; Skuk and Schweinberger 2013; Smith et al. 2007; Smith and Patterson 2005).

Adults with severe to profound hearing loss who use cochlear implants (CIs) find it difficult to discriminate between talkers (e.g., Mühler et al. 2009) and to identify a specific talker of interest (e.g., Cullington and Zeng 2011; Vongphoe and Zeng 2005), which may partially explain why they struggle to understand speech in multi-talker environments (e.g., Fu et al. 1998; Munson and Nelson 2005). They also show poor perception of speaker gender (e.g., Fu et al. 2005; Fuller et al. 2014; Massida et al. 2013), vocal emotions (e.g., Luo et al. 2007), lexical tones (e.g., Han et al. 2009; Morton et al. 2008; Peng et al. 2004), and speech prosody (e.g., Chatterjee and Peng 2008; Luo et al. 2012), all of which are speech features known to be mediated by changes in F0 (Moore et al. 2009). Difficulties in gender and speaker identification and in vocal emotion recognition (e.g., Luo et al. 2007), as well as a degraded ability to discriminate voices from environmental sounds (e.g., Massida et al. 2011), have also been shown for listeners with NH tested with noise-excited channel vocoders. These findings have been attributed primarily to the poor place-pitch cues resulting from the limited spectral representation provided by the CI electrode array (Laneau et al. 2004; Rogers et al. 2006). It has been suggested that, given spectrally reduced speech, CI users also rely on periodicity cues transmitted to individual electrodes via the temporal envelope of the signal (e.g., Chatterjee and Peng 2008; Shannon 1983; Zeng 2002). These periodicity cues may be insufficient, however, because temporal pitch perception in CI users has been shown to saturate at around 300 Hz (e.g., Zeng 2002), especially in less advanced CI processors with low stimulation rates (e.g., Laneau et al. 2006). This saturation may result from the limited amplitude-modulation frequencies of the temporal envelope of the incoming signal, which is superimposed on fixed-rate pulse trains (e.g., Luo et al. 2012). Nevertheless, recent evidence suggests that CI users can perceive F0 cues, at least to some extent, via the temporal envelope of the signal (e.g., Chatterjee and Peng 2008; Luo et al. 2008; Segal et al. 2016; Schvartz-Leyzac and Chatterjee 2015). Overall, these studies point to degraded temporal and, even more so, spectral resolution with a CI, which may hamper F0 and VTL perception.

Recent studies have separately assessed the perception of F0 and VTL cues by CI users to better understand how the degraded spectro-temporal resolution of the CI affects the coding of each cue (Fuller et al. 2014; Meister et al. 2016). In these studies, the ability to recognize a speaker's gender was tested while word or sentence stimuli were manipulated to vary F0, VTL, or both systematically. Specifically, adult CI users with postlingual deafness were asked to categorize the stimuli as spoken by a man or a woman (Fuller et al. 2014), or to rate the “maleness” or “femaleness” of the stimuli (Meister et al. 2016). Results from both studies indicated abnormal gender categorization: CI users made only limited use of VTL cues and judged the speaker's gender almost entirely on the basis of F0 cues. These results were explained in terms of the accessibility of the different cues for speaker gender identification. Whereas VTL is coded solely by spectral (place) information, which is generally highly degraded in the CI device, F0 is coded by both spectral and temporal information, the latter being better preserved in most CIs (e.g., Fu et al. 2004; Laneau and Wouters 2004; Xu and Pfingst 2008). This explanation is supported by recent evidence from CI simulations showing F0 perception to be more resilient than VTL perception to a reduction in spectral resolution (Gaudrain and Başkent 2015).

Factors other than the poor frequency resolution of the CI device may also influence VTL perception in CI users. Cortical reorganization induced by a prolonged period of deafness may limit the ability of CI users to benefit from the spectral cues transmitted by the CI. This explanation is supported by the findings of Coez et al. (2008), showing degraded functional activation in voice-sensitive temporal regions in postlingual CI users. Another possible explanation for the limited VTL perception of CI users is difficulty in exploiting the VTL cues delivered by the CI device (Gaudrain and Başkent 2015). If, for example, VTL perception requires the listener to relate the acoustic speech information to the vocal-tract gestures of the speaker (e.g., Galantucci et al. 2006; Liberman and Mattingly 1985), postlingual CI users, who learned to process VTL cues from acoustic information, may find it difficult to adapt to the spectro-temporal information provided by the CI (Massida et al. 2011, 2013). Based on this notion, early-implanted prelingual CI users, who have no prior experience with acoustic information, may be expected to generalize information across speakers better than postlingual CI users. To our knowledge, this hypothesis has not been tested.

The major aim of the present study was to assess the ability of CI users with prelingual deafness to discriminate between sentences based on fundamental frequency cues (F0 discrimination), VTL cues (VTL discrimination), or both (F0 + VTL discrimination), in relation to age at implantation. Performance was compared with that of NH individuals listening to stimuli that were either spectrally degraded by a vocoder or unprocessed. The secondary aim was to explore possible associations between voice discrimination by prelingually deafened CI users and their auditory and cognitive abilities, including speech recognition in quiet and in noise, frequency discrimination of pure tones, auditory memory, attention, and non-verbal reasoning. We hypothesized that deterioration of spectral organization in cortical and subcortical regions prior to implantation (Fallon et al. 2014a; Shepherd and Hardie 2001) would result in poorer VTL discrimination by late-implanted prelingually deafened adults than by those implanted early. Thus, we expected the late-implanted individuals to place more weight on F0 cues than the early-implanted individuals. Following this line of reasoning, we also expected the late-implanted individuals to show minimal, if any, benefit from combined F0 and VTL cues over F0 cues alone, because of their difficulty perceiving VTL cues. Finally, voice discrimination scores were expected to correlate with speech recognition, given that both tasks require efficient spectral processing of sound.

Materials and Methods

Participants

Eighteen adult CI users (mean age = 25.11 ± 3.94 years) and nine adults with NH (mean age = 23.78 ± 2.39 years) participated in the present study. All the CI users were prelingually deafened: sixteen were congenitally deaf, and two were deafened before the age of 1.5 years. All had used hearing aids with minimal success before implantation. Seven were bilateral CI users who had been implanted sequentially. All used spoken language as their primary mode of communication. Background information for the CI users is shown in Table 1. Half of the CI users (n = 9) were “early-implanted” (at up to 4 years of age), and the other half were “late-implanted” (at 6 years of age or older). This cutoff was based on studies showing that the most sensitive period for auditory deprivation extends up to 4 years of age, with normal synaptogenesis in the human auditory cortex occurring up to this age (Kral and Tillein 2006). Demographic background for the two subgroups is shown in Table 2. The CI users were recruited via the internet and social networks and were paid for their participation. The NH adults were university students, all with pure-tone thresholds ≤ 20-dB HL bilaterally at 250, 500, 1000, 2000, and 4000 Hz. None of the participants had previous musical training, and none had known attention deficits, based on self-report. Mean standard scores on the non-verbal intelligence test for the two CI groups were within the test norms (Raven 1998), suggesting that the cognitive abilities of both CI groups were similar to those of adults with NH.

Table 1.

Background information of the CI participants

| Subject ID | Gender | Etiology | Age at identification (years:months) | Age at implantation (years:months) | Age at testing (years:months) | Implant | Vocation |
|---|---|---|---|---|---|---|---|
| CI1 | M | Genetic | 0:10 | 3 (L) | 23 | Cochlear nucleus 24 | Student |
| CI2 | M | Unknown | 0:03 | 12:09 (R) | 28:05 | Cochlear nucleus 24 | Practical engineer |
| CI3 | F | Connexin | 0:07 | 13 (R) | 23:10 | Advanced bionics-Naida | Ultrasound technician |
| CI4 | M | Connexin 26 | 1:0 | 21:06 (R) | 31:02 | Cochlear nucleus 22 | Hi-Tech |
| CI5 | F | Unknown | 0:07 | 8:04 (R) | 24 | Advanced bionics-Naida | Student |
| CI6 | M | Genetic | 1:06 | 3 (L) | 21:02 | Cochlear nucleus 22 | Soldier |
| CI7 | F | Genetic | 0:06 | 2:06 (L) | 19:07 | Cochlear-Esprit | Student |
| CI8 | M | Connexin 26 | 1:0 | 29:08 (L) | 31:02 | Advanced bionics-Naida | Hi-Tech |
| CI9 | M | Viral | 1:03 | 4 (R), 20 (L) | 25:06 | Cochlear nucleus 24 | Student |
| CI10 | M | Unknown | 0:06 | 9 (L), 24:06 (R) | 25:08 | Both: cochlear nucleus 22 | Salesman |
| CI11 | F | Unknown | 0:03 | 2:10 (L), 5:02 (R) | 20:11 | Both: cochlear nucleus freedom | Soldier |
| CI12 | M | Genetic | 1:06 | 3:09 (R), 15 (L) | 21:07 | Cochlear nucleus Esprit (R), Freedom (L) | Student |
| CI13 | M | Unknown | 0:06 | 6 (L) | 21:11 | Cochlear nucleus 22 | Gym instructor, basketball player |
| CI14 | M | Unknown | 0:08 | 2:06 (L) | 24:04 | Cochlear nucleus freedom | Soldier |
| CI15 | F | Waardenburg syndrome | 0:03 | 2:06 (L), 16 (R) | 22:09 | Cochlear nucleus freedom (R), Nucleus5 (L) | Student |
| CI16 | M | Connexin | 0:03 | 15:04 (R), 25:08 (L) | 27:01 | Both: Med-El Opus | Yeshiva student |
| CI17 | M | Meningitis | 0:07 | 2:03 (R), 14 (L) | 22:11 | Cochlear nucleus 22 (R), 24 (L) | Odd jobs |
| CI18 | M | Unknown | 1:06 | 33:04 (L) | 35:03 | Med-El Opus 2 | Hi-Tech |

Table 2.

Means (M), standard deviations (SD), and range of the demographic background for the two subgroups of CI users: “early-implanted” and “late-implanted”

| CI group | Age at identification (years:months) | Age at first implantation (years:months) | Age at testing (years:months) |
|---|---|---|---|
| “Early-implanted” | M = 0:10, SD = 0:06, range = 0:03–1:06 | M = 3:01, SD = 0:07, range = 2:03–4:0 | M = 22:05, SD = 1:10, range = 19:05–25:06 |
| “Late-implanted” | M = 0:08, SD = 0:05, range = 0:03–1:06 | M = 16:07, SD = 10:01, range = 6:0–33:04 | M = 27:07, SD = 4:03, range = 21:09–35:03 |

The study was approved by the Institutional Review Board (ethics committee) of Tel Aviv University.

Stimuli

Three sentences from the Hebrew version of the Matrix sentence test (following Kollmeier 2015), recorded by a native Hebrew-speaking female talker, were used for the voice discrimination task. To reduce working memory demands, the original five-word sentences of the Hebrew Matrix test were shortened to three words: a subject, a predicate, and an object (mean duration = 104.67 ± 6.11 ms). The mean F0 and first four formants of the three tested sentences are detailed in Table 3. The sentences were manipulated to produce three sets of materials in which F0 was lowered, the formant frequencies were lowered (simulating a longer VTL), or both, by a given number of semitones relative to the original sentences. For these manipulations, a 13-point stimulus continuum was constructed, with values between 0.18 and 8 semitones in logarithmically spaced steps. Specifically, the processed continuum contained stimuli that were 0.18, 0.26, 0.36, 0.51, 0.72, 1.02, 1.44, 2.02, 2.86, 4.02, 5.67, and 8 semitones lower than the original stimuli in F0, VTL, or both F0 and VTL. Thus, for the first sentence, whose mean F0 was 175.62 Hz, F0 manipulations ranged exponentially from 174 to 110.35 Hz, and VTL manipulations ranged exponentially from a formant-scaling ratio (manipulated to original formant frequencies) of 0.99 for the smallest manipulation to 0.63 for the largest. All manipulations were implemented using PRAAT software version 5.4.17 (copyright © 1992–2015 by Paul Boersma and David Weenink). F0 changes were performed using PRAAT's Manipulation editor, which employs the PSOLA algorithm (Moulines and Charpentier 1990) to extract and modify pitch. VTL changes were performed using the “Change Gender” editor, which resamples the sound to compress the frequency axis by a range of factors (the same ratios as for the F0 changes) and then applies the PSOLA algorithm to restore the original pitch and duration. F0 + VTL changes were performed using first the Change Gender editor and then the Manipulation editor.
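
The semitone arithmetic behind this continuum can be sketched as follows. This is an illustration only, not the authors' code: the helper names are hypothetical, the continuum values are copied from the text above, and the actual resynthesis (PSOLA and resampling in PRAAT) is not reproduced. A downward shift of s semitones multiplies a frequency by 2^(−s/12); for the F0 manipulation this applies to F0, and for the VTL manipulation it applies uniformly to all formant frequencies.

```python
# Downward shifts (semitones) listed in the Stimuli section.
CONTINUUM_ST = [0.18, 0.26, 0.36, 0.51, 0.72, 1.02, 1.44, 2.02, 2.86, 4.02, 5.67, 8.0]


def semitones_to_ratio(shift_st: float) -> float:
    """Frequency ratio (new / original) for a downward shift of `shift_st` semitones."""
    return 2.0 ** (-shift_st / 12.0)


def f0_targets(mean_f0_hz: float, steps=CONTINUUM_ST) -> list[float]:
    """Target mean F0 (Hz) for each step of the F0 manipulation (hypothetical helper)."""
    return [mean_f0_hz * semitones_to_ratio(s) for s in steps]


def formant_scale_factors(steps=CONTINUUM_ST) -> list[float]:
    """Factor applied to all formant frequencies at each step of the VTL manipulation.

    Formant frequencies scale inversely with vocal-tract length, so lowering all
    formants by s semitones simulates a vocal tract 2**(s/12) times longer.
    """
    return [semitones_to_ratio(s) for s in steps]


if __name__ == "__main__":
    # Sentence S1 has a mean F0 of 175.62 Hz according to the text.
    for st, f0, k in zip(CONTINUUM_ST, f0_targets(175.62), formant_scale_factors()):
        print(f"{st:5.2f} st  F0 = {f0:6.1f} Hz  formant factor = {k:.3f}")
```

For the smallest (0.18 semitone) and largest (8 semitone) steps, the formant factors come out at about 0.99 and 0.63, the range quoted in the text.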

Table 3.

Mean F0 and first four formants (F1–F4) in Hertz of the three tested sentences (S1–S3)

| Sentence/frequency (Hz) | S1 | S2 | S3 |
|---|---|---|---|
| F0 | Mean = 176 ± 28, range = 133–238 | Mean = 184 ± 18, range = 146–218 | Mean = 183 ± 21, range = 149–241 |
| F1 (mean) | 586 | 872 | 907 |
| F2 (mean) | 1880 | 2001 | 2129 |
| F3 (mean) | 3092 | 3395 | 3397 |
| F4 (mean) | 4213 | 4147 | 4243 |

Vocoded Stimuli

Participants with NH were tested both with and without stimulus processing by a noise-excited channel vocoder. The vocoder used eight channels for the analysis and reconstruction filterbanks and Gaussian noise for acoustic reconstruction. An eight-channel vocoder was chosen because previous studies have shown that such a configuration yields speech intelligibility similar to that of the best-performing CI users (Friesen et al. 2001; Fu et al. 2004, 2005). The analysis and reconstruction filterbanks were specified on a logarithmic frequency scale, with center frequencies spaced between 250 and 4000 Hz. Each filter was implemented as a 256th-order finite impulse response (FIR) filter designed with the Hann window method. Filter bandwidths were defined such that the 6-dB crossover points fell midway between adjacent center frequencies on the logarithmic scale. Because the filterbank used 8 filters spanning 4 octaves, each filter had a bandwidth of 1/2 octave; specifically, the 6-dB crossover points occurred at $2^{\pm 1/4}$ times the center frequency of the filter. The channel envelopes were extracted from the filterbank outputs using the Hilbert transform. Independent Gaussian noise was generated for each channel, multiplied by the corresponding channel envelope, and processed through the reconstruction filterbank. The processed sentences were the same as those presented to the CI users. For each sentence, the average power across time was calculated for each channel output of the analysis filterbank and then used to scale the corresponding output of the reconstruction stage just before the channels were summed to form the vocoder output.
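
The processing chain described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the band edges (250 × 2^(k/2) Hz for k = 0 to 8, so that eight half-octave bands exactly cover 250–4000 Hz), the 257-tap Hann-windowed-sinc design, the FFT-based Hilbert envelope, zero-phase “same”-mode convolution, and all function names are assumptions. The sampling rate is not given in the text and is left to the caller; the input must be longer than the 257-tap filters.

```python
import numpy as np


def bandpass_fir(lo_hz, hi_hz, fs, numtaps=257):
    """Hann-windowed-sinc band-pass FIR of order numtaps - 1 (256 by default).

    With the window method the gain at each cutoff is close to one half (about
    -6 dB), so lo_hz and hi_hz act as the 6-dB crossover points.
    """
    n = np.arange(numtaps) - (numtaps - 1) / 2
    # Ideal band-pass = ideal low-pass at hi_hz minus ideal low-pass at lo_hz.
    h = (2 * hi_hz / fs) * np.sinc(2 * hi_hz / fs * n) - (2 * lo_hz / fs) * np.sinc(2 * lo_hz / fs * n)
    h *= np.hanning(numtaps)
    # Normalize to unit gain at the geometric center of the band.
    fc = np.sqrt(lo_hz * hi_hz)
    gain = np.abs(np.sum(h * np.exp(-2j * np.pi * fc / fs * np.arange(numtaps))))
    return h / gain


def hilbert_envelope(x):
    """Magnitude of the analytic signal, computed with the FFT."""
    n = len(x)
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1 : n // 2] = 2.0
    else:
        weights[1 : (n + 1) // 2] = 2.0
    return np.abs(np.fft.ifft(np.fft.fft(x) * weights))


def noise_vocoder(x, fs, n_channels=8, f_lo=250.0, f_hi=4000.0, seed=0):
    """Noise-excited channel vocoder (sketch)."""
    x = np.asarray(x, dtype=float)
    rng = np.random.default_rng(seed)
    # Log-spaced band edges: 8 bands over 4 octaves gives 1/2-octave bands whose
    # edges sit at 2**(+/-1/4) times each band's geometric center frequency.
    edges = f_lo * (f_hi / f_lo) ** (np.arange(n_channels + 1) / n_channels)
    out = np.zeros_like(x)
    for lo, hi in zip(edges[:-1], edges[1:]):
        h = bandpass_fir(lo, hi, fs)
        band = np.convolve(x, h, mode="same")              # analysis filter
        env = hilbert_envelope(band)                        # channel envelope
        carrier = rng.standard_normal(len(x))               # independent noise per channel
        chan = np.convolve(env * carrier, h, mode="same")   # reconstruction filter
        # Scale so the channel's average power matches the analysis-band power.
        p_band, p_chan = np.mean(band**2), np.mean(chan**2)
        if p_chan > 0:
            chan *= np.sqrt(p_band / p_chan)
        out += chan
    return out
```

Reusing the same filter for analysis and reconstruction mirrors the description of matched filterbanks; note that at common audio sampling rates a 256th-order filter gives transition bands comparable in width to the lowest half-octave band, so the lowest channels are only loosely band-limited.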

Apparatus

Stimuli were delivered from a laptop computer through an external sound card and two tabletop loudspeakers located 45° to the right and left of the participant. Reflections from the table surface were minimized because most of the table was covered by the laptop computer, loudspeakers, and mouse pad. Stimuli were presented at approximately 65-dB SPL, as measured by a portable sound level meter held at the approximate location of the participant's head. All testing took place in a single-wall sound-treated room. Bilateral CI users were tested wearing both CIs. Unilateral CI users were tested with their CI only (without a hearing aid on the other side). Participants with NH had their right (n = 5) or left (n = 4) ear occluded with earplugs and earphones throughout testing to simulate single-sided hearing loss.

Procedure

Difference limens (DLs) were measured for the F0, VTL, and F0 + VTL manipulations using a three-interval, three-alternative forced-choice procedure. Each trial consisted of three sentences: two reference sentences and one comparison sentence presented in a randomly selected interval. The inter-stimulus interval was 300 ms. Participants were instructed to select the sentence that “sounded different” using the computer interface. A two-down, one-up adaptive tracking procedure was used to estimate the DL corresponding to the 70.7%-correct point on the psychometric function (Levitt 1971). The difference between the stimuli was reduced by a factor of two until the first reversal. Following the first reversal, the difference was reduced or increased by , until the sixth reversal. DLs were calculated as the geometric mean of the last four reversals. There was no time limit for responding, and no feedback was provided.
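
The tracking rule above can be sketched as follows. The step factor used after the first reversal is not given in the text, so it is a required parameter here rather than a guessed value; the starting difference, the function name, and the simulated listener in the usage check are hypothetical. The sketch also treats the difference as continuous, whereas the actual stimuli were drawn from the discrete continuum described under Stimuli.

```python
import math
from typing import Callable


def two_down_one_up(
    respond: Callable[[float], bool],
    step_after_first_reversal: float,  # not stated in the text; must be supplied
    start: float = 8.0,                # hypothetical starting difference (semitones)
    first_step: float = 2.0,           # factor of two until the first reversal
    n_reversals: int = 6,
    n_average: int = 4,
    max_trials: int = 1000,
) -> float:
    """Two-down one-up staircase converging on ~70.7% correct (Levitt 1971).

    `respond(diff)` runs one trial at difference `diff` and returns True if correct.
    Returns the geometric mean of the last `n_average` reversal values.
    """
    diff = start
    run_correct = 0
    last_direction = 0  # -1 = harder (smaller diff), +1 = easier (larger diff)
    reversals: list[float] = []
    for _ in range(max_trials):
        if respond(diff):
            run_correct += 1
            if run_correct < 2:
                continue  # two consecutive correct answers are needed to step down
            run_correct = 0
            direction = -1
        else:
            run_correct = 0
            direction = +1
        # A reversal is a change of tracking direction; record the value where it occurred.
        if last_direction != 0 and direction != last_direction:
            reversals.append(diff)
            if len(reversals) == n_reversals:
                break
        last_direction = direction
        factor = first_step if not reversals else step_after_first_reversal
        diff = diff / factor if direction < 0 else diff * factor
    if len(reversals) < n_average:
        raise RuntimeError("track ended before enough reversals were collected")
    last = reversals[-n_average:]
    return math.exp(sum(math.log(r) for r in last) / n_average)
```

With a deterministic simulated listener who answers correctly only when the difference exceeds 2 semitones, and a hypothetical post-reversal factor of √2, the track oscillates between 2 and 2√2 semitones, and the returned DL is their geometric mean.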

Study Design

Before formal testing, each participant completed a short familiarization task of 5–10 trials with the F0 manipulation at a difference of −8 semitones between the reference and comparison sentences, to ensure that the task was understood. For the CI users, three threshold estimates were obtained for each of the three manipulations (F0, VTL, and F0 + VTL), for a total of nine tests per participant. For a given manipulation type, each threshold estimate was obtained with a different one of the three sentences. For the NH group, four threshold estimates were obtained for each of the three manipulations. The first three estimates for each manipulation were obtained with vocoded stimuli, using the same sentences heard by the CI users. The fourth was obtained with non-vocoded stimuli; only one non-vocoded estimate was obtained because a pilot study showed no learning effects between sentences in adults with NH. The order of the manipulation types and sentences was randomized across participants. Each measurement lasted approximately 2–3 min, and short breaks were provided when needed.
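
The nine-test design for the CI users can be sketched as a randomized schedule. The text does not say whether manipulation types were blocked or interleaved, so this sketch assumes full interleaving of all manipulation-by-sentence pairs; the seed handling and names are hypothetical.

```python
import random

MANIPULATIONS = ("F0", "VTL", "F0+VTL")
SENTENCES = ("S1", "S2", "S3")


def ci_test_order(seed: int) -> list[tuple[str, str]]:
    """Nine threshold estimates for one CI participant, in randomized order.

    Each manipulation is paired once with each sentence (3 x 3 = 9 tests).
    """
    rng = random.Random(seed)  # per-participant seed gives a reproducible order
    tests = [(m, s) for m in MANIPULATIONS for s in SENTENCES]
    rng.shuffle(tests)
    return tests


if __name__ == "__main__":
    for i, (manipulation, sentence) in enumerate(ci_test_order(seed=1), start=1):
        print(i, manipulation, sentence)
```

A blocked design would instead shuffle the three manipulations and, within each, the three sentences.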

Secondary Test Measures

Secondary test measures were obtained before the voice discrimination testing for half of the participants and following the voice discrimination testing for the other half.

Speech recognition was evaluated for the CI users with two tests: (1) the prerecorded Hebrew version of the AB (Arthur Boothroyd; Boothroyd 1968) open-set, monosyllabic, consonant–vowel–consonant isophonemic word recognition test (HAB; Kishon-Rabin et al. 2004), in which two 10-word lists were presented at 65-dB SPL in quiet; and (2) the Hebrew version of the Matrix sentence-in-noise test (following Kollmeier 2015), which included 20 five-word sentences presented at 79-dB SPL with a fixed speech-shaped background noise at 65-dB SPL, yielding a signal-to-noise ratio of +14 dB.

Frequency discrimination for pure tones (DLF) was evaluated for all participants using a 1000-Hz reference tone and 200 comparison tones ranging from 1001 to 1200 Hz in 1-Hz steps. Each stimulus had a total duration of 300 ms and was gated with 25-ms cosine rise/fall ramps. Stimuli were presented at 65-dB SPL. Thresholds were determined using an adaptive three-interval two-alternative procedure (Zaltz et al. 2010) with two-down one-up tracking. No feedback was provided. Two DLF thresholds were obtained for each participant.
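
The DLF stimuli can be sketched as follows. The 48-kHz sampling rate, the raised-cosine ramp shape, and the names are assumptions (the text specifies only “cosine ramps”), and presentation level (65-dB SPL) is a calibration matter that the sketch does not model.

```python
import numpy as np


def ramped_tone(freq_hz: float, fs: int = 48000, dur_s: float = 0.300, ramp_s: float = 0.025) -> np.ndarray:
    """Unit-amplitude pure tone gated with raised-cosine onset and offset ramps."""
    n = int(round(dur_s * fs))
    n_ramp = int(round(ramp_s * fs))
    t = np.arange(n) / fs
    tone = np.sin(2 * np.pi * freq_hz * t)
    # Raised-cosine ramp rising from 0 toward 1 over n_ramp samples.
    ramp = 0.5 * (1.0 - np.cos(np.pi * np.arange(n_ramp) / n_ramp))
    envelope = np.ones(n)
    envelope[:n_ramp] = ramp
    envelope[-n_ramp:] = ramp[::-1]  # mirrored ramp for the offset
    return tone * envelope


REFERENCE_HZ = 1000
COMPARISON_HZ = list(range(1001, 1201))  # 200 comparison tones in 1-Hz steps
```

An adaptive track such as the two-down one-up rule would then index into `COMPARISON_HZ` rather than varying frequency continuously.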

Auditory memory capacity and auditory working memory abilities were assessed for 17 of the 18 CI users and for all the participants in the control group, using the forward and backwards digit span subtests of the “Wechsler intelligence scale” (Wechsler 1991). Visual attention and task switching abilities were assessed for the same participants using the “trail making test” parts A and B (Tombaugh 2004). Non-verbal intelligence was assessed for 12 of the 18 CI users using “Raven’s standard progressive matrices” test (Raven 1998).

Data Analysis

All data except the speech recognition scores were log-transformed before statistical analysis to normalize their distributions (Kolmogorov–Smirnov test: p > 0.05) and to allow parametric statistics. Speech recognition scores were arcsine-transformed before analysis. Statistical analyses were conducted using SPSS software.
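
The two transformations can be sketched as below. The text specifies neither the log base nor the arcsine variant, so base 10 and arcsin(√p) are assumptions; the log base only rescales the data linearly and therefore leaves t and F statistics unchanged.

```python
import math


def log_transform(dl: float) -> float:
    """Base-10 log of a threshold (must be positive)."""
    if dl <= 0:
        raise ValueError("thresholds must be positive to be log-transformed")
    return math.log10(dl)


def arcsine_transform(percent_correct: float) -> float:
    """arcsin(sqrt(p)) in radians, for a score given in percent (0-100)."""
    p = percent_correct / 100.0
    if not 0.0 <= p <= 1.0:
        raise ValueError("score must lie between 0 and 100 percent")
    return math.asin(math.sqrt(p))
```

The arcsine transform stretches scores near 0% and 100%, where binomial variance shrinks, which is why it is commonly applied to percent-correct data before ANOVA.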

Results

Voice Discrimination

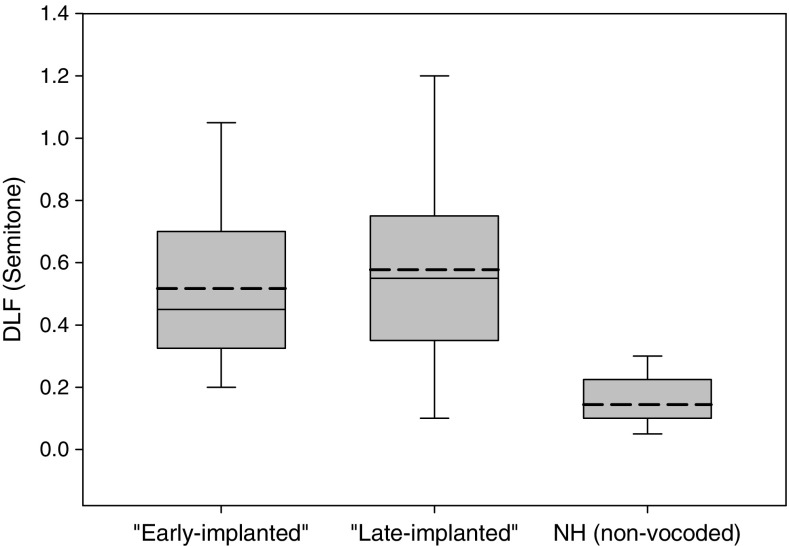

Measured DLs are shown as a function of age at implantation for the CI participants in Fig. 1, with results for the three voice discrimination cues (F0, VTL, and F0 + VTL) shown in separate panels. A linear trend line is shown, which accounts for 2, 54, and 35% of the variance for the F0, VTL, and F0 + VTL DLs, respectively. A power-law trend line ($y = ax^{b} + c$) is also shown, which accounts for 14, 67, and 42% of the variance in the respective conditions. A Pearson product-moment correlation showed a highly significant association between age at implantation and years of experience with CI [r(18) = −0.97, p < 0.001]; therefore, correlations with DLs were computed only for age at implantation. Results showed significant correlations with VTL DL [r(18) = −0.797, p < 0.001] and F0 + VTL DL [r(18) = −0.570, p = 0.013], and a non-significant correlation with F0 DL [r(18) = 0.183, p = 0.466]. These results suggest that the earlier an individual was implanted, the better his or her ability to discriminate between voices based on VTL cues. However, because age at implantation was highly related to experience with CI, the results may also indicate that VTL discrimination benefited from longer durations of CI use. The effect of CI experience was difficult to test, however, because all but two CI users in the present study had more than 10 years of CI experience. Furthermore, the two CI users with less than 10 years of CI use (1:06 and 1:11 years, respectively) were also the ones implanted latest (at 29:08 and 33:04 years of age, respectively). Of these, the first showed the worst VTL DL, whereas the second showed close-to-average VTL results. Therefore, the subsequent analyses were also conducted using age at implantation, on the assumption that it was the factor driving these correlations.
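
The text does not state how the trend lines were fitted. One simple way to fit $y = ax^{b} + c$ is sketched below, as an assumption rather than the authors' method: for a fixed exponent b the model is linear in a and c, so ordinary least squares gives them directly, and b is chosen by grid search. “Variance accounted for” is computed as 1 − SSE/SST, which for the linear trend line equals the squared Pearson r. The grid range and function names are hypothetical.

```python
import numpy as np


def variance_explained(y, y_hat):
    """Proportion of variance in y accounted for by fitted values y_hat."""
    y = np.asarray(y, dtype=float)
    sse = np.sum((y - y_hat) ** 2)
    sst = np.sum((y - y.mean()) ** 2)
    return 1.0 - sse / sst


def fit_linear(x, y):
    """Least-squares line y = m*x + k; returns (m, k, R^2)."""
    x = np.asarray(x, dtype=float)
    m, k = np.polyfit(x, y, 1)
    return m, k, variance_explained(y, m * x + k)


def fit_power_law(x, y, b_grid=None):
    """Fit y = a * x**b + c (x > 0) by grid search over b; returns (a, b, c, R^2).

    For each candidate b the model is linear in (a, c), so ordinary least
    squares gives the best a and c; the b with the smallest residual wins.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if b_grid is None:
        b_grid = np.linspace(-3.0, 3.0, 601)  # hypothetical search range, step 0.01
    best = None
    for b in b_grid:
        design = np.column_stack([x**b, np.ones_like(x)])
        (a, c), *_ = np.linalg.lstsq(design, y, rcond=None)
        sse = np.sum((y - design @ np.array([a, c])) ** 2)
        if best is None or sse < best[0]:
            best = (sse, a, b, c)
    _, a, b, c = best
    return a, b, c, variance_explained(y, a * x**b + c)
```

Because the grid includes b = 1, the power-law fit can never account for less variance than the linear fit (up to grid resolution), consistent with the higher percentages reported for the power-law lines.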

Fig. 1.

Mean F0, VTL, and F0 + VTL DL plotted as a function of age at implantation and years with CI. Also shown in frames are the results of Pearson product moment correlations that were conducted separately for each variable

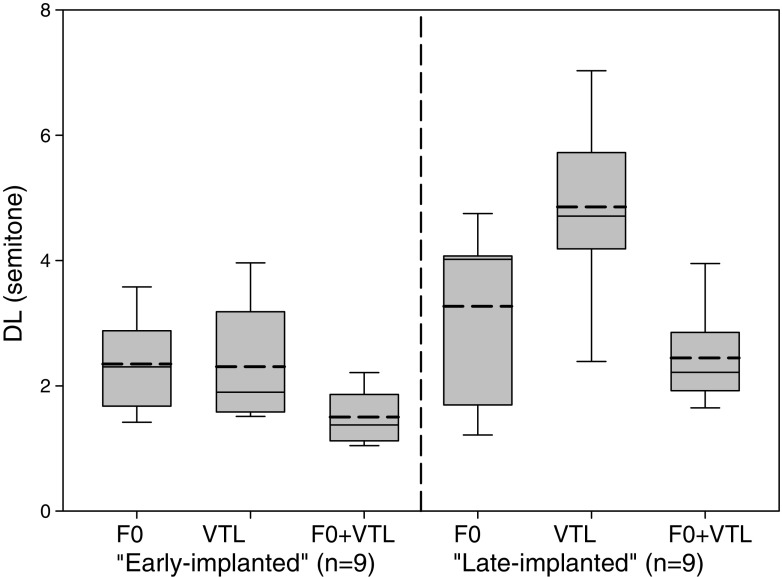

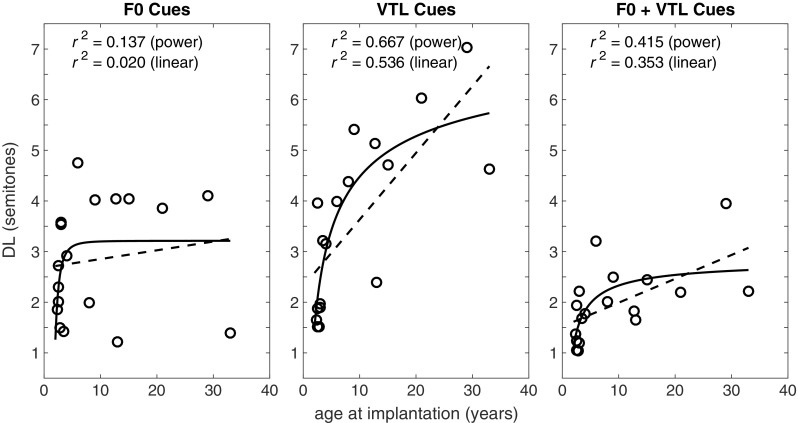

Table 4 displays the DLs of the CI users, categorized into two groups by age at implantation (early-implanted ≤ 4 years; late-implanted ≥ 6 years), and of the NH listeners who listened to vocoded and non-vocoded stimuli. Figure 2 displays box-and-whisker plots of the DLs for the CI users only.

Table 4.

Mean F0, VTL, and F0 + VTL discrimination thresholds in semitones (SD in parentheses) for the CI users, categorized into two groups by age at implantation (“early-implanted” ≤ 4 years and “late-implanted” ≥ 6 years), and for the NH listeners who listened to vocoded and non-vocoded stimuli

| Group | Measurement | F0 | VTL | F0 + VTL | Mean all manipulations |
|---|---|---|---|---|---|
| “Early-implanted” | 1 | 2.60 (1.03) | 2.81 (1.73) | 1.82 (0.66) | 2.41 (0.73) |
| | 2 | 2.49 (0.68) | 2.44 (2.03) | 1.34 (0.96) | 2.09 (0.66) |
| | 3 | 2.19 (1.16) | 1.67 (0.98) | 1.35 (0.61) | 1.73 (0.66) |
| | Mean 1–3 | 2.35 (0.72) | 2.30 (0.90) | 1.50 (0.42) | 2.05 (0.48) |
| “Late-implanted” | 1 | 3.70 (1.69) | 4.79 (1.76) | 2.46 (1.42) | 3.65 (1.05) |
| | 2 | 2.83 (1.40) | 4.51 (1.74) | 2.72 (1.63) | 3.35 (1.04) |
| | 3 | 3.27 (1.81) | 5.27 (1.76) | 2.15 (1.24) | 3.56 (1.29) |
| | Mean 1–3 | 3.27 (1.34) | 4.86 (1.31) | 2.44 (0.72) | 3.52 (1.23) |
| NH listeners (vocoded stimuli) | 1 | 4.06 (2.26) | 2.22 (1.15) | 1.67 (0.58) | 2.65 (1.78) |
| | 2 | 2.7 (2.02) | 2.18 (1.31) | 1.82 (0.8) | 2.24 (1.46) |
| | 3 | 3.06 (1.8) | 1.82 (1) | 1.21 (0.64) | 2.03 (1.43) |
| | Mean 1–3 | 3.28 (2.04) | 2.07 (1.13) | 1.57 (0.7) | 2.36 (1.57) |
| NH listeners (non-vocoded stimuli) | 4 | 0.63 (0.22) | 0.58 (0.17) | 0.36 (0.12) | 0.52 (0.21) |

Fig. 2.

Box-and-whisker plots of the mean F0, VTL, and F0 + VTL discrimination thresholds, shown separately for “early-implanted” and “late-implanted” individuals. Boxes span the 25th–75th percentiles. The continuous horizontal line within each box represents the median and the dashed horizontal line represents the mean. Whiskers extend to the 10th and 90th percentiles

A repeated measures ANOVA was conducted, with manipulation type (F0, VTL, F0 + VTL) and measurement (1, 2, 3) as within-subject variables and age group as the between-subject variable. Mauchly's test of sphericity was non-significant, and sphericity was therefore assumed when determining the degrees of freedom in this analysis. Results showed significant main effects of manipulation type [F(2,32) = 16.725, p < 0.001, η² = 0.511] and age group [F(1,16) = 16.556, p = 0.001, η² = 0.509], and a significant manipulation type × age group interaction [F(2,32) = 5.696, p = 0.008, η² = 0.263]. Pairwise comparisons with Bonferroni adjustment revealed that for the early-implanted participants, the F0 + VTL manipulation yielded significantly lower (better) DLs than the F0 manipulation (p = 0.010) and marginally lower DLs than the VTL manipulation (p = 0.07). No significant difference was found between the F0 and VTL manipulations (p = 0.460). These results indicate that although both F0 and VTL cues contributed to discrimination in the early-implanted group, the combination of the two cues yielded the best discrimination. In contrast, for the late-implanted group, the F0 + VTL and F0 manipulations yielded significantly better DLs than the VTL manipulation (p < 0.001 and p = 0.012, respectively), with no significant difference between the F0 and F0 + VTL manipulations (p = 0.177). These results suggest that voice discrimination in the late-implanted group was based primarily on F0 cues, with minimal, if any, contribution from VTL cues. Bonferroni-adjusted pairwise comparisons between the late- and early-implanted groups for each manipulation showed significantly better DLs for the early-implanted group with the VTL (p < 0.001) and F0 + VTL (p = 0.006) manipulations, but not with the F0 manipulation (p = 0.268). No significant main effect of measurement (1, 2, 3) was found [F(2,32) = 2.797, p = 0.079], although a borderline significant linear trend (p = 0.052) indicated improvement across measurements: a mean improvement of 0.31 semitones (10%) between the first and second measurements and 0.07 semitones (2.5%) between the second and third measurements. These improvements may reflect procedural (task) learning during testing, as all participants were naïve to the task. No other significant interactions were found (p > 0.05).
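
The improvement figures can be approximately reproduced from Table 4. The sketch assumes, since the text does not say, that the two equal-sized CI groups (n = 9 each) are pooled with equal weight using their “Mean all manipulations” values.

```python
# "Mean all manipulations" DLs (semitones) from Table 4, measurements 1-3.
EARLY = [2.41, 2.09, 1.73]
LATE = [3.65, 3.35, 3.56]

# Assumption: the two CI groups (n = 9 each) are pooled with equal weight.
pooled = [(e + l) / 2 for e, l in zip(EARLY, LATE)]


def improvement(before: float, after: float) -> tuple[float, float]:
    """Absolute (semitones) and relative (%) reduction in DL between measurements."""
    diff = before - after
    return diff, 100.0 * diff / before


if __name__ == "__main__":
    for i in range(2):
        d, pct = improvement(pooled[i], pooled[i + 1])
        print(f"measurement {i + 1} -> {i + 2}: {d:.3f} semitones ({pct:.1f}%)")
```

This gives 0.31 semitones (about 10.2%) from the first to the second measurement and about 0.075 semitones (about 2.8%) from the second to the third; the slightly lower 2.5% reported in the text may reflect rounding of intermediate values.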

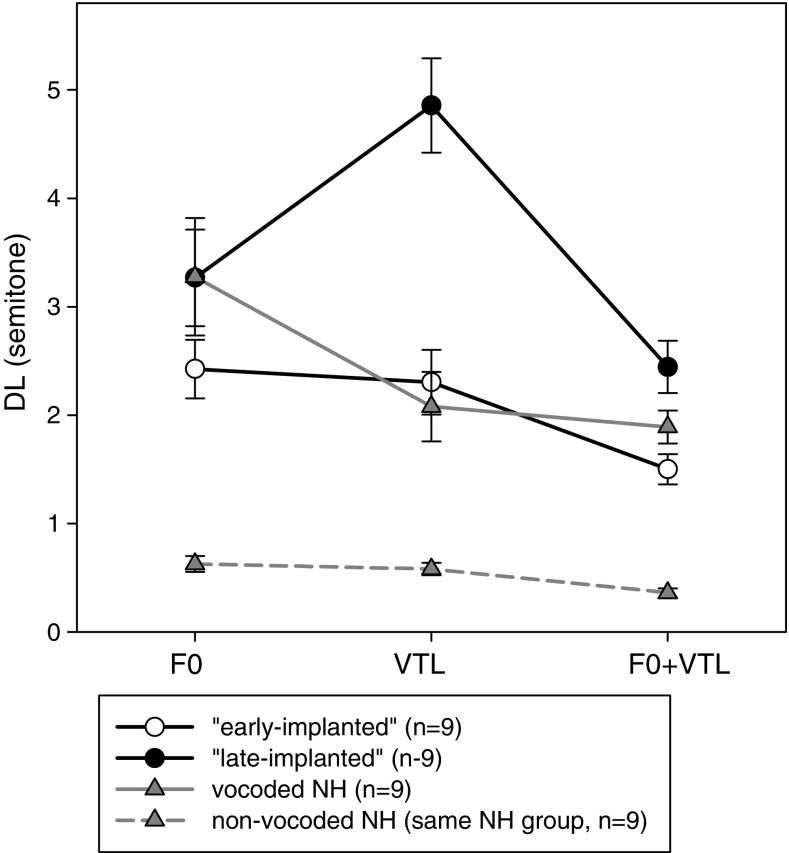

The DLs of the CI groups were compared with the vocoded DLs of the NH group. Because there was no significant learning effect across the three measurements with vocoded stimuli for the NH participants [F(2,16) = 2.834, p = 0.088], the comparison used the mean of the three measurements for each manipulation (Fig. 3). A two-way mixed ANOVA was conducted, with manipulation type (F0, VTL, F0 + VTL) as the within-subject variable and group (early-implanted, late-implanted, NH vocoded) as the between-subject variable. Results showed significant main effects of manipulation type [F(2,24) = 25.580, p < 0.001, η² = 0.516] and group [F(2,24) = 7.034, p = 0.004, η² = 0.370], and a significant interaction [F(4,24) = 5.688, p = 0.001, η² = 0.322]. Pairwise comparisons with Bonferroni adjustment revealed no difference between the groups for the F0 manipulation (p > 0.05). For the VTL and F0 + VTL manipulations, the DLs of the late-implanted group were worse than those of the early-implanted group (p < 0.001 and p = 0.012, respectively) and than those of the vocoded NH group (p < 0.001 and p = 0.056, respectively, the latter only marginally significant). There was no significant difference, however, between the early-implanted group and the vocoded NH group for the VTL and F0 + VTL manipulations (p > 0.05). These results suggest that although all three groups reached similar talker discrimination thresholds when the differences between talkers were based on F0 cues, only the early-implanted CI users reached thresholds similar to those of the vocoded NH listeners when VTL or F0 + VTL cues were provided.

Fig. 3.

Mean (± SE) F0, VTL, and F0 + VTL DL for the early-implanted CI users (n = 9) and “late-implanted” CI users (n = 9) as compared to the NH listeners who listened to vocoded stimuli (n = 9). Also shown are the F0, VTL, and F0 + VTL DL of the (same) NH listeners who listened (in their fourth measurement) to non-vocoded stimuli (dashed line)

The NH group's thresholds with non-vocoded stimuli were much better than their thresholds with vocoded stimuli (Fig. 3). A two-way repeated measures ANOVA was conducted on the DLs of the NH listeners, with measurement (third measurement: vocoded; fourth measurement: non-vocoded) and manipulation type (F0, VTL, F0 + VTL) as the within-subject variables. A main effect of measurement confirmed that the non-vocoded DL was significantly smaller than the vocoded DL [F(1,16) = 72.489, p < 0.001, ƞ2 = 0.901]. There was a significant difference between the manipulation types [F(2,16) = 26.655, p < 0.001, ƞ2 = 0.769], with no measurement × manipulation interaction [F(2,16) = 1.864, p = 0.202]. Pairwise comparisons revealed better thresholds with the F0 + VTL manipulation than with the F0 and VTL manipulations (p = 0.001). The difference between F0 and VTL thresholds was not statistically significant (p = 0.083).

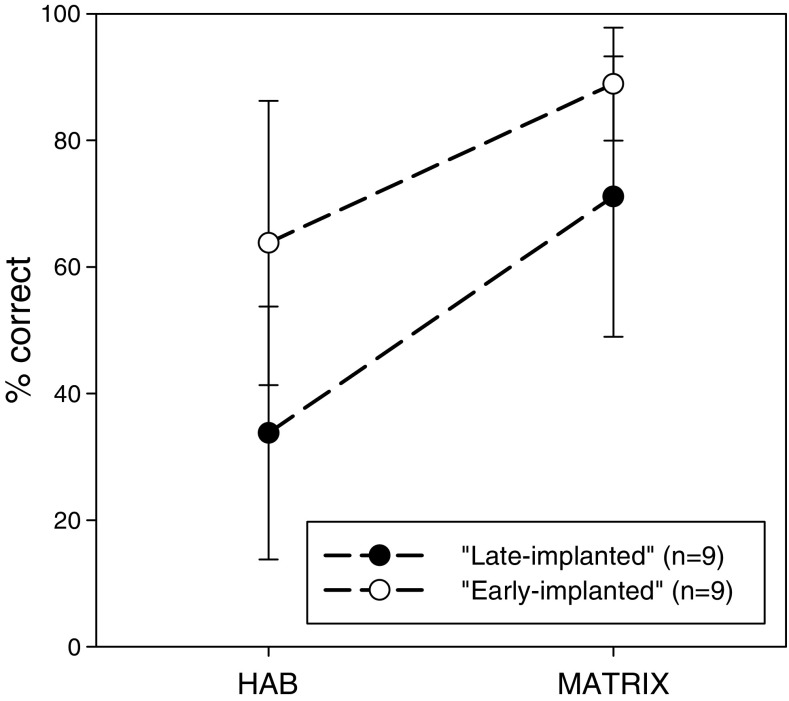

Speech Recognition

Figure 4 shows the mean scores (± SD) on the speech recognition tests for the early-implanted and late-implanted CI groups. Separate univariate analyses were conducted for each test. The early-implanted group scored significantly better than the late-implanted group on both the HAB test [F(1,16) = 11.278, p = 0.004, ƞ2 = 0.429] (difference = 37.36%) and the Matrix test [F(1,17) = 8.372, p = 0.011, ƞ2 = 0.344] (difference = 25.11%).

Fig. 4.

Mean scores (± SD) in the HAB and MATRIX speech recognition tests for the “early-implanted” and “late-implanted” CI users

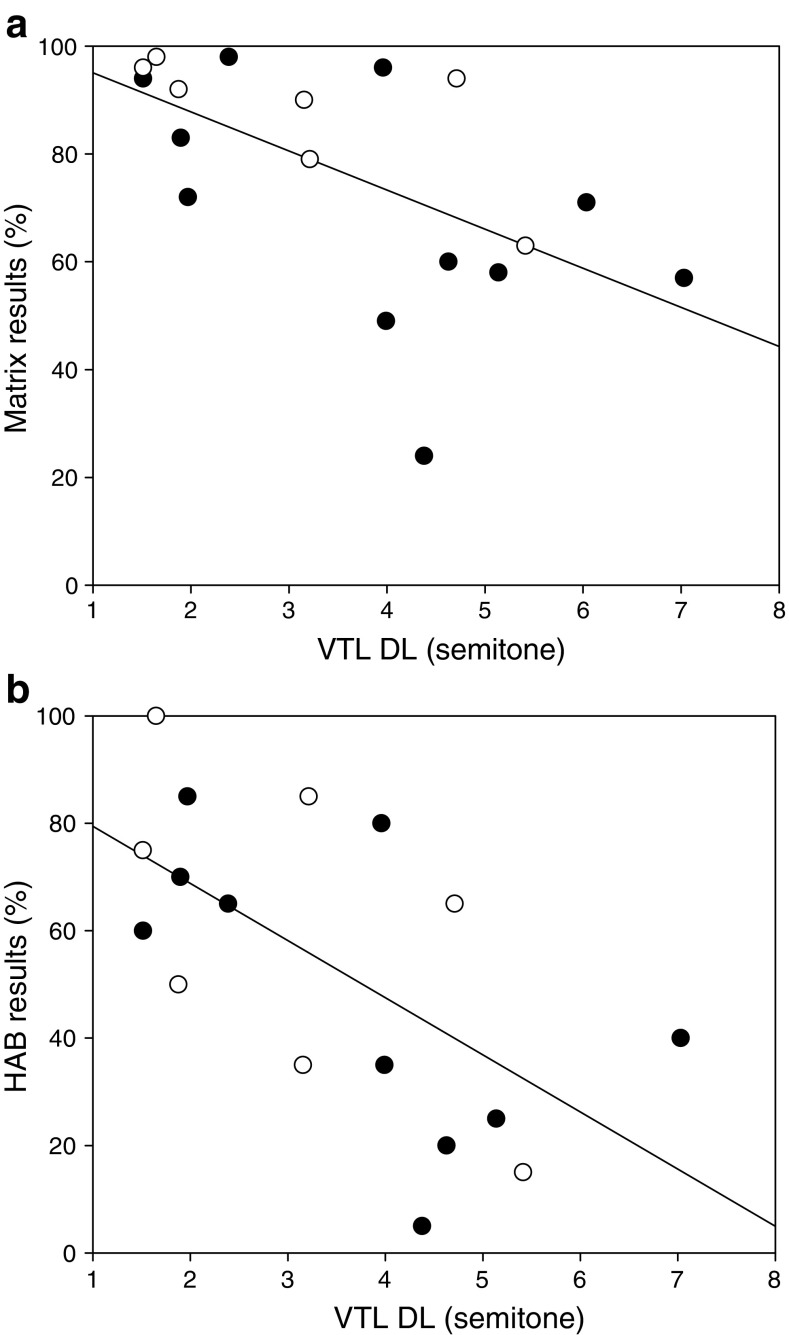

Significant correlations were found between HAB scores and VTL DL [r(17) = −0.637, p = 0.006], between HAB scores and F0 + VTL DL [r(17) = −0.521, p = 0.032], and between Matrix scores and VTL DL [r(18) = −0.629, p = 0.005]. Fig. 5 shows the correlations with VTL DL. Smaller DLs indicate better discrimination. The negative coefficients therefore mean that CI users with finer VTL discrimination had higher speech recognition scores. No other significant correlations were found (p > 0.05).

Fig. 5.

Individual results in the HAB and Matrix speech recognition tests as a function of mean VTL DL for participants with a single CI (filled dots) and participants with two CIs (empty dots)

Frequency Discrimination (DLF) for Pure Tones

Figure 6 shows the DLF results (mean of two measurements) of the early-implanted CI users, late-implanted CI users, and the NH group (non-vocoded). One-way ANOVA with group as the between-subject variable revealed a significant effect of group [F(2,24) = 15.539, p < 0.001], with the NH group showing better thresholds than the early-implanted and the late-implanted CI users (p < 0.001). No significant difference was shown between the two CI groups. No significant correlations were found between mean DLF and F0 DL, VTL DL, or F0 + VTL DL (p > 0.05) for the CI users. No association was found between mean DLF and age at implantation (p > 0.05).

Fig. 6.

Box and whiskers plots for frequency discrimination thresholds of the “early-implanted” CI users, “late-implanted” CI users, and NH control group

Cognitive Function

Results of the cognitive tests for the early-implanted, late-implanted, and control groups are shown in Table 5. Two-sided t tests comparing the two CI groups indicated no significant differences (p > 0.05). Age at implantation was then controlled for as a continuous covariate. With this control, cognitive test scores did not correlate with F0, VTL, or F0 + VTL DLs, with one exception: trail B results correlated significantly with VTL DL [r(16) = 0.64, p = 0.031]. Age at implantation explained 40% of the variance in trail B results [r(16) = 0.63, p = 0.04]. One-way ANOVAs were conducted on the Wechsler and trail test scores, with group (early-implanted, late-implanted, control) as the between-subject variable. A significant effect of group was found only for the Wechsler forward digit span [F(2, 25) = 8.613, p = 0.002], with the NH control group showing better results than the early-implanted CI users (p = 0.007). No other significant differences were found.
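The "40% of the variance" figure follows from squaring the correlation coefficient, since the proportion of variance two variables share is r². The sketch below applies this to the two trail B coefficients reported above. It is only an arithmetic check and does not reproduce the covariate analysis.

```python
def variance_explained(r: float) -> float:
    """Proportion of variance shared by two variables: the squared Pearson r."""
    return r ** 2

# Correlation coefficients reported above for trail B
pairs = [("age at implantation vs trail B", 0.63), ("trail B vs VTL DL", 0.64)]
for label, r in pairs:
    print(f"{label}: r = {r:.2f}, r^2 = {variance_explained(r):.1%}")
```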

Table 5.

Average results of the cognitive tests (SD)

| Measure | “Early-implanted” (n = 9) | “Late-implanted” (n = 8) | NH control (n = 9) |
|---|---|---|---|
| Trail A (s) | 18.89 (3.63) | 18.91 (4.48) | 16.04 (3.79) |
| Trail B (s) | 42.54 (12.01) | 48.24 (19.04) | 40.76 (16.68) |
| Wechsler forward (n) | 6.33 (2.96) | 7.25 (2.71) | 9.44 (1.66) |
| Wechsler backwards (n) | 6.78 (3.35) | 6.88 (2.23) | 6.22 (2.05) |
| Raven (%) | 83.4 (12.68); n = 5 | 86.29 (8.20); n = 7 | |

Discussion

Effects of Age at Implantation

The present study has two major findings. First, age at implantation was strongly associated with discrimination based on VTL, but not F0, voice cues: earlier implantation was associated with better voice discrimination based on VTL cues and had essentially no effect on discrimination based on F0 cues. Second, a moderate positive relationship was found between VTL discrimination and speech recognition scores. In the CI users, VTL discrimination was not related to pure-tone frequency discrimination or to cognitive measures.

Participants categorized as late-implanted also had longer durations of deafness than those implanted early. The association between VTL discrimination and age at implantation has several possible explanations. First, auditory deprivation may adversely affect CI users’ ability to process spectral information. This explanation is further supported by studies suggesting that different voice cues rely on different coding mechanisms. Specifically, the coding of F0 was shown to depend more on temporal than on spectral information (e.g., Fu et al. 2004; Laneau and Wouters 2004; Xu and Pfingst 2008), whereas VTL coding depended more strongly on spectral information (Gaudrain and Başkent 2015). Only VTL discrimination was associated with the years of auditory deprivation before implantation. This may suggest that spectral coding is more susceptible than temporal coding to distorted sensory input.

Why might early implantation benefit spectral processing in adults with prelingual deafness? The behavioral results of this study do not directly answer this question, but animal studies offer grounds for speculation. Congenital deafness causes an almost complete absence of input from the peripheral auditory system, which adversely affects both the morphology and the function of neurons along the auditory pathway (for reviews see Fallon et al. 2014a; Shepherd and Hardie 2001). Animal studies have shown that neonatal deafness causes an almost complete loss of normal tonotopic organization (cochleotopy) of the primary auditory cortex (A1) (Dinse et al. 1997; Fallon et al. 2009; Raggio and Schreiner 1999). The effects on the temporal response characteristics of the auditory pathway are minimal (Fallon et al. 2014a). This reduced cochleotopy of A1 may predict poor perceptual outcomes after subsequent cochlear implantation, primarily because of limited spectral resolution. However, cochleotopy was almost completely restored when afferent input to A1 was provided via chronic intracochlear electrical stimulation (ICES). This restoration occurred when stimulation began shortly after deafening (Fallon et al. 2009) and, in some cases, after a long period of deafness (Fallon et al. 2014b). These data suggest that cortical connectivity can be restored even after a long period of deafness. They weaken the supposition that changes in connectivity are responsible for the poor spectral coding of late-implanted individuals.

Deficits in higher-order auditory functions, rather than alterations in cortical connectivity, may cause inferior spectral coding in individuals with prelingual deafness. This may explain the poor VTL discrimination of the late-implanted participants in the present study. Barone et al. (2013) showed, for example, that the basic tonotopic organization of the auditory cortex is preserved in congenitally deafened cats. They found near-normal connectivity patterns at the levels of the thalamo-cortical pathway and the cortico-cortical network, but a functional smear of the nucleotopic gradient of A1. They therefore suggested that congenital deafness affects the neurons’ threshold adaptation more than it affects connectivity. This would impede complex top-down interactions from higher-order associative fields that are based on the same circuitry. These findings may be relevant to early-deafened adults. We propose that when synaptic properties are immature and synaptic densities are high (Kral et al. 2002, 2005; Winfield 1983), the sensory input from the CI may restore tonotopy. It may also strengthen synaptic transmission and wiring patterns in the auditory pathway. This strengthening may enable highly efficient spectral resolution of sound, allowing early-implanted prelingually deafened adults to utilize the degraded VTL cues provided by the CI. In contrast, implantation after the sensitive period of synaptic pruning in the auditory pathway may restore some of the tonotopy without functionally improving synaptic efficiency. Thus, late-implanted individuals, whose synaptic connections in the auditory pathway may not be robust, may not attain similar results even after several years of electrical stimulation.
Lacking efficient spectral coding, prelingually deafened individuals who were implanted after 4 years of age may rely primarily on F0 cues. As a result, their voice discrimination under natural conditions would be poorer than that of NH individuals, who can utilize both F0 and VTL cues. This explanation is supported by studies showing that normal synaptogenesis in the human auditory cortex occurs up to 4 years of age (Huttenlocher and Dabholkar 1997). It is also consistent with electrophysiological studies in children with CIs. These studies show significant differences between early-implanted children (implanted before 3.5–5 years of age) and late-implanted children in the electrically evoked middle latency response (eMLR) (Gordon et al. 2005) and in cortical auditory-evoked potentials (CAEP) (Sharma et al. 2005). They suggest that the period most sensitive to auditory deprivation, at least in functional measures, extends up to 4 years of age (Kral and Tillein 2006).

There may be a second explanation, however, for the poor VTL perception of the late-implanted CI users in the present study. On this account, age at implantation does not affect auditory processing per se. Rather, it affects the ability to build auditory objects (Griffiths and Warren 2004) or events (Blauert 1997) and to associate these auditory objects with the motor areas involved in producing them. In the hearing brain, auditory objects (i.e., neuronal representations of delimited acoustic patterns) are generated through repeated exposure to the physical features of auditory stimuli (Kral 2013). According to the motor theory of speech perception (Galantucci et al. 2006; Liberman and Mattingly 1985; Liberman et al. 1967), NH listeners then form associations between motor and auditory areas to translate the acoustic information of speech into the vocal-tract gestures of the speaker. In doing so, they are able to extract articulatory patterns and identify the physical characteristics of the speaker’s speech organs. This complex “extraction” process is assumed to be inherent in humans and to be fed by continuous exposure to (acoustic) speech in NH individuals. Individuals with congenital hearing impairment who use CIs, on the other hand, were not exposed to acoustic stimuli during their early years. After implantation, they have access only to the degraded cues transmitted by their CI device. The CI may be implanted early enough, i.e., during the sensitive period in which the brain can “bootstrap” its function from a general pattern of connectivity (Kral 2013). In that case, the degraded spectral cues transmitted by the CI may suffice to construct auditory categories and to form associations between acoustic stimuli and the vocal-tract characteristics of the speaker.
When a CI is provided later in development, i.e., after the sensitive period of plasticity has ended, auditory objects and associations between motor and auditory areas may be hard to construct from the degraded cues of the CI alone. Late-implanted individuals may instead use the F0 timing cues, which are more reliable and more accessible to them. On this explanation, worse VTL perception in CI users implanted after 4 years of age may suggest a critical period for forming motor-auditory associations before the fifth year of life. This explanation is supported by previous studies showing that auditory deprivation affects non-auditory as well as auditory functions of the brain (for examples from the visual and motor systems, see Kral 2013).

Finally, the association between VTL discrimination and age at implantation may reflect how much each cue naturally varies relative to listeners' sensitivity to it, and this range is much smaller for VTL than for F0. In natural speech, F0 varies considerably to signal prosodic distinctions, with a standard deviation (SD) of about 3.7 semitones. VTL, by contrast, is relatively fixed, with an SD of only about 1 semitone (Chuenwattanapranithi et al. 2008; Kania et al. 2006). Because natural VTL differences are small, late-implanted CI users with degraded spectral resolution may find VTL cues harder to learn than F0 cues.
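Both cues are expressed in semitones, so it may help to translate them into proportional changes. A difference of s semitones corresponds to a frequency (or length) ratio of 2^(s/12). The sketch below applies this standard conversion to the natural-speech SDs cited above. It illustrates the conversion only and is not part of the study's analysis.

```python
import math

def semitones_to_ratio(st: float) -> float:
    """Frequency (or length) ratio corresponding to a difference of st semitones."""
    return 2 ** (st / 12)

def ratio_to_semitones(ratio: float) -> float:
    """Inverse mapping: 12 * log2(ratio)."""
    return 12 * math.log2(ratio)

# Natural-speech SDs quoted above: about 3.7 semitones for F0, about 1 for VTL
for cue, sd in [("F0", 3.7), ("VTL", 1.0)]:
    pct = (semitones_to_ratio(sd) - 1) * 100
    print(f"{cue}: 1 SD = {sd} st, i.e. roughly a {pct:.1f}% change")
```

On this scale, one SD of natural F0 variation is a change of roughly 24%, whereas one SD of VTL variation is only about 6%.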

The present findings are consistent with those of Kovačić and Balaban (2010), who showed that gender identification was inversely related to the duration of auditory deprivation in CI users 5.3–18.8 years of age. Other studies, however, have reported no correlation between CI experience and gender categorization (Massida et al. 2011, 2013). This discrepancy may stem from differences in onset of deafness. The participants of the current study and of Kovačić and Balaban’s study were prelingually deafened, whereas participants in Massida et al.’s studies were postlingually deafened. Individuals with postlingual deafness have the advantage of early exposure to acoustic hearing. Their robust spectral organization, and their top-down interactions with early sensory areas in the auditory pathway, may remain viable after auditory deprivation and subsequent cochlear implantation (Kral and Tillein 2006; Tao et al. 2015). Moreover, this early auditory experience may have enabled the formation of robust “extraction” processing, translating the salient spectral information in the acoustic cues of speech into VTL perception. In postlingually deafened adults, gender discrimination would therefore be expected to fall short of that of NH listeners. It would be limited only by the spectral (and temporal) resolution transmitted by the CI. The prelingually deafened adults assessed in the current study relied exclusively on electric stimulation as their template for hearing. They may therefore have been more susceptible to the degraded spectral (and temporal) cues provided by the CI.

Given their robust spectral organization, postlingually deafened adult CI users might be expected to use VTL cues for voice discrimination at least as well as our early-implanted prelingually deafened adults. Voice discrimination has not yet been compared directly between prelingually and postlingually deafened CI users. However, recent findings suggest that postlingually deafened CI users have poorer VTL perception than F0 perception (Fuller et al. 2014). Surprisingly, this pattern resembles the results of the late-implanted prelingually deafened participants in the current study. These findings may favor the notion that VTL perception in deafened adults depends on the ability to form an extraction process, from acoustic/electric information to abstract physical knowledge of the speaker’s vocal tract. Fuller et al. (2014) raised a similar explanation. They suggested that adults with postlingual deafness may perceive the transmitted VTL cues as unreliable for accurate voice discrimination and so have difficulty interpreting them as talker-size differences. The brains of postlingually deafened adults may be prewired to acoustic information, to which they were exposed during the critical period of their development, rather than to electrical information. If so, they would have difficulty adapting. Further study is therefore needed to clarify the factors that may affect voice discrimination in postlingually vs. prelingually deafened CI users.

Word Recognition

The significant association between word recognition scores and VTL DLs is consistent with the notion that exposure to acoustic/electric stimuli in early life is crucial for speech perception as well as for voice discrimination (Svirsky et al. 2004). Late implantation may thus degrade the ability to perceive and interpret formant information, hampering both the discrimination of natural voices and speech perception. Previous studies showed a clear relationship between speech perception and a variety of tests of spectral resolution. These include electrode discrimination (Nelson et al. 1995), electrode pitch ranking (Donaldson and Nelson 2000), spectral ripple discrimination (Henry et al. 2005; Jones et al. 2013; Winn et al. 2016; Won et al. 2007), and syllable categorization (Winn et al. 2016). These studies favor the notion that the poor speech perception of early-deafened CI users stems primarily from poor spectral coding. The earlier discussion suggests another possible explanation for the association between word recognition scores and VTL DLs: higher-order auditory functions may be essential for both tasks. These may include the ability to construct efficient auditory objects from the acoustic information of speech and to associate these objects with the vocal-tract gestures of the speaker. Finally, late implantation was associated with poor talker discrimination and speech perception. This finding is consistent with the well-accepted view that the benefits of a CI are greatest when the individual is implanted early in life (for a review see Kral and Tillein 2006).

Psychophysics

The DLF thresholds of the CI users in the current study were not associated with the F0, VTL, or F0 + VTL discrimination thresholds, nor with age at implantation. Nevertheless, they were markedly poorer (larger) than the NH DLF thresholds, as in previous studies (Goldsworthy et al. 2013; Kopelovich et al. 2010; Turgeon et al. 2015). These findings may also favor the view that age at implantation affects higher-order auditory functions rather than low-level spectral resolving abilities. This may explain why VTL perception is influenced by age at implantation whereas frequency discrimination of 1000-Hz pure tones is not, even though the latter is a psychophysical task that depends primarily on spectral coding. The poor frequency discrimination of the CI users in the present study may have resulted from the poor spectro-temporal processing of the CI itself. Future studies could test this explanation by measuring frequency discrimination thresholds in NH individuals listening via a noise-excited channel vocoder. Alternatively, the lack of association between DLF and VTL DL in the CI participants may relate to the different stimulus types. Pure tones and complex speech stimuli may produce different auditory nerve excitation patterns. A pure tone excites one region of the auditory nerve, so frequency discrimination can be achieved by detecting a shift in the location of that excitation. Hence, pure-tone frequency discrimination might be achieved even with relatively poor spectral resolution, because the overall place of excitation moves with frequency. For voice discrimination based on VTL cues, the sentence stimulus excites multiple regions of the auditory nerve simultaneously, so spectral resolution comes into play at a much more fundamental level.
Insufficient spectral resolution may cause the multiple formants to interact in a dynamic manner that makes discrimination very difficult, as may have happened to the late-implanted participants in the present study.
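The place-coding argument above can be illustrated with a frequency-to-place map. The sketch assumes Greenwood's frequency-position function with commonly cited human constants (A = 165.4, a = 2.1, k = 0.88) and an assumed basilar membrane length of 35 mm. Neither is part of the present study. The sketch shows only that a small change in pure-tone frequency shifts a single excitation peak along the cochlea. It does not model CI electrode placement or current spread.

```python
import math

# Greenwood frequency-position function, F = A * (10**(a*x) - k), with commonly
# cited human constants (an assumption for illustration). x is the fractional
# distance along the basilar membrane from the apex (0 = apex, 1 = base).
A, a, k = 165.4, 2.1, 0.88
BM_LENGTH_MM = 35.0  # assumed approximate human basilar membrane length

def place_from_frequency(freq_hz: float) -> float:
    """Fractional cochlear place whose characteristic frequency is freq_hz."""
    return math.log10(freq_hz / A + k) / a

def frequency_from_place(x: float) -> float:
    """Inverse mapping: characteristic frequency at fractional place x."""
    return A * (10 ** (a * x) - k)

# A 1000-Hz tone versus a hypothetical comparison tone 2% higher
x_ref = place_from_frequency(1000.0)
x_cmp = place_from_frequency(1020.0)
shift_mm = (x_cmp - x_ref) * BM_LENGTH_MM
print(f"1000 Hz -> x = {x_ref:.4f}; 1020 Hz -> x = {x_cmp:.4f}; shift = {shift_mm:.3f} mm")
```

The single peak moves by a fraction of a millimeter. Under this view, a listener can track that shift without resolving several simultaneous spectral peaks, as VTL discrimination requires.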

Cognition

In the current study, cognitive data were not associated with discrimination of F0, VTL, or F0 + VTL. This may be because the cognitive abilities of the adult CI users had already matured. It has been suggested that auditory and verbal experiences are critical for the development of executive functions. They support the development of intrinsic feedback mechanisms, working memory abilities, and sequential processing strategies (Conway et al. 2009). Children born deaf who subsequently receive CIs may therefore show deficits in executive functions, because of the degraded auditory input they experienced during early critical periods of brain development (Figueras et al. 2008; Pisoni et al. 2011; Kronenberger et al. 2013). In the present study, however, the executive function scores of the adult CI users were largely on par with those of the NH group. The only exception was the Wechsler forward digit span of the early-implanted group. This finding may suggest that children with CIs are able to narrow the gap in cognitive abilities as they mature. Although this is an appealing notion, Kronenberger et al. (2013) reported poor executive functions in a group of children, adolescents, and young adults with CIs, suggesting other possible explanations for the current findings. Future studies should test a broader range of executive functions in well-defined age groups of CI users. Such studies would give more insight into their ability to “catch up” with their age-matched NH peers.

Comparison to NH Listeners Assessed with Spectrally Degraded Stimuli

Early-implanted CI users and NH participants assessed with vocoded stimuli performed equivalently with VTL cues, whereas late-implanted CI users performed much worse. This finding may indicate that early implantation allows high-level reorganization of the spectral representation of sound. This reorganization may enable near “normal” spectral processing and thus better use of the spectral information provided by the CI. It should be noted, however, that the performance of the NH listeners was likely affected by the specific vocoder parameters. Changing the number of filterbank channels could alter VTL discrimination by the NH listeners. Likewise, a different manipulation of the envelope content of the filterbank output might have affected F0 discrimination. We used a representative vocoder configuration to predict the pattern of F0 and VTL results. Systematic evaluation of different vocoder parameters and their perceptual effects on NH listeners remains for future study.
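The vocoder parameters discussed above can be made concrete with a generic noise-excited channel vocoder. The sketch uses log-spaced analysis bands across the 250–4000 Hz range reported for the vocoded condition. The channel count (8), filter orders, and envelope cutoff (160 Hz) are hypothetical choices for illustration, not the settings of the present study. In the sketch, `n_channels` controls spectral resolution, which matters for VTL cues. `env_cutoff` controls how much periodicity, and hence F0 information, survives in the envelopes.

```python
import numpy as np
from scipy.signal import butter, hilbert, sosfiltfilt

def noise_vocode(x, fs, n_channels=8, f_lo=250.0, f_hi=4000.0,
                 env_cutoff=160.0, seed=0):
    """Minimal noise-excited channel vocoder (illustrative sketch).

    n_channels and env_cutoff are hypothetical defaults, not the study's values.
    """
    rng = np.random.default_rng(seed)
    # Logarithmically spaced channel edges between f_lo and f_hi
    edges = np.geomspace(f_lo, f_hi, n_channels + 1)
    env_sos = butter(4, env_cutoff, btype="low", fs=fs, output="sos")
    out = np.zeros(len(x))
    for lo, hi in zip(edges[:-1], edges[1:]):
        band_sos = butter(4, [lo, hi], btype="band", fs=fs, output="sos")
        band = sosfiltfilt(band_sos, x)
        # Temporal envelope: Hilbert magnitude, then zero-phase low-pass smoothing
        env = sosfiltfilt(env_sos, np.abs(hilbert(band)))
        env = np.maximum(env, 0.0)  # remove small negative filter ripple
        # Carrier: white noise restricted to the same analysis band
        carrier = sosfiltfilt(band_sos, rng.standard_normal(len(x)))
        out += env * carrier
    # Scale the output to the RMS level of the input
    out *= np.sqrt(np.mean(x ** 2) / np.mean(out ** 2))
    return out

fs = 16000
t = np.arange(int(0.5 * fs)) / fs
# Synthetic harmonic complex (F0 = 120 Hz) standing in for a voiced sound
x = np.sum([np.sin(2 * np.pi * 120 * h * t) / h for h in range(1, 30)], axis=0)
y = noise_vocode(x, fs)
```

Reducing `n_channels` would be expected to smear the formant structure that carries VTL information. Lowering `env_cutoff` below the F0 would be expected to remove most periodicity cues from the channel envelopes.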

Limitations and Future Investigations

The present study provides novel information on how the ability of CI users with prelingual deafness to perceive different cues for talker discrimination depends on their age at implantation. This information has high ecological and social relevance. The ability to identify the voice characteristics of a specific talker is associated with the ability to identify his/her sex, physical characteristics, and affect (Belin et al. 2004). However, the current study was conducted in quiet conditions, which differ greatly from everyday listening. Tracking the voice of a specific talker of interest can also be crucial for segregating talkers in a multi-talker environment, an ability found to improve speech perception in noise (Bronkhorst 2015). Future studies may therefore want to replicate this study in background noise. Future studies may also examine early-implanted prelingually deafened CI users with advanced psychoacoustic tests using complex stimuli, to learn where their processing breakdown occurs. In addition, we compared the voice discrimination results of the CI users to those of NH listeners who heard stimuli degraded by a noise vocoder with a narrow frequency range (250 to 4000 Hz). This range was chosen based on the frequency-importance functions derived in the Articulation Index and Speech Transmission Index literature (e.g., Steeneken and Houtgast 2002). These functions show reduced frequency-importance weighting for speech below 250 Hz and above 4000 Hz, especially for vowels. We believe that this vocoder range was sufficient for extracting the VTL information associated with vowels, but this should be substantiated in future studies.
Finally, in order to better understand the effects of auditory versus electrical experience on VTL perception, future studies may want to design a testing protocol that will directly compare VTL perception between early-implanted prelingually deafened individuals, late-implanted prelingually deafened individuals, and postlingually deafened CI users.

Implications

In summary, the present results have theoretical and clinical implications. From a theoretical perspective, they suggest that early implantation allows high-level reorganization of the spectral representation of sound. This reorganization may enable better use of the spectral information provided by the CI for voice discrimination and speech perception. From a clinical perspective, both the early-implanted CI users and the NH participants who listened to vocoded stimuli performed more poorly than the NH participants who listened to non-vocoded stimuli. This finding points to the constraints of the CI. Specifically, it may imply that VTL perception in CI users is nonetheless limited by the inherent processing of the CI device and may be fully restored only with better technology.

Compliance with Ethical Standards

Conflict of Interest

The authors declare that they have no conflict of interest.

References

- Barone P, Lacassagne L, Kral A. Reorganization of the connectivity of cortical field DZ in congenitally deaf cat. PLoS One. 2013;8(4):e60093. doi: 10.1371/journal.pone.0060093. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Baskent D, Gaudrain E. Musicians advantage for speech-on-speech perception. J Acoust Soc Am. 2016;139(3):EL51–EL56. doi: 10.1121/1.4942628. [DOI] [PubMed] [Google Scholar]

- Belin P, Fecteau S, Bedard C. Thinking the voice: neural correlates of voice perception. Trends Cogn Sci. 2004;8(3):129–135. doi: 10.1016/j.tics.2004.01.008. [DOI] [PubMed] [Google Scholar]

- Blauert J. Spatial hearing: the psychophysics of human spatial localization. Cambridge: MIT Press; 1997. [Google Scholar]

- Boothroyd A. Statistical theory of the speech discrimination score. J Acoust Soc Am. 1968;43(2):362–367. doi: 10.1121/1.1910787. [DOI] [PubMed] [Google Scholar]

- Bronkhorst AW. The cocktail-party problem revisited: early processing and selection of multi-talker speech. Atten Percept Psychophys. 2015;77(5):1465–1487. doi: 10.3758/s13414-015-0882-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chatterjee M, Peng SC. Processing F0 with cochlear implants: modulation frequency discrimination and speech intonation recognition. Hear Res. 2008;235(1-2):143–156. doi: 10.1016/j.heares.2007.11.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chuenwattanapranithi S, Xu Y, Thipakorn B, Maneewongvatana S. Encoding emotions in speech with the size code. A perceptual investigation. Phonetica. 2008;65(4):210–230. doi: 10.1159/000192793. [DOI] [PubMed] [Google Scholar]

- Coez A, Zilbovicius M, Ferrary E, Bouccara D, Mosnier I, Ambert-Dahan E, Bizaguet E, Syrota A, Samson Y, Sterkers O. Cochlear implant benefits in deafness rehabilitation: PET study of temporal voice activations. J Nucl Med. 2008;49(1):60–67. doi: 10.2967/jnumed.107.044545. [DOI] [PubMed] [Google Scholar]

- Conway CM, Pisoni DB, Kronenberger WG. The importance of sound for cognitive sequencing abilities: the auditory scaffolding hypothesis. Curr Dir Psychol Sci. 2009;18(5):275–279. doi: 10.1111/j.1467-8721.2009.01651.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cullington HE, Zeng FG. Comparison of bimodal and bilateral cochlear implant users on speech recognition with competing talker, music perception, affective prosody discrimination, and talker identification. Ear Hear. 2011;32(1):16–30. doi: 10.1093/eurheartj/ehq357. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Darwin CJ, Brungart DS, Simpson BD. Effects of fundamental frequency and vocal-tract length changes on attention to one of two simultaneous talkers. J Acoust Soc Am. 2003;114(5):2913–2922. doi: 10.1121/1.1616924. [DOI] [PubMed] [Google Scholar]

- Dinse HR, Reuter G, Cords SM, Godde B, Hilger T, Lenarz T. Optical imaging of cat auditory cortical organization after electrical stimulation of a multichannel cochlear implant: differential effects of acute and chronic stimulation. Am J Otol. 1997;8(Suppl):S17–S18. [PubMed] [Google Scholar]

- Donaldson GS, Nelson DA. Place-pitch sensitivity and its relation to consonant recognition by cochlear implant listeners using the MPEAK and SPEAK speech processing strategies. J Acoust Soc Am. 2000;107(3):1645–1658. doi: 10.1121/1.428449. [DOI] [PubMed] [Google Scholar]

- Fallon JB, Irvine DR, Shepherd RK. Cochlear implant use following neonatal deafness influences the cochleotopic organization of the primary auditory cortex in cats. J Comp Neurol. 2009;512(1):101–114. doi: 10.1002/cne.21886. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fallon JB, Shepherd RK, Nayagam DA, Wise AK, Heffer LF, Landry TG, Irvine DR. Effects of deafness and cochlear implant use on temporal response characteristics in cat primary auditory cortex. Hear Res. 2014;315:1–9. doi: 10.1016/j.heares.2014.06.001. [DOI] [PubMed] [Google Scholar]

- Fallon JB, Shepherd RK, Irvine DR. Effects of chronic cochlear electrical stimulation after an extended period of profound deafness on primary auditory cortex organization in cats. Eur J Neurosci. 2014;39(5):811–820. doi: 10.1111/ejn.12445. [DOI] [PubMed] [Google Scholar]

- Figueras B, Edwards L, Langdon D. Executive function and language in deaf children. J Deaf Stud Deaf Educ. 2008;13(3):362–377. doi: 10.1093/deafed/enm067. [DOI] [PubMed] [Google Scholar]

- Friesen LM, Shannon RV, Baskent D, Wang X. Speech recognition in noise as a function of the number of spectral channels: comparison of acoustic hearing and cochlear implants. J Acoust Soc Am. 2001;110(2):1150–1163. doi: 10.1121/1.1381538. [DOI] [PubMed] [Google Scholar]

- Fu QJ, Shannon RV, Wang X. Effects of noise and spectral resolution on vowel and consonant recognition: acoustic and electric hearing. J Acoust Soc Am. 1998;104:3586–3596. doi: 10.1121/1.423941. [DOI] [PubMed] [Google Scholar]

- Fu QJ, Chinchilla S, Galvin JJ 3rd. The role of spectral and temporal cues in voice gender discrimination by normal-hearing listeners and cochlear implant users. J Assoc Res Otolaryngol. 2004;5:253–260. doi: 10.1007/s10162-004-4046-1.

- Fu QJ, Chinchilla S, Nogaki G, Galvin JJ 3rd. Voice gender identification by cochlear implant users: the role of spectral and temporal resolution. J Acoust Soc Am. 2005;118:1711–1718. doi: 10.1121/1.1985024.

- Fuller C, Gaudrain E, Clarke J, Galvin JJ 3rd, Fu QJ, Free R, Baskent D. Gender categorization is abnormal in cochlear implant users. J Assoc Res Otolaryngol. 2014;15(6):1037–1048. doi: 10.1007/s10162-014-0483-7.

- Galantucci B, Fowler CA, Turvey MT. The motor theory of speech perception reviewed. Psychon Bull Rev. 2006;13(3):361–377. doi: 10.3758/BF03193857.

- Gaudrain E, Başkent D. Factors limiting vocal-tract length discrimination in cochlear implant simulations. J Acoust Soc Am. 2015;137(3):1298–1308. doi: 10.1121/1.4908235.

- Goldsworthy RL, Delhorne LA, Braida LD, Reed CM. Psychoacoustic and phoneme identification measures in cochlear-implant and normal-hearing listeners. Trends Amplif. 2013;17(1):27–44. doi: 10.1177/1084713813477244.

- Gordon KA, Papsin BC, Harrison RV. Effects of cochlear implant use on the electrically evoked middle latency response in children. Hear Res. 2005;204(1-2):78–89. doi: 10.1016/j.heares.2005.01.003.

- Griffiths TD, Warren JD. What is an auditory object? Nat Rev Neurosci. 2004;5(11):887–892. doi: 10.1038/nrn1538.

- Han D, Liu B, Zhou N, Chen X, Kong Y, Liu H, Zheng Y, Xu L. Lexical tone perception with HiResolution and HiResolution 120 sound-processing strategies in pediatric Mandarin-speaking cochlear implant users. Ear Hear. 2009;30(2):169–177. doi: 10.1097/AUD.0b013e31819342cf.

- Henry BA, Turner CW, Behrens A. Spectral peak resolution and speech recognition in quiet: normal hearing, hearing impaired, and cochlear implant listeners. J Acoust Soc Am. 2005;118(2):1111–1121. doi: 10.1121/1.1944567.

- Huttenlocher PR, Dabholkar AS. Regional differences in synaptogenesis in human cerebral cortex. J Comp Neurol. 1997;387(2):167–178. doi: 10.1002/(SICI)1096-9861(19971020)387:2<167::AID-CNE1>3.0.CO;2-Z.

- Jones GL, Won JH, Drennan WR, Rubinstein JT. Relationship between channel interaction and spectral-ripple discrimination in cochlear implant users. J Acoust Soc Am. 2013;133(1):425–433. doi: 10.1121/1.4768881.

- Kania RE, Hartl DM, Hans S, Maeda S, Vaissiere J, Brasnu DF. Fundamental frequency histograms measured by electroglottography during speech: a pilot study for standardization. J Voice. 2006;20(1):18–24. doi: 10.1016/j.jvoice.2005.01.004.

- Kishon-Rabin L, Patael S, Menahemi M, Amir N. Are the perceptual effects of spectral smearing influenced by speaker gender? J Basic Clin Physiol Pharmacol. 2004;15:41–55. doi: 10.1515/jbcpp.2004.15.1-2.41.

- Kollmeier B. Overcoming language barriers: matrix sentence tests with closed speech corpora. Int J Audiol. 2015;54(Suppl 2):1–2. doi: 10.3109/14992027.2015.1074295.

- Kopelovich JC, Eisen MD, Franck KH. Frequency and electrode discrimination in children with cochlear implants. Hear Res. 2010;268(1-2):105–113. doi: 10.1016/j.heares.2010.05.006.

- Kovačić D, Balaban E. Hearing history influences voice gender perceptual performance in cochlear implant users. Ear Hear. 2010;31(6):806–814. doi: 10.1097/AUD.0b013e3181ee6b64.

- Kral A. Auditory critical periods: a review from system’s perspective. Neuroscience. 2013;247:117–133. doi: 10.1016/j.neuroscience.2013.05.021.

- Kral A, Tillein J. Brain plasticity under cochlear implant stimulation. Adv Otorhinolaryngol. 2006;64:89–108. doi: 10.1159/000094647.

- Kral A, Hartmann R, Tillein J, Heid S, Klinke R. Hearing after congenital deafness: central auditory plasticity and sensory deprivation. Cereb Cortex. 2002;12(8):797–807. doi: 10.1093/cercor/12.8.797.

- Kral A, Tillein J, Heid S, Hartmann R, Klinke R. Postnatal cortical development in congenital auditory deprivation. Cereb Cortex. 2005;15(5):552–562. doi: 10.1093/cercor/bhh156.

- Kronenberger WG, Pisoni DB, Henning SC, Colson BG. Executive functioning skills in long-term users of cochlear implants: a case control study. J Pediatr Psychol. 2013;38(8):902–914. doi: 10.1093/jpepsy/jst034.

- Laneau J, Wouters J. Multichannel place pitch sensitivity in cochlear implant recipients. J Assoc Res Otolaryngol. 2004;5:285–294.

- Laneau J, Wouters J, Moonen M. Relative contributions of temporal and place pitch cues to fundamental frequency discrimination in cochlear implantees. J Acoust Soc Am. 2004;116(6):3606–3619. doi: 10.1121/1.1823311.

- Laneau J, Wouters J, Moonen M. Improved music perception with explicit pitch coding in cochlear implants. Audiol Neurootol. 2006;11:38–52.

- Levitt H. Transformed up-down methods in psychoacoustics. J Acoust Soc Am. 1971;49(2B):467–477. doi: 10.1121/1.1912375.

- Liberman AM, Mattingly IG. The motor theory of speech perception revised. Cognition. 1985;21(1):1–36. doi: 10.1016/0010-0277(85)90021-6.

- Liberman AM, Cooper FS, Shankweiler DP, Studdert-Kennedy M. Perception of the speech code. Psychol Rev. 1967;74(6):431–461. doi: 10.1037/h0020279.

- Luo X, Fu QJ, Galvin JJ 3rd. Vocal emotion recognition by normal-hearing listeners and cochlear implant users. Trends Amplif. 2007;11(4):301–315. doi: 10.1177/1084713807305301.

- Luo X, Fu QJ, Wei CG, Cao KL. Speech recognition and temporal amplitude modulation processing by Mandarin-speaking cochlear implant users. Ear Hear. 2008;29:957–970. doi: 10.1097/AUD.0b013e3181888f61.

- Luo X, Padilla M, Landsberger DM. Pitch contour identification with combined place and temporal cues using cochlear implants. J Acoust Soc Am. 2012;131(2):1325–1336. doi: 10.1121/1.3672708.

- Mackersie CL, Dewey J, Guthrie LA. Effects of fundamental frequency and vocal-tract length cues on sentence segregation by listeners with hearing loss. J Acoust Soc Am. 2011;130(2):1006–1019. doi: 10.1121/1.3605548.

- Massida Z, Belin P, James C, Rouger J, Fraysse B, Barone P, Deguine O. Voice discrimination in cochlear-implanted deaf subjects. Hear Res. 2011;275(1-2):120–129. doi: 10.1016/j.heares.2010.12.010.

- Massida Z, Marx M, Belin P, James C, Fraysse B, Barone P, Deguine O. Gender categorization in cochlear implant users. J Speech Lang Hear Res. 2013;56(5):1389–1401. doi: 10.1044/1092-4388(2013/12-0132).

- Meister H, Fürsen K, Streicher B, Lang-Roth R, Walger M. The use of voice cues for speaker gender recognition in cochlear implant recipients. J Speech Lang Hear Res. 2016;59(3):546–556. doi: 10.1044/2015_JSLHR-H-15-0128.

- Moore BCJ, Tyler LK, Marslen-Wilson WD. The perception of speech: from sound to meaning (revised and updated). USA: Oxford University Press; 2009.

- Morton KD, Torrione PA Jr, Throckmorton CS, Collins LM. Mandarin Chinese tone identification in cochlear implants: predictions from acoustic models. Hear Res. 2008;244(1-2):66–76. doi: 10.1016/j.heares.2008.07.008.

- Moulines E, Charpentier F. Pitch-synchronous waveform processing techniques for text-to-speech synthesis using diphones. Speech Commun. 1990;9:453–467.

- Mühler R, Ziese M, Rostalski D. Development of a speaker discrimination test for cochlear implant users based on the Oldenburg Logatome corpus. ORL J Otorhinolaryngol Relat Spec. 2009;71(1):14–20. doi: 10.1159/000165170.

- Munson B, Nelson PB. Phonetic identification in quiet and in noise by listeners with cochlear implants. J Acoust Soc Am. 2005;118(4):2607–2617. doi: 10.1121/1.2005887.

- Nelson D, Van Tasell D, Schroder A, Soli S, Levine S. Electrode ranking of ‘place-pitch’ and speech recognition in electrical hearing. J Acoust Soc Am. 1995;98(4):1987–1999. doi: 10.1121/1.413317.

- Peng SC, Tomblin JB, Cheung H, Lin YS, Wang LS. Perception and production of mandarin tones in prelingually deaf children with cochlear implants. Ear Hear. 2004;25(3):251–264. doi: 10.1097/01.AUD.0000130797.73809.40.

- Pisoni DB, Kronenberger WG, Roman AS, Geers AE. Measures of digit span and verbal rehearsal speed in deaf children after more than 10 years of cochlear implantation. Ear Hear. 2011;32(Suppl):60S–74S. doi: 10.1097/AUD.0b013e3181ffd58e.

- Raggio MW, Schreiner CE. Neuronal responses in cat primary auditory cortex to electrical cochlear stimulation. III. Activation patterns in short- and long-term deafness. J Neurophysiol. 1999;82(6):3506–3526. doi: 10.1152/jn.1999.82.6.3506.

- Raven manual, Section 1: Standard progressive matrices. Oxford: Oxford Psychologists Press Ltd; 1998.

- Rogers CF, Healy EW, Montgomery AA. Sensitivity to isolated and concurrent intensity and fundamental frequency increments by cochlear implant users under natural listening conditions. J Acoust Soc Am. 2006;119(4):2276–2287. doi: 10.1121/1.2167150.

- Schvartz-Leyzac KC, Chatterjee M. Fundamental-frequency discrimination using noise-band-vocoded harmonic complexes in older listeners with normal hearing. J Acoust Soc Am. 2015;138(3):1687–1695. doi: 10.1121/1.4929938.

- Segal O, Houston D, Kishon-Rabin L. Auditory discrimination of lexical stress patterns in hearing-impaired infants with cochlear implants compared with normal hearing: influence of acoustic cues and listening experience to the ambient language. Ear Hear. 2016;37(2):225–234. doi: 10.1097/AUD.0000000000000243.

- Shannon RV. Multichannel electrical stimulation of the auditory nerve in man. I. Basic psychophysics. Hear Res. 1983;11(2):157–189. doi: 10.1016/0378-5955(83)90077-1.

- Sharma A, Dorman MF, Kral A. The influence of a sensitive period on central auditory development in children with unilateral and bilateral cochlear implants. Hear Res. 2005;203(1-2):134–143. doi: 10.1016/j.heares.2004.12.010.

- Shepherd RK, Hardie NA. Deafness-induced changes in the auditory pathway: implications for cochlear implants. Audiol Neurootol. 2001;6(6):305–318. doi: 10.1159/000046843.

- Skuk VG, Schweinberger SR. Influences of fundamental frequency, formant frequencies, aperiodicity and spectrum level on the perception of voice gender. J Speech Lang Hear Res. 2013;57(1):285–296. doi: 10.1044/1092-4388(2013/12-0314).

- Smith DR, Patterson RD. The interaction of glottal-pulse rate and vocal-tract length in judgements of speaker size, sex, and age. J Acoust Soc Am. 2005;118(5):3177–3186. doi: 10.1121/1.2047107.

- Smith DR, Walters TC, Patterson RD. Discrimination of speaker sex and size when glottal-pulse rate and vocal-tract length are controlled. J Acoust Soc Am. 2007;122(6):3628–3639. doi: 10.1121/1.2799507.

- Steeneken HJM, Houtgast T. Phoneme-group specific octave-band weights in predicting speech intelligibility. Speech Comm. 2002;38(3–4):399–411. doi: 10.1016/S0167-6393(02)00011-0.

- Svirsky MA, Teoh SW, Neuburger H. Development of language and speech perception in congenitally, profoundly deaf children as a function of age at cochlear implantation. Audiol Neurootol. 2004;9(4):224–233. doi: 10.1159/000078392.

- Tao D, Deng R, Jiang Y, Galvin JJ 3rd, Fu QJ, Chen B. Melodic pitch perception and lexical tone perception in Mandarin-speaking cochlear implant users. Ear Hear. 2015;36(1):102–110. doi: 10.1097/AUD.0000000000000086.

- Tombaugh TN. Trail making test A and B: normative data stratified by age and education. Arch Clin Neuropsychol. 2004;19(2):203–214. doi: 10.1016/S0887-6177(03)00039-8.

- Turgeon C, Champoux F, Lepore F, Ellemberg D. Deficits in auditory frequency discrimination and speech recognition in cochlear implant users. Cochlear Implants Int. 2015;16(2):88–94. doi: 10.1179/1754762814Y.0000000091.

- Vestergaard MD, Fyson NR, Patterson RD. The interaction of vocal characteristics and audibility in the recognition of concurrent syllables. J Acoust Soc Am. 2009;125(2):1114–1124. doi: 10.1121/1.3050321.

- Vestergaard MD, Fyson NR, Patterson RD. The mutual roles of temporal glimpsing and vocal characteristics in cocktail-party listening. J Acoust Soc Am. 2011;130(1):429–439. doi: 10.1121/1.3596462.

- Vongphoe M, Zeng FG. Speaker recognition with temporal cues in acoustic and electric hearing. J Acoust Soc Am. 2005;118(2):1055–1061. doi: 10.1121/1.1944507.

- Wechsler D. Wechsler intelligence scale for children-III. San Antonio: The Psychological Corporation; 1991.

- Winfield DA. The postnatal development of synapses in the different laminae of the visual cortex in the normal kitten and in kittens with eyelid suture. Brain Res. 1983;285(2):155–169. doi: 10.1016/0165-3806(83)90048-2.