Abstract

Purpose:

Development and implementation of robust reporting processes to systematically provide quality data to care teams in a timely manner is challenging. National cancer quality measures are useful, but the manual data collection required is resource intensive, and reporting is delayed. We designed a largely automated measurement system with our multidisciplinary cancer care programs (CCPs) to identify, measure, and improve quality metrics that were meaningful to the care teams and their patients.

Methods:

Each CCP physician leader collaborated with the cancer quality team to identify metrics, abiding by established guiding principles. Financial incentive was provided to the CCPs if performance at the end of the study period met predetermined targets. Reports were developed and provided to the CCP physician leaders on a monthly or quarterly basis, for dissemination to their CCP teams.

Results:

A total of 15 distinct quality measures were collected in depth for the first time at this cancer center. Metrics spanned the patient care continuum, from diagnosis through end of life or survivorship care. All metrics improved over the study period, met their targets, and earned a financial incentive for their CCP.

Conclusion:

Our quality program had three essential elements that led to its success: (1) engaging physicians in choosing the quality measures and prespecifying goals, (2) using automated extraction methods for rapid and timely feedback on improvement and progress toward achieving goals, and (3) offering a financial team-based incentive if prespecified goals were met.

INTRODUCTION

Impetus for Quality Care Delivery

The cancer care delivery system, which operates at the intersection of basic and translational science, clinical medicine, and patient and family needs, is complex and multidisciplinary. All stakeholders agree that the system must deliver better health care, at a higher quality and at an appropriate cost. Physicians and provider teams can directly influence the quality of cancer care delivered to patients; this is one component of value.1 New payment models (eg, the Medicare Access & CHIP Reauthorization Act of 2015)2 encourage achievement of this “triple aim”3 through a focus on payment for value delivered rather than on volume of services.

Quality Measures at National and Health System Levels

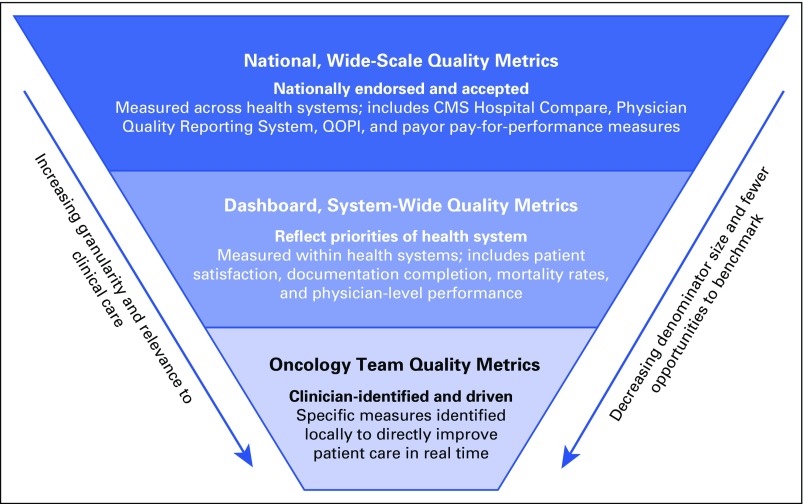

We propose a three-level construct for quality measures (Fig 1). Broadly, there are measures that are widely endorsed by national organizations such as the National Quality Forum,4 are in the process of adoption by government and by other payers such as the Centers for Medicare and Medicaid Services,2 or are endorsed by professional societies such as ASCO or the American Society for Radiation Oncology for voluntary use by their members and others.5,6

Fig 1.

Quality measurement at three defined levels. CMS, Centers for Medicare and Medicaid Services; QOPI, Quality Oncology Practice Initiative.

We call the next level of quality measures dashboard measures. Dashboard measures are also broad and are generally used for comparison both across and within health care systems and by providers of care. As the name implies, these measures are often used to create dashboards to track improvement or deterioration in performance over time. Examples include patient satisfaction measurement systems, such as those reported by Press-Ganey,7 or the observed-to-expected mortality ratios promulgated by the University HealthSystem Consortium (now Vizient).8

Quality Measures at the Oncology Care Team Level

The third level of measurement consists of those measures that oncologists may find more directly meaningful and relevant to their patient care. These measures are often applicable specifically to their subspecialty or practice setting and are thus relevant for evaluating the team’s performance. One lesson from a previous experience in implementing ASCO’s Quality Oncology Practice Initiative (QOPI) measurement system in a large multidisciplinary academic cancer center was that oncologist engagement in quality improvement was essential to drive improvement and to sustain broad-based quality care.9

On the basis of this construct and previous experience, we initiated a project to implement and test a robust quality improvement program in a large, academic, multidisciplinary National Cancer Institute–designated comprehensive cancer center. The three fundamental aspects of the program were engaging physicians in selecting metrics and targets, automating data extraction and providing timely provider feedback, and providing group financial incentives to meet targets. We report the methods used to engage physicians, the resources needed, the success to date, and the next steps in our program.

METHODS

Setting

Patient care in our center is provided by oncologists (medical, surgical, and radiation), with closely aligned advanced practice providers (APPs), nurse coordinators, and associated administrative staff, organized into 13 disease-site or treatment-specific teams known as cancer care programs (CCPs). During fiscal year 2016 (FY16 [September 1, 2015, to August 31, 2016]), we had 140 physicians and 58 full-time equivalent (FTE) APPs at our Palo Alto center, where we had 5,397 analytic patient-cases and delivered 20,092 new patient visits (each patient usually had new patient visits to multiple subspecialists). We provided 51,177 infusions and 27,337 radiation treatments.

Stanford Health Care (SHC) uses the Epic electronic health record (EHR), Version 2015 (Epic Systems, Verona, WI). For quality measurement purposes, we used the SHC Enterprise Data Warehouse and the Stanford Cancer Institute (SCI) research database, which includes curated EHR and Stanford cancer registry data. In addition, we used investigator-maintained, disease-specific databases in the neuro-oncology, blood and marrow transplantation, and hematology CCPs.

Physician Engagement Activities

The clinical and administrative executive leadership of the SCI and SHC designed a program to build a portfolio of cancer quality metrics in FY16. The medical director of cancer quality met individually with the physician leader of each CCP to develop potential metric ideas for their CCP, with the guiding principles that final metrics must (1) be meaningful and important to the care team and patients, (2) have data elements available in existing databases, (3) not have been broadly measured at this cancer center, (4) be multidisciplinary, and (5) not increase clinician workload significantly.

The cancer quality team reviewed and additionally refined the metric ideas with SHC and the SCI analytic teams. The executive leadership team reviewed and approved the final metrics.

A monetary incentive was provided by SHC for each CCP that met its predetermined and mutually agreed-on metric target at the end of FY16. The incentive funds were provided to the CCP (not to individuals) for reinvestment in their program, with strong encouragement to spend funds on quality improvement activities. Potential distributions were $75,000 to $125,000 per CCP, depending on patient volume and number of physicians in the CCP. To set the targets, the team reviewed the results of the metric for the final quarter of fiscal year 2015, then added a percentage for improvement on the basis of attainability and challenge to the CCP. The final quarter of FY16 was used to determine achievement of the target performance.

CCPs in which tumor stage was relevant, and for which the EHR contained a specific staging module (ie, breast, cutaneous, GI, gynecologic, head and neck, lymphoma, sarcoma, thoracic, and urologic CCPs), were offered staging module adherence as a metric. Individual new patient disease and staging information is entered in coded data fields in the Epic staging module, and is finalized with a signature by a physician or an APP. Adherence to this documentation standard was scored as successful if completed within 60 days of the initial cancer treatment at Stanford, regardless of modality of treatment. The physician who provided the initial treatment was responsible for compliance, although any provider on the patient's care team was encouraged to complete staging information. Targets were calculated by doubling the fiscal year 2015 baseline performance, with a minimum of 40% and a maximum of 70% adherence.
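The staging target rule and the 60-day scoring window described above can be sketched as follows. This is a minimal illustration, not the center's actual reporting logic: the function names are hypothetical, rates are expressed as fractions, and two points are assumptions the text does not settle (day 60 is treated as inclusive, and staging finalized before the first treatment is counted as adherent).

```python
from datetime import date, timedelta
from typing import Optional

ADHERENCE_WINDOW_DAYS = 60   # from the text: within 60 days of initial treatment
TARGET_FLOOR = 0.40          # from the text: minimum target of 40%
TARGET_CEILING = 0.70        # from the text: maximum target of 70%


def staging_target(baseline_rate: float) -> float:
    """Double the fiscal year 2015 baseline, then clamp to the 40%-70% range."""
    return min(max(2 * baseline_rate, TARGET_FLOOR), TARGET_CEILING)


def is_adherent(first_treatment: date, staging_signed: Optional[date]) -> bool:
    """Return True if the staging module was finalized within the window.

    Assumptions (not stated in the text): the 60th day counts, and a
    signature dated before the first treatment also counts.
    """
    if staging_signed is None:
        return False  # never finalized
    deadline = first_treatment + timedelta(days=ADHERENCE_WINDOW_DAYS)
    return staging_signed <= deadline
```

Under this rule, a CCP with an 11% baseline would be assigned the 40% floor, and any CCP with a baseline of 35% or higher would be assigned the 70% ceiling.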

To increase adherence to the staging module, the quality team distributed interim reports that demonstrated their performance during the past month to each CCP physician leader at the end of the month. Included in the report were the medical record numbers of those patients whose staging module had not yet been completed, by attributing physician. CCP physician leaders shared the interim results with the entire team or with individual physicians who provided incomplete data. Final monthly reports that included CCP performance over time were provided to all CCPs, and each CCP physician leader received a report with unblinded physician-level performance, including patient-level data.
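The interim report described above (performance for the month plus the outstanding medical record numbers, grouped by attributing physician) might be assembled along these lines. This is a sketch under assumptions: the `StagingRecord` fields and the `interim_report` function are hypothetical and do not reflect the actual Enterprise Data Warehouse schema.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple


class StagingRecord(NamedTuple):
    """One eligible patient for the month (hypothetical structure)."""
    mrn: str          # medical record number
    physician: str    # attributing physician
    staged: bool      # True if the staging module has been finalized


def interim_report(records: Iterable[StagingRecord]) -> Dict[str, dict]:
    """Summarize adherence and outstanding MRNs for each attributing physician."""
    by_physician: Dict[str, List[StagingRecord]] = defaultdict(list)
    for rec in records:
        by_physician[rec.physician].append(rec)

    report: Dict[str, dict] = {}
    for physician, recs in by_physician.items():
        staged = sum(1 for r in recs if r.staged)
        report[physician] = {
            "eligible": len(recs),
            "staged": staged,
            "rate": staged / len(recs),
            # Patients whose staging module is still incomplete
            "outstanding_mrns": [r.mrn for r in recs if not r.staged],
        }
    return report
```

Keeping the outstanding MRNs alongside each rate mirrors the text's point that the report was meant to be actionable by individual physicians, not only a summary score.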

A similar reporting feedback loop was conducted for the other quality metrics. Specifically, each CCP physician leader was provided a routine report of his or her metric performance. Reports included the overall rate of success, physician-level results, and patient-level detail. Physician leaders shared the results with the entire CCP through e-mail reminders or in presentations at CCP meetings. Improvement efforts were driven by the CCP physician leaders, who engaged with underperformers in their group and identified opportunities for increased participation by the entire care team to increase performance.

RESULTS

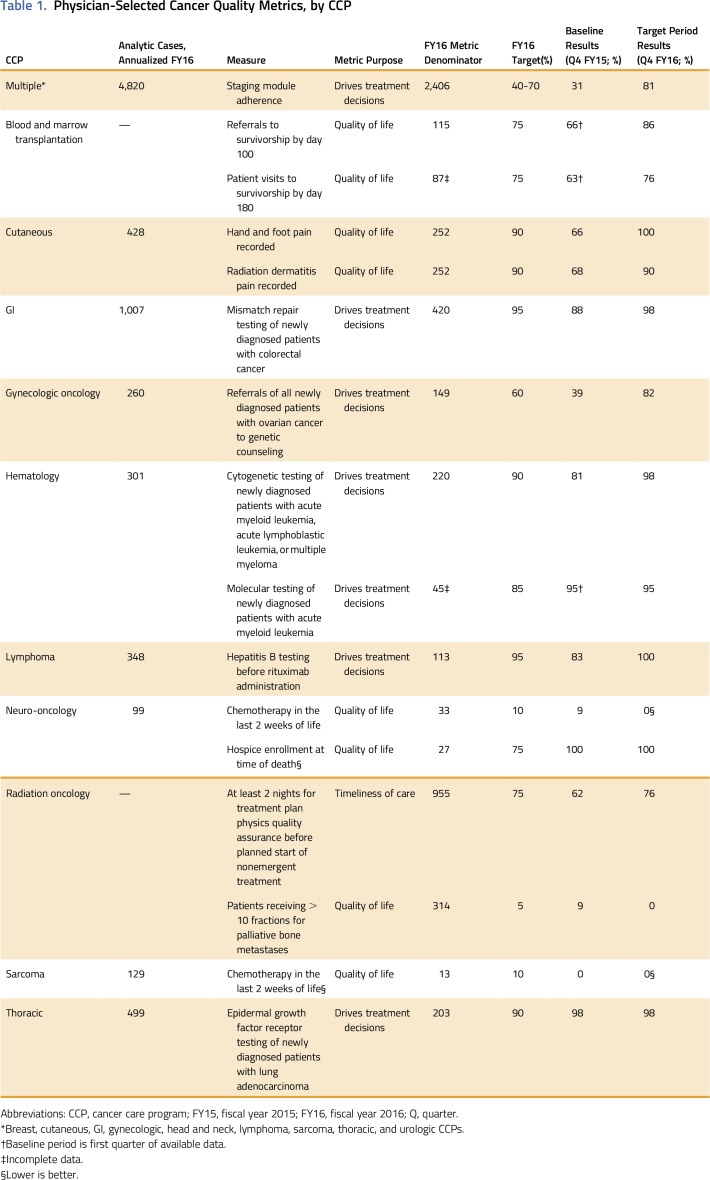

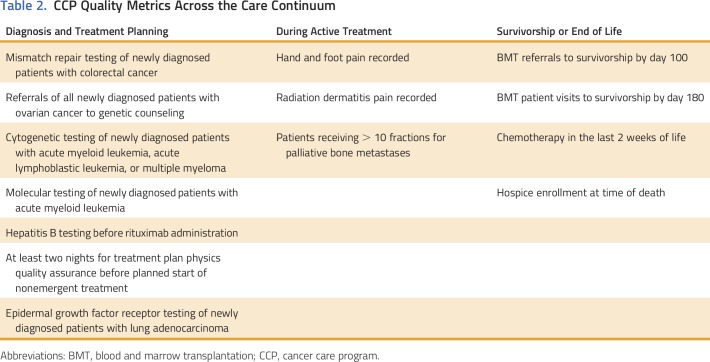

In total, 15 distinct quality metrics were identified, developed, and measured for 13 CCPs (Table 1) in FY16. Nine CCPs chose staging module adherence, two chose chemotherapy in the last 2 weeks of life, and the remaining 13 metrics were unique to a specific CCP, for a total of 24 CCP-specific metrics. All metrics were process measures, and six were based on nationally recognized measures. The remaining nine metrics were based on evidence-based guidelines; they were identified as clinically meaningful and important to the care team and the patients but are not nationally endorsed. Seven metrics were relevant to patient quality of life, seven to treatment decision making, and one to timeliness of care. Importantly, the metrics spanned the period from cancer diagnosis through survivorship or end of life (Table 2). All the metrics related to treatment decision making and timeliness of care fell under the heading of diagnosis, whereas the quality-of-life metrics fell under the heading of treatment or the heading of survivorship or end-of-life.

Table 1.

Physician-Selected Cancer Quality Metrics, by CCP

Table 2.

CCP Quality Metrics Across the Care Continuum

A total of 5,604 patients or visits (depending on the measure) were included in the denominators for the metrics. Data from five different databases were accessed to measure and track results for all metrics on a monthly or quarterly basis. The development and continuous refinement of the quality metrics required the time of two FTE roles, divided among a quality manager and three data architects over the course of 9 months. The management and dissemination of the metric reports to the 13 CCPs required an average of 8 hours per month.

The staging module adherence metric was rolled out in phases by clusters of CCPs over the course of 4 months. Within 6 months, the nine CCPs were receiving monthly staging module adherence reports. Baseline performance was 31%, which rose to 81% by study end, for a total of 1,506 records staged out of 2,406 eligible over the year. The lowest CCP adherence at study end was 57% (an increase from 11%) and the maximum was 100%, which was reached by two CCPs. A major driver for staging module adherence was the encouragement for APPs to enter and finalize staging information in the module.
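As a quick arithmetic check on the figures above, the year-long counts imply a cumulative adherence rate lower than the 81% reported at study end. That is what one would expect if adherence rose over the year, although the text does not state the exact period to which the 81% refers, so this reading is an assumption. The helper name below is hypothetical.

```python
def rate(numerator: int, denominator: int) -> float:
    """Simple proportion with a guard against an empty denominator."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return numerator / denominator


# Counts reported for the full year
cumulative = rate(1506, 2406)

# Percentage-point changes reported in the text
overall_gain_points = 81 - 31      # all nine CCPs combined
lowest_ccp_gain_points = 57 - 11   # CCP with the lowest study-end adherence

print(f"Cumulative adherence over the year: {cumulative:.1%}")  # 62.6%
print(f"Overall gain: {overall_gain_points} percentage points")
print(f"Lowest-CCP gain: {lowest_ccp_gain_points} percentage points")
```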

DISCUSSION

Our quality program had three essential elements: (1) engaging physicians in choosing the quality measures and prespecifying goals, (2) using automated extraction methods for rapid and timely feedback on improvement and progress toward achieving goals, and (3) offering a financial team-based incentive if prespecified goals were met.

Our new quality program was successful in embedding and centralizing our physician leaders in its design and implementation. This led to increased interest and engagement while achieving the goal of improving care, as measured by improvement in their selected metrics.

Measurement of quality performance, including implementation of the QOPI measurement tool within an academic medical center,9 in a statewide network sponsored by a regional payer,10 in a statewide affiliation of practices centered on an academic medical center,11 and in a government-sponsored affiliation of oncology practices,12 has been accomplished. Retrospective analysis of quality of care measures within a system, such as at the Veterans Health Administration,13,14 is also possible. Unfortunately, a gap exists in the use of measures to improve the quality of care, demonstrate measurable outcome improvement, and sustain the improvement. Engaging physicians and patient care teams to improve the quality of care that they deliver and to change behavior is difficult.15

Stanford Cancer Center has participated in QOPI since 2011 and was certified in 2013. Although the QOPI site and benchmarking results are useful in identifying improvement opportunities, our physicians were relatively removed from the process, including the selection and development of the metrics and the collection of the data. In addition, the results were provided only twice a year for a subset of our CCPs, were based on a small sample of our patients, and were not well communicated across CCPs.

Physician leaders were surveyed; most respondents rated this program as somewhat useful or very useful for improving patient care, and mean satisfaction was 4.56 on a scale from 1 (very unsatisfied) to 5 (very satisfied). They also noted that the most important driver was their engagement and leadership in the selection and improvement efforts for their metrics, more so than the financial incentive or data transparency. Data and comments are presented in the Appendix (online only).

As measures were developed and analyzed for the first time in our setting, it was unknown what the baseline performance would be. Some CCPs showed high performance from the beginning of the measurement period, such as in the measurement of epidermal growth factor receptor mutation testing for newly diagnosed patients with lung adenocarcinoma. The formal measurement verified that evidence-based care was being delivered in that area, knowledge that was not available before this measurement. We acknowledge that our results may be unique to our academic medical center, but note that our clinical care program has multiple stakeholders and multiple competing demands on the EHR and information technology reporting staff and on our clinicians’ time and attention. We posit that our findings of early clinician engagement in the quality process, timely and accurate performance feedback, the setting of achievable targets, and a small financial incentive award to the program can be effectively replicated in other settings, including smaller community-based practices and hospital-based cancer programs.

Quality measures, including the widely used QOPI system, were designed in the era of paper-based recording, and measurement requires by-hand abstraction of data16 of relatively small samples of patients. It was important to our care teams that we measure entire, defined populations of patients, and this was a major impetus to select only metrics that were pulled by automated extraction, which would require little to no manual abstraction.

Although outcome measures were not excluded from the program, all metrics for the portfolio ended up being process metrics because the resources typically required to build and improve outcome measures, such as time, information technology support, and agreement on which outcome measure to select, were limited. The development and management of the metrics were resource intensive, requiring approximately two FTEs of data architects, quality analysts, and other EHR experts. Although many of the metrics were easily extractable from the EHR, others required manual effort to abstract records and perform quality control on all negative results (to ensure that missing data were not scored incorrectly as negative or nonadherent, because clinical data are captured in the EHR in a variety of fields and text notes) and to abstract records for the death-related metrics. This manual review effort was important to maintain credibility with the care teams, who could then focus on improvement efforts.

Close collaboration among the cancer quality team, physician leaders, and data architects was critical to ensure high-quality metric development. Although metric selection was a critical milestone of engagement, the involvement of the physician leader in continuously improving the metric was arguably the most important factor in developing additional engagement by the CCPs. For example, the original staging module associated with lymphoma, cutaneous, and head and neck cancer was incomplete, inaccurate, and poorly constructed, and needed refinement under the leadership of a local disease-level expert. The engagement and leadership of these three CCPs led to increased rates of staging module adherence.

Automated, timely, high-quality reporting allowed our physician leaders to provide their CCPs with unblinded, recent data that were immediately actionable by individual providers. All providers and CCP staff were incentivized to meet targets, because the financial incentive would be provided to the CCP. Including adherence to quality measures as part of a physician compensation plan for an 11-member academic health center–based hematology-oncology division has been reported to be effective.17 Financial awards here were distributed to the CCP team for use at their discretion and were not meant for individual compensation. There seemed to be more communication and collaboration by care teams in an effort to have these metrics be successful, because the entire team was incentivized to meet their CCP goal. For example, APPs increased their involvement in staging module input, medical assistants ensured that pain was documented for cutaneous patients, and nurse coordinators checked that hepatitis B testing was complete for patients with lymphoma who were starting rituximab. In addition, at the gynecologic oncology CCP tumor board, the multidisciplinary team systematically reviewed whether genetic referrals were completed for newly diagnosed patients with ovarian cancer. Our findings may be useful in promulgating team science in oncology.18

Staging data, which are now available as a discrete data field, will serve as the foundation for measuring value-driven care and providing our teams with data to build patient cohorts by disease, stage, and other clinical characteristics that measure the care being delivered. Building these specific patient cohorts will help the cancer center in the development of clinical trials that are based on potential accrual information, in the business development of new or expanding programs, and in ensuring that consistent care is being delivered across our network of care by analyzing use data for these cohorts.

Although the first iteration of this portfolio of quality metrics was successful, lessons learned have led to enhancements for the FY17 portfolio. First, CCPs have been strongly encouraged to select outcome, rather than process, metrics, especially ones that will define and increase the value of care. Many CCPs have chosen to measure unplanned care events (ie, emergency department visits, unplanned admissions within our system) for patients they have treated in the preceding 6 months. The logic of the unplanned care report is based on the staging module, which will also permit CCPs to build specific cohorts and measure the care used for a defined period. This information will drive efforts to reduce identified unnecessary variation and ensure consistent, predictable care. Furthermore, in consideration of sustainability, we have consolidated CCPs around specific metrics, such as unplanned care, to limit the number of unique metrics that require development and management.
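The article does not describe the logic of the unplanned care report beyond its basis in the staging module, so the following is only a hypothetical sketch of how encounters might be flagged. The encounter categories, the 183-day approximation of "6 months," and all names are assumptions made for illustration.

```python
from datetime import date, timedelta
from typing import Dict, Iterable, List, NamedTuple

LOOKBACK_DAYS = 183  # assumption: approximates "the preceding 6 months"
UNPLANNED_KINDS = {"ED_VISIT", "UNPLANNED_ADMISSION"}  # hypothetical codes


class Encounter(NamedTuple):
    """One encounter within the health system (hypothetical structure)."""
    mrn: str     # medical record number
    when: date   # encounter date
    kind: str    # encounter category code


def unplanned_events(
    encounters: Iterable[Encounter],
    last_treatment: Dict[str, date],
) -> List[Encounter]:
    """Return unplanned encounters within the lookback window after treatment.

    `last_treatment` maps each treated patient's MRN to the most recent
    treatment date. Patients absent from the map are ignored.
    """
    flagged = []
    for enc in encounters:
        treated = last_treatment.get(enc.mrn)
        if treated is None or enc.kind not in UNPLANNED_KINDS:
            continue
        elapsed = enc.when - treated
        if timedelta(0) <= elapsed <= timedelta(days=LOOKBACK_DAYS):
            flagged.append(enc)
    return flagged
```

In practice the cohort would presumably be restricted further by disease and stage from the staging module, which is the step the text identifies as the foundation of the report.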

Second, because these metrics were determined to be meaningful to the care teams and their patients, we will verify and solicit feedback regarding future metric ideas from our cancer patient and family advisory council, composed of former and actively treated patients with cancer and family members or caregivers of patients. An important opportunity exists to better engage patients and family members in defining quality of care. One example of this may be patient-reported outcome measures, which we will incorporate as resources allow.

Third, benchmarking opportunities are a missing component in using locally identified metrics. We invite peer and local cancer centers to explore measuring with us and collaborating on identifying best practices.

Finally, an increasing challenge for many organizations is how to monitor and maintain quality of care across growing networks of care. Although these measures were completed for our Palo Alto center, the next steps are to promulgate these metrics at our other network sites of care and to identify opportunities to improve across the network.

ACKNOWLEDGMENT

We thank Ruchille B. Ruiz for administrative support and thank Terri Owen, Karen Tong, James Martin, Jimmy Lau, Raj Behal, MD, and Chris Holsinger, MD, for their contributions.

Appendix

Six months after conclusion of implementation, we surveyed physician leadership to get their opinions. There were nine responses (out of 13 cancer care program [CCP] leaders):

When asked how satisfied they were on a scale from 1 (very unsatisfied) to 5 (very satisfied), the mean response was 4.56.

When asked how useful the program was to improve quality of patient care, four said somewhat useful, four said very useful, and one was unsure.

When asked how important the financial incentive was to the CCP, six said somewhat important, and three said very important.

When asked what the key drivers were, in order of importance:

- Eight of nine said the participation and leadership in selecting a metric that was meaningful to the patient and care team was most important

- Seven of nine said transparent data and competition were second most important

- Six of nine said financial incentive was least important (of choices, but respondents were able to note other drivers and rank them).

Weaknesses identified by respondents included:

- Continued challenges with sustainability

- Criticism of colleagues when imperfect data were provided

- Need for more involvement from administration leadership to ensure that targets require striving to achieve; conflict of interest for quality and clinical teams to identify targets

- Limited resources to create and carry out improvement projects

- Challenges in identifying quality metrics that can easily be measured across entire CCP

- Difficulty in measuring outcomes rather than process. Need to continue to focus on measuring how our efforts translate into patient benefit.

AUTHOR CONTRIBUTIONS

Conception and design: Julie Bryar Porter, Eben Lloyd Rosenthal, Sridhar Belavadi Seshadri, Douglas W. Blayney

Collection and assembly of data: Julie Bryar Porter, Andrea Segura Smith, Yohan Vetteth, Eileen F. Kiamanesh, Amogh Badwe, Lawrence David Recht, Joseph B. Shrager, Douglas W. Blayney

Data analysis and interpretation: Julie Bryar Porter, Marcy Winget, Andrea Segura Smith, Eileen F. Kiamanesh, Amogh Badwe, Ranjana H. Advani, Mark K. Buyyounouski, Steven Coutre, Frederick Dirbas, Vasu Divi, Oliver Dorigo, Kristen N. Ganjoo, Laura J. Johnston, Joseph B. Shrager, Eila C. Skinner, Susan M. Swetter, Brendan C. Visser, Douglas W. Blayney

Manuscript writing: All authors

Final approval of manuscript: All authors

Accountable for all aspects of the work: All authors

AUTHORS' DISCLOSURES OF POTENTIAL CONFLICTS OF INTEREST

Improving Care With a Portfolio of Physician-Led Cancer Quality Measures at an Academic Center

The following represents disclosure information provided by authors of this manuscript. All relationships are considered compensated. Relationships are self-held unless noted. I = Immediate Family Member, Inst = My Institution. Relationships may not relate to the subject matter of this manuscript. For more information about ASCO's conflict of interest policy, please refer to www.asco.org/rwc or ascopubs.org/journal/jop/site/misc/ifc.xhtml.

Julie Bryar Porter

No relationship to disclose

Eben Lloyd Rosenthal

No relationship to disclose

Marcy Winget

No relationship to disclose

Andrea Segura Smith

No relationship to disclose

Sridhar Belavadi Seshadri

No relationship to disclose

Yohan Vetteth

No relationship to disclose

Eileen F. Kiamanesh

No relationship to disclose

Amogh Badwe

No relationship to disclose

Ranjana H. Advani

Consulting or Advisory Role: Genentech/Roche, Juno, Bristol-Myers Squibb, Spectrum Pharmaceuticals, Sutro, NanoString, Pharmacyclics, Gilead Sciences, Bayer HealthCare Pharmaceuticals, Cell Medica

Research Funding: Millennium Pharmaceuticals (Inst), Seattle Genetics (Inst), Genentech (Inst), Bristol-Myers Squibb (Inst), Pharmacyclics (Inst), Janssen Pharmaceuticals (Inst), Celgene (Inst), Forty Seven (Inst), Agensys (Inst), Merck (Inst), Kura (Inst), Regeneron Pharmaceuticals (Inst), Infinity Pharmaceuticals (Inst)

Mark K. Buyyounouski

Patents, Royalties, Other Intellectual Property: Up-To-Date

Steven Coutre

Consulting or Advisory Role: Genentech, Celgene, Pharmacyclics, Gilead Sciences, Abbvie, Novartis, Janssen Oncology

Research Funding: Celgene (Inst), Novartis (Inst), Pharmacyclics (Inst), Gilead Sciences (Inst), Abbvie (Inst), Janssen Oncology (Inst)

Travel, Accommodations, Expenses: Celgene, Gilead Sciences, Novartis, Abbvie, Pharmacyclics, Janssen Oncology

Frederick Dirbas

Stock or Other Ownership: Cianna Medical, Hologic

Vasu Divi

No relationship to disclose

Oliver Dorigo

No relationship to disclose

Kristen N. Ganjoo

Honoraria: Novartis, Daiichi Sankyo (I)

Consulting or Advisory Role: Novartis, Daiichi Sankyo

Laura J. Johnston

No relationship to disclose

Lawrence David Recht

No relationship to disclose

Joseph B. Shrager

Consulting or Advisory Role: Becton Dickinson, Varian Medical Systems

Research Funding: Varian Medical Systems

Patents, Royalties, Other Intellectual Property: Patent on a method of preventing muscle atrophy

Eila C. Skinner

No relationship to disclose

Susan M. Swetter

No relationship to disclose

Brendan C. Visser

No relationship to disclose

Douglas W. Blayney

Honoraria: Apotex, Mylan, TerSera, Cascadian Therapeutics, Varian Medical Systems

Research Funding: Amgen (Inst), BeyondSpring Pharmaceuticals (Inst)

REFERENCES

- 1. Porter ME: What is value in health care? N Engl J Med 363:2477-2481, 2010

- 2. Centers for Medicare and Medicaid Services: Oncology care model. https://innovation.cms.gov/initiatives/oncology-care/

- 3. Institute for Healthcare Improvement: The IHI triple aim initiative. http://www.ihi.org/engage/initiatives/TripleAim/Pages/default.aspx

- 4. National Quality Forum: NQF endorses cancer measures. http://www.qualityforum.org/News_And_Resources/Press_Releases/2012/NQF_Endorses_Cancer_Measures.aspx

- 5. Blayney DW, McNiff K, Eisenberg PD, et al: Development and future of the American Society of Clinical Oncology’s Quality Oncology Practice Initiative. J Clin Oncol 32:3907-3913, 2014

- 6. Hampton T: Radiation oncology organization, FDA announce radiation safety initiatives. JAMA 303:1239-1240, 2010

- 7. Press-Ganey: Patient experience – Patient-centered care. Understanding patient needs to reduce suffering and improve care. http://www.pressganey.com/solutions/patient-experience

- 8. Vizient. https://www.vizientinc.com/

- 9. Blayney DW, McNiff K, Hanauer D, et al: Implementation of the Quality Oncology Practice Initiative at a university comprehensive cancer center. J Clin Oncol 27:3802-3807, 2009

- 10. Blayney DW, Severson J, Martin CJ, et al: Michigan oncology practices showed varying adherence rates to practice guidelines, but quality interventions improved care. Health Aff (Millwood) 31:718-728, 2012

- 11. Gray JE, Laronga C, Siegel EM, et al: Degree of variability in performance on breast cancer quality indicators: Findings from the Florida initiative for quality cancer care. J Oncol Pract 7:247-251, 2011

- 12. Siegel RD, Castro KM, Eisenstein J, et al: Quality improvement in the National Cancer Institute community cancer centers program: The quality oncology practice initiative experience. J Oncol Pract 11:e247-e254, 2015

- 13. Nipp RD, Kelley MJ, Williams CD, et al: Evolution of the Quality Oncology Practice Initiative supportive care quality measures portfolio and conformance at a Veterans Affairs medical center. J Oncol Pract 9:e86-e89, 2013

- 14. Walling AM, Tisnado D, Asch SM, et al: The quality of supportive cancer care in the Veterans Affairs health system and targets for improvement. JAMA Intern Med 173:2071-2079, 2013

- 15. Wilensky G: Changing physician behavior is harder than we thought. JAMA 316:21-22, 2016

- 16. Goldwein JW, Rose CM: QOPI, EHRs, and quality measures. J Oncol Pract 2:262, 2006

- 17. Makari-Judson G, Wrenn T, Mertens WC, et al: Using quality oncology practice initiative metrics for physician incentive compensation. J Oncol Pract 10:58-62, 2014

- 18. Kosty MP, Hanley A, Chollette V, et al: National Cancer Institute-American Society of Clinical Oncology Teams in Cancer Care Project. J Oncol Pract 12:955-958, 2016