Abstract

Background

Medication safety strategies involving trigger alerts have demonstrated potential in identifying drug-related hazardous conditions (DRHCs) and preventing adverse drug events in hospitalized patients. However, whether trigger alert effectiveness differs between intensive care unit (ICU) and general ward patients remains unknown. The objective was to investigate trigger alert performance in accurately identifying DRHCs associated with laboratory abnormalities in ICU and non-ICU settings.

Methods

This retrospective, observational study was conducted at a university hospital over a 1-year period and involved 20 unique trigger alerts aimed at identifying possible drug-induced laboratory abnormalities. The primary outcome was the positive predictive value (PPV) of trigger alerts in distinguishing drug-induced abnormal laboratory values in critically ill and general ward patients. Aberrant laboratory values attributed to medications that did not result in an actual adverse event were categorized as DRHCs.

Results

A total of 634 patients with 870 trigger alerts were included. Trigger alerts were generated more commonly in general ward patients (59.8%) than in ICU patients (40.2%). The overall PPV in detecting a DRHC in all hospitalized patients was 0.29, while the PPV in non-ICU patients (0.31) was significantly higher than in the critically ill (0.25) (p = 0.03). However, the rate of DRHCs was significantly higher in the ICU than in the general ward (7.49 versus 0.87 events per 1000 patient days, respectively, p < 0.0001). Although most DRHCs were considered mild or moderate in severity, more severe and life-threatening DRHCs occurred in the ICU compared with the general ward (39.8% versus 12.4%, respectively, p < 0.001).

Conclusions

Overall, most trigger alerts performed poorly in detecting DRHCs irrespective of patient care setting. Continuous process improvement practices should be applied to trigger alert performance to improve clinician time efficiency and minimize alert fatigue.

Keywords: adverse drug event, adverse drug event surveillance, automated medication monitoring system, critically ill, drug related hazardous condition, intensive care unit, medication safety, trigger alert

Introduction

The risk of adverse drug events (ADEs) is higher in the intensive care unit (ICU) and is associated with more pronounced deleterious outcomes than in general ward patients. An ADE is any patient injury directly attributed to medication use.1 A landmark trial found the rate of ADEs was highest in the medical ICU (19.4 events per 1000 patient days) compared with medical and surgical general wards (10.6 and 8.9 events per 1000 patient days, respectively).2 After adjusting for the number of medications administered, medical ICU patients continued to have the highest risk of experiencing an ADE.2 Also, the rate of preventable and potential ADEs in the critically ill has been estimated to be almost twofold higher than in the general ward population, with the medical ICU associated with the highest risk.3 Although no significant differences between ICU and non-ICU rates were found in this study after adjusting for the number of medications used, it is important to recognize that nonpreventable events were not included.3 The hospital length of stay resulting from ADEs in ICU patients is 1.6 days longer than for those occurring in the general ward.3 The economic burden of drug-induced adverse events on healthcare institutions has been significant. Incremental costs associated with an ADE in the ICU have been estimated at $10,100 (adjusted year 2017 currency) more than those in the general ward.4

Comprehensive ADE surveillance programs have historically focused on identification rather than prevention.5,6 However, one particular surveillance strategy utilizing trigger alerts has shown promise as a viable preventative strategy.7–10 Trigger alerts are real-time notifications to healthcare providers resulting from logic-based rules involving abnormal laboratory or physiologic values detected from the electronic medical record. Ideally, to prevent ADEs, trigger alerts should be generated from abnormal values preceding patient injury, also known as drug-related hazardous conditions (DRHCs).11,12 Real-time trigger alerts may allow for clinician intervention, thereby minimizing the severity of drug-induced injury or avoiding ADEs altogether.5

Few studies have evaluated the impact of pharmacists utilizing trigger alerts to prevent ADEs, especially in an ICU setting.13–15 Pharmacists incorporating this strategy into their patient care responsibilities demonstrated improved patient safety with a high degree of intervention recommendations accepted by the provider.13–15 Only one study compared the performance of automated trigger alerts between ICU and general ward patient populations.13 Pharmacists intervened more frequently on trigger alerts in the general ward than for critically ill patients, although physician acceptance rates of recommended drug therapy changes were exceedingly high in both patient care areas.13 Moreover, the positive predictive value (PPV) of alerts associated with a DRHC was higher in general ward patients compared with those in the ICU (0.76 versus 0.66, respectively).13 These findings suggest trigger alerts performed better in non-ICU patients. A limitation of this study was that only 20% of all trigger alerts evaluated involved drug-induced laboratory abnormalities, thereby identifying a DRHC. Furthermore, for those alerts that were related to DRHCs in the previous study, the overall sample was small and these alerts were not the primary focus of the investigation. In other words, the vast majority of triggers were designed for detection and not in a manner to notify the pharmacist of impending injury. Consequently, the previously reported performance values do not reflect trigger alert performance for prevention. Therefore, the purpose of this study was to address this gap and evaluate the performance of trigger alerts involving laboratory abnormalities to identify DRHCs and prevent ADEs in ICU and general ward patients.

Methods

Patients and study design

This was a retrospective, observational study conducted at a major academic medical center over a 1-year period (from 1 January 2015 to 31 December 2015) after Institutional Review Board approval. Banner University Medical Center Phoenix is a 733-bed quaternary care center including 88 adult ICU beds with 38,417 total adult admissions consisting of 196,968 total adult patient days during 2015. Our previous work was used to identify 20 unique triggers at our institution that identified DRHCs (drug-associated laboratory abnormalities) and could possibly help prevent ADEs (Appendix 1).13 Patients at least 18 years of age with one or more of the 20 trigger alerts associated with abnormal laboratory values generated during their hospital stay were included. Patients were excluded if data were missing or not accessible in the electronic medical record. In patients with duplicate alerts resulting from the same medication during the same hospital admission, only the initial unique trigger alert was evaluated. However, trigger alerts associated with warfarin and international normalized ratio (INR) for the same patient were included for each instance generated on separate hospital days.

Patients were identified through the electronic medical record database. A pharmacist (JRR) not involved with the care of these patients investigated all trigger alert data from a centralized database. Pertinent demographic and clinical data were collected, including age, sex, comorbidities, specific trigger alert generated and patient location (ICU or general ward) at time of alert, identification of DRHCs and ADEs, severity (only for DRHCs identified) as well as the rate of drug therapy changes resulting from trigger alerts.

Trigger alerts at our institution are automated notifications available both as paper reports and electronically on a designated webpage accessible within the hospital. These notifications were initially developed as proprietary clinical decision support software (Discern Expert Rules, Cerner Corp, Kansas City, MO, USA). However, trigger alerts are modified at our institution based on its unique needs by adding, removing, or changing the logic-based criteria for each alert. All trigger alerts are generated in real time when an identified medication is present in conjunction with a specified laboratory abnormality exceeding an established threshold of either an absolute value (i.e. higher or lower than the normal range) or the rate of change between the current and previously reported laboratory value. For example, one logic-based rule involves warfarin and the INR. A trigger alert would be produced if the patient has an active order for warfarin on the medication administration record and the INR either increased by more than 0.5 from the previous INR to the most current value or the reported INR value was over 4.0, irrespective of the degree of change from the previous value. It should be noted that false-positive alerts for a possible DRHC or ADE may result from other causes such as disease-related issues (e.g. end-stage liver disease). Therefore, a manual chart review following the trigger alert was required to establish whether the laboratory abnormality was drug induced or attributable to nondrug-related causes.
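The warfarin/INR rule described above can be sketched in a few lines of Python. This is only an illustration of the stated criteria, not the Discern Expert rule itself; the function name, argument names, and the handling of a missing previous INR are assumptions introduced here.

```python
from typing import Optional

# Thresholds taken from the rule as described in the text.
INR_DELTA_THRESHOLD = 0.5      # rise from the previous INR to the current INR
INR_ABSOLUTE_THRESHOLD = 4.0   # absolute INR cutoff


def warfarin_inr_alert(active_warfarin_order: bool,
                       current_inr: float,
                       previous_inr: Optional[float] = None) -> bool:
    """Return True when the (hypothetical) warfarin/INR trigger would fire."""
    if not active_warfarin_order:
        return False
    # Absolute criterion: fires irrespective of the change from the prior value.
    if current_inr > INR_ABSOLUTE_THRESHOLD:
        return True
    # Assumption: without a previous INR the rate-of-change criterion cannot fire.
    if previous_inr is None:
        return False
    # Rate-of-change criterion.
    return (current_inr - previous_inr) > INR_DELTA_THRESHOLD
```

A fired alert only flags a possible DRHC; as noted above, chart review is still needed to decide whether the abnormality is drug induced.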

Definitions

Causality assessment and severity for drug-induced laboratory abnormalities with or without harm experienced by the patient (i.e. DRHCs and ADEs) were evaluated using a standardized approach as previously described.13,16–18 The pharmacist conducting the data collection used three validated tools to determine the probability that the event identified by the trigger alert was drug related and to exclude nondrug causes.19–21 This approach was used to determine causality for possible DRHCs (i.e. laboratory abnormality was drug induced with the potential for harm without any organ injury transpiring at the point of evaluation) and resultant ADEs (i.e. injury defined as the presence of drug-induced organ injury).11 The pharmacist classified all trigger alerts as DRHCs if two of the three instruments concluded the probability of a drug-related laboratory abnormality was ‘possible’, ‘probable’, or ‘definite’. The pharmacist reviewed the patient chart for all identified DRHCs for the presence of related ADEs. Potential adverse outcomes included bleeding (any type or severity), pancreatitis, arrhythmia, neutropenia, acute hepatic failure, acute renal failure, acidosis, altered mental status, nausea/vomiting, nystagmus, ataxia, coma, respiratory depression, seizures, and death. The pharmacist used the same validated tools, causality assessment, and agreement between instruments to identify whether the adverse outcome was an ADE.
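The agreement criterion can be expressed as a small helper. This is a minimal sketch under two assumptions: that "two of the three instruments" means at least two, and that each instrument's result has been reduced to a single category label. The function name and the label `doubtful` used in the test cases are hypothetical.

```python
# Categories that count as evidence of a drug-related abnormality, per the text.
DRUG_RELATED_CATEGORIES = {"possible", "probable", "definite"}


def is_drhc(instrument_ratings):
    """Classify an alert as a DRHC from three causality-instrument ratings.

    instrument_ratings: a sequence of three category strings, one per instrument.
    Assumption: 'two of the three' is interpreted as at least two.
    """
    if len(instrument_ratings) != 3:
        raise ValueError("expected exactly three instrument ratings")
    agreeing = sum(
        1 for rating in instrument_ratings
        if rating.strip().lower() in DRUG_RELATED_CATEGORIES
    )
    return agreeing >= 2
```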

Severity of harm was evaluated for all DRHCs using a standardized classification system based on the National Cancer Institute’s Common Terminology Criteria for Adverse Events (NCI CTCAE).22 This classification system uses a five-point numeric scale from ‘1’ (mild) to ‘5’ (death). The NCI CTCAE scale is based on predefined laboratory serum concentration ranges to categorize corresponding severity. Unfortunately, the NCI CTCAE does not classify phenytoin and digoxin serum concentrations. Therefore, the investigators established severity criteria for both of these agents. Severity was considered ‘mild’, ‘moderate’, or ‘severe’ for corresponding serum total phenytoin concentrations of 20–30, 31–39, and over 40 μg/ml, respectively. Digoxin serum concentrations in the ranges of 2.1–3.0, 3.1–4.0, and over 4.0 ng/ml were considered ‘mild’, ‘moderate’, and ‘severe’, respectively. For comparison purposes, the NCI CTCAE numeric scale (1–5) was categorized into the corresponding degree of severity as follows: ‘mild’, ‘moderate’, ‘severe’, ‘life threatening’, and ‘fatal’.
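The investigator-defined severity bands for phenytoin and digoxin, together with the mapping of NCI CTCAE grades to severity labels, can be written out as follows. This is a sketch only: the function names are hypothetical, and the treatment of values that fall between or exactly on the stated range limits (for example, a phenytoin concentration of exactly 40 μg/ml) is an assumption, because the text does not specify it.

```python
# NCI CTCAE numeric grade -> severity label, as listed in the text.
CTCAE_SEVERITY = {
    1: "mild",
    2: "moderate",
    3: "severe",
    4: "life threatening",
    5: "fatal",
}


def phenytoin_severity(total_conc_ug_per_ml):
    """Severity for total phenytoin (stated bands: 20-30, 31-39, over 40 ug/ml)."""
    if total_conc_ug_per_ml < 20:
        return None  # below the lowest stated band
    if total_conc_ug_per_ml <= 30:
        return "mild"
    if total_conc_ug_per_ml < 40:
        return "moderate"  # assumption: values between 30 and 31 fall here
    return "severe"        # assumption: exactly 40 is treated as severe


def digoxin_severity(conc_ng_per_ml):
    """Severity for digoxin (stated bands: 2.1-3.0, 3.1-4.0, over 4.0 ng/ml)."""
    if conc_ng_per_ml < 2.1:
        return None  # below the lowest stated band
    if conc_ng_per_ml <= 3.0:
        return "mild"
    if conc_ng_per_ml <= 4.0:
        return "moderate"  # assumption: values between 3.0 and 3.1 fall here
    return "severe"
```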

Study endpoints and analysis

The primary endpoint was the PPV of trigger alerts involving laboratory abnormalities in identifying DRHCs in the ICU versus the general ward, both overall and for each unique trigger alert. Secondary analyses evaluated severity of harm associated with DRHCs and ADE rates between ICU and non-ICU patients. The PPV for each trigger alert identifying a DRHC was calculated by dividing the number of true-positive trigger alerts generated (i.e. the trigger accurately identified the drug-induced laboratory abnormality) by the sum of both true- and false-positive alerts (i.e. total number of alerts produced that were drug related or not). The DRHC and ADE rates (events per 1000 patient days and events per 1000 admissions) were calculated by dividing the number of events detected by the total number of patient days or admissions recorded during the study period (i.e. calendar year 2015) and multiplying by 1000. The DRHC and ADE rates in the ICU and general ward were calculated in the same way, using the patient days and admissions recorded in the ICU and general ward, respectively, during the study period as the denominators.
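The PPV and rate calculations can be illustrated with a short worked example. The function names are hypothetical; the counts are those reported in the Results (Table 4), and the false-positive counts are simply total alerts minus DRHCs.

```python
def ppv(true_positives, false_positives):
    """Positive predictive value: TP / (TP + FP)."""
    return true_positives / (true_positives + false_positives)


def rate_per_1000(events, denominator):
    """Events per 1000 units of the denominator (patient days or admissions)."""
    return events / denominator * 1000


# Overall: 249 DRHCs among 870 alerts (870 - 249 = 621 false positives).
overall_ppv = ppv(249, 870 - 249)
ward_ppv = ppv(161, 520 - 161)
icu_ppv = ppv(88, 350 - 88)

# DRHC rates using the 2015 patient days and admissions for each setting.
icu_per_1000_days = rate_per_1000(88, 11_742)
ward_per_1000_days = rate_per_1000(161, 185_226)
icu_per_1000_adm = rate_per_1000(88, 1_827)
ward_per_1000_adm = rate_per_1000(161, 36_590)

print(round(overall_ppv, 2), round(ward_ppv, 2), round(icu_ppv, 2))
print(round(icu_per_1000_days, 2), round(ward_per_1000_days, 2))
print(round(icu_per_1000_adm, 1), round(ward_per_1000_adm, 1))
```

Rounded to the precision used in the paper, these reproduce the reported values (PPV 0.29, 0.31, and 0.25; 7.49 versus 0.87 DRHCs per 1000 patient days; 48.2 versus 4.4 DRHCs per 1000 admissions).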

A 12-month study period was estimated to provide an adequate sample size, being a convenience sample of over 500 alerts for the 20 unique trigger alerts based on a previously published study.13 This approach for study sample size and time period estimation is also supported by another report.23 Statistical analyses were performed using GraphPad Prism version 7.0b for Windows (GraphPad Software, Inc., La Jolla, CA, USA, 2016). The χ2 or Fisher’s exact test was used for categorical variables and Student’s t test for continuous data. DRHC and ADE rates were compared with a two-sample test for binomial proportions. A p value of less than 0.05 was considered statistically significant.
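One common form of a two-sample test for binomial proportions is the pooled z-test, sketched below with the Python standard library only. The text does not state which variant was used (pooled or unpooled, with or without continuity correction), so this particular form is an assumption, as is treating each patient day as an independent trial. The function name is hypothetical and the counts are those reported in Table 4.

```python
import math


def two_proportion_z_test(events_a, n_a, events_b, n_b):
    """Pooled two-sample z-test for proportions; returns (z, two-sided p value)."""
    p_a = events_a / n_a
    p_b = events_b / n_b
    pooled = (events_a + events_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / standard_error
    # Two-sided p value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# DRHCs per patient day: ICU (88 / 11,742) versus general ward (161 / 185,226).
z_stat, p_val = two_proportion_z_test(88, 11_742, 161, 185_226)
print(z_stat > 0, p_val < 0.0001)
```

Under these assumptions the ICU rate is higher and the p value falls far below 0.0001, in line with the reported comparison of DRHC rates.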

Results

A total of 870 trigger alerts in 634 unique patients were generated during the study period from 20 logic-based rules (Appendix 1). The majority of trigger alerts occurred on the general ward floors (59.8%) compared with ICUs (40.2%) (Table 1). The distribution of 11 (55%) of the 20 unique trigger alerts evaluated was similar between ICU and general ward patients (Table 2). However, five (25%) unique trigger alerts were significantly more frequent in the ICU, while four (20%) alerts were significantly more common in the general ward population (Table 2).

Table 1.

Trigger alert and patient characteristics.*

| Variable | General ward | ICU | p value |
|---|---|---|---|
| Total unique patients, n | 395 | 239 | – |
| Total trigger alerts, n | 520 | 350 | – |
| Male, n (%) | 211 (53.4%) | 138 (57.7%) | 0.051 |
| Mean age, years (SD) | 60.4 ± 17.2 | 58.7 ± 15.1 | 0.861 |
| Median (IQR) length of hospital stay, days | 10 (5, 20) | 15 (7, 25) | 0.001 |
| Median (IQR) days after hospital admission trigger alert generated | 5 (2, 10) | 5 (2, 10) | 0.294 |

The number of admissions and patient days reported during the study period.

ICU, intensive care unit; IQR, interquartile range; SD, standard deviation.

Table 2.

Trigger alert distribution in general ward and ICU patients.*

| Trigger alert | General ward (n = 520) | ICU (n = 350) | Total (n = 870) | p value$ |
|---|---|---|---|---|
| Thrombocytopenia (heparin or LMWH) | 106 (20.4%) | 134 (38.2%) | 240 (27.6%) | <0.001 |
| Supratherapeutic INR (warfarin and DDI) | 87 (16.8%) | 19 (5.4%) | 106 (12.2%) | <0.001 |
| Nephrotoxicity | 50 (9.6%) | 54 (15.4%) | 104 (12.0%) | 0.011 |
| Hepatotoxicity | 36 (6.9%) | 32 (9.1%) | 68 (7.8%) | 0.248 |
| Rapid INR increase (warfarin dose change) | 55 (10.6%) | 5 (1.4%) | 60 (6.9%) | <0.001 |
| Rapid INR increase (new warfarin start) | 48 (9.2%) | 4 (1.1%) | 52 (6.0%) | <0.001 |
| Supratherapeutic phenytoin serum concentration | 29 (5.6%) | 14 (4.0%) | 43 (4.9%) | 0.340 |
| Hyperkalemia (nonspecific) | 17 (3.3%) | 21 (6.0%) | 38 (4.4%) | 0.065 |
| Thrombocytopenia (nonspecific) | 12 (2.3%) | 26 (7.4%) | 38 (4.4%) | <0.001 |
| Hyperkalemia (ACE-I/ARB) | 15 (2.9%) | 8 (2.3%) | 23 (2.6%) | 0.670 |
| Neutropenia | 14 (2.7%) | 1 (0.3%) | 15 (1.7%) | 0.007 |
| Metabolic acidosis (nonspecific) | 4 (0.8%) | 10 (2.8%) | 14 (1.6%) | 0.025 |
| Pancreatitis | 11 (2.1%) | 3 (0.9%) | 14 (1.6%) | 0.178 |
| Supratherapeutic digoxin serum concentration | 11 (2.1%) | 2 (0.6%) | 13 (1.5%) | 0.087 |
| Metabolic acidosis (topiramate) | 8 (1.5%) | 4 (1.1%) | 12 (1.4%) | 0.771 |
| Hypertriglyceridemia (propofol) | 1 (0.2%) | 10 (2.8%) | 11 (1.3%) | 0.007 |
| Hyponatremia | 6 (1.2%) | 1 (0.3%) | 7 (0.8%) | 0.252 |
| Positive antinuclear antibody test (i.e. lupus) | 6 (1.2%) | 1 (0.3%) | 7 (0.8%) | 0.252 |
| Metabolic acidosis (zonisamide) | 2 (0.4%) | 1 (0.3%) | 3 (0.3%) | NS |
| Thrombocytopenia (fondaparinux) | 1 (0.2%) | 1 (0.3%) | 2 (0.2%) | NS |

Data presented as n (%).

χ2 or Fisher’s exact test comparing distribution of trigger alerts between the general ward and ICU groups.

ACE-I, angiotensin-converting enzyme inhibitor; ARB, angiotensin II receptor antagonist; DDI, drug–drug interaction; ICU, intensive care unit; INR, international normalized ratio; LMWH, low molecular weight heparin; NS, not significant.

The PPV for all 20 trigger alerts in identifying DRHCs was 0.29, with the general ward performing better than the ICU (0.31 versus 0.25, respectively, p = 0.03). The vast majority (90%) of all laboratory abnormality trigger alerts were associated with PPVs less than 0.50 (Table 3). Only two alerts (supratherapeutic INR resulting from drug–drug interaction with warfarin and supratherapeutic digoxin serum concentrations) were associated with a PPV greater than 0.75, while five trigger alerts were all false positives (PPV = 0.00) in identifying a DRHC. Although the trigger designed to identify heparin-induced thrombocytopenia (HIT) was the most frequently generated alert overall as well as in the ICU and general ward areas, it resulted in very low performance for identifying HIT (PPV = 0.16).

Table 3.

Positive predictive values for identifying drug-related hazardous conditions.*

| Trigger alert | General ward PPV (n) | ICU PPV (n) | Total PPV (n) | p value |
|---|---|---|---|---|
| Supratherapeutic INR (warfarin and DDI) | 0.80 (87) | 0.89 (19) | 0.82 (106) | 0.51 |
| Supratherapeutic digoxin serum concentration | 0.73 (11) | 1.00 (2) | 0.77 (13) | 0.99 |
| Hypertriglyceridemia (propofol) | 1.00 (1) | 0.40 (10) | 0.45 (11) | 0.36 |
| Hyperkalemia (ACE-I/ARB) | 0.40 (15) | 0.50 (8) | 0.43 (23) | 0.69 |
| Rapid INR increase (new warfarin start) | 0.40 (48) | 0.75 (4) | 0.42 (52) | 0.30 |
| Hyperkalemia (nonspecific) | 0.47 (17) | 0.33 (21) | 0.39 (38) | 0.51 |
| Rapid INR increase (warfarin dose change) | 0.29 (55) | 0.60 (5) | 0.32 (60) | 0.31 |
| Nephrotoxicity | 0.20 (50) | 0.20 (54) | 0.20 (104) | 0.99 |
| Hepatotoxicity | 0.08 (36) | 0.25 (32) | 0.16 (68) | 0.74 |
| Thrombocytopenia (heparin or LMWH) | 0.12 (106) | 0.16 (134) | 0.15 (240) | 0.54 |
| Pancreatitis | 0.18 (11) | 0.00 (3) | 0.14 (14) | 0.99 |
| Supratherapeutic phenytoin serum concentration | 0.10 (29) | 0.21 (14) | 0.14 (43) | 0.37 |
| Thrombocytopenia (nonspecific) | 0.00 (12) | 0.15 (26) | 0.11 (38) | 0.28 |
| Neutropenia | 0.07 (14) | 0.00 (1) | 0.07 (15) | 0.99 |
| Positive antinuclear antibody test (i.e. lupus) | 0.00 (6) | 0.00 (1) | 0.00 (7) | N/A |
| Metabolic acidosis (nonspecific) | 0.00 (4) | 0.00 (10) | 0.00 (14) | N/A |
| Metabolic acidosis (topiramate) | 0.00 (8) | 0.00 (4) | 0.00 (12) | N/A |
| Hyponatremia | 0.00 (6) | 0.00 (1) | 0.00 (7) | N/A |
| Metabolic acidosis (zonisamide) | 0.00 (2) | 0.00 (1) | 0.00 (3) | N/A |
| Thrombocytopenia (fondaparinux) | 0.00 (1) | 0.00 (1) | 0.00 (2) | N/A |

Data presented as the positive predictive value (PPV) of the total number of alerts generated (n) in the general ward, ICU, and total. p value represents comparison between ICU and general ward.

ACE-I, angiotensin-converting enzyme inhibitor; ARB, angiotensin II receptor antagonist; DDI, drug–drug interaction; ICU, intensive care unit; INR, international normalized ratio; LMWH, low molecular weight heparin; N/A, not applicable.

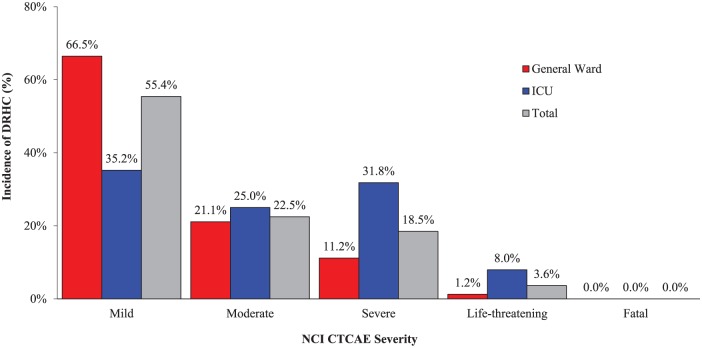

The rates (events per 1000 patient days) of DRHCs and ADEs in the ICU were over 8- and 20-fold higher, respectively, than in the general ward (Table 4). About 78% of all DRHCs were deemed mild or moderate in severity, and these were found predominantly in the general ward population (Figure 1). Overall, 46 severe and 9 life-threatening DRHCs were identified across the ICU and general ward groups combined. However, the ICU group experienced significantly higher rates of severe (n = 28) and life-threatening (n = 7) DRHCs compared with the general ward group (total severe and life-threatening DRHCs, 39.8% versus 12.4%, respectively, p < 0.001). No patients were determined to have been exposed to a fatal DRHC. Drug therapy was either modified or discontinued in most patients (74%) as a result of trigger alerts accurately identifying DRHCs. The overall rate of ADEs observed was low, with the more common events involving acute renal failure, bleeding, acute hepatic failure, and arrhythmias.

Table 4.

Drug-related hazardous condition and adverse drug event rates associated with trigger alerts.

| Variable | General ward (n = 520) | ICU (n = 350) | Total (n = 870) | p value* |
|---|---|---|---|---|
| Total admissions, n | 36,590 | 1827 | 38,417 | – |
| Total patient days, n | 185,226 | 11,742 | 196,968 | – |
| DRHC | | | | |
| Incidence, n (%) | 161 (31.0%) | 88 (25.1%) | 249 (28.6%) | 0.03 |
| Events/1000 admissions | 4.4 | 48.2 | 6.5 | <0.0001 |
| Events/1000 patient days | 0.87 | 7.49 | 1.26 | <0.0001 |
| ADE | | | | |
| Incidence, n (%) | 20 (3.8%) | 27 (7.7%) | 47 (5.4%) | <0.015 |
| Events/1000 admissions | 0.5 | 14.8 | 1.2 | <0.001 |
| Events/1000 patient days | 0.11 | 2.30 | 0.24 | <0.001 |

p value represents comparison between ICU and general ward.

ADE, adverse drug event; DRHC, drug-related hazardous condition; ICU, intensive care unit.

Figure 1.

Severity of drug-related hazardous conditions identified by trigger alerts.

DRHC, drug-related hazardous condition; ICU, intensive care unit; NCI CTCAE, National Cancer Institute-Common Terminology Criteria for Adverse Events.

Discussion

The aim of this retrospective study was to describe the performance of alerts that identified DRHCs and compare the performance between critically ill and general ward patients. The overall performance of all laboratory abnormality trigger alerts was low, with only about 30% accurately identifying DRHCs. Trigger alerts generated in ICU patients performed slightly worse than those in the general ward. However, trigger alerts in the critically ill population identified more severe and life-threatening DRHCs than those in general ward patients. Our study suggests trigger alerts show promise as an ADE prevention strategy in the ICU and general ward; however, the overall utility of these alerts for detecting drug-induced laboratory abnormalities before an ADE occurs needs improvement in both patient care settings. Poor performance may result in clinician alert fatigue, which is counterproductive to the purpose of the technology and may negatively affect the provider’s ability to take appropriate action to prevent a potential ADE.24 This study highlights the importance of continuous re-evaluation of the logic-based criteria of trigger alerts to optimize performance and minimize the risk of alert fatigue.

Critically ill patients remain a ‘high-risk’ population vulnerable to experiencing actual and potential ADEs.4 Cullen and colleagues published the landmark trial comparing the rate of preventable and potential ADEs among 5 ICUs and 11 general ward units in two tertiary care hospitals.3 The combined preventable and potential ADE rate was twofold higher in the ICU compared with the general ward (19 versus 10 ADEs per 1000 patient days, respectively). Furthermore, not accounting for potential ADEs, the actual ADE rate remained twice as high in ICU patients as in the non-critically ill (5.2 versus 2.6 ADEs per 1000 patient days, respectively). Our study found the ADE rate was significantly higher in the ICU population compared with the general ward (2.3 versus 0.11 ADEs per 1000 patient days, respectively). Also, the DRHC rate was more than eight times higher in the critically ill than in non-ICU patients (7.5 versus 0.9 events per 1000 patient days, respectively). Cullen and colleagues and our current study both suggest the critically ill population is at a higher risk of experiencing drug-related events.

Few studies have evaluated the performance of trigger alerts using abnormal laboratory data to identify DRHCs.13,17 A subgroup analysis from our previous report of the same abnormal laboratory trigger alerts used in this current study showed acceptable rates of identifying DRHCs in both the ICU and general ward populations (PPV 0.66 and 0.76, respectively).13 This is in stark contrast to our current study results, which showed worse performance in detecting DRHCs for ICU and non-ICU patients (PPV 0.25 versus 0.31, respectively). However, both studies found that trigger alert performance associated with drug-induced laboratory abnormalities was better in general ward patients than in the critically ill. No changes to the logic-based rule criteria in these trigger alerts were made between evaluation periods. Although our current study evaluated the same trigger alerts to identify DRHCs, there were some differences in study design that may explain the discordant results. Our previous study only evaluated 161 trigger alerts associated with laboratory abnormalities over two non-consecutive months, while this analysis provides a more robust assessment of DRHC recognition performance with a higher number of alerts (n = 870) evaluated over a longer period of time. DiPoto and colleagues included trigger alerts from three distinct hospitals (large academic medical center, small community hospital, and rural facility) within the same health system, while the current study was conducted only at the large academic medical center.13 The patient populations in the previous report may have contributed to differences in performance because of more stable hospitalized patients in the smaller, less acute hospitals. Another major difference between these reports was that the DRHC assessment was conducted by different study investigators. This may have contributed to differences in interpretation of DRHCs despite using validated tools. Furthermore, another study also showed a higher overall PPV of 0.44 (0.38–0.50) for abnormal laboratory trigger alerts in the medical ICU compared with the overall ICU PPV of 0.25 in our study.17 Unfortunately, several major differences between our studies make comparisons challenging. Kane-Gill and colleagues evaluated 253 trigger alerts over a 6-week period with the analysis only containing five potential drug-induced laboratory abnormalities (e.g. elevated blood urea nitrogen, hypoglycemia, hyponatremia, elevated quinidine and vancomycin serum concentrations), although only four alerts were analyzed.17 This was a much narrower focus on a few trigger alerts compared with the 20 alerts we evaluated. Also, patient populations between their hospital and our institution may have been significantly different. Unfortunately, the investigators did not disclose the exact logic-based rule criteria used in their trigger alerts.

Potential drug-induced thrombocytopenia was the most common trigger alert generated overall (32.2%), with potential HIT alerts comprising the majority of these (27.6%). Unfortunately, these alerts resulted in very poor performance in the ICU (PPV = 0.16) and general ward (PPV = 0.12) populations. Kane-Gill and colleagues also observed thrombocytopenia as the most common abnormal laboratory condition from random chart reviews in ICU patients.11 Although their detection method differed from ours, the rate of thrombocytopenia DRHCs (i.e. drug induced) identified in their study was also very low (1.4%), suggesting nondrug-related causes were more common in ICU patients. Harinstein and colleagues investigated the performance of three distinct trigger alerts with different criteria for thrombocytopenia in an ICU population.18 The PPV associated with these trigger alerts was significantly higher (PPV range = 0.36–0.83) than our findings. Although heparin was one of the most common medications associated with these alerts, the investigators did not report PPVs specific to heparin. Nonetheless, the difference in performance between our results and theirs may be attributable to the logic-based criteria incorporated within the trigger alert rule. The drug-induced thrombocytopenia trigger alert with the best performance in their study was based on a reduction in the absolute platelet count of at least 50% from the previous to the most recent laboratory result (PPV = 0.83), while our criterion was an absolute platelet count less than 300,000 per microliter or a decrease of over 50% from the previous laboratory value with an active order for heparin-like products (unfractionated heparin or low molecular weight heparin).

Trigger alerts remain a viable strategy within a comprehensive ADE surveillance program.5 This technology has demonstrated advantages over other ADE detection methods and the American College of Critical Care Medicine has endorsed its use in their clinical practice guidelines.25 More importantly, the application of trigger alerts within clinical decision support systems appears promising as an effective approach to prevent ADEs.6,13–15 Ideally, trigger alerts should identify DRHCs before any deleterious outcomes occur so the healthcare provider has an opportunity to intervene. Using only absolute laboratory threshold values in trigger alerts without incorporating the magnitude of change from one laboratory value to the next may lead to limited success in preventing ADEs. For example, a patient initiated on an appropriate warfarin dosing regimen who experiences an increase in the INR from 0.8 to 2.0 over 24 h may not generate an alert to the provider if an absolute cutoff value of INR greater than 4 was the only criterion used in the trigger alert logic-based rule. However, accounting for the degree of change in a laboratory value would have notified the provider that this particular patient’s INR was rapidly increasing and that the patient was potentially at risk of subsequent elevated INRs. Using trajectory analysis within the logic-based criteria could be useful for identifying drug-induced issues such as thrombocytopenia, renal dysfunction, and dosing of anticoagulants before any significant injury occurs, or could at least provide an intervention opportunity to minimize the severity of injury that could have been realized.6 Unfortunately, data demonstrating which trigger alert rule characteristics result in optimal performance for preventing ADEs remain scarce.

Another challenge facing healthcare providers is the potential variability of trigger performance in critically ill and general ward patients. The primary focus of our research was to investigate performance of laboratory abnormality trigger alerts between these two populations. However, our findings have identified an opportunity to improve the performance of these alerts in identifying DRHCs. Unfortunately, the specific changes to each logic-based rule needed to substantially improve the performance of each trigger alert remain unclear. Institutions evaluating quality improvement opportunities associated with trigger alert performance must also consider the potential severity rather than merely focusing on the PPV. In other words, a trigger alert designed to avoid potentially fatal events may warrant continued use despite ‘low performance’. Although medication safety teams should strive for effective, time-efficient prevention strategies, serious consideration should also be given to potential negative, unintended consequences of removing a trigger alert altogether or modifying the logic-based criteria within the alert. The severity of the DRHC was assessed at the time of the alert and thus is not reflective of the pharmacists’ intervention or response to the alert. Further research is needed so health systems can adopt trigger alert best practices for optimal performance.

This study had several limitations that should be acknowledged. First, this was a single-center evaluation at a university hospital, so the results observed in this study may not be applicable to other health-system settings. Also, the retrospective study design may have underestimated the true incidence of ADEs, since documentation in the electronic medical chart was necessary to identify all ADEs. Lack of documentation in the provider progress notes may be attributable to healthcare providers either not recognizing that the adverse event was drug related or not deeming the ADE severe or significant enough to be included. Furthermore, this evaluation focused only on laboratory abnormality trigger alerts incorporated into our clinical decision support system, so our findings may not be applicable to other institutions with different logic-based rule criteria. As such, capturing all events related and unrelated to triggers was not achievable, which precluded calculating other performance measures such as sensitivity and specificity. PPV was used as the performance measure but is influenced by the prevalence of alerts. The standardized approach of utilizing the NCI CTCAE criteria to stratify the severity of potential harm of DRHCs associated with laboratory abnormalities was a strength of this study, but the severity of harm resulting from an actual ADE was not assessed since it was not the primary focus of our investigation. Still, if evaluated, the severity of an ADE may have been mitigated by the pharmacist’s management of the alert. In addition, only one study investigator identified DRHCs and assessed severity, albeit using a validated and standardized approach. Finally, the distribution of alerts for each specific trigger and within each patient population (ICU versus non-ICU) varied significantly, which may limit our ability to make meaningful comparisons of performance between these study groups as well as to develop process improvement strategies to improve alert performance.
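For reference, the performance measures mentioned in the limitations follow the standard definitions below. The symbols are introduced here for illustration and are not used elsewhere in the article: TP denotes alerts confirmed as a DRHC, FP denotes alerts not attributed to a drug, FN denotes drug-related events that generated no alert, TN denotes non-events that generated no alert, and p denotes the prevalence of the condition in the evaluated population.

```latex
\begin{aligned}
\mathrm{PPV} &= \frac{TP}{TP + FP}, \qquad
\mathrm{Sensitivity} = \frac{TP}{TP + FN}, \qquad
\mathrm{Specificity} = \frac{TN}{TN + FP}, \\[4pt]
\mathrm{PPV} &= \frac{\mathrm{Sensitivity}\cdot p}
{\mathrm{Sensitivity}\cdot p + (1 - \mathrm{Specificity})\,(1 - p)}.
\end{aligned}
```

Because only patients who generated an alert were reviewed, TP and FP are observable whereas FN and TN are not, which is consistent with PPV being the only measure reported. The second line shows why PPV depends on prevalence even when sensitivity and specificity are held fixed.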

Conclusion

Surveillance systems utilizing real-time trigger alerts associated with laboratory abnormalities to identify DRHCs may be a viable ADE prevention strategy. Overall, most trigger alerts were associated with a low PPV in identifying DRHCs with slightly better performance in the general ward compared with the ICU patient population. Poor trigger alert performance in identifying drug-induced events may contribute to alert fatigue. Logic-based rule criteria associated with trigger alerts should be continuously re-evaluated and take into consideration differences in various patient populations to improve performance.

Acknowledgments

This original research was presented as an oral abstract at the Society of Critical Care Medicine Annual Congress in February 2018.

Appendix

Appendix 1.

Trigger alerts associated with a high probability of a drug-induced laboratory abnormality.

| Category | Logic-based rule |
|---|---|
| Thrombocytopenia (heparin or LMWH) | Heparin/LMWH, platelets <300, and decrease by >50% |
| Supratherapeutic INR (warfarin and DDI) | Warfarin + interacting drug and INR >3.0 |
| Nephrotoxicity | Nephrotoxic agent, SCr >1, and >30% increase in SCr |
| Hepatotoxicity | ALT >200 and total bilirubin >2 and potentially hepatotoxic agent |
| Rapid INR increase (warfarin dose change) | Warfarin dose greater than or equal to the dose on previous day and INR increase >0.5 in 30 h |
| Rapid INR increase (new warfarin start) | New start warfarin and INR increase >0.5 in 30 h |
| Supratherapeutic phenytoin serum concentration | Corrected phenytoin level >20 |
| Hyperkalemia | K >6 and medication linked to hyperkalemia |
| Thrombocytopenia (nonspecific) | Platelets <30 and medication linked with thrombocytopenia |
| Hyperkalemia (ACE-I or ARB) | K >6 and ACE inhibitor or ARB |
| Neutropenia | WBC <4 and medication linked with neutropenia |
| Metabolic acidosis (nonspecific) | Anion gap >14, pH <7.33, and med linked with acidosis |
| Pancreatitis | Amylase >199 or lipase >350 and med linked to pancreatitis |
| Supratherapeutic digoxin serum concentration | Digoxin level >2, and either Mg <1.5 or K <3.2 |
| Metabolic acidosis (topiramate) | Topiramate and serum bicarbonate <15 |
| Hypertriglyceridemia (propofol) | Propofol and triglycerides >400 |
| Hyponatremia | Na <125 and medication linked with hyponatremia |
| Positive antinuclear antibody test (i.e. lupus) | Positive ANA and medication linked with lupus |
| Metabolic acidosis (zonisamide) | Zonisamide, bicarbonate <16 and anion gap <16 |
| Thrombocytopenia (fondaparinux) | Fondaparinux and platelets <100 |

ACE-I, angiotensin-converting-enzyme inhibitor I; ALT, alanine aminotransferase; ANA, antinuclear antibody test; ARB, angiotensin II receptor antagonist; DDI, drug–drug interaction; INR, international normalized ratio; K, potassium; LMWH, low molecular weight heparin; Mg, magnesium; Na, sodium; SCr, serum creatinine; WBC, white blood cell.
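As an illustration of how one row of Appendix 1 might be expressed as an executable rule, the nephrotoxicity trigger (nephrotoxic agent, SCr >1, and >30% increase in SCr) is sketched below in Python. This is a hedged sketch rather than the rule as deployed: the `PatientSnapshot` structure and the `nephrotoxicity_trigger` function are hypothetical, the appendix does not state units (mg/dL is assumed here), and it does not specify which earlier SCr value serves as the baseline, so the baseline is simply passed in.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PatientSnapshot:
    """Hypothetical inputs for the nephrotoxicity rule."""
    on_nephrotoxic_agent: bool
    scr_baseline: float  # earlier serum creatinine, mg/dL (unit assumed)
    scr_current: float   # most recent serum creatinine, mg/dL (unit assumed)


def nephrotoxicity_trigger(p: PatientSnapshot) -> bool:
    """Nephrotoxic agent AND SCr > 1 AND > 30% increase in SCr."""
    if not p.on_nephrotoxic_agent or p.scr_baseline <= 0:
        return False
    pct_increase = (p.scr_current - p.scr_baseline) / p.scr_baseline * 100
    return p.scr_current > 1.0 and pct_increase > 30


# Made-up patients for illustration only.
rising = PatientSnapshot(True, scr_baseline=0.9, scr_current=1.3)   # ~44% rise
stable = PatientSnapshot(True, scr_baseline=1.0, scr_current=1.2)   # ~20% rise
low_scr = PatientSnapshot(True, scr_baseline=0.6, scr_current=0.9)  # 50% rise, SCr <= 1
no_drug = PatientSnapshot(False, scr_baseline=0.9, scr_current=1.3)

fired = [nephrotoxicity_trigger(p) for p in (rising, stable, low_scr, no_drug)]
```

Only the first patient satisfies all three criteria. Combining a relative change with an absolute floor, as this rule does, is one way of incorporating the magnitude of change discussed in the text.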

Footnotes

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Conflict of interest statement: The authors declare that there is no conflict of interest.

Contributor Information

Mitchell S. Buckley, Department of Pharmacy, Banner University Medical Center Phoenix, 1111 E. McDowell Road, Phoenix, AZ 85006, USA.

Jeffrey R. Rasmussen, Department of Pharmacy, Banner University Medical Center Phoenix, Phoenix, AZ, USA

Dale S. Bikin, Department of Pharmacy, Banner University Medical Center Phoenix, Phoenix, AZ, USA

Emily C. Richards, Department of Pharmacy, Banner University Medical Center Phoenix, Phoenix, AZ, USA

Andrew J. Berry, Department of Pharmacy, Banner University Medical Center Phoenix, Phoenix, AZ, USA

Mark A. Culver, Department of Pharmacy, Banner University Medical Center Phoenix, Phoenix, AZ, USA

Ryan M. Rivosecchi, Department of Pharmacy, University of Pittsburgh Medical Center Presbyterian Hospital, Pittsburgh, PA, USA

Sandra L. Kane-Gill, Clinical Translational Science Institute, University of Pittsburgh School of Pharmacy and Department of Pharmacy, University of Pittsburgh Medical Center, Pittsburgh, PA, USA

References

1. Bates DW, Boyle DL, Vander Vliet MB, et al. Relationship between medication errors and adverse drug events. J Gen Intern Med 1995; 10: 199–205.
2. Bates DW, Cullen DJ, Laird N, et al. Incidence of adverse drug events and potential adverse drug events: implications for prevention. JAMA 1995; 274: 29–34.
3. Cullen DJ, Sweitzer BJ, Bates DW, et al. Preventable adverse drug events in hospitalized patients: a comparative study of intensive care and general ward units. Crit Care Med 1997; 25: 1289–1297.
4. Kane-Gill SL, Jacobi J, Rothschild JM. Adverse drug events in intensive care units: risk factors, impact, and the role of team care. Crit Care Med 2010; 38(Suppl. 6): S83–S89.
5. Stockwell DC, Kane-Gill SL. Developing a patient safety surveillance system to identify adverse events in the intensive care unit. Crit Care Med 2010; 38(Suppl. 6): S117–S125.
6. Kane-Gill SL, Achanta A, Kellum JA, et al. Clinical decision support for drug related events: moving towards better prevention. World J Crit Care Med 2016; 5: 204–211.
7. Cho I, Slight SP, Nanji KC, et al. Understanding responses to a renal dosing decision support system in primary care. Stud Health Technol Inform 2013; 192: 931.
8. Evans RS, Pestotnik SL, Classen DC, et al. Preventing adverse drug events in hospitalized patients. Ann Pharmacother 1994; 28: 523–527.
9. Kirkendall ES, Kouril M, Minich T, et al. Analysis of electronic medication orders with large overdoses: opportunities for mitigating dosing errors. Appl Clin Inform 2014; 5: 25–45.
10. Raschke RA, Gollihare B, Wunderlich TA, et al. A computer alert system to prevent injury from adverse drug events. JAMA 1998; 280: 1317–1320.
11. Kane-Gill SL, Dasta JF, Schneider PJ, et al. Monitoring abnormal laboratory values as antecedents to drug-induced injury. J Trauma 2005; 59: 1457–1462.
12. Kane-Gill SL, LeBlanc JM, Dasta JF, et al. A multicenter study of the point prevalence of drug-induced hypotension in the ICU. Crit Care Med 2014; 42: 2197–2203.
13. DiPoto JP, Buckley MS, Kane-Gill SL. Evaluation of an automated surveillance system using trigger alerts to prevent adverse drug events in the intensive care unit and general ward. Drug Saf 2015; 38: 311–317.
14. Silverman JB, Stapinski CD, Huber C, et al. Computer-based system for preventing adverse drug events. Am J Health Syst Pharm 2004; 61: 1599–1603.
15. Rommers MK, Teepe-Twiss IM, Guchelaar HJ. A computerized adverse drug event alerting system using clinical rules. Drug Saf 2011; 34: 233–242.
16. Rozich JD, Haraden CR, Resar RK. Adverse drug event trigger tool: a practical methodology for measuring medication related harm. Qual Saf Health Care 2003; 12: 194–200.
17. Kane-Gill SL, Visweswaran S, Saul MI, et al. Computerized detection of adverse drug reactions in the medical ICU. Int J Med Inform 2011; 80: 570–578.
18. Harinstein LM, Kane-Gill SL, Smithburger PL, et al. Use of an abnormal laboratory value–drug combination alert to detect drug-induced thrombocytopenia in critically ill patients. J Crit Care 2012; 27: 242–249.
19. Naranjo CA, Busto U, Sellers EM, et al. A method for estimating the probability of adverse drug reactions. Clin Pharmacol Ther 1981; 30: 239–245.
20. Kramer MS, Leventhal JM, Hutchinson TA, et al. An algorithm for the operational assessment of adverse drug reactions. I. Background, description, and instructions for use. JAMA 1979; 242: 623–632.
21. Jones JK. Definition of events associated with drugs: regulatory perspectives. J Rheumatol 1988; 17: 14–19.
22. National Cancer Institute. Cancer therapy evaluation program, http://ctep.cancer.gov/protocolDevelopment/electronic_applications/ctc.htm (accessed 24 July 2017).
23. Wong A, Amato MG, Seger DL, et al. Evaluation of medication-related clinical decision support alert overrides in the intensive care unit. J Crit Care 2017; 39: 156–161.
24. Kane-Gill SL, O’Connor MF, Rothschild JM, et al. Technologic distractions (Part 1): summary of approaches to manage alert quantity with intent to reduce alert fatigue and suggestions for alert fatigue metrics. Crit Care Med 2017; 45: 1481–1488.
25. Kane-Gill SL, Dasta JF, Buckley MS, et al. Clinical practice guideline: safe medication use in the ICU. Crit Care Med 2017; 45: e877–e915.