Abstract

Research in brain-computer interfaces has achieved impressive progress towards implementing assistive technologies for restoration or substitution of lost motor capabilities, as well as supporting technologies for able-bodied subjects. Notwithstanding this progress, effective translation of these interfaces from proof-of-concept prototypes into reliable applications remains elusive. As a matter of fact, most current BCI systems cannot be used independently for long periods of time by their intended end-users. Multiple factors that impair achieving this goal have already been identified. However, it is not clear how they affect overall BCI performance or how they should be tackled. This is worsened by a publication bias whereby only positive results are disseminated, preventing the research community from learning from its errors. This paper is the result of a workshop held at the 6th International BCI Meeting in Asilomar. We summarize here the discussion on concrete research avenues and guidelines that may help overcome common pitfalls and make BCIs a useful alternative communication device.

Keywords: BCI, EEG, limitations, user centered design, user training, signal processing, artifacts, publication bias

Introduction

Brain-Computer Interfaces (BCIs) have proven promising for a wide range of applications, ranging from communication and control for motor-impaired users to gaming targeted at the general public, real-time mental state monitoring and stroke rehabilitation, to name a few [1, 2]. Despite this potential, BCIs are scarcely used outside laboratories for practical applications. Several publications and reviews have pointed out some of the roadblocks faced in the translation of BCIs, using either invasive or non-invasive techniques, towards practical applications [3, 4]. These include safety and biocompatibility of invasive approaches, as well as wearability and ergonomics of non-invasive recording techniques. In the latter case, the main reason preventing electroencephalography (EEG)-based BCIs from being widely used is arguably their poor usability, which is notably due to their low robustness and reliability, as well as their often long calibration and training times. BCIs based on P300 or Steady-State Visual Evoked Potentials (SSVEP), however, generally require shorter training periods [9, 10].

As their name suggests, BCIs require communication between two components: the user’s brain and the computer. In particular, users have to volitionally modulate their brain activity to operate a BCI, and the machine has to decode the resulting patterns using signal processing and machine learning. So far, most research efforts have addressed the reliability issue of BCIs by focusing on command decoding only [5, 6, 7, 8]. While this has contributed to increased performance, improvements have been relatively modest: correct mental command decoding rates are still relatively low, and a reportedly significant number of study participants are unable to achieve satisfactory BCI control [1, 9, 10, 11]. Thus, the reliability issue of BCI is unlikely to be solved by focusing solely on command decoding [12, 13].

We argue that limiting factors on the successful translation of BCI systems stem from inadequate practices commonly followed by the research community. This paper summarizes the discussions on this topic at the workshop “What’s wrong with us? Roadblocks and pitfalls in designing BCI applications”, held on May 30th 2016 at the 6th International Brain-Computer Interface Meeting in Asilomar, CA, USA. The workshop was attended by 35 people of different backgrounds who identified methodological aspects worth improving. Although we mainly discuss non-invasive approaches here, most of the topics addressed are relevant to the BCI research field as a whole. The paper is organized around four main topics, each of which represents a major component of any closed-loop BCI system.

(i) End users. So far, little focus has been put on the inclusion of user requirements when designing BCI solutions. The need for tailoring the system to a specific end-user (or end-user group), of course, requires additional resources, which limits the possibility of successful transfer into marketable applications. However, design and evaluation of BCI systems should go beyond the “plain” decoding of neural signals and should take into account all components of the brain-machine interaction, including, but not limited to, feedback, human factors and learning strategies [12, 13, 14].

(ii) Feedback and user training. BCI researchers seem to neglect the fact that these systems consist of a closed loop between the human and the machine. The human is typically considered as a static, compliant entity that always performs what the experimenter asks for in a precise and consistent way. In reality, the subject’s behavior is determined by his/her understanding of the task, abilities, motivation, etc. Therefore, both feedback and training instructions should be designed so as to maximize the interplay between them and help the user learn a proper strategy to achieve good BCI control skills.

(iii) Signal processing and decoding. Real end-user application environments are much noisier and more dynamic than well-controlled lab environments or BCI calibration situations, and they provide multi-sensory impressions to the user, leading to drastic changes in EEG signals. As a result, lab-based BCIs potentially fail in real-life contexts.

(iv) Performance metrics and reporting. There is a lack of clear metrics to assess the effective performance of a BCI system. It is generally acknowledged that these metrics should comprise both machine and human factors (e.g. decoding accuracy and usability), but it is not clear how to weigh them. This in turn means that it is also not clear how to report BCI results explicitly, which prevents the field from having a scientific discussion based on proper reporting and interpretation of results.

These topics and related pitfalls and challenges are further elaborated below. The aim of this paper is to start a discussion on strategies to improve current research and evaluation practices in BCI research. The vision is that open discussion on pitfalls and opportunities eventually leads to the development of systems that end-users can operate independently.

End-user-related issues

Several roadblocks on the way to transferring BCI technology to end-users’ homes were addressed and discussed in our workshop. We suggest improvements in the areas of end-user selection and BCI system selection, and discuss the potential of a common BCI end-user database. Furthermore, we point out the circumstances under which the user can be successfully included in the BCI application design process and thereby contribute to the integration of human factors in the usability of a BCI application (e.g. by following a user-centered design, UCD).

Identifying a potential BCI end-user

The choice of a potential candidate for using a BCI-based device is not trivial, since not every end-user is suited per se. Kübler and colleagues stated several internal and external preconditions for successful BCI use [15]: a potential candidate should (1) be interested in and (2) in need of assistive technology, (3) be able to give informed consent, (4) have the cognitive abilities required to control the BCI system and (5) be supported by family members or caregivers. Even properly judging an end-user’s interest might be challenging (both for his/her relatives and caregivers and for outsiders like us as researchers). We might be tempted to believe that the enthusiasm we experience after implementing a working BCI system should also be felt by the end-user. But even though generally high satisfaction with the currently available BCI systems was reported, a clear demand for BCI improvements was also articulated by end-users [16] and caregivers [17]. Some of these demands concern technological and practical aspects such as usability and ergonomics of the setup. For instance, the use of electrode cables and electrode gel as well as the general design of the BCI cap have been criticized. Furthermore, end-users reported not being able to use a BCI system on a regular basis because they were not supported by family members or caregivers [16]. Several reports of end-user outcomes for BCI [18-22] show that successful translation of BCI systems depends not only on technology-related matters but also on personal, transactional, and societal challenges. Some of these are imposed by the healthcare system, leading to a lack of external support for potential BCI end-users. Including healthcare providers in the BCI application design process might raise awareness of end-users’ needs. Additionally, their perspective might be valuable for identifying potential BCI end-user groups.

On the other hand, there are isolated reports of successful BCI system transitions to end-users’ homes [23-25]. End-users in these studies not only fulfilled the internal prerequisites for successful BCI use, but were also supported by caregivers or family members and thereby shared beneficial external preconditions. Additionally, they had something else in common: incentives to use the BCI were tremendously high, as the BCI system enabled these end-users to do something that another assistive technology would not have allowed. While the end-user reported by Sellers and colleagues [24] ran his research lab using the BCI system for up to 8 hours a day, the end-user reported by Holz and colleagues used the system for artistic self-expression, which gave her back a lost communication channel. Therefore, our challenge as researchers is to create BCI applications that really increase incentives for potential BCI end-users.

Identifying a potential BCI system

As the choice of a BCI system that fits the end-user might be time consuming and frustrating for the end-user, several approaches feasible at bedside were suggested within the BCI community to facilitate this process. These approaches can be sub-divided into physiological and behavioral approaches.

Physiological approaches to predict BCI performance with one specific BCI system

Finding markers that predict future performance may help to rapidly screen suitable users for a given BCI system. A neurophysiological predictor within the sensorimotor rhythm (SMR), based on recordings of two minutes of resting state data, was found to be significantly correlated (r=.53) with SMR BCI performance [26]. Gamma activity during a one-minute resting state period also showed significant correlations with later motor imagery performance [27]. The resting state EEG network (two-minute assessment) can be a predictor for successful use of an SSVEP-based BCI [28]. However, these studies have only involved able-bodied subjects. Further research with subjects with motor impairments is required to evaluate the transferability of these results.

One challenge to be faced is the assessment of resting state activation with a reduced number of electrodes and in clinical samples to realize bedside data assessment. To achieve this goal, advice from neuroscientists might be helpful as this interdisciplinary approach could facilitate choosing a reliable screening method. Recent works both with able-bodied subjects and one end-user with locked-in syndrome have proposed methods to assess performance variation within a session and during runtime. They may help to better assess the suitability of a given BCI approach, and to provide user-specific support during the training periods [29,30].

Concerning the P300 BCI, resting state heart rate variability (HRV), which is an indicator of self-regulatory capacity, was found to explain 26% of the P300 BCI performance variance [31]. Other screening paradigms rely on standard neuropsychological paradigms that assess the same EEG correlates the BCI system relies upon. One easily applicable paradigm is the oddball paradigm [32]. P300 amplitudes in the oddball paradigm measured at frontal locations were found to correlate up to r=.72 with later P300 BCI performance [33]. P300 amplitude responses to oddball paradigms were also successfully used to decide which stimulation modality (auditory, visual or somatosensory) would most likely lead to the best classifiable results [34].
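The predictors above are reported either as a correlation coefficient (r=.53, r=.72) or as a share of explained variance (26%). To compare them on one scale, the following minimal Python sketch converts between the two, assuming a single linear predictor, in which case the explained variance equals r squared. The function names and the small data set are hypothetical and serve only as illustration; they are not data from the cited studies.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def explained_variance(r):
    """Share of variance explained by a single linear predictor with correlation r."""
    return r * r


def equivalent_r(share):
    """Magnitude of the correlation that corresponds to a given explained-variance share."""
    return math.sqrt(share)


# Values quoted in the text, put on a common scale.
smr_share = explained_variance(0.53)      # resting-state SMR predictor
oddball_share = explained_variance(0.72)  # frontal oddball P300 amplitude
hrv_r = equivalent_r(0.26)                # resting-state HRV predictor

# Hypothetical screening data (made up for illustration only).
oddball_amplitude_uv = [2.1, 3.4, 1.8, 4.0, 2.9, 3.7]
bci_accuracy = [0.62, 0.80, 0.58, 0.85, 0.66, 0.90]
r_example = pearson_r(oddball_amplitude_uv, bci_accuracy)
```

Under this assumption, r=.53 corresponds to roughly 28% of explained variance and r=.72 to roughly 52%, while 26% of explained variance corresponds to a correlation magnitude of about .51.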

Behavioral approaches to predict BCI performance with one specific BCI system

The assessment of behavioral predictors of BCI performance is usually based on either questionnaires or button-press response tasks. Concerning psychological predictors of BCI performance, the willingness and ability to allocate attention to a task was found to explain 19% of the variance when using an SMR BCI [35]. The ability to kinesthetically imagine motor movements was also significantly correlated with later SMR BCI performance [36]. Finally, the user’s spatial abilities, as measured using mental rotation tests, were found to correlate with mental-imagery BCI performance, for both motor and non-motor imagery [37]. In P300 BCI use, one specific component of attention, the ability for temporal filtering, was found to predict P300 BCI performance in people diagnosed with Amyotrophic Lateral Sclerosis (ALS) [38]. Motivation, as measured with a visual analogue scale, was reported to explain up to 19% of the variance in P300-based BCI applications [39, 40].

In conclusion, the identification of potential BCI performance predictors has not been exhaustively investigated so far [7]. A standardized screening procedure including easily applicable instruments and methods should be agreed upon. One such procedure has already been proposed for the identification of potential BCI users for a rapid serial visual presentation keyboard [41]. If researchers could contribute collaboratively and across disciplines to the identification of reliable predictor variables, end-users might quickly be equipped with the BCI system best suited to their individual needs.

Advantages of an end-user database

Another step towards identifying methods for fast and reliable BCI system selection might be sharing a common database [42, 43] in which selected information about end-users and the BCI systems that fit them is merged. Possible information of interest might be diagnoses and assessed predictor variables (see above). An end-user database might also be based on level of function rather than diagnosis as a primary descriptor. Similar to the International Classification of Functioning, Disability and Health (ICF), which has been developed for specific patient groups [44, 45, 46], an end-user database could describe functional limitations and include the full range of diagnoses that lead to needs for potential BCI use.

Furthermore, experience reports by caregivers, BCI experts and people with other backgrounds who work with an end-user might add crucial information, making it possible to choose the best BCI system for a user by evaluating previous success stories. Additionally, reports of dead ends, once detected, could help others avoid wasting time and effort on a less efficient BCI-based approach.

Of course, ethical issues must be taken into account: highly detailed reports about end-users, including their diagnoses and their BCI experiences, might threaten end-users’ anonymity, which must be prevented. However, once a sufficiently large and meaningful database has been gathered, for which criteria must be agreed upon, data mining approaches can be used to support the decision-making process. Not only physiological data (brain activation) but also behavioral data (questionnaire data) could be integrated and used to support the best possible fit between end-users and specific BCI systems. To reach this goal, collaboration between BCI researchers, clinicians, caregivers and family members all over the world is crucial, as are common efforts to decide which information should be shared and how. This would require standard protocols and procedures to collect physiological or behavioral data, such that they can be compared across research and clinical groups, EEG recording devices, or BCI processing pipelines, for instance. Alternatively, this could be an opportunity to design data analysis tools dedicated to comparing BCI performance or EEG signals collected with different devices or BCI processing pipelines.

The user-centered design (UCD) and why it is rarely implemented

Once a BCI user and the appropriate BCI system have been selected, the system still needs to be adjusted to the end-user’s needs, as otherwise long-term use remains elusive. One procedure that has been successfully implemented [23] is the user-centered design, UCD [14]. The end-user should be involved as early as possible by being asked to express the needs and requirements that the BCI-based assistive technology (AT) is supposed to fulfill. Methods to elicit involvement and feedback from potential BCI users have been proposed [47]. End-users should be encouraged to actively influence the adjustment of their BCI system. First trials of using the technology are to be evaluated, and the technology or its features should be adapted to the user’s requirements. This process is to be iterated such that, by trying new solutions and re-adjusting the system, the end-user receives an AT device that truly supports him or her in daily life. Another key factor suggested by UCD is the multidisciplinary approach. If an end-user is in a medical condition requiring professional care, close interaction between family, caregivers and medical staff tremendously facilitates the process of transferring an AT to the end-user’s home: not only the needs and requirements of the end-user can be taken into account, but the device can also be adjusted to the needs and requirements of those who support the end-user. Furthermore, a multidisciplinary approach would allow for improvement of end-user training, for example by taking into account experts’ opinions on how to create learning paradigms that promote success but also facilitate BCI use for the end-user (see next section).

To mention just one example of UCD-based development: the aim of the FP7 ABC project was to develop an interface for individuals with cerebral palsy (CP) that improves independent interaction, enhances non-verbal communication and allows expressing and managing emotions [48]. As described above, as a first step the potentially most useful BCI system was identified [49]. Then, based on the end users’ preferences and demands, a BCI-operated communication board was implemented [50]. To provide a better training experience (see next section) and to educate end users on how to use BCI technology, a game-based training environment was implemented [51]. BCI-based communication only works when end users understand the working principles and the relevance of their own active involvement. Failing to provide clear and detailed information to end users is therefore a major pitfall. Finally, cooperative/competitive elements were added to the game-based user training [52]. Users can now train together with friends rather than alone in front of the computer. Each developmental step was discussed with end users and caregivers. Development, however, was not linear. Advances in the end users’ understanding of BCI technology led to the redesign of several aspects of the system over and over again.

A crucial point to be addressed when applying UCD is the resources it requires. So far, only rare cases of long-term independent BCI use have been reported [23-25], which might be due to the limited resources available. Not only is the hardware expensive; experts also need time to adjust the system properly. Moreover, after a UCD-based transfer of a BCI system to an end-user’s home, we can report results for this one single case only. Thus, awareness of the value of case studies must be increased. Even though the claim of generalizability of results is a valid one for fundamental research, it cannot be transferred to a BCI context in which, by definition, every end-user might present different needs and requirements [53]. As a consequence, researchers are faced with the conundrum of how to permanently refine the system depending on the (changing) conditions of the user, given the available resources. The truth is that currently few research groups can afford such a process, due to the lack of funding programs for this type of long-term, high-risk research, as well as little interest from publishing venues, as outcomes appear to be incremental. One alternative to improve in this respect is to develop better, formal strategies to support the adaptation of the interface to the user. These strategies can be based on the typification of the experience from previous studies, along with the characterization of the users for which they worked. This process can identify which parts of the process can or cannot rely on automatic processes (using strategies for co-adaptation, online performance evaluation, shared control, etc.) and devise guidelines for evaluating potential design alternatives. Finally, the engagement of other actors besides the research community, specifically focused on translational aspects of the technology, is essential for creating the conditions for successful design of BCI solutions.

Feedback and training

BCI control is known to be a skill that must be learned and mastered by the user [54, 55]. Indeed, a user’s BCI performance (i.e., how accurately his/her mental commands are decoded) improves with practice, and BCI training leads to a reorganization of brain networks, as with any motor or cognitive training [54, 55]. Therefore, to ensure a reliable BCI, users must learn to successfully encode mental commands in their brain signals, with a high signal-to-noise ratio. In other words, they should be trained to produce neural activity patterns that are as stable, clear and distinct as possible. With poor BCI command encoding skills, even the best signal processing algorithms will not be able to decode commands correctly. Unfortunately, how to train users to encode these commands has been rather scarcely studied so far. As a consequence, the best way to train users to successfully encode BCI commands is still unknown [1, 7, 54]. Worse, as we argue in this paper, current user training approaches in BCI are actually inappropriate, and most likely one of the major causes of poor BCI performance and high BCI deficiency rates. In the following, we present recent evidence explaining why they are inappropriate, and present open research questions that would address this fundamental limitation.

Current BCI user training approaches are inappropriate

One common approach used in non-invasive BCI training consists of asking users to perform mental imagery tasks, i.e., the kinesthetic imagination of body limb movements [56]. These tasks are used to encode commands (e.g., imagining left or right hand movements to move a cursor in the corresponding direction). Meanwhile, users are provided with some visual feedback about the mental command decoded by the BCI [54]. However, currently used standard BCI training tasks and feedback are extremely simple, based on heuristics, and provide very little information. Typical BCI feedback indeed often consists of a bar displayed on screen whose length and direction vary according to the EEG signal processing output and the decoded command. Is that really the best way we can train our users to gain BCI control? To answer that question, we have studied the literature from the fields of human learning, educational science and instructional design [11, 57]. These fields have studied, across multiple disciplines (e.g., language learning, motor learning or mathematical learning), the principles and guidelines that can ensure efficient and effective training approaches. We have then compared such principles and guidelines to the training approaches currently used in BCI. In short, we have shown that standard BCI user training approaches do not satisfy general human learning and education principles ensuring successful learning [11, 58, 59]. Notably, typical BCI feedback is corrective only, i.e., it only indicates to users whether they performed the mental tasks correctly. In contrast, human learning principles recommend providing explanatory feedback by indicating what was right or wrong about the task performed [58]. BCI feedback is also usually unimodal, based only on the visual modality, whereas exploiting multimodality is known to favor learning [60].
Moreover, training tasks should be varied and adapted to the user’s skills, traits (personality or cognitive profile) and states [58]. They should also include self-paced training tasks, to let the user explore the skills he/she has learnt. BCI training tasks, in contrast, are fixed over time and across users, synchronous, and repeated identically during training. Finally, and intuitively, training environments should be motivating and engaging, whereas standard BCI training environments may not favor engagement and appear to be boring after prolonged use, as often informally reported by users. As mentioned in the previous section, the design of feedback and training tasks may also take into account the user’s preferences and psychological profile to maximize his/her motivation. Note that an engaging environment does not necessarily have to be a visually appealing but complex and charged visual environment (like most modern video games), as this may overload the user. Unfortunately, there are many other training principles and guidelines not satisfied by classical BCI training [11, 57].

We have also shown that in practice, standard feedback and training tasks used for BCI are suboptimal for teaching even simple motor tasks [61]. In particular, we studied how people could learn to do two simple motor tasks using the same training tasks and feedback as those given to motor imagery (MI) BCI users. More precisely, we asked subjects to learn to draw a triangle and a circle on a graphic tablet (the correct size, angles and drawing speed of these two shapes being unknown to the subject) such that the shapes could be recognized by the system, using a synchronous training protocol and an extending bar as feedback, as in standard MI-based BCI training. Our results show that most subjects (out of N=53) improved with this feedback and practice (i.e., the shapes they drew were increasingly accurately recognized by the system), but that 17% of them completely failed to learn how to draw the correct shapes, despite the simplicity of the motor tasks [62]. This suggests that the currently used training protocols may be suboptimal for teaching even a simple skill. As such, and although this is not definitive proof, such protocols could be in part responsible for the poor BCI control achieved by some users, and thus should be improved.

Therefore, both theory and practice suggest that current BCI training approaches are likely to be suboptimal. This is bad news for current BCI systems and users, but good news for BCI research and the future of BCI: it means there is a lot of room for improvement, and many exciting and promising research directions to explore, as described in the following section.

Open research questions in BCI user training

As shown in the previous section, evidence converges towards indicating that current BCI user training approaches are inappropriate. A promising direction to change them in a relevant way would be to make them satisfy principles and guidelines from human learning theories and educational science.

At the level of the training tasks we propose to our BCI users, educational science recommends providing varied, adaptive and adapted training tasks. This raises a number of currently unanswered questions. How can we design varied and relevant training tasks (e.g., by taking into account the end user’s objectives and needs, as mentioned in UCD above)? What should these tasks train? In order to design adapted training tasks, we should also find out how the user’s profile impacts BCI learning and performance. Some recent research results go in that direction and are worth being studied further [35, 62, 63, 64]. Designing adaptive training tasks also requires adjusting the sequence of training tasks to each user over time. How can this be done so as to ensure efficient and effective learning? On this topic, the BCI community could learn from the field of Intelligent Tutoring Systems (ITS), which are tools specifically designed for digital education, to find an optimal sequence of training exercises for each user, depending on this user’s skills, traits and states [62].

At the level of the feedback provided, educational science recommends exploiting multimodal feedback and providing feedback that is explanatory rather than purely corrective, as it has been so far for BCI. This raises the question of whether, and how, we can exploit other feedback modalities (e.g., auditory, tactile) for training. Recent results show that complementary tactile feedback can enhance motor imagery BCI performance, for instance [65, 66], which confirms this is a promising direction to explore. Designing explanatory feedback for BCI is currently very challenging given the lack of fundamental knowledge on motor imagery and on BCI feedback [67]. For instance, why are mental commands sometimes erroneously recognized? Which feedback content should be provided to the user? Which feedback presentation should be used to represent this content? These are crucial research questions that the BCI community will have to answer to design appropriate feedback for BCI, and thus to efficiently and effectively train our BCI users.

Finally, at the level of the training environment, improving BCI training requires designing motivating and engaging training environments. How can this be done? How can BCI users be kept motivated and engaged in the training? Some recent works showed the positive impact of video games and virtual reality on BCI training and performance [51, 52, 68]. There is now a need to understand why this is so, and to formalize these approaches to ensure that the next generation of BCI training approaches will be motivating and engaging at all times, for all types of users.

From a non-technical and non-scientific point of view, all this research also depends on how studies on BCI user training are reported. Indeed, many different training tasks, feedback types and environments could be tested and explored. Not all of them will lead to improved BCI training efficiency or improved BCI performance. However, it is essential to know what works and what does not work to deepen our knowledge of BCI training. It would also be really inefficient, especially considering how costly (in time and money) BCI experiments on user training can be, if several research groups were to try the same experiments without knowing that other groups had already tried them and failed. This all points to the necessity of publishing negative results in BCI research, to ensure efficient research and access to all available relevant knowledge.

Signal processing and decoding

As mentioned before, research in signal processing and decoding algorithms has played a significant role in the field of BCI in the past two decades. First attempts to gain BCI control were based on operant conditioning [69]. The BCI decoding algorithm was pre-defined and users had to learn by trial and error to modulate brain rhythms (for example, by controlling a cursor to hit targets). By introducing machine learning (ML) and pattern recognition (PR) methods, the burden of learning was shifted towards the machine. The parameters of the BCI decoding algorithm were adapted to the user’s individual EEG signatures [70]. PR refers to methods that translate characteristic EEG features (patterns) into messages. ML refers to methods that optimize feature and PR parameters. Following the guiding principle “let the machine learn”, some groups have applied a number of ML and PR methods [6, 71]. Some improvements in gaining BCI control have been made. However, the goal of yielding good control for everyone was not achieved. Today, a co-adaptive approach is most common. First, the PR methods are trained to detect user-specific patterns. Then feedback training, based on the output of the trained PR model, is used to reinforce the EEG pattern generation. By repeated application of the two steps, the user and the machine mutually co-adapt. More recent approaches include online co-adaptation and transfer learning [72-77]. In the former case, BCI model parameters are adapted and iteratively updated during feedback training. In the latter case, models are transferred between users and/or between days.
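The idea of iteratively updating model parameters during feedback training can be illustrated with a deliberately simplified Python sketch. It is not one of the methods of the cited works: it assumes a single scalar feature, two classes, known cue labels during training, and an exponential moving average of the class means. The class `AdaptiveMeanClassifier`, the function `simulate` and all numeric settings (drift, noise, learning rate) are hypothetical choices made for illustration.

```python
import random


class AdaptiveMeanClassifier:
    """Two-class nearest-mean classifier on one feature, with optional online updates."""

    def __init__(self, mean0, mean1, learning_rate):
        self.means = [mean0, mean1]
        self.learning_rate = learning_rate

    def predict(self, x):
        # Assign the sample to the class whose current mean is closest.
        return 0 if abs(x - self.means[0]) <= abs(x - self.means[1]) else 1

    def update(self, x, label):
        # Exponential moving average of the mean of the cued class.
        lr = self.learning_rate
        self.means[label] = (1.0 - lr) * self.means[label] + lr * x


def simulate(n_trials=400, seed=0):
    """Compare a fixed and an online-adapted classifier on a slowly drifting feature."""
    rng = random.Random(seed)
    static = AdaptiveMeanClassifier(0.0, 2.0, learning_rate=0.0)
    adaptive = AdaptiveMeanClassifier(0.0, 2.0, learning_rate=0.1)
    static_hits = 0
    adaptive_hits = 0
    for t in range(n_trials):
        drift = 3.0 * t / n_trials            # assumed slow shift of both class means
        label = rng.randint(0, 1)             # cued mental task for this trial
        x = rng.gauss(2.0 * label + drift, 0.5)
        static_hits += static.predict(x) == label
        adaptive_hits += adaptive.predict(x) == label
        adaptive.update(x, label)             # adapt only after predicting
    return static_hits / n_trials, adaptive_hits / n_trials


static_accuracy, adaptive_accuracy = simulate()
```

In this toy setting the fixed classifier degrades as the feature distribution drifts away from the calibration data, whereas the adapted one keeps tracking it. Real EEG features are multi-dimensional, and the user adapts as well, which this sketch does not model.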

The above discussion mostly applies to BCIs that are based on the detection of changes in spontaneous EEG. PR performance in BCIs that are based on the detection of evoked potentials (EP) is robust. Since EP signatures are time-locked to visual, auditory or sensorimotor stimuli, increased detection performance can be achieved. In the vast majority of healthy users, detection rates higher than 90% are achieved with SSVEP in less than 30 minutes [9]. The performance, however, drops considerably when moving out of the lab and into end users’ homes [49]. Some studies have shown EP-based BCIs that yield good performance with subject-independent classifiers, i.e., without any calibration for a new user [78, 79]. However, so far these approaches have mainly been tested in offline studies and with healthy users only. It is therefore still unknown whether these results generalize to end users. Moreover, performance is still better when exploiting subject-specific data and user training, even for P300-based BCIs [79, 80].

Significant improvement in performance was achieved by using co-adaptation. However, fundamental issues of BCI learning were not tackled. ML and PR were mostly treated separately, without considering all components in the BCI feedback loop [12, 13]. As a matter of fact, linear time-invariant models are typically used to translate non-stationary and inherently variable EEG signals. This means that BCI models may perform well for limited periods of time (BCI experiments typically do not take more than one hour), but not for extended or 24/7 use.

Statistical PR methods such as linear discriminant analysis (LDA) or support vector machines (SVMs) are most commonly used [6]. Feature extraction and decoding parameters are optimized based on collected EEG data. Since data collection is time consuming, optimization is typically based on a limited amount of data. It is therefore essential to estimate how well the selected models generalize. Methods such as regularization, shrinkage or cross-validation may prevent overfitting to the data [81-84]. Overfitting means that PR models memorize the training data. Due to EEG non-stationarity, this may lead to suboptimal performance on unseen data. Another important prerequisite is to select PR models based on the properties of the selected EEG features: the probability density function of the features should match the one assumed by the PR framework for optimal performance. For example, using power spectral density (PSD) estimates with LDA will likely fail, since LDA assumes normally distributed data, which PSDs usually are not. Computing the logarithm of the PSD features makes them more normal-like, thus increasing LDA classification performance. This leads to the most crucial aspect: features. Optimal performance requires the proper choice of features. For instance, ERP- and MRCP-based BCIs typically reach better performance when using time-domain features, whereas SSVEP and mental imagery-based BCIs should use PSD or similar features [85, 86, 87]. Preprocessing and decoding algorithms should also be selected depending on the amount of available training data and the number of EEG channels [85, 87]. Typically, the more training data, the more complex the algorithms can be. For multi-channel mental imagery-based BCIs, a very common pre-processing approach is the common spatial pattern (CSP) algorithm and its evolutions [85, 88, 89].

Despite recent improvements in feature extraction and preprocessing algorithms (see [71] for a review), there is still a large number of users who are not able to control a BCI (BCI inefficiency), at least in the short term, and there is not enough experience with long-term training. Which features are most informative and lead to enhanced performance? There is no answer to this question at this time. More basic research and a better understanding of brain functioning are required to be able to answer it. Some attempts to enhance the interpretability of brain oscillations are already ongoing (e.g., [90, 91]).
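
The effect of the logarithm on power features, mentioned above, can be checked on surrogate data. The following sketch is an illustration under simplifying assumptions rather than an analysis of real EEG: each "trial" is a short segment of white Gaussian noise, its power is the mean of the squared samples, and sample skewness is used as a rough indicator of departure from a normal distribution. The function names and the trial and sample counts are hypothetical.

```python
import math
import random


def skewness(values):
    """Sample skewness (third standardized moment); 0 for a symmetric distribution."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    return m3 / m2 ** 1.5


def surrogate_power(n_trials=5000, n_samples=8, seed=1):
    """Power of short white-noise segments (assumption: a stand-in for band power)."""
    rng = random.Random(seed)
    return [
        sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(n_samples)) / n_samples
        for _ in range(n_trials)
    ]


power = surrogate_power()
log_power = [math.log(p) for p in power]

skew_raw = skewness(power)      # power values are right-skewed
skew_log = skewness(log_power)  # log-power is closer to symmetric
print("skewness of power:", skew_raw)
print("skewness of log-power:", skew_log)
```

On such surrogate data the raw power values have a clearly positive skewness, while the log-transformed values are closer to symmetric, which is in line with the normality assumption of LDA. Real EEG band power depends on filtering, window length and the recording itself, so the size of the effect will differ.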

Obviously, BCI end users who are able to reliably generate EEG patterns achieve higher PR performance. Typical mental tasks used to encode messages for imagery-based BCIs are combinations of hand and foot motor imagery. It turns out that a user-specific combination of “brain-teaser” tasks, i.e., tasks that require problem-specific mental work (e.g., mental subtraction or word association), and “dynamic imagery” tasks (e.g., motor imagery or spatial navigation) significantly enhances BCI performance in healthy users [92-93] as well as in users with disabilities [94-95]. In the latter case, binary classification accuracy was up to 15% higher when compared to a classical motor imagery task combination [94]. These results again emphasize the need to consider the different components in the BCI feedback loop and their interplay.

Another important aspect that affects PR and ML is contamination by artifacts. Artifacts are interference signals that share some of the characteristic features of EEG and can produce misleading EEG signals or destroy them altogether. When developing BCIs, one has to take care that cortical signals, and not artifacts, are used for communication and control [96-98]. Muscle artifacts, for example, are highly correlated with the user’s behavior and have much higher amplitudes than EEG. This means that they are also easier to detect and may affect PR performance. If the aim is to establish communication for an end user and the muscular activity is voluntarily generated, then this approach may be suitable. However, the nature of the signal used for control should be clearly identified by the developers: such a communication device does not qualify as a BCI if no brain activity is used; alternatively, it may correspond to a hybrid BCI if both muscle and EEG signals contribute to control [99-101]. Deciding whether a signal is an artifact or not is itself difficult, and the labeling and characterization of artifacts is one major issue. Often, the performance of artifact removal algorithms is derived from comparisons to human ratings (e.g., [97, 102]). Scorings, however, may vary to a large extent between human raters. Finding a common definition and elaborating scoring guidelines may help in creating an artifact database that serves as a benchmark for algorithm validation. One idea is to set up a web-based artifact-scoring tool where experts from all over the world can score EEG signals and contribute to the building of such a comprehensive database. However, as said before, not only EEG data and scoring information should be shared, but also source code and protocols. Only that would allow an open and transparent validation of new methods and lead to intense scientific discussion.
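
How much two human scorers disagree can be quantified before their ratings are used as a reference. One common choice, which is our suggestion here and not a method prescribed by the cited works, is Cohen's kappa: the observed agreement corrected for the agreement expected by chance. The sketch below assumes that two raters have labelled the same EEG segments (1 = artifact, 0 = clean); the ratings are invented for illustration.

```python
def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same, non-empty set of items")
    n = len(rater_a)
    labels = set(rater_a) | set(rater_b)
    # Fraction of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected if both raters labelled independently at their own rates.
    expected = sum(
        (rater_a.count(label) / n) * (rater_b.count(label) / n) for label in labels
    )
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical scorings of ten EEG segments (1 = artifact, 0 = clean).
scorer_1 = [1, 1, 0, 0, 1, 0, 0, 0, 1, 0]
scorer_2 = [1, 0, 0, 0, 1, 0, 1, 0, 1, 0]
kappa = cohens_kappa(scorer_1, scorer_2)
print("Cohen's kappa:", kappa)
```

In this invented example the raters agree on 8 of 10 segments, but since 52% agreement would be expected by chance, kappa is only about 0.58. Reporting such a value alongside an artifact database would make the reliability of its labels explicit.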

Performance metrics and reporting

Another pitfall that hinders the advancement of BCI technologies lies in the methods used to assess the quality of the developed systems. To a large extent, the research community has focused on evaluating the performance of the decoding engine, mainly applying PR and ML performance metrics. A widely used metric is the accuracy of the classifier (true positive rate, TPR; see Footnotes). Other metrics also take into account the effect of misclassifications (false positive rate, FPR), the specificity of the decoder (1-FPR), and the true and false negative rates (TNR and FNR, respectively) [103]. Metrics inspired by information theory have also been proposed; they treat the BCI as a communication channel between the brain and the controlled device [104]. However, these metrics have limitations, as has already been pointed out in previous works [105-108].
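
For concreteness, the sketch below computes the rates named above from the four cells of a binary confusion matrix, together with one information-theoretic measure. For the latter we assume the widely used bits-per-selection formula attributed to Wolpaw and colleagues, B = log2(N) + P log2(P) + (1 - P) log2((1 - P)/(N - 1)); whether this is the exact metric meant by the cited work is an assumption on our part. Function names and counts are hypothetical.

```python
import math


def binary_metrics(tp, fp, tn, fn):
    """Standard rates derived from a binary confusion matrix."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "tpr": tp / (tp + fn),          # sensitivity, recall, hit rate
        "fpr": fp / (fp + tn),
        "specificity": tn / (fp + tn),  # equals 1 - FPR (true negative rate)
        "fnr": fn / (tp + fn),
    }


def bits_per_selection(n_classes, accuracy):
    """Bits per selection, assuming equiprobable classes and uniform errors."""
    if n_classes < 2 or not 0.0 <= accuracy <= 1.0:
        raise ValueError("need n_classes >= 2 and accuracy in [0, 1]")
    bits = math.log2(n_classes)
    if accuracy > 0.0:
        bits += accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_classes - 1))
    return bits


# Hypothetical, imbalanced test set: 10 target trials and 90 non-target trials.
metrics = binary_metrics(tp=5, fp=5, tn=85, fn=5)
print(metrics)
print("bits per selection (2 classes, 80% correct):", bits_per_selection(2, 0.8))
```

The imbalanced example shows why a single figure can mislead: accuracy is 0.9 although only half of the target trials are detected (TPR = 0.5). The bits-per-selection formula carries its own assumptions (equiprobable classes, errors spread uniformly over the wrong classes), which are among the limitations discussed in the literature.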

Besides these limitations, these metrics are poorly used in a substantial proportion of the BCI literature. One important characteristic of most BCI studies is that they rely on a small quantity of data, both in terms of the number of subjects who participate in the experiments and the number of trials recorded for each of them. This clearly limits the conclusions that can be drawn from these studies. Nevertheless, published BCI works rarely address or discuss the population effect size, and often overstate the impact of their findings by implying that they would generalize to larger populations. Since the interpretation of the numerical values depends on the amount of data available, it is important to consider the chance level performance [109]. The less data available, the higher the chance level or the broader the confidence interval of the performance estimate. This means that, for a limited amount of data, the accuracy that can be reached by chance alone may be very high, and ignoring this leads to an overly optimistic interpretation of the results. Moreover, special care should be taken when choosing the statistical tests used for comparing different experimental conditions [83, 110, 111], and the evaluation of decoding performance should specifically take into account the characteristics of the dataset used (in terms of number of trials and class distribution).
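
The dependence of the chance level on the number of trials can be made explicit with a binomial model. The sketch below is one possible formulation, not necessarily the procedure of the cited work: it assumes independent trials, equiprobable classes and a one-sided test, and returns the smallest accuracy that a classifier guessing at random would reach or exceed with probability at most alpha. The function name and default values are hypothetical.

```python
import math


def chance_upper_bound(n_trials, n_classes=2, alpha=0.05):
    """Smallest accuracy k/n with P(X >= k) <= alpha for X ~ Binomial(n, 1/n_classes).

    A returned value above 1.0 means that no accuracy is significant at this
    alpha with so few trials. Suitable for the trial counts typical of BCI
    studies (up to a few hundred); very large n would overflow the float range.
    """
    p = 1.0 / n_classes
    tail = 0.0                 # P(X >= k), accumulated from k = n downward
    threshold = n_trials + 1   # sentinel: nothing significant yet
    for k in range(n_trials, -1, -1):
        tail += math.comb(n_trials, k) * p ** k * (1.0 - p) ** (n_trials - k)
        if tail > alpha:
            break
        threshold = k
    return threshold / n_trials


for n in (20, 40, 100, 200):
    print(n, "trials ->", chance_upper_bound(n))
```

For a two-class problem with 20 trials, at least 15 correct trials (75%) are needed before chance can be rejected at alpha = 0.05 under these assumptions, far above the theoretical 50%. The required accuracy approaches 50% only as the number of trials grows.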

In addition, these metrics typically evaluate the output of the classification function against a set of labelled samples acquired during a calibration period (i.e., supervised learning paradigms). Therefore, they rely on the assumption that the brain activity fed to the classifier during operation follows the same distribution as the one observed during calibration. This assumption is widely recognized as incorrect, and is often brushed off by arguing that EEG signals are non-stationary. We claim that there is a more fundamental reason for changes in the neural activity during BCI operation: the BCI is inherently a closed-loop system and, in consequence, the feedback it provides is one of the causes of changes in the user’s brain activity. Therefore, even though offline evaluation of classifier performance may be useful for a preliminary comparison of different decoding methods, it is not enough to assess the performance of a BCI; performance evaluation during online usage is necessary.

The aforementioned aspects concern exclusively the evaluation of the BCI decoding engine. Thus, they only reflect one element in the BCI loop and do not provide information about how suitable the system is for its user. Several studies have shown that human factors and user characteristics influence performance [39, 112, 113, 114]. In consequence, proper evaluation of the BCI system cannot be limited to decoding metrics, and should also include efficiency and effectiveness metrics in the human-computer interaction sense [115, 116], as well as explicit assessment of human factors (cognitive workload and sense of agency, among others), e.g., through the use of questionnaires [117, 118]. This implies the need for combining quantitative, objective performance measures with subjective, qualitative assessments based on self-reporting, and highlights the fact that BCI performance may not be comprehensively reflected by a single figure [14]. Nevertheless, the appropriate way to weigh these different metrics is strongly application dependent and remains an outstanding challenge in the field.

Note that introducing mechanisms that support users in controlling BCIs (for example, word prediction or evidence accumulation for decision making) has a positive impact on performance. It is essential to account for the effect of such mechanisms when computing chance performance levels [50], and to properly evaluate the contribution of the BCI itself to the achievement of the task.

Last but not least, it is necessary to improve the way BCI research is reported in peer-reviewed publications. At present, other research groups cannot independently replicate most experiments, mainly due to the lack of a complete description of the methods used for processing the signals (from signal acquisition and conditioning to feature selection and classification), as well as of the specific instructions given to the user. We exhort the community to be more careful in the design and reporting of their experiments. As mentioned above, experimental studies are typically performed with small populations of subjects who do not match the intended BCI users (e.g., graduate students); thus, their results may not be applicable to a larger population. Besides these issues, the BCI field also suffers from problems inherent to the scientific literature, such as the bias towards publishing only positive results, which prevents the research community from learning from its errors [119]. As a countermeasure for these weaknesses, we propose clear guidelines for good reporting practices. Even though we can adopt guidelines from related fields (cf. [111, 120-122]), some aspects inherent to BCI systems may require specific new guidelines to be drawn (e.g., procedures for decoder calibration or performance evaluation on small populations, among others). Such guidelines could be endorsed by the BCI Society, encouraging authors and reviewers to follow them. Importantly, a great amount of information about these systems can be obtained from qualitative data; good practices for collecting, reporting and analyzing it can be extremely helpful to the research community. Finally, encouraging the reporting of negative BCI results, e.g., through dedicated special issues of peer-reviewed journals, can also be beneficial. However, such reports should come from rigorous and unbiased studies whose results are strong enough to refute a hypothesis and help to design follow-up studies. Only in these cases can they be useful to advance the field towards more robust and reliable systems.

Conclusion

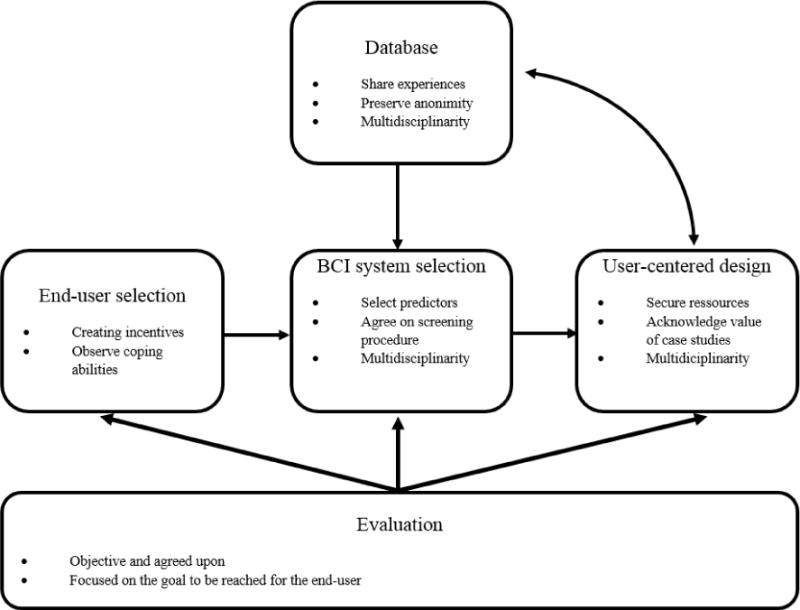

The design of BCI systems that are reliable and robust enough to allow independent use is a challenging task that requires significant advances in diverse domains. Researchers in the field have come a long way in demonstrating the feasibility of extracting meaningful information from neural signals to control external devices. However, there are still several roadblocks to surmount before these systems can be successfully deployed to their intended end users. We have summarized in this paper several methodological aspects that need to be taken into account in order to achieve this goal. A first aspect corresponds to the need for better ways to integrate the user’s needs and preferences into the design of the BCI solution. Several actions have been suggested along this line (see Figure 1), which require intense and interdisciplinary collaborations in order to go beyond the existing success stories of single cases of independent BCI use, towards formal methods for effective translation from the research lab into real applications. Experience from other neurotechnologies can serve as a model or inspiration for the BCI case. One model that can be used as a starting point for optimizing the design process is Scherer’s Matching Persons and Technology Model [123, 124], which examines the psychotechnological interplay of device and end user from the perspective of optimizing user outcomes.

Figure 1.

User-related topics to address in order to avoid roadblocks in transferring BCI systems from the lab to the end users’ homes.

In addition, at the user training level, there are also a number of pitfalls we have to overcome. Indeed, currently used training protocols are most likely highly suboptimal, both from a theoretical and practical point of view. It is therefore necessary to conduct fundamental research to understand what BCI skills users are learning, how they are learning, and what makes them fail or succeed at BCI control. It is also necessary to try to go towards adapted and adaptive training tasks, as well as towards explanatory feedback, to ensure successful BCI skills acquisition.

Digital signal processing, pattern recognition and machine learning are essential components of modern BCIs that allow for brain-computer co-adaptation. Dealing with artifacts and brain signal non-stationarity, i.e., finding appropriate features, are issues that have the largest potential to increase performance and need closer attention. Sophisticated machine learning methods may help in tackling these issues and contribute to the further advancement of BCI. However, the highly multidisciplinary nature of BCI research makes it very difficult for researchers in the field to know enough of each discipline involved to avoid all the methodological flaws associated with each of them (e.g., statistical flaws or protocol designs with confounding factors). This has led to a number of BCI publications with biases, confounding factors, and contradictory results that may slow down progress in the field. We identify a need within the field to be more cautious in the application and evaluation of these methods. This can be improved through published guidelines and more interdisciplinary participation in BCI-related events and research projects.

Last but not least, it is crucial for the BCI community to fully embrace its interdisciplinary nature and effectively engage all possible stakeholders in its scientific events and development projects. It should be widely acknowledged that successful translation of these technologies goes beyond scientific research and should involve interest groups (e.g., patient associations), insurance and public health representatives, and the private sector, so as to identify clear user needs as well as strategies to support research and development of BCI solutions in a sustainable way.

Acknowledgments

The authors would like to thank the workshop attendees, in particular J. Huggins, C. Jeunet, B. Lance, KC Lee, and B. Peters, for their involvement and valuable contributions to the discussion. This work was supported by the Swiss National Science Foundation NCCR Robotics (Dr. Chavarriaga), the French National Research Agency with project REBEL (grant ANR-15-CE23-0013-01, Dr. Lotte) and the Land Steiermark project rE(EG)map! (grant ABT08-31142/2014-10, Dr. Scherer). Dr. Fried-Oken’s participation was supported by NIH grant #DC009834-01 and NIDILRR grant #90RE5017. Dr. Scherer would also like to thank the FP7 Framework EU Research Project ABC (No. 287774) for giving him the opportunity to work with end users with cerebral palsy. This paper only reflects the authors’ views and funding agencies are not liable for any use that may be made of the information contained herein.

Biographies

Ricardo Chavarriaga, Ph.D. is a senior researcher at the Defitech Foundation Chair in Brain-Machine Interface, Center for Neuroprosthetics of the École Polytechnique Fédérale de Lausanne (EPFL), Switzerland. He holds a PhD in computational neuroscience from the EPFL (2005). His research focuses on robust brain-machine interfaces and multimodal human-machine interaction. In particular, he studies brain correlates of cognitive processes such as error recognition, learning and decision-making, as well as their use for interacting with complex neuroprosthetic devices.

Melanie Fried-Oken, Ph.D. is a clinical translational researcher in Augmentative and Alternative Communication (AAC) and Brain-Computer Interface (BCI). She provides expertise about assistive technology for persons with severe communication impairments who cannot use speech or writing for expression. She is a Professor of Neurology, Pediatrics, Biomedical Engineering and Otolaryngology at the Oregon Health & Science University (OHSU); Director of OHSU Assistive Technology Program, and clinical speech-language pathologist. She received her Ph.D. from Boston University in 1984 in Psycholinguistics; her M.A. from Northwestern University in Speech-Language Pathology, and B.A. from the University of Rochester. She has written over 40 peer-reviewed articles, numerous chapters and given over 200 national and international lectures on AAC for persons with various medical conditions. She has been PI on 16 federal grants and co-investigator on six federal grants to research communication technology, assessment and intervention for persons with developmental disabilities, neurodegenerative diseases, and the normally aging population.

Sonja Kleih, Ph.D. received her M.Sc. in Psychology and investigated the effects of motivation on BCI performance. She received her PhD in Neuroscience in 2013 from the University of Tübingen, Germany. Since then she has worked as a post-doctoral researcher in Prof. Andrea Kübler’s BCI lab at the University of Würzburg, Germany. Her research interests are BCI-based rehabilitation after stroke, user-centered design and psychological factors influencing BCI performance.

Fabien Lotte, Ph.D. obtained an M.Sc., an M.Eng. and a PhD in computer sciences, all from INSA Rennes, France, in 2005 (M.Sc. and M.Eng.) and 2008 (PhD), respectively. His PhD thesis received both the PhD Thesis award 2009 from the French Association for Pattern Recognition and the PhD Thesis award 2009 accessit (2nd prize) from the French Association for Information Sciences and Technologies. In 2009 and 2010, he was a research fellow at I2R, Singapore, in the BCI Laboratory. Since January 2011, he has been a tenured Research Scientist at Inria Bordeaux, France. His research interests include BCI, HCI and signal processing.

Reinhold Scherer, Ph.D. received his M.Sc. and Ph.D. degrees in Computer Science from Graz University of Technology (TUG), Graz, Austria in 2001 and 2008, respectively. From 2008 to 2010 he was a postdoctoral researcher and member of the Neural Systems and the Neurobotics Laboratories at the University of Washington, Seattle, USA. Currently, he is Associate Professor at the Institute of Neural Engineering, BCI Lab at TUG and member of the Institute for Neurological Rehabilitation and Research at the clinic Judendorf-Strassengel, Austria. His research interests include BCIs based on EEG/ECoG, statistical and adaptive signal processing, mobile brain and body imaging and robotics-mediated rehabilitation.

Footnotes

Also called hit-rate, sensitivity or recall.

References

- 1. Wolpaw J, Wolpaw E. Brain-computer interfaces: principles and practice. Oxford University Press; 2012.

- 2. Lotte F, Bougrain L, Clerc M. Wiley Encyclopedia on Electrical and Electronics Engineering. Wiley; 2015. Electroencephalography (EEG)-based Brain-Computer Interfaces.

- 3. Borton D, Micera S, Millán JDR, Courtine G. Personalized neuroprosthetics. Sci Transl Med. 2013;5:210rv2. doi: 10.1126/scitranslmed.3005968.

- 4. Thakor NV. Translating the brain-machine interface. Sci Transl Med. 2013;5:210ps17. doi: 10.1126/scitranslmed.3007303.

- 5. Bashashati A, Fatourechi M, Ward RK, et al. A Survey of Signal Processing Algorithms in Brain-Computer Interfaces Based on Electrical Brain Signals. Journal of Neural Engineering. 2007;4:R32–R57. doi: 10.1088/1741-2560/4/2/R03.

- 6. Lotte F, Congedo M, Lécuyer A, et al. A Review of classification algorithms for EEG-based Brain-Computer Interfaces. Journal of Neural Engineering. 2007;4:R1–R13. doi: 10.1088/1741-2560/4/2/R01.

- 7. Allison B, Neuper C. Could Anyone Use a BCI? Brain-Computer Interfaces. Springer; 2010.

- 8. Makeig S, Kothe C, Mullen T, et al. Evolving signal processing for brain–computer interfaces. Proceedings of the IEEE. 2012;100:1567–1584.

- 9. Allison B, Luth T, Valbuena D, et al. BCI Demographics: How Many (and What Kinds of) People Can Use an SSVEP BCI? IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2010;18(2):107–116. doi: 10.1109/TNSRE.2009.2039495.

- 10. Guger C, Daban S, Sellers E, et al. How many people are able to control a P300-based brain-computer interface (BCI)? Neuroscience Letters. 2009;462:94–98. doi: 10.1016/j.neulet.2009.06.045.

- 11. Lotte F, Larrue F, Mühl C. Flaws in current human training protocols for spontaneous Brain-Computer Interfaces: lessons learned from instructional design. Front Hum Neurosci. 2013;7:568. doi: 10.3389/fnhum.2013.0056.

- 12. Scherer R, Faller J, Balderas D, et al. Brain-computer interfacing: more than the sum of its parts. Soft computing. 2013;17(2):317–331.

- 13. Scherer R, Pfurtscheller G. Thought-based interaction with the physical world. Trends in cognitive sciences. 2013;17(10):490–492. doi: 10.1016/j.tics.2013.08.004.

- 14. Kübler A, Holz E, Riccio A, et al. The user-centered design as novel perspective for evaluating the usability of BCI-controlled applications. PLoS One. 2014;9:e112392. doi: 10.1371/journal.pone.0112392.

- 15. Kübler A, Holz EM, Sellers EW, et al. Toward independent home use of brain-computer interfaces: a decision algorithm for selection of potential end-users. Arch Phys Med Rehabil. 2015;96(3 Suppl):S27–32. doi: 10.1016/j.apmr.2014.03.036.

- 16. Zickler C, Halder S, Kleih SC, et al. Brain Painting: usability testing according to the user-centered design in end users with severe motor paralysis. Artif Intell Med. 2013;59(2):99–110. doi: 10.1016/j.artmed.2013.08.003.

- 17. Nijboer F, Plass-Oude Bos D, Blokland Y, et al. Design requirements and potential target users for brain-computer interfaces–recommendations from rehabilitation professionals. Brain-Computer Interfaces. 2014;1(1):50–61.

- 18. Huggins JE, Wren PA, Gruis KL. What would brain–computer interface users want? Opinions and priorities of potential users with amyotrophic lateral sclerosis. Amyotrophic Lat Scler. 2011;12(5):318–324. doi: 10.3109/17482968.2011.572978.

- 19. Blain-Moraes S, Schaff R, Gruis KL, et al. Barriers to and mediators of brain–computer interface user acceptance: focus group findings. Ergonomics. 2012;55(5):516–525. doi: 10.1080/00140139.2012.661082.

- 20. Andresen EM, Fried-Oken M, Peters B, et al. Initial constructs for patient-centered outcome measures to evaluate brain–computer interfaces. Disabil Rehabil Assist Technol. 2015:1–10. doi: 10.3109/17483107.2015.1027298.

- 21. Huggins JE, Moinuddin AA, Chiodo AE, et al. What would brain–computer interface users want: opinions and priorities of potential users with spinal cord injury. Arch Phys Med Rehabil. 2015;96(3):S38–S45.e5. doi: 10.1016/j.apmr.2014.05.028.

- 22. Peters B, Bieker G, Heckman SM, et al. Brain-computer interface users speak up: the Virtual Users’ Forum at the 2013 International Brain-Computer Interface Meeting. Arch Phys Med Rehabil. 2015;96(3):S33–S37. doi: 10.1016/j.apmr.2014.03.037.

- 23. Holz EM, Botrel L, Kaufmann T, et al. Long-term independent brain-computer interface home use improves quality of life of a patient in the locked-in state: a case study. Arch Phys Med Rehabil. 2015;96(3 Suppl):S16–26. doi: 10.1016/j.apmr.2014.03.035.

- 24. Sellers EW, Vaughan TM, Wolpaw JR. A brain-computer interface for long-term independent home use. Amyotroph Lateral Scler. 2010;11(5):449–455. doi: 10.3109/17482961003777470.

- 25. Sellers EW, Ryan DB, Hauser CK. Noninvasive brain-computer interface enables communication after brainstem stroke. Sci Transl Med. 2014;6:257re7. doi: 10.1126/scitranslmed.3007801.

- 26. Blankertz B, Sannelli C, Halder S, et al. Neurophysiological predictor of SMR-based BCI performance. Neuroimage. 2010;51(4):1303–1309. doi: 10.1016/j.neuroimage.2010.03.022.

- 27. Ahn M, Ahn S, Hong JH, et al. Gamma band activity associated with BCI performance – Simultaneous MEG/EEG study. Front Hum Neurosci. 2013;7. doi: 10.3389/fnhum.2013.00848.

- 28. Zhang Y, Xu P, Guo D, et al. Prediction of SSVEP-based BCI performance by the resting-state EEG network. J Neural Eng. 2013;10(6):066017. doi: 10.1088/1741-2560/10/6/066017.

- 29. Saeedi S, Chavarriaga R, Leeb R, Millán JDR. Adaptive Assistance for Brain-Computer Interfaces by Online Prediction of Command Reliability. IEEE Computational Intelligence Magazine. 2015;11:32–29.

- 30. Saeedi S, Chavarriaga R, Millán JDR. Long-term stable control of motor-imagery BCI by a locked-in user through adaptive assistance. IEEE Transactions on Neural Systems and Rehabilitation Engineering. In press. doi: 10.1109/TNSRE.2016.2645681.

- 31. Kaufmann T, Vögele C, Sütterlin S, et al. Effects of resting heart rate variability on performance in the P300 brain-computer interface. International Journal of Psychophysiology. 2012;83(3):336–341. doi: 10.1016/j.ijpsycho.2011.11.018.

- 32. Sutton S, Braren M, Zubin J, et al. Evoked-Potential Correlates of Stimulus Uncertainty. Science. 1965;150(3700):1187–1188. doi: 10.1126/science.150.3700.1187.

- 33. Halder S, Furdea A, Varkuti B, et al. Auditory standard oddball and visual P300 brain-computer interface performance. International Journal of Bioelectromagnetism. 2011;13(1):5–6.

- 34. Kaufmann T, Holz EM, Kübler A. Comparison of tactile, auditory, and visual modality for brain-computer interface use: a case study with a patient in the locked-in state. Front Neurosci. 2013;7:129. doi: 10.3389/fnins.2013.00129.

- 35. Hammer EM, Halder S, Blankertz B, et al. Psychological predictors of SMR-BCI performance. Biol Psychol. 2012;89(1):80–86. doi: 10.1016/j.biopsycho.2011.09.006.

- 36. Vukovic A. Motor imagery questionnaire as a method to detect BCI illiteracy. Paper presented at: 2010 3rd International Symposium on Applied Sciences in Biomedical and Communication Technologies (ISABEL 2010); 2010 Nov 7–10.

- 37. Jeunet C, N’Kaoua B, Lotte F. Advances in User-Training for Mental-Imagery Based BCI Control: Psychological and Cognitive Factors and their Neural Correlates. Progress in Brain Research. 2016. doi: 10.1016/bs.pbr.2016.04.002.

- 38. Riccio A, Simione L, Schettini F, et al. Attention and P300-based BCI performance in people with amyotrophic lateral sclerosis. Front Hum Neurosci. 2013;7. doi: 10.3389/fnhum.2013.00732.

- 39. Kleih SC, Nijboer F, Halder S, et al. Motivation modulates the P300 amplitude during brain-computer interface use. Clin Neurophysiol. 2010;121(7):1023–1031. doi: 10.1016/j.clinph.2010.01.034.

- 40. Käthner I, Ruf CA, Pasqualotto E, et al. A portable auditory P300 brain–computer interface with directional cues. Clinical Neurophysiology. 2013;124(2):327–338. doi: 10.1016/j.clinph.2012.08.006.

- 41. Fried-Oken M, Mooney A, Peters B, et al. A clinical screening protocol for the RSVP Keyboard brain–computer interface. Disability and Rehabilitation: Assistive Technology. 2015;10(1):11–18. doi: 10.3109/17483107.2013.836684.

- 42. Zander TO, Ihme K, Gärtner M, et al. A public data hub for benchmarking common brain–computer interface algorithms. J Neural Eng. 2011;8(2):025021. doi: 10.1088/1741-2560/8/2/025021.

- 43. Ledesma-Ramirez C, Bojorges-Valdez E, Yáñez-Suarez O, et al. An Open-Access P300 Speller Database. Paper presented at: Fourth International Brain-Computer Interface Meeting; 2010; Monterey, USA. https://hal.inria.fr/inria-00549242.

- 44. Bernabeu M, Laxe S, Lopez R, et al. Developing core sets for persons with traumatic brain injury based on the International Classification of Functioning, Disability, and Health. Neurorehabil Neural Repair. 2009;23(5):464–467. doi: 10.1177/1545968308328725.

- 45. Schwarzkopf SR, Ewert T, Dreinhofer KE, et al. Towards an ICF Core Set for chronic musculoskeletal conditions: commonalities across ICF Sets for osteoarthritis, rheumatoid arthritis, osteoporosis, low back pain and chronic widespread pain. Clin Rheumatol. 2008;27:1355–1361. doi: 10.1007/s10067-008-0916-y.

- 46. Cieza A, Ewert T, Ustun B, et al. Development of ICF core sets for patients with chronic conditions. J Rehabil Med. 2004;(Suppl. 9–11). doi: 10.1080/16501960410015353.

- 47. Peters B, Mooney A, Oken B, et al. Soliciting BCI user experience feedback from people with severe speech and physical impairments. Brain Computer Interfaces. 2016. doi: 10.1080/2326263X.2015.1138056.

- 48. Scherer R, Wagner J, Billinger M, et al. Augmenting communication, emotion expression and interaction capabilities of individuals with cerebral palsy. Paper presented at: 2014 6th International Brain-Computer Interface Conference; 2014; Graz, Austria. pp. 312–315.

- 49. Daly I, Billinger M, Laparra-Hernández J, et al. On the control of brain-computer interfaces by users with cerebral palsy. Clinical Neurophysiology. 2013;124(9):1787–1797. doi: 10.1016/j.clinph.2013.02.118.

- 50. Scherer R, Billinger M, Wagner J, et al. Thought-based row-column scanning communication board for individuals with cerebral palsy. Annals of Physical and Rehabilitation Medicine. 2015;58(1):14–22. doi: 10.1016/j.rehab.2014.11.005.

- 51. Scherer R, Schwarz A, Müller-Putz GR, et al. Game-based BCI training: Interactive design for individuals with cerebral palsy. Paper presented at: 2015 IEEE International Conference on Systems, Man, and Cybernetics (SMC); 2015 Oct; Hong-Kong. 2015a. pp. 3175–3180.

- 52.Scherer R, Schwarz A, Müller-Putz GR, et al. Let’s play Tic-Tac-Toe: A Brain-Computer Interface case study in cerebral palsy; Paper presented at: 2016 IEEE International Conference on Systems, Man and Cybernetics (SMC); Budapest, Hungary. 2016. Oct 9–12, [Google Scholar]

- 53.Neumann N, Kubler A. Training locked-in patients: a challenge for the use of brain-computer interfaces. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2003;11(2):169–172. doi: 10.1109/TNSRE.2003.814431. [DOI] [PubMed] [Google Scholar]

- 54.Neuper C, Pfurtscheller G. Brain-Computer Interfaces Neurofeedback Training for BCI Control. The Frontiers Collection. 2010:65–78. [Google Scholar]

- 55.Wander JD, Blakely T, Miller KJ, et al. Distributed cortical adaptation during learning of a brain-computer interface task. Proceedings of the National Academy of Sciences. 2013;110(26):10818–23. doi: 10.1073/pnas.1221127110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Neuper C, Scherer R, Reiner M, et al. Imagery of motor actions: differential effects of kinesthetic and visual-motor mode of imagery in single-trial EEG. Cognitive Brain Research. 2005;25(3):668–77. doi: 10.1016/j.cogbrainres.2005.08.014. [DOI] [PubMed] [Google Scholar]

- 57.Lotte F, Jeunet C. Towards Improved BCI based on Human Learning Principles. Paper presented at: 3rd International Brain-Computer Interfaces Winter Conference. 2015 [Google Scholar]

- 58.Shute V. Focus on Formative Feedback. Review of Educational Research. 2008;78(1):153–189. [Google Scholar]

- 59.Hattie J, Timperley H. The Power of Feedback. Review of Educational Research. 2007;77(1):81–112. [Google Scholar]

- 60.Merrill M. 2nd. First principles of instruction: a synthesis. In: Reiser RA, Dempsey JV, editors. Trends and issues in instructional design and technology. Hill: 2007. pp. 62–71. [Google Scholar]

- 61.Jeunet C, Jahanpour E, Lotte F. Why Standard Brain-Computer Interface (BCI) Training Protocols Should be Changed: An Experimental Study. Journal of Neural Engineering. 2016;13(3):036024. doi: 10.1088/1741-2560/13/3/036024. [DOI] [PubMed] [Google Scholar]

- 62.Jeunet C, N’Kaoua B, N’Kambou R, et al. Why and How to Use Intelligent Tutoring Systems to Adapt MI-BCI Training to Each User?; Paper presented at: International BCI meeting; Monterey, USA. 2016. [Google Scholar]

- 63.Jeunet C, N’Kaoua B, Subramanian S, et al. Predicting Mental Imagery-Based BCI Performance from Personality, Cognitive Profile and Neurophysiological Patterns. PLoS ONE. 2015;10(12):e0143962. doi: 10.1371/journal.pone.0143962. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Kleih SC, Kübler A. Psychological Factors Influencing Brain-Computer Interface (BCI) Performance; Paper presented at: 2015 IEEE International Conference on Systems, Man, and Cybernetics (SMC); Hong-Kong. 2015. pp. 3192–3196. [Google Scholar]

- 65.Jeunet C, Vi C, Spelmezan D, et al. Continuous Tactile Feedback for Motor-Imagery based Brain-Computer Interaction in a Multitasking Context; Paper presented at: Proc Interact; 2015; 2015. [Google Scholar]

- 66.Gomez Rodriguez M, Peters J, Hill J, et al. Closing the sensorimotor loop: haptic feedback helps decoding of motor imagery. Journal of Neural Engineering. 2011;8:036005. doi: 10.1088/1741-2560/8/3/036005. [DOI] [PubMed] [Google Scholar]

- 67.Sollfrank T, Ramsay A, Perdikis S, et al. The effect of multimodal and enriched feedback on SMR-BCI performance. Clin Neurophysiol. 2016;127:490–498. doi: 10.1016/j.clinph.2015.06.004. [DOI] [PubMed] [Google Scholar]

- 68.Lotte F, Faller J, Guger C, et al., editors. Combining BCI with Virtual Reality: Towards New Applications and Improved BCI Towards Practical Brain-Computer Interfaces. Springer; Berlin Heidelberg: 2013. pp. 197–220. [Google Scholar]

- 69.Birbaumer N, Ghanayim N, Hinterberger T, et al. A spelling device for the paralysed. Nature. 1999;398(6725):297–298. doi: 10.1038/18581. [DOI] [PubMed] [Google Scholar]

- 70.Blankertz B, Dornhege G, Krauledat M, et al. The non-invasive Berlin Brain–Computer Interface: Fast acquisition of effective performance in untrained subjects. NeuroImage. 2007;37(2):539–550. doi: 10.1016/j.neuroimage.2007.01.051. [DOI] [PubMed] [Google Scholar]

- 71.Mason SG, Bashashati A, Fatourechi M, et al. A comprehensive survey of brain interface technology designs. Annals of Biomedical Engineering. 2007;35(2):137–69. doi: 10.1007/s10439-006-9170-0. [DOI] [PubMed] [Google Scholar]

- 72.Vidaurre C, Kawanabe M, von Bünau P, et al. Toward unsupervised adaptation of LDA for brain-computer interfaces. IEEE Transactions on Biomedical Engineering. 2011;58(3):587–97. doi: 10.1109/TBME.2010.2093133. [DOI] [PubMed] [Google Scholar]

- 73.Faller J, Vidaurre C, Solis-Escalante T, et al. Autocalibration and recurrent adaptation: Towards a plug and play online ERD-BCI. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2012;20(3):313–319. doi: 10.1109/TNSRE.2012.2189584. [DOI] [PubMed] [Google Scholar]

- 74.Faller J, Scherer R, Costa U, et al. A Co-Adaptive Brain-Computer Interface for End Users with Severe Motor Impairment. PLoS ONE. 2014;9(7):e101168. doi: 10.1371/journal.pone.0101168. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Samek W, Meinecke FC, Müller KR. Transferring subspaces between subjects in brain–computer interfacing. IEEE Transactions on Biomedical Engineering. 2013;60(8):2289–2298. doi: 10.1109/TBME.2013.2253608. [DOI] [PubMed] [Google Scholar]

- 76.Perdikis S, Leeb R, Millán JDR. Subject-oriented training for motor imagery brain-computer interfaces. Paper presented at: 2014 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBC’14. 2014:1259–1262. doi: 10.1109/EMBC.2014.6943826. [DOI] [PubMed] [Google Scholar]

- 77.Kobler R, Scherer R. Restricted Boltzmann Machines in Sensory Motor Rhythm Brain-Computer Interfacing: A Study on Inter-Subject Transfer and Co-Adaptation; Paper presented at: 2016 IEEE International Conference on Systems, Man and Cybernetics (SMC); Budapest, Hungary. 2016. Oct 9–12, [Google Scholar]

- 78.Cecotti H. A self-paced and calibration-less SSVEP-based brain–computer interface speller. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2010;18(2):127–133. doi: 10.1109/TNSRE.2009.2039594. [DOI] [PubMed] [Google Scholar]

- 79.Kindermans PJ, Schreuder M, Schrauwen B, Müller KR, Tangermann M. True zero-training brain-computer interfacing–an online study. PloS one. 2014;9(7):e102504. doi: 10.1371/journal.pone.0102504. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 80.Baykara E, Ruf CA, Fioravanti C, Käthner I, Simon N, Kleih SC, Halder S. Effects of training and motivation on auditory P300 brain–computer interface performance. Clinical Neurophysiology. 2016;127(1):379–387. doi: 10.1016/j.clinph.2015.04.054. [DOI] [PubMed] [Google Scholar]

- 81.Hastie T, Tibshirani R, Friedman J. The Elements of Statistical Learning (Second Ed) Springer-Verlag; New York: 2009. [DOI] [Google Scholar]

- 82.Ledoit O, Wolf M, Honey I. Shrunk the Sample Covariance Matrix. The Journal of Portfolio Management. 2004;30(4):110–119. [Google Scholar]

- 83.Lemm S, Blankertz B, Dickhaus T, Müller K-R. Introduction to machine learning for brain imaging. Neuroimage. 2011;56:387–399. doi: 10.1016/j.neuroimage.2010.11.004. [DOI] [PubMed] [Google Scholar]

- 84.Lotte F. Signal Processing Approaches to Minimize or Suppress Calibration Time in Oscillatory Activity-Based Brain–Computer Interfaces. Proceedings of the IEEE. 2015;103(6):871–890. [Google Scholar]

- 85.Blankertz B, Tomioka R, Lemm S, et al. Optimizing spatial filters for robust EEG single-trial analysis. IEEE Signal Processing Magazine. 2008;25:41–56. doi: 10.1109/MSP.2008.4408441. [DOI] [Google Scholar]

- 86.Xu R, Jiang N, Mrachacz-Kersting N, Dremstrup K, Farina D. Factors of Influence on the Performance of a Short-Latency Non-Invasive Brain Switch: Evidence in Healthy Individuals and Implication for Motor Function Rehabilitation. Frontiers in neuroscience. 2015:9. doi: 10.3389/fnins.2015.00527. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 87.Lotte F. Guide to Brain-Computer Music Interfacing. Springer; London: 2014. A tutorial on EEG signal-processing techniques for mental-state recognition in brain–computer interfaces; pp. 133–161. [Google Scholar]

- 88.Ramoser H, Müller-Gerking J, Pfurtscheller G. Optimal spatial filtering of single trial EEG during imagined hand movement. IEEE Transactions on Rehabilitation Engineering. 2000;8:441–446. doi: 10.1109/86.895946. [DOI] [PubMed] [Google Scholar]

- 89.Samek W, Kawanabe M, Muller K-R. Divergence-based framework for common spatial patterns algorithms. IEEE Reviews in Biomedical Engineering 2013. 2014;7:50–72. doi: 10.1109/RBME.2013.2290621. [DOI] [PubMed] [Google Scholar]

- 90.Seeber M, Scherer R, Wagner J, et al. EEG beta suppression and low gamma modulation are different elements of human upright walking. Frontiers in Human Neuroscience. 2014;8:485. doi: 10.3389/fnhum.2014.00485. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 91.Seeber M, Scherer R, Wagner J, et al. High and low gamma EEG oscillations in central sensorimotor areas are conversely modulated during the human gait cycle. NeuroImage. 2015;112:318–26. doi: 10.1016/j.neuroimage.2015.03.045. [DOI] [PubMed] [Google Scholar]

- 92.Friedrich EVC, Scherer R, Neuper C. The effect of distinct mental strategies on classification performance for brain-computer interfaces. International Journal of Psychophysiology. 2012;84(1):86–94. doi: 10.1016/j.ijpsycho.2012.01.014. [DOI] [PubMed] [Google Scholar]

- 93.Friedrich EVC, Neuper C, Scherer R. Whatever works: a systematic user-centered training protocol to optimize brain-computer interfacing individually. PLoS ONE. 2013;8(9):e76214. doi: 10.1371/journal.pone.0076214. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 94.Scherer R, Faller J, Friedrich EVC, et al. Individually adapted imagery improves brain-computer interface performance in end-users with disability. PloS One. 2015b;10(5):e0123727. doi: 10.1371/journal.pone.0123727. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 95.Scherer R, Faller J, Opisso E, et al. Bring mental activity into action! Self-tuning brain-computer interfaces. Paper presented at: 2015 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBC’15. 2015c doi: 10.1109/EMBC.2015.7318858. [DOI] [PubMed] [Google Scholar]

- 96.Fatourechi M, Bashashati A, Ward RK, et al. EMG and EOG artifacts in brain computer interface systems: A survey. Clinical Neurophysiology. 2007;118(3):480–494. doi: 10.1016/j.clinph.2006.10.019. [DOI] [PubMed] [Google Scholar]

- 97.Schlögl A, Keinrath C, Zimmermann D, et al. A fully automated correction method of EOG artifacts in EEG recordings. Clinical Neurophysiology. 2007;118(1):98–104. doi: 10.1016/j.clinph.2006.09.003. [DOI] [PubMed] [Google Scholar]

- 98.Daly I, Scherer R, Billinger M, et al. FORCe: Fully online and automated artifact removal for brain-computer interfacing. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2015;23(5):725–736. doi: 10.1109/TNSRE.2014.2346621. [DOI] [PubMed] [Google Scholar]

- 99.Pfurtscheller G, Allison B, Bauernfeind G, et al. The hybrid BCI Front. Neurosci. 2010;4:42. doi: 10.3389/fnpro.2010.00003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 100.Leeb R, Sagha H, Chavarriaga R, Millán JDR. A hybrid brain-computer interface based on the fusion of electroencephalographic and electromyographic activities. J Neural Eng. 2011;8:025011. doi: 10.1088/1741-2560/8/2/025011. [DOI] [PubMed] [Google Scholar]