Summary

The parietal cortex is an intriguing patch of association cortex. Throughout the history of modern neuroscience, it has been associated with a wide array of sensory, motor, and cognitive functions. The use of non-human primates as a model organism has been instrumental in shaping our current understanding of how areas in the posterior parietal cortex (PPC) modulate perception and influence behavior. In this review, we highlight a series of influential studies from the last five decades that examined the role of PPC in visual perception and motor planning. We also integrate longstanding views of PPC function with more recent evidence to propose a general framework that accounts for the integrative sensory, motor, and cognitive functions of PPC.


Freedman and Ibos review how past debates about the posterior parietal cortex (PPC) led to the development of recent theories. Based on this review and on recent advances in the field, they propose a novel Integrative Comparative Framework.

Introduction

In the last 50 years, a large corpus of studies has sought to understand the role of the posterior parietal cortex (PPC) in sensory, motor, and cognitive functions using non-human primates (NHPs), especially rhesus monkeys, as a model organism. Rhesus monkeys are well suited for studying human parietal functions because they explore their environment much as humans do: mostly visually and manually. Anatomically, their cortical organization, including that of the parietal cortex, shows a high degree of homology with ours (Sereno and Tootell, 2005). Moreover, they can learn complex behavioral tasks, which makes it possible to study the neural correlates of behavioral and cognitive functions with electrophysiological recordings.

Since the 1950s, our understanding of PPC functions has evolved considerably as neurophysiological investigations have implicated PPC neurons in an increasingly diverse set of sensory, cognitive, and motor functions. In this review, we present a historical perspective on how key theories of parietal function in the NHP arose, and we consider these theories within a more general framework based on recent work from our group and others. We will mostly focus on cortical areas lateral to the intraparietal sulcus, with a specific emphasis on the lateral intraparietal area (LIP) on the lateral bank of the intraparietal sulcus. We describe how modern theories of PPC function are rooted in a series of related scientific debates. Our goal is to summarize some of these debates as accurately as possible based on our reading of the literature. However, for conciseness and clarity, we cannot describe in detail every relevant study from more than 40 years of research.

In the first part, we will describe the original discoveries that shaped a high-profile and influential debate regarding the role of PPC in visual attention and motor intention. We will link the evolution of these hypotheses to two dominant current theories, which describe LIP either as a map reflecting the behavioral relevance (priority) of stimuli or as a circuit that transforms sensory evidence into decisions. Next, we will discuss a range of studies examining the influence of non-spatial variables on PPC activity. Based on these findings and on recently published work, we will propose a coherent framework that distinguishes between signals resulting from integrative mechanisms and signals reflecting local computations. This integrative comparative framework incorporates the diverse functions of PPC and accounts for how PPC integrates, groups, and compares diverse sensory, motor, and cognitive signals and transforms them into decision-related encoding.

How previous debates shaped recent PPC models

Early investigations of primate PPC (the areas located around the intraparietal sulcus) described distinct subregions that appeared to differ in their encoding of sensory and motor factors. At the end of the nineteenth century, David Ferrier gave a series of lectures on cerebral localization before the Royal College of Physicians of London (Ferrier, 1890), in which he described the effects of selective cortical electrical stimulation or cortical ablation on the behavior of different mammals, including macaque monkeys. Among other findings, he reported that stimulation of the gyri lateral (area 7) and medial (area 5) to the intraparietal sulcus evoked movements of the eyes and upper limbs, respectively. Sixty-five years later, Fleming and Crosby (Fleming and Crosby, 1955) proposed that these cortical structures are motor areas controlling the extremities, trunk, and head (area 5) and the eyes (area 7).

Later electrophysiological characterizations of its neurons suggested that area 5 (medial intraparietal area (MIP)) is involved in monkeys' manual exploration of their peri-personal space (Duffy and Burchfiel, 1971). Similarly, pioneering studies of area 7 (located on the lateral bank of the intraparietal sulcus and on the gyrus lateral to it) showed that its neurons were selectively activated when monkeys made saccadic eye movements to, or attentively fixated, grapes near their face (Hyvärinen and Poranen, 1974).

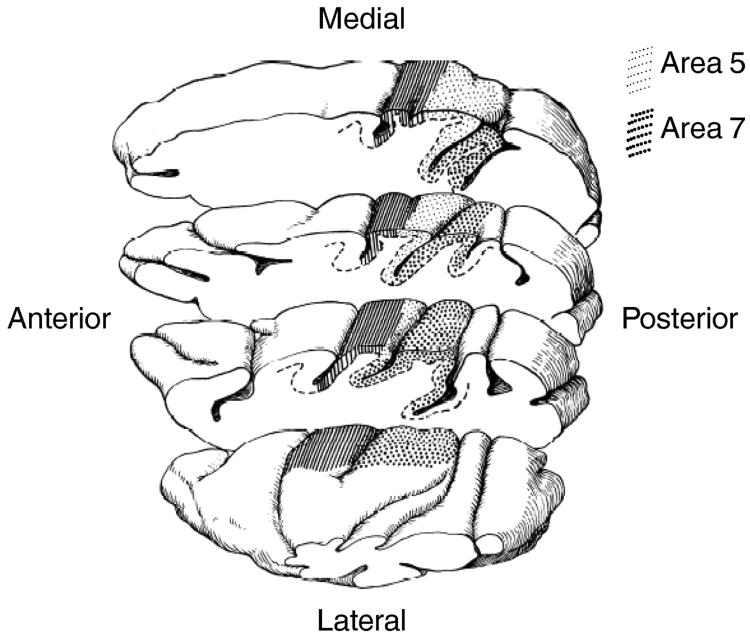

Subsequently, several groups investigated the spatial properties of area 7 neurons in relation to the monkeys' ocular exploration of their environment (Figure 1). In two seminal studies (Lynch et al., 1977; Mountcastle et al., 1975), Mountcastle and colleagues showed that area 5 and area 7 neurons responded strongly to hand and eye movements, respectively. Of relevance to this review, they concluded that area 7 contains three neuronal populations distinguished by their apparent functional roles: 1) a large population (∼60% of neurons) that responds during attentive (but not passive) fixation of behaviorally relevant stimuli; 2) a medium-sized population (∼20%) that responds in concert with visually guided saccadic eye movements; and 3) a smaller population (∼7%) that responds in conjunction with smooth pursuit eye movements. Interestingly, none of these neurons were activated by either passive fixation or spontaneous eye movements, suggesting that their activity was neither purely visual nor purely motor. The authors of these early studies explicitly proposed that the parietal cortex encodes command functions. In this framework, the three neuronal pools were proposed to represent “mechanisms for directed visual attention” toward relevant stimuli in preparation for saccadic eye movements. Although the term attention was never explicitly defined in these studies, we assume that the authors associated it with foveation mechanisms (through the control of saccadic eye movements).

Figure 1.

Anatomical subdivisions of PPC (reproduced from Mountcastle et al., 1975, with the permission of C. Acuna).

The view that PPC is central to directing visual attention for the purpose of guiding saccadic eye movements was challenged almost immediately in another seminal study by a different group, led by Goldberg and Robinson (Robinson et al., 1978). The authors found that nearly all area 7 neurons with movement-related activity were also modulated by sensory stimuli in the absence of any movement. Moreover, these sensory responses were larger when monkeys had to detect a change in stimulus luminance than during passive viewing, suggesting that PPC is “related to visual attention”. This was presented as a direct challenge to the command functions hypothesis proposed by Mountcastle and colleagues. Instead, Robinson, Goldberg and colleagues claimed that area 7 should be described as a “sensory associative area” (Robinson et al., 1978). They further suggested that the relationship between area 7 activity and movement was epiphenomenal:

“the activity of cells in area 7 is better understood as signaling the presence of a stimulus in the environment than as commanding movement. We propose that parietal neurons are best described according to their sensory properties, not according to epiphenomenological movement relationships.”

A major controversy

The discrepant views about whether PPC is primarily involved in command functions for action or in attention-related stimulus representations laid the foundation for a vigorous debate between groups favoring an attentional/sensory role for PPC (Goldberg and colleagues) and proponents of an action-related, or intentional, role for PPC (Andersen and colleagues).

The attention hypothesis (for review see (Colby and Goldberg, 1999)) originated from observations that representations of relevant stimuli in area 7 are unaffected by monkeys' motor plans (Bushnell et al., 1981). The authors trained monkeys to report the dimming of a visual stimulus using either a saccadic eye movement, a manual bar release, or a manual reach. They showed that area 7 neuronal responses to sensory stimuli were spatially selective and were larger for target stimuli than for the same stimuli viewed passively, outside the context of an active behavioral task. Importantly, the amplitude of this task-related, or attentional, modulation was independent of the kind of action (e.g. eye or limb movement) that the animals used to report the dimming. This lack of effector-specific (i.e. eye vs hand movement) modulation of area 7 visual responses (Bushnell et al., 1981) led the authors to conclude that PPC neuronal responses represent spatial shifts of attention.

The intention hypothesis (for review see (Snyder et al., 2000)) was first explicitly proposed in a seminal paper by Gnadt and Andersen (Gnadt and Andersen, 1988). This study introduced two features that shaped the field's approach to studying the parietal cortex: electrophysiological exploration of the lateral bank of the intraparietal sulcus (area LIP) and the use of the delayed saccade (DS) task. Before the study was published, area LIP and the gyrus lateral to the intraparietal sulcus (area 7a) were known to be distinct brain regions (Petrides and Pandya, 1984). However, this neurophysiological study was the first to differentiate 7a and LIP functionally (Gnadt and Andersen, 1988), and the functions of the two areas were directly compared in a subsequent study (Barash et al., 1991a). The study also employed a behavioral task that was new to PPC research: the DS task, originally developed by a different group studying saccade-related activity in brain regions including the substantia nigra pars reticulata (Hikosaka and Wurtz, 1983). This task required monkeys to make saccadic eye movements to the remembered position of a previously presented visual target. Compared to a visually guided saccade task, the DS task has the advantage of separating in time the neuronal responses related to the sensory stimulus from those related to the saccadic eye movement, even though the location of the visual stimulus and the resulting eye movement remain spatially coupled. During the DS task, a large population of LIP neurons showed sustained, spatially selective activity in the absence of a visual stimulus during the delay period between stimulus offset and the saccade. The authors interpreted this sustained activity as a marker of the monkeys' intention to make an eye movement to the location of the remembered target. They concluded that the parietal cortex is “intimately involved in the guiding and motor planning of saccadic eye movements”. This study foreshadowed future work in LIP, as it was also the first to associate the PPC with “memory-linked (…) representation of visual or motor space” (Gnadt and Andersen, 1988).

Coordinate transformation

At that time, a closely related question was the subject of a parallel debate between the Goldberg and Andersen groups: coordinate transformation for either action (sensorimotor transformation) (Barash et al., 1991b; Goldberg et al., 1990) or perception (perceptual constancy) (Andersen, 1989; Duhamel et al., 1992). As we explore our environment, we execute a continuous stream of saccadic eye movements, and each saccade changes the position of visual stimuli on the retina. This poses at least two challenges. First, sensory stimuli and actions are encoded in different coordinate frames. For example, visual stimuli are encoded in retinotopic coordinates along the hierarchy of visual areas, whereas saccades are encoded by frontal eye field (FEF) and superior colliculus (SC) neurons in oculomotor coordinates (i.e. vectors of a specific direction and amplitude that control the extraocular muscles). Planning movements toward a stimulus therefore requires a transformation from retinotopic coordinates into a different spatial frame of reference. Second, despite constant shifts of gaze, we perceive a stable visual world. Given the retinotopic organization of striate and extrastriate visual cortical areas, the brain must somehow compensate for each saccade in order to bring retinotopic and world-centered reference frames into register. At the end of the 1980s, hypotheses about coordinate transformations centered on the existence of an explicit, supramodal, effector-non-specific representation of space, intermediate between retinotopic and oculomotor representations (Andersen, 1989; Goldberg et al., 1990). For example, Goldberg and colleagues stated (Goldberg et al., 1990):

“one possibility is that visual input is remapped into an explicit representation of space. The motor coordinates of a desired saccade could then be calculated from this spatial map, which may be coded in head-centered or in inertial coordinates. This formulation requires two coordinate transformations (…). It also requires an explicit representation of extrapersonal space. A second possibility (…) is that visual inputs is remapped directly into motor coordinates. This could be accomplished by coding a visual target according to the saccade vector needed to acquire it.”

Both the Goldberg and Andersen groups examined the reference frames of spatial encoding in PPC using a double-step saccade task (Barash et al., 1991b; Gnadt and Andersen, 1988; Goldberg et al., 1990). In this task, monkeys had to remember the locations of two successively presented visual targets and make saccades to those locations in the correct sequence. The key aspect of this task is that the first saccade changes the retinotopic position of the second target, thereby dissociating the retinal location at which the second target was presented from the vector of the saccade needed to foveate it. LIP neurons responded after the first saccade, when the receptive field (RF) of the recorded neuron overlapped with the location of the second target, even though the target had never been presented at the retinotopic position of the RF. LIP neurons therefore encoded the second target in either “dynamic retinotopic” (Goldberg and Colby, 1992) or motor coordinates (Barash et al., 1991b; Gnadt and Andersen, 1988). No explicit representation of space was found in PPC.
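The geometry of the double-step task can be made concrete with simple vector arithmetic. The sketch below assumes a fixed head, positions in degrees of visual angle on the screen, and a perfectly accurate first saccade; the function name and the coordinates in the example are hypothetical. It computes the retinal location of the second target at the moment it flashes and the vector of the second saccade, showing that the two differ whenever the first saccade has a nonzero amplitude.

```python
import numpy as np

def double_step_vectors(fixation, target1, target2):
    """Compare where target 2 falls on the retina with the saccade needed to reach it.

    All positions are (x, y) in degrees on the screen, with the head fixed.
    Returns (retinal location of target 2 when it flashes, vector of the 2nd saccade).
    """
    fixation, target1, target2 = (np.asarray(p, dtype=float)
                                  for p in (fixation, target1, target2))
    # Both targets flash while gaze is still on the fixation point
    retinal_t2 = target2 - fixation
    # Assuming an accurate first saccade, gaze then lands on target 1
    second_saccade = target2 - target1
    return retinal_t2, second_saccade

retinal_t2, second_saccade = double_step_vectors((0, 0), (10, 0), (10, 10))
print("target 2 on the retina at flash:", retinal_t2)
print("second saccade vector:          ", second_saccade)
```

With targets at (10, 0) and (10, 10), the second target first appears up and to the right of the fovea, yet the second saccade is purely upward. A neuron with an RF straight above the fovea could therefore respond after the first saccade even though no stimulus was ever presented at that retinal location.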

Andersen and colleagues argued that such responses in the absence of direct sensory stimulation of LIP RFs show that LIP encodes “the forthcoming intended saccade.” They proposed a three-layer network (Andersen and Zipser, 1988; Andersen et al., 1985; Zipser and Andersen, 1988) that explicitly maps stimuli into head-centered coordinates by combining eye position with the position of the stimuli in retinocentric coordinates (provided by the retinotopy of LIP neurons). Eye-position information is encoded in LIP and has been described as a gain field (Lynch et al., 1977), that is, a linear modulation of neuronal responses as a function of the position of the eyes in their orbits. Goldberg's group directly challenged this interpretation by showing that LIP neurons without saccade-related activity also responded to the second target during the double-step saccade task, suggesting that these responses were stimulus-related rather than saccade-related (Goldberg et al., 1990). They also showed that, when monkeys planned a single saccade, LIP neurons' RFs appeared to anticipate the intended eye movement: before the eye movement, the neurons began responding to stimuli located at the future location of their RFs (Duhamel et al., 1992). The authors argued that this apparent updating of LIP spatial selectivity (future RF remapping; at this time, the terms updating and remapping were used interchangeably) allows the brain to anticipate the perceptual consequences of saccadic eye movements and to stabilize perception. These results replicated what both groups had observed with double-step saccades but showed that execution of a second saccade was not needed, thereby directly questioning the Andersen group's intentional interpretation of this LIP signal. More recently, however, future RF remapping has been proposed to reflect target selection rather than saccade anticipation (Zirnsak and Moore, 2014).
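A gain field can be written as a retinotopic tuning curve multiplied by an eye-position-dependent gain. The one-dimensional sketch below assumes Gaussian retinotopic tuning and a planar (linear, rectified) gain. All parameter values and function names are hypothetical, and the code is not the three-layer network of Zipser and Andersen. It illustrates the key property: changing eye position scales a unit's response without shifting where its RF lies on the retina, while head-centered location is simply retinal position plus eye position.

```python
import numpy as np

def gain_field_response(stim_retinal, eye_pos, rf_center=0.0, rf_width=5.0,
                        gain_slope=0.02, baseline_gain=1.0):
    """Response of a hypothetical LIP-like unit with a planar eye-position gain field.

    stim_retinal: stimulus position on the retina (deg), scalar or array.
    eye_pos: horizontal eye position in the orbit (deg), scalar.
    """
    # Retinotopic tuning: where the stimulus falls relative to the RF center
    tuning = np.exp(-((np.asarray(stim_retinal) - rf_center) ** 2) / (2 * rf_width ** 2))
    # Eye position scales the response linearly (rectified to stay non-negative)
    gain = np.maximum(baseline_gain + gain_slope * eye_pos, 0.0)
    return gain * tuning

def head_centered(stim_retinal, eye_pos):
    """Head-centered location = retinal location + eye-in-orbit position (1-D)."""
    return stim_retinal + eye_pos

retinal_positions = np.linspace(-20, 20, 41)
for eye in (-10.0, 0.0, 10.0):
    r = gain_field_response(retinal_positions, eye)
    peak = retinal_positions[np.argmax(r)]
    print(f"eye={eye:+.0f} deg  peak at retinal {peak:+.0f} deg  peak rate x{r.max():.2f}")
```

Because each unit's output depends jointly on retinal position and eye position, a downstream layer reading out a population of such units could, in principle, recover head-centered location without any single unit having a head-centered RF. This is the intuition behind the network models cited above.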

Interestingly, in this updating framework, saccade-related responses in LIP are hypothesized to result from corollary discharge of motor commands issued by core oculomotor areas such as the SC or the FEF. If so, LIP saccade-related activity would play a limited role in saccade generation, and LIP might instead serve more complex functions that the relatively simple saccadic tasks used in PPC studies at that time could not address. Support for this hypothesis was later provided by experiments showing that basic oculomotor behaviors were only weakly affected by reversible LIP inactivation (Chafee and Goldman-Rakic, 2000; Katz et al., 2016; Wardak et al., 2002).

Development and evolution of the debate

Today, it is generally accepted that PPC activity can be modulated simultaneously by sensory-related and motor-related factors. However, this modern acceptance of the diversity of encoding in PPC was preceded by the vigorous debate between the Andersen and Goldberg groups in the 1980s and 1990s, described above. During this debate, Rizzolatti and colleagues developed the premotor theory of attention (Rizzolatti et al., 1987), in which covert shifts of spatial attention are tightly linked to the preparation of oculomotor movements. This hypothesis was later supported by studies showing that microstimulation of FEF (at intensities below the threshold for saccade generation) improved monkeys' ability to detect subtle changes in stimulus luminance (Armstrong and Moore, 2007) and increased the responses of extrastriate visual neurons (Moore and Armstrong, 2003). In this framework, LIP attentional modulations reflect the preparation of saccadic eye movements, whether or not they are executed.

In parallel with the premotor theory of attention, Goldberg's group (Gottlieb et al., 1998; Kusunoki et al., 2000) showed that LIP neurons encode stimuli according to either their exogenous visual salience or their endogenous behavioral significance. They proposed that attention is directed toward the location encoded by the LIP neurons with the highest activity (Bisley and Goldberg, 2003). Meanwhile, Platt and Glimcher (Platt and Glimcher, 1999) argued that LIP encodes the gain (later described as the value (Sugrue et al., 2004)) expected from executing planned actions, not the behavioral salience of sensory stimuli. However, it has been noted (Maunsell, 2004) that expected value and behavioral salience are experimentally difficult to dissociate, since both co-vary with spatial attention, and that the protocols mentioned above were not designed to disambiguate this confound. This limitation was later addressed by a study (Leathers and Olson, 2012) showing that LIP responds similarly to behaviorally salient stimuli regardless of the expected value associated with the planned action (but see (Newsome et al., 2013)).

In an attempt to reconcile these views, Bisley and Goldberg (2010) proposed that LIP represents a priority map, as defined previously by other groups (Serences and Yantis, 2006):

“the stimulus array is initially filtered to form a bottom-up (or ‘stimulus-driven’) map in which the degree of salience is represented (without regard for the meaning or task relevance of the stimuli). Next, top-down (or voluntary) influences, which are based on goals that might involve prior knowledge about target-defining features or locations, combine with stimulus-driven factors to form a ‘master’ attention map. Thus, attentional priority is a convolution of physical salience (stimulus driven contributions), and the degree to which either salient or non-salient features match the current goal state of the observer (voluntary contributions).” (Serences and Yantis, 2006)
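The priority-map idea can be sketched in a few lines. The sketch below assumes a one-dimensional array of candidate locations and simplifies the combination described in the quotation to a weighted sum of a salience map and a goal-match map. The weights, example values, and function names are hypothetical. Following the proposal that attention is directed toward the location encoded by the most active LIP neurons (Bisley and Goldberg, 2003), the attended location is taken as the peak of the map.

```python
import numpy as np

def priority_map(salience, goal_match, w_bottom_up=0.5, w_top_down=0.5):
    """Combine a bottom-up salience map and a top-down goal-match map (weighted sum)."""
    salience = np.asarray(salience, dtype=float)
    goal_match = np.asarray(goal_match, dtype=float)
    return w_bottom_up * salience + w_top_down * goal_match

def attended_location(priority):
    """Index of the location with the highest priority (winner-take-all readout)."""
    return int(np.argmax(priority))

# Four hypothetical locations: a salient distractor at 0, the searched-for target at 2.
salience = [0.9, 0.2, 0.3, 0.1]
goal_match = [0.0, 0.0, 1.0, 0.0]

print(attended_location(priority_map(salience, goal_match)))            # → 2 (goal-driven)
print(attended_location(priority_map(salience, goal_match, 0.9, 0.1)))  # → 0 (salience-driven)
```

With equal weights, the goal-matching target at location 2 wins despite a more salient distractor at location 0. When the bottom-up weight dominates, the salient distractor captures the peak instead. This is only a caricature of how salience and task relevance might trade off.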

For example, in an overt visual search task (Ipata et al., 2009), LIP neuronal responses could be linearly decomposed into a visual signal related to the bottom-up salience of the stimuli, a motor signal related to saccadic behavior, and a cognitive signal reflecting the presence of a target stimulus. However, as the authors noted, this cognitive signal could originate from several sources:

“[It] could reflect the value of the signal (Platt and Glimcher, 1999; Sugrue et al., 2004) (…). Alternatively, it could reflect the pattern identification of the signal, in which case it could arise from V4 or inferior temporal cortex (…). Finally, it could represent an attentional signal once some other area has found [the target].”
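The kind of linear decomposition described above can be illustrated with ordinary least squares. The sketch below uses synthetic data. Each trial is labeled with hypothetical binary regressors (a salient stimulus in the RF, a saccade into the RF, a target in the RF), firing rates are generated from made-up weights plus Gaussian noise, and the weights are then recovered by regression. It is not the analysis of Ipata et al. (2009), whose model details may differ; it only shows what it means for responses to be “linearly decomposed” into visual, motor, and cognitive components.

```python
import numpy as np

COMPONENTS = ["baseline", "visual", "motor", "cognitive"]

def decompose_responses(rates, visual, motor, cognitive):
    """Least-squares fit of rates = b0 + b_vis*visual + b_mot*motor + b_cog*cognitive."""
    rates = np.asarray(rates, dtype=float)
    X = np.column_stack([np.ones_like(rates), visual, motor, cognitive])
    coef, *_ = np.linalg.lstsq(X, rates, rcond=None)
    return dict(zip(COMPONENTS, coef))

def simulate_trials(n_trials=1000, seed=0):
    """Synthetic firing rates (spikes/s) built from made-up component weights plus noise."""
    rng = np.random.default_rng(seed)
    visual = rng.integers(0, 2, n_trials)     # salient stimulus in the RF?
    motor = rng.integers(0, 2, n_trials)      # saccade directed into the RF?
    cognitive = rng.integers(0, 2, n_trials)  # search target in the RF?
    rates = 5 + 20 * visual + 10 * motor + 8 * cognitive + rng.normal(0, 1, n_trials)
    return rates, visual, motor, cognitive

weights = decompose_responses(*simulate_trials())
for name, value in weights.items():
    print(f"{name:>9}: {value:5.2f}")
```

A regression of this kind says nothing about where each component originates, which is precisely the ambiguity the quoted passage raises about the cognitive term.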

In this framework, the LIP priority map projects to, and drives activity in, brain areas responsible for orienting both covert attention and overt behavior (e.g. saccadic eye movements) toward “prioritized” locations. However, the specific role of LIP in the control of attention remains unclear. According to Bisley and Goldberg's theory (Bisley and Goldberg, 2010), LIP both integrates top-down attentional signals and orients visual attention and gaze, through feedback projections to visual cortical neurons (Saalmann et al., 2007) or through projections to the SC. This apparent contradiction raises a crucial question about the mechanisms by which LIP neurons acquire their selectivity for behaviorally relevant (prioritized) stimuli and influence the subject's overt behavior. Before proposing explanations and putative mechanisms (see the section “Spatial and non-spatial representation: a consensus attempt”), we must first discuss some recent work framed by the intention hypothesis described above.

In parallel with work on attention and priority, Desmurget and Sirigu took advantage of electrodes implanted in human patients' cortex for pre-surgical monitoring of neuronal activity (Desmurget and Sirigu, 2012; Desmurget et al., 2009) to assess the effect of electrical microstimulation of the inferior parietal lobule (IPL) on patients' sensations. Remarkably, during parietal stimulation, patients reported a feeling of “wanting to move” a specific part of their body. At higher stimulation intensities, patients believed that they had actually moved their arm, even though they had not. This intention-like sensation might arise directly from PPC activity, but it could also result from activity evoked, through PPC projections, in the many brain regions anatomically connected to the stimulation site, including those controlling arm movements.

In another well-known and highly influential line of work, the groups of Newsome and Shadlen pushed Andersen's exploration of delay and saccade-related activity of LIP neurons a step further (Leon and Shadlen, 1998; Shadlen and Newsome, 1996, 2001). They sought to understand the link between sensation and action, i.e. the visuomotor process that transforms sensory information into motor actions. Specifically, they linked “the sensory representation of motion direction to the motor representation of saccadic eye movement” (Shadlen and Newsome, 1996). In these studies, monkeys were presented with two target stimuli, one inside and one outside the recorded LIP neuron's RF, and a patch of moving dots (random dot pattern; RDP) that was always located outside the neuron's RF. The monkey's task was to make a saccade to the target aligned with (i.e. in the same direction as) the motion of the dots (e.g. a rightward saccade for rightward motion). On each trial, the authors varied the fraction of dots moving coherently toward one of the two targets. Importantly, the tested range of motion coherence spanned each monkey's behavioral threshold, so discriminating the motion direction of the RDP required the monkeys to accumulate sensory evidence over time. Accordingly, planning a saccade toward the rewarded target followed a similar dynamic. This work revealed an impressive correlation between the pattern of LIP responses and the monkeys' trial-by-trial decisions. When the stimulus moved toward the target in the recorded neuron's RF, neuronal activity increased (“ramped up”) monotonically (but see (Latimer et al., 2015)). Even more compellingly, the slope of this increase predicted not only the direction of the monkeys' saccades but also their reaction times, and eye movements were initiated when neuronal responses reached a specific threshold. This work therefore linked monkeys' decisions about the motion direction of visual stimuli to motor decisions about the direction and timing of eye movements.
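The accumulation-to-bound account summarized above is often formalized as a drift-diffusion process. The sketch below is a generic version under stated assumptions, not the model fitted in the cited studies. A decision variable, standing in for the ramping LIP response, accumulates momentary evidence whose mean (drift) is proportional to motion coherence, plus Gaussian noise. A saccade toward the RF target (+1) or the opposite target (-1) is triggered when the variable reaches plus or minus the bound, and a fixed non-decision time is added to obtain reaction time. All parameter values and the sign convention for coherence are hypothetical.

```python
import numpy as np

def simulate_ddm(coherence, n_trials=1000, k=10.0, bound=1.0, noise_sd=1.0,
                 dt=0.001, max_t=3.0, non_decision=0.3, seed=0):
    """Simulate an accumulation-to-bound (drift-diffusion) decision process.

    coherence > 0 means motion toward the target in the RF (hypothetical convention).
    Returns choices (+1 toward RF target, -1 away, 0 if no bound was reached)
    and reaction times in seconds (NaN if no bound was reached).
    """
    rng = np.random.default_rng(seed)
    n_steps = int(round(max_t / dt))
    x = np.zeros(n_trials)                   # decision variable (stand-in for the LIP ramp)
    choice = np.zeros(n_trials, dtype=int)
    rt = np.full(n_trials, np.nan)
    active = np.ones(n_trials, dtype=bool)   # trials still accumulating evidence
    drift = k * coherence                    # mean evidence per second
    for step in range(1, n_steps + 1):
        n_active = int(active.sum())
        if n_active == 0:
            break
        # Euler step of dx = drift*dt + noise_sd*dW for the undecided trials
        x[active] += drift * dt + noise_sd * np.sqrt(dt) * rng.standard_normal(n_active)
        hit = active & (np.abs(x) >= bound)
        choice[hit] = np.sign(x[hit]).astype(int)
        rt[hit] = step * dt + non_decision
        active &= ~hit
    return choice, rt

for coh in (0.032, 0.128, 0.512):
    choice, rt = simulate_ddm(coh)
    print(f"coherence={coh:.3f}  P(toward RF)={np.mean(choice == 1):.2f}  "
          f"mean RT={np.nanmean(rt):.3f} s")
```

Stronger motion produces a steeper average ramp, which reaches the bound sooner and more reliably; in this model, the slope of accumulation therefore predicts both choice and reaction time, as described for LIP. Trials that do not reach a bound within `max_t` are returned with a choice of 0 and an undefined (NaN) reaction time.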

This is an appealing framework owing to its rigorous quantitative underpinnings, its linking of sensory input with motor responses, and the impressive correspondence between model predictions and experimental observations. However, because of the design of the experiments (particularly the rigid coupling between the direction of stimulus motion and the direction of the monkey's saccadic report, and the placement of the motion stimulus outside LIP neurons' RFs), it is difficult to conclusively rule out alternative hypotheses about the precise source and function of these modulations (Filimon et al., 2013; Freedman and Assad, 2011, 2016; Yates et al., 2017). For example, the ramping activity of an LIP neuron seems more likely to be related to the stimulus in that neuron's RF (one of the saccade targets) than to the motion stimulus located outside its RF. At a minimum, one would expect another population of LIP neurons, with RFs overlapping the motion stimulus, to contribute to the analysis of that stimulus, particularly since multiple studies have described robust motion direction selectivity in LIP for stimuli placed in the RF (Fanini and Assad, 2009; Ibos and Freedman, 2014, 2016; Sarma et al., 2016). Thus, as shown by a recent study (Yates et al., 2017), the pattern of LIP activity observed by Shadlen and colleagues might primarily reflect the monkeys' intention to make a saccade toward the target in the LIP neuron's RF. It could also be described as reflecting the behavioral significance (or priority, according to (Bisley and Goldberg, 2010)) assigned to that target as monkeys extract information about the direction of the motion stimulus, the location of the target, and the planned eye movement. This may also relate to the finding that LIP activity during the noisy motion task reflects the monkeys' confidence in their decisions (Fetsch et al., 2014; Kiani and Shadlen, 2009).

These interpretations have recently been debated (Filimon et al., 2013; Fitzgerald et al., 2011; Freedman and Assad, 2016; Ibos and Freedman, 2017; Yates et al., 2017). Interestingly, this debate about the nature of the ramping activity in LIP during noisy motion direction discrimination (MDD) tasks was foreshadowed many years earlier in a review (Andersen et al., 1997) published shortly after Shadlen and Newsome's first report of LIP activity during an MDD task (Shadlen and Newsome, 1996):

“Shadlen and Newsome (1996) have recently shown that LIP neurons become active when the animal performs a task in which it must plan a saccade in the direction it perceives a display of dots to be moving. The activity that builds up during the task prior to the eye movement is consistent with the animal planning an eye movement, although it could also reflect the direction the animal decides the stimulus is moving.”

Causal manipulations

Most of the work discussed so far concerns correlations between neuronal activity and the monkeys' perception or behavior, although a few of the studies discussed above (Desmurget and Sirigu, 2015; Desmurget et al., 2009) suggest that PPC plays a causal role in some of its proposed functions. Several studies have investigated LIP's role in attention and sensorimotor transformation using causal manipulations such as microstimulation or reversible inactivation. Reversible inactivation has been particularly informative about the role of LIP in the control of saccadic eye movements (Katz et al., 2016; Li et al., 1999; Wardak et al., 2002).

It is important to stress that, compared to other brain structures such as the FEF or the supplementary eye field (SEF), LIP has a more limited impact on the control of saccadic eye movements. Unlike FEF inactivation (Dias et al., 1995), which impairs saccadic behavior regardless of context, LIP inactivation produces behavioral deficits that strongly depend on the behavioral and motivational aspects of the experimental protocol. A first study showed that the deficits resulting from LIP inactivation were larger for memory-guided than for visually guided saccades (Li et al., 1999), indicating that LIP is more involved in the cognitive aspects of saccade generation than in the motor production of eye movements. These results were later replicated (Liu et al., 2010; Yttri et al., 2013), although the deficits were smaller than those reported by Li et al. (1999). Other studies, however, failed to detect behavioral deficits after LIP inactivation during either visually or memory-guided saccade tasks, likely because of minor differences in experimental protocols (Chafee and Goldman-Rakic, 2000; Wardak et al., 2002).

The modest impact of LIP inactivation on saccadic behavior is consistent with the lack of anatomical connections between LIP and key midbrain oculomotor nuclei (Leichnetz et al., 1984a, 1984b; Li et al., 1999) and with the modest impact of LIP microstimulation on saccadic eye movements (Thier and Andersen, 1996, 1998): 1) higher currents were required to trigger eye movements from LIP than from the FEF; 2) the velocity of the evoked movements matched that of memory-guided but not visually guided saccades; 3) evoked eye movements were directed only toward the upper visual field; 4) evoked eye movements were often complex, with initial phases directed opposite to later phases; and 5) saccade amplitude depended on the position of the eyes in their orbits. The contrast with the systematic effects of FEF microstimulation on saccades (Bruce et al., 1985) supports the conclusion, drawn from reversible inactivation, that LIP plays a limited role in the direct control of saccades.

To better understand the influence of LIP on saccadic behavior, it is informative to consider the deficits resulting from LIP inactivation during a free-choice two-target (FC) saccade task (Katz et al., 2016; Wardak et al., 2002) and during the MDD task discussed above (Katz et al., 2016). In both tasks, monkeys make a saccade toward one of two targets, one in the contralesional hemifield and the other in the ipsilesional hemifield. During the FC task, monkeys are rewarded equally for saccades toward either target, which confers equivalent salience or priority on the two targets. During the MDD task, only the target corresponding to the motion direction of the stimulus (presented in the ipsilesional hemifield) is rewarded, giving that saccade target greater priority.

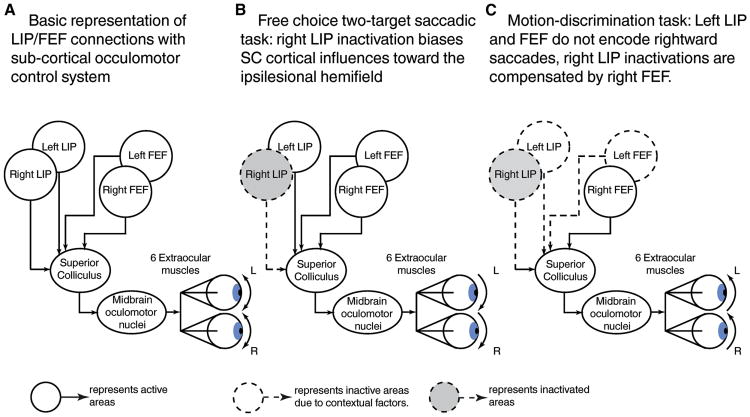

The effects of LIP inactivation differ strikingly between the FC and MDD tasks. In the FC task (Figure 2B), multiple studies have consistently shown that LIP inactivation biases monkeys' choices away from the visual field contralateral to the inactivated hemisphere (the contralesional hemifield) (Balan and Gottlieb, 2009; Katz et al., 2016; Wardak et al., 2002, 2004): monkeys prefer the ipsilesional target. In contrast to this clear and consistent impact during the FC task (in which both targets have the same behavioral significance), LIP inactivation had no detectable impact on performance of the MDD task, and monkeys were equally able to saccade toward the contralesional and the ipsilesional targets (Figure 2C). Importantly, the authors of that study confirmed that the inactivation was effective, as they observed marked behavioral deficits on the FC task in the same sessions. These results indicate that LIP is necessary neither for 1) planning and executing saccades toward a target located in the inactivated hemifield nor for 2) deciding about the motion direction of RDP stimuli located outside the inactivated hemifield. The dependence of inactivation-related deficits on motivational factors (the reward associated with a contralesional target) across the two tasks supports the idea that LIP can influence saccadic behavior (through its connections with FEF, SEF and SC) but does not directly control the saccadic eye movements themselves.

Figure 2.

Putative effects of LIP inactivation on monkeys' saccadic behavior during different tasks. This model posits that the superior colliculus's motor command is a function of the weighted sum of its cortical inputs (bilateral FEF and LIP). A. Basic representation of LIP/FEF/SC connectivity. B. Inactivating R-LIP biases SC activity toward the ipsilesional target in a two-target free-choice saccadic task. C. Unilateral LIP inactivation during the motion-discrimination task does not affect monkeys' behavior because the right FEF still encodes contraversive saccades.

This specific pattern of behavioral deficits following LIP inactivation can be understood by considering the cortical influences on the oculomotor system. The extraocular muscles are directly driven by midbrain oculomotor neurons, which themselves receive input from saccadic burst neurons in the deep layers of the SC (for review, see Gandhi and Katnani, 2011). SC neurons integrate diverse cortical inputs (Figure 2A), including from LIP, the frontal eye field (FEF), the supplementary eye field (SEF), the anterior cingulate cortex (ACC) and the dorsolateral prefrontal cortex (dLPFC) (for review, see Johnston and Everling, 2008). For simplicity, we focus here on LIP and FEF. Figure 2 illustrates how shifting the balance of cortical inputs onto SC saccadic burst neurons, either by unilaterally inactivating LIP or by changing the behavioral context, can modify monkeys' oculomotor behavior. We posit that when the behavioral context equally favors left and right saccades (as in the FC task), unilaterally inactivating LIP should bias cortical inputs to the SC, and hence oculomotor behavior, toward ipsilesional saccades (Figure 2B). However, in contexts where contraversive saccades are behaviorally relevant but ipsiversive saccades are not (as in some conditions of the MDD task or during delayed saccadic tasks), LIP inactivation is expected to have less impact on saccadic behavior. This is because the bias in cortical inputs to the SC caused by unilateral LIP inactivation should be compensated by 1) a stronger influence of the ipsilesional than of the contralesional FEF on the SC and 2) a weaker response of the contralesional LIP (Figure 2C).
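The weighted-sum logic of Figure 2 can be made concrete with a toy calculation. The sketch below is an illustration only, not a model fitted in the cited studies: it assumes that each hemisphere's LIP and FEF inputs add linearly into an SC drive for contraversive saccades, that the left/right choice is a logistic function of the difference between the two drives, and that unilateral inactivation simply sets the right LIP input to zero. All weights, activity levels and names (`drive`, `p_leftward`, `BETA`) are hypothetical.

```python
import math

# Hypothetical, illustrative parameters (not fitted to any data).
W_LIP = 1.0  # weight of each LIP input onto the SC
W_FEF = 1.0  # weight of each FEF input onto the SC
BETA = 3.0   # sensitivity of the left/right choice to the SC drive difference


def drive(lip, fef, lip_gain=1.0):
    """Weighted sum of one hemisphere's LIP and FEF inputs onto the SC.
    Each hemisphere drives contraversive saccades; lip_gain=0 models inactivation."""
    return W_LIP * lip * lip_gain + W_FEF * fef


def p_leftward(act, right_lip_gain=1.0):
    """Probability of choosing the leftward target (contralesional when the
    right LIP is inactivated), via a logistic read-out of the drive difference."""
    left = drive(act["R_LIP"], act["R_FEF"], right_lip_gain)  # right hemisphere -> leftward
    right = drive(act["L_LIP"], act["L_FEF"])                 # left hemisphere -> rightward
    return 1.0 / (1.0 + math.exp(-BETA * (left - right)))


# FC task: both targets equally relevant, so all inputs are equally active.
FC = {"R_LIP": 1.0, "R_FEF": 1.0, "L_LIP": 1.0, "L_FEF": 1.0}
# MDD trial on which the leftward (contralesional) target is the rewarded one:
# inputs coding the leftward saccade are strong, those coding the rightward one weak.
MDD_LEFT = {"R_LIP": 2.0, "R_FEF": 2.0, "L_LIP": 0.2, "L_FEF": 0.2}

for task, act in (("FC", FC), ("MDD", MDD_LEFT)):
    print(task, "intact:", round(p_leftward(act), 3),
          "R-LIP inactivated:", round(p_leftward(act, right_lip_gain=0.0), 3))
```

With these made-up values, removing the right LIP input pushes free-choice behavior strongly toward the ipsilesional (rightward) target, whereas on the MDD trial the strong right-FEF input and weak left-LIP input keep the rewarded leftward choice highly likely, which is the qualitative pattern the text describes.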

In addition to the pattern of deficits observed during visually and memory-guided saccades, as well as during the FC and MDD tasks, LIP inactivation can also impair monkeys' ability to perform both overt and covert visual search tasks (Balan and Gottlieb, 2009; Liu et al., 2010; Wardak et al., 2002, 2004). In these tasks, monkeys must detect targets by comparing the displayed stimuli to a target stimulus. Inactivating LIP increases reaction times for detecting targets located in the contralesional hemifield. Moreover, the magnitude of these deficits scaled with task difficulty, with the largest deficits observed when monkeys had to detect conjunctions of visual features (Wardak et al., 2004). We will argue in the following sections that these deficits may be related to the mechanisms that allow LIP to integrate and group conjunctions of visual features and to encode decision-related variables about the behavioral relevance (or priority) of stimuli.

Non-spatial representations and working memory

The work discussed in the previous sections focused mainly on how PPC neurons in general, and LIP neurons in particular, encode spatial aspects of the environment, whether they represent the location of the intended eye movement or the location of behaviorally relevant stimuli. However, in the past 20 years, a large corpus of studies has shown that LIP also encodes non-spatial aspects of visual scenes, including visual shape (Fitzgerald et al., 2011; Sereno and Maunsell, 1998; Subramanian and Colby, 2014), motion direction (Fanini and Assad, 2009; Ibos and Freedman, 2014, 2016; Sarma et al., 2016) and color (Ibos and Freedman, 2014, 2016; Toth and Assad, 2002). Beyond these low-level visual features, other studies showed that LIP neurons encode cognitive signals such as cognitive set or task rules (Stoet and Snyder, 2004). Neuronal activity in PPC has also been shown to encode the learned, abstract category membership of visual stimuli. This categorization process has been studied using a delayed match-to-category task in which monkeys had to group motion directions spanning 360° into two arbitrary categories defined by a learned category boundary (Freedman and Assad, 2006).

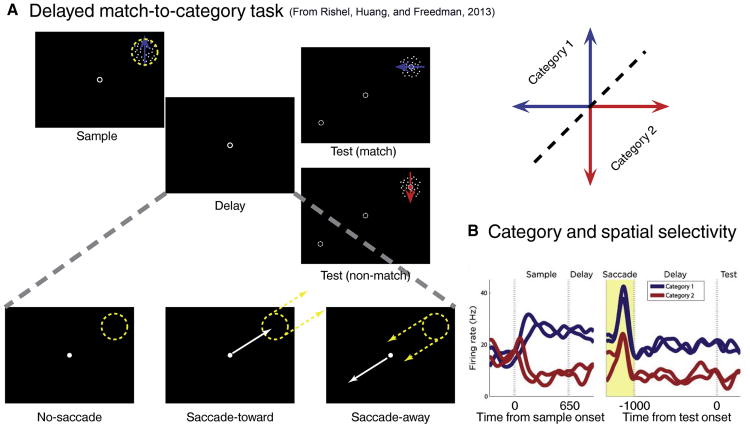

This consistent ability of LIP neurons to encode non-spatial, abstract variables was at the center of a fundamental controversy regarding the relationship between spatial and non-spatial representations in LIP. Specifically, in the priority map hypothesis discussed earlier, non-spatial representations are thought to serve the spatial guidance of visual search (Zelinsky and Bisley, 2015). However, recent studies (Meister et al., 2013; Rishel et al., 2013) showed that non-spatial signals are encoded independently of spatial signals in LIP, suggesting that non-spatial representations in LIP play a more complex role than simply supporting spatial processing. For example, our group recently characterized how category-encoding and saccade-related signals interact in LIP (Rishel et al., 2013). In this study, monkeys were trained to group visual motion directions into two arbitrary categories in a delayed match-to-category task (Figure 3A). On some trials, during the delay period, the monkeys were cued to make a saccade either toward or away from the RF of the LIP neuron being recorded. This saccade was unrelated to the categorization demands of the task: during saccade preparation and execution, the monkeys had to maintain in working memory the category of the previously viewed sample stimulus in order to decide whether the upcoming test stimulus was a category match. Interestingly, the LIP population independently encoded, or multiplexed, both spatial (i.e. saccade) and non-spatial (i.e. category) aspects of the task. This multiplexing was evident even at the level of individual LIP neurons, some of which showed firing rates that were independently modulated by both saccade and category factors (Figure 3B). These results indicate that LIP encoding of cognitive and spatial/motor factors is consistent with the integration of independent signals from distinct brain networks.

Figure 3.

LIP multiplexes spatial and non-spatial signals (reproduced from Rishel et al., 2013). A. Behavioral task: delayed match-to-category. A sample stimulus was followed by a delay and a test stimulus. If the category of the test stimulus matched that of the sample stimulus, monkeys were required to release a lever. On some trials, the fixation point was moved either toward or away from the receptive field of the recorded LIP neuron. B. Example of an LIP neuron's response during the delayed match-to-category task.

In previous sections of this review, we presented evidence that LIP neurons can encode diverse spatial and non-spatial aspects of visual scenes. However, while the priority map hypothesis is an important step toward a more coherent model of LIP's role in representing behaviorally relevant spatial locations, we still lack a fully integrated understanding of the mechanisms by which non-spatial visual features, non-spatial cognitive signals and spatial encoding interact to guide perception, decisions, and behavior.

Spatial and non-spatial representation: A consensus attempt

In a recent series of experiments (Ibos and Freedman, 2014, 2016), we described mechanisms by which feature-based attention (FBA) and space-based attention (SBA) could allow LIP neurons to represent both spatial and non-spatial visual features. In a subsequent study (Ibos and Freedman, 2017), we described how sensory information and cognitive signals are combined and transformed into signals related to the monkeys' decisions and motor responses. Results from these studies led us to propose an integrative comparative framework that may account for a large set of previously described results.

We trained monkeys to perform a covert delayed conjunction matching (DCM) task in which they had to detect specific conjunctions of color and motion direction. On each trial, a sample stimulus was followed by a delay and a succession of stimuli located either at the same location (test stimuli) or in the opposite hemifield (distractor stimuli). The sample stimulus was randomly chosen between two conjunctions: sample A (yellow dots moving downward) or sample B (red dots moving upward). Test and distractor stimuli could be any conjunction of one of eight colors (ranging from yellow to red) and one of eight directions (evenly spaced across 360°). The monkeys were rewarded for correctly identifying test stimuli that matched the sample stimulus in color, direction, and position. Distractor stimuli were always behaviorally irrelevant and had to be ignored.
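The match rule of the DCM task is a conjunction over three properties, which can be written down compactly. The Python sketch below encodes it under illustrative assumptions: colors are indexed 0 (yellow) to 7 (red), directions are in degrees with 90° as upward and 270° as downward, and location is reduced to two labels. The names (`Stimulus`, `requires_response`, `SAMPLE_A`, `SAMPLE_B`) are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical encoding of the DCM stimulus space.
COLORS = range(8)                        # 0 = yellow ... 7 = red
DIRECTIONS = [45 * i for i in range(8)]  # degrees; 90 = upward, 270 = downward


@dataclass(frozen=True)
class Stimulus:
    color: int      # index into COLORS
    direction: int  # one of DIRECTIONS
    location: str   # "test" (sample location) or "distractor" (opposite hemifield)


SAMPLE_A = Stimulus(color=0, direction=270, location="test")  # yellow dots moving downward
SAMPLE_B = Stimulus(color=7, direction=90, location="test")   # red dots moving upward


def requires_response(sample: Stimulus, stim: Stimulus) -> bool:
    """True only for a test stimulus matching the sample in color, direction AND position.
    Distractors in the opposite hemifield never require a response."""
    return (stim.location == sample.location
            and stim.color == sample.color
            and stim.direction == sample.direction)


# Of the 64 possible color/direction conjunctions at the test location,
# exactly one is a match on any given trial.
n_matches = sum(requires_response(SAMPLE_A, Stimulus(c, d, "test"))
                for c in COLORS for d in DIRECTIONS)
```

Because the same physical stimulus (for example yellow dots moving downward at the test location) requires a response on sample A trials but not on sample B trials, this rule dissociates the identity of a test stimulus from its behavioral relevance.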

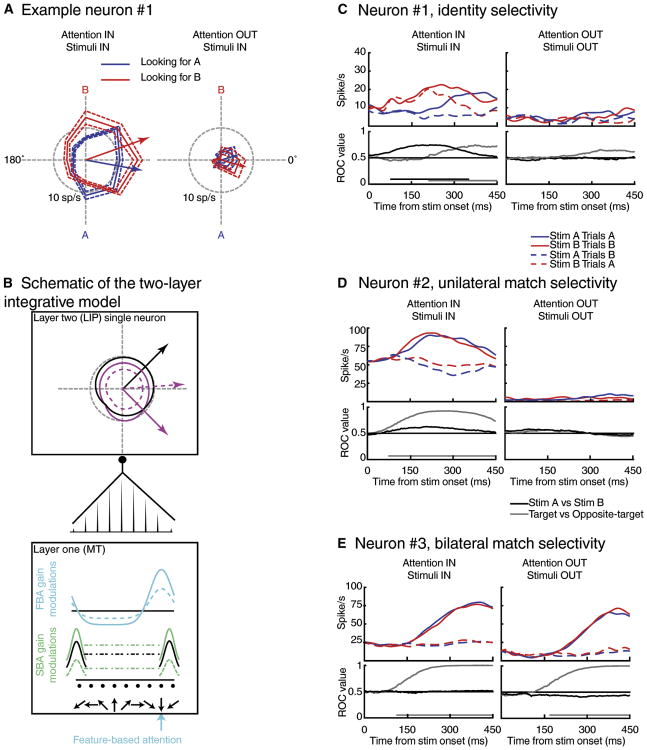

This task allowed us to manipulate several key sensory, cognitive and motor factors. First, we characterized the impact of both SBA and FBA on LIP spatial and non-spatial feature encoding (Ibos and Freedman, 2014, 2016). We observed that both types of attention interact in LIP and modulate LIP neurons' spatial and non-spatial feature selectivity. These results led us to propose a model to account for the emergence of non-spatial and spatial sensory encoding in LIP. Second, we compared the dynamics of LIP encoding of sensory and cognitive/behavioral factors (Ibos and Freedman, 2017). We found that LIP sequentially encodes the identity and then the match status of visual stimuli. This work supported our proposal that behaviorally relevant spatial encoding (e.g. the match/non-match status of the relevant stimulus located inside a neuron's RF, independent of motor factors) results from computations that compare bottom-up sensory signals with top-down signals about the remembered identity of the sample stimulus. Finally, we observed a distinct population of LIP neurons that encoded the match/non-match status of stimuli regardless of their location, and whose responses could be driven by non-spatial aspects of the task such as reward expectation or the monkeys' manual responses.

Attention gates the bottom-up flow of spatial and non-spatial sensory information which is integrated by LIP

In our first studies, we characterized the impact of FBA and SBA on color and motion-direction encoding in LIP (Ibos and Freedman, 2014, 2016). This led us to propose that spatial and non-spatial encoding in LIP reflects the integration of a bottom-up flow of sensory information gated by space-based and feature-based attention. First, we compared the color and direction selectivity of LIP neurons during performance of the DCM task and during passive viewing of similar stimuli. During passive viewing, a small fraction of neurons were tuned to motion direction and almost none were tuned to color. However, a substantial fraction of LIP neurons became selective for both color and motion direction during the DCM task, suggesting that FBA plays an important role in non-spatial selectivity in LIP. Specifically, we found that LIP neurons' tuning to color and motion direction during the DCM task was shifted toward the relevant features (Figure 4A). For example, during sample A trials (yellow dots moving downward), the preferred direction of the neuron shown in Figure 4A was shifted toward direction A; during sample B trials (red dots moving upward), the preferred direction of the same neuron was shifted toward direction B. We also analyzed how SBA and FBA interact in LIP (Ibos and Freedman, 2016). Feature-tuning shifts were larger when the relevant stimuli were located inside each neuron's RF, and SBA modulations were larger when monkeys attended to each neuron's preferred feature. These effects are consistent with the feed-forward two-layer neural network model described below (Figure 4B).

Figure 4.

Responses of LIP neurons during the DCM task (from Ibos and Freedman, 2016, 2017). A. Effect of feature-based and space-based attention on the direction selectivity of one LIP neuron. B. Schematic of the two-layer integrative model. The effects of both space-based and feature-based attention in LIP can be explained by bottom-up linear integrative mechanisms. C. Example of an LIP neuron encoding the identity of the test stimulus. D. Example of an LIP neuron encoding the match status of the stimulus located inside its RF. E. Example of an LIP neuron encoding the match status of the stimulus both inside and outside its RF.

Most past studies of FBA (with one exception; David et al., 2008) found that the impact of FBA on feature-selective neurons in visual cortical areas is consistent with changes in their response gain, without changes in their preferred feature value or the width of their feature tuning (Martinez-Trujillo and Treue, 2004; Maunsell and Treue, 2006; McAdams and Maunsell, 1999; Treue and Martínez Trujillo, 1999). We developed a neural network model (Ibos and Freedman, 2014, 2016) (Figure 4B) suggesting that shifts in feature tuning in LIP are consistent with linear integration of attention-related response-gain changes in MT and V4 (Martinez-Trujillo and Treue, 2004; Maunsell and Treue, 2006; McAdams and Maunsell, 1999; Treue and Martínez Trujillo, 1999). The model consists of two interconnected neuronal layers, L1 and L2. Because this example focuses on the impact of FBA on direction tuning in LIP, L1 and L2 correspond to MT and LIP, respectively; the same model applies to color, with L1 neurons corresponding to color-selective V4 neurons. Each L2 neuron integrates inputs from a population of direction-tuned L1 neurons. The distribution of connection weights between L1 and L2 determines the direction selectivity of L2 neurons: the sharper the distribution of synaptic weights, the sharper the direction tuning of L2 neurons. We considered the impact of gain modulations in L1 (Martinez-Trujillo and Treue, 2004; Maunsell and Treue, 2006; McAdams and Maunsell, 1999; Treue and Martínez Trujillo, 1999) on tuning in L2, which is assumed to simply integrate L1 activity linearly. Gain modulations of L1 neurons (whose amplitudes depend on the distance between each neuron's preferred direction and the relevant direction) resulted in shifts of direction tuning in L2, similar to those observed in LIP (Ibos and Freedman, 2014, 2016). 
In addition, we showed that the combined effects of FBA and SBA in LIP reflect the bottom-up integration of their super-additive interactions in L1, similar to those described in visual cortical areas (Hayden and Gallant, 2009; Patzwahl and Treue, 2009).
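The core claim of the two-layer model, that pure gain changes in L1 can shift tuning in a linearly integrating L2, can be checked with a short simulation. The code below is a minimal sketch under stated assumptions, not the implementation used in the original studies: L1 units have von Mises direction tuning with evenly spaced preferred directions, feature-based attention multiplies each L1 unit's response by a gain that peaks for units preferring the attended direction (so no L1 unit changes its preferred direction), and a single L2 unit linearly sums L1 activity through Gaussian-shaped weights centered on 0°. The number of units, tuning widths, gain strength and all function names are hypothetical.

```python
import math

N_L1 = 36                                        # hypothetical number of L1 (MT-like) units
PREFS = [i * 360.0 / N_L1 for i in range(N_L1)]  # evenly spaced preferred directions (deg)


def circ_dist(a, b):
    """Smallest absolute angular difference between two directions (deg)."""
    d = (a - b) % 360.0
    return min(d, 360.0 - d)


def von_mises(theta, pref, kappa=2.0):
    """Direction tuning of one L1 unit; equals 1 at its preferred direction."""
    return math.exp(kappa * (math.cos(math.radians(theta - pref)) - 1.0))


def l1_gain(pref, attended=None, strength=0.5):
    """Feature-based attention as a pure response-gain change in L1: largest for
    units preferring the attended direction, smaller for the others."""
    if attended is None:
        return 1.0
    return 1.0 + strength * von_mises(pref, attended)


def l2_weights(l2_pref=0.0, width=40.0):
    """Gaussian-shaped L1 -> L2 weights centered on the L2 unit's preferred direction."""
    return [math.exp(-0.5 * (circ_dist(p, l2_pref) / width) ** 2) for p in PREFS]


def l2_response(theta, weights, attended=None):
    """L2 linearly sums gain-modulated L1 responses (no output nonlinearity)."""
    return sum(w * l1_gain(p, attended) * von_mises(theta, p)
               for w, p in zip(weights, PREFS))


def preferred_direction(weights, attended=None):
    """Vector-average preferred direction of the L2 tuning curve, in degrees (-180 to 180)."""
    xs = ys = 0.0
    for t in range(360):  # stimulus directions in 1-degree steps
        r = l2_response(t, weights, attended)
        xs += r * math.cos(math.radians(t))
        ys += r * math.sin(math.radians(t))
    return math.degrees(math.atan2(ys, xs))


w = l2_weights(l2_pref=0.0)
print(round(preferred_direction(w), 2))                  # no attention
print(round(preferred_direction(w, attended=90.0), 2))   # attend upward
print(round(preferred_direction(w, attended=270.0), 2))  # attend downward
```

Even though no L1 unit changes its preferred direction, the L2 unit's preferred direction moves toward whichever direction is attended (positive angles when attending 90°, negative when attending 270°), because the gain change re-weights the L1 inputs that L2 sums.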

Sensory information and decision-related signals

The findings described above and the related model framework account for the spatial and non-spatial encoding of sensory information in LIP. However, they do not account for how LIP neurons' encoding of behaviorally relevant stimuli is generated. This raises the question of why LIP integrates and represents information that is already reliably encoded in upstream sensory areas. We propose that LIP neuronal networks integrate, group, and transform sensory information into decision-related signals by comparing bottom-up sensory information (what monkeys are looking at) to top-down signals (what monkeys are looking for).

During the DCM task, the sample stimulus varied pseudo-randomly between two conjunctions of color and direction (stimulus A: yellow dots moving downward; stimulus B: red dots moving upward). Therefore, when stimulus A was shown as a test stimulus, it was a match on sample A trials and required a behavioral response, whereas on sample B trials the same test stimulus was a non-match and had to be ignored. This design allowed us to test how sensory features (i.e. the identity of test stimuli) and behavioral relevance (i.e. the match status of test stimuli) shaped LIP neuronal encoding. We found that LIP contains two overlapping populations of neurons showing a range of mixed selectivity for the identity of the stimuli (Figure 4C), their match status (Figure 4D), or both. Interestingly, encoding of test stimulus features preceded match selectivity and was less correlated with the monkeys' behavioral responses. Match-selective neurons also varied in their spatial selectivity: some were unilaterally match-selective (Figure 4D), and their responses could not be explained by motor-related signals, whereas others were bilaterally match-selective (Figure 4E), and their responses could potentially be explained by motor-related signals. The presence of spatially selective, match-selective neurons whose activity was independent of motor-related variables was predicted by the priority map hypothesis, which posits that LIP neurons encode behaviorally relevant stimuli. However, the priority map hypothesis does not explicitly predict the presence of identity-selective neurons in LIP and can only account for the subpopulation of match-selective neurons. This highlights the importance of understanding the mechanisms by which selectivity for match stimuli arises in LIP.

In the DCM task (Ibos and Freedman, 2014, 2016, 2017), encoding a stimulus's identity requires combining signals related to its color (presumably encoded in V4) and its direction (presumably encoded in MT). We therefore tested how LIP neurons combine multiple sources of bottom-up sensory signals, in order to understand whether and how LIP contributes to the identification of matching test stimuli. We found that LIP activity was consistent with an additive process applied to bottom-up signals related to the relevant colors and the relevant motion directions. However, encoding of the match status of stimuli was super-additive and could not be explained by a linear combination of color and motion-direction signals. This super-additivity could reflect several processes (such as local computations or the integration of additional signals from another source), and it is currently difficult to determine the mechanisms that lead to these non-linear representations of match stimuli. Below, we propose that such super-additivity reflects computations that compare bottom-up sensory signals, which provide LIP with information about the currently viewed stimulus, with top-down signals, which provide LIP with a template of the remembered sample stimulus. To explain the rationale for this hypothesis, however, it is first necessary to consider how LIP encodes behaviorally relevant information in short-term working memory.
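The distinction between additive and super-additive combination can be expressed as a 2×2 interaction contrast over test stimuli that share neither, only the color, only the direction, or both features of the sample. The sketch below illustrates that contrast with hypothetical firing rates; the function names and numbers are invented for illustration, and this is not necessarily the statistical analysis used in the original study.

```python
def additive_prediction(r_neither, r_color, r_direction):
    """Predicted response to a stimulus sharing BOTH of the sample's features,
    assuming color- and direction-related signals add linearly on top of the
    response to a stimulus sharing neither feature."""
    return r_color + r_direction - r_neither


def interaction_index(r_neither, r_color, r_direction, r_both):
    """Observed minus additive prediction.
    > 0: super-additive; about 0: additive; < 0: sub-additive."""
    return r_both - additive_prediction(r_neither, r_color, r_direction)


# Hypothetical mean firing rates (spikes/s) of two neurons to the four kinds of test stimuli.
identity_like = dict(r_neither=10.0, r_color=14.0, r_direction=16.0, r_both=20.0)
match_like = dict(r_neither=10.0, r_color=14.0, r_direction=16.0, r_both=30.0)

print(interaction_index(**identity_like))  # 0.0 -> additive
print(interaction_index(**match_like))     # 10.0 -> super-additive
```

In this framing, an identity-like signal is fully predicted by summing the single-feature effects, whereas a match-like signal exceeds that prediction when both features agree with the sample.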

Top-down attention and working memory

In two of our studies (Ibos and Freedman, 2014, 2017), we decoded the identity of the sample stimulus from LIP neuronal responses in each epoch of the DCM task (sample presentation, delay period, test period). A substantial fraction of LIP neurons encoded the sample identity during the delay period and subsequent test periods. This is consistent with several previous studies showing that LIP neurons encode a wide variety of task-relevant information in working memory during delay-based tasks (Fitzgerald et al., 2011; Freedman and Assad, 2006; Sereno and Maunsell, 1998; Toth and Assad, 2002). However, sustained delay-period encoding of stimuli and locations has been observed in a number of brain areas, such as the inferior temporal cortex (Fuster and Jervey, 1981) and multiple subdivisions of the prefrontal cortex (Miller et al., 1996; for reviews, see Leavitt et al., 2017; Miller and Cohen, 2001), raising a crucial question about the origin and role of delay-period activity in LIP.

Two recent studies from our group (Masse et al., 2017; Sarma et al., 2016) highlighted the predominance of PFC over LIP in maintaining task-relevant information in working memory (WM). A neurophysiological marker of WM encoding is sustained selectivity for previously presented stimuli whose identity must be remembered during behavioral tasks. To examine how both learning and task demands affect WM encoding in PFC and LIP, Sarma et al. (2016) compared neuronal selectivity for motion direction at multiple stages of long-term categorization training. Before categorization training, the monkeys performed a delayed match-to-sample (DMS) task in which they had to identify test stimuli whose motion direction matched that of previously presented sample stimuli. PFC showed sustained selectivity for sample direction during the delay period of the DMS task, whereas LIP neurons did not. After categorization training, the monkeys performed a delayed match-to-category (DMC) task in which they viewed the same visual stimuli as in the DMS task but had to group them according to abstract categories learned during months of training. During the DMC task, both LIP and PFC showed strong, sustained delay-period category encoding. This suggests that the incidence of delay-period encoding, and whether LIP robustly encodes task-relevant information in WM, depends on task demands and the monkeys' training history.

This variability is consistent with the notion that mnemonic encoding in LIP reflects the integration of cognitive signals from another area. Given its more generalized delay-period encoding, PFC is a plausible source of mnemonic encoding in LIP. However, this leaves open the question of what function delay-period encoding serves in LIP and why it is evident during the categorization task but not the discrimination task. As discussed above, LIP selectivity for remembered spatial locations during the delayed memory-guided saccade task had been proposed to reflect corollary discharges from areas more closely involved in the control of saccadic eye movements (such as FEF or SC) (Colby and Duhamel, 1996; Duhamel et al., 1992). Similarly, our recent findings, along with recent theoretical work (Murray et al., 2017), suggest that LIP selectivity for higher-order, behaviorally relevant aspects of remembered sample stimuli during the DCM task reflects the integration by LIP of top-down signals, presumably originating in PFC. Differences in LIP sample selectivity during the delay periods of the DMS and DMC tasks could, for example, be related to learning-dependent plasticity in the synaptic connections between PFC and LIP (Engel et al., 2015; Rombouts et al., 2015). They could also be related to differences in cognitive set between tasks and to corresponding modulations of the signals sent from PFC and integrated by LIP.

Toward a general model

Integrative Comparative framework

In the previous sections, we showed that LIP can encode all of the information required to solve the DCM task:

- the identity of the stimulus monkeys were looking for (presumably integrated from PFC);

- the feature content of stimuli modulated by attention (presumably integrated from upstream feature-selective cortical areas such as MT and V4);

- the identity of the stimuli (resulting from additive pooling of feed-forward signals projecting to LIP);

- signals related to the monkeys' decisions about the match status of test stimuli.

Based on these results, we propose a general model, an integrative comparative framework (Figure 5), in which LIP highlights the presence of behaviorally relevant stimuli by integrating and comparing multiple sources of bottom-up and top-down information. We propose that the super-additivity that characterizes encoding of match stimuli during the DCM task reflects computations related to the comparison of bottom-up/sensory and top-down/mnemonic signals. LIP is therefore a likely candidate for computing the behavioral relevance of the stimuli by comparing what monkeys are looking at to what they are looking for.
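The framework does not commit to a specific comparison operation. As a purely illustrative sketch, the code below assumes that the comparison between the viewed stimulus and the remembered template is the product of per-feature similarities, chosen only because it is the simplest operation that is high when both features agree and low otherwise. Under that assumption, a linear pooling stage yields an additive (identity-like) signal, whereas the comparison stage yields a super-additive (match-like) signal. The similarity functions, their widths, the feature encoding (color index 0 = yellow to 7 = red, direction in degrees) and all names are hypothetical.

```python
import math


def color_similarity(c, c_template, width=1.5):
    """Gaussian similarity between a stimulus color index and the remembered one."""
    return math.exp(-0.5 * ((c - c_template) / width) ** 2)


def direction_similarity(d, d_template, kappa=2.0):
    """Von Mises-shaped similarity between two motion directions (degrees)."""
    return math.exp(kappa * (math.cos(math.radians(d - d_template)) - 1.0))


def identity_signal(stim, template):
    """Additive pooling of color and direction evidence (linear, feed-forward stage)."""
    return color_similarity(stim[0], template[0]) + direction_similarity(stim[1], template[1])


def match_signal(stim, template):
    """Hypothetical comparison stage: conjunctive comparison with the remembered
    template, high only if BOTH features agree with it."""
    return color_similarity(stim[0], template[0]) * direction_similarity(stim[1], template[1])


def interaction(signal, template, other):
    """2x2 interaction contrast: response to the full match minus the additive
    prediction from stimuli sharing only the color or only the direction."""
    both = signal(template, template)
    color_only = signal((template[0], other[1]), template)
    direction_only = signal((other[0], template[1]), template)
    neither = signal(other, template)
    return both - (color_only + direction_only - neither)


SAMPLE_A = (0, 270)  # yellow dots moving downward (template held in working memory)
SAMPLE_B = (7, 90)   # red dots moving upward

print(round(interaction(identity_signal, SAMPLE_A, SAMPLE_B), 6))  # close to zero: additive
print(round(interaction(match_signal, SAMPLE_A, SAMPLE_B), 6))     # positive: super-additive
```

Any sufficiently conjunctive nonlinearity would produce the same qualitative contrast; the product is used here only for brevity.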

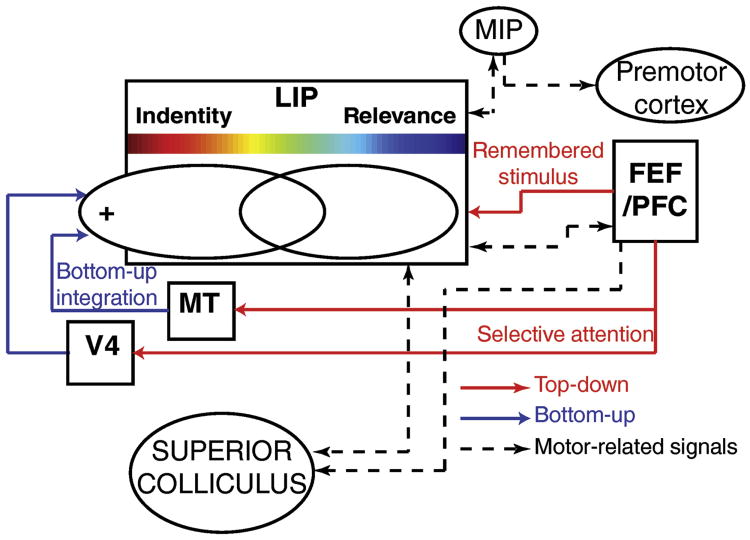

Figure 5.

Schematic of the LIP integrative comparative framework proposed in this review. LIP integrates bottom-up and top-down signals and informs several brain networks about the behavioral relevance of the observed stimuli.

Specifically, we distinguish between two routes of top-down signals: 1) top-down attentional modulation related to selecting and gating the bottom-up flow of sensory information (which is subsequently integrated and combined by LIP neurons); 2) top-down working-memory signals that inform LIP about the identity and visual features of the remembered sample stimulus. The most likely candidate sources for both types of top-down signals lie within the PFC/FEF networks (Miller and Cohen, 2001). FEF and PFC are directly connected to extrastriate visual areas and to LIP. FEF/PFC have been proposed to explicitly control voluntary attention by modulating the activity of visual neurons via top-down projections (Armstrong et al., 2009; Bichot et al., 2015; Gregoriou et al., 2014; Ibos et al., 2013; Lennert and Martinez-Trujillo, 2013; Moore and Armstrong, 2003; Zhou and Desimone, 2011). Moreover, decades of work have highlighted the role of PFC networks in encoding information held in working memory (Miller et al., 1996; for reviews, see Leavitt et al., 2017; Miller and Cohen, 2001).

We believe this model is helpful for conceptualizing LIP's position within the cortical hierarchy as a key intersection point for bottom-up sensory signals and top-down task-related and mnemonic signals. It can potentially account for much of the data presented in this article. The model provides a mechanism by which both bottom-up connectivity and contextual demands shape LIP selectivity for spatial and non-spatial visual features. Importantly, the model proposes that selectivity for both spatial and non-spatial features of sensory stimuli in LIP reflects similar integrative mechanisms. Specifically, we propose that LIP sensory selectivity (for both spatial and non-spatial features) reflects linear integration of the bottom-up flow of sensory information. In this framework, the spatial and non-spatial tuning of LIP neurons is strongly task dependent and is shaped by top-down attentional signals (presumably originating in PFC) that gate the bottom-up flow of sensory signals. This model therefore accounts for LIP selectivity for the exogenous salience of visual stimuli (Arcizet et al., 2011; Gottlieb et al., 1998), for context-dependent modulations of LIP spatial RFs (Ben Hamed et al., 2002), and for context-dependent non-spatial feature encoding (such as color (Ibos and Freedman, 2014, 2016; Toth and Assad, 2002), motion direction (Fanini and Assad, 2009; Ibos and Freedman, 2014, 2016) or shape (Fitzgerald et al., 2011; Sereno and Maunsell, 1998)). In addition, this model could potentially account for the selectivity of LIP neurons for spatial and non-spatial features during cue (or sample, depending on the task design) presentation in different task protocols (Fitzgerald et al., 2011; Freedman and Assad, 2006; Ibos et al., 2013). 
For example, before the presentation of the sample during the DCM task (Ibos and Freedman, 2014, 2016, 2017), monkeys knew they had to discriminate between yellow dots moving downward and red dots moving upward. Voluntary attention at this point is limited to these specific features (yellow, red, upward and downward motion). Physiologically, this could correspond to top-down signals targeting and modulating populations of V4 and MT neurons tuned to these features. This model thus incorporates recent findings regarding the roles of LIP and FEF during voluntary and involuntary (reflexive) deployment of attention (Astrand et al., 2015; Buschman and Miller, 2007; Ibos et al., 2013). In these studies, and consistent with our model, exogenous markers of attention rely exclusively on the bottom-up flow of sensory information and are therefore predominantly expressed in LIP rather than FEF. Finally, reversibly inactivating LIP during overt and covert visual search tasks led to larger behavioral deficits when monkeys searched for conjunctions of visual features than in simpler versions of the same tasks (Wardak et al., 2002, 2004). This pattern of behavioral deficits supports the integrative comparative framework, which proposes that LIP plays an important role in grouping representations of attended visual features. Such attention-dependent grouping of visual features was hypothesized by the feature integration theory of attention (Treisman and Gelade, 1980).

This model also accounts for how LIP neurons express selectivity for behaviorally relevant stimuli, since the computations leading to their encoding are post-attentive and related to the monkeys' decisions. Compared to previous theoretical frameworks, such as the priority map hypothesis (Bisley and Goldberg, 2003, 2010) discussed earlier, our model clarifies the role played by attention and the mechanisms by which it facilitates the evaluation of sensory stimuli and the decision-making process. In our framework, top-down modulations from LIP to visual cortical neurons (Saalmann et al., 2007) are therefore unlikely to represent pure attentional signals. Instead, they may support other cognitive processes such as conscious perception of the stimuli (visual awareness, i.e. the subjective sensation of sight), which is often difficult to dissociate from selective attention, although this is feasible under certain experimental conditions (Kentridge et al., 2008; Koivisto and Revonsuo, 2007). Visual awareness, which relies strongly on top-down signals (Wyart and Tallon-Baudry, 2008), has been linked to the parietal cortex. For example, patients with severe unilateral neglect following parietal cortex damage show evidence of unconscious processing of visual stimuli presented in the neglected field (Berti and Rizzolatti, 1992).

Effector-specific modulations in PPC

As proposed by the priority map hypothesis, the encoding of behavioral significance in LIP is thought to influence monkeys' behavior. This is supported by several studies showing that both intended saccadic eye movements (Gnadt and Andersen, 1988) and limb movements (Ibos and Freedman, 2017; Oristaglio et al., 2006) modulate LIP neuronal responses. For example, we showed in a recent study (Ibos and Freedman, 2017) that a population of LIP neurons was selective for match stimuli located either inside or outside their RFs (Figure 4E), consistent with the encoding of motor-preparation signals. This raises a crucial question about the mechanisms by which LIP acquires motor-related activity and the role this activity plays in decision-making. The integrative comparative framework incorporates the previously discussed proposal that motor-related selectivity in LIP reflects the integration of corollary discharge signals from areas more directly involved in driving movement (such as FEF or SC for eye movements, or MIP for hand movements). Given the strong interconnections between LIP, FEF and the SC (Gandhi and Katnani, 2011), it is worth comparing saccade-related signals in LIP with limb-movement-related signals in terms of their integration from, and projection toward, different motor-control networks. Recently, Snyder's group compared the effects of LIP inactivation on saccadic eye movements and manual reaching movements (Yttri et al., 2013). LIP inactivation slightly impaired saccadic behavior, whereas reaching behavior was impaired only when arm and eye movements were coupled, indicating that LIP selectivity for limb movements only weakly influences motor planning. Along with the results discussed in the Causal manipulations section of this article, this shows that LIP's influence on saccade control centers (FEF, SC, SEF…) is stronger than its influence on other effector-specific control centers. 
It also suggests that LIP encoding related to limb-movements may reflect integrative mechanisms from brain networks expressing selectivity for arm or hand movements (e.g. MIP). This highlights the importance of characterizing the networks and the mechanisms that route LIP decision-related signals toward different effector-specific motor-control networks in order to drive appropriate motor behavioral responses.

This raises the question of whether other areas, such as the medial (MIP/PRR), ventral (VIP) or anterior (AIP) parts of the parietal cortex, operate in a similar manner. For example, Janssen and Scherberger (2015) recently reviewed the function of AIP. Strikingly, AIP and LIP show a large degree of resemblance in the way they have been studied and described in earlier reports, particularly in their connectivity with occipital and frontal areas and in the properties of their neurons. AIP was first described as being strongly involved in the control of hand movements, especially grasping and three-dimensional manipulation of objects. Later studies divided AIP neurons into three classes: motor-related, visuo-motor and visual neurons. Similar to LIP (which is directly connected to both visual areas and FEF), AIP is strongly connected to visual cortical areas (such as inferior temporal cortex) and to frontal areas such as the ventral premotor area (PMv or F5). AIP has been proposed to be involved in the categorization of three-dimensional depth features through interactions with ventral stream cortical areas (Verhoef et al., 2015), in sensory-motor transformation (between visual cues and hand-reaching movements), and in action-related decision-making. In their review, Janssen and Scherberger proposed:

“[AIP is] at the center stage of motor preparation for grasping, where intentional, perceptual, and spatial object information converges for the generation of grasping movements. However, the exact nature of these processes and their underlying mechanisms are currently not well understood.”

This strong resemblance between LIP and AIP highlights the importance of understanding whether the model proposed in this review for LIP can be generalized to other PPC areas.

Concluding remarks

In this review, we presented evidence that PPC neurons encode a large variety of sensory, cognitive and motor-related signals across a wide range of behavioral contexts and tasks. We propose that PPC is a central interface in which visual, cognitive and motor-related signals converge and are integrated in order to highlight behaviorally relevant stimuli and to adaptively influence task-dependent motor control. Using LIP as a model, we have emphasized the importance of functionally dissecting sensory, cognitive and motor-related encoding within each PPC area. At the neurophysiological level, this means characterizing how afferent signals are integrated and locally computed, and how efferent signals target and influence specific networks depending on contextual demands. We believe that this line of investigation is a necessary step toward fully understanding how PPC mediates overt and covert behaviors. We hope that this framework will help guide our understanding and treatment of parietal damage and dysfunction, and perhaps extend beyond PPC to provide a more general understanding of interactions and computations within diverse cortical areas and networks.

Acknowledgments

We thank J.R. Duhamel, J.H. Maunsell, N. Masse and L. Goffard for discussions. We thank J. Assad and G. Masson for comments on the manuscript. We thank B. Peysakhovich for comments and edits on the manuscript. We thank C. Acuna for authorization to reproduce Figure 1.

Footnotes

Declaration of Interests: The authors declare no competing interests.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Andersen RA. Visual and Eye Movement Functions of the Posterior Parietal Cortex. Annu Rev Neurosci. 1989;12:377–403. doi: 10.1146/annurev.ne.12.030189.002113.

- Andersen RA, Zipser D. The role of the posterior parietal cortex in coordinate transformations for visual-motor integration. Can J Physiol Pharmacol. 1988;66:488–501. doi: 10.1139/y88-078.

- Andersen RA, Essick GK, Siegel RM. Encoding of spatial location by posterior parietal neurons. Science. 1985;230:456–458. doi: 10.1126/science.4048942.

- Andersen RA, Snyder LH, Bradley DC, Xing J. Multimodal representation of space in the posterior parietal cortex and its use in planning movements. Annu Rev Neurosci. 1997;20:303–330. doi: 10.1146/annurev.neuro.20.1.303.

- Arcizet F, Mirpour K, Bisley JW. A pure salience response in posterior parietal cortex. Cereb Cortex. 2011;21:2498–2506. doi: 10.1093/cercor/bhr035.

- Armstrong KM, Moore T. Rapid enhancement of visual cortical response discriminability by microstimulation of the frontal eye field. Proc Natl Acad Sci U S A. 2007;104:9499–9504. doi: 10.1073/pnas.0701104104.

- Armstrong KM, Chang MH, Moore T. Selection and maintenance of spatial information by frontal eye field neurons. J Neurosci. 2009;29:15621–15629. doi: 10.1523/JNEUROSCI.4465-09.2009.

- Astrand E, Ibos G, Duhamel JR, Ben Hamed S. Differential Dynamics of Spatial Attention, Position, and Color Coding within the Parietofrontal Network. J Neurosci. 2015;35:3174–3189. doi: 10.1523/JNEUROSCI.2370-14.2015.

- Balan PF, Gottlieb J. Functional significance of nonspatial information in monkey lateral intraparietal area. J Neurosci. 2009;29:8166–8176. doi: 10.1523/JNEUROSCI.0243-09.2009.

- Barash S, Bracewell RM, Fogassi L, Gnadt JW, Andersen RA. Saccade-related activity in the lateral intraparietal area. I. Temporal properties; comparison with area 7a. J Neurophysiol. 1991a;66:1095–1108. doi: 10.1152/jn.1991.66.3.1095.

- Barash S, Bracewell RM, Fogassi L, Gnadt JW, Andersen RA. Saccade-related activity in the lateral intraparietal area. II. Spatial properties. J Neurophysiol. 1991b;66:1109–1124. doi: 10.1152/jn.1991.66.3.1109.

- Berti A, Rizzolatti G. Visual Processing without Awareness: Evidence from Unilateral Neglect. J Cogn Neurosci. 1992;4:345–351. doi: 10.1162/jocn.1992.4.4.345.

- Bichot NP, Heard MT, DeGennaro EM, Desimone R. A Source for Feature-Based Attention in the Prefrontal Cortex. Neuron. 2015;88:832–844. doi: 10.1016/j.neuron.2015.10.001.

- Bisley JW, Goldberg ME. Neuronal activity in the lateral intraparietal area and spatial attention. Science. 2003;299:81–86. doi: 10.1126/science.1077395.

- Bisley JW, Goldberg ME. Attention, intention, and priority in the parietal lobe. Annu Rev Neurosci. 2010;33:1–21. doi: 10.1146/annurev-neuro-060909-152823.

- Bruce CJ, Goldberg ME, Bushnell MC, Stanton GB. Primate frontal eye fields. II. Physiological and anatomical correlates of electrically evoked eye movements. J Neurophysiol. 1985;54:714–734. doi: 10.1152/jn.1985.54.3.714.

- Buschman TJ, Miller EK. Top-down versus bottom-up control of attention in the prefrontal and posterior parietal cortices. Science. 2007;315:1860–1862. doi: 10.1126/science.1138071.

- Bushnell MC, Goldberg ME, Robinson DL. Behavioral enhancement of visual responses in monkey cerebral cortex. I. Modulation in posterior parietal cortex related to selective visual attention. J Neurophysiol. 1981;46:755–772. doi: 10.1152/jn.1981.46.4.755.

- Chafee MV, Goldman-Rakic PS. Inactivation of parietal and prefrontal cortex reveals interdependence of neural activity during memory-guided saccades. J Neurophysiol. 2000;83:1550–1566. doi: 10.1152/jn.2000.83.3.1550.

- Colby CL, Duhamel JR. Spatial representations for action in parietal cortex. Brain Res Cogn Brain Res. 1996;5:105–115. doi: 10.1016/s0926-6410(96)00046-8.

- Colby CL, Goldberg ME. Space and attention in parietal cortex. Annu Rev Neurosci. 1999;22:319–349. doi: 10.1146/annurev.neuro.22.1.319.

- David SV, Hayden BY, Mazer JA, Gallant JL. Attention to stimulus features shifts spectral tuning of V4 neurons during natural vision. Neuron. 2008;59:509–521. doi: 10.1016/j.neuron.2008.07.001.

- Desmurget M, Sirigu A. Conscious motor intention emerges in the inferior parietal lobule. Curr Opin Neurobiol. 2012;22:1004–1011. doi: 10.1016/j.conb.2012.06.006.

- Desmurget M, Sirigu A. Revealing humans' sensorimotor functions with electrical cortical stimulation. Philos Trans R Soc B Biol Sci. 2015;370:20140207. doi: 10.1098/rstb.2014.0207.

- Desmurget M, Reilly KT, Richard N, Szathmari A, Mottolese C, Sirigu A. Movement Intention After Parietal Cortex Stimulation in Humans. Science. 2009;324:811–813. doi: 10.1126/science.1169896.

- Dias EC, Kiesau M, Segraves MA. Acute activation and inactivation of macaque frontal eye field with GABA-related drugs. J Neurophysiol. 1995;74:2744–2748. doi: 10.1152/jn.1995.74.6.2744.

- Duffy FH, Burchfiel JL. Somatosensory system: organizational hierarchy from single units in monkey area 5. Science. 1971;172:273–275. doi: 10.1126/science.172.3980.273.

- Duhamel JR, Colby CL, Goldberg ME. The updating of the representation of visual space in parietal cortex by intended eye movements. Science. 1992;255:90–92. doi: 10.1126/science.1553535.

- Engel TA, Chaisangmongkon W, Freedman DJ, Wang XJ. Choice-correlated activity fluctuations underlie learning of neuronal category representation. Nat Commun. 2015;6:6454. doi: 10.1038/ncomms7454.

- Fanini A, Assad JA. Direction selectivity of neurons in the macaque lateral intraparietal area. J Neurophysiol. 2009;101:289–305. doi: 10.1152/jn.00400.2007.

- Ferrier D. The Croonian Lectures on Cerebral Localisation. Br Med J. 1890;1:1349–1355. doi: 10.1136/bmj.1.1537.1349.

- Fetsch CR, Kiani R, Shadlen MN. Predicting the Accuracy of a Decision: A Neural Mechanism of Confidence. Cold Spring Harb Symp Quant Biol. 2014;79:185–197. doi: 10.1101/sqb.2014.79.024893.

- Filimon F, Philiastides MG, Nelson JD, Kloosterman NA, Heekeren HR. How embodied is perceptual decision making? Evidence for separate processing of perceptual and motor decisions. J Neurosci. 2013;33:2121–2136. doi: 10.1523/JNEUROSCI.2334-12.2013.

- Fitzgerald JK, Freedman DJ, Assad JA. Generalized associative representations in parietal cortex. Nat Neurosci. 2011;14:1075–1079. doi: 10.1038/nn.2878.

- Fleming JFR, Crosby EC. The parietal lobe as an additional motor area. The motor effects of electrical stimulation and ablation of cortical areas 5 and 7 in monkeys. J Comp Neurol. 1955;103:485–512. doi: 10.1002/cne.901030306.

- Freedman DJ, Assad JA. Experience-dependent representation of visual categories in parietal cortex. Nature. 2006;443:85–88. doi: 10.1038/nature05078.

- Freedman DJ, Assad JA. A proposed common neural mechanism for categorization and perceptual decisions. Nat Neurosci. 2011;14:143–146. doi: 10.1038/nn.2740.

- Freedman DJ, Assad JA. Neuronal Mechanisms of Visual Categorization: An Abstract View on Decision Making. Annu Rev Neurosci. 2016;39:129–147. doi: 10.1146/annurev-neuro-071714-033919.

- Fuster JM, Jervey JP. Inferotemporal neurons distinguish and retain behaviorally relevant features of visual stimuli. Science. 1981;212:952–955. doi: 10.1126/science.7233192.