Abstract

With an increasing focus on biomarkers in dementia research, illustrating the role of neuropsychological assessment in detecting mild cognitive impairment (MCI) and Alzheimer’s dementia (AD) is important. This systematic review and meta-analysis, conducted in accordance with PRISMA (Preferred Reporting Items for Systematic reviews and Meta-Analyses) standards, summarizes the sensitivity and specificity of memory measures in individuals with MCI and AD. Both meta-analytic and qualitative examination of AD versus healthy control (HC) studies (n = 47) revealed generally high sensitivity and specificity (≥ 80% for AD comparisons) for measures of immediate (sensitivity = 87%, specificity = 88%) and delayed memory (sensitivity = 89%, specificity = 89%), especially those involving word-list recall. Examination of MCI versus HC studies (n = 38) revealed generally lower diagnostic accuracy for both immediate (sensitivity = 72%, specificity = 81%) and delayed memory (sensitivity = 75%, specificity = 81%). Measures that differentiated AD from other conditions (n = 10 studies) yielded mixed results, with generally high sensitivity in the context of low or variable specificity. Results confirm that memory measures have high diagnostic accuracy for identification of AD, are promising but require further refinement for identification of MCI, and provide support for ongoing investigation of neuropsychological assessment as a cognitive biomarker of preclinical AD. Emphasizing diagnostic test accuracy statistics over null hypothesis testing in future studies will promote the ongoing use of neuropsychological tests as Alzheimer’s disease research and clinical criteria increasingly rely upon cerebrospinal fluid (CSF) and neuroimaging biomarkers.

Keywords: Alzheimer’s disease, Mild cognitive impairment, Neuropsychological testing, Memory, Sensitivity and specificity, Meta-analysis

Introduction

Neuropsychological testing is relatively inexpensive and has demonstrated sensitivity to dementia, mild cognitive impairment (MCI), and early preclinical stages of Alzheimer’s disease (AD). Only recently has neuropsychological testing been clearly listed as an important component of the diagnostic work-up for AD and MCI by the National Institute on Aging and Alzheimer’s Association work groups (NIA-AA; Albert et al. 2011 for MCI; McKhann et al. 2011 for AD) and for diagnosis of Major and Mild Neurocognitive Disorder (comparable to dementia and MCI, respectively) in the DSM-5 (American Psychiatric Association 2013). However, despite the recognized importance and clear utility of neuropsychological testing (Bondi and Smith 2014), it is not a required component for diagnosis of AD or MCI in these newly revised diagnostic systems (Albert et al. 2011; American Psychiatric Association 2013; McKhann et al. 2011). As with neuropsychological testing, recent diagnostic systems have also incorporated biomarkers into updated consensus criteria for diagnosis of MCI due to AD and preclinical AD (Albert et al. 2011; McKhann et al. 2011; Sperling et al. 2011). However, the core clinical diagnostic criteria for AD do not include biomarkers, and biomarkers are seen only as “complementary,” serving to increase confidence that the clinical syndrome is due to the AD pathophysiological process (Jack et al. 2011). In addition, the use of biomarkers in preclinical AD and MCI is specifically prescribed for research rather than clinical purposes (Albert et al. 2011; Sperling et al. 2011). Nevertheless, biomarkers are often viewed as compelling additions to diagnosis, and many clinical centers have adopted expensive and often invasive biomarker studies to aid in diagnosis of the AD pathological process, at times before ordering a neuropsychological assessment.
In addition, the “A/T/N” (amyloid, tau, and neurodegeneration/neuronal injury) system, a recently proposed descriptive classification scheme for AD biomarkers (Jack et al. 2016), does not include cognition. However, recent evidence suggests that subtle cognitive decline alone can herald later development of biomarker-positive states and of MCI or Alzheimer’s dementia (Edmonds et al. 2015b), and that cognitive differences are detectable in biomarker-positive cognitively normal individuals (Han et al. 2017). One purpose of the present review and meta-analysis is to highlight the utility of neuropsychological testing as an equally valuable and arguably more affordable, less invasive cognitive biomarker of AD.

An illustration of how neuropsychological testing meets suggested guidelines for a useful biomarker may help to consolidate the evidence for the continued role of neuropsychology in the clinical diagnostic work-up. In the first review to do so, Fields et al. (2011) broadly outlined how neuropsychological testing may offer unique value as a biomarker for dementia. The current systematic review and meta-analysis further illustrates the utility of neuropsychology as a biomarker of AD by reviewing studies that report the diagnostic accuracy of memory measures in MCI and Alzheimer’s dementia. To our knowledge, this is the first meta-analysis of the diagnostic accuracy of neuropsychological measures beyond cognitive screening measures.

A consensus report published in 1998 by The Ronald and Nancy Reagan Research Institute of the Alzheimer’s Association and the National Institute on Aging Working Group on Molecular and Biochemical Markers of Alzheimer’s Disease (Growdon et al. 1998; hereafter the Consensus Workgroup) described the ideal features of a potential biomarker and operationalized criteria by which such markers can be evaluated. These criteria include recommended ranges for sensitivity and specificity; most simply, sensitivity and specificity should each be no less than 80% in at least two independent studies distinguishing patients with probable AD from normal control subjects. Once this level of diagnostic accuracy is demonstrated, further application in patients with possible AD or preclinical AD would be warranted. The consensus report also highlights that biomarkers can serve various purposes, including diagnosis, screening, predicting conversion, monitoring disease progression, and detecting response to treatment. The value of any given biomarker may vary across these applications, and the more useful a biomarker is across settings, the higher its general value (see Fields et al. 2011 for a thorough discussion of how neuropsychological testing can serve most of these roles).
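The Consensus Workgroup's minimum standard can be written as a simple decision rule. The following Python sketch is illustrative only: `StudyResult` and `meets_consensus_criterion` are hypothetical names, proportions are on a 0 to 1 scale, and "independent" studies are approximated by distinct study identifiers, which does not by itself rule out overlapping samples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StudyResult:
    """Hypothetical record of one AD-vs-control comparison (proportions 0-1)."""
    study_id: str
    sensitivity: float
    specificity: float


def meets_consensus_criterion(results, threshold=0.80, min_studies=2):
    """Return True if at least `min_studies` distinct studies report both
    sensitivity and specificity at or above `threshold`.

    Sketch of the 1998 Consensus Workgroup rule; distinct study_id values
    stand in for "independent" studies.
    """
    qualifying = {
        r.study_id
        for r in results
        if r.sensitivity >= threshold and r.specificity >= threshold
    }
    return len(qualifying) >= min_studies


# Hypothetical values, not taken from the reviewed studies.
example = [
    StudyResult("study_A", 0.87, 0.88),
    StudyResult("study_B", 0.91, 0.82),
    StudyResult("study_C", 0.95, 0.70),
]
print(meets_consensus_criterion(example))  # True (study_A and study_B qualify)
```

Study C fails on specificity, so the rule rests on the two remaining studies; dropping either of them would make the criterion fail.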

Although several reviews and meta-analyses have summarized diagnostic test accuracy statistics for the most commonly reported AD biomarkers, to our knowledge there has not been a review of diagnostic test accuracy statistics for neuropsychological measures. In fact, the relative lack of studies reporting diagnostic accuracy statistics was highlighted by Ivnik et al. (2000), who described it as a valid criticism of neuropsychology (raised in the 1996 report of the Neuropsychological Assessment Panel of the American Academy of Neurology’s Therapeutics and Technology Assessment Subcommittee). This gap in the literature was due mainly to an early over-reliance on null hypothesis testing and the unfortunate omission of diagnostic test accuracy statistics. The paucity of neuropsychological research and test manuals that include information about diagnostic validity is well recognized (Therapeutics and Technology Assessment Subcommittee of AAN 1996; Chelune 2010; Ivnik et al. 2000). This early focus on null hypothesis testing has left much of the prior research demonstrating the utility of neuropsychology in the assessment of dementia and MCI inapplicable at the individual clinical level, because a significant group difference in mean scores does not tell a clinician how accurately a given score classifies an individual patient. Fortunately, more studies have recently begun to include diagnostic test accuracy statistics, although these studies have yet to be summarized within one review.

The overall objective of this systematic review and meta-analysis was to evaluate the sensitivity and specificity of memory measures in individuals with MCI and AD. We hypothesized that the diagnostic accuracy of memory measures for studies comparing individuals with AD and healthy controls (HC) would meet the minimum criteria put forth by the 1998 Consensus Workgroup.

Method

This review was conducted in accordance with the Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) guidelines (Moher et al. 2009). Although a review protocol was not registered prospectively, the primary objectives and methods were specified in advance. Meta-analyses were conducted whenever appropriate, and qualitative reviews were provided on measures of memory that were less widely reported or conceptually heterogeneous.

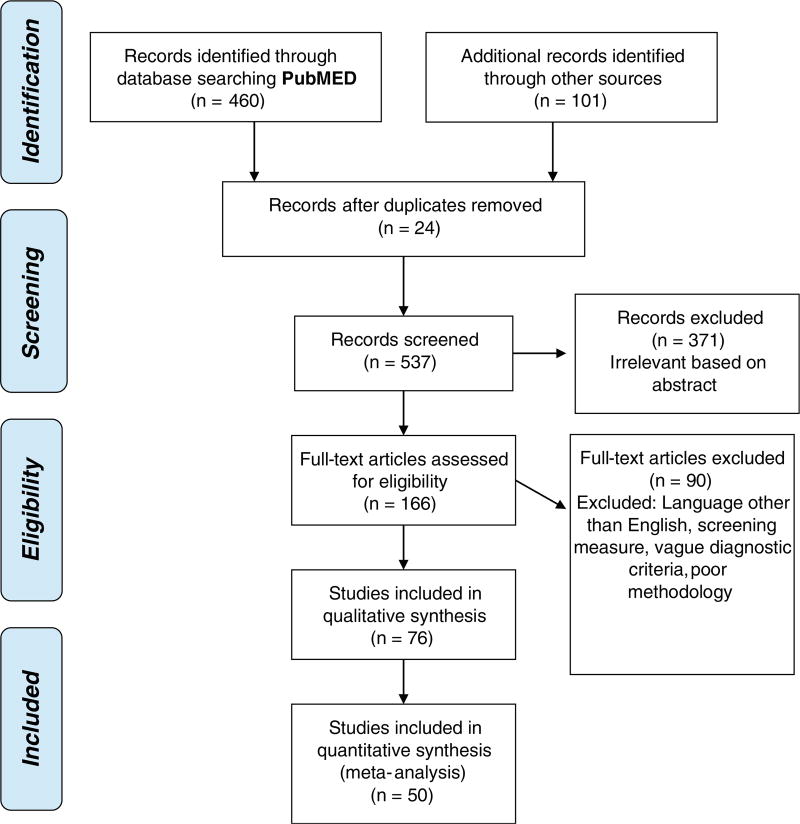

Articles to be considered for systematic review and meta-analysis were identified through a PubMed/MEDLINE search for studies that report diagnostic accuracy statistics for neuropsychological measures of memory in mild cognitive impairment (MCI) or Alzheimer’s disease (AD). The search terms were ([Neuropsychological Tests] OR [Neuropsychology]) AND ([Alzheimer] OR [Mild Cognitive Impairment]) AND ([sensitivity] OR [specificity] OR [ROC]). We identified and reviewed studies published before the date of our online database search (April 26, 2017) that included information regarding the diagnostic accuracy of neuropsychological measures; often, this was not the primary objective of the study. Additional studies were identified through other sources, including prior knowledge of studies, additional PubMed/MEDLINE searches outside the above search parameters, and review of references during screening. Because the vast majority of studies focused on memory, and to limit the scope of this review, studies were included only if they reported an episodic memory measure. Some studies, particularly those with more complicated methodology or results, were reviewed by two or three reviewers (GW, JS, NS), whereas most studies were selected and reviewed by one reviewer (GW or JS). See Fig. 1 for a flow diagram describing the number of studies screened and meeting inclusion criteria.

Fig. 1.

Final number of studies meeting inclusionary criteria based on PRISMA (Preferred Reporting Items for Systematic reviews and Meta-Analyses) 2009 standards

Information on sensitivity and specificity was either extracted directly from the studies by the reviewers or calculated from 2 × 2 tables of true and false positives and negatives, when these data were presented. The extracted or calculated information is presented in the Online Resources (Tables i–iii). These tables list the author(s), year of publication, sample sizes for all groups, name of the memory measure or neuropsychological test, sensitivity and specificity values, the cutoff value (if reported), whether the study used the test of interest in the diagnostic evaluation, and whether the study reported 2 × 2 data. Studies were excluded if they did not report sensitivity and specificity or sufficient information to calculate these statistics. We also excluded studies if 1) widely accepted diagnostic criteria for MCI or AD were not implemented (for AD: McKhann et al. 1984; McKhann et al. 2011; American Psychiatric Association 2000; for MCI: Albert et al. 2011; Petersen 2007; Petersen and Kanow 2001; Petersen 2004; Petersen et al. 2001; Petersen et al. 1999; Portet et al. 2006; Winblad et al. 2004), 2) sample characteristics could not be determined from the information provided or the sample was heterogeneous (e.g., inclusion of comorbid neurological conditions in MCI or dementia samples, such as Parkinson’s disease, that did not allow clear separation of results by suspected etiology), 3) a measure of episodic memory was not included, 4) the methodology was ambiguous or insufficiently specified (for example, the specific neuropsychological measures used were reported vaguely, or the statistical analyses or results were unclear), or 5) the study was published in a language other than English.
In addition, studies investigating the diagnostic accuracy of screening measures were excluded, as the diagnostic validity of these measures for dementia has been reported previously (see Lin et al. 2013) and screening measures were viewed as beyond the scope of the current review. We included only studies that provided diagnostic accuracy statistics for scores reflecting memory, excluding several studies reporting combined scores of memory and other cognitive domains (e.g., naming and memory scores combined). In some cases, studies used the test under investigation as part of the diagnostic criteria used to classify their sample (“incorporation bias”; see Noel-Storr et al. 2014). Although this circularity introduces bias and may overestimate the value of the diagnostic test (Noel-Storr et al. 2014), we chose to include these articles and identified them in the online resources (Tables i–iii). When feasible, we performed separate meta-analyses on studies of high and low quality to examine the potential influence of incorporation bias on results. Our criterion for “quality” was whether the measure of interest was used in the diagnosis (classified as “Yes”, “No”, or “Unclear” if the authors made no explicit statement as to whether the test was used in participant diagnosis).
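When a study reported a 2 × 2 table rather than sensitivity and specificity, the two statistics follow directly from the cell counts. A minimal Python sketch, assuming patients (AD or MCI) are the condition-positive group and healthy controls the condition-negative group; the function name and the counts in the example are hypothetical.

```python
def sens_spec_from_2x2(tp, fp, fn, tn):
    """Return (sensitivity, specificity) from 2 x 2 cell counts.

    tp: patients classified as impaired      fn: patients classified as unimpaired
    tn: controls classified as unimpaired    fp: controls classified as impaired
    """
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("both the patient and control groups need at least one case")
    sensitivity = tp / (tp + fn)  # proportion of patients correctly flagged
    specificity = tn / (tn + fp)  # proportion of controls correctly cleared
    return sensitivity, specificity


# Hypothetical counts: 45 patients (40 flagged) and 50 controls (44 cleared).
sens, spec = sens_spec_from_2x2(tp=40, fp=6, fn=5, tn=44)
print(f"sensitivity = {sens:.2f}, specificity = {spec:.2f}")
# sensitivity = 0.89, specificity = 0.88
```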

Two types of cutoffs were typically used for the reported sensitivity and specificity. Optimal cutoffs are study-specific derivations that provide the best balance between sensitivity and specificity; they are typically derived through receiver operating characteristic (ROC) analysis and expressed as raw scores. Conventional cutoffs are based on an accepted clinical standard (e.g., 1.5 standard deviations below the mean) derived from a test manual or other published normative data. If more than one conventional cutoff was reported in a study, we included the value with the best balance of sensitivity and specificity. Conventional cutoffs have the advantage of being more generalizable across studies and more easily applied in clinical settings, whereas optimal cutoffs maximize diagnostic accuracy regardless of whether the cutoff represents “impairment” in the clinical setting (e.g., many optimal cutoffs are less severe than the minimally accepted clinical cutoff of 1 standard deviation (SD) below the mean). Unfortunately, many studies did not report the specific value used as a cutoff. These studies were still included in the review and meta-analyses but are clearly noted in the online resources.
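The review does not state how individual studies defined the "best balance" for optimal cutoffs. Youden's J (sensitivity + specificity - 1) is one common choice and is assumed in the Python sketch below, which contrasts it with a conventional, norm-referenced cutoff. Function names, the score direction (lower = more impaired), and the example data are hypothetical.

```python
def youden_optimal_cutoff(patient_scores, control_scores):
    """Return (cutoff, sensitivity, specificity) maximizing Youden's J.

    Assumes lower memory scores indicate impairment, so a score <= cutoff
    is classified as impaired. Every observed score is tried as a cutoff;
    on exact ties, the lowest cutoff is kept.
    """
    best = None
    for cutoff in sorted(set(patient_scores) | set(control_scores)):
        sens = sum(s <= cutoff for s in patient_scores) / len(patient_scores)
        spec = sum(s > cutoff for s in control_scores) / len(control_scores)
        j = sens + spec - 1  # Youden's J
        if best is None or j > best[0]:
            best = (j, cutoff, sens, spec)
    _, cutoff, sens, spec = best
    return cutoff, sens, spec


def conventional_cutoff(norm_mean, norm_sd, z=-1.5):
    """Raw-score cutoff lying `z` SDs from a normative mean (default 1.5 SD below)."""
    return norm_mean + z * norm_sd


# Hypothetical raw scores on a word-list delayed recall test.
patients = [2, 3, 4, 5, 9]
controls = [6, 7, 8, 10, 11]
print(youden_optimal_cutoff(patients, controls))  # (5, 0.8, 1.0)
print(conventional_cutoff(norm_mean=10.0, norm_sd=2.0))  # 7.0
```

In this toy sample the sample-derived cutoff (5) is stricter than the norm-referenced one (7); in real studies the optimal cutoff is often more lenient than a conventional impairment threshold, as the text notes.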

Qualitative Review

Types of memory measures were divided into four categories to better evaluate patterns across studies: Immediate, Delayed, Associative Learning, and Other. “Immediate memory” was operationalized as recall of information directly following presentation of stimuli, such that measures using a distraction task or several minutes of delay before recall were included under “delayed memory.” Associative learning tasks require participants to bind together stimulus pairs (e.g., word pairs, object and location). This is distinguished from tasks that use higher-order category cues to assist in encoding or retrieval (e.g., selective reminding tasks, or SRT). We included both verbal and visual associative learning tasks in this category, as well as measures of short-term visual memory binding (Parra et al. 2010; Parra et al. 2011). The “other” category was used for recognition memory, combined scores (e.g., an immediate recall score combined with a yes/no recognition score), interference scores, and other miscellaneous tests or indices. Interference (e.g., on the Fuld Object Memory Evaluation; Loewenstein et al. 2004) refers to recall of an item or word that was not on the original list of presented stimuli. To compare the diagnostic accuracy of different types of memory measures, we often report several memory measures from the same study.

For both the immediate and delayed categories, studies were further subdivided into verbal list free recall, verbal list cued recall or selective reminding, story free recall, visual free recall, retention (Delayed only), and “other.” Free recall measures asked individuals to reproduce to-be-remembered information (e.g., a list, visual stimuli, or a story) from memory without any cues. Measures of cued recall and combined measures of free and cued recall using a selective reminding paradigm¹ were included within the same division. Retention is the percent savings: the number of words or items recalled on delay divided by the maximum number of words or items learned. Recognition tasks included yes/no recognition (e.g., “Was car on the list you heard earlier?”) and forced-choice recognition (e.g., “Was car or banana on the list you heard earlier?”). Some studies included in the verbal memory section supplemented auditory stimuli with visual stimuli (e.g., presenting written words as they are read aloud, or a picture corresponding to a to-be-learned word). Visual memory tasks included those with simple or complex geometric shapes, as well as route or map learning tasks.
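The retention (percent savings) score reduces to a single ratio. A minimal Python sketch, assuming the denominator is the best learning-trial score (individual tests may instead use, for example, the last learning trial); the function name and scores are hypothetical.

```python
def retention_percent(learning_trials, delayed_recall):
    """Percent retention (savings) for a list-learning test.

    Assumes "maximum number learned" means the best score across the
    learning trials; some tests define the denominator differently.
    """
    max_learned = max(learning_trials)
    if max_learned <= 0:
        raise ValueError("at least one item must be learned")
    return 100.0 * delayed_recall / max_learned


# Hypothetical scores: three learning trials, then delayed recall.
print(retention_percent([5, 7, 8], delayed_recall=6))  # 75.0
```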

For the qualitative review of studies, we used the minimum cutoff of 80% sensitivity and specificity suggested by the Consensus Workgroup (1998) for differentiating AD from HCs and from other dementias. One limitation of our qualitative observations is that direct comparisons across studies are generally confounded by varying methods and sample characteristics, which prohibits strong conclusions about which measures are most sensitive to AD. However, general patterns are discussed, and where a single published study directly examined two or more memory measures, we comment on this comparison as appropriate. Studies also inconsistently reported cutoffs based on a conventional or an optimal cut point, further complicating direct comparison of diagnostic accuracy statistics across studies.

Data Synthesis and Meta-Analysis

Meta-analyses were performed for immediate and delayed memory when an adequate number of studies was available (a minimum of three studies per test type was deemed sufficient). Note that for the meta-analyses performed here using a single dependent variable (rather than several dependent variables considered jointly), having fewer than five studies places important limits on the validity of the meta-analysis; for example, at k = 3 it is not possible to compute a rho correlation coefficient to assess potential threshold effects. Some studies listed in Tables i and iii were not included in the meta-analyses because of concern about duplication of participants. For example, a substantial proportion of the data reported by Chapman et al. (2016) is probably already represented by other studies, as it is drawn from the National Alzheimer’s Coordinating Center (NACC) database. The Associative Learning and Other categories were not included in meta-analyses because of the heterogeneity of measures. All meta-analyses were performed using the R package ‘mada,’ designed specifically for meta-analysis of diagnostic accuracy data (Doebler 2015; Doebler and Holling 2012; Schwarzer et al. 2015). Using the sensitivity and specificity values for each test, contingency data for each study (true positives, false positives, false negatives, and true negatives) were computed in Microsoft Excel. Contingency data were rounded to whole numbers (≥ 0.5 rounded up, < 0.5 rounded down). The contingency data and k were then entered into R to perform the meta-analyses.
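The back-calculation of contingency data was done in Microsoft Excel; the Python sketch below shows equivalent arithmetic under stated assumptions. It assumes the patient and control group sizes are known, applies the half-up rounding rule given in the text, and derives false negatives and false positives by subtraction so that group totals are preserved, which may differ slightly from rounding every cell independently. Function names and numbers are hypothetical.

```python
import math


def round_half_up(x):
    """Round to the nearest whole number, with .5 rounded up (>= .5 up, < .5 down).

    Python's built-in round() rounds halves to the nearest even number
    (round(30.5) == 30), so it does not follow this rule.
    """
    return math.floor(x + 0.5)


def contingency_from_accuracy(sensitivity, specificity, n_patients, n_controls):
    """Back-calculate TP, FP, FN, TN from sensitivity, specificity and group sizes."""
    tp = round_half_up(sensitivity * n_patients)
    tn = round_half_up(specificity * n_controls)
    # Deriving FN and FP by subtraction keeps each group total fixed.
    return {"TP": tp, "FP": n_controls - tn, "FN": n_patients - tp, "TN": tn}


# Hypothetical study: sensitivity 50% in 61 patients, specificity 75% in 50 controls.
print(contingency_from_accuracy(0.50, 0.75, 61, 50))
# {'TP': 31, 'FP': 12, 'FN': 30, 'TN': 38}
```

Because sensitivities are usually reported to two decimals, a product that should land exactly on .5 can be stored slightly below it in floating point; rounding reported percentages consistently matters more than the choice of tool.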

Table 1.

Meta-analyses of immediate recall measures for Alzheimer’s disease vs. healthy controls

| Univariate Analysis | Immediate Recall Measures | Immediate List Free Recall | Immediate List Cued/Selective Reminding | Immediate Story Free Recall | Immediate Visual Free Recall |
|---|---|---|---|---|---|
| k | 26 | 17 | 7 | 3 | 4 |
| Equality of sensitivities | χ2(25) = 114.84 p. < .0001 | χ2(16) = 75.44 p. < .0001 | χ2(6) = 19.72 p. = .003 | χ2(2) = 3.33 p. = .189 | χ2(3) = .95 p. = .812 |
| Equality of specificities | χ2(25) = 104.10 p. < .0001 | χ2(16) = 53.30 p. < .0001 | χ2(6) = 14.56 p. = .024 | χ2(2) = 6.59 p. = .037 | χ2(3) = 11.33 p. = .010 |
| Rho (Se and false positive rate correlation) (95% CI) | −.53 (−.76, −.18) | −.09 (−.55, .41) | −.93 (−.99, −.61) | NA | −.85 (−1.0, .61) |
| DOR (95% CI) | 56.18 (35.63, 88.58) | 55.98 (35.38, 88.58) | 95.31 (26.76, 339.45) | 7.29 (2.70, 19.71) | 105.45 (32.30, 344.25) |
| Cochran’s Q | Q(25) = 31.57 p. = .171 | Q(16) = 18.12 p. = .317 | Q(6) = 6.06 p. = .417 | Q(2) = 2.18 p. = .335 | Q(3) = 2.74 p. = .434 |
| Tau (95% CI) | .97 (0.00, 1.46) | .74 (0.00, 1.31) | 1.46 (0.00, 3.42) | .75 (0.00, 5.80) | .81 (0.00, 4.43) |
| Tau-squared (95% CI) | .95 (0.00, 2.13) | .55 (0.00, 1.72) | 2.13 (0.00, 11.68) | .57 (0.00, 33.68) | .65 (0.00, 19.61) |
| Meta-analysis | | | | | |
| Sensitivity (95% CI) | .87 (.83, .90) | .87 (.83, .90) | .87 (.78, .93) | .71 (.61, .78) | .92 (.86, .96) |
| Specificity (95% CI) | .88 (.85, .90) | .88 (.85, .91) | .93 (.87, .97) | .75 (.58, .86) | .90 (.78, .95) |

Table 3.

Meta-analyses of delayed recall measures for Alzheimer’s disease vs. healthy controls

| Univariate Analysis | Delayed Recall Measures | Delayed List Free Recall | Delayed List Cued/Selective Reminding | Delayed List Retention | Delayed Visual Free Recall | Delayed Story Free Recall |
|---|---|---|---|---|---|---|
| k | 27 | 16 | 10 | 5 | 6 | 4 |
| Equality of sensitivities | χ2(26) = 63.40 p. < .0001 | χ2(15) = 38.54 p. < .001 | χ2(9) = 19.62 p. = .020 | χ2(4) = 35.40 p. < .0001 | χ2(5) = 5.06 p. = .409 | χ2(3) = 13.12 p. = .004 |
| Equality of specificities | χ2(26) = 77.89 p. < .0001 | χ2(15) = 26.71 p. = .031 | χ2(9) = 14.46 p. = .107 | χ2(4) = 9.61 p. = .048 | χ2(5) = 5.25 p. = .386 | χ2(3) = 20.87 p. < .001 |
| Rho (Se and false positive rate correlation) (95% CI) | −.38 (−.66, .00) | −.44 (−.77, .07) | −.68 (−.92, −.08) | .46 (−.71, .95) | .11 (−.77, .85) | −.69 (−.99, .80) |
| DOR (95% CI) | 78.41 (51.32, 119.80) | 69.35 (42.33, 113.60) | 146.01 (60.21, 354.08) | 27.40 (16.90, 44.42) | 56.54 (35.21, 90.79) | 113.47 (24.42, 527.14) |
| Cochran’s Q | Q(26) = 24.26 p. = .561 | Q(15) = 15.75 p. = .399 | Q(9) = 5.84 p. = .756 | Q(4) = 5.57 p. = .233 | Q(5) = 4.47 p. = .484 | Q(3) = 3.44 p. = .329 |
| Tau (95% CI) | .88 (0.00, .95) | .75 (0.00, 1.32) | 1.12 (0.00, 1.43) | .35 (0.00, 2.94) | .00 (0.00, 2.11) | 1.43 (0.00, 6.08) |
| Tau-squared (95% CI) | .77 (0.00, .91) | .56 (0.00, 1.74) | 1.25 (0.00, 2.05) | .13 (0.00, 8.65) | .00 (0.00, 4.46) | 2.05 (0.00, 36.99) |
| Meta-analysis | | | | | | |
| Sensitivity (95% CI) | .89 (.87, .91) | .90 (.86, .92) | .91 (.87, .94) | .84 (.73, .91) | .86 (.82, .89) | .93 (.84, .98) |
| Specificity (95% CI) | .89 (.87, .91) | .87 (.84, .89) | .92 (.88, .95) | .81 (.77, .84) | .88 (.85, .91) | .89 (.79, .94) |

Univariate Analysis

Equality of sensitivity and specificity proportions across studies was examined by χ2 test. Sensitivity and specificity values depend on the cut-off used by each study: a more lenient cut-off (one that classifies more individuals as impaired) improves sensitivity but reduces specificity, whereas a more stringent cut-off reduces sensitivity but increases specificity. This trade-off, known as a threshold effect, is important to consider when meta-analyzing diagnostic test accuracy data, because cut-off thresholds are likely to vary between the included studies. Threshold effects were examined with the Spearman rho correlation between sensitivity and false positive rate (1 – specificity), with correlations ≥ 0.6 indicating potential threshold effects. These correlations are usually positive but can be negative. Diagnostic odds ratios (DORs) were pooled using the DSL method (DerSimonian and Laird random-effects; DerSimonian and Laird 1986). Coupled forest plots were also used to examine threshold effects; forest plots displaying an inverse relation between sensitivity and specificity (a V or inverted V pattern) indicate potential threshold effects. Where threshold effects are identified, interpretation should be based on descriptive analyses. Heterogeneity is indicated when the p value of the Q statistic falls below .10. However, this statistical criterion may be less appropriate for meta-analysis of diagnostic tests, which employ bivariate outcomes (sensitivity and specificity; Kim et al. 2015; Lee et al. 2015). Tau-squared quantifies the between-study variance, with a value of zero indicating minimal or no heterogeneity in the data.

Hierarchical Meta-Analyses

Following methods outlined by Kim et al. (2015) and Lee et al. (2015), we employed hierarchical methods, namely the bivariate and the Rutter and Gatsonis hierarchical summary receiver operating characteristic (HSROC) models, respectively. These are random-effects models in that they account for variance within studies as well as across studies. The use of such hierarchical methods is recommended (Lee et al. 2015) because they also account for the relationship between sensitivity and specificity, thereby directly addressing potential threshold effects. The two methods produce the same results when no covariates are considered. We fitted a bivariate random-effects model using restricted maximum likelihood (REML) estimation. Some studies reported sensitivity or specificity values of 100%, so their contingency data included cells of 0, which have been noted to undermine the statistical validity of the meta-analysis. We addressed this by adding a continuity correction of 0.5 (the default option) to each study where required (Doebler and Holling 2012).
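The continuity correction and the per-study quantities a bivariate model works with can be sketched as follows. This Python sketch only prepares inputs; fitting the bivariate model by REML requires dedicated software such as the `reitsma()` function in the R package mada, and this code is not that implementation. It assumes the correction is added only to studies with a zero cell (the text's "where required"); software defaults may differ. Function names and counts are hypothetical.

```python
import math


def apply_continuity_correction(tp, fp, fn, tn, correction=0.5):
    """Return the 2 x 2 cells as floats, adding `correction` to all four
    cells only if at least one cell is zero."""
    cells = (tp, fp, fn, tn)
    if 0 in cells:
        return tuple(c + correction for c in cells)
    return tuple(float(c) for c in cells)


def bivariate_inputs(tp, fp, fn, tn, correction=0.5):
    """Per-study data for a bivariate random-effects model: logit sensitivity,
    logit specificity, and their within-study (binomial) variances."""
    tp, fp, fn, tn = apply_continuity_correction(tp, fp, fn, tn, correction)
    return {
        "logit_sens": math.log(tp / fn),  # log odds of a true positive
        "var_logit_sens": 1 / tp + 1 / fn,
        "logit_spec": math.log(tn / fp),  # log odds of a true negative
        "var_logit_spec": 1 / tn + 1 / fp,
    }


# Hypothetical study reporting 100% sensitivity (no false negatives):
# without the correction, log(20 / 0) would fail.
print(bivariate_inputs(tp=20, fp=3, fn=0, tn=27))
```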

Studies were excluded if sensitivity and specificity values or 2 × 2 contingency data were missing, including those studies reporting a “set” cut-off value. For the main analyses (AD Immediate, AD Delayed, MCI Immediate, MCI Delayed), single studies reporting multiple data points were statistically combined to form a single synthetic score. Synthetic scores were computed using the hierarchical methods described above, irrespective of sample size. Because combining different types of immediate and delayed recall measures into single meta-analyses may create additional variability, we conducted a series of subsequent meta-analyses of specific subclasses of immediate recall (list free recall, list cued/selective reminding, story free recall, visual free recall) and of delayed recall (list free recall, list cued/selective reminding, list retention, story free recall, visual free recall). For subclass analyses in which a single study reported multiple data points, one data point from each study was selected rather than calculating a synthetic score. We selected the same measure as was used by the other studies in that meta-analysis or, where this was not possible, the measure with the closest construct similarity to the other measures in the meta-analysis.

Results

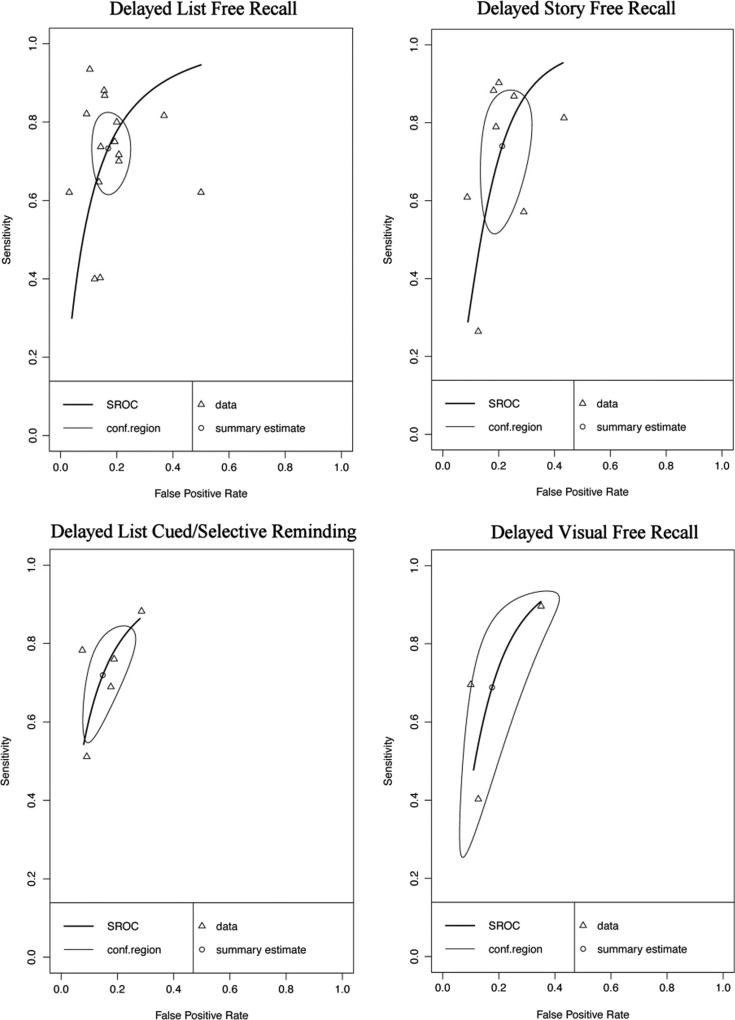

Descriptive (univariate) data and pooled estimates for AD and MCI can be found in Tables 1–3 and Tables 4–6, respectively. Overall, the series of meta-analyses indicates that while immediate and delayed memory measures have high diagnostic accuracy in identifying AD, their capacity to discriminate between individuals with MCI and healthy persons is adequate but lower. For all analyses, the summary receiver operating characteristic (SROC) plots sensitivity against the false positive rate (FPR = 1 – specificity). Careful inspection of the SROC curves for MCI indicates substantial heterogeneity in sensitivity and specificity values across studies. We review quantitative and qualitative results for each subgroup of analyses below.

Table 2.

Sensitivity analyses of Alzheimer’s disease vs. healthy control studies where the measure of interest was not used in participant diagnosis

| Univariate Analysis | Immediate Recall Measures | Delayed Recall Measures |
|---|---|---|
| k | 18 | 19 |
| Equality of sensitivities | χ2(17) = 56.30 p. < .0001 | χ2(18) = 47.67 p. < .001 |
| Equality of specificities | χ2(17) = 71.57 p. < .0001 | χ2(18) = 45.75 p. < .001 |
| Rho (Se and false positive rate correlation) (95% CI) | −.71 (−.88, −.36) | −.35 (−.69, .13) |
| DOR (95% CI) | 56.33 (30.03, 105.66) | 75.36 (44.77, 126.85) |
| Cochran’s Q | Q(17) = 16.74 p. = .472 | Q(18) = 15.53 p. = .625 |
| Tau (95% CI) | 1.16 (0.00, 1.49) | .91 (0.00, 1.06) |
| Tau-squared (95% CI) | 1.35 (0.00, 2.21) | .82 (0.00, 1.12) |
| Meta-analysis | | |
| Sensitivity (95% CI) | .86 (.82, .90) | .89 (.85, .91) |
| Specificity (95% CI) | .88 (.84, .92) | .89 (.86, .91) |

Table 4.

Meta-analyses of immediate recall measures for Mild Cognitive Impairment vs. healthy controls

| Univariate Analysis | Immediate Recall Measures | Immediate List Free Recall | Immediate Story Free Recall | Immediate List Cued/Selective Reminding |
|---|---|---|---|---|
| k | 17 | 13 | 6 | 3 |
| Equality of sensitivities | χ2(16) = 104.82 p. < .0001 | χ2(12) = 84.60 p. < .0001 | χ2(5) = 92.32 p. < .0001 | χ2(2) = 7.10 p. = .029 |
| Equality of specificities | χ2(16) = 74.25 p. < .0001 | χ2(12) = 56.41 p. < .0001 | χ2(5) = 29.98 p. < .0001 | χ2(2) = 2.40 p. = .301 |
| Rho (Se and false positive rate correlation) (95% CI) | .11 (−.39, .56) | .37 (−.23, .76) | .38 (−.63, .91) | NA |
| DOR (95% CI) | 11.19 (6.76, 18.53) | 12.76 (7.53, 21.64) | 8.55 (2.86, 25.54) | 14.26 (4.22, 48.26) |
| Cochran’s Q | Q(16) = 15.99 p. = .453 | Q(12) = 13.34 p. = .345 | Q(5) = 4.40 p. = .494 | Q(2) = 2.70 p. = .259 |
| Tau (95% CI) | .90 (0.00, 1.19) | .77 (0.00, 1.47) | 1.25 (0.00, 2.78) | .93 (0.00, 8.23) |
| Tau-squared (95% CI) | .82 (0.00, 1.41) | .59 (0.00, 2.16) | 1.57 (0.00, 7.71) | .87 (0.00, 67.79) |
| Meta-analysis | | | | |
| Sensitivity (95% CI) | .72 (.63, .79) | .72 (.62, .81) | .74 (.50, .89) | .74 (.54, .87) |
| Specificity (95% CI) | .81 (.75, .85) | .81 (.75, .86) | .74 (.60, .84) | .84 (.73, .90) |

Table 5.

Sensitivity analyses of Mild Cognitive Impairment vs. healthy control studies where the measure of interest was not used in participant diagnosis

| Univariate Analysis | Immediate Recall Measures | Delayed Recall Measures |
|---|---|---|
| k | 13 | 16 |
| Equality of sensitivities | χ2(12) = 87.65 p. < .0001 | χ2(15) = 133.69 p. < .0001 |
| Equality of specificities | χ2(12) = 48.96 p. < .0001 | χ2(15) = 71.99 p. < .0001 |
| Rho (Se and false positive rate correlation) (95% CI) | .08 (−.49, .60) | .04 (−.47, .52) |
| DOR (95% CI) | 11.69 (6.60, 20.70) | 14.53 (8.03, 26.29) |
| Cochran’s Q | Q(12) = 11.34 p. = .50 | Q(15) = 8.16 p. = .917 |
| Tau (95% CI) | .89 (0.00, 1.27) | 1.09 (0.00, .64) |
| Tau-squared (95% CI) | .80 (0.00, 1.61) | 1.18 (0.00, .41) |
| Meta-analysis | | |
| Sensitivity (95% CI) | .73 (.63, .81) | .76 (.68, .82) |
| Specificity (95% CI) | .80 (.75, .85) | .81 (.77, .85) |

Table 6.

Meta-analyses of delayed recall measures for Mild Cognitive Impairment vs. healthy controls

| Univariate Analysis | Delayed Recall Measures | Delayed List Free Recall | Delayed Story Free Recall | Delayed List Cued/Selective Reminding | Delayed Visual Recall |
|---|---|---|---|---|---|
| k | 22 | 15 | 8 | 5 | 3 |
| Equality of sensitivities | χ2(21) = 160.59 p. < .0001 | χ2(14) = 105.45 p. < .0001 | χ2(7) = 138.44 p. < .0001 | χ2(4) = 15.65 p. = .004 | χ2(2) = 40.64 p. < .0001 |
| Equality of specificities | χ2(21) = 93.22 p. < .0001 | χ2(14) = 75.94 p. < .0001 | χ2(7) = 39.23 p. < .0001 | χ2(4) = 7.96 p. = .093 | χ2(2) = 18.73 p. < .0001 |
| Rho (Se and false positive rate correlation) (95% CI) | .22 (−.22, .59) | .02 (−.50, .52) | .36 (−.46, .85) | .64 (−.56, .97) | NA |
| DOR (95% CI) | 13.61 (8.63, 21.45) | 14.31 (8.14, 25.16) | 11.00 (4.62, 26.19) | 15.23 (9.49, 24.43) | 11.01 (4.35, 27.87) |
| Cochran’s Q | Q(21) = 11.71 p. = .947 | Q(14) = 14.71 p. = .398 | Q(7) = 5.03 p. = .656 | Q(4) = 3.51 p. = .476 | Q(2) = 1.91 p. = .385 |
| Tau (95% CI) | .95 (0.00, 0.39) | .96 (0.00, 1.40) | 1.16 (0.00, 1.72) | 0.00 (0.00, 1.63) | .66 (0.00, 4.95) |
| Tau-squared (95% CI) | .91 (0.00, .15) | .92 (0.00, 1.95) | 1.34 (0.00, 2.95) | 0.00 (0.00, 2.64) | .44 (0.00, 24.48) |
| Meta-analysis | | | | | |
| Sensitivity (95% CI) | .75 (.69, .81) | .73 (.64, .81) | .74 (.56, .86) | .72 (.58, .82) | .69 (.33, .91) |
| Specificity (95% CI) | .81 (.77, .84) | .83 (.77, .88) | .79 (.70, .85) | .85 (.76, .91) | .82 (.64, .92) |

Alzheimer's Disease Versus Healthy Controls

We found a total of 84 studies comparing AD and HC based on our literature review and the PubMed search criteria described above. After more careful review, 37 studies were excluded per the exclusion criteria described in the Method, leaving 47 studies for AD versus HC, many of which provide the sensitivity and specificity for multiple measures. Of the 47 studies, four explicitly stated that the measure of interest (in combination with other measures and clinical information) was considered during diagnosis, and in nine additional studies this could not be determined from the Method sections. Eleven studies did not report the cut-off used or derived for the sensitivity and specificity values. Only four studies provided 2 × 2 data in the article (Cahn et al. 1995; O'Connell et al. 2004; Parra et al. 2010; Welsh et al. 1991). Almost all used the diagnostic criteria of the National Institute of Neurological and Communicative Disorders and Stroke and the Alzheimer’s Disease and Related Disorders Association (NINCDS-ADRDA). See Online Resources (Tables i and ii) for additional information about the diagnostic criteria applied by each study. Across the AD studies (those included in both the AD versus HC and AD versus Other comparisons; k = 54), over half (54%; k = 29) included “probable” AD diagnoses only (i.e., excluded “possible AD” participants), 23% (k = 12) included both probable and possible AD diagnoses, two (k = 2) included confirmatory autopsy data (Salmon et al. 2002; Storandt and Morris 2010), one used familial genetic sequencing (Parra et al. 2010), and 19% (k = 10) did not specify probable or possible AD diagnoses.
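Because most studies reported only sensitivity and specificity rather than full 2 × 2 tables, it may help to make the relationship between the four cells and these statistics explicit. The Python sketch below computes both from the cells and shows one common way of approximately back-calculating cell counts from reported Se, Sp, and group sizes. The class name `TwoByTwo`, the function `back_calculate`, the assumption that a score below the cut-off counts as "impaired," and all counts are hypothetical and illustrative; this is not presented as the procedure used by any included study or by this review.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwo:
    """Hypothetical 2 x 2 table: memory measure result vs. reference diagnosis.

    Assumption: a score below the cut-off is treated as "impaired" (test positive).
    """
    tp: int  # AD participants scoring below the cut-off (true positives)
    fp: int  # healthy controls scoring below the cut-off (false positives)
    fn: int  # AD participants scoring at or above the cut-off (false negatives)
    tn: int  # healthy controls scoring at or above the cut-off (true negatives)

    @property
    def sensitivity(self) -> float:
        # Proportion of AD participants correctly flagged as impaired.
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        # Proportion of healthy controls correctly classified as unimpaired.
        return self.tn / (self.tn + self.fp)

    @property
    def false_positive_rate(self) -> float:
        # FPR is the complement of specificity.
        return 1.0 - self.specificity


def back_calculate(se: float, sp: float, n_cases: int, n_controls: int) -> TwoByTwo:
    """Approximate cell counts from reported Se, Sp, and group sizes (rounded)."""
    tp = round(se * n_cases)
    tn = round(sp * n_controls)
    return TwoByTwo(tp=tp, fp=n_controls - tn, fn=n_cases - tp, tn=tn)


# Hypothetical study: 50 AD participants and 60 healthy controls.
table = TwoByTwo(tp=44, fp=6, fn=6, tn=54)
print(f"Se = {table.sensitivity:.2f}, Sp = {table.specificity:.2f}")  # Se = 0.88, Sp = 0.90
```

Back-calculation is only approximate: rounding can shift a cell by one participant, so reconstructed tables may not reproduce the published percentages exactly.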

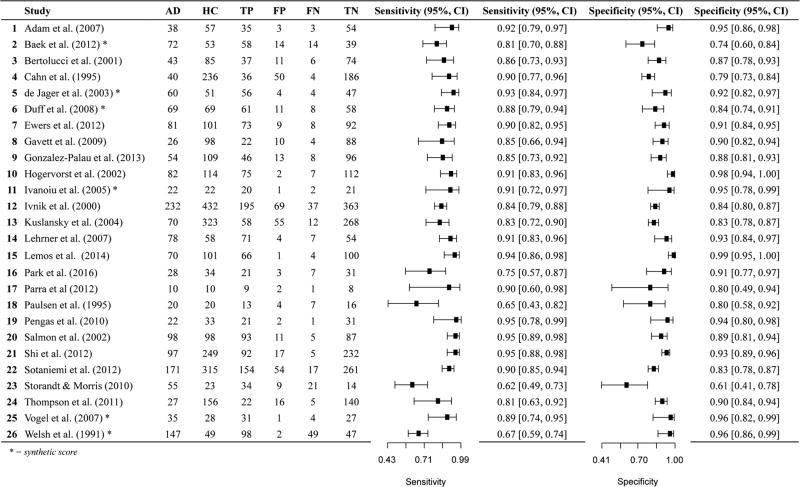

Immediate Memory

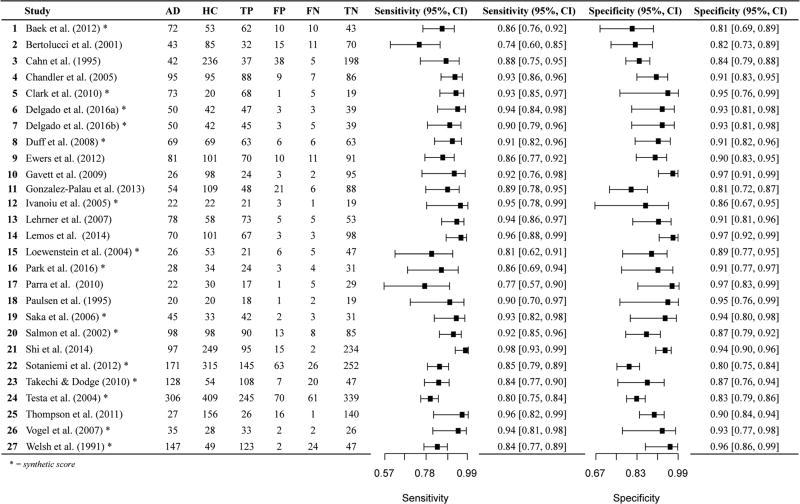

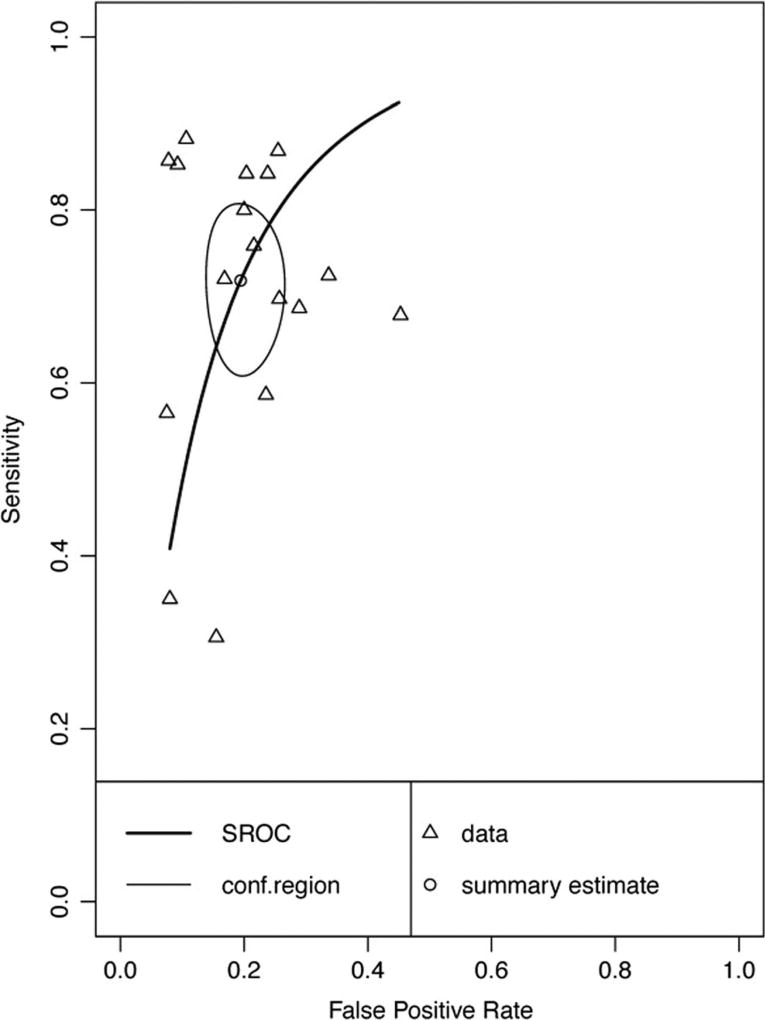

Twenty-six data points contributed to the meta-analysis of immediate recall measures in differentiating AD from HC (Table 1 and Fig. 2). Overall, these measures demonstrated excellent diagnostic accuracy, with values well exceeding the suggested minimum cut-off for sensitivity (Se = .87, 95% confidence interval [CI] [.83, .90]) and specificity (Sp = .88, 95% CI [.85, .90]). Visual inspection of the forest plots (Fig. 2) and SROC curves (Fig. 3) supports this conclusion.

Fig. 2.

Paired forest plot AD vs HC Immediate Recall measures. AD: Alzheimer’s disease, HC: healthy controls, TP: true positive, FP: false positive, FN: false negative, TN: true negative

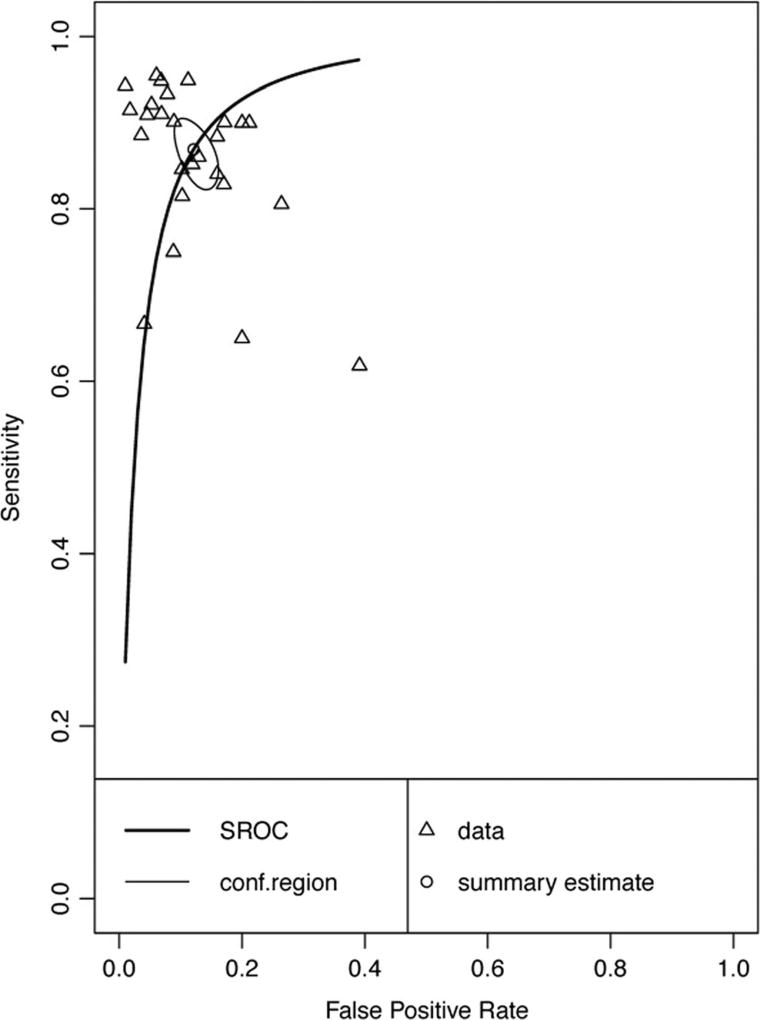

Fig. 3.

Hierarchical summary receiver operating characteristic (SROC) curve for AD vs HC Immediate Recall measures. Conf.region = 95% confidence region

Because of potential bias in studies where the measures examined were also used to diagnose participants with AD, we classified all 26 data points according to whether or not the measure was used to diagnose participants. Eighteen studies did not use the measure under examination for participant diagnosis, three used the measure for diagnosis, and in the remaining five it was unclear how the measure was used in participant diagnosis. A meta-analysis of the 18 studies not using the measure for diagnosis (Table 2) indicated that immediate memory recall measures continued to display excellent diagnostic accuracy for differentiating AD from HC, with values well exceeding the suggested minimum cut-off for sensitivity (Se = .86, 95% CI [.82, .90]) and specificity (Sp = .88, 95% CI [.84, .92]). Visual inspection of the forest plots and SROC curves (Supplemental Figures xvii and xviii) supports this conclusion. However, the correlation between sensitivity and false positive rate (rho) exceeded the cutoff for potential threshold effects.

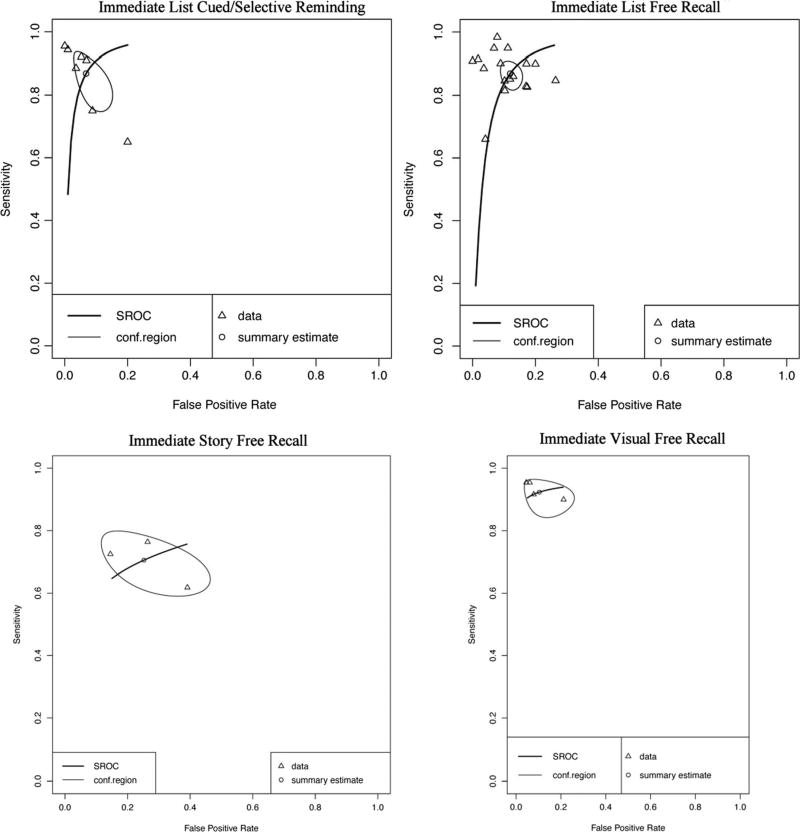

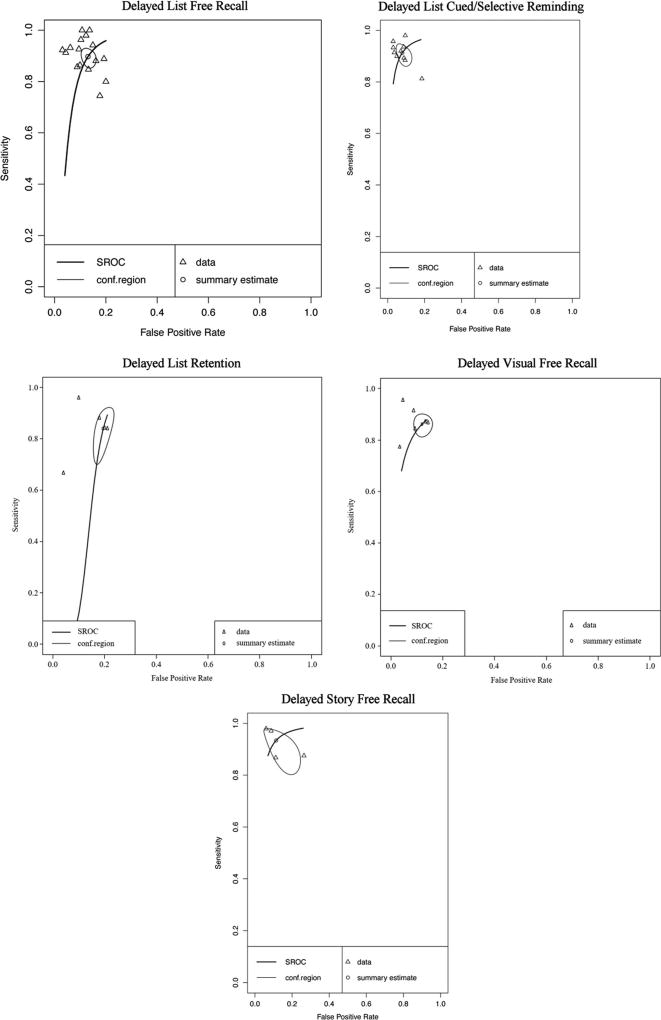

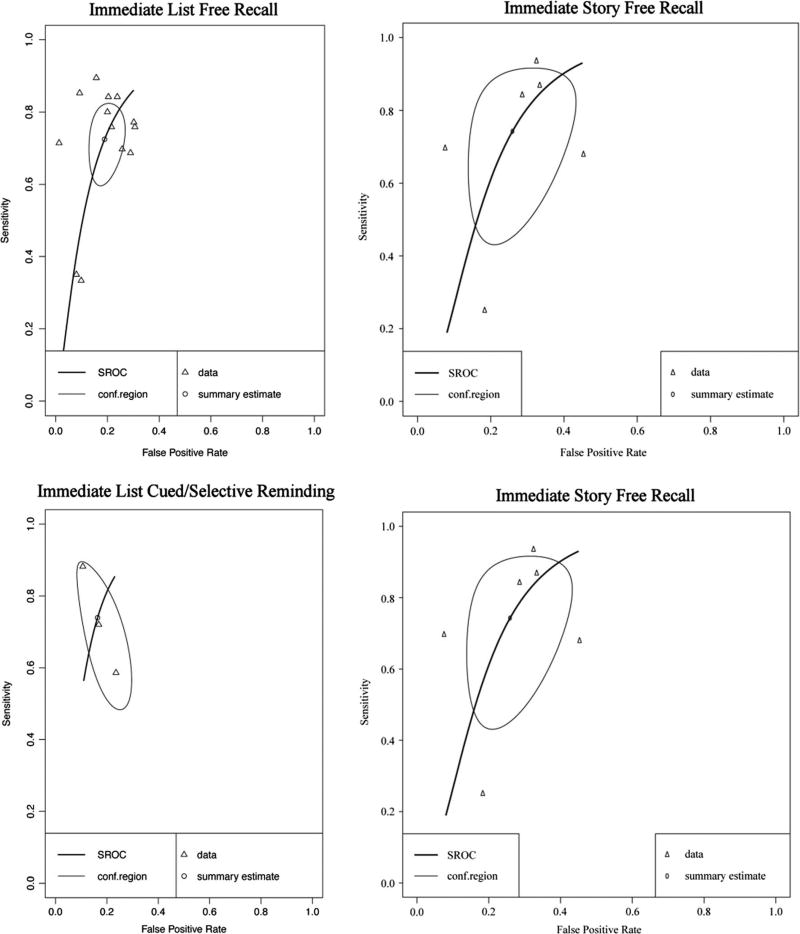

Forest plots for subclasses of immediate recall measures are presented in Online Resources (Figures i, ii, iii, and iv). Immediate memory recall subclasses (Table 1) generally displayed good to excellent sensitivity and specificity: List Free Recall Se = .87, 95% CI [.83, .90], Sp = .88, 95% CI [.85, .91]; List Cued Selective Reminding Se = .87, 95% CI [.78, .93], Sp = .93, 95% CI [.87, .97]; and Visual Free Recall Se = .92, 95% CI [.86, .96], Sp = .90, 95% CI [.78, .95]. The exception was Immediate Story Recall, which showed adequate sensitivity and specificity (Story Free Recall Se = .71, 95% CI [.61, .78], Sp = .75, 95% CI [.58, .86]). Visual inspection of the SROC curves for Immediate List Free Recall, Immediate List Cued Selective Reminding, and Visual Free Recall tests (Fig. 4) confirms that these measures display good diagnostic accuracy for differentiating AD from healthy controls. Story Free Recall displays lower diagnostic accuracy; however, because few studies were available, caution must be exercised in drawing firm conclusions regarding the diagnostic accuracy of Story Free Recall (k = 3) and Visual Free Recall measures (k = 4). Although no concerns were raised in the inspection of forest plots, correlations between sensitivity and false positive rate (FPR) for AD Immediate List Cued or Selective Reminding and AD Immediate Visual Free Recall exceeded the cut-off for potential threshold effects (≥ .60); this correlation could not be calculated for Story Free Recall because k = 3.
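The threshold-effect check referred to above can be illustrated with a simple descriptive version. In the tables, rho is the correlation between sensitivity and false positive rate estimated within the bivariate model; the Python sketch below instead computes a Spearman rank correlation between study-level sensitivity and FPR, a simpler screening check often used for the same purpose (a logit transform of either quantity would not change the ranks). Only the ≥ .60 flag comes from the text; the function names, the minimum of four studies, and the example values are hypothetical.

```python
from typing import List, Sequence, Tuple

THRESHOLD_EFFECT_CUTOFF = 0.60  # cut-off for potential threshold effects used in this review
MIN_STUDIES = 4  # assumption: mirrors the review reporting this correlation as NA when k = 3


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman correlation computed as the Pearson correlation of the ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    sd_x = sum((a - mean_x) ** 2 for a in rx) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in ry) ** 0.5
    if sd_x == 0 or sd_y == 0:
        raise ValueError("correlation is undefined when all values are identical")
    return cov / (sd_x * sd_y)


def threshold_effect_check(sensitivities: Sequence[float],
                           specificities: Sequence[float]) -> Tuple[float, bool]:
    """Return (rho between Se and FPR, whether rho reaches the cut-off)."""
    if len(sensitivities) < MIN_STUDIES:
        raise ValueError("too few studies to estimate the correlation")
    fpr = [1.0 - sp for sp in specificities]
    rho = spearman_rho(sensitivities, fpr)
    return rho, rho >= THRESHOLD_EFFECT_CUTOFF


# Hypothetical studies in which a more lenient cut-off raises both Se and FPR.
rho, flagged = threshold_effect_check([0.70, 0.80, 0.90, 0.95], [0.95, 0.90, 0.85, 0.80])
print(round(rho, 2), flagged)
```

A strong positive correlation suggests that studies differ mainly in where they placed the cut-off rather than in how well the measure discriminates, which is why pooled Se and Sp should then be read alongside the SROC curve.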

Fig. 4.

Hierarchical summary receiver operating characteristic (SROC) curve for AD versus HC for subclasses of Immediate Recall measures. Conf.region = 95% confidence region

Immediate memory indices or factor scores were reported in two studies but were not included in the meta-analysis due to insufficient data (< 3 studies; see Online Resources, Table i). Both the Repeatable Battery for the Assessment of Neuropsychological Status (RBANS) Immediate Memory Index and the Mayo Cognitive Factor Scales (MCFS) Learning Recall Factor Score exceeded the minimum cutoff for both sensitivity and specificity. However, in both of these studies (Duff et al. 2008; Ivnik et al. 2000), the test of interest was available, in addition to multiple other measures, when determining the diagnostic status of study participants, which could inflate the diagnostic accuracy statistics.

In summary, immediate memory measures, including immediate list free recall, immediate list cued or selective reminding, and immediate visual free recall, demonstrated high diagnostic accuracy for differentiating AD from HC, with values well exceeding the suggested minimum cut-off for sensitivity and specificity (≥ .80). Immediate story recall measures displayed lower diagnostic accuracy; however, very few studies using story recall measures could be incorporated into the meta-analysis.

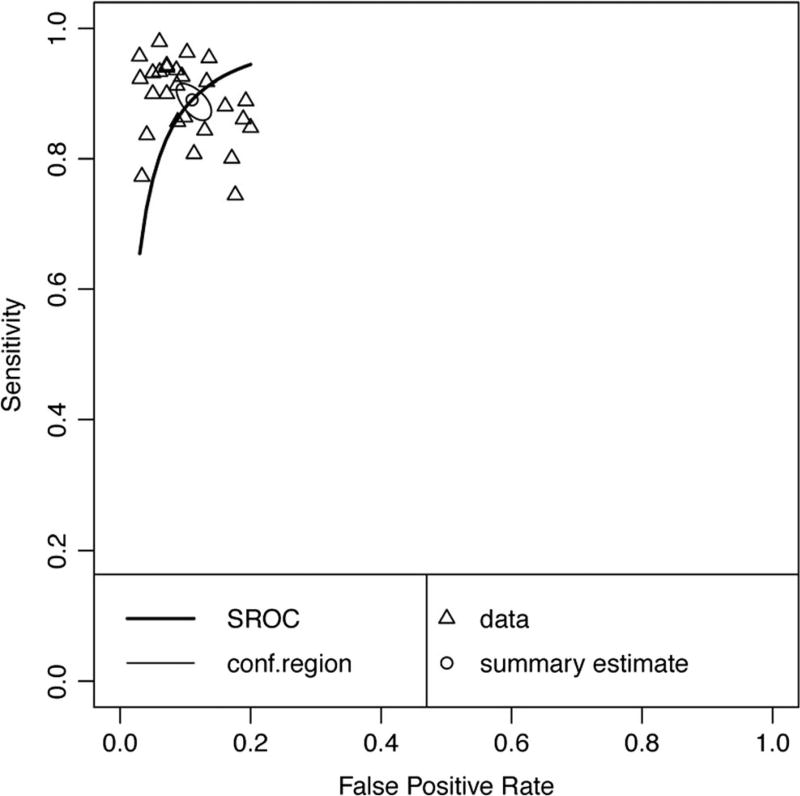

Delayed Memory

Twenty-seven data points contributed to the meta-analytic evaluation of delayed recall measures in differentiating AD from HC (Table 3 and Fig. 5). Overall, these measures demonstrated excellent diagnostic accuracy with values well exceeding the suggested minimum cut-off for sensitivity (Se = .89, 95% CI [.87, .91]) and specificity (Sp = .89, 95% CI [.87, .91]). Visual inspection of the forest plots (Fig. 5) and SROC curves (Fig. 6) supports this conclusion.

Fig. 5.

Paired forest plot AD vs HC Delayed Recall measures. AD: Alzheimer’s disease, HC: healthy controls, TP: true positive, FP: false positive, FN: false negative, TN: true negative

Fig. 6.

Hierarchical summary receiver operating characteristic (SROC) curve for AD vs HC Delayed Recall measures. Conf.region = 95% confidence region

To assess potential bias in studies where the measures examined were also used to diagnose participants with AD, we classified studies according to whether or not the measure was used to diagnose participants. Eighteen studies, contributing 19 data points (Delgado et al. 2016 contributed two: Delgado 2016a, b), did not use the measure under examination for participant diagnosis; two studies used the measure for diagnosis, and in the remaining six it was unclear how the measure was used in participant diagnosis. A meta-analysis of the 19 data points not using the measure for diagnosis (Table 2) indicated that delayed memory recall measures continued to display excellent diagnostic accuracy for differentiating AD from HC, with values well exceeding the suggested minimum cut-off for sensitivity (Se = .89, 95% CI [.85, .91]) and specificity (Sp = .89, 95% CI [.86, .91]). Visual inspection of the forest plots and SROC curves (Supplemental Figures xix and xx) supports this conclusion.

Forest plots for subclass analyses are presented in Online Resources (Figures v, vi, vii, viii, and ix). Delayed memory subclasses displayed good to excellent sensitivity and specificity: List Free Recall Se = .90, 95% CI [.86, .92], Sp = .87, 95% CI [.84, .89]; List Cued Selective Reminding Se = .91, 95% CI [.87, .94], Sp = .92, 95% CI [.88, .95]; List Retention Se = .84, 95% CI [.73, .91], Sp = .81, 95% CI [.77, .84]; Visual Free Recall Se = .86, 95% CI [.82, .89], Sp = .88, 95% CI [.85, .91]; and Story Free Recall Se = .93, 95% CI [.84, .98], Sp = .89, 95% CI [.79, .94]. Visual inspection of the SROC curves for the five subclasses of delayed memory recall (Fig. 7) confirms that all measures display good diagnostic accuracy for differentiating AD and HC. However, caution is warranted in interpreting data for List Cued Selective Reminding and Story Free Recall, as their correlations between sensitivity and false positive rate exceeded the cut-off for potential threshold effects.

Fig. 7.

Hierarchical summary receiver operating characteristic (SROC) curve for AD vs HC for subclasses of Delayed Recall measures. Conf.region = 95% confidence region

A few data points could not be included in the meta-analysis due to insufficient data (< 3 studies), including story retention, visual retention, and other delayed scores that combined multiple indices. On qualitative review, values for story and visual retention were variable. One study of story percent retention (WMS-R [1987] Logical Memory percent retention; Testa et al. 2004) reported sensitivity below the suggested minimum cutoff (Consensus Workgroup 1998), whereas another study (Clark et al. 2010) reported sensitivity and specificity in the 90% range for RBANS Story Retention. Two studies reported values for visual retention, and only one value, the specificity of WMS-R Visual Reproduction Savings (Cahn et al. 1995), was above the minimum suggested cutoff (Consensus Workgroup 1998). The Mayo Cognitive Factor Scales (MCFS) Retention score (Ivnik et al. 2000), derived from the Wechsler Adult Intelligence Scale–Revised (WAIS-R), WMS-R, and Rey Auditory Verbal Learning Test (RAVLT), performed better than any individual retention index. Duff et al. (2008) reported sensitivity and specificity values for the RBANS Delayed Memory Index, an index incorporating delayed word list recall, story recall, recognition, and delayed visual recall. The index had high sensitivity and specificity (92%) at a conventional −1.5 SD cutoff.

Few studies provided data allowing for qualitative comparison of diagnostic accuracy across different memory measure types within the same study. Baek et al. (2012) reported values for immediate, delayed, and recognition memory on list learning (Korean Hopkins Verbal Learning Test; K-HVLT) and story learning (Korean Story Recall Test), with results suggesting that list learning confers higher diagnostic accuracy than story recall, particularly for immediate recall trials. The diagnostic accuracy of recognition was poorer than that of recall for both stories and the word list. Duff et al. (2008) compared subtests of the RBANS in differentiating between AD and HCs. RBANS List Learning demonstrated good sensitivity and specificity using a conventional −1 SD cutoff and showed better balance across sensitivity and specificity than RBANS Story Memory (immediate) in this study, although both measure types had excellent sensitivity and specificity after a delay. Salmon et al. (2002) reported higher sensitivity for delayed list recall (CVLT, 98%) than for delayed story recall (WMS Logical Memory, 87%), although specificity values were similar. Parra et al. (2012) reported immediate and delayed recall for a word list task, with values favoring immediate recall (sensitivity = 90%, specificity = 80%) over delayed recall (sensitivity and specificity = 80%). Park et al. (2016) reported immediate and delayed cued recall on the RI-24 task; the delayed task showed higher sensitivity than the immediate task (89% vs. 75%), with equivalent specificity (91%). Fourteen studies presented data for both immediate and delayed verbal memory tasks (including both list and story). When compared directly within the same sample, most studies did not show large differences between immediate and delayed memory tasks for story or list learning.
Five studies showed a small improvement for either sensitivity or specificity on the delayed memory tasks, suggesting a possible advantage in diagnostic accuracy for the delayed task (e.g., Gavett et al. 2009). However, the remaining nine studies showed no such difference and some even demonstrated the opposite pattern – stronger diagnostic accuracy values on immediate memory, compared to the delayed memory (e.g., Bertolucci et al. 2001).

In summary, delayed verbal memory tests of free and cued list and story memory, as well as nonverbal or visual tasks, demonstrated good to excellent sensitivity and specificity for differentiating AD patients from HC participants. Delayed free recall of word lists and delayed free recall of stories both consistently demonstrated sensitivity and specificity above the minimum suggested cutoff (Consensus Workgroup 1998). Percent retention tended to have lower sensitivity and specificity. Savings or retention scores depend on initial encoding, so their sensitivity and specificity may be artificially lowered: for example, a patient who learns only one item and recalls that one item after the delay obtains a score of 100% retention. The specificity of percent retention may also be important when comparing AD with other disorders (e.g., vascular dementia, Huntington’s disease, or Parkinson’s disease; Lundervold et al. 1994). Results suggest that immediate and delayed memory tasks may be similar in their diagnostic accuracy for differentiating AD patients from HC participants (pooled sensitivity = .87 and .89, specificity = .88 and .89, for immediate and delayed recall, respectively). In a clinical context, immediate memory tasks require much less time than delayed tasks for both patients and examiners, so it is important to determine whether delayed measures offer superior diagnostic accuracy relative to immediate memory.
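The encoding dependence of retention scores can be made concrete with a small calculation. This Python sketch assumes percent retention is defined as delayed recall divided by immediate recall, times 100; individual tests define the denominator differently (for example, using the last learning trial), so the function name `percent_retention` and its definition are illustrative assumptions, and the two patients are hypothetical.

```python
def percent_retention(immediate_recall: int, delayed_recall: int) -> float:
    """Delayed recall as a percentage of immediate recall (assumed definition)."""
    if immediate_recall <= 0:
        # Nothing was encoded, so retention is undefined rather than 0% or 100%.
        raise ValueError("percent retention is undefined when immediate recall is 0")
    return 100.0 * delayed_recall / immediate_recall


# Hypothetical patients on a 10-item word list.
poor_encoder = percent_retention(immediate_recall=1, delayed_recall=1)
good_encoder = percent_retention(immediate_recall=10, delayed_recall=8)
print(poor_encoder, good_encoder)  # 100.0 80.0
```

Although the first patient recalls only one item after the delay, the ratio rates their retention above that of the second patient, which is how a retention score can mask an encoding deficit and lower sensitivity.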

Associative Learning

Seven studies, two of which used the same sample (Parra et al. 2010, 2011), were included, reporting data for ten tasks. The Visual Association Test (VAT)2 had sensitivity (83%) and specificity (91%) values above the suggested cutoff (Lindeboom et al. 2002). A second paradigm3 used by Lowndes et al. (2008) measured performance on Verbal Paired Associate-Recognition and Verbal Paired Cued-Recall tasks. Both tasks demonstrated strong specificity (100% and 96%, respectively) and sensitivity (86%), meeting the recommended cutoff of 80% (Consensus Workgroup 1998). Storandt and Morris (2010) reported lower than expected values for both sensitivity (62%) and specificity (70%) on the WMS Associate Learning Immediate Recall task; although they reported multiple cutoff values, a cutoff of −0.5 SD provided the best balance between sensitivity and specificity. O’Connell and colleagues (O'Connell et al. 2004) reported 100% specificity but only 68% sensitivity on the Cambridge Neuropsychological Test Automated Battery (CANTAB) Paired Associates Learning (PAL) test,4 suggesting that the test may not meet the minimum criteria for detecting AD. In a comparison of the paper-and-pencil and computerized versions of The Placing Test,5 diagnostic accuracy was equivalent (Vacante et al. 2013). Specificity for both was reportedly 79%, just below the suggested minimum cutoff. The total score of the computerized test, which included faces, objects, and an additional 10 items, was reported to have the same sensitivity as the other versions (89%) and improved specificity (93%).
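Storandt and Morris (2010) describe −0.5 SD as the best balance between sensitivity and specificity, but the text does not state which criterion defines "balance." Two common criteria are maximizing Youden's J (Se + Sp − 1) and minimizing the gap |Se − Sp|. The Python sketch below applies both to a hypothetical set of cutoffs; the values and function names are invented for illustration and are not that study's data or method.

```python
from typing import List, Tuple

# Hypothetical (cutoff in SD units, sensitivity, specificity) triples.
CUTOFFS: List[Tuple[float, float, float]] = [
    (-1.5, 0.35, 0.92),
    (-1.0, 0.48, 0.84),
    (-0.5, 0.65, 0.72),
    (0.0, 0.78, 0.52),
]


def youden_j(se: float, sp: float) -> float:
    """Youden's J statistic: how far the cutoff sits above the chance diagonal."""
    return se + sp - 1.0


def best_by_youden(rows: List[Tuple[float, float, float]]) -> float:
    """Cutoff that maximizes Youden's J."""
    return max(rows, key=lambda r: youden_j(r[1], r[2]))[0]


def best_by_balance(rows: List[Tuple[float, float, float]]) -> float:
    """Cutoff that minimizes the absolute difference between Se and Sp."""
    return min(rows, key=lambda r: abs(r[1] - r[2]))[0]


print(best_by_youden(CUTOFFS), best_by_balance(CUTOFFS))  # -0.5 -0.5
```

The two criteria agree in this example but can select different cutoffs in other data, which is one reason reporting the criterion alongside the chosen cutoff aids comparison across studies.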

Parra et al. (2010) reported adequate sensitivity (82%) and specificity (77%) on a traditional associative learning task6 in individuals with early-onset familial AD. Parra and colleagues also created a novel “visual short-term memory binding” task7 based on a change detection paradigm. The sensitivity (77%) and specificity (83%) for this test were also adequate. Of note, their study also included asymptomatic carriers of the E280A mutation who did not meet criteria for AD or MCI. Sensitivity of the binding condition in these individuals was 73%, a promising value given that they are in the preclinical phase of AD. For comparison, sensitivity of the WMS Verbal Paired Associates (VPA) was 40% for asymptomatic carriers. Another study by Parra et al. (2011) found specific deficits in color-color short-term memory binding in both sporadic and familial AD. For the bound colors condition, sporadic AD cases showed 79% sensitivity and specificity, whereas familial AD cases showed 77% sensitivity and 100% specificity.

Overall, the associative learning tasks varied in sensitivity and specificity. Of the ten measures reported, about two-thirds demonstrated specificity values above the minimum cutoff, and seven of the ten demonstrated sensitivity values above the minimum cutoff. However, all but one study (Lindeboom et al. 2002) had small sample sizes (18 to 55), resulting in wide confidence intervals.

Other Memory Measures

Ten studies reported data on fourteen recognition memory tasks (yes-no recognition and forced-choice paradigms). All but one (the Finnish version of the Consortium to Establish a Registry for Alzheimer’s Disease [CERAD] Word List Recognition; Sotaniemi et al. 2012) reported specificity values above the minimum suggested cutoff of 80% (Consensus Workgroup 1998). In contrast, only 5 of the 14 sensitivity values met the minimum suggested cutoff. This pattern suggests that impaired recognition memory is likely to indicate AD (high specificity), but unimpaired recognition memory does not allow AD to be ruled out with confidence (low sensitivity). Future research should examine other recognition memory tasks that may have better overall diagnostic accuracy (e.g., California Verbal Learning Test-II Total Recognition Discriminability).
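The rule-in/rule-out reasoning above can be expressed with likelihood ratios, LR+ = Se / (1 − Sp) and LR− = (1 − Se) / Sp, combined with a pre-test probability via the odds form of Bayes' theorem. The Python sketch below uses a hypothetical recognition measure (Se = .60, Sp = .90) and an assumed pre-test probability of .50; neither value comes from the reviewed studies, and the function names are illustrative.

```python
from typing import Tuple


def likelihood_ratios(se: float, sp: float) -> Tuple[float, float]:
    """Return (LR+, LR-) for a test with the given sensitivity and specificity."""
    return se / (1.0 - sp), (1.0 - se) / sp


def post_test_probability(pre_test: float, lr: float) -> float:
    """Update a probability with a likelihood ratio using the odds form of Bayes' theorem."""
    pre_odds = pre_test / (1.0 - pre_test)
    post_odds = pre_odds * lr
    return post_odds / (1.0 + post_odds)


# Hypothetical recognition measure: high specificity, low sensitivity.
lr_pos, lr_neg = likelihood_ratios(se=0.60, sp=0.90)
after_impaired = post_test_probability(0.50, lr_pos)  # about .86
after_intact = post_test_probability(0.50, lr_neg)    # about .31
print(f"LR+ = {lr_pos:.1f}, LR- = {lr_neg:.2f}")
print(f"P(AD | impaired) = {after_impaired:.2f}, P(AD | intact) = {after_intact:.2f}")
```

Under these assumptions an impaired score raises the probability of AD from .50 to about .86, whereas an intact score lowers it only to about .31, so a normal recognition score leaves AD quite plausible.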

All of the studies in the Combined or Interference category (k = 8) reported sensitivity values of 85% or higher, well above the minimum recommended cutoff (Consensus Workgroup 1998). In addition, all of the specificity values were excellent, with all but one above 90%. Because the tests within this category vary widely, it is difficult to draw conclusions about the category as a whole; however, tests in this category were quite successful at identifying AD. Two of the combined scores (recall plus recognition; HVLT: Shi et al. 2012; CERAD: Sotaniemi et al. 2012) demonstrated sensitivity and specificity in the 90% range. Another study in this category reported a recognition span total (verbal, visual, facial), with excellent sensitivity and specificity values of 95% and 96%, respectively (Salmon et al. 1989); unfortunately, this study did not report cutoff values. An interference score for the Fuld Object Memory Evaluation (Loewenstein et al. 2004) had a specificity of 85% and sensitivity of 96%, again without a reported cutoff. As mentioned above, a factor score combining retention performance across multiple measures performed well, with 85% sensitivity and 92% specificity (Ivnik et al. 2000). Both prospective and retrospective components of a prospective memory test (Marcone et al. 2017) had high sensitivity (93% and 85%, respectively) and specificity (86% and 98%, respectively). Troster et al. (1993) reported sensitivity (88%) and specificity (99%) for the combined WMS-R Logical Memory and Visual Reproduction percent savings scores, both well above the recommended 80% (Consensus Workgroup 1998). Finally, total recall on the Buschke Selective Reminding Test (combining recall from short-term and long-term memory) yielded a sensitivity of 95% and specificity of 100%, both well above the minimum suggested cutoff; unfortunately, no cutoff values were reported (Paulsen et al. 1995).
The results overall from this section suggest that using a combination of scores, particularly recall and recognition scores added together, could be beneficial in diagnosing AD. Future research should continue to examine combination scores with more uniformity. Normative data for such combined measures are needed.

AD Versus Other Dementias/Disorders

Another important area of research focuses on the ability of neuropsychological measures to differentiate AD from other neurological syndromes, including other dementias, neurological conditions, and psychiatric disorders affecting neuropsychological functioning. The “other” category for this review was heterogeneous and therefore could not be included in the meta-analysis. A total of 24 studies were initially identified; 14 were excluded according to the exclusion criteria described in the Method. Online Resources (Table ii) presents the remaining 10 studies. These studies included comparisons between AD and semantic dementia, dementia due to Huntington’s disease (HD), Parkinson’s disease (PD), psychiatric populations, subcortical vascular dementia or small vessel disease (VaD), a “non-AD” sample (described below), and dementia with Lewy bodies (DLB). In four of the ten studies, it was unclear whether the test of interest was used to diagnose the disorder. Additionally, no studies in this section made 2 × 2 data available.

Four studies included measures of immediate memory and compared AD with HD, VaD, semantic dementia, and “non-AD.” Three of these studies reported acceptable sensitivity: the RBANS Story Memory for AD versus VaD (McDermott and DeFilippis 2010), the Visual Route Learning Test for AD versus semantic dementia (Pengas et al. 2010), and the Neuropsychological Assessment Battery (NAB) Daily Living Memory – Immediate Recall for AD versus “non-AD” (Gavett et al. 2012). Values for specificity were lower and more variable, but two measures had both sensitivity and specificity above the recommended cutoff of 80% (Consensus Workgroup 1998): NAB Daily Living Memory – Immediate Recall (Gavett et al. 2012) and the Visual Route Learning Test (Pengas et al. 2010). The Buschke Selective Reminding Test – Short-Term Memory score (Paulsen et al. 1995) did not reach the recommended cutoff for either sensitivity or specificity in differentiating AD and HD.

Of nine delayed memory measures in six studies, five met the recommended cutoff for sensitivity: Delayed Word Recall (O'Carroll et al. 1997) for AD versus depression; NAB Daily Living Memory – Delayed Recall (Gavett et al. 2012) for AD versus “non-AD”; and RBANS List Recall, the RBANS Delayed Memory Index (both McDermott and DeFilippis 2010), and delayed figure recall (Matioli and Caramelli 2010) for AD versus VaD. Only two of the nine tasks met the recommended cutoff for specificity: an Enhanced Cued Recall task (Esen Saka and Elibol 2009) for AD versus PD, and NAB Daily Living Memory – Delayed Recall (Gavett et al. 2012) for AD versus “non-AD.”

Three measures of recognition were included, and all met the recommended cutoff of 80% (Consensus Workgroup 1998) for sensitivity: Delayed Word Recognition (O'Carroll et al. 1997) for AD versus depression, RBANS List Recognition (McDermott and DeFilippis 2010) for AD versus VaD, and CERAD or Auditory Verbal Learning Test (AVLT) List Recognition (Schmidtke and Hüll 2002) for AD versus small vessel disease. Importantly, however, the specificity of these recognition measures was low (47% to 66%) for AD versus depression and VaD. A combined score of WMS-R Logical Memory II and Object Assembly (Oda et al. 2009) for AD versus DLB had 81% sensitivity, with specificity just below the recommended cutoff at 76%. A combined measure reflecting recall from both short- and long-term memory on a selective reminding paradigm fell below suggested cutoffs for differentiating dementia due to AD and HD (Paulsen et al. 1995). A second study (Troster et al. 1993) examined accuracy for differentiating mild AD from mild HD and moderate AD from moderate HD using WMS-R Logical Memory plus Visual Reproduction percent savings. At the moderate stage, the measure demonstrated good sensitivity (80%) and specificity (88%), whereas at the mild stage it had good sensitivity (86%) but poor specificity (36%).

In general, studies comparing AD with other conditions found acceptable sensitivity but low and variable specificity. Of note, the study with the highest reported specificity (Gavett et al. 2012) used a combined “non-AD” group that included healthy controls, MCI, non-AD dementia, and ambiguous non-MCI. This enabled Gavett and colleagues to obtain a much larger sample than other studies and to evaluate specificity against a broader neurological sample. Their findings suggest that although specificity for Alzheimer’s dementia may appear low when compared directly with other dementing conditions, differentiation based on objective memory scores fares well relative to a broader neurological sample. Additionally, sensitivity or specificity values for individual measures may appear low, yet differential diagnosis in the clinical setting considers multiple measures and numerous factors in addition to neuropsychological test scores, likely resulting in better specificity than values based on a single memory score imply (see Fields et al. 2011 for discussion).
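The idea that integrating several measures can raise specificity can be sketched with two textbook combination rules, under the strong and usually unrealistic assumption that the tests are conditionally independent given diagnosis (correlated memory measures will show smaller gains). Requiring both tests to be abnormal (an "AND" rule) multiplies sensitivities and raises specificity; calling the result positive if either test is abnormal (an "OR" rule) does the reverse. The Python sketch below uses hypothetical values and function names; it is not a model of how clinicians actually weigh evidence.

```python
from typing import Tuple


def combine_and(se1: float, sp1: float, se2: float, sp2: float) -> Tuple[float, float]:
    """Positive only if both tests are abnormal (assumes conditional independence)."""
    se = se1 * se2
    sp = 1.0 - (1.0 - sp1) * (1.0 - sp2)
    return se, sp


def combine_or(se1: float, sp1: float, se2: float, sp2: float) -> Tuple[float, float]:
    """Positive if either test is abnormal (assumes conditional independence)."""
    se = 1.0 - (1.0 - se1) * (1.0 - se2)
    sp = sp1 * sp2
    return se, sp


# Two hypothetical measures, each with modest specificity against another dementia.
and_se, and_sp = combine_and(0.85, 0.70, 0.80, 0.75)
or_se, or_sp = combine_or(0.85, 0.70, 0.80, 0.75)
print(f"AND: Se = {and_se:.3f}, Sp = {and_sp:.3f}")  # AND: Se = 0.680, Sp = 0.925
print(f"OR:  Se = {or_se:.3f}, Sp = {or_sp:.3f}")    # OR:  Se = 0.970, Sp = 0.525
```

Under these assumptions, the AND rule lifts specificity from .70 and .75 to about .93 at the cost of sensitivity (.68), illustrating the trade-off rather than quantifying it for any real pair of tests.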

Importantly, clinicians often weight recognition scores heavily when differentiating AD from other dementias. The studies reviewed here suggest that the assumption that non-AD populations have better recognition than AD patients may be erroneous. In addition to further exploring the specificity of recognition or cued-recall paradigms, future research on differentiating AD from other syndromes should examine immediate free recall of word lists, as no studies were found that included data for this type of measure.

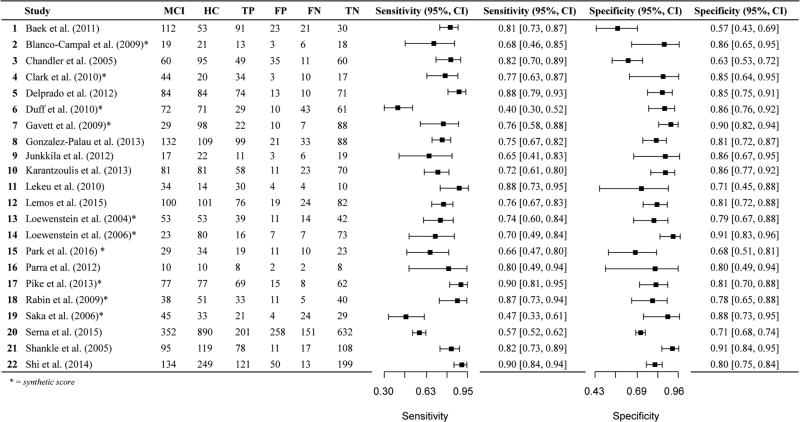

MCI Versus HC

The literature review and PubMed search yielded 60 studies that were deemed relevant based on our initial search criteria. After a more careful review of each study, 22 were excluded according to the exclusion criteria described in the Method. Online Resources (Table iii) presents the remaining 38 studies. Of the 38 studies, one explicitly stated using the measure of interest in combination with other measures and diagnostic methods to diagnose MCI (Yassuda et al. 2010), and one is presumed to have used the measure of interest to diagnose MCI (Karantzoulis et al. 2013). Additional studies had unclear diagnostic methods and did not explicitly state whether the measures of interest were used to diagnose individuals with MCI (Baek et al. 2012, 2011; Clark et al. 2010; Gavett et al. 2012; Karrasch et al. 2005; Lekeu et al. 2010; Lemos et al. 2014; Saka et al. 2006). Two studies by the same group of authors used separate samples of MCI participants (they report non-overlapping recruitment dates) but the same HC participants (Baek et al. 2012, 2011). Although we report on these studies separately, the shared HC group may increase the similarity of diagnostic accuracy values between the studies. Most studies reported the cutoffs used to determine sensitivity and specificity values; however, seven studies did not (Lekeu et al. 2010; Loewenstein et al. 2004; Rabin et al. 2009; Serna et al. 2015; Shankle et al. 2005). Only two studies reported 2 × 2 data in addition to sensitivity and specificity values (Junkkila et al. 2012; Troyer et al. 2008). Almost all of the 38 studies (k = 36) used well-established MCI diagnostic criteria (Albert et al. 2011; Petersen et al. 1999; Petersen et al. 2001; Petersen 2004, 2007; Portet et al. 2006; Winblad et al. 2004). One study classified individuals with MCI based on NINCDS-ADRDA criteria for diagnosis of Alzheimer’s disease, but without functional impairment (Loewenstein et al. 2006), and a second used the Clinical Dementia Rating (CDR) scale to diagnose MCI (Shankle et al. 2005).

Overall, whether the data are examined qualitatively or quantitatively (for the subset of measures included in the meta-analysis), sensitivity and specificity values for differentiating individuals with MCI from healthy elderly controls are lower than the minimum cutoffs of 80% sensitivity and specificity recommended for differentiating AD patients from HC participants and other dementias (Consensus Workgroup 1998), and generally lower than the AD versus HC values reported in the present review and meta-analyses. We use the qualifier “adequate” to refer to sensitivity and specificity values ≥ 70%.

Immediate Memory

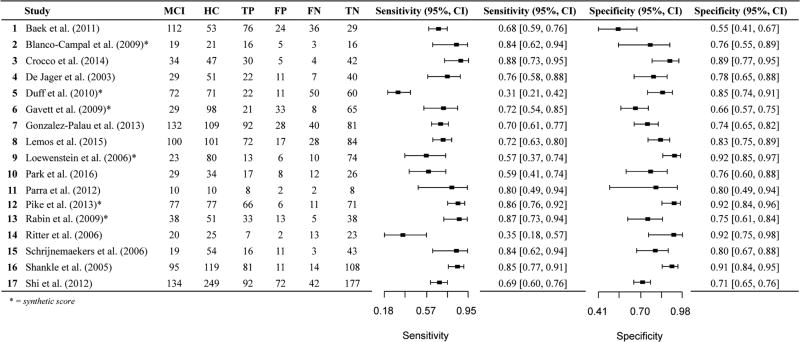

Seventeen data points contributed to the evaluation of immediate recall measures in differentiating MCI from HC (Table 4, Fig. 8). Overall, these measures demonstrated adequate diagnostic accuracy: sensitivity was below the recommended minimum cutoff but just above 70% (Se = .72, 95% CI [.63, .79]), and specificity was just at the cutoff recommended by the Consensus Workgroup (1998) for detecting AD (Sp = .81, 95% CI [.75, .85]). Visual inspection of the forest plots (Fig. 8) and SROC curves (Fig. 9) further suggests adequate diagnostic accuracy for differentiating MCI from healthy controls, but values are much lower than for AD versus HC comparisons.
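One way to see how much weaker the MCI discrimination is than the AD discrimination is to collapse each pooled sensitivity/specificity pair into a diagnostic odds ratio, DOR = [Se / (1 − Se)] / [(1 − Sp) / Sp]. The Python sketch below applies this formula to the pooled immediate recall estimates reported in this section; the function name is illustrative, and a DOR implied by pooled Se and Sp need not equal the separately pooled DOR reported in the tables.

```python
def diagnostic_odds_ratio(se: float, sp: float) -> float:
    """Odds of an impaired score in cases divided by the odds in controls."""
    if not (0.0 < se < 1.0 and 0.0 < sp < 1.0):
        raise ValueError("Se and Sp must lie strictly between 0 and 1")
    return (se / (1.0 - se)) / ((1.0 - sp) / sp)


# Pooled immediate recall estimates reported in this section.
dor_ad = diagnostic_odds_ratio(se=0.87, sp=0.88)   # AD vs HC
dor_mci = diagnostic_odds_ratio(se=0.72, sp=0.81)  # MCI vs HC
print(f"AD vs HC: {dor_ad:.1f}; MCI vs HC: {dor_mci:.1f}")  # AD vs HC: 49.1; MCI vs HC: 11.0
```

The implied DOR of roughly 49 for AD versus HC against roughly 11 for MCI versus HC expresses in a single number the gap that the separate Se and Sp values show.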

Fig. 8.

Paired forest plot MCI vs HC Immediate Recall measures. MCI: Mild Cognitive Impairment, HC: healthy controls, TP: true positive, FP: false positive, FN: false negative, TN: true negative

Fig. 9.

Hierarchical summary receiver operating characteristic (SROC) curve for MCI vs HC Immediate Recall measures. Conf.region = 95% confidence region

To assess for potential bias in studies where the measures examined were also used to diagnose participants with MCI, we classified all 17 data points according to whether or not the measure was used to diagnose participants. A total of 13 studies did not use the measure under examination for diagnosis of participants, with 1 study using the measure for diagnosis and the remaining 3 studies being unclear as to how the measure was used in participant diagnosis. A meta-analysis of the 13 studies not using the measure for diagnosis was conducted (Table 5), indicating that the immediate memory recall measures continued to display adequate diagnostic accuracy for differentiating MCI from HC with values remaining lower than the suggested minimum cut-off for sensitivity (Se = .73, 95% CI [.63, .81]) and at the specificity cutoff recommended by the Consensus Workgroup (1998) for detecting AD (Sp = .80, 95% CI [.75, .85]). Visual inspection of the forest plots and SROC curves (Supplemental Figures xxi and xxii) supports this conclusion.

For immediate memory recall subclasses, all types of measures displayed at least adequate sensitivity and specificity (Table 4; List Free Recall Se = .72, 95% CI [.62, .81], Sp = .81, 95% CI [.75, .86]; Story Free Recall Se = .74, 95% CI [.50, .89], Sp = .74, 95% CI [.60, .84]; List Cued / Selective Reminding Se = .74, 95% CI [.54, .87], Sp = .84, 95% CI [.73, .90]). Visual inspection of the SROC curves for Immediate List Free Recall, Immediate Story Free Recall, and List Cued / Selective Reminding tests (Fig. 10) confirms that these measures display adequate (greater than 70%) diagnostic accuracy for differentiating MCI from healthy controls. Forest plots for subclass analyses are presented in the Online Resource (Figures x–xii). Many of the studies reporting on immediate story recall also report sensitivity and specificity for immediate recall of word lists. Within these studies, immediate list recall generally tended to show better sensitivity and specificity than immediate story recall (e.g., Baek et al. 2012; Blanco-Campal et al. 2009; Duff et al. 2010).

Fig. 10.

Hierarchical summary receiver operating characteristic (SROC) curve for MCI vs HC for subclasses of Immediate Recall measures. Conf.region = 95% confidence region

Three additional studies investigated measures of immediate memory that do not fall into any of the above-mentioned categories. Loewenstein et al. (2006) investigated the diagnostic accuracy of the immediate recall portion of WMS-III Visual Reproduction and report 44% sensitivity and 91% specificity. Gavett et al. (2012) report sensitivity (86%) and specificity (90%) values above the suggested minimum cutoff of 80% (Consensus Workgroup 1998) for the immediate recall portion of NAB Daily Living Memory, which assesses memory for common daily information (e.g., medications, addresses). Duff et al. (2010) report low sensitivity (35%) but good specificity (85%) for a combined RBANS memory score (immediate memory scores for list and story).

Overall, although most studies of the diagnostic accuracy of immediate memory measures in MCI do not reach the 80% minimum cutoff that the Consensus Workgroup (1998) proposed for differentiating AD from HC, many exceed 70% sensitivity and specificity.
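The labels used throughout this section are: meeting the 80% Consensus Workgroup cutoff, "adequate" (above 70%), or below 70%. They can be made explicit as a small rule. The Python sketch below applies that rule to the immediate recall subclass estimates reported above. It also flags when a 95% CI straddles the 80% cutoff, which shows how much uncertainty sits behind each label. Treating values of exactly .70 or .80 as passing is an assumption.

```python
CONSENSUS_CUTOFF = 0.80  # minimum proposed by the Consensus Workgroup (1998)
ADEQUATE_FLOOR = 0.70    # informal "adequate" floor used in this section


def rate(value: float) -> str:
    """Label a sensitivity or specificity point estimate (boundaries pass; an assumption)."""
    if value >= CONSENSUS_CUTOFF:
        return "meets 80% cutoff"
    if value >= ADEQUATE_FLOOR:
        return "adequate"
    return "below 70%"


def ci_straddles_cutoff(lower: float, upper: float, cutoff: float = CONSENSUS_CUTOFF) -> bool:
    """True when the 95% CI includes values on both sides of the cutoff."""
    return lower < cutoff <= upper


# Immediate recall subclass estimates for MCI vs HC, as reported in the text (Table 4)
subclasses = {
    "List Free Recall": {"se": (0.72, 0.62, 0.81), "sp": (0.81, 0.75, 0.86)},
    "Story Free Recall": {"se": (0.74, 0.50, 0.89), "sp": (0.74, 0.60, 0.84)},
    "List Cued / Selective Reminding": {"se": (0.74, 0.54, 0.87), "sp": (0.84, 0.73, 0.90)},
}

for name, stats in subclasses.items():
    for metric, (est, lo, hi) in stats.items():
        print(f"{name} {metric}: {est:.2f} -> {rate(est)}; "
              f"CI straddles 80%: {ci_straddles_cutoff(lo, hi)}")
```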

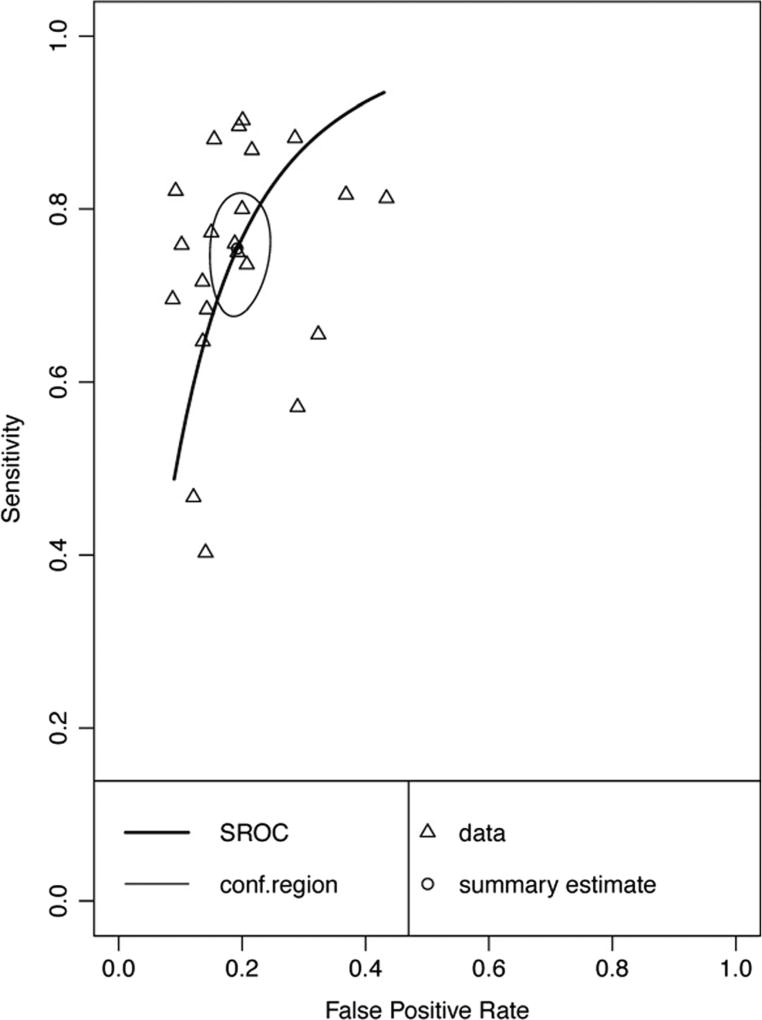

Delayed Memory

Twenty-two data points contributed to the evaluation of delayed recall measures in differentiating MCI from HC (Table 6, Fig. 11). Overall, these measures demonstrated adequate diagnostic accuracy. Sensitivity was below, but approaching, the 80% cutoff proposed by the Consensus Workgroup (1998) (Se = .75, 95% CI [.69, .81]), and specificity was just above it (Sp = .81, 95% CI [.77, .84]; Table 6). Visual inspection of the forest plots (Fig. 11) and SROC curves (Fig. 12) further confirms adequate diagnostic accuracy of delayed recall measures for differentiating MCI from HC, with values lower than for AD vs HC comparisons. Within studies reporting both delayed and immediate word-list recall, neither type of measure showed consistently higher diagnostic accuracy than the other.

Fig. 11.

Paired forest plot MCI vs HC Delayed Recall measures. MCI: Mild Cognitive Impairment, HC: healthy controls, TP: true positive, FP: false positive, FN: false negative, TN: true negative

Fig. 12.

Hierarchical summary receiver operating characteristic (SROC) curve for MCI vs HC Delayed Recall measures. Conf.region = 95% confidence region

To assess potential bias in studies where the measure under examination was also used to diagnose participants with MCI, we classified all 22 data points according to whether the measure was used in diagnosis. A total of 16 studies did not use the measure under examination for diagnosis of participants, 1 study used the measure for diagnosis, and in the remaining 5 studies it was unclear how the measure was used in participant diagnosis. A meta-analysis of the 16 studies that did not use the measure for diagnosis (Table 5) indicated that delayed memory recall measures continued to display adequate diagnostic accuracy for differentiating MCI from HC: sensitivity remained below the suggested minimum cutoff (Se = .76, 95% CI [.68, .82]), and specificity remained at the cutoff recommended by the Consensus Workgroup (1998) for detecting AD (Sp = .81, 95% CI [.77, .85]). Visual inspection of the forest plots and SROC curves (Supplemental Figures xxiii and xxiv) supports this conclusion.

Forest plots for delayed memory recall subclasses are presented in the Online Resource (Figures xiii–xvi). All subclasses display sensitivity values below the recommended cutoff (generally in the adequate range), although several specificity values exceed it (List Free Recall Se = .73, 95% CI [.64, .81], Sp = .83, 95% CI [.77, .88]; Story Free Recall Se = .74, 95% CI [.56, .86], Sp = .79, 95% CI [.70, .85]; List Cued / Selective Reminding Se = .72, 95% CI [.58, .82], Sp = .85, 95% CI [.76, .91]; Visual Recall Se = .69, 95% CI [.33, .91], Sp = .82, 95% CI [.64, .92]). Visual inspection of the SROC curves for the delayed recall subclasses (Fig. 13) confirms that all measures display adequate diagnostic accuracy for differentiating MCI from HC. However, threshold effects were apparent for list cued / selective reminding, as evidenced by the forest plot and the rho correlation. In studies that examined both delayed and immediate story recall, delayed story recall generally yielded comparable or better sensitivity and/or specificity than immediate story recall.
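A threshold effect arises when studies apply different cutoffs to the same kind of measure. A more lenient cutoff raises sensitivity and lowers specificity at the same time. The rho reported in this review presumably comes from its hierarchical model. A simpler screen that is often used instead is the Spearman correlation between study-level sensitivity and false-positive rate (1 - specificity), where a clearly positive value suggests a threshold effect. The Python sketch below implements that screen without external libraries. The example values are hypothetical.

```python
import math


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x: list[float], y: list[float]) -> float:
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for constant input")
    return sxy / math.sqrt(sxx * syy)


def threshold_effect_rho(se: list[float], sp: list[float]) -> float:
    """Spearman correlation between sensitivity and false-positive rate (1 - Sp)."""
    fpr = [1 - s for s in sp]
    return pearson(average_ranks(se), average_ranks(fpr))


# Hypothetical study-level estimates for one delayed recall subclass
se = [0.60, 0.68, 0.75, 0.83, 0.90]
sp = [0.93, 0.90, 0.84, 0.80, 0.71]
print(round(threshold_effect_rho(se, sp), 2))
```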

Fig. 13.

Hierarchical summary receiver operating characteristic (SROC) curve for MCI vs HC for subclasses of Delayed Recall measures. Conf.region = 95% confidence region

For a few types of measures, too few studies (<3) were found for inclusion in a meta-analysis. Of the two studies investigating the sensitivity and specificity of verbal retention scores (list or story), only one of the four measures (RBANS List Learning Retention; Clark et al. 2010) exceeded the suggested 80% cutoff (Consensus Workgroup 1998). The other tasks report specificity values above 80% but sensitivity values below 70%. Comparing measures within the same study revealed a pattern of superior sensitivity for recall scores relative to retention scores from the same measure (e.g., CERAD Word List; Blanco-Campal et al. 2009). By contrast, comparisons within the two studies (Lemos et al. 2015; Park et al. 2016) that investigated both immediate and delayed conditions of cued or selective reminding paradigms yielded mixed results. Park et al. (2016) reported higher sensitivity and specificity for the delayed than for the immediate conditions of the RI-24 (adapted from the RI-48), whereas Lemos et al. (2015) reported comparable values for the immediate and delayed conditions of the Portuguese version of the FCSRT.

Within the “Other Delayed Recall” category, two of the three studies examined the Delayed Memory Index of the RBANS (Duff et al. 2010; Karantzoulis et al. 2013). Both report specificity exceeding the 80% suggested minimum cutoff (Consensus Workgroup 1998), but sensitivity was variable, with only one study (Karantzoulis et al. 2013) demonstrating adequate sensitivity (72%). Methodological differences between the two studies may account for this discrepancy. Specifically, although it is not explicitly stated, Karantzoulis et al. (2013) presumably used the RBANS to diagnose individuals with MCI. This circularity has the potential to inflate sensitivity. By comparison, Duff et al. (2010) explicitly did not use the RBANS to diagnose individuals with MCI and found much lower sensitivity on this measure (56%). Consistent with Gavett et al.’s (2012) findings for immediate recall on the NAB Daily Living Memory measure, the delayed recall trial yielded sensitivity and specificity values exceeding the suggested minimum cutoff of 80% (97% and 88%, respectively).
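The circularity described above is usually called incorporation bias. The toy Python simulation below is an illustration under invented assumptions, not a model of either study. It shows the direction of the effect: the same simulated participants and the same test cutoff yield higher sensitivity when the test score also feeds into the MCI diagnosis. The latent-ability model, the noise levels, and the -1.0 cutoffs are all hypothetical.

```python
import random


def simulate(incorporate: bool, n: int = 20000, seed: int = 1) -> tuple[float, float]:
    """Sensitivity and specificity of a simulated memory test against a simulated
    MCI diagnosis that either excludes or incorporates the test score."""
    rng = random.Random(seed)  # same seed -> same simulated participants in both runs
    tp = fn = tn = fp = 0
    for _ in range(n):
        ability = rng.gauss(0, 1)          # hypothetical latent memory ability
        test = ability + rng.gauss(0, 1)   # memory test under evaluation
        other = ability + rng.gauss(0, 1)  # other information used for diagnosis
        basis = (test + other) / 2 if incorporate else other
        has_mci = basis < -1.0             # hypothetical diagnostic rule
        positive = test < -1.0             # hypothetical test cutoff
        if has_mci and positive:
            tp += 1
        elif has_mci:
            fn += 1
        elif positive:
            fp += 1
        else:
            tn += 1
    return tp / (tp + fn), tn / (tn + fp)


independent_se, independent_sp = simulate(incorporate=False)
circular_se, circular_sp = simulate(incorporate=True)
print(f"independent diagnosis: Se = {independent_se:.2f}, Sp = {independent_sp:.2f}")
print(f"circular diagnosis:    Se = {circular_se:.2f}, Sp = {circular_sp:.2f}")
```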

In summary, the sensitivity and specificity of delayed recall measures were markedly lower for differentiating MCI from HC than for differentiating AD from HC, and often did not meet the suggested minimum cutoffs (Consensus Workgroup 1998). However, most values reported in the meta-analyses and in our qualitative review exceeded 70% sensitivity and specificity.

Associative Learning

Eight studies reporting on fourteen different measures of associative learning were identified through the literature search, and these studies are reviewed qualitatively. Four measures exceeded the suggested minimum cutoffs of the Consensus Workgroup (1998). Wang et al. (2013) examined the Modified Spatial Context memory Test (SCMT).8 The authors report strong sensitivity and specificity for both the total score (97% and 93%) and the event-place association memory subtest (97% and 100%). A measure of associative memory investigated by Pike et al. (2013), the WMS-IV VPA delayed score, also exceeded the suggested cutoffs for sensitivity and specificity. Finally, Troyer et al. (2008) report sensitivity and specificity for the Brief Visual Memory Test – Revised (BVMT-R) Object Location Recall9 test (Benedict 1997). Troyer et al. derived an association score separately from an accuracy score in order to examine associative learning independent of accuracy, and they report 86% sensitivity and 97% specificity for the association score. Troyer et al. also examined the sensitivity and specificity of Digit Symbol Incidental Recall.10 They report specificity of 90%, which exceeded the 80% minimum cutoff, and sensitivity of 76%.

In summary, tasks of associative learning varied widely in their sensitivity and specificity for distinguishing older adults with MCI from HC participants. Only eight of the fourteen measures are reported to have both sensitivity and specificity above 70%. Studies that investigated the same measure in both AD versus HC and MCI versus HC comparisons generally report higher values for AD versus HC.

Other Memory Measures

This section consists mainly of studies that investigated the diagnostic accuracy of recognition and combined/interference scores. Additionally, three studies are included in the “miscellaneous” portion of the Online Resource (Table iii): two report on prospective memory measures (Blanco-Campal et al. 2009; Delprado et al. 2012), and one reports diagnostic accuracy stratified by education group (Yassuda et al. 2010).

Six studies investigated the diagnostic accuracy of recognition measures. Only one (Rabin et al. 2009) reports sensitivity (92%) and specificity (84%) values that exceed the 80% suggested minimum cutoff (Consensus Workgroup 1998), for the recognition portion of WMS-III Logical Memory, although the cutoff score used was not reported. Of the remaining five studies, only one reports both sensitivity and specificity above 70% (Fuld Object Memory Evaluation; Loewenstein et al. 2004). The others report sensitivity values of 74% or below and specificity values of 73% or below for word-list recognition (Baek et al. 2012; Duff et al. 2010; Karrasch et al. 2005), story recognition (Baek et al. 2012), or photograph recognition (Ritter et al. 2006).

Overall, with the exception of two studies (Loewenstein et al. 2004; Rabin et al. 2009), recognition measures do not appear to provide strong diagnostic accuracy in distinguishing MCI from HC groups. The tradeoff between sensitivity and specificity in MCI versus HC comparisons also varied across recognition measures.

With regard to combined or interference scores, Shankle et al. (2005) investigated the diagnostic accuracy of a weighted score derived from the CERAD Word List test using correspondence analysis, reporting sensitivity (94%) and specificity (89%) that both exceed the minimum suggested cutoff. Of note, the authors did not report the cutoff score used to derive these values, which limits the clinical applicability of the results. Two studies by Loewenstein et al. (2004, 2006) report sensitivity and specificity for the Fuld Combined Interference score. The first (Loewenstein et al. 2004) reports sensitivity that nearly met the 80% minimum recommended cutoff (Consensus Workgroup 1998), whereas the second (Loewenstein et al. 2006) reports lower sensitivity of 70%. Specificity was comparable between the two studies (87% and 91%, respectively). Crocco et al. (2014) examined the sensitivity and specificity of the Loewenstein-Acevedo Scales of Semantic Interference and Learning (LASSI-L), which involves free and cued recall of two different 15-item word lists (see Crocco et al. for a full task description). The authors report high diagnostic accuracy for a combined score of List A and List B cued recall (88% sensitivity and 92% specificity).
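An unreported cutoff matters because sensitivity and specificity describe a test at a particular cutoff, not the test in isolation. The Python sketch below uses hypothetical recall scores to show this. It sweeps candidate cutoffs, treats a score at or below the cutoff as impaired, and picks the cutoff that maximizes Youden's J (Se + Sp - 1). Youden's J is only one common selection rule and is not necessarily what the reviewed studies used.

```python
def sweep_cutoffs(impaired: list[float], controls: list[float]) -> list[tuple[float, float, float]]:
    """(cutoff, sensitivity, specificity) at every observed score, treating a
    score at or below the cutoff as a positive (impaired) result."""
    rows = []
    for c in sorted(set(impaired) | set(controls)):
        se = sum(s <= c for s in impaired) / len(impaired)
        sp = sum(s > c for s in controls) / len(controls)
        rows.append((c, se, sp))
    return rows


def youden_best(rows: list[tuple[float, float, float]]) -> tuple[float, float, float]:
    """Row maximizing Youden's J = Se + Sp - 1 (the first such row on ties)."""
    return max(rows, key=lambda r: r[1] + r[2] - 1)


# Hypothetical recall scores (higher = better memory)
mci_scores = [3, 4, 5, 6, 7]
hc_scores = [6, 8, 9, 10, 11]
rows = sweep_cutoffs(mci_scores, hc_scores)
for cutoff, se, sp in rows:
    print(f"cutoff <= {cutoff}: Se = {se:.2f}, Sp = {sp:.2f}")
print("Youden-optimal row:", youden_best(rows))
```

Each printed row is a different, equally "valid" Se/Sp pair for the same test. Without the cutoff, a reader cannot tell which pair a published result corresponds to.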