Abstract

Electronic health records (EHRs) hold great promise for identifying cohorts and enhancing research recruitment. Such approaches are sorely needed, but there are few descriptions in the literature of prevailing practices to guide their use. A multidisciplinary workgroup was formed to examine current practices in the use of EHRs in recruitment and to propose future directions. The group surveyed consortium members regarding current practices. Over 98% of the Clinical and Translational Science Award Consortium responded to the survey. Brokered and self-service data warehouse access are in early or full operation at 94% and 92% of institutions, respectively, whereas EHR alerts to providers and to research teams are at 45% and 48%, respectively, and use of patient portals for research is at 20%. However, these percentages increase significantly to 88% and above if planning and exploratory work are considered cumulatively. For most approaches, implementation reflected perceived demand. Regulatory and workflow processes were similarly varied, and many respondents described substantive restrictions arising from logistical constraints and limitations on collaboration and data sharing. Survey results reflect wide variation in implementation and approach, and point to a strong need for comparative research and development of best practices to protect patients and facilitate interinstitutional collaboration and multisite research.

Key words: Electronic health records, recruitment, clinical research, biomedical informatics, CTSA

Introduction

Participant recruitment is often the major rate-limiting step in the conduct of clinical trials and other health-related research [1, 2]. Electronic health records (EHRs) contain rich patient information and are a promising resource to facilitate recruitment activities, such as eligibility determination and engaging prospective participants [3–5]. In recent years, there has been widespread adoption of EHRs in health care systems, hospitals, and clinical practices; nearly all hospitals had a certified EHR technology as of 2015 [6]. However, investigators face differing and sometimes conflicting institutional policies and practices for the use of EHRs, which can discourage collaboration and inhibit research.

The National Institutes of Health’s National Center for Advancing Translational Sciences funded a consortium of ~60 centers through the Clinical and Translational Science Award (CTSA) with a focus on interinstitutional collaboration to accelerate the initiation, recruitment, and reporting of multisite trials. The CTSA Consortium established a workgroup of trialists, regulatory specialists, informaticians, and others to describe policies and practices for the research use of EHRs. The workgroup conducted a survey of key individuals at CTSA-funded institutions to examine informatics tools employed across the Consortium and current practices in EHR-based cohort identification and research recruitment. Results from the survey were analyzed to elucidate the range of practices across the CTSA Consortium. From review of the literature, there are inadequate comparative data to firmly establish best practices; however, the workgroup developed a preliminary sense of practices that may help facilitate transparent research recruitment using EHRs while accommodating the interests of patients, clinicians, and health care systems.

Methods

Data Collection

The workgroup developed a survey (online Supplementary Material) to collect data on current and emerging EHR-based recruitment practices at all CTSA sites (some comprised more than 1 institution) with regard to institution-specific informatics practices and tools, and regulatory and workflow issues. The questionnaire was constructed in REDCap [7] and emailed to individual CTSA program directors by the CTSA coordinating center at Vanderbilt University. Four weeks were allowed for responses from March to April 2016. Directors were asked to consult with their informatics, regulatory, and recruitment cores, and to respond as a team as needed. The survey process was reviewed and determined exempt by the Institutional Review Board (IRB) at the University of North Carolina at Chapel Hill.

Using a combination of closed and open-ended questions, we queried participants regarding site-specific implementation of 7 common informatics methods:

Use of EHR patient portals to notify patients of research opportunities.

Use of electronic alerts to care providers about patients in clinic who meet eligibility requirements.

Use of electronic alerts to the research team about patients in clinic who meet eligibility requirements.

Access to data warehouse via a staff member/analyst.

Use of self-service tools to run de-identified queries.

Use of business intelligence tools to give research teams direct query access.

Use of EHRs to build registries to aid in recruitment.

For each method, participants were asked to assess the level of implementation at their institutions using a 5-level Likert-type scale, which included options ranging from no plans to implement such a tool, to fully operational implementations. An option was provided for not sure or not applicable.

Participants were also asked to estimate the level of demand for each method by researchers at their institution using a 6-level Likert-type scale with options ranging from never, to rarely, to very frequently. Here again an option was provided for not sure or not applicable.

To explore regulatory and workflow processes, we posed a hypothetical case involving a study of type 2 diabetes (online Supplementary Material). The local principal investigator encounters multiple steps in order to use EHR data to aid in recruitment: regulatory review and approval, engagement of informatics staff to generate a list of potential participants by translating inclusion/exclusion criteria into a database query, and finally the process of reaching out to patients or their providers to determine if patients are interested in study participation. Based on this hypothetical scenario, we posed several questions to survey participants about the regulatory and workflow processes at their institutions.
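As an illustration only, the second step in this scenario (translating inclusion/exclusion criteria into a database query) might look like the following minimal sketch. The table layout, column names, sample rows, and eligibility thresholds are all hypothetical; they are not taken from the survey, the supplementary scenario, or any institution's data warehouse.

```python
import sqlite3

# Hypothetical, de-identified rows invented purely for illustration:
# (patient_key, age, has_t2dm_dx, latest_hba1c, pregnant)
ROWS = [
    ("p001", 54, 1, 8.2, 0),
    ("p002", 17, 1, 9.0, 0),  # fails assumed inclusion: age >= 18
    ("p003", 61, 0, 5.4, 0),  # fails assumed inclusion: type 2 diabetes diagnosis
    ("p004", 47, 1, 7.9, 1),  # meets assumed exclusion: pregnancy
    ("p005", 70, 1, 6.1, 0),  # fails assumed inclusion: HbA1c >= 7.5
    ("p006", 39, 1, 7.5, 0),
]

# Assumed criteria expressed as a query: adults with a type 2 diabetes
# diagnosis and HbA1c >= 7.5 who are not pregnant.
ELIGIBILITY_SQL = """
SELECT patient_key
FROM patients
WHERE has_t2dm_dx = 1
  AND age >= 18
  AND latest_hba1c >= 7.5
  AND pregnant = 0
ORDER BY patient_key
"""


def find_candidates(rows):
    """Load rows into an in-memory table and return keys of matching patients."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE patients ("
            "patient_key TEXT, age INTEGER, has_t2dm_dx INTEGER, "
            "latest_hba1c REAL, pregnant INTEGER)"
        )
        conn.executemany("INSERT INTO patients VALUES (?, ?, ?, ?, ?)", rows)
        return [row[0] for row in conn.execute(ELIGIBILITY_SQL)]
    finally:
        conn.close()


candidates = find_candidates(ROWS)
print(candidates)
```

In practice such a query would be written by an analyst or honest broker against the institution's own schema, and the resulting list would be released only under the applicable regulatory approvals.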

Data Analysis

Data were exported from REDCap and analyzed. Quantitative statistics were generated for structured answers using Microsoft Excel. Free text responses were analyzed using QSR International’s NVivo qualitative data analysis software.

Results

Participant/EHR Characteristics

There were 64 responses in the survey representing over 98% of the CTSA Consortium; 122 individuals contributed to the responses. The largest group of respondents (48%) self-identified as informatics specialists while 16% did so as recruitment specialists, 11% as regulatory specialists, and 25% as other.

When asked what EHR program their institution/hospital uses, 42 respondents said Epic, 13 said Cerner, and 3 said they use a homegrown system. Fourteen indicated “other” (including most commonly Allscripts and Centricity), with 1 of these reporting they were in the planning stages of identifying a system. Five CTSAs reported using 2 or more EHR systems.

Methods of EHR-Based Recruitment

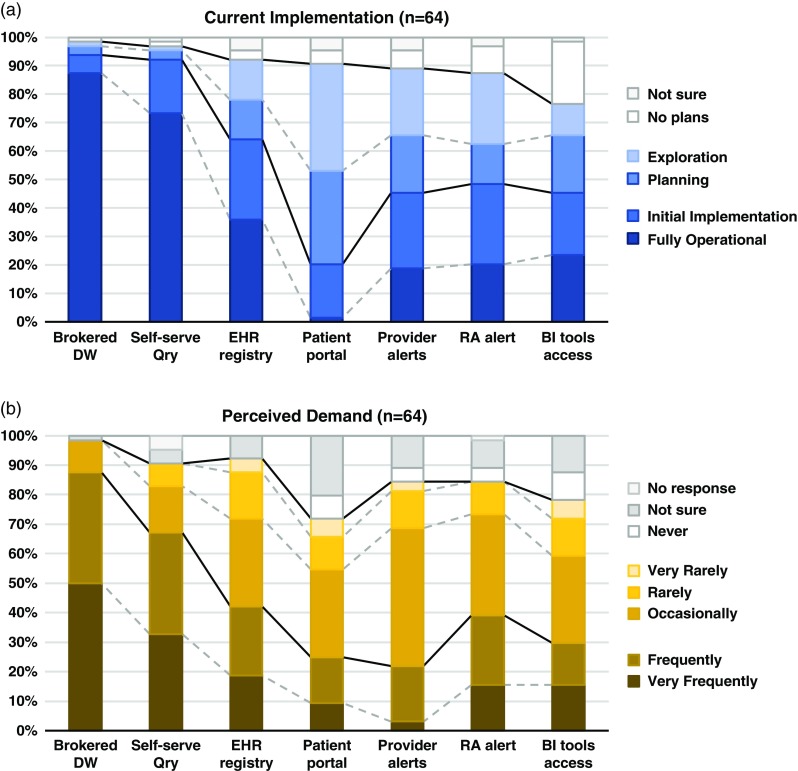

Although the use of patient portals (e.g., Epic MyChart) to notify patients about research opportunities was reported as initially implemented or fully operational at only 20% of responding institutions, 70% were either exploring or planning the use of such tools (Table 1). Demand for these tools was reported as frequent or very frequent by 25% of respondents, and another 30% described it as “occasional” (see Fig. 1).

Table 1.

Responses to questions regarding current implementation of methods of electronic health records (EHR)-based cohort identification and recruitment

| | Patient portal, n | Patient portal, % | Provider alerts, n | Provider alerts, % | Research team alerts, n | Research team alerts, % | Brokered data warehouse, n | Brokered data warehouse, % | Self-service query, n | Self-service query, % | Business intelligence tools, n | Business intelligence tools, % | EHR registry, n | EHR registry, % |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Implementation | | | | | | | | | | | | | | |
| Not sure | 3 | 5 | 3 | 5 | 2 | 3 | 1 | 2 | 1 | 2 | 1 | 2 | 3 | 5 |
| No plans | 3 | 5 | 4 | 6 | 6 | 9 | 0 | 0 | 1 | 2 | 14 | 22 | 2 | 3 |
| Exploration | 24 | 38 | 15 | 23 | 16 | 25 | 1 | 2 | 1 | 2 | 7 | 11 | 9 | 14 |
| Planning | 21 | 33 | 13 | 20 | 9 | 14 | 2 | 3 | 2 | 3 | 13 | 20 | 9 | 14 |
| Initial Implementation | 12 | 19 | 17 | 27 | 18 | 28 | 4 | 6 | 12 | 19 | 14 | 22 | 18 | 28 |
| Fully Operational | 1 | 2 | 12 | 19 | 13 | 20 | 56 | 88 | 47 | 73 | 15 | 23 | 23 | 36 |
| Demand | | | | | | | | | | | | | | |
| Not sure | 13 | 20 | 7 | 11 | 6 | 9 | 1 | 2 | 3 | 5 | 8 | 13 | 5 | 8 |
| Never | 5 | 8 | 3 | 5 | 3 | 5 | 0 | 0 | 0 | 0 | 6 | 9 | 0 | 0 |
| Very Rarely | 4 | 6 | 2 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 4 | 6 | 3 | 5 |
| Rarely | 7 | 11 | 8 | 13 | 7 | 11 | 0 | 0 | 5 | 8 | 8 | 13 | 10 | 16 |
| Occasionally | 19 | 30 | 30 | 47 | 22 | 34 | 7 | 11 | 10 | 16 | 19 | 30 | 19 | 30 |
| Frequently | 10 | 16 | 12 | 19 | 15 | 23 | 24 | 38 | 22 | 34 | 9 | 14 | 15 | 23 |
| Very Frequently | 6 | 9 | 2 | 3 | 10 | 16 | 32 | 50 | 21 | 33 | 10 | 16 | 12 | 19 |

Patient portal, use of EHR patient portals to notify patients of research opportunities; Provider alerts, use of electronic alerts to care providers of patients in clinic meeting eligibility requirements; Research team alerts, use of electronic alerts to the research team if patients in clinic meet eligibility requirements; Brokered data warehouse, access to data warehouse by staff members; Self-service query, use of self-service tools to run de-identified queries; Business intelligence tools, researchers given direct query access to data warehouse through business intelligence tools; EHR registry, use of EHRs to build patient lists to aid in recruitment.

Fig. 1.

Summary of responses to questions regarding methods of electronic health records (EHR)-based cohort identification and recruitment. (a) Current implementation. (b) Perceived demand. Brokered data warehouse (DW), access to data warehouse by staff members; Self-serve Qry, use of self-service tools to run de-identified queries; EHR registry, use of EHRs to build patient lists to aid in recruitment; Patient portal, use of EHR patient portals to notify patients of research opportunities; Provider alerts, use of electronic alerts to care providers of patients in clinic meeting eligibility requirements; Research associate (RA) alert, use of electronic alerts to the research team if patients in clinic meet eligibility requirements; Business intelligence (BI) tools access, researchers given direct query access to data warehouse through business intelligence tools.

EHR alerts to care providers about patients in clinic meeting eligibility criteria were reported as in exploratory or planning stages by 44% and initially or fully implemented by 45% of respondents. Demand for these tools was reported as frequent or very frequent by 22% and “occasional” by nearly half (47%) of the respondents. Similarly, EHR alerts addressed to research teams, when an eligible patient is scheduled or attends a clinic visit, were described as exploratory or planning by 39% and some form of implementation at 48%. However, reported demand for this method was notably higher, with 39% describing it as frequent/very frequent.

With regard to access to EHR data via a data warehouse, most institutions (94%) said they were in initial implementation of, or had fully operational processes for providing access via a staff member or analyst (Table 1). This high level of implementation was consistent with perceived demand for such services; 88% of respondents described demand as frequent/very frequent. Similarly, most institutions (92%) said they had initially or fully implemented self-service tools (e.g., i2b2 [8]), allowing researchers to run queries on de-identified aggregate data. Demand was also high, with two-thirds describing it as frequent/very frequent. Use of off-the-shelf business intelligence tools that allow researchers to run more complex queries on EHR data was much less prevalent with 45% reporting initial or full implementation. Perceived demand for these tools was mixed, with 30% reporting it as frequent or very frequent.

Finally, nearly two-thirds of institutions (64%) had initially or fully implemented the use of EHR data to build registries to aid in recruitment (Table 1). Demand for this approach was strong, with 30% describing it as occasional and 42% as frequent/very frequent.
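The combined "initially or fully implemented" percentages reported in this section can be reproduced from the counts in Table 1. The sketch below is an arithmetic check only, not the authors' analysis procedure (which used Microsoft Excel); the dictionary and function names are introduced here for illustration, and the denominator of 64 is the number of survey responses.

```python
# Counts copied from the "Initial Implementation" and "Fully Operational"
# rows of Table 1; the denominator is the 64 survey responses.
N_RESPONSES = 64

INITIAL = {
    "Patient portal": 12,
    "Provider alerts": 17,
    "Research team alerts": 18,
    "Brokered data warehouse": 4,
    "Self-service query": 12,
    "Business intelligence tools": 14,
    "EHR registry": 18,
}

FULL = {
    "Patient portal": 1,
    "Provider alerts": 12,
    "Research team alerts": 13,
    "Brokered data warehouse": 56,
    "Self-service query": 47,
    "Business intelligence tools": 15,
    "EHR registry": 23,
}


def pct_implemented(method):
    """Percentage of responses reporting initial or full implementation."""
    return round(100 * (INITIAL[method] + FULL[method]) / N_RESPONSES)


for method in INITIAL:
    print(f"{method}: {pct_implemented(method)}%")
```

Summing the rounded percentages in Table 1 directly (for example, 19% + 2% for patient portals) can differ by a point from the value computed on counts, which is why the check works from the n columns.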

When asked whether there were other EHR-related approaches they had considered, piloted, or implemented to facilitate research recruitment, 50 respondents (78%) said yes. Among those who provided additional details, common elements included:

National networks of data sharing, either industry based (such as TriNetX®, or Cerner PowerTrials) or funded programs such as the PCORnet and SHRINE-based networks.

Vendor-based or homegrown tools to assist in matching patients with clinical trials eligibility criteria.

Other outreach, for example, asking patients directly for permission to be contacted for recruitment purposes (including both opt-in and opt-out approaches); the use of patient portals and Web sites to allow patients to indicate preferred method of contact; the use of direct mail, electronic communication (email, text, apps), and phone; point of patient registration or clinic visit procedures; and community-based efforts.

Nearly three-fourths (72%) of respondents said they gather metrics or other evidence of the impact of EHR-based recruitment. Among those who provided additional details, descriptions fell into 3 broad categories: (1) system utilization measures, such as numbers of hits, requests, or logons; (2) recruitment measures, such as numbers of patients who were identified as potentially eligible, responded after being contacted, screened and/or enrolled; and (3) other user-related measures, such as the numbers of grants, publications, or the results of surveys and gathered anecdotes.

Workflow and Regulatory Process for Cohort Recruitment

When asked, “Does your institution have 1 or more established workflow processes for cohort recruitment into clinical research which leverages the power of EHR to identify large numbers?”, nearly two-thirds (64%) said yes, and another 22% said they were piloting such programs. Given the option to provide additional details, respondents described a variety of workflow elements, such as regulatory and related review/approval processes, including the use of committees that review requests; informatics tools and processes (e.g., i2b2/SHRINE, ACT, tools that link EHRs to CTMS or REDCap); data sources (e.g., databases, registries, data warehouses); tools and processes to assess eligibility; and tools and processes associated with initiating patient contact, including use of MyChart portal for recruitment purposes. They also described general processes and approaches, including the use of dedicated/specialized teams and services, including honest brokers, data analysts, and recruitment specialists; self-services approaches; and standard operating procedures on issues like direct mail recruitment, guidebooks, and consultations to improve recruitment letters. Examples of specific processes and tools respondents mentioned included:

A listing of patients who can be contacted after committee approval (as opposed to having to go through their direct provider).

An automated interface that streamlines the load of EHR data into REDCap; template implementation for data extraction to make the process easier and more efficient for analysts.

Using patient portals and tablets to engage volunteers to opt in to research, creating a flag in the EHR to allow subsequent identification.

Nearly one-third (30%) of respondents reported substantive restrictions on workflow processes, beyond regulatory reviews and approvals. These often included logistical constraints, such as limited resources (e.g., time, staff) to carry out recruitment processes, and the need for more training and awareness regarding the tools and systems available. In addition, respondents often described limitations related to collaborations and data sharing, such as intrainstitutional challenges (e.g., sharing data between the university and hospital); the need to identify a collaborator at the institution; and, with regard to multisite studies, additional steps such as contracting, data harmonization, network review panels and governance, and IRB approval and local Principal Investigator at each site. Somewhat less commonly, respondents noted:

Challenges associated with the nature of the study and/or data, such as studies that require chart review, study topics that are seen to be sensitive, and data availability in complex cases.

Additional regulatory requirements, such as compliance review of data security plans, and the need for data use agreements.

Challenges in the process of contacting patients, such as required provider involvement, creating burden for the provider as well as delays for the researcher, and the restrictions on the number of times a patient can be contacted for recruitment purposes.

A similar number of respondents (30%) reported that alternative approaches to regulatory and workflow processes had been implemented or piloted at their institution. Among those who offered additional details, descriptions commonly included workflow elements, such as:

Regulatory and related review/approval processes, for example enabling the informatics program to sign off on compliance/security issues for studies that meet criteria for routine data uses, and the use of a centralized or delegated IRB.

Informatics tools and processes, such as building a library of queries and computational phenotypes to streamline queries and enhance consistency across defined diseases.

Data sources, such as use of an external registry linked to EHRs of enrolled subjects.

Tools and processes to assess eligibility, for instance the informatics and participant core working together using honest brokers to develop lists of patients meeting inclusion/exclusion criteria.

Tools and processes associated with initiating patient contact, such as the expanded use of EHRs to engage patients in recruitment through MyChart.

Many also described general processes and approaches, such as the use of dedicated teams and services (e.g., a recruitment team that contacts providers on behalf of the study to obtain approval to contact the patient, and creation of a Participant Recruitment Center); the use of self-services tools that have built-in honest broker capability; and workflows tailored to the type of project, data needs and sources.

Finally, over one-third (36%) of respondents said they had additional insights to offer on using EHRs to facilitate research recruitment that might be useful. Workflow elements were commonly mentioned, including:

Regulatory and related review/approval processes, such as ensuring that HIPAA waivers for permission to contact for research recruitment become a standard part of the patient intake workflow; minimizing the time for regulatory review by the use of standard data use agreements developed in collaboration with the IRB and legal office; use of data review committees to relieve the burden of regulatory compliance assessment on the honest broker.

Informatics tools and processes, such as the importance of evaluating data quality and provenance for each query; strict segregation of identified versus de-identified data in data warehouse; use of open standards, non-proprietary software, and data extraction tools designed by programmers who are trained in statistics, and closer collaboration with academic informaticians and software engineers.

Data sources, such as addressing the physical, technological, and cultural separation between the operational and research sides of the institution; integrating EHRs from other clinical partners (with different EHR platforms) into data warehouse.

Tools and processes to assess eligibility, including the necessity of allowing flexibility by working in “what if” mode, and dialog with investigators to help them clarify their ideas.

Tools and processes for initiating patient contact, including: obtaining consent from patients for direct researcher contact in the future (including mention of a regional registry built by having admission clerks ask about willingness to be contacted); an automated algorithm to identify prospective participants de-identified to researchers, allowing a message to go directly to patients without releasing PHI to the investigator; the use of new technologies such as ResearchKit to aid in recruitment; development of templates and guidance for how to initiate contact; collection of data on satisfaction among patients who receive research invitations.

Respondents also offered insights on general processes and approaches, such as:

The use of dedicated teams and services (e.g., offering a suite of recruitment services with a mix of technology and human-supported options), and the importance of having “people available who have a good understanding of the tools, clinical contexts, etc. to help users develop reproducible and trustworthy best practices for obtaining and using data for research”.

The importance of creating a business model that justifies institutional support.

Other ideas, such as leveraging PCORnet experiences and recognition that communication, trust, effective process, and data sharing agreements are the key to working with community partners in collaborative clinical and translational research.

Recruitment Practices

Once a researcher receives a list of potential research participants, respondents reported a wide range of recruitment practices allowed at their institution (Table 2). Practices involving an intermediary were allowed at over half of institutions, including approaching potential participants in clinic who were previously identified (55%) and contact only after introduction by the care provider or clinic (53%). Direct approaches were less commonly allowed; for example, a letter sent from the researcher explaining how s/he got the potential participant’s name (34%).

Table 2.

Distribution of responses to the question “Once the researcher receives the list of participants, what recruitment practices are allowed, with IRB approval?”*

| Recruitment practices | n | % |
|---|---|---|
| Letter or email may be sent to potential participants inviting them to the research study | 49 | 76 |
| Investigators are allowed to use EHR to build a registry of potential participants for recruitment | 47 | 73 |
| Investigators are allowed to contact patients who have “opted in” to institutional research communication | 38 | 59 |
| Investigator allowed to approach potential participants in clinic who have been previously identified | 35 | 54 |
| Investigator contact with potential participants allowed only after introduction of PCP or clinic/practice | 34 | 53 |
| Investigators are allowed to call potential participants directly | 28 | 43 |
| Letter may be sent from researcher if it provides an explanation about how s/he got the potential participant’s name | 22 | 34 |
| Investigators are allowed to contact patients unless the patients have opted out of institutional research communications | 19 | 29 |
| Other | 15 | 23 |
| Contact with potential participants allowed only if researcher is an MD who works with the study population | 14 | 22 |

EHR, electronic health records; IRB, Institutional Review Board; PCP, primary care provider.

*Respondents were instructed to identify all the practices that were allowed at their institution.

Twenty-three percent of respondents indicated “other” recruitment practices, many of whom offered details on provider involvement, such as making initial contact to introduce the study or obtain consent for researcher contact, providing a signed letter for researchers to use to make initial contact, or providing approval to make contact. Many also noted that their recruitment practices were study specific, for example, depending on whether the study topic is considered sensitive or specific IRB approvals. A few described recruitment processes carried out by others, such as recruitment specialists.

Variations on the Hypothetical Scenario

When asked whether workflow processes would substantially differ if the research involved a rare disease, cancer, or pediatrics, only a minority of participants said yes. One respondent indicated that, for a rare disease, they would extend the search to include patients from other medical centers within their larger academic system. For a cancer study, a few (8%) respondents identified additional review and approval processes (e.g., cancer center specific processes) and the use of cancer registries for recruitment. About one-fifth (19%) of respondents noted substantial differences for pediatric studies, based on separation between health care entities focused on children versus adults, initial contact with surrogates (e.g., parents, guardians), and separate IRBs.

Discussion

There is widespread recognition of the nascent promise of EHRs to facilitate cohort identification and research recruitment [3, 4]. Our survey results suggest significant activity in this arena at CTSA institutions, with the level of implementation across various practices and tools ranging from exploratory to fully operational. In nearly every case, reported demand for these practices and tools exceeded implementation (Fig. 1). Regulatory and workflow processes were similarly varied, and a substantive proportion of survey respondents described restrictions on the use of EHR data for recruitment purposes arising from logistical constraints and limitations on collaboration and data sharing. These findings highlight the need for further implementation and evaluation research—including comparative research—to help identify best practices that efficiently and effectively meet the needs of patients, providers, and researchers.

Studies thus far have generally indicated public support for research use of EHRs [9–11], albeit with some potential concerns about the use of sensitive information [12]. EHR patient portals are one possible tool to enhance patients’ awareness of research opportunities and perhaps offer some level of control. Even so, only a minority of institutions responding to our survey had implemented the use of EHR patient portals for research purposes. In addition, there continue to be disparities in individuals’ access to and use of EHRs and PHRs, particularly among certain socio-demographic groups [13]; however, this is improving and there is some evidence that underrepresented groups are just as amenable to recruitment via such approaches [14].

With regard to electronic alerts to care providers about patients’ eligibility and recruitment for research, our survey found what could arguably be described as a moderate level of both implementation and demand. Embi et al. described an approach to research decision support at the point of care referred to as a “clinical trial alert” that demonstrated an 8-fold increase in physician-generated referrals to studies and a doubling of enrollment [15]. Rollman et al. [16] compared similar electronic physician prompts to waiting room case finding, and found that physicians referred a smaller number of patients (compared to the number approached by waiting room recruiters), but a substantially higher proportion of them met inclusion criteria and enrolled. There were also significant demographic and clinical differences between subjects enrolled via the 2 methods. However, declining responsiveness to alerts (or “alert fatigue”) is a well-recognized concern [17]. Embi et al. [18] examined physician perceptions of a clinical trials alert system for subject recruitment. Although 77% of physician respondents appreciated being reminded about the trial, a similar majority stated that they dismissed the alerts sometimes (54%) or every time (25%). Among those who ignored all of the alerts, common reasons included lack of time, knowledge of patients’ ineligibility, and limited knowledge of the trial. Compared to alerts targeting providers, our survey respondents reported a higher demand for alerts to the research team, and published reports [19–21] provide preliminary indication that these may help increase the efficiency of the recruitment process. Unlike providers, who lack time and incentive to act on recruitment alerts, clinical research staff are highly motivated to receive recruitment alerts and often seek to create such alerts with the help of the IT team. In the study reported by Thadani et al., creating recruitment alerts for research coordinators reduced the manual chart review effort for the ACCORD study by 90%.

Data warehouses with self-service query tools had been initially or fully implemented at nearly all of our responding institutions and were described as the subject of frequent demand. Our results suggest that business intelligence tools have less often been implemented, but the benefits of using advanced analytics to build complex queries across multiple data sets from disparate sources have been demonstrated in several contexts [22–25].

Finally, approaches involving direct patient engagement, for example building registries of patients who have agreed to be contacted, had been initially or fully implemented at a majority of our responding institutions. Positive experiences with these approaches have been described in the literature [26–29].

Our results suggest it may be common and essential practice for an institution to offer a suite of recruitment services comprising a mixture of the above approaches, including both technology and human-supported options. Many institutions have self-service tools and also provide support for investigators with data analysts and recruitment specialists who together develop efficient queries to support recruitment or gather data. Investigator dialog with an analyst is often necessary to help clarify ideas and refine selection criteria for a study population. Table 3 highlights some of our findings. Greater experience and careful evaluation over time will provide insights into optimizing services that enhance sensitivity and specificity of recruitment strategies.

Table 3.

Lessons learned

| Related to EHR-based methods |
|---|
| Brokered access to data warehouses (94%) and self-service query (92%) are widely implemented and used |
| Demand for EHR data for research use is high (88%) |
| When use of EHR data for recruitment is limited, it is often the result of logistical constraints and limitations on collaboration |
| A minority of institutions use EHR patient portals for research purposes (20%) |
| Electronic alerts targeting care providers and research teams about patients’ eligibility are moderately implemented (45% and 48%, respectively); however, those targeting research teams seem to be in higher demand (22% and 39%, respectively) |
| Related to workflow and regulatory processes |
| A variety of direct patient engagement approaches (e.g., registries of potential research subjects) are implemented at the majority of institutions |
| Many institutions provide a combination of self-service tools, data analysts and recruitment specialists |
| Recruitment procedures (including cohort identification and contact) vary widely |

EHR, electronic health records.

In all cases, research recruitment must take place within well-established principles for ethically responsible research. Even so, it is important to distinguish between risks associated with identifying and contacting individuals about their interest in research participation, and risks associated with actually participating in research [30]. Survey respondents reported wide variation in recruitment practices allowed at their institution, including, for example, whether investigators are allowed to contact prospective participants directly or only after introduction by the healthcare provider. The concept of “physician-as-gatekeeper” has been the subject of empirical study [31–33] and ethical analysis [30, 34], and is a topic ripe for focused effort and debate to identify and justify best practice guidelines.

This study has a few limitations. First, while we present prevailing practices, we are not able to provide strong recommendations for best practices due to lack of evidence from comparative effectiveness research on EHR-based recruitment methods. In addition, there is a need for a consensus-based approach for adaptation of metrics from the practice of clinical research (e.g., accrual index [35]) or development of metrics to measure the efficiency of different EHR-based recruitment approaches.

The lack of a sufficient body of comparative research to provide an evidence base for the application of these technologies limits our ability to make specific recommendations. Nonetheless, we believe our survey results provide a useful sense of the spectrum of activities and approaches being employed and of the implementation considerations frequently associated with different approaches. Moreover, our survey examined practices only at academically based health care systems with relatively large federally funded research portfolios. Furthermore, the results are based on the perceptions of a limited number of stakeholders at each institution. Perhaps most importantly, these data reflect a snapshot from the spring of 2016 that will need to be updated as the field evolves. Future studies should focus on the costs, yield, patient and provider acceptability, and study retention achieved with various approaches in order to drive the development and adoption of innovative best practices that both protect patients and facilitate interinstitutional collaboration and multisite research.

Acknowledgments

The authors thank the workgroup members listed in the online Supplementary Material for helpful discussion and input. The authors also thank Ms. Bridget Swindell and Jodie Jackson of Vanderbilt University for supporting the effort. The results would not have been possible without the support and effort of hundreds of individuals working at each of the CTSA institutions who participated in the survey.

This project has been funded in whole or in part with Federal funds from the National Center for Research Resources and National Center for Advancing Translational Sciences (NCATS), National Institutes of Health (NIH), through the Clinical and Translational Science Awards Program, grant numbers: UL1TR001073, UL1TR001430, UL1TR000439, UL1TR001876, UL1TR001873, UL1TR001086, UL1TR001117, UL1TR000454, UL1TR001409, UL1TR001102, UL1TR001433, UL1TR001108, UL1TR001079, UL1TR000135, UL1TR001436, UL1TR001450, UL1TR001445, UL1TR001422, UL1TR002369, UL1TR002014, UL1TR001866, UL1TR001114, UL1TR001085, UL1TR001070, UL1TR001064, UL1TR001417, UL1TR000039, UL1TR001412, UL1TR001860, UL1TR001414, UL1TR001881, UL1TR001442, UL1TR001872, UL1TR000430, UL1TR001425, UL1TR001082, UL1TR001427, UL1TR002003, U54TR001356, UL1TR000001, UL1TR001998, UL1TR001453, UL1TR000460, UL1TR000433, UL1TR000114, UL1TR001449, UL1TR001111, UL1TR001878, UL1TR001857, UL1TR002001, UL1TR001855, UL1TR000371, UL1TR001120, UL1TR001439, UL1TR001105, UL1TR001067, UL1TR000423, UL1TR000427, UL1TR000445, UL1TR000058, UL1TR001420, UL1TR000448, UL1TR000457, UL1TR001863, and U54 TR000123.

These authors contributed equally to this work.

Workgroup members are listed in the online Supplementary Material.

Contributor Information

Collaborators: for the Methods and Process and Informatics Domain Task Force Workgroup

Disclosures

The authors have no conflicts of interest to declare.

Supplementary material

For supplementary material accompanying this paper visit https://doi.org/10.1017/cts.2017.301.


References

- 1. Lovato LC, et al. Recruitment for controlled clinical trials: literature summary and annotated bibliography. Controlled Clinical Trials 1997; 18: 328–352.

- 2. Ross S, et al. Barriers to participation in randomised controlled trials: a systematic review. Journal of Clinical Epidemiology 1999; 52: 1143–1156.

- 3. Cowie MR, et al. Electronic health records to facilitate clinical research. Clinical Research in Cardiology 2017; 106: 1–9.

- 4. Coorevits P, et al. Electronic health records: new opportunities for clinical research. Journal of Internal Medicine 2013; 274: 547–560.

- 5. Weng C, et al. Using EHRs to integrate research with patient care: promises and challenges. Journal of the American Medical Informatics Association 2012; 19: 684–687.

- 6. Henry J, et al. Adoption of electronic health record systems among U.S. Non-Federal Acute Care Hospitals: 2008-2015 (ONC Data Brief No. 35). Office of the National Coordinator for Health Information Technology, Washington, DC.

- 7. Harris PA, et al. Research electronic data capture (REDCap) – a metadata-driven methodology and workflow process for providing translational research informatics support. Journal of Biomedical Informatics 2009; 42: 377–381.

- 8. Murphy SN, et al. Serving the enterprise and beyond with informatics for integrating biology and the bedside (i2b2). Journal of the American Medical Informatics Association 2010; 17: 124–130.

- 9. Damschroder LJ, et al. Patients, privacy and trust: patients’ willingness to allow researchers to access their medical records. Social Science & Medicine 2007; 64: 223–235.

- 10. Grande D, et al. Public preferences about secondary uses of electronic health information. JAMA Internal Medicine 2013; 173: 1798–1806.

- 11. Grande D, et al. The importance of purpose: moving beyond consent in the societal use of personal health information. Annals of Internal Medicine 2014; 161: 855–U837.

- 12. Caine K, Hanania R. Patients want granular privacy control over health information in electronic medical records. Journal of the American Medical Informatics Association 2013; 20: 7–15.

- 13. Patel V, Barker W, Siminerio E. Disparities in individuals’ access and use of health IT in 2014 (ONC Data Brief No. 34). Office of the National Coordinator for Health Information Technology, Washington, DC, 2016.

- 14. Bower JK, et al. Active use of electronic health records (EHRs) and personal health records (PHRs) for epidemiologic research: sample representativeness and nonresponse bias in a study of women during pregnancy. EGEMS (Washington, DC) 2017; 5: 1263.

- 15. Embi PJ, et al. Effect of a clinical trial alert system on physician participation in trial recruitment. Archives of Internal Medicine 2005; 165: 2272–2277.

- 16. Rollman BL, et al. Comparison of electronic physician prompts versus waitroom case-finding on clinical trial enrollment. Journal of General Internal Medicine 2008; 23: 447–450.

- 17. Embi PJ, Leonard AC. Evaluating alert fatigue over time to EHR-based clinical trial alerts: findings from a randomized controlled study. Journal of the American Medical Informatics Association 2012; 19: e145–e148.

- 18. Embi PJ, Jain A, Harris CM. Physicians’ perceptions of an electronic health record-based clinical trial alert approach to subject recruitment: a survey. BMC Medical Informatics and Decision Making 2008; 8: 13.

- 19. Weng C, et al. A real-time screening alert improves patient recruitment efficiency. AMIA Annual Symposium Proceedings 2011; 2011: 1489–1498.

- 20. Ferranti JM, et al. The design and implementation of an open-source, data-driven cohort recruitment system: the Duke Integrated Subject Cohort and Enrollment Research Network (DISCERN). Journal of the American Medical Informatics Association 2012; 19: e68–e75.

- 21. Thadani SR, et al. Electronic screening improves efficiency in clinical trial recruitment. Journal of the American Medical Informatics Association 2009; 16: 869–873.

- 22. Fihn SD, et al. Insights from advanced analytics at the Veterans Health Administration. Health Affairs (Millwood) 2014; 33: 1203–1211.

- 23. Hurdle JF, et al. Identifying clinical/translational research cohorts: ascertainment via querying an integrated multi-source database. Journal of the American Medical Informatics Association 2013; 20: 164–171.

- 24. Horvath MM, et al. The DEDUCE Guided Query tool: providing simplified access to clinical data for research and quality improvement. Journal of Biomedical Informatics 2011; 44: 266–276.

- 25. Narus SP, et al. Federating clinical data from six pediatric hospitals: process and initial results from the PHIS+ Consortium. AMIA Annual Symposium Proceedings 2011; 2011: 994–1003.

- 26. Kluding PM, et al. Frontiers: integration of a research participant registry with medical clinic registration and electronic health records. Clinical and Translational Science 2015; 8: 405–411.

- 27. Cheah S, et al. Permission to contact (PTC) – a strategy to enhance patient engagement in translational research. Biopreservation and Biobanking 2013; 11: 245–252.

- 28. Sanderson IC, et al. Managing clinical research permissions electronically: a novel approach to enhancing recruitment and managing consents. Clinical Trials 2013; 10: 604–611.

- 29. Tan MH, et al. Design, development and deployment of a Diabetes Research Registry to facilitate recruitment in clinical research. Contemporary Clinical Trials 2016; 47: 202–208.

- 30. Beskow LM, et al. Ethical issues in identifying and recruiting participants for familial genetic research. American Journal of Medical Genetics. Part A 2004; 130A: 424–431.

- 31. Beskow LM, et al. Patient perspectives on research recruitment through cancer registries. Cancer Causes & Control 2005; 16: 1171–1175.

- 32. Beskow LM, Sandler RS, Weinberger M. Research recruitment through US central cancer registries: balancing privacy and scientific issues. American Journal of Public Health 2006; 96: 1920–1926.

- 33. Beskow LM, et al. The effect of physician permission versus notification on research recruitment through cancer registries (United States). Cancer Causes & Control 2006; 17: 315–323.

- 34. Sharkey K, et al. Clinician gate-keeping in clinical research is not ethically defensible: an analysis. Journal of Medical Ethics 2010; 36: 363–366.

- 35. Corregano L, et al. Accrual Index: a real-time measure of the timeliness of clinical study enrollment. Clinical and Translational Science 2015; 8: 655–661.
