Abstract

Purpose

Knowledge about patient experience within emergency departments (EDs) allows services to develop and improve in line with patient needs. There is no standardized instrument to measure patient experience. The aim of this study is to identify patient reported experience measures (PREMs) for EDs, and to examine the rigour with which they were developed and their psychometric properties when judged against standard criteria.

Data sources

Medline, Scopus, CINAHL, PsycINFO, PubMed and Web of Science were searched from inception to May 2015.

Study selection

Studies were identified using specific search terms and inclusion criteria. A total of eight articles, reporting on four PREMs, were included.

Data extraction

Data on the development and performance of the four PREMs were extracted from the articles. The measures were critiqued according to quality criteria previously described by Pesudovs K, Burr JM, Harley C, et al. (The development, assessment, and selection of questionnaires. Optom Vis Sci 2007;84:663–74.).

Results

There was significant variation in the quality of development and reporting of psychometric properties. For all four PREMs, initial development work included the ascertainment of patient experiences using qualitative interviews. However, instrument performance was poorly assessed. Validity and reliability were measured in some studies; however, responsiveness, an important aspect of survey development, was not measured in any of the included studies.

Conclusion

PREMs currently available for use in the ED have uncertain validity, reliability and responsiveness. Further validation work is required to assess their acceptability to patients and their usefulness in clinical practice.

Keywords: patient experience, emergency department, experience measure, PREM

Background

Hospital Emergency Departments (EDs) assume a central role in the urgent and emergency care systems of countries around the world. Each and every patient attending ED should receive the highest quality of care. Currently, this is not always the case [1–4]. In the United Kingdom, for example, the 2014 Care Quality Commission report identified substantial variation in the care provided by EDs.

Patient experience is one of the fundamental determinants of healthcare quality [5]. Studies have demonstrated its positive associations with health outcomes [6–11]. Opening up dialogue between patients and providers by giving patients a ‘voice’ has proved key to improving the quality of clinical experience [9]. Accordingly, efforts have been made around the world to improve patient experience. In the UK, delivering high quality, patient-centred care has been at the forefront of health policy since 2008 [12, 13]. The UK government has stated the importance of public involvement in the prioritization of care needs and has recognized the significance of patient and public participation in the development of clinical services [14, 15]. It will only be possible to know whether interventions and changes in practice are successful if processes and outcomes are measured.

To be able to identify where improvements in patient experience are required and to judge how successful efforts to change have been, a meaningful way of capturing what happens during a care episode is required. Patient reported experience measures (PREMs) attempt to meet this need. A PREM is defined as ‘a measure of a patient's perception of their personal experience of the healthcare they have received’. These questionnaire-based instruments ask patients to report on the extent to which certain predefined processes occurred during an episode of care [16]. For example, a PREM might ask whether or not a patient was offered pain relief during an episode of care, and about the meaning of this encounter.

PREMs are now in widespread use, with both generic and condition-specific measures having been developed. The Picker Institute developed the National Inpatient Survey for use in the UK National Health Service. This PREM, which has been used since 2002, is given annually to an eligible sample of 1250 adult inpatients who have had an overnight stay in a trust during a particular timeframe. The results are primarily intended for use by trusts to help improve performance and service provision, but are also used by NHS England and the Department of Health to measure progress and outcomes.

Such ‘experience’-based measures differ from ‘satisfaction’-type measures, which have previously been used in an effort to index how care has been received. For example, while a PREM might include a question asking the patient whether or not they were given discharge information, a patient satisfaction measure would ask the patient how satisfied they were with the information they received. Not only are PREMs therefore able to provide more tangible information on how a service can be improved, they may also be less prone to the influence of patient expectation, which is known to be influenced by varying factors [17–21].

A number of PREMs have been developed for use within the ED. If the results from these PREMs are to be viewed with confidence, and used to make decisions about how to improve clinical services, it is important that they are valid and reliable. This means an accurate representation of patient experience within EDs (validity) and a consistent measure of this experience (reliability). If validity and reliability are not sound there is a risk of imprecise or biased results that may be misleading. Despite this, there has, to date, been no systematic attempt to identify and appraise those PREMs which are available for use in ED.

Beattie et al. [22] systematically identified and assessed the quality of instruments designed to measure patient experience of general hospital care. They did not include measures for use in the ED. This is important as there is evidence that what constitutes high quality care from a patient's perspective can vary between specialties, and by the condition, or conditions, that the person is being treated for [22–25]. Stuart et al. [26] conducted a study in Australia in which patients were interviewed about what aspects of care mattered most to them in the ED. Patients identified the interpersonal (relational) aspects of care as most important, such as communication, respect, non-discriminatory treatment and involvement in decision-making [26]. This differs from what matters most to inpatients: a survey in South Australia revealed issues around food and accommodation to be the most common source of negative comments and dissatisfaction [27].

This review aims to systematically identify currently reported PREMs that measure patient experience in EDs, and to assess the quality by which they were developed against standard criteria.

Study objectives

The objectives of this review are as follows:

To identify questionnaires currently available to measure patient experience in EDs.

To identify studies which examine psychometric properties (validity and reliability) of PREMs for use in ED.

To critique the quality of the methods and results of the measurement properties using defined criteria for each instrument.

Primarily, these objectives will lead to a clearer understanding of the validity and reliability of currently available instruments. This will support clinician and managerial decision-making when choosing a PREM to use in practice.

Methods

Eligibility criteria

Measure selection criteria were (i) description of the development and/or evaluation of a PREM for use with ED patients; (ii) instrument designed for self-completion by participant (or a close significant other, i.e. relative or friend); (iii) participants aged 16 years or older; (iv) study written in English.

Exclusion criteria were (i) studies focusing on Patient Reported Outcome Measures or patient satisfaction; (ii) review articles and editorials.

Search strategy

Six bibliographic databases (MEDLINE, Scopus, CINAHL, PsycINFO, PubMed and Web of Science) were searched from inception up to December 2016. These searches included both free text words and Medical Subject Headings (MeSH) terms. The keywords used were ‘patient experience’ OR ‘patient reported experience’ OR ‘patient reported experience measure’; ‘emergency medical services’ (MeSH); ‘measure’ OR ‘tool’ OR ‘instrument’ OR ‘score’ OR ‘scale’ OR ‘survey’ OR ‘questionnaire’; and ‘psychometrics’ (MeSH) along with Boolean operators. Appendix 1 outlines the specific Medline search strategy used.
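As an illustration of how the keyword groups above can be combined with Boolean operators, the following minimal sketch builds a single query string. It assumes that terms within a group are joined with OR and that groups are joined with AND; the function names are hypothetical, and the output uses a generic syntax rather than the exact syntax of any one database.

```python
# Term groups taken from the keywords listed in the search strategy.
EXPERIENCE_TERMS = [
    "patient experience",
    "patient reported experience",
    "patient reported experience measure",
]
SETTING_TERMS = ["emergency medical services"]
INSTRUMENT_TERMS = [
    "measure", "tool", "instrument", "score", "scale", "survey", "questionnaire",
]


def or_group(terms):
    """Join terms with OR, quoting multi-word phrases, and wrap in parentheses."""
    quoted = [f'"{term}"' if " " in term else term for term in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(*groups):
    """Combine the OR-groups with AND (an assumption about how groups were joined)."""
    return " AND ".join(or_group(group) for group in groups)


query = build_query(EXPERIENCE_TERMS, SETTING_TERMS, INSTRUMENT_TERMS)
print(query)
```

Keeping each concept in its own list makes it straightforward to add synonyms or to rerun the same search when it is updated.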

The Internet was used as another source of data; searches were conducted on the Picker website, the NHS surveys website and the CQC website, and experts in the field, namely at the Picker Institute, were contacted. Finally, the reference lists of studies identified by the online bibliographic search were examined.

The search methodology and reported findings comply with the relevant sections of the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement [28].

Study selection

Articles were screened first by title and abstract to eliminate articles not meeting inclusion criteria. This was completed by two reviewers. Where a decision could not be made on the basis of the title and abstract, full text articles were retrieved.

Data collection process

Using a standardized form, L.M. extracted the following information: name of instrument, aim, the target population, sample size, patient recruitment information, mode of administration, scoring scale, number of items/domains and the subscales used. This was also completed separately by J.A.

Quality assessment tool

A number of frameworks exist to evaluate the quality of patient-reported health questionnaires and determine usability within the target population. This study utilized the Quality Assessment Criteria framework developed by Pesudovs et al. which has been used in the assessment of a diverse range of patient questionnaires [29–31].

The framework includes a robust set of quality criteria to assess instrument development and psychometric performance. The former includes defining the purpose of the instrument and its target population, the steps taken in defining the content of the instrument, and the steps involved in developing an appropriate rating scale and scoring system. The latter focuses on validity and reliability, as well as responsiveness and interpretation of the results. Some aspects of the Quality Assessment Criteria framework were relevant to development of questionnaires in which the patient reports on health status only rather than care experience. These were not considered when evaluating the PREMs.

Table 1 outlines the framework used to assess how the measure performs against each criterion. Within the study, each PREM was given either a positive (✓✓), acceptable (✓) or negative rating (X) against each criterion.

Table 1.

Quality assessment tool

| Property | Definition | Quality criteria |
|---|---|---|
| Instrument development | | |
| Pre-study hypothesis and intended population | Specification of the hypothesis pre-study and if the intended population have been studied. | |
| Actual content area (face validity) | Extent to which the content meets the pre-study aims and population. | |
| Item identification | Items selected are relevant to the target population. | |
| Item selection | Determining of final items to include in the instrument. | |
| Unidimensionality | Demonstration that all items fit within an underlying construct. | |
| Response scale | Scale used to complete the measure. | |
| Instrument performance | | |
| Convergent validity | Assessment of the degree of correlation with a new measure. | |
| Discriminant validity | Degree to which an instrument diverges from another instrument that it should not be similar to. | |
| Predictive validity | Ability for a measure to predict a future event. | |
| Test-retest reliability | Statistical technique used to estimate components of measurement error by testing comparability between two applications of the same test at different time points. | |
| Responsiveness | Extent to which an instrument can detect clinically important differences over time. | |

Each PREM was independently rated by two raters (L.M., J.A.) against the discussed criteria. Raters were graduates in health sciences who had experience in PREM development and use. They underwent training, which included coding practice, using sample articles. Once the PREMs had been rated, any disagreements were resolved through discussion.
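The double-rating step described above can be sketched as follows. This is a minimal illustration, not the procedure used in the review: the function name and the ratings are hypothetical, and the review does not report an agreement statistic. The sketch computes simple percentage agreement and lists the criteria that would go forward for discussion.

```python
def compare_ratings(rater_a, rater_b):
    """Return (proportion of criteria agreed, sorted criteria needing discussion)."""
    if not rater_a or rater_a.keys() != rater_b.keys():
        raise ValueError("both raters must rate the same, non-empty set of criteria")
    disagreements = sorted(c for c in rater_a if rater_a[c] != rater_b[c])
    agreement = 1 - len(disagreements) / len(rater_a)
    return agreement, disagreements


# Hypothetical ratings for a single instrument; NOT data from the review.
rater_1 = {
    "Item identification": "✓✓",
    "Item selection": "✓",
    "Response scale": "X",
    "Responsiveness": "X",
}
rater_2 = {
    "Item identification": "✓✓",
    "Item selection": "✓✓",
    "Response scale": "X",
    "Responsiveness": "X",
}

agreement, to_discuss = compare_ratings(rater_1, rater_2)
print(f"Agreement: {agreement:.0%}; criteria to discuss: {to_discuss}")
```

Percentage agreement does not correct for chance agreement; a chance-corrected statistic could be substituted where a more formal estimate is needed.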

Results

Study selection

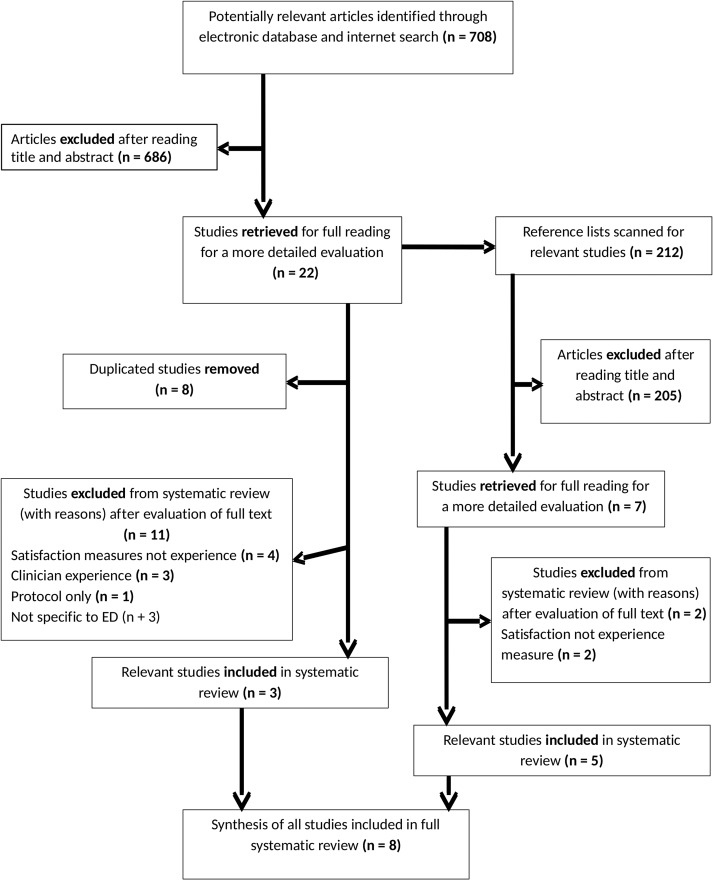

Study selection results are documented with the PRISMA flow diagram in Fig. 1. A total of 920 articles were identified, of which 891 were excluded. Full text articles were reviewed for the remaining 29 articles, after which a further 21 articles were excluded for the following reasons: duplication of same publication (n = 8), patient satisfaction measure rather than experience (n = 6), protocol only (n = 1), clinician experience measure (n = 3) and PREM not specific to ED (n = 3). A total of eight papers met the inclusion criteria representing four different PREMs.

Figure 1.

Selection process flow diagram.
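The counts reported for study selection can be cross-checked with simple arithmetic. The sketch below uses only the figures stated in the text; the variable names are illustrative.

```python
# Figures as reported in the study selection paragraph.
identified = 920
excluded_at_screening = 891
full_text_exclusions = {
    "duplication of same publication": 8,
    "patient satisfaction measure rather than experience": 6,
    "protocol only": 1,
    "clinician experience measure": 3,
    "PREM not specific to ED": 3,
}

full_text_reviewed = identified - excluded_at_screening
excluded_at_full_text = sum(full_text_exclusions.values())
included = full_text_reviewed - excluded_at_full_text

print(full_text_reviewed, excluded_at_full_text, included)
```

The reasons for exclusion sum to the 21 full text articles excluded, leaving the eight included papers.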

Characteristics of included studies

Study characteristics are summarized in Table 2. All eight studies were conducted after 2008 within Europe. Three studies described the development of a PREM using qualitative data to elicit concepts. The other five studies evaluated psychometric development of the PREMs. Four were original studies and one further evaluated and developed the psychometric testing of an original instrument [32]. Within these four original studies there was variety in the recruitment process. Two were multicentre studies in hospital trusts [33, 34], one targeted a single specific hospital trust [35] and one recruited through general practice [36]. All five studies assessing the psychometric development of PREMs had over 300 participants with a mean age range of 51–56. Not all measures reported specific age ranges and one did not discuss participant demographics [37]. Two of the studies recruited using purposive sampling [33, 34], one through a systematic random sample [35] and one used a geographically stratified sample combined with random digit dialling for telephone surveys [37].

Table 2.

Data extraction results

| Reference | PREM developed | Research aim(s) | Qualitative method | Participants, sample selection and socioeconomic (SE) factors | Setting | Main themes |
|---|---|---|---|---|---|---|
| Picker Institute Europe [38] | | | | | | |
| Frank et al. [36] | | Describe patients’ different conceptions of patient participation in their care in an ED. | Interviews (n = 9) | | One ED in a metropolitan district in Sweden | |
| O'Cathain et al. [39] | | To explore patients' views and experiences of the emergency and urgent care system to inform the development of a questionnaire for routine assessment of the system's performance from the patient's perspective. | Focus groups (n = 8) and interviews (n = 13) | | | |

| Reference | Research aim(s) | Mode of administration | Participants, sample selection and socioeconomic (SE) factors | No. of items/domains in measure | Domains measured |
|---|---|---|---|---|---|
| Bos et al. [35] | To determine which method of obtaining summary scores for the A&E department questionnaire optimally combined good interpretability with robust psychometric characteristics | Self-completion postal questionnaire | | 51 items; 11 domains | |
| Frank et al. [33] | To develop and test the psychometric properties of a patient participation questionnaire in emergency departments | Self-completion postal questionnaire | | 17 items; 4 domains | |
| O'Cathain et al. [37] | To psychometrically test the Urgent Care System Questionnaire (UCSQ) for the routine measurement of the patient perspective of the emergency and urgent care system | Self-completion postal questionnaire and telephone survey | | 21 items; 3 domains | |
| Bos et al. [34] | Development of a patient reported experience measure for accident and emergency departments: Consumer Quality index of the accident and emergency department (CQI-A&E)ᵃ | Self-completion postal questionnaire | | 84 items; 9 domains | |
| Bos et al. [32] | To test internal consistency, the validity and discriminative capacity of CQI-A&Eᵃ in a multicentre study design, to confirm and validate preliminary results from Bos et al. [34]. | Self-completion postal questionnaire | | 78 items; 9 domains | |

ᵃ Accident and Emergency (A&E) is used interchangeably with Emergency Department (ED).

All of the studies utilized postal self-completion questionnaires [33–35,37], with the Urgent Care System Questionnaire (UCSQ) also incorporating telephone surveys [37]. The length of the PREMs described within the studies varied from 17–84 items across 3–11 domains. Domain contents and names varied, as detailed in Appendix 3, but did cover characteristics identified by the Department of Health [5]. Half focused on the sequential stages of the hospital episode [34, 35], whereas others focused on specific areas of care, such as patient participation [33] and convenience [37]. All instruments were administered following discharge from hospital but the time from discharge to completion varied between measures.

Instrument development and performance

A summary of the instrument development is presented in Table 3. All of the measures reported aspects of psychometric testing, with evidence that validity was tested more frequently than reliability. Content validity was reported on most often.

Table 3.

Quality assessment of PREMs

| Measure | Pre-study hypothesis/intended population | Actual content area (face validity) | Item identification | Item selection | Unidimensionality | Choice of response scale |
|---|---|---|---|---|---|---|
| Instrument development | | | | | | |
| | ✓✓ | ✓✓ | | | | |
| | ✓✓ | ✓✓ | | ✓✓ | | X |
| | ✓✓ | ✓✓ | | ✓✓ | | ✓✓ |
| | ✓✓ | | | | | ✓✓ |

| Measure | Convergent validity | Discriminant validity | Predictive validity | Test-retest reliability | Responsiveness |
|---|---|---|---|---|---|
| Instrument performance | | | | | |
| | X | | X | X | X |
| | | X | X | X | X |
| | X | X | X | | X |
| | X | X | X | X | X |

✓✓: positive rating; ✓: acceptable rating; X: negative rating.

The key patient-reported concepts that were incorporated into the quantitative measures through item selection included waiting time, interpersonal aspects of care, tests and treatment, and the environment. Qualitative concept elicitation work revealed similar concepts that were most important to patients [36, 39]. CQI-A&E also conducted an importance study to establish relative importance of items within the questionnaire to patients visiting the ED [34]. All measures addressed very similar themes under varying headings.

Item selection was generally well reported with adequate discussion of floor/ceiling effects. Likert scales were used in all bar one study [35], where choice of response scale was not discussed.
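Floor and ceiling effects, mentioned above, are usually summarized as the proportion of respondents who select the lowest or highest possible score on an item. The sketch below shows one way to compute them. The responses are hypothetical, and the 15% flagging threshold is a commonly cited convention assumed here for illustration; it is not a value reported in this review.

```python
def floor_ceiling(scores, minimum, maximum):
    """Return the proportions of respondents scoring the minimum and the maximum."""
    if not scores:
        raise ValueError("scores must not be empty")
    n = len(scores)
    return scores.count(minimum) / n, scores.count(maximum) / n


# Hypothetical responses to one item on a 1-5 Likert scale; NOT data from the review.
responses = [5, 5, 4, 5, 3, 5, 2, 5, 4, 1]
THRESHOLD = 0.15  # assumed convention for flagging an effect

floor, ceiling = floor_ceiling(responses, minimum=1, maximum=5)
print(f"floor={floor:.0%} (flag: {floor > THRESHOLD}), "
      f"ceiling={ceiling:.0%} (flag: {ceiling > THRESHOLD})")
```

An item with a marked ceiling effect leaves little room to register improvement, which is one reason such items are candidates for revision or removal during item selection.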

Quality appraisal of instrument performance demonstrated a limited level of information on construct validity, reliability and responsiveness throughout all four measures.

All instruments reported an assessment of unidimensionality to establish homogeneity among items. None of the studies of the four measures assessed responsiveness, for example by measuring the minimal clinically important difference.
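Homogeneity among items is often summarized with an internal consistency statistic such as Cronbach's alpha, although alpha alone does not establish unidimensionality. The following minimal sketch computes alpha from item-level responses; the data are hypothetical and the choice of statistic is an assumption for illustration, not a description of the analyses in the included studies.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(rows[0])
    if k < 2 or len(rows) < 2 or any(len(r) != k for r in rows):
        raise ValueError("need at least 2 respondents and 2 items, with equal-length rows")
    # Sample variance of each item (column) and of the total score per respondent.
    item_variances = [variance([r[i] for r in rows]) for i in range(k)]
    total_variance = variance([sum(r) for r in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical responses: 5 respondents x 3 items; NOT data from the review.
responses = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]

alpha = cronbach_alpha(responses)
print(f"Cronbach's alpha = {alpha:.2f}")
```

Alpha rises with the number of items as well as with their intercorrelation, so a high value on a long questionnaire should be interpreted with care.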

Discussion

Methodological quality of the instruments

To our knowledge, this is the first systematic review to identify PREMs for use in the ED and evaluate their psychometric properties. Four PREMs were identified and subjected to an appraisal of their quality. While the developers of each measure reported them to be valid and reliable, the quality appraisals completed within this review do not fully support this position. Further primary studies examining their psychometric performance would be beneficial before the results obtained can be confidently used to inform practice.

Content validity and theoretical development have been well reported across all four PREMs. Item generation through patient participation is important to determine what quality of care means to local populations. It is imperative, however, to recognize that this may vary across populations. For example, work carried out to find out what matters to patients in the concept elicitation phase of UCSQ [39] was completed in the UK. If this instrument were to be used in another country, studies of cross-country validity would have to be completed before using the questionnaire.

Validity and reliability are not inherent properties of an instrument and should be addressed iteratively throughout development. Validity and reliability often change over time as refinements are made, so they should be reassessed throughout development to ensure that overall performance is not altered. For example, there are previous versions of the Accident and Emergency Department Questionnaire (AEDQ) dating back to 2003. However, there are validation papers for survey development up until 2008 [38] where focus groups are used to discuss what is important to patients. It is important to keep up to date with changes, as relying on past data can render an instrument poor in terms of validity.

Furthermore, the validity of an instrument can change depending on the data collection process. For example, the UCSQ used both postal and telephone surveys to collect data. However, there was no discussion of validation of the PREM for use with both methods.

Disappointingly, for none of the PREMs studied did we find evidence on responsiveness. Responsiveness refers to the ability of an instrument to detect change over time. This is a highly relevant factor if a PREM is to be used to assess how successful an intervention has been to enact change within a service [13]. This review highlights the current gap in studies assessing the responsiveness of PREMs, which should be addressed.
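One statistic that is commonly used to quantify responsiveness is the standardized response mean (SRM): the mean change in score between two administrations divided by the standard deviation of that change. The sketch below is illustrative only. The scores are hypothetical, and none of the included studies reported this or any other responsiveness statistic.

```python
from statistics import mean, stdev


def standardized_response_mean(before, after):
    """Mean of paired score changes divided by the sample SD of those changes."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need paired scores for at least two respondents")
    changes = [a - b for b, a in zip(before, after)]
    spread = stdev(changes)
    if spread == 0:
        raise ValueError("SRM is undefined when every respondent changes by the same amount")
    return mean(changes) / spread


# Hypothetical experience scores (0-100) before and after a service change;
# NOT data from the review.
before = [60, 55, 70, 65, 50]
after = [70, 60, 75, 80, 55]

srm = standardized_response_mean(before, after)
print(f"SRM = {srm:.2f}")
```

Because the SRM needs paired scores, it presupposes that the same respondents, or at least matched samples, complete the PREM before and after an intervention.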

Some instruments appear to have limited positive psychometric properties and caution should be taken when using such measures. This is not to say that these instruments do not have their uses but careful consideration should be taken when selecting an instrument.

Using the Pesudovs criteria for quality assessment [29] offered a rigorous and standardized critique of validity and reliability. At times it was difficult to fit particular psychometric results into the quality criteria used. For example, CQI-A&E used an importance study as part of content validity, which did not fit neatly into any particular quality criteria category. We used consensus discussion to reach agreement on anomalies within the data. The Pesudovs criteria proved to be a good starting point for assessing the psychometric properties of PREMs.

Strengths and limitations of this review

Application of the search strategy identified four PREMs that fitted the inclusion criteria. This low number was expected considering the current advances in the importance of patient experience measures within healthcare and the specificity of the population of an ED. It may be that not all PREMs were identified in the search, but scoping searches and reference list searches attempted to address this issue. Poor reporting and inadequate abstracts may have led to PREMs being erroneously left out in some cases; however, a representative sample has been included.

Data extraction of papers not included in the study was completed by both the main author (L.M.) and supervisor (A.N.) to cross-check the data extraction and quality appraisal process. Papers containing PREMs not included in the study were selected to reduce bias in findings. This process allowed assessment of the rate of agreement prior to data extraction of the studies included in the review. Data extraction of studies included within the review was conducted by L.M. and J.A.

Interpretation of findings in relation to previously published work

There is little evidence of similar reviews evaluating the psychometric properties of PREMs for emergency care. Findings regarding the limited information about the reliability and validity of the measures within the general population are supported by outcomes of a recent evidence review conducted by The Health Foundation [40]. This research recognized that hospital surveys often have limited information about their validity and reliability as there is no standardized or commonly used instrument or protocol for sampling and administration [40]. Beattie et al.’s systematic review of general patient experience measures is a useful addition to research [22].

Implications of the review

Concerns are raised by the fact that multiple PREMs have been developed for the same patient population with little concern given to the validation of the measures. It is unknown why researchers continue to develop poorly validated PREMs for the same population. Future research should consider drawing on the most promising existing PREMs as a starting point for the development of new measures. Existing instruments which have not been tested on certain criteria are not necessarily flawed, just untested. Such instruments may give useful information, but should be used with caution. Improving validation will allow them to provide more credible findings for use in future service improvement.

Conclusion

Current PREMs for use within the ED were found to be adequately developed and offer promise for use within clinical settings. The review identified limited PREMs for emergency care service provision, with a low quality rating in terms of instrument performance. Without further work on validation, it is difficult to make recommendations for their routine use, as well as being difficult to draw credible findings from the results they produce. Further development and testing will make them more robust, allowing them to be better used within the population. Looking ahead, it would be of benefit to have a standardized sampling and administration protocol to allow easier development of PREMs specific to various areas and disease populations.

Appendix 1. Search strategy

Medline search strategy—search conducted 11/05/2015

| # | Advanced search |
|---|---|
| 1 | ‘patient experience*’.mp. |
| 2 | ‘patient reported experience*’.mp. |
| 3 | Emergency Medical Services/ |
| 4 | Psychometrics/ |
| 5 | 1 and 3 and 4 |
| 6 | 2 and 3 and 4 |
| 7 | 1 and 3 |
| 8 | 2 and 3 |
| 9 | 3 and 4 |
| 10 | ‘Measure*’ or ‘tool*’ or ‘instrument*’ or ‘survey*’ or ‘score*’ or ‘scale*’ or ‘questionnaire*’.mp. |
| 11 | 1 and 3 and 8 |
| 12 | 2 and 3 and 8 |
| 13 | ‘emergency care’ or ‘unscheduled care’ or ‘unplanned care’.mp. |
| 14 | 4 and 13 |

Search results—May 2015

| Database | Results |
|---|---|
| MEDLINE | 52 |
| CINAHL | 63 |
| PsycINFO | 111 |
| Scopus | 157 |
| Total database results | 383 |
| Google Scholar | 1 |
| Picker website/emails | 0 |
| Secondary references | 212 |
| Total identified through other sources | 213 |
| Total | 596 |

Funding

This work was supported by the National Institute for Health Research (NIHR), Collaboration for Leadership and Health Research and Care North West Coast and sponsorship from University of Liverpool. The views expressed are those of the author(s) and not necessarily those of the NHS, the NIHR or the Department of Health.

References

- 1. Spilsbury K, Meyer J, Bridges J et al. Older adults’ experiences of A&E care. Emerg Nurse 1999;7:24–31.
- 2. National Patient Safety Agency. Safer care for the acutely ill patient: learning from serious incidents. London, UK: National Patient Safety Agency, http://www.nrls.npsa.nhs.uk/resources/?EntryId45=59828 (22 February 2017, date last accessed).
- 3. Weiland TJ, Mackinlay C, Hill N et al. Optimal management of mental health patients in Australian emergency departments: barriers and solutions. Emerg Med Australas 2011;23:677–88.
- 4. Banerjee J, Conroy S, Cooke MW. Quality care for older people with urgent and emergency care needs in UK emergency departments. Emerg Med J 2012;30:699–700.
- 5. Department of Health. High Quality Care For All. NHS Next Stage Review Final Report. 2008.
- 6. Kings Fund. ‘What matters to Patients’? Developing the evidence base for measuring and improving patient experience. 2011.
- 7. Doyle C, Lennox L, Bell D. A systematic review of evidence on the links between patient experience and clinical safety and effectiveness. BMJ Open 2013;3:e001570.
- 8. Tierney M, Bevan R, Rees CJ et al. What do patients want from their endoscopy experience? The importance of measuring and understanding patient attitudes to their care. Frontline Gastroenterol 2015;7:191–98.
- 9. Shale S. Patient experience as an indicator of clinical quality in emergency care. Clin Govern Int J 2013;18:285–92.
- 10. Ahmed F, Burt J, Roland M. Measuring patient experience: concepts and methods. Patient 2014;7:235–41.
- 11. Manary MP, Boulding W, Staelin R et al. The patient experience and health outcomes. N Engl J Med 2013;368:201–3.
- 12. Goodrich J, Cornwell J. Seeing the Person in the Patient. London: The King's Fund, 2008.
- 13. Coombes R. Darzi review: Reward hospitals for improving quality, Lord Darzi says. BMJ 2008;337:a642.
- 14. Coulter A. What do patients and the public want from primary care. Br Med J 2005;331:1199–201.
- 15. White K. Engaging patients to improve the healthcare experience. Healthc Financ Manage 2012;66:84–8.
- 16. Hodson M, Andrew S. Capturing experiences of patients living with COPD. Nurs Times 2014;110:12–4.
- 17. Morris BJ, Jahangir AA, Sethi MK. Patient satisfaction: an emerging health policy issue. Am Acad Orthop Surg 2013;9:29.
- 18. Urden LD. Patient satisfaction measurement: current issues and implications. Lippincotts Case Manag 2002;7:194–200.
- 19. Gill L, White L. A critical review of patient satisfaction. Leadersh Health Serv 2009;22:8–19.
- 20. Bleich S. How does satisfaction with the health-care system relate to patient experience. Bull World Health Organ 2009;87:271–8.
- 21. Ilioudi S, Lazakidou A, Tsironi M. Importance of patient satisfaction measurement and electronic surveys: methodology and potential benefits. Int J Health Res Innov 2013;1:67–87.
- 22. Beattie M, Murphy DJ, Atherton I et al. Instruments to measure patient experience of healthcare quality in hospitals: a systematic review. Syst Rev 2015;4:97.
- 23. Garratt AM, Bjærtnes ØA, Krogstad U et al. The OutPatient Experiences Questionnaire (OPEQ): data quality, reliability, and validity in patients attending 52 Norwegian hospitals. Qual Saf Health Care 2005;14:433–7.
- 24. Lasalvia A, Ruggeri M, Mazzi MA et al. The perception of needs for care in staff and patients in community‐based mental health services. Acta Psychiatr Scand 2000;102:366–75.
- 25. Reitmanova S, Gustafson D. ‘They Can't Understand It’: maternity health and care needs of immigrant muslim women in St. John's, Newfoundland. Matern Child Health J 2008;12:101–11.
- 26. Stuart PJ, Parker S, Rogers M. Giving a voice to the community: a qualitative study of consumer expectations for the emergency department. Emerg Med 2003;15:369–74.
- 27. Grant JF, Taylor AW, Wu J. Measuring Consumer Experience. SA Public Hospital Inpatients Annual Report. Adelaide: Population Research and Outcome Studies, 2013.
- 28. Moher D, Liberati A, Tetzlaff J et al. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. J Clin Epidemiol 2009;62:1006–12.
- 29. Pesudovs K, Burr JM, Harley C et al. The development, assessment, and selection of questionnaires. Optom Vis Sci 2007;84:663–74.
- 30. Worth A, Hammersley V, Knibb R et al. Patient-reported outcome measures for asthma: a systematic review. NPJ Prim Care Respir Med 2014;24:14020.
- 31. Salvilla SA, Dubois AE, Flokstra-de Blok BM et al. Disease-specific health-related quality of life instruments for IgE-mediated food allergy. Allergy 2014;69:834–44.
- 32. Bos N, Sturms LM, Stellato RK et al. The Consumer Quality Index in an accident and emergency department: internal consistency, validity and discriminative capacity. Health Expect 2013;18:1426–38.
- 33. Frank C, Asp M, Fridlund B et al. Questionnaire for patient participation in emergency departments: development and psychometric testing. J Adv Nurs 2011;67:643–51.
- 34. Bos N, Sturms LM, Schrijvers AJ et al. The consumer quality index (CQ-index) in an accident and emergency department: development and first evaluation. BMC Health Serv Res 2012;12:284.
- 35. Bos N, Sizmur S, Graham C et al. The accident and emergency department questionnaire: a measure for patients’ experiences in the accident and emergency department. BMJ Qual Saf 2013;22:139–46.
- 36. Frank C, Asp M, Dahlberg K. Patient participation in emergency care – a phenomenographic study based on patients’ lived experience. Int Emerg Nurs 2009;17:15–22.
- 37. O'Cathain A, Knowles E, Nicholl J. Measuring patients’ experiences and views of the emergency and urgent care system: psychometric testing of the urgent care system questionnaire. BMJ Qual Saf 2011;20:134–40.
- 38. Picker Institute Europe. Development of the Questionnaire for use in the NHS Emergency Department Survey 2008. Oxford: Picker Institute Europe, 2008.
- 39. O'Cathain A, Coleman P, Nicholl J. Characteristics of the emergency and urgent care system important to patients: a qualitative study. J Health Serv Res Policy 2008;13:19–25.
- 40. Health Foundation. Measuring Patient Experience: No. 18, evidence scan. 2013.