Abstract

Purpose

The literature is reviewed to examine how ‘improvement capability’ is conceptualized and assessed and to identify future areas for research.

Data sources

An iterative and systematic search of the literature was carried out across all sectors including healthcare. The search was limited to literature written in English.

Data extraction

The study identifies and analyses 70 instruments and frameworks for assessing or measuring improvement capability. Information about the source of the instruments, the sectors in which they were developed or used, the measurement constructs or domains they employ, and how they were tested was extracted.

Results of data synthesis

The instruments and framework constructs are very heterogeneous, demonstrating the ambiguity of improvement capability as a concept, and the difficulties involved in its operationalisation. Two-thirds of the instruments and frameworks have been subject to tests of reliability and half to tests of validity. Many instruments have little apparent theoretical basis and do not seem to have been used widely.

Conclusion

The assessment and development of improvement capability needs clearer conceptual and terminological definition, applied consistently across disciplines and sectors. There is scope to learn from existing instruments and frameworks, and this study proposes a synthetic framework of eight dimensions of improvement capability. Future instruments need robust testing for reliability and validity. This study contributes to practice and research by presenting the first review of the literature on improvement capability across all sectors including healthcare.

Keywords: quality improvement, quality management, external quality assessment, other quality evaluation (EFQM), training/education, human resources, quality culture

Introduction

Variation in organizational performance persists across sectors including healthcare, and the identification of the factors which influence organizational performance continues to be of great interest to researchers and practitioners. In the business and management literature, researchers have increasingly sought to understand how organizations integrate, build and reconfigure internal and external competencies, deploy organizational routines, and improve performance through their use of these ‘dynamic capabilities’ [1]. Some have contended that quality improvement (QI) programmes and methods such as Total Quality Management (TQM), Business Process Management (BPM) and Lean should be seen as ways to develop such dynamic capabilities [2].

While some health systems and organizations have been successful at improving performance through such QI approaches [3–5], many are less successful. Some researchers have explored why some organizations are less able to deliver improved performance and argue that a systematic approach to developing improvement capability is required [6].

However, improvement capability is ambiguously defined and challenging to measure. It seems that improvement capability requires further conceptualization [7] and research is needed to understand how improvement capability is developed, and to explore whether variation in improvement capability may explain inter-organizational variation in performance [8]. Thus, the purpose of this study is:

- to clarify how improvement capability is conceptualized and assessed; and

- to identify areas for future research and development.

Methods

Search strategy

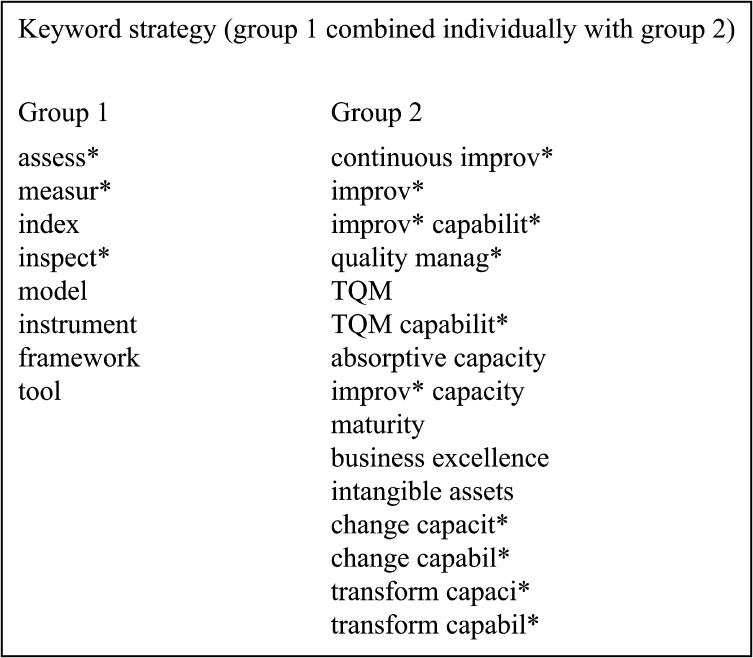

The review was guided by Whittemore and Knafl's integrative review method [9], which is particularly suited to summarizing the accumulated state of knowledge in fields with a diverse and methodologically heterogeneous literature, undertaking comparative synthesis and generating new perspectives and areas for research [10, 11]. For example, because there is no single accepted definition of improvement capability, the use of an integrative approach allowed several a priori operational definitions to be used during searching, potentially leading to more robust conclusions [12]. The review adhered to the principles of the PRISMA statement and guidelines [13], although some aspects were not applicable because this was not a systematic review as defined in the PRISMA statement.

The review was conducted between September 2014 and January 2015. The search sought to identify qualitative and quantitative instruments that assessed improvement capability in organizations. All sectors were included and no date restrictions were used, though preliminary searching had revealed that a substantial body of the literature on improvement capability related to the healthcare sector, and this influenced the choice of databases searched. Scopus, Web of Science, Medline, HMIC, Embase and the Cochrane Database of Systematic Reviews were searched. MeSH headings and free text keywords were selected to take account of terminological differences and terms often used synonymously, such as ‘quality improvement’ and ‘continuous improvement’. Library staff were consulted to develop the search algorithm. Figure 1 details the full set of search keywords used. The search used an iterative ancestry and snowballing approach until saturation was reached [14].

Figure 1.

Search keywords.

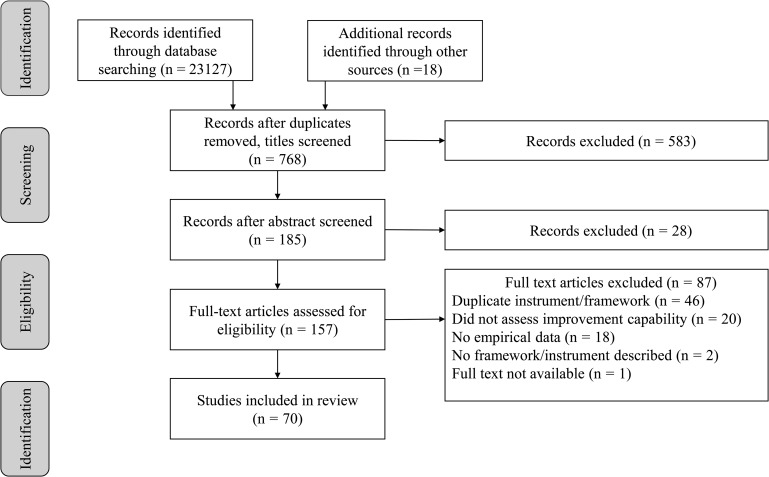

Articles were discarded if they did not contain empirical data, and filters were used to remove irrelevant articles. The search excluded articles that were not peer reviewed or not written in English. A PRISMA flowchart [13] was developed to reduce the risk of inconsistency in decision-making (Fig. 2). Where multiple articles detailing studies of the same framework or instrument were found, the earliest article was used as the primary reference source (e.g. Anderson et al. [16] rather than the later use of that framework by Grandzol and Gershon [15]).

Figure 2.

PRISMA flowchart.

There are a number of very similar country-specific quality awards which assess improvement capability; therefore, only the three most popular quality award frameworks were included: the Deming Prize [17], the European Foundation for Quality Management (EFQM) [18] and the Baldrige Award [19]. The search identified 23 127 papers and a further 18 papers through snowballing; after removal of duplicates and title review, this was reduced to 768 papers. Application of the search criteria led to a sample of 70 frameworks or instruments (Fig. 2). The Supplementary File details the full sample.

Literature reviews

Fourteen existing literature reviews related to improvement capability were identified (summarized in Table 1). These had varying search and inclusion criteria: nine focused on TQM and excellence models, two on maturity models within industry and three were developed within healthcare. However, the study did not identify any reviews that sought to comprehensively review literature from all sectors (Fig. 3).

Table 1.

Summary of literature reviews

| Author and year | Title | Sector | Period covered | Number found | Search terms | Key findings |
|---|---|---|---|---|---|---|
| Brennan et al. [20] | Measuring organizational and individual factors thought to influence the success of quality improvement in primary care: a systematic review of instruments | Healthcare (Primary Care) | Not stated | 41 | Questionnaire; instrument, instrumentation, instruments; tool; measuring, measures, measure, measurement; quality improvement; organisational change; TQM, CI, change and practice, quality of life | Literature synthesis leading to common core of factors to support primary care improvement. The critical factors identified are: customer based approach, management commitment and leadership, quality planning, management based on facts, continuous improvement, human resources, learning, process management and co-operation with suppliers. |
| Doeleman et al. [21] | Empirical evidence on applying the European Foundation for Quality Management Excellence Model, a literature review | Not stated | 2002–2012 | 24 | EFQM | Found that evidence to support EFQM adoption is limited. Concluded that the use of EFQM does improve organisational results and that it is an effective tool for benchmarking. Indicated that participative approach, intrinsic motivation and leadership are important driving forces. |
| Groene et al. [22] | A systematic review of instruments that assess the implementation of hospital quality management systems | Healthcare (Acute) | 1990–2011 | 18 | Generic: Quality Management Systems AND hospital AND instrument (and variations thereof) | Indicates that there is a set of instruments that can assess quality management systems implementation in healthcare. These examine core areas including process management, human resources, leadership, analysis and monitoring. They differ in areas of conceptualisation and rigour, requiring further research. |
| Heitschold et al. [23] | Measuring critical success factors of TQM implementation successfully: A systematic literature review | Industry | Not stated | 62 | TQM, Total Quality Management, implementation, CSFs AND instrument | Through identification and analysis of critical success factors for TQM, measured quantitatively within industrial settings, a three-level framework with eleven dimensions for TQM is developed. |
| Kaplan [24] | The influence of context on quality improvement success in health care: A systematic review of the literature | Healthcare (Acute) | 1980–2008 | 47 | Keywords and MeSH: TQM, CQI, QI implementation, quality management, PDSA, PDCA, lean management, six sigma; organisational behaviour, culture, teamwork, theory, change, structure | Identified that leadership, culture, data infrastructure and data systems and length of improvement implementation were important for successful improvement. Indicated that research was limited through the lack of a practical conceptual model and defined measures and definitions. |
| Karuppusami and Gandhinathan [25] | Pareto analysis of critical success factors of TQM | Industry | 1989–2003 | 37 | Quality instrument; empirical quality; quality performance; quality improvement; quality critical factors; empirical TQM (factors, construct, instrument, performance, quality index, evaluation) | Found there is a lack of a well-established framework for identification of critical TQM success factors to guide instrument scale development. |
| Mehra et al. [26] | TQM as a management strategy for the next millennia | Industry | Not stated | Not stated | Not stated | Through review of TQM literature, identifies forty-five elements that affect implementation, grouped into five areas: human resources focus, management structure, quality tools, supplier support and customer orientation. Finds that TQM efforts must emphasise self-assessment of capabilities. |
| Minkman et al. [27] | Performance improvement based on integrated quality management models: what evidence do we have? A systematic literature review | Healthcare (Chronic Care) | 1995–2006 | 37 | Baldrige, EFQM, MBQA, excellence model, quality award AND chronic care, chronic care model | Found that there is limited evidence that the use of excellence models improves processes or outcomes. Chronic care models show more evidence and further research should focus on integrated care settings. |
| Motwani [28] | Critical factors and performance measures of TQM | Industry | Not stated | Not stated | Not stated | Following a literature review, forty-five performance measures of TQM are identified together with seven critical factors. |
| Rhydderch et al. [29] | Organisational assessment in general practice: A systematic review and implications for quality improvement | Healthcare | 1996–2003 | 13 papers; 5 assessments | MeSH and textword: organisational assessment; assessment method | Review indicated a developing field for measuring aspects of primary care. Found there was a paucity of peer reviewed assessments and assessment focus varied with different perspectives of quality improvement. |
| Röglinger et al. [30] | Maturity models in business process management | Industry | Not stated | 10 | Business process management (BPM) maturity models | Identified ten BPM maturity models. Finds that the basic principles and descriptions are well defined; however, guidelines on their use and purpose need developing. |
| Sila and Ebrahimpour [31] | An investigation of the total quality management survey based research published between 1989 and 2000: A literature review | Industry | 1989–2000 | 76 | TQM, strategic QM, QM, best practice, TQI, business excellence, performance excellence, quality excellence, CI, CQI, QI, QA, world class manufacturing | Twenty-five factors identified. Found that more surveys are needed to identify the extent to which factors contribute to TQM in companies and to understand the conflicting results reported, ideally through longitudinal studies, of which none were identified. |
| Van Looy et al. [32] | A conceptual framework and classification of capability areas for business process maturity | Industry | Not stated | 69 | Process maturity | Developed three maturity types: business process maturity, intermediate business process orientation maturity and business process orientation maturity, representing different levels of capability. Found that there is a lack of consensus on capability areas needed. |
| Wardhani et al. [33] | Determinants of quality management systems (QMS) implementation in hospitals | Healthcare (Acute Care) | 1992–2006 | 14 | TQM; Quality assurance healthcare, hospital, implement | Identified six supporting and limiting factors for QMS implementation: organisational design, culture, quality structure, technical support, leadership and physician involvement. Found that the degree of QMS implementation is proportional to the degree of employee empowerment, risk free environments and innovation emphasis. |

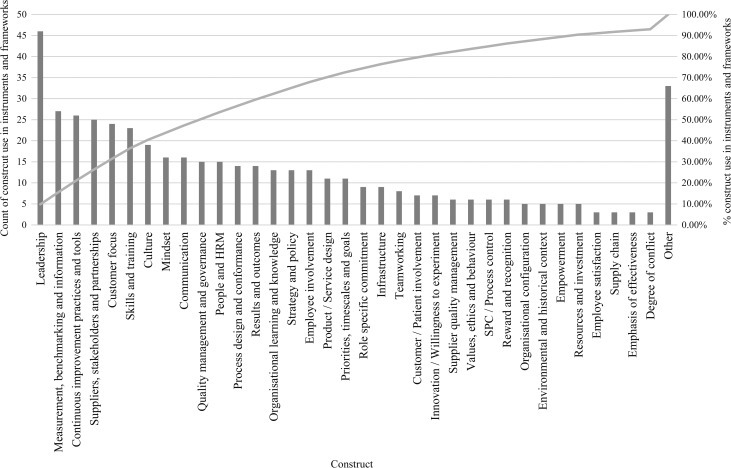

Figure 3.

Pareto chart of construct frequency.

The 14 literature reviews were grounded in a number of different paradigms of quality assurance, improvement and organizational theory, and this was reflected in variations in their content, terminology and perspectives on how to conceptualize and assess improvement capability. Even in reviews from the same paradigm, there were variations in content and terminology. For example, the two literature reviews focused on BPM maturity models [30, 32] have different content and coverage of instruments and constructs despite being conducted during a similar period.

Results

The study contributes to research by conducting a comprehensive review of literature on improvement capability across all sectors, including healthcare.

The review draws on relevant literature from 1989 to 2015 and from 43 journals and identified 70 instruments that met the criteria including 14 literature reviews. Data extracted for each instrument included the abstract, measurement constructs, assessment method, instrument validity and reliability (for quantitative instruments), and the country and sector it had been used in. The study reviews and categorizes the instruments by theme, explores instrument reliability and validity and inductively develops a synthetic framework of eight improvement capability dimensions. Comparative analysis highlights conceptual, terminological and methodological differences and the review considers how improvement capability assessment instruments are used and why. A summary of the instruments and extracted data is in the Supplementary File.

Improvement capability definitions

This review finds that there are many terminological and definitional differences across sectors. Some conceive of improvement capability as a dynamic capability, that is ‘an organisation-wide process of focused and sustained incremental innovation’ [34, p.1106] while others see it primarily in terms of human capital, as ‘knowledgeable and skilled human resources’ [35, p.29], or in terms of organizational assets as ‘resources and processes, supporting both the generation and the diffusion of appropriate innovations’ [36, p.14]. For the purposes of this review, we define improvement capability as ‘the organisational ability to intentionally and systematically use improvement approaches, methods and practices, to change processes and products/services to generate improved performance’.

Assessing improvement capability

Some authors assert that the lack of consistent operational definitions and reliable measures hinders the assessment of improvement capability and argue that more robust measures are required [37]. The 70 instruments were inductively categorized thematically into four main groups or QI paradigms and their most common measurement constructs were identified. The instruments were further analysed by lead author location and sector. This shows that approximately half of the instruments were developed within the USA and about 40% were developed in the healthcare sector. The Supplementary File details in which group each instrument or framework was categorized and their origins. The four groups were:

Improvement models: approaches assessing organization-wide efforts to develop climates in which the organization continuously improves its ability to deliver high-quality products and services to customers.

Maturity models: assessment approaches that examine the degree of formality and optimization of processes over time, from ad hoc practices to proactive optimization.

Governance models: assessment approaches that focus on the existence of systematic policies to manage risk through measurement and standards within organizations.

Change models: assessments that focus on the human side of change and improvement, examining individual and organizational behaviours and the conditions and context that nurture change and improvement capabilities.

Improvement models

For 40 instruments (57% of those identified) improvement capability is conceptualized as being grounded in improvement models, where self-reflection and assessment provide critical feedback and an opportunity to improve. These instruments focus on constructs such as leadership, strategy, customer focus, stakeholder engagement and continuous improvement. Three frameworks and instruments relate to quality awards, requiring self-assessment before applying for external assessment. The remaining instruments, bar two [38, 39], are externally administered cross-sectional surveys or structured interviews. Some instruments, for example Saraph et al. [40], are designed for manufacturing plants rather than organizations and include specific binary indicators (such as whether statistical process control (SPC) is used). A minority originate in healthcare.

Maturity models

Nine instruments take a maturity perspective on improvement capability. This perspective typically assesses constructs against five or six levels of maturity, ranging from ‘novice’ or ‘not implemented’ through to ‘expert’ or ‘fully implemented’. These levels are used to identify development priorities. This group typically contains instruments for assessment by accrediting organizations. This group also focuses on leadership, strategy and customer focus, but lacks constructs related to people and stakeholders.

Governance models

Nine instruments take an assurance perspective. All are designed to be administered by a third party, except for Schilling et al. [41], where a central function administers the framework to many hospitals within its organization. The Deepening our Understanding of Quality Improvement in Europe (DUQuE) project [42] has several scales to measure different aspects for organizational comparison. The main constructs in this group are almost identical to those within the improvement models group but with an additional emphasis on culture.

Change models

There are eight instruments in the change model group; they have an organizational development perspective on improvement capability and emphasize that leadership is a key factor. Most originate in healthcare and all but one survey multiple staff within the sampled organizations. Most constructs relate to aspects internal to the organization. Only one instrument in this group [43] includes a customer focus construct, and only two include a stakeholder construct, indicating limited focus on external requirements.

Assessment processes

The instruments use differing methods to gather data. Two-thirds (66%) are employee surveys designed for cross-sectional use, and few have been used longitudinally. The rest use qualitative assessment methods such as case studies and interviews and some compare findings with other organizations and pre-existing standards.

Two-thirds of the survey instruments involved contacting only one or two respondents within the organization. Sample sizes range from single responses from 1082 organizations [44] to 25 940 respondents from 188 organizations [42]. Response rates are similarly variable. Little attention is given to the potential biases arising from different sampling methods, sample sizes and response rates.

There are few papers describing how the results of the assessments are used. Publicity material from the quality award frameworks such as EFQM states that they are widely used, highly valued and often imitated. However, no single instrument for measuring improvement capability seems to be widely utilized.

Constructs comparison

Analysis of the instrument constructs reveals that there is wide heterogeneity reflecting the paradigmatic divergence in the models and the diverse terminology employed. No construct is included in all the instruments identified. Pareto analysis of the constructs reveals that 22 constructs account for 80% of those measured across all instruments. The most frequent relate to leadership, suppliers and stakeholders, customer focus, measurement, skills, training and improvement practices, but this differs between model groups.
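The Pareto analysis described above can be illustrated with a minimal sketch. The construct names and counts below are hypothetical and are not the review's data; the function name `pareto_cutoff` and the 80% default threshold are assumptions chosen only to mirror the description in the text.

```python
def pareto_cutoff(frequencies, threshold=0.8):
    """Return the most frequent constructs whose cumulative share of all
    occurrences first reaches the threshold (0.8 means 80%)."""
    total = sum(frequencies.values())
    if total == 0:
        raise ValueError("at least one construct occurrence is required")

    # Rank constructs from most to least frequently measured.
    ranked = sorted(frequencies.items(), key=lambda item: item[1], reverse=True)

    selected = []
    cumulative = 0
    for name, count in ranked:
        selected.append(name)
        cumulative += count
        if cumulative / total >= threshold:
            break
    return selected


# Hypothetical construct counts across a set of instruments (illustrative only).
example_counts = {
    "leadership": 40,
    "customer focus": 30,
    "measurement": 15,
    "training": 10,
    "culture": 5,
}

vital_few = pareto_cutoff(example_counts)
print(vital_few)
```

With these illustrative counts, the cumulative shares are 40%, 70% and 85%, so the first three constructs cross the 80% line. Applied to the review's own construct tallies, the same procedure would yield the set of constructs reported as accounting for 80% of those measured.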

A further inductive analysis of constructs and items across all instruments was undertaken, and this led to the development of a synthetic framework of eight dimensions of improvement capability. These were: organizational culture; data and performance; employee commitment; leadership commitment; service-user focus; process improvement and learning; stakeholder and supplier focus; and strategy and governance. Descriptions were developed for each dimension as shown in Table 2. The eight dimensions identified can be viewed as eight high-level routines that, when assembled together in particular ways, bundle to form ‘improvement capability’.

Table 2.

Improvement capability dimensions

| # | Improvement capability dimension | Description |
|---|---|---|
| 1 | Organizational culture | The core values, attitudes and norms and underlying ideologies and assumptions within an organization |
| 2 | Data and performance | The use of data and analysis methods to support improvement activity |
| 3 | Employee commitment | The level of commitment and motivation of employees for improvement |
| 4 | Leadership commitment | The support by formal organizational leaders for improvement and performance |
| 5 | Service-user focus | The identification and meeting of current and emergent service needs and expectations with service users |
| 6 | Process improvement and learning | Systematic methods and processes used within an organization to make improvements through ongoing experimentation and reflection |
| 7 | Stakeholder and supplier focus | The extent of the relationships, integration and goal alignment between the organization and stakeholders such as public interest groups, suppliers and regulatory agencies |
| 8 | Strategy and governance | The process in which organizational aims are implemented and managed through policies, plans and objectives |

Assessment of instrument and framework quality

The empirical testing of instruments focuses on their reliability and validity [45]. Reliability is concerned with the level of random measurement error and consistency. Internal consistency measures how well different items in a scale vary together in a sample and is typically measured through Cronbach's alpha; scales should meet a reliability of >0.8 [45]. Some reliability information, mostly using Cronbach's alpha, was reported for 44 instruments, though only 14 reported alphas above 0.8.

Construct validity assesses the relationships between measures, their ability to measure what is intended and their consistency with theory. Half the articles reported on construct validity. Most self-assessment and qualitative frameworks have little information regarding the framework validity and reliability; however, some describe how consensus was reached and how reflexivity on framework design was used.
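As a minimal sketch of how internal consistency is commonly computed, the standard formula for Cronbach's alpha is alpha = k/(k-1) × (1 − sum of item variances / variance of respondents' total scores), where k is the number of items in the scale. The function name `cronbach_alpha` and the survey responses below are hypothetical and are not taken from any instrument in the review.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a scale.

    responses: one row per respondent, each row holding that respondent's
    score on every item in the scale (all rows the same length).
    """
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")

    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*responses)]

    # Sample variance of each respondent's total score across all items.
    total_variance = variance([sum(row) for row in responses])

    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical responses: five respondents rating four items on a 1-5 scale.
example_responses = [
    [4, 5, 4, 4],
    [3, 3, 2, 3],
    [5, 5, 4, 5],
    [2, 2, 3, 2],
    [4, 4, 4, 3],
]

alpha = cronbach_alpha(example_responses)
print(round(alpha, 2))
```

A value computed this way can then be compared with the >0.8 threshold referred to in the text.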

Discussion and implications

This study has found that there is wide heterogeneity across the 70 instruments for assessing improvement capability which have been reviewed. Notably, none dominates usage and few have been tested properly for validity and reliability. Further, many of the constructs within the instruments are somewhat ambiguous and inter-related, and there are widespread differences in the conceptualization, definitions and terminology used. This heterogeneity may be a reflection of different paradigmatic perspectives or conceptualizations of improvement capability. For example, a narrow definition of improvement capability focused on improvement methods may lead to measurement of aspects such as improvement skills and could lead to the establishment of improvement training programmes, where the numbers completing the programme are tracked. In contrast, a more people-centred perspective could lead to a focus on understanding patient and staff experiences and contributions to improvement capability, together with a more qualitative and relational approach to assessing and developing improvement capability.

Our synthetic framework of eight dimensions of improvement capability represents a pluralistic perspective on improvement capability. It seeks to balance the different paradigmatic approaches we have found and to recognize that measures of different dimensions may be appropriate for different purposes and usages. For example, a regulatory agency wishing to assess organizations' improvement capability may focus on dimensions such as strategy and governance, and leadership commitment, while an organizational development consultancy advising organizations might focus on dimensions such as organizational culture, and stakeholder and supplier or service-user focus. It was striking that few of the papers presenting instruments focused on how, where and for what purposes they might be used.

Conclusion

This review provides a comprehensive overview of the current research literature on conceptualizing and measuring improvement capability in organizations and we can identify several fruitful avenues for further research. First, the detailed conceptualization of improvement capability requires more attention, drawing on relevant theoretical frameworks such as the literature on dynamic capabilities to provide a more coherent and consistent theoretical foundation for work in this area.

Second, the absence of any widely accepted and empirically tested and validated instruments and frameworks for assessing improvement capability suggests that the development of such an instrument is needed, and this review and the synthetic framework we have presented may be the first step in that process. Third, the utility of measures of improvement capability, their deployment and uptake in organizations, and the effects this may have on improvement and performance are a much-neglected area needing further research.

Supplementary Material

Supplementary material is available at International Journal for Quality in Health Care online.

Funding

This research was supported by the Health Foundation in the UK. The Health Foundation was not involved during the design, delivery or submission of the research.

References

- 1. Teece D, Pisano G, Shuen A. Dynamic capabilities and strategic management. Strat Manag J 1997;18:509–33. [Google Scholar]

- 2. Anand G, Ward P, Tatikonda M et al. Dynamic capabilities through continuous improvement infrastructure. J Oper Manag 2009;27:444–61. doi:http://dx.doi.org/10.1016/j.jom.2009.02.002. [Google Scholar]

- 3. McGrath SP, Blike GT. Building a foundation of continuous improvement in a rapidly changing environment: The Dartmouth-Hitchcock Value Institute experience. Jt Comm J Qual Patient Saf 2015;41:435–44. [DOI] [PubMed] [Google Scholar]

- 4. Richardson A, Peart J, Wright SE et al. Reducing the incidence of pressure ulcers in critical care units: a 4-year quality improvement. Int J Qual Health Care 2017: 1–7. doi:10.1093/intqhc/mzx040. [DOI] [PubMed] [Google Scholar]

- 5. Smith JM, Gupta S, Williams E et al. Providing antenatal corticosteroids for preterm birth: a quality improvement initiative in Cambodia and the Philippines. Int J Qual Health Care 2016;28:682–8. doi:10.1093/intqhc/mzw095. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Babich LP, Charns MP, McIntosh N et al. Building systemwide improvement capability: does an organization's strategy for quality improvement matter? Qual Manag Health Care 2016;25:92–101. [DOI] [PubMed] [Google Scholar]

- 7. Gonzalez RVD, Martins MF. Capability for continuous improvement: analysis of companies from automotive and capital goods industries. Total Qual Manag J 2016;28:250–74. doi:10.1108/TQM-07-2014-0059. [Google Scholar]

- 8. Furnival J. Regulation for Improvement? A Study of How Improvement Capability is Conceptualised by Healthcare Regulatory Agencies in the United Kingdom. Manchester: University of Manchester, 2017. [Google Scholar]

- 9. Whittemore R, Knafl K. The integrative review: updated methodology. J Adv Nurs 2005;52:546–53. [DOI] [PubMed] [Google Scholar]

- 10. Torraco RJ. Writing integrative literature reviews: guidelines and examples. Hum Resource Develop 2005;4:356–67. doi:10.1177/1534484305278283. [Google Scholar]

- 11. Cowell JM. Literature reviews as a research strategy. J Sch Nurs 2012;28:326–7. doi:10.1177/1059840512458666. [DOI] [PubMed] [Google Scholar]

- 12. Cooper HM. Scientific guidelines for conducting integrative research reviews. Rev Educ Res 1982;52:291–302. doi:10.2307/1170314. [Google Scholar]

- 13. Moher D, Liberati A, Tetzlaff J et al. The PRISMA Group Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med 2009;6:e1000097 doi:10.1371/journal.pmed.1000097. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Greenhalgh T, Peacock R. Effectiveness and efficiency of search methods in systematic reviews of complex evidence: audit of primary sources. Br Med J 2005;331:1064–5. doi:http://dx.doi.org/10.1136/bmj.38636.593461.68. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Grandzol JR, Gershon M. A survey instrument for standardising TQM modelling research. Int J Qual Sci 1998;3:80–105. [Google Scholar]

- 16. Anderson J, Rungtusanatham M, Schroeder R et al. A path analytic model of a theory of quality management underlying the deming management method: preliminary empirical findings. Decis Sci 1995;26:637–58. doi:10.1111/j.1540-5915.1995.tb01444.x. [Google Scholar]

- 17. Deming Prize Committee The Application Guide for the Deming Prize. 2014.

- 18. European Foundation for Quality Management The European Foundation for Quality Management (EFQM) Excellence Model. EFQM, Europe. 2014. http://www.efqm.org/the-efqm-excellence-model. Accessed 16/1/2015.

- 19. National Institute of Standards and Technology Baldrige Excellence Framework. National Institute of Standards and Technology (NIST), USA. 2013–14. http://www.nist.gov/baldrige/publications/business_nonprofit_criteria.cfm. Accessed 16/01 2015.

- 20. Brennan S, Bosch M, Buchan H et al. Measuring organizational and individual factors thought to influence the success of quality improvement in primary care: a systematic review of instruments. Implement Sci 2012;7:121.

- 21. Doeleman HJ, ten Have S, Ahaus CTB. Empirical evidence on applying the European Foundation for Quality Management excellence model. A literature review. Total Qual Manag Bus 2013;25:439–60. doi:10.1080/14783363.2013.862916.

- 22. Groene O, Botje D, Sunol R et al. A systematic review of instruments that assess the implementation of hospital quality management systems. Int J Qual Health Care 2013;25:525–41.

- 23. Hietschold N, Reinhardt R, Gurtner S. Measuring critical success factors of TQM implementation successfully: a systematic literature review. Int J Prod Res 2014;52:6234–72.

- 24. Kaplan HC, Brady PW, Dritz MC et al. The influence of context on quality improvement success in health care: a systematic review of the literature. Milbank Q 2010;88:500–59.

- 25. Karuppusami G, Gandhinathan R. Pareto analysis of critical success factors of total quality management. Total Qual Manag Mag 2006;18:372–85. doi:10.1108/09544780610671048.

- 26. Mehra S, Hoffman JM, Sirias D. TQM as a management strategy for the next millennia. Int J Oper Prod Manag 2001;21:855–76.

- 27. Minkman M, Ahaus K, Huijsman R. Performance improvement based on integrated quality management models: what evidence do we have? A systematic literature review. Int J Qual Health Care 2007;19:90–104. doi:10.1093/intqhc/mzl071.

- 28. Motwani J. Critical factors and performance measures of TQM. Total Qual Manag Mag 2001;13:292–300.

- 29. Rhydderch M, Edwards A, Elwyn G et al. Organizational assessment in general practice: a systematic review and implications for quality improvement. J Eval Clin Pract 2005;11:366–78.

- 30. Röglinger M, Pöppelbuß J, Becker J. Maturity models in business process management. Bus Process Manag J 2012;18:328–46.

- 31. Sila I, Ebrahimpour M. An investigation of the total quality management survey based research published between 1989 and 2000: a literature review. Int J Qual Reliab Manage 2002;19:902–70.

- 32. Van Looy A, De Backer M, Poels G. A conceptual framework and classification of capability areas for business process maturity. Enterp Inf Syst 2012;8:188–224. doi:10.1080/17517575.2012.688222.

- 33. Wardhani V, Utarini A, van Dijk JP et al. Determinants of quality management systems implementation in hospitals. Health Policy 2009;89:239–51. doi:10.1016/j.healthpol.2008.06.008.

- 34. Bessant J, Francis D. Developing strategic continuous improvement capability. Int J Oper Prod Manag 1999;19:1106–19.

- 35. Kaminski GM, Schoettker PJ, Alessandrini EA et al. A comprehensive model to build improvement capability in a pediatric academic medical center. Acad Pediatr 2014;14:29–39.

- 36. Adler PS, Riley P, Kwon SW et al. Performance improvement capability: keys to accelerating performance improvement in hospitals. Calif Manage Rev 2003;45:12–33.

- 37. Gagliardi AR, Majewski C, Victor JC et al. Quality improvement capacity: a survey of hospital quality managers. BMJ Qual Saf 2010;19:27–30.

- 38. Batalden P, Stoltz P. A framework for the continual improvement of health care: building and applying professional and improvement knowledge to test changes in daily work. Jt Comm J Qual Improv 1993;19:424–47.

- 39. Dahlgaard JJ, Pettersen J, Dahlgaard-Park SM. Quality and lean health care: a system for assessing and improving the health of healthcare organisations. Total Qual Manag Bus 2011;22:673–89. doi:10.1080/14783363.2011.580651.

- 40. Saraph JV, Benson PG, Schroeder RG. An instrument for measuring the critical factors of quality management. Decis Sci 1989;20:810–29. doi:10.1111/j.1540-5915.1989.tb01421.x.

- 41. Schilling L, Chase A, Kehrli S et al. Kaiser Permanente's performance improvement system, part 1: from benchmarking to executing on strategic priorities. Jt Comm J Qual Patient Saf 2010;36:484–98.

- 42. Secanell M, Groene O, Arah OA et al. Deepening our understanding of quality improvement in Europe (DUQuE): overview of a study of hospital quality management in seven countries. Int J Qual Health Care 2014;26:5–15. doi:10.1093/intqhc/mzu025.

- 43. Bobiak SN, Zyzanski SJ, Ruhe MC et al. Measuring practice capacity for change: a tool for guiding quality improvement in primary care settings. Qual Manag Health Care 2009;18:278–84. doi:10.1097/QMH.0b013e3181bee2f5.

- 44. Wagner C, De Bakker DH, Groenewegen PP. A measuring instrument for evaluation of quality systems. Int J Qual Health Care 1999;11:119–30.

- 45. Carmines EG, Zeller RA. Reliability and Validity Assessment. Newbury Park, CA: Sage, 1979.
