Abstract

Face-to-face communication has several sources of contextual information that enable language comprehension. This information is used, for instance, to perceive the mood of interlocutors and to clarify ambiguous messages. However, these contextual cues are absent in text-based communication. Emoticons have been proposed as cues that stress emotional intentions in this channel of communication. Most studies have suggested that their role is to contribute to a more accurate perception of emotions. Nevertheless, it is not clear whether their influence on disambiguation is independent of their emotional valence and of its interaction with the valence of the text message. In the present study, we designed an emotional congruence paradigm in which participants read a set of messages composed of a positive or negative emotional situation sentence followed by a positive or negative emoticon. Participants were instructed to indicate whether the sender was in a good or bad mood. To analyze the disambiguation process and to observe whether the role of emoticons in disambiguation differs according to their valence, we measured the rate of perceived-mood responses and the reaction times (RTs) for each condition. Our results showed that the perceived mood in ambiguous messages tends to be more negative regardless of emoticon valence. Nonetheless, this tendency was not the same for positive and negative emoticons: negative mood perception was higher for incongruent positive emoticons. On the other hand, RTs for positive emoticons were faster than for negative ones. Responses to incongruent messages were slower than to congruent ones. However, RTs in the incongruent condition differed depending on the emoticons' valence: in this condition, responses for negative emoticons were the slowest. Results are discussed taking into account previous observations about the potential role of emoticons in mood perception and cognitive processing.
We concluded that the role of emoticons in disambiguation and mood perception is due to the interaction of emoticon valence with the entire message.

Keywords: mood detection, disambiguation, text-based communication, emoticon, emotional valence

Introduction

Social human interactions are based on emotional expressions. This information comes from several sources, such as body gestures (Stekelenburg and de Gelder, 2004; Van Heijnsbergen et al., 2007), prosody (Heilman et al., 1984), and facial expressions (Haxby et al., 2002), because affective information is crucial to understanding others in communicative situations (Pessoa, 2009). This has led a substantial body of research to focus on the influence of emotion on human cognition, showing, for instance, that human faces are processed by integrating their emotional valence (Krombholz et al., 2007; Lynn and Salisbury, 2008; Hinojosa et al., 2015) and the emotional context (Righart and de Gelder, 2008; Diéguez-Risco et al., 2015).

Nowadays, however, digital technology has become one of the most widely used channels in human social communication. One of the technological options used in our interactions is text-based conversation through instant messaging. In this type of communication, the information available in face-to-face interactions is absent. This has been considered a factor that could hinder comprehension during text-based interactions (Kiesler et al., 1984). To compensate for this lack of information, people use alternative pragmatic cues to enhance emotional expressivity (Zhou et al., 2004; Hancock et al., 2007). One cue that has been used extensively to stress emotional intentions in these channels is the emoticon, which is considered a non-linguistic tool for the successful comprehension of messages (Walther and D'Addario, 2001; Kaye et al., 2016). Some authors have compared emoticons to non-verbal expressive gestures, suggesting that they replace such gestures in text-based communication, giving dynamism to conversations and facilitating emotional emphasis (Feldman et al., 2017a,b). This function of emoticons has given rise to an incipient line of research on their role in emotional comprehension during virtual communication (for a review see Aldunate and González-Ibáñez, 2017).

Most studies have focused on the active influence of emoticons on language comprehension. For example, it has been observed that the interpretation of text-based messages is affected by emoticons, which even facilitate the use of sarcasm by creating ambiguities through the manipulation of their emotional valence (Derks et al., 2008). This is important because figurative language is characterized by ambiguity and requires contextual information and specific strategies for its comprehension (Cornejo et al., 2007, 2009; Gibbs and Colston, 2012), which makes it harder to understand in these channels. Other studies of sarcastic language comprehension have shown that emoticons play an important role in the design of automatic classifiers of figurative language (Muresan et al., 2016). Filik et al. (2016) compared the effect of different emoticons (including non-face emojis) with the effect of punctuation marks on the comprehension of sarcastic messages, and observed that emoticons were more expressive and more effective for disambiguation.

Language comprehension in human interactions, however, also requires knowledge of the interlocutors' mood or emotional intentions, given the social character of communicative acts (Panksepp, 2004; Decety and Ickes, 2011). Lo (2008) studied the influence of emoticons on the affective interpretation of messages. He asked participants to rate on a scale the degree of happiness or sadness of messages presented without emoticons, with congruent emoticons, and with incongruent emoticons. Messages were interpreted as more positive when presented with happy emoticons and as more negative when accompanied by sad emoticons. Based on this, Lo (2008) concluded that emoticons contribute to a more accurate perception of the intentions of text messages.

Although several studies have examined how emoticons help language comprehension, the role they play in inferring the emotional state of others has not been studied in depth. This point matters because cognitive and neural processing is known to prioritize emotional information (Haxby et al., 2002), and this prioritization depends on valence: the integration of negative and positive emotional information differs at early stages of processing (Blau et al., 2007). These valence-dependent differences imply that experimental evidence is needed to determine whether emoticons act independently of the valence of other sources of information in text-based communication, such as linguistic information, or whether they are prioritized in these channels. If emoticons are prioritized, we would expect them to guide the inference of the sender's mood in ambiguous messages. If, instead, emoticons interact during disambiguation with other information in the message, such as the text's emotional valence, we should expect the inferred mood to differ depending on the valence of both sources (text and emoticon).

In the present study, we designed an experiment to investigate whether the valence of the text modulates the influence of emoticons on message disambiguation when inferring the sender's mood. Using an emotional decision task in an affective congruence paradigm, we presented text-based messages with positive or negative valence, followed by typographic emoticons with positive or negative valence. Our aim was to analyze the influence of emoticon valence on the ability to infer the interlocutor's mood and on reaction time (RT), in order to determine whether the emoticon is the prioritized source of information or whether its influence depends on the affective context in which it appears.

Materials and Methods

Participants

Seventy-four undergraduate students participated in the experiment (Mean age = 21.3 ± 1.93 SD; 52 women). All of them were native Spanish speakers, and none had psychiatric disorders or neurological diseases. All participants signed an informed consent form in accordance with the Declaration of Helsinki, which was approved by the Institutional Ethics Committee.

Materials

A list of 60 sentences (Supplementary Table S1), each describing an emotional situation uttered by a person, was created (Table 1). Thirty sentences described positive situations and 30 described negative situations. The complete set of sentences was previously validated to ensure that both lists of situational sentences (positive vs. negative) were equivalent in emotional intensity. Validation was carried out with a questionnaire containing all the situational sentences. Sixty-eight participants (49 women, Mean age = 22.9 years ± 0.7 SD) were instructed to indicate the valence and emotional intensity of each sentence on a Likert scale where 1 meant very negative and 5 meant very positive. Both lists were statistically similar in emotional intensity [t(58) = 1.062; p = 0.290], with comparable mean intensity for positive (Mean = 4.6 ± 0.3 SD) and negative (Mean = 4.5 ± 0.3 SD) sentences.
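Both stimulus checks are item-level independent-samples t-tests, which is why the degrees of freedom equal the number of items minus two (30 + 30 - 2 = 58 for the sentence lists; 15 + 15 - 2 = 28 for the emoticon lists). A minimal Python sketch of the pooled-variance t statistic follows. It assumes each item has already been reduced to a single mean intensity score; the function name `independent_t` and the three-item toy lists are illustrative, not the study's data or software (the authors used STATISTICA).

```python
import math
from statistics import mean, variance


def independent_t(a, b):
    """Student's t for two independent samples with pooled variance.

    `a` and `b` hold one summary score per item (e.g. mean rated intensity).
    Returns (t, df) with df = len(a) + len(b) - 2.
    """
    na, nb = len(a), len(b)
    df = na + nb - 2
    # Pooled variance: each list's sample variance weighted by its own df.
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    se = math.sqrt(pooled * (1 / na + 1 / nb))
    return (mean(a) - mean(b)) / se, df


# Toy intensity scores for three hypothetical items per list (not study data).
t, df = independent_t([3.0, 4.0, 5.0], [2.0, 3.0, 4.0])
print(f"t({df}) = {t:.3f}")  # prints t(4) = 1.225
```

The pooled (Student) form matches the integer df reported for both stimulus sets; Welch's correction would generally yield non-integer df.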

Table 1.

Examples of positive and negative situational sentences.

| Positive situational sentences | Negative situational sentences |
|---|---|
| Me regalaron un auto nuevo (They gave me a new car) | Me chocaron el auto (They crashed my car) |
| Me felicitaron en el trabajo (They congratulated me at work) | Me echaron del trabajo (I was fired) |
| Entregué mi tesis (I handed in my thesis) | Se borró mi tesis (My thesis was deleted) |
| Tengo una pareja hermosa (I have a wonderful partner) | Mi novio me va a dejar (My boyfriend is going to break up with me) |

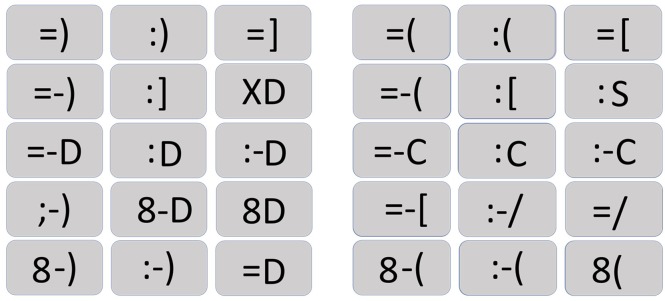

A set of 30 typographic emoticons in Calibri font (Figure 1) was used in the experiment (15 positive and 15 negative emoticons). Both lists were matched for emotional intensity through a questionnaire in which participants indicated the valence and emotional intensity of each emoticon. Seventy-five participants (Mean age = 20.46; SD = 0.4; 47 women) completed the questionnaire on a Likert scale where 1 meant very negative and 5 meant very positive. Both sets of emoticons (positive vs. negative) had the same emotional intensity [t(28) = 1.193; p = 0.24], with comparable mean intensity for positive (Mean = 4.4 ± 0.3 SD) and negative (Mean = 4.3 ± 0.4 SD) emoticons.

FIGURE 1.

Typographic emoticon set. The 15 positive emoticons used in the experiment are on the left side of the figure, and the 15 negative emoticons are on the right side.

Procedure

In the laboratory, all participants were given an informed consent form, which they signed after being briefed on the conditions and instructions of the experiment. They sat in front of a screen and were instructed to indicate on each trial, by pressing a button, whether the person who wrote the message was in a good or bad mood when he/she sent it. In the first half of the task, participants pressed a right button when they considered that the message had been written in a good mood and a left button when they considered that it had been written in a bad mood. To control for lateralized motor response effects, the response buttons were counterbalanced after the first half of the trials. Participants were instructed to respond as fast as possible once they perceived the emotional content of the message.

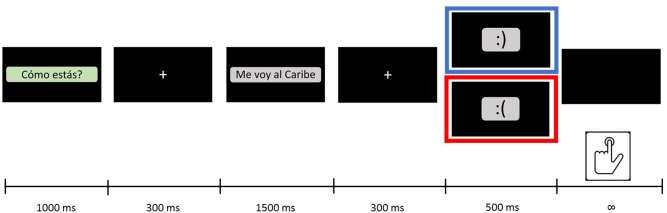

PsychoPy software (Peirce, 2007) was used to build and present the experiment. Trials simulated a brief text-based conversation in which a person responded to the question "How are you?" with a situational sentence followed by a typographic emoticon (Figure 2). Each trial began with the text message "How are you?", presented for 1,000 ms on a black background. The situational sentence was then presented for 1,500 ms. Finally, the typographic emoticon was presented for 400 ms. Participants had no time limit to respond on each trial, but they were instructed to respond as fast as possible. The next trial began once they pressed one of the response buttons. This order (text first, emoticon second) was chosen to maintain the structure of the most frequent text-based messages (Novak et al., 2015).

FIGURE 2.

Experimental trial. Sequence of events on each trial. Time of presentation is represented in milliseconds (ms). Blue corresponds to congruent emoticon, and red corresponds to incongruent emoticon.

To avoid build-up effects, trial order was pseudo-randomized, limiting runs of consecutive trials from the same condition according to the following criteria: (a) trials with the same affective congruence condition (valence × congruence: pp / nn / np / pn) could not be presented more than twice in a row; (b) trials with the same congruence condition (congruent / incongruent) could not be presented more than three times in a row; (c) trials with the same situational sentence valence could not be presented more than three times in a row; (d) trials with the same emoticon valence could not be presented more than three times in a row; and, finally, (e) the same emoticon could not be presented on two consecutive trials.
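Criteria (a) to (e) can be enforced with a constrained shuffle. The Python sketch below is one possible approach, not the procedure the authors used: it builds the order greedily, choosing each next condition at random (weighted by how many trials of that condition remain) among those that would not break a run limit, and restarts after a dead end. It assumes 30 trials per condition (the good- and bad-mood frequencies in Table 2 sum to 30), condition codes written as sentence valence followed by emoticon valence, and hypothetical emoticon IDs `pos0` to `pos14` and `neg0` to `neg14`.

```python
import random
from collections import Counter

# Condition codes: first letter = sentence valence, second = emoticon valence.
CONDITIONS = ("pp", "nn", "np", "pn")

# Features whose runs are capped at three: criteria (b), (c) and (d).
FEATURES = (
    lambda c: c[0] == c[1],  # (b) congruent vs. incongruent
    lambda c: c[0],          # (c) sentence valence
    lambda c: c[1],          # (d) emoticon valence
)


def breaks_rule(seq, cand):
    """True if appending `cand` to `seq` would violate criteria (a)-(d)."""
    # (a) the same condition may appear at most twice in a row
    if len(seq) >= 2 and seq[-1] == seq[-2] == cand:
        return True
    # (b)-(d) each feature may repeat at most three times in a row
    if len(seq) >= 3:
        for f in FEATURES:
            if f(seq[-1]) == f(seq[-2]) == f(seq[-3]) == f(cand):
                return True
    return False


def order_conditions(n_per_condition, rng, max_attempts=10_000):
    """Greedy constrained shuffle that restarts whenever it gets stuck."""
    total = n_per_condition * len(CONDITIONS)
    for _ in range(max_attempts):
        remaining = Counter({c: n_per_condition for c in CONDITIONS})
        seq = []
        while len(seq) < total:
            options = [c for c in CONDITIONS
                       if remaining[c] > 0 and not breaks_rule(seq, c)]
            if not options:
                break  # dead end: start a new attempt
            pick = rng.choices(options, weights=[remaining[c] for c in options])[0]
            seq.append(pick)
            remaining[pick] -= 1
        else:
            return seq  # loop finished without a dead end
    raise RuntimeError("no valid order found; raise max_attempts")


def assign_emoticons(seq, pools, rng):
    """Criterion (e): never show the same emoticon on two consecutive trials."""
    trials, prev = [], None
    for cond in seq:
        choice = rng.choice([e for e in pools[cond[1]] if e != prev])
        trials.append((cond, choice))
        prev = choice
    return trials


rng = random.Random(2018)
pools = {"p": [f"pos{i}" for i in range(15)], "n": [f"neg{i}" for i in range(15)]}
trials = assign_emoticons(order_conditions(30, rng), pools, rng)
```

Under these criteria at most two of the four conditions are blocked at any step (two features cannot repeat together without the condition itself repeating), so the greedy step only runs out of options near the end of the list, which is what the restart handles.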

Statistics

All statistical analyses were performed with STATISTICA 7.0 software (StatSoft, Inc). Dependent-samples t-tests were used to compare, within each condition, the number of trials judged as good versus bad mood. A repeated-measures ANOVA was performed on RTs with congruency (levels: congruent, incongruent), emoticon valence (levels: positive, negative), and response (levels: good mood, bad mood) as factors.
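Because every factor in this design has two levels, each effect in the repeated-measures ANOVA has one numerator degree of freedom, and its F equals the squared one-sample t of a per-participant contrast score (the signed sum of that participant's cell means). The error df is therefore n - 1, so the reported F(1,31) would correspond to 32 participants contributing RTs to all eight cells. The Python sketch below illustrates this equivalence; it is not the STATISTICA procedure itself, the data layout (one dict of cell means per participant, keyed by 0/1 level codes) is an assumption, and the toy data are illustrative only.

```python
import math
from itertools import product
from statistics import mean, stdev


def contrast_score(cells, factors, effect):
    """Signed sum of one participant's cell means for a 2-level effect.

    The sign of a cell is the product, over the factors in `effect` (a tuple
    of factor names), of +1 for level 0 and -1 for level 1.
    """
    score = 0.0
    for levels in product((0, 1), repeat=len(factors)):
        sign = 1
        for name, level in zip(factors, levels):
            if name in effect and level == 1:
                sign = -sign
        score += sign * cells[levels]
    return score


def rm_anova_effect(participants, factors, effect):
    """F and df for one effect of a 2^k within-subjects ANOVA.

    Every participant needs a mean for every cell; F is t**2 of the
    one-sample t-test of the contrast scores against zero.
    """
    scores = [contrast_score(cells, factors, effect) for cells in participants]
    n = len(scores)
    t = mean(scores) / (stdev(scores) / math.sqrt(n))
    return t * t, (1, n - 1)


# For this study one would use factors = ("congruency", "valence", "response")
# and, e.g., effect = ("congruency", "valence") for the two-way interaction.
# Toy 2 x 2 data for three hypothetical participants:
toy = [
    {(0, 0): 1.0, (0, 1): 1.0, (1, 0): 0.0, (1, 1): 0.0},
    {(0, 0): 2.0, (0, 1): 2.0, (1, 0): 0.0, (1, 1): 0.0},
    {(0, 0): 3.0, (0, 1): 3.0, (1, 0): 0.0, (1, 1): 0.0},
]
F, df = rm_anova_effect(toy, ("A", "B"), ("A",))
print(F, df)
```

In the toy data the main-effect contrast scores are 2, 4 and 6, giving t = 4 / (2 / sqrt(3)) and F = 12 with df = (1, 2).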

Results

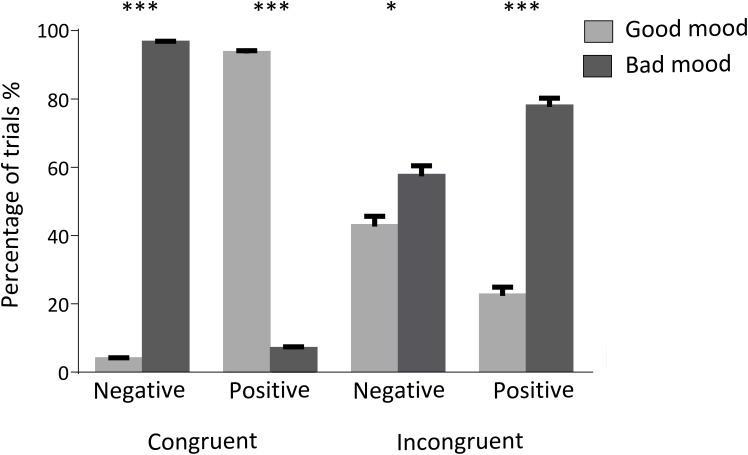

We measured mood selection by counting the trials that participants judged as good or bad mood in each condition (Table 2). Good- and bad-mood counts differed in every condition. Specifically, participants judged the sender to be in a bad mood in the negative congruent condition (t = 78.89, p < 0.01) and in a good mood in the positive congruent condition (t = 55.17, p < 0.01). In the incongruent conditions, participants more often judged the sender to be in a bad mood, regardless of the emoticon's emotional valence. However, the difference observed in the negative incongruent condition (good mood = 12.789, bad mood = 17.211; t = 2.423, p = 0.018) is notably smaller than in the positive incongruent condition (good mood = 6.704, bad mood = 23.296; t = 10.79, p < 0.01) (Table 2 and Figure 3).

Table 2.

Descriptive behavioral results. The table shows the mean number of trials (and selection rate, %) in which participants perceived a good or bad mood of the sender, and reaction times (RT, in seconds), for each condition (NN: Negative Congruent; PP: Positive Congruent; NP: Positive Incongruent; PN: Negative Incongruent). Standard deviation (SD) and standard error of the mean (SEM) are given for all results.

| Condition | Statistic | Good mood: frequency | Good mood: RT (s) | Bad mood: frequency | Bad mood: RT (s) |
|---|---|---|---|---|---|
| NN | Mean | 1.099 (3.662%) | 1.138 | 28.901 (96.338%) | 1.028 |
| | SD | 1.485 | 0.621 | 1.485 | 0.404 |
| | SEM | 0.176 | 0.074 | 0.176 | 0.048 |
| PP | Mean | 28.000 (93.333%) | 1.065 | 2.000 (6.667%) | 1.502 |
| | SD | 1.986 | 0.429 | 1.986 | 0.902 |
| | SEM | 0.236 | 0.051 | 0.236 | 0.107 |
| NP | Mean | 6.704 (22.347%) | 1.636 | 23.296 (77.653%) | 1.467 |
| | SD | 6.477 | 0.886 | 6.477 | 0.651 |
| | SEM | 0.769 | 0.105 | 0.769 | 0.077 |
| PN | Mean | 12.789 (42.629%) | 1.872 | 17.211 (57.371%) | 1.878 |
| | SD | 7.688 | 1.280 | 7.688 | 1.102 |
| | SEM | 0.912 | 0.152 | 0.912 | 0.131 |
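Because each participant saw 30 trials per condition and every trial received exactly one response, the bad-mood count equals 30 minus the good-mood count. The paired difference is therefore d = 30 - 2·good, with mean 30 - 2·mean(good) and SD 2·SD(good), so the t values reported in the Results can be recomputed from Table 2 alone. The Python sketch below does this. The number of participants entering this analysis is not stated, so the sketch infers it from the table via SEM = SD / sqrt(n), i.e. n = (SD / SEM)^2; that inferred n is an assumption.

```python
import math

TRIALS_PER_CONDITION = 30  # good + bad frequencies sum to 30 in Table 2

# (mean, SD, SEM) of the good-mood frequency per condition, from Table 2.
TABLE2_GOOD = {
    "NN": (1.099, 1.485, 0.176),
    "PP": (28.000, 1.986, 0.236),
    "NP": (6.704, 6.477, 0.769),
    "PN": (12.789, 7.688, 0.912),
}


def paired_t_from_summary(mean_good, sd_good, sem_good, trials=TRIALS_PER_CONDITION):
    """Paired t for (bad - good) when bad = trials - good for every participant."""
    n = round((sd_good / sem_good) ** 2)  # assumption: n recovered from SEM = SD / sqrt(n)
    mean_diff = trials - 2 * mean_good    # mean of (bad - good)
    sd_diff = 2 * sd_good                 # SD of (trials - 2 * good)
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n


for cond, stats in TABLE2_GOOD.items():
    t, n = paired_t_from_summary(*stats)
    print(f"{cond}: n = {n}, t = {t:.2f}")
```

The recomputed statistics agree with the reported t values to within about 0.02; the sign is negative for PP only because bad minus good is negative in that condition.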

FIGURE 3.

Percentage of trials judged as good or bad mood. Bars indicate the mean and SEM of the percentage of trials judged as good or bad mood for each condition. 'Negative' and 'Positive' indicate the emoticon's valence. ∗, ∗∗∗: statistically significant differences with p < 0.05 or p < 0.001, respectively.

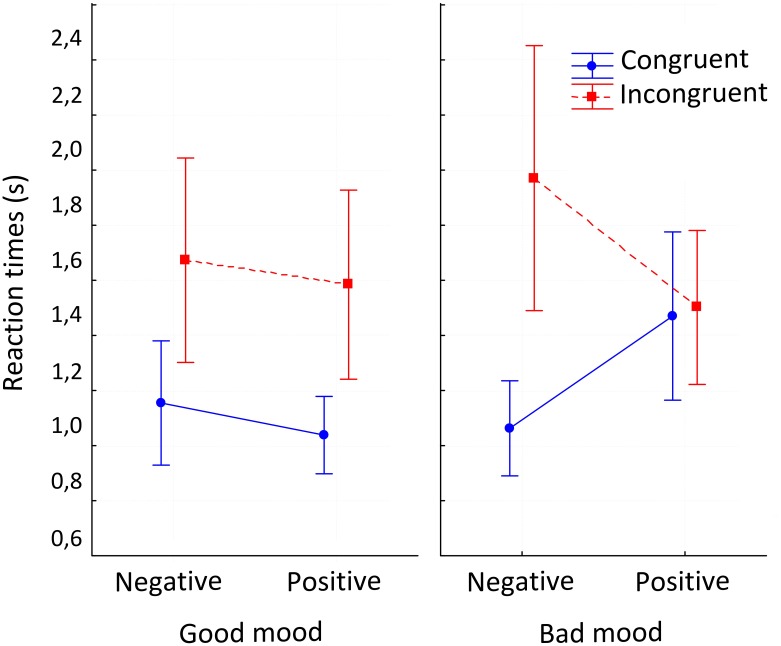

We measured task performance by calculating the RTs for each condition. We compared them to assess whether the emotional congruence between a text message and the subsequent emoticon, the valence of the emoticon, and the mood response interact, and how these factors influence behavioral responses.

We found a main effect of mood response: "bad mood" responses were slower than "good mood" responses [F(1,31) = 7.435; p = 0.010]. We also found a main effect of congruency: the incongruent condition showed slower RTs than the congruent condition [F(1,31) = 19.27; p < 0.01]. Emoticon valence showed no main effect [F(1,31) = 1.217; p = 0.278] (Figure 4).

FIGURE 4.

Interaction between the three factors. Three-way interaction in the repeated-measures ANOVA between the congruency, emoticon valence, and mood detection factors. Vertical bars denote 0.95 confidence intervals.

Furthermore, a congruency × valence interaction was observed [F(1,31) = 10.43; p < 0.01]: in the incongruent conditions, negative emoticons yielded slower RTs than positive emoticons. We also found a three-way interaction between congruency, valence, and mood response [F(1,31) = 16.56; p < 0.01]. When participants selected the good-mood response, the incongruent condition showed slower RTs than the congruent condition, regardless of emoticon valence. When they selected the bad-mood response, RTs for positive emoticons were similar in the congruent and incongruent conditions, whereas for negative emoticons the incongruent condition showed slower RTs than the congruent condition (Figure 4).

Discussion

The current study analyzes the role of emoticons in disambiguating messages to detect the sender's mood during text-based communication. We used an affective congruence task in which participants had to decide whether the sender was in a good or bad mood after reading text messages describing emotional situations that ended with a critical emoticon. We manipulated the emotional valence of the emoticons, generating congruent and incongruent affective messages. Our focus was on the incongruent conditions, to observe which information participants use to detect emotions. Our results indicate that emoticons do not always guide disambiguation in text-based interactions. Specifically, ambiguous messages (incongruent condition) with positive emoticons (negative text) and with negative emoticons (positive text) were more frequently judged as sent by a person in a bad mood. In addition, our results show that the valence of the emoticon interacts with the valence of the preceding text. Despite the overall tendency to select bad mood, this tendency differs between the two types of ambiguity. Specifically, when incongruent messages ended with a negative emoticon, the rates of good- and bad-mood selections were more similar than when they ended with a positive emoticon. In the latter case, the tendency to select bad mood was stronger, with a larger difference between good- and bad-mood response rates. Conversely, incongruent messages with negative emoticons were more often related to good emotional states than incongruent messages ending with a positive emoticon.

Regarding RTs, a congruence effect was observed when participants selected the good-mood response, with slower RTs for incongruent messages. When participants selected the bad-mood response, this congruence effect was present only for negative emoticons, again with slower RTs in the incongruent condition. Additionally, within incongruent messages, negative emoticons yielded slower RTs than positive emoticons.

In the following, we discuss some of these points in more detail.

Ambiguous Messages Tend to Be Perceived as Bad State of Mood of the Sender, but This Tendency Depends on the Valence of the Emoticon

The results of this experiment show that ambiguous messages are more frequently associated with a bad mood of the sender, and that this difference is more pronounced when emoticons have a positive valence. This evidence contradicts the results of other studies suggesting that emoticon valence guides mood perception and is prioritized when detecting emotions in text-based communication (Derks et al., 2007). Lo (2008), using a task similar to ours, reached different conclusions: he observed that the valence of the emoticon guided the perception of mood, whereas we observed that, in the incongruent conditions, the influence of emoticons on the perceived mood is not the same for positive and negative emoticons.

Some methodological differences may explain the discrepancy between the two studies. Stimuli in Lo (2008) were presented for 1 min, whereas in our study emoticons were presented for 500 ms. This suggests that when stimuli are brief and participants are asked to respond quickly, mood judgments rely on less deliberate inferential processing. Longer exposure could facilitate a more reflective analysis when judging mood, whereas our results reflect earlier stages of the mood detection process. Additionally, Lo (2008) instructed participants to indicate the degree of sadness or happiness perceived in each message, whereas in our task responses were dichotomous: participants only indicated whether they perceived a good or bad mood. This difference is related to the previous point (i.e., deliberation), because indicating the intensity of a specific emotion requires a more detailed analysis of the stimuli, which is a more complex process. In our experiment, we did not measure the perceived emotional intensity of each message; however, the prior validation of the stimuli allowed us to rule out that the valence effects observed in ambiguous messages were due to differences in emotional intensity.

The observed interaction between emoticons and ambiguity suggests that treating text and emoticons as having dissimilar and isolated functions (text communicating ideas and emoticons communicating emotions; Knapp and Hall, 2002) is not an appropriate approach. Our results suggest instead that the roles of text and emoticon are better understood in terms of their relationship when a sender's mood is inferred from ambiguous messages.

It has been proposed that emoticons make interactions more enjoyable (Huang et al., 2008). Recording electrodermal activity to measure arousal and electromyography to measure facial micro-expressions, Thompson et al. (2016) observed that sarcastic expressions (which are by definition ambiguous) elicited more positive responses when accompanied by emoticons, with increased arousal and enhanced smiling. Taken together with the observations of Thompson et al. (2016), our finding that people tend to detect negative emotions in ambiguous messages leads us to suggest that, although we enjoy conversations that include emoticons, we still detect the negative emotions in the messages.

Our results are not the first to show a tendency to detect negative emotions in ambiguous messages. Similarly, Walther and D'Addario (2001) found that when messages contained any negative information (in the text or in the emoticon), there was a greater tendency to perceive negative emotions. These findings, including ours, indicate that an isolated influence of emoticons is a limited explanation when messages are ambiguous. Rather, we propose that the function of emoticons should be related to their role in indicating illocutionary force in text-based computer-mediated communication (CMC) (Dresner and Herring, 2010, 2014). Thus, from the perspective of Speech Act Theory (Austin, 1975; Searle, 1985), emoticons would have a pragmatic meaning, with a function that goes beyond their emotional information, similar to punctuation marks or even gestures (Feldman et al., 2017b).

Future Directions

To our knowledge, this is the first study in which the detection of emotions in ambiguous text-based communication is measured experimentally through RTs and not only through a selection response. Ambiguity is a difficulty in CMC, not only for human interactions but also for computational sentiment analysis algorithms. Examples are irony and metaphorical language, which belong to figurative language as opposed to literal language (Sarbaugh-Thompson and Feldman, 1998). Our results show that disambiguation depends not only on the emoticon but also on its interaction with the contextual information. Nevertheless, further experimental research is required to understand the active role of emoticons in disambiguation within their context.

This study did not measure the perceived irony of each message, which is undoubtedly one of the ways of making sense of ambiguous sentences (Cornejo et al., 2007). Therefore, future designs should also control for perceived irony. In addition, previous studies have observed that virtual interactions show individual differences according to personality traits such as extraversion or openness to experience, which could influence users' perception in virtual communication (Kaye et al., 2016, 2017). We did not measure personal traits that could influence the detection of mood states from text messages in social interactions (the receiver's mood, empathy levels, communicative skills, or social skills). It would be useful to examine whether some of these traits predict individual differences in the tendency to infer the emotional states of others in communicative interactions. Additionally, experimental manipulation of factors known to influence comprehension, such as age, gender, cultural differences, knowledge about the speaker, or common ground, among others (Kronmüller and Barr, 2007; Van Berkum et al., 2008; Galati and Brennan, 2010), would help to understand how the sender's emotions are detected and the similarities and differences between text-based and face-to-face communication.

Regarding this study, it is necessary to bear in mind that this experiment used typographic emoticons to address their role in emotional disambiguation in text-based communication. These cues are schematic symbols, not complex images like emojis, which show more detailed emotional expressions. Differences in their influence on emotional expressivity have been observed in previous studies (Nobuyuki et al., 2014), so this difference should be considered in future research.

Finally, all trials in this experiment began with the text, so we did not test whether the order of text and emoticon has an additional effect on comprehension; text followed by emoticon is, however, the most common message structure (Novak et al., 2015). Still, studying whether the results change with the order of text and emoticon within each trial could help to control for emotional priming effects driven by the order in which stimuli are presented (text vs. emoticon) (Hermans et al., 1994; Stenberg et al., 1998; Spruyt et al., 2002).

Conclusion

Emoticons have been considered the expressive cue used in text-based messages to enhance the intended emotion in CMC. However, future theories and studies must consider contextual influences to understand how emoticons are processed in CMC. Human communication is known to require the integration of all available contextual information (Tanenhaus et al., 1995; Xu et al., 2005). For example, gestures and body movements are integrated into speech processing (Willems et al., 2006; Özyürek et al., 2007; Cornejo et al., 2009). Our results indicate that this also occurs in virtual text-based communication, where text and emoticon information are integrated to detect the sender's mood in affective communication. Furthermore, we conclude that this integration depends on the valence of the information used to disambiguate messages, and that disambiguation seems to be more difficult when the incongruity combines a positive text with a negative emoticon. All this leads us to emphasize the need to study the role of emoticons in relation to the context in which they are presented, and not as isolated emotional information, considering that communication is a complex phenomenon. Specifically, our study suggests that it is important to consider emoticons in terms of their pragmatic function in CMC.

Author Contributions

NA, MV-G, FR-T, VL, and CB made substantial contributions to the conception and design of the work, drafted and critically revised this manuscript, and approved the final version to be published. All of them agreed to be accountable for all aspects of the work, ensuring that questions related to the work are appropriately investigated and resolved.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Camila Bueno, Francisco Donoso, Naiomi Naipan, Paloma Ruiz, and Santiago Toledo for their help with data acquisition in the Laboratorio de Afectividad y Comunicación (Psicología-USACH).

Footnotes

Funding. This study was funded by Inserción a la Academia #79140067 (PAI), Comisión Nacional de Investigación Científica y Tecnológica (CONICYT), granted to NA and FONDECYT Regular de Investigación #11502141, Comisión Nacional de Investigación Científica y Tecnológica (CONICYT), granted to VL.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2018.00423/full#supplementary-material

References

- Aldunate N., González-Ibáñez R. (2017). An integrated review of emoticons in computer-mediated communication. Front. Psychol. 7:2061. 10.3389/fpsyg.2016.02061 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Austin J. L. (1975). How to Do Things with Words. Oxford: Oxford university press; 10.1093/acprof:oso/9780198245537.001.0001 [DOI] [Google Scholar]

- Blau V. C., Maurer U., Tottenham N., McCandliss B. D. (2007). The face-specific N170 component is modulated by emotional facial expression. Behav. Brain Funct. 3:7. 10.1186/1744-9081-3-7 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cornejo C., Simonetti F., Aldunate N., Ibáñez A., López V., Melloni L. (2007). Electrophysiological evidence of different interpretative strategies in irony comprehension. J. Psycholinguist. Res. 36 411–430. 10.1007/s10936-007-9052-0 [DOI] [PubMed] [Google Scholar]

- Cornejo C., Simonetti F., Ibáñez A., Aldunate N., Ceric F., López V., et al. (2009). Gesture and metaphor comprehension: electrophysiological evidence of cross-modal coordination by audiovisual stimulation. Brain Cogn. 70 42–52. 10.1016/j.bandc.2008.12.005 [DOI] [PubMed] [Google Scholar]

- Decety J., Ickes W. (2011). The Social Neuroscience of Empathy. Cambridge, MA: MIT Press.

- Derks D., Bos A. E., Von Grumbkow J. (2007). Emoticons and social interaction on the internet: the importance of social context. Comput. Hum. Behav. 23 842–849. 10.1016/j.chb.2004.11.013

- Derks D., Bos A. E., Von Grumbkow J. (2008). Emoticons and online message interpretation. Soc. Sci. Comput. Rev. 26 379–388. 10.1177/0894439307311611

- Diéguez-Risco T., Aguado L., Albert J., Hinojosa J. A. (2015). Judging emotional congruency: explicit attention to situational context modulates processing of facial expressions of emotion. Biol. Psychol. 112 27–38. 10.1016/j.biopsycho.2015.09.012

- Dresner E., Herring S. C. (2010). Functions of the nonverbal in CMC: emoticons and illocutionary force. Commun. Theory 20 249–268. 10.1111/j.1468-2885.2010.01362.x

- Dresner E., Herring S. C. (2014). “Emoticons and illocutionary force,” in Perspectives on Theory of Controversies and the Ethics of Communication. Amsterdam: Springer, 81–90. 10.1007/978-94-007-7131-4_8

- Feldman L. B., Aragon C. R., Chen N. C., Kroll J. F. (2017a). Emoticons in informal text communication: a new window on bilingual alignment. Bilingualism 21 209–218. 10.1017/S1366728917000359

- Feldman L. B., Aragon C. R., Chen N. C., Kroll J. F. (2017b). Emoticons in text may function like gestures in spoken or signed communication. Behav. Brain Sci. 40:e55. 10.1017/S0140525X15002903

- Filik R., Durcan A., Thompson D., Harvey N., Davies H., Turner A. (2016). Sarcasm and emoticons: comprehension and emotional impact. Q. J. Exp. Psychol. 69 2130–2146. 10.1080/17470218.2015.1106566

- Galati A., Brennan S. E. (2010). Attenuating information in spoken communication: for the speaker, or for the addressee? J. Mem. Lang. 62 35–51. 10.1016/j.jml.2009.09.002

- Gibbs R. W., Jr., Colston H. L. (2012). Interpreting Figurative Meaning. Cambridge: Cambridge University Press. 10.1017/CBO9781139168779

- Hancock J. T., Landrigan C., Silver C. (2007). “Expressing emotion in text-based communication,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, San Jose, CA. 10.1145/1240624.1240764

- Haxby J. V., Hoffman E. A., Gobbini M. I. (2002). Human neural systems for face recognition and social communication. Biol. Psychiatry 51 59–67. 10.1016/S0006-3223(01)01330-0

- Heilman K. M., Bowers D., Speedie L., Coslett H. B. (1984). Comprehension of affective and nonaffective prosody. Neurology 34 917–921. 10.1212/WNL.34.7.917

- Hermans D., Houwer J. D., Eelen P. (1994). The affective priming effect: automatic activation of evaluative information in memory. Cogn. Emot. 8 515–533. 10.1080/02699939408408957

- Hinojosa J. A., Mercado F., Carretié L. (2015). N170 sensitivity to facial expression: a meta-analysis. Neurosci. Biobehav. Rev. 55 498–509. 10.1016/j.neubiorev.2015.06.002

- Huang A. H., Yen D. C., Zhang X. (2008). Exploring the potential effects of emoticons. Informat. Manag. 45 466–473. 10.1016/j.im.2008.07.001

- Kaye L. K., Malone S. A., Wall H. J. (2017). Emojis: insights, affordances, and possibilities for psychological science. Trends Cogn. Sci. 21 66–68. 10.1016/j.tics.2016.10.007

- Kaye L. K., Wall H. J., Malone S. A. (2016). “Turn that frown upside-down”: a contextual account of emoticon usage on different virtual platforms. Comput. Hum. Behav. 60 463–467. 10.1016/j.chb.2016.02.088

- Kiesler S., Siegel J., McGuire T. W. (1984). Social psychological aspects of computer-mediated communication. Am. Psychol. 39 1123–1134. 10.1037/0003-066X.39.10.1123

- Knapp M. L., Hall J. A. (2002). Nonverbal Communication in Human Interaction. Boston, MA: Thomson Learning.

- Krombholz A., Schaefer F., Boucsein W. (2007). Modification of N170 by different emotional expression of schematic faces. Biol. Psychol. 76 156–162. 10.1016/j.biopsycho.2007.07.004

- Kronmüller E., Barr D. J. (2007). Perspective-free pragmatics: broken precedents and the recovery-from-preemption hypothesis. J. Mem. Lang. 56 436–455. 10.1016/j.jml.2006.05.002

- Lo S. K. (2008). The nonverbal communication functions of emoticons in computer-mediated communication. Cyberpsychol. Behav. 11 595–597. 10.1089/cpb.2007.0132

- Lynn S. K., Salisbury D. F. (2008). Attenuated modulation of the N170 ERP by facial expressions in schizophrenia. Clin. EEG Neurosci. 39 108–111. 10.1177/155005940803900218

- Muresan S., González-Ibáñez R., Ghosh D., Wacholder N. (2016). Identification of nonliteral language in social media: a case study on sarcasm. J. Assoc. Inform. Sci. Technol. 67 2725–2737. 10.1002/asi.23624

- Nobuyuki H., Yusuke U., Shuji M. (2014). Effects of emoticons and pictograms on communication of emotional states via mobile text-messaging. Jpn. J. Res. Emot. 22 20–27. 10.4092/jsre.22.1_20

- Novak P. K., Smailovic J., Sluban B., Mozetic I. (2015). Sentiment of emojis. PLoS One 10:e0144296. 10.1371/journal.pone.0144296

- Özyürek A., Willems R. M., Kita S., Hagoort P. (2007). On-line integration of semantic information from speech and gesture: insights from event-related brain potentials. J. Cogn. Neurosci. 19 605–616. 10.1162/jocn.2007.19.4.605

- Panksepp J. (2004). Affective Neuroscience: The Foundations of Human and Animal Emotions. Oxford: Oxford University Press.

- Peirce J. W. (2007). PsychoPy—psychophysics software in Python. J. Neurosci. Methods 162 8–13. 10.1016/j.jneumeth.2006.11.017

- Pessoa L. (2009). How do emotion and motivation direct executive control? Trends Cogn. Sci. 13 160–166. 10.1016/j.tics.2009.01.006

- Righart R., de Gelder B. (2008). Recognition of facial expressions is influenced by emotional scene gist. Cogn. Affect. Behav. Neurosci. 8 264–272. 10.3758/CABN.8.3.264

- Sarbaugh-Thompson M., Feldman M. S. (1998). Electronic mail and organizational communication: does saying “hi” really matter? Organ. Sci. 9 685–698. 10.1287/orsc.9.6.685

- Searle J. R. (1985). Expression and Meaning: Studies in the Theory of Speech Acts. Cambridge: Cambridge University Press.

- Spruyt A., Hermans D., Houwer J. D., Eelen P. (2002). On the nature of the affective priming effect: affective priming of naming responses. Soc. Cogn. 20 227–256. 10.1521/soco.20.3.227.21106

- Stekelenburg J. J., de Gelder B. (2004). The neural correlates of perceiving human bodies: an ERP study on the body-inversion effect. Neuroreport 15 777–780. 10.1097/01.wnr.0000119730.93564.e8

- Stenberg G., Wiking S., Dahl M. (1998). Judging words at face value: interference in a word processing task reveals automatic processing of affective facial expressions. Cogn. Emot. 12 755–782. 10.1080/026999398379420

- Tanenhaus M. K., Spivey-Knowlton M. J., Eberhard K. M., Sedivy J. C. (1995). Integration of visual and linguistic information in spoken language comprehension. Science 268 1632–1634. 10.1126/science.7777863

- Thompson D., Mackenzie I. G., Leuthold H., Filik R. (2016). Emotional responses to irony and emoticons in written language: evidence from EDA and facial EMG. Psychophysiology 53 1054–1062. 10.1111/psyp.12642

- Van Berkum J. J., Van den Brink D., Tesink C. M., Kos M., Hagoort P. (2008). The neural integration of speaker and message. J. Cogn. Neurosci. 20 580–591. 10.1162/jocn.2008.20054

- Van Heijnsbergen C. C. R. J., Meeren H. K. M., Grèzes J., de Gelder B. (2007). Rapid detection of fear in body expressions, an ERP study. Brain Res. 1186 233–241. 10.1016/j.brainres.2007.09.093

- Walther J. B., D’Addario K. P. (2001). The impacts of emoticons on message interpretation in computer-mediated communication. Soc. Sci. Comput. Rev. 19 324–347. 10.1177/089443930101900307

- Willems R. M., Özyürek A., Hagoort P. (2006). When language meets action: the neural integration of gesture and speech. Cereb. Cortex 17 2322–2333. 10.1093/cercor/bhl141

- Xu J., Kemeny S., Park G., Frattali C., Braun A. (2005). Language in context: emergent features of word, sentence, and narrative comprehension. Neuroimage 25 1002–1015. 10.1016/j.neuroimage.2004.12.013

- Zhou L., Burgoon J. K., Nunamaker J. F., Twitchell D. (2004). Automating linguistics-based cues for detecting deception in text-based asynchronous computer-mediated communications. Group Decis. Negot. 13 81–106. 10.1023/B:GRUP.0000011944.62889.6f
