Abstract

Purpose

The purpose of this educational report is to provide an overview of the present state‐of‐the‐art PET auto‐segmentation (PET‐AS) algorithms and their respective validation, with an emphasis on providing the user with help in understanding the challenges and pitfalls associated with selecting and implementing a PET‐AS algorithm for a particular application.

Approach

A brief description of the different types of PET‐AS algorithms is provided using a classification based on method complexity and type. The advantages and the limitations of the current PET‐AS algorithms are highlighted based on current publications and existing comparison studies. A review of the available image datasets and contour evaluation metrics in terms of their applicability for establishing a standardized evaluation of PET‐AS algorithms is provided. The performance requirements for the algorithms and their dependence on the application, the radiotracer used and the evaluation criteria are described and discussed. Finally, a procedure for algorithm acceptance and implementation, as well as the complementary role of manual and auto‐segmentation are addressed.

Findings

A large number of PET‐AS algorithms have been developed within the last 20 years. Many of the proposed algorithms are based on either fixed or adaptively selected thresholds. More recently, numerous papers have proposed the use of more advanced image analysis paradigms to perform semi‐automated delineation of the PET images. However, the level of algorithm validation is variable and, for most published algorithms, either insufficient or inconsistent, which prevents recommending a single algorithm. This is compounded by the fact that realistic image configurations with low signal‐to‐noise ratios (SNR) and heterogeneous tracer distributions have rarely been used. Large variations in the evaluation methods used in the literature point to the need for a standardized evaluation protocol.

Conclusions

Available comparison studies suggest that PET‐AS algorithms relying on advanced image analysis paradigms generally provide more accurate segmentation than approaches based on PET activity thresholds, particularly for realistic configurations. However, this may not be the case for lesions with simple shapes in situations with a narrower range of parameters, where simpler methods may also perform well. Recent algorithms that employ some type of consensus or automatic selection between several PET‐AS methods have the potential to overcome the limitations of the individual methods when appropriately trained. In either case, accuracy evaluation is required for each PET scanner and for each scanning and image reconstruction protocol. For the simpler, less robust approaches, adaptation to scanning conditions, tumor type, and tumor location by optimization of parameters is necessary. The results from the method evaluation stage can be used to estimate the contouring uncertainty. All PET‐AS contours should be critically verified by a physician. A standard test, i.e., a benchmark dedicated to evaluating both existing and future PET‐AS algorithms, needs to be designed to aid clinicians in evaluating and selecting PET‐AS algorithms and to establish performance limits for their acceptance for clinical use. The initial steps toward designing and building such a standard have been undertaken by the task group members.

Keywords: PET/CT, PET segmentation, treatment assessment, treatment planning

1. Introduction

Positron emission tomography (PET) has the potential to improve the outcome of cancer therapy because it allows tumors to be identified and characterized based on their metabolic properties,1 which are inherently tied to cancer biology. PET is helpful in delineating the tumor target for radiation therapy, in quantitating tumor burden for therapy assessment, in determining patient prognosis, and in detecting and quantitating recurrent or metastatic disease. This is especially true when the cancer lesion boundaries are not easily distinguished from surrounding normal tissue in anatomical images. Combined PET/CT (computed tomography) and PET/MRI (magnetic resonance imaging) provide both anatomical/morphological and functional information in one imaging session. In addition to segmentation, this allows the tumors to be divided into subregions based on metabolic activity, which could potentially be used to treat or evaluate these subregions differentially (e.g., by increasing the dose to the more aggressive and radioresistant sub‐volumes, an approach known as “dose painting”2). Accurate delineation of the metabolic tumor volume in PET is important for predicting and monitoring response to therapy. Aside from standardized uptake value (SUV) measurements,3, 4 other parameters (e.g., total lesion glycolysis (TLG), textural and shape features, and tracer kinetic parameters) with complementary predictive or prognostic value can be extracted from PET images.

For radiation therapy, leaving parts of the tumor untreated because its extent is underestimated by anatomic imaging or, conversely, irradiating healthy tissue because the boundaries between the tumor and the adjacent normal tissue cannot be defined can result in suboptimal response and/or (possibly severe) adverse side‐effects. It has been shown in several clinical studies that PET using the [18F]2‐fluoro‐2‐deoxy‐D‐glucose (Fluorodeoxyglucose) radiotracer (18F‐FDG PET) has led to changes in clinical management for about 30% of patients.5, 6, 7 Other studies, involving non‐small cell lung cancer (NSCLC)8, 9, 10 and head‐and‐neck (H&N) cancer,11 have demonstrated that the incorporation of PET imaging in radiotherapy planning can result in significant changes (either increase or decrease) in treatment volumes.

In addition, the quantitative assessment of the metabolically active tumor volume may provide independent prognostic or predictive information. This has been shown in several malignancies, including locally advanced esophageal cancer,12 non‐Hodgkin lymphoma,13 pleural mesothelioma,14 cervical and H&N cancers,15 and lung cancer.16

These promising data underline the need to establish and validate algorithms for the segmentation of PET metabolic volumes before and during treatment. The gross tumor volumes (GTV) defined by PET are intended to contain the macroscopic extent of the tumors. Currently, inaccuracies in defining the PET‐based GTV arise from variations in the biological processes determining the radiotracer uptake, as well as from physical and image acquisition phenomena that affect the reconstructed PET images.4, 17, 18, 19, 20, 21, 22 Furthermore, uncertainty can be introduced by the segmentation process itself. It has been shown that volume differences of up to 200% can arise from using different GTV contouring algorithms.23

Regardless of these uncertainties, many radiation oncology departments have started using PET/CT for lesion delineation in radiation treatment planning (RTP).1, 7, 8, 9, 11, 24 Numerical auto‐segmentation techniques, which have been shown to reduce intra‐ and inter‐observer variations,25, 26 can be used to guide the PET delineation process, and some commercial vendors now offer tools for semi‐automatic delineation of tumor volumes in PET images for radiotherapy planning or response assessment. While these approaches may work reasonably well when applied in conjunction with anatomical imaging and clinical expertise, their accuracy and limitations have not been fully assessed.

Due to the complexity of PET‐based tumor segmentation and the abundance of potentially applicable numerical approaches, a large variety of automatic, semi‐automatic, and combined PET‐AS approaches have been proposed over the past 20 years.27, 28, 29, 30 These include multiple semi‐automatic approaches derived from phantom data as well as fully automated algorithms, differing in algorithmic basis, fundamental assumptions, clinical goals, workflow, and accuracy. In addition, algorithms for segmenting combinations of images from PET and other imaging modalities have appeared in the literature.31, 32, 33, 34 The majority of these approaches have been tested either on simplistic phantom studies or on patient datasets where the ground truth is largely unknown. Moreover, only a few of these algorithms have been tested for their ability to segment lesions with irregular shapes or nonuniform activity distributions, a capability that is essential for implementing accurate delineation protocols. Finally, most methods have been evaluated using different datasets and protocols, which makes comparing the results difficult or even impossible. As a result, there is currently no commonly adopted technique for reliable, routine, clinical PET image auto‐segmentation.

In this educational report, we describe, with examples, the main classes of PET‐AS algorithms (section 2), highlight the advantages and the limitations of the current techniques (section 2.B.4), and discuss possible evaluation approaches (section 2.B.5). In that section, the types of available image datasets and the existing approaches for contour evaluation are discussed with the intention of laying the basis for a standard for effective evaluation of PET auto‐segmentation algorithms. Clinicians interested in the practical aspects of PET segmentation may find section 4.C.4 most useful; it highlights the biological, physiological, and image acquisition factors affecting the performance of PET‐AS methods, as well as preliminary guidelines for their acceptance and implementation.

2. Description and classification of the algorithms

The following is a glossary of the abbreviations, definitions, and notations used in this report:

Abbreviations

- ARG: Adaptive Region Growing
- ATS: Adaptive Threshold Segmentation
- BTV: Biological Target Volume
- CT: Computed Tomography
- CTV: Clinical Target Volume
- DSC: Dice Similarity Coefficient
- DWT: Discrete Wavelet Transforms
- EM: Expectation Maximization
- FCM: Fuzzy C‐Means
- FDG: [18F]2‐fluoro‐2‐deoxy‐D‐glucose (Fluorodeoxyglucose)
- FLT: 18F‐3′‐fluoro‐3′‐deoxy‐L‐thymidine
- FMISO: 18F‐fluoromisonidazole
- FOM: Figure of Merit
- FTS: Fixed Threshold Segmentation
- GTV: Gross Tumor Volume
- H&N: Head and Neck
- ML: Maximum Likelihood
- MRI: Magnetic Resonance Imaging
- MVLS: Multi‐Valued Level Sets
- NEMA: National Electrical Manufacturers Association
- NGTDM: Neighborhood Gray‐Tone Difference Matrices
- NSCLC: Non‐Small Cell Lung Cancer
- PET: Positron Emission Tomography
- PET‐AS: PET Auto‐Segmentation
- PPV: Positive Predictive Value
- PSF: Point Spread Function
- PTV: Planning Target Volume
- PVE: Partial Volume Effect
- ROC: Receiver Operating Characteristic
- SNR: Signal‐to‐Noise Ratio

Definitions

ROI (Region of Interest): A 2D or 3D region drawn on an image for purposes of restricting and focusing analysis to its contents. It is closely related to and often used interchangeably with volume of interest (VOI).

SUV (Standardized Uptake Value): A measure of the intensity of radiotracer uptake in an object (lesion or body region) or region of interest; measured activity in that region is normalized to the injected activity and some measurement of patient size, most commonly weight (mass).

TLG (Total Lesion Glycolysis): The integral of the FDG‐SUV over the volume or, equivalently, the product of the mean FDG‐SUV and the volume. The same paradigm can be applied to other radiotracers; for instance, it is called Total Proliferative Volume or Total Hypoxic Volume in the case of FLT or FMISO, respectively.

VOI (Volume of Interest): A 3D region defined in a set of images for purposes of restricting and focusing analysis to its contents. It is closely related to and often used interchangeably with region of interest (ROI).
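The SUV and TLG definitions above reduce to simple arithmetic. The Python sketch below assumes body‐weight normalization (concentration in Bq/mL, injected activity in Bq, weight in g), a tissue density of 1 g/mL, and an optional decay of the injected activity to scan time that is only needed when the image has not already been decay‐corrected to injection time. The function names are illustrative and do not come from any particular software package.

```python
import numpy as np

F18_HALF_LIFE_S = 109.77 * 60.0  # physical half-life of 18F (about 109.8 min), in s


def suv_bw(conc_bq_per_ml, injected_bq, weight_g, delay_s=0.0,
           half_life_s=F18_HALF_LIFE_S):
    """Body-weight SUV = tissue concentration / (injected activity / body weight).

    Assumes 1 g/mL tissue density, so the result is dimensionless.
    `delay_s` decays the injected activity to scan time; leave it at 0 if the
    image is already decay-corrected to the injection time.
    """
    decayed_bq = injected_bq * 0.5 ** (delay_s / half_life_s)
    return np.asarray(conc_bq_per_ml, dtype=float) / (decayed_bq / weight_g)


def total_lesion_glycolysis(suv_image, mask, voxel_ml):
    """TLG = SUVmean x volume, equivalently the sum of SUV x voxel volume."""
    volume_ml = mask.sum() * voxel_ml
    return suv_image[mask].mean() * volume_ml, volume_ml


# 370 MBq injected into a 70 kg patient; tissue concentration twice the mean body level
suv = suv_bw(2 * 370e6 / 70e3, injected_bq=370e6, weight_g=70e3)

# A uniform SUV of 4 in an 8-voxel lesion with 0.5 mL voxels
suv_img = np.full((4, 4, 4), 4.0)
lesion = np.zeros((4, 4, 4), dtype=bool)
lesion[1:3, 1:3, 1:3] = True
tlg, vol = total_lesion_glycolysis(suv_img, lesion, voxel_ml=0.5)
```

Because the mean SUV is computed over the segmented voxels only, TLG inherits any error in the contour, which is one reason the segmentation method matters for these quantitative parameters.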

Notations

$I$: The image set.

$I_{\mathrm{VOI}}$: The VOI in image set $I$ from which the segmented region is taken.

: The segmented region from image set $I$.

$I_i$: The intensity of the $i$th element (image pixel) of image set $I$. This intensity is often normalized to SUV with respect to injected activity and patient weight.

$\xi_i$: Normalized uptake for the $i$th voxel (see Appendix II).

$T$: Threshold, commonly used in threshold segmentation; the value that separates voxels assigned to one set from those assigned to the other.

$T^*$: The estimated segmentation threshold.

$V(T)$: Volume as a function of threshold.

$V_{\mathrm{known}}$: The known volume of a segmented object.

$\mathbf{x}_i$: The position of the $i$th voxel.

: The cluster center of the $k$th cluster at the $n$th iteration.

: The membership probability of the $i$th pixel in the $k$th cluster at the $n$th iteration.

$N$: The number of image sets/modalities.

: The internal/external mean intensities of the region enclosed by the contour at level set $\phi = 0$ in the $i$th image set.

: User‐defined importance weights for inclusion in/exclusion from the region enclosed by the contour at level set $\phi = 0$ in the $i$th image set.

$\phi$: A level set function.

$\Omega$: The domain of the image.

2.A. Possible classifications

The first objective of this document is to provide introductory information about the different classes of PET auto‐segmentation (PET‐AS) algorithms. PET‐AS algorithms can be classified according to several different aspects:

- The segmentation/image processing algorithm employed, with its assumptions and complexity;
- The use of pre‐ and post‐processing steps;
- The level of automation.

The first classification, relying on the type of image segmentation paradigm (e.g., simple or adaptive thresholding, active contours, statistical image segmentation, clustering, etc.), has been used in previous reviews.27, 29, 35, 36 In most cases, detailed descriptions of the numerical algorithms and their assumptions and limitations are given.

The second classification is based on the use of pre‐ and post‐processing steps. Most algorithms do not use pre‐processing steps, although some use either denoising or deconvolution image restoration techniques before the segmentation or as part of the algorithm itself.37, 38 Other algorithms require either an image‐based database39, 40 to build a classifier (i.e., learning algorithms), or phantom acquisitions for the optimization of parameters (i.e., adaptive threshold algorithms).

Regarding the third classification, based on automation, Udupa, et al.41 divide image segmentation into two processes, recognition and delineation, and point to the “essential” need for “incorporation of high‐level expert knowledge into the computer algorithm, especially for the recognition step.” For this reason, most existing algorithms rely on the user first identifying the tumor by drawing a volume of interest (VOI) around the tumor to delineate (denoted from here onwards as “standard user interaction”, see Table 1), whereas other approaches require the tumor to be identified in the resulting map after the segmentation process (e.g., Belhassen, et al.28). Other examples of manual interaction are user definition of background regions (used by some of the adaptive threshold algorithms), manual selection of markers to initialize the algorithm,42 or manual input of parameters in case of failure of the automatic initialization.43 Furthermore, the level of automation can be quite difficult to assess, as factors such as the requirement of building a classifier for each image region, the individual optimization for each combination of scanner system and reconstruction algorithm, the selection and validation of the parameters of the optimization approach, or, finally, the detection of lesions to segment are usually not included in these assessments. In practice, all algorithms require some level of user interaction.

Table 1.

Summary of the main characteristics of some representative advanced PET‐AS algorithms (not an exhaustive list) and their respective evaluation

| Reference(s) | Image segmentation paradigm(s) used | User interaction (a) | Pre‐ and post‐processing steps | Aimed application (b) | Validation data and ground truth (c) | Accuracy evaluation on realistic tumors (d) | Robustness evaluation (e) | Repeatability evaluation (f) |
|---|---|---|---|---|---|---|---|---|
| Tylski, et al. 2006,243 | Watershed | Std + multiple markers placement | None | Global | PA(1): Vol. and CiTu images | No | No | No |
| Werner‐Wasik, et al. 2012,135 | Gradient‐based | Std + initialization using drawn diameters | Unknown | Global | PA(5): Diam.; 31 MCST: Vol. | Yes | Yes | Yes |
| Geets, et al. 2007,37 | Gradient‐based | Std + initialization | Denoising and deconvolution steps | Global | PS(1) and PA(1): Vol. + Diam.; 7 CiTuH: Complete | No | No | No |
| El Naqa, et al. 2008,130 | AT + active contours | Std + several parameters to set | None | Global | PA(1): Vol.; 1 CiTu: Ø | Yes | No | Yes |
| El Naqa, et al. 2007,31 | Multimodal (PET/CT) active contours | Std + initialization of the contour shape, selection of weights | Normalization and registration of PET and CT images, deconvolution of PET images | GTV definition on PET/CT | PA(1): Vol.; 2 CiTu: MC(1), FT | Yes | No | No |
| Dewalle‐Vignion, et al. 2011,140 | Possibility theory applied to MIP projections | Std | None | Global | PA(1): Vol.; 5 MCST: Vox.; 7 CiTuH: Complete | Yes | No | No |
| Belhassen and Zaidi 2010,28 | Improved Fuzzy C‐Means (FCM) | A posteriori interpretation of resulting classes in the segmentation of the entire image | Denoising, wavelet decompositions | Global | 3 AST: Vox.; 21 CiTuH: Diam.; 7 CiTuH: Complete | Yes | No | No |
| Aristophanous, et al. 2007,65 | Gaussian mixture modeling without spatial constraints | Std + initialization of the model and selection of the number of classes | None | Pulmonary tumors | 7 CiTu: Ø | No | No | Yes |
| Montgomery, et al. 2007,64 | Multiscale Markov field segmentation | A posteriori interpretation of resulting segmentation on the entire image | Wavelet decompositions | Global | PA(1): Vol.; 3 CiTu: Ø | No | No | No |
| Hatt, et al. 2007,69 | Fuzzy Hidden Markov Chains | Std | None | Global | PS(1) and PA(2): Vox. | No | No | No |
| Hatt, et al. 2009,68 2010,70 2011,43 | Fuzzy locally adaptive Bayesian (FLAB) | Std | None | Global | PS(1) and PA(4): Vox.; 20 MCST: Vox.; 18 CiTuH: Diam. | Yes | Yes | Yes |
| Day, et al. 2009,60 | Region growing based on mean and SD of the region | Std + optimization on each scanner | None | Rectal tumors | 18 CiTu: MC(1) | No | No | No |
| Yu, et al. 2009,40 Markel, et al. 2013,98 | Decision tree built based on learning of PET and CT textural features | A posteriori interpretation of resulting segmentation on the entire image | Learning for building the decision tree | GTV definition of H&N and lung tumors | 10 CiTu: MC(3); 31 CiTu: MC(3) | No | No | No |
| Sharif, et al. 2010,273 2012,244 | Neural network | A posteriori interpretation of resulting segmentation on the entire image | Learning for building the neural network | Global | PA(1): Vol.; 3 AST: Vox.; 1 CiTuH: Diam. | No | No | Yes |
| Sebastian, et al. 2006,138 | Spherical mean shift | Std | Resampling in a different spatial domain | Global | 280 AST: Vox. | No | No | No |
| Janssen, et al. 2009,152 | Voxel classification based on time‐activity curves | Std + initialization and choice of the number of classes | Only on dynamic imaging; denoising and deconvolution steps | Rectal tumors in dynamic imaging | PA(1): Vol. + Diam.; 21 CiTu: MC(1) | No | No | No |
| De Bernardi, et al. 2010,245 | Combined with PVE (image reconstruction) | Std + initialization | PSF model of the scanner and access to raw data required | Global | PA(1): Vol. | No | Yes | No |
| Bagci, et al. 2013,33 | Multimodal random walk | Std | Multimodal image registration | Global for PET/CT or PET/MR | 77 CiTu: MC(3); PA(1) | Yes | No | No |
| Onoma, et al. 2014,246 | Improved random walk | Std | None | Global | PA(1); 4 AST: Vox.; 14 CiTu: MC(2) | Yes | No | No |
| Song, et al. 2013,32 | Markov field + graph cut | Std | None | Global | 3 CiTu: MC(3) | Yes | No | No |
| Hofheinz, et al. 2013,61 | Locally adaptive thresholding | Std + one parameter to determine on phantom acquisitions | None | Global | 30 AST: Vox. | Yes | No | No |
| Abdoli, et al. 2013,141 | Active contour | Std + several parameters to optimize | Filtering in the wavelet domain | Global | 1 AST: Vox.; 9 CiTuH: Complete; 3 CiTuH: Complete; 2 CiTuH: Complete | No | Yes | No |
| Mu, et al. 2015,247 | Level set combined with PET/CT Fuzzy C‐Means | Std | None | Specific to cervix | 7 AST: Vox.; 27 CiTu: MC(2) | Yes | No | No |
| Cui, et al. 2015,134 | Graph cut improved with topology modeling | Std + one free parameter previously optimized | PET/CT registration | Specific to lung tumors and PET/CT | 20 PA(1); 40 CiTu(2) | Yes | No | No |
| Lapuyade‐Lahorgue, et al. 2015,248 | Generalized fuzzy C‐means with automated norm estimation | Std | None | Global | PA(4): Vol.; 34 MCST: Vox.; 9 CiTu: MC(3) | Yes | Yes | Yes |
| Devic, et al. 2016,249 | Differential uptake volume histograms for identifying biological target sub‐volumes | Selection of three ROIs encompassing PET avid area; iterative decomposition of differential uptake histograms into multiple Gaussian functions | None | Isolation of glucose phenotype driven biological sub‐volumes specific to NSCLC | None | No | No | No |
| Berthon, et al. 2016,73 | Decision tree based learning using nine different segmentation approaches (region growing, thresholds, FCM, etc.) with the goal of selecting the most appropriate method given the image characteristics | Std | Learning on 100 simulated cases to train/build the decision tree | Global | 85 NSTuP: Vox. | Yes | No | No |
| Schaefer, et al. 2016,99 | Consensus between contours from 3 segmentation methods (contrast‐oriented, possibility theory, adaptive thresholding) based on majority vote or STAPLE | Std | None | Global | 22 CiTuH: Complete; 10 CiTu: MC(4); 10 CiTu: MC(1) | Yes | No | Yes |

(a) Std = “standard” interaction (i.e., the metabolic volume of interest is first manually isolated in a region of interest that is used as an input to the algorithm).

(b) Global = not application specific.

(c) PA(x) = Phantom (spheres) Acquisitions on x different scanners; PS(x) = Phantom (spheres) Simulations on x different scanners; AST = Analytically Simulated Tumors; MCST = Monte Carlo Simulated Tumors; NSTuP = Non‐spherical tumors simulated in phantoms (thin‐wall inserts, printed phantoms, etc.); CiTu = Clinical Tumors; CiTuH = Clinical Tumors with Histopathology; Vol. = only volume; Vox. = voxel‐by‐voxel; Diam. = histopathology maximum diameter; Complete = 3D histopathology reconstruction; MC(x) = manual contouring by x experts; FT = fixed threshold; AT = adaptive threshold.

(d) Highly heterogeneous uptake, complex shapes, low contrast, and rigorous ground truth.

(e) Requires multiple acquisitions on different systems and a large number of parameters.

(f) With respect to repeated automated runs or delineation by multiple users.

In the following section, we use the first classification scheme, with emphasis on algorithm complexity.

2.B. Classes of algorithms for PET auto‐segmentation

2.B.1. Fixed and adaptive threshold algorithms

Segmentation via a threshold is conceptually simple. It consists of choosing a specific uptake value (often expressed as a fixed fraction or percentage of SUV) between the background and the imaged object intensities (tracer uptake), and then using that value to partition the image and recover the true object boundaries. All voxels with intensities at or above the threshold are assigned to one set, while the remaining voxels are assigned to the other (Appendix I). The details of how the threshold and the uptake values are normalized are discussed in Appendix II.
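A minimal sketch of the partition just described, in Python with NumPy. It assumes the threshold is given as a fraction of the maximum intensity inside a user‐drawn VOI, optionally measured above a user‐supplied background level; the function name and the background option are illustrative choices, not a specific published algorithm.

```python
import numpy as np


def threshold_segment(image, voi_mask, fraction, background=None):
    """Split the VOI into object (I >= T) and everything else (I < T).

    T is placed at `fraction` of the VOI maximum; if `background` is given,
    the fraction is measured from the background level instead of from zero.
    """
    peak = image[voi_mask].max()
    bg = 0.0 if background is None else float(background)
    t = bg + fraction * (peak - bg)
    obj = voi_mask & (image >= t)  # voxels at or above the threshold
    return obj, t


img = np.array([[1.0, 1.0, 1.0],
                [1.0, 10.0, 5.0],
                [1.0, 4.0, 1.0]])
voi = np.ones_like(img, dtype=bool)
obj42, t42 = threshold_segment(img, voi, fraction=0.42)          # T = 0.42 * 10
obj30, t30 = threshold_segment(img, voi, 0.30, background=1.0)   # T = 1 + 0.30 * 9
```

Even in this toy image, the number of voxels assigned to the object (and hence the volume) changes with the chosen fraction and with whether the background is subtracted first.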

The decision to use threshold segmentation is generally based upon its simplicity and the ease of implementation. Threshold segmentation carries a number of implied assumptions that should be understood and accounted for. These are:

- The true object has a well‐defined boundary and uniform uptake near its boundary, i.e., the image is bimodal.
- The background intensity is uniform around the object.
- The noise in the background and in the object is small compared to the intensity change at the tumor edge.
- The resolution is constant near the edges of the object.
- The model used to define the threshold is consistent with its application; e.g., a segmentation scheme designed for measuring tumor volume may not be appropriate for radiation therapy and vice versa (see section 5.B).

In practice, these assumptions rarely hold and some effort is required to determine their validity/acceptability in the context of the intended application.

Several of the points above are illustrated in a review of PET segmentation by Lee.29 In this review, the effect of the thickness of the phantom wall on estimating the segmentation threshold, and the dependence of this effect on the point spread function (PSF) of the PET scanner, were shown via mathematical analysis. This work also showed that, to obtain the correct threshold on a phantom with cold walls (a certain thickness of material without any uptake, such as the plastic surrounding spheres in physical phantoms), a lower threshold is required than in the case without walls, and that, due to the limited PET spatial resolution, small volumes require higher thresholds. This was further investigated recently, demonstrating the important impact of cold walls on segmentation approaches and the potential improvement brought by thin‐wall inserts.44, 45 A similar result was shown by Biehl, et al.,46 who concluded that for NSCLC the optimal threshold for their specific scanner and protocol was related to volume, as shown in Appendix I. Finally, it is interesting to note that, given knowledge of the local PSF and the assumption of uniform uptake, the threshold for the lesion's boundary can be estimated analytically, with the result being independent of the tumor‐to‐background ratio, provided the background has been subtracted beforehand.47
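Two of these statements (higher thresholds for smaller objects, and independence from contrast once the background is subtracted) can be checked with a one‐dimensional idealization: a uniform object of width w on a uniform background, blurred by a Gaussian PSF of standard deviation σ. This is a simplification assumed here for illustration (1D instead of 3D, no cold walls, no noise), not the analysis of reference 47. The function returns the background‐subtracted intensity at the true edge as a fraction of the background‐subtracted peak, i.e., the threshold that would recover the true width; the 6 mm FWHM is an assumed value.

```python
import math


def blurred_box(x, width, sigma, contrast=1.0, background=0.0):
    """Uniform object on [-w/2, w/2] convolved with a Gaussian PSF (std sigma)."""
    s = sigma * math.sqrt(2.0)
    core = 0.5 * (math.erf((x + width / 2) / s) - math.erf((x - width / 2) / s))
    return background + contrast * core


def edge_threshold(width, sigma, contrast=1.0, background=0.0):
    """Threshold (fraction of the peak above background) that puts the edge at w/2."""
    peak = blurred_box(0.0, width, sigma, contrast, background)
    edge = blurred_box(width / 2, width, sigma, contrast, background)
    return (edge - background) / (peak - background)


sigma = 6.0 / 2.3548  # Gaussian std for an assumed 6 mm FWHM PSF (FWHM = 2.3548 sigma)
for w in (5.0, 10.0, 20.0, 60.0):  # object widths in mm
    print(w, round(edge_threshold(w, sigma), 3))
```

Under these assumptions, the correct threshold tends to 50% of the background‐subtracted peak for objects much larger than the PSF and rises toward 100% as the object shrinks, while changing the contrast or background level leaves it unchanged.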

Threshold segmentation can be loosely divided into two categories: fixed threshold segmentation (FTS) and adaptive threshold segmentation (ATS). In FTS, a general test/model of the problem is developed, and a set of parameters is estimated by minimizing the error of the model to deduce an “optimal” threshold, $T^*$. The threshold value may or may not depend on the tumor‐to‐background ratio (e.g., 42% of peak lesion activity48 or SUV = 2.5,49); other tumor or image aspects are generally ignored. For ATS, an objective function is chosen that generates a threshold based on the properties of each individual tumor/object and PET image. In this case, rather than depending on simple measures such as the tumor‐to‐background ratio, the threshold calculation depends on an ensemble of lesion properties such as volume46, 48, 50, 51, 52 or mean SUV,53 which makes the threshold segmentation process iterative.
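The iterative character of volume‐dependent ATS can be sketched as a fixed‐point loop: segment with the current threshold, measure the volume, and update the threshold from a calibration curve T(V). The calibration used below, T(V) = a − b·log10(V) with V in mL and T as a fraction of the VOI maximum, and its coefficients are hypothetical placeholders; in practice such a curve would be fitted to phantom acquisitions for a given scanner and protocol. The synthetic lesion and voxel size are also assumptions.

```python
import numpy as np


def calib_threshold(volume_ml, a=0.60, b=0.15, lo=0.20, hi=0.90):
    """HYPOTHETICAL calibration T(V) = a - b*log10(V), as a fraction of the VOI maximum.

    a and b are placeholders; real values would come from phantom measurements.
    """
    t = a - b * np.log10(max(volume_ml, 1e-6))
    return float(np.clip(t, lo, hi))


def adaptive_threshold(image, voi_mask, voxel_ml, t0=0.5, tol=1e-6, max_iter=50):
    """Iterate segment -> volume -> threshold until the threshold stops changing."""
    peak = image[voi_mask].max()
    t = t0
    for it in range(1, max_iter + 1):
        mask = voi_mask & (image >= t * peak)
        volume = mask.sum() * voxel_ml
        t_new = calib_threshold(volume)
        if abs(t_new - t) < tol or it == max_iter:
            return mask, t, volume, it  # mask and volume belong to threshold t
        t = t_new


# Smooth synthetic lesion on a background of 1, with 4 mm voxels (0.064 mL)
yy, xx = np.mgrid[-20:21, -20:21]
img = 1.0 + 9.0 * np.exp(-(xx ** 2 + yy ** 2) / (2 * 5.0 ** 2))
voi = np.ones_like(img, dtype=bool)
mask, t_star, vol, n_iter = adaptive_threshold(img, voi, voxel_ml=0.064)
```

Because a higher threshold gives a smaller volume and a smaller volume gives a higher calibrated threshold, the two effects partly cancel and the loop settles on a self‐consistent pair (T*, V) after a few iterations in this example.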

Both FTS and ATS have been discussed in several recent literature reviews of general segmentation in PET.27, 29, 36, 54 Each of these reviews provides a fairly complete literature survey of the state of various threshold segmentation algorithms. In addition, the review by Zaidi and El Naqa54 provides a brief description and summary of the rationale of many ATS algorithms. These are summarized in Table A1 of Appendix I.

2.B.2. Advanced algorithms

Some of the published advanced PET‐AS algorithms are listed in Table 1, with a focus on the evaluation protocols that were followed. Below, they are divided into three subcategories (advanced algorithms applied directly to PET images, approaches combined with image processing or reconstruction, and those dealing with multiple imaging modalities), which are discussed in separate subsections of section 2.B (e.g., “Statistical” and “Learning and texture‐based segmentation algorithms”).

Gradient‐based segmentation

The underlying assumption in threshold‐based delineation (2.B.1) is that the uptake within the target is significantly different from that in the background. With this idea in mind, the gradient naturally finds the transition contour that delineates a high‐uptake volume from the surrounding low uptake regions. The immediate advantage of this alternative method is that uptake inside and outside the target need not be uniform for successful segmentation, nor need it be constant along the contour.

In practice, the method consists of computing the gradient vector for each voxel and then using it to form a new image composed of the gradient magnitude values. Segmentation based on gradient information is an important part of what the human visual system does when looking at natural scenes. The difficulty lies in interpreting the gradient image to translate the relevant information into target contours. The general idea is to locate and follow the crests of the gradient magnitude. The points where the gradient magnitude is largest (where the second derivative, or Laplacian, is zero) correspond to the target contours. There are several ways to locate the crests. For instance, active contours, or “snakes”, with various smoothness constraints can be programmed so that the contours are attracted toward the crests.55 Another very popular way to track the gradient crests is the watershed transform, which treats the gradient image as a landscape in which the gradient crests are mountain chains. It then “floods” the landscape and records the boundaries of all hydrographic basins, which progressively merge as the water level rises. The hierarchy of all basins can be displayed as a tree in a dendrogram. Clustering tools can help identify the branch that gathers all basins corresponding to the target.
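A rough illustration of locating the gradient crest, in Python with NumPy: a uniform disk is blurred by a Gaussian PSF, the gradient magnitude is computed with central differences (`np.gradient`), and the crest is taken as the maximum of that magnitude along rays cast from a user‐chosen center. Ray casting is used here only because it is short; it is neither the active‐contour nor the watershed approach described above, and the disk, PSF width, and number of rays are assumptions. The example is noise‐free; with noise, the gradient maxima would be perturbed, which is why denoising is discussed below.

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian blur with edge padding (NumPy only)."""
    r = int(3 * sigma)
    x = np.arange(-r, r + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    k /= k.sum()
    p = np.pad(img, r, mode="edge")
    p = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, p)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, p)


def gradient_crest_radii(img, center, n_rays=32, max_r=25):
    """Distance from `center` to the gradient-magnitude maximum along each ray."""
    gy, gx = np.gradient(img.astype(float))
    gmag = np.hypot(gx, gy)
    radii = np.arange(1, max_r + 1)
    out = []
    for theta in np.linspace(0.0, 2 * np.pi, n_rays, endpoint=False):
        ys = np.rint(center[0] + radii * np.sin(theta)).astype(int)
        xs = np.rint(center[1] + radii * np.cos(theta)).astype(int)
        out.append(radii[np.argmax(gmag[ys, xs])])  # crest position on this ray
    return np.array(out)


# Uniform disk (radius 12 pixels, uptake 4) on a background of 1, blurred by the PSF
yy, xx = np.mgrid[0:64, 0:64]
disk = np.where((yy - 32) ** 2 + (xx - 32) ** 2 <= 12 ** 2, 4.0, 1.0)
blurred = gaussian_blur(disk, sigma=2.0)
crest = gradient_crest_radii(blurred, center=(32, 32))
```

Along every ray, the crest falls within a pixel or two of the true radius even though the blurred profile has no flat plateau at the edge, which is the property gradient‐based methods rely on.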

The quality of gradient‐based segmentation depends on the accuracy and precision of the gradient information, which can be biased by spatial resolution blur. For objects with a concave or convex surface, the uptake spill‐in and spill‐out caused by blur tends to slightly shift, smooth, and distort the real object boundary. This effect can be partially compensated for with deblurring methods, such as deconvolution algorithms and some tools for Partial Volume Effect (PVE) correction. The gradient computation is affected by the image noise. Therefore, denoising tools are needed as well, provided they do not decrease the image resolution.
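As one example of the deblurring mentioned above, the sketch below applies Richardson–Lucy deconvolution to a noise‐free 1D profile blurred by a known Gaussian PSF. Richardson–Lucy is chosen here only as a familiar deconvolution scheme; the text does not state which method any particular PET‐AS algorithm uses. The PSF width, object, and iteration count are assumptions. With noisy data, the iterations would normally be stopped early or regularized, since deconvolution amplifies noise.

```python
import numpy as np


def gaussian_psf(sigma):
    """Normalized, symmetric 1D Gaussian kernel truncated at 3 sigma."""
    r = int(3 * sigma)
    x = np.arange(-r, r + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def blur(u, psf):
    return np.convolve(u, psf, mode="same")


def richardson_lucy(data, psf, n_iter=50, eps=1e-12):
    """Multiplicative Richardson-Lucy updates, initialized with the measured data."""
    u = data.astype(float).copy()
    psf_adj = psf[::-1]  # adjoint of convolution = convolution with the flipped kernel
    for _ in range(n_iter):
        u *= blur(data / (blur(u, psf) + eps), psf_adj)
    return u


truth = np.ones(64)
truth[30:34] = 10.0               # 4-sample hot object on a background of 1
psf = gaussian_psf(2.0)           # assumed PSF, in samples
measured = blur(truth, psf)       # noise-free blurred profile
restored = richardson_lucy(measured, psf)
```

The restored profile recovers part of the peak lost to blurring and steepens the edges, which makes a subsequent gradient computation less biased; the updates are multiplicative, so the estimate stays non‐negative.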

The algorithm described by Geets, et al.37 relies on deblurring and denoising tools prior to segmentation. The deblurring parameters are adjusted according to the resolution of the PET system and are therefore PET‐camera dependent. The watershed transform is applied to the gradient magnitude image, and a clustering technique creates a hierarchy of basins. The user can then choose the tree branch associated with the high‐uptake region expected to correspond to the target volume. In the case of a low tumor‐to‐background ratio (surrounding inflammation, other causes of tracer concentration, or uptake reduction due to treatment), the hierarchy can become more complicated, and the branch corresponding to the target volume might be difficult to isolate. This usually indicates that the images do not convey enough information for the target volume to be accurately delineated. This approach has been validated using phantom PET acquisitions as well as clinical datasets of both H&N37 and lung56 tumors, with three‐dimensional (3D) histopathology reconstructions as ground truth.

Region growing and adaptive region growing

Region growing algorithms start from a seed region inside the object and progressively add neighboring voxels to the region if they satisfy certain similarity criteria.57, 58, 59 Similarity is often calculated based on image intensity, but can be based on other features such as texture. Let $I(\mathbf{x})$ represent the image intensity at $\mathbf{x}$. The similarity criterion can be a fixed interval, $I(\mathbf{x}) \in [\mathrm{lower}, \mathrm{upper}]$, or a confidence interval, $I(\mathbf{x}) \in [m - f\sigma,\ m + f\sigma]$, where $m$ and $\sigma$ are the mean intensity and standard deviation of the current region, and f is a factor defined by the user.59 Region growing with a fixed interval is essentially a connected threshold algorithm. A small f restricts the inclusion of voxels to those with intensities very similar to the mean of the current region, and thus can result in under‐growth. A large f relaxes the similarity criterion, and thus may result in over‐growth into neighboring regions. It is often difficult, if not impossible, to identify experimentally an optimal f for all objects. For example, Day, et al.60 determined four different f values experimentally with phantoms, based on the maximum intensity and its location, and noted that these f values are specific to their clinic.
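The confidence‐interval criterion can be sketched as follows in Python with NumPy. The region is grown from a seed through face‐connected voxels whose intensities lie in [m − fσ, m + fσ], and m and σ are re‐estimated from the current region on each pass until the region stops changing. The seed neighborhood size, the number of passes, and the synthetic noisy phantom are assumptions for illustration, not the settings of any cited study.

```python
import numpy as np
from collections import deque


def flood_fill(img, seed, lo, hi):
    """Voxels face-connected to `seed` with lo <= I <= hi (the seed itself is always kept)."""
    mask = np.zeros(img.shape, dtype=bool)
    mask[seed] = True
    queue = deque([seed])
    while queue:
        p = queue.popleft()
        for axis in range(img.ndim):
            for step in (-1, 1):
                q = list(p)
                q[axis] += step
                q = tuple(q)
                if 0 <= q[axis] < img.shape[axis] and not mask[q] and lo <= img[q] <= hi:
                    mask[q] = True
                    queue.append(q)
    return mask


def confidence_region_grow(img, seed, f=2.5, seed_radius=2, n_iter=10):
    """Grow with I(x) in [m - f*sd, m + f*sd], re-estimating m and sd from the region."""
    box = tuple(slice(max(s - seed_radius, 0), s + seed_radius + 1) for s in seed)
    values = img[box].ravel()  # initial statistics from the seed neighborhood
    mask = np.zeros(img.shape, dtype=bool)
    for _ in range(n_iter):
        m, sd = values.mean(), values.std()
        new_mask = flood_fill(img, seed, m - f * sd, m + f * sd)
        if np.array_equal(new_mask, mask):
            break  # region is stable
        mask = new_mask
        values = img[mask]
    return mask


rng = np.random.default_rng(0)
yy, xx = np.mgrid[0:64, 0:64]
truth = (yy - 32) ** 2 + (xx - 32) ** 2 <= 10 ** 2
img = np.where(truth, 10.0, 2.0) + rng.normal(0.0, 0.5, truth.shape)
mask = confidence_region_grow(img, seed=(32, 32), f=2.5)
dice = 2 * (mask & truth).sum() / (mask.sum() + truth.sum())
```

Re‐running the example with a small f (e.g., 0.5) would be expected to under‐grow, while a very large f lets the interval reach the background level and the region leaks into it, mirroring the trade‐off described above.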

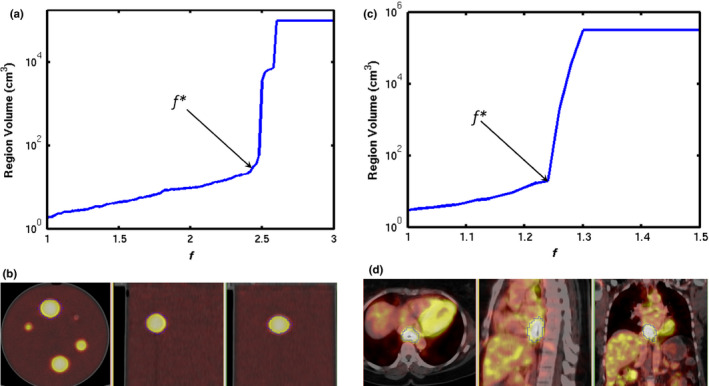

To overcome this limitation, Li, et al.55 proposed an adaptive region growing (ARG) algorithm that automatically identifies f for each specific object in PET. As illustrated in Fig. 1, ARG varies f from small to large values so that the grown volume changes from the small seed region to the entire image. A sharp volume increase occurs at a certain f*, where the region grows just beyond the object (e.g., a high‐activity sphere or tumor) into the background (low‐activity water or normal tissue). Because the background typically consists of large homogeneous regions, a great number of voxels are added to the current region at this transition point. The ARG algorithm automatically identifies the f* for which the volume would increase by more than 200% at the next value of f. The resulting volume V* was shown to represent homogeneous objects quite accurately. The quality of the ARG segmentation depends mainly on the homogeneity of the background and on the contrast between tumor and background; its performance in segmenting tumors with various levels of heterogeneous uptake still needs to be studied. The ARG algorithm has no parameters that require experimental determination: it uses the intrinsic contrast between a tumor and its neighboring normal tissue in each image to determine the tumor boundary. Therefore, it can be applied directly to various imaging conditions, such as different scanners or imaging protocols.
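The transition search at the heart of ARG can be sketched in a few lines. This is a simplified illustration rather than the Li, et al. code: it assumes a 2D image, a 3×3 seed region that is always kept, a hypothetical grid of f values supplied by the caller, and, for brevity, m and σ computed once from the seed region instead of being updated from the current region.

```python
from collections import deque
from statistics import mean, pstdev


def grow_from_seed_region(img, seed, f):
    """Region growing with the interval [m - f*sigma, m + f*sigma].

    Simplification: m and sigma are computed once, from a 3x3 seed region
    around `seed`, rather than updated from the current region.
    """
    h, w = len(img), len(img[0])
    sy, sx = seed
    seed_region = [(y, x)
                   for y in range(max(sy - 1, 0), min(sy + 2, h))
                   for x in range(max(sx - 1, 0), min(sx + 2, w))]
    values = [img[y][x] for y, x in seed_region]
    lo = mean(values) - f * pstdev(values)
    hi = mean(values) + f * pstdev(values)
    region, queue = set(seed_region), deque(seed_region)
    while queue:
        y, x = queue.popleft()
        for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
            if (0 <= ny < h and 0 <= nx < w and (ny, nx) not in region
                    and lo <= img[ny][nx] <= hi):
                region.add((ny, nx))
                queue.append((ny, nx))
    return region


def adaptive_region_growing(img, seed, f_values):
    """Return (f*, V*): the last f before the volume grows by more than 200%."""
    volumes = [len(grow_from_seed_region(img, seed, f)) for f in f_values]
    for i in range(len(f_values) - 1):
        if volumes[i + 1] > 3 * volumes[i]:  # increase of more than 200%
            return f_values[i], volumes[i]
    return None, volumes[-1]  # no transition found in the scanned range
```

On a 15×15 test image with a 7×7 blurred "lesion" (values 10 at the center decreasing to 7 at its edge) on a uniform background of 1, scanning f over 0.5, 1, 2, 4, 8, 16, 32 returns f* = 16 and V* = 49 voxels, i.e., the lesion itself; the next f value floods the whole image.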

Figure 1.

An illustration of applying the adaptive region growing (ARG) algorithm to PET: (a) plot of segmented volume growing as a function of f, the arrow indicates the location of the transition point f* for a spherical lesion in a PET/CT of a phantom; (b) the thin blue contour indicates the delineated volume V*; (c) – (d) selection of f* and the corresponding delineation for an esophageal tumor. [Color figure can be viewed at wileyonlinelibrary.com]

Hofheinz, et al.61 proposed another adaptive region growing approach, designed to deal with heterogeneous uptake distributions. The method is based on an adaptive threshold: instead of a single lesion‐specific threshold for the whole ROI, a voxel‐specific threshold is computed locally, in the close vicinity of each voxel. The absolute threshold for the considered voxel is then obtained from a parameter T previously determined with phantom measurements (T = 0.39) as $T_{\mathrm{abs}} = T \times (R - \mathrm{Bg}) + \mathrm{Bg}$, where R is a tumor reference value (e.g., the ROI maximum) and Bg is the background. Region growing algorithms use statistical properties of the region (mean and standard deviation) to stop the iterative process.60 Algorithms that exploit the statistical properties of noisy data more fully and rely on probabilistic calculations are described in the next subsection.
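The voxel‐specific threshold formula can be applied as in the sketch below. The sketch only shows how the threshold is evaluated and compared per voxel: how the local background Bg is estimated in the vicinity of each voxel is not reproduced, so a background map is passed in by the caller (a hypothetical input), and R is taken as the ROI maximum, one of the options mentioned above.

```python
def absolute_threshold(T, reference, background):
    """T_abs = T * (R - Bg) + Bg for one voxel."""
    return T * (reference - background) + background


def voxel_specific_mask(roi, local_background, T=0.39):
    """Compare each voxel with its own threshold.

    roi: 2D list of uptake values. local_background: 2D list of the same
    shape holding a background estimate Bg for each voxel (its estimation
    is left to the caller here). R is the ROI maximum.
    """
    R = max(max(row) for row in roi)
    return [[v >= absolute_threshold(T, R, bg) for v, bg in zip(vals, bgs)]
            for vals, bgs in zip(roi, local_background)]
```

With R = 10 and T = 0.39, the threshold is 5.12 where Bg = 2 but 6.34 where Bg = 4, so the same uptake value of 6 is kept in the first case and rejected in the second.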

Statistical

Statistical image segmentation: Statistical image segmentation methods classify voxels and create regions in an image or volume based on the statistical properties of these regions and voxels, relying on probabilistic calculations and estimation for the decision process. Numerous approaches have been proposed; most are based on Bayesian inference. In essence, the observed image Y (usually taking its values in the set of real numbers) is assumed to be a noisy and degraded version of a ground truth field X (usually taking its values in a set of classes C). X therefore has to be estimated from Y, assuming that X and Y can be modeled as realizations of random variables. Because the parameters defining the distributions of X and Y are not known in real situations, these algorithms usually combine an iterative procedure for estimating the parameters of interest with a decision step that classifies voxels, i.e., assigns to each voxel a label among the possible values of X based on its observation Y and the estimated distributions of X and Y. Hence, voxel classification is carried out based on the previously estimated statistical properties and the resulting probabilities for each voxel to belong to a specific class or region.

Spatial and observation models: The parameters of interest are usually defined within both a spatial model of X (also called an a priori model) and an observation model of Y (also called a noise model). Most spatial models are based on Markovian modeling of the voxel field, such as Markov chains, fields, or trees, although simpler spatial neighborhood definitions (blind, adaptive, or contextual) also exist.62 Noise models are used to model uncertainty in the decision to classify a given voxel. They are most often defined using Gaussian distributions, but more advanced noise models have also been proposed that can model correlated, multidimensional, and non‐Gaussian noise distributions.63 Parameter estimation is usually carried out using algorithms such as Expectation Maximization (EM), Stochastic EM (SEM), or Iterative Conditional Estimation (ICE), depending on the assumptions of the model. With appropriately selected noise models, these methods have been shown to provide robust segmentation results in several imaging applications, such as astronomical, satellite, and radar imaging.
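The estimation and decision steps can be illustrated with the simplest case: a two‐class Gaussian noise model without any spatial model, with parameters estimated by EM and voxels then assigned to the class of maximum posterior probability. This is a minimal sketch on a 1D list of voxel values under those assumptions (initialization at the data extremes is also an arbitrary choice); it is not FLAB or any of the Markovian methods cited above.

```python
import math


def gaussian_pdf(v, mu, var):
    return math.exp(-(v - mu) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)


def em_two_class(y, n_iter=100, min_var=1e-6):
    """EM for a two-class 1D Gaussian mixture, followed by a MAP decision."""
    n = len(y)
    overall_mean = sum(y) / n
    overall_var = sum((v - overall_mean) ** 2 for v in y) / n
    mu = [min(y), max(y)]              # class 0 = background, class 1 = uptake
    var = [overall_var, overall_var]
    prior = [0.5, 0.5]
    for _ in range(n_iter):
        # E-step: posterior probability of each class for each voxel.
        post = []
        for v in y:
            p = [prior[k] * gaussian_pdf(v, mu[k], var[k]) for k in (0, 1)]
            total = sum(p) or 1e-300   # guard against underflow of both terms
            post.append([pk / total for pk in p])
        # M-step: re-estimate priors, means and variances from the posteriors.
        for k in (0, 1):
            nk = sum(p[k] for p in post)
            prior[k] = nk / n
            mu[k] = sum(p[k] * v for p, v in zip(post, y)) / nk
            var[k] = max(sum(p[k] * (v - mu[k]) ** 2 for p, v in zip(post, y)) / nk,
                         min_var)
    # Decision step: assign each voxel to the class with the highest posterior.
    labels = [max((0, 1), key=lambda k: prior[k] * gaussian_pdf(v, mu[k], var[k]))
              for v in y]
    return mu, var, prior, labels
```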

Adaptation to PET image segmentation: Some of the algorithms above have been applied to PET image segmentation. One example is the use of a multiresolution model applied to a wavelet decomposition of the PET images within a Markov field framework.64 Another approach is a mixture of Gaussian distributions for classification without spatial modeling.65 Although these models are robust for noisy distributions of voxels (each voxel has an assigned label, but its observation is noisy), they do not explicitly take into account the imprecision of the acquired data (a given voxel can contain a mixture of different classes); that is, they do not model the fuzzy nature of PET images. Therefore, to be applied efficiently to PET images, which are not only intrinsically noisy but also blurry due to PVE, more recent models can be used that represent this imprecision within the statistical framework through a combination of “hard” classes and a fuzzy measure. In such a model, the actual image X does not take its values in a finite set of classes but in the continuous interval [0,1], with the Lebesgue measure associated with the open interval (0,1) (the fuzzy part) and Dirac measures associated with {0} and {1} (the hard classes).66 Such a model has been proposed using Markov chains67 and fields,62 and also using local neighborhoods without Markovian modeling. These models retain the flexibility and robustness of statistical and Bayesian algorithms with respect to noise, with the added ability to deal with more complex distributions owing to the presence of both hard and fuzzy classes in the images. The Fuzzy Locally Adaptive Bayesian (FLAB) method68 takes advantage of this model, which had previously been proposed within the context of Markov chains.69 In addition, FLAB has been extended to take heterogeneous uptake distributions into account by considering three classes and their associated fuzzy transitions instead of only two classes and one fuzzy transition. The extended FLAB model has been validated on phantom acquisitions and simulated tumors, as well as on clinical datasets.70
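Written out in symbols (notation chosen here for illustration), the hard and fuzzy parts combine into a single reference measure ν, with respect to which the distribution of a voxel's true value X has a density h: the Dirac masses give the probabilities of the two hard classes, and the Lebesgue part spreads the remaining probability over the fuzzy levels in between.

```latex
\begin{aligned}
\nu &= \delta_{0} + \delta_{1} + \lambda|_{(0,1)},\\
P(X = 0) &= h(0), \qquad P(X = 1) = h(1),\\
P(X \in A) &= \int_{A} h(x)\,\mathrm{d}x \quad \text{for } A \subseteq (0,1),\\
1 &= h(0) + h(1) + \int_{0}^{1} h(x)\,\mathrm{d}x .
\end{aligned}
```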

Learning and texture‐based segmentation algorithms

For PET image segmentation, the learning task consists of discriminating tracer uptake in lesion voxels (foreground) from that in surrounding normal tissue voxels (background), based on a set of features extracted from the images.28 Two common categories of statistical learning approaches have been proposed: supervised and unsupervised.71, 72 Supervised learning estimates an unknown (input, output) mapping from known (labeled) samples called the training set (e.g., classification of lesions given a database of example images). In unsupervised learning, only input samples are given to the learning system, without their labels (e.g., clustering or dimensionality reduction).

Machine learning and classification involve two main steps: training and testing. In the training step, the optimal parameters of the model are determined from the training data, and its best in‐sample performance is assessed. This is usually followed by a validation step aimed at optimal model selection. The testing step then estimates the expected (out‐of‐sample) performance of the selected model with its chosen training parameters. A recent example of such a development is the ATLAAS method,73 an automatic decision tree that selects the most appropriate PET‐AS method based on several image characteristics and achieves significantly better accuracy than any of the PET‐AS methods considered alone. Numerous other machine learning techniques could be applied to PET segmentation, such as random forests, support vector machines, or deep learning techniques,74 which have been applied to image segmentation in other modalities such as MRI or CT.75, 76 Although these approaches are promising for the future of PET image segmentation, their use for PET is currently scarce in the literature.77 To date, they have mainly been exploited to classify patients in terms of outcome based on characteristics extracted from previously delineated tumors.78, 79
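The separation between in‐sample fitting and out‐of‐sample testing can be made concrete with a deliberately tiny example: "training" a fixed‐threshold PET‐AS method by choosing the fraction of the maximum that gives the best mean Dice coefficient on training lesions, then reporting its mean Dice on separate test lesions. The 1D profiles, the candidate fractions, and the use of the Dice coefficient are illustrative assumptions; a validation split would be used in the same way to choose between competing models.

```python
def dice(a, b):
    """Dice similarity coefficient of two binary masks (lists of bools)."""
    overlap = sum(1 for p, q in zip(a, b) if p and q)
    size = sum(a) + sum(b)
    return 1.0 if size == 0 else 2.0 * overlap / size


def threshold_segment(values, fraction):
    """Fixed threshold at a fraction of the maximum value."""
    cut = fraction * max(values)
    return [v >= cut for v in values]


def mean_dice(cases, fraction):
    """Mean Dice over (values, truth_mask) cases for one threshold fraction."""
    return sum(dice(threshold_segment(v, fraction), truth) for v, truth in cases) / len(cases)


def fit_fraction(training_cases, candidates):
    """Training: choose the candidate with the best in-sample mean Dice."""
    return max(candidates, key=lambda f: mean_dice(training_cases, f))
```

Testing then consists of calling `mean_dice(test_cases, fit_fraction(training_cases, candidates))` on lesions that were never used for fitting.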

PET‐AS algorithms can be trained on pathological findings or physician contours. The advantage of training an algorithm using these contours is that additional information, not present in the PET image, is taken into account since the physician draws contours based on additional a priori information (anatomical imaging, clinical data, etc.). On the other hand, training algorithms using physician contours can be biased by the particular physician's background, goals, or misconceptions.

One of the most widely used approaches to extract image features for segmentation is texture analysis. Uptake heterogeneity in PET images can be characterized using regional descriptors such as textures. Unlike intensity or morphological features, textures represent more complex patterns composed of entities or sub‐patterns that have unique characteristics of brightness, color, slope, size, etc.80 “Image texture” can refer to the relative distribution of gray levels within a given image neighborhood. It integrates intensity with spatial information, resulting in higher‐order histograms, in contrast to common first‐order intensity histograms. Texture‐based algorithms rely heavily on image statistical properties; however, because human visual perception often relies on subtle visual properties, such as texture, to differentiate between image regions of similar gray‐level intensity, they are treated separately from the iterative, model‐based approaches described in the previous section.

Furthermore, the human visual system is limited in its ability to distinguish variations in gray tone and is subject to observer bias. Variation in image texture can reflect differences in underlying physiological processes such as vascularity or ordered/disordered growth patterns. Automated computer algorithms that differentiate tumor from normal tissue based on textural characteristics may therefore offer an objective and potentially more sensitive means of tumor segmentation than algorithms based on simple image thresholds. Methods that have been suggested to calculate image texture features include those based on (a) Gabor filters, (b) discrete wavelet transforms (DWT), (c) the co‐occurrence matrix, (d) neighborhood gray‐tone difference matrices (NGTDM), and (e) run‐length matrices.

Gabor filters81 and DWTs82 measure the response of images to sets of filters at varying frequencies, scales, and orientations. The Gabor filter (a Gaussian phasor), using a bank of kernels for each direction, scale, and frequency, can produce a large number of nonorthogonal features, which makes processing and feature selection difficult. DWTs take a multiscale approach to texture description. Orthogonal wavelets are commonly used, resulting in independent features. DWTs, however, have had more difficulty discriminating fractal textures with nonstationary scales.83
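A single level of the simplest orthogonal wavelet, the Haar wavelet, is enough to show how a DWT turns texture into sub‐band features. The sketch assumes a 2D image with even dimensions and uses the energy of each sub‐band as the feature; both the wavelet and the feature are illustrative choices. Because the transform is orthonormal, the sub‐band energies add up to the energy of the image.

```python
import math


def haar_1d(seq):
    """One level of the orthonormal Haar transform of an even-length sequence."""
    s = 1.0 / math.sqrt(2.0)
    low = [(seq[2 * i] + seq[2 * i + 1]) * s for i in range(len(seq) // 2)]
    high = [(seq[2 * i] - seq[2 * i + 1]) * s for i in range(len(seq) // 2)]
    return low, high


def transpose(m):
    return [list(col) for col in zip(*m)]


def haar_2d(img):
    """One decomposition level of a 2D image with even dimensions.

    Keys name the filter applied along x (rows) then along y (columns):
    'LL' approximation, 'HL' responds to changes along x (vertical edges),
    'LH' to changes along y (horizontal edges), 'HH' to diagonal detail.
    """
    row_low, row_high = zip(*(haar_1d(r) for r in img))
    bands = {}
    for xname, part in (("L", row_low), ("H", row_high)):
        col_low, col_high = zip(*(haar_1d(c) for c in transpose(part)))
        bands[xname + "L"] = transpose(col_low)
        bands[xname + "H"] = transpose(col_high)
    return bands


def band_energies(bands):
    """Sum of squared coefficients per sub-band, a common DWT texture feature."""
    return {name: sum(v * v for row in band for v in row) for name, band in bands.items()}
```

Vertical stripes put all detail energy in the 'HL' band and horizontal stripes in the 'LH' band, which is how such features separate oriented textures.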

Features derived from the co‐occurrence matrices proposed by Haralick, et al.,84 also known as spatial gray‐level dependence matrices (SGLDM), are based on statistical properties obtained by counting the number of times pairs of gray values occur next to each other. They are referred to as “second‐order” features because they are based on the relationship between two voxels at a time. The size of a co‐occurrence matrix depends on the number of gray levels within a region. Each entry (i, j) of the matrix is the number of times voxels with gray values i and j occur next to each other at a given distance and angle. Higher‐order features are obtained with techniques that take into account the spatial context of more than two voxels at a time. Amadasun and King proposed several higher‐order features based on the NGTDM.85 For every gray level i, the absolute difference between i and the average gray level of the surrounding neighborhood is summed over every voxel with level i to produce the ith entry of the NGTDM.
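Both matrices can be computed directly from their definitions. The sketch below assumes a 2D image already quantized to integer gray levels 0 to levels − 1; the co‐occurrence matrix is counted for a single offset without symmetrization (many implementations also add its transpose), contrast is shown as one example of a Haralick feature, and, for the NGTDM, border voxels without a complete 8‐neighborhood are skipped.

```python
def glcm(img, levels, dy, dx):
    """Co-occurrence counts C[i][j]: level i at (y, x) and level j at (y+dy, x+dx)."""
    h, w = len(img), len(img[0])
    C = [[0] * levels for _ in range(levels)]
    for y in range(h):
        for x in range(w):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w:
                C[img[y][x]][img[ny][nx]] += 1
    return C


def glcm_contrast(C):
    """Haralick contrast: sum of (i - j)^2 weighted by normalised co-occurrence."""
    total = sum(map(sum, C))
    return sum((i - j) ** 2 * c for i, row in enumerate(C) for j, c in enumerate(row)) / total


def ngtdm(img, levels):
    """s[i]: sum over interior voxels of level i of |i - mean of its 8 neighbours|."""
    h, w = len(img), len(img[0])
    s = [0.0] * levels
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            neighbours = [img[y + a][x + b] for a in (-1, 0, 1) for b in (-1, 0, 1)
                          if (a, b) != (0, 0)]
            s[img[y][x]] += abs(img[y][x] - sum(neighbours) / 8)
    return s
```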

Another category of higher‐order features makes use of “run‐length matrices.” Here, the occurrence of runs of consecutive voxels with the same gray level in a particular direction is analyzed to extract textural descriptors such as energy, homogeneity, and entropy.86 However, run‐length matrices are a computationally intensive means of deriving texture descriptors.86
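A run‐length matrix for one direction, and two classic descriptors computed from it (short‐run and long‐run emphasis), can be sketched as follows. The sketch assumes integer gray levels and considers only the horizontal direction; a full analysis would repeat the count for several directions.

```python
def run_length_matrix(img, levels):
    """Horizontal (0 degree) gray-level run-length matrix.

    R[i][L - 1] counts runs of L consecutive voxels with gray level i along
    rows; img holds integer levels 0..levels-1.
    """
    max_len = len(img[0])
    R = [[0] * max_len for _ in range(levels)]
    for row in img:
        level, length = row[0], 1
        for v in row[1:]:
            if v == level:
                length += 1
            else:
                R[level][length - 1] += 1
                level, length = v, 1
        R[level][length - 1] += 1  # close the last run of the row
    return R


def short_run_emphasis(R):
    """Large when the texture is dominated by short runs (fine texture)."""
    runs = sum(map(sum, R))
    return sum(c / (L + 1) ** 2 for row in R for L, c in enumerate(row)) / runs


def long_run_emphasis(R):
    """Large when the texture is dominated by long runs (coarse texture)."""
    runs = sum(map(sum, R))
    return sum(c * (L + 1) ** 2 for row in R for L, c in enumerate(row)) / runs
```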

Although textural features have been used to characterize uptake heterogeneity within tumors after the segmentation step,15, 79 their use for automatic segmentation can also provide information beyond simple voxel intensity that may improve the robustness of delineation criteria. This has been shown in multiple modalities, including ultrasound (US)87 and MRI.88 PET and CT textures in the lung have been used in a series of applications, including differentiating between malignant and benign nodes,89, 90 judging treatment response,15, 16 diagnosing diffuse parenchymal lung disease,91, 92, 93 and determining tumor staging, detection, and segmentation.94 With dual‐modality PET/CT systems (and PET/MRI in the near future95, 96), it is also possible to combine image textures from PET and CT (or MRI) to improve segmentation results. However, this brings anatomy into tumor volume characterization, instead of characterizing only the functional part of the tumor. In two separate studies, combining PET and CT texture features in images of patients with H&N cancer97 and lung cancer98 improved tumor segmentation with respect to the dual‐modality ground truth, compared with using PET and CT separately. This is discussed in more detail in section 2.B.4 below.

The learning category also includes recent approaches that combine a set of contours generated by multiple automatic methods into a surrogate of truth, through averaging/consensus methods,99 statistical methods such as the “inverse‐ROC (receiver operating characteristic)” approach,100 STAPLE (simultaneous truth and performance level estimation)‐derived methods,101 majority voting,102 or a decision tree.73 Most of these methods require some type of “training,” or preliminary determination of parameters, for the particular type of lesion, and may then overcome the limitations of the individual methods used.
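Majority voting, the simplest of these consensus rules, can be sketched in a few lines. The sketch assumes that the contours from the individual PET‐AS methods have already been converted to binary masks on a common voxel grid and flattened to lists; a voxel is kept when strictly more than half of the methods include it, so ties with an even number of methods are excluded.

```python
def majority_vote(masks):
    """Voxel-wise majority vote over binary masks (flattened lists) from several methods.

    A voxel is kept when strictly more than half of the methods include it.
    """
    n = len(masks)
    return [2 * sum(votes) > n for votes in zip(*masks)]
```

Weighted variants (e.g., STAPLE‐like weighting of each method by its estimated performance) replace the equal votes with learned weights.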

2.B.3. Combined with image processing and/or reconstruction

The limited and variable resolution of PET scanners, which results in anisotropic and spatially variant blur in PET images, leads to PVE, including spill‐in and spill‐out of activity in nearby tissues,17 and is therefore one of the main challenges for segmentation and for uptake quantification of oncologic lesions. In principle, all segmentation strategies not explicitly intended for blurred images but widely used for imaging modalities less affected by PVE than PET (e.g., thresholding, region growing, gradient‐based algorithms)103 can be applied to PET images after a PVE recovery step.104 PVE recovery can be performed after image reconstruction105, 106, 107, 108, 109 or during it, with algorithms that include a model of the scanner PSF.110, 111, 112 These images, however, should be handled with caution, since PVE recovery techniques can introduce artifacts (e.g., a variance increase related to the Gibbs phenomenon). The accuracy of PVE‐recovered images can be improved by introducing regularization such as a priori models, constraints, or iteration stopping rules. Geets, et al.37 (described in section 2.B.2) followed an approach of this kind, applying a gradient‐based segmentation algorithm to deblurred and denoised images. To avoid Gibbs artifacts near the edges, the deconvolution was refined with constraints on the deconvolved uptake.
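As an illustration of post‐reconstruction PVE recovery, the sketch below applies Richardson‐Lucy deconvolution, a classic iterative maximum‐likelihood method for Poisson data, to a 1D activity profile. It is a swapped‐in example, not necessarily the technique of the works cited above, and it assumes a known, shift‐invariant Gaussian PSF. The multiplicative update acts as a built‐in non‐negativity constraint, and stopping after a fixed number of iterations acts as a simple regularizer against noise amplification.

```python
import math


def gaussian_psf(sigma, radius):
    """Normalised, symmetric 1D Gaussian kernel of length 2*radius + 1."""
    k = [math.exp(-0.5 * (i / sigma) ** 2) for i in range(-radius, radius + 1)]
    total = sum(k)
    return [v / total for v in k]


def convolve(signal, kernel):
    """Same-length convolution with zero padding outside the signal."""
    r, n = len(kernel) // 2, len(signal)
    return [sum(kernel[j + r] * signal[i - j] for j in range(-r, r + 1) if 0 <= i - j < n)
            for i in range(n)]


def richardson_lucy(observed, psf, n_iter=50, eps=1e-12):
    """Richardson-Lucy deconvolution of a non-negative 1D profile.

    The multiplicative update keeps the estimate non-negative; n_iter acts
    as an iteration stopping rule (more iterations: sharper but noisier).
    """
    estimate = list(observed)
    for _ in range(n_iter):
        predicted = convolve(estimate, psf)
        ratio = [d / (p + eps) for d, p in zip(observed, predicted)]
        # For a symmetric PSF the adjoint (back-projection) is the same convolution.
        correction = convolve(ratio, psf)
        estimate = [u * c for u, c in zip(estimate, correction)]
    return estimate
```

Applied to a blurred rectangular "lesion" profile, the restored plateau rises back toward its true value while the total activity in the profile is preserved.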

An alternative approach to account for blur is to model it explicitly in the segmentation procedure. For example, FLAB,68 described in section 2.B.2, and FHMC (Fuzzy Hidden Markov Chains)69 parameterize a generic form of uncertainty to assign special intermediate classes to the blurry borders of the main classes. If combined with a post‐segmentation PVE recovery technique for objects of known dimension/shape, such as recovery coefficients, the geometric transfer matrix,17 or VOI‐based deconvolution,113 such algorithms may also be able to provide an estimate of the PVE‐recovered lesion uptake inside the delineated borders.114

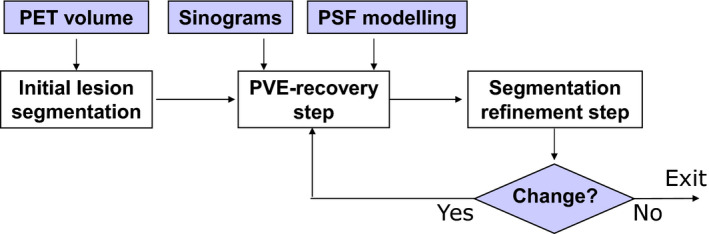

Another means to account for PVE in segmentation is to include its recovery in an iterative process, in which the lesion border estimate is refined using the result of the PVE recovery inside the lesion area, and vice versa. Such an approach can potentially improve estimation accuracy while providing a joint estimate of lesion borders and uptake. This approach was originally proposed by Chen, et al. for spherical objects.115 More recently, De Bernardi, et al. further developed the idea by proposing a strategy that combines segmentation with a PVE recovery step obtained through a targeted maximum likelihood (ML) reconstruction algorithm with PSF modeling in the lesion area.38 A scheme of the approach is shown in Fig. 2.

Figure 2.

A schematic representation of the algorithm proposed by De Bernardi, et al.,38 which combines segmentation and PVE recovery within an iterative process. [Color figure can be viewed at wileyonlinelibrary.com]

To reduce blur, the latter approach employs transition regions between lesion and background. These regions correspond to spill‐out due to PVE and are modeled by regional basis functions in the PVE recovery reconstruction step. The reconstruction adjusts the activity inside each region according to the ML convergence with respect to the sinogram data. The subsequent segmentation refinement step acts on the lesion borders in the improved image, and the process is repeated until the borders no longer change. The algorithm requires a model of the scanner PSF and access to the raw data; in return, it provides a joint estimate of lesion borders and activity.

In the work of De Bernardi, et al.,38 the segmentation was obtained using k‐means clustering and the refinement was achieved by smoothing the result with the local PSF and by re‐segmenting. The algorithm, suited for the simplest case of homogeneous lesions, was validated in a sphere phantom study. More recently, an improved strategy was proposed, in which the segmentation is performed with a Gaussian Mixture Model and PVE recovery is performed on a mixture of regional basis functions and voxel intensities. The algorithm was validated on a phantom in which lesions are simulated with zeolites (see section 4.C.1).116
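The k‐means clustering used for the segmentation step can be sketched on voxel intensities alone. This illustrative sketch covers only that step: it assumes a 1D list of intensities and k = 2 with a deterministic initialization spread over the intensity range, and it includes none of the targeted reconstruction, PSF smoothing, or re‐segmentation of the De Bernardi, et al. method.

```python
def kmeans_1d(values, k=2, max_iter=100):
    """Lloyd's k-means on scalar voxel intensities.

    Returns the cluster centres and the cluster label of each voxel.
    """
    lo, hi = min(values), max(values)
    # Deterministic initialisation: centres spread evenly over the intensity range.
    centres = [lo + (hi - lo) * (c + 0.5) / k for c in range(k)]
    labels = []
    for _ in range(max_iter):
        # Assignment step: nearest centre for every voxel.
        labels = [min(range(k), key=lambda c: abs(v - centres[c])) for v in values]
        # Update step: each centre moves to the mean of its members.
        new = []
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            new.append(sum(members) / len(members) if members else centres[c])
        if new == centres:  # assignments are stable
            break
        centres = new
    return centres, labels
```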

2.B.4. Segmentation of multimodality images

Multimodality imaging is of increasing importance for cancer detection, staging, and monitoring of treatment response.117, 118, 119, 120, 121

In radiotherapy treatment planning, significant variability can occur when multiple observers contour the target volume.122 This inter‐observer variability has been shown to be reduced by combining information from multimodality imaging and performing single delineations on fused images, such as CT and PET, or MRI and PET.25, 123, 124, 125, 126, 127 However, traditional visual assessment of multimodality images is subjective and prone to variation. Alternatively, algorithms have been proposed that integrate complementary information from multimodality images by extending semi‐automated segmentation algorithms into an interactive multimodality segmentation framework to define the target volume.31, 32, 33, 34

Such frameworks are expected to improve the accuracy of the overall segmentation results. As a word of caution, however, the goal of multimodality delineation may differ from that of mono‐modality delineation, and how it is realized depends on the application endpoint and on the clinical rationale for associating the different image modalities. For instance, in radiotherapy planning, the main rationale for combining several images of different modalities to define the GTV is that they complement each other by capturing different aspects of the underlying biology, physiology, and/or anatomy. In reality, however, this may not be the case for all patients and all pathologies; for example, the lesion may not be visible in the additional modality, or that modality may exhibit an artifact. In addition, misregistration between the different modalities and respiratory motion may lead to an erroneous GTV if the images are simply fused without careful consideration of geometric correspondence and of the logic by which the different image data are combined (union, intersection, or other forms of fusion).

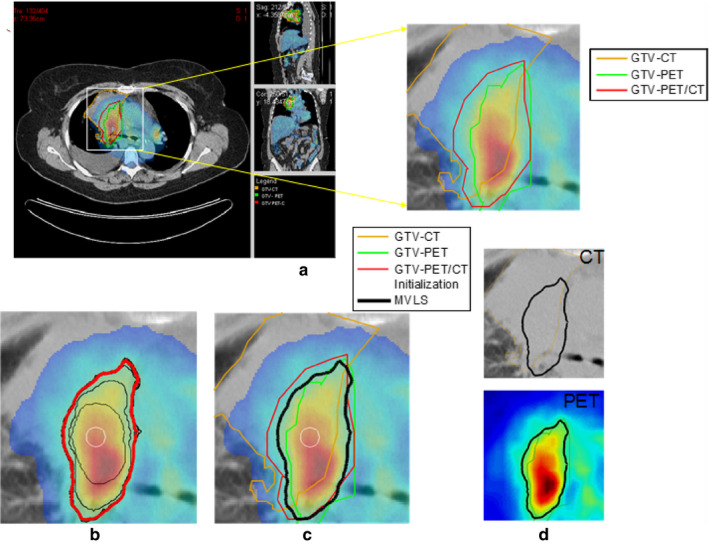

Exploitation of multimodal images for segmentation has been applied to define myocardial borders in cardiac CT, MRI, and ultrasound using a multimodal snake deformable model.128 Another example is the classification of coronary artery plaque composition from multiple contrast MRI images using a k‐means clustering algorithm.129 To define tumor target volumes using PET/CT/MRI images for radiotherapy treatment planning, a multivalued deformable level set approach was used, as illustrated in Fig. 3.31 This approach was later extended using the Jensen‐Rényi divergence as the segmentation metric.34

Figure 3.

(a) PET/CT images of a patient with lung cancer in case of atelectasis (lung collapse), with manual segmentation for CT (orange), PET (green) and fused PET/CT (red). (b) The multivalued level sets (MVLS) algorithm initialized (white circle), evolved contours in steps of 10 iterations (black), and the final contour (red). (c) MVLS results shown along with manual contour on the fused PET/CT. (d) MVLS contour superimposed on CT (top) and PET (bottom). Reproduced with permission from El Naqa, et al.31 [Color figure can be viewed at wileyonlinelibrary.com]

Mathematically, approaches that aim at simultaneously exploiting several image modalities represent a mapping from the imaging space to the “perception” space as identified by experts such as radiation oncologists.130 Several segmentation algorithms are amenable to such generalization,131 including multiple thresholding, clustering (such as k‐means and fuzzy c‐means, FCM), and active contours. In the case of multiple thresholding, CT volumes can be used to guide the selection of PET thresholds,46 or thresholds on the CT intensities can be used to constrain the PET segmentation.131 These conditions are typically developed empirically but could be optimized for a specific application. For clustering, the image intensities and cluster centers are redefined as vectors (whose elements are the intensities in the different modalities) instead of the scalars used for single‐modality images.129 The formalism for FCM is given in Appendix I.B. However, both thresholding and clustering algorithms in their basic form lose spatial connectivity, which active contour models preserve by using a continuous geometric representation such as level sets. The level set provides a continuous implicit representation of geometric models that easily accommodates topological changes and generalizes to different image modalities. For N imaging modalities, the multivalued level set (MVLS) concept132, 133 represents the different modalities through a weighted level set objective functional, and the target boundary is defined at the zero level set31 (Appendix I.B).
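The vector form of clustering can be sketched with fuzzy c‐means applied to per‐voxel (PET, CT) intensity pairs. The formalism of Appendix I.B is not reproduced here; the sketch assumes the standard FCM membership and center updates with fuzzifier m = 2 and a deterministic initialization. The toy intensities are hypothetical, and in practice the modalities would usually be normalized first so that one scale (e.g., CT numbers) does not dominate the distances.

```python
import math


def fuzzy_c_means(points, c=2, m=2.0, n_iter=100, eps=1e-12):
    """Fuzzy c-means on feature vectors, e.g. (PET, CT) intensity pairs per voxel.

    Standard updates: u_ik = 1 / sum_j (d_ik / d_jk) ** (2 / (m - 1)),
    v_i = sum_k u_ik**m x_k / sum_k u_ik**m. Requires c >= 2.
    """
    n, dim = len(points), len(points[0])
    # Deterministic initialisation: c points spread through the input list.
    centres = [list(points[i * (n - 1) // (c - 1)]) for i in range(c)]
    u = []
    for _ in range(n_iter):
        # Membership update from distances to the current centres.
        u = []
        for x in points:
            d = [max(math.dist(x, v), eps) for v in centres]
            u.append([1.0 / sum((d[i] / d[j]) ** (2.0 / (m - 1.0)) for j in range(c))
                      for i in range(c)])
        # Centre update: membership-weighted mean of the feature vectors.
        centres = []
        for i in range(c):
            w = [u[k][i] ** m for k in range(n)]
            centres.append([sum(w[k] * points[k][a] for k in range(n)) / sum(w)
                            for a in range(dim)])
    return centres, u


def hard_labels(u):
    """Defuzzify: assign each voxel to the cluster with the highest membership."""
    return [max(range(len(row)), key=row.__getitem__) for row in u]
```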

Finally, other approaches, based on Markov fields combined with graph‐cut methods,32 random walk segmentation,33 or the inclusion of topology,134 have been developed and validated on clinical images for tumor segmentation in multimodal (PET, CT, MRI) images, with promising results.

2.B.5. Vendor implementation examples

Here, we provide a brief summary of several vendor implementations of PET‐AS methods available when this report was written. Because vendor software evolves constantly, it may not describe the PET‐AS methods provided by all vendors at the time of publication. Vendors also provide tools for manual segmentation; these have been omitted for brevity. Since the exact algorithms implemented by vendors are not known, the summary and classification provided below carry a significant degree of uncertainty.

A gradient‐based edge detection tool is available from MIM Software Inc. (Cleveland, OH; see Section 2.B.2 and Table 1).135, 136 VelocityAI (Varian Medical Systems/Velocity Medical Solutions, Atlanta, GA) also indicates that its tool uses “rates of spatial change” in the segmentation process. PET‐AS methods based on region growing (Section 2.B.2) are available in Mirada XD (Mirada Medical, Oxford, UK) and RayStation (RaySearch Laboratories AB, Stockholm, Sweden).

Adaptive thresholding approaches (Section 2.B.1) are available in VelocityAI (the method by Daisne, et al.137), the GE Healthcare VCAR™ system (V 1.10) (GE Healthcare Inc., Rahway, NJ; the method by Sebastian, et al.,138 see Table 1), and ROVER (ABX GmbH, Radeberg, Germany; an iterative approach following Hofheinz, et al.26, 61).

Finally, practically all vendor implementations provide some type of fixed or adaptive threshold‐based method (Section 2.B.1). For example, Varian's Eclipse V.10 (Varian Medical Systems, Inc., Palo Alto, CA), as well as other vendor implementations including Philips Healthcare Pinnacle™ (Philips Healthcare, Andover, MA) and RayStation, allow users to perform PET segmentation using thresholds in different units (Bq/ml or different SUV definitions) or as a percentage of the peak SUV.
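Fixed thresholds of this kind are simple to express once images are converted to SUV. The sketch below uses the common body‐weight SUV definition, assuming a tissue density of 1 g/ml and decay correction of the injected activity to scan time with the 18F half‐life (about 109.8 min); other SUV definitions normalize differently. The percentage threshold is taken relative to the maximum voxel SUV, whereas vendor "peak" definitions may average over a small region instead.

```python
def suv_bw(concentration_bq_ml, injected_bq, weight_kg,
           minutes_since_injection=0.0, half_life_min=109.8):
    """Body-weight SUV, assuming a tissue density of 1 g/ml.

    The injected activity is decay-corrected to scan time (default: 18F
    half-life of about 109.8 min).
    """
    activity_at_scan = injected_bq * 0.5 ** (minutes_since_injection / half_life_min)
    return concentration_bq_ml / (activity_at_scan / (weight_kg * 1000.0))


def threshold_mask(suv_values, percent_of_max=None, suv_cutoff=None):
    """Fixed-threshold segmentation: a percentage of SUVmax or an absolute SUV."""
    if (percent_of_max is None) == (suv_cutoff is None):
        raise ValueError("specify exactly one of percent_of_max or suv_cutoff")
    if percent_of_max is not None:
        cutoff = percent_of_max / 100.0 * max(suv_values)
    else:
        cutoff = suv_cutoff
    return [v >= cutoff for v in suv_values]
```

For example, `threshold_mask(suv, percent_of_max=40)` reproduces the common "40% of SUVmax" rule, and `threshold_mask(suv, suv_cutoff=2.5)` the absolute "SUV > 2.5" rule compared in Table 2.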

3. Comparison of PET‐AS algorithms based on current publications

A comparison of PET‐AS algorithms based on published reports is difficult and subject to controversy because each algorithm has been developed and validated (and often optimized) on different datasets, often using a single type of scanner and/or processing software. However, some limited conclusions can be drawn. For instance, it is possible to compare algorithms based on their level of validation, as well as algorithms that have been applied to the same datasets. Table 2 contains a survey of papers in which several algorithms were compared, listing the datasets and methods used, the conclusions of each study, and comments.

Table 2.

A summary of segmentation method comparisons and reviews

| No. | Reference | Compared methods | Images or phantoms used | Results and/or recommendations reported by the authors | Limitations and comments by TG211 or others as cited |

|---|---|---|---|---|---|

| Comparison studies | |||||

| 1 | Nestle, et al. 200523 | Visual segmentation, 40% of SUVmax threshold, SUV>2.5 threshold, and an adaptive threshold | Patient scans | Large differences between volumes obtained with the four approaches | Only visual segmentation used as a surrogate of truth and only clinical data. |

| 2 | Schinagl, et al. 2007250 | Visual segmentation, 40% and 50% of SUVmax threshold, and adaptive thresholding | 78 Clinical PET/CT images of head and neck | The five methods led to very different volumes and shapes of the GTV. Fixed threshold at SUV of 2.5 led to the most disappointing results. | The GTV was defined manually on CT and used as a surrogate of truth for PET‐derived delineation and only clinical data was used. |

| 3 | Geets, et al. 2007,37 Wanet, et al. 201156 | Fixed and adaptive thresholding, gradient‐based segmentation | Phantom (spheres), simulated images, clinical images of lung and H&N cancers with histopathology 3D measurements | More accurate segmentation with gradient‐based approach compared to threshold | Issues associated with the 3D reconstruction of the surgical specimen used as gold standard. |

| 4 | Greco, et al. 2008251 | Manual segmentation, 50% SUVmax, SUV>2.5 threshold and iterative thresholding | 12 Head and neck cancer patients. | Thresholding PET‐AS algorithms are strongly threshold‐dependent and may reduce target volumes significantly when compared to visual and physician‐determined volumes. | Reference GTVs defined manually on CT and MRI. |

| 5 | Vees, et al. 2009252 | Manual segmentation, SUV>2.5 threshold, 40% and 50% of SUVmax threshold, adaptive thresholding, gradient‐based method and region growing. | 18 Patients with high grade glioma |

PET often detected tumors that are not visible on MRI and added substantial tumor extension outside the GTV defined by MRI in 33% of cases. The 2.5 SUV isocontour and “Gradient Find” “segmentation techniques performed poorly and should not be used for GTV delineation”. |

Ground truth derived from manual segmentation on MRI. |

| 6 | Belhassen, et al. 2009253 | Three different implementations of the fuzzy C‐means (FCM) clustering algorithm | Patient scans | Incorporating wavelet transform and spatial information through nonlinear anisotropic diffusion filter improved accuracy for heterogeneous cases | No comparison with other standard methods |

| 7 | Tylski, et al. 201042 | Four different threshold methods (% of max activity and three adaptive thresholding), and a model‐based thresholding | Spheres in an antropomorphic torso phantom as well as non spherical simulated tumors | Large differences between volumes obtained with different segmentation algorithms. Model‐based or background‐adjusted algorithms performed better than fixed thresholds. | No clinical data, limited to threshold‐based algorithms only, only volume error considered as a metric |

| 8 | Hatt, et al. 2010,70 201143, 143 | Fixed and adaptive thresholding, Fuzzy C‐means, FLAB | IEC phantom (spheres); simulated images, clinical images with maximum diameter measurements in histopathology | Advanced algorithms are more accurate compared to threshold‐based and are also more robust and repeatable. | For clinical images, only maximum diameters along one axis were available from histology. |

| 9 | Dewalle‐Vignion, et al. 2011,140 2012254 | Manual segmentation, 42% of SUVmax, two different adaptive thresholding, fuzzy c‐mean and an advanced method based on fuzzy set theory. | Phantom images, simulated images, clinical images with manual delineations | The advanced algorithm is more accurate and robust than threshold‐based and closer to manual delineations by clinicians |

Only manual delineation for surrogate of truth of clinical data. Comments: Interesting use of various metrics for assessment of image segmentation accuracy. |

| 10 | Werner‐Wasik, et al. 2012135 | Manual segmentation, fixed thresholds at 25% to 50% of SUVmax (by 5% increments) and gradient‐based segmentation | IEC phantom (spheres) in multiple scanners, simulated images of lung tumors | A gradient‐based algorithm is more “accurate and consistent” than manual and threshold segmentation. | Only volume error used as a metric of performance. Comment: The need for joint manual and CT‐based verification by nuclear medicine physicians, radiologists, and radiation oncologists was highlighted in follow‐up communications.255 |

| 11 | Zaidi, et al. 2012139 | Five thresholding methods, standard and improved fuzzy c‐means, level set technique, stochastic EM | Patient scans with histopathology 3D measurements (same as #5 above) | The automated fuzzy c‐means algorithm was shown to be more accurate than five thresholding algorithms, the level set technique, the stochastic EM approach and regular FCM. Adaptive threshold techniques need to be calibrated for each PET scanner and acquisition/processing protocol and should not be used without optimization. | Issues associated with the 3D reconstruction of the surgical specimen used as gold standard. See Table 1 |

| 12 | Shepherd, et al. 2012100 | 30 methods from 13 different groups. | Tumor and lymph‐node metastases in H&N cancer and physical phantom (irregular shapes). Simulated, experimental and clinical studies | Highest accuracy is obtained from an optimal balance between interactivity and automation. Further improvements are seen from visual guidance by PET gradients and from the use of CT. | Only a small number of objects (n = 7) was used for the evaluation. |

| 13 | Schaefer, et al. 2012148 | One adaptive thresholding technique | Phantoms, same threshold algorithm, different scanners | The calibration of an adaptive threshold PET‐AS algorithm depends on the scanner and on the image analysis software. | Confirmation of previous findings about adaptive threshold segmentation. |

| 14 | Schinagl, et al. 2013256 | Visual, SUV of 2.5, fixed thresholds of 40% and 50%, and two adaptive threshold‐based methods using either the primary tumor or the metastasis as reference | Evaluation of the segmentation of metastatic lymph nodes against pathology in 12 head and neck cancer patients | SUV of 2.5 was unsatisfactory in 35% of cases; for the last four methods, (i) using the node as a reference gave results comparable to visual delineation and (ii) using the primary as a reference gave poor results. | Shows the limitations of threshold‐based methods. |

| 15 | Hofheinz, et al. 2013,61 | Voxel‐specific adaptive thresholding and standard lesion‐specific adaptive threshold | 30 simulated images based on real clinical datasets. | The voxel‐specific adaptive threshold method was more accurate than the lesion‐specific one in heterogeneous cases | Only simulated data were used. |

| 16 | Lapuyade‐Lahorgue, et al. 2015248 | Improved generalized fuzzy c‐means, fuzzy local information C‐means and FLAB61 (Table 1) | 34 simulated tumors and nine clinical images with consensus of manual delineations. Three phantom acquisitions for evaluation of robustness. | In both simulated and clinical images, the improved generalized FCM led to better results than another FCM implementation and FLAB, especially on complex and heterogeneous tumors, without any loss of robustness on data acquired in different scanners. | Only nine clinical images used. |

| Reviews | | | | | |

| 1 | Boudraa, et al. 200627 | N/A | Mostly clinical images | Extensive review of the formalism of image segmentation algorithms used in nuclear medicine (not specific to PET and clinical oncology) | Only a few algorithms have been rigorously validated for accuracy, repeatability and robustness. |

| 2 | Lee, 201029 | N/A | Simulated and mostly clinical images | Discussed the main caveats of threshold‐based techniques, including the effect of phantom cold walls on the threshold. A discussion of the available and desirable validation datasets and approaches is also included. | Extensive review of nuclear medicine image segmentation algorithms (not specific to PET and clinical oncology) |

| 3 | Zaidi and El Naqa, 201054 | N/A | Simulated, experimental and clinical studies | Despite being promising, advanced PET‐AS algorithms are not used in the clinic. | |

| 4 | Hatt, et al. 201143 | N/A | N/A | Only a few algorithms have been rigorously validated for accuracy, repeatability and robustness. | See the three last columns of Table 1 |

| 5 | Kirov and Fanchon, 2014,176 | N/A | Clinical images with pathology derived ground truth. | Articles comparing PET‐AS methods are summarized separately for lesions in five groups based on location in the body with a focus on the accuracy, usefulness and the role of the pathology‐validated PET image sets. | |

| 6 | Foster, et al. 201436 | N/A | N/A | “although there is no PET image segmentation method that is optimal for all applications or can compensate for all of the difficulties inherent to PET images, development of trending image segmentation techniques which combine anatomical information and metabolic activities in the same hybrid frameworks (PET‐CT, PET‐CT, and MRI‐PET‐CT) is encouraging and open to further investigations.” | Most exhaustive review of the state‐of‐the‐art in 2014. Uses a similar classification of methods as in the present report. |

Most of the algorithms have been optimized or validated on phantom acquisitions of spheres, since such phantoms are commonly used in PET imaging to evaluate the sensitivity, noise properties, and spatial resolution of scanners. Most algorithms give satisfactory results on these phantom acquisitions, even for varying levels of noise and contrast. However, homogeneous spheres on a homogeneous background are not realistic tumors. The number of algorithms that have been successfully applied to realistic simulated tumors or to real clinical tumors with an acceptable surrogate of truth (e.g., histopathological measurements) is much smaller. Finally, algorithms that have been validated for robustness across several scanner models and their associated reconstruction algorithms are even fewer, since such datasets are not usually made publicly available.

It should also be emphasized that a few algorithms have been applied to common (although not publicly available) datasets. For instance, the gradient‐based algorithm by Geets, et al.,37 the improved fuzzy c‐means (FCM) by Belhassen and Zaidi,28, 139 the theory of possibility applied to maximum intensity projections (MIP) by Dewalle‐Vignion, et al.140 and the contourlet‐based active contour model by Abdoli, et al.141 have all been applied to a dataset of seven patients with 3D reconstruction of the surgical specimen in histology (from a dataset of nine patients originally obtained in a study by Daisne, et al.142), with mean volume errors of 19 ± 22%, 9 ± 28%, 17 ± 13% and 0.29 ± 0.6%, respectively. Similarly, the improved fuzzy c‐means by Belhassen, et al.,28 FLAB by Hatt, et al.70, 143 and the level sets and Jensen‐Rényi divergence algorithm by Markel, et al.34 were applied to the NSCLC tumor dataset with maximum diameters from MAASTRO (Maastricht Radiation Oncology),124 with errors of ± 6% for FLAB, ± 15% for the improved FCM and ± 14.8% for the level sets approach. In addition, most of the advanced algorithms proposed so far have been compared to some form of fixed and/or adaptive thresholding on their respective test datasets and have, for the most part, demonstrated improvements in accuracy and robustness. In particular, it was observed that fixed and adaptive thresholding can lead to errors exceeding 100% for small and/or low‐contrast objects and to significant underestimation (−20 to −100%) for larger volumes with more heterogeneous uptake distributions, whereas advanced methods achieved more satisfactory error rates (around or below 10 to 20%).143, 144 However, simpler PET‐AS methods, e.g., adaptive thresholding optimized for a specific body site, may perform comparably to, or even better than, some of the more advanced techniques.145
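The volume errors quoted above are summarized as mean ± standard deviation across lesions. The sketch below shows that arithmetic, assuming signed relative errors 100 × (V_seg − V_true)/V_true and a sample standard deviation; the cited studies may define their error figures differently, and the function names and example volumes are hypothetical.

```python
import numpy as np


def relative_volume_errors(segmented_ml, true_ml):
    """Signed percent volume error per lesion: 100 * (V_seg - V_true) / V_true."""
    seg = np.asarray(segmented_ml, dtype=float)
    true = np.asarray(true_ml, dtype=float)
    return 100.0 * (seg - true) / true


def summarize(errors):
    """Mean and sample standard deviation (ddof=1) of the percent errors."""
    errors = np.asarray(errors, dtype=float)
    return errors.mean(), errors.std(ddof=1)


# Hypothetical volumes (mL) for three lesions; not data from the cited studies.
errors = relative_volume_errors([12.0, 4.5, 30.0], [10.0, 5.0, 30.0])  # [20, -10, 0]
mean, sd = summarize(errors)
print(f"{mean:.1f} ± {sd:.1f}%")  # 3.3 ± 15.3%
```

With these hypothetical volumes the mean error is small although individual lesions are off by +20% and −10%, which is why the spread must be reported alongside the mean.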

In the largest comparison to date, Shepherd, et al.100 segmented 7 VOIs in PET using variants of threshold‐, gradient‐, hybrid image‐, region growing‐, and watershed‐based algorithms, as well as more complex pipeline algorithms. Along with manual delineations, a total of 30 distinct segmentations were performed per VOI and grouped according to type and dependence upon complementary information from the user and from simultaneous CT. According to a statistical accuracy measure that accounts for uncertainties in ground truth, the most promising algorithms within the wider field of computer vision were a deformable contour model using energy minimization techniques, a fuzzy c‐means (FCM) algorithm, and an algorithm that combines variants of region growing and the watershed transform. Another important finding was that user interaction generally improved segmentation accuracy, highlighting the need to incorporate expert human knowledge; interaction was in turn more effective when guided by visualization of PET gradients or of CT from PET‐CT hybrid imaging.
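Fuzzy c‐means appears repeatedly in the comparisons above. As a reference point, the following is a minimal sketch of standard FCM on voxel intensities alone, assuming two clusters (lesion and background) and a fuzzifier m = 2. The improved variants cited above add spatial information, wavelet or anisotropic diffusion filtering, or automatic cluster selection, none of which is reproduced here; the function names are hypothetical.

```python
import numpy as np


def fuzzy_c_means_1d(x, n_clusters=2, m=2.0, n_iter=100, tol=1e-6, seed=0):
    """Standard FCM on voxel intensities. Returns (centers, memberships[c, n])."""
    x = np.asarray(x, dtype=float).ravel()
    rng = np.random.default_rng(seed)
    u = rng.random((n_clusters, x.size))
    u /= u.sum(axis=0, keepdims=True)  # each voxel's memberships sum to 1
    for _ in range(n_iter):
        um = u ** m
        centers = um @ x / um.sum(axis=1)  # membership-weighted cluster means
        dist = np.abs(x[None, :] - centers[:, None]) + 1e-12  # avoid division by zero
        # u_ik = 1 / sum_j (d_ik / d_jk) ** (2 / (m - 1))
        ratio = (dist[:, None, :] / dist[None, :, :]) ** (2.0 / (m - 1.0))
        u_new = 1.0 / ratio.sum(axis=1)
        converged = np.max(np.abs(u_new - u)) < tol
        u = u_new
        if converged:
            break
    return centers, u


def fcm_segment(image, **kwargs):
    """Label as lesion the voxels whose highest membership is the brightest cluster."""
    centers, u = fuzzy_c_means_1d(image, **kwargs)
    lesion_cluster = np.argmax(centers)
    labels = np.argmax(u, axis=0)
    return (labels == lesion_cluster).reshape(np.shape(image))


# Toy 2x4 "image": background near 1, lesion near 5.
img = np.array([[1.0, 1.1, 0.9, 1.0], [5.0, 5.2, 4.8, 1.05]])
print(fcm_segment(img).astype(int))
```

Using intensities only makes this sketch sensitive to noise and heterogeneous uptake, which is precisely what the spatially regularized variants in the cited studies aim to address.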

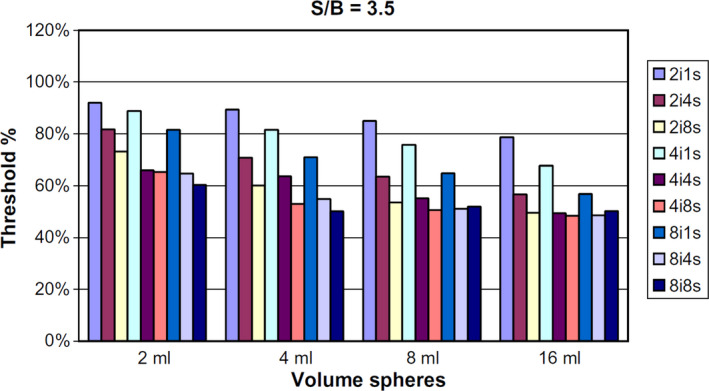

There is little information to date comparing the performance of an algorithm across datasets from different scanners and/or across implementations of that algorithm in different software packages. In one study,146 an adaptive threshold segmentation algorithm was applied in three centers using two similar types of scanners from the same manufacturer. The authors observed significant differences in the optimal threshold values depending on the center and imaging protocol, even when the scanner and reconstruction method were the same. Significant differences were also observed depending on the reconstruction settings (Fig. 4). They concluded that synchronizing imaging protocols can facilitate contouring activities between cooperating sites. In another investigation, the segmentation threshold that yields the correct sphere volume was also found to depend on the reconstruction algorithm for small spheres.147

Figure 4.

Variation in the optimal threshold value (y axis) for different PET reconstruction settings with varying numbers of iterations and subsets (from two iterations and one subset to eight iterations and eight subsets; colored bars), for spheres of different volumes (x axis) and a sphere‐to‐background ratio of 3.5, for a single scanner model. Reproduced with permission from Ollers, et al.146
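The dependence of the optimal threshold on image resolution and sphere size can be explored qualitatively in a noise‐free toy simulation. The sketch below assumes a uniform sphere with a sphere‐to‐background ratio of 3.5 blurred by an isotropic Gaussian point spread function, whose width stands in for the effect of different reconstruction settings. It ignores noise, cold phantom walls and the behavior of real iterative reconstruction, so it is not a model of the cited measurements; all names and parameter values are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def blurred_sphere(radius_vox, sbr, sigma_vox, shape=(64, 64, 64)):
    """Toy phantom: uniform sphere (activity = sbr) in unit background, Gaussian-blurred.

    Returns the blurred image and the true sphere volume in voxels.
    """
    zz, yy, xx = np.indices(shape)
    center = [(s - 1) / 2.0 for s in shape]
    r2 = (zz - center[0]) ** 2 + (yy - center[1]) ** 2 + (xx - center[2]) ** 2
    inside = r2 <= radius_vox ** 2
    image = np.where(inside, float(sbr), 1.0)
    return gaussian_filter(image, sigma=sigma_vox), int(inside.sum())


def optimal_threshold(image, true_voxels, fractions=np.arange(0.20, 0.80, 0.005)):
    """Fraction of the image maximum whose thresholded volume best matches the true volume."""
    vmax = image.max()
    volumes = np.array([(image >= f * vmax).sum() for f in fractions])
    best = np.argmin(np.abs(volumes - true_voxels))
    return float(fractions[best])


# Small sphere with increasing blur as a stand-in for different reconstruction settings.
for sigma in (1.0, 2.0, 3.0):
    img, n_true = blurred_sphere(radius_vox=4, sbr=3.5, sigma_vox=sigma)
    print(f"sigma={sigma}: optimal threshold = {optimal_threshold(img, n_true):.3f} of max")
```

Repeating the loop for several sphere radii gives a toy analogue of the bars in Fig. 4, which illustrates why a threshold calibrated under one set of conditions should not be transferred to another without re‐optimization.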

In a German multicenter study,148 Schaefer, et al. evaluated the calibration of their adaptive threshold algorithm (contrast‐oriented algorithm) for FDG PET‐based delineation of tumor volumes in eleven centers, using three different scanner types from two vendors. They observed only minor differences in calibration parameters for scanners of the same type, provided that identical imaging protocols were used, whereas significant differences were found between scanner types and vendors. After calibrating the algorithm for all three scanners, the calculated SUV thresholds for auto‐contouring did not differ significantly.
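Background‐adjusted adaptive thresholds of this kind are typically calibrated by fitting a small number of parameters to phantom spheres of known volume, separately for each scanner and protocol. The sketch below assumes a generic linear form T = a·S + b·B, where S is a lesion uptake statistic and B the background level, fitted by least squares. It illustrates the calibration step only and is not the published contrast‐oriented algorithm, whose exact formulation and parameters are not reproduced here; the function names and the synthetic calibration points are hypothetical.

```python
import numpy as np


def calibrate_linear_threshold(lesion_stat, background, optimal_threshold):
    """Least-squares fit of T = a * S + b * B for one scanner/protocol combination.

    lesion_stat:       lesion uptake statistic S per phantom sphere (assumed definition)
    background:        background activity B per phantom sphere
    optimal_threshold: absolute threshold T that reproduced each sphere's true volume
    """
    A = np.column_stack([lesion_stat, background]).astype(float)
    y = np.asarray(optimal_threshold, dtype=float)
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return float(coef[0]), float(coef[1])


def apply_threshold(image, lesion_stat, background, a, b):
    """Binary mask of voxels at or above the calibrated threshold."""
    return np.asarray(image, dtype=float) >= a * lesion_stat + b * background


# Synthetic calibration points generated from a = 0.40, b = 0.60 (not measured data).
S = np.array([4.0, 6.0, 8.0, 10.0])
B = np.array([1.0, 1.0, 2.0, 2.0])
T_opt = 0.40 * S + 0.60 * B
a, b = calibrate_linear_threshold(S, B, T_opt)
print(f"a={a:.2f}, b={b:.2f}")  # a=0.40, b=0.60
```

Repeating the fit with optimal thresholds measured on a different scanner type would generally yield a different (a, b) pair, so the calibration has to be redone whenever the scanner or protocol changes.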

On the other hand, the FLAB algorithm by Hatt, et al. was robust to scanner type and performed well without pre‐optimization on four different scanners from three vendors (Philips GEMINI GXL and GEMINI TF, Siemens Biograph 16 and GE Discovery LS), over a large range of acquisition parameters such as voxel size, acquisition duration, and sphere‐to‐background contrast.43

While the natural incentive is to create algorithms that perform universally well across body sites and disease types, for at least one body site it was shown145 that simpler (e.g., adaptive threshold) methods may perform comparably well if specifically optimized for these conditions. At present, there is not a sufficient amount of published data to give specific recommendations for each clinical site. Emerging consensus‐based99 and decision‐tree‐based73 methods, however, could provide an adequate solution for each site if appropriately adapted and trained.
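One simple way to combine several PET‐AS contours is voxel‐wise majority voting. The sketch below shows only that rule; the cited consensus approach may use a different fusion scheme (for example, STAPLE‐type weighting), and decision‐tree methods select one method per case rather than fusing masks. The function name is hypothetical.

```python
import numpy as np


def majority_vote(masks, min_fraction=0.5):
    """Voxel-wise consensus: keep voxels labelled lesion by more than min_fraction of methods."""
    stack = np.stack([np.asarray(m, dtype=bool) for m in masks])
    return stack.mean(axis=0) > min_fraction


# Three hypothetical binary segmentations of the same four voxels.
m1 = np.array([1, 1, 0, 0], dtype=bool)
m2 = np.array([1, 0, 1, 0], dtype=bool)
m3 = np.array([1, 1, 1, 0], dtype=bool)
print(majority_vote([m1, m2, m3]))
```

The strict inequality means that an exact tie (e.g., one of two methods) is excluded from the consensus; a trained scheme would instead weight each method by its estimated reliability for the given site.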

Given the above results, the validation of PET‐AS algorithms, as described in current publications, does not provide sufficient information regarding which of the known approaches would be most accurate, applicable, or convenient for clinical use. In the following sections, we attempt to lay the basis for a framework that avoids the methodological weaknesses of the past and addresses the challenges inherent in segmentation in PET.

4. Components of an evaluation standard

A main conclusion of the work of this task group is that a common and standardized evaluation protocol or “benchmark” to assess the performance of PET‐AS methods is needed. The design of such a protocol requires:

Selection of evaluation endpoint and definition of performance criteria;

Selection of a set of images;

Selection of contour evaluation tools.
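Several comparisons in the table above were limited because only volume error was used as a metric. A minimal sketch of one common overlap measure, the Dice similarity coefficient 2|A ∩ B|/(|A| + |B|), shows why: two contours can have identical volumes yet overlap poorly. The function name and example masks are hypothetical, and Dice is only one of several candidate contour evaluation tools.

```python
import numpy as np


def dice(a, b):
    """Dice similarity coefficient between two binary masks (1.0 if both are empty)."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0
    return float(2.0 * np.logical_and(a, b).sum() / denom)


# Same volume (2 voxels each), so the volume error is 0%, but only one voxel overlaps.
a = np.array([1, 1, 0, 0], dtype=bool)
b = np.array([0, 1, 1, 0], dtype=bool)
print(dice(a, b))  # 0.5
```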

4.A. Evaluation endpoints