Abstract

Humans commonly operate within 3D environments such as multifloor buildings, and yet there is a surprising dearth of studies that have examined how these spaces are represented in the brain. Here, we had participants learn the locations of paintings within a virtual multilevel gallery building and then used behavioral tests and fMRI repetition suppression analyses to investigate how this 3D multicompartment space was represented, and whether there was a bias in encoding vertical and horizontal information. We found faster response times for within-room egocentric spatial judgments and behavioral priming effects of visiting the same room, providing evidence for a compartmentalized representation of space. At the neural level, we observed a hierarchical encoding of 3D spatial information, with left anterior hippocampus representing local information within a room, while retrosplenial cortex, parahippocampal cortex, and posterior hippocampus represented room information within the wider building. Of note, both our behavioral and neural findings showed that vertical and horizontal location information was similarly encoded, suggesting an isotropic representation of 3D space even in the context of a multicompartment environment. These findings provide much-needed information about how the human brain supports spatial memory and navigation in buildings with numerous levels and rooms.

Keywords: 3D space, compartmentalized, fMRI, hippocampus, isotropic

Introduction

We live in a 3D world. The ideal method to enable navigation in 3D space would be to have a 3D compass or global positioning system (GPS) that identifies direction and distance in relation to all 3 axes in an isotropic manner. Such a 3D compass system would seem to be essential for animals that fly or swim. Indeed, place cells and head direction cells recorded in flying bats were sensitive to all 3 axes (Yartsev and Ulanovsky 2013; Finkelstein et al. 2014). Behavioral experiments with fish also indicated a volumetric 3D representation of space (Burt de Perera et al. 2016). However, as suggested by Jeffery et al. (2015), extending spatial encoding from 2D to 3D space comes with complications such as the noncommutative property of 3D rotation, and a fully volumetric representation of 3D space might be costly and unnecessary for certain environments and species. When gravity restricts an animal’s movement to the Earth’s surface, its position can be identified by 2 coordinates on that surface, and a quasiplanar representation could be more efficient than a volumetric 3D representation.

Multifloor buildings are the most common type of working and living space for humans today. Regionalization is a key characteristic of these environments: multiple floors are stacked on top of each other, and multiple rooms are located side by side on each floor. When we navigate within multifloor buildings, we can use hierarchical planning rather than a 3D vector shortcut or a volumetric 3D map. For example, we decide which floor to go to (“second floor”), then which room on that floor (“the first room nearest the stairs”), and then the location within the room (“the inside left corner of the room”). Regionalization and hierarchical representation of space at multiple scales have been consistently observed (Hirtle and Jonides 1985; Han and Becker 2014; Balaguer et al. 2016), but it is not fully understood how spatial information at multiple scales is encoded at the neural level, particularly in a 3D context.

One obvious question is whether a common neural representation is used for each compartment (room). In an environment with repeating substructures, using a generalized code to register local information is more efficient than assigning a unique code to every location in the entire environment. Moreover, a common local representation can be seen as a “spatial schema” that captures the essence of an environment and helps future learning of relevant environments or events (Tse et al. 2007; Marchette et al. 2017). The retrosplenial cortex (RSC) and hippocampus are candidate brain regions for the encoding of within-compartment, local information. Place cells in the hippocampus are known to repeat their firing fields in a multicompartment environment (Derdikman et al. 2009; Spiers et al. 2015). Moreover, human fMRI studies have shown that the hippocampus contains order information that generalizes across different temporal sequences (Hsieh et al. 2014), and the RSC contains location codes that generalize across different virtual buildings (Marchette et al. 2014).

Another question is where in the brain the position of each compartment within the larger environment is represented, complementing the local within-room information. To the best of our knowledge, no study has simultaneously interrogated the neural representation of local spatial information and of the compartment itself. The hippocampus might contain both types of information. It has been suggested that the hippocampus represents spatial information at multiple scales along its long axis. For example, place fields are larger in ventral than in dorsal hippocampus in rats (Kjelstrup et al. 2008). In a human fMRI study, increased activation in posterior (dorsal) hippocampus was associated with a fine-grained spatial map, whereas anterior (ventral) hippocampus was linked with coarse-grained encoding (Evensmoen et al. 2015).

It is also interesting to consider whether vertical and horizontal information is equally well encoded in a 3D environment. In other words, when one room is located directly above another, are the 2 rooms as distinguishable as 2 rooms that are side by side on the same floor? At the neural level in rats, place cells and grid cells show vertically elongated firing fields, implying reduced encoding of vertical information, and it has been proposed that this is evidence of a quasiplanar representation of 3D space (Hayman et al. 2011; Jeffery et al. 2013). However, this asymmetry could have arisen from the repeating shape of the environment. By contrast, Kim et al. (2017) found that the human hippocampus encoded vertical and horizontal location information equally well in a 3D virtual grid-like environment during fMRI scanning.

Behaviorally, there are mixed results in the literature in relation to vertical–horizontal symmetry/asymmetry. In a study by Grobéty and Schenk (1992), rats located the vertical coordinate of a goal earlier than the horizontal coordinate, whereas in Jovalekic et al. (2011), rats prioritized horizontal movements and foraged at the horizontal level before moving to the next level. In humans, a group who learned the location of objects in a virtual multifloor building along a floor route had, overall, better spatial memory than a group who learned along a vertical columnar route, suggesting a bias towards a floor-based representation (Thibault et al. 2013). However, another study found that twice as many participants described a columnar representation of a building as described a floor representation (Büchner et al. 2007).

Considering active navigation in real buildings, Zwergal et al. (2016) found that participants performed better during horizontal navigation than during navigation across multiple floors. However, this difference could have arisen from reduced attention to visual landmarks during vertical navigation. It has also been reported that dogs correctly remembered horizontal locations within a floor but not the vertical floor itself (Brandt and Dieterich 2013). However, it is not clear whether the dogs’ navigation errors were due to inherent differences in vertical and horizontal encoding, or to unequal landmark information resulting from a lack of visual and odour controls in this study. Hummingbirds have been reported to be more accurate for vertical than for horizontal locations in a cubic maze, whereas rats showed the opposite pattern (Flores-Abreu et al. 2014).

We sought to address the issues outlined above in order to provide much-needed information about how regionalization of space is realized at the neural level, in particular in a 3D context. Participants learned the locations of paintings in a virtual multifloor gallery building. Before scanning, we compared their spatial judgments within and across vertical and horizontal boundaries. Then participants performed an object-location memory test while being passively moved in the virtual building during fMRI scanning. Repetition suppression analysis was used to ascertain which brain regions represented the local information within a room, or the room information within the building. In addition, we also asked whether vertical and horizontal room information in the brain was symmetrically or asymmetrically represented.

Materials and Methods

Participants

Thirty healthy adults took part in the experiment (15 females; age 23.7 ± 4.6 years; range 18–35 years; all right-handed). All had normal or corrected to normal vision and gave informed written consent to participation in accordance with the local research ethics committee.

The Virtual Environment

The virtual environment was a gallery building. There were 4 identical-looking rooms within the building, 2 rooms on each of 2 main floors (Fig. 1A,C). A unique painting was located in each of the 4 corners of a room, resulting in 16 unique locations in the building. The paintings were simple and depicted animals or plants such as a dog, rose, or koala bear. Painting locations were randomized across participants; therefore, spatial location was orthogonal to the content of the painting associated with it. The virtual environment was implemented using Unity 4.6 (Unity Technologies, CA, USA). A first-person perspective was used, and the field of view was ±30° vertically and ±37.6° horizontally. During the prescan training, the stimuli were rendered on a standard PC (Dell Optiplex 980, integrated graphics chipset) and presented on a 20.1 inch LCD monitor (Dell 2007FP). The stimuli filled 70% of the screen width. The same PC was used during scanning, and the stimuli were projected (using an Epson EH-TW5900 projector; resolution 1024 × 768) onto a screen at the back of the MRI scanner bore, which participants viewed through a mirror attached to the head coil.

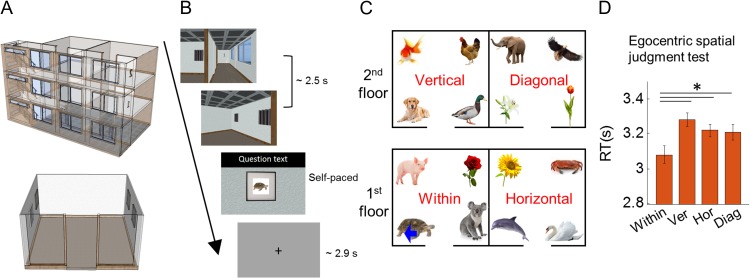

Figure 1.

Stimuli and experimental design. (A) Top panel, overview of the virtual gallery building with transparent walls for display purposes. Bottom panel, overview of one room with transparent walls for display purposes. (B) On each trial, participants were virtually transported to one of the paintings from a corridor, and then the participant performed a spatial memory task. During the prescan egocentric judgment test, they were asked to make spatial judgments about the locations of other paintings, for example, “Is the pig on your left?” (mean RT 3.2 s). During the scanning test, they were asked to indicate whether the painting was the correct one or not for that location, “Is this picture correct?” (mean RT 1.3 s). (C) An example layout of 16 paintings located in the 4 rooms of the gallery. The within, vertical, horizontal and diagonal rooms were defined relative to a participant’s current location. In this example, the participant was standing in front of the turtle painting (blue arrow). (D) Spatial judgments were significantly faster for the within-room (Within) condition. There were no differences between vertical (Ver), horizontal (Hor), or diagonal (Diag) rooms. Error bars are SEM adjusted for a within-subjects design (Morey 2008). *P < 0.05.

Tasks and Procedure

Each participant completed the tasks in the following order: learning prior to scanning, a prescan egocentric judgment task, and the object-location memory task during scanning (which was preceded by a short practice of the scanner task).

Learning Prior to Scanning

We allocated 20 min for the initial free-exploration learning phase, but allowed participants to proceed to the test phase before 20 min had elapsed if they felt that they had learned the layout very well. The purpose of this self-determined criterion was to prevent participants from becoming bored. Seventeen of the 30 participants moved on to the test phase before 20 min had passed (mean 16 min, SD 2 min). We could use this subjective criterion because experimenters checked the participants’ objective memory performance afterwards and let participants revisit the building and relearn the layout if performance was suboptimal, before they proceeded to the scanner. Four of the 30 participants (only one of whom was among the 17 who asked to move on to testing before 20 min had elapsed) had to revisit the virtual building for up to 5 additional minutes because their accuracy in either the egocentric judgment test or the short practice for the scanning object-location test was below 70%. These 4 participants’ accuracy during scanning was between 78% and 83%. The mean accuracy of the 30 participants for the scanning task was 93% (SD 5.5%). We are confident, therefore, that every participant had good knowledge of the spatial layout.

Prescan Egocentric Judgment Task

Immediately after the learning phase, there was a spatial memory test which required participants to make egocentric spatial judgments in the gallery. This test was used to examine the influence of vertical and horizontal boundaries on the mental representation of 3D space (see Behavioral Analyses).

On each trial of this test, participants saw a short dynamic video that provided the sensation of being transported to one of the 16 paintings (locations) from the corridor (duration 2.5 s; Fig. 1B, Supplementary Fig. S1). These videos were used to promote the impression of navigation whilst providing full experimental control. On half of the trials, participants started from one end of the corridor facing a floor sign on the wall, while on the remaining trials, they started from the other end of the corridor facing the stairs. For a given target painting, the journey ended at the same location within the room regardless of whether it began facing the floor sign or the stairs.

On every trial, participants were transported to one of the 2 rooms on the floor where they started; thus, the videos did not contain vertical movement via the staircase. Of note, except for the target painting, the other 3 paintings in a room were concealed behind curtains (Fig. 1B). Once a participant arrived at the target painting within a room, a question appeared on the screen. The question asked about the position of another painting relative to the participant’s current position, for example, “Is the pig on your left?,” “Is the sunflower on your right?,” “Is the dog above you?,” or “Is the duck below you?” Participants responded yes or no by pressing a keypad with their index or middle finger. Similar to a previous study by Marchette et al. (2014), we instructed participants to interpret left/right/above/below broadly, “including anything that would be on that side of the body,” and not just the painting directly left/right/above/below. For example, when a participant was facing the turtle painting in Figure 1C, the sunflower was on their right and the duck was above. The time limit for answering the question was 5 s. The intertrial interval (ITI) was drawn from a truncated gamma distribution (mean 2.9 s, minimum 2.0 s, maximum 6.0 s, shape parameter 4, scale parameter 0.5), and there were 64 trials. Participants were given their total number of correct and wrong answers at the end of the test but did not receive feedback on individual trials.
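The ITI parameters above can be checked with a short sampler. This is a minimal sketch, assuming that truncation was implemented by rejection sampling (redrawing until a value falls in [2.0, 6.0] s); the function names are hypothetical. Before truncation, a gamma distribution with shape 4 and scale 0.5 has a mean of 4 × 0.5 = 2.0 s; discarding draws below 2.0 s raises the mean to about 2.88 s, consistent with the reported 2.9 s.

```python
import random

def sample_iti(rng, shape=4.0, scale=0.5, lo=2.0, hi=6.0):
    """Draw one ITI (s) from a gamma(shape, scale) distribution truncated to [lo, hi].

    Truncation by rejection sampling is an assumption of this sketch. Before
    truncation the mean is shape * scale = 2.0 s; the lower bound of 2.0 s
    raises the mean of accepted draws to about 2.9 s.
    """
    while True:
        x = rng.gammavariate(shape, scale)  # gammavariate(alpha, beta): beta is the scale
        if lo <= x <= hi:
            return x

def sample_itis(n_trials, seed=None):
    """Draw ITIs for a whole test (64 trials in the prescan task)."""
    rng = random.Random(seed)
    return [sample_iti(rng) for _ in range(n_trials)]

itis = sample_itis(64, seed=1)
```

Rejection sampling keeps the shape of the gamma density inside the bounds unchanged, which is what "truncated" usually means; clipping out-of-range values to the bounds would instead pile probability mass at 2.0 and 6.0 s.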

fMRI Scanning Object Location Memory Task

On each trial of the scanning task, participants were transported to one of the paintings from the corridor as in the prescan memory test (duration 2.5 s; Fig. 1B). All 4 paintings in the room were concealed behind curtains. Once a participant arrived at a painting, the curtain was lifted. The participant then indicated whether the painting was the correct one for that location by using an MR-compatible keypad. On 80% of the trials, the correct painting was presented, and on 20% of trials the painting was replaced by one of the other 15 paintings. The response was self-paced with an upper limit of 4.5 s (mean response time [RT] 1.3 s, SD 0.7 s), and ITIs were the same as those in the prescan memory test. There were 100 trials in each scanning session, and each participant completed 4 scanning sessions with a short break between them, giving a total functional scanning time of ~50 min. Participants were told the total number of correct and wrong answers at the end of each scanning session, but individual trial feedback was not given.
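The 80/20 split between correct and foil paintings can be sketched as follows. This is an illustration under stated assumptions, not the authors' trial-generation code: it assumes that exactly 20 of each session's 100 trials are foils, and it uses the hypothetical simplification that painting `p` is the correct painting for location `p`.

```python
import random

def assign_paintings(location_order, n_paintings=16, foil_rate=0.2, seed=None):
    """Decide which painting is revealed on each trial of one scanning session.

    Hypothetical simplification: painting p is the correct painting for location p.
    Exactly round(foil_rate * n_trials) trials become foils, on which the correct
    painting is replaced by one of the other n_paintings - 1 paintings.
    """
    rng = random.Random(seed)
    n_trials = len(location_order)
    foils = set(rng.sample(range(n_trials), round(foil_rate * n_trials)))
    trials = []
    for t, loc in enumerate(location_order):
        shown = loc
        if t in foils:
            # pick any painting except the one that belongs at this location
            shown = rng.choice([p for p in range(n_paintings) if p != loc])
        trials.append({"location": loc, "shown": shown,
                       "is_correct_painting": shown == loc})
    return trials

session = assign_paintings([t % 16 for t in range(100)], seed=7)  # 100 trials per session
```

Fixing the number of foils per session, rather than drawing each trial independently with probability 0.2, keeps the proportion identical across sessions and participants.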

The order of visiting the paintings (locations) was designed to balance first-order carry-over effects (Aguirre 2007; Nonyane and Theobald 2007). This balancing sequence has been used in other fMRI studies involving repetition suppression (Vass and Epstein 2013; Sulpizio et al. 2014). This meant that one location was followed by every other location with similar frequency. One issue relating to this sequence is that the trials in which the corner or room, or both, were repeated, occurred less frequently than trials where none were repeated. This is because the chance of visiting the same corner (or room) in the following trial is 25% as there are 4 possible corners (or rooms). This resulted in different numbers of trials being included in the “same corner” and “different corner” conditions (or “same room” and “different room” conditions). For maximum statistical power when contrasting these conditions, ideally every regressor would contain a similar number of trials and as many trials as possible. We nevertheless found robust and dissociable effects when we contrasted the same and different corner (or room) conditions (see Results). Furthermore, when we shuffled the trial identity by randomly assigning the “same corner” and “different corner” labels and preserving the ratio of trials, we did not observe significant corner or room encoding in any of our ROIs, or anywhere else in the brain. This implies that a difference in the number of trials included in each condition does not invariably generate a significant difference in BOLD activity. Each participant visited the locations in a different order because we used random permutation for the association between the actual location and the index in the sequence that balanced the carry-over effects.
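One way to obtain first-order carry-over balance (not necessarily the sequence the authors used) is a de Bruijn sequence of order 2 over the 16 locations. In such a sequence, every ordered pair of locations, including a location followed by itself, occurs exactly once as consecutive trials. The sketch below uses the classic recursive de Bruijn construction and then applies a random relabeling of locations for each participant, mirroring the random permutation described above. The location encoding `room = loc // 4`, `corner = loc % 4` is a hypothetical convention. It reproduces the 25% repeat rate for rooms and corners. Note that this sequence is 257 trials long, and how the authors distributed their sequence across 4 sessions of 100 trials is not described here.

```python
import random

def de_bruijn(k, n):
    """Cyclic de Bruijn sequence B(k, n): every length-n word over k symbols
    appears exactly once as a (cyclic) window."""
    a = [0] * (k * n)
    seq = []

    def db(t, p):
        if t > n:
            if n % p == 0:
                seq.extend(a[1:p + 1])
        else:
            a[t] = a[t - p]
            db(t + 1, p)
            for j in range(a[t - p] + 1, k):
                a[t] = j
                db(t + 1, t)

    db(1, 1)
    return seq

def balanced_sequence(n_locations=16, seed=None):
    """Visiting order in which each ordered pair of locations (including
    immediate repeats) occurs exactly once as consecutive trials."""
    cyc = de_bruijn(n_locations, 2)          # 16 ** 2 = 256 symbols
    linear = cyc + cyc[:1]                   # unroll the cycle so the wrap-around pair is kept
    perm = list(range(n_locations))
    random.Random(seed).shuffle(perm)        # participant-specific location <-> index mapping
    return [perm[i] for i in linear]

seq = balanced_sequence(seed=0)
pairs = list(zip(seq, seq[1:]))
same_room_rate = sum(a // 4 == b // 4 for a, b in pairs) / len(pairs)  # 64 / 256 = 0.25
```

Because every ordered pair appears once, 16 × 4 = 64 of the 256 transitions stay in the same room (and 64 revisit the same corner), which is why the "same" conditions contain fewer trials than the "different" conditions.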

Behavioral Analyses

Prescan Egocentric Judgment Test

To test the influence of compartmentalization by vertical and horizontal boundaries on spatial judgments, we compared the accuracy and RT of egocentric spatial judgments between 4 conditions (Fig. 1C): (1) within, when the painting in question was in the same room as the participant; for example, a participant was facing the turtle and made a spatial judgment about the pig, rose, or koala; (2) vertical, when the painting in question was in the room above or below the participant; for example, a participant facing the turtle was asked about the dog, goldfish, duck, or chicken; (3) horizontal, when the painting in question was in the adjacent room on the same floor as the participant; for example, a participant facing the turtle was asked about the sunflower, crab, dolphin, or swan; and (4) diagonal, when the painting in question was in the diagonal room; for example, a participant facing the turtle was asked about the elephant, eagle, lily, or tulip.
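The 4 conditions follow from 2 binary comparisons: whether the 2 rooms share a floor, and whether they occupy the same position along the floor. The sketch below assumes a hypothetical (floor, side) coordinate for each room, using the room names from Figure 2A (Rm101 is directly below Rm201, and Rm101 and Rm102 share a floor).

```python
from typing import NamedTuple

class Room(NamedTuple):
    floor: int  # 1 or 2
    side: int   # position along the floor: 1 or 2 (hypothetical coding)

# Hypothetical coordinates following the Fig. 2A room labels.
ROOMS = {"Rm101": Room(1, 1), "Rm102": Room(1, 2),
         "Rm201": Room(2, 1), "Rm202": Room(2, 2)}

def room_relation(current: Room, other: Room) -> str:
    """Classify the room of the queried painting relative to the participant's room."""
    same_floor = current.floor == other.floor
    same_side = current.side == other.side
    if same_floor and same_side:
        return "within"
    if same_side:          # different floor, stacked directly above/below
        return "vertical"
    if same_floor:         # same floor, side by side
        return "horizontal"
    return "diagonal"      # differs on both floor and side
```

For example, `room_relation(ROOMS["Rm101"], ROOMS["Rm201"])` returns `"vertical"`. Every room has exactly one room in each of the 3 between-room relations, so the 12 paintings outside the current room split evenly into 4 vertical, 4 horizontal, and 4 diagonal items.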

If participants had a holistic mental representation of 3D space irrespective of physical boundaries within the building, performance for all 4 conditions should be similar. In contrast, if their mental representation was segmented into rooms, spatial judgments within the same room (within) would be facilitated, and therefore higher accuracy and/or faster RTs would be expected compared with spatial judgments across different rooms (vertical, horizontal, or diagonal conditions). If space is predominantly partitioned into horizontal planes (floors), as suggested by some previous studies (Jovalekic et al. 2011; Thibault et al. 2013; Flores-Abreu et al. 2014), spatial judgments about paintings on different floors (vertical, diagonal) would be more difficult than judgments about paintings on the same floor (within, horizontal). We used a one-way repeated-measures ANOVA and post hoc paired t-tests to compare the accuracy and RT for the 4 conditions.
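For readers who want to see what the one-way repeated-measures ANOVA and the paired t-test compute, here is a dependency-free sketch. It returns test statistics and degrees of freedom only, without a sphericity correction. P-values could then be obtained from, for example, `scipy.stats.f.sf` and `scipy.stats.t.sf` (or directly from `scipy.stats.ttest_rel`). The function names are hypothetical, and this is not the authors' analysis code.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: list of per-subject rows, one value per condition (n subjects x k conditions).
    Returns (F, df_conditions, df_error); no sphericity correction is applied.
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)  # between-subject variance is removed
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj                   # condition x subject residual
    df_cond, df_error = k - 1, (n - 1) * (k - 1)
    return (ss_cond / df_cond) / (ss_error / df_error), df_cond, df_error

def paired_t(x, y):
    """Paired t statistic and degrees of freedom for two equal-length samples."""
    d = [a - b for a, b in zip(x, y)]
    n = len(d)
    mean = sum(d) / n
    var = sum((v - mean) ** 2 for v in d) / (n - 1)
    return mean / (var / n) ** 0.5, n - 1
```

Removing the subject sum of squares from the error term is what distinguishes the repeated-measures design from a between-subjects ANOVA. It means that stable individual differences in overall RT do not dilute the condition effect.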

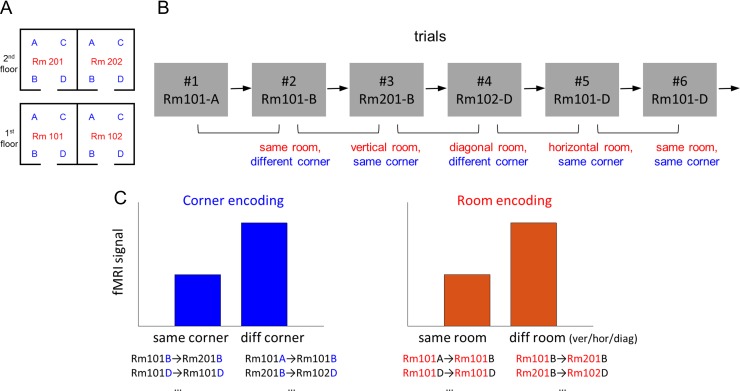

Object Location Memory Test During Scanning

We tested whether spatial knowledge of 3D location was organized into multiple compartments by measuring a behavioral priming effect. Each trial was labeled as one of 4 conditions depending on the room participants visited in the immediately preceding trial (Fig. 2A,B). Figure 2B shows an example trial sequence and the room label for each trial in red: (1) same, when participants visited the same room in the previous trial, for example, the second trial; (2) vertical, when participants had previously visited the room above or below the current room, for example, the third trial; (3) horizontal, when participants had previously visited the adjacent room on the same floor, for example, the fifth trial; and (4) diagonal, when participants had previously visited the room that was neither vertically nor horizontally adjacent, for example, the fourth trial. A holistic, volumetric representation of space would result in similar behavioral performance for all 4 conditions. If representations were compartmentalized, participants would make more accurate and/or faster judgments when spatial memory was primed by the representation of the same compartment (room). If spatial representations were further grouped along the horizontal plane, visiting the adjacent room on the same floor (horizontal condition) would also evoke a behavioral priming effect. Alternatively, space might be represented in vertical columns, leading to a prediction of a priming effect for the vertical condition. We compared accuracy and RT for the 4 conditions using a repeated-measures ANOVA and post hoc paired t-tests.
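The priming analysis labels each trial by the room visited on the previous trial and then averages performance per label. The sketch below applies this to the example sequence in Figure 2B (Rm101-A, Rm101-B, Rm201-B, Rm102-D, Rm101-D, Rm101-D). The room coordinates are a hypothetical encoding, and restricting RT averages to correct trials is an assumption of this sketch, not something stated for the behavioral analysis.

```python
from collections import defaultdict

# Hypothetical (floor, side) coordinates following the Fig. 2A room labels.
ROOM_POS = {"Rm101": (1, 1), "Rm102": (1, 2), "Rm201": (2, 1), "Rm202": (2, 2)}

def prev_room_label(prev_room, cur_room):
    """Relation of the current room to the room visited on the preceding trial."""
    (f0, s0), (f1, s1) = ROOM_POS[prev_room], ROOM_POS[cur_room]
    if (f0, s0) == (f1, s1):
        return "same"
    if s0 == s1:
        return "vertical"
    if f0 == f1:
        return "horizontal"
    return "diagonal"

def mean_rt_by_condition(rooms, rts, correct):
    """Average RT per priming condition; the first trial has no predecessor and is
    skipped, and only correct trials are averaged (an assumption of this sketch)."""
    buckets = defaultdict(list)
    for t in range(1, len(rooms)):
        if correct[t]:
            buckets[prev_room_label(rooms[t - 1], rooms[t])].append(rts[t])
    return {label: sum(v) / len(v) for label, v in buckets.items()}

rooms = ["Rm101", "Rm101", "Rm201", "Rm102", "Rm101", "Rm101"]  # Fig. 2B example
labels = [prev_room_label(a, b) for a, b in zip(rooms, rooms[1:])]
# labels for trials 2-6: same, vertical, diagonal, horizontal, same
```

A priming effect for compartments would appear as a lower mean RT for `"same"` than for the 3 different-room labels, computed per participant before the group-level ANOVA.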

Figure 2.

Analysis overview. (A) A floor plan of the virtual building. The 4 rooms are labeled as “Rm101,” “Rm102,” “Rm201,” “Rm202” and the 4 corners as “A,” “B,” “C,” “D” for the purposes of explanation here. Participants were not told of any explicit labels during the experiment. (B) An example trial sequence. For the behavioral and fMRI repetition suppression analyses, each trial was labeled based on its spatial relationship with the preceding trial, for example, the second trial belongs to the “same room, different corner” condition. Of note, this trial definition is used for analysis only and participants were not asked to pay attention to the preceding trial. (C) Predictions for the fMRI signals. If some brain regions encode corner information, lower fMRI signal is expected for the same corner condition compared with the different corner condition. If room information is encoded, fMRI signal is expected to be lower for the same room condition compared with the different room condition.

Scanning and Preprocessing

T2*-weighted echo planar images (EPI) were acquired using a 3 T Siemens Trio scanner (Siemens, Erlangen, Germany) with a 32-channel head coil. Scanning parameters optimized for reducing susceptibility-induced signal loss in areas near the orbitofrontal cortex and medial temporal lobe were used: 44 transverse slices angled at −30°, TR = 3.08 s, TE = 30 ms, resolution = 3 × 3 × 3 mm3, matrix size = 64 × 74, z-shim gradient moment of −0.4 mT/m ms (Weiskopf et al. 2006). Fieldmaps were acquired with a standard manufacturer’s double echo gradient echo field map sequence (short TE = 10 ms, long TE = 12.46 ms, 64 axial slices with 2 mm thickness and 1 mm gap yielding whole brain coverage; in-plane resolution 3 × 3 mm2). After the functional scans, a 3D MDEFT structural scan was obtained with 1 mm isotropic resolution.

Preprocessing of data was accomplished using SPM12 (www.fil.ion.ucl.ac.uk/spm). The first 5 volumes from each functional session were discarded to allow for T1 equilibration effects. The remaining functional images were realigned to the first volume of each run and geometric distortion was corrected by the SPM unwarp function using the fieldmaps. Each participant’s anatomical image was then coregistered to the distortion corrected mean functional images. Functional images were normalized to MNI space, then spatial smoothing (FWHM = 8 mm) was applied.
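Two quantities implied by the preprocessing parameters can be made explicit. Discarding 5 volumes at TR = 3.08 s removes about 15.4 s of data per session. An 8 mm FWHM Gaussian kernel corresponds to a standard deviation of FWHM / (2·sqrt(2·ln 2)) ≈ 3.40 mm. The conversion to voxel units below assumes that the normalized images remain on a 3 mm grid, which is an assumption of this sketch.

```python
import math

def fwhm_to_sigma(fwhm_mm):
    """Standard deviation (mm) of a Gaussian kernel with the given full width at half maximum."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))

sigma_mm = fwhm_to_sigma(8.0)   # about 3.40 mm
sigma_vox = sigma_mm / 3.0      # about 1.13 voxels, assuming a 3 mm voxel grid
discarded_s = 5 * 3.08          # about 15.4 s of dummy scans per session
```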

fMRI Analyses

Main Analysis: Room and Corner Encoding

We used an fMRI repetition suppression analysis to search for 2 types of spatial information in the brain: (1) corner, a participant’s location within a room, and (2) room, which room a participant was in. fMRI repetition suppression analysis is based on the assumption that when a similar neural population is activated across 2 consecutive trials, the fMRI signal is reduced during the second trial. Therefore, if a brain region encodes corner information, visiting the same corner in a consecutive trial would result in a reduced fMRI signal compared with visiting a different corner (Fig. 2C). For example, visiting Rm201-B after Rm101-B (third trial in the example sequence, Fig. 2B) or visiting Rm101-D twice in a row (sixth trial in the example) would evoke a lower fMRI signal than visiting Rm101-B after Rm101-A (second trial in the example) or visiting Rm102-D after Rm201-B (fourth trial). On the other hand, if a brain region encodes room information, visiting the same room in consecutive trials (e.g., in Fig. 2B, Rm101-A → Rm101-B, second trial) would result in a reduced fMRI signal compared with visiting a different room (e.g., Rm101-B → Rm201-B, third trial).

We also tested whether vertical and horizontal boundaries similarly influenced neural similarity between rooms. If there was a bias in encoding horizontal information better than vertical (floor), then the 2 rooms on top of each other (e.g., Fig. 2B, Rm101 and Rm201) would be less distinguishable than the 2 rooms on the same floor (e.g., Rm101 and Rm102). Therefore, visiting a vertically adjacent room (e.g., Rm101-B → Rm201-B, third trial) would result in more repetition suppression, leading to a reduced fMRI signal, than visiting a horizontally adjacent room (e.g., Rm102-D → Rm101-D, fifth trial). We were also able to ask whether 2 rooms in a diagonal relationship (e.g., Rm201-B → Rm102-D, fourth trial) were more distinguishable than vertically or horizontally adjacent rooms.

To answer these questions, we constructed a GLM that modeled each trial based on its spatial relationship to the preceding trial in terms of 2 factors: corner and room. The corner factor had 2 levels (same or different corner), and the room factor had 4 levels (same, vertical, horizontal, or diagonal room), resulting in 2 × 4 = 8 main regressors. Each regressor was a boxcar function that, for each trial, modeled the entire stimulus duration, including the virtual navigation period (2.5 s) and the subsequent object-location memory test (mean RT 1.3 s, SD 0.7 s) (Fig. 1B, top 3 panels), convolved with the SPM canonical hemodynamic response function. Information about spatial location was cumulatively processed throughout the navigation video and continued until participants decided whether the painting was the correct one for the location; hence, we modeled the entire period as a single boxcar. Moreover, this fMRI study was not designed to distinguish between temporally adjacent events or cognitive processes, because we favored a more naturalistic task (i.e., without the delays that jitter would introduce). The first trial of each scanning session, which had no immediately preceding trial, and trials on which participants responded incorrectly (mean 6.8%, SD 5.5%) were excluded from the main regressors and modeled separately. The GLM also included nuisance regressors: the 6 head motion realignment parameters and a session-specific constant regressor.
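The construction of one main regressor can be illustrated as a boxcar (1 from trial onset until the end of the response, i.e., 2.5 s plus RT) convolved with a double-gamma HRF and sampled once per TR. The sketch below approximates SPM's default canonical HRF parameters (response delay 6 s, undershoot delay 16 s, unit dispersions, response-to-undershoot ratio 6, 32 s kernel). It is not SPM's own implementation: SPM builds regressors at a finer microtime resolution and handles the reference slice, which this sketch ignores. Onsets and RTs in the usage line are hypothetical.

```python
import math

def canonical_like_hrf(dt, length=32.0, delay=6.0, undershoot=16.0, ratio=6.0):
    """Double-gamma HRF sampled every dt seconds and normalised to unit sum
    (an approximation of SPM's default canonical HRF, not SPM's own code)."""
    def gamma_pdf(t, shape):  # gamma density with unit scale (dispersion 1 s)
        return t ** (shape - 1) * math.exp(-t) / math.gamma(shape) if t > 0 else 0.0

    h = [gamma_pdf(i * dt, delay) - gamma_pdf(i * dt, undershoot) / ratio
         for i in range(int(round(length / dt)) + 1)]
    total = sum(h)
    return [v / total for v in h]

def trial_regressor(onsets, durations, n_scans, tr, dt=0.1):
    """Boxcar that is 1 from each onset until onset + duration, convolved with the
    HRF on a dt grid and then sampled at the start of every scan."""
    n_bins = int(round(n_scans * tr / dt))
    box = [0.0] * n_bins
    for onset, dur in zip(onsets, durations):
        for i in range(int(round(onset / dt)), min(n_bins, int(round((onset + dur) / dt)))):
            box[i] = 1.0
    h = canonical_like_hrf(dt)
    conv = [sum(box[i - j] * h[j] for j in range(min(i + 1, len(h)))) for i in range(n_bins)]
    bins_per_scan = tr / dt
    return [conv[int(round(s * bins_per_scan))] for s in range(n_scans)]

# Two hypothetical trials of one condition: 2.5 s video plus RTs of 1.1 s and 1.6 s.
reg = trial_regressor(onsets=[0.0, 7.0], durations=[3.6, 4.1], n_scans=12, tr=3.08)
```

In the full GLM, each of the 8 condition regressors would be built in this way from the onsets of the trials carrying that label. The excluded first and incorrect trials would receive their own regressor, alongside the motion and constant terms.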

First, we conducted a whole-brain analysis to search for corner and room information using 2 contrasts: (1) “same corner < different corner,” collapsed across the room factor, and (2) “same room < different room (=average of the vertical room/horizontal room/diagonal rooms),” collapsed across the corner factor. Each participant’s contrast map was then fed into a group level random effects analysis. Given our a priori hypothesis about the role of hippocampus and RSC for encoding spatial information, we report voxel-wise P-values corrected for anatomically defined hippocampus and RSC regions-of-interest (ROIs). For the rest of the brain, we report regions that survived a whole-brain corrected family-wise error (FWE) rate of 0.05.
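The 2 contrasts can be written as weight vectors over the 8 main regressors. This sketch assumes a hypothetical regressor order (corner level, then room level). It signs the weights so that a positive contrast value means less signal on repeats (different > same), which is the repetition suppression direction. Exact fractions make the zero-sum property easy to verify.

```python
from fractions import Fraction
from itertools import product

CORNERS = ("same_corner", "diff_corner")
ROOMS = ("same_room", "vertical", "horizontal", "diagonal")
REGRESSORS = [f"{c}|{r}" for c, r in product(CORNERS, ROOMS)]  # 2 x 4 = 8, hypothetical order

def contrast(weight_fn):
    """Build a weight vector in REGRESSORS order from a function of (corner, room)."""
    return [weight_fn(c, r) for c, r in product(CORNERS, ROOMS)]

# "same corner < different corner", collapsed (averaged) across the 4 room levels
corner_c = contrast(lambda c, r: Fraction(1 if c == "diff_corner" else -1, len(ROOMS)))

# "same room < different room", with different room = mean of vertical/horizontal/diagonal,
# collapsed across the 2 corner levels
room_c = contrast(lambda c, r: Fraction(-1, 2) if r == "same_room" else Fraction(1, 6))
```

Both vectors sum to zero, so they test differences between conditions rather than overall activation. Each level of the collapsed factor gets equal weight regardless of how many trials it contains.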

The hippocampus and RSC ROIs were manually delineated on the group-averaged structural MRI scans from a previous independent study on 3D space representation (Kim et al. 2017) (Supplementary Fig. S3). The bilateral hippocampus mask contained the whole hippocampus from head to tail (304 voxels in 3 × 3 × 3 mm3 EPI resolution). Although some navigation fMRI studies have defined a “retrosplenial complex” which includes Brodmann areas 29–30, occipitotemporal sulcus and posterior cingulate cortex (Marchette et al. 2014), we used a more precise anatomical definition of RSC, based on cytoarchitecture, which includes only Brodmann areas 29–30 (Vann et al. 2009) (293 voxels in 3 × 3 × 3 mm3 EPI resolution). Functionally defined ROIs vary from one study to another depending on the statistical threshold and individual differences. We would expect that the anatomical RSC and functionally defined RSC overlap, and whether an anatomical or functional definition is more appropriate depends on the specific research question. In our study, we were interested in finding corner or room information across the entire brain, with precise anatomical priors in hippocampus and RSC, and so we preferred a conservative and threshold-free anatomical definition.

Having identified brain regions that contained significant corner information from the whole brain analysis, we examined the spatial encoding in these regions further by extracting the mean fMRI activity. As a proxy for the mean fMRI activity, beta weights for every voxel within the spherical ROIs (radius 5 mm, centered at the peak voxel) were averaged for each participant, and then compared at the group level by paired t-tests. For this functional ROI-based analysis, we divided the “same corner” condition into “same corner, same room” and “same corner, different room” and compared each condition to “different corner.” This analysis allowed us to rule out the possibility that the corner encoding was driven purely by the repetition suppression effect of “same corner, same room” < “different corner.” If a brain region encodes each of the 16 locations (or associated paintings) without a spatial hierarchy, repetition suppression would only occur for the “same corner, same room” condition and there would be no difference between “same corner, different room” and “different corner.”
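With 3 mm voxels, a 5 mm radius sphere is small. If voxels are included when their centers lie within 5 mm of the peak (an assumption; toolboxes differ in their exact inclusion rule), the sphere contains the peak voxel, its 6 face neighbors, and its 12 edge neighbors, giving 19 voxels. Corner neighbors lie 3·√3 ≈ 5.2 mm away and are excluded. The sketch below builds these offsets and averages a beta map over them. It uses a hypothetical dict-based volume for simplicity. The resulting per-participant means would then enter paired t-tests (e.g., `scipy.stats.ttest_rel`).

```python
import itertools
import math

def sphere_offsets(radius_mm=5.0, voxel_mm=3.0):
    """Integer voxel offsets whose centres lie within radius_mm of the peak voxel centre."""
    r = int(math.floor(radius_mm / voxel_mm))
    return [(i, j, k) for i, j, k in itertools.product(range(-r, r + 1), repeat=3)
            if voxel_mm * math.sqrt(i * i + j * j + k * k) <= radius_mm]

def roi_mean(beta, peak, offsets):
    """Mean beta over the sphere; beta is a hypothetical dict mapping (x, y, z) -> value.
    Voxels missing from the map (e.g., outside the brain) are skipped."""
    coords = [(peak[0] + i, peak[1] + j, peak[2] + k) for i, j, k in offsets]
    vals = [beta[c] for c in coords if c in beta]
    return sum(vals) / len(vals)

offs = sphere_offsets()  # 19 offsets for a 5 mm radius on a 3 mm grid
```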

We conducted a similar control analysis in the brain regions that contained significant room information (“same room < different room”). We compared the mean activity of “same room, same corner” and “same room, different corner” to “different room” to rule out the possibility that the room encoding was driven by the repetition suppression of the exactly same location. Crucially, we also compared mean activity of different room conditions (vertical/horizontal/diagonal rooms) to test for any potential bias in encoding vertical or horizontal information.

Note that our experiment was specifically designed to examine the main effects of corner and room information, rather than to test a purely nonhierarchical encoding model in which only the exact same location shows repetition suppression (“same corner, same room” < “different corner, same room” = “same corner, different room” = “different corner, different room”). Such an encoding hypothesis cannot be separated from the painting encoding hypothesis, given that each location was associated with a unique painting. Nevertheless, for completeness, we tested this hypothesis by creating a mask of voxels that showed no difference between the nonexact location conditions “same corner, diff room,” “diff corner, same room,” and “diff corner, diff room” (the intersection of 2 contrast images, “same corner, diff room − diff corner, diff room” and “diff corner, same room − diff corner, diff room,” each thresholded at P > 0.05). We then examined the “same corner, same room < nonexact location” contrast within this mask.
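The masking step can be illustrated with voxel-wise p-value maps. In this minimal sketch, the maps are hypothetical dicts from voxel to p-value, and the final threshold `alpha` for the exact-location contrast is a placeholder rather than the correction actually applied. A voxel enters the mask only if neither control contrast reaches P ≤ 0.05, and the exact-location contrast is then inspected only inside that mask.

```python
def null_mask(p_corner_only, p_room_only, alpha=0.05):
    """Voxels where both control contrasts are non-significant (P > alpha).

    p_corner_only: voxel -> p for "same corner, diff room - diff corner, diff room"
    p_room_only:   voxel -> p for "diff corner, same room - diff corner, diff room"
    (both are hypothetical inputs for this sketch)
    """
    return {v for v in p_corner_only
            if v in p_room_only and p_corner_only[v] > alpha and p_room_only[v] > alpha}

def masked_hits(p_exact, mask, alpha):
    """Voxels in the mask where the exact-location contrast passes a (placeholder) threshold."""
    return sorted(v for v in mask if p_exact.get(v, 1.0) < alpha)

mask = null_mask({1: 0.5, 2: 0.01, 3: 0.2}, {1: 0.3, 2: 0.9, 3: 0.04})
hits = masked_hits({1: 0.001, 2: 0.001, 3: 0.001}, mask, alpha=0.005)
```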

The data could also be examined in terms of 3D physical metric distance from the preceding trial modeled as a linear parametric regressor. However, the highly discretized nature of the environment makes inferences about metric encoding difficult in this context, and this issue would be better addressed with a different type of environment.

Supplementary Analysis: Room Versus View Encoding

In this experiment, room information was cued by a distinctive view, such as a wall containing a floor sign. The room encoding effect could therefore arise from view encoding and/or from more abstract spatial information about a room that was not limited to a particular view. We were able to test for these possibilities because participants were virtually transported to each room from 2 directions (Supplementary Fig. S1), which means they could visit the same room on consecutive trials from the same or a different direction. For example, if they had visited Rm101 from the floor sign side in the preceding trial and visited Rm101 from the stair side in the current trial, the views were very different even though the same room was visited. On the other hand, if they had visited Rm101 from the floor sign side in the preceding trial and visited Rm201 from the floor sign side in the current trial, the views were similar even though the 2 rooms were different.

We constructed a GLM that modeled each trial based on 2 factors: whether the room was the same as or different from that of the previous trial, and whether the starting direction (view) was the same as or different from that of the previous trial. This resulted in 4 trial types: “same room, same view,” “same room, different view,” “different room, similar view,” and “different room, different view.” As in the main analysis, only correct trials were included in the main regressors, and head motion realignment parameters and scanning session-specific constant regressors were included in the GLM. For each participant, we extracted the mean activity (beta weights) for each trial type in the room encoding regions identified in the “same room < different room” contrast described earlier. We conducted a repeated measures ANOVA and post hoc paired t-tests to compare the mean beta weights between the “same room, same view,” “same room, different view,” and “different room” (collapsed over similar and different view) conditions. If only the view was encoded, then the “same room, same view” condition would have a reduced fMRI signal compared with “different room,” but “same room, different view” would not. If abstract room information was encoded, the “same room, different view” condition would also be associated with a reduced fMRI signal compared with “different room” due to repetition of the room. We were also able to compare “same room, same view” and “same room, different view” to test view dependency when the room was repeated.
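The trial labeling described above can be sketched as a comparison of each trial with its predecessor. This is a sketch under assumptions: trials are represented as hypothetical `(room, start_direction)` tuples, and the direction labels `"sign"` and `"stair"` are invented stand-ins for the floor-sign side and the stair side.

```python
def trial_type(prev, cur):
    """Label the current trial relative to the previous one.

    Each trial is a (room, start_direction) pair, e.g. ("Rm101", "sign") for
    arriving on the floor-sign side or ("Rm101", "stair") for the stair side.
    """
    same_room = prev[0] == cur[0]
    same_view = prev[1] == cur[1]
    if same_room:
        return "same room, same view" if same_view else "same room, different view"
    return "different room, similar view" if same_view else "different room, different view"

def anova_level(label):
    """Collapse the two different-room types into one 'different room' level."""
    return "different room" if label.startswith("different room") else label

sequence = [("Rm101", "sign"), ("Rm101", "stair"), ("Rm201", "stair"), ("Rm102", "sign")]
labels = [trial_type(p, c) for p, c in zip(sequence, sequence[1:])]
print(labels)
```

The first trial of a sequence has no predecessor and receives no label here. The real analysis would also drop incorrect trials from the main regressors.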

Results

Behavioral Results

Prescan Egocentric Judgment Task

To examine the influence of vertical and horizontal boundaries on the mental representation of 3D space, we compared the accuracy and RT of spatial judgments for 4 conditions: within, vertical, horizontal, and diagonal rooms. Participants were faster at judging the location of paintings within the same room than paintings in different rooms (Fig. 1D; F[3,87] = 5.4, P = 0.002, post hoc paired t-tests: within vs. vertical, t[29] = −3.5, P = 0.001; within vs. horizontal, t[29] = −2.7, P = 0.011; within vs. diagonal, t[29] = −2.2, P = 0.034). There was no significant difference in RT between the vertical, horizontal and diagonal rooms. This result suggests that physical boundaries affected spatial judgments, but that this effect did not depend on whether the boundary was vertical or horizontal. Accuracy did not differ significantly between the 4 conditions (F[3,87] = 1.2, P = 0.3; mean overall accuracy 80%, SD 12%).
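The F statistics above come from a one-way repeated measures ANOVA. With n participants and k conditions, the degrees of freedom are (k − 1, (k − 1)(n − 1)). For 30 participants and 4 conditions this gives the F[3,87] reported. The plain-Python sketch below is illustrative only. The text does not state whether a sphericity correction was applied, and this version applies none. The function name `rm_anova_oneway` is hypothetical.

```python
def rm_anova_oneway(data):
    """One-way repeated measures ANOVA without sphericity correction.

    data[i][j] is participant i's score (e.g. mean RT) in condition j.
    Returns (F, df_conditions, df_error).
    """
    n, k = len(data), len(data[0])
    grand = sum(map(sum, data)) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)  # removed from the error term
    ss_error = ss_total - ss_cond - ss_subj
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    return (ss_cond / df_cond) / (ss_error / df_error), df_cond, df_error

# Toy data: 30 participants x 4 conditions (within, vertical, horizontal, diagonal).
toy = [[i + j + (i * j) % 3 for j in range(4)] for i in range(30)]
print(rm_anova_oneway(toy)[1:])  # (3, 87)
```

As a check, with only 2 conditions this F equals the square of the paired t statistic for the same data.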

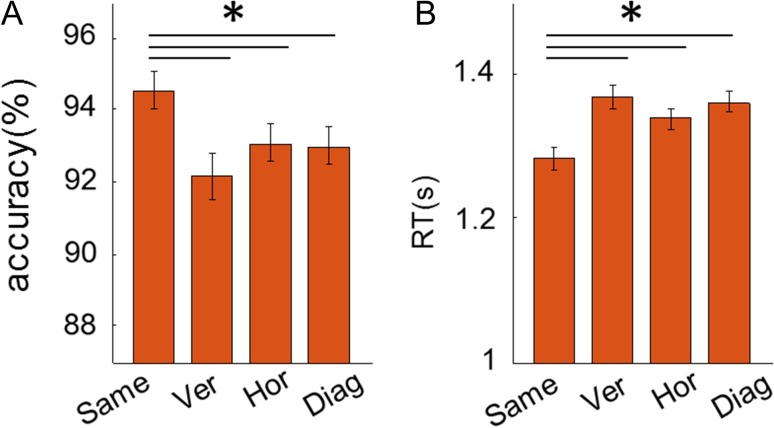

Object-Location Memory Task During Scanning

Overall, participants performed well on the object-location memory task (mean accuracy 93%, SD 5.5%). Participants were more accurate and faster at judging whether a painting was in the correct location if they had visited the same room in the preceding trial (Fig. 3A, B; accuracy: F[3,87] = 4.2, P = 0.008; post hoc paired t-tests: same vs. vertical, t[29] = 3.4, P = 0.002; same vs. horizontal, t[29] = 2.3, P = 0.032; same vs. diagonal, t[29] = 2.3, P = 0.028; RT: F[3,87] = 8.3, P < 0.001; same vs. vertical, t[29] = −4.0, P < 0.001; same vs. horizontal, t[29] = −2.8, P = 0.009; same vs. diagonal, t[29] = −4.1, P < 0.001). Neither accuracy nor RT differed between the vertical, horizontal, or diagonal rooms. Together with the results of the prescan egocentric judgment task, this suggests that the mental representation of 3D space was segmented by room, regardless of floor.

Figure 3.

The behavioral priming effect of room during the scanning task. (A) Accuracy was significantly higher for the same room condition compared with all other rooms. There was no significant difference between the different room types. (B) RT was significantly reduced for the same room condition compared with all other conditions. There was no significant difference between different room types. Error bars are SEM adjusted for a within-subjects design (Morey 2008). *P < 0.05.
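The within-subjects error bars cited above (Morey 2008) can be sketched as Cousineau normalization followed by Morey's correction factor. First, each participant's mean is removed and the grand mean is added back. Then the SEM of the normalized scores is scaled by sqrt(k / (k − 1)). The function name `within_subject_sem` and the toy data are illustrative only.

```python
import math
import statistics

def within_subject_sem(data):
    """Cousineau normalization with the Morey (2008) correction.

    data[i][j] is participant i's score in condition j. Removing each
    participant's mean (and adding back the grand mean) stops stable
    between-participant offsets from inflating the error bars.
    """
    n, k = len(data), len(data[0])
    grand = sum(map(sum, data)) / (n * k)
    normed = [[x - sum(row) / k + grand for x in row] for row in data]
    correction = math.sqrt(k / (k - 1))
    return [
        statistics.stdev([row[j] for row in normed]) / math.sqrt(n) * correction
        for j in range(k)
    ]

# Participants differ only by a constant offset, so the within-subject SEM is 0.
print(within_subject_sem([[1, 2], [11, 12], [21, 22]]))
```

For contrast, ordinary between-subject SEMs for the same toy data would be large, because they include the offsets between participants.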

fMRI Results

We tested whether the brain represents a multicompartment 3D building space in a hierarchical manner by separately encoding the corner (“where am I within a room?”) and the room (“which room of the building am I in?”). We searched for these 2 types of information using an fMRI repetition suppression analysis. Furthermore, we investigated whether vertical and horizontal information were encoded differently. We present the results for our 2 ROIs, the RSC and hippocampus, and for any other region that survived whole brain correction. Only one other region did so: the right parahippocampal cortex.

Corner Information

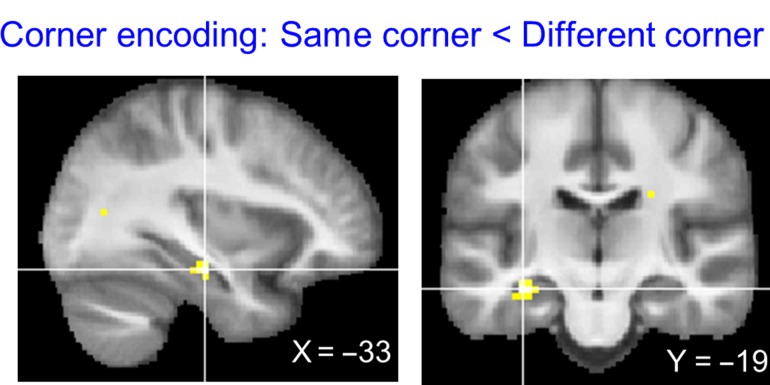

The “same corner < different corner” contrast revealed the left anterior lateral hippocampus (Fig. 4, peak MNI coordinate [−33, −19, −16], t[29] = 5.31, P = 0.001, small volume corrected with a bilateral hippocampal mask), suggesting that this region encodes which corner of a room the participant is located in. No other brain region showed a significant corner repetition suppression effect at the whole brain corrected level.

Figure 4.

Corner encoding regions. The whole brain contrast “same corner < different corner” revealed only the left anterior hippocampus (peak MNI = [−33, −19, −16], t[29] = 5.31, P < 0.001). The thresholded map is overlaid on the group average structural MRI scan (P < 0.001, uncorrected for display purposes). No other brain region survived multiple comparison correction.

We further examined the spatial encoding in the left anterior lateral hippocampus by extracting the mean activity (beta) for each condition. We investigated the fMRI signal when exactly the same location was visited (“same corner, same room,” e.g., Fig. 2B, Rm101-A → Rm101-A) and when the same corner, but a different room was visited (“same corner, different room,” e.g., Rm101-A → Rm201-A) and compared them to the “different corner” condition (e.g., Rm101-A → Rm201-B). If the entire building is represented in a single volumetric space without a hierarchy, then each of the locations would be uniquely encoded, so repetition suppression is expected only for the “same corner, same room” condition. Our finding speaks against the single volumetric representation hypothesis because both “same corner, same room” and “same corner, different room” conditions evoked significant repetition suppression effects compared with the “different corner” condition (one-sided paired t-tests: “same corner, same room” < “different corner,” t[29] = −4.4, P < 0.001; “same corner, different room” < “different corner,” t[29] = −4.2, P < 0.001). This implies that the anterior hippocampus contains local corner information that is generalized across different rooms, supporting an efficient hierarchical representation of 3D space.
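The logic of this test can be sketched by listing, for every pair of consecutive locations, whether each candidate code repeats. A repeated code is where that model predicts repetition suppression. This is a hypothetical illustration. It assumes the 16 locations are 4 rooms (labeled here Rm101, Rm102, Rm201 and Rm202) × 4 corners (A to D). The names `unique_location`, `corner_only` and `predicted_repetition` are invented.

```python
from itertools import product

ROOMS = ["Rm101", "Rm102", "Rm201", "Rm202"]  # assumed labels for the 4 rooms
CORNERS = ["A", "B", "C", "D"]
LOCATIONS = list(product(ROOMS, CORNERS))     # 16 (room, corner) locations

def condition(prev, cur):
    """ROI condition label for a consecutive pair of locations."""
    if prev[1] != cur[1]:
        return "different corner"
    return "same corner, same room" if prev[0] == cur[0] else "same corner, different room"

def predicted_repetition(code):
    """Fraction of trial pairs per condition in which a model's code repeats."""
    repeats, totals = {}, {}
    for prev, cur in product(LOCATIONS, repeat=2):
        label = condition(prev, cur)
        totals[label] = totals.get(label, 0) + 1
        repeats[label] = repeats.get(label, 0) + (code(prev) == code(cur))
    return {label: repeats[label] / totals[label] for label in totals}

def unique_location(loc):
    return loc        # single volumetric map: every location has its own code

def corner_only(loc):
    return loc[1]     # hierarchical: corner code shared across rooms

print(predicted_repetition(unique_location))
print(predicted_repetition(corner_only))
```

Only the corner code predicts suppression for “same corner, different room,” which is the pattern observed in the left anterior hippocampus.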

On a related note, one might ask whether the hippocampus was sensitive to heading direction rather than location. Participants faced opposite walls at corner A (or B) compared with corner C (or D) (Fig. 1A, 2A). However, they faced the same direction at locations A and B (or C and D). Further analysis revealed no difference in anterior hippocampal activity between visiting a corner on the same wall and visiting one on the opposite wall. Thus, we can conclude that the hippocampus encoded corner information rather than heading direction.
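The heading control can be sketched by comparing what a corner code and a heading code predict for different-corner pairs. This is a sketch under an assumed layout: corners A and B share one facing direction and C and D share the opposite one, as stated above. The direction labels and the helper `predicts_suppression` are hypothetical.

```python
# Assumed layout: A and B face the same way; C and D face the opposite wall.
HEADING = {"A": "toward wall 1", "B": "toward wall 1", "C": "toward wall 2", "D": "toward wall 2"}

def predicts_suppression(prev_corner, cur_corner, model):
    """Whether a corner code or a heading code repeats between two corners."""
    if model == "corner":
        return prev_corner == cur_corner
    if model == "heading":
        return HEADING[prev_corner] == HEADING[cur_corner]
    raise ValueError(f"unknown model: {model}")

# Different-corner pairs with a shared vs. opposite facing direction.
for pair in [("A", "B"), ("A", "C")]:
    print(pair, {m: predicts_suppression(*pair, m) for m in ("corner", "heading")})
```

A heading code predicts suppression for A→B but not A→C, whereas a corner code predicts neither. The absence of a difference between these pair types therefore favors corner encoding.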

Room Information

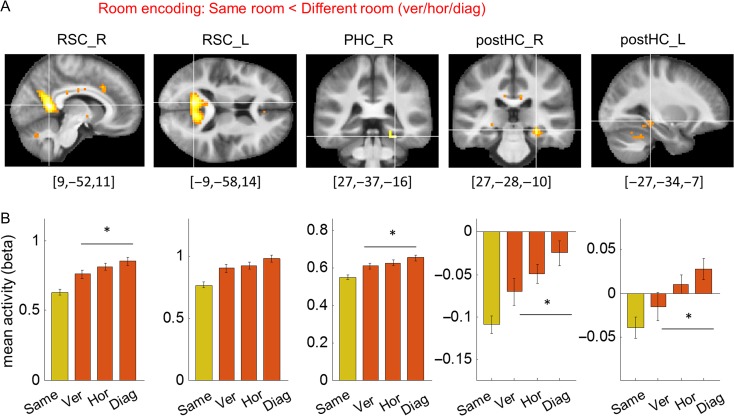

The “same room < different room” contrast revealed bilateral RSC (right RSC peak [9, −52, 11], t[29] = 8.55, P < 0.001; left RSC peak [−9, −58, 14], t[29] = 7.91, P < 0.001, small volume corrected with a bilateral RSC mask), right parahippocampal cortex (peak [27, −37, −16], t[29] = 7.21, P < 0.001), and the posterior part of the hippocampus (right hippocampus peak [27, −28, −10], t[29] = 6.12, P < 0.001; left hippocampus peak [−27, −34, −7], t[29] = 4.32, P = 0.014, small volume corrected with a bilateral hippocampus mask) (Fig. 5A). This suggests that these regions encode which room of the building a participant was located in. Notably, room information was detectable in the posterior hippocampus, whereas corner information was detectable in the anterior hippocampus.

Figure 5.

Room encoding regions. (A) The whole brain contrast “same room < different room” revealed bilateral RSC (RSC_R, RSC_L), right parahippocampal cortex (PHC_R) and bilateral posterior HC (postHC_R, postHC_L). Given our a priori interest in RSC and posterior hippocampus, their clusters are shown with a small volume corrected threshold level (t[29] > 3.67, t[29] > 3.75), while the parahippocampal cortex cluster is shown with a whole-brain corrected threshold (t[29] > 6.01). The peak MNI coordinate is shown below each cluster. (B) Comparison of mean activity for 3 different room types (vertical/horizontal/diagonal) at each cluster (5 mm sphere at peak voxel). The “same” condition (in yellow) is shown for reference purposes. The response to the diagonal condition was significantly larger than for the vertical condition in all regions except the left RSC. There was no significant difference between the vertical and horizontal conditions. Error bars are SEM adjusted for a within-subjects design (Morey 2008). *P < 0.05.

We further examined spatial encoding in the right and left RSC (RSC_R, RSC_L), right parahippocampal cortex (PHC_R), and right and left posterior hippocampus (postHC_R, postHC_L) by extracting the mean fMRI activity for the “same corner, same room,” “different corner, same room,” and “different room” conditions. In all regions, we found significant repetition suppression effects for both “same corner, same room” and “different corner, same room” conditions compared with the “different room” (one-sided paired t-tests: “same corner, same room” < “different room”: RSC_R, t[29] = −6.6, P < 0.001; RSC_L, t[29] = −4.9, P < 0.001; PHC_R, t[29] = −4.9, P < 0.001; postHC_R, t[29] = −4.9, P < 0.001; postHC_L, t[29] = −3.2, P = 0.002; “different corner, same room” < “different room”: RSC_R, t[29] = −4.1, P < 0.001; RSC_L, t[29] = −3.6, P < 0.001; PHC_R, t[29] = −5.0, P < 0.001; postHC_R, t[29] = −3.0, P = 0.003; postHC_L, t[29] = −2.2, P = 0.02). These findings suggest the presence of room information that is independent of the local corner.

We then tested for vertical–horizontal asymmetry in these 5 room encoding regions (RSC_R, RSC_L, PHC_R, postHC_R, postHC_L) by extracting the mean activity for subcategories of the different room condition: vertical room, horizontal room, and diagonal room (Fig. 5B). Suppose vertical information was encoded less well than horizontal information. We would then expect 2 rooms on top of each other (e.g., Rm101 and Rm201, Fig. 2A) to be more similarly represented in the brain than 2 adjacent rooms on the same floor (e.g., Rm101 and Rm102). Consequently, we would expect less fMRI activity for the vertical room condition than for the horizontal condition. We also tested whether 2 rooms in a diagonal relationship were more distinguishable than either vertically or horizontally adjacent rooms due to physical or perceptual distance. For this comparison, we used a repeated measures ANOVA with room type (3 levels) as a within-subject factor. In Figure 5B, we also plot the same room condition for reference purposes. Since the room encoding regions were defined by the “same room < different room” contrast, the “same room” condition should be associated with reduced activity in all regions. We found a significant main effect in all regions except the left RSC (RSC_R, F[2,58] = 3.8, P = 0.029; RSC_L, F[2,58] = 2.4, P = 0.10; PHC_R, F[2,58] = 3.2, P = 0.049; postHC_R, F[2,58] = 3.5, P = 0.036; postHC_L, F[2,58] = 3.6, P = 0.032). Post hoc t-tests showed that this main effect was driven by a small difference between the vertical and diagonal conditions (“ver” vs. “diag,” RSC_R, t[29] = −2.4, P = 0.022; PHC_R, t[29] = −2.5, P = 0.017; postHC_R, t[29] = −2.3, P = 0.031; postHC_L, t[29] = −2.3, P = 0.028). The diagonal condition evoked a larger signal than the vertical condition, implying that 2 rooms in a diagonal relationship are encoded more distinctly than 2 rooms on top of each other. None of the regions showed a significant difference between the vertical and horizontal conditions.
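Assigning a pair of consecutive rooms to the vertical, horizontal or diagonal condition can be sketched from the room numbers. This sketch assumes a hypothetical naming scheme in which the first digit is the floor and the remaining digits give the position along the floor (Rm101, Rm102, Rm201, Rm202). The helper names are invented.

```python
def room_coords(room):
    """Assumed naming scheme: 'Rm' + floor digit + two-digit position, e.g. Rm201."""
    number = int(room[2:])
    return number // 100, number % 100   # (floor, position along the floor)

def room_relation(a, b):
    """Classify a pair of rooms as same, vertical, horizontal or diagonal."""
    (fa, pa), (fb, pb) = room_coords(a), room_coords(b)
    if (fa, pa) == (fb, pb):
        return "same"
    if pa == pb:
        return "vertical"      # stacked: only the floor differs
    if fa == fb:
        return "horizontal"    # same floor: only the position differs
    return "diagonal"          # both floor and position differ

for other in ["Rm101", "Rm201", "Rm102", "Rm202"]:
    print("Rm101 ->", other, room_relation("Rm101", other))
```

Under the planar-bias hypothesis, “vertical” pairs would show less fMRI activity than “horizontal” pairs. The data showed no such difference.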

As a side note, the sign of the mean activity (beta) was negative in the hippocampus, implying that activity was lower during the stimulus presentation period (virtual navigation and subsequent object-location memory test) than during the fixation cross ITI. In the literature, the hippocampus is often reported to show negative beta values during stimulus presentation or task periods (Bakker et al. 2008; Evensmoen et al. 2015; Hodgetts et al. 2015; Brodt et al. 2016). We believe that the absolute beta value of a single condition has little meaning in our study, as the implicit baseline (ITI period) was not a meaningful experimental condition. Our study explicitly focused on comparisons between the main experimental conditions, such as the “same room” versus the “horizontal room.” These comparisons showed the predicted pattern of repetition suppression, with the fMRI signal for the “same” condition reduced compared with the different room conditions.

Supplementary Analysis: Exact Location (or Associated Painting) Encoding

Although the main goal of the study was to test the effect of corner and room information, we also tested whether there was any brain region that encoded the exact location without a hierarchy. The analysis revealed a small cluster of activity in the posterior cingulate cortex which was located posterior and superior to RSC, as well as one active voxel in the vicinity of the putamen (Supplementary Fig. S4). These regions encoded the unique locations or the paintings associated with each location.

Supplementary Analysis: Room Versus View Encoding

To determine whether the RSC, parahippocampal cortex and posterior hippocampus encoded view information associated with each room and/or more abstract spatial knowledge about the room, we conducted a supplementary analysis. This analysis separated the same room condition into same view and different view subcategories, which we then compared to the different room condition (see Materials and Methods and Supplementary Fig. S1). We observed repetition suppression effects even when participants visited the same room but approached it from a different view (Supplementary Fig. S2; one-sided paired t-tests: “same room, different view” < “different room,” RSC_R, t[29] = −2.1, P = 0.021; RSC_L, t[29] = −2.4, P = 0.011; PHC_R, t[29] = −1.9, P = 0.034; postHC_R, t[29] = −1.8, P = 0.041; postHC_L, t[29] = −1.9, P = 0.034). This suggests that these regions contained abstract room information that was not limited to the exact view. However, there was also evidence for view encoding in some regions. In the right RSC, right parahippocampal cortex and right posterior hippocampus, visiting the same room from the same view evoked significantly less activity than visiting it from a different view (one-sided paired t-tests: “same room, same view” < “same room, different view,” RSC_R, t[29] = −2.5, P = 0.010; PHC_R, t[29] = −3.4, P = 0.001; postHC_R, t[29] = −1.8, P = 0.041). In contrast, the left RSC and left posterior hippocampus did not show any significant difference between the same view and different view conditions (P > 0.1). In summary, the left RSC and left posterior hippocampus showed relatively pure room encoding that was independent of view. The other regions showed additional view dependency, which was particularly strong in the right parahippocampal cortex.

Discussion

In this study we investigated how a multicompartment 3D space (a multilevel gallery building) was represented in the human brain using behavioral testing and fMRI repetition suppression analyses. Behaviorally, we observed faster within-room egocentric spatial judgments and a priming effect of visiting the same room in an object-location memory test, suggesting a segmented mental representation of space. At the neural level, we found evidence of hierarchical encoding of this 3D spatial information, with the left anterior lateral hippocampus containing local corner information within a room, whereas RSC, parahippocampal cortex and posterior hippocampus contained information about the rooms within the building. Furthermore, both behavioral and fMRI data were concordant with unbiased encoding of vertical and horizontal information.

We consider first our behavioral findings. There is an extensive psychological literature suggesting that space is encoded in multiple “submaps” instead of a flat single map. Accuracy and/or reaction time costs for between-region spatial judgments (McNamara et al. 1989; Montello and Pick 1993; Han and Becker 2014), and context swap errors, where only the local coordinate is correctly retrieved (Marchette et al. 2017), are evidence for multiple or recurring submaps. Here, we observed faster RT for within-room direction judgments and a behavioral priming effect of visiting the same room during a spatial memory task. Our findings are therefore consistent with the idea of a segmented representation of space.

Importantly, the current study examined regionalization in 3D space and compared the influence of vertical and horizontal boundaries for the first time. Some previous studies have suggested a bias toward dividing space in the horizontal plane (Jovalekic et al. 2011; Thibault et al. 2013; Flores-Abreu et al. 2014). The horizontal planar encoding hypothesis predicts an additional behavioral cost for spatial judgments across floors and priming effects for rooms on the same floor. However, we found no significant difference in performance between spatial judgments across vertical and horizontal boundaries, and no additional priming effect for rooms on the same floor. The absence of a significant difference does not necessarily mean equivalence. Nevertheless, the most parsimonious interpretation is that each room within our 3D space was similarly distinguishable.

This fits with the symmetric encoding of 3D location information in a semivolumetric space previously reported in bats and humans (Yartsev and Ulanovsky 2013; Kim et al. 2017). One concern might be that the small number of rooms in our virtual building allowed participants to encode each room categorically without integrating it into a 3D spatial context. However, to succeed at the egocentric judgment task across rooms (mean accuracy was 80%), our participants must have had an accurate representation of the 3D building. Testing an environment with more floors and rooms in the future could facilitate the search for any additional hierarchies within 3D spatial representations. For example, in a more complex environment, rooms might be further grouped by horizontal plane (floor) or by vertical column. It might also help to reveal subtle differences, if they exist, between vertical and horizontal planes.

Considering next our fMRI results, we found that fMRI responses in the left anterior lateral hippocampus were associated with local corner information that was generalized across multiple rooms. This fits well with previous findings that hippocampal place cells in rodents fire at similar locations within each segment of a multicompartment environment (Derdikman et al. 2009; Spiers et al. 2015). This common neural code enables efficient encoding of information. For example, the 16 locations in our virtual building could be encoded using only 8 unique codes (4 for distinguishing the corners of rooms and 4 for distinguishing the rooms themselves) given its regular substructures. This room-independent representation in the anterior lateral hippocampus can also be seen as a “schematic” representation of space (Marchette et al. 2017) where the regular structure of the environment is extracted. Furthermore, there is evidence that the ability of the hippocampus to extract regularity in the world is not limited to the spatial domain. A previous fMRI study found that temporal order information in the hippocampus generalized across different sequences (Hsieh et al. 2014). Statistical learning of temporal community structure has also been associated with the hippocampus (Schapiro et al. 2016) and, interestingly, localized to the anterior portion. Rodent electrophysiology and modeling work also suggests that ventral hippocampus (analogous to the human anterior hippocampus) is well suited to generalizing across space and memory compared with dorsal hippocampus (analogous to the human posterior hippocampus) (Keinath et al. 2014).
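The counting argument for the 16 locations can be made concrete with a factorized code. This is a hypothetical illustration and not a model of hippocampal activity. One group of 4 units indexes the corner and another group of 4 units indexes the room, so 8 units give every location a distinct pattern. A purely conjunctive one-hot code would need 16 units. The room labels are assumed.

```python
from itertools import product

CORNERS = ["A", "B", "C", "D"]
ROOMS = ["Rm101", "Rm102", "Rm201", "Rm202"]  # assumed room labels

def factorized_code(room, corner):
    """8-unit code: one-hot corner (4 units) followed by one-hot room (4 units)."""
    return tuple(int(c == corner) for c in CORNERS) + tuple(int(r == room) for r in ROOMS)

codes = {loc: factorized_code(*loc) for loc in product(ROOMS, CORNERS)}
print(len(codes), len(set(codes.values())), len(next(iter(codes.values()))))  # 16 16 8
```

The corner half of the code is identical across rooms. This matches the room-independent corner signal reported for the anterior lateral hippocampus.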

In addition to generalized within-room information, it is also important to know a room’s location to identify one’s exact position within a building. We found that multiple brain regions represented room information, with the RSC exhibiting the most reliable room repetition effect. At first this finding might seem surprising, given that head direction information has been consistently associated with the RSC in humans and rodents (Baumann and Mattingley 2010; Marchette et al. 2014; Jacob et al. 2016; Shine et al. 2016). In our virtual building, participants faced paintings on opposite walls within a room. Therefore, if RSC encoded the participant’s facing direction, local corner encoding would be expected instead of room encoding. However, numerous findings suggest that RSC encodes more than head direction. Processing of multiple spatial features such as location, view, velocity and distance has been linked with this region (Cho and Sharp 2001; Sulpizio et al. 2014; Alexander and Nitz 2015; Chrastil et al. 2015). Moreover, RSC was found to be involved in both a location and an orientation retrieval task when participants viewed static pictures of an environment during fMRI (Epstein et al. 2007). Given the rich repertoire of spatial, visual and motor information the RSC processes, it is perhaps not surprising that some studies observed local head direction signals and others found global head direction information in this region (Marchette et al. 2014; Shine et al. 2016). This might also be influenced by functional differences within the RSC, or indeed laterality effects. In our experiment, the right RSC showed stronger repetition suppression when participants visited the same room from the same view than when they visited it from a different view. In contrast, the left RSC’s response was only influenced by the repetition of the room.

RSC might have a role in integrating local representations within a global environment. A recent theory about the neural encoding of large-scale 3D space proposed that 3D space is represented by multiple 2D fragments, and that RSC is a candidate area for stitching these together (Jeffery et al. 2015). The authors based this argument on the RSC's suitability for updating orientation across multiple adjoining, sloped planes. In our experiment, room information can be broadly viewed as the orienting cue within a building that allows integration of the fragmented space. For localization and orientation of local representations within a larger spatial context, landmark information is crucial. In the current experiment, room information was cued by salient landmarks such as the floor sign or the staircase. Landmark information could, therefore, be the key to understanding the RSC’s various spatial functions. These include the representation of abstract room information, scene perception, processing of directional signals, and the integration of multiple local reference frames. RSC is known to support the learning and processing of stable landmarks (Auger et al. 2012, 2015), and its head direction signal is dominated by local landmarks (Jacob et al. 2016).

The second region that represented room information was the parahippocampal cortex. It also showed a strong view dependency in addition to room information. This contrasts with the left RSC which only showed a room repetition effect. Together these findings are consistent with the proposed complementary roles of the parahippocampal cortex and RSC in scene perception, whereby the former seems to respond in a view-dependent manner whereas the RSC represents integrative and more abstract scene information. For example, it has been shown that when participants saw identical or slightly different snapshot views from one panoramic scene, RSC showed fMRI repetition effects for both identical and different views, but parahippocampal cortex only exhibited repetition suppression for the identical view (Park and Chun 2009). In addition, multivoxel patterns in RSC have been observed to be consistent across different views from each location, whereas this was not the case for the parahippocampal cortex (Vass and Epstein 2013).

Along with RSC and parahippocampal cortex, the final area to represent room information was the posterior hippocampus. The similarity in spatial encoding between these regions might be predicted from their close functional and anatomical connectivity (Kobayashi and Amaral 2003; Kahn et al. 2008; Blessing et al. 2016). It is notable that in our previous fMRI study that examined 3D spatial representation, we also found that posterior hippocampus and RSC encoded the same type of spatial information (vertical direction) while anterior hippocampus encoded a different type of spatial information (3D location) (Kim et al. 2017). In that study, different vertical directions resulted in more distinguishable views, although direction information observed in the multivoxel patterns remained significant after controlling for low level visual similarities. Our current results do not fit precisely with accounts that associate the posterior hippocampus with a fine-grained spatial map (Poppenk et al. 2013; Evensmoen et al. 2015). In fact, our findings could be interpreted as evidence in the opposite direction, namely that coarser-grained representations of the whole building engage the posterior hippocampus. Nevertheless, overall our anterior and posterior hippocampal findings provide further evidence of functional differentiation down the long axis of the hippocampus (Baumann and Mattingley 2013; Poppenk et al. 2013; Strange et al. 2014; Zeidman and Maguire 2016).

Finally, as with our behavioral data, we also examined the fMRI data for possible differences between the horizontal and vertical planes. We did not find significant differences in fMRI amplitude between the vertical and horizontal conditions in the brain structures that contained room information. This neural finding is consistent with our behavioral results of similar accuracy and RT for spatial judgments across vertical and horizontal rooms, and similar priming effects for each room. These results fit well with an isotropic representation of 3D space, similar to our previous experiment (Kim et al. 2017).

Again, as with the behavioral data, one concern might be that RSC, parahippocampal cortex and posterior hippocampus represent each room in a categorical, semantic manner, without regard to its physical 3D location in the building. However, as we discussed earlier, the egocentric spatial judgments in the prescan task prevented participants from encoding each room separately without the 3D spatial context. Furthermore, we found that visiting a diagonal room evoked a larger fMRI signal than visiting a vertical room. This finding cannot be explained if each room was encoded in a flat manner without a spatial organization. It implies that the neural representations of 2 rooms in a diagonal relationship were more distinguishable than those of 2 rooms on top of each other. This might be due to the change along 2 axes for the diagonal room (vertical and horizontal) compared with the change along only one axis for the vertical room. Alternatively, it might simply reflect the longer distance between 2 rooms in a diagonal relationship. Distance encoding has been previously reported in parahippocampal cortex and RSC (Marchette et al. 2014; Sulpizio et al. 2014).

To disambiguate these possibilities, a larger environment consisting of multiple vertical and horizontal sections should be tested. Suppose physical distance between the rooms is the main factor for neural dissimilarity. Then 2 rooms on the same floor that were 5 rooms apart (e.g., Rm101 and Rm106) would be more distinguishable than 2 rooms that are both vertically and horizontally adjacent (e.g., Rm101 and Rm202, Fig. 2A). If instead a change along both the vertical and horizontal axes always has a greater effect than a change along one axis, the diagonal rooms would be more distinguishable than horizontally or vertically aligned rooms regardless of distance. Use of a larger environment would also widen the scope for detecting subtle differences, if any, between the vertical and horizontal axes.
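The 2 accounts can be contrasted numerically for the example pairs above. This is a sketch under assumptions: room numbers are read as (floor, position) coordinates with unit spacing, and one floor is treated as the same distance as one room width. Both simplifications are hypothetical. The helper names are invented.

```python
import math

def room_coords(room):
    """Assumed scheme: 'Rm' + floor digit + two-digit position, unit spacing."""
    number = int(room[2:])
    return number // 100, number % 100

def euclidean_distance(a, b):
    """Physical-distance account: straight-line distance between room centers."""
    return math.dist(room_coords(a), room_coords(b))

def axes_changed(a, b):
    """Axis-change account: how many of (floor, position) differ."""
    return sum(x != y for x, y in zip(room_coords(a), room_coords(b)))

for pair in [("Rm101", "Rm106"), ("Rm101", "Rm202")]:
    print(pair, round(euclidean_distance(*pair), 2), axes_changed(*pair))
```

The distance account ranks Rm101/Rm106 (distance 5) as more dissimilar than Rm101/Rm202 (about 1.41). The axis-change account ranks them the other way round (1 axis vs. 2 axes).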

In addition to absolute physical distance, path or navigation distance is also a consideration. For example, a typical multilevel building like the one used in the current study has limited access points for moving between floors. People cannot move directly up to the room above through the ceiling. Instead, they have to use stairs or elevators, which are often sparsely located in the building. Thus, 2 rooms on top of each other are further apart in terms of actual navigation than 2 rooms side by side on the same floor. This holds even when absolute distances are identical, or when the vertical rooms are physically closer than the horizontal rooms. Representation of space in the hippocampus is not only influenced by absolute distance but also by path distance (Howard et al. 2014), and it has also been suggested that topology instead of physical geometry is encoded in hippocampal place cells (Dabaghian et al. 2014). It would be intriguing to systematically investigate the effect of physical and path distance, and the potential interaction with vertical/horizontal boundaries, in future studies.
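The dissociation between physical and path distance can be illustrated with a breadth-first search over a toy building graph. The layout is entirely hypothetical and is not the study's building. It has 2 floors with 3 rooms along a corridor, and floors can only be changed at a single stairwell (position 0) at the end of the corridor. The helper names are invented.

```python
from collections import deque
import math

# Hypothetical layout: nodes are (floor, position); position 0 is the stairwell.
FLOORS, ROOMS_PER_FLOOR = 2, 3

def neighbors(node):
    """Adjacent nodes: along the corridor, or between floors at the stairwell only."""
    floor, pos = node
    for step in (-1, 1):
        if 0 <= pos + step <= ROOMS_PER_FLOOR:
            yield floor, pos + step
    if pos == 0:
        for df in (-1, 1):
            if 1 <= floor + df <= FLOORS:
                yield floor + df, pos

def path_distance(start, goal):
    """Breadth-first search: minimum number of moves between two nodes."""
    frontier, seen = deque([(start, 0)]), {start}
    while frontier:
        node, dist = frontier.popleft()
        if node == goal:
            return dist
        for nxt in neighbors(node):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, dist + 1))
    return None

vertical, horizontal = ((1, 2), (2, 2)), ((1, 2), (1, 3))
for a, b in (vertical, horizontal):
    print(a, b, math.dist(a, b), path_distance(a, b))
```

Both pairs are 1 unit apart physically. However, the stacked pair needs 5 moves via the stairwell, whereas the side-by-side pair needs 1.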

In summary, here we presented novel evidence that a multicompartment 3D space was represented in a hierarchical manner in the human brain. Within-room corner information was encoded by the anterior lateral hippocampus, and room (within the building) information was encoded by RSC, parahippocampal cortex, and posterior hippocampus. Moreover, our behavioral and neural findings showed similar encoding of vertical and horizontal information, suggesting an isotropic representation of 3D space even in the context of multiple spatial compartments. Despite multilevel environments being common settings for much of human behavior, little is known about how they are represented in the brain. These findings therefore provide a much-needed starting point for understanding how the human brain supports a crucial and ubiquitous behavior: navigation in buildings with numerous levels and rooms.

Notes

Conflict of Interest: The authors declare no competing financial interests.

Supplementary Material

Supplementary material is available at Cerebral Cortex online.

Funding

Wellcome Trust (101759/Z/13/Z to E.A.M. and 203147/Z/16/Z to the Centre; 102263/Z/13/Z to M.K.) and a Samsung Scholarship (to M.K.).

References

- Aguirre GK. 2007. Continuous carry-over designs for fMRI. NeuroImage. 35:1480–1494.

- Alexander AS, Nitz DA. 2015. Retrosplenial cortex maps the conjunction of internal and external spaces. Nat Neurosci. 18:1143–1151.

- Auger SD, Mullally S, Maguire EA. 2012. Retrosplenial cortex codes for permanent landmarks. PLoS One. 7:e43620.

- Auger SD, Zeidman P, Maguire EA. 2015. A central role for the retrosplenial cortex in de novo environmental learning. eLife. 4:1–26.

- Bakker A, Kirwan CB, Miller M, Stark CEL. 2008. Pattern separation in the human hippocampal CA3 and dentate gyrus. Science. 319:1640–1642.

- Balaguer J, Spiers H, Hassabis D, Summerfield C. 2016. Neural mechanisms of hierarchical planning in a virtual subway network. Neuron. 90:893–903.

- Baumann O, Mattingley JB. 2010. Medial parietal cortex encodes perceived heading direction in humans. J Neurosci. 30:12897–12901.

- Baumann O, Mattingley JB. 2013. Dissociable representations of environmental size and complexity in the human hippocampus. J Neurosci. 33:10526–10533.

- Blessing EM, Beissner F, Schumann A, Brünner F, Bär K-J. 2016. A data-driven approach to mapping cortical and subcortical intrinsic functional connectivity along the longitudinal hippocampal axis. Hum Brain Mapp. 37:462–476.

- Brandt T, Dieterich M. 2013. “Right Door,” wrong floor: a canine deficiency in navigation. Hippocampus. 23:245–246.

- Brodt S, Pöhlchen D, Flanagin VL, Glasauer S, Gais S, Schönauer M. 2016. Rapid and independent memory formation in the parietal cortex. Proc Natl Acad Sci USA. 113:13251–13256.

- Büchner S, Hölscher C, Strube G. 2007. Path choice heuristics for navigation related to mental representations of a building. In: Vosniadou S, Kayser D, Protopapas A, editors. Proceedings of the European cognitive science conference. Delphi, Greece: Taylor and Francis; p. 504–509.

- Burt de Perera T, Holbrook RI, Davis V. 2016. The representation of three-dimensional space in fish. Front Behav Neurosci. 10:40.

- Cho J, Sharp PE. 2001. Head direction, place, and movement correlates for cells in the rat retrosplenial cortex. Behav Neurosci. 115:3–25.

- Chrastil ER, Sherrill KR, Hasselmo ME, Stern CE. 2015. There and back again: hippocampus and retrosplenial cortex track homing distance during human path integration. J Neurosci. 35:15442–15452.

- Dabaghian Y, Brandt VL, Frank LM. 2014. Reconceiving the hippocampal map as a topological template. eLife. 3:e03476.

- Derdikman D, Whitlock JR, Tsao A, Fyhn M, Hafting T, Moser M-B, Moser EI. 2009. Fragmentation of grid cell maps in a multicompartment environment. Nat Neurosci. 12:1325–1332.

- Epstein RA, Parker WE, Feiler AM. 2007. Where am I now? Distinct roles for parahippocampal and retrosplenial cortices in place recognition. J Neurosci. 27:6141–6149.

- Evensmoen HR, Ladstein J, Hansen TI, Møller JA, Witter MP, Nadel L, Håberg AK. 2015. From details to large scale: the representation of environmental positions follows a granularity gradient along the human hippocampal and entorhinal anterior-posterior axis. Hippocampus. 25:119–135.

- Finkelstein A, Derdikman D, Rubin A, Foerster JN, Las L, Ulanovsky N. 2014. Three-dimensional head-direction coding in the bat brain. Nature. 517:159–164.

- Flores-Abreu IN, Hurly TA, Ainge JA, Healy SD. 2014. Three-dimensional space: locomotory style explains memory differences in rats and hummingbirds. Proc R Soc B Biol Sci. 281:20140301.

- Grobéty MC, Schenk F. 1992. Spatial learning in a three-dimensional maze. Anim Behav. 43:1011–1020.

- Han X, Becker S. 2014. One spatial map or many? Spatial coding of connected environments. J Exp Psychol Learn Mem Cogn. 40:511–531.

- Hayman R, Verriotis MA, Jovalekic A, Fenton AA, Jeffery KJ. 2011. Anisotropic encoding of three-dimensional space by place cells and grid cells. Nat Neurosci. 14:1182–1188.

- Hirtle SC, Jonides J. 1985. Evidence of hierarchies in cognitive maps. Mem Cognit. 13:208–217.

- Hodgetts CJ, Postans M, Shine JP, Jones DK, Lawrence AD, Graham KS. 2015. Dissociable roles of the inferior longitudinal fasciculus and fornix in face and place perception. eLife. 4:1–25.

- Howard LR, Javadi AH, Yu Y, Mill RD, Morrison LC, Knight R, Loftus MM, Staskute L, Spiers HJ. 2014. The hippocampus and entorhinal cortex encode the path and Euclidean distances to goals during navigation. Curr Biol. 24:1331–1340.

- Hsieh L-T, Gruber MJ, Jenkins LJ, Ranganath C. 2014. Hippocampal activity patterns carry information about objects in temporal context. Neuron. 81:1165–1178.

- Jacob P-Y, Casali G, Spieser L, Page H, Overington D, Jeffery K. 2016. An independent, landmark-dominated head-direction signal in dysgranular retrosplenial cortex. Nat Neurosci. 20:173–175.

- Jeffery KJ, Jovalekic A, Verriotis M, Hayman R. 2013. Navigating in a three-dimensional world. Behav Brain Sci. 36:523–543.

- Jeffery KJ, Wilson JJ, Casali G, Hayman RM. 2015. Neural encoding of large-scale three-dimensional space—properties and constraints. Front Psychol. 6:1–12.

- Jovalekic A, Hayman R, Becares N, Reid H, Thomas G, Wilson J, Jeffery K. 2011. Horizontal biases in rats’ use of three-dimensional space. Behav Brain Res. 222:279–288.

- Kahn I, Andrews-Hanna JR, Vincent JL, Snyder AZ, Buckner RL. 2008. Distinct cortical anatomy linked to subregions of the medial temporal lobe revealed by intrinsic functional connectivity. J Neurophysiol. 100:129–139.

- Keinath AT, Wang ME, Wann EG, Yuan RK, Dudman JT, Muzzio IA. 2014. Precise spatial coding is preserved along the longitudinal hippocampal axis. Hippocampus. 24:1533–1548.

- Kim M, Jeffery KJ, Maguire EA. 2017. Multivoxel pattern analysis reveals 3D place information in the human hippocampus. J Neurosci. 37:2703–2716.

- Kjelstrup KB, Solstad T, Brun VH, Hafting T, Leutgeb S, Witter MP, Moser EI, Moser M-B. 2008. Finite scale of spatial representation in the hippocampus. Science. 321:140–143.

- Kobayashi Y, Amaral DG. 2003. Macaque monkey retrosplenial cortex: II. Cortical afferents. J Comp Neurol. 466:48–79.

- Marchette SA, Ryan J, Epstein RA. 2017. Schematic representations of local environmental space guide goal-directed navigation. Cognition. 158:68–80.

- Marchette SA, Vass LK, Ryan J, Epstein RA. 2014. Anchoring the neural compass: coding of local spatial reference frames in human medial parietal lobe. Nat Neurosci. 17:1598–1606.

- McNamara TP, Hardy JK, Hirtle S. 1989. Subjective hierarchies in spatial memory. J Exp Psychol Learn Mem Cogn. 15:211–227.

- Montello D, Pick H. 1993. Integrating knowledge of vertically aligned large-scale spaces. Environ Behav. 25:457–484.

- Morey RD. 2008. Confidence intervals from normalized data: a correction to Cousineau (2005). Tutor Quant Methods Psychol. 4:61–64.

- Nonyane BAS, Theobald CM. 2007. Design sequences for sensory studies: achieving balance for carry-over and position effects. Br J Math Stat Psychol. 60:339–349.

- Park S, Chun MM. 2009. Different roles of the parahippocampal place area (PPA) and retrosplenial cortex (RSC) in panoramic scene perception. NeuroImage. 47:1747–1756.

- Poppenk J, Evensmoen HR, Moscovitch M, Nadel L. 2013. Long-axis specialization of the human hippocampus. Trends Cogn Sci. 17:230–240.

- Schapiro AC, Turk-Browne NB, Norman KA, Botvinick MM. 2016. Statistical learning of temporal community structure in the hippocampus. Hippocampus. 26:3–8.

- Shine JP, Valdés-Herrera JP, Hegarty M, Wolbers T. 2016. The human retrosplenial cortex and thalamus code head direction in a global reference frame. J Neurosci. 36:6371–6381.

- Spiers HJ, Hayman RMA, Jovalekic A, Marozzi E, Jeffery KJ. 2015. Place field repetition and purely local remapping in a multicompartment environment. Cereb Cortex. 25:10–25.

- Strange BA, Witter MP, Lein ES, Moser EI. 2014. Functional organization of the hippocampal longitudinal axis. Nat Rev Neurosci. 15:655–669.

- Sulpizio V, Committeri G, Galati G. 2014. Distributed cognitive maps reflecting real distances between places and views in the human brain. Front Hum Neurosci. 8:716.

- Thibault G, Pasqualotto A, Vidal M, Droulez J, Berthoz A. 2013. How does horizontal and vertical navigation influence spatial memory of multifloored environments? Atten Percept Psychophys. 75:10–15.

- Tse D, Langston RF, Kakeyama M, Bethus I, Spooner PA, Wood ER, Witter MP, Morris RGM. 2007. Schemas and memory consolidation. Science. 316:76–82.

- Vann SD, Aggleton JP, Maguire EA. 2009. What does the retrosplenial cortex do? Nat Rev Neurosci. 10:792–802.

- Vass LK, Epstein RA. 2013. Abstract representations of location and facing direction in the human brain. J Neurosci. 33:6133–6142.

- Weiskopf N, Hutton C, Josephs O, Deichmann R. 2006. Optimal EPI parameters for reduction of susceptibility-induced BOLD sensitivity losses: a whole-brain analysis at 3 T and 1.5 T. NeuroImage. 33:493–504.

- Yartsev MM, Ulanovsky N. 2013. Representation of three-dimensional space in the hippocampus of flying bats. Science. 340:367–372.

- Zeidman P, Maguire EA. 2016. Anterior hippocampus: the anatomy of perception, imagination and episodic memory. Nat Rev Neurosci. 17:173–182.

- Zwergal A, Schöberl F, Xiong G, Pradhan C, Covic A, Werner P, Trapp C, Bartenstein P, la Fougère C, Jahn K, et al. 2016. Anisotropy of human horizontal and vertical navigation in real space: behavioral and PET correlates. Cereb Cortex. 26:4392–4404.
