Abstract

A fundamental question for the field of healthcare improvement is the extent to which the results achieved can be attributed to the changes that were implemented and whether or not these changes are generalizable. Answering these questions is particularly challenging because the healthcare context is complex, and the interventions themselves tend to be complex and multi-dimensional. The Salzburg Global Seminar Session 565, ‘Better Health Care: How do we learn about improvement?’, was convened to address questions of attribution, generalizability and rigor, and to think through how to approach these concerns in the field of quality improvement. The session brought together 61 leaders in improvement from 22 countries, including researchers, evaluators and improvers. The primary conclusion of the session was that evaluation needs to be embedded as an integral part of improvement. We invited participants of the seminar to contribute to this supplement, which consists of eight articles reflecting insights and learning from the Salzburg Global Seminar. This editorial serves as an introduction to the supplement.

Keywords: improvement, learning, complex adaptive systems, implementation, delivery

Background

A fundamental question for the field of healthcare improvement is the extent to which the results achieved truly can be attributed to the changes that were implemented. Attribution involves determining whether, and to what extent, an action can be said to be caused by a certain person or thing. In the case of attribution in improvement, improvers and evaluators seek to understand both internal and external attribution [1]. On one hand, improvers and evaluators want to know whether, and to what extent, a change in healthcare outcomes results from the changes that were implemented. On the other hand, improvers and evaluators must also determine whether, and to what extent, factors other than the improvement intervention could have resulted in changes in healthcare outcomes. Even if attribution seems convincing, it must still be demonstrated whether or not the interventions are generalizable and ready for broader implementation and dissemination. Answering these questions is particularly challenging because the healthcare context is complex, and the changes themselves tend to be complex and multi-dimensional [2].

Improvement science was developed in industry, with a focus on improving processes in manufacturing and services [3, 4]. Not surprisingly, in applying improvement science to healthcare, initial efforts focused on improving administrative processes, such as reducing patient wait times and reducing the number of lost patient files and laboratory specimens [5, 6]. Over the years, improvement initiatives evolved to address clinical care processes and the health system as a whole, in order to test and implement changes that may yield better health outputs and outcomes. Given the complexity of healthcare systems, there are parallels between contemporary healthcare improvement and the increasing emphasis on complex adaptive and dynamic systems in industry.

Health systems consist of inputs, processes and outputs, with the outputs dependent on the inputs and processes in the system. While inputs, or resources, are required in all systems, quality improvement focuses on how these inputs are used in the system’s processes. Processes are the series or sequence of steps used in a system to transform inputs into outputs and outcomes. Without changes to either the inputs or processes within a system, the outputs and outcomes produced by the system will remain the same [7]. Quality improvement in healthcare focuses on analysing processes of care delivery and making changes to these processes to yield improved outputs and outcomes. When implementing changes to processes of care, the changes are tested and adapted, as necessary, based on the results achieved.

In testing and implementing changes in healthcare, the emphasis has been on collecting and analysing time-series data. These data are preferably collected in real time, in order to guide improvers in making necessary adaptations in their implementation efforts. Time-series methods, including statistical process control and interrupted time-series analysis, can be used to determine whether results obtained are due to chance, or random variation in system performance [2]. The application of these methods has become ubiquitous in rigorous improvement work, and appropriate analytic methods are embedded in widely available data analysis software packages.
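As an illustration of the statistical process control idea mentioned above, the following minimal sketch computes the limits of an individuals (XmR) control chart from a baseline period and flags later points that fall outside them. It is not taken from the Seminar materials: the function names and the weekly wait-time figures are hypothetical and chosen only for illustration, and the single rule used here (a point beyond the limits) is the simplest of several rules commonly applied in practice.

```python
from statistics import mean


def xmr_limits(baseline):
    """Return (centre, lower, upper) limits for an individuals (XmR) chart."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline points")
    centre = mean(baseline)
    # Moving range: absolute difference between consecutive observations.
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    mr_bar = mean(moving_ranges)
    # 2.66 is the standard XmR constant (3 / 1.128, where 1.128 is d2 for n = 2).
    half_width = 2.66 * mr_bar
    return centre, centre - half_width, centre + half_width


def special_cause_points(values, lower, upper):
    """Return the indices of values that fall outside the control limits."""
    return [i for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical weekly median wait times in minutes (illustrative numbers only).
baseline = [42, 45, 40, 44, 43, 41, 46, 44]
after_change = [39, 36, 33, 31, 30, 32]

centre, lower, upper = xmr_limits(baseline)
signals = special_cause_points(after_change, lower, upper)
print(f"centre={centre:.2f}, limits=({lower:.2f}, {upper:.2f}), signals={signals}")
```

Points outside the limits suggest that the later values are unlikely to reflect random variation in the baseline system alone. On its own, such a signal does not show that the tested change caused the shift.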

Unfortunately, the vast majority of improvement initiatives using time-series methods have not included controls or comparison groups. Although there are practical barriers to performing improvement projects with appropriate comparators, the absence of a counterfactual (what would have happened over time if the intervention had not occurred) has raised reasonable concerns. These concerns centre on whether the results claimed might be attributable to other factors or secular trends rather than to the improvement interventions themselves.

The field is at a stage where we must now improve our understanding of how we learn about the changes we test and implement. This means that we need to better understand whether or not the results being realized are related to the interventions we are testing and implementing. If so, we must also understand to what extent, how and why they worked, and whether the changes are generalizable or specific to that context. The answers to these questions are not straightforward. The purpose of the Salzburg Global Seminar Session 565 was to address these questions and to think through how to approach these concerns emerging in the field of quality improvement.

Introduction

Introduction to the Supplement

This supplement describes the results and conclusions reached after deliberations at the Salzburg Global Seminar Session 565 on ‘Better Health Care: How do we learn about improvement?’. The Seminar was designed to move away from simply debating and critiquing the rigor, attribution and generalizability of improvement, and towards producing actionable knowledge on these topics. In particular, the meetings focused on developing practical guidance on how to rigorously design, implement and evaluate improvement interventions, in service of enhancing the credibility of the results and supporting ongoing learning and improvement [4, 8].

Introduction to the Editorial

This editorial describes how the session was planned and convened, and summarizes some of the conclusions and actions that resulted from it. We highlight the cross-cutting issues and insights that were identified throughout the session and that are detailed in the supplement articles.

Articles included in this supplement

In addition to this editorial, the supplement is divided into the following papers, each addressing a key component of these discussions:

‘Quality Improvement and Emerging Global Health Priorities’ discusses the importance of improvement in addressing the United Nations Sustainable Development Goals (SDGs), achieving Universal Health Coverage and combatting global health threats.

‘A Framework for Learning about Improvement: Embedded Implementation and Evaluation Design to Optimize Learning’ presents and describes a framework and continuum developed by participants of the Salzburg Global Seminar Session 565 to better design and evaluate improvement. This paper concludes with a call for embedded evaluation and improvement—a key conclusion developed by session participants.

‘Unpacking the Black Box of Improvement’ discusses the importance of understanding what actually happens during an improvement intervention and what factors contributed to the success or failure of an improvement intervention. This paper highlights and analyses case studies discussed during the Salzburg Global Seminar Session 565 to ‘unpack’ the black box of improvement.

‘Adapting Improvements to Context: When, Why and How?’ analyses the role of context in contributing to the success or failure of improvement interventions. This paper discusses how, when and why improvement interventions can be adapted to work successfully within a given context.

‘Research versus Practice in Quality Improvement? Understanding How We Can Bridge the Gap’ uncovers the gap between researchers, evaluators and improvers in the field of improvement and how this gap can be bridged.

‘Practical Recommendations for the Evaluation of Improvement Initiatives’ summarizes the recommendations from the Salzburg Global Seminar Session 565. In particular, this paper discusses how embedded evaluation and improvement can be applied without compromising the integrity of the research and evaluation of the improvement.

‘Learning About Improvement to Address Global Health and Health care Challenges—Lessons and the Future’ is a high-level reflection paper on the Salzburg Global Seminar Session 565 as a whole. This paper emphasizes the value of the thinking and insights from the session and their role in the future of global health.

Salzburg Global Seminar Session 565 Planning and Convening

Preparation for the Salzburg Global Seminar Session 565 required much planning, organizing and convening ahead of the session itself. Planning for the session took place over the course of 2 years, during which the session organizers reached out to leaders in the field of improvement to identify and clarify the key issues to be addressed during the session, as well as the session structure and agenda. In the planning process, the session organizers reviewed the literature on the topic of learning about improvement, which involved a review of over 100 articles. Individuals were identified to serve as faculty for the session and an organizational group was formed to manage the logistics for the Seminar. Participant selection criteria were also developed, which included discussion of how participant attendance would be funded. Individuals known to the faculty were invited as potential participants. Other individuals were invited to apply for participation, with selection criteria in place.

Salzburg Global Seminar Session 565 was attended by 61 leaders from 22 countries, with the range of backgrounds needed to address the issue at hand. These included improvers, researchers, knowledge management experts, global health experts, funders and experts in complex adaptive systems. The 5-day session was held in Salzburg, Austria from 10 to 14 July 2016. In preparation for the Seminar, a framing paper, ‘How do we learn about improving health care: a call for a new epistemological paradigm’, was published in the ‘ISQua Journal for Quality in Health care’ [3].

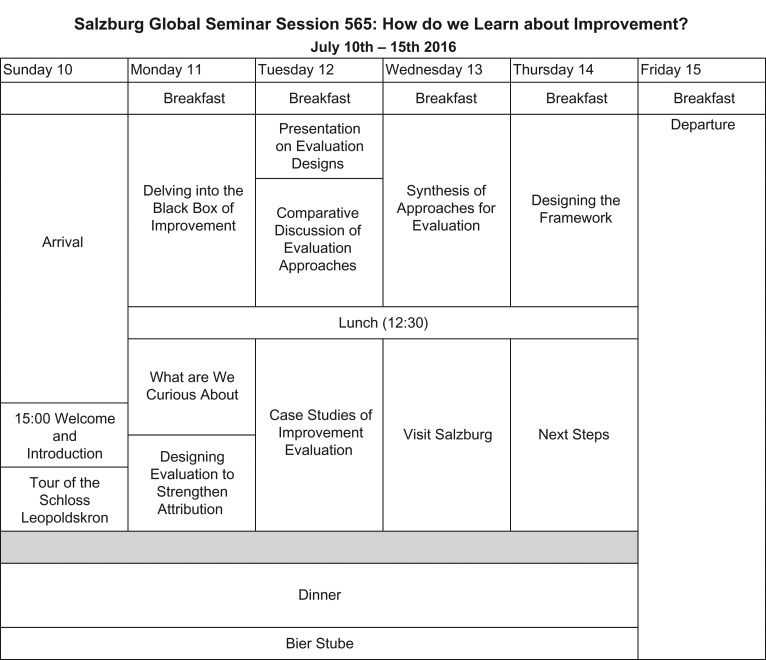

As shown in Fig. 1, sessions were structured for discussions across each of the 4 days, starting with an exploration of the iterative, adaptive nature of improvement. The discussion then moved to an exploration of what we are curious to learn as we improve healthcare, and of research and evaluation methods and their application to learning about improvement. Towards the end of the Seminar, participants developed a framework on how we learn about improving healthcare. The session structure enabled continuous participant interaction through case study exercises and group discussion.

Figure 1. Agenda.

The faculty met every evening to review progress and adjust the agenda accordingly. The purpose was to arrive at a way forward on design, research and evaluation that takes into account the complex adaptive nature of improvement. Embedded evaluation, which is discussed in depth throughout the supplement, became a cross-cutting theme of the Seminar as participants recognized the importance of increasing collaboration between evaluators and improvers. By the conclusion of these discussions, we aimed to have developed a framework to help guide our thinking on learning about improvement.

Results and insights from the Salzburg Global Seminar Session 565

The discussions started with identifying research designs and evaluation methods that would optimize learning from different types of improvement initiatives. Participants were interested in how we can learn more deeply and efficiently from improvement. The original agenda was adjusted daily to enhance discussion, which moved away from the question of which evaluation methods were better suited to which purposes and towards how evaluation and research could be designed as an integral, ongoing part of implementation. During the session, we concluded that more iterative collaboration and communication between implementers and evaluators could lead to rigorous adaptive designs. This was based on the assumption that adaptive designs have a better chance of reaching program aims while still allowing evaluation of the attributable effect of the interventions and their generalizability.

Consensus was reached that such ‘embedded’ evaluation could permit disciplined, timely modifications in implementation approaches and activities while preserving independent evaluation of outcomes. At the conclusion of the meeting, initial steps were taken to establish a community of practice. The community of practice will allow participants to continue working together in a way that enhances learning in the field of improvement.

Conclusions

Reflective of its title, Salzburg Global Seminar Session 565 challenged participants to answer the difficult and complex question of ‘How do we learn about improvement?’. The session quickly revealed that to find solutions to these issues, implementers, evaluators and researchers must work together to better learn about improvement activities. This is in contrast to the current situation, in which evaluators too often work independently of, rather than collaboratively with, improvement program designers and implementers. Many agreed that this traditional model, with its silos of design, implementation and evaluation, limits learning and often leads to less flexible and less rigorous quality improvement initiatives.

When evaluation occurs only after an improvement intervention has been implemented, learning is lost. Only by conducting evaluation while an improvement intervention is occurring can this learning be harvested to understand what factors are contributing to the results of the intervention. Embedding evaluation in improvement design also allows evaluation to inform the improvement intervention as it is occurring, thereby yielding improved results based on evaluator recommendations. Embedded improvement and evaluation require increased flexibility in improvement and evaluation designs to promote collaboration, learning and feedback, while maintaining rigor.

A strong consensus emerged during the Seminar that there is a separation between implementation and evaluation. This separation is primarily due to concerns about insufficient credible evidence regarding the effectiveness of interventions. In essence, participants concluded that the principal accomplishment of the Seminar was to ‘marry’ the worlds of improvement and evaluation to bridge these gaps. A ‘wedding ceremony’ between rigorous implementation and insightful evaluation concluded the Seminar in the inspiring environment of the Schloss Leopoldskron and its magical surroundings, where the ‘Sound of Music’ was filmed.

References

- 1. Portela MC, Pronovost PJ, Woodcock T et al. How to study improvement interventions: a brief overview of possible study types. Br Med J Qual Saf 2015;24:325–36.

- 2. Davidoff F. Improvement interventions are social treatments, not pills. Ann Intern Med 2014;161:526–7. doi:10.7326/M14-1789.

- 3. Massoud MR, Barry D, Murphy A et al. How do we learn about improving health care: a call for a new epistemological paradigm. Int J Qual Health Care 2016;28:420–4. doi:10.1093/intqhc/mzw039.

- 4. Chowfla A, Massoud RM, Dixon N et al. Session Report 565 Better Health Care: How do we learn about improvement? Salzburg Global Seminar. 2016. www.SalzburgGlobal.org/go/565 (November 2017, date last accessed).

- 5. Nolan T, Schall M, Berwick DM, Roessner J. Reducing Delays and Waiting Times Throughout the Healthcare System: Breakthrough Series Guide. Boston, Massachusetts, USA: Institute for Healthcare Improvement, 1996.

- 6. Batalden PB, Stoltz PK. A framework for the continual improvement of health care: building and applying professional and improvement knowledge to test changes in daily work. Jt Comm J Qual Improv 1993;19:424–47.

- 7. Juran JM, Gryna FM. Juran’s Quality Handbook. New York: McGraw-Hill, 1988.

- 8. AcademyHealth. Evaluating Complex Health Interventions: a guide to rigorous research designs. 2017. http://www.academyhealth.org/evaluationguide (November 2017, date last accessed).