Abstract

Objective. To determine whether the new 2016 version of the North American Pharmacist Licensure Examination (NAPLEX) affected scores when controlling for student performance on other measures, using data from one institution.

Methods. There were 201 usable records from the classes of 2014-2016. Doubly robust estimation using propensity score weighting was used to compare NAPLEX scaled scores and pass rates while accounting for student performance on other measures. The potential confounders of student performance were scaled scores from the Pharmacy Curricular Outcomes Assessment (PCOA), scaled composite scores from the Pharmacy College Admission Test (PCAT), and P3 grade point average (GPA). Only PCOA and P3 GPA were found to be appropriate for propensity scoring.

Results. The weighted NAPLEX scaled scores did not drop significantly from the old (2014-2015) to the new (2016) version of the NAPLEX. The change in pass rates between the old and new versions of the NAPLEX was also non-significant.

Conclusion. Using data from one institution, the new version of the NAPLEX did not itself have a significant effect on NAPLEX scores or first-time pass rates when controlling for student performance on other measures. Colleges are encouraged to repeat this analysis with pooled data and larger sample sizes.

Keywords: pharmacy licensure, logistic models, pass rates, NAPLEX, assessment

INTRODUCTION

In May 2015, the executive committee of the National Association of Boards of Pharmacy (NABP) approved a revised passing standard for licensure of pharmacists in the United States.1 This revision went into effect in November 2015 and included new competency statements for the North American Pharmacist Licensure Examination (NAPLEX).1 In November 2016, the NAPLEX transitioned to a new administration model in which the exam became longer and contained more test questions.2 All of these changes were part of NABP’s process to ensure that the NAPLEX adequately tests the knowledge, skills, and abilities of entry-level pharmacists.2

In 2016, there were 14,190 graduates from accredited pharmacy schools who attempted the NAPLEX for the first time. There were 404 fewer graduates in 2015 (13,786) and 821 fewer in 2014 (13,369).3 Of the 13,786 graduates in 2015, all but 206 (1.5%) took the exam before the new competencies went into effect. Likewise, it is assumed that most of the 2016 graduates took the NAPLEX under the new competencies but before the switch to the longer exam.

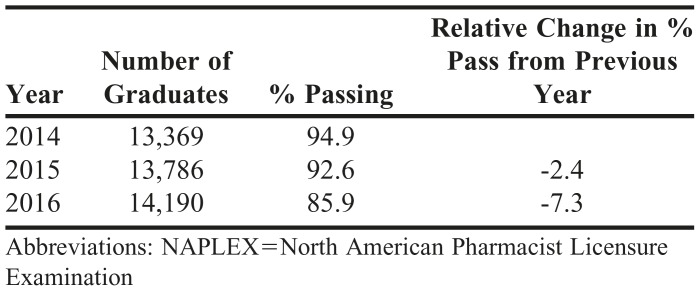

The NAPLEX is scored using item response theory and a mathematically based weighted scoring model with a range of 0 to 150. The minimum passing scaled score is 75 and does not reflect a percentage of correct answers.4 The pass rates for the NAPLEX over the previous three years can be found in Table 1. In 2016, nationwide pass rates were lower than in the previous two years. One possible explanation for the drop in pass rates is that the level of student performance has declined over the last three years. From matriculating year 2010 to 2012, the average school of pharmacy saw a 12.95% reduction in the number of applicants to its program.5 Another explanation is that the scoring model on the NAPLEX has changed and the exam is being graded with higher difficulty. The aim of this study is to use data from one institution to determine whether the new 2016 version of the NAPLEX affected scores when controlling for variables related to student performance. This study was approved by the East Tennessee State University Institutional Review Board.

Table 1. NAPLEX Pass Rates for 2014-2016 (first-time attempts)

METHODS

A dataset was created of NAPLEX first-time scaled scores for three cohorts (classes of 2014-2016). The data included matriculation year and potential confounders of student performance, including scaled scores from the Pharmacy Curricular Outcomes Assessment (PCOA), scaled composite scores from the Pharmacy College Admission Test (PCAT), and P3 Grade Point Average (GPA). The dataset contained 201 usable records out of 243 (161 from the old and 82 from the new NAPLEX version). Doubly robust estimation was used to determine whether the decline in NAPLEX performance observed at East Tennessee State University in 2016 could have been explained by the confounding effect of a decline in student performance-related variables, rather than by a change in the NAPLEX.6

Doubly robust estimation involves four steps: analysis of the unweighted data, selection of confounders, evaluation of confounder balance, and analysis of the treatment effect. In step 1, analysis of the unweighted data, performance on the new and old NAPLEX versions was compared to determine whether there was a significant change in 2016. In step 2, selection of potential confounders, variables of student performance (PCOA, PCAT, P3 GPA) were evaluated to determine whether they changed in 2016. If a student performance variable differed between graduates taking the new and old NAPLEX versions, it was considered a confounder and used to calculate the propensity scores. If a variable did not change, it was not considered a confounder for this doubly robust estimation. The data were then weighted by the propensity scores to minimize the effects of the confounders. In step 3, evaluation of confounder balance, the means of the confounders were compared between the graduates taking the new and old NAPLEX versions using the propensity score weighted data. If the means of the confounders did not differ significantly between the new and old versions, this would indicate that the propensity score weighting successfully controlled for the confounders. In other words, weighting caused the graduates who had taken the old and new NAPLEX versions to look similar in terms of the PCAT, PCOA, and P3 GPA.

Finally, in step 4, analysis of the treatment effect, the confounders and NAPLEX version were used to predict NAPLEX performance using the weighted data. Including the confounders in the analysis helped to capture any residual biasing effect that was not addressed by the propensity score weighting. This final step answered whether the change in NAPLEX performance observed in 2016 could have been explained by changes in the confounders (PCAT, PCOA, and P3 GPA), rather than by a change in the NAPLEX itself.
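The four steps described above can be sketched in code. The following is a minimal illustration on synthetic data, not the authors' analysis: the confounders are simulated as standardized values, the exam version is given no true effect on the score, and inverse probability of treatment weights are assumed because the weighting scheme is not specified in the text. All function names (`simulate`, `fit_logistic`, `weighted_least_squares`, `doubly_robust`) are hypothetical.

```python
import math
import random


def sigmoid(x):
    return 1 / (1 + math.exp(-x))


def solve(A, b):
    """Solve the linear system A x = b by Gauss-Jordan elimination."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        pivot = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[pivot] = M[pivot], M[c]
        for r in range(n):
            if r != c:
                f = M[r][c] / M[c][c]
                M[r] = [x - f * y for x, y in zip(M[r], M[c])]
    return [M[i][n] / M[i][i] for i in range(n)]


def weighted_least_squares(X, y, w):
    """Return beta solving (X'WX) beta = X'Wy."""
    k = len(X[0])
    A = [[sum(wi * r[a] * r[b] for r, wi in zip(X, w)) for b in range(k)]
         for a in range(k)]
    c = [sum(wi * r[a] * yi for r, yi, wi in zip(X, y, w)) for a in range(k)]
    return solve(A, c)


def fit_logistic(X, t, iterations=25):
    """Fit logistic regression coefficients by Newton-Raphson."""
    k = len(X[0])
    beta = [0.0] * k
    for _ in range(iterations):
        p = [sigmoid(sum(b * x for b, x in zip(beta, r))) for r in X]
        grad = [sum((ti - pi) * r[a] for r, ti, pi in zip(X, t, p))
                for a in range(k)]
        hess = [[sum(pi * (1 - pi) * r[a] * r[b] for r, pi in zip(X, p))
                 for b in range(k)] for a in range(k)]
        beta = [b + s for b, s in zip(beta, solve(hess, grad))]
    return beta


def simulate(n=2000, seed=1):
    """Synthetic cohort: weaker students are more likely to sit the new exam."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        pcoa, gpa = rng.gauss(0, 1), rng.gauss(0, 1)  # standardized confounders
        new = 1 if rng.random() < sigmoid(-0.5 - 0.7 * pcoa - 0.7 * gpa) else 0
        # Assumption: the exam version has NO true effect on the score.
        score = 100 + 6 * pcoa + 7 * gpa + rng.gauss(0, 8)
        rows.append((new, pcoa, gpa, score))
    return rows


def doubly_robust(rows):
    t = [r[0] for r in rows]
    y = [r[3] for r in rows]
    ones = [1.0] * len(rows)

    def gap(values, weights):
        """Weighted mean of `values` in the new group minus the old group."""
        new = sum(w * v for w, v, ti in zip(weights, values, t) if ti)
        new /= sum(w for w, ti in zip(weights, t) if ti)
        old = sum(w * v for w, v, ti in zip(weights, values, t) if not ti)
        old /= sum(w for w, ti in zip(weights, t) if not ti)
        return new - old

    # Step 1: unweighted comparison of scores (new minus old).
    naive = gap(y, ones)
    # Step 2: propensity of sitting the new version, then inverse weights.
    Xp = [[1.0, r[1], r[2]] for r in rows]
    beta = fit_logistic(Xp, t)
    ps = [sigmoid(sum(b * x for b, x in zip(beta, r))) for r in Xp]
    w = [1 / p if ti else 1 / (1 - p) for p, ti in zip(ps, t)]
    # Step 3: confounder balance before and after weighting.
    pcoa = [r[1] for r in rows]
    balance = (gap(pcoa, ones), gap(pcoa, w))
    # Step 4: weighted regression of score on version plus confounders.
    Xo = [[1.0, r[0], r[1], r[2]] for r in rows]
    effect = weighted_least_squares(Xo, y, w)[1]
    return {"naive": naive, "balance": balance, "effect": effect}


result = doubly_robust(simulate())
print(result)
```

In this simulation the unweighted score gap is clearly negative even though the version has no effect, while the coefficient on version from the weighted regression is close to zero. That mirrors the logic of the analysis: an apparent drop can be produced by differences in the confounders alone.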

RESULTS

Without controlling for the student performance measures, a two-tailed independent samples t-test on the unweighted data revealed that students scored lower on the new version of the NAPLEX, mean (SD) 97.1 (17.7), than on the old version, 103.3 (13.6), an overall scaled score reduction of 6.2 (95% CI [-10.7 to -1.8]), p=.006. Pass rates, however, did not differ significantly in the logistic regression model with NAPLEX version as the predictor variable, p=.072. The non-significant result of the logistic model was likely a function of relatively high pass rates and a relatively small dataset. Although the effect of NAPLEX version was not statistically significant, it was a concern for the school, as first-time pass rates declined from 93.4% in 2015 to 87.8% in 2016.

Student performance measures were defined by standardized and un-standardized assessments. The potential confounders included PCAT, PCOA, and P3 GPA. The PCAT was not used as a confounder in later analyses because PCAT scores did not differ significantly between the old, 413.2 (SD 10.1), and new, 412.6 (10.0), versions of the NAPLEX, p=.62. As such, it was unlikely to have a biasing effect. The difference in PCOA scores between the old, 394.6 (50.1), and new, 382.5 (43.7), versions, while not statistically significant (p=.077), was large enough that PCOA was included as a confounder. P3 GPA differed significantly between the old, 3.69 (0.23), and new, 3.57 (0.35), versions, p=.001, and was subsequently included as a confounder. P3 GPA is an un-standardized covariate compared to PCOA. Theoretically, a 3.0 GPA three years ago may not be the same thing as a 3.0 GPA today. However, the significant difference in GPA by NAPLEX version indicates that it could be an important confounder; thus, it was included as a covariate.

Confounder balancing is important to ensure that the propensity score weighting made the two conditions (new vs old NAPLEX) more similar in terms of the chosen confounders (PCOA and P3 GPA). A comparison of confounder means and effect sizes indicated that propensity score weighting had effectively balanced the data in terms of the confounders. Mean PCOA scores for the new and old NAPLEX were much closer in the weighted data (382.5 vs 388.5, respectively) than in the unweighted data (382.5 vs 394.6). This was also reflected by a reduction in PCOA's standardized effect size from the unweighted (d=.28) to the weighted (d=.14) data. P3 GPA was similarly balanced. In the weighted data, the old and new version P3 GPAs were more similar (3.61 vs 3.57) than in the unweighted data (3.69 vs 3.57), and the effect size was smaller in the weighted data (d=.094) than in the unweighted data (d=.35).
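To make the standardized effect size concrete, the sketch below computes Cohen's d from two group means and standard deviations using an equal-weight pooled SD. This is one common convention and an assumption here: the text does not state which pooling formula or group sizes were used, so exact agreement with the reported d=.28 should not be expected. The function name `standardized_difference` is hypothetical.

```python
import math


def standardized_difference(mean_a, sd_a, mean_b, sd_b):
    """Cohen's d with an equal-weight pooled SD (one common convention)."""
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd


# Unweighted PCOA summary statistics quoted in the text (old vs new version).
d_pcoa = standardized_difference(394.6, 50.1, 382.5, 43.7)
print(round(d_pcoa, 2))  # 0.26
```

The same function applied to weighted means and SDs would give the post-weighting effect size, which is how a balance check compares the two conditions before and after weighting.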

To conduct doubly robust estimation, multiple regression was performed on the propensity score weighted data, where PCOA and P3 GPA were included in the model with NAPLEX version (new vs old) to adjust for any imbalance missed by the weighting. Both PCOA (β=.13, p<.001) and P3 GPA (β=26.4, p<.001) significantly predicted NAPLEX scores. The NAPLEX version, however, did not predict NAPLEX scores (β=1.97, p=.22). The weighted NAPLEX scores differed by 2.0 points, 100.7 vs 98.7 (95% CI [-5.1 to 1.2]). A similar pattern was observed for NAPLEX pass rates (though the difference in pass rates by version was not significant even with the unweighted data). Both PCOA (β=.028, p<.001) and P3 GPA (β=3.24, p=.001) predicted pass rates in a logistic model, but NAPLEX version did not (β=.62, OR=0.53, 95% CI [0.12 to 2.46], p=.42).
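The logistic regression results can be read on two scales: the coefficient is on the log-odds scale and the odds ratio is its exponential. The sketch below shows that relationship and recovers an approximate Wald standard error and p-value from the reported confidence interval. It assumes the coefficient for NAPLEX version is negative (an odds ratio below 1 implies a negative log-odds coefficient) and that the interval is a normal-theory 95% interval. The function name `wald_from_or_ci` is hypothetical.

```python
import math


def wald_from_or_ci(log_odds, ci_low, ci_high, z_crit=1.96):
    """Recover SE, z, and two-sided p from a coefficient and its OR 95% CI."""
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z_crit)
    z = log_odds / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return se, z, p


beta = -0.62  # assumed sign: an OR below 1 implies a negative coefficient
odds_ratio = math.exp(beta)
se, z, p = wald_from_or_ci(beta, 0.12, 2.46)
print(round(odds_ratio, 2), round(p, 2))  # 0.54 0.42
```

Under these assumptions the recovered p-value matches the reported p=.42, and the odds ratio agrees with the reported 0.53 to within rounding.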

There were 42 (17.3%) records that were not used in this analysis. Of the 42, six belonged to delayed students whose cohort changed, eight to students who did not complete the pharmacy program, 17 to students who did not release their NAPLEX scores, and 11 to students who did not take the PCOA. Analyses were conducted to determine whether the results had been compromised because missing students were at a different performance level than non-missing students. Students who did not report their NAPLEX scores had PCAT scores, 413.0 (8.8), similar to those of students who reported their NAPLEX scores, 413.0 (10.2), p=1.00. Missing students, however, did have a lower P3 GPA, 3.47 (.42), than non-missing students, 3.66 (.28), p=.048. A logistic regression with NAPLEX missing score status (missing or not) as the predictor and NAPLEX version (new or old) as the outcome indicated that NAPLEX scores were missing at similar rates in the new and old versions, p=.53. Even though students who did not report their NAPLEX scores had a lower GPA than those who did, the fact that scores were missing at similar rates between the new and old versions provides evidence that changes in NAPLEX performance are not due to missing data.
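The record counts in this paragraph can be checked with simple arithmetic. The short sketch below uses only the numbers stated in the text; the dictionary labels are shorthand introduced for illustration.

```python
# Counts reported in the text for the excluded records.
excluded = {
    "delayed, cohort changed": 6,
    "did not complete program": 8,
    "did not release NAPLEX score": 17,
    "did not take PCOA": 11,
}
total_records = 243  # 161 old-version plus 82 new-version records

n_excluded = sum(excluded.values())
n_usable = total_records - n_excluded
pct_excluded = 100 * n_excluded / total_records

print(n_excluded, n_usable, round(pct_excluded, 1))  # 42 201 17.3
```

The four exclusion reasons account for all 42 unused records, leaving the 201 usable records analyzed in the study.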

DISCUSSION

Data from one institution suggest that the new version of the NAPLEX yielded similar mean scaled scores (98.7 vs 100.7, new vs old, respectively) when controlling for student performance on the PCOA and P3 GPA. Without controlling for student performance on other measures, the NAPLEX scaled score means for new and old were 97.1 and 103.3, respectively. The new NAPLEX version also failed to show a significant effect on first-time pass rates; however, the sample size was small, and it is likely that this analysis was underpowered. The NABP reported in a webinar to colleges in April 2017 that, in their item response theory approach to scoring the NAPLEX, the ability estimate (theta) was higher in 2016 than in 2015.7 In practical terms, overall pass rates dropped in 2016. Enhancing NAPLEX preparation services for graduating pharmacists is one way to address the decline in pass rates. Another approach is to assess practice readiness earlier in the curriculum, so that inadequate performance can be identified and remediated. It is also possible that results from the students and graduates of this institution do not generalize to the rest of the graduating pharmacy population. Pharmacy programs vary in prerequisites, curricular sequencing, PCOA administration, and program length.

CONCLUSION

To more thoroughly identify if the new NAPLEX had an effect on scores and pass rates, pharmacy schools should pool their data to create a larger, more representative sample. Data sharing could help better assess the current landscape of pharmacy education and licensure.

ACKNOWLEDGMENTS

The authors thank Steve Ellis, assistant dean for student affairs, for assistance in preparing the data for analysis.

REFERENCES

- 1. Revised NAPLEX blueprint and passing standards to be implemented in November 2015; Recommendations follow thorough analysis. June-July 2015 NABP Newsletter. National Association of Boards of Pharmacy. https://nabp.pharmacy/wp-content/uploads/2016/07/June-July-2015-NABP-Newsletter-FINAL.pdf. Accessed February 23, 2017.

- 2. New NAPLEX to launch in November 2016. March 2016 NABP Newsletter. National Association of Boards of Pharmacy. https://nabp.pharmacy/wp-content/uploads/2016/07/March2016NABPNewsletter_Reduced.pdf. Accessed February 23, 2017.

- 3. NAPLEX passing rates for 2014-2016 graduates per pharmacy school. 2016 NAPLEX Pass Rates. National Association of Boards of Pharmacy. https://nabp.pharmacy/wp-content/uploads/2017/02/2016-NAPLEX-Pass-Rates.pdf. Accessed February 23, 2017.

- 4. NAPLEX and MPJE 2018 candidate registration bulletin. National Association of Boards of Pharmacy. https://nabp.pharmacy/wp-content/uploads/2018/01/NAPLEX-MPJE-Bulletin-January-2018.pdf. Accessed March 9, 2018.

- 5. AACP Student Trend Data. First professional application trends 1998-2015. American Association of Colleges of Pharmacy https://public.tableau.com/profile/aacpdata#!/vizhome/StudentTrendDataDashboard/StudentDataTrends. Accessed March 9, 2018.

- 6. Funk MJ, Westreich D, Wiesen C, Stürmer T, Brookhart MA, Davidian M. Doubly robust estimation of causal effects. Am J Epidemiol. 2011;173(7):761–767. doi: 10.1093/aje/kwq439.

- 7. Incrocci M. North American Pharmacist Licensure Examination (NAPLEX) program [webinar]. April 13, 2017.