Abstract

Cognitive control – the ability to override a salient or prepotent action to execute a more deliberate one – is required for flexible, goal-directed behavior, and yet it is subjectively costly: decision-makers avoid allocating control resources, even when doing so affords more valuable outcomes. Dopamine likely offsets the costs of cognitive effort just as it does for physical effort. And yet, dopamine can also promote impulsive action, undermining control. We propose a novel hypothesis that reconciles opposing effects of dopamine on cognitive control: during action selection, striatal dopamine biases benefits relative to costs, but does so preferentially for “proximal” motor and cognitive actions. Considering the nature of instrumental affordances and their dynamics during action selection facilitates a parsimonious interpretation in terms of corticostriatal mechanisms that are conserved across physical and cognitive domains.

Keywords: Cognitive control, effort, dopamine, motivation, action selection, striatum, proximity, prepotency

Introduction

Cognitive control is essential for flexible, context-sensitive planning and decision-making. Recent studies have shown that striatal dopamine (DA) signaling can alternately promote cognitive control, boosting accuracy and speeding reaction times, and undermine it, yielding impulsivity. Here, we review evidence for these opposing effects and propose a novel hypothesis, framed in terms of cortico-striatal action selection mechanisms and biases, to explain why DA sometimes promotes and sometimes undermines cognitive control.

DA offsets effort costs, promoting control

Although cognitive control is necessary for flexible, adaptive functioning, it is also subjectively costly [1–3], causing demand avoidance [4] and reward discounting [5,6]. Control is thought to be recruited in proportion to potential benefits less effort costs [7]. The nature of subjective effort costs remains unresolved: they may reflect mechanisms to reduce cross-talk interference among multiplexed control signals, or opportunity costs incurred by resource allocation [2]. Nevertheless, the consequences are real: higher subjective costs erode control under fatigue [8] and in advanced cognitive aging [5]. Deficient motivation may also partly account for cognitive deficits in schizophrenia [9–12] and in other disorders, including depression and ADHD [1].

Incentives, conversely, promote cognitive control [3], and these effects are likely mediated, in part, by DA signaling in the striatum [13]. Phasic DA signals train cortico-striatal synapses to gate cognitive actions, such as working memory updating and task-set selection, according to their relative reward and punishment histories, by modulating synaptic plasticity in direct and indirect pathways, respectively [14,15]. Extracellular DA can also convey momentary motivation, biasing high-benefit, high-cost actions over low-benefit, low-cost actions during action selection [15–21]. Momentary, DA-mediated motivational signaling explains both why incentives boost apparent control for speed, accuracy, and distractor resistance in a saccade task, and why these incentive effects are attenuated in Parkinson’s disease [22].
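The learning role of phasic DA described above can be illustrated with a simplified sketch in the spirit of the opponent actor learning (OpAL) model of [15]. This is not a full implementation of that model: the function names, learning rates, and initial weights are illustrative assumptions, and the choice rule is omitted.

```python
def opal_update(g, n, v, reward, alpha_actor=0.1, alpha_critic=0.1):
    """One simplified OpAL-style update for a single action.

    g: direct ("Go") pathway weight, strengthened by positive prediction errors
    n: indirect ("NoGo") pathway weight, strengthened by negative prediction errors
    v: critic's current reward expectation
    All default parameter values are illustrative assumptions.
    """
    delta = reward - v                       # reward prediction error (phasic DA)
    v_new = v + alpha_critic * delta         # critic update
    g_new = g + alpha_actor * g * delta      # Hebbian update, scaled by current weight
    n_new = n + alpha_actor * n * (-delta)   # opposite sign for the indirect pathway
    return g_new, n_new, v_new


def train(rewards, g=1.0, n=1.0, v=0.0):
    """Apply opal_update over a sequence of outcomes and return final (g, n, v)."""
    for r in rewards:
        g, n, v = opal_update(g, n, v, r)
    return g, n, v


if __name__ == "__main__":
    print("after rewards:    ", train([1.0] * 20))
    print("after punishments:", train([-1.0] * 20))
```

Under these assumptions, a history of rewards grows the direct-pathway weight and shrinks the indirect-pathway weight, while a history of punishments does the reverse, which is the sense in which the two pathways come to encode benefits and costs.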

Importantly, striatal DA dynamics during goal-directed behavior suggest that they are well-suited to convey an evolving willingness to work over extended intervals that cognitive control requires [21,23]. Key features include protracted ramps during goal approach which adapt to unanticipated state transitions, encode temporally-discounted rewards, and predict action likelihood [21,24]. Computational theory has highlighted the influence of costs in arbitrating between cheap and efficient “model-free” (MF) action selection, and precise but costly “model-based” (MB) planning over complex state-action-outcome transitions [25–27]. Evolving striatal DA dynamics may thus be important for conveying the expected values of costly MB processes. Indeed, decision-makers rely more on MB over MF control when the stakes are higher [28] and with increased striatal DA signaling [29–31].

DA also undermines control

Although DA promotes control by conveying incentives that offset effort costs, there is also evidence that it undermines control. Notably, the DA precursor levodopa yields impulse control disorders in 17% of Parkinson’s disease patients [32] and may also drive impulsive responding to irrelevant stimuli as a function of patients’ trait impulsivity [33]. Trait impulsivity itself has been linked with higher adolescent DA function [34], higher striatal D2 receptor density in healthy adults [35], and D2 autoreceptor density and amphetamine-induced DA release [36]. Experimentally, DA can both promote and undermine control within a single task: during a Stroop task, trial-wise incentives enhance performance (reduce conflict costs) for those with low striatal DA synthesis capacity, while incentives undermine performance (increase conflict costs) for those with high synthesis capacity [37]. Beyond altering control performance, DA can also increase the degree to which individuals explicitly choose to avoid high versus low control-demanding tasks. Specifically, the DA transporter blocker methylphenidate caused high trait-impulsive participants to avoid control demands more [38], suggesting that DA may undermine control by altering when individuals choose to exert it.

DA may also undermine control, in part, due to DA’s well-established effects on behavioral vigor [39–43]. In short, higher extracellular DA tone in the striatum increases the likelihood, and reduces the latency, of action commission [21,24,40,41]. Thus, prepotent actions that control is intended to override (e.g., reading a Stroop word) are also potentiated by higher DA, just like controlled actions. That is, DA can potentiate actions that require incentive motivation for overcoming effort costs, but also actions which do not require motivation. Indeed, DA-mediated incentives can simultaneously potentiate both performance-contingent and non-contingent behaviors: for example, incentives speed saccades both when rewards depend on reaction times and when they do not [44].

DA interacts with proximity to modulate control

What determines when DA will promote control and when it will undermine it? One suggestion comes from an elegant series of studies which implicate both DA and spatial proximity in conditioned approach to instrumental apparatus [45]. Subpopulations of striatal neurons respond to discriminative stimuli and their activity determines whether rats approach and engage instrumental apparatus. Critically, this activity is DA-dependent [40,46] and is modulated by spatial proximity: more proximal apparatus evoke more firing, greater likelihood of approach, and shorter latency reaction times [47]. As a consequence, rats are biased towards closer low-cost, low-reward levers, even if they otherwise prefer a high-cost, high-reward lever [47].

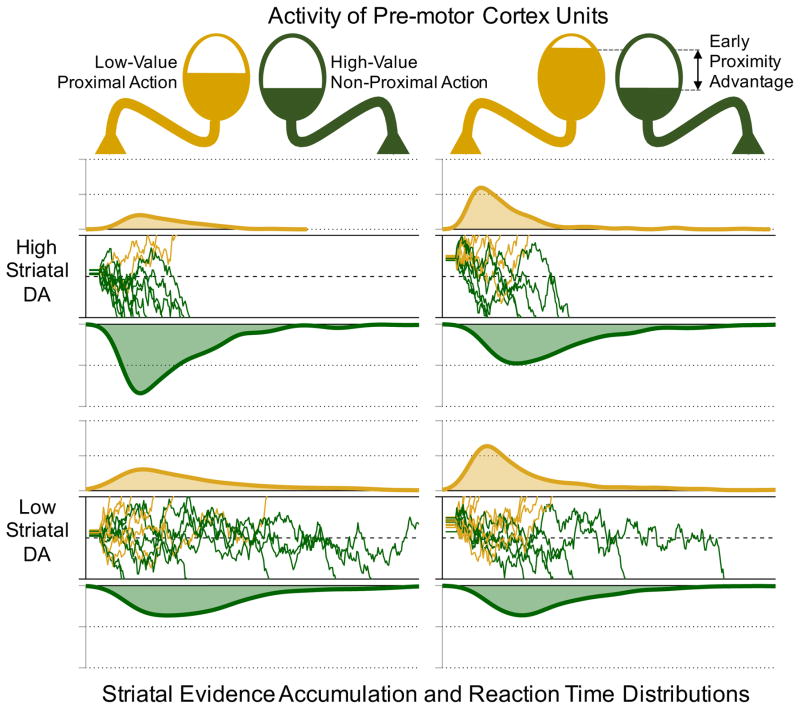

Striatal proximity effects themselves reflect early cortical dynamics of competing action proposals (e.g. in pre-motor cortex) that are evoked as instrumental affordances and filtered by mutual inhibition, biased by multiple factors predicting action probability [48–50]. Filtered actions are then “proposed” to the striatum where they are gated, via thalamic disinhibition, according to the relative activity in the direct and indirect pathways [14,15]. Thus, actions which are proposed more rapidly and robustly will be earlier and stronger candidates for action gating. Generalizing to any factor which causes cortical action representations to be evoked rapidly and robustly, we can see that spatial proximity as well as attention, salience, prepotency, familiarity, concreteness, etc. will all have similar effects. Proximity is therefore used hereafter to refer to psychological rather than strictly spatial proximity.

Striatal DA tone also shapes action selection by increasing direct versus indirect pathway excitability [15,21,24,40], which is functionally equivalent to more benefit and less cost evidence across all candidate actions [14,19]. If an instrumental apparatus is more proximal, then, it will be an earlier candidate for potentiation by striatal DA tone. We therefore propose the following hypothesis concerning the interaction of DA and proximity: DA will potentiate action commission, by up-weighting benefit over cost evidence, preferentially for proximal actions (Fig. 1). Thus, when DA tone is high, proximity will strongly determine output. If no actions are uniquely proximal, high-benefit, high-cost alternatives (e.g. controlled over automatic responses) will win out. However, as one action becomes relatively more proximal, it is more likely to be selected. Conversely, when DA tone is low, preferences will shift towards low-benefit, low-cost alternatives, but, since the general likelihood of action commission is reduced, proximity effects will also be attenuated. Moreover, even under high DA tone, proximity biases can be overcome by raising the gating threshold when detecting the need for cognitive control, via recruitment of prefrontal-subthalamic nucleus circuits [51,52], as less proximal actions will have more time to compete.

Figure 1.

Proximity and DA shape competition between low-value, proximal, and high-value, distal actions. Higher DA amplifies value differences, favoring high-value actions more when none are uniquely proximal, but will selectively potentiate low-value actions when early cortical dynamics confer a strong proximity advantage. Here, using DDM-style simulations, we simulate the effect of higher DA as an increasing drift rate towards the high-value action when the proximity advantage is small, while a large proximity advantage is simulated as a large starting point bias in the direction of the proximal action, which is itself amplified by DA. Relative distribution densities reflect probability of proximal and non-proximal action at a given time-point.
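A DDM-style simulation of the kind described in this caption can be sketched as follows. This is a minimal illustration under stated assumptions, not the simulation code behind Fig. 1: the function names, the parameter values, the linear scaling of drift by DA, and the multiplicative amplification of the starting-point bias by DA are all hypothetical choices made for the example.

```python
import random


def simulate_ddm(drift, start_bias, threshold=1.0, noise=1.0, dt=0.01,
                 max_steps=2000, rng=None):
    """Simulate one drift-diffusion trial with bounds at +/- threshold.

    Positive evidence favors the high-value (distal) action; negative
    evidence favors the low-value (proximal) action.
    Returns (choice, decision_time).
    """
    rng = rng or random.Random()
    x = -start_bias  # proximity advantage: start nearer the proximal bound
    for step in range(1, max_steps + 1):
        x += drift * dt + noise * (dt ** 0.5) * rng.gauss(0.0, 1.0)
        if x >= threshold:
            return "high_value", step * dt
        if x <= -threshold:
            return "proximal", step * dt
    return "none", max_steps * dt


def p_high_value(da, proximity_adv, n_trials=1000, seed=0):
    """Estimate the probability of choosing the high-value action.

    Assumptions (illustrative only): DA scales the drift toward the
    high-value action, and DA also amplifies the starting-point bias
    conferred by the proximity advantage (capped below the bound).
    """
    rng = random.Random(seed)
    drift = 1.0 * da
    start_bias = min(0.95, proximity_adv * da)
    wins = 0
    for _ in range(n_trials):
        choice, _ = simulate_ddm(drift, start_bias, rng=rng)
        wins += choice == "high_value"
    return wins / n_trials


if __name__ == "__main__":
    for prox in (0.05, 0.45):
        for da in (0.5, 2.0):
            print(f"proximity={prox}, DA={da}: "
                  f"P(high value)={p_high_value(da, prox):.2f}")
```

With these assumed settings, raising DA increases high-value choices when the proximity advantage is small, but decreases them when the proximity advantage is large, which is the qualitative pattern the caption describes.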

We can formalize key features of the proposed DA-proximity interaction in a choice between a low-cost, low-benefit action b that has a proximity advantage ΔP over a high-cost, high-benefit action a. Specifically, we can write the net action value of a as the linear combination of activity evoked in the direct (Da) and indirect (Ia) pathways (following [15]):

| (1) |

where the weights βD and βI reflect D1 and D2 receptor effects and, respectively, increase and decrease with striatal DA levels. Accordingly, we can express the probability of executing action a (vs. b) with a softmax function, modified such that the net cost-benefit information for action a is attenuated by its proximity disadvantage (ΔP) to capture the proposal that DA potentiates action values preferentially for proximal actions:

| (2) |

where ρ is:

| (3) |

Note that in Eqn. 3, the proximity advantage itself can be amplified by striatal DA, capturing the prediction that very high DA levels can amplify a proximity bias in action selection.¹
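To make the verbal description of the model concrete, the following sketch implements one possible form of a proximity-modified softmax. It should be read as an assumption-laden illustration rather than as the equations referred to in the text: the subtraction of the indirect-pathway term follows the convention of [15], the exponential DA-dependent weights follow the Figure 2 caption, and the attenuation term `rho = exp(-DA * ΔP)`, the function names, and the example pathway activities are all hypothetical.

```python
import math


def da_weights(da):
    """Direct/indirect pathway weights as a function of DA level (da >= 0).

    Symmetric exponential form, as suggested by the Figure 2 caption.
    """
    beta_d = 1.0 - math.exp(-da)
    beta_i = math.exp(-da)
    return beta_d, beta_i


def net_value(direct, indirect, da):
    """Net action value: weighted benefit (direct) minus weighted cost (indirect)."""
    beta_d, beta_i = da_weights(da)
    return beta_d * direct - beta_i * indirect


def p_choose_a(da, delta_p, a=(3.0, 2.0), b=(1.0, 0.5)):
    """Probability of the high-cost, high-benefit action a over action b.

    a, b: (direct, indirect) activity for each action (illustrative values).
    delta_p: proximity advantage of b over a (>= 0).
    Assumed form: a's softmax term is down-weighted by rho = exp(-da * delta_p),
    so the proximity penalty on a grows with DA.
    """
    act_a = net_value(a[0], a[1], da)
    act_b = net_value(b[0], b[1], da)
    rho = math.exp(-da * delta_p)
    ea = rho * math.exp(act_a)
    eb = math.exp(act_b)
    return ea / (ea + eb)


if __name__ == "__main__":
    for da in (0.2, 3.0):
        for delta_p in (0.0, 1.0):
            print(f"DA={da}, dP={delta_p}: P(a)={p_choose_a(da, delta_p):.2f}")
```

Under these assumptions the sketch reproduces the qualitative predictions stated earlier: with high DA and no proximity advantage the costly action a is favored, a proximity advantage for b reverses that preference, and with low DA action b is favored while the influence of proximity is small.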

Importantly, since cognitive actions are selected by similar cortico-striatal loops as those involved in physical action selection [14], a proximity bias may shape cognitive action selection just as it does for physical actions. This is particularly relevant considering that while physical apparatus are often proximal (levers at hand, stairs underfoot, etc.), controlled cognitive actions are, by definition, psychologically distant. Controlled actions are not automatically evoked by the environment. Instead, they must be constructed slowly by combining current percepts with rules retrieved from long-term memory, and maintained over protracted intervals, withstanding interference from the environment [53,54]. Conversely, action proposals which are automatically evoked by the environment, or prepotent actions, enjoy a selection bias which controlled actions must overcome.

The neural mechanisms of inhibitory control provide a useful blueprint [52]: prepotent actions are rapidly evoked in sensorimotor cortex (e.g. frontal eye fields, FEF), while controlled actions arise more slowly by the conjunction of afferent input from the environment and internal (e.g. lateral frontal) rule representations. Critically, errors are predicted by earlier and more robust FEF unit activity corresponding to the prepotent action, and slower and attenuated activity in controlled action units. By contrast, correct responses are predicted by faster and more robust activity in controlled response units. Since proximity sets the speed and intensity with which action proposals are evoked, proximity shapes the competition between controlled and prepotent responses. Thus while high DA favors high-benefit, high-cost controlled actions, it can also potentiate action prepotency, undermining control.

A normative account of DA-proximity interactions

Though we motivated a DA-proximity interaction with choice behavior and neurophysiological data, there are functional motivations as well. Multiple theories posit a role for striatal DA in optimizing foraging as a function of environmental richness, uncertainty, and effort costs [55–57]. An older, influential account [55] suggests that tonic DA mediates the tradeoff between the opportunity cost of failing to act quickly in reward-rich environments, and the higher effort costs of behavioral vigor. By this account, higher tonic DA signals increasing average reward, a proxy for environmental richness, and potentiates vigorous action. Recent studies support that higher DA tone promotes vigor [20,21,24,39–42,58], reward rates predict vigor [39,42,43], and DA mediates the reward rate-vigor relationship [39,42]. Another account posits that DA arbitrates between exploration and exploitation where, according to the Marginal Value Theorem, when the local reward rate falls below the long-run average, it is better to explore than exploit [57,59]. By this account, tonic DA is again proposed to signal the long-run average and thus bias exploration when higher and exploitation when lower. Indeed, higher DA availability predicts exploration over exploitation in foraging tasks [59,60].
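The Marginal Value Theorem leaving rule mentioned above can be illustrated with a small numerical sketch. The exponentially depleting patch, the parameter values, and the function names are assumptions chosen for the example; they are not taken from the cited studies.

```python
import math


def patch_gain(t, r0=10.0, tau=5.0):
    """Cumulative reward after t time units in a patch whose instantaneous
    reward rate decays as r0 * exp(-t / tau) (assumed depletion curve)."""
    return r0 * tau * (1.0 - math.exp(-t / tau))


def local_rate(t, r0=10.0, tau=5.0):
    """Instantaneous (local) reward rate after t time units in the patch."""
    return r0 * math.exp(-t / tau)


def leaving_time(travel_time, r0=10.0, tau=5.0, dt=0.01, t_max=100.0):
    """First residence time at which the local reward rate falls to the
    long-run average rate (patch gain divided by travel plus residence time).

    The long-run average is the quantity that tonic DA is proposed to signal.
    """
    t = dt
    while t < t_max:
        average_rate = patch_gain(t, r0, tau) / (travel_time + t)
        if local_rate(t, r0, tau) <= average_rate:
            return t
        t += dt
    return t_max


if __name__ == "__main__":
    for travel in (1.0, 10.0):
        print(f"travel time {travel}: leave after {leaving_time(travel):.2f}")
```

In this sketch a richer environment (shorter travel time between patches, hence a higher long-run average) leads to earlier disengagement, consistent with the proposal that a higher average-reward signal biases exploration.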

There is a tension between the proposal that higher DA promotes vigorous exploitation of an instrumental apparatus on one hand, and disengagement and exploration on the other. Interactions with proximity help resolve this tension. If a valuable reward is near (and both DA and proximity are thus high), devoting resources to exploration will detract from reward pursuit. Consider, e.g., that choking is more likely when there is very high incentive motivation, distraction from relevant task features and diminished cortico-striatal coordination for task performance [61]. Moreover, distracted planning increases the risk that someone else will grab a proximal reward before you can. Thus, a proximity bias is desirable to promote focus on the immediate task. If, however, there are valuable rewards in the environment, but none enjoys a uniquely strong proximity advantage, then DA can promote costly but valuable exploration. This maps to the observation that while rats are biased to approach proximal levers, they appear to engage in more “cognitive” forms of cost-benefit comparisons when far from all levers [45], perhaps indicating prospective, cognitive exploration across future outcomes [62]. In sum, a DA-proximity interaction is normative in that it promotes quick, focused action when rewards are close at hand and opportunity costs are high, while it promotes wider exploration when rewards are equidistant and it is more important to pick the best opportunity before committing.

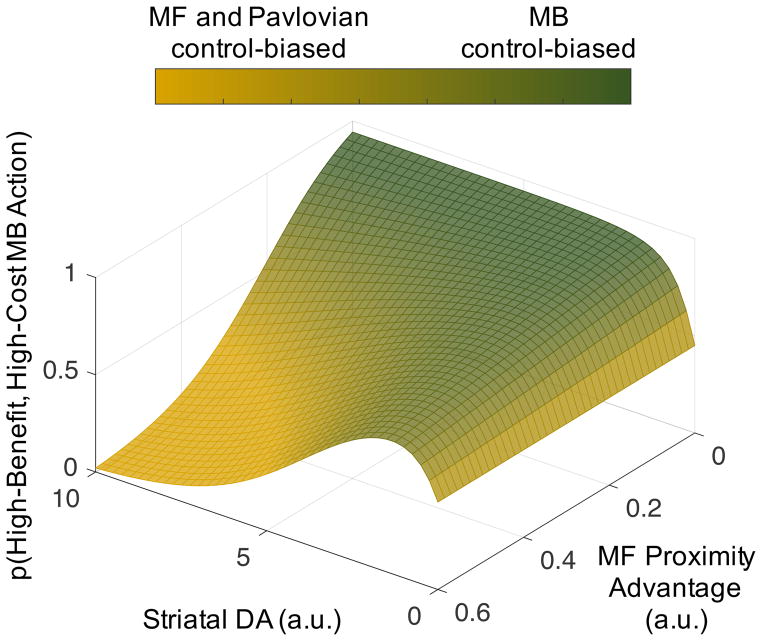

Among the many functions performed by cognitive control, MB planning and control provide a particularly illustrative case. In comparison with MF habits, MB planning is slow and computationally expensive (i.e., MF actions have a built-in proximity advantage over MB actions), but offers the capacity for prospective, cognitive exploration to maximize reward over future states, actions, and transitions [62]. Hence, an optimal forager should be biased towards MB exploration when the environment is rich, but not when there is a uniquely proximal reward at hand. The proposed DA-proximity interaction predicts this pattern exactly (Fig. 2). First, higher striatal DA signaling high average reward promotes disengagement for exploration [59]. While this disengagement might itself be an MF habit, our hypothesis predicts that higher DA signaling high average reward should also promote either costly prospection or costly physical travel required for exploration. Second, the prediction that higher striatal DA offsets effort costs is supported by data showing more costly exploration and MB versus MF control when stakes are higher [25,28], or with greater striatal DA [30,31,59,63]. More MB versus MF behavior may obtain due to more MB control [30,31], or reduced coupling between MF action values and choice, i.e., lower softmax inverse temperatures [63]. Though not systematically explored, reduced coupling between MF action values and choice may itself be due to proximity effects (e.g. momentary attention drives choice rather than reward/punishment history). Third, critically, proximity should arbitrate between MF and MB control of behavior when DA is high. While MF action values are pre-computed and thus have a built-in proximity advantage, factors which influence the size of that advantage should arbitrate between MF and MB control. Support for this prediction includes a computational model of arbitration in which choice behavior is more MF to the extent that the MF system initially converges on a dominant action [64]. Conversely, the proximity advantage of the MF system will shrink if MB planning mechanisms are more robust. This prediction is consistent with evidence for more MB control with increasing working memory capacity for MB planning [63,65,66].

Figure 2.

MF actions will dominate unless striatal DA tone is high and the relative proximity advantage of MF actions is small, in which case MB action is supported. The surface was plotted according to Eqns. 1–3 assuming action weights that vary symmetrically as a function of striatal DA: βG = 1 − e^(−DA) and βN = e^(−DA).

Conclusions

We think it is possible to resolve paradoxical effects of striatal DA signaling in both promoting and undermining control, in part, by accounting for psychological proximity and precise temporal dynamics in cortico-striatal-thalamic action selection. Importantly, a DA-proximity interaction is not only consistent with extant data, but offers an adaptive mechanism for mediating between fast, habitual action, and slow planning and control over complex action sequences. While future work is needed to directly test key predictions, it will also be important to consider the implications of DA-proximity interactions for multiple domains including not only MF and MB control, but also incentive effects on choking [61], saliency effects in intertemporal choice [67], and Pavlovian biases [68] and familiarity biases [69] in information search. Finally, articulating the parameters of a DA-proximity interaction may prove crucial for optimizing drug therapies that promote desirable cognitive control without also promoting impulsivity.

Highlights.

Striatal dopamine can both promote cognitive control and undermine it.

Opposing effects may be resolved considering striatal action selection biases.

Dopamine may undermine control by amplifying a bias for proximal actions.

Dopamine-proximity biases may be adaptive in foraging contexts.

Acknowledgments

We thank Roshan Cools, Saleem Nicola, Leah Somerville, Amitai Shenhav, Fiery Cushman, and various members of the Frank, Shenhav, and Badre Labs at Brown University for valuable discussions on these topics.

Funding:

This work was supported by awards from the National Institute of Mental Health [R01MH080066], and the National Science Foundation [#1460604].

Footnotes

1. We intend these equations as an intuitive, quantitative depiction only, rather than commitment to a particular form. On-going work will formalize these ideas more concretely, including modeling other potential interactions, outside the scope of this review.


References Cited

* of special interest ** of outstanding interest

- 1. Westbrook A, Braver TS. Cognitive effort: A neuroeconomic approach. Cognitive, Affective, & Behavioral Neuroscience. 2015;15:395–415. doi: 10.3758/s13415-015-0334-y.

- *2. Shenhav A, Musslick S, Lieder F, Kool W, Griffiths TL, Cohen JD, Botvinick MM. Toward a rational and mechanistic account of mental effort. Annual Review of Neuroscience. 2017;40. doi: 10.1146/annurev-neuro-072116-031526. In an excellent review, the authors describe recent evidence that control is costly and advance novel theories about the nature of control costs.

- 3. Botvinick MM, Braver TS. Motivation and Cognitive Control: From Behavior to Neural Mechanism. Annual Review of Psychology. 2015;66:83–113. doi: 10.1146/annurev-psych-010814-015044.

- 4. Kool W, McGuire JT, Rosen ZB, Botvinick MM. Decision making and the avoidance of cognitive demand. Journal of Experimental Psychology-General. 2010;139:665–682. doi: 10.1037/a0020198.

- 5. Westbrook A, Kester D, Braver TS. What Is the Subjective Cost of Cognitive Effort? Load, Trait, and Aging Effects Revealed by Economic Preference. PLoS One. 2013;8:e68210. doi: 10.1371/journal.pone.0068210.

- 6. Apps M, Grima LL, Manohar S, Husain M. The role of cognitive effort in subjective reward devaluation and risky decision-making. Scientific Reports. 2015. doi: 10.1038/srep16880.

- 7. Musslick S, Shenhav A, Botvinick MM. A computational model of control allocation based on the expected value of control. Reinforcement Learning and Decision Making. 2015. doi: 10.2307/2334680.

- 8. Blain B, Hollard G, Pessiglione M. Neural mechanisms underlying the impact of daylong cognitive work on economic decisions. Proceedings of the National Academy of Sciences. 2016;113:6967–6972. doi: 10.1073/pnas.1520527113.

- 9. Gold JM, Waltz JA, Frank MJ. Effort Cost Computation in Schizophrenia: A commentary on the Recent Literature. Biological Psychiatry. 2015. doi: 10.1016/j.biopsych.2015.05.005.

- 10. Foussias G, Siddiqui I, Fervaha G, Mann S, McDonald K, Agid O, Zakzanis KK, Remington G. Motivated to do well: An examination of the relationships between motivation, effort, and cognitive performance in schizophrenia. Schizophrenia Research. 2015. doi: 10.1016/j.schres.2015.05.019.

- 11. Culbreth A, Westbrook A, Barch D. Negative symptoms are associated with an increased subjective cost of cognitive effort. Journal of Abnormal Psychology. 2016;125:528–536. doi: 10.1037/abn0000153.

- 12. Collins AG, Albrecht MA, Waltz JA, Gold JM, Frank MJ. Interactions between working memory, reinforcement learning and effort in value-based choice: a new paradigm and selective deficits in schizophrenia. Biological Psychiatry. 2017. doi: 10.1016/j.biopsych.2017.05.017.

- 13. Westbrook A, Braver TS. Dopamine Does Double Duty in Motivating Cognitive Effort. Neuron. 2016;89:695–710. doi: 10.1016/j.neuron.2015.12.029.

- 14. Frank MJ. Computational models of motivated action selection in corticostriatal circuits. Current Opinion in Neurobiology. 2011;21:381–386. doi: 10.1016/j.conb.2011.02.013.

- *15. Collins AGE, Frank MJ. Opponent actor learning (OpAL): Modeling interactive effects of striatal dopamine on reinforcement learning and choice incentive. Psychological Review. 2014;121:337–366. doi: 10.1037/a0037015. A computational model articulates the dual influences of striatal DA signaling on 1) training cortico-striatal synapses in response to rewards and punishments (i.e., reinforcement learning), and 2) instantaneously modulating motivational choices to emphasize benefits vs costs, via effects on direct and indirect pathway neuronal excitability.

- 16. Chong TTJ, Bonnelle V, Manohar S, Veromann K-R, Muhammed K, Tofaris GK, Hu M, Husain M. Dopamine enhances willingness to exert effort for reward in Parkinson’s disease. Cortex. 2015;69:40–46. doi: 10.1016/j.cortex.2015.04.003.

- 17. Le Bouc R, Rigoux L, Schmidt L, Degos B, Welter ML, Vidailhet M, Daunizeau J, Pessiglione M. Computational Dissection of Dopamine Motor and Motivational Functions in Humans. Journal of Neuroscience. 2016;36:6623–6633. doi: 10.1523/JNEUROSCI.3078-15.2016.

- 18. Rigoli F, Rutledge RB, Chew B, Ousdal OT, Dayan P, Dolan RJ. Dopamine Increases a Value-Independent Gambling Propensity. Neuropsychopharmacology. 2016;41:2658–2667. doi: 10.1038/npp.2016.68.

- 19. Zalocusky KA, Ramakrishnan C, Lerner TN, Davidson TJ, Knutson B, Deisseroth K. Nucleus accumbens D2R cells signal prior outcomes and control risky decision-making. Nature. 2016;531:642–646. doi: 10.1038/nature17400.

- 20. Zenon A, Devesse S, Olivier E. Dopamine Manipulation Affects Response Vigor Independently of Opportunity Cost. Journal of Neuroscience. 2016;36:9516–9525. doi: 10.1523/JNEUROSCI.4467-15.2016.

- **21. Hamid AA, Pettibone JR, Mabrouk OS. Mesolimbic dopamine signals the value of work. Nature Neuroscience. 2016. doi: 10.1038/nn.4173. Varying DA tone, on multiple timescales (seconds to minutes), is shown to encode an evolving value function that predicts animals’ motivational drive to act. Moreover, midbrain optogenetic stimulation was used to show that DA drives both reward and punishment learning, when stimulating during outcomes, and motivating action when stimulating during cue onsets.

- 22**.Manohar SG, Chong TTJ, Apps MAJ, Batla A, Stamelou M, Jarman PR, Bhatia KP, Husain M. Reward Pays the Cost of Noise Reduction in Motor and Cognitive Control. Current Biology. 2015 doi: 10.1016/j.cub.2015.05.038. In most direct test to date that incentive effects on cognitive control are mediated by midbrain DA, incentives enhance control in a rapid saccade to target task, and these incentive effects are attenuated in Parkinson’s disease. Precise behavioral features including precision and distractor resistance are moreover articulated in a computational model in which incentives “pay” the effort costs of control. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Lloyd K, Dayan P. Tamping Ramping: Algorithmic, Implementational, and Computational Explanations of Phasic Dopamine Signals in the Accumbens. PLoS Computational Biology. 2015;11:e1004622. doi: 10.1371/journal.pcbi.1004622. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Collins AL, Greenfield VY, Bye JK, Linker KE, Wang AS, Wassum KM. Dynamic mesolimbic dopamine signaling during action sequence learning and expectation violation. Scientific Reports. 2016:6. doi: 10.1038/srep20231. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Keramati M, Dezfouli A, Piray P. Speed/Accuracy Trade-Off between the Habitual and the Goal-Directed Processes. PLoS Computational Biology. 2011;7:e1002055. doi: 10.1371/journal.pcbi.1002055. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Boureau Y-L, Sokol-Hessner P, Daw ND. Deciding How To Decide: Self-Control and Meta-Decision Making. Trends in Cognitive Sciences. 2015 doi: 10.1016/j.tics.2015.08.013. [DOI] [PubMed] [Google Scholar]

- 27.Gershman SJ, Horvitz EJ, Tenenbaum JB. Computational rationality: A converging paradigm for intelligence in brains, minds, and machines. Science. 2015;349:273–278. doi: 10.1126/science.aac6076. [DOI] [PubMed] [Google Scholar]

- 28.Kool W, Gershman SJ, Cushman FA. Cost-benefit arbitration between multiple reinforcement-learning systems. Psychological Science. 2017 doi: 10.1177/0956797617708288. [DOI] [PubMed] [Google Scholar]

- 29.Wunderlich K, Smittenaar P, Dolan RJ. Dopamine Enhances Model-Based over Model-Free Choice Behavior. Neuron. 2012;75:418–424. doi: 10.1016/j.neuron.2012.03.042. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Deserno L, Huys QJM, Boehme R, Buchert R, Heinze H-J, Grace AA, Dolan RJ, Heinz A, Schlagenhauf F. Ventral striatal dopamine reflects behavioral and neural signatures of model-based control during sequential decision making. Proceedings of the National Academy of Sciences. 2015;112:1595–1600. doi: 10.1073/pnas.1417219112. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Sharp ME, Foerde K, Daw ND, Shohamy D. Dopamine selectively remediates “model-based” reward learning: a computational approach. Brain. 2016;139:355–364. doi: 10.1093/brain/awv347. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Voon V, Napier CT, Frank MJ, Sgambato-Faure V, Grace AA, Rodriguez-Oroz M, Obeso J, Bezard E, Fernagut P-O. Impulse control disorders and levodopa-induced dyskinesiasin Parkinson’s disease: an update. The Lancet Neurology. 2017;16:238–250. doi: 10.1016/S1474-4422(17)30004-2. [DOI] [PubMed] [Google Scholar]

- 33.Duprez J, Houvenaghel J-F, Argaud S, Naudet F, Robert G, Drapier D, Vérin M, Sauleau P. Impulsive oculomotor action selection in Parkinson’s disease. Neuropsychologia. 2017 doi: 10.1016/j.neuropsychologia.2016.12.027>. [DOI] [PubMed] [Google Scholar]

- 34.Hartley CA, Somerville LH. The neuroscience of adolescent decision-making. Current Opinion in Behavioral Sciences. 2015;5:108–115. doi: 10.1016/j.cobeha.2015.09.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Anderson BA, Kuwabara H, Wong DF, Courtney SM. Density of available striatal dopamine receptors predicts trait impulsiveness during performance of an attention-demanding task. Journal of Neurophysiology. 2017;118:64–68. doi: 10.1152/jn.00125.2017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Buckholtz JW, Treadway MT, Cowan RL, Woodward ND, Li R, Ansari MS, Baldwin RM, Schwartzman AN, Shelby ES, Smith CE, et al. Dopaminergic Network Differences in Human Impulsivity. Science. 2010;329:532–532. doi: 10.1126/science.1185778. [DOI] [PMC free article] [PubMed] [Google Scholar]

- **37.Aarts E, Wallace DL, Dang LC, Jagust WJ, Cools R, D’Esposito M. Dopamine and the Cognitive Downside of a Promised Bonus. Psychological Science. 2014;25:1003–1009. doi: 10.1177/0956797613517240. In the context of a Stroop task, incentives are shown to both promote performance on a classic cognitive control task for some participants while undermining performance for others. Critically, these individual differences varied as a function of DA synthesis capacity, measured by PET. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38**.Froböse MI, Swart JC, Cook JL, Geurts DE, Ouden den HE, Cools R. Catecholaminergic modulation of the avoidance of cognitive control. BioRxiv. 2017 doi: 10.1101/191015. Methylphenidate both increased and decreased the consistency with which participants avoided control demands when given the chance to select between low- and high-demand tasks. Interestingly, methylphenidate’s effects on explicit choices to exert more or less cognitive control depended on baseline trait impulsivity. [DOI] [PubMed] [Google Scholar]

- 39.Beierholm U, Guitart-Masip M, Economides M, Chowdhury R, Duzel E, Dolan R, Dayan P. Dopamine modulates reward-related vigor. Neuropsychopharmacology. 2013;38:1495–1503. doi: 10.1038/npp.2013.48.

- **40.du Hoffmann J, Nicola SM. Dopamine Invigorates Reward Seeking by Promoting Cue-Evoked Excitation in the Nucleus Accumbens. Journal of Neuroscience. 2014;34:14349–14364. doi: 10.1523/JNEUROSCI.3492-14.2014. Systematic blockade of striatal D1 and D2 receptors is used to demonstrate that cued approach to instrumental apparatus is mediated by neuronal firing in the ventral striatum, and that both cue-evoked firing and approach are DA-dependent.

- 41.Ko D, Wanat MJ. Phasic Dopamine Transmission Reflects Initiation Vigor and Exerted Effort in an Action- and Region-Specific Manner. Journal of Neuroscience. 2016;36:2202–2211. doi: 10.1523/JNEUROSCI.1279-15.2016.

- 42.Rigoli F, Chew B, Dayan P, Dolan RJ. The Dopaminergic Midbrain Mediates an Effect of Average Reward on Pavlovian Vigor. Journal of Cognitive Neuroscience. 2016;28:1303–1317. doi: 10.1162/jocn_a_00972.

- 43.Griffiths B, Beierholm UR. Opposing effects of reward and punishment on human vigor. Scientific Reports. 2017;7. doi: 10.1038/srep42287.

- 44.Manohar SG, Finzi RD, Drew D, Husain M. Distinct Motivational Effects of Contingent and Noncontingent Rewards. Psychological Science. 2017;28:1016–1026. doi: 10.1177/0956797617693326.

- 45.Nicola SM. Reassessing Wanting and Liking in the Study of Mesolimbic Influence on Food Intake. American Journal of Physiology Regulatory, Integrative and Comparative Physiology. 2016;311:R811–R840. doi: 10.1152/ajpregu.00234.2016.

- 46.du Hoffmann J, Nicola SM. The Ventral Tegmental Area Is Required for the Behavioral and Nucleus Accumbens Neuronal Firing Responses to Incentive Cues. Frontiers in Behavioral Neuroscience. 2016;10:2923–2933. doi: 10.1523/JNEUROSCI.5282-03.2004.

- 47.Morrison SE, Nicola SM. Neurons in the Nucleus Accumbens Promote Selection Bias for Nearer Objects. Journal of Neuroscience. 2014;34:14147–14162. doi: 10.1523/JNEUROSCI.2197-14.2014.

- 48.Cisek P. Making decisions through a distributed consensus. Current Opinion in Neurobiology. 2012;22:927–936. doi: 10.1016/j.conb.2012.05.007.

- 49.Klaes C, Westendorff S, Chakrabarti S, Gail A. Choosing Goals, Not Rules: Deciding among Rule-Based Action Plans. Neuron. 2011;70:536–548. doi: 10.1016/j.neuron.2011.02.053.

- 50.Thura D, Cisek P. Deliberation and Commitment in the Premotor and Primary Motor Cortex During Dynamic Decision Making. Neuron. 2014;81:1401–1416. doi: 10.1016/j.neuron.2014.01.031.

- 51.Cavanagh JF, Wiecki TV, Cohen MX, Figueroa CM, Samanta J, Sherman SJ, Frank MJ. Subthalamic nucleus stimulation reverses mediofrontal influence over decision threshold. Nature Neuroscience. 2011;14:1462–1467. doi: 10.1038/nn.2925.

- **52.Wiecki TV, Frank MJ. A computational model of inhibitory control in frontal cortex and basal ganglia. Psychological Review. 2013;120:329–355. doi: 10.1037/a0031542. Neurophysiology and behavior are captured in a biologically constrained neural network model of action selection in inhibitory control tasks. Within-trial neural dynamics map closely onto the construct of proximity considered here.

- 53.Jacob SN, Nieder A. Complementary Roles for Primate Frontal and Parietal Cortex in Guarding Working Memory from Distractor Stimuli. Neuron. 2014;83:226–237. doi: 10.1016/j.neuron.2014.05.009.

- 54.Shahar N, Teodorescu AR, Karmon-Presser A, Anholt GE, Meiran N. Memory for Action Rules and Reaction Time Variability in Attention-Deficit/Hyperactivity Disorder. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging. 2016;1:132–140. doi: 10.1016/j.bpsc.2016.01.003.

- 55.Niv Y, Daw N, Joel D, Dayan P. Tonic dopamine: opportunity costs and the control of response vigor. Psychopharmacology. 2007;191:507–520. doi: 10.1007/s00213-006-0502-4.

- 56.Beeler JA. Thorndike’s law 2.0: dopamine and the regulation of thrift. Frontiers in Neuroscience. 2012 doi: 10.3389/fnins.2012.00116.

- 57.Constantino SM, Daw ND. Learning the opportunity cost of time in a patch-foraging task. Cognitive, Affective, & Behavioral Neuroscience. 2015;15:837–853. doi: 10.3758/s13415-015-0350-y.

- **58.McGinty VB, Lardeux S, Taha SA, Kim JJ, Nicola SM. Invigoration of Reward Seeking by Cue and Proximity Encoding in the Nucleus Accumbens. Neuron. 2013;78:910–922. doi: 10.1016/j.neuron.2013.04.010. Subtle behavioral and trial features reveal that rats are biased towards proximal instrumental levers over and above their preferences between high- and low-effort alternatives, and that this proximity bias is reflected in the activity of striatal neurons mediating approach to levers.

- 59.Constantino SM, Dalrymple J, Gilbert RW, Varenese S, Di Rocco A, Daw N. A Neural Mechanism for the Opportunity Cost of Time. BioRxiv. 2017 doi: 10.1101/173443.

- 60.Rutledge RB, Lazzaro SC, Lau B, Myers CE, Gluck MA, Glimcher PW. Dopaminergic Drugs Modulate Learning Rates and Perseveration in Parkinson’s Patients in a Dynamic Foraging Task. Journal of Neuroscience. 2009;29:15104–15114. doi: 10.1523/JNEUROSCI.3524-09.2009.

- 61.Lee TG, Grafton ST. Out of control: Diminished prefrontal activity coincides with impaired motor performance due to choking under pressure. Neuroimage. 2015;105:145–155. doi: 10.1016/j.neuroimage.2014.10.058.

- 62.Hills TT, Todd PM, Lazer D, Redish AD, Couzin ID, the Cognitive Search Research Group. Exploration versus exploitation in space, mind, and society. Trends in Cognitive Sciences. 2015;19:46–54. doi: 10.1016/j.tics.2014.10.004.

- 63.Kroemer NB, Lee Y, Pooseh S, Eppinger B, Goschke T. L-DOPA reduces model-free control of behavior by attenuating the transfer of value to action. BioRxiv. 2016 doi: 10.1101/086116.

- 64.Viejo G, Khamassi M, Brovelli A, Girard B. Modeling choice and reaction time during arbitrary visuomotor learning through the coordination of adaptive working memory and reinforcement learning. Frontiers in Behavioral Neuroscience. 2015;9:208. doi: 10.3389/fnbeh.2015.00225.

- 65.Doll BB, Duncan KD, Simon DA, Shohamy D, Daw ND. The Curse of Planning: Dissecting Multiple Reinforcement-Learning Systems by Taxing the Central Executive. Psychological Science. 2015;18:767–772. doi: 10.1177/0956797612463080.

- 66.Doll BB, Bath KG, Daw ND, Frank MJ. Variability in Dopamine Genes Dissociates Model-Based and Model-Free Reinforcement Learning. Journal of Neuroscience. 2016;36:1211–1222. doi: 10.1523/JNEUROSCI.1901-15.2016.

- 67.Kurth-Nelson Z, Bickel W, Redish AD. A theoretical account of cognitive effects in delay discounting. European Journal of Neuroscience. 2012;35:1052–1064. doi: 10.1111/j.1460-9568.2012.08058.x.

- 68.Hunt LT, Rutledge RB, Malalasekera WMN, Kennerley SW, Dolan RJ. Approach-Induced Biases in Human Information Sampling. PLoS Biology. 2016;14:e2000638. doi: 10.1371/journal.pbio.2000638.

- 69.Vandormael H, Herce Castañón S, Balaguer J, Li V, Summerfield C. Robust sampling of decision information during perceptual choice. Proceedings of the National Academy of Sciences. 2017;114:2771–2776. doi: 10.1073/pnas.1613950114.