Abstract

Numerous quantitative indicators are currently available for evaluating research productivity. No single metric is suitable for comprehensive evaluation of author-level impact. The choice of particular metrics depends on the purpose and context of the evaluation. The aim of this article is to provide an overview of some of the widely employed author impact metrics and to highlight prospects for their optimal use. The h-index, one of the most popular metrics for research evaluation, is easy to calculate and understandable for non-experts. It is automatically displayed on researcher and author profiles in citation databases such as Scopus and Web of Science. Its main advantage is that it combines publication and citation counts in a single measure. This index is increasingly cited globally. Although it is an appropriate indicator of the publication and citation activity of highly productive and successfully promoted authors, the h-index has been criticized primarily for disadvantaging early career researchers and authors with few indexed publications. Numerous variants of the index have been proposed to overcome its limitations. Alternative metrics have also emerged to highlight ‘societal impact.’ However, each of these traditional and alternative metrics has its own drawbacks, necessitating careful analyses of the context of social attention and the value of publication and citation sets. The optimal use of researcher and author metrics depends on the evaluation purpose and is shaped by the information available from various global, national, and specialist bibliographic databases.

Keywords: Research Evaluation, Bibliometrics, Bibliographic Databases, h-index, Publications, Citations

INTRODUCTION

In the era of digitization of scholarly publishing, opportunities for improving the visibility of researchers and authors from any corner of the world are increasing rapidly. The indexing and permanent archiving of published journal articles and other scholarly items with assigned identifiers, such as the Digital Object Identifier (DOI) from CrossRef, help create online profiles, which play an important role in the evaluation of research performance throughout an academic career.1,2 Researchers need such profiles to showcase their academic accomplishments, interact with potential collaborators, and compete successfully for funding and promotion.

For skilled researchers, choosing ethical, widely visible, and professionally relevant sources for publishing research data is the first step toward making a global impact. The choice of target journals is critical at a time of proliferating bogus journals, which increase their article output at the expense of quality, visibility, and citability. Researchers should be trained to manage their online profiles by listing their most valuable and widely visible works, which may attract the attention of both professionals and the public at large. It is equally important to understand the value and relevance of the currently available evaluation metrics, which can be displayed on individual profiles by sourcing information from various bibliographic databases, search engines, and social networking platforms.

This article aims to review current approaches to comprehensive research evaluation and to provide recommendations on the optimal use of widely employed author-level metrics.

AUTHOR PROFILES IN THE CONTEXT OF RESEARCH EVALUATION

Scopus and Web of Science are currently the most prestigious multidisciplinary bibliographic databases, providing information for comprehensive evaluation of research productivity across numerous academic disciplines. Notably, Scopus is the largest multidisciplinary database, indexing not only English but also numerous non-English scholarly sources.3 Professionals can find a critical mass of Scopus-indexed sources reflecting scientific progress and impact in their subject categories. The database has adopted stringent indexing and re-evaluation strategies to maintain a list of ethically sound and influential journals and to delist sources that fail to meet publication ethics and bibliometric standards.4 Unsurprisingly, publication and citation data in prestigious global academic ranking systems, such as the Times Higher Education World University Rankings and QS World University Rankings, are currently sourced from the Scopus database. Scopus author profiles can be viewed as highly informative for most non-Anglophone countries and emerging scientific powers. Errors in author profiles, including several automatically generated identifiers for the same author, can be easily corrected by reporting the inaccuracies and requesting profile mergers through Scopus customer service.

Web of Science and Google Scholar author profiles are also processed for author impact evaluations, though emerging evidence from comparative analyses does not favor these platforms on citation-counting and feasibility grounds.5,6,7 Compared with Scopus, the Science Citation Index and Social Sciences Citation Index, the most selective databases of Web of Science, index far fewer journals, which are predominantly in English, thereby disadvantaging non-Anglophone researchers and authors, who are better represented in Scopus, Google Scholar, and regional/local indexing platforms. Google Scholar profiles often contain information about multilingual publications that are not covered by prestigious citation databases. Such profiles are valuable for countries with limited coverage of their journals by Scopus and Web of Science (e.g., Russia, Kazakhstan, and other Central Asian countries) and for authors of online monographs, book chapters, and non-peer-reviewed and grey literature items.8

Google Scholar provides information about authors' citations by tracking a wide variety of online journals, book chapters, conference papers, web pages, and grey literature items. However, several versions of the same item may appear in Google Scholar searches, affecting the automatic calculation of citation metrics. Also, this search engine fails to recognize low-quality and apparently ‘predatory’ sources and to exclude them from bibliometric calculations.9 Authors themselves may intentionally or unintentionally fill their Google Scholar profiles with substandard items and articles by other authors with identical names. Experts have highlighted these and many other caveats as reasons to disqualify author impact metrics generated by this platform.10

Regional indexing services, such as the Russian Science Citation Index (RSCI), Chinese Science Citation Database, and Indian Citation Index, fill gaps in the research evaluation of authors underrepresented in Scopus and Web of Science. In fact, of 9,560 journals currently published in Russia, only 1.8% are indexed by Web of Science and 3.5% by Scopus.11 Moreover, the proportion of Russian articles tracked by the Journal Citation Reports' Science Edition (Thomson Reuters) is extremely small (1.7% in 2010).12 To improve the citation tracking of Russian academic journals, the RSCI was launched in 2009, providing an opportunity for a sizable proportion of Eurasian authors to register, acquire profiles, and monitor their locally generated metrics. With more than 650 Russian core journals selected for coverage on the Web of Science platform in 2016,13 Eurasian authors, especially those in disadvantaged subject categories (e.g., social sciences, education, humanities), have improved their global visibility and prestige through locally generated citation metrics. Although the indexing criteria of the RSCI and the regularity of updating its contents differ from those of the global bibliographic databases, the availability of local author impact metrics can be instrumental for further research evaluations.

Additional information on author-level impact can be gathered from emerging scholarly networking platforms, such as ResearchGate and ImpactStory, which combine traditional publication and citation records with indicators of the social attractiveness of scholarly items (e.g., reads, online mentions).14,15 ResearchGate, a social platform with its own policy of open archiving, citation tracking, networking, and sharing of archived works, depends chiefly on items uploaded by its users, some of whom upload few of their works, limiting the reliability of the related author metrics.16 The automatically calculated ResearchGate scores are displayed on registered user profiles and processed for ranking by the platform. Although the ResearchGate platform has more than 14 million registrants, their profiles are unevenly distributed across academic disciplines and countries. Additionally, items uploaded by users do not pass quality checks, leaving room for promoting substandard sources and further limiting the value of the calculated scores.

TRADITIONAL AUTHOR IMPACT METRICS

h-index

One of the most widely promoted and highly cited author metrics is the h-index. It was proposed by the physicist Jorge Hirsch in 2005 to quantify the cumulative impact of an individual's scientific articles tracked by a citation database (Web of Science at the time of the proposal).17 Hirsch suggested analyzing publication and citation records together. He defined the index as follows: an author has index h if h of his/her Np papers have at least h citations each and the other (Np − h) papers have no more than h citations each. The metric can be calculated manually by listing an individual's articles in decreasing order of citation counts; h is the highest rank at which an article still has at least as many citations as its rank. For example, if an author published 100 articles, each of which is cited at least 100 times, his/her h-index is 100.
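The manual procedure described above can be sketched in a few lines of Python. This is a minimal illustration, assuming a plain list of per-article citation counts as input; the function name `h_index` and the sample record are hypothetical, and real databases differ in which citations they count.

```python
def h_index(citations):
    """Largest h such that h articles have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # decreasing citation counts
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank  # the article at this rank still has >= rank citations
        else:
            break     # every later article has even fewer citations
    return h


# Hypothetical record of 10 articles (illustrative numbers only).
record = [25, 18, 12, 9, 7, 6, 3, 2, 1, 0]
print(h_index(record))       # 6
print(h_index([100] * 100))  # 100, the example in the text
```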

By comparing the proposed index with single-number metrics, such as the number of publications, citations, and citations per paper, J. Hirsch noted several advantages of the combined approach. He considered papers with at least h citations (the “Hirsch core”) as the most impactful and important for an individual's achievements in his/her professional area.18 An important conclusion that J. Hirsch drew from an analysis of the h-indices of Nobel laureates in physics was that breakthroughs in current science are possible with continuing scientific effort over a certain period of time and with the positive attitude of other authors, which is reflected in increasing citations of innovative research items.

Since its proposal in 2005, the h-index has been endorsed globally as a simple, intuitive, and universally applicable metric, which can be automatically calculated and displayed on author profiles at Scopus, Web of Science, and Google Scholar. Remarkably, it has revolutionized research evaluation strategies.19

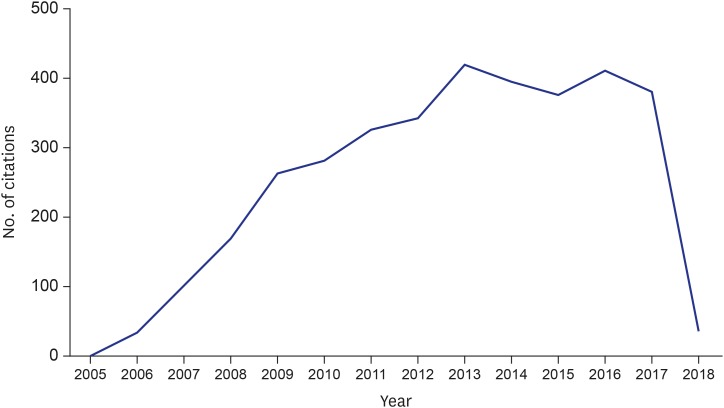

As of February 10, 2018, J. Hirsch's index article has been cited 3,533 times by Scopus-indexed items (Fig. 1). The growing global interest in the h-index and related evaluation metrics is reflected in a snapshot Scopus-based analysis of these 3,533 citing items. Of these items, 84 are cited at least 84 times each (h-index of this research topic = 84). Citation activity increased rapidly after 2005 and peaked at 411 citations in 2013. The number of citations for 2018 is relatively small, but it will most probably increase once the annual records are completely processed.

Fig. 1.

Number of Scopus-indexed items citing J. Hirsch's landmark article on the h-index in 2005–2018 (as of February 10, 2018).

The top 5 sources of the citations are Scientometrics (n = 415), J Informetr (n = 227), J Am Soc Inf Sci Technol (n = 97), PLOS One (n = 83), and Lecture Notes in Computer Science (n = 61). The top 5 citing authors are leading experts in scientometrics Ronald Rousseau (Leuven, Belgium; number of publications citing the index article = 54), Leo Egghe (Diepenbeek, Belgium; n = 47), Lutz Bornmann (Munich, Germany; n = 46), Jean A. Eloy (Newark, United States; n = 35), and Peter F. Svider (Detroit, United States; n = 33). The United States leads in citation activity with 864 related documents, followed by China (n = 348), Spain (n = 272), the United Kingdom (n = 259), and Germany (n = 234). The largest proportion of citing documents are articles (n = 2,456, 69.5%), followed by conference papers (n = 452, 12.8%) and reviews (n = 239, 6.8%). The main subject areas of the citing items are computer science (n = 1,499, 42.4%), social sciences (n = 1,137, 32.2%), and medicine (n = 761, 21.5%).

Numerous studies have employed the h-index for evaluating the lifetime performance of scholars across most fields of science. This metric is widely employed for academic promotion, research fund allocation, and evaluation of the prestige of journal editorial boards.20,21,22 Several studies have extended its use to ranking journals, research topics, institutions, and countries.23,24,25,26

Despite some advantages for evaluating influential, or ‘elite,’ researchers, the h-index has been criticized for numerous limitations and inappropriate uses.27 Even though related thresholds are often arbitrary, this index is evidently inappropriate for ranking authors with few publications and citations. At the same time, the index scores of ‘elite’ researchers can increase with growing citations to their ‘old’ articles even as their overall publication activity declines, which disqualifies it as a metric of dynamic changes in productivity. With the digitization and expanded indexing of historic papers, the h-index scores of deceased authors can increase, giving a false impression of growing productivity.24

The h-index calculations are insensitive to article types, highly cited items, total citations, self-citations, public accessibility (open access), number and gender of co-authors, cooperation networks, referencing patterns, and research funding, all of which differ widely across disciplines and countries. On the dark side, some authors, particularly those with a small number of publications and citations, may be tempted to inflate their scores intentionally through irrelevant self-citations, resulting in annual increases of the h-index by at least 1. Such manipulations can be easily detected by calculating a related score (q-index) and by contextual qualitative analyses of citation patterns.28

A recent large bibliometric analysis of 935 US-based plastic surgeons revealed that research-specific funding and higher funding amounts significantly correlate with their h-index scores.29

The importance and value of articles indexed by specialist databases are not reflected in h-index scores. For example, the list of items indexed by MEDLINE, a specialist biomedical database, is more important for biomedical authors than their publication records in other databases.30

The h-index values of the same author vary widely depending on the citation-tracking platform employed.31 Compared with Scopus and Web of Science, Google Scholar often yields higher values of the index. Any such calculations, however, overlook the scientific prestige, ethical soundness (predatory and retracted vs. ethical and actively indexed items), and context of publications and citations. Articles in top journals increase the scientific prestige and academic competitiveness of their authors, and the same is true for citations from top journals.

h-index variants

At least 50 variants of the h-index have been proposed to overcome some of its disadvantages and improve numerical analyses of an individual's research productivity.32 Nonetheless, an analysis of 37 variants revealed that most of these alternative metrics correlate highly with the original h-index and add no new dimension for measuring research productivity.33 Above all, each new indicator has specific limitations (Table 1), which point to the need for further validation by experts in bibliometrics.

Table 1. Main strengths and limitations of some author-level metrics.

| Metric | Strengths | Limitations |
|---|---|---|
| h-index | Easily calculated, bidimensional metric for measuring the publication and citation impact of highly productive researchers | Not suitable for early career researchers and those with few publications; can be manipulated by self-citations; does not fluctuate over time |
| Author Impact Factor | Focuses on publication and citation activity in different 5-year periods; fluctuates over time | Five-year time window can be too narrow for authors in slowly developing disciplines |
| g-index | Gives more weight to highly cited items and helps visualize an individual's impact when the h-index score and total citations are low | Unlike the h-index, the g-index depends on the average number of citations of the top-ranked (top g) papers |
| e-index | Focuses on highly cited items and helps distinguish highly productive authors with identical h-index scores but differing total citation counts | Unsuitable for individuals with small publication and citation counts |
| PageRank index | Considers the weight of citations; does not increase with growing (self-)citations from low-impact sources | Calculated with a version of the PageRank algorithm, which is not easily understood by non-experts; values are highly dependent on the visibility and promotion of cited items |
| Total publications | True reflection of productivity, recorded by sourcing information from bibliographic databases and summing the number of works published annually | Article type and quality are not taken into account; publishing low-quality and nonsense items can boost publication records |
| Total citations | Simple measure of an individual's influence; reflects citing authors' interest in published items | Context of citations and weight of cited articles are overlooked |

The timed h-index was proposed to visualize publication and citation dynamics throughout an individual's career.34 It is calculated in analogy with the original h-index but is restricted to a 5-year time window, using Web of Science data. This approach focuses on recent publications and citations rather than lifetime performance and compares activity at various 5-year time points. Similar approaches have been tested with time windows restricted to 1 and 3 years.35,36 The shorter the time window, the lower the resulting h-index scores. These indices can be calculated for various periods, depending on the publication and citation traditions of academic disciplines.
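A time-windowed variant can be sketched by restricting both the articles and the citations counted to the same window before applying the usual h-index rule. This sketch assumes that each article is represented by its publication year and a list of the years in which it was cited; the data structure, function names, and sample years are hypothetical, and the original proposal works with Web of Science records rather than this toy format.

```python
def h_index(citations):
    ranked = sorted(citations, reverse=True)
    # Counts are in decreasing order, so "count >= rank" holds for a prefix only.
    return sum(1 for rank, c in enumerate(ranked, start=1) if c >= rank)


def timed_h_index(papers, end_year, window=5):
    """papers: list of (publication_year, [citing_years]) tuples."""
    start = end_year - window + 1
    counts = []
    for pub_year, citing_years in papers:
        if start <= pub_year <= end_year:  # keep only articles published in the window
            # ...and only citations received within the same window
            counts.append(sum(1 for y in citing_years if start <= y <= end_year))
    return h_index(counts)


# Hypothetical record; the 2010 article falls outside both windows below.
papers = [
    (2010, [2011] * 20 + [2017] * 10),
    (2014, [2015, 2016, 2017, 2018]),
    (2015, [2016, 2017, 2018]),
    (2016, [2017, 2018, 2018]),
    (2017, [2018]),
]
print(timed_h_index(papers, 2018, window=5))  # 3
print(timed_h_index(papers, 2018, window=3))  # 1
```

In this toy record, shortening the window from 5 to 3 years lowers the score, in line with the observation above.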

A 5-year time window is also used for the Author Impact Factor (AIF), which is calculated in analogy with the Journal Impact Factor.37 The AIF of an author in a given year is the number of citations received in that year by the author's articles published in the previous 5 years, divided by the number of those articles (based on data from Web of Science). Like the timed h-index, the AIF is sensitive to fluctuations in publication and citation activity over certain periods and may reveal an unusual increase in citations to an author's work(s) following the publication of groundbreaking papers.
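Under the reading of the AIF given above, the calculation for one year can be sketched as follows. The record format (publication year plus a list of citing years), the function name, and the sample data are hypothetical; returning `None` when the author has no articles in the window is a design choice of this sketch, not part of the published definition.

```python
def author_impact_factor(papers, year, window=5):
    """Citations received in `year` by articles published in the `window`
    years before `year`, divided by the number of those articles."""
    eligible = [cites for pub, cites in papers if year - window <= pub <= year - 1]
    if not eligible:
        return None  # undefined: no articles in the evaluation window
    received = sum(citing.count(year) for citing in eligible)
    return received / len(eligible)


# Hypothetical record: (publication_year, [years in which it was cited]).
papers = [
    (2012, [2014, 2017, 2018]),       # outside the 2013-2017 window for 2018
    (2013, [2015, 2018, 2018]),
    (2015, [2016, 2018]),
    (2017, [2018, 2018, 2018, 2018]),
]
print(round(author_impact_factor(papers, 2018), 2))  # 2.33 (7 citations / 3 articles)
```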

J. Hirsch also proposed the m-quotient to correct the h-index for career length and to compare the impact of researchers with academic careers of different lengths in the same field. The m-quotient is calculated by dividing the h-index score by the number of years since the evaluated author's first publication.17 This metric correlates positively with the academic rank of evaluated individuals.38 Like the h-index, the m-quotient is unsuitable for evaluating early career researchers. Besides, the first publication does not always mark the start of an active career in a certain field, since it can take years for a researcher to find his/her academic niche. Finally, the m-quotient overlooks interruptions in an individual's career, which can be an important issue particularly for young and female researchers. To overcome this limitation, the contemporary h-index (hc) was proposed; it considers the age of each article, gives more weight to recent articles, and credits researchers with lasting contributions to a specific field of science.39
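Both corrections for career length can be sketched in Python. The m-quotient follows the definition above directly. For the contemporary h-index, this sketch assumes an age-weighting scheme that should be checked against the original proposal: each article's citation count is multiplied by γ·(age + 1)^(−δ), where age is the number of years since publication, with γ = 4 and δ = 1, and hc is then the largest h such that h articles have weighted scores of at least h. The parameter values, function names, and sample record are assumptions.

```python
def m_quotient(h, first_pub_year, current_year):
    """h-index divided by the number of years since the first publication."""
    years = current_year - first_pub_year
    if years <= 0:
        raise ValueError("career length must be at least one year")
    return h / years


def contemporary_h_index(papers, current_year, gamma=4.0, delta=1.0):
    """papers: list of (publication_year, citation_count) tuples.
    Assumed weighting: gamma * (current_year - pub_year + 1) ** (-delta)."""
    scores = [gamma * (current_year - pub + 1) ** (-delta) * cites
              for pub, cites in papers]
    ranked = sorted(scores, reverse=True)
    return sum(1 for rank, s in enumerate(ranked, start=1) if s >= rank)


print(m_quotient(20, 1998, 2018))  # 1.0

# Hypothetical author whose five articles (20 citations each) date from 1990-1994:
# the plain h-index is 5, but the age weighting lowers hc.
old_work = [(1990 + i, 20) for i in range(5)]
print(contemporary_h_index(old_work, 2018))  # 2
```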

g-index

In 2006, Leo Egghe40 designed another metric that focuses on a set of highly cited articles. His proposal has received 802 citations in Scopus (as of February 10, 2018). The g-index can be calculated with Harzing's Publish or Perish software, using data from Google Scholar or subscription citation databases.41 To compute this index, a set of articles is ranked in decreasing order of the number of citations. The resulting score is the largest number g such that the top g articles together received at least g² citations. Roughly, the h-index requires a reasonable number of cited articles to get a high score, whereas the g-index can be high even with a few highly cited articles.42 For the same individual, the g-index is never smaller than the h-index. For example, an author with 10 published articles, three of which are cited 60, 30, and 10 times (100 in total), will get a g-index of 10 and an h-index of 3.
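The ranking procedure for the g-index can be sketched in Python and checked against the worked example in the text. This minimal sketch assumes a list of per-article citation counts and, like the example, does not let g exceed the number of articles (some implementations pad the list with uncited articles); the function names are hypothetical.

```python
def g_index(citations):
    """Largest g such that the top g articles together have >= g**2 citations."""
    ranked = sorted(citations, reverse=True)
    g, cumulative = 0, 0
    for rank, cites in enumerate(ranked, start=1):
        cumulative += cites
        if cumulative >= rank * rank:
            g = rank  # keep scanning: we want the largest qualifying rank
    return g


def h_index(citations):
    ranked = sorted(citations, reverse=True)
    return sum(1 for rank, c in enumerate(ranked, start=1) if c >= rank)


# The example from the text: 10 articles, three cited 60, 30, and 10 times.
example = [60, 30, 10] + [0] * 7
print(g_index(example), h_index(example))  # 10 3
```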

e-index

Chun-Ting Zhang43 proposed the e-index to rank researchers with identical h-index scores but different total citations. As of February 10, 2018, the article has been cited 170 times in Scopus. This complementary index quantifies the excess citations in the Hirsch core, that is, the citations to the top h articles beyond the h² needed to reach the h-index score, and thus helps distinguish researchers with large citation surpluses. It is an informative indicator for highly cited researchers, and particularly for Nobel laureates. Although the g-index is sensitive to highly cited items, the e-index is a more accurate metric for evaluating differences in the Hirsch core citations of highly cited researchers.44
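The excess-citation idea behind the e-index can be written as e² = (sum of citations to the top h articles) − h². A minimal Python sketch, assuming per-article citation counts as input and using two hypothetical authors with the same h-index of 3, could look like this:

```python
import math


def e_index(citations):
    """Square root of the Hirsch-core citations in excess of h**2."""
    ranked = sorted(citations, reverse=True)
    h = sum(1 for rank, c in enumerate(ranked, start=1) if c >= rank)
    excess = sum(ranked[:h]) - h * h  # never negative: each core article has >= h citations
    return math.sqrt(excess)


# Two hypothetical authors, both with h = 3.
author_a = [3, 3, 3, 1]
author_b = [50, 20, 10, 1]
print(e_index(author_a))            # 0.0
print(round(e_index(author_b), 2))  # 8.43, i.e. sqrt(80 - 9)
```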

n-index

A field-normalization approach has also been proposed to evaluate researchers with identical h-index scores. The Namazi (n)-index compares researchers' impact in view of citation patterns in related scientific fields.45 It is calculated by dividing an individual's h-index by the h-index of a top journal in a related subject category, with information sourced from Scopus and the SCImago Journal & Country Rank platform. Despite its originality, the n-index has not attracted much attention, primarily because the idea lacks mathematical justification. In practical terms, the resulting n-index scores are small numbers, and the index can be calculated using Scopus data only. Also, the individual and journal metrics employed reflect different phenomena, making the proposed formula difficult to justify.
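The n-index arithmetic is a single division. The sketch below, with hypothetical h-index values, shows how two authors with identical h-index scores would be separated when the top journals in their fields differ in h-index; the numbers are illustrative and not taken from Scopus or SCImago.

```python
def n_index(author_h, top_journal_h):
    """Author's h-index divided by the h-index of a top journal in the field."""
    if top_journal_h <= 0:
        raise ValueError("the journal h-index must be positive")
    return author_h / top_journal_h


# Hypothetical: both authors have h = 12, but their fields' top journals differ.
print(n_index(12, 300))  # 0.04
print(n_index(12, 120))  # 0.1
```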

PageRank index

The originators of the PageRank index have taken a completely different approach to evaluating an individual's impact, one that corrects for possible citation manipulations.46,47 The proposal is still new and not widely endorsed. The idea is to analyze citation networks using Google's PageRank algorithm. The index gives more weight to citations from publications with high PageRank scores and allows distinguishing early career researchers with even a few innovative publications that attract the attention of citing authors. Unlike the h-index, the PageRank index does not emphasize quantity; instead, it pays more attention to the innovative dimension and the ‘quality’ of the analyzed publications. The computation of the PageRank index is sophisticated and not readily understandable to non-experts. It is clear, however, that the first step toward increasing the impact is to gain more visibility on Google Scholar for a set of articles, which may increase the chances of citations from widely visible, highly prestigious, and well-cited online items. The index can increase even with a few citations from articles that themselves attract a growing number of citations.
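To make the underlying idea less opaque, the sketch below runs the standard PageRank power iteration on a toy citation network and compares two articles that each receive one citation. It illustrates PageRank itself, not the published PageRank-index procedure: the damping factor of 0.85, the even redistribution of rank from articles that cite nothing, the paper labels, and the graph are all assumptions of this sketch.

```python
def pagerank(cites, damping=0.85, iterations=100):
    """cites maps each paper to the list of papers it cites."""
    nodes = set(cites) | {q for refs in cites.values() for q in refs}
    n = len(nodes)
    rank = {p: 1.0 / n for p in nodes}
    for _ in range(iterations):
        # Papers that cite nothing spread their rank evenly over all papers.
        dangling = sum(rank[p] for p in nodes if not cites.get(p))
        new = {p: (1.0 - damping) / n + damping * dangling / n for p in nodes}
        for p, refs in cites.items():
            for q in refs:
                new[q] += damping * rank[p] / len(refs)  # share rank among cited papers
        rank = new
    return rank


# Toy network: four papers cite "hub", which cites "x1"; an uncited paper
# "low" cites "y1". Both x1 and y1 have exactly one citation.
network = {"p1": ["hub"], "p2": ["hub"], "p3": ["hub"], "p4": ["hub"],
           "hub": ["x1"], "low": ["y1"]}
scores = pagerank(network)
print(scores["x1"] > scores["y1"])  # True: x1 is cited by a highly ranked paper
```

An author-level score could then be derived, for example, by summing the scores of an author's papers; that aggregation step is likewise an assumption here.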

Total publications

One of the main limitations of the h-index relates to its focus on a large number of publications as a reflection of productivity, which is achievable over a certain period of academic activity, particularly in teams of experts at prestigious institutions who work on ‘hot’ research topics with proper funding, a supply of modern equipment, and the support of co-authors and collaborators.48,49

As a notable example, leading American cardiologists involved in cardiovascular drug trials at prestigious research centers have exceptionally high h-index scores (e.g., Eugene B. Braunwald and Paul M. Ridker have 1,100 and 959 Scopus-indexed articles with h-index scores of 182 and 177, respectively). Evidently, the h-index cannot be employed for ranking and promoting early career researchers, academic faculty members overburdened with teaching responsibilities, or experts in emerging disciplines with a small number of articles.

To increase their h-index scores, researchers have to publish more in the first place, increasing their total publications, which is the basic activity indicator.50 Scopus-based analyses, for example, generate graphs showing who publishes most in certain fields. This type of information can be employed for mapping scientific progress.51

While high publication counts can be associated with great achievements, innovations, and high citation counts in developed countries,52,53 sloppy and otherwise unethical publications may occasionally inflate this measure of productivity elsewhere. Some researchers erroneously believe that the higher the number of publications, the greater the chances of making an impact and achieving a high academic rank. Such beliefs, which are dominant in some non-mainstream science countries, have led to the growth of unjustified, ‘salami-sliced,’ inconclusive, redundant, and pseudoscientific publications.54,55,56 Alarmingly, the unprecedented growth of secondary publications, such as systematic reviews and meta-analyses, often generated from common templates, pollutes the evidence base in oncology, medical genetics, and other critically important fields of clinical medicine and public health.57,58 When comparing the records of active and non-active researchers, evaluators should recognize and filter out ‘useless’ and nonsense publications.

Total publication count as a quantitative metric overlooks the relevance of article type, number of co-authors, subject category, indexing database, and the value of publishing in both national and top journals (e.g., Nature, Science). Publication records of early career researchers can be easily inflated by numerous case studies, which rarely find their way into top journals, weakly influence evidence accumulation and bibliometric analyses, and often convey only a practical message.59,60,61 Publication counts are also high among authors who produce numerous letters and commentaries dispersed across journals of various academic disciplines. Such practice of boosting numbers by generating pointless items is harshly criticized as counterproductive and unethical.55

Although authors can collect their publications from various indexed and non-indexed sources and display the related records on their online profiles,2 evaluators have to navigate through several platforms, including national citation indexes and specialist databases, to judge publication outputs comprehensively and accurately. Korean research managers, for example, prioritize not just total publications but also articles indexed by the Korean Citation Index, which attract more local citations than those indexed by global databases but remain unnoticed by the global community.62

Busy practitioners, academic faculty members, and researchers in emerging fields of science usually publish much less and lag behind their colleagues who work in established research areas. Evidence suggests that basic mentorship and writing support by peers and professional writers, aimed at adhering to available standards and citing reliable sources, effectively and ethically increases publication activity.63,64,65

Total citations

Citation counts reflect the interest of readers and citing authors in a certain set of articles. To some extent, this indicator is a surrogate measure of research quality. Authors with several thousand citations, received in the course of long-lasting academic activity, can be viewed as generators of quality items that influence scientific progress.

In medicine, a large number of citations in an individual's profile is often due to authoring innovative methodology reports, clinical trials, large cohort studies, systematic reviews, and practice recommendations.66,67,68 A notable example is the case of Oliver H. Lowry,69 who published his paper on protein measurement with the Folin phenol reagent in 1951. As of February 10, 2018, Lowry's profile in Scopus records 261,840 total citations for 226 documents, with 244,589 of them linked to the seminal methodology paper.

Evidence from a systematic review suggests that authors who publish statistically significant results, supporting their working hypotheses, receive 1.6–2.7 times more citations than those who, quite correctly, publish non-significant and negative results.70

A detailed analysis of the distribution of citations provides crucial information about the context of research productivity. An individual's single landmark article may garner more citations than all other publications combined.71,72 Excessive citations to a single article are often eye-catching, with Oliver H. Lowry's methodology paper being the best example. The speed with which published articles attract citing authors' attention can also be revealing and can identify researchers with truly innovative and popular research studies.73
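The concentration of citations described above can be quantified as the share of an author's total citations that goes to the top k articles. A minimal sketch, assuming per-article citation counts as input (the function name is hypothetical), applied to the g-index example and to the Scopus figures for Oliver H. Lowry given in the text:

```python
def top_share(citations, k=1):
    """Fraction of all citations received by the k most cited articles."""
    ranked = sorted(citations, reverse=True)
    total = sum(ranked)
    return sum(ranked[:k]) / total if total else 0.0


print(top_share([60, 30, 10] + [0] * 7))  # 0.6

# Lowry (as of February 10, 2018): 244,589 of 261,840 total citations
# are linked to the 1951 methodology paper.
print(f"{244589 / 261840:.1%}")  # 93.4%
```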

A recent bibliometric study suggested that the growing citation counts in the past 5 years, reflected in the h5-index scores, can reveal candidates with a growing influence and the best chances for academic promotion.74

A longer time window is employed by Clarivate Analytics to distinguish exceptional and actively publishing researchers. Researchers are ranked as highly cited if their articles are regularly listed in the top 1% of highly cited items in their Web of Science subject category over the past 10 years. Since 2014, more than 3,000 highly cited researchers have each been assigned to one of 21 subject fields and credited annually by Clarivate Analytics.75 The results of the related annual reports of the Essential Science Indicators (a Web of Science component) have influenced the Academic Ranking of World Universities.76

Despite the growing role of citation counts for crediting previously published works,77 it is also increasingly important to identify irrelevant self-citations and reciprocal, or ‘friendly,’ citations that artificially inflate an individual's profile.78 These unethical citations, which often originate from non-mainstream science countries and substandard sources, primarily skew the evaluation of authors with a small number of citations.79

EMERGING ALTERNATIVE METRICS

Citation counts and related traditional metrics depend on authors' citing behavior and on the speed with which citing articles are published. Even at a time of widely available fast-track online periodicals, the publication process is still lengthy, which makes it impossible to evaluate immediate public attention to research output. Altmetric indicators, which are displayed on Altmetric.com (supported by Macmillan Science and Education, the owner of Nature Publishing Group), reflect public attention in a wider context and counterbalance some of the limitations of citation metrics. The website was established in 2011. Downloads, reads, bookmarks, shares, mentions, and comments on published items in any social media outlet, not necessarily a scholarly one, are now available for analyzing the public value, or “societal impact,” of publications.80,81 Detailed analyses may help ascertain the geography and professional interests of the immediate evaluators who tweet, share, and positively mark relevant and valuable items on social media channels. These evaluators are not just researchers and potential authors but also trainees, practitioners, and public figures.82 Therefore, careful interpretation of the context of social media attention is advisable.83

Scholarly users of social media are unevenly distributed across disciplines, with social scientists the most active and life scientists the least active groups.84 Such discrepancies necessitate field normalization before social media indicators are compared across disciplines.
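
A simple form of field normalization divides each item's count by the mean count of items in the same field, so that a score of 1.0 marks field-average attention. The Python sketch below assumes hypothetical (item ID, field, count) records, for example tweet counts; it is only an illustration, and published approaches may also normalize by publication year or use other reference sets.

```python
from collections import defaultdict
from statistics import mean


def field_normalized(items):
    """items: list of (item_id, field, count) tuples, e.g., tweet counts
    (hypothetical data). Returns item_id -> count / mean count of the field,
    so 1.0 means field-average attention. Fields whose mean is 0 get 0.0."""
    counts_by_field = defaultdict(list)
    for _, field, count in items:
        counts_by_field[field].append(count)
    field_means = {field: mean(counts) for field, counts in counts_by_field.items()}
    return {
        item_id: (count / field_means[field] if field_means[field] > 0 else 0.0)
        for item_id, field, count in items
    }


items = [
    ("soc-1", "Social sciences", 30),
    ("soc-2", "Social sciences", 10),
    ("life-1", "Life sciences", 6),
    ("life-2", "Life sciences", 2),
]
print(field_normalized(items))
# {'soc-1': 1.5, 'soc-2': 0.5, 'life-1': 1.5, 'life-2': 0.5}
```

Although the raw counts differ fivefold (30 versus 6), the two most visible items receive the same normalized score, because each draws 1.5 times the attention typical of its field.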

One of the scholarly components of alternative impact evaluation takes into account comments and recommendations posted on F1000Prime, PubPeer, Publons, and other emerging services for publicizing peer review and crediting peer reviewers.85 A recent analysis of the research performance on Google Scholar and the peer-review activities of scholars registered with Publons revealed that this peer-review forum provides scholarly information of great importance to journal editors.86 Publons has also been recognized as a supplier of data for quantifying reviewer activities and for combining verifiable alternative metrics with traditional ones.87

Although no author-level composite alternative metric is currently available, the Altmetric Attention Scores of separate items can be grouped to distinguish the most attractive articles on an individual's online profile. These scores for items with digital identifiers, such as DOI and PubMed ID, can be freely retrieved using the Altmetric bookmarklet tool.88
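
Grouping item-level scores on a profile amounts to ranking them. The Python sketch below assumes that the Altmetric Attention Scores have already been collected by hand (e.g., with the bookmarklet) into a dictionary keyed by DOI or PubMed ID; the identifiers and scores in the example are hypothetical, and items without a score are skipped. It deliberately returns a ranked list rather than a single composite number, since no author-level composite metric is available.

```python
def rank_by_attention(scores, n=3):
    """scores: dict mapping an item identifier (DOI or PubMed ID) to its
    Altmetric Attention Score, or None if no score was found (hypothetical data
    collected by hand). Returns the n highest-scoring (identifier, score) pairs;
    ties are broken by identifier so the order is reproducible."""
    scored = [(item, score) for item, score in scores.items() if score is not None]
    scored.sort(key=lambda pair: (-pair[1], pair[0]))
    return scored[:n]


profile_scores = {
    "doi:example-a": 12,
    "doi:example-b": 40,
    "pmid:example-c": None,
    "doi:example-d": 40,
    "doi:example-e": 3,
}
print(rank_by_attention(profile_scores))
# [('doi:example-b', 40), ('doi:example-d', 40), ('doi:example-a', 12)]
```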

CONCLUSION

The evaluation of individual research productivity is a complex procedure that takes into account the volume of research output and its influence (Table 2). No single metric can comprehensively reflect the full scope of scholarly activities. At the same time, a single scholarly work with an innovative design and outcomes may attract global attention, point to a major scholarly achievement, and merit credit. Readers and prospective authors are the main evaluators: they refer to published works on their social networking platforms and in their own scholarly articles, highlighting the strengths and limitations of research data. Those who wish to increase their scholarly impact should therefore be concerned not just with the volume, but also with the quality, visibility, and openness of their works.89 Careful editing, structuring, and adequate illustration of articles may increase the chances of publishing in widely visible and highly prestigious sources. Greater visibility may, in turn, attract readers' attention, constructive post-publication criticism, and relevant citations.

Table 2. Pointers for employing researcher and author impact metrics.

- No single metric, and especially the widely used h-index, is suitable for comprehensive evaluation of an individual's research impact.
- Individual profiles in several bibliographic databases, including national and specialist ones, should be analyzed to comprehensively evaluate the global and local components of an individual's research productivity.
- Different types of published work reflect the priorities of research productivity across academic disciplines (e.g., conference papers in physics, journal articles in medicine, monographs in the humanities).
- The mere number of a researcher's publications, citations, and related metrics should not be viewed as a proxy for the quality of their scholarly activities.
- No threshold number of publications, citations, or related metrics can distinguish productive researchers from non-productive peers. Any such threshold (e.g., 50 articles) is arbitrary.
- Optimal metrics for research evaluation should be simple, intuitive, and easily understandable for non-experts.
- Quantitative indicators should complement, but not substitute for, expert evaluation.
- All author-level metrics are confounded by academic discipline, geography, (multi)authorship, time window, and researchers' age.
- The productivity of early-career researchers with few publications and that of seasoned authors with an established academic career and a large number of scholarly works should be evaluated separately.
- Comprehensive research evaluation implies understanding the context of all traditional and alternative metrics. When manipulation of publication and citation counts is suspected, the evaluation should preferably rely on information from higher-ranking periodicals.

Tools for quantitative evaluation of researcher and author impact have proliferated over the past decade, mainly owing to the proposal of the h-index in 2005 and the numerous attempts to overcome its limitations.90 As a simple and easily understandable metric, the h-index has been well accepted by evaluators. Several variants of the h-index have been proposed to correct for some, but not all, confounders (e.g., career length, level of activity in the past 5 years, excess citations to some items). However, the initial enthusiasm for the simplicity of quantitative evaluation has been overshadowed by concerns over the inappropriate use of individual metrics, prompting more detailed analyses of publication and citation sets. The professional relevance of publications and the context of citations often reveal the true impact of individual academic activities. Analyzing citation sources and their scientific prestige provides more valuable information than simply recording citation counts.
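
The confounders listed above can be made concrete with a minimal Python sketch of the h-index and three simple variants: Hirsch's m-quotient,17 which divides h by career length; Zhang's e-index,43 which captures citations in the h-core in excess of h²; and an h-index restricted to recently published papers. The last function, the career-length convention, and the data are illustrative assumptions, not the definitions used by any particular database.

```python
import math


def h_index(citations):
    """Hirsch (2005): largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    # In a descending list, `cites >= rank` holds only for a leading run of
    # papers, so counting the True cases gives h.
    return sum(1 for rank, cites in enumerate(ranked, start=1) if cites >= rank)


def m_quotient(citations, first_year, current_year):
    """h divided by career length, counted here as current_year - first_year
    (minimum 1); conventions for counting career years differ."""
    return h_index(citations) / max(1, current_year - first_year)


def e_index(citations):
    """Zhang (2009): square root of the h-core citations in excess of h**2."""
    ranked = sorted(citations, reverse=True)
    h = h_index(ranked)
    return math.sqrt(sum(ranked[:h]) - h * h)


def recent_h_index(papers, current_year, window=5):
    """h-index over papers published in the last `window` calendar years
    (hypothetical simplification). papers: list of (year, citations).
    Citations are counted over all time, unlike Schreiber's timed h-index,
    which also restricts the citation window."""
    recent = [cites for year, cites in papers if current_year - year < window]
    return h_index(recent)


# Hypothetical publication record: (publication year, citations).
papers = [(2008, 50), (2010, 20), (2012, 9), (2015, 6), (2016, 5), (2017, 2), (2017, 1)]
citations = [cites for _, cites in papers]
print(h_index(citations), m_quotient(citations, 2008, 2018),
      round(e_index(citations), 2), recent_h_index(papers, 2018))  # 5 0.5 8.06 2
```

The same record thus yields an h-index of 5 overall but only 2 for the most recent 5 years, while the e-index shows that the h-core holds 65 citations beyond the 25 that the h-index itself accounts for.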

Research productivity includes not just scholarly articles, but also peer reviewer comments, which can be counted as units of publication activity provided they are properly digitized and credited by evaluators. The Publons platform (Clarivate Analytics) is currently an emerging hub for publicizing reviewer comments and ranking skilled contributors to journal quality. Registering with Publons and transferring its records to individual online identifiers is an opportunity to further diversify researcher and author impact assessment.

Prospective use of researcher and author metrics is increasingly dependent on evaluation purposes. The evaluators should take a balanced approach and consider information sourced from various global, national, and specialist bibliographic databases.

Footnotes

Disclosure: The authors have no potential conflicts of interest to disclose.

Author Contributions: Data curation: Gasparyan AY, Yessirkepov M, Duisenova A. Investigation: Gasparyan AY, Yessirkepov M, Trukhachev VI, Kostyukova EI, Kitas GD. Writing - original draft: Gasparyan AY, Yessirkepov M, Duisenova A, Kostyukova EI, Kitas GD.

References

1. Peters I, Kraker P, Lex E, Gumpenberger C, Gorraiz J. Research data explored: an extended analysis of citations and altmetrics. Scientometrics. 2016;107:723–744. doi: 10.1007/s11192-016-1887-4.

2. Gasparyan AY, Nurmashev B, Yessirkepov M, Endovitskiy DA, Voronov AA, Kitas GD. Researcher and author profiles: opportunities, advantages, and limitations. J Korean Med Sci. 2017;32(11):1749–1756. doi: 10.3346/jkms.2017.32.11.1749.

3. Gasparyan AY, Ayvazyan L, Kitas GD. Multidisciplinary bibliographic databases. J Korean Med Sci. 2013;28(9):1270–1275. doi: 10.3346/jkms.2013.28.9.1270.

4. Scopus launches annual journal re-evaluation process to maintain content quality. [Updated 2015]. [Accessed February 10, 2018]. https://blog.scopus.com/posts/scopus-launches-annual-journal-re-evaluation-process-to-maintain-content-quality

5. Bodlaender HL, van Kreveld M. Google Scholar makes it hard - the complexity of organizing one's publications. Inf Process Lett. 2015;115(12):965–968.

6. Walker B, Alavifard S, Roberts S, Lanes A, Ramsay T, Boet S. Inter-rater reliability of h-index scores calculated by Web of Science and Scopus for clinical epidemiology scientists. Health Info Libr J. 2016;33(2):140–149. doi: 10.1111/hir.12140.

7. Powell KR, Peterson SR. Coverage and quality: a comparison of Web of Science and Scopus databases for reporting faculty nursing publication metrics. Nurs Outlook. 2017;65(5):572–578. doi: 10.1016/j.outlook.2017.03.004.

8. Mering M. Bibliometrics: understanding author-, article- and journal-level metrics. Ser Rev. 2017;43(1):41–45.

9. Shamseer L, Moher D, Maduekwe O, Turner L, Barbour V, Burch R, et al. Potential predatory and legitimate biomedical journals: can you tell the difference? A cross-sectional comparison. BMC Med. 2017;15(1):28. doi: 10.1186/s12916-017-0785-9.

10. Jacsó P. The pros and cons of computing the h-index using Google Scholar. Online Inf Rev. 2008;32(3):437–452.

11. Gorin SV, Koroleva AM, Ovcharenko NA. The Russian Science Citation Index (RSCI) as a new trend in scientific editing and publishing in Russia. Eur Sci Ed. 2016;42(3):60–63.

12. Libkind AN, Markusova VA, Mindeli LE. Bibliometric indicators of Russian journals by JCR-Science Edition, 1995–2010. Acta Naturae. 2013;5(3):6–12.

13. Russian science citation index. [Updated 2016]. [Accessed February 10, 2018]. http://wokinfo.com/products_tools/multidisciplinary/rsci/

14. Stuart A, Faucette SP, Thomas WJ. Author impact metrics in communication sciences and disorder research. J Speech Lang Hear Res. 2017;60(9):2704–2724. doi: 10.1044/2017_JSLHR-H-16-0458.

15. Orduna-Malea E, Delgado López-Cózar E. Performance behavior patterns in author-level metrics: a disciplinary comparison of Google Scholar Citations, ResearchGate, and ImpactStory. Front Res Metr Anal. 2017;2:14.

16. Shrivastava R, Mahajan P. An altmetric analysis of ResearchGate profiles of physics researchers: a study of University of Delhi (India). Perform Meas Metr. 2017;18(1):52–66.

17. Hirsch JE. An index to quantify an individual's scientific research output. Proc Natl Acad Sci USA. 2005;102(46):16569–16572. doi: 10.1073/pnas.0507655102.

18. Bornmann L, Marx W. The h index as a research performance indicator. Eur Sci Ed. 2011;37(3):77–80.

19. Ball P. Achievement index climbs the ranks. Nature. 2007;448(7155):737. doi: 10.1038/448737a.

20. Carpenter CR, Cone DC, Sarli CC. Using publication metrics to highlight academic productivity and research impact. Acad Emerg Med. 2014;21(10):1160–1172. doi: 10.1111/acem.12482.

21. Doja A, Eady K, Horsley T, Bould MD, Victor JC, Sampson M. The h-index in medical education: an analysis of medical education journal editorial boards. BMC Med Educ. 2014;14:251. doi: 10.1186/s12909-014-0251-8.

22. Asnafi S, Gunderson T, McDonald RJ, Kallmes DF. Association of h-index of editorial board members and Impact Factor among Radiology Journals. Acad Radiol. 2017;24(2):119–123. doi: 10.1016/j.acra.2016.11.005.

23. Jacsó P. The h-index for countries in Web of Science and Scopus. Online Inf Rev. 2009;33(4):831–837.

24. Mester G. Rankings scientists, journals and countries using h-index. Interdiscip Descr Complex Sys. 2016;14(1):1–9.

25. Bornmann L, Marx W, Gasparyan AY, Kitas GD. Diversity, value and limitations of the journal impact factor and alternative metrics. Rheumatol Int. 2012;32(7):1861–1867. doi: 10.1007/s00296-011-2276-1.

26. Tabatabaei-Malazy O, Ramezani A, Atlasi R, Larijani B, Abdollahi M. Scientometric study of academic publications on antioxidative herbal medicines in type 2 diabetes mellitus. J Diabetes Metab Disord. 2016;15:48. doi: 10.1186/s40200-016-0273-3.

27. Barnes C. The h-index debate: an introduction for librarians. J Acad Librariansh. 2017;43(6):487–494.

28. Bartneck C, Kokkelmans S. Detecting h-index manipulation through self-citation analysis. Scientometrics. 2011;87(1):85–98. doi: 10.1007/s11192-010-0306-5.

29. Ruan QZ, Cohen JB, Baek Y, Bletsis P, Celestin AR, Epstein S, et al. Does industry funding mean more publications for subspecialty academic plastic surgeons? J Surg Res. 2018;224:185–192. doi: 10.1016/j.jss.2017.12.025.

30. Misra DP, Ravindran V, Wakhlu A, Sharma A, Agarwal V, Negi VS. Publishing in black and white: the relevance of listing of scientific journals. Rheumatol Int. 2017;37(11):1773–1778. doi: 10.1007/s00296-017-3830-2.

31. Wildgaard L. A comparison of 17 author-level bibliometric indicators for researchers in Astronomy, Environmental Science, Philosophy and Public Health in Web of Science and Google Scholar. Scientometrics. 2015;104(3):873–906.

32. Bornmann L. h-Index research in scientometrics: a summary. J Informetrics. 2014;8(3):749–750.

33. Bornmann L, Mutz R, Hug S, Daniel H. A multilevel meta-analysis of studies reporting correlations between the h index and 37 different h index variants. J Informetrics. 2011;5(3):346–359.

34. Schreiber M. Restricting the h-index to a publication and citation time window: a case study of a timed Hirsch index. J Informetrics. 2015;9(1):150–155.

35. Mahbuba D, Rousseau R. Year-based h-type indicators. Scientometrics. 2013;96(3):785–797.

36. Fiala D. Current index: a proposal for a dynamic rating system for researchers. J Assoc Inf Sci Technol. 2014;65(4):850–855.

37. Pan RK, Fortunato S. Author Impact Factor: tracking the dynamics of individual scientific impact. Sci Rep. 2014;4:4880. doi: 10.1038/srep04880.

38. Khan NR, Thompson CJ, Taylor DR, Gabrick KS, Choudhri AF, Boop FR, et al. Should the h-index be modified? An analysis of the m-quotient, contemporary h-index, authorship value, and impact factor. World Neurosurg. 2013;80(6):766–774. doi: 10.1016/j.wneu.2013.07.011.

39. Sidiropoulos A, Katsaros D, Manolopoulos Y. Generalized Hirsch h-index for disclosing latent facts in citation networks. Scientometrics. 2007;72(2):253–280.

40. Egghe L. Theory and practise of the g-index. Scientometrics. 2006;69(1):131–152.

41. Publish or perish. [Updated 2016]. [Accessed February 10, 2018]. https://harzing.com/resources/publish-or-perish

42. Bartolucci F. A comparison between the g-index and the h-index based on concentration. J Assoc Inf Sci Technol. 2015;66(12):2708–2710.

43. Zhang CT. The e-index, complementing the h-index for excess citations. PLoS One. 2009;4(5):e5429. doi: 10.1371/journal.pone.0005429.

44. Zhang CT. Relationship of the h-index, g-index, and e-index. J Am Soc Inf Sci Technol. 2010;61(3):625–628.

45. Namazi MR, Fallahzadeh MK. n-index: a novel and easily-calculable parameter for comparison of researchers working in different scientific fields. Indian J Dermatol Venereol Leprol. 2010;76(3):229–230. doi: 10.4103/0378-6323.62960.

46. Senanayake U, Piraveenan M, Zomaya A. The Pagerank-index: going beyond citation counts in quantifying scientific impact of researchers. PLoS One. 2015;10(8):e0134794. doi: 10.1371/journal.pone.0134794.

47. Gao C, Wang Z, Li X, Zhang Z, Zeng W. PR-index: using the h-index and PageRank for determining true impact. PLoS One. 2016;11(9):e0161755. doi: 10.1371/journal.pone.0161755.

48. Wuchty S, Jones BF, Uzzi B. The increasing dominance of teams in production of knowledge. Science. 2007;316(5827):1036–1039. doi: 10.1126/science.1136099.

49. Spiroski M. Current scientific impact of Ss Cyril and Methodius University of Skopje, Republic of Macedonia in the Scopus Database (1960–2014). Open Access Maced J Med Sci. 2015;3(1):1–6. doi: 10.3889/oamjms.2015.019.

50. Moed HF, Halevi G. Multidimensional assessment of scholarly research impact. J Assoc Inf Sci Technol. 2015;66(10):1988–2002.

51. Ram S. India's contribution on “Guillain-Barre syndrome”: mapping of 40 years research. Neurol India. 2013;61(4):375–382. doi: 10.4103/0028-3886.117612.

52. Sandström U, van den Besselaar P. Quantity and/or quality? The importance of publishing many papers. PLoS One. 2016;11(11):e0166149. doi: 10.1371/journal.pone.0166149.

53. Cancino CA, Merigó JM, Coronado FC. Big names in innovation research: a bibliometric overview. Curr Sci. 2017;113(8):1507–1518.

54. Cheung VW, Lam GO, Wang YF, Chadha NK. Current incidence of duplicate publication in otolaryngology. Laryngoscope. 2014;124(3):655–658. doi: 10.1002/lary.24294.

55. Gasparyan AY, Nurmashev B, Voronov AA, Gerasimov AN, Koroleva AM, Kitas GD. The pressure to publish more and the scope of predatory publishing activities. J Korean Med Sci. 2016;31(12):1874–1878. doi: 10.3346/jkms.2016.31.12.1874.

56. Rai R, Sabharwal S. Retracted publications in orthopaedics: prevalence, characteristics, and trends. J Bone Joint Surg Am. 2017;99(9):e44. doi: 10.2106/JBJS.16.01116.

57. Riaz IB, Khan MS, Riaz H, Goldberg RJ. Disorganized systematic reviews and meta-analyses: time to systematize the conduct and publication of these study overviews? Am J Med. 2016;129(3):339.e11–339.e18. doi: 10.1016/j.amjmed.2015.10.009.

58. Park JH, Eisenhut M, van der Vliet HJ, Shin JI. Statistical controversies in clinical research: overlap and errors in the meta-analyses of microRNA genetic association studies in cancers. Ann Oncol. 2017;28(6):1169–1182. doi: 10.1093/annonc/mdx024.

59. Gaught AM, Cleveland CA, Hill JJ 3rd. Publish or perish?: physician research productivity during residency training. Am J Phys Med Rehabil. 2013;92(8):710–714. doi: 10.1097/PHM.0b013e3182876197.

60. Rosenkrantz AB, Pinnamaneni N, Babb JS, Doshi AM. Most common publication types in Radiology Journals: what is the level of evidence? Acad Radiol. 2016;23(5):628–633. doi: 10.1016/j.acra.2016.01.002.

61. Firat AC, Araz C, Kayhan Z. Case reports: should we do away with them? J Clin Anesth. 2017;37:74–76. doi: 10.1016/j.jclinane.2016.10.006.

62. Oh J, Chang H, Kim JA, Choi M, Park Z, Cho Y, et al. Citation analysis for biomedical and health sciences journals published in Korea. Healthc Inform Res. 2017;23(3):218–225. doi: 10.4258/hir.2017.23.3.218.

63. Baldwin C, Chandler GE. Improving faculty publication output: the role of a writing coach. J Prof Nurs. 2002;18(1):8–15. doi: 10.1053/jpnu.2002.30896.

64. Pohlman KA, Vallone S, Nightingale LM. Outcomes of a mentored research competition for authoring pediatric case reports in chiropractic. J Chiropr Educ. 2013;27(1):33–39. doi: 10.7899/JCE-12-008.

65. Bullion JW, Brower SM. Enhancing the research and publication efforts of health sciences librarians via an academic writing retreat. J Med Libr Assoc. 2017;105(4):394–399. doi: 10.5195/jmla.2017.320.

66. Willis DL, Bahler CD, Neuberger MM, Dahm P. Predictors of citations in the urological literature. BJU Int. 2011;107(12):1876–1880. doi: 10.1111/j.1464-410X.2010.10028.x.

67. Antoniou GA, Antoniou SA, Georgakarakos EI, Sfyroeras GS, Georgiadis GS. Bibliometric analysis of factors predicting increased citations in the vascular and endovascular literature. Ann Vasc Surg. 2015;29(2):286–292. doi: 10.1016/j.avsg.2014.09.017.

68. Zhang Z, Poucke SV. Citations for randomized controlled trials in sepsis literature: the halo effect caused by journal impact factor. PLoS One. 2017;12(1):e0169398. doi: 10.1371/journal.pone.0169398.

69. Lowry OH, Rosebrough NJ, Farr AL, Randall RJ. Protein measurement with the Folin phenol reagent. J Biol Chem. 1951;193(1):265–275.

70. Duyx B, Urlings MJ, Swaen GM, Bouter LM, Zeegers MP. Scientific citations favor positive results: a systematic review and meta-analysis. J Clin Epidemiol. 2017;88:92–101. doi: 10.1016/j.jclinepi.2017.06.002.

71. Shadgan B, Roig M, Hajghanbari B, Reid WD. Top-cited articles in rehabilitation. Arch Phys Med Rehabil. 2010;91(5):806–815. doi: 10.1016/j.apmr.2010.01.011.

72. Jafarzadeh H, Sarraf Shirazi A, Andersson L. The most-cited articles in dental, oral, and maxillofacial traumatology during 64 years. Dent Traumatol. 2015;31(5):350–360. doi: 10.1111/edt.12195.

73. Bornmann L, Daniel HD. The citation speed index: a useful bibliometric indicator to add to the h index. J Informetrics. 2010;4(3):444–446.

74. Ruan QZ, Ricci JA, Silvestre J, Ho OA, Lee BT. Academic productivity of faculty associated with microsurgery fellowships. Microsurgery. 2017;37(6):641–646. doi: 10.1002/micr.30145.

75. 2017 Highly cited researchers. [Updated 2017]. [Accessed February 10, 2018]. https://clarivate.com/hcr/2017-researchers-list/

76. Essential Science Indicators (ESI) latest data ranks 21 PKU disciplines in global top 1%. [Updated 2016]. [Accessed February 10, 2018]. http://english.pku.edu.cn/news_events/news/focus/5017.htm

77. Tolisano AM, Song SA, Cable BB. Author self-citation in the otolaryngology literature: a pilot study. Otolaryngol Head Neck Surg. 2016;154(2):282–286. doi: 10.1177/0194599815616111.

78. Bornmann L, Daniel HD. What do citation counts measure? A review of studies on citing behavior. J Doc. 2008;64(1):45–80.

79. Biagioli M. Watch out for cheats in citation game. Nature. 2016;535(7611):201. doi: 10.1038/535201a.

80. Bornmann L, Haunschild R. Measuring field-normalized impact of papers on specific societal groups: an altmetrics study based on Mendeley Data. Res Eval. 2017;26(3):230–241.

81. Delli K, Livas C, Spijkervet FK, Vissink A. Measuring the social impact of dental research: an insight into the most influential articles on the Web. Oral Dis. 2017;23(8):1155–1161. doi: 10.1111/odi.12714.

82. Maggio LA, Meyer HS, Artino AR Jr. Beyond citation rates: a real-time impact analysis of health professions education research using altmetrics. Acad Med. 2017;92(10):1449–1455. doi: 10.1097/ACM.0000000000001897.

83. Konkiel S. Altmetrics: diversifying the understanding of influential scholarship. Palgrave Commun. 2016;2:16057.

84. Ke Q, Ahn YY, Sugimoto CR. A systematic identification and analysis of scientists on Twitter. PLoS One. 2017;12(4):e0175368. doi: 10.1371/journal.pone.0175368.

85. Hogan AM, Winter DC. Changing the rules of the game: how do we measure success in social media? Clin Colon Rectal Surg. 2017;30(4):259–263. doi: 10.1055/s-0037-1604254.

86. Ortega JL. Are peer-review activities related to reviewer bibliometric performance? A scientometric analysis of Publons. Scientometrics. 2017;112(2):947–962.

87. Nassi-Calò L. In time: Publons seeks to attract reviewers and improve peer review. Rev Paul Pediatr. 2017;35(4):367–368.

88. Altmetric bookmarklet. [Accessed February 10, 2018]. https://www.altmetric.com/products/free-tools/

89. Wagner CS, Jonkers K. Open countries have strong science. Nature. 2017;550(7674):32–33. doi: 10.1038/550032a.

90. Wildgaard L, Schneider JW, Larsen B. A review of the characteristics of 108 author-level bibliometric indicators. Scientometrics. 2014;101(1):125–158.