Abstract

Objectives

The medial olivocochlear (MOC) efferent system can modify cochlear function to improve sound detection in noise, but its role in speech perception in noise is unclear. The purpose of this study was to determine the association between MOC efferent activity and performance on two speech-in-noise tasks at two signal-to-noise ratios (SNRs). It was hypothesized that efferent activity would be more strongly correlated with performance at the more challenging SNR, relative to performance at the less challenging SNR.

Design

Sixteen adults ages 35 to 73 years old participated. Subjects had pure-tone averages ≤25 dB HL and normal middle ear function. High-frequency pure-tone averages (HFPTAs) were computed across 3000 to 8000 Hz and ranged from 6.3 to 48.8 dB HL. Efferent activity was assessed using contralateral suppression of transient-evoked otoacoustic emissions (TEOAEs) measured in right ears, and MOC activation was achieved by presenting broadband noise to left ears. Contralateral suppression was expressed as the dB change in TEOAE magnitude obtained with versus without the presence of the broadband noise. TEOAE responses were also examined for middle-ear muscle reflex (MEMR) activation and synchronous spontaneous otoacoustic emissions (SSOAEs). Speech-in-noise perception was assessed using the closed-set Coordinate Response Measure (CRM) word recognition task and the open-set Institute of Electrical and Electronics Engineers (IEEE) sentence task. Speech and noise were presented to right ears at two SNRs. Performance on each task was scored as percent correct. Associations between contralateral suppression and speech-in-noise performance were quantified using partial rank correlational analyses, controlling for the variables age and HFPTA.

Results

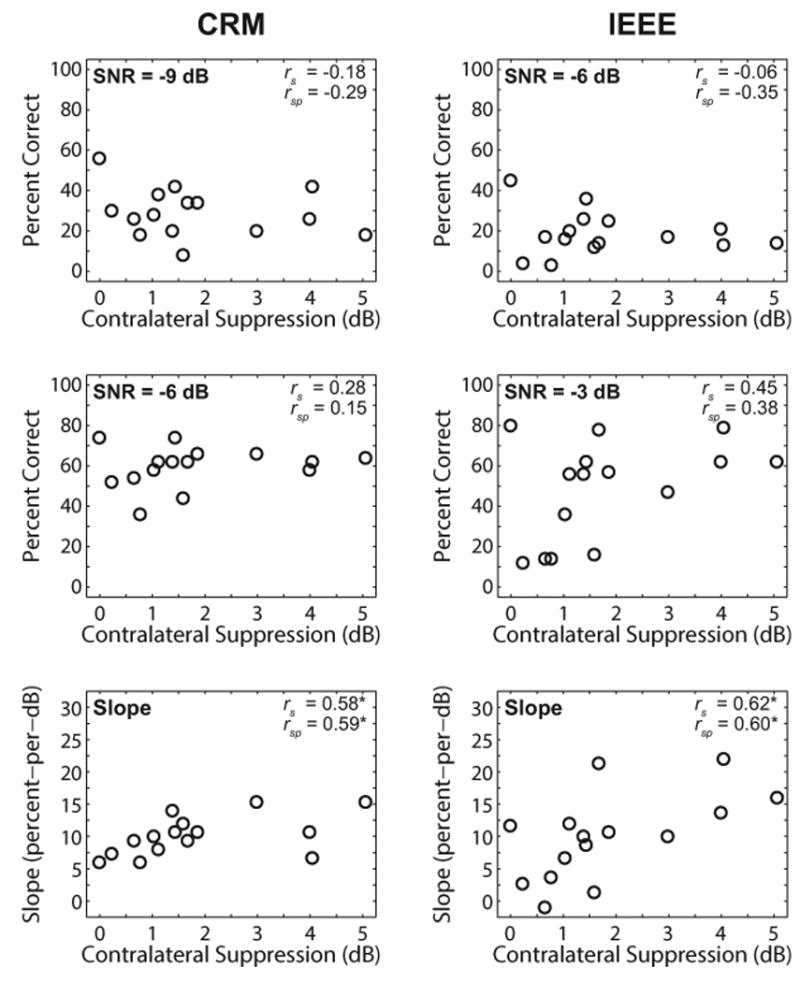

One subject was excluded due to probable MEMR activation. Subjects showed a wide range of contralateral suppression values, consistent with previous reports. Three subjects with SSOAEs had similar contralateral suppression results as subjects without SSOAEs. The magnitude of contralateral suppression was not significantly correlated with speech-in-noise performance on either task at a single SNR (p > 0.05), contrary to hypothesis. However, contralateral suppression was significantly correlated with the slope of the psychometric function, computed as the difference between performance levels at the two SNRs divided by 3 (dB difference between the two SNRs) for the CRM task (partial rs = 0.59, p = 0.04) and for the IEEE task (partial rs = 0.60, p = 0.03).

Conclusions

In a group of primarily older adults with normal hearing or mild hearing loss, olivocochlear efferent activity assessed using contralateral suppression of TEOAEs was not associated with speech-in-noise performance at a single SNR. However, auditory efferent activity appears to be associated with the slope of the psychometric function for both a word and sentence recognition task in noise. Results suggest that individuals with stronger MOC efferent activity tend to be more responsive to changes in SNR, where small increases in SNR result in better speech-in-noise performance relative to individuals with weaker MOC efferent activity. Additionally, the results suggest that the slope of the psychometric function may be a more useful metric than performance at a single SNR when examining the relationship between speech recognition in noise and MOC efferent activity.

Introduction

The auditory system has the ability to modify how it responds to sound input via descending projections from the medial superior olive to the outer hair cells of the cochlea. Activation of this medial olivocochlear (MOC) efferent system by sound stimulation hyperpolarizes outer hair cells, reducing cochlear amplifier gain (Guinan 2006). When this process occurs in the context of a target sound presented in continuous noise, the reduced cochlear amplifier gain in turn decreases neural adaptation caused by the noise, thereby increasing the detection of the target sound, referred to as “antimasking” (Winslow & Sachs 1987; Guinan & Gifford 1988; Kawase et al. 1993). Otoacoustic emissions (OAEs) have been used to assess activity of the efferent system. OAEs are sounds generated as a byproduct of cochlear amplification (Brownell 1990) and can be noninvasively measured in the ear canal. Efferent activation reduces the gain of the cochlear amplifier and is referred to as the MOC reflex (MOCR). Eliciting the MOCR therefore reduces OAE amplitudes (Collet et al. 1990). The strength of efferent activity is quantified as the change in OAE amplitudes measured with versus without noise presented to the contralateral ear (hereafter referred to as contralateral suppression), where larger changes in amplitude are interpreted as evidence of stronger efferent activity (Berlin et al. 1993; Backus & Guinan 2007).

In normal-hearing individuals, contralateral suppression has often been shown to be significantly correlated with the ability to perceive speech in noise, where individuals who have better performance in noise tend to demonstrate larger contralateral suppression (Giraud et al. 1997; Kumar & Vanaja 2004; Kim et al. 2006; Abdala et al. 2014; Mishra & Lutman 2014; Bidelman & Bhagat 2015). The hypothesis underlying these results is that the MOC system provides antimasking of signals in the presence of noise (Winslow & Sachs 1987; Kawase et al. 1993), and that stronger MOC activity (evidenced as larger magnitudes of contralateral suppression) results in greater antimasking (indicated by better performance on speech-in-noise tasks). Although this hypothesis is attractive, other studies have demonstrated either a lack of significant correlation (Harkrider & Smith 2005; Mukari & Mamat 2008; Wagner et al. 2008; Stuart & Butler 2012) or an inverse correlation (de Boer et al. 2012; Milvae et al. 2015) between MOC activity and speech-in-noise performance. Although physiologic data from animals demonstrate a clear role of the MOC in increasing auditory nerve responses to tones in the presence of noise (Kawase et al. 1993), the mixed perceptual findings in humans make it difficult to determine the contribution of the MOC to everyday listening to speech in the presence of background noise.

There are a number of possible explanations for the discrepancy in findings, some of which include differences in the choice of OAE stimulus and recording parameters to assess MOC activity. Some studies used distortion-product (DP) OAEs, which can be complicated to use in measuring MOC activity due to the contribution of both distortion and reflection sources that generate the DPOAEs (Shera & Guinan 1999). The two components can be differentially affected by MOC activity (Abdala et al. 2009), resulting in effects that are more difficult to interpret relative to transient-evoked (TE) OAE-based metrics that are presumably generated by a single reflection source (Guinan 2011). Two studies (Kim et al. 2006; Mukari & Mamat 2008) did not exercise careful control over this differential effect [e.g., by measuring contralateral suppression only at peaks in the DPOAE fine structure (Abdala et al. 2009)], so the MOC results should be interpreted with caution. Additionally, TEOAE-based measures of the MOC have often used fast click rates, which can activate the ipsilateral portion of the MOC pathway (Boothalingam & Purcell 2015). Such activation is problematic because it minimizes the difference in TEOAE amplitude that can be detected between the conditions with and without a contralateral activator.

An additional confound in OAE-based measures of MOC activity is activation of the middle ear muscle reflex (MEMR), which can present as a change in OAE amplitude but is not due to the MOC (Goodman et al. 2013). Previous studies of the MOC have exercised varying degrees of control over the MEMR; studies that did not directly measure MEMR activation using the OAE measurements themselves may have results that were contaminated with MEMR. Finally, the OAE-evoking stimulus levels and frequency regions over which MOC activity was analyzed differed across studies. Both level and frequency have been demonstrated to have an effect on the size of MOC activity (e.g., Collet et al. 1990; Hood et al. 1996).

In addition to the differences in OAE measurements across studies, the speech perception tasks also varied. It has been speculated that the type of speech task as well as the relative levels of the speech and noise may contribute to differences in associations between MOC activity and perception (Guinan 2011). However, studies employing similar speech tasks have found discrepant results. For example, de Boer and Thornton (2008) and de Boer et al. (2012) both employed a phoneme discrimination task in noise, but de Boer and Thornton (2008) found a significant positive correlation and de Boer et al. (2012) found a significant negative correlation. It appears that the speech task itself may not dictate the strength or direction of the relationship between MOC activity and speech perception, but the speech task may interact with other factors such as the specific OAE metric.

One important parameter of the speech tasks is the signal-to-noise ratio (SNR) of the speech materials. All previous studies in this area examined the association between MOC activity and speech performance at particular SNRs. Kumar and Vanaja (2004) showed that word recognition at SNRs of +10 and +15 dB yielded significant correlations with MOC activity, whereas SNRs of +20 dB and in quiet did not yield a significant correlation. These results may be interpreted in the context of the antimasking benefit provided by the MOC: at favorable SNRs, an MOC unmasking effect would confer little benefit relative to more challenging SNRs.

Milvae et al. (2015) examined the association between performance on a speech-in-noise task (QuickSIN) and a psychophysical forward masking task to assess MOC activity. The forward masking task compared detection thresholds for tonal stimuli in quiet and in the presence of a forward-masking noise that activated the MOC, where larger threshold differences were interpreted as stronger MOC activity. They found a significant inverse correlation between efferent activity and speech-in-noise performance, where stronger MOC activity was associated with poorer performance on the QuickSIN. The authors speculated that the QuickSIN's adaptive adjustment of the SNR to approximate the point at which 50% correct performance occurs meant that stimuli were presented at different SNRs, where the MOC may have been more or less beneficial for different subjects, depending upon the SNR at which they were being tested. They suggested that future studies should examine the relationship between MOC activity and performance on a speech task across a range of SNRs to better assess the strength and direction of this relationship.

The purpose of the current study was to examine the relationship between MOC activity and speech-in-noise performance by improving upon the methodology used in previous studies. We investigated whether the type of speech task influences the relationship between MOC activity and speech-in-noise performance by utilizing both a word and a sentence recognition task. Additionally, we investigated how SNR of the speech tasks influences the relationship between MOC activity and speech-in-noise performance by presenting materials at two SNRs, rather than a single SNR as used in some previous studies. Furthermore, we also avoided potential confounds in OAE-based measurements of the MOC (Guinan 2014) by utilizing a TEOAE-based metric, rather than a DPOAE-based metric, and by exercising careful control of unwanted activation of the MEMR and of the ipsilateral MOC pathway. We hypothesized that MOC activity would be more strongly correlated with speech-in-noise performance at the more challenging SNR relative to the less challenging SNR.

Materials and Methods

Subjects

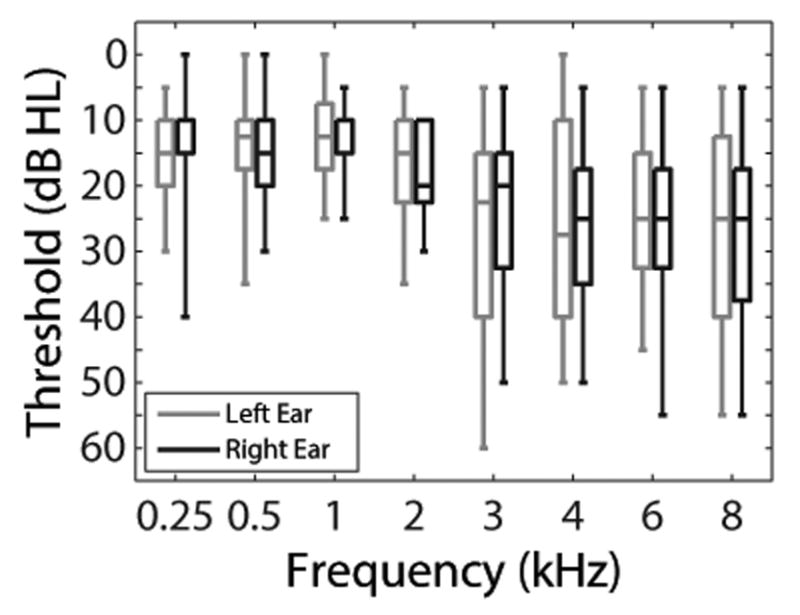

Subjects were recruited from the Veterans Affairs (VA) Loma Linda Healthcare System. A total of 16 adult subjects (14 males) were enrolled. The median age was 60.5 years [interquartile range (IQR) = 55.5 to 67.5, minimum = 35, maximum = 73]. These age and gender distributions reflect the demographics of the VA population from which subjects were recruited. Subjects were required to have pure-tone averages (500, 1000, and 2000 Hz) ≤25 dB HL bilaterally, no air-bone gaps >10 dB at two or more octave frequencies from 500 to 4000 Hz, 226-Hz tympanograms within normal clinical limits, 1000-Hz ipsilateral acoustic reflex thresholds ≤110 dB HL, no history of chronic middle ear pathology, no chronic health condition or use of medication that interfered with study participation, the ability to read and speak English, and measurable TEOAEs in the right ear as defined by an SNR of >6 dB computed across 1000 to 4000 Hz. Descriptive measures of audiometric thresholds from 250 to 8000 Hz are plotted in Figure 1. The study protocol was approved by the Institutional Review Board of the VA Loma Linda Healthcare System. Written informed consent was obtained from all subjects prior to their enrollment. All subjects received monetary compensation for their participation.

Fig. 1.

Box plots of audiometric thresholds. The bottom and top of each box represent the first and third quartiles, respectively. The horizontal line within each box represents the median. The lower and upper vertical lines extend to the minimum and maximum values, respectively.

Equipment

All testing occurred in a double-walled sound-treated booth (Industrial Acoustics Company, Bronx, NY) housed inside a laboratory. Subjects were seated in a recliner for contralateral suppression testing and were seated at a desk in a comfortable office chair for all other procedures. Immittance audiometry was performed using a GSI TympStar Middle Ear Analyzer (Grason-Stadler, Eden Prairie, MN). Pure-tone air- and bone-conduction thresholds were obtained using an Astera2 audiometer (GN Otometrics, Taastrup, Denmark). Contralateral suppression testing was performed using an RZ6 I/O processor [Tucker-Davis Technologies (TDT), Alachua, FL] and a WS4 workstation (TDT) controlled by custom software written in MATLAB (The MathWorks, Inc., Natick, MA) and RPvdsEx (TDT). Stimuli for contralateral suppression testing were routed from PA5 programmable attenuators (TDT) to ER-2 insert earphones (Etymōtic Research, Elk Grove Village, IL). TEOAEs were recorded using an ER-10B+ probe microphone system (Etymōtic Research) with the preamplifier gain set to +40 dB. For the speech-in-noise testing, stimuli were routed from the onboard sound card of a Precision T3610 workstation (Dell, Round Rock, TX) to HD580 circumaural headphones (Sennheiser, Wedemark, Germany). A computer monitor was placed inside the sound booth so the subject could participate in the speech testing. This monitor signal was cloned on the experimenter's side (control room) so that the experimenter could visually monitor subjects' progress with the experiment.

Experimental Procedures

Contralateral Suppression Measurement

MOC activity was assessed using contralateral suppression of TEOAEs based on methods described in Mertes and Leek (2016), where TEOAEs were measured in the right ear with and without the presence of contralateral acoustic stimulation (CAS) presented to the left ear to activate the contralateral MOC pathway. TEOAE-eliciting stimuli consisted of 80-μs clicks generated digitally at a sampling rate of 24414.1 Hz (the default sampling rate of the RZ6 processor). Clicks were presented at 75 dB pSPL to ensure elicitation of TEOAEs in this subject group of primarily older adults (Mertes & Leek 2016). Additionally, this stimulus level was verified to yield absent responses (SNR <6 dB from 1000 to 4000 Hz) in a 2-cc syringe, indicating sufficiently low system distortion and stimulus artifact. Click stimulus levels were calibrated in each subject's ear canal prior to recording. Clicks were presented at a rate of 19.5/s (inter-stimulus interval = 51.2 ms). Based on recent work (Boothalingam & Purcell 2015), this stimulus rate was sufficiently slow to avoid activation of the middle-ear muscle reflex (MEMR), which is desirable because MEMR activation alters TEOAE amplitudes in a similar manner to that of MOCR activation and confounds the interpretation of the results (Guinan et al. 2003; Goodman et al. 2013).

The CAS consisted of broadband Gaussian noise generated digitally by the RZ6 processor at a sampling rate of 24414.1 Hz. The CAS was presented at an overall root-mean-square (RMS) level of 60 dB(A) SPL, selected to ensure sufficient activation of the MOCR while also avoiding elicitation of the MEMR (Guinan et al. 2003). The CAS level was calibrated in an AEC202 2-cc coupler (Larson Davis, Depew, NY).

Measurements of contralateral suppression consisted of recording a set of TEOAEs without and with the presence of CAS (hereafter referred to as the no CAS and CAS conditions, respectively). Prior to each measurement, an in-situ calibration routine was performed in which the SPL of the click stimuli measured in the ear canal was adjusted to be within ±0.3 dB of the target level of 75 dB pSPL. The experimenter monitored the recorded output of the ER-10B+ microphone in real-time to ensure that stimulus levels were stable and that the subject remained adequately quiet across the duration of the recording. Subjects participated in a visual attention task described by Mertes and Goodman (2016) to maintain a consistent attentional state within and across recordings. Subjects were instructed to click a mouse button quickly whenever a white computer screen turned blue. Feedback regarding each response time was presented to subjects to encourage them to respond consistently, and the experimenter also monitored performance to ensure that subjects complied with the procedure.

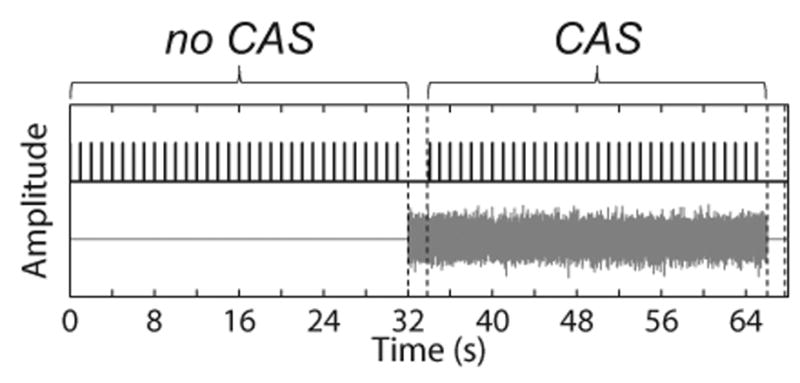

An interleaved stimulus presentation paradigm (Fig. 2) was used, in which the recording alternated between the no CAS and CAS conditions to help ensure that factors such as changes in probe position and subject attention were distributed across both conditions over time (Goodman et al. 2013; Mertes & Leek 2016). Within a single trial, first the no CAS condition (i.e., clicks in the right ear only) was presented for 32 s, then 2 s of CAS was presented alone to allow for onset of the MOCR (Backus & Guinan 2006), then the CAS condition (clicks in the right ear and CAS in the left ear) was presented for 32 s, and finally 2 s of silence was presented to allow for offset of the MOCR (Backus & Guinan 2006). A total of 10 interleaves were presented, lasting 11.3 min and yielding 6,250 recorded TEOAE buffers (19.53125 clicks/s × 32 s × 10 interleaves) in each condition (no CAS and CAS).

Fig. 2.

Schematic of stimuli presentation in the no CAS and CAS conditions. Clicks and CAS are shown in the top and bottom of the figure, respectively. Amplitude is displayed in arbitrary units. The two pairs of dashed vertical lines represent the 2-s interval of noise alone and silence, respectively, to allow for the onset and offset of the MOCR. The number of clicks displayed in the figure is reduced so that individual click stimuli can be visualized. The figure was adapted from Mertes & Leek (2016). CAS indicates contralateral acoustic stimulation; MOCR indicates medial olivocochlear reflex.

The ER-10B+ microphone output was sampled at a rate of 24414.1 Hz, high pass filtered using a second-order Butterworth filter with a cutoff frequency of 250 Hz, and streamed to disk for offline analysis. After the recording was finished, the subject was provided with a 1-min break and the earphones were re-inserted prior to the next measurement. Three measurements of contralateral suppression, lasting a total of 34 min, were obtained from each subject.
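The timing figures quoted for the interleaved paradigm can be checked with a few lines of arithmetic. The following is a minimal sketch rather than the study's acquisition code; the 1250-sample click period is an assumption chosen because it reproduces the stated 51.2-ms inter-stimulus interval at the RZ6 sampling rate.

```python
# Timing arithmetic for the interleaved recording, using values stated in the text.
FS_HZ = 24414.0625        # RZ6 default rate, reported in the text as 24414.1 Hz
SAMPLES_PER_CLICK = 1250  # ASSUMPTION: not stated in the text; gives a 51.2-ms interval

BLOCK_S = 32              # duration of each no CAS or CAS block
GAP_S = 2                 # CAS-alone onset gap and silent offset gap
N_INTERLEAVES = 10
N_MEASUREMENTS = 3

click_period_s = SAMPLES_PER_CLICK / FS_HZ
click_rate_hz = 1.0 / click_period_s

# Buffers recorded per condition in one measurement, and after pooling all three.
buffers_per_condition = round(click_rate_hz * BLOCK_S * N_INTERLEAVES)
pooled_buffers = buffers_per_condition * N_MEASUREMENTS

# One interleave = no CAS block + onset gap + CAS block + offset gap.
measurement_min = N_INTERLEAVES * (2 * BLOCK_S + 2 * GAP_S) / 60
total_min = measurement_min * N_MEASUREMENTS

print(f"{click_rate_hz:.5f} clicks/s, {buffers_per_condition} buffers per condition")
print(f"{measurement_min:.1f} min per measurement, {total_min:.0f} min in total")
```

Under these assumptions the sketch reproduces the click rate, the per-measurement buffer count and duration, and the total recording time given in the text.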

Contralateral Suppression Analysis

Recorded waveforms were de-interleaved into two matrices corresponding to the no CAS and CAS conditions. Prior to analyzing the TEOAE waveforms, absence of MEMR activation was verified by comparing the mean amplitudes of the recorded click stimuli in the no CAS and CAS conditions. The rationale for this method is that activation of the MEMR alters the impedance characteristics of the middle ear, and will therefore alter the amplitude of the click stimulus recorded in the ear canal (Guinan et al. 2003). A mean click amplitude in the CAS condition differing by more than ±1.4% of the mean click amplitude in the no CAS condition was taken as evidence of MEMR activation (Abdala et al. 2013). Subjects demonstrating evidence of MEMR activation were excluded from further analyses.
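The MEMR check described above amounts to a percent-change comparison against the 1.4% criterion. The sketch below is illustrative only: the function names are hypothetical, and it assumes that one amplitude value (for example, the peak) has already been extracted from each recorded click.

```python
from statistics import fmean

MEMR_CRITERION_PCT = 1.4  # criterion stated in the text (Abdala et al. 2013)


def click_amplitude_change_pct(no_cas_amps, cas_amps):
    """Percent change in mean click amplitude, CAS relative to no CAS."""
    reference = fmean(no_cas_amps)
    return 100.0 * (fmean(cas_amps) - reference) / reference


def memr_suspected(no_cas_amps, cas_amps, criterion_pct=MEMR_CRITERION_PCT):
    """True when the mean click amplitude changes by more than the criterion."""
    change = click_amplitude_change_pct(no_cas_amps, cas_amps)
    return abs(change) > criterion_pct


# Hypothetical per-click amplitudes in arbitrary linear units.
stable = memr_suspected([1.00, 1.00, 1.00], [0.99, 0.99, 0.99])     # -1.0% change
shifted = memr_suspected([1.00, 1.00, 1.00], [0.984, 0.984, 0.984])  # -1.6% change
```

The absolute value is taken because the criterion is stated as ±1.4%, so a change in either direction counts as evidence of MEMR activation.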

Because some subjects had low-amplitude TEOAEs, the TEOAE SNR was increased by first pooling all buffers from the three measurements of contralateral suppression, yielding 18,750 recorded buffers each in the no CAS and CAS conditions. Artifact rejection was then performed by excluding any buffer whose peak amplitude fell outside 1.5 times the interquartile range of all recorded buffers. To further reduce contamination from artifacts, an additional round of artifact rejection based on the root-mean-square amplitude was explored, but this was found to reduce the TEOAE SNR in some subjects, so only one round was implemented. A rectangular window was applied to the waveforms from 3.5 to 18.5 ms post-stimulus onset, where time zero corresponded to the time of maximum amplitude of the click stimulus. Waveform onsets and offsets were ramped using a 2.5 ms raised cosine ramp, then band pass filtered from 1000 to 2000 Hz using a Hann window-based filter with an order of 256 and correcting for filter group delay. This frequency region was selected because MOC-induced changes in TEOAEs are largest there (Collet et al. 1990; Hood et al. 1996; Goodman et al. 2013).
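One plausible reading of the artifact rejection rule is a Tukey-fence criterion on the peak amplitude of each buffer. The sketch below follows that reading as an assumption: buffers are kept when their peak absolute amplitude lies between Q1 − 1.5 × IQR and Q3 + 1.5 × IQR. The quartile method and the function name are also assumptions, not details taken from the study.

```python
from statistics import quantiles


def reject_artifacts(buffers, k=1.5):
    """Keep buffers whose peak absolute amplitude lies within Tukey fences.

    Returns the retained buffers and the percentage of buffers rejected.
    """
    peaks = [max(abs(sample) for sample in buf) for buf in buffers]
    # ASSUMPTION: inclusive quartiles; the text does not specify a method.
    q1, _, q3 = quantiles(peaks, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    kept = [buf for buf, peak in zip(buffers, peaks) if low <= peak <= high]
    rejected_pct = 100.0 * (len(buffers) - len(kept)) / len(buffers)
    return kept, rejected_pct


# Hypothetical two-sample buffers; the last one contains a large transient.
demo_buffers = [
    [0.10, -0.20], [0.20, 0.10], [-0.15, 0.18],
    [0.22, -0.10], [0.19, 0.05], [5.00, 0.10],
]
kept_buffers, rejected_pct = reject_artifacts(demo_buffers)
```

In this toy example only the buffer containing the 5.0 transient falls outside the fences.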

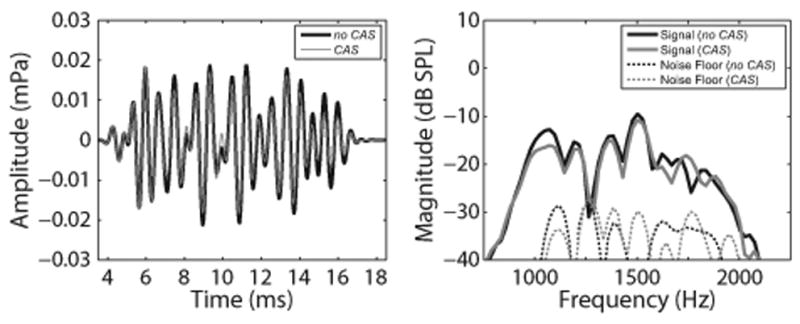

The TEOAE signals and noise floors were computed for the no CAS and CAS conditions using a two-buffer approach, where odd- and even-numbered buffers were divided into sub-buffers A and B. The signal was computed as $(A + B)/2$ and the noise floor was computed as $(A - B)/2$. The means of the signal and noise floor waveforms were calculated for the no CAS and CAS conditions. Example waveforms and spectra from one subject are shown in Figure 3.

Fig. 3.

Example of mean TEOAE waveforms (left panel) and spectra (right panel) for one representative subject. TEOAE amplitudes and magnitudes decreased in the presence of contralateral noise, as expected. In both panels, black and gray solid lines represent responses in the no CAS and CAS conditions, respectively. In the right panel, the thin black and gray dotted lines represent the recording noise floors in the no CAS and CAS conditions, respectively. TEOAE indicates transient-evoked otoacoustic emission; CAS indicates contralateral acoustic stimulation; mPa indicates millipascal.
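The two-buffer approach can be sketched in a few lines. This is an illustration under the usual convention, assumed here, that the signal is the half-sum and the noise floor the half-difference of sub-buffers A and B. The function names and the toy data are hypothetical.

```python
import math


def two_buffer_estimates(buffers):
    """Mean signal and noise-floor waveforms from alternating buffers."""
    a_bufs = buffers[0::2]             # odd-numbered buffers (1st, 3rd, ...)
    b_bufs = buffers[1::2]             # even-numbered buffers (2nd, 4th, ...)
    pairs = list(zip(a_bufs, b_bufs))  # an unpaired trailing buffer is dropped
    n_samples = len(buffers[0])
    signal = [sum((a[i] + b[i]) / 2 for a, b in pairs) / len(pairs)
              for i in range(n_samples)]
    noise = [sum((a[i] - b[i]) / 2 for a, b in pairs) / len(pairs)
             for i in range(n_samples)]
    return signal, noise


def rms(waveform):
    return math.sqrt(sum(v * v for v in waveform) / len(waveform))


def snr_db(signal, noise):
    """SNR in dB from the RMS amplitudes of the signal and noise waveforms."""
    return 20.0 * math.log10(rms(signal) / rms(noise))


# Toy data: a fixed "emission" plus noise that flips sign on alternate buffers.
emission = [1.0, -1.0, 0.5]
toy_buffers = [[e + sign * 0.1 for e in emission] for sign in (1, -1, 1, -1)]
toy_signal, toy_noise = two_buffer_estimates(toy_buffers)
```

Components that repeat across buffers (the emission) survive the half-sum and cancel in the half-difference, while uncorrelated noise contributes about equally to both, which is why the half-difference serves as a noise floor estimate.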

Measurements of contralateral suppression may be influenced by the presence of OAE responses that become entrained to the stimuli and ring out in time for longer than the transient response; these responses are referred to as synchronized spontaneous OAEs (SSOAEs). The presence of SSOAEs was examined by viewing the SNR in an analysis window from 30 to 45 ms (relative to the stimulus peak). SSOAEs were deemed present if the SNR within this later time window was >6 dB (the same criterion for determining if TEOAEs were present). Subjects demonstrating SSOAEs were flagged for additional analysis but were not excluded.

The mean signal waveforms in the no CAS and CAS conditions were required to have an SNR >6 dB to be included in the analysis; all subjects had adequately high SNRs and so all TEOAE data were included. Contralateral suppression was computed using the magnitudes obtained from a fast Fourier transform (FFT) of the mean signal waveforms. For the no CAS and CAS conditions, the mean magnitude in the frequency domain was computed across 1000 to 2000 Hz. In order to reduce the contribution of noisy responses, only magnitudes from FFT bins with an SNR >6 dB were included in the calculation of the mean magnitude. Contralateral suppression was computed in the traditional way of subtracting the magnitude in dB in the CAS condition from the no CAS condition (e.g., Collet et al. 1990; Hood et al. 1996). Because some recent studies have quantified contralateral suppression as a percentage change in amplitude (Mishra & Lutman 2013; Marshall et al. 2014; Stuart & Cobb 2015), we also computed contralateral suppression as the percentage change in magnitude normalized by the magnitude in the no CAS condition, $100 \times (M_{\text{no CAS}} - M_{\text{CAS}}) / M_{\text{no CAS}}$, where magnitudes $M$ were expressed in linear units. For both calculations of contralateral suppression, positive values indicate that the TEOAE magnitude decreased in the presence of CAS, and larger values were indicative of stronger efferent activity.
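The two ways of expressing contralateral suppression can be written as follows. This is a minimal sketch with hypothetical function names, assuming that a single mean linear magnitude per condition has already been obtained from the FFT bins that passed the SNR criterion.

```python
import math


def suppression_db(mag_no_cas, mag_cas):
    """dB change: level in the no CAS condition minus level in the CAS condition."""
    return 20.0 * math.log10(mag_no_cas / mag_cas)


def suppression_percent(mag_no_cas, mag_cas):
    """Percentage change in linear magnitude, normalized by the no CAS magnitude."""
    return 100.0 * (mag_no_cas - mag_cas) / mag_no_cas


def db_to_percent(delta_db):
    """Percentage change implied by a given dB change for one pair of magnitudes."""
    return 100.0 * (1.0 - 10.0 ** (-delta_db / 20.0))


# Hypothetical linear magnitudes (arbitrary units).
example_db = suppression_db(2.0, 1.5)
example_pct = suppression_percent(2.0, 1.5)
```

For a given pair of linear magnitudes the percentage metric is a strictly increasing function of the dB metric, so under this assumption the two should rank subjects identically. Both are positive when the magnitude decreases in the presence of CAS.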

Speech-in-Noise Recognition

Both a word and sentence recognition task (each described below in more detail) were utilized in this study. Use of two tasks allowed for an examination of how olivocochlear efferent activity is associated with a closed-set task with no contextual cues (word recognition) versus a more ecologically valid open-set task with limited contextual cues (sentence recognition).

The coordinate response measure (CRM; Bolia et al. 2000) was used to assess word recognition in noise. Target stimuli consisted of a single male talker speaking a carrier phrase followed by two target words consisting of a color-number combination. For example, in “Ready Charlie go to *blue one* now,” the target words are italicized. There were 32 possible color-number combinations (four colors × eight numbers). The Institute of Electrical and Electronics Engineers (IEEE) sentence corpus (IEEE 1969) was used to assess sentence recognition in noise. Stimuli consisted of 72 lists of 10 sentences each. Target stimuli were spoken by a single female talker, where each sentence contained five key words (e.g., “The *birch canoe slid* on the *smooth planks*,” where the key words are italicized).

The CRM and IEEE stimuli were presented in speech-shaped noise developed from their respective corpuses. The noise was created by first concatenating all speech waveforms and ramping the waveforms on and off with 50 ms cosine-squared ramps. An FFT was computed on the concatenated waveform and pointwise-multiplied by the FFT of a random number sequence of equal length, resulting in an FFT that had the same magnitudes as the speech stimuli but with randomized phases. The real part of an inverse FFT was computed to yield the speech-shaped noise waveform.

The target speech stimuli were presented through circumaural headphones at an overall RMS level of 70 dB(C) SPL to ensure audibility and comfort. The speech-shaped noise was presented to yield two fixed SNRs (-9 and -6 dB for the CRM; -6 and -3 dB for the IEEE sentences). These SNRs were selected to avoid floor and ceiling effects, respectively, and were based on pilot data obtained in our laboratory from two normal-hearing subjects, as well as from the IEEE sentence data from Summers et al. (2013). Speech and noise stimuli were both presented to the right ear (i.e., the same ear in which MOC activity was measured). For each trial, the speech-shaped noise was played first for 500 ms to allow for full onset of the MOCR (Backus & Guinan 2006), then the target stimulus was played in addition to the noise. To avoid any effects of repeating the same noise stimulus on each presentation, a random segment (approximately 2.3 s) of the full noise waveform was presented on each trial.
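The trial construction described above (a noise lead-in, a randomly chosen noise segment, and a fixed SNR) can be illustrated as follows. This is a sketch under stated assumptions, not the study's presentation software: the SNR is defined here from the RMS of the speech and of the selected noise segment, and all function names and signals are hypothetical.

```python
import math
import random


def rms(waveform):
    return math.sqrt(sum(v * v for v in waveform) / len(waveform))


def scale_noise_to_snr(speech, noise, snr_db):
    """Scale noise so that 20*log10(rms(speech) / rms(noise)) equals snr_db."""
    gain = rms(speech) / (rms(noise) * 10.0 ** (snr_db / 20.0))
    return [gain * v for v in noise]


def build_trial(speech, full_noise, snr_db, lead_samples, rng):
    """Noise alone for lead_samples, then speech added to the ongoing noise."""
    n_total = lead_samples + len(speech)
    # Draw a random segment so the same noise token is not repeated across trials.
    start = rng.randrange(len(full_noise) - n_total + 1)
    segment = scale_noise_to_snr(speech, full_noise[start:start + n_total], snr_db)
    padded_speech = [0.0] * lead_samples + list(speech)
    return [s + n for s, n in zip(padded_speech, segment)]


# Toy signals standing in for a speech token and the speech-shaped noise.
rng = random.Random(0)
speech = [math.sin(2 * math.pi * 0.01 * n) for n in range(1000)]
full_noise = [rng.gauss(0.0, 1.0) for _ in range(5000)]

trial = build_trial(speech, full_noise, snr_db=-6, lead_samples=200, rng=rng)
scaled = scale_noise_to_snr(speech, full_noise, -6)
achieved_snr_db = 20.0 * math.log10(rms(speech) / rms(scaled))
```

At a real sampling rate, `lead_samples` would correspond to the 500-ms noise lead-in. The speech level is held fixed and only the noise gain changes, matching the description that speech was presented at a constant overall level.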

For the CRM, subjects were seated in front of a computer monitor with a graphical user interface with a grid of 32 color-coded buttons corresponding to all color-number combinations. The interface included text headings for the four colors in case any subjects were colorblind. Subjects were instructed to click on the button corresponding to the color-number combination they heard and were encouraged to guess whenever they were unsure. Onscreen feedback regarding whether the response was correct or incorrect was provided, but the correct answer was not provided. Subjects were given a practice session of 10 trials in quiet to orient them to the task. Subjects were required to answer all practice trials correctly before proceeding to the testing. Subjects were presented a total of 100 trials broken down into two blocks of 25 trials at each SNR. The order of block presentations was randomized for each subject.

For the IEEE sentences, subjects were seated in the booth and were instructed to verbally repeat back as much of the sentence as they could, and they were encouraged to guess. No feedback was provided on any trial. After each sentence presentation, the number of key words repeated correctly was entered into the computer by the experimenter, sitting in the control room outside the sound booth. Subjects were presented with four lists (10 sentences per list), with two lists for each of the two SNRs. The lists and order of SNR presentation were randomized for each subject.

Statistical Analysis

Statistical analyses were conducted using the MATLAB Statistics and Machine Learning Toolbox (ver. 11.1). Due to the relatively small sample size, descriptive statistics are presented as medians, IQRs, minima, and maxima. Spearman rank correlation coefficients were computed due to the relatively small sample size, as in Bidelman and Bhagat (2015). A significance level of α = 0.05 was utilized. Previous studies have demonstrated an effect of aging and high-frequency hearing loss on the magnitude of contralateral suppression (e.g., Castor et al. 1994; Keppler et al. 2010; Lisowska et al. 2014). Subjects in the current study demonstrated a range of ages and high-frequency thresholds (described below). Therefore, when the association between olivocochlear efferent activity and speech-in-noise recognition was examined, the variables of age and high-frequency threshold were controlled for using two-tailed partial rank correlational analyses (“partialcorr.m” function in MATLAB).
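A partial rank correlation can be sketched by rank-transforming every variable and then applying the standard recursive partial correlation formula. The code below is an illustrative stand-in for the MATLAB analysis, not a reimplementation of it. It returns the coefficient only (no p value), and the function names and toy data are hypothetical.

```python
import math


def ranks(values):
    """1-based ranks, with tied values sharing the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    out = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            out[order[k]] = average_rank
        i = j + 1
    return out


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_corr(x, y, covariates):
    """Partial correlation of x and y, removing one covariate per recursion step."""
    if not covariates:
        return pearson(x, y)
    *rest, w = covariates
    r_xy = partial_corr(x, y, rest)
    r_xw = partial_corr(x, w, rest)
    r_yw = partial_corr(y, w, rest)
    return (r_xy - r_xw * r_yw) / math.sqrt((1 - r_xw ** 2) * (1 - r_yw ** 2))


def partial_spearman(x, y, covariates):
    """Partial rank correlation: partial correlation computed on ranks."""
    return partial_corr(ranks(x), ranks(y), [ranks(c) for c in covariates])


# Toy data: z is uncorrelated (in ranks) with both x and y.
x = [1, 2, 3, 4]
y = [2, 1, 4, 3]
z = [1, -1, -1, 1]
rho_partial = partial_spearman(x, y, [z])
```

In the study's setting, `x` would be contralateral suppression, `y` a speech-in-noise measure, and the covariates age and HFPTA. Because the calculation operates on ranks, any monotonic rescaling of a variable leaves the coefficient unchanged.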

Results

High-Frequency Audiometric Thresholds

All subjects had pure-tone averages ≤25 dB HL, allowing for measurement of robust TEOAEs. However, hearing thresholds at frequencies above 2000 Hz are also relevant to the measures of contralateral suppression and speech-in-noise performance. Twelve subjects had sensorineural hearing loss (defined as a threshold ≥25 dB HL) at one or more frequencies, possibly due to age and/or noise exposure history. To assess the impact of high-frequency hearing thresholds on contralateral suppression and speech-in-noise perception, we first quantified subjects' high-frequency pure-tone averages (HFPTAs) computed across 3000 to 8000 Hz (Spankovich et al. 2011). HFPTAs were only computed for right ears because TEOAEs and speech-in-noise perception were measured in right ears. The median HFPTA was 25.6 dB HL [IQR = 16.3 to 33.1; minimum = 6.3; maximum = 48.8]. HFPTA was controlled for in the partial rank correlational analyses described below.

TEOAEs and Contralateral Suppression

One subject (35-year-old male, HFPTA = 23.8 dB HL) demonstrated probable MEMR activation, evidenced by a change in TEOAE stimulus amplitude of -1.6% between the no CAS and CAS conditions. Therefore, this subject's data were excluded from further analyses. Descriptive statistics for TEOAE data from the remaining 15 subjects are displayed in Table 1. Signal magnitudes showed a substantial range across subjects, while noise floor magnitudes had a smaller range. Median TEOAE SNRs were consistent with recent studies of contralateral suppression (Goodman et al. 2013; Mishra & Lutman 2013; Mertes & Leek 2016).

Table 1.

Descriptive statistics for TEOAE group data. For each parameter (except artifacts rejected), the results in the no CAS and CAS conditions are displayed to facilitate comparisons. IQRs are reported as the first and third quartiles, respectively. TEOAE indicates transient-evoked otoacoustic emission; CAS indicates contralateral acoustic stimulation; IQR indicates interquartile range; SNR indicates signal-to-noise ratio.

| Parameter | Median | IQR | Minimum | Maximum |
|---|---|---|---|---|
| Signal Magnitude (dB SPL) | | | | |
| no CAS | -15.7 | -19.1 to -12.1 | -22.2 | -1.2 |
| CAS | -15.7 | -20.3 to -13.1 | -26.7 | -4.0 |
| Noise Floor Magnitude (dB SPL) | | | | |
| no CAS | -33.8 | -35.6 to -31.3 | -37.8 | -27.9 |
| CAS | -33.0 | -35.1 to -28.5 | -36.8 | -25.4 |
| SNR (dB) | | | | |
| no CAS | 18.1 | 12.3 to 24.6 | 9.1 | 33.2 |
| CAS | 16.2 | 9.4 to 20.9 | 6.8 | 32.5 |
| Artifacts Rejected (%) | 15.1 | 12.7 to 22.0 | 2.8 | 30.0 |

Contralateral suppression was computed as both a dB change and a percentage change in TEOAE magnitude. The median dB change was 1.4 dB (IQR = 0.8 to 2.7; minimum = -0.01; maximum = 5.1), and the median percentage change was 15.2% (IQR = 9.1 to 26.6; minimum = -0.1; maximum = 44.1). These two metrics of contralateral suppression demonstrated a perfect monotonic relationship (rs = 1). Additionally, neither measure of contralateral suppression was significantly correlated with the magnitude of TEOAEs obtained in the no CAS condition (rs = 0.03, p = 0.93). Therefore, subsequent contralateral suppression results are expressed as the dB change, which is the more commonly reported metric.
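The relationship between the two suppression metrics can be sketched as follows. This is a minimal illustration that assumes both metrics are computed from linear (pressure) TEOAE magnitudes, with positive values indicating a reduction during CAS; the function names are hypothetical, and the exact computation used in the study may differ. Under this assumption, the percentage change is a strictly increasing function of the dB change, which would account for the perfect rank correlation between the two metrics, and a 5.1 dB change corresponds to a reduction of roughly 44%.

```python
import math


def suppression_db(mag_no_cas, mag_cas):
    """dB change in TEOAE magnitude (linear units); positive means suppression."""
    return 20.0 * math.log10(mag_no_cas / mag_cas)


def suppression_percent(mag_no_cas, mag_cas):
    """Percentage change in linear TEOAE magnitude; positive means suppression."""
    return 100.0 * (mag_no_cas - mag_cas) / mag_no_cas


def db_to_percent(db_change):
    """Convert a dB change to the equivalent percentage change."""
    return 100.0 * (1.0 - 10.0 ** (-db_change / 20.0))


# Hypothetical linear magnitudes (arbitrary units), not data from this study.
no_cas, cas = 1.0, 0.8
db_change = suppression_db(no_cas, cas)
pct_change = suppression_percent(no_cas, cas)

print(f"{db_change:.2f} dB corresponds to {pct_change:.1f}%")
```

Because `db_to_percent` is strictly increasing, ranking subjects by either metric yields the same order.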

Three subjects demonstrated the presence of SSOAEs (i.e., a TEOAE SNR >6 dB in the time-domain analysis window from 30 to 45 ms). The age, HFPTA, and contralateral suppression values of these subjects were as follows: subject 1 -- 67 years, 27.5 dB HL, 0.8 dB; subject 2 -- 53 years, 6.3 dB HL, 1.4 dB; subject 3 -- 39 years, 13.8 dB HL, 1.1 dB. Contralateral suppression in these subjects did not appear to differ substantially from the subjects without SSOAEs, consistent with recent reports (Marshall et al. 2014; Mertes & Goodman 2016; Marks & Siegel 2017).

Speech-in-Noise Recognition

Descriptive statistics for the CRM and IEEE tests are displayed in Table 2. The ranges indicated that floor and ceiling effects were not reached, although one subject approached the floor for the IEEE sentences at an SNR of -6 dB. It was expected that performance on the word and sentence recognition tasks would be significantly correlated (Bilger et al. 1984). The relationship approached significance at the lower SNRs (rs = 0.46, p = 0.08) and was significant at the higher SNRs (rs = 0.70, p = 0.003).

Table 2.

Descriptive statistics for speech perception group data. Values at individual SNRs are displayed in percentage correct. Slope values are displayed in percent-per-dB. IQRs are displayed as first and third quartiles, respectively. SNR indicates signal-to-noise ratio; CRM indicates coordinate response measure; IEEE indicates Institute of Electrical and Electronics Engineers; IQR indicates interquartile range.

| Speech Test | SNR (dB) | Median | IQR | Minimum | Maximum |
|---|---|---|---|---|---|
| CRM | -9 | 28 | 20 to 37 | 8 | 56 |
| CRM | -6 | 62 | 55 to 65.5 | 36 | 74 |
| CRM | Slope | 10 | 7.5 to 11.7 | 6 | 15.3 |
| IEEE | -6 | 17 | 13.3 to 24 | 3 | 45 |
| IEEE | -3 | 56 | 21 to 62 | 12 | 80 |
| IEEE | Slope | 10 | 4.4 to 13.3 | -1 | 22 |

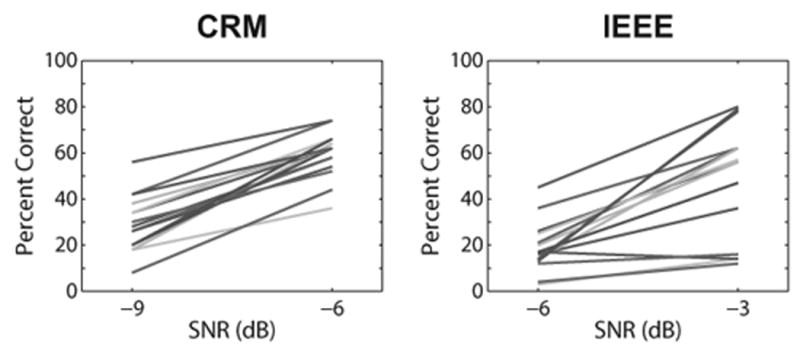

For both tests, the median percent correct increased from the more challenging to the less challenging SNR, as expected. For the CRM, all subjects showed some level of improvement moving from an SNR of -9 to -6 dB. However, for the IEEE sentences, some subjects showed little improvement moving from an SNR of -6 to -3 dB, and one subject showed a slight decrement (17% at -6 dB SNR and 14% at -3 dB SNR).

In addition to computing scores on each test at a given SNR, we were also interested in how the slope of the psychometric function (i.e., performance change across SNRs) was related to contralateral suppression. Figure 4 displays the change in performance across SNR for each test. The slope of the psychometric function (percent correct per dB) was computed as the percent correct at the higher SNR minus the percent correct at the lower SNR, divided by 3 dB (the difference between SNRs). Descriptive statistics for the slopes are also presented in Table 2. Median slopes were identical for the CRM and IEEE tasks. However, IQRs and ranges were smaller for the CRM than for the IEEE task, suggesting less intersubject variability for the CRM task.
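The slope computation described above can be written as a short sketch. The function and variable names are hypothetical; the 3 dB step and the example scores (17% at -6 dB SNR and 14% at -3 dB SNR, for the subject who showed a slight decrement on the IEEE sentences) come from the text.

```python
def psychometric_slope(pc_low_snr, pc_high_snr, snr_step_db=3.0):
    """Two-point slope of the psychometric function, in percent correct per dB.

    pc_low_snr and pc_high_snr are percent-correct scores at the lower and
    higher SNR; snr_step_db is the dB difference between the two SNRs.
    """
    return (pc_high_snr - pc_low_snr) / snr_step_db


# IEEE scores for the subject who showed a slight decrement:
# 17% correct at -6 dB SNR and 14% correct at -3 dB SNR.
slope_decrement = psychometric_slope(pc_low_snr=17, pc_high_snr=14)
print(f"slope = {slope_decrement:.1f} percent per dB")
```

A negative slope, as in this example, indicates that performance was slightly lower at the more favorable SNR.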

Fig. 4.

Changes in speech perception scores across SNRs for the CRM (left panel) and IEEE (right panel) tests. Each line represents performance for a different individual subject. The difference in percent correct between the two SNRs was divided by 3 dB to yield the slopes of the individual psychometric functions. SNR indicates signal-to-noise ratio; CRM indicates coordinate response measure; IEEE indicates Institute of Electrical and Electronics Engineers.

Associations Between Contralateral Suppression and Speech-in-Noise Recognition

Correlations between contralateral suppression and speech-in-noise performance at each SNR and slope of the psychometric function were examined. Scatter plots of speech-in-noise recognition as a function of contralateral suppression are shown in Figure 5. In each panel, the outcome of interest is the Spearman partial correlation coefficient (rsp), which controls for the variables age and HFPTA. For reference, the Spearman correlation coefficients (rs) are also displayed in each panel. None of the correlations at a particular SNR were statistically significant (Fig. 5, top and middle rows), contrary to hypothesis. However, correlations between contralateral suppression and the slopes of the psychometric functions for each test were both statistically significant (Fig. 5, bottom row). The direction of these significant correlations was positive, indicating that the slopes of the psychometric functions tended to increase (or steepen) as contralateral suppression increased.
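For readers unfamiliar with partial rank correlation, the following sketch shows one common way to compute a Spearman partial correlation with two covariates: all variables are converted to ranks, and the standard recursive partial-correlation formula is applied to the rank correlations. This is not the authors' analysis code; the function names are hypothetical, and the data are invented solely to make the example runnable.

```python
import math


def average_ranks(values):
    """Ranks starting at 1, with tied values given their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def first_order(r_xy, r_xz, r_yz):
    """Correlation of x and y after removing one covariate z."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def partial_spearman(x, y, z1, z2):
    """Spearman correlation of x and y, controlling for z1 and z2."""
    rx, ry, r1, r2 = (average_ranks(v) for v in (x, y, z1, z2))
    # First-order partial correlations, each controlling for z1.
    xy_1 = first_order(pearson(rx, ry), pearson(rx, r1), pearson(ry, r1))
    x2_1 = first_order(pearson(rx, r2), pearson(rx, r1), pearson(r2, r1))
    y2_1 = first_order(pearson(ry, r2), pearson(ry, r1), pearson(r2, r1))
    # Second-order partial correlation: additionally control for z2.
    return first_order(xy_1, x2_1, y2_1)


# Invented data for eight hypothetical listeners (not data from this study).
suppression = [0.8, 1.4, 1.1, 2.7, 0.5, 3.9, 2.0, 5.1]       # dB
slope = [6.0, 10.0, 7.5, 11.7, 8.0, 15.3, 9.0, 13.0]         # percent per dB
age_years = [67, 53, 39, 60, 71, 45, 58, 49]
hfpta_db_hl = [27.5, 6.3, 13.8, 30.0, 41.3, 16.3, 25.0, 21.3]

r_partial = partial_spearman(suppression, slope, age_years, hfpta_db_hl)
print(f"partial rs = {r_partial:.2f}")
```

The recursive formula gives the same result as correlating the residuals obtained by regressing the ranks of each variable on the ranks of both covariates. Significance testing is omitted from the sketch.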

Fig. 5.

Associations between contralateral suppression and speech-in-noise performance. Left and right columns display results for the CRM and IEEE tests, respectively. The top and middle rows display percentage correct at the SNR displayed in the top left corner of each panel. The bottom row displays results for the slopes of the psychometric functions. Spearman correlation coefficients (rs) and partial correlation coefficients (rsp) are displayed in the top right corner of each panel. Asterisks denote correlations that were significant at p < 0.05. CRM indicates coordinate response measure; IEEE indicates Institute of Electrical and Electronics Engineers; SNR indicates signal-to-noise ratio.

Discussion

TEOAEs and Contralateral Suppression

TEOAE signal magnitudes, noise floor magnitudes, and contralateral suppression values reported in Table 1 are consistent with those reported by Mertes and Leek (2016), who tested contralateral suppression in a similar group of primarily older Veteran subjects. The finding of no probable MEMR activation in 15 of 16 subjects is also consistent with this recent work, and is likely due in part to the slow click rate used to obtain TEOAEs (Boothalingam & Purcell 2015). An additional benefit of the slow click rate is that it is less likely to activate the ipsilateral MOC pathway (Guinan et al. 2003), allowing for measurement of larger contralateral suppression values. A wide range of contralateral suppression values was demonstrated in the current subject group, consistent with previous reports (De Ceulaer et al. 2001; Backus & Guinan 2007; Goodman et al. 2013). The presence of SSOAEs did not appear to impact measurements of contralateral suppression, also consistent with recent studies (Marshall et al. 2014; Mertes & Goodman 2016; Marks & Siegel 2017). However, SSOAEs may affect measurements of MOC-induced changes in TEOAE phase and latency and should be analyzed carefully in such cases.

Association Between MOC Activity and Speech Recognition in Noise

The current study represents a step toward better understanding how the MOC may be related to speech recognition in noise at different SNRs and represents, to the authors' knowledge, the first description of the slope of the psychometric function being significantly associated with contralateral suppression. By examining performance at two SNRs, a more complete characterization of a subject's speech-in-noise abilities is developed, relative to performance at a single SNR. These results suggest that the slope of the psychometric function may be a more useful metric for examining the relationship between MOC activity and speech recognition in noise, relative to performance at a single SNR. Kumar and Vanaja (2004) showed that at high SNRs (>15 dB), there was no significant correlation between speech-in-noise performance and MOC activity. At such high SNRs, the listener would not benefit from antimasking because the signal is sufficiently intelligible. Conversely, a very poor SNR would render the signal unintelligible, even in the presence of MOC antimasking. Thus, there should be a range of SNRs in which the MOC will confer an antimasking benefit. By testing a single SNR, one cannot determine where in this range the listener is being tested (Milvae et al. 2015). Additionally, it is likely that this range will be different across subjects, just as the contralateral suppression and speech-in-noise performance scores were in our subject group.

Previous studies have yielded conflicting results regarding whether or not MOC activity is involved in speech-in-noise recognition. In the current study, MOC activity did not correspond to how an individual performed in a speech recognition task at a given SNR, consistent with some other studies (Mukari & Mamat 2008; Wagner et al. 2008). Rather, MOC activity measured in this study was associated with how a listener's performance changed with improvements in SNR. This is in contrast to other studies that found that performance at a single SNR was significantly correlated with MOC activity (Giraud et al. 1997; Kumar & Vanaja 2004; Kim et al. 2006; Abdala et al. 2014; Mishra & Lutman 2014; Bidelman & Bhagat 2015). It is possible that, had we tested other SNRs, performance at those SNRs would have been correlated with MOC activity. We conclude that the current results are consistent with the hypothesis that the MOC efferent system is involved in speech recognition in noise, and that specific findings of previous studies may have depended in part upon the SNR at which the speech materials were presented.

Several of these previous studies (Giraud et al. 1997; Kumar & Vanaja 2004; Mishra & Lutman 2014) differed from the current study because they compared speech-in-noise performance with ipsilateral noise alone and with bilateral noise. Each of these studies found that the difference in performance between these two noise conditions was significantly correlated with contralateral suppression. To understand these results, one must consider the efferent activity that is elicited by the presentation of noise. In the ipsilateral noise condition, the noise would activate the ipsilateral MOC pathway, whereas in the bilateral noise condition, both the ipsilateral and contralateral MOC pathways would be activated. Bilateral activation results in stronger MOC activity than either ipsilateral or contralateral activation (Berlin et al. 1995; Lilaonitkul & Guinan 2009a), and therefore a bilateral elicitor may cause greater antimasking and thus better speech-in-noise performance (Kumar & Vanaja 2004; Mishra & Lutman 2014; but see also Stuart & Butler 2012).

Two studies found a significant correlation between contralateral suppression and performance on a speech-in-noise task when the noise was presented to the ipsilateral ear (Abdala et al. 2014; Bidelman & Bhagat 2015). The correlations were only significant when considering contralateral suppression that was measured by presenting clicks to the right ear and noise to the left ear (Bidelman & Bhagat 2015) and only for a handful of consonant and vowel features at specific SNRs (Abdala et al. 2014). This suggests that the association between MOC activity and speech-in-noise performance at a single SNR may be highly dependent on the test conditions, which could explain why other studies have shown that speech-in-noise measures are not significantly correlated with contralateral suppression at a given SNR (Harkrider & Smith 2005; Mukari & Mamat 2008; Wagner et al. 2008; Stuart & Butler 2012).

The detrimental effects of age on speech-in-noise abilities have been well documented (e.g., Pichora-Fuller et al. 1995). Studies have also demonstrated that the magnitude of contralateral suppression declines with age (Castor et al. 1994; Keppler et al. 2010; Lisowska et al. 2014) as well as with increasing audiometric threshold (Keppler et al. 2010). The purpose of this study was not to investigate the impact of age or hearing threshold on the MOCR, but these variables were controlled for in the analyses due to the range of ages and HFPTAs in our subject group. Associations between MOCR activity and slopes of the psychometric functions remained significant, suggesting that the MOCR is involved in speech-in-noise perception even in the presence of older age and elevated high-frequency thresholds.

Caveats and Future Directions

The current study investigated primarily older adults, many with mild sensorineural hearing loss at one or more audiometric frequencies. This is in contrast to most previous studies discussed in this article, which examined younger, normal-hearing individuals (e.g., Kumar & Vanaja 2004; Mishra & Lutman 2014; Milvae et al. 2015). This difference in subject populations must be considered when comparing the current results to other studies. Although we accounted for the effects of age and HFPTA in our correlational analyses, it is possible that the relationship between efferent activity and the slope of the psychometric function may be different for younger, normal-hearing individuals and warrants further investigation. Additionally, a larger sample size is needed to determine how generalizable the current results are and to determine if the correlations in the current study (Fig. 5, bottom row) remain significant after obtaining data from more subjects.

A limitation of the current study is the discrepancy in MOC pathways assessed by the OAE and speech-in-noise perception measurements: contralateral suppression measurements invoked the contralateral MOC pathway, whereas the speech-in-noise measurements invoked the ipsilateral MOC pathway. This presentation paradigm is consistent with other recent studies which found a relationship between contralateral suppression and ipsilateral speech-in-noise perception (Abdala et al. 2014; Bidelman & Bhagat 2015), suggesting that MOC activity of the ipsilateral and contralateral pathways may be correlated. However, the ipsilateral and contralateral MOC pathways differ both in terms of their cochlear innervation patterns (Brown 2014) and the MOCR strength (Lilaonitkul & Guinan 2009b), so more investigation into the validity of the comparisons between ipsilateral and contralateral pathways is warranted. As discussed above, several studies have attempted to parse out the contribution of the contralateral MOC pathway to speech-in-noise perception by measuring performance in the presence of ipsilateral and bilateral noise (Giraud et al. 1997; Kumar & Vanaja 2004; Stuart & Butler 2012; Mishra & Lutman 2014). Computing the difference in performance between the two conditions can allow for assessment of how the contralateral MOC pathway contributes to perception. Several of these studies found that this difference score was significantly correlated with the magnitude of contralateral suppression of TEOAEs (Giraud et al. 1997; Kumar & Vanaja 2004; Mishra & Lutman 2014), but one study found no significant correlation (Stuart & Butler 2012). The studies varied in terms of the speech tasks utilized, which may account for the discrepant findings. 
Future studies should consider implementing multiple speech tasks (e.g., phoneme, word, and sentence recognition) presented with ipsilateral and bilateral noise, all in the same group of subjects, to better determine the relationship between MOC function and speech-in-noise perception as well as to provide the ability to directly compare to previous studies.

In the current study, contralateral suppression was measured during a visual vigilance task, so presumably there was some cortically mediated impact on the contralateral suppression results (Froehlich et al. 1993; Wittekindt et al. 2014). However, comparisons of contralateral suppression obtained with versus without the use of the vigilance task were not made, so this presumption remains speculative. Ideally, contralateral suppression would be measured while the subject is participating in the perceptual task (Zhao et al. 2014). However, such measurements are difficult to achieve in practice because OAEs are low-level signals and therefore cannot be measured in the same ear in which speech or noise is being presented, and the subject's response method would have to involve little to no acoustic noise (e.g., a button press or mouse click, rather than verbally repeating back the words). Contralateral suppression measurements will require significant modifications before they can be made concurrently with a speech perception task.

The correlational analysis carried out in this study precludes any conclusions regarding whether the MOC influenced the slope of the psychometric function, or whether the two metrics were merely related to some common underlying cause. Experimental manipulation of the amount of MOC activity could allow for a more direct test of a possible causal relationship, which may include lesion studies in animals or an examination of the effects of a treatment (e.g., auditory training) on MOC activity (de Boer & Thornton 2008). Insights into the mechanisms responsible for the observed results may also be obtained through comparisons of the observed results to those predicted by computational models of the auditory system that include efferent feedback (e.g., Clark et al. 2012; Smalt et al. 2014).

Recommendations for future work in this area include the consideration of methods for providing a more complete understanding of how efferent activity is associated with speech recognition in noise during realistic listening conditions. Subjects could be tested across a wider range of SNRs to obtain more detailed psychometric functions. Both positive and negative SNRs should be included (the current study only included negative SNRs) since the benefit for speech-in-noise perception conferred by the MOCR likely depends on an interaction between the strength of the reflex along with the degree to which the signal and noise are impacted by a change in cochlear amplifier gain, as suggested by Milvae et al. (2016). It is of interest to note that subjects with steeper slopes tended to perform more poorly at the low SNR but better at the high SNR, relative to subjects with shallower slopes (Fig. 4). It is unclear why strong MOC activity may involve a decrement in performance at lower SNRs, but more data obtained across a wider range of SNRs in a larger subject group could provide insight and determine if this finding is replicable.

An additional recommendation for future work is to incorporate bilateral presentation of both speech and noise, and bilateral testing of efferent activity, to activate all efferent pathways and reflect binaural listening and processing as encountered in real-world settings. Given that contralateral suppression is correlated with word recognition in bilateral noise (Giraud et al. 1997; Kumar & Vanaja 2004; Mishra & Lutman 2014) but not sentence recognition in bilateral noise (Stuart & Butler 2012), further investigation is needed to determine under which listening conditions the MOC confers a benefit for speech recognition in noise. Additionally, characterizing MOC activity at multiple stimulus levels would allow computation of input-output suppression or “effective attenuation” (de Boer & Thornton 2007), which may better reveal relationships with perceptual processes that are not apparent from MOC activity assessed at a single stimulus level.

Conclusions

In a group of primarily older adults with normal hearing or mild hearing loss, auditory efferent activity, as assessed using contralateral suppression of TEOAEs, was not associated with speech-in-noise performance at a single SNR. However, the strength of auditory efferent activity was significantly correlated with the slope of the psychometric function computed across performance at two SNRs. The results suggest that listeners with stronger MOC efferent activity demonstrate more improvement in speech recognition performance with increases in SNR relative to individuals with weaker MOC activity. Future work should consider expanding upon this relationship by examining a wider range of SNRs and including bilateral presentation of speech and noise stimuli.

Acknowledgments

The authors thank the participants for their time and effort, Dr. Curtis J. Billings for providing MATLAB code for IEEE sentence testing, and Dr. Frederick J. Gallun for providing MATLAB code for CRM testing.

This investigation was supported by National Institutes of Health grant numbers F32 DC015149 (I.B.M.) and R01 DC000626 (M.R.L.), and by a U.S. Department of Veterans Affairs RR&D Senior Research Career Scientist Award number C4042L (M.R.L.). Support for this work was also provided to I.B.M. by the Loma Linda Veterans Association for Research and Education.

I.B.M. designed and performed experiments, analyzed data, and wrote the paper. E.C.W. performed experiments and wrote the paper. M.R.L. designed experiments, analyzed data, and wrote the paper.

Footnotes

Portions of this article were presented at the 44th Annual Scientific and Technology Meeting of the American Auditory Society, March 2–4, 2017, Scottsdale, AZ.

This work was part of a study registered with clinicaltrials.gov (Identifier #NCT02574247).

Financial Disclosures/Conflicts of Interest: The authors have no conflicts of interest to disclose.

References

- Abdala C, Dhar S, Ahmadi M, et al. Aging of the medial olivocochlear reflex and associations with speech perception. J Acoust Soc Am. 2014;135:754–765. doi: 10.1121/1.4861841.

- Abdala C, Mishra S, Garinis A. Maturation of the human medial efferent reflex revisited. J Acoust Soc Am. 2013;133:938–950. doi: 10.1121/1.4773265.

- Abdala C, Mishra SK, Williams TL. Considering distortion product otoacoustic emission fine structure in measurements of the medial olivocochlear reflex. J Acoust Soc Am. 2009;125:1584–1594. doi: 10.1121/1.3068442.

- Backus BC, Guinan JJ Jr. Time-course of the human medial olivocochlear reflex. J Acoust Soc Am. 2006;119:2889–2904. doi: 10.1121/1.2169918.

- Backus BC, Guinan JJ Jr. Measurement of the distribution of medial olivocochlear acoustic reflex strengths across normal-hearing individuals via otoacoustic emissions. J Assoc Res Otolaryngol. 2007;8:484–496. doi: 10.1007/s10162-007-0100-0.

- Berlin CI, Hood LJ, Hurley AE, et al. Binaural noise suppresses linear click-evoked otoacoustic emissions more than ipsilateral or contralateral noise. Hear Res. 1995;87:96–103. doi: 10.1016/0378-5955(95)00082-f.

- Berlin CI, Hood LJ, Wen H, et al. Contralateral suppression of non-linear click-evoked otoacoustic emissions. Hear Res. 1993;71:1–11. doi: 10.1016/0378-5955(93)90015-s.

- Bidelman GM, Bhagat SP. Right-ear advantage drives the link between olivocochlear efferent ‘antimasking’ and speech-in-noise listening benefits. Neuroreport. 2015;26:483–487. doi: 10.1097/WNR.0000000000000376.

- Bilger RC, Neutzel JM, Rabinowitz WM, et al. Standardization of a test of speech perception in noise. J Speech Lang Hear Res. 1984;27:32–48. doi: 10.1044/jshr.2701.32.

- Bolia RS, Nelson WT, Ericson MA, et al. A speech corpus for multitalker communications research. J Acoust Soc Am. 2000;107:1065–1066. doi: 10.1121/1.428288.

- Boothalingam S, Purcell DW. Influence of the stimulus presentation rate on medial olivocochlear system assays. J Acoust Soc Am. 2015;137:724–732. doi: 10.1121/1.4906250.

- Brown MC. Single-unit labeling of medial olivocochlear neurons: The cochlear frequency map for efferent axons. J Neurophysiol. 2014;111:2177–2186. doi: 10.1152/jn.00045.2014.

- Brownell WE. Outer hair cell electromotility and otoacoustic emissions. Ear Hear. 1990;11:82–92. doi: 10.1097/00003446-199004000-00003.

- Castor X, Veuillet E, Morgon A, et al. Influence of aging on active cochlear micromechanical properties and on the medial olivocochlear system in humans. Hear Res. 1994;77:1–8. doi: 10.1016/0378-5955(94)90248-8.

- Clark NR, Brown GJ, Jürgens T, et al. A frequency-selective feedback model of auditory efferent suppression and its implications for the recognition of speech in noise. J Acoust Soc Am. 2012;132:1535–1541. doi: 10.1121/1.4742745.

- Collet L, Kemp DT, Veuillet E, et al. Effect of contralateral auditory stimuli on active cochlear micro-mechanical properties in human subjects. Hear Res. 1990;43:251–261. doi: 10.1016/0378-5955(90)90232-e.

- de Boer J, Thornton AR. Effect of subject task on contralateral suppression of click evoked otoacoustic emissions. Hear Res. 2007;233:117–123. doi: 10.1016/j.heares.2007.08.002.

- de Boer J, Thornton AR. Neural correlates of perceptual learning in the auditory brainstem: Efferent activity predicts and reflects improvement at a speech-in-noise discrimination task. J Neurosci. 2008;28:4929–4937. doi: 10.1523/JNEUROSCI.0902-08.2008.

- de Boer J, Thornton AR, Krumbholz K. What is the role of the medial olivocochlear system in speech-in-noise processing? J Neurophysiol. 2012;107:1301–1312. doi: 10.1152/jn.00222.2011.

- De Ceulaer G, Yperman M, Daemers K, et al. Contralateral suppression of transient evoked otoacoustic emissions: Normative data for a clinical test set-up. Otol Neurotol. 2001;22:350–355. doi: 10.1097/00129492-200105000-00013.

- Froehlich P, Collet L, Morgon A. Transiently evoked otoacoustic emission amplitudes change with changes of directed attention. Physiol Behav. 1993;53:679–682. doi: 10.1016/0031-9384(93)90173-d.

- Giraud AL, Garnier S, Micheyl C, et al. Auditory efferents involved in speech-in-noise intelligibility. Neuroreport. 1997;8:1779–1783. doi: 10.1097/00001756-199705060-00042.

- Goodman SS, Mertes IB, Lewis JD, et al. Medial olivocochlear-induced transient-evoked otoacoustic emission amplitude shifts in individual subjects. J Assoc Res Otolaryngol. 2013;14:829–842. doi: 10.1007/s10162-013-0409-9.

- Guinan JJ. Physiology of the medial and lateral olivocochlear systems. In: Ryugo DK, Fay RR, Popper AN, editors. Auditory and Vestibular Efferents. New York, NY: Springer; 2011. pp. 39–81.

- Guinan JJ Jr. Olivocochlear efferents: Anatomy, physiology, function, and the measurement of efferent effects in humans. Ear Hear. 2006;27:589–607. doi: 10.1097/01.aud.0000240507.83072.e7.

- Guinan JJ Jr. Olivocochlear efferent function: Issues regarding methods and the interpretation of results. Front Syst Neurosci. 2014;8:142. doi: 10.3389/fnsys.2014.00142.

- Guinan JJ Jr, Backus BC, Lilaonitkul W, et al. Medial olivocochlear efferent reflex in humans: Otoacoustic emission (OAE) measurement issues and the advantages of stimulus frequency OAEs. J Assoc Res Otolaryngol. 2003;4:521–540. doi: 10.1007/s10162-002-3037-3.

- Guinan JJ Jr, Gifford ML. Effects of electrical stimulation of efferent olivocochlear neurons on cat auditory-nerve fibers. I. Rate-level functions. Hear Res. 1988;33:97–114. doi: 10.1016/0378-5955(88)90023-8.

- Harkrider AW, Smith SB. Acceptable noise level, phoneme recognition in noise, and measures of auditory efferent activity. J Am Acad Audiol. 2005;16:530–545. doi: 10.3766/jaaa.16.8.2.

- Hood LJ, Berlin CI, Hurley A, et al. Contralateral suppression of transient-evoked otoacoustic emissions in humans: Intensity effects. Hear Res. 1996;101:113–118. doi: 10.1016/s0378-5955(96)00138-4.

- Institute of Electrical and Electronics Engineers [IEEE]. IEEE Recommended Practice for Speech Quality Measures. New York, NY: IEEE; 1969.

- Kawase T, Delgutte B, Liberman MC. Antimasking effects of the olivocochlear reflex. II. Enhancement of auditory-nerve response to masked tones. J Neurophysiol. 1993;70:2533–2549. doi: 10.1152/jn.1993.70.6.2533.

- Keppler H, Dhooge I, Corthals P, et al. The effects of aging on evoked otoacoustic emissions and efferent suppression of transient evoked otoacoustic emissions. Clin Neurophysiol. 2010;121:359–365. doi: 10.1016/j.clinph.2009.11.003.

- Kim S, Frisina RD, Frisina DR. Effects of age on speech understanding in normal hearing listeners: Relationship between the auditory efferent system and speech intelligibility in noise. Speech Commun. 2006;48:855–862.

- Kumar UA, Vanaja CS. Functioning of olivocochlear bundle and speech perception in noise. Ear Hear. 2004;25:142–146. doi: 10.1097/01.aud.0000120363.56591.e6.

- Lilaonitkul W, Guinan JJ Jr. Human medial olivocochlear reflex: Effects as functions of contralateral, ipsilateral, and bilateral elicitor bandwidths. J Assoc Res Otolaryngol. 2009a;10:459–470. doi: 10.1007/s10162-009-0163-1.

- Lilaonitkul W, Guinan JJ Jr. Reflex control of the human inner ear: A half-octave offset in medial efferent feedback that is consistent with an efferent role in the control of masking. J Neurophysiol. 2009b;101:1394–1406. doi: 10.1152/jn.90925.2008.

- Lisowska G, Namyslowski G, Orecka B, et al. Influence of aging on medial olivocochlear system function. Clin Interv Aging. 2014;9:901–914. doi: 10.2147/CIA.S61934.

- Marks KL, Siegel JH. Differentiating middle ear and medial olivocochlear effects on transient-evoked otoacoustic emissions. J Assoc Res Otolaryngol. 2017;18:529–542. doi: 10.1007/s10162-017-0621-0.

- Marshall L, Lapsley Miller JA, Guinan JJ, et al. Otoacoustic-emission-based medial-olivocochlear reflex assays for humans. J Acoust Soc Am. 2014;136:2697–2713. doi: 10.1121/1.4896745.

- Mertes IB, Goodman SS. Within- and across-subject variability of repeated measurements of medial olivocochlear-induced changes in transient-evoked otoacoustic emissions. Ear Hear. 2016;37:e72–e84. doi: 10.1097/AUD.0000000000000244. [DOI] [PubMed] [Google Scholar]

- Mertes IB, Leek MR. Concurrent measures of contralateral suppression of transient-evoked otoacoustic emissions and of auditory steady-state responses. J Acoust Soc Am. 2016;140:2027–2038. doi: 10.1121/1.4962666. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Milvae KD, Alexander JM, Strickland EA. Is cochlear gain reduction related to speech-in-babble performance? Proc ISAAR. 2015;5:43–50.

- Milvae KD, Alexander JM, Strickland EA. Investigation of the relationship between cochlear gain reduction and speech-in-noise performance at positive and negative signal-to-noise ratios. J Acoust Soc Am. 2016;139:1987. doi: 10.1121/10.0003964.

- Mishra SK, Lutman ME. Repeatability of click-evoked otoacoustic emission-based medial olivocochlear efferent assay. Ear Hear. 2013;34:789–798. doi: 10.1097/AUD.0b013e3182944c04.

- Mishra SK, Lutman ME. Top-down influences of the medial olivocochlear efferent system in speech perception in noise. PLoS One. 2014;9:e85756. doi: 10.1371/journal.pone.0085756.

- Mukari SZ, Mamat WH. Medial olivocochlear functioning and speech perception in noise in older adults. Audiol Neurootol. 2008;13:328–334. doi: 10.1159/000128978.

- Pichora-Fuller MK, Schneider BA, Daneman M. How young and old adults listen to and remember speech in noise. J Acoust Soc Am. 1995;97:593–608. doi: 10.1121/1.412282.

- Shera CA, Guinan JJ Jr. Evoked otoacoustic emissions arise by two fundamentally different mechanisms: A taxonomy for mammalian OAEs. J Acoust Soc Am. 1999;105:782–798. doi: 10.1121/1.426948.

- Smalt CJ, Heinz MG, Strickland EA. Modeling the time-varying and level-dependent effects of the medial olivocochlear reflex in auditory nerve responses. J Assoc Res Otolaryngol. 2014;15:159–173. doi: 10.1007/s10162-013-0430-z.

- Spankovich C, Hood LJ, Silver HJ, et al. Associations between diet and both high and low pure tone averages and transient evoked otoacoustic emissions in an older adult population-based study. J Am Acad Audiol. 2011;22:49–58. doi: 10.3766/jaaa.22.1.6.

- Stuart A, Butler AK. Contralateral suppression of transient otoacoustic emissions and sentence recognition in noise in young adults. J Am Acad Audiol. 2012;23:686–696. doi: 10.3766/jaaa.23.9.3.

- Stuart A, Cobb KM. Reliability of measures of transient evoked otoacoustic emissions with contralateral suppression. J Commun Disord. 2015;58:35–42. doi: 10.1016/j.jcomdis.2015.09.003.

- Summers V, Makashay MJ, Theodoroff SM, et al. Suprathreshold auditory processing and speech perception in noise: Hearing-impaired and normal-hearing listeners. J Am Acad Audiol. 2013;24:274–292. doi: 10.3766/jaaa.24.4.4.

- Wagner W, Frey K, Heppelmann G, et al. Speech-in-noise intelligibility does not correlate with efferent olivocochlear reflex in humans with normal hearing. Acta Otolaryngol. 2008;128:53–60. doi: 10.1080/00016480701361954.

- Winslow RL, Sachs MB. Effect of electrical stimulation of the crossed olivocochlear bundle on auditory nerve response to tones in noise. J Neurophysiol. 1987;57:1002–1021. doi: 10.1152/jn.1987.57.4.1002.

- Wittekindt A, Kaiser J, Abel C. Attentional modulation of the inner ear: A combined otoacoustic emission and EEG study. J Neurosci. 2014;34:9995–10002. doi: 10.1523/JNEUROSCI.4861-13.2014.

- Zhao W, Strickland E, Guinan J. Measurement of medial olivocochlear efferent activity during psychophysical overshoot. Assoc Res Otolaryngol Abs. 2014;37:78–79.