Abstract

Distinct processing of objects and space has been an organizing principle for studying higher-level vision and medial temporal lobe memory. Here, however, we discuss how object and spatial information are in fact closely integrated in vision and memory. The ventral, object-processing visual pathway carries precise spatial information, transformed from retinotopic coordinates into relative dimensions. At the final stages of the ventral pathway (including area TEd), object-sensitive neurons are intermixed with neurons that process large-scale environmental space. TEd projects primarily to perirhinal cortex (PRC), which in turn projects to lateral entorhinal cortex (LEC). PRC and LEC also combine object and spatial information. For example, PRC and LEC neurons exhibit place fields that are evoked by landmark objects or the remembered locations of objects. Thus, spatial information, on both local and global scales, is deeply integrated into the ventral/temporal object-processing pathway in vision and memory.

Introduction

The fundamental insight that the visual hierarchy is divided into two pathways, ventral and dorsal1, has guided research on visual cortex for decades, and has also influenced ideas about organization in prefrontal2, auditory3,4, and medial temporal lobe cortex5. The ventral (“what”) pathway is usually described as processing objects, whereas the dorsal (“where”) pathway is described as processing space (although the two pathways have also been described as processing perception [“what”] vs. processing action [“how”]6,7). Recent research has refined and extended understanding of anatomy and function in the two visual pathways7,8.

Here, we reexamine the object/space distinction for the ventral visual pathway and the medial temporal lobe processing stream it feeds. We discuss how spatial information, rather than being entirely segregated into a different pathway, is closely integrated with object processing throughout, in two senses. First, precise retinotopic spatial information about objects is not lost but instead transformed into relational dimensions. On the finest scales, neurons encode the regular, smooth relationships between points on boundaries and surfaces in the natural world. On a somewhat larger scale, neurons encode the positions of object fragments relative to each other and to the object as a whole. Second, information about large-scale, environmental space is closely intermixed with object information in the ventral visual pathway. This seems to support representation of object position within environments.

The two visual pathways continue into the medial temporal lobe memory system, in which the lateral entorhinal cortex (LEC) conveys ventral-pathway input to the hippocampus and the medial entorhinal cortex (MEC) conveys dorsal-pathway input7–10 (Box 1). Episodic memory, defined as explicit memory of specific items or events tied to a specific spatiotemporal context, is fundamentally and inextricably tied to spatial processing11. Many have proposed that the hippocampus is the site of binding of the “what” and “where” information to create and store conjunctive representations of experience that can be later retrieved and re-experienced as a conscious recollection of the original event12–16. However, much evidence indicates that the ventral stream encodes spatial information at processing stages well before the hippocampus.

Transformation of retinotopic space into relational space

One of the defining features of the visual hierarchy is that receptive field size increases progressively at successive stages17. Concomitantly, retinotopic organization becomes gradually less clear. In the final stages of the ventral pathway in the anterior temporal lobe (TE), receptive fields cover substantial bilateral portions of the visual field, making retinotopy coarse or absent18,19. These strong trends naturally suggest that spatial information is discarded in the ventral pathway. Loss of spatial information could be regarded as a virtue, since a major goal of ventral pathway processing is to produce invariant representations of objects that do not depend on retinotopic position or size. Through geometric transformations20, associative learning21–23, or both, TE could evolve stable signals for object identity completely independent of space.

Spatial information could be considered dispensable in this way for purely conceptual information like categorical identity. But object vision comprises much more than conceptual knowledge. In particular, we appreciate the detailed structure of objects and surfaces, on scales ranging down to millimeters. We do not just see a “dog”; we see a dog in glorious Technicolor, with all the subtle conformational characteristics that define its breed, all the variations and quirks that betray its individual identity, all the postural cues that reveal its emotional state and behavioral intentions, and all the incidental details that characterize a perceptual moment. We have immediate cognitive access to such information, allowing us to understand, manipulate, and verbally report on the precise structure of physical reality. We can explain, for example, how to differentiate dog breeds and read canine behavioral cues in terms of precise proportions, positions, and configurations of eyes, nose, lips, teeth, ears, neck, torso, limbs, toes, and tail. Thus, detailed spatial information about objects must be carried forward in explicit form to the final stages of the ventral pathway, the pathway that processes the finest-scale, foveal information and then communicates it to the rest of the brain1,8,17–19. How can this be reconciled with the disappearance of retinotopic detail?

The perhaps obvious answer is that loss of retinotopy does not mean that spatial information is discarded or becomes cognitively inaccessible. Instead, it is transformed into more useful, relational dimensions. While our cognitive access to absolute retinotopic image position is vague and coarse, we are acutely aware of relative positional relationships in the world. We don’t describe dogs in Cartesian image coordinates; we describe lengths, widths, diameters, aspect ratios, orientations, curvatures, attachments, relative distances and angles, and other measures of how two or more points or anatomical features relate to each other. As discussed below, the transformation of retinotopic space into relational dimensions is observable at the neural level throughout the ventral pathway.

Local spatial relationships: Neural coding of natural smoothness

Transformation into relative dimensions is represented in V1 by orientation tuning24. Orientation is a spatial relationship between the points along an extended contour, such that the distances in the retinotopic x and y dimensions between any two points have the same ratio. It is a useful re-description for our natural world, in which physical boundaries have a high degree of smoothness, and thus constant orientation, on the scale of V1 receptive fields. A contour originally represented by many retinal photoreceptors can be re-described with a single orientation value. Complex cells, which generalize orientation tuning across a small span of visual space25, implement an early trade-off of retinotopic accuracy for precise relational information.
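As a minimal illustration of this re-description (not a model of V1 itself), the Python sketch below computes the orientation of each segment along a sampled contour and collapses a straight contour to a single orientation value. The function names and the tolerance parameter are hypothetical, and for simplicity the sketch does not treat orientations near 0° and 180° as equal.

```python
import math


def segment_orientations(points):
    """Orientation (degrees, in [0, 180)) of each segment between consecutive points."""
    angles = []
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        # Orientation depends only on the ratio of the y and x displacements;
        # taking it modulo 180 treats a contour as an axis, not a direction.
        angles.append(math.degrees(math.atan2(y1 - y0, x1 - x0)) % 180.0)
    return angles


def single_orientation(points, tol_deg=1e-6):
    """Return one orientation if every segment agrees (a straight contour), else None.

    Simplification: orientations near 0 and 180 degrees are not treated as equal.
    """
    angles = segment_orientations(points)
    if max(angles) - min(angles) <= tol_deg:
        return angles[0]
    return None


# Ten samples along a straight edge reduce to one number.
straight = [(i, 2 * i) for i in range(10)]
print(single_orientation(straight))  # about 63.43 degrees
```

A bent contour yields `None`, which is the point at which a single orientation value stops being an adequate summary and a description in terms of change in orientation becomes useful.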

On slightly larger scales, natural surfaces do not maintain a consistent orientation. But change in orientation, whether abrupt (corners) or gradual (curves), is itself a local spatial relationship that can be divorced from retinotopy. Thus, in V4, where receptive fields cover several degrees of visual angle (depending on eccentricity), tuning for change in orientation (curvature, the derivative of orientation with respect to distance along the contour) is prominent26–34. V4 neurons are simultaneously tuned for both orientation and curvature, so that a given V4 neuron might respond to sharp convex angles pointing upwards or shallow concave curves opening to the left (Fig. 1a). These tuning characteristics are maintained across the larger V4 receptive fields (Fig. 1b), reflecting a further trade-off of retinotopic accuracy for relative spatial information about points along contours. (There is also evidence that V4 neurons can be tuned for spirality35, a higher-order derivative that describes point relationships along some contours, for example the tails of dog breeds like Basenjis.)
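Because curvature is the rate of change of orientation along a contour, it can be estimated from sampled points as the turning angle between successive segments divided by the local arc length. The sketch below assumes a counterclockwise traversal around the shape, so that convex turns come out positive; the helper names are hypothetical, and the estimator is one simple discrete choice, not the method used in the cited V4 studies.

```python
import math


def turning_angle(p0, p1, p2):
    """Signed change in direction (radians) from segment p0->p1 to segment p1->p2."""
    a0 = math.atan2(p1[1] - p0[1], p1[0] - p0[0])
    a1 = math.atan2(p2[1] - p1[1], p2[0] - p1[0])
    # Wrap into [-pi, pi) so a small left turn is not read as a large right turn.
    return (a1 - a0 + math.pi) % (2 * math.pi) - math.pi


def discrete_curvature(points):
    """Curvature at each interior point: change in orientation per unit contour length."""
    curvatures = []
    for p0, p1, p2 in zip(points, points[1:], points[2:]):
        ds = 0.5 * (math.dist(p0, p1) + math.dist(p1, p2))
        curvatures.append(turning_angle(p0, p1, p2) / ds)
    return curvatures


# A circle of radius 2 traversed counterclockwise should have curvature close to 1/2
# everywhere; a straight line should have curvature 0.
radius, n = 2.0, 360
circle = [
    (radius * math.cos(2 * math.pi * k / n), radius * math.sin(2 * math.pi * k / n))
    for k in range(n)
]
line = [(float(i), 0.0) for i in range(5)]
print(min(discrete_curvature(circle)), max(discrete_curvature(line)))
```

Note that the circle's curvature is constant even though every sample has a different retinotopic position, which is the sense in which curvature is a relational, not retinotopic, quantity.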

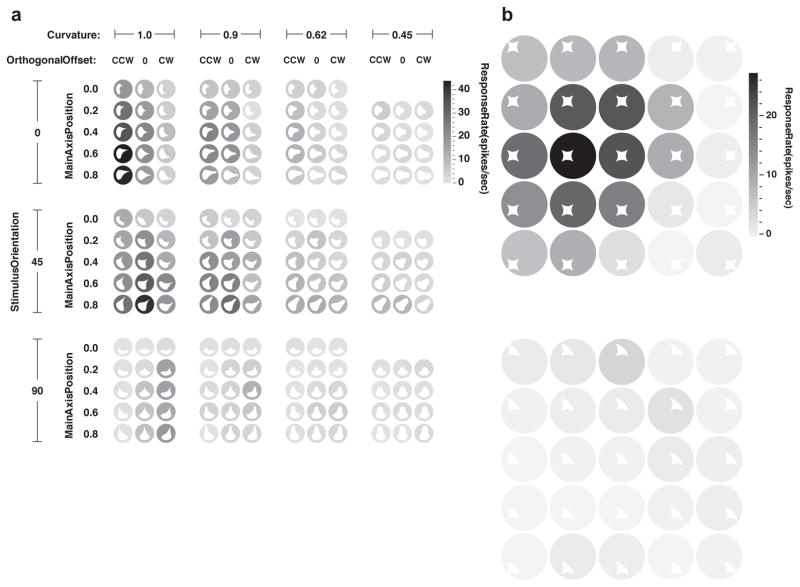

Fig. 1. Transformation of retinotopic information into contour coding in area V4.

This neuron exemplifies how precise retinotopic information is recoded in terms of contour orientation, curvature, and object-relative position. The stimuli shown here (white shapes) were derived from a more wide-ranging test of shape sensitivity that revealed tuning for sharp convex curvature pointing (oriented) toward the upper right and positioned to the upper right of object center. (a) This fine-grained test shows gradual tuning for curvature (horizontal axis), orientation (vertical axis), and object-relative position (recursively plotted within axes) of the convex curvature. Response rate for each shape is indicated by background shade (see scale bar at right). (b) This test demonstrates how a shape with the critical convex curvature at its top right drives responses across a broad range of retinotopic positions (top), while a similar shape without this feature elicits little response (bottom). Adapted from Pasupathy and Connor (2001)27.

These 2D orientations and curvatures in flat visual images typically reflect the orientations and curvatures of 3D structures in the real world. By the final stages of the ventral visual pathway, neurons represent 3D surface orientation and curvature36–40, and this representation is causally related to perception41. While 2D contour orientation occupies a polar domain, 3D surface orientation occupies a spherical domain: A surface can face towards you, away from you, to your right, to your left, upwards, or downwards, and anywhere in between. Neurons in TE are tuned for 3D surface orientations, with a predictable, large bias toward orientations visible to the viewer38, which span half the spherical space (the half represented by the near side of the moon relative to viewers on Earth; surface orientations on the moon’s far side cannot be seen). TE neurons are simultaneously tuned for 3D surface curvature, which is mathematically describable in terms of two “principal” cross-sectional curvatures, one maximum (most convex) and one minimum (most concave) (Fig. 2). A bump has convex max and min curvatures; a cylinder has convex and 0 (flat) curvatures; a dimple has concave curvatures, etc. TE neurons are tuned for a wide range of surface curvatures, with a strong bias toward convexity38,40, which dominates the visible external surfaces of natural objects. By virtue of tuning for 3D orientation and curvature, TE neurons represent the regular spatial relationships between points across the smooth surface fragments that make up real-world objects. These representations support detailed spatial perception of the infinite variety of bumps, dimples, ridges, creases and other features that can occur on natural surfaces41.
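The two principal curvatures can be illustrated for the simplest case: a surface point whose tangent plane faces the viewer, where the principal curvatures are the eigenvalues of the 2×2 matrix of second derivatives of surface height. In the sketch below, the sign convention (convex, bulging toward the viewer, is positive), the function names, and the category labels are assumptions chosen to match the examples in the text, not the parameterization used in the cited studies.

```python
import math


def principal_curvatures(hxx, hxy, hyy):
    """Principal curvatures (k_max, k_min) at a point where height h(x, y) has zero slope.

    hxx, hxy, hyy are second derivatives of height toward the viewer. The eigenvalues
    of the symmetric Hessian are negated so that a peak (convex) is positive.
    """
    mean = 0.5 * (hxx + hyy)
    spread = math.hypot(0.5 * (hxx - hyy), hxy)
    e_high, e_low = mean + spread, mean - spread
    return -e_low, -e_high  # k_max >= k_min


def surface_label(k_max, k_min, tol=1e-9):
    """Coarse shape category from the signs of the two principal curvatures."""
    if k_min > tol:
        return "bump"    # both cross-sections convex
    if k_max < -tol:
        return "dimple"  # both cross-sections concave
    if k_max > tol and k_min < -tol:
        return "saddle"  # one convex, one concave
    if k_max > tol:
        return "ridge"   # convex and flat, as on a cylinder
    if k_min < -tol:
        return "trough"  # concave and flat
    return "flat"


for name, hessian in [("bump", (-1, 0, -1)), ("cylinder", (-1, 0, 0)), ("dimple", (1, 0, 1))]:
    print(name, surface_label(*principal_curvatures(*hessian)))
```

Because the eigenvalues do not depend on how the x and y axes are oriented, a ridge remains a ridge when it is rotated in the image plane; only its orientation changes.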

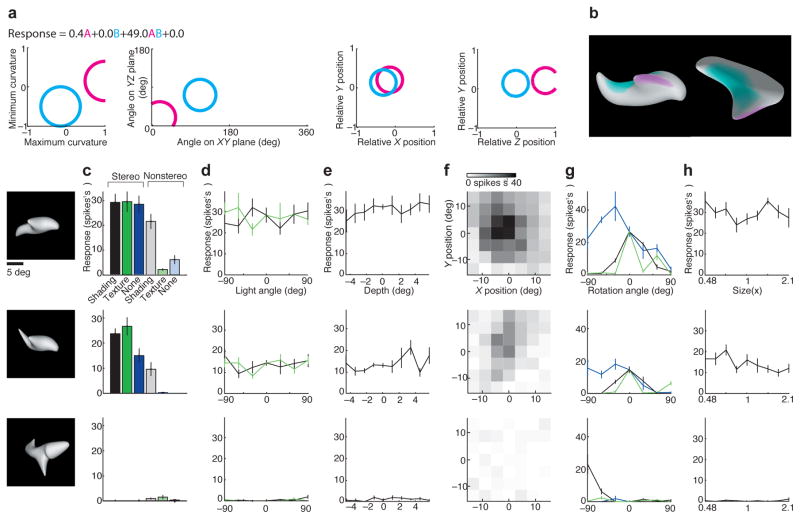

Fig. 2. Transformation of retinotopic information into surface coding in TE.

This neuron exemplifies how retinotopic spatial information is recoded in terms of 3D orientation, 3D curvature, and 3D object-relative position of surface fragments. (a) The response rates for this neuron were best fit by a model based on two multi-dimensional Gaussian tuning components that describe surface structure at a given point. Cyan and magenta circles mark the 1.0 s.d. boundaries for these Gaussians in minimum and maximum cross-sectional curvature (the least and most convex cross-sections through a point on the surface), 3D orientation (of a surface normal vector pointing away from the interior), x/y position, and z/y position (of a surface point, relative to object center of mass) (left to right). The response equation shows that only the product term had substantial weight, meaning that this neuron responded only to shapes with surfaces in both the cyan and magenta tuning ranges. The two tuning ranges were found with iterative fitting of the non-linear model, which required both tuning ranges even though they did not individually drive responses. (b) A high-response stimulus, generated by an adaptive algorithm responding to spike rates, shown in front view (left) and top view (right), with surfaces tinted to show regions within the two Gaussian tuning ranges. This neuron responded to objects with ridges (convex/flat curvature) facing the viewer and positioned in front of object center (magenta) and shallow flat/concave surfaces facing upwards and positioned near object center (cyan). (c–h) Responses were highly consistent across a wide range of transformations, as long as depth cues were present, demonstrating consistent coding of 3D surface shape. (c) Responses to highly effective (top), moderately effective (center) and minimally effective (bottom) stimuli with varying cues for shape in depth. Responses were strongest when stereo (binocular disparity) cues were present (black, dark green, dark blue). Responses remained substantial when only shading cues were present (gray). Responses collapsed when both stereo and shading were removed (light green, light blue). (d) Responses remained consistent across a 180° range of lighting directions in the horizontal plane (black line) and the sagittal plane (green line), which produced extremely different images. (e) Responses were consistent across a wide range of stereoscopic depths. (f) Tuning was consistent across a wide range of positions in the image plane. (g) Responses were consistent across a wide range of rotations in the image plane (around the z axis, blue line). There was less tolerance for rotations outside the image plane, around the x axis (black) or y axis (green). (h) Responses were consistent across two octaves of scale. Adapted from Yamane et al. (2008)38.
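The product-term logic described in panel (a) can be sketched as follows. Each tuning component is modeled here as an unnormalized Gaussian over a small feature vector describing one surface fragment, a component's drive is taken as its best match over all fragments of a stimulus, and the response combines linear terms with a product term. The two feature dimensions, all parameter values, the function names, and the max-over-fragments rule are illustrative assumptions, not the fitted model from the cited study.

```python
import math


def gaussian(x, mu, sigma):
    """Unnormalized Gaussian with diagonal covariance (peak value 1 at x == mu)."""
    return math.exp(-0.5 * sum(((xi - mi) / si) ** 2 for xi, mi, si in zip(x, mu, sigma)))


def component_drive(fragments, mu, sigma):
    """Best match of any surface fragment to one tuning component (assumed max rule)."""
    return max(gaussian(f, mu, sigma) for f in fragments)


def response(fragments, comp_a, comp_b, w_a, w_b, w_ab, baseline=0.0):
    """Linear terms for each component plus a product term that needs both at once."""
    a = component_drive(fragments, *comp_a)
    b = component_drive(fragments, *comp_b)
    return baseline + w_a * a + w_b * b + w_ab * a * b


# Hypothetical fragment features: (max curvature, min curvature).
ridge = ((1.0, 0.0), (0.2, 0.2))     # component A: convex/flat, as (mean, s.d.)
shallow = ((0.0, -0.5), (0.2, 0.2))  # component B: flat/concave
both = [(1.0, 0.0), (0.0, -0.5)]
only_ridge = [(1.0, 0.0)]

# With weight only on the product term, neither component drives the cell on its own.
print(response(both, ridge, shallow, 0.0, 0.0, 1.0))
print(response(only_ridge, ridge, shallow, 0.0, 0.0, 1.0))
```

This is why a purely product-weighted neuron can look unresponsive to each feature tested in isolation: the fit needs both components even though neither alone produces spikes.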

Another prominent regularity in the natural world is medial axis structure—the cross-sectional symmetry of elongated structural elements often formed by biological growth processes or constructed according to engineering or aesthetic principles. Such structures can be efficiently described in terms of their extended axis of symmetry and the cross-sectional shape propagated along it42–48. Many TE neurons encode these quantities simultaneously and thus represent the spatial structure of torsos, limbs, columns and beams in terms of smooth surface continuity along the paths defined by their medial axes (Fig. 3). (Late signals in V1 for 2D medial axes may reflect feedback from these TE representations49.) These signals would support perception of spatial details like the lengths, diameters, curvatures, and musculature of a dog’s neck, chest, belly, thigh, etc.
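The axis-plus-cross-section description can be illustrated in 2D: given points along a medial axis and a half-width at each point, the two boundaries are recovered by stepping outward along the local normal to the axis. The sketch below is a simplified 2D analogue (the cited studies describe 3D structures with cross-sections swept along the axis), and the function name is hypothetical.

```python
import math


def boundaries_from_axis(axis, radii):
    """Left and right boundary points of a 2D shape described by its medial axis.

    axis: list of (x, y) points along the symmetry axis; radii: half-width at each point.
    """
    left, right = [], []
    n = len(axis)
    for i, ((x, y), r) in enumerate(zip(axis, radii)):
        # Local tangent from neighboring axis points (one-sided at the two ends).
        x0, y0 = axis[max(i - 1, 0)]
        x1, y1 = axis[min(i + 1, n - 1)]
        tx, ty = x1 - x0, y1 - y0
        length = math.hypot(tx, ty)
        nx, ny = -ty / length, tx / length  # unit normal: tangent rotated 90 degrees
        left.append((x + r * nx, y + r * ny))
        right.append((x - r * nx, y - r * ny))
    return left, right


# A tapering "limb": straight axis, half-width shrinking from 2 to 1.
axis = [(float(i), 0.0) for i in range(5)]
radii = [2.0, 1.75, 1.5, 1.25, 1.0]
left, right = boundaries_from_axis(axis, radii)
print(left)
print(right)
```

The compactness is the point: an entire pair of boundaries is specified by one axis path plus one width profile, and quantities such as length, diameter, and taper can be read directly from those two descriptions.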

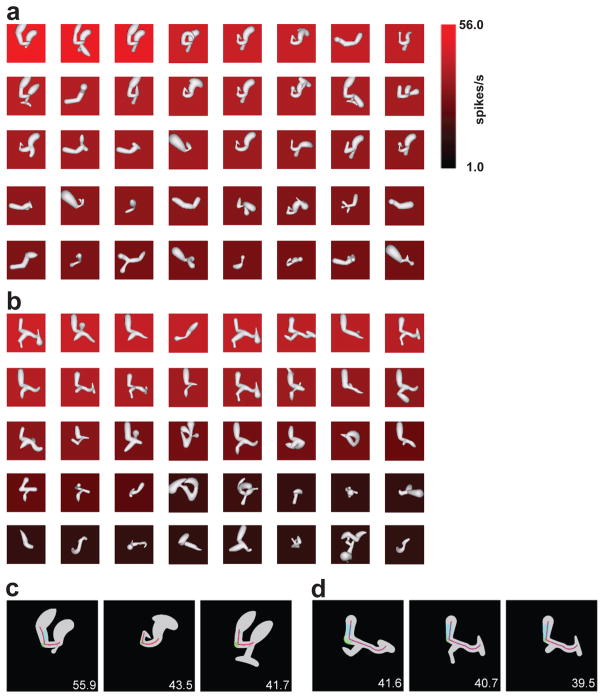

Fig. 3. Transformation of retinotopic information into medial axis coding in TE.

This neuron exemplifies how retinotopic information is recoded in terms of medial symmetry axes (skeletal shape) of elongated, branching structures. (a) Results of an adaptive algorithm driven by spike rates. The response rate for each 3D shape is represented by background color (see scale bar at right), and stimuli are ordered by response rate from top left to bottom right. (b) Results from a simultaneous, independent stimulus lineage driven by the same adaptive algorithm. (c) Best-fit shape model applied to three high-response stimuli from the first lineage. Medial axis fit is represented by the red lines and surface fragment fits by the cyan- and green-tinted regions. (d) Best-fit shape model applied to three high-response stimuli from the second lineage. Adapted from Hung et al. (2012)39.

TE tuning for surface fragments and medial axis components is strikingly consistent across different image cues (shading, disparity; Fig. 2c), across different lighting directions that produce entirely different 2D images (Fig. 2d), across stereoscopic position in depth (Fig. 2e), across 2D position (Fig. 2f), across rotations in the image plane and, to a lesser degree, out-of-plane rotations of objects on the order of 60° (Fig. 2g), and across scale (Fig. 2h). Moreover, responses of most neurons collapse when 3D cues (disparity and shading) are removed38,39,50 (Fig. 2c). Thus, neurons in TE are no longer operating in retinotopic image space but rather in the 3D space of real physical structures.

Object-level relationships: Neural coding of spatial configurations

All of the spatial coding strategies discussed so far leverage some local smoothness or regularity in the natural world to transform retinotopic image space into relational descriptions of points along boundaries, surfaces, and symmetry axes. On larger scales, however, objects comprise entirely different parts with arbitrary spatial relationships and no surface continuity. Even on this larger scale, retinotopic space is transformed into relational signals. This is apparent by at least V4, where larger-scale retinotopic coding begins to give way to object-relative coordinates. V4 neurons that encode boundary orientation and curvature (see above), thus capturing detailed local spatial relationships, are also remarkably sensitive to object-relative position, thus capturing spatial relationships on the whole-object scale27. The V4 example neuron (Fig. 1) tuned for convex curvature pointing to the upper right is also tuned for object-relative positions near the top right (Fig. 1a). In a cluttered environment, this relative spatial tuning is organized around the attended object51,52. As a population, V4 neurons span curvature, orientation, and object-relative position. As a result, V4 population response patterns represent boundary parts and where they occur, and thus the overall spatial configuration of an object53.
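The distinction between retinotopic and object-relative position can be made concrete: if each boundary point is expressed relative to the object's center, translating the whole object across the image changes every retinotopic coordinate but none of the object-relative ones. In the sketch below, the object center is approximated by the mean of the sampled boundary points (a simplifying assumption), and the function names are hypothetical.

```python
import math


def object_relative(points):
    """Express each boundary point relative to the object's center (mean of the points)."""
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    return [(x - cx, y - cy) for x, y in points]


def relative_angle_deg(rel_point):
    """Angular position around the object center: 0 = right, 90 = top (y increasing upward)."""
    return math.degrees(math.atan2(rel_point[1], rel_point[0])) % 360.0


shape = [(0.0, 0.0), (4.0, 0.0), (4.0, 2.0), (3.0, 3.0), (0.0, 2.0)]
moved = [(x + 10.0, y - 5.0) for x, y in shape]  # same shape, different retinotopic position

for p, q in zip(object_relative(shape), object_relative(moved)):
    print(round(relative_angle_deg(p), 1), round(relative_angle_deg(q), 1))
```

Here the vertex at (3, 3) lies to the upper right of the object center in both copies, so a unit tuned to "upper right of object center" would respond the same way to either.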

Further along the ventral pathway, sensitivity to object-relative position remains acute as receptive field sizes increase and retinotopy fades. In addition, by at least TEO, neurons synthesize spatial configurations of multiple, disjoint parts54,55. As a result, their response functions can only be described with equations that combine two or more tuning components, each defined in part by tuning for object-relative position (Fig. 2a). By the final stages in TE, neurons are tuned for 3D spatial configurations of surface fragments and/or medial axis elements38,39 (Figs. 2, 3). Thus, the ventral pathway carries explicit signals for part-part and part-object spatial relationships.

Such signals must underlie our detailed understanding of 3D object structure, e.g., our ability to say that an antique silver teapot has a conical lid on top of a long, narrow neck that flows down into a round bottom, from which protrude an S-shaped spout on one side, a C-shaped handle in the same plane on exactly the opposite side, and four short legs oriented 45° from vertical attached in a square configuration aligned with the spout and handle. Our perception of these structural relationships is precise, not coarse—if one part is even slightly misaligned, we recognize that the teapot is a cheap knockoff or a repaired original. And the percept is relational, not retinotopic—we fully appreciate the configuration of the teapot even as we turn it over to examine it from every angle, producing a confusing stream of retinotopic signals.

As noted above, explicit neural representation of and cognitive access to precise 3D structure are not necessary for recognition and discrimination. This point is beautifully illustrated by face discrimination. Humans and other primates are remarkably expert at discriminating and remembering thousands of faces based on extremely subtle, composite differences in the appearance and configuration of facial features (eyes, brows, nose, mouth, jaws, chin). Neurons in face-processing patches in anterior TE (ML/MF, AM) represent facial appearance so accurately that face photographs can be convincingly reconstructed from their population activity patterns56. ML/MF neurons represent more information about larger-scale spatial configuration (e.g., face width and eye height), while AM neurons represent more information about finer details within features. However, this massive amount of spatial information is not represented with an explicit, easy-to-read code. Instead, neurons exhibit ramp-like tuning along specific directions in a high-dimensional space (on the order of 50D) in which each dimension represents composite changes in many features and configural relationships (Fig. 4)56–58. This is a powerful strategy for discriminating faces that normally differ only in subtle, highly composite ways, and it helps explain our seemingly unlimited capacity to distinguish thousands of essentially similar faces.
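The ramp-like, axis-based code can be sketched as a neuron whose firing rate is a linear function of the projection of a face's feature vector onto one preferred axis in a 50-dimensional face space. A key consequence is that face changes orthogonal to the preferred axis leave the response unchanged, even when they alter many features at once. The random axis, baseline, slope, rectification at zero, and function names below are illustrative assumptions, not parameters from the cited recordings.

```python
import random


def axis_response(face, axis, slope=1.0, baseline=10.0):
    """Firing rate (arbitrary units) that ramps with the face's projection onto one axis."""
    projection = sum(f * a for f, a in zip(face, axis))
    return max(0.0, baseline + slope * projection)  # rectified: rates cannot be negative


def remove_axis_component(v, axis):
    """Return the part of v orthogonal to axis (a change the neuron should ignore)."""
    coef = sum(x * a for x, a in zip(v, axis)) / sum(a * a for a in axis)
    return [x - coef * a for x, a in zip(v, axis)]


rng = random.Random(0)
DIM = 50
axis = [rng.gauss(0.0, 1.0) for _ in range(DIM)]
average_face = [0.0] * DIM

# A large change in many features, but orthogonal to the preferred axis.
orthogonal_change = remove_axis_component([rng.gauss(0.0, 1.0) for _ in range(DIM)], axis)
# A small step along the preferred axis.
along_axis = [0.05 * a for a in axis]

print(axis_response(average_face, axis))
print(axis_response(orthogonal_change, axis))
print(axis_response(along_axis, axis))
```

The same property illustrates the trade-off discussed next: each neuron reports a single composite quantity, so no individual unit provides an explicit signal for any one feature or spatial relationship.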

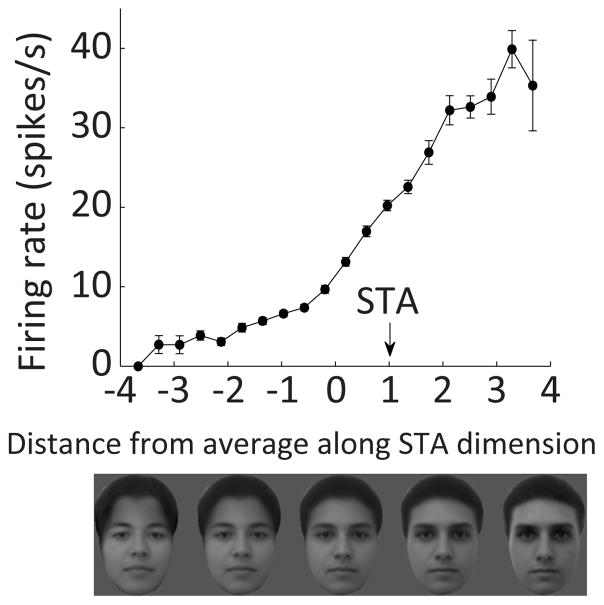

Fig. 4. Neural coding of face structure in highly composite dimensions.

This typical example neuron from face patch AM exhibits ramp-like tuning on a composite dimension along which forehead height, eyebrow shape, eye depth/surround contrast, nose length, mouth height, mouth shape, chin indentation, and face width all change gradually. This coding scheme is highly efficient for discriminating faces, but does not provide explicit, easily read signals for the underlying structure. Adapted, with the authors’ permission, from Chang & Tsao (2017)56.

The price, however, is that the underlying spatial structure of faces is largely buried in the complexity of the coding dimensionality. There are no explicit signals for things that determine facial appearance like the spatial relationship between the eyes and brows. Presumably, as a result, while I can instantly distinguish Scarlett Johansson, Jennifer Lawrence, Amy Adams, Emilia Clarke, and hundreds of other actresses (regardless of hair color and style), I cannot tell you what makes each woman’s face unique without deliberate, laborious measurement. Thus, identification can be superb without explicit neural representation of or cognitive access to the underlying spatial information. This argues that the explicit spatial coding observed for most objects exists to support not just recognition but also cognitive appreciation of structure.

Integration of objects with large-scale space

Large-scale spatial information about objects

The preceding section dealt entirely with spatial information about objects themselves. But objects exist within environments, and their relationships to and interactions with environments are inextricable aspects of object experience. This brings up another conundrum: If the ventral pathway achieves translation and scale invariance of object representations, mustn’t that entail loss of information about object/environment relationships? The simple answer is that the ventral pathway does not throw away information about large-scale environmental space or object position. In fact, information about object position, scale, and orientation appears to increase along the ventral pathway, in parallel with information about categorical identity59.

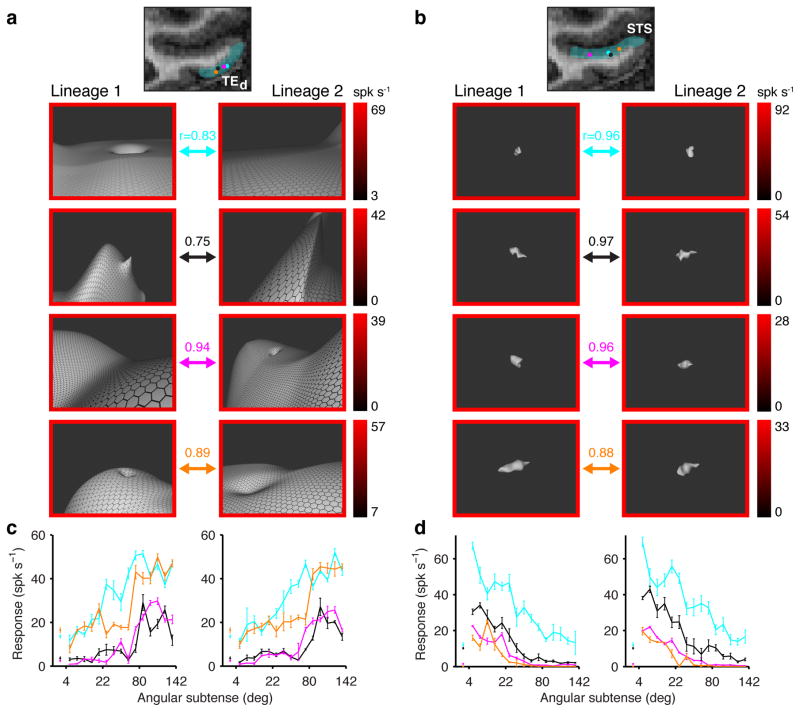

Moreover, the longstanding notion that the ventral pathway processes objects exclusively appears to be incorrect. Object coding is predominant in the uppermost channel through the ventral pathway, which in monkeys occupies the ventral bank of the superior temporal sulcus (STS). Below this channel, however, in TEd (dorsal TE), a majority of neurons respond strongly to large-scale environmental stimuli—landscapes and interiors—and only weakly to object-sized stimuli50 (Fig. 5). These neurons are especially responsive to 3D planes, corners and edges, specifically within the orientation ranges that characterize natural landscapes and floors—the surfaces that most objects occupy in the real world40. The upper/lower distinction in processing scale, surface curvature, and object/place organization is consistent across multiple studies60–68. This organization may be inherited from retinotopic organization of early visual cortex7,8,69.

Fig. 5. Large-scale environmental shape information in TE.

(a) Five representative neurons from channel TEd, on the lateral surface of the inferotemporal gyrus. Recording locations are shown in the coronal plane as colored dots superimposed on an MRI section. In each row, two high-response stimuli are shown for a neuron. Responses are indicated by border colors (see scales at right). In each case, the left-hand stimulus was generated in lineage 1 of a spike-adaptive shape algorithm and the right-hand stimulus was generated in a separate lineage (lineage 2). The arrows connecting each pair of stimuli color-code neuron identity. The majority of TEd neurons (66%), including these examples, were significantly more responsive to large-scale stimuli that exceeded the boundaries of the projection screen, which subtended 77° and 61° in the horizontal and vertical directions, respectively. (b) Five representative neurons from channel STS, in the ventral bank of the superior temporal sulcus. Conventions as in (a). The majority of STS neurons (75%) were more responsive to object-sized stimuli subtending on the order of 10° or less. (c) Average responses across all stimuli in lineage 1 (left) and 2 (right) as a function of stimulus size (maximum angular subtense), color-coded for the five TEd neurons. The correlations between these functions across lineages are given by the r values in (a). (d) Average responses as a function of stimulus size for the five STS neurons. Conventions as in (c). Adapted from Vaziri et al. (2014)50.

The close juxtaposition of object and environment information in TEd is a natural basis for processing object-environment relationships and interactions. Significantly, the major cortical target for TEd is perirhinal cortex (PRC)8, the link between ventral pathway vision and medial temporal lobe memory70–72. (In contrast, STS projects primarily to ventrolateral prefrontal cortex and orbitofrontal cortex, which are associated with short-term object memory and object value.) In some views73–75 (but see Ref. 76), PRC occupies the highest level of a hierarchy of object perception, binding the multiple attributes that define an object into a single neural representation. This binding includes the spatial arrangement of the components of an object77. As discussed below, PRC and its downstream targets carry forward the association between objects and environmental space inherited in part from TEd.

Object-based spatial processing: marking the locations of objects on a cognitive map

In order to serve as a useful guide to behavior and a framework for episodic memory, a cognitive map needs to incorporate representations of the locations of the objects and landmarks that are embedded within it. Current theories propose that the binding of objects to locations occurs in the hippocampus12–16. However, increasing evidence suggests that this binding may occur earlier in the processing stream. PRC, in conjunction with the hippocampus, is required for object-place association tasks, in which rats have to associate reward with a particular object in a particular location, but it is not required for simple discrimination of very different objects78–79. Similarly, LEC and PRC processing is required to associate reward with specific objects within specific spatial contexts, even when simple object recognition and context recognition remain intact80–86. In spontaneous exploration studies, LEC lesions impair the ability to detect a change in the spatial configuration of objects when one of many objects (n > 3) is moved to a novel location87–90. These studies show a clear role for the PRC/LEC pathway in object-space associations, but by themselves they do not reveal whether the PRC and/or LEC explicitly represent the spatial component of this association or whether they merely provide the object component to a downstream region (such as the hippocampus).

Early single-unit recording studies of PRC and LEC suggested that the contributions of these regions might be limited to providing object information. Neurons in PRC and IT of monkeys91–93 and in PRC and LEC of rats94,95 were responsive to 3D objects or 2D images of objects. In both species, the neural responses to objects tended to decrease with repeated exposures. This “response suppression” was proposed to be a neural correlate of recognition memory91,93,96. Other neurons encoded recency and familiarity of items, in line with the putative mnemonic functions of PRC and other medial temporal lobe (MTL) regions92.

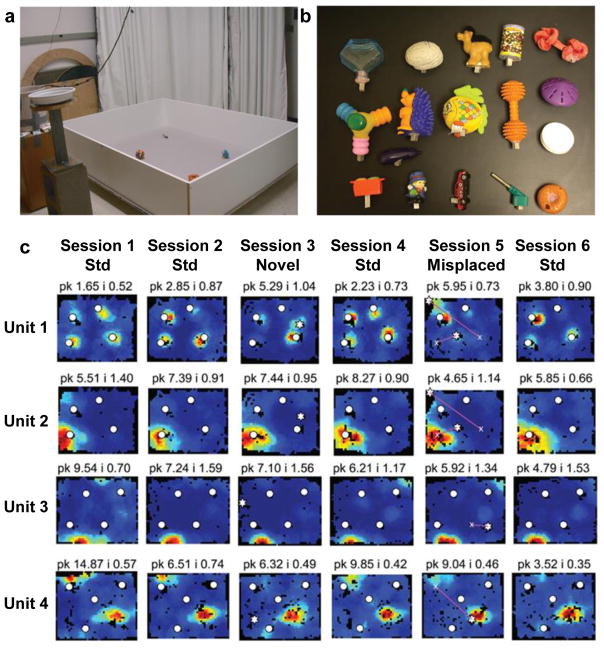

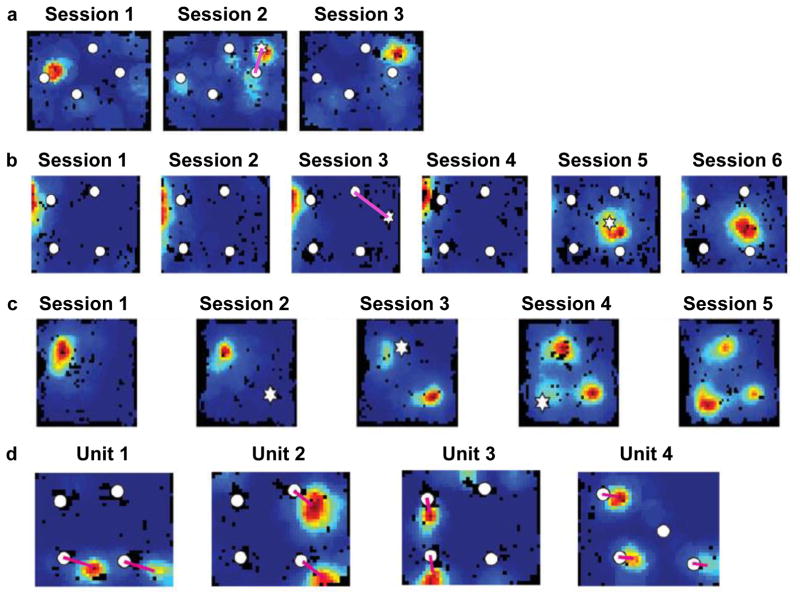

More recent studies addressed the responsiveness of PRC and LEC neurons to 3D objects in freely moving rats97–99 (Fig. 6). Although many PRC and LEC neurons were active when the rats explored the objects, most did not discriminate strongly among the different objects. One possible interpretation is that these neurons do not encode objects per se, but rather the spatial locations of any salient objects that the rat encounters (i.e., they act as spatial pointers or drop pins on a map). Many other interpretations are possible, however (e.g., the cells may be encoding aspects of the exploratory behavior of the rat). A clue comes from studies in which objects are spatially displaced, similar to the spontaneous exploration lesion studies described above. In these experiments, a standard configuration of familiar objects is altered by moving one of the objects to a new location. Deshmukh and Knierim97 reported that a small number of LEC cells fired not only at the new location of the object but also at the remembered location that the object had previously occupied (Fig. 7a). Tsao and colleagues100 studied these rare “object trace” cells in detail and discovered, remarkably, that this object-place memory trace in LEC could last for at least 17 days. Similar object trace activity was reported in neurons of the anterior cingulate cortex101 and hippocampus102 (Fig. 7b,c). Because the objects were no longer present at these locations, the most likely interpretation is that these cells encoded the remembered locations that the objects had previously occupied.

Fig. 6. LEC responses to objects in freely moving rats.

(a) Recording apparatus containing 4 objects. (b) Each row is the firing rate map of a different LEC unit in 6 consecutive recording sessions. Sessions 1, 2, 4, and 6 were sessions with the familiar objects (white circles) in their standard spatial configuration. In Session 3, a novel object (white star) was placed into the arena. In Session 5, one or more objects were moved to novel locations. Unit 1 fired when the rat was in the proximity of each object. Unit 2 had a strong firing field at the location of one object, but when the object was moved in Session 5, the cell continued to fire at the original location. Units 3 and 4 were cells that had specific spatial firing in locations that were never occupied by an object. Unit 1 thus exhibited object-related firing, whereas Units 2–4 showed spatial firing. PRC neurons similar to Unit 1, but not Units 2–4, were reported by Deshmukh et al. (2012)98. Reproduced from Deshmukh & Knierim (2011)97.

Fig. 7. Object-space responses in LEC and hippocampus.

(a–c) Responses to remembered prior locations of objects. (a) An LEC neuron fired when the rat was in the proximity of one object in Session 1, and then fired at multiple objects in Session 2 when one of the objects was moved (magenta line connects old position [circle] and new position [star]). Note that the cell continued to fire weakly at the prior location of the moved object (white circle attached to magenta line). When the object was returned to its initial location in Session 3, the cell fired robustly at the location that the object had occupied in the previous session. From Deshmukh & Knierim (2011)97. (b,c) Two units from the hippocampus that displayed object-location memory traces. Unit b had a place field along the left wall for Sessions 1–4. In Session 5, the cell fired when the rat was near a novel object, and maintained this firing in Session 6 after the novel object was removed. Unit c had a standard place field in Session 1. When a novel object was placed in the arena (Session 2), the cell did not respond, but it developed place fields at the previously occupied locations when the object was moved to new locations in Sessions 3 and 4. When the object was removed entirely (Session 5), the cell continued to fire at the 3 previously occupied locations of the object. From Deshmukh & Knierim (2013)102. (d) Four examples of “landmark vector cells” in the hippocampus. Each cell fires at a specific distance and allocentric bearing (denoted by magenta lines) relative to two or more objects in an environment. From Deshmukh & Knierim (2013)102.
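The landmark vector firing pattern in panel (d) can be sketched as a place field that is repeated at the same allocentric offset (a fixed distance and bearing in room coordinates) from every object in the environment. The Gaussian field shape, field width, peak rate, arena coordinates, and the function itself are illustrative assumptions, not a model fitted to the recorded cells.

```python
import math


def landmark_vector_rate(pos, objects, distance, bearing_deg, field_width=5.0, peak=20.0):
    """Hypothetical firing rate (Hz) of a landmark vector cell at position pos (cm).

    The cell has one Gaussian field per object, each displaced from its object by the
    same vector: `distance` cm at `bearing_deg` degrees counterclockwise from the room's
    +x axis (an allocentric bearing that ignores the rat's heading).
    """
    dx = distance * math.cos(math.radians(bearing_deg))
    dy = distance * math.sin(math.radians(bearing_deg))
    rate = 0.0
    for ox, oy in objects:
        fx, fy = ox + dx, oy + dy  # field center for this object
        d2 = (pos[0] - fx) ** 2 + (pos[1] - fy) ** 2
        rate += peak * math.exp(-d2 / (2 * field_width ** 2))
    return rate


objects = [(20.0, 20.0), (60.0, 40.0)]
# Fields should appear 15 cm in the +y direction (bearing 90 degrees) from each object,
# with little firing at the objects themselves.
for probe in [(20.0, 35.0), (60.0, 55.0), (20.0, 20.0)]:
    print(probe, round(landmark_vector_rate(probe, objects, 15.0, 90.0), 2))
```

Because the offset is defined in room coordinates, moving an object moves its associated field by the same amount, while the other object's field stays put.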

Object-based spatial processing: defining locations relative to local objects

Spatial locations can be defined in multiple ways. Prominent current models of the spatial firing of MEC grid cells and hippocampal place cells emphasize path integration and the computation of distances and directions to extended environmental boundaries103. Spatial locations can also be defined relative to local object landmarks11, a computation that may depend on PRC and LEC given their role in object processing.

However, the literature conflicts on whether PRC/LEC lesions in rodents cause deficits in large-scale spatial tasks and whether cells in these regions show spatial firing properties (see Refs. 104 and 105 for comprehensive reviews of the lesion literature on this issue). Some studies found little evidence of spatial functions in PRC and LEC. On quintessential hippocampus-dependent spatial memory tasks, such as the Morris water maze and the Barnes circular platform, PRC and LEC lesions tend to have modest or no effects83,85,87,106–109 (but see Ref. 110). These results are consistent with early reports that PRC and LEC neurons do not display strong spatial firing when rats forage in an open field98,99,111–114. Furthermore, LEC cells are only weakly modulated by the theta rhythm compared with MEC cells, although some individual LEC cells show modest phase-locking to theta115. Because the theta rhythm in rodents is strongly associated with movement through space, the weak theta signal in LEC suggests that its coding principles differ fundamentally from the highly specific and robust spatial coding of MEC116,117.

Nonetheless, the responsiveness of upstream TEd neurons to landscape-scale scenes50 (as described above) suggests that PRC and LEC might be involved in spatial processing at navigationally relevant spatial scales, at least in some tasks. Consistent with this prediction, PRC lesions cause robust deficits in delayed nonmatch-to-position tasks118–121 (but see Ref. 122), in the radial 8-arm maze85,120,121,123,124, and in contextual fear conditioning108,125,126 (but see Ref. 84). In a particularly compelling demonstration of the contribution of PRC to spatial memory in a plus-maze127, control rats used an allocentric spatial strategy to solve the task (i.e., they chose an arm based on its spatial location in the room), whereas rats with PRC lesions used a response strategy (i.e., they chose an arm based on a left- or right-turn response or on an intramaze floor cue). This shift in strategy indicates that PRC contributes to allocentric spatial processing in intact rats127. Finally, PRC neurons show broad spatial selectivity in a visual-cue discrimination, spatial response task on a figure-8 maze128. These results support the idea that PRC neurons can display a degree of spatial representation and do not respond only to discrete items or objects.

Animals can navigate to goals relative to local landmarks in an environment129,130, and this type of navigation may depend on LEC processing90,131. Consistent with this idea, some LEC neurons show place-field-like responses in environments containing objects. These firing fields can lie at locations distant from the objects, showing that they are spatial in nature rather than responses to attributes of an object97 (Fig. 6b, Units 3 and 4). Such strong place fields have not been reported in environments that lack local objects97,113,114. Nor have they been observed in PRC, although this negative result must be treated with caution: such cells are rare even in LEC (estimated < 10%97) and thus may have been missed in the PRC recordings98. Despite this absence of spatial firing in PRC at the single-unit level, a hierarchical clustering analysis of PRC (and LEC) ensembles, recorded as rats performed a context-dependent object-association task, revealed a significant signal related to spatial location (in addition to stronger signals related to context and objects)132. (Interestingly, a weak object-related signal was also revealed in the space-dominated MEC ensemble. Thus, MEC and LEC/PRC both showed evidence of spatial and object-related activity, but the relative weights of each type of information differed between the two processing streams.)

There is strong evidence of object-relative spatial coding in the hippocampus. Many studies show that hippocampal cells respond to the present (or remembered) locations of objects11,102,133–135. Hippocampal cells can also encode locations defined by objects at a distance. Some hippocampal cells, called landmark vector cells, fire at multiple locations in an environment, each lying at the same distance and allocentric bearing from a different object102 (Fig. 7d). Similarly, some hippocampal cells in bats fire when the bat is at a specific distance and bearing relative to a goal location136. These results unequivocally demonstrate object-relative spatial position coding. Conceivably, the hippocampus derives object-relative positions by combining LEC object-location inputs with MEC distance and direction inputs.
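The landmark-vector description can be made concrete with a toy tuning curve. The Python sketch below is an illustrative assumption, not the model used in the cited studies: each object contributes one Gaussian firing field centred at a fixed distance and allocentric bearing from that object, so a single preferred vector yields one field per object. The function name `landmark_vector_rate` and the parameters `pref_dist`, `pref_bearing`, `sigma`, and `peak` are hypothetical. In the spirit of the speculation above, the object positions stand in for an LEC-like object-location signal and the preferred vector for an MEC-like distance-and-direction signal.

```python
import math


def landmark_vector_rate(pos, objects, pref_dist, pref_bearing, sigma=0.05, peak=1.0):
    """Toy firing rate of a hypothetical landmark vector cell.

    pos: (x, y) position of the animal.
    objects: list of (x, y) object locations.
    pref_dist, pref_bearing: preferred distance and allocentric bearing (rad;
        0 = +x, counter-clockwise positive) of the animal relative to each object.
    sigma: width of each Gaussian firing field, in the same units as pos.
    peak: peak rate of a single field (arbitrary units).
    """
    rate = 0.0
    for ox, oy in objects:
        # Field centre: the point lying at the preferred vector from this object.
        cx = ox + pref_dist * math.cos(pref_bearing)
        cy = oy + pref_dist * math.sin(pref_bearing)
        d2 = (pos[0] - cx) ** 2 + (pos[1] - cy) ** 2
        # Each object contributes one isotropic Gaussian field; fields add.
        rate += peak * math.exp(-d2 / (2 * sigma ** 2))
    return rate


# Usage: two objects; preferred vector 0.1 units due east (bearing 0 rad).
objects = [(0.2, 0.2), (0.8, 0.8)]
print(round(landmark_vector_rate((0.3, 0.2), objects, 0.1, 0.0), 3))  # 1.0, field east of object 1
print(round(landmark_vector_rate((0.9, 0.8), objects, 0.1, 0.0), 3))  # 1.0, field east of object 2
print(round(landmark_vector_rate((0.2, 0.2), objects, 0.1, 0.0), 3))  # 0.135, at object 1 itself
```

Because each field is anchored to an object's current position, moving an object in this sketch moves its field with it. The object-trace responses in Fig. 7a–c, where firing persists at a location an object no longer occupies, would require an additional memory term that this toy omits.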

Rethinking the roles of LEC and MEC

The hippocampus is thought to combine item/object information from the PRC/LEC pathway with spatial/temporal information from the parahippocampal (PHC)/MEC pathway, in order to represent the individual components of an experience within a spatiotemporal context10–16,137–140. This conjunctive representation allows the components to be stored and later retrieved together, to be reconstructed as a coherent, episodic memory. However, as discussed here and argued elsewhere132,141–144, spatial and object processing are already intertwined throughout the ventral stream, and it no longer seems accurate to characterize PRC/LEC as strictly object-related and PHC/MEC as strictly spatial. How then should the functions of the two pathways be described, and what is the precise role of the hippocampus in integrating these pathways?

Because both PRC/LEC and PHC/MEC carry what and where information (albeit to different degrees), a more accurate distinction might concern how that information is used. The MEC, with its dense connections to retrosplenial cortex and presubiculum9, appears to be part of a path-integration-based navigation system that reports the moment-by-moment, allocentric position of the animal (or, under certain conditions when the animal is not moving, the passage of time145). This system requires external sensory input to keep the position signal aligned to the external world (primarily via representations of the environmental borders and head direction, although distal landmarks are also influential). In contrast, the LEC system appears to represent primarily information about the external world, including (but not limited to) spatial information about objects in the environment and the animal’s location relative to these objects. In this view, the MEC might be part of what O’Keefe and Nadel11 called the “internal navigation” system (path integration) and the LEC part of the “external navigation” system, which relies on representations of local landmarks and their spatial relationships (see also Refs. 141,142). Via anatomical crosstalk, these two systems interact to stay calibrated with each other. The hippocampus may be necessary for the rapid, one-trial binding of these representations to each other when they are novel or altered, on a fast time scale relevant for episodic encoding. In addition, through place cell remapping, the hippocampus may be crucial for creating context-specific spatiotemporal representations of environments, and of the events experienced in them, that are necessary for flexible, context-dependent learning and episodic memory.
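The contrast between an internally generated position estimate and its external recalibration can be illustrated with a minimal dead-reckoning sketch in Python. It assumes a hypothetical constant heading error (`heading_bias`) so that the internal estimate drifts, and treats a landmark sighting as an externally supplied position fix (`fixes`) that resets the estimate. The function and parameter names are illustrative assumptions; this is not a model of MEC circuitry, only the arithmetic of path integration plus recalibration.

```python
import math


def path_integrate(start, steps, heading_bias=0.0, fixes=None):
    """Dead-reckon a 2D position from self-motion samples.

    start: (x, y) starting position.
    steps: list of (speed, heading_rad) pairs, one per time step;
        heading 0 = +x, counter-clockwise positive.
    heading_bias: hypothetical constant error (rad) in the heading estimate.
    fixes: optional {step_index: (x, y)} positions supplied by external cues
        (e.g. a landmark sighting); the estimate is reset to these values.
    """
    fixes = fixes or {}
    x, y = start
    track = []
    for i, (speed, heading) in enumerate(steps):
        h = heading + heading_bias          # internal (possibly wrong) heading
        x += speed * math.cos(h)            # integrate displacement
        y += speed * math.sin(h)
        if i in fixes:                      # external input re-anchors the estimate
            x, y = fixes[i]
        track.append((x, y))
    return track


# Usage: 20 steps of 0.05 units heading due east from the origin.
steps = [(0.05, 0.0)] * 20
true_end = path_integrate((0.0, 0.0), steps)[-1]
drifted_end = path_integrate((0.0, 0.0), steps, heading_bias=0.2)[-1]
corrected_end = path_integrate((0.0, 0.0), steps, heading_bias=0.2,
                               fixes={9: (0.5, 0.0)})[-1]
print(math.dist(true_end, drifted_end))    # about 0.20: error without external input
print(math.dist(true_end, corrected_end))  # about 0.10: error after one mid-route fix
```

In this sketch the error grows with distance travelled until an external fix resets it, which is the sense in which a path-integration signal needs sensory input to stay aligned with the world.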

Conclusions

Like many great ideas, the object/space distinction between ventral and dorsal pathways1 has initiated a dialectical process leading to a more complex picture. Thus, while the dorsal pathway clearly emphasizes spatial information, it is now known to carry information about object shape146–148 and object categories149. Moreover, the spatial nature of the dorsal pathway is now viewed in part as a reflection of its role in guiding targeted actions in space6,7.

Here, we have taken the ventral pathway's identification with objects as a basis for examining the extent to which spatial processing is also involved. We have discussed how object shape processing is fundamentally a re-coding of local retinotopic spatial information in terms of common spatial configurations in the real world. This re-coding transforms the redundant, unreadable spatial information in 2D photoreceptor maps into compressed, explicit representations of 3D spatial structure. We have also discussed recent evidence that even large-scale spatial information is carried forward in parallel through the ventral visual pathway, providing a potential basis for perceiving object–scene relationships.

We next discussed how PRC and LEC, considered to be the continuation of the ventral object pathway into medial temporal lobe memory systems, also process spatial information, as demonstrated by lesion effects on spatial tasks. We examined how LEC place fields can be defined by object locations or even remembered object locations. We noted that the representation of space itself in the hippocampus can be organized with respect to landmark objects.

These observations reflect the fundamental nature of the world in which the brain must operate. It is a world in which all things are spatial and most important things are objects. Even at the highest levels of perception and cognition, objects do not become disembodied abstractions characterized only by semantic labels. They remain real things whose detailed meanings are defined by their precise spatial structures and their spatiotemporal interactions with the rest of the world. Likewise, space itself is not experienced as an independent abstraction, but as a dimensionality that organizes and is organized by the ecologically important objects it contains. The ventral and dorsal pathways treat objects and space differently, but they cannot treat them separately.

Acknowledgments

Work from the authors’ laboratories was funded by Public Health Service grants EY024028 (CEC), EY011797 (CEC), NS039456 (JJK), and MH094146 (JJK).

References

- 1. Mishkin M, Ungerleider LG, Macko KA. Object vision and spatial vision: two cortical pathways. Trends Neurosci. 1983;6:414–417. This seminal paper distinguished the dorsal and ventral visual pathways.

- 2. Wilson FA, Scalaidhe SP, Goldman-Rakic PS. Dissociation of object and spatial processing domains in primate prefrontal cortex. Science. 1993;260:1955–1958. doi: 10.1126/science.8316836.

- 3. Kaas JH, Hackett TA. ‘What’ and ‘where’ processing in auditory cortex. Nat Neurosci. 1999;2:1045–1047. doi: 10.1038/15967.

- 4. Romanski LM, Tian B, Fritz J, Mishkin M, Goldman-Rakic PS, Rauschecker JP. Dual streams of auditory afferents target multiple domains in the primate prefrontal cortex. Nat Neurosci. 1999;2:1131–1136. doi: 10.1038/16056.

- 5. McNaughton BL, Leonard B, Chen LL. Cortical-hippocampal interactions and cognitive mapping: a hypothesis based on reintegration of the parietal and inferotemporal pathways for visual processing. Psychobiol. 1989;17:230–235.

- 6. Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends Neurosci. 1992;15:20–25. doi: 10.1016/0166-2236(92)90344-8.

- 7. Kravitz DJ, Saleem KS, Baker CI, Mishkin M. A new neural framework for visuospatial processing. Nat Rev Neurosci. 2011;12:217–230. doi: 10.1038/nrn3008. This comprehensive review reorganizes the dorsal visual pathway in terms of its output functionalities.

- 8. Kravitz DJ, Saleem KS, Baker CI, Ungerleider LG, Mishkin M. The ventral visual pathway: an expanded neural framework for the processing of object quality. Trends Cogn Sci. 2013;17:26–49. doi: 10.1016/j.tics.2012.10.011. This reconsideration of ventral pathway organization reveals greater complexity and diversity in connectivity.

- 9. Witter MP, Amaral DG. In: Paxinos G, editor. The rat nervous system. 3rd ed. Elsevier; Amsterdam: 2004. pp. 635–704.

- 10. Burwell RD. The parahippocampal region: corticocortical connectivity. Ann N Y Acad Sci. 2000;911:25–42. doi: 10.1111/j.1749-6632.2000.tb06717.x. This article summarizes the data in rodents regarding parallel pathways from the perirhinal cortex and postrhinal cortex to the hippocampus, via the lateral and medial entorhinal cortical regions, respectively.

- 11. O’Keefe J, Nadel L. The hippocampus as a cognitive map. Clarendon Press; Oxford: 1978.

- 12. Suzuki WA, Miller EK, Desimone R. Object and place memory in the macaque entorhinal cortex. J Neurophysiol. 1997;78:1062–1081. doi: 10.1152/jn.1997.78.2.1062.

- 13. Gaffan D. Idiothetic input into object-place configuration as the contribution to memory of the monkey and human hippocampus: a review. Exp Brain Res. 1998;123:201–209. doi: 10.1007/s002210050562.

- 14. Manns JR, Eichenbaum H. Evolution of declarative memory. Hippocampus. 2006;16:795–808. doi: 10.1002/hipo.20205.

- 15. Knierim JJ, Lee I, Hargreaves EL. Hippocampal place cells: parallel input streams, subregional processing, and implications for episodic memory. Hippocampus. 2006;16:755–764. doi: 10.1002/hipo.20203.

- 16. Diana RA, Yonelinas AP, Ranganath C. Imaging recollection and familiarity in the medial temporal lobe: a three-component model. Trends Cogn Sci. 2007;11:379–386. doi: 10.1016/j.tics.2007.08.001.

- 17. Felleman DJ, Van Essen DC. Distributed hierarchical processing in the primate cerebral cortex. Cereb Cortex. 1991;1:1–47. doi: 10.1093/cercor/1.1.1-a. This is the most complete and well-known version of the visual system wiring diagram.

- 18. Gross CG, Rocha-Miranda CED, Bender DB. Visual properties of neurons in inferotemporal cortex of the Macaque. J Neurophysiol. 1972;35:96–111. doi: 10.1152/jn.1972.35.1.96.

- 19. Kobatake E, Tanaka K. Neuronal selectivities to complex object features in the ventral visual pathway of the macaque cerebral cortex. J Neurophysiol. 1994;71:856–867. doi: 10.1152/jn.1994.71.3.856.

- 20. Ullman S. Aligning pictorial descriptions: An approach to object recognition. Cognition. 1989;32:193–254. doi: 10.1016/0010-0277(89)90036-x.

- 21. Vetter T, Hurlbert A, Poggio T. View-based models of 3D object recognition: invariance to imaging transformations. Cereb Cortex. 1995;5:261–269. doi: 10.1093/cercor/5.3.261.

- 22. Bülthoff HH, Edelman SY, Tarr MJ. How are three-dimensional objects represented in the brain? Cereb Cortex. 1995;5:247–260. doi: 10.1093/cercor/5.3.247.

- 23. Li N, DiCarlo JJ. Unsupervised natural experience rapidly alters invariant object representation in visual cortex. Science. 2008;321:1502–1507. doi: 10.1126/science.1160028.

- 24. Hubel DH, Wiesel TN. Receptive fields of single neurones in the cat’s striate cortex. J Physiol. 1959;148:574–591. doi: 10.1113/jphysiol.1959.sp006308.

- 25. Hubel DH, Wiesel TN. Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. J Physiol. 1962;160:106–154. doi: 10.1113/jphysiol.1962.sp006837.

- 26. Pasupathy A, Connor CE. Responses to contour features in macaque area V4. J Neurophysiol. 1999;82:2490–2502. doi: 10.1152/jn.1999.82.5.2490.

- 27. Pasupathy A, Connor CE. Shape representation in area V4: position-specific tuning for boundary conformation. J Neurophysiol. 2001;86:2505–2519. doi: 10.1152/jn.2001.86.5.2505.

- 28. Sharpee TO, Kouh M, Reynolds JH. Trade-off between curvature tuning and position invariance in visual area V4. Proc Natl Acad Sci USA. 2013;110:11618–11623. doi: 10.1073/pnas.1217479110.

- 29. Nandy AS, Sharpee TO, Reynolds JH, Mitchell JF. The fine structure of shape tuning in area V4. Neuron. 2013;78:1102–1115. doi: 10.1016/j.neuron.2013.04.016.

- 30. Yau JM, Pasupathy A, Brincat SL, Connor CE. Curvature processing dynamics in macaque area V4. Cereb Cortex. 2012;23:198–209. doi: 10.1093/cercor/bhs004.

- 31. Bushnell BN, Harding PJ, Kosai Y, Pasupathy A. Partial occlusion modulates contour-based shape encoding in primate area V4. J Neurosci. 2011;31:4012–4024. doi: 10.1523/JNEUROSCI.4766-10.2011.

- 32. Kosai Y, El-Shamayleh Y, Fyall AM, Pasupathy A. The role of visual area V4 in the discrimination of partially occluded shapes. J Neurosci. 2014;34:8570–8584. doi: 10.1523/JNEUROSCI.1375-14.2014.

- 33. Oleskiw TD, Pasupathy A, Bair W. Spectral receptive fields do not explain tuning for boundary curvature in V4. J Neurophysiol. 2014;112:2114–2122. doi: 10.1152/jn.00250.2014.

- 34. El-Shamayleh Y, Pasupathy A. Contour curvature as an invariant code for objects in visual area V4. J Neurosci. 2016;36:5532–5543. doi: 10.1523/JNEUROSCI.4139-15.2016.

- 35. Gallant JL, Braun J, Van Essen DC. Selectivity for polar, hyperbolic, and Cartesian gratings in macaque visual cortex. Science. 1993;259:100–103. doi: 10.1126/science.8418487.

- 36. Janssen P, Vogels R, Orban GA. Macaque inferior temporal neurons are selective for disparity-defined three-dimensional shapes. Proc Natl Acad Sci USA. 1999;96:8217–8222. doi: 10.1073/pnas.96.14.8217.

- 37. Janssen P, Vogels R, Orban GA. Three-dimensional shape coding in inferior temporal cortex. Neuron. 2000;27:385–397. doi: 10.1016/s0896-6273(00)00045-3.

- 38. Yamane Y, Carlson ET, Bowman KC, Wang Z, Connor CE. A neural code for three-dimensional object shape in macaque inferotemporal cortex. Nat Neurosci. 2008;11:1352–1360. doi: 10.1038/nn.2202.

- 39. Hung CC, Carlson ET, Connor CE. Medial axis shape coding in macaque inferotemporal cortex. Neuron. 2012;74:1099–1113. doi: 10.1016/j.neuron.2012.04.029.

- 40. Vaziri S, Connor CE. Representation of gravity-aligned scene structure in ventral pathway visual cortex. Curr Biol. 2016;26:766–774. doi: 10.1016/j.cub.2016.01.022.

- 41. Verhoef BE, Vogels R, Janssen P. Inferotemporal cortex subserves three-dimensional structure categorization. Neuron. 2012;73:171–182. doi: 10.1016/j.neuron.2011.10.031.

- 42. Binford TO. Visual perception by computer. Paper presented at IEEE Systems Science and Cybernetics Conference; Miami, FL. 1971.

- 43. Blum H. Biological shape and visual science. J Theor Biol. 1973;38:205–287. doi: 10.1016/0022-5193(73)90175-6.

- 44. Marr D, Nishihara HK. Representation and recognition of the spatial organization of three-dimensional shapes. Proc R Soc Lond B Biol Sci. 1978;200:269–294. doi: 10.1098/rspb.1978.0020.

- 45. Biederman I. Recognition-by-components: a theory of human image understanding. Psychol Rev. 1987;94:115–147. doi: 10.1037/0033-295X.94.2.115.

- 46. Leyton M. A Generative Theory of Shape. Berlin: Springer-Verlag; 2001.

- 47. Kimia BB. On the role of medial geometry in human vision. J Physiol Paris. 2003;97:155–190. doi: 10.1016/j.jphysparis.2003.09.003.

- 48. Feldman J, Singh M. Bayesian estimation of the shape skeleton. Proc Natl Acad Sci USA. 2006;103:18014–18019. doi: 10.1073/pnas.0608811103.

- 49. Lee TS, Mumford D, Romero R, Lamme VA. The role of the primary visual cortex in higher level vision. Vision Res. 1998;38:2429–2454. doi: 10.1016/s0042-6989(97)00464-1.

- 50. Vaziri S, Carlson ET, Wang Z, Connor CE. A channel for 3D environmental shape in anterior inferotemporal cortex. Neuron. 2014;84:55–62. doi: 10.1016/j.neuron.2014.08.043.

- 51. Connor CE, Gallant JL, Preddie DC, Van Essen DC. Responses in area V4 depend on the spatial relationship between stimulus and attention. J Neurophysiol. 1996;75:1306–1308. doi: 10.1152/jn.1996.75.3.1306.

- 52. Connor CE, Preddie DC, Gallant JL, Van Essen DC. Spatial attention effects in macaque area V4. J Neurosci. 1997;17:3201–3214. doi: 10.1523/JNEUROSCI.17-09-03201.1997.

- 53. Pasupathy A, Connor CE. Population coding of shape in area V4. Nat Neurosci. 2002;5:1332–1338. doi: 10.1038/nn972.

- 54. Brincat SL, Connor CE. Underlying principles of visual shape selectivity in posterior inferotemporal cortex. Nat Neurosci. 2004;7:880–886. doi: 10.1038/nn1278.

- 55. Brincat SL, Connor CE. Dynamic shape synthesis in posterior inferotemporal cortex. Neuron. 2006;49:17–24. doi: 10.1016/j.neuron.2005.11.026.

- 56. Chang L, Tsao DY. The Code for Facial Identity in the Primate Brain. Cell. 2017;169:1013–1028. doi: 10.1016/j.cell.2017.05.011. This paradigmatic coding analysis makes a conclusive case for ramp-coding along highly composite linear dimensions in facial structure space.

- 57. Freiwald WA, Tsao DY, Livingstone MS. A face feature space in the macaque temporal lobe. Nat Neurosci. 2009;12:1187–1196. doi: 10.1038/nn.2363.

- 58. Leopold DA, Bondar IV, Giese MA. Norm-based face encoding by single neurons in the monkey inferotemporal cortex. Nature. 2006;442:572–575. doi: 10.1038/nature04951.

- 59. Hong H, Yamins DL, Majaj NJ, DiCarlo JJ. Explicit information for category-orthogonal object properties increases along the ventral stream. Nat Neurosci. 2016;19:613–622. doi: 10.1038/nn.4247. Hong et al. show that more information about position, etc. can be decoded from a population of IT neurons than from an equal number of V4 neurons. This might reflect the smaller receptive fields of V4 neurons, which necessarily carry information about less visual space, but in any case demonstrates that position information is not lost in IT. This does not mean that it is retinotopic information, which seems unlikely given the scale of IT receptive fields. Instead, it seems likely to be information about position relative to the (comparatively small) viewing aperture, the fixation point, or background features.

- 60. Konkle T, Oliva A. A real-world size organization of object responses in occipitotemporal cortex. Neuron. 2012;74:1114–1124. doi: 10.1016/j.neuron.2012.04.036. This study shows that object representations in human ventral pathway exhibit a small-to-large dorsal/ventral gradient, based on perceived size rather than retinotopic extent.

- 61. Srihasam K, Vincent JL, Livingstone MS. Novel domain formation reveals proto-architecture in inferotemporal cortex. Nat Neurosci. 2014;17:1776–1783. doi: 10.1038/nn.3855. This paper shows that object-value training in young monkeys produces dedicated processing regions in ventral pathway cortex organized by the shape characteristics of the learned objects.

- 62. Ponce CR, Hartmann TS, Livingstone MS. End-stopping predicts curvature tuning along the ventral stream. J Neurosci. 2017;37:648–659. doi: 10.1523/JNEUROSCI.2507-16.2016.

- 63. Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997. This landmark paper initiated the study of category-specific patches in ventral pathway cortex with the discovery of the fusiform face area.

- 64. Kornblith S, Cheng X, Ohayon S, Tsao DY. A network for scene processing in the macaque temporal lobe. Neuron. 2013;79:766–781. doi: 10.1016/j.neuron.2013.06.015. This group used fMRI and microelectrode recording to study place processing in a patch of monkey occipitotemporal visual cortex that could correspond to the human parahippocampal place area.

- 65. Epstein R, Kanwisher N. A cortical representation of the local visual environment. Nature. 1998;392:598–601. doi: 10.1038/33402. This paper was the first to report the existence of the parahippocampal place area in human visual cortex.

- 66. Lafer-Sousa R, Conway BR. Parallel, multi-stage processing of colors, faces and shapes in macaque inferior temporal cortex. Nat Neurosci. 2013;16:1870–1878. doi: 10.1038/nn.3555.

- 67. Verhoef BE, Bohon KS, Conway BR. Functional architecture for disparity in macaque inferior temporal cortex and its relationship to the architecture for faces, color, scenes, and visual field. J Neurosci. 2015;35:6952–6968. doi: 10.1523/JNEUROSCI.5079-14.2015.

- 68. Lafer-Sousa R, Conway BR, Kanwisher NG. Color-biased regions of the ventral visual pathway lie between face- and place-selective regions in humans, as in macaques. J Neurosci. 2016;36:1682–1697. doi: 10.1523/JNEUROSCI.3164-15.2016.

- 69. Arcaro MJ, Livingstone MS. Retinotopic Organization of Scene Areas in Macaque Inferior Temporal Cortex. bioRxiv. 2017:131409. doi: 10.1523/JNEUROSCI.0569-17.2017.

- 70. Scoville WB, Milner B. Loss of recent memory after bilateral hippocampal lesions. J Neurol Neurosurg Psychiatry. 1957;20:11–21. doi: 10.1136/jnnp.20.1.11. The classic case report of the famous amnesic patient H.M.

- 71. Meunier M, Bachevalier J, Mishkin M, Murray EA. Effects on visual recognition of combined and separate ablations of the entorhinal and perirhinal cortex in rhesus monkeys. J Neurosci. 1993;13:5418–5432. doi: 10.1523/JNEUROSCI.13-12-05418.1993. This and the following reference provided strong evidence that damage to the PRC, not the hippocampus proper, was the primary cause of the mnemonic deficits in the DNMS task of visual recognition memory.

- 72. Meunier M, Hadfield W, Bachevalier J, Murray EA. Effects of rhinal cortex lesions combined with hippocampectomy on visual recognition memory in rhesus monkeys. J Neurophysiol. 1996;75:1190–1205. doi: 10.1152/jn.1996.75.3.1190.

- 73. Murray EA, Bussey TJ. Perceptual-mnemonic functions of the perirhinal cortex. Trends Cogn Sci. 1999;3:142–151. doi: 10.1016/s1364-6613(99)01303-0. This article proposes that the perirhinal cortex should be viewed as processing high-order perceptual information as well as memory.

- 74. Bussey TJ, Saksida LM, Murray EA. The perceptual-mnemonic/feature conjunction model of perirhinal cortex function. Q J Exp Psychol B. 2005;58:269–282. doi: 10.1080/02724990544000004.

- 75. Murray EA, Wise SP. Why is there a special issue on perirhinal cortex in a journal called hippocampus? The perirhinal cortex in historical perspective. Hippocampus. 2012;22:1941–1951. doi: 10.1002/hipo.22055. This paper puts forth the provocative argument that the perirhinal cortex should not be considered a component of the medial temporal lobe memory system but rather a part of sensory neocortex.

- 76. Suzuki WA. Perception and the medial temporal lobe: evaluating the current evidence. Neuron. 2009;61:657–666. doi: 10.1016/j.neuron.2009.02.008.

- 77. Norman G, Eacott MJ. Dissociable effects of lesions to the perirhinal cortex and the postrhinal cortex on memory for context and objects in rats. Behav Neurosci. 2005;119:557–566. doi: 10.1037/0735-7044.119.2.557.

- 78. Jo YS, Lee I. Perirhinal cortex is necessary for acquiring, but not for retrieving object-place paired association. Learn Mem. 2010;17:97–103. doi: 10.1101/lm.1620410.

- 79. Jo YS, Lee I. Disconnection of the hippocampal-perirhinal cortical circuits severely disrupts object-place paired associative memory. J Neurosci. 2010;30:9850–9858. doi: 10.1523/JNEUROSCI.1580-10.2010.

- 80. Wilson DI, et al. Lateral entorhinal cortex is critical for novel object-context recognition. Hippocampus. 2013;23:352–366. doi: 10.1002/hipo.22095.

- 81. Wilson DI, Watanabe S, Milner H, Ainge JA. Lateral entorhinal cortex is necessary for associative but not nonassociative recognition memory. Hippocampus. 2013;23:1280–1290. doi: 10.1002/hipo.22165.

- 82.Hunsaker MR, Chen V, Tran GT, Kesner RP. The medial and lateral entorhinal cortex both contribute to contextual and item recognition memory: a test of the binding of items and context model. Hippocampus. 2013;23:380–391. doi: 10.1002/hipo.22097. [DOI] [PubMed] [Google Scholar]

- 83.Stouffer EM, Klein JE. Lesions of the lateral entorhinal cortex disrupt non-spatial latent learning but spare spatial latent learning in the rat (Rattus norvegicus) Acta Neurobiol Exp (Wars) 2013;73:430–437. doi: 10.55782/ane-2013-1949. [DOI] [PubMed] [Google Scholar]

- 84.Heimer-McGinn VR, Poeta DL, Aghi K, Udawatta M, Burwell RD. Disconnection of the Perirhinal and Postrhinal Cortices Impairs Recognition of Objects in Context But Not Contextual Fear Conditioning. J Neurosci. 2017;37:4819–4829. doi: 10.1523/JNEUROSCI.0254-17.2017.

- 85.Liu P, Bilkey DK. The effect of excitotoxic lesions centered on the hippocampus or perirhinal cortex in object recognition and spatial memory tasks. Behav Neurosci. 2001;115:94–111. doi: 10.1037/0735-7044.115.1.94.

- 86.Bachevalier J, Nemanic S. Memory for spatial location and object-place associations are differently processed by the hippocampal formation, parahippocampal areas TH/TF and perirhinal cortex. Hippocampus. 2008;18:64–80. doi: 10.1002/hipo.20369.

- 87.Van Cauter T, et al. Distinct roles of medial and lateral entorhinal cortex in spatial cognition. Cereb Cortex. 2013;23:451–459. doi: 10.1093/cercor/bhs033.

- 88.Hunsaker MR, Mooy GG, Swift JS, Kesner RP. Dissociations of the medial and lateral perforant path projections into dorsal DG, CA3, and CA1 for spatial and nonspatial (visual object) information processing. Behav Neurosci. 2007;121:742–750. doi: 10.1037/0735-7044.121.4.742.

- 89.Rodo C, Sargolini F, Save E. Processing of spatial and non-spatial information in rats with lesions of the medial and lateral entorhinal cortex: Environmental complexity matters. Behav Brain Res. 2017;320:200–209. doi: 10.1016/j.bbr.2016.12.009.

- 90.Kuruvilla MV, Ainge JA. Lateral entorhinal cortex lesions impair local spatial frameworks. Front Syst Neurosci. 2017;11:30. doi: 10.3389/fnsys.2017.00030.

- 91.Brown MW, Wilson FA, Riches IP. Neuronal evidence that inferomedial temporal cortex is more important than hippocampus in certain processes underlying recognition memory. Brain Res. 1987;409:158–162. doi: 10.1016/0006-8993(87)90753-0. This paper showed the phenomenon of response suppression in inferomedial temporal cortex, in which neural responses to novel stimuli decrease with repeated exposures.

- 92.Fahy FL, Riches IP, Brown MW. Neuronal activity related to visual recognition memory: long-term memory and the encoding of recency and familiarity information in the primate anterior and medial inferior temporal and rhinal cortex. Exp Brain Res. 1993;96:457–472. doi: 10.1007/BF00234113.

- 93.Miller EK, Li L, Desimone R. Activity of neurons in anterior inferior temporal cortex during a short-term memory task. J Neurosci. 1993;13:1460–1478. doi: 10.1523/JNEUROSCI.13-04-01460.1993.

- 94.Zhu XO, Brown MW, Aggleton JP. Neuronal signalling of information important to visual recognition memory in rat rhinal and neighbouring cortices. Eur J Neurosci. 1995;7:753–765. doi: 10.1111/j.1460-9568.1995.tb00679.x.

- 95.Wan H, Aggleton JP, Brown MW. Different contributions of the hippocampus and perirhinal cortex to recognition memory. J Neurosci. 1999;19:1142–1148. doi: 10.1523/JNEUROSCI.19-03-01142.1999.

- 96.Young BJ, Fox GD, Eichenbaum H. Correlates of hippocampal complex-spike cell activity in rats performing a nonspatial radial maze task. J Neurosci. 1994;14:6553–6563. doi: 10.1523/JNEUROSCI.14-11-06553.1994.

- 97.Deshmukh SS, Knierim JJ. Representation of non-spatial and spatial information in the lateral entorhinal cortex. Front Behav Neurosci. 2011;5:69. doi: 10.3389/fnbeh.2011.00069.

- 98.Deshmukh SS, Johnson JL, Knierim JJ. Perirhinal cortex represents nonspatial, but not spatial, information in rats foraging in the presence of objects: comparison with lateral entorhinal cortex. Hippocampus. 2012;22:2045–2058. doi: 10.1002/hipo.22046.

- 99.Burke SN, et al. Representation of three-dimensional objects by the rat perirhinal cortex. Hippocampus. 2012;22:2032–2044. doi: 10.1002/hipo.22060.

- 100.Tsao A, Moser MB, Moser EI. Traces of experience in the lateral entorhinal cortex. Curr Biol. 2013;23:399–405. doi: 10.1016/j.cub.2013.01.036.

- 101.Weible AP, Rowland DC, Monaghan CK, Wolfgang NT, Kentros CG. Neural correlates of long-term object memory in the mouse anterior cingulate cortex. J Neurosci. 2012;32:5598–5608. doi: 10.1523/JNEUROSCI.5265-11.2012.

- 102.Deshmukh SS, Knierim JJ. Influence of local objects on hippocampal representations: Landmark vectors and memory. Hippocampus. 2013;23:253–267. doi: 10.1002/hipo.22101.

- 103.Giocomo LM, Moser MB, Moser EI. Computational models of grid cells. Neuron. 2011;71:589–603. doi: 10.1016/j.neuron.2011.07.023.

- 104.Aggleton JP, Kyd RJ, Bilkey DK. When is the perirhinal cortex necessary for the performance of spatial memory tasks? Neurosci Biobehav Rev. 2004;28:611–624. doi: 10.1016/j.neubiorev.2004.08.007.

- 105.Kealy J, Commins S. The rat perirhinal cortex: A review of anatomy, physiology, plasticity, and function. Prog Neurobiol. 2011;93:522–548. doi: 10.1016/j.pneurobio.2011.03.002.

- 106.Ferbinteanu J, Holsinger RM, McDonald RJ. Lesions of the medial or lateral perforant path have different effects on hippocampal contributions to place learning and on fear conditioning to context. Behav Brain Res. 1999;101:65–84. doi: 10.1016/s0166-4328(98)00144-2.

- 107.Wiig KA, Bilkey DK. The effects of perirhinal cortical lesions on spatial reference memory in the rat. Behav Brain Res. 1994;63:101–109. doi: 10.1016/0166-4328(94)90055-8.

- 108.Burwell RD, Saddoris MP, Bucci DJ, Wiig KA. Corticohippocampal contributions to spatial and contextual learning. J Neurosci. 2004;24:3826–3836. doi: 10.1523/JNEUROSCI.0410-04.2004.

- 109.Nelson AJ, Olarte-Sanchez CM, Amin E, Aggleton JP. Perirhinal cortex lesions that impair object recognition memory spare landmark discriminations. Behav Brain Res. 2016;313:255–259. doi: 10.1016/j.bbr.2016.07.031.

- 110.Stranahan AM, Salas-Vega S, Jiam NT, Gallagher M. Interference with reelin signaling in the lateral entorhinal cortex impairs spatial memory. Neurobiol Learn Mem. 2011;96:150–155. doi: 10.1016/j.nlm.2011.03.009.

- 111.Burwell RD, Shapiro ML, O’Malley MT, Eichenbaum H. Positional firing properties of perirhinal cortex neurons. Neuroreport. 1998;9:3013–3018. doi: 10.1097/00001756-199809140-00017.

- 112.Hargreaves EL, Rao G, Lee I, Knierim JJ. Major dissociation between medial and lateral entorhinal input to dorsal hippocampus. Science. 2005;308:1792–1794. doi: 10.1126/science.1110449.

- 113.Yoganarasimha D, Rao G, Knierim JJ. Lateral entorhinal neurons are not spatially selective in cue-rich environments. Hippocampus. 2011;21:1363–1374. doi: 10.1002/hipo.20839.

- 114.Zironi I, Iacovelli P, Aicardi G, Liu P, Bilkey DK. Prefrontal cortex lesions augment the location-related firing properties of area TE/perirhinal cortex neurons in a working memory task. Cereb Cortex. 2001;11:1093–1100. doi: 10.1093/cercor/11.11.1093.

- 115.Deshmukh SS, Yoganarasimha D, Voicu H, Knierim JJ. Theta modulation in the medial and the lateral entorhinal cortex. J Neurophysiol. 2010;104:994–1006. doi: 10.1152/jn.01141.2009.

- 116.Hafting T, Fyhn M, Molden S, Moser MB, Moser EI. Microstructure of a spatial map in the entorhinal cortex. Nature. 2005;436:801–806. doi: 10.1038/nature03721.

- 117.Savelli F, Luck JD, Knierim JJ. Framing of grid cells within and beyond navigation boundaries. Elife. 2017;6. doi: 10.7554/eLife.21354.

- 118.Wiig KA, Bilkey DK. Perirhinal cortex lesions in rats disrupt performance in a spatial DNMS task. Neuroreport. 1994;5:1405–1408.

- 119.Wiig KA, Burwell RD. Memory impairment on a delayed non-matching-to-position task after lesions of the perirhinal cortex in the rat. Behav Neurosci. 1998;112:827–838. doi: 10.1037//0735-7044.112.4.827.

- 120.Liu P, Bilkey DK. Excitotoxic lesions centered on perirhinal cortex produce delay-dependent deficits in a test of spatial memory. Behav Neurosci. 1998;112:512–524. doi: 10.1037//0735-7044.112.3.512.

- 121.Liu P, Bilkey DK. The effect of excitotoxic lesions centered on the perirhinal cortex in two versions of the radial arm maze task. Behav Neurosci. 1999;113:672–682. doi: 10.1037//0735-7044.113.4.672.

- 122.Ennaceur A, Neave N, Aggleton JP. Neurotoxic lesions of the perirhinal cortex do not mimic the behavioural effects of fornix transection in the rat. Behav Brain Res. 1996;80:9–25. doi: 10.1016/0166-4328(96)00006-x.

- 123.Liu P, Bilkey DK. Lesions of perirhinal cortex produce spatial memory deficits in the radial maze. Hippocampus. 1998;8:114–121. doi: 10.1002/(SICI)1098-1063(1998)8:2<114::AID-HIPO3>3.0.CO;2-L.

- 124.Otto T, Wolf D, Walsh TJ. Combined lesions of perirhinal and entorhinal cortex impair rats’ performance in two versions of the spatially guided radial-arm maze. Neurobiol Learn Mem. 1997;68:21–31. doi: 10.1006/nlme.1997.3778.

- 125.Bucci DJ, Phillips RG, Burwell RD. Contributions of postrhinal and perirhinal cortex to contextual information processing. Behav Neurosci. 2000;114:882–894. doi: 10.1037//0735-7044.114.5.882.

- 126.Bucci DJ, Saddoris MP, Burwell RD. Contextual fear discrimination is impaired by damage to the postrhinal or perirhinal cortex. Behav Neurosci. 2002;116:479–488.

- 127.Ramos JMJ. Perirhinal cortex involvement in allocentric spatial learning in the rat: Evidence from doubly marked tasks. Hippocampus. 2017;27:507–517. doi: 10.1002/hipo.22707.

- 128.Bos JJ, et al. Perirhinal firing patterns are sustained across large spatial segments of the task environment. Nat Commun. 2017;8:15602. doi: 10.1038/ncomms15602.

- 129.Collett TS, Cartwright BA, Smith BA. Landmark learning and visuo-spatial memories in gerbils. J Comp Physiol A. 1986;158:835–851. doi: 10.1007/BF01324825. This study shows that animals can learn to search for food at locations defined by a vector relationship to individual landmarks.

- 130.Biegler R, Morris RG. Landmark stability is a prerequisite for spatial but not discrimination learning. Nature. 1993;361:631–633. doi: 10.1038/361631a0.

- 131.Neunuebel JP, Yoganarasimha D, Rao G, Knierim JJ. Conflicts between local and global spatial frameworks dissociate neural representations of the lateral and medial entorhinal cortex. J Neurosci. 2013;33:9246–9258.

- 132.Keene CS, et al. Complementary Functional Organization of Neuronal Activity Patterns in the Perirhinal, Lateral Entorhinal, and Medial Entorhinal Cortices. J Neurosci. 2016;36:3660–3675. doi: 10.1523/JNEUROSCI.4368-15.2016.

- 133.Manns JR, Eichenbaum H. A cognitive map for object memory in the hippocampus. Learn Mem. 2009;16:616–624. doi: 10.1101/lm.1484509.

- 134.Burke SN, Maurer AP, Nematollahi S, Uprety AR, Wallace JL, Barnes CA. The influence of objects on place field expression and size in distal CA1. Hippocampus. 2011;21:783–801. doi: 10.1002/hipo.20929.

- 135.Rivard B, Li Y, Lenck-Santini PP, Poucet B, Muller RU. Representation of objects in space by two classes of hippocampal pyramidal cells. J Gen Physiol. 2004;124:9–25. doi: 10.1085/jgp.200409015.

- 136.Sarel A, Finkelstein A, Las L, Ulanovsky N. Vectorial representation of spatial goals in the hippocampus of bats. Science. 2017;355:176–180. doi: 10.1126/science.aak9589.

- 137.Eichenbaum H, Yonelinas AP, Ranganath C. The medial temporal lobe and recognition memory. Annu Rev Neurosci. 2007;30:123–152. doi: 10.1146/annurev.neuro.30.051606.094328.

- 138.Maass A, Berron D, Libby LA, Ranganath C, Duzel E. Functional subregions of the human entorhinal cortex. Elife. 2015;4. doi: 10.7554/eLife.06426. This study provides evidence for a functional parcellation of the medial and lateral entorhinal cortex in humans, similar to that shown in rodents.

- 139.Schultz H, Sommer T, Peters J. Direct evidence for domain-sensitive functional subregions in human entorhinal cortex. J Neurosci. 2012;32:4716–4723. doi: 10.1523/JNEUROSCI.5126-11.2012.

- 140.Reagh ZM, Yassa MA. Object and spatial mnemonic interference differentially engage lateral and medial entorhinal cortex in humans. Proc Natl Acad Sci U S A. 2014;111:E4264–73. doi: 10.1073/pnas.1411250111. This paper demonstrates a functional space vs. object dissociation between putative medial and lateral entorhinal cortex regions in the human.

- 141.Lisman JE. Role of the dual entorhinal inputs to hippocampus: a hypothesis based on cue/action (non-self/self) couplets. Prog Brain Res. 2007;163:615–625. doi: 10.1016/S0079-6123(07)63033-7.

- 142.Knierim JJ, Neunuebel JP, Deshmukh SS. Functional correlates of the lateral and medial entorhinal cortex: objects, path integration and local-global reference frames. Philos Trans R Soc Lond B Biol Sci. 2013;369:20130369. doi: 10.1098/rstb.2013.0369.

- 143.Furtak SC, Ahmed OJ, Burwell RD. Single neuron activity and theta modulation in postrhinal cortex during visual object discrimination. Neuron. 2012;76:976–988. doi: 10.1016/j.neuron.2012.10.039.