Abstract

Objective

Several neuroimaging studies have demonstrated that the ventral temporal cortex contains specialized regions that process visual stimuli. This study investigated the spatial and temporal dynamics of electrocorticographic (ECoG) responses to different types and colors of visual stimulation that were presented to four human participants, and demonstrated a real-time decoder that detects and discriminates responses to untrained natural images.

Approach

ECoG signals from the participants were recorded while they were shown colored and greyscale versions of seven types of visual stimuli (images of faces, objects, bodies, line drawings, digits, and kanji and hiragana characters), resulting in 14 classes for discrimination (experiment I). Additionally, a real-time system asynchronously classified ECoG responses to faces, kanji and black screens presented via a monitor (experiment II), or to natural scenes (i.e., the face of an experimenter, natural images of faces and kanji, and a mirror) (experiment III). Outcome measures in all experiments included the discrimination performance across types based on broadband γ activity.

Main Results

Experiment I demonstrated an offline classification accuracy of 72.9% when discriminating among the seven types (without color separation). Further discrimination of grey versus colored images reached an accuracy of 67.1%. Discriminating all colors and types (14 classes) yielded an accuracy of 52.1%. In experiments II and III, the real-time decoder correctly detected 73.7% of responses to face, kanji and black computer stimuli and 74.8% of responses to presented natural scenes.

Significance

Seven different types and their color information (either grey or color) could be detected and discriminated using broadband γ activity. Discrimination performance was highest for combined spatial-temporal information. The discrimination of stimulus color information provided the first ECoG-based evidence for color-related population-level cortical broadband γ responses in humans. Stimulus categories can be detected by their ECoG responses in real time within 500 ms with respect to stimulus onset.

1. Introduction

Real-time detection and discrimination of visual perception could lead to improved human-computer interfaces, and may also provide the foundations for new communication tools for people with serious neurological disorders such as amyotrophic lateral sclerosis (ALS).

Substantial research based primarily on functional magnetic resonance imaging (fMRI) has shown that categorization of visual perception is implemented by the brain across different regions on the ventral temporal cortex. Most notably, areas on or around the fusiform gyrus are well known to process face stimuli (Collins and Olson, 2014; Halgren et al., 1999; Kadosh and Johnson, 2007; Kanwisher et al., 1997), and can be used to discriminate visual stimuli of different categories (Grill-Spector and Weiner, 2014). The left fusiform gyrus is known to process visually presented words (Cohen et al., 2000; McCandliss et al., 2003) and the inferior temporal gyrus has been shown to play an important role in recognition of numerals (Shum et al., 2013).

The neural basis of face perception has also been investigated with electrocorticographic (ECoG) recordings. Initial work in this area investigated ECoG evoked responses to faces versus scrambled faces (Allison et al., 1994), faces versus non-faces (Allison et al., 1999), and more diverse stimuli including faces versus parts of faces versus scaled and rotated faces (McCarthy et al., 1999) and faces versus bodies (Engell and McCarthy, 2014a). In addition to investigating traditional evoked potentials, whose physiological origin is complex and unresolved (Kam et al., 2016; Makeig et al., 2002; Mazaheri and Jensen, 2006, 2008), other studies have suggested that ECoG activity in the broadband γ (70–170 Hz) range is a general indicator of cortical population-level activity during auditory (Crone et al., 2001; Edwards et al., 2005; Potes et al., 2014, 2012), language (Chang et al., 2011; Edwards et al., 2010, 2009; Kubanek et al., 2013; Leuthardt et al., 2012; Pei, Barbour, Leuthardt and Schalk, 2011; Pei, Leuthardt, Gaona, Brunner, Wolpaw and Schalk, 2011), sensori-motor (Crone et al., 1998; Kubanek et al., 2009; Miller et al., 2007; Schalk et al., 2007; Wang et al., 2012), attention (Gunduz et al., 2011, 2012; Ray et al., 2008), and memory (Jensen et al., 2007; Maris et al., 2011; Sederberg et al., 2007; Tort et al., 2008; van Vugt et al., 2010) tasks. Physiologically, broadband γ has been shown to be a direct reflection of the average firing rate of neurons directly underneath the electrode (Manning et al., 2009; Miller et al., 2009; Ray and Maunsell, 2011; Whittingstall and Logothetis, 2009), and has been shown to drive the BOLD signal identified using fMRI (Engell et al., 2012; Jacques et al., 2016; Logothetis et al., 2001; Mukamel et al., 2005; Niessing et al., 2005). 
Hence, more recent studies of visual perception investigated ECoG broadband responses to faces and other objects (Engell and McCarthy, 2011, 2014a,b; Ghuman et al., 2014; Lachaux et al., 2005; Tsuchiya et al., 2008), and used them to predict the N200 evoked response (Engell and McCarthy, 2011), or to predict the onset and identity of visual stimuli (Miller et al., 2016).

Different studies investigated the degree to which faces or other objects can be decoded from brain signals in individual trials. These offline studies reported detection performance of 85% for recognized faces on the ventral temporal cortex (VTC) (Tsuchiya et al., 2008), 90.4% for faces and objects (Gerber et al., 2016), 96% for faces and houses (Miller et al., 2016), and about 60% for animals, chairs, faces, fruits and vehicles (Liu et al., 2009). Another study reported 69% online accuracy in a target selection task of two overlaying images (Cerf et al., 2010). ECoG’s high signal-to-noise ratio even supports significant discrimination of two different faces or two different expressions of one face in single trials (Ghuman et al., 2014). One study decoded twelve categories (excluding faces) during an object naming task with a mean rank accuracy of 76% (i.e., in a list of 100 objects, ranked by their probability to be selected by the classifier, the target object appears on position 24 on average) with a chance level of 50% (Rupp et al., 2017). Another study decoded 24 different categories with an accuracy of 25% (chance level 4.2%) (Majima et al., 2014).

The present study extends this large body of work via three experiments that decode type and color information in experiment I (offline), and (in real time) decode different computer-based stimuli in experiment II and natural image stimuli in experiment III. Specifically, ECoG signals were recorded in four patients while they were shown both color and greyscale versions of seven different types of visual stimuli (photos of faces, objects and bodies, images of line drawings and digits, and kanji and hiragana characters), thus creating a total of 14 stimulus classes. Experiment I investigated the spatial and temporal activity reflecting responses to visual stimulation in terms of discrimination performance at individual instants and sites, and classified across all types and colors in single trials. In addition, a real-time system was implemented to identify presented faces or kanji characters on a computer screen (experiment II), natural scenes with real faces (i.e., the faces of two experimenters and a mirror) and printed faces and kanji characters (experiment III).

2. Methods

2.1. Subjects

Four patients with epilepsy at Asahikawa Medical University (A and D) and The University of Tokyo Hospital (B and C) participated in this study. Each patient was temporarily implanted with subdural electrode grids to localize seizure foci and underwent neuro-monitoring prior to resective brain surgery. The grids consisted of platinum electrodes with an exposed diameter of 1.5–3.0 mm and an inter-electrode distance of 5–10 mm. After grid placement, each subject had postoperative computed tomography (CT) imaging to identify electrode locations in conjunction with preoperative magnetic resonance imaging (MRI). Table 1 provides an overview of the subjects and their clinical profiles. The study was approved by the institutional review boards of Asahikawa Medical University and The University of Tokyo Hospital. All subjects gave informed consent prior to the experiment.

Table 1.

Clinical profiles of the four subjects. The IQ values in the table represent combined verbal and performance IQ scores. Handedness (H) was obtained and language lateralization (LL) was defined using Wada tests. “# of elec.” refers to the total number of electrode contacts in each patient. Subjects participated in up to three experiments (EXP).

| Subject | Age | Sex | IQ | H | LL | Seizure focus | Covered hemisphere | # of elec. | EXP |
|---|---|---|---|---|---|---|---|---|---|
| A | 26 | M | 96 | R | L | Right temporal | Bilateral | 188 | 1–3 |
| B | 20 | F | 74 | R | L | Left temporal | Left | 254 | 1 |
| C | 18 | M | 122 | R | L | Occipital | Bilateral | 254 | 1 |
| D | 29 | F | 85 | R | L | Right | Right | 144 | 2 |

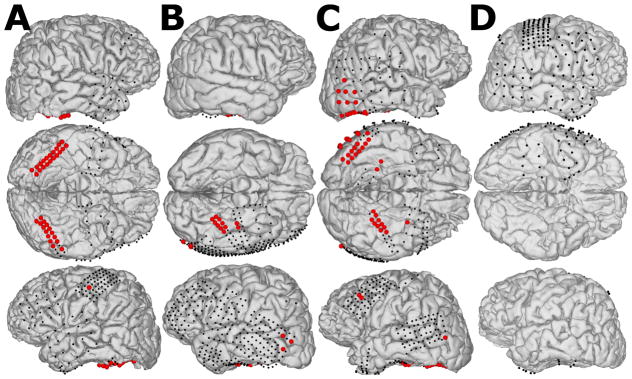

Figure 1 shows the subjects’ reconstructed brain models and indicates implanted electrode locations (dots). Each subject’s brain model was reconstructed in FreeSurfer (Martinos Center for Biomedical Imaging, Cambridge, USA) using pre-operative T1-weighted MRI data (Dale et al., 1999). Then pre-operative MRI data were co-registered to post-operative CT scans using SPM (Wellcome Trust Centre for Neuroimaging, London, UK) to localize electrode positions on the cortex (Penny et al., 2007). Finally, the resulting 3D cortical models and electrode locations were visualized in NeuralAct (Kubanek and Schalk, 2015).

Figure 1.

ECoG recording sites for subjects A, B, C and D. Black dots represent implanted subdural electrodes. Red balls highlight ECoG electrodes at locations that demonstrated significant broadband γ responses to visual stimuli for subjects who participated in experiment I (A, B and C). Subject D only participated in experiment II, in which all channels were spatially filtered.

2.2. Data Acquisition

ECoG signals were recorded at the bedside with a DC-coupled g.HIamp biosignal amplifier (g.tec medical engineering, Austria) after neuro-monitoring was completed and prior to resective surgery. Data were digitized with 24-bit resolution at 2,400 Hz for offline assessment and 1,200 Hz for real-time processing, synchronized with stimulus presentation using a photodiode, and stored using the g.HIsys real-time processing library (g.tec medical engineering GmbH, Austria). Ground (GND) and reference (REF) were located in dorsal parietal cortex (i.e., distant from task-related electrodes in the temporal lobe).

2.3. Experimental Procedure

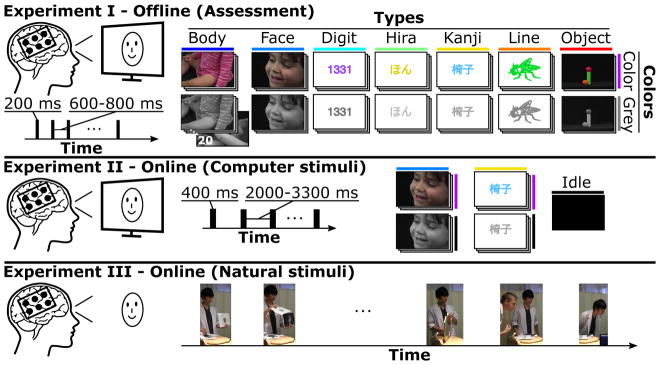

The three experiments in this study are illustrated in Figure 2. Experiment I assessed neural responses to visual stimuli using offline analysis. We also obtained online accuracies during real-time visual perception tasks, where the subjects looked at monitor-based stimuli in experiment II and natural images in experiment III.

Figure 2.

Presented stimulus types and experimental procedures for the three experiments. Experiment I, the assessment, collected ECoG responses to visual stimuli (200 ms presentation time) of seven types (Body, Face, Digit, Hira, Kanji, Line and Object). Examples show one out of 20 stimuli for each type in colored (Color) and greyscale (Grey) versions. Each stimulus occurred twice within the experiment (i.e., 40 stimuli per type and color, 560 stimuli in total). Experiment II evaluated the real-time discrimination performance of ECoG responses to presented Face and Kanji computer stimuli (400 ms presentation time), and to idle stimuli (black screen). Subjects viewed 30–40 stimuli of each type (180–240 trials in total across both runs) to calibrate the decoder and repeated the experiment with real-time discrimination (without getting any feedback). Experiment III tested the real-time discrimination performance of ECoG responses to natural stimuli (i.e., printed faces and kanji, mirror, real face) presented by the experimenter, one face presented by a co-experimenter and intermediate idle periods where nothing was shown.

During the assessment (experiment I) subjects A, B and C observed stimuli that were presented on a computer screen, which was placed about 80 cm in front of the subject. The stimuli were about 20 cm in size, and consisted of seven types ((i) Body, (ii) Face, (iii) Digit, (iv) Hira (Hiragana), (v) Kanji, (vi) Line and (vii) Object), all seven of which were shown in color (Color) or greyscale (Grey). This led to a total of 14 different classes for discrimination, which were presented sequentially in random order. Kanji and hiragana characters are components of the Japanese writing system and corresponded to the subjects’ native language. Experiment I in Figure 2 illustrates examples from 20 different stimuli for each class and shows the timeline of four out of 560 trials in the visual stimulation paradigm. Each trial consisted of a 200 ms presentation period and a subsequent black screen for 600–800 ms.

Experiment II employed real-time decoding of stimuli shown on a monitor, including images of faces and kanji characters, and an additional black screen as a new type. Thus, the three possible classification outcomes were Face, Kanji, and Idle (i.e., neither Face nor Kanji, see Figure 2, experiment II). Two subjects (A and D) participated in this discrimination experiment and were asked to observe a sequence of 30 (subject D) or 40 (subject A) stimuli of each type in randomized order with a presentation time of 400 ms each. Inter-stimulus-intervals (ISI) showed a black screen for 2.0–3.3 s. Each subject performed two runs (a total of 3 classes · 30 trials · 2 runs = 180 trials for subject D and 3 · 40 · 2 = 240 trials for subject A), one for calibration and another to validate the real-time decoding performance.

Subject A also participated in experiment III, a real-world scenario with natural stimuli (see Figure 2, experiment III), in which one of the people attending the experiment presented the subject with kanji characters and faces printed on pieces of paper, a mirror and two experimenters’ faces – who appeared in front of the subject. A computer classified Face, Kanji and Idle in real time, and provided visual feedback about that type via a monitor next to the subject, by displaying a face, a kanji character or a black screen. The monitor output was not visible to the subject, but was recorded by a video camera that taped the experiment at a rate of 30 FPS for later synchronization of stimulus onset with ECoG data and for quantification of the decoder’s performance. Frames of the video were synchronized with ECoG data based on the decoder output (i.e., the first video frame showing a kanji character on the monitor corresponded to the sample time at which the decoder classified a Kanji stimulus).

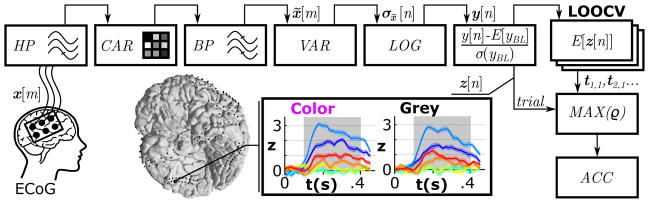

2.4. Signal Processing for Assessment

Figure 4 illustrates the feature extraction and classification method for assessment in experiment I. Recorded ECoG signals were denoted as x[m] (digitized multi-channel data at time m) and underwent a 2 Hz Butterworth high-pass (HP) filter (4th order) to remove DC drifts. Visual inspection of the filtered data, with exclusion of artifactual signals such as epileptic activity, left 182, 247 and 246 channels for subsequent processing for subjects A–C, respectively. A common average reference (CAR) montage re-referenced the signals (Liu et al., 2015) and a 110–140 Hz bandpass (BP) filter (Butterworth low-pass and high-pass filters, each of 4th order) extracted broadband γ activity, x̃[m]. Given the time-frequency maps in Figure 3, this band turned out to be most discriminant for individual classes. Next, the signals were temporally stabilized by computing the variance σx̃[n] of x̃[m] in 20 ms epochs (50% overlap), and further normalized by log-transformation. This provided the output metric y[n], where n was an instant of the downsampled signals (fs = 100 Hz). Data from each channel were further z-scored based on all samples of the baseline periods of all trials (−300 to 0 ms pre-stimulus interval), generating standardized data z[n]. Information in z[n] was used to identify reactive ECoG locations and to discriminate ECoG responses to the visual stimulus types.
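The variance, log-transformation and z-score steps described above can be illustrated with a minimal sketch. It assumes the signal has already been high-pass filtered, re-referenced and band-pass filtered, and it uses only the Python standard library. The function names `log_variance` and `zscore_to_baseline`, the small offset inside the logarithm and the synthetic 125 Hz test signal are illustrative assumptions, not part of the study's software.

```python
import math
import statistics


def log_variance(signal, fs=2400, win_ms=20, overlap=0.5):
    """Log-variance of an already band-passed signal in overlapping windows."""
    win = int(round(fs * win_ms / 1000))    # 48 samples at 2,400 Hz
    step = int(round(win * (1 - overlap)))  # 24 samples, i.e. 100 Hz output rate
    out = []
    for start in range(0, len(signal) - win + 1, step):
        var = statistics.pvariance(signal[start:start + win])
        out.append(math.log(var + 1e-12))   # offset avoids log(0); an assumption
    return out


def zscore_to_baseline(y, baseline):
    """Standardize y with the mean and SD of the pooled baseline samples."""
    mu = statistics.fmean(baseline)
    sd = statistics.pstdev(baseline)
    return [(v - mu) / sd for v in y]


# Synthetic example: a 125 Hz oscillation whose amplitude triples after 0.5 s.
fs = 2400
x = [(1.0 if n < fs // 2 else 3.0) * math.sin(2 * math.pi * 125 * n / fs)
     for n in range(fs)]
y = log_variance(x, fs)
z = zscore_to_baseline(y, y[:30])  # first 30 windows serve as the baseline
print(len(y), round(z[-1], 1))
```

With a 20 ms window and 50% overlap the window advances by 10 ms, which is why the output rate is 100 Hz regardless of the input sampling rate.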

Figure 4.

Feature extraction pipeline and classification method for the assessment (experiment I). Signal processing steps for the multi-channel ECoG signals x[m] included drift removal by a high-pass filter (HP), spatial filtering (CAR), temporal band-pass filtering (BP), variance estimation (VAR), log-transformation (LOG) and standardization (z-score). Colored time series show the mean z-scores (z[n]) with standard errors for all stimulus types (color codes are based on Figure 1) from ECoG electrode location 182 of subject A. Areas shaded in grey represent the active period used for discrimination. One active period (trial) of z[n] was correlated with templates (t1,1, t2,1…) based on the remaining trials of each stimulus type. The template that correlated most strongly (MAX(ρ)) assigned the trial class according to the template class. A leave-one-out cross validation (LOOCV) yielded the classification accuracy (ACC) of all trials and stimulus types.

Figure 3.

Time-frequency maps of two locations (yellow star and red diamond) of subjects A and B. Each map contains the standardized power change in z-scores for a given type (Line, Digit…) and color (Grey and Color) with respect to a reference window −300 to 0 ms relative to the visual stimulation onset. The yellow star location in A turned out to be Face selective, showing a strong broadband γ power increase for Face stimuli, whereas most other locations remained silent or showed little activation (like Body). A Color selective region was located in B (indicated by the red diamond). The average power change in z-scores of 120 ECoG locations on the VTC in subjects A, B and C, averaged over a period of 100 to 400 ms after stimulus onset, revealed the strongest change for the selected 110 to 140 Hz band (highlighted by the red bar).

Channels were considered for classification only if the standardized data z[n] of any class was significantly higher for the task period compared to the baseline period. Thus, a Wilcoxon rank-sum test compared the average z-scores of a stimulus type’s baseline periods (−300 to 0 ms pre-stimulus interval) with the average z-scores of the corresponding active periods (100 to 400 ms post-stimulus interval). This test was performed for each stimulus type and if a significant response was found (p < 0.01, Bonferroni corrected for the number of channels and tested stimulus types) the channels were considered for further analysis.

Standardized responses z[n] in selected channels (highlighted with red balls in Figure 1) were discriminated by a pattern recognition approach. To do this, the assessment data were separated into NT = 40 trials of each class i (i ∈ {1, 2…14}). Each trial zi,l (l ∈ {1, 2…NT }) consisted of a 100–400 ms post-stimulus interval of z[n]. For pattern recognition, templates were computed from 39 trials of each class, which were derived as follows:

$$t_{i,k} = \frac{1}{N_T - 1} \sum_{l = 1,\, l \neq k}^{N_T} z_{i,l} \tag{1}$$

Each template vector ti,k was calculated from training data, and was subsequently compared to the kth trial of each class (leave-one-out cross validation (LOOCV) approach, k ∈ {1, 2…40}). Thus, the remaining trial vector zi,k was correlated with the template ti,k leading to ri,k, the correlation coefficient for class i and trial k.

$$r_{i,k} = \frac{\sigma_{t_{i,k},\,z_{i,k}}}{\sqrt{\sigma_{t_{i,k}}\,\sigma_{z_{i,k}}}} \tag{2}$$

The correlation followed the definition of the Pearson’s correlation coefficient with σti,k,zi,k as the covariance of ti,k and zi,k, and σti,k and σzi,k as the variance of ti,k and zi,k, respectively. Correlation coefficients were computed for all 14 templates for each of the 14 test trials. Hence, for a given tested feature vector, the classifier determined the type and color that produced the highest correlation (MAX(ρ)). Results from 40 repetitions (14 · 40 = 560 classifications in total) with new sets of templates yielded class-specific true positive rates (TPR) and an overall accuracy (ACC).
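The leave-one-out template matching described above can be sketched as follows. This is a simplified illustration, not the study's implementation: the helper names (`pearson`, `mean_vector`, `loocv_accuracy`), the three synthetic response shapes and the noise level are assumptions chosen to keep the example small, and templates are taken to be the mean of the remaining trials of each class.

```python
import math
import random


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length vectors."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def mean_vector(trials):
    """Element-wise mean of a list of feature vectors."""
    return [sum(col) / len(trials) for col in zip(*trials)]


def loocv_accuracy(data):
    """data maps a class label to a list of equal-length feature vectors."""
    n_trials = min(len(v) for v in data.values())
    correct = total = 0
    for k in range(n_trials):
        # Templates are built from all trials except the k-th one of each class.
        templates = {c: mean_vector(v[:k] + v[k + 1:]) for c, v in data.items()}
        for true_class, trials in data.items():
            test = trials[k]
            best = max(templates, key=lambda c: pearson(templates[c], test))
            correct += best == true_class
            total += 1
    return correct / total


# Synthetic demo with three hypothetical response shapes plus Gaussian noise.
rng = random.Random(0)
shapes = {
    "Face": [0, 1, 2, 3, 3, 2, 1, 0],
    "Kanji": [3, 2, 1, 0, 0, 1, 2, 3],
    "Line": [0, 0.5, 1, 1.5, 2, 2.5, 3, 3.5],
}
data = {c: [[v + rng.gauss(0, 0.3) for v in s] for _ in range(10)]
        for c, s in shapes.items()}
accuracy = loocv_accuracy(data)
print(f"LOOCV accuracy: {accuracy:.2f}")
```

Because the correlation ignores offset and scale, the classifier responds to the shape of the response pattern across channels and time rather than to its absolute amplitude.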

The same assessment approach was applied to paired conditions of colored and greyscale types to investigate any color or type specific bias that affected the discrimination performance. For paired conditions, a test trial was correlated with the template vectors of the two selected classes and assigned to the class with the higher correlation.

Additionally, classification accuracies were computed using temporal or spatial features only. Specifically, the temporal features contained concatenated z[n] of the selected channels for a dedicated 20 ms epoch and were classified by the pattern discrimination in 10 ms steps (from −300 to 450 ms relative to stimulus onset). Similarly, the spatial assessment applied the pattern discrimination to z[n] concatenated over time (100–400 ms post-stimulus interval), tested separately for each selected channel.

For each assessment, an additional permutation test generated a random distribution of accuracies based on trial labels that were shuffled 1,000 times. Hence, the rank of the assessment output in the random distribution gave the probability p for random classification. This probability was transformed into an activation index (AI) as follows (Gunduz et al., 2011, 2012, 2016; Kubanek et al., 2009; Liu et al., 2015; Lotte et al., 2015; Schalk et al., 2007; Wang et al., 2012):

| (3) |

The AIs were used to highlight reliable discrimination for the temporal and spatial assessment, whereas the p values were used to indicate results that were significantly better than chance (p < 0.05).
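A label-shuffling permutation test of the kind described above might look like the following sketch. The toy nearest-class-mean classifier stands in for the template classifier only to keep the example self-contained. The function names, the synthetic one-dimensional features and the "+1" correction that prevents p = 0 are all assumptions rather than details taken from the study.

```python
import random


def permutation_p_value(features, labels, accuracy_fn, n_perm=1000, seed=0):
    """Rank the observed accuracy within accuracies from shuffled labels."""
    rng = random.Random(seed)
    observed = accuracy_fn(features, labels)
    shuffled = list(labels)
    null = []
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        null.append(accuracy_fn(features, shuffled))
    n_at_least = sum(a >= observed for a in null)
    # The +1 terms keep p above zero; a common convention, assumed here.
    return observed, (n_at_least + 1) / (n_perm + 1)


def nearest_mean_loo_accuracy(features, labels):
    """Toy stand-in: leave-one-out nearest class mean on 1-D features."""
    correct = 0
    for i, (x, true_label) in enumerate(zip(features, labels)):
        sums, counts = {}, {}
        for j, (f, lab) in enumerate(zip(features, labels)):
            if j != i:
                sums[lab] = sums.get(lab, 0.0) + f
                counts[lab] = counts.get(lab, 0) + 1
        pred = min(sums, key=lambda lab: abs(x - sums[lab] / counts[lab]))
        correct += pred == true_label
    return correct / len(features)


# Synthetic demo: two well-separated classes with 15 trials each.
rng = random.Random(1)
features = ([rng.gauss(0.0, 1.0) for _ in range(15)]
            + [rng.gauss(4.0, 1.0) for _ in range(15)])
labels = ["Grey"] * 15 + ["Color"] * 15
acc, p = permutation_p_value(features, labels, nearest_mean_loo_accuracy)
print(f"accuracy = {acc:.2f}, p = {p:.4f}")
```

With 1,000 shuffles the smallest p value this procedure can report is about 0.001, so the resolution of the test is set by the number of permutations.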

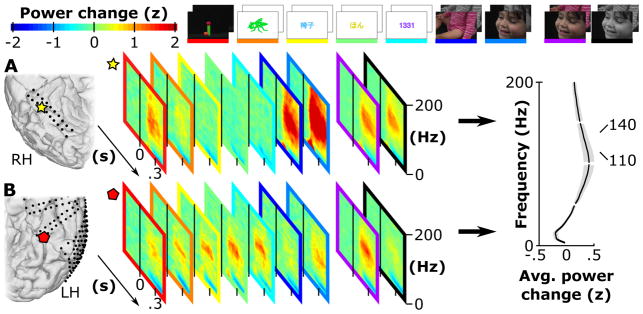

2.5. Signal Processing for Online Discrimination

Real-time processing of multichannel ECoG signals requires time efficient feature extraction methods that guarantee a certain processing time, independent from the number of recorded channels. At the same time, asynchronous detection of visual perception requires robust features that are stable over time to enable detection and discrimination of visual stimuli based on sliding windows. Hence, the signal processing pipeline used for the assessment had to be modified to fulfill the aforementioned requirements.

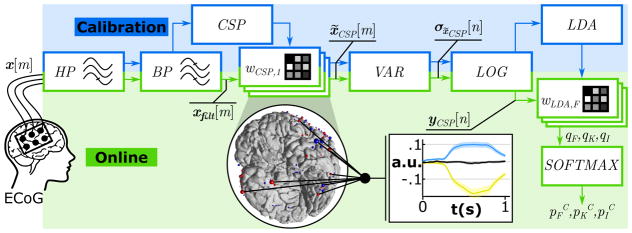

Before classifying ECoG data in real time, it was necessary to first process calibration data. Figure 5 shows the required signal processing steps. First, a 4th order Butterworth high-pass (HP) filter removed the DC drift of the recorded ECoG signals x[m] for visual inspection. If a channel contained power-line interference or epileptic waveforms, it was manually excluded. This led to 182 and 140 remaining channels for subjects A and D, respectively. Then, a 110–140 Hz band-pass (BP) filter extracted broadband γ activity xfilt[m]. Common spatial patterns (CSP) were computed from filtered signals to improve the signal-to-noise ratio (SNR) and reduce the feature dimensionality (Guger et al., 2000; Müller-Gerking et al., 1999; Ramoser et al., 2000). Since CSPs maximize the signals’ variance for one condition and minimize it for another condition, a set of spatial filters for three “one-versus-all” conditions generated distinctive features for Face, Kanji and Idle. The three conditions were: first, Face against combined Idle and Kanji stimuli; second, Kanji against combined Idle and Face stimuli; and finally, Idle against Face and Kanji stimuli. Hence, each combination resulted in a set of spatial filters sorted by their impact on the conditions’ variance. The CSP filters were calculated from ECoG data from 100–600 ms post-stimulus. For further processing only the four most relevant filters of each paired condition were used (i.e., the filters that corresponded to the two highest and the two lowest eigenvalues (Blankertz et al., 2008)), resulting in twelve feature channels in total. Specifically, spatial filters were applied as channel weights (wCSP,j, j ∈ {1, 2…12}) for all electrodes:

$$\tilde{x}_{\mathrm{CSP},j}[m] = w_{\mathrm{CSP},j}^{\top}\, x_{\mathrm{filt}}[m] \tag{4}$$

Figure 5.

Feature extraction pipeline and classification method for real-time detection and discrimination in experiments II and III. Calibration: Recorded multi-channel ECoG signals x[m] were HP and BP filtered and submitted to a common spatial pattern (CSP) analysis that computed a set of spatial filters (wCSP). Spatially filtered signals x̃CSP [m] underwent variance estimation (VAR) and log-transformation (LOG) and resulted in normalized yCSP [n]. A linear discriminant analysis (LDA) generated class specific weights (wLDA) for real-time processing. Online: Real-time processing steps included the HP and BP filtering and the spatial (wCSP) filtering, followed by the variance estimation (VAR) and log-transformation (LOG). The linear classifier (wLDA) weighted the features in yCSP [n] and generated LDA outputs (qF,qK,qI) for Face, Kanji and Idle. Finally, a Softmax function transformed the LDA output into complementary probabilities (pFC,pKC,pIC). The diagram yCSP [n] shows the real-time processing output for Face (blue), Kanji (yellow) and Idle (black) based on 182 combined ECoG locations in subject A.

Then, from each resultant time series x̃CSP,j[m] the variance σx̃CSP,j [n] was calculated from 500 ms epochs with a 97% overlap. These signals were log-transformed to normalize the data and to get yCSP [n], the normalized broadband γ power. Finally, three linear discriminant analyses (LDA) were trained to discriminate the twelve features of each class (30–40 trials per class of Face, Kanji and Idle), from data of the remaining classes. Each of the three combinations (denoted with i ∈ {F, K, I}) gave class specific weights wLDA,i.

After the calibration phase, the subsequent processing occurred in real time. The ECoG data were sampled at 1,200 Hz and read into the real-time processing in frames of 16 samples, which resulted in a processing rate of 75 Hz. In each processing step, data were HP and BP filtered, yielding xfilt[m] (as shown in Figure 5). The twelve spatial filters were applied to xfilt[m] to get twelve time series x̃CSP [m] for variance estimation and log-transformation, which yielded yCSP [n]. Subsequently, the three weight vectors wLDA,i were applied to yCSP [n] to get the three LDA outputs qi for Face, Kanji and Idle:

| (5) |

Each LDA output was translated into a probability using a Softmax function (Sutton and Barto, 1998):

| (6) |

Here, piC was the complement probability that features represent class i. Hence, the classifier selected the class that corresponded to the lowest piC. In the case that no piC reached the confidence threshold of piC < 0.05, the output was automatically set to Idle. Finally, the activation index (AI) was calculated from the complementary probability piC according to the following equation:

| (7) |

In the real-time processing mode, when the AI crossed the significance threshold (piC < 0.05 or AI > 3), an image of a face, a kanji character or a black screen appeared on a feedback monitor. This feedback was only visible to the experimenter, not the subjects.
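The decision rule described above (select the class with the lowest complement probability, and fall back to Idle when no class passes the 0.05 threshold) can be sketched as follows. The sketch assumes that the complement probability is one minus a standard softmax without a temperature parameter. The function names and the example LDA outputs are hypothetical.

```python
import math


def complement_softmax(q):
    """Map class name -> LDA output to class name -> (1 - softmax probability)."""
    m = max(q.values())  # subtracting the maximum keeps exp() numerically stable
    e = {c: math.exp(v - m) for c, v in q.items()}
    total = sum(e.values())
    return {c: 1.0 - e[c] / total for c in q}


def decide(q, threshold=0.05, fallback="Idle"):
    """Pick the class with the lowest complement probability, else fall back."""
    p_c = complement_softmax(q)
    best = min(p_c, key=p_c.get)
    return best if p_c[best] < threshold else fallback


# Hypothetical LDA outputs for three processing steps.
confident_face = {"Face": 6.0, "Kanji": 0.5, "Idle": 1.0}
confident_kanji = {"Face": 0.1, "Kanji": 5.0, "Idle": 0.3}
ambiguous = {"Face": 1.0, "Kanji": 0.8, "Idle": 0.2}

print(decide(confident_face))   # Face
print(decide(confident_kanji))  # Kanji
print(decide(ambiguous))        # Idle
```

Under this assumption a class is only reported when its softmax probability exceeds 0.95, so ambiguous frames default to Idle, which suits asynchronous detection on a sliding window.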

3. Results

3.1. Assessment (experiment I)

3.1.1. Pairwise Discrimination of Colored and Greyscale Types

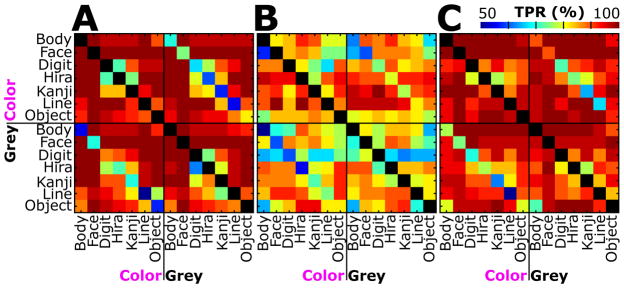

Figure 6 shows the TPR for each type of stimulation versus each other type. TPR values were obtained by assigning the test trials to one of two template classes. Every matrix contains the TPR for each possible combination of classes. Subjects A and C reached very high TPRs (> 90%) for Face stimulation versus all other types, except for Color Face versus Grey Face. Of course, the TPR reached its maximum if a type was compared with itself as illustrated by the diagonals. Figure 6 further shows that the TPR was lowest for comparisons of Color and Grey stimuli of the same type. For example, subject A correctly classified only 50% of Grey Line versus Color Line stimuli.

Figure 6.

Results for pair-wise classification of colored (Color) and greyscale (Grey) stimulus types for subjects A, B and C. Colored squares indicate the true positive rate (TPR) for each type (rows) and color (columns) against every other type and color. A blue box indicates random classification, while perfect classification is highlighted in red (see color bar; 50% chance for paired classification). Diagonals are shown in black (i.e., no TPR available), as the class templates used for discrimination were the same. The diagonals in the bottom left and top right quarter of each subject contain the TPR of colored stimuli against greyscale stimuli of the same type.

Table 2 contains the classification accuracies (50% chance) for each subject and each type. Accuracies correspond to the average TPR obtained from all pairwise discrimination tests of a certain type with any other type (i.e., the mean of each type’s row and column TPR in Figure 6). The highest classification accuracy of 97.9% was reached by subject C for Color Face stimulation. Subject A reached the second and third highest accuracies of 97.8% for Color and Grey Face stimulation. Subject B yielded the lowest classification accuracies (76.7%, 78.7% and 80.7%) for Grey Digit and Body, and for Color Body stimulation. Face stimulation achieved 92.3%, the highest average accuracy across all subjects, followed by Body (90.1%) and Object (90.0%) stimulation. The weakest average performance was found for Digit (88.0%) stimulation. Across all stimulus types and colors, the average accuracies were 92.8%, 83.1% and 93.6% for subjects A, B and C, respectively. Accuracies above 65.0% (for A) and 62.5% (for B and C) were statistically better than chance (p < 0.05).

Table 2.

Classification accuracies for each type (Body, Face…) for colored and greyscale stimuli (Colors) for subjects (S) A, B and C. All values are accuracies in %. The best three accuracies are bold, whereas the worst three accuracies are underlined.

| S | Colors | Body | Face | Digit | Hira | Kanji | Line | Object | Average | Subject average |
|---|---|---|---|---|---|---|---|---|---|---|
| A | Color | 94.7 | **97.8** | 91.5 | 90.9 | 91.0 | 93.2 | 93.0 | 93.2 | 92.8 |
| | Grey | 94.8 | **97.8** | 91.5 | 89.0 | 91.3 | 89.4 | 92.2 | 92.3 | |
| B | Color | <u>80.7</u> | 80.8 | 83.6 | 88.4 | 86.5 | 86.3 | 84.1 | 84.3 | 83.1 |
| | Grey | <u>78.7</u> | 82.8 | <u>76.7</u> | 84.9 | 84.5 | 84.1 | 81.1 | 81.8 | |
| C | Color | 96.1 | **97.9** | 92.0 | 91.4 | 93.4 | 91.8 | 96.1 | 94.1 | 93.6 |
| | Grey | 95.6 | 97.0 | 92.8 | 90.7 | 90.9 | 90.6 | 93.8 | 93.0 | |
| Average | | 90.1 | 92.3 | 88.0 | 89.2 | 89.6 | 89.2 | 90.0 | 89.8 | 89.8 |

3.1.2. Overall Discrimination of Types and Colors

Table 3 contains the classification accuracy for the discrimination of Color and Grey images (“Color vs. Grey”), for which subject C achieved the highest accuracy of 73.0% (50% chance). It also contains the classification accuracy for the discrimination of stimulus types without color separation (“7-Types”), in which the seven types were classified against each other. Again, subject C reached the highest accuracy of 82.1% (14.3% chance). “T&C” in Table 3 contains the accuracy after classification of all 14 colored and greyscale stimulus types against each other. Here subject C reached 61.6% (7.1% chance). Interestingly, “7-Types” performed better on average than “Color vs. Grey” (73.0% versus 67.1%). Although “T&C” performed worst, because of the 14 different classes, all subjects achieved highly significant accuracies (p < 0.0004).

Table 3.

Overall offline classification accuracies (ACC) for colors (Color vs. Grey), types without color separation (7-Types) and types with color separation (T&C) for subjects (S) A, B and C. Random accuracies (RAND) and p values derived from a randomization test with scrambled trial labels.

| S | Color vs. Grey ACC (%) | Color vs. Grey RAND (%) | Color vs. Grey p | 7-Types ACC (%) | 7-Types RAND (%) | 7-Types p | T&C ACC (%) | T&C RAND (%) | T&C p |
|---|---|---|---|---|---|---|---|---|---|
| A | 58.8 | 50.0 | 0.0865 | 77.5 | 14.3 | <0.0002 | 51.1 | 7.1 | <0.0004 |
| B | 69.5 | 50.0 | <0.0005 | 59.5 | 14.3 | <0.0002 | 43.6 | 7.1 | <0.0001 |
| C | 73.0 | 50.0 | <0.0005 | 82.1 | 14.3 | <0.0002 | 61.6 | 7.1 | <0.0001 |
| All | 67.1 | | | 73.0 | | | 52.1 | | |

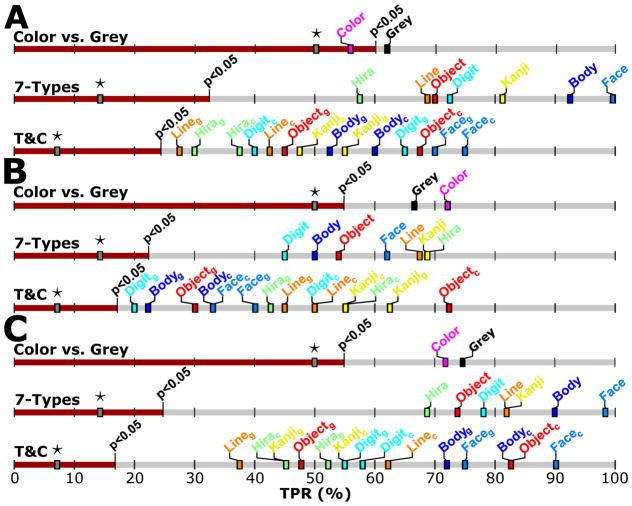

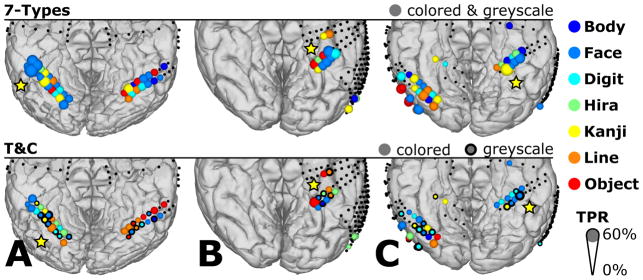

Figure 7 illustrates the TPRs for “Color vs. Grey”, “7-Types” and “T&C”. In the “Color vs. Grey” assessment, subject C achieved a TPR of 74.3% for Grey and 71.8% for Color, whereas subject B correctly classified Color (72.1%) more often than Grey (66.8%). Subject A identified 61.8% of the Grey stimuli, but did not reach a significant TPR for Color. In the “7-Types” classification, Face performed best for subjects A and C. Indeed, all Face stimuli were identified in subject A. In subject B the TPR was highest for Hira and reached 68.8%. In the “T&C” mode, the classification worked best for Color Face in subjects A (75.0%) and C (90.0%), and for Color Object in subject B (72.5%). Grey Line in subjects A (27.5%) and C (37.5%) and Grey Digit in subject B (20.0%) performed worst.
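For readers who want to reproduce this kind of per-class summary, the TPR of a class is the number of its trials that were assigned the correct label divided by all trials of that class. The Python sketch below shows the calculation on a small invented example; the function name and the labels are placeholders and not the study data.

```python
from collections import Counter


def true_positive_rates(true_labels, predicted_labels):
    """Per-class TPR: correctly classified trials of a class / all trials of that class."""
    totals = Counter(true_labels)
    hits = Counter(t for t, p in zip(true_labels, predicted_labels) if t == p)
    return {label: hits[label] / totals[label] for label in totals}


# Invented example with four Grey and four Color trials.
true_labels = ["Grey"] * 4 + ["Color"] * 4
predicted_labels = ["Grey", "Grey", "Grey", "Color", "Color", "Color", "Grey", "Grey"]
tpr = true_positive_rates(true_labels, predicted_labels)
# tpr["Grey"] is 0.75 and tpr["Color"] is 0.5
```

When all classes contain the same number of trials, the overall accuracy equals the mean of the per-class TPRs.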

Figure 7.

TPR for colors (Color vs. Grey), types (7-Types) and both (T&C) after a leave-one-out cross validation test for three subjects (A, B, C). Stars indicate the expected random accuracy for each test. The red bar ends at the significance border (p < 0.05) of an empirically derived random distribution based on scrambled trial labels. As an example, subject A had a 50% chance level for “Color vs. Grey” with a threshold TPR of 60.0% (p < 0.05), a chance level of 14.3% for “7-Types” with a threshold TPR of 32.5% (p < 0.05), and a chance level of 7.1% for “T&C” with a threshold TPR of 25.0% (p < 0.05).

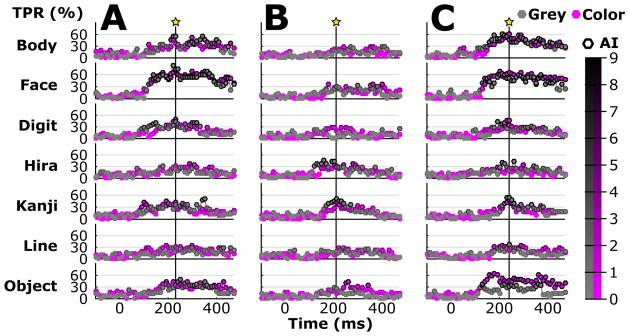

3.1.3. Temporal and Spatial Characteristics

The temporal pattern of the TPR is plotted in Figure 8 for each type (Body, Face, …), and for Grey and Color. Each star shows the time point with the highest average TPR (classification accuracy) of all types. The classification accuracy reached its peak at 240, 210 and 250 ms after stimulus onset for subjects A, B and C, respectively. The classification accuracies (7.1% chance) at those times were 41.8%, 24.6% and 41.8%, respectively, and thus led to an average peak accuracy of 36.1% at about 233 ms across subjects. Responses to Face and Body stimuli gave a high TPR after 200 ms and remained stable until about 400 ms for subjects A and C. The biggest TPR difference could be found for subject C for Object. Subject B attained the highest TPR of about 58% for Kanji at about 210 ms. Interestingly, Body, Face and Object produced a high TPR over a long period of about 150–400 ms, while all other stimulus types showed much shorter peaks.

Figure 8.

TPR and activation index (AI) over time for types and colors. Each time segment (20 ms epochs with 50% overlap) led to a feature vector and resulted in an independent classification output. Thus, the curve represents the TPR for individual segments and the edge color of each bullet shows the AI (black edges indicate reliable activation), which was derived from a randomization test with scrambled trial labels. Stars with vertical lines represent the times for which average TPR and AI maximized for types and colors.

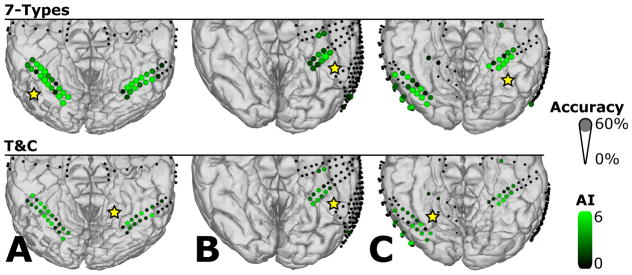
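The segmentation behind the time-resolved TPR (20 ms epochs with 50% overlap, each classified independently) can be sketched as follows. The analysis window of 0 to 500 ms after stimulus onset and the function name are assumptions chosen for illustration; only the epoch length and the overlap come from the text.

```python
def segment_bounds(start_ms, stop_ms, width_ms=20.0, overlap=0.5):
    """Return (start, end) times in ms of overlapping epochs that fit in the window."""
    step_ms = width_ms * (1.0 - overlap)
    if step_ms <= 0:
        raise ValueError("overlap must be smaller than 1")
    bounds = []
    k = 0
    # Keep adding epochs while the whole epoch still fits before stop_ms.
    while start_ms + k * step_ms + width_ms <= stop_ms + 1e-9:
        epoch_start = start_ms + k * step_ms
        bounds.append((epoch_start, epoch_start + width_ms))
        k += 1
    return bounds


# Hypothetical analysis window: 0 to 500 ms after stimulus onset.
epochs = segment_bounds(0.0, 500.0)
```

With these settings consecutive epochs start 10 ms apart, so each reported peak time corresponds to one such epoch rather than to a single sample.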

Figure 9 summarizes the classification accuracy for each selected electrode channel discriminating all “7-Types” and all seven types and two colors (“T&C”). Yellow stars label those ECoG electrode locations that provided the highest classification accuracy for each subject. For the “7-Types” comparison, the highest classification accuracies (14.3% chance) were 26.4%, 24.3% and 21.6% for subjects A, B, and C, respectively. Note that each accuracy resulted from a single channel. The corresponding average peak accuracy across subjects was 24.1%. For the “T&C” assessment the highest classification accuracies (7.1% chance) reached 12.5%, 10.4% and 11.6% for subjects A, B, and C, respectively. Here, the average peak accuracy across subjects was 11.5%.

Figure 9.

Spatial distribution of the average classification accuracy for types without color separation (7-Types) and types with color separation (T&C) for subjects A, B and C. Diameters show the average classification accuracy. Only channels with significant activation in the channel selection test were considered for classification and are marked with different AI scale values in green. All other recording locations were excluded from experiment I and are indicated with small black dots. Yellow stars mark sites that showed the best discrimination performance between types (with and without color separation).

Figure 10 shows the highest TPR of all stimulus types and electrode locations for “7-Types” or “T&C”. Subject A reached a high TPR for Face around the area indicated with the star. In the “7-Types” condition, the types with the highest TPR (14.3% chance) were Face for subjects A (62.5%) and B (47.5%), and Kanji for subject C (61.3%). When the color information was added in “T&C”, the types with the highest TPR (7.1% chance) were Color Line (37.5%), Color Object (42.5%) and Grey Face (42.5%) for subjects A, B and C, respectively.

Figure 10.

Spatial distribution of the classes with the highest TPR for types without color separation (7-Types) and types with color separation (T&C) for subjects A, B and C. Diameters show the TPR and the colors indicate the types with the highest TPR. Only channels with significant activation in the channel selection test were considered for classification (locations with colored dots). All other recording locations were excluded from experiment I and are indicated with small black dots. Yellow stars highlight sites with the highest TPR.

3.2. Online Discrimination of Computer and Natural Stimuli (experiments II and III)

Table 4 lists the total duration of data collection, the latency of the real-time classification output with respect to stimulus onset, the asynchronous classification accuracies and the corresponding random accuracies for subjects A and D. The actual stimulus and the decoder output matched best after shifting the decoder output 440–467 ms backwards in time; this shift reflects the processing latency of the real-time classification. In experiment II the real-time decoder correctly identified 73.7% of the computer stimuli on average across both subjects. The highest accuracy of 80.80% was achieved by subject A in the computer stimulus run. Even in the natural run subject A achieved an accuracy of 74.82% and thus performed better than subject D did in the computer stimulus run. The latency of the decoder during the natural run was fixed to the 467 ms obtained in experiment II.
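The latency estimate described above amounts to finding the backward shift at which the decoder output and the stimulus labels agree best. A minimal Python sketch of that idea follows, assuming both sequences are available on a common, regularly spaced time grid. The function names, the 10 ms grid step and the toy sequences are hypothetical and serve only to illustrate the search.

```python
def agreement(stimulus, decoded, shift):
    """Fraction of samples where the decoder output, moved back by `shift`, equals the stimulus."""
    pairs = list(zip(stimulus, decoded[shift:]))
    return sum(s == d for s, d in pairs) / len(pairs)


def best_shift(stimulus, decoded, max_shift):
    """Backward shift (in samples) that maximizes agreement; ties go to the smaller shift."""
    return max(range(max_shift + 1), key=lambda k: agreement(stimulus, decoded, k))


STEP_MS = 10  # hypothetical spacing between consecutive decoder outputs
stimulus = ["Idle"] * 5 + ["Face"] * 5 + ["Idle"] * 5 + ["Kanji"] * 5 + ["Idle"] * 5
decoded = ["Idle"] * 3 + stimulus[:-3]  # toy decoder output, delayed by 3 samples
latency_ms = best_shift(stimulus, decoded, max_shift=6) * STEP_MS
```

In this toy case the search recovers the three-sample delay that was built into `decoded`.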

Table 4.

Asynchronous classification accuracy (Accuracy) of the computer stimuli in experiment II and natural stimuli in experiment III for subjects A and D. Accuracy with sub-sampling (w ss) includes classification results (ACC) from data sub-sampled randomly 50 times, with equally balanced conditions (i.e., Face, Kanji and Idle). Accuracy without sub-sampling (w/o ss) contains the classification results (ACC) including all Idle data. Random (RAND) accuracies for the three classes reflect the mean accuracy after a permutation test in which the trial labels were shuffled 1,000 times. The duration (DUR) shows the length of each experiment. The latency (LAT) shows the time delay between the stimulus onset and the actual real-time decoder output with best match.

| S | Stimulus | DUR (s) | LAT (ms) | w ss ACC (%) | w ss RAND (%) | w ss p | w/o ss ACC (%) | w/o ss RAND (%) | w/o ss p |
|---|---|---|---|---|---|---|---|---|---|
| D | Computer | 275 | 440 | 66.7 | 33.3 | <0.001 | 91.1 | 83.7 | <0.001 |
| A | Computer | 275 | 468 | 80.8 | 33.3 | <0.001 | 90.7 | 75.6 | <0.001 |
| A | Natural | 39 | 468 | 74.8 | 33.3 | <0.001 | 82.8 | 69.7 | <0.001 |
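The two accuracy columns differ in how the Idle condition is weighted. The sketch below illustrates, under simplifying assumptions, how repeated random sub-sampling to equally sized classes can be computed; the function name, the class sizes and the degenerate toy decoder are invented for this example, and only the number of repetitions (50) is taken from the table caption.

```python
import random
from collections import defaultdict


def subsampled_accuracy(true_labels, predicted_labels, n_repeats=50, seed=0):
    """Mean accuracy over random sub-samples with equally many samples per class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, label in enumerate(true_labels):
        by_class[label].append(i)
    n_min = min(len(indices) for indices in by_class.values())
    accuracies = []
    for _ in range(n_repeats):
        # Draw n_min samples per class without replacement.
        chosen = [i for indices in by_class.values() for i in rng.sample(indices, n_min)]
        hits = sum(true_labels[i] == predicted_labels[i] for i in chosen)
        accuracies.append(hits / len(chosen))
    return sum(accuracies) / n_repeats


# Toy data (hypothetical): Idle dominates, and the decoder always answers "Idle".
true_labels = ["Idle"] * 80 + ["Face"] * 10 + ["Kanji"] * 10
predicted_labels = ["Idle"] * 100
raw_accuracy = sum(t == p for t, p in zip(true_labels, predicted_labels)) / 100
balanced_accuracy = subsampled_accuracy(true_labels, predicted_labels)
```

In this toy case a decoder that never detects a stimulus scores 80% on the unbalanced data but only one third after balancing, which is why a high accuracy without sub-sampling has to be read against its correspondingly high random level.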

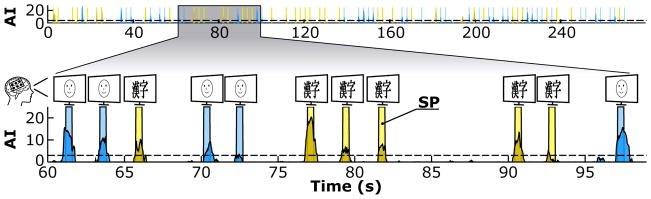

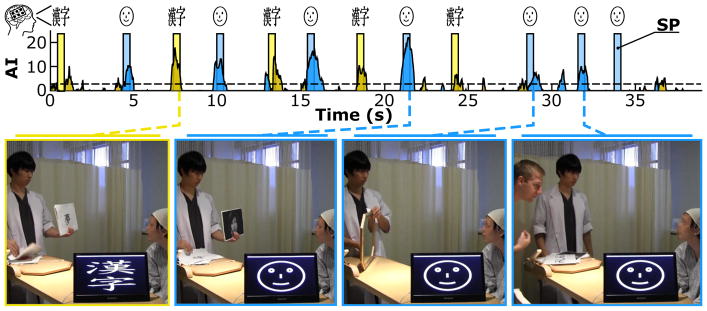

Figures 11 and 12 illustrate the AI over time for the computer stimuli in experiment II and the natural stimuli in experiment III. The decoder classified this output in real time into Face or Kanji when AI exceeded the dashed significance line (AI > 3, corresponding to p < 0.05), and Idle otherwise. The AI time series in both figures were corrected for the mean latency of the cortical responses (i.e., 440–468 ms) and thus shifted compared to the stimulus presentation bars.
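The thresholding rule described above can be written down in a few lines. This is a sketch only: the text specifies the threshold (AI > 3) and the three possible outputs, but not how the decoder resolves the case in which both AI values exceed the threshold, so picking the class with the larger AI is an assumption of this example, as is the function name.

```python
def decode_sample(ai_face, ai_kanji, threshold=3.0):
    """Return 'Face' or 'Kanji' if the larger AI exceeds the threshold, otherwise 'Idle'."""
    # Assumption: when both classes are above threshold, the larger AI wins.
    best_label, best_ai = max((("Face", ai_face), ("Kanji", ai_kanji)), key=lambda item: item[1])
    return best_label if best_ai > threshold else "Idle"


# Hypothetical AI values at four time points.
outputs = [decode_sample(f, k) for f, k in [(4.2, 1.0), (0.5, 3.5), (2.9, 3.0), (5.0, 6.0)]]
```

Because the comparison is strict, an AI of exactly 3 still counts as Idle in this sketch.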

Figure 11.

Real-time classification output (AI) over time for the computer stimuli in experiment II of subject A. Stimulus presentation (SP) times of Face (blue) or Kanji (yellow) computer stimuli are overlapped with the AI of Face (blue) and Kanji (yellow).

Figure 12.

Real-time classification output (AI) over time for the natural stimuli in experiment III of subject A. Stimulus presentation (SP) times of natural Face (blue) or Kanji (yellow) stimuli are overlapped with the AI of Face (blue) and Kanji (yellow). These four photographs were taken from a video during the experiment and show the experimenter(s) (on the left) and the subject (on the right). From left to right, the pictures show: (1) the experimenter holding a printed kanji and the BCI system successfully decoding Kanji; (2) the experimenter holding a printed face; (3) the experimenter holding a mirror; (4) a second experimenter. The video monitor on the bottom demonstrates that the brain signals were classified in real time.

4. Discussion

Many neuroimaging studies have demonstrated that the ventral temporal cortex and inferior temporal gyrus contain specialized regions that process visual stimuli and represent objects, words, numbers, faces and other categories. Some electrophysiological studies using electrocorticography (ECoG) have corroborated and extended these findings by identifying broadband ECoG responses to visual stimuli in the γ band. The present study provides the first human electrocorticographic evidence for color-related population-level cortical broadband γ responses, and demonstrates that neural categories established using stimuli presented on a video screen may generalize to the presentation of real-world visual scenes.

Results in this study were obtained by offline (experiment I) and online analyses (experiments II and III), which were fundamentally different in their decoding strategy. Specifically, the synchronous classification strategy during offline analysis revealed subject- and location-specific differences of individual categories, whereas the online decoder aimed to asynchronously detect and decode neural categories in real time.

The assessment showed that all types (Body, Face, Digit, Hira, Kanji, Line and Object) could be classified with a grand average accuracy of 89.8%, and for each type, the accuracy was ≥ 88% (see Table 2). The best performance was achieved for Grey Face and Color Face, yielding 92.3% classification accuracy on average. Subject B achieved a lower accuracy than subjects A and C, which may result from missing coverage of the right fusiform gyrus, the location of the fusiform face area (FFA). In contrast, subjects A and C had at least partial coverage of the left and right fusiform gyri and showed almost perfect Face classification in pairwise discrimination, which is consistent with the 86–96% correctly detected faces reported elsewhere (Gerber et al., 2016; Miller et al., 2016; Tsuchiya et al., 2008).

Aside from the detection of face-related neural responses, it is noteworthy that the accuracy for Color and Grey Digit reached 92.0% and 92.8%, respectively, for subject C (see Table 2). Interestingly, electrode sites in subject C covered the right inferior temporal gyrus, which has been identified as a number form area (Shum et al., 2013).

Overall discrimination showed that the best classification accuracy of 72.9% was achieved when the “7-Types” were discriminated from each other based on class templates obtained from broadband γ responses. Other decoders have utilized event-related potentials (ERP), achieving a discrimination performance of about 60% for five stimulus categories (Liu et al., 2009), or single neuron recordings, leading to 69% correctly assigned image labels in a two class selection task (Cerf et al., 2010).

Furthermore, the discrimination of colored and greyscale stimuli yielded 67.1% correct classification. In the present study ECoG signals for color discrimination were obtained mainly from visual area VO1, which has been reported to be color and object selective (Brewer et al., 2005), and further to be responsive to color changes (Brouwer and Heeger, 2013). A previously reported decoder based on fMRI utilized signals obtained from visual area VO1 and discriminated responses to eight colors with an accuracy of 48% after more than 15 repetitions (Brouwer and Heeger, 2009).

The discrimination of all 14 classes in “T&C” gave the lowest accuracy of 52.1%, which is expected given the larger number of classes. Subjects A and C performed better for types than for colors, whereas subject B performed better for colors than for types. Therefore, the electrode location could play an important role in color or type separation. Several ECoG locations showed the highest classification accuracy for Face or Kanji stimuli. Those locations were spread across the cortex and support the model of alternating face- and letterstring-selective cortex regions around the middle fusiform sulcus (Matsuo et al., 2015). Notably, ECoG locations that showed the highest TPR to Face stimuli were grouped into clusters covering larger regions than Kanji locations. One cluster was located on the right FFA in subject A and turned out to be face selective and causally involved in face processing after systematic electrical cortical stimulation (Schalk et al., 2017). Such a face-selective cluster of ECoG locations has also been reported in another electrical stimulation study (Parvizi et al., 2012). In the current study two of these clusters were found in subject A (one in each hemisphere), whereas only one cluster, located in the left hemisphere, was found in subjects B and C. This can most likely be explained by missing or only partial coverage of the right fusiform gyrus.

Another interesting finding is that features obtained from Kanji locations enabled the decoder to discriminate even between Hiragana and Kanji stimuli. Such a discrimination task has not been presented elsewhere and shows that the letterstring locations reported in (Matsuo et al., 2015) can be further subdivided into more specific regions.

The assessment further showed that even a single ECoG electrode location decoded specific stimulus types with an accuracy of 24.1% in the “7-Types” discrimination. Although this is already remarkable, combined information from multiple locations yielded the 72.9% accuracy of the “7-Types”. For real-time processing, it was important to efficiently consider multiple electrodes. This was realized with the CSPs that automatically weighted each electrode according to its importance for the classification task. Therefore, the most important electrodes were considered automatically, resulting in higher classification accuracy than single-channel analysis.

The spatial distribution of type-specific information remained stable throughout experiments, whereas the onset of broadband γ activity varied from trial to trial and caused a different temporal pattern for each repetition. Hence, it is important to train the classifier on multiple trials and utilize moving variance windows for real-time classification. It is likely that the known relationship between modulatory activity in the α band and cortical population-level broadband γ activity (phase-amplitude coupling (Canolty et al., 2006; Coon and Schalk, 2016; Coon et al., 2016)) resulted in variable broadband γ responses. Hence, in real-time mode the variance was calculated from 500 ms windows and induced, together with the response time of the subject, a delay of 400–500 ms with respect to the stimulus onsets. However, this latency did not affect the performance of the decoder in the present study, as the feedback was not presented to the subject. Still, the observed delay here is much shorter than the reported 1–10 s of the online decoder presented in (Cerf et al., 2010). This is mainly due to the different experimental design, which required the subject to voluntarily activate stimulus selective neurons. Shorter latencies reported in other studies were obtained offline (Miller et al., 2016) and did not report asynchronous classification over time (Liu et al., 2009; Majima et al., 2014; Tsuchiya et al., 2008). The TPR over time in Figure 8 revealed that Body, Face and Object generated distinctive broadband γ activity over a relatively long period from 150 to 400 ms. This was much wider than for Digit, Hira, Kanji and Line, which indicates that processing stimuli of types like Body, Face or Object requires more time and thus is a more complex cognitive task.
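The moving variance window mentioned above can be illustrated with a short sketch. It assumes a single, already band-pass filtered signal and a window length given in samples; both the function name and the toy signal are hypothetical, and the number of samples corresponding to 500 ms depends on a sampling rate that is not restated in this section.

```python
from collections import deque
from statistics import pvariance


def moving_variance(samples, window_len):
    """Variance of the most recent `window_len` samples, emitted once the window is full."""
    window = deque(maxlen=window_len)  # oldest sample drops out automatically
    variances = []
    for x in samples:
        window.append(x)
        if len(window) == window_len:
            variances.append(pvariance(list(window)))
    return variances


# Toy signal: silence followed by an oscillation (stands in for a broadband response).
signal = [0.0, 0.0, 0.0, 0.0, 2.0, -2.0, 2.0, -2.0]
variances = moving_variance(signal, window_len=4)
```

In the toy output the variance rises gradually and reaches its plateau only once the window is completely filled with the response, which illustrates why a 500 ms window contributes to the delay reported for the real-time decoder.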

Face, Kanji and Idle phases could be separated in real time with accuracies between 66.7% and 80.8% after about 4 minutes of training in experiment II. These accuracies were achieved without giving feedback to the subject. With longer training periods, and with feedback to subjects, performance would probably increase further. The feedback may help the subject focus on the required tasks and maintain concentration, in addition to facilitating learning. The performance difference between subject A (80.80%) and D (66.67%) can most likely be explained by the dense electrode coverage of the ventral temporal cortex of subject A (66 recording sites versus 20 in subject D).

Spontaneous online detection of visual stimuli in the real-world scenario in experiment III demonstrated a surprisingly high accuracy of 74.82%. Notably, the real-world stimuli (e.g., the face of the experimenter) were not part of the stimuli used for training with the visual stimuli shown on the computer screen. Thus, the real-world scenario was not only based on new and independent data, but also on a different set of stimuli than the artificial stimuli shown on the computer in experiment II. In fact, natural stimuli included images of kanji and faces printed on a sheet of paper, but also real human faces of the experimenters and the subject through a mirror. This showed that, in subject A, the same cortical regions process information from natural stimuli and from trained faces and kanji characters shown on a computer monitor.

Another issue relevant to real-world applications is the additional cortical activity due to eye motion and moving visual targets, described as motion related augmentation of broadband γ activity on the lateral, inferior and polar occipital regions (Nagasawa et al., 2011). Such activation patterns could interfere with the expected features from the training runs and therefore impair the classification performance. Furthermore, time-locking the onset of neural responses due to natural stimuli is much more challenging than time-locking the onset of responses resulting from stimuli presented via a computer.

4.1. Conclusion

Real-time detection and discrimination of visually perceived natural scenes is possible even when the system was trained on different stimuli that were presented on a computer screen. This could lead to improved human-computer interfaces such as those proposed in the context of passive BCIs (van Erp et al., 2012). Specifically, learning the identity of a perceived (or perhaps even covertly attended) visual object could be useful for constraining or otherwise informing the options of an interface. This ability may also prove useful for establishing new communication options for people who have lost the ability to communicate, such as people with amyotrophic lateral sclerosis (ALS).

Acknowledgments

This work was supported by the European Union (Eurostars project RAPIDMAPS 2020 (ID 9273)), the Japanese government (Grant-in-Aid for Scientific Research (B) No. 16H05434, Grant-in-Aid for Exploratory Research No. 26670633 from the Ministry of Education, Culture, Sports, Science and Technology (MEXT), and Grant-in-Aid for Scientific Research on Innovative Areas, JP15H01657), the National Institutes of Health in the USA (P41-EB018783), the US Army Research Office (W911NF-14-1-0440), and Fondazione Neurone.

References

- Allison T, Ginter H, McCarthy G, Nobre AC, Puce A, Luby M, Spencer DD. Face Recognition in Human Extrastriate Cortex. Journal of Neurophysiology. 1994;71(2):821–825. doi: 10.1152/jn.1994.71.2.821. [DOI] [PubMed] [Google Scholar]

- Allison T, Puce A, Spencer DD, McCarthy G. Electrophysiological Studies of Human Face Perception. I: Potentials Generated in Occipitotemporal Cortex by Face and Non-face Stimuli. Cerebral Cortex. 1999;9(5):415–430. doi: 10.1093/cercor/9.5.415. [DOI] [PubMed] [Google Scholar]

- Blankertz B, Tomioka R, Lemm S, Kawanabe M, Muller KR. Optimizing Spatial Filters for Robust EEG Single-Trial Analysis. IEEE Signal processing magazine. 2008;25(1):41–56. [Google Scholar]

- Brewer AA, Liu J, Wade AR, Wandell BA. Visual field maps and stimulus selectivity in human ventral occipital cortex. Nature neuroscience. 2005;8(8):1102–1109. doi: 10.1038/nn1507. [DOI] [PubMed] [Google Scholar]

- Brouwer GJ, Heeger DJ. Decoding and reconstructing color from responses in human visual cortex. Journal of Neuroscience. 2009;29(44):13992–14003. doi: 10.1523/JNEUROSCI.3577-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brouwer GJ, Heeger DJ. Categorical clustering of the neural representation of color. Journal of Neuroscience. 2013;33(39):15454–15465. doi: 10.1523/JNEUROSCI.2472-13.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Canolty RT, Edwards E, Dalal SS, Soltani M, Nagarajan SS, Kirsch HE, Berger MS, Barbaro NM, Knight RT. High gamma power is phase-locked to theta oscillations in human neocortex. Science. 2006;313(5793):1626–1628. doi: 10.1126/science.1128115. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cerf M, Thiruvengadam N, Mormann F, Kraskov A, Quiroga RQ, Koch C, Fried I. On-line, voluntary control of human temporal lobe neurons. Nature. 2010;467(7319):1104–1108. doi: 10.1038/nature09510. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chang EF, Edwards E, Nagarajan SS, Fogelson N, Dalal SS, Canolty RT, Kirsch HE, Barbaro NM, Knight RT. Cortical spatio-temporal dynamics underlying phonological target detection in humans. Journal of Cognitive Neuroscience. 2011;23(6):1437–1446. doi: 10.1162/jocn.2010.21466. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cohen L, Dehaene S, Naccache L, Lehéricy S, Dehaene-Lambertz G, Hénaff MA, Michel F. The visual word form area. Brain. 2000;123(2):291–307. doi: 10.1093/brain/123.2.291. [DOI] [PubMed] [Google Scholar]

- Collins JA, Olson IR. Beyond the FFA: the role of the ventral anterior temporal lobes in face processing. Neuropsychologia. 2014;61:65–79. doi: 10.1016/j.neuropsychologia.2014.06.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Coon WG, Schalk G. A method to establish the spatiotemporal evolution of task-related cortical activity from electrocorticographic signals in single trials. Journal of Neuroscience Methods. 2016;271:76–85. doi: 10.1016/j.jneumeth.2016.06.024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Coon W, Gunduz A, Brunner P, Ritaccio AL, Pesaran B, Schalk G. Oscillatory phase modulates the timing of neuronal activations and resulting behavior. NeuroImage. 2016;133:294–301. doi: 10.1016/j.neuroimage.2016.02.080. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Crone NE, Boatman D, Gordon B, Hao L. Induced electrocorticographic gamma activity during auditory perception. Journal of Clinical Neurophysiology. 2001;112(4):565–582. doi: 10.1016/s1388-2457(00)00545-9. [DOI] [PubMed] [Google Scholar]

- Crone NE, Miglioretti DL, Gordon B, Lesser RP. Functional mapping of human sensorimotor cortex with electrocorticographic spectral analysis. II. Event-related synchronization in the gamma band. Brain. 1998;121(12):2301–2315. doi: 10.1093/brain/121.12.2301. [DOI] [PubMed] [Google Scholar]

- Dale A, Fischl B, Sereno M. Cortical Surface-Based Analysis. I. Segmentation and Surface Reconstruction. NeuroImage. 1999;9(2):179. doi: 10.1006/nimg.1998.0395. [DOI] [PubMed] [Google Scholar]

- Edwards E, Nagarajan SS, Dalal SS, Canolty RT, Kirsch HE, Barbaro NM, Knight RT. Spatiotemporal imaging of cortical activation during verb generation and picture naming. NeuroImage. 2010;50(1):291–301. doi: 10.1016/j.neuroimage.2009.12.035. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Edwards E, Soltani M, Deouell LY, Berger MS, Knight RT. High gamma activity in response to deviant auditory stimuli recorded directly from human cortex. Journal of Neurophysiology. 2005;94(6):4269–4280. doi: 10.1152/jn.00324.2005. [DOI] [PubMed] [Google Scholar]

- Edwards E, Soltani M, Kim W, Dalal SS, Nagarajan SS, Berger MS, Knight RT. Comparison of time-frequency responses and the event-related potential to auditory speech stimuli in human cortex. Journal of Neurophysiology. 2009;102(1):377–386. doi: 10.1152/jn.90954.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Engell AD, Huettel S, McCarthy G. The fMRI BOLD signal tracks electrophysiological spectral perturbations, not event-related potentials. NeuroImage. 2012;59(3):2600–2606. doi: 10.1016/j.neuroimage.2011.08.079. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Engell AD, McCarthy G. The Relationship of Gamma Oscillations and Face-Specic ERPs Recorded Subdurally from Occipitotemporal Cortex. Cerebral Cortex. 2011;21(5):1213–1221. doi: 10.1093/cercor/bhq206. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Engell AD, McCarthy G. Face, eye, and body selective responses in fusiform gyrus and adjacent cortex: an intracranial EEG study. Frontiers in Human Neuroscience. 2014a;8:642. doi: 10.3389/fnhum.2014.00642. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Engell AD, McCarthy G. Repetition Suppression of Face-Selective Evoked and Induced EEG Recorded From Human Cortex. Human Brain Mapping. 2014b;35(8):4155–4162. doi: 10.1002/hbm.22467. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gerber EM, Golan T, Knight RT, Deouell LY. Persistent neural activity encoding real-time presence of visual stimuli decays along the ventral stream. bioRxiv. 2016:088021. [Google Scholar]

- Ghuman AS, Brunet NM, Li Y, Konecky RO, Pyles JA, Walls SA, Destefino V, Wang W, Richardson RM. Dynamic encoding of face information in the human fusiform gyrus. Nature Communications. 2014;5:5672. doi: 10.1038/ncomms6672. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Grill-Spector K, Weiner KS. The functional architecture of the ventral temporal cortex and its role in categorization. Nature Reviews Neuroscience. 2014;15(8):536–548. doi: 10.1038/nrn3747. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Guger C, Ramoser H, Pfurtscheller G. Real-time EEG analysis with subject-specific spatial patterns for a brain-computer interface (BCI) IEEE Transactions on Rehabilitation Engineering. 2000;8(4):447–456. doi: 10.1109/86.895947. [DOI] [PubMed] [Google Scholar]

- Gunduz A, Brunner P, Daitch A, Leuthardt EC, Ritaccio AL, Pesaran B, Schalk G. Neural correlates of visual–spatial attention in electrocorticographic signals in humans. Frontiers in human neuroscience. 2011;5:89. doi: 10.3389/fnhum.2011.00089. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gunduz A, Brunner P, Daitch A, Leuthardt EC, Ritaccio AL, Pesaran B, Schalk G. Decoding covert spatial attention using electrocorticographic (ECoG) signals in humans. NeuroImage. 2012;60(4):2285–2293. doi: 10.1016/j.neuroimage.2012.02.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gunduz A, Brunner P, Sharma M, Leuthardt EC, Ritaccio AL, Pesaran B, Schalk G. Differential roles of high gamma and local motor potentials for movement preparation and execution. Brain-Computer Interfaces. 2016:1–15. [Google Scholar]

- Halgren E, Dale AM, Sereno MI, Tootell RB, Marinkovic K, Rosen BR. Location of Human Face-Selective Cortex With Respect to Retinotopic Areas. Human Brain Mapping. 1999;7(1):29–37. doi: 10.1002/(SICI)1097-0193(1999)7:1<29::AID-HBM3>3.0.CO;2-R. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jacques C, Witthoft N, Weiner KS, Foster BL, Rangarajan V, Hermes D, Miller KJ, Parvizi J, Grill-Spector K. Corresponding ECoG and fMRI category-selective signals in human ventral temporal cortex. Neuropsychologia. 2016;83:14–28. doi: 10.1016/j.neuropsychologia.2015.07.024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jensen O, Kaiser J, Lachaux JP. Human gamma-frequency oscillations associated with attention and memory. Trends in Neuroscience. 2007;30(7):317–324. doi: 10.1016/j.tins.2007.05.001. [DOI] [PubMed] [Google Scholar]

- Kadosh KC, Johnson MH. Developing a cortex specialized for face perception. Trends in Cognitive Sciences. 2007;11(9):367–369. doi: 10.1016/j.tics.2007.06.007. [DOI] [PubMed] [Google Scholar]

- Kam J, Szczepanski S, Canolty R, Flinker A, Auguste K, Crone N, Kirsch H, Kuperman R, Lin J, Parvizi J, et al. Differential Sources for 2 Neural Signatures of Target Detection: An Electrocorticography Study. Cerebral Cortex. 2016:1–12. doi: 10.1093/cercor/bhw343. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kanwisher N, McDermott J, Chun MM. The Fusiform Face Area: A Module in Human Extrastriate Cortex Specialized for Face Perception. Journal of Neuroscience. 1997;17(11):4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kubanek J, Brunner P, Gunduz A, Poeppel D, Schalk G. The tracking of speech envelope in the human cortex. PloS one. 2013;8(1):e53398. doi: 10.1371/journal.pone.0053398. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kubanek J, Miller K, Ojemann J, Wolpaw J, Schalk G. Decoding flexion of individual fingers using electrocorticographic signals in humans. Journal of Neural Engineering. 2009;6(6):066001. doi: 10.1088/1741-2560/6/6/066001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kubanek J, Schalk G. NeuralAct: A Tool to Visualize Electrocortical (ECoG) Activity on a Three-Dimensional Model of the Cortex. Neuroinformatics. 2015;13(2):167–174. doi: 10.1007/s12021-014-9252-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lachaux JP, George N, Tallon-Baudry C, Martinerie J, Hugueville L, Minotti L, Kahane P, Renault B. The many faces of the gamma band response to complex visual stimuli. NeuroImage. 2005;25(2):491–501. doi: 10.1016/j.neuroimage.2004.11.052. [DOI] [PubMed] [Google Scholar]

- Leuthardt E, Pei XM, Breshears J, Gaona C, Sharma M, Freudenburg Z, Barbour D, Schalk G. Temporal evolution of gamma activity in human cortex during an overt and covert word repetition task. Frontiers in Human Neuroscience. 2012;6:99. doi: 10.3389/fnhum.2012.00099. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liu H, Agam Y, Madsen JR, Kreiman G. Timing, Timing, Timing: Fast Decoding of Object Information from Intracranial Field Potentials in Human Visual Cortex. Neuron. 2009;62(2):281–290. doi: 10.1016/j.neuron.2009.02.025. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liu Y, Coon W, de Pesters A, Brunner P, Schalk G. The effects of spatial filtering and artifacts on electrocorticographic signals. Journal of Neural Engineering. 2015;12(5):056008. doi: 10.1088/1741-2560/12/5/056008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Logothetis NK, Pauls J, Augath M, Trinath T, Oeltermann A. Neurophysiological investigation of the basis of the fMRI signal. Nature. 2001;412(6843):150–157. doi: 10.1038/35084005. [DOI] [PubMed] [Google Scholar]

- Lotte F, Brumberg JS, Brunner P, Gunduz A, Ritaccio AL, Guan C, Schalk G. Electrocorticographic representations of segmental features in continuous speech. Frontiers in Human Neuroscience. 2015;9:97. doi: 10.3389/fnhum.2015.00097. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Majima K, Matsuo T, Kawasaki K, Kawai K, Saito N, Hasegawa I, Kamitani Y. Decoding visual object categories from temporal correlations of ECoG signals. NeuroImage. 2014;90:74–83. doi: 10.1016/j.neuroimage.2013.12.020.

- Makeig S, Westerfield M, Jung TP, Enghoff S, Townsend J, Courchesne E, Sejnowski TJ. Dynamic brain sources of visual evoked responses. Science. 2002;295(5555):690–694. doi: 10.1126/science.1066168.

- Manning JR, Jacobs J, Fried I, Kahana MJ. Broadband shifts in local field potential power spectra are correlated with single-neuron spiking in humans. Journal of Neuroscience. 2009;29(43):13613–13620. doi: 10.1523/JNEUROSCI.2041-09.2009.

- Maris E, van Vugt M, Kahana M. Spatially distributed patterns of oscillatory coupling between high-frequency amplitudes and low-frequency phases in human iEEG. NeuroImage. 2011;54(2):836–850. doi: 10.1016/j.neuroimage.2010.09.029.

- Matsuo T, Kawasaki K, Kawai K, Majima K, Masuda H, Murakami H, Kunii N, Kamitani Y, Kameyama S, Saito N, et al. Alternating zones selective to faces and written words in the human ventral occipitotemporal cortex. Cerebral Cortex. 2015;25(5):1265–1277. doi: 10.1093/cercor/bht319.

- Mazaheri A, Jensen O. Posterior α activity is not phase-reset by visual stimuli. PNAS USA. 2006;103(8):2948–2952. doi: 10.1073/pnas.0505785103.

- Mazaheri A, Jensen O. Asymmetric amplitude modulations of brain oscillations generate slow evoked responses. Journal of Neuroscience. 2008;28(31):7781–7787. doi: 10.1523/JNEUROSCI.1631-08.2008.

- McCandliss BD, Cohen L, Dehaene S. The visual word form area: expertise for reading in the fusiform gyrus. Trends in Cognitive Sciences. 2003;7(7):293–299. doi: 10.1016/s1364-6613(03)00134-7.

- McCarthy G, Puce A, Belger A, Allison T. Electrophysiological Studies of Human Face Perception. II: Response Properties of Face-specific Potentials Generated in Occipitotemporal Cortex. Cerebral Cortex. 1999;9(5):431–444. doi: 10.1093/cercor/9.5.431.

- Miller KJ, Leuthardt EC, Schalk G, Rao RP, Anderson NR, Moran DW, Miller JW, Ojemann JG. Spectral changes in cortical surface potentials during motor movement. Journal of Neuroscience. 2007;27(9):2424–2432. doi: 10.1523/JNEUROSCI.3886-06.2007.

- Miller KJ, Schalk G, Hermes D, Ojemann JG, Rao RP. Spontaneous Decoding of the Timing and Content of Human Object Perception from Cortical Surface Recordings Reveals Complementary Information in the Event-Related Potential and Broadband Spectral Change. PLoS Computational Biology. 2016;12(1):e1004660. doi: 10.1371/journal.pcbi.1004660.

- Miller KJ, Sorensen LB, Ojemann JG, den Nijs M. Power-Law Scaling in the Brain Surface Electric Potential. PLoS Computational Biology. 2009;5(12):e1000609. doi: 10.1371/journal.pcbi.1000609.

- Mukamel R, Gelbard H, Arieli A, Hasson U, Fried I, Malach R. Coupling between neuronal firing, field potentials, and fMRI in human auditory cortex. Science. 2005;309(5736):951–954. doi: 10.1126/science.1110913.

- Müller-Gerking J, Pfurtscheller G, Flyvbjerg H. Designing optimal spatial filters for single-trial EEG classification in a movement task. Clinical Neurophysiology. 1999;110(5):787–798. doi: 10.1016/s1388-2457(98)00038-8.

- Nagasawa T, Matsuzaki N, Juhász C, Hanazawa A, Shah A, Mittal S, Sood S, Asano E. Occipital gamma-oscillations modulated during eye movement tasks: Simultaneous eye tracking and electrocorticography recording in epileptic patients. NeuroImage. 2011;58(4):1101–1109. doi: 10.1016/j.neuroimage.2011.07.043.

- Niessing J, Ebisch B, Schmidt KE, Niessing M, Singer W, Galuske RA. Hemodynamic signals correlate tightly with synchronized gamma oscillations. Science. 2005;309(5736):948–951. doi: 10.1126/science.1110948.

- Parvizi J, Jacques C, Foster BL, Withoft N, Rangarajan V, Weiner KS, Grill-Spector K. Electrical stimulation of human fusiform face-selective regions distorts face perception. Journal of Neuroscience. 2012;32(43):14915–14920. doi: 10.1523/JNEUROSCI.2609-12.2012.

- Pei X, Barbour DL, Leuthardt EC, Schalk G. Decoding vowels and consonants in spoken and imagined words using electrocorticographic signals in humans. Journal of Neural Engineering. 2011;8(4):046028. doi: 10.1088/1741-2560/8/4/046028.

- Pei X, Leuthardt EC, Gaona CM, Brunner P, Wolpaw JR, Schalk G. Spatiotemporal dynamics of electrocorticographic high gamma activity during overt and covert word repetition. NeuroImage. 2011;54(4):2960–2972. doi: 10.1016/j.neuroimage.2010.10.029.

- Penny WD, Friston KJ, Ashburner JT, Kiebel SJ, Nichols TE. Statistical Parametric Mapping: The Analysis of Functional Brain Images. 1st ed. Academic Press; 2007.

- Potes C, Brunner P, Gunduz A, Knight RT, Schalk G. Spatial and temporal relationships of electrocorticographic alpha and gamma activity during auditory processing. NeuroImage. 2014;97:188–195. doi: 10.1016/j.neuroimage.2014.04.045.

- Potes C, Gunduz A, Brunner P, Schalk G. Dynamics of electrocorticographic (ECoG) activity in human temporal and frontal cortical areas during music listening. NeuroImage. 2012;61(4):841–848. doi: 10.1016/j.neuroimage.2012.04.022.

- Ramoser H, Müller-Gerking J, Pfurtscheller G. Optimal Spatial Filtering of Single Trial EEG During Imagined Hand Movement. IEEE Transactions on Rehabilitation Engineering. 2000;8(4):441–446. doi: 10.1109/86.895946.

- Ray S, Maunsell J. Different origins of gamma rhythm and high-gamma activity in macaque visual cortex. PLoS Biology. 2011;9(4):e1000610. doi: 10.1371/journal.pbio.1000610.

- Ray S, Niebur E, Hsiao SS, Sinai A, Crone NE. High-frequency gamma activity (80–150 Hz) is increased in human cortex during selective attention. Clinical Neurophysiology. 2008;119(1):116–133. doi: 10.1016/j.clinph.2007.09.136.

- Rupp K, Roos M, Milsap G, Caceres C, Ratto C, Chevillet M, Crone NE, Wolmetz M. Semantic attributes are encoded in human electrocorticographic signals during visual object recognition. NeuroImage. 2017. doi: 10.1016/j.neuroimage.2016.12.074.

- Schalk G, Kapeller C, Guger C, Ogawa H, Hiroshima S, Lafer-Sousa R, Saygin ZM, Kamada K, Kanwisher N. Facephenes and rainbows: Causal evidence for functional and anatomical specificity of face and color processing in the human brain. Proceedings of the National Academy of Sciences. 2017:201713447. doi: 10.1073/pnas.1713447114.

- Schalk G, Kubanek J, Miller K, Anderson N, Leuthardt E, Ojemann J, Limbrick D, Moran D, Gerhardt L, Wolpaw J. Decoding two-dimensional movement trajectories using electrocorticographic signals in humans. Journal of Neural Engineering. 2007;4(3):264. doi: 10.1088/1741-2560/4/3/012.

- Sederberg PB, Schulze-Bonhage A, Madsen JR, Bromfield EB, Litt B, Brandt A, Kahana MJ. Gamma Oscillations Distinguish True From False Memories. Psychological Science. 2007;18(11):927–932. doi: 10.1111/j.1467-9280.2007.02003.x.

- Shum J, Hermes D, Foster BL, Dastjerdi M, Rangarajan V, Winawer J, Miller KJ, Parvizi J. A brain area for visual numerals. Journal of Neuroscience. 2013;33(16):6709–6715. doi: 10.1523/JNEUROSCI.4558-12.2013.

- Sutton RS, Barto AG. Reinforcement Learning: An Introduction. MIT Press; Cambridge, MA: 1998. p. 22447.

- Tort AB, Kramer MA, Thorn C, Gibson DJ, Kubota Y, Graybiel AM, Kopell NJ. Dynamic cross-frequency couplings of local field potential oscillations in rat striatum and hippocampus during performance of a T-maze task. PNAS USA. 2008;105(51):20517–20522. doi: 10.1073/pnas.0810524105.

- Tsuchiya N, Kawasaki H, Oya H, Howard MA III, Adolphs R. Decoding Face Information in Time, Frequency and Space from Direct Intracranial Recordings of the Human Brain. PLoS One. 2008;3(12):e3892. doi: 10.1371/journal.pone.0003892.

- van Erp J, Lotte F, Tangermann M. Brain-computer interfaces: beyond medical applications. Computer. 2012;45(4):26–34.

- van Vugt MK, Schulze-Bonhage A, Litt B, Brandt A, Kahana MJ. Hippocampal Gamma Oscillations Increase with Memory Load. Journal of Neuroscience. 2010;30(7):2694–2699. doi: 10.1523/JNEUROSCI.0567-09.2010.

- Wang Z, Gunduz A, Brunner P, Ritaccio AL, Ji Q, Schalk G. Decoding onset and direction of movements using electrocorticographic (ECoG) signals in humans. Frontiers in Neuroengineering. 2012;5:15. doi: 10.3389/fneng.2012.00015.

- Whittingstall K, Logothetis NK. Frequency-band coupling in surface EEG reflects spiking activity in monkey visual cortex. Neuron. 2009;64(2):281–289. doi: 10.1016/j.neuron.2009.08.016.