Abstract

Background

Social media discussions may reflect public interest and stance regarding vaccine hesitancy.

Objective

We assess differences in posting frequency and timing between individuals expressing vaccine hesitancy and individuals expressing opposing views.

Methods

We classified social media posts as pro-vaccination, expressing vaccine hesitancy (broadly defined), uncertain whether vaccine hesitant or not, or off topic. Spearman correlations with CDC reported measles cases and differenced smoothed cumulative case counts over this period were reported (using time series bootstrap confidence intervals).

Results

A total of 58 078 Facebook posts and 82 993 tweets were identified from January 4, 2009–August 27, 2016; we analyzed all posts regardless of location, since geolocation was highly incomplete. For both Facebook and Twitter, pro-vaccination posts were correlated with the US weekly reported cases (Facebook: Spearman correlation 0.22 (95% CI: 0.09 to 0.34), Twitter: 0.21 (95% CI: 0.06 to 0.34)). Anti-vaccination posts, however, were essentially unrelated to the presence of a measles epidemic in the US (Facebook: 0.01 (95% CI: −0.13 to 0.14), Twitter: 0.0011 (95% CI: −0.12 to 0.12)).

Conclusions

These findings may result from more consistent social media engagement by individuals expressing vaccine hesitancy, contrasted with media- or event-driven episodic interest on the part of individuals favoring current policy.

Keywords: measles, vaccination, treatment refusal, patient compliance, social media

Introduction

Measles results from infection with an RNA virus in the family Paramyxoviridae (genus Morbillivirus). Transmitted by the respiratory route, measles is one of the most contagious diseases known [1]. Most individuals in the developed world recover without complications, and the death rate of 1–3 per 1000 is largely due to measles pneumonia [2]. The disease causes fever, cough, and rash [3, 4], following an incubation period of 8–12 days [3, 5, 6]. Subacute sclerosing panencephalitis is a rare but fatal and untreatable complication [2,7]. Measles infection normally confers lifelong immunity [8].

Vaccination with the MMR vaccine confers long-lasting protection, which may be lifelong [9]. The two-dose MMR schedule shows an efficacy of approximately 94% [10]. High vaccination rates achieve herd immunity, and are the basis for measles elimination [8]. The World Health Organization estimates that measles vaccination has prevented 20.3 million deaths worldwide during 2000–2015 [11]. In the United States, although measles was declared locally eliminated [12], it is repeatedly reintroduced. In late 2014–early 2015, a measles outbreak linked to Disneyland theme parks caused approximately 130 cases in California alone; it spread to other states in the US, as well as Canada and Mexico [13,14].

Measles transmission in recent years has been linked to vaccine refusal [15]. Opposition to preventive inoculation is hardly new [16, 17], though in recent years, a now-retracted [18] study led by A. Wakefield may have reinvigorated anti-vaccination controversy [19,20]. Significant media attention to this research and personal anecdotes from celebrities may have caused a sense of fear and distrust in the vaccine among some individuals, potentially contributing to a drop in vaccination rates in some locations [19, 21, 22]. Despite these real concerns about falling vaccination rates, overall US national levels of MMR vaccine coverage appear to have remained high in recent years [23,24].

Social media sites may now have become a reflection of the beliefs and practices of people in their daily lives [25]. Use of social media is extensive; Twitter reports over 300 million active users globally in 2016 [26], and significant numbers of online American adults interviewed by the Pew Research Center endorsed using Twitter in 2015 (23%) and Facebook (72%). Furthermore, Twitter and Facebook provide large amounts of publicly accessible data over a number of years, from very large user bases.

Social media has had a large and growing role in health communication [27–30]. Public health and research organizations have also turned to social media as a form of digital public health surveillance to augment traditional methods of risk or disease monitoring [31–38] or as a communication tool [39–41]. A growing number of non-traditional sources for health information, including blogs and alternative news sites, are available online [42–46].

Examination of social media can provide insight into public discussion of vaccination [47], and may be predictive of geographical variation in vaccination compliance [48, 49]. While Internet communication has played a large role in vaccine opposition for a number of years [50], social media provide an outlet to share beliefs widely in real time [42, 51, 52]. Individuals opposing vaccination have also made substantial use of social media and emerging communication technologies [51,53,54].

Previous studies examining social media and public discourse on measles vaccinations have focused on posts during an outbreak [35,51], and predictors of which articles are shared on social media [55]. Others have found differences in linguistic usage between pro- and anti-vaccination posts in certain settings [56]. Other studies have focused on vaccines other than MMR [52,57]. An important resource for understanding vaccine sentiment worldwide is provided by the recently introduced Vaccine Sentimeter [47], which used the public Twitter API and other sources of information (including news sources and blogs), to yield an index of vaccine sentiment (positive, negative, and neutral/unclear). This tool reports data from May 2012 to November 2014 [58]. Previous studies have also used machine learning classifiers to explore and describe the Twitter conversation during a disease outbreak [59]. Other studies have used social media to relate human mobility to disease transmission [60], to forecast disease incidence during an outbreak [61, 62], and to track the spread of rumor and perceived risk during an outbreak [63].

In this report, we examine Facebook and Twitter social media discussion of vaccination in relation to measles from 2009 to mid-2016, a period including several widely publicized outbreaks. We related volume and machine-learned vaccine-related stance [64] to CDC-reported weekly measles counts. Vaccine hesitancy refers to “delay in acceptance or refusal of vaccination despite availability of vaccination services” [65]. We conjectured that individuals who do not express vaccine hesitancy (who are in favor of current practice) become drawn into the debate during actual outbreaks of measles (which may, for example, be perceived as a threat). Accordingly, we hypothesized that the number of posts exhibiting a given stance—pro-vaccination (essentially in favor of current practice), or vaccine hesitant—expressed in Facebook posts and on Twitter regarding vaccinations would differ depending on whether or not a measles outbreak was occurring in real time.

Methods

Overview

We constructed a list of keywords regarding opinion about vaccinations or measles, and used a commercial social media analytics platform [66,67] (Crimson Hexagon) to collect Facebook posts and tweets, from 2009 to mid-2016, that contained these keywords. We trained the BrightView classifier provided by the platform to group Facebook posts and tweets into four classes: pro-vaccination, expressing vaccine hesitancy, on-topic but unclear, and off-topic. The BrightView classifier is a supervised learning algorithm based in part on stacked regression analysis of a simplified numerical representation of text [68]. These machine-coded classifications were assessed by comparison with human classifications of a random sample of Facebook posts and tweets. Finally, we compared the volume of Facebook posts and tweets to CDC reports of weekly measles counts.

Data Collection: Social Media

Facebook and Twitter data

Facebook and Twitter data yield spatiotemporal patterns for measles/vaccination posts of interest. Using Boolean search, we searched for Facebook posts and tweets related to vaccination (the query is found in the Appendix). To focus on posts expressing the original stance of individuals, retweets and posts with URLs (or those likely to be advertisements) were filtered out prior to our primary analyses. To compare those results to the broader conversation, such posts were included in subsequent analysis. Along with the post contents, we collected the date and time the posts were published as well as metadata regarding the Twitter and Facebook accounts submitting the posts.

Using the primary corpus (having already removed retweets, for example), we assessed the effect of highly repetitive tweets as follows. We computed the edit distance [69] for all pairs of unique tweets found in our search. From this, for each tweet, we identified all other tweets in our corpus within an edit distance of 20 (a threshold chosen to allow posts to differ by, for instance, a short URL or user name). For each author, we assessed whether or not that author had emitted at least one tweet to which there corresponded another tweet in the corpus within a distance of 20 (whether by the same or by another author).
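The repetitive-tweet screen described above can be illustrated with a minimal sketch. It assumes the Levenshtein definition of edit distance, reads "within an edit distance of 20" as a distance of at most 20, and compares every pair of tweet records directly; the function names and the `(author, text)` record layout are hypothetical and are not taken from the analysis platform.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed with the standard two-row dynamic program."""
    if len(a) < len(b):
        a, b = b, a  # keep the inner row as short as possible
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def authors_with_repetitive_tweets(tweets, threshold=20):
    """tweets: list of (author, text) records (hypothetical layout).

    Returns every author with at least one tweet lying within `threshold`
    edits of another tweet in the list, whoever wrote that other tweet.
    """
    flagged = set()
    for i, (author_i, text_i) in enumerate(tweets):
        for author_j, text_j in tweets[i + 1:]:
            if edit_distance(text_i, text_j) <= threshold:
                flagged.update((author_i, author_j))
    return flagged
```

The pairwise loop is quadratic in the number of tweets, so a corpus of tens of thousands of tweets would likely call for an optimized edit-distance routine or a prefilter on tweet length.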

Data Collection: Measles Cases

Measles data were acquired from the CDC MMWR website, tabulated MMWR Reports of infrequently reported notifiable diseases, for years 2009–2016. The period covered by our study was January 4, 2009–August 27, 2016. US measles data are presented by the CDC as (1) weekly reported cases, and (2) cumulative to date. Cases are diagnosed based on laboratory findings or confirmed epidemiologic link to a laboratory-confirmed case. Methods of laboratory confirmation include viral isolation, detection of measles-virus specific nucleic acid, IgG seroconversion (or a significant rise), or a positive serologic test for measles immunoglobulin M antibody [70]. Since initial samples are ideally collected within the first few days following rash onset [71–77] and possibly within a few weeks [72, 73], diagnosis-related delays longer than this are unlikely. The cumulative column reported by the CDC contains additional cases not reported in the weekly totals, since cases newly reported from past weeks are added to the cumulative total. Thus, neither of these reporting streams provides a complete count of all cases occurring in a given week. For the cumulative series, we removed outliers (reporting error) using a five-week centered median filter [78], and then computed the first differenced series (number of new cases added to the cumulative total each week).
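The preprocessing of the cumulative series can be sketched as follows. This is an illustration only: the handling of the first and last two weeks (left unfiltered here) and of the yearly reset of the cumulative count is not specified in the text and is an assumption, and the function names are hypothetical.

```python
from statistics import median


def centered_median_filter(series, window=5):
    """Replace each interior value by the median of a centered window.

    Assumption: points closer than window // 2 to either end are left
    unchanged, because a full centered window does not exist there.
    """
    half = window // 2
    smoothed = list(series)
    for i in range(half, len(series) - half):
        smoothed[i] = median(series[i - half:i + half + 1])
    return smoothed


def first_difference(series):
    """Number of new cases added to the cumulative total each week."""
    return [later - earlier for earlier, later in zip(series, series[1:])]


# Hypothetical cumulative counts; the value 40 mimics a reporting error.
cumulative = [0, 1, 1, 3, 40, 4, 6, 6, 9]
smoothed = centered_median_filter(cumulative)
weekly_new_cases = first_difference(smoothed)
```

In this toy series the filter removes the isolated spike, so the differenced series contains no negative weekly increments.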

Stance Analysis

Using the built-in BrightView classifier [66], the media analytics platform was also trained to separate Facebook posts and tweets as (1) vaccine hesitant, (2) pro-vaccination, or (3) neither; a total of 96 posts were used for each (the minimum recommended being 25 per category). The third category was further subdivided into (3a) unclear whether pro-vaccination or vaccination hesitancy despite being related to vaccination, or (3b) unrelated to vaccination. Individuals exhibiting vaccine hesitancy do not form a monolithic bloc [65,79], and so we viewed vaccine hesitancy in a broad sense. We classified as vaccine hesitant tweets expressing views including universal opposition, opposition to contents of selected vaccines or vaccination schedules, or a conviction that vaccine-related injuries occur at higher rates than commonly believed. Individuals expressing views in favor of vaccination, of current policy, or simply expressing opposition to vaccine hesitancy were classified simply as “pro-vaccination”; no evidence supports the view that “pro-vaccination” individuals form a monolithic bloc either.

To validate the training, three raters manually reviewed and categorized Facebook posts and tweets into the same four categories and their categorizations were compared to the machine-learned categorizations. The human categorization process consisted of each rater independently reading each tweet or Facebook post and determining whether the post (1) clearly expressed vaccine hesitancy in whole or in part, (2) favored vaccination or clearly critiqued vaccine hesitancy or “anti-vaxxers”, (3) referenced vaccination without clearly expressing or opposing vaccine hesitancy, or (4) was irrelevant to the debate.

Reviewers first trained together on randomly selected tweets from our corpus (excluding any used in training the machine). A group discussion with all three raters followed to reconcile disagreements in the categorization and to gain familiarity with the discussion on Twitter (i.e. to become aware of the language used in these posts, as well as prominent or potentially polarizing figures in the vaccination debate). The raters subsequently completed a second iteration of the training with a smaller set of tweets followed by a brief discussion.

We then provided the raters with Google sheets containing 512 randomly chosen tweets (excluding any used in training either the raters or the machine; the sample size would provide a confidence interval of half-width approximately 0.02 for estimated proportions near 0.5). All rated the same tweets, masked to the results of other raters and that of the machine. In addition to the tweet content and the URL of the original tweet, the raters were provided the author’s Twitter handle (username) and, if available through Crimson Hexagon, the author’s actual name, geolocation, and gender. For tweets classified by the machine as expressing vaccine hesitancy, we report the fraction of such posts actually classified as vaccine hesitant by each rater; similarly, for tweets classified as pro-vaccination by the machine, we report the fraction of posts classified as pro-vaccination by the raters.

After completing tweet classifications, we conducted a similar process for the three human raters to classify a set of Facebook posts, masked to the classifications assigned by other raters and by the machine. Their classifications were analyzed for validation of the machine learned categorizations. The sample size was halved, since the Facebook posts were substantially longer than tweets.

Statistical Analysis

We report (1) the total weekly volume of Facebook posts and tweets identified as either pro-vaccination, vaccine-hesitant, or neither pro- nor vaccine-hesitant (but on topic), (2) the weekly volume of posts classified by the machine as pro-vaccination, and (3) the weekly volume of posts classified by the machine as vaccine hesitant. For Facebook posts and separately for tweets, and for each of these three outcome variables, we computed the Spearman rank correlation with (A) the CDC weekly counts, and (B) the first-differenced cumulative count series described above. As a sensitivity analysis, we used our classification of weeks as being in or not in an outbreak (described in the Appendix), and report the number of Facebook posts and tweets, advocating and criticizing vaccine hesitancy, during outbreak and non-outbreak periods; we computed the odds ratio for association. Confidence intervals in all cases were constructed using time series bootstrap [80], with a fixed width of 8. All calculations were conducted in R, version 3.2 for Macintosh (R Foundation for Statistical Computing, Vienna, Austria).
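The correlation analysis can be illustrated with a minimal sketch, written in Python rather than the R used for the reported calculations. It assumes that "a fixed width of 8" denotes a moving-block bootstrap with blocks of 8 consecutive weeks and that percentile intervals were used; both readings, the number of replicates, and all function names are assumptions made for illustration.

```python
import random
from statistics import mean


def average_ranks(values):
    """Ranks starting at 1; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def block_bootstrap_ci(x, y, block_length=8, replicates=2000, alpha=0.05, seed=0):
    """Percentile interval from resampling blocks of consecutive paired weeks."""
    rng = random.Random(seed)
    n = len(x)
    starts = range(n - block_length + 1)
    stats = []
    for _ in range(replicates):
        idx = []
        while len(idx) < n:
            start = rng.choice(starts)
            idx.extend(range(start, start + block_length))
        idx = idx[:n]
        try:
            stats.append(spearman([x[i] for i in idx], [y[i] for i in idx]))
        except ZeroDivisionError:
            continue  # a resample with a constant series has no defined correlation
    stats.sort()
    lower = stats[int(alpha / 2 * len(stats))]
    upper = stats[int((1 - alpha / 2) * len(stats)) - 1]
    return lower, upper
```

Resampling whole blocks rather than single weeks preserves the short-range autocorrelation of both series within each block, which is the motivation for using a time series bootstrap instead of an ordinary one.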

Results

Identified posts

A total of 58 078 Facebook posts and 82 993 tweets were identified. Of Facebook posts, 22 331 were known to be located in the United States. A total of 38 644 tweets were known to be located in the United States. Geolocation was unavailable for 33 335 out of 58 078 (57.40%) of Facebook posts, and for 30 086 out of 82 993 (36.25%) of tweets. The earliest post matching our query occurred on June 23, 2009.

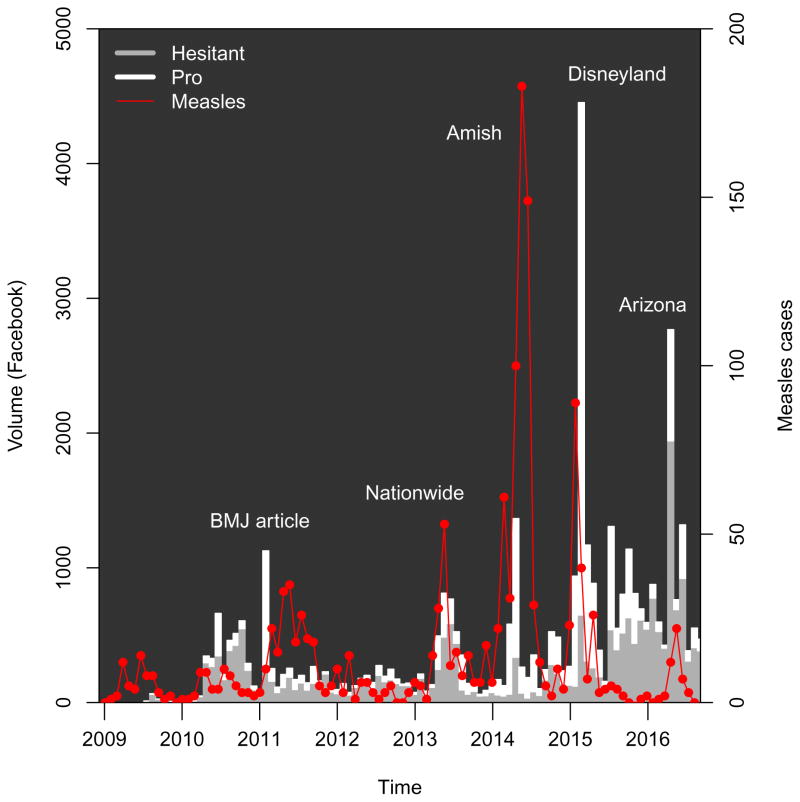

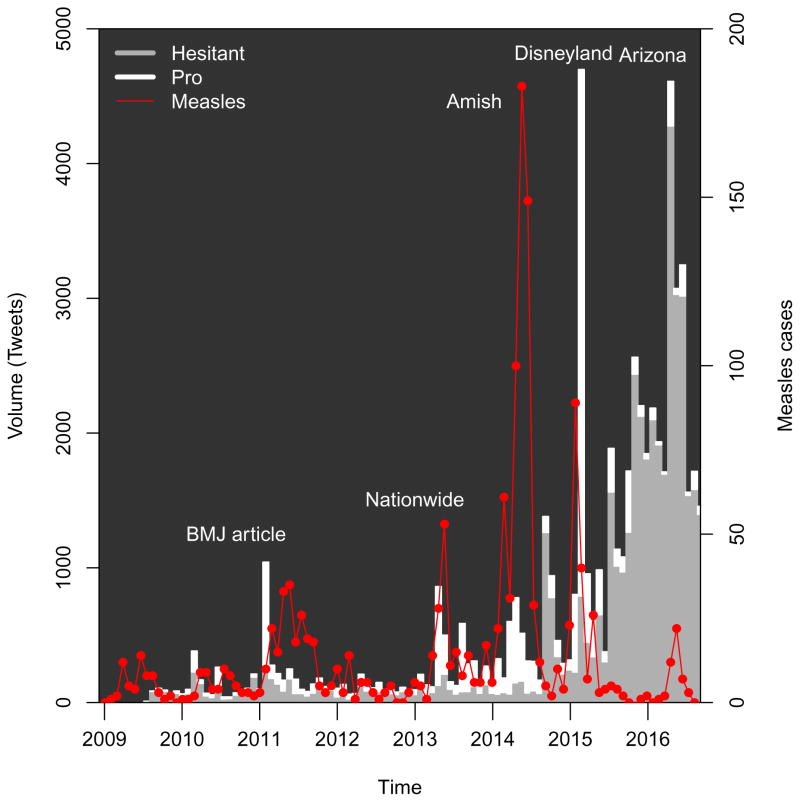

Classification

For Facebook, the algorithm classified 22 306 posts as against vaccination, a total of 17 928 posts as pro-vaccination, and 2431 posts as uncertain but on topic (“Neither” in the table). The machine algorithm classified a total of 43 259 tweets as against vaccination, a total of 16 297 tweets as pro-vaccination, and 12 222 tweets as uncertain but on topic (“Neither” in the table). The number and stance of Facebook posts and tweets classified as expressing vaccine hesitancy or expressing pro-vaccination views are shown in Figures 1 and 2.

The figures also show changes in reported measles cases over the study period. Epidemics occurring during most of 2011 were reported at the time as “the worst measles year in 15 years”, with 222 cases provisionally reported across 31 states [81]. Another epidemic period corresponds to epidemics across 16 states, including New York City during the spring and summer of 2013 [82], as well as a publicized epidemic which included a large church in Texas [83]. A third includes the outbreak in Ohio largely among Amish residents, which was also the largest outbreak post-elimination [84]. The figures also illustrate the outbreak which apparently began in Disneyland, causing approximately 130 cases in California and spreading to other states [13]. Finally, a recent epidemic period includes an outbreak in Arizona [85].

Validation

Comparison of the machine algorithm with each human rater is shown in Table 1, and agreement of the human raters with each other is shown in Table 2. The first table shows the fraction of times the human rater agreed with a machine classification of a post as being vaccine hesitant versus pro-vaccination.

Table 1.

Agreement of stance classification of social media posts by three human raters and automated algorithm. The columns show the fraction of time each of the individual raters agreed with a machine classification of vaccine hesitant versus pro-vaccination. All four possible categories were included when computing agreement.

| Platform | Machine | Total | Rater 2 | Rater 1 | Rater 3 |
|---|---|---|---|---|---|
| Facebook | Hesitant | 92 | 67.4% | 60.9% | 69.6% |
| Facebook | Pro | 92 | 59.8% | 58.7% | 64.1% |
| Twitter | Hesitant | 250 | 67.2% | 64.4% | 66.4% |
| Twitter | Pro | 106 | 72.6% | 72.6% | 71.7% |

Table 2.

Agreement of human raters with one another. For each rater (Column 2) who reached the result vaccine hesitant or pro-vaccination in Column 3, the fraction of times the other raters agreed is given in the remaining columns.

| Platform | Reference Grader | Reference Result | Rater 1 Agreement | Rater 2 Agreement | Rater 3 Agreement |
|---|---|---|---|---|---|
| Facebook | Rater 1 | Hesitant | - | 83.5% | 85.3% |
| Facebook | Rater 1 | Pro | - | 89.7% | 97.9% |
| Facebook | Rater 2 | Hesitant | 89% | - | 85% |
| Facebook | Rater 2 | Pro | 91.6% | - | 94.7% |
| Facebook | Rater 3 | Hesitant | 89.6% | 80.2% | - |
| Facebook | Rater 3 | Pro | 88.8% | 84.1% | - |
| Twitter | Rater 1 | Hesitant | - | 83.5% | 85.3% |
| Twitter | Rater 1 | Pro | - | 85.2% | 83.5% |
| Twitter | Rater 2 | Hesitant | 94.6% | - | 89.7% |
| Twitter | Rater 2 | Pro | 94.5% | - | 87.2% |
| Twitter | Rater 3 | Hesitant | 86.4% | 80.3% | - |
| Twitter | Rater 3 | Pro | 86.9% | 81.7% | - |

Social media and measles surveillance

For the total Facebook post volume identified by our search and the weekly measles reports, we found that the Spearman rank correlation was 0.11 (95% CI: −0.02 to 0.22). For the number of pro-vaccination Facebook posts, the correlation with the weekly reports was 0.22 (95% CI: 0.09 to 0.34). For the number of Facebook posts expressing vaccine hesitancy, the correlation with the weekly reports was 0.01 (95% CI: −0.13 to 0.14).

For the total tweet volume identified by our search and the weekly measles reports, we found that the Spearman rank correlation was 0.06 (95% CI: −0.08 to 0.18, time series bootstrap). For the number of pro-vaccination tweets, the correlation with the weekly reports was 0.21 (95% CI: 0.06 to 0.34). For the number of tweets expressing vaccine hesitancy, the correlation with the weekly reports was 0.0011 (95% CI: −0.12 to 0.12). Correlations with the differenced cumulative series and for Facebook are shown in Table 3.

Table 3.

Correlation of social media stance with reported US measles cases, 2009–2016. Posts on Twitter and Facebook were classified by the BrightView classifier (Crimson Hexagon) as indicated in the text. Both CDC weekly reported cases and the increase in the cumulative case count were used (see text for details). Spearman rank correlations are reported, with confidence intervals derived from time series bootstrap.

| Medium | Classification | Weekly measles | Differenced cumulative measles |
|---|---|---|---|
| Facebook | Total | 0.11 (−0.02 to 0.22) | 0.15 (−0.02 to 0.31) |
| Facebook | Pro | 0.22 (0.09 to 0.34) | 0.28 (0.13 to 0.41) |
| Facebook | Hesitant | 0.01 (−0.13 to 0.14) | 0.04 (−0.13 to 0.22) |
| Twitter | Total | 0.06 (−0.08 to 0.18) | 0.06 (−0.11 to 0.22) |
| Twitter | Pro | 0.21 (0.06 to 0.34) | 0.27 (0.11 to 0.41) |
| Twitter | Hesitant | 0.0011 (−0.12 to 0.12) | −0.02 (−0.18 to 0.14) |

We also conducted the same analysis restricting the posts to those known to be in the United States. Because geolocation information for Facebook was almost entirely unavailable after March 2015, we restricted the analysis of US-based Facebook posts to the period ending March 31, 2015. As before, we found significant Spearman correlation between the weekly case counts and the frequency of posts classified as pro-vaccination (0.269; 95% CI: 0.17 to 0.41), but not for those posts classified as vaccine hesitant (0.0406; 95% CI: −0.14 to 0.24). Similar results were found for the frequency of tweets (for which unavailability of geolocation did not mandate restriction to the period ending March 31, 2015): the Spearman correlation between the weekly case counts and the frequency of tweets classified as pro-vaccination was 0.196 (95% CI: 0.06 to 0.34), while for those classified as vaccine hesitant, we found 0.00111 (95% CI: −0.12 to 0.12).

In the analyses above, we used a dataset filtered to focus on individuals’ original expressions (that is, excluding retweets, URLs, and presumed advertisements). To compare results of that more focused corpus to the broader conversation, we conducted a sensitivity analysis based on a broader query in which we did not remove retweets, dollar signs, or URLs (see Appendix for details). For this broader query, similar results were obtained, though corpus volumes were larger. We found a Spearman correlation between weekly case counts and non-vaccine hesitant tweets of 0.173 (95% CI: 0.05 to 0.28). The Spearman correlation between weekly case counts and vaccine hesitant tweets was 0.0804 (95% CI: −0.05 to 0.2). Similar findings were obtained for Facebook, and for the differenced cumulative series (not shown). In all cases, non-vaccine hesitant tweets and posts showed a greater relationship with measles case counts than was seen for vaccine hesitant tweets.

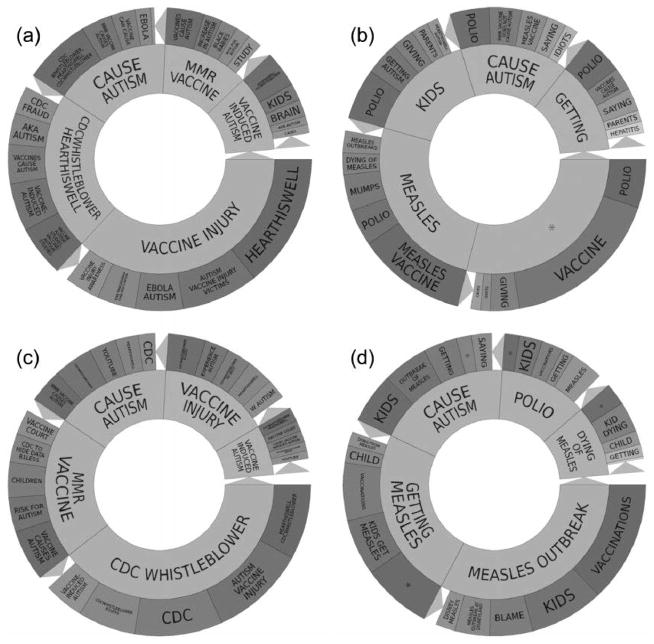

As a further sensitivity analysis, we also examined the proportion of pro-vaccination tweets and tweets expressing vaccine hesitancy in the weeks we classified as being in an outbreak or not being in an outbreak. The classification of weeks as corresponding to an outbreak or not is provided in the Appendix, based on the two CDC measles time series. For Facebook, the odds ratio for a given post being pro versus hesitant during an outbreak was 3.2 compared with a non-outbreak week (95% CI: 1.21 to 6.62). We found that for Twitter, the odds ratio for a given tweet being pro versus hesitant during an outbreak was 4.3 compared with a non-outbreak week (95% CI: 1.28 to 12.2). We also used the two series to define epidemic or outbreak periods nationally; details are provided in the Appendix. Note that in general, an “epidemic” or “outbreak” simply indicates more than the usual number of cases, and no unambiguous definition is possible. Topic wheels for vaccine hesitant and non-vaccine hesitant Twitter posts prior to and during the 2014–2015 outbreak are provided in the Appendix.
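For readers unfamiliar with the measure, the odds ratio used here can be written as a one-line computation. The counts in the example are hypothetical and chosen only to make the arithmetic easy to follow; they are not the study data, and the function name is an assumption. The reported confidence intervals came from the time series bootstrap, which this sketch does not reproduce.

```python
def odds_ratio(pro_outbreak, hesitant_outbreak, pro_other, hesitant_other):
    """Odds of a post being pro-vaccination (versus hesitant) in outbreak
    weeks, divided by the same odds in non-outbreak weeks."""
    return (pro_outbreak / hesitant_outbreak) / (pro_other / hesitant_other)


# Hypothetical counts: 300 pro and 100 hesitant posts in outbreak weeks,
# 200 pro and 200 hesitant posts in non-outbreak weeks.
example = odds_ratio(300, 100, 200, 200)  # (300/100) / (200/200) = 3.0
```

An odds ratio above 1 indicates that pro-vaccination posts make up a larger share of the pro-versus-hesitant conversation during outbreak weeks than during other weeks.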

Excluding the year 2009, for which there is a low volume, we find an overall Spearman rank correlation of the total on-topic tweets with the weekly reported cases of 0.04 (95% CI: −0.12 to 0.19). We found an overall Spearman rank correlation of the total pro-vaccination tweets with the weekly reported cases of 0.2 (95% CI: 0.04 to 0.34), while the Spearman rank correlation of the total vaccine hesitant tweets with the weekly reported cases was −0.05 (95% CI: −0.2 to 0.09).

Additionally, we computed the Spearman correlation of the measles time series with total tweet volume matching our query, tweets classified as pro-vaccination, and tweets classified as vaccine hesitant, but with the additional step of excluding all accounts which individually contributed more than 0.2% (chosen arbitrarily) of the total identified tweets; high-volume accounts may contain contributions from automated scripts. We found an overall Spearman rank correlation of the total on-topic tweets with the weekly reported cases of 0.07 (95% CI: −0.06 to 0.21). We found an overall Spearman rank correlation of the total pro-vaccination tweets with the weekly reported cases of 0.2 (95% CI: 0.05 to 0.34), while the overall Spearman rank correlation of the total vaccine hesitant tweets with the weekly reported cases was 0.01 (95% CI: −0.11 to 0.13).
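The exclusion of high-volume accounts amounts to a simple frequency filter, sketched below. The `(author, text)` record layout and the function name are hypothetical; the 0.2% cutoff is the one stated in the text.

```python
from collections import Counter


def drop_high_volume_accounts(tweets, max_share=0.002):
    """Remove every tweet from accounts that individually contributed more
    than `max_share` of all identified tweets.

    tweets: list of (author, text) records (hypothetical layout).
    """
    counts = Counter(author for author, _ in tweets)
    limit = max_share * len(tweets)
    return [(author, text) for author, text in tweets if counts[author] <= limit]
```

Weekly volumes would then be recomputed from the filtered list before the correlations are repeated.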

When we removed tweets from accounts identified as having produced a highly repetitive tweet (see Methods section), the number of tweets classified as pro-vaccination decreased from 16 297 to 13 029, while the number of tweets classified as vaccine hesitant decreased from 43 259 to 13 462. When correlating the weekly number of remaining pro-vaccination and vaccine hesitant tweets with the reported weekly case series, we found that for the remaining pro-vaccination tweets, the Spearman correlation was 0.208 (95% CI: 0.059 to 0.339), while for the remaining vaccine-hesitant tweets, the Spearman correlation was 0.0092 (95% CI: −0.11 to 0.131).

Discussion

Social media have, in a few years, gained pervasive importance in many fields [86], and have begun to change healthcare [87] and healthcare research [88] as well. Social media can be used to assess public discussions of health related issues and behaviors (such as vaccination) and as a vehicle for disseminating public health information, health promotion messages, or other evidence-based interventions (e.g. [41,89–91]). By contrast, social media may also be a vehicle for the dissemination of views not supported by evidence [51,92].

We classified social media posts related to measles as vaccine hesitant or not, and compared the frequency of such responses with the occurrence of measles in the US. Across the two social media platforms we examined, the proportion of pro-vaccination versus vaccine-hesitant posts, at times, depended upon the presence of an outbreak. For the corpus we identified, posts classified as vaccine-hesitant occurred more regularly on the topic of vaccinations and/or measles than those classified as pro-vaccination. However, during a reported outbreak, pro-vaccination posts overtook the number of vaccine-hesitant posts, a feature which was attenuated in the final year. During these online discussions, vaccine hesitant individuals may call into question the safety and efficacy of vaccines, while non-vaccine hesitant individuals criticize and blame those who choose not to vaccinate for the recent outbreaks in vaccine-preventable diseases and for putting immunocompromised and vulnerable populations at risk for these diseases [93]. Our work differs from prior work in that we examined the relationship between volume and stance of the conversation and the occurrence of measles itself, as well as the comparison of Twitter and Facebook. It is possible that online discussions are driven in part by media coverage of outbreaks, an effect not captured by our classification of weeks based only on reported case series. Our findings are consistent with a simple view: many individuals who promote vaccine hesitancy are frequently or continually engaged in online discussions of vaccination, while non-vaccine hesitant individuals on the whole engage in the debate when current events (such as a measles outbreak) bring measles to public awareness.

Our results suggest an ongoing substantial presence of vaccine hesitancy and vaccination opposition in social media, contrasted with sporadic involvement by individuals favoring vaccination. What effect could this social media involvement be yielding? While many factors affect message credibility [94–96], of particular concern is the potential importance of bandwagon effects in a social media setting [96]. Is it possible that the large volume of social media content in opposition to vaccines can contribute to such an effect? If so, strategies to increase social media engagement by institutions and individuals in favor of vaccination should be developed, urgently. It may be valuable for medical and public health practitioners to ask not only how they may use social media effectively, but how they may empower or encourage social media activism by advocates of evidence-based positions (such as vaccination).

Categorizing social media posts requires inferring a user’s stance from a user’s post. For example, tweets were capped at 140 characters, which may have forced users to use nonstandard abbreviations or phrases that either lack clear context or are poorly phrased. Such texts may be harder to interpret, whether by machine or human. Our machine classification appears to have underestimated the number of pro-vaccination posts, when compared to human raters. This potentially results in underestimating the correlation of pro-vaccination post frequency with outbreak data. We also note that by correlating US measles cases to all available posts, regardless of geocoding, we potentially weaken the effect and bias the analysis toward the null hypothesis, an effect perhaps partially offset by international coverage of the 2015 measles outbreak (e.g. [97]). In addition, we observe that an article critical of Wakefield’s research [98] appears to have caused a large number of pro-vaccination posts, even though no outbreak was occurring. This effect would also dilute the correlation of pro-vaccination posts and outbreak counts. Our use of the BrightView classifier appears to have achieved substantial agreement with the humans, but to have fallen short of human-level performance in its classifications; training on a larger corpus with a more nuanced classification may improve our ability to assess sentiment and stance [99]. Finally, our study was descriptive and retrospective; no predictions of future social media behavior were ventured.

Figure 1.

Facebook volume matching query, by stance, 2009–2016, according to classification by BrightView, as described in the text. Measles cases are shown by the differenced cumulative series derived from CDC reports, as described in the text. Facebook volume and reported measles cases shown in consecutive periods of four weeks, with a final period consisting of 3 weeks. BMJ article indicates Deer, 2011. Additional details on the measles outbreaks are given in the Appendix. Source: Crimson Hexagon, CDC.

Figure 2.

Tweet volume matching query, by stance, 2009–2016, according to classification by BrightView, as described in the text. Measles cases are shown by the differenced cumulative series derived from CDC reports, as described in the text. Tweet volume and reported measles cases shown in consecutive periods of four weeks, with a final period consisting of 3 weeks. Additional details on the measles outbreaks are given in the Appendix. Source: Crimson Hexagon, CDC.

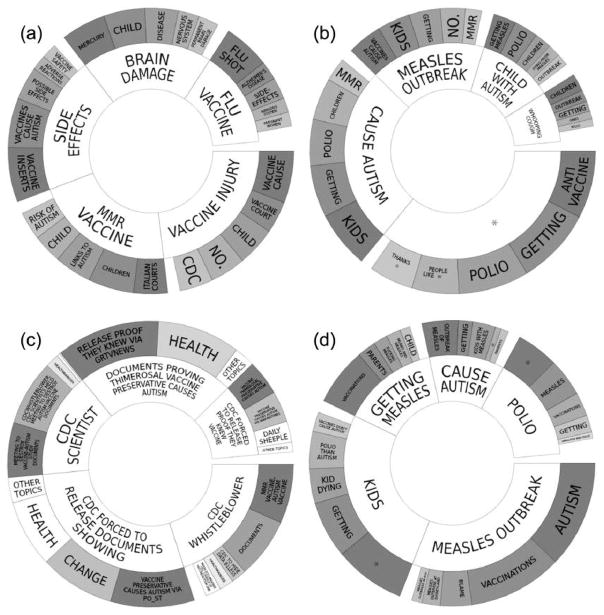

Figure 3.

(a) Topic wheel for posts expressing vaccine hesitancy on Facebook, 23 June 2009 to 27 August 2016, derived from Crimson Hexagon. (b) Topic wheel for non-vaccine-hesitant Facebook posts in our corpus, 23 June 2009 to 27 August 2016, derived from Crimson Hexagon. (c) Topic wheel for tweets expressing vaccine hesitancy, 23 June 2009 to 27 August 2016, derived from Crimson Hexagon. (d) Topic wheel for non-vaccine-hesitant tweets in our corpus, 23 June 2009–27 August 2016, derived from Crimson Hexagon. (*redacted specific famous celebrity’s name).

Figure 4.

(a) Topic wheel for tweets expressing vaccine hesitancy during the non-outbreak period 14 September 2014 to 15 November 2014, derived from Crimson Hexagon. (b) Topic wheel for tweets in our corpus not expressing vaccine hesitancy during the non-outbreak period 14 September 2014 to 14 November 2014, derived from Crimson Hexagon. (c) Topic wheel for tweets expressing vaccine hesitancy during the outbreak period 30 November 2014 to 30 May 2015, derived from Crimson Hexagon. (d) Topic wheel for tweets in our corpus not expressing vaccine hesitancy during the outbreak period 30 November 2014–30 May 2015, derived from Crimson Hexagon. (*redacted specific famous celebrity’s name).

Acknowledgments

TCP and TML gratefully acknowledge support from the US NIH NIGMS MIDAS program, 1-U01-GM087728, and TCP, TML, and MD from the US NIH NEI, 1-R01-EY024608. SFA acknowledges support from the US NIH NIGMS (grant number F31GM120985). CF acknowledges support from the Ingram Alumni Fund, Vanderbilt University. The authors thank Byron Wallace for useful discussions. The funders/sponsors played no role in hypothesis generation, review, approval, or decision to publish.

Appendix

Query

Our Crimson Hexagon search query is as follows:

(autism OR mercury OR thimerosal OR wakefield OR mccarthy OR immigrant OR obama OR #vax) AND (vaccine OR measles OR “MMR vaccine” OR sb40 OR polio OR chickenpox OR hepatitis OR “mmr shot”) AND -$ AND -http AND -RT

To target conversations between individuals more effectively, the primary analysis excluded posts containing dollar signs or the string http, as well as retweets. This reduces the number of posts that consist of advertisements, forwarded web links, or retweeted content. To examine the role of retweets and other forwarded content, we conducted a second, broader query, identical to the first except that it no longer excluded dollar signs, http, or retweets. Counts (not post content) were extracted separately with and without these restrictions.
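The boolean structure of the query can be sketched as a simple predicate. This is only an illustration of the logic, not Crimson Hexagon's matching engine: the function names (`has_term`, `matches_query`), the case-insensitive whole-term matching rule, and the treatment of `RT` as a standalone token are all assumptions made for this sketch.

```python
import re

# Term groups copied from the query above (lower-cased for matching).
GROUP_A = ["autism", "mercury", "thimerosal", "wakefield",
           "mccarthy", "immigrant", "obama", "#vax"]
GROUP_B = ["vaccine", "measles", "mmr vaccine", "sb40",
           "polio", "chickenpox", "hepatitis", "mmr shot"]


def has_term(text, term):
    """Assumed rule: case-insensitive match of the whole term,
    not embedded inside a longer word."""
    pattern = r"(?<!\w)" + re.escape(term) + r"(?!\w)"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None


def matches_query(text, restricted=True):
    """Return True if a post satisfies (group A) AND (group B).

    With restricted=True (primary analysis), posts containing '$',
    'http', or a standalone 'RT' token are excluded. With
    restricted=False, the broader second query is mimicked.
    """
    if not any(has_term(text, t) for t in GROUP_A):
        return False
    if not any(has_term(text, t) for t in GROUP_B):
        return False
    if restricted:
        if "$" in text or "http" in text.lower() or has_term(text, "RT"):
            return False
    return True
```

Calling `matches_query(post)` corresponds to the primary query and `matches_query(post, restricted=False)` to the broader one; how the platform actually tokenizes posts or handles word variants is not described in the text.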

Classification

Topic wheels (produced within the Crimson Hexagon platform) illustrate the classification of posts into pro-vaccination posts and posts which express vaccine hesitancy.

We also include topic wheels showing topics within posts during the period before and during the 2014–2015 outbreak, for posts classified as vaccine hesitant and non-vaccine hesitant.

Outbreak periods

To identify the start and end of national outbreak periods in a consistent manner across the study period, we used cumulative case reports (which are more complete than the weekly counts). Specifically, we removed outliers (reporting errors) using a five-week median smooth. Epidemics then appear as peaks in the first-differenced cumulative series. The average weekly increase in this cumulative count is approximately 3.6 cases per week; we set the epidemic threshold at 250% of this, or a rise of 9 cases in a week. Weeks in which the cumulative count rose by nine or more cases were assumed to fall within a candidate epidemic or outbreak; for any such week, we also included the four previous weeks and the three following weeks, the asymmetry reflecting the fact that the cumulative counts contain some reporting delay. This yielded seven candidate epidemics based on the cumulative count series. Two of these contained fewer than four cases in the weekly reported counts and were excluded. The procedure was conducted by a team member masked to the time series of tweets and Facebook posts, and yielded the five epidemic periods (see Table 4) discussed in the text.
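The steps above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the code used in the study: the function names are hypothetical, the median window is assumed to be centered (truncated at the ends of the series), the cumulative series is assumed not to reset at year boundaries, and overlapping windows are assumed to merge into a single candidate period.

```python
from statistics import median


def median_smooth(series, window=5):
    """Running median over a centered window (assumed centered;
    the window is truncated at the ends of the series)."""
    half = window // 2
    return [median(series[max(0, i - half): i + half + 1])
            for i in range(len(series))]


def candidate_periods(cumulative, threshold=9, before=4, after=3):
    """Flag weeks whose smoothed cumulative count rose by at least
    `threshold`, extend each by `before`/`after` weeks, and merge
    adjacent flagged weeks into (start, end) index pairs, inclusive."""
    smoothed = median_smooth(cumulative)
    # diffs[i] is the rise from week i-1 to week i (0 for the first week).
    diffs = [0] + [b - a for a, b in zip(smoothed, smoothed[1:])]
    n = len(diffs)
    flagged = set()
    for week, rise in enumerate(diffs):
        if rise >= threshold:
            flagged.update(range(max(0, week - before),
                                 min(n, week + after + 1)))
    periods = []
    for week in sorted(flagged):
        if periods and week == periods[-1][1] + 1:
            periods[-1][1] = week      # extend the current period
        else:
            periods.append([week, week])  # start a new period
    return [tuple(p) for p in periods]


def confirmed_periods(periods, weekly_cases, min_cases=4):
    """Drop candidate periods with fewer than `min_cases` cases in the
    weekly reported counts."""
    return [(s, e) for s, e in periods
            if sum(weekly_cases[s:e + 1]) >= min_cases]
```

The default threshold of 9 is the value stated in the text (250% of roughly 3.6 cases per week). The median smooth is what keeps an isolated reporting spike in the cumulative series from being mistaken for an outbreak week.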

Table 4.

Start and end dates for outbreak periods as defined in the Appendix. The total number of weekly cases during the period is shown, along with the total change in the cumulative case count as reported by the CDC.

| Outbreak period | Start | End | Total Weekly Cases |
|---|---|---|---|
| 1 | February 20, 2011 | October 15, 2011 | 82 |
| 2 | March 31, 2013 | July 6, 2013 | 21 |
| 3 | January 26, 2014 | September 13, 2014 | 401 |
| 4 | November 30, 2014 | May 30, 2015 | 46 |
| 5 | April 17, 2016 | June 11, 2016 | 18 |

Footnotes

Conflicts of Interest

Financial disclosures. The authors have no financial conflicts of interest.

References

- 1.Henderson DA, Dunston FJ, Fedson DS, et al. The measles epidemic: The problems, barriers, and recommendations. JAMA. 1991;266:1547–1552. [PubMed] [Google Scholar]

- 2.Sabella C. Measles: not just a childhood rash. Clevel Clin J Medicine. 2010;77:207–213. doi: 10.3949/ccjm.77a.09123. [DOI] [PubMed] [Google Scholar]

- 3.Perry RT, Halsey NA. The clinical significance of measles: a review. J Infect Dis. 2004;189(Suppl 1):S4–S16. doi: 10.1086/377712. [DOI] [PubMed] [Google Scholar]

- 4.Moss WJ, Griffin DE. Measles. Lancet. 2012;379:153–164. doi: 10.1016/S0140-6736(10)62352-5. [DOI] [PubMed] [Google Scholar]

- 5.Committee on Infectious Diseases. Red Book 2015. Report of the Committee on Infectious Diseases. American Academy of Pediatrics; 2015. [Google Scholar]

- 6.Lessler J, Reich NG, Brookmeyer R, Perl TM, Nelson KE, Cummings DAT. Incubation periods of acute respiratory viral infections: a systematic review. Lancet Infect Dis. 2009;9:291–300. doi: 10.1016/S1473-3099(09)70069-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Gutierrez J, Isaacson RS, Koppel BS. Subacute sclerosing panencephalitis: an update. Dev Medicine Child Neurol. 2010;52:901–907. doi: 10.1111/j.1469-8749.2010.03717.x. [DOI] [PubMed] [Google Scholar]

- 8.Black FL. Measles active and passive immunity in a worldwide perspective. Prog Med Virol. 1989;36:1–33. [PubMed] [Google Scholar]

- 9.Markowitz LE, Preblud SR, Fine PE, Orenstein WA. Duration of live measles vaccine-induced immunity. Pediatr Infect Dis J. 1990;9:101–110. doi: 10.1097/00006454-199002000-00008. [DOI] [PubMed] [Google Scholar]

- 10.Uzicanin A, Zimmerman L. Field effectiveness of live attenuated measles-containing vaccines: a review of published literature. J Infect Dis. 2011;204(S1):S133–S148. doi: 10.1093/infdis/jir102. [DOI] [PubMed] [Google Scholar]

- 11.Patel MK, Gacic-Dobo M, Strebel PM, et al. Progress toward regional measles elimination—worldwide, 2000–2015. Morb Mortal Wkly Rep. 2016;65:1228–1233. doi: 10.15585/mmwr.mm6544a6. [DOI] [PubMed] [Google Scholar]

- 12.Katz SL, Hinman AR. Summary and conclusions: measles elimination meeting, 16–17 March 2000. J Infect Dis. 2004;189(Suppl 1):S43–S47. doi: 10.1086/377696. [DOI] [PubMed] [Google Scholar]

- 13.Zipprich J, Winter K, Hacker J, et al. Measles outbreak—California, December 2014–February 2015. Morb Mortal Wkly Rep. 2015;64:153–154. [PMC free article] [PubMed] [Google Scholar]

- 14.Blumberg S, Worden L, Enanoria W, et al. Assessing measles transmission in the United States following a large outbreak in California. PLOS Currents Outbreaks. 1. May 7, 2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Phadke VK, Bednarczyk RA, Salmon DA, Omer SB. Association between vaccine refusal and vaccine-preventable diseases in the United States: A review of measles and pertussis. JAMA. 2016;315:1149–1158. doi: 10.1001/jama.2016.1353. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Best M, Neuhauser D, Slavin L. “Cotton Mather, you dog, dam you! I’l inoculate you with this; with a pox to you”: Smallpox inoculation, Boston, 1721. Qual & safety health care. 2004;13:82–83. doi: 10.1136/qshc.2003.008797. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Wolfe RM, Sharp LK. Anti-vaccinationists past and present. BMJ. 2002;325:430–432. doi: 10.1136/bmj.325.7361.430. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Dyer C. Lancet retracts Wakefield’s MMR paper. BMJ. 2010;340:c696. doi: 10.1136/bmj.c696. [DOI] [PubMed] [Google Scholar]

- 19.Brown KF, Long SJ, Ramsay M, et al. U.K. parents’ decision-making about measles-mumps-rubella (MMR) vaccine 10 years after the MMR-autism controversy: a qualitative analysis. Vaccine. 2012;30:1855–1864. doi: 10.1016/j.vaccine.2011.12.127. [DOI] [PubMed] [Google Scholar]

- 20.Callender D. Vaccine hesitancy: More than a movement. Hum Vaccines Immunother. 2016;12:2464–2468. doi: 10.1080/21645515.2016.1178434. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Larson HJ, Cooper LZ, Eskola J, Katz SL, Ratzan S. Addressing the vaccine confidence gap. Lancet. 2011;378:526–535. doi: 10.1016/S0140-6736(11)60678-8. [DOI] [PubMed] [Google Scholar]

- 22.McClure CC, Cataldi JR, O’Leary ST. Vaccine hesitancy: Where we are and where we are going. Clin Ther. 2017 Aug;39(8):1550–1562. doi: 10.1016/j.clinthera.2017.07.003. Epub ahead of print 31 July 2017. [DOI] [PubMed] [Google Scholar]

- 23.Hill HA, Elam-Evans LD, Yankey D, Singleton JA, Dietz V. Vaccination coverage among children aged 19–35 months—United States, 2015. Morb Mortal Wkly Rep. 2016;65:1065–1071. doi: 10.15585/mmwr.mm6539a4. [DOI] [PubMed] [Google Scholar]

- 24.Seither R, Calhoun K, Mellerson J, et al. Vaccination coverage among children in kindergarten—United States, 2015–16 school year. Morb Mortal Wkly Rep. 2016;65:1057–1064. doi: 10.15585/mmwr.mm6539a3. [DOI] [PubMed] [Google Scholar]

- 25.Pew Research. Mobile messaging and social media 2015. Twitter demographics. http://www.pewinternet.org/2015/08/19/mobile-messaging-and-social-media-2015/2015-08-19_social-media-update_11/. Archived at http://www.webcitation.org/6m9FcIr4T.

- 26.Twitter. Twitter usage/company facts. https://about.twitter.com/company. Archived at http://www.webcitation.org/6m9FjrMn9.

- 27.Chou WS, Hunt YM, Beckjord EB, Moser RP, Hesse BW. Social media use in the United States: implications for health communication. J Med Internet Res. 2009;11:e48. doi: 10.2196/jmir.1249. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Hawn C. Take two aspirin and tweet me in the morning: how Twitter, Facebook, and other social media are reshaping health care. Heal Aff (Millwood) 2009;28:361–368. doi: 10.1377/hlthaff.28.2.361. [DOI] [PubMed] [Google Scholar]

- 29.Grajales FJ, III, Sheps S, Ho K, Novak-Lauscher H, Eysenbach G. Social media: a review and tutorial of applications in medicine and health care. J Med Internet Res. 2014;16:e13. doi: 10.2196/jmir.2912. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Moorhead SA, Hazlett DE, Harrison L, Carroll JK, Irwin A, Hoving C. A new dimension of health care: Systematic review of the uses, benefits, and limitations of social media for health communication. J Med Internet Res. 2013;15:e85. doi: 10.2196/jmir.1933. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Allen C, Tsou MH, Aslam A, Nagel A, Gawron JM. Applying GIS and machine learning methods to Twitter data for multiscale surveillance of influenza. PloS one. 2016;11:e0157734. doi: 10.1371/journal.pone.0157734. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Velasco E, Agheneza T, Denecke K, Kirchner G, Eckmanns T. Social media and internet-based data in global systems for public health surveillance: a systematic review. Milbank Q. 2014;92:7–33. doi: 10.1111/1468-0009.12038. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Hartley DM. Using social media and internet data for public health surveillance: the importance of talking. Milbank Q. 2014;92:34–39. doi: 10.1111/1468-0009.12039. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Keller B, Labrique A, Jain KM, Pekosz A, Levine O. Mind the gap: social media engagement by public health researchers. J Med Internet Res. 2014;16:e8. doi: 10.2196/jmir.2982. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Mollema L, Harmsen IA, Broekhuizen E, et al. Disease detection or public opinion reflection? Content analysis of tweets, other social media, and online newspapers during the measles outbreak in the Netherlands in 2013. J Med Internet Res. 2015;17:e128. doi: 10.2196/jmir.3863. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Orr D, Baram-Tsabari A, Landsman K. Social media as a platform for health-related public debates and discussions: the polio vaccine on Facebook. Isr J Heal Policy Res. 2016;5:34. doi: 10.1186/s13584-016-0093-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Deiner M, Lietman TM, McLeod SD, Chodosh J, Porco TC. Eye disease surveillance tools emerging from search and social media data: A study of temporal patterns of conjunctivitis. JAMA Ophthalmol. 2016;134:1024–1030. doi: 10.1001/jamaophthalmol.2016.2267. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Surian D, Nguyen DQ, Kennedy G, Johnson M, Coiera E, Dunn AG. Characterizing Twitter discussions about HPV vaccines using topic modeling and community detection. J Med Internet Res. 2016;18:e232. doi: 10.2196/jmir.6045. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Harris JK, Mueller NL, Snider D. Social media adoption in local health departments nationwide. Am J Public Heal. 2013;103:1700–1707. doi: 10.2105/AJPH.2012.301166. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Warren KE, Wen LS. Measles, social media and surveillance in Baltimore City. J Public Heal (Oxford) 2016 doi: 10.1093/pubmed/fdw076. to appear. [DOI] [PubMed] [Google Scholar]

- 41.Washington TA, Applewhite S, Glenn W. Using Facebook as a platform to direct young Black men who have sex with men to a video-based HIV testing intervention: a feasibility study. Urban Soc Work. 2017;1:36–52. doi: 10.1891/2474-8684.1.1.36. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Dubé E, Vivion M, MacDonald NE. Vaccine hesitancy, vaccine refusal and the anti-vaccine movement: influence, impact and implications. Expert Rev Vaccines. 2015;14:99–117. doi: 10.1586/14760584.2015.964212. [DOI] [PubMed] [Google Scholar]

- 43.Cataldi JR, Dempsey AF, O’Leary ST. Measles, the media, and MMR: Impact of the 2014–15 measles outbreak. Vaccine. 2016 doi: 10.1016/j.vaccine.2016.10.048. [DOI] [PubMed] [Google Scholar]

- 44.Powell GA, Zinszer K, Verma A, et al. Media content about vaccines in the United States and Canada, 2012–2014: An analysis using data from the Vaccine Sentimeter. Vaccine. 2016 doi: 10.1016/j.vaccine.2016.10.067. [DOI] [PubMed] [Google Scholar]

- 45.Grant L, Hausman BL, Cashion M, Lucchesi N, Patel K, Roberts J. Vaccination persuasion online: a qualitative study of two provaccine and two vaccine-skeptical websites. J Med Internet Res. 2015;17:e133. doi: 10.2196/jmir.4153. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Dunn AG, Leask J, Zhou X, Mandl KD, Coiera E. Associations between exposure to and expression of negative opinions about human papillomavirus vaccines on social media: an observational study. J Med Internet Res. 2015;17:e144. doi: 10.2196/jmir.4343. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Bahk CY, Cumming M, Paushter L, Madoff LC, Thomson A, Brownstein JS. Publicly available online tool facilitates real-time monitoring of vaccine conversations and sentiments. Heal Aff (Millwood) 2016;35:341–347. doi: 10.1377/hlthaff.2015.1092. [DOI] [PubMed] [Google Scholar]

- 48.Salathé M, Khandelwal S. Assessing vaccination sentiments with online social media: implications for infectious disease dynamics and control. PLoS Comput Biol. 2011;7:e1002199. doi: 10.1371/journal.pcbi.1002199. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Dunn AG, Surian D, Leask J, Dey A, Mandl KD, Coiera E. Mapping information exposure on social media to explain differences in HPV vaccine coverage in the United States. Vaccine. 2017;35:3033–3040. doi: 10.1016/j.vaccine.2017.04.060. [DOI] [PubMed] [Google Scholar]

- 50.Kata A. A postmodern Pandora’s box: anti-vaccination misinformation on the Internet. Vaccine. 2010;28:1709–1716. doi: 10.1016/j.vaccine.2009.12.022. [DOI] [PubMed] [Google Scholar]

- 51.Radzikowski J, Stefanidis A, Jacobsen KH, Croitoru A, Crooks A, Delamater PL. The measles vaccination narrative in Twitter: A quantitative analysis. JMIR Public Heal Surveillance. 2016;2:e1. doi: 10.2196/publichealth.5059. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Becker BF, Larson HJ, Bonhoeffer J, van Mulligen EM, Kors JA, Sturkenboom MC. Evaluation of a multinational, multilingual vaccine debate on Twitter. Vaccine. 2016 doi: 10.1016/j.vaccine.2016.11.007. [DOI] [PubMed] [Google Scholar]

- 53.Kata A. Anti-vaccine activists, Web 2.0, and the postmodern paradigm: an overview of tactics and tropes used online by the anti-vaccination movement. Vaccine. 2012;30:3778–3789. doi: 10.1016/j.vaccine.2011.11.112. [DOI] [PubMed] [Google Scholar]

- 54.Kang GJ, Ewing-Nelson SR, Mackey L, et al. Semantic network analysis of vaccine sentiment in online social media. Vaccine. 2017;35:3621–3638. doi: 10.1016/j.vaccine.2017.05.052. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Broniatowski DA, Hilyard KM, Dredze M. Effective vaccine communication during the Disneyland measles outbreak. Vaccine. 2016;34:3225–3228. doi: 10.1016/j.vaccine.2016.04.044. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Faasse K, Chatman CJ, Martin LR. A comparison of language use in pro- and anti-vaccination comments in response to a high profile Facebook post. Vaccine. 2016;34:5808–5814. doi: 10.1016/j.vaccine.2016.09.029. [DOI] [PubMed] [Google Scholar]

- 57.Massey PM, Leader A, Yom-Tov E, Budenz A, Fisher K, Klassen AC. Applying multiple data collection tools to quantify Human Papillomavirus vaccine communication on Twitter. J Med Internet Res. 2016;18:e318. doi: 10.2196/jmir.6670. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Vaccine sentimeter. http://www.healthmap.org/viss/ [Accessed 1 December 2016].

- 59.Miller M, Banerjee T, Muppalla R, Romine W, Sheth A. What are people tweeting about Zika? An exploratory study concerning its symptoms, treatment, transmission, and prevention. JMIR public health surveillance. 2017;3:e38. doi: 10.2196/publichealth.7157. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Roche B, Gaillard B, Léger L, et al. An ecological and digital epidemiology analysis on the role of human behavior on the 2014 Chikungunya outbreak in Martinique. Sci reports. 2017;7:5967. doi: 10.1038/s41598-017-05957-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Thapen N, Simmie D, Hankin C, Gillard J. DEFENDER: Detecting and Forecasting Epidemics Using Novel Data-Analytics for Enhanced Response. PloS one. 2016;11:e0155417. doi: 10.1371/journal.pone.0155417. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.McGough SF, Brownstein JS, Hawkins JB, Santillana M. Forecasting Zika incidence in the 2016 Latin America outbreak combining traditional disease surveillance with search, social media, and news report data. PLoS neglected tropical diseases. 2017;11:e0005295. doi: 10.1371/journal.pntd.0005295. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.van Lent LG, Sungur H, Kunneman FA, van de Velde B, Das E. Too far to care? Measuring public attention and fear for Ebola using Twitter. J medical Internet research. 2017;19:e193. doi: 10.2196/jmir.7219. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Mohammad SN, Kiritchenko S, Sobhani P, Zhu X, Cherry C. A dataset for detecting stance in tweets. 2016 http://saifmohammad.com/WebDocs/StanceDataset-LREC2016.pdf. Archived at http://www.webcitation.org/6mfImc9Fp.

- 65.MacDonald NE Working Group on Vaccine Hesitancy. Vaccine hesitancy: Definition, scope and determinants. Vaccine. 2015;33:4161–4164. doi: 10.1016/j.vaccine.2015.04.036. [DOI] [PubMed] [Google Scholar]

- 66.Crimson Hexagon. 2016 http://www.crimsonhexagon.com. Archived at http://www.webcitation.org/6mRCbG5Xs.

- 67.Hopkins D, King G. A method of automated nonparametric content analysis for social science. Am J Polit Sci. 2010;54:229–247. doi: 10.1111/j.1540-5907.2009.00428.x. [DOI] [Google Scholar]

- 68.Firat A, Brooks M, Bingham C, Herdagdelen A, King G. Systems and methods for calculating category proportions. 13/804,096. US Patent App. 2014 http://www.freepatentsonline.com/y2014/0012855.html. Archived at http://www.webcitation.org/6mfNftXdX.

- 69.Nugues PM. Language processing with Perl and Prolog: Theories, Implementation, and Application. 2nd ed. Berlin: Springer-Verlag; 2014. [Google Scholar]

- 70.Measles 2013 Case Definition. https://wwwn.cdc.gov/nndss/conditions/measles/case-definition/2013/. Archived at http://www.webcitation.org/6m9FGrurN.

- 71.Measles Serology: Lab Tools, CDC. http://www.cdc.gov/measles/lab-tools/serology.html. Archived at http://www.webcitation.org/6m9FPRSNw.

- 72.Helfand RF, Heath JL, Anderson LJ, Maes EF, Guris D, Bellini WJ. Diagnosis of measles with an IgM capture EIA: the optimal timing of specimen collection after rash onset. J Infect Dis. 1997;175:195–199. doi: 10.1093/infdis/175.1.195. [DOI] [PubMed] [Google Scholar]

- 73.Ratnam S, Tipples G, Head C, Fauvel M, Fearon M, Ward BJ. Performance of indirect immunoglobulin M (IgM) serology tests and IgM capture assays for laboratory diagnosis of measles. J Clin Microbiol. 2000;38:99–104. doi: 10.1128/jcm.38.1.99-104.2000. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Perry KR, Brown DWG, Parry JV, Panday S, Pipkin C, Richards A. Detection of measles, mumps, and rubella antibodies in saliva using antibody capture radioimmunoassay. J medical virology. 1993;40:235–240. doi: 10.1002/jmv.1890400312. [DOI] [PubMed] [Google Scholar]

- 75.Fulginiti VA, Eller JJ, Downie AW, Kempe CH. Altered reactivity to measles virus: atypical measles in children previously immunized with inactivated measles virus vaccines. JAMA. 1967;202:1075–1080. doi: 10.1001/jama.202.12.1075. [DOI] [PubMed] [Google Scholar]

- 76.Matsuzono Y, Narita M, Ishiguro N, Togashi T. Detection of measles virus from clinical samples using the polymerase chain reaction. Arch pediatrics & adolescent medicine. 1994;148:289–293. doi: 10.1001/archpedi.1994.02170030059014. [DOI] [PubMed] [Google Scholar]

- 77.Nakayama T, Mori T, Yamaguchi S, et al. Detection of measles virus genome directly from clinical samples by reverse transcriptase-polymerase chain reaction and genetic variability. Virus research. 1995;35:1–16. doi: 10.1016/0168-1702(94)00074-m. [DOI] [PubMed] [Google Scholar]

- 78.Jayant NS. Average- and median-based smoothing techniques for improving digital speech quality in the presence of transmission errors. IEEE Transactions on communications. 1976;24:1043–1045. doi: 10.1109/TCOM.1976.1093415. [DOI] [Google Scholar]

- 79.Sobo EJ, Huhn A, Sannwald A, Thurman L. Information curation among vaccine cautious parents: Web 2.0, Pinterest thinking, and pediatric vaccination choice. Med Anthropol. 2016;35:529–546. doi: 10.1080/01459740.2016.1145219. [DOI] [PubMed] [Google Scholar]

- 80.Kreiss J, Lahiri SN. Bootstrap methods for time series. In: Rao TS, Rao SS, Rao CR, editors. Time Series Analysis: Methods and Applications. Oxford: North-Holland (Elsevier); 2012. pp. 3–26. [Google Scholar]

- 81.CDC. Measles—United States, 2011. Morb Mortal Wkly Rep. 2012;61:253–257. [PubMed] [Google Scholar]

- 82.CDC. Measles—United States, January 1–August 24, 2013. Morb Mortal Wkly Rep. 2013;62:741–743. [PMC free article] [PubMed] [Google Scholar]

- 83.Le Tellier A. Only after measles outbreak does Texas megachurch support vaccines. Los Angeles Times. 2013 Archived at http://www.webcitation.org/6m9FVocJG.

- 84.Gastañaduy PA, Redd SB, Fiebelkorn AP, et al. Measles—United States, January 1–May 23, 2014. Morb Mortal Wkly Rep. 2014;63:496–499. [PMC free article] [PubMed] [Google Scholar]

- 85.Arizona Department of Health Services. Nation’s largest measles outbreak of the year ends. 2016 Aug 8; http://www.azdhs.gov/director/public-information-office/index.php#news-release-080816. Archived at http://www.webcitation.org/6m9DT5zOr.

- 86.Fuchs C. Social Media: A Critical Introduction. London: SAGE; 2017. [Google Scholar]

- 87.Hors-Fraile S, Atique S, Mayer MA, Denecke K, Merolli M, Househ M. The unintended consequences of social media in healthcare: New problems and new solutions. Yearb medical informatics. 2016:47–52. doi: 10.15265/IY-2016-009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 88.Sinnenberg L, Buttenheim AM, Padrez K, Mancheno C, Ungar L, Merchant RM. Twitter as a tool for health research: A systematic review. Am J Public Heal. 2017;107:e1–e8. doi: 10.2105/AJPH.2016.303512. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 89.Mita G, Mhurchu N, Jull A. Effectiveness of social media in reducing risk factors for noncommunicable diseases: a systematic review and meta-analysis of randomized controlled trials. Nutr Rev. 2016;74:237–247. doi: 10.1093/nutrit/nuv106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 90.Balatsoukas P, Kennedy CM, Buchan I, Powell J, Ainsworth J. The role of social network technologies in online health promotion: A narrative review of theoretical and empirical factors influencing intervention effectiveness. J Med Internet Res. 2015;17:e141. doi: 10.2196/jmir.3662. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 91.Isaac BM, Zucker JR, MacGregor J, et al. Use of social media as a communication tool during a mumps outbreak—New York City, 2015. Morb Mortal Wkly Rep. 2017;66:60–61. doi: 10.15585/mmwr.mm6602a5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 92.Oyeyemi SO, Gabarron E, Wynn R. Ebola, Twitter, and misinformation: a dangerous combination? BMJ. 2014;349:g6178. doi: 10.1136/bmj.g6178. [DOI] [PubMed] [Google Scholar]

- 93.Guidry JP, Carlyle K, Messner M, Jin Y. On pins and needles: how vaccines are portrayed on Pinterest. Vaccine. 2015;33:5051–5056. doi: 10.1016/j.vaccine.2015.08.064. [DOI] [PubMed] [Google Scholar]

- 94.Westerman D, Spence PR, van der Heide B. Social media as information source: recency of updates and credibility of information. J Comput Commun. 2014;19:171–183. [Google Scholar]

- 95.Lachlan KA, Spence PR, Edwards A, Reno KM, Edwards C. If you are quick enough, I will think about it: Information speed and trust in public health organizations. Comput Hum Behav. 2014;33:377–380. [Google Scholar]

- 96.Lin X, Spence PR, Lachlan KA. Social media and credibility indicators: The effect of influence cues. Comput Hum Behav. 2016;63:264–271. [Google Scholar]

- 97.Lesnes C. Aux Etats-Unis, la rougeole devient une maladie de riches. Le Monde; Oct 2, 2015. [accessed 31 October 2017]. Archived at http://www.lemonde.fr/ameriques/article/2015/02/10/aux-etats-unis-la-rougeole-devientune-maladie-de-riches_4573604_3222.html. [Google Scholar]

- 98.Deer B. How the case against the MMR vaccine was fixed. BMJ. 2011;342:c5347. doi: 10.1136/bmj.c5347. [DOI] [PubMed] [Google Scholar]

- 99.Du J, Xu J, Song H, Liu X, Tao C. Optimization on machine learning based approaches for sentiment analysis on HPV vaccines related tweets. J biomedical semantics. 2017;8:9. doi: 10.1186/s13326-017-0120-6. [DOI] [PMC free article] [PubMed] [Google Scholar]