Abstract

Random backpropagation (RBP) is a variant of the backpropagation algorithm for training neural networks, where the transposes of the forward matrices are replaced by fixed random matrices in the calculation of the weight updates. It is remarkable both because of its effectiveness, in spite of using random matrices to communicate error information, and because it completely removes the taxing requirement of maintaining symmetric weights in a physical neural system. To better understand random backpropagation, we first connect it to the notions of local learning and learning channels. Through this connection, we derive several alternatives to RBP, including skipped RBP (SRBP), adaptive RBP (ARBP), sparse RBP, and their combinations (e.g. ASRBP), and analyze their computational complexity. We then study their behavior through simulations using the MNIST and CIFAR-10 benchmark datasets. These simulations show that most of these variants work robustly, almost as well as backpropagation, and that multiplication by the derivatives of the activation functions is important. As a follow-up, we also study the low end of the number of bits required to communicate error information over the learning channel. We then provide partial intuitive explanations for some of the remarkable properties of RBP and its variations. Finally, we prove several mathematical results, including the convergence to fixed points of linear chains of arbitrary length, the convergence to fixed points of linear autoencoders with decorrelated data, the long-term existence of solutions for linear systems with a single hidden layer and convergence in special cases, and the convergence to fixed points of non-linear chains, when the derivative of the activation functions is included.

1 Introduction

Over the years, the question of biological plausibility of the backpropagation algorithm, implementing stochastic gradient descent in neural networks, has been raised several times. The question has gained further relevance due to the numerous successes achieved by backpropagation in a variety of problems ranging from computer vision [21, 31, 30, 14] to speech recognition [12] in engineering, and from high energy physics [7, 26] to biology [8, 32, 1] in the natural sciences, as well as to recent results on the optimality of backpropagation [6]. There are, however, several well-known issues facing biological neural networks in relation to backpropagation. These include: (1) the continuous real-valued nature of the gradient information and its ability to change sign, violating Dale’s Law; (2) the need for some kind of teacher’s signal to provide targets; (3) the need for implementing all the linear operations involved in backpropagation; (4) the need for multiplying the backpropagated signal by the derivatives of the forward activations each time a layer is traversed; (5) the need for precise alternation between forward and backward passes; and (6) the complex geometry of biological neurons and the problem of transmitting error signals with precision down to individual synapses. However, perhaps the most formidable obstacle is that the standard backpropagation algorithm requires propagating error signals backwards using synaptic weights that are identical to the corresponding forward weights. Furthermore, a related problem that has not been sufficiently recognized is that this weight symmetry must be maintained at all times during learning, and not just during early neural development. It is hard to imagine mechanisms by which biological neurons could both create and maintain such perfect symmetry.
However, recent simulations [24] surprisingly indicate that such symmetry may not be required after all, and that in fact backpropagation works more or less as well when random weights are used to backpropagate the errors. Our general goal here is to investigate backpropagation with random weights and better understand why it works.

The foundation for better understanding random backpropagation (RBP) is provided by the concepts of local learning and deep learning channels introduced in [6]. Thus we begin by introducing the notations and connecting RBP to these concepts. In turn, this leads to the derivation of several alternatives to RBP, which we study through simulations on well known benchmark datasets before proceeding with more formal analyses.

2 Setting, Notations, and the Learning Channel

Throughout this paper, we consider layered feedforward neural networks and supervised learning tasks. We will denote such an architecture by

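$$\mathcal{A}[N_0, \ldots, N_h, \ldots, N_L] \tag{1}$$

where $N_0$ is the size of the input layer, $N_h$ is the size of hidden layer $h$, and $N_L$ is the size of the output layer. We assume that the layers are fully connected and let $w_{ij}^h$ denote the weight connecting neuron $j$ in layer $h-1$ to neuron $i$ in layer $h$. The output of neuron $i$ in layer $h$ is computed by:

$$O_i^h = f_i^h(S_i^h) \quad \text{where} \quad S_i^h = \sum_j w_{ij}^h O_j^{h-1} \tag{2}$$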

The transfer functions $f_i^h$ are usually the same for most neurons, with typical exceptions for the output layer, and are usually monotonically increasing. The most typical functions used in artificial neural networks are the identity, logistic, hyperbolic tangent, rectified linear, and softmax functions.

We assume that there is a training set of M examples consisting of input and output-target pairs (I(t), T(t)), with t = 1, …, M. $I_i(t)$ refers to the i-th component of the t-th input training example, and similarly for the target $T_i(t)$. In addition, there is an error function ℰ to be minimized by the learning process. In general we will assume standard error functions such as the squared error in the case of regression and identity transfer functions in the output layer, or relative entropy in the case of classification with logistic (single class) or softmax (multi-class) units in the output layer, although this is not an essential point.

While we focus on supervised learning, it is worth noting that several “unsupervised” learning algorithms for neural networks (e.g. autoencoders, neural autoregressive distribution estimators, generative adversarial networks) come with output targets and thus fall into the framework used here.

2.1 Standard Backpropagation (BP)

Standard backpropagation implements gradient descent on ℰ, and can be applied in a stochastic fashion on-line (or in mini batches) or in batch form, by summing or averaging over all training examples. For a single example, omitting the t index for simplicity, the standard backpropagation learning rule is easily obtained by applying the chain rule and given by:

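$$\Delta w_{ij}^h = -\eta \frac{\partial \mathcal{E}}{\partial w_{ij}^h} = \eta B_i^h O_j^{h-1} \tag{3}$$

where $\eta$ is the learning rate, $O_j^{h-1}$ is the presynaptic activity, and $B_i^h$ is the backpropagated error. Using the chain rule, it is easy to see that the backpropagated error satisfies the recurrence relation:

$$B_i^h = (f_i^h)' \sum_k B_k^{h+1} w_{ki}^{h+1} \tag{4}$$

where $(f_i^h)'$ is the derivative of the transfer function of neuron $i$ in layer $h$, with the boundary condition:

$$B_i^L = T_i - O_i^L \tag{5}$$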

Thus in short the errors are propagated backwards in an essentially linear fashion using the transpose of the forward matrices, hence the symmetry of the weights, with a multiplication by the derivative of the corresponding forward activations every time a layer is traversed.
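The recurrence described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: it assumes tanh hidden units, identity output units with the squared error (so that the top-layer error is T − O), and the function names `forward` and `bp_updates` are hypothetical. Here `weights[h]` holds the matrix feeding layer h + 1.

```python
import numpy as np

def forward(weights, x):
    """Return the layer outputs [O^0, ..., O^L]; tanh hidden units, identity output units."""
    outputs = [x]
    for h, W in enumerate(weights):
        s = W @ outputs[-1]                      # weighted sum arriving at layer h + 1
        is_top = h == len(weights) - 1
        outputs.append(s if is_top else np.tanh(s))
    return outputs

def bp_updates(weights, x, target, eta=0.1):
    """Weight updates for one example, for the squared error 0.5 * ||T - O^L||^2."""
    outputs = forward(weights, x)
    B = target - outputs[-1]                     # boundary condition: B^L = T - O^L
    updates = [None] * len(weights)
    for h in reversed(range(len(weights))):
        # weights[h] feeds layer h + 1, so its update pairs B^{h+1} with O^h
        updates[h] = eta * np.outer(B, outputs[h])
        if h > 0:
            f_prime = 1.0 - outputs[h] ** 2      # derivative of tanh at layer h
            B = f_prime * (weights[h].T @ B)     # transpose of the forward matrix
    return updates

rng = np.random.default_rng(0)
sizes = [4, 5, 3, 2]                             # illustrative layer sizes N_0..N_L
weights = [0.5 * rng.normal(size=(m, n)) for n, m in zip(sizes[:-1], sizes[1:])]
updates = bp_updates(weights, rng.normal(size=4), rng.normal(size=2))
print([u.shape for u in updates])                # shapes match the weight matrices
```

The only place where weight symmetry enters is the `weights[h].T` factor in the backward loop.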

2.2 Standard Random Backpropagation (RBP)

Standard random backpropagation operates exactly like backpropagation except that the weights used in the backward pass are completely random and fixed. Thus the learning rule becomes:

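$$\Delta w_{ij}^h = \eta R_i^h O_j^{h-1} \tag{6}$$

where the randomly back-propagated error satisfies the recurrence relation:

$$R_i^h = (f_i^h)' \sum_k R_k^{h+1} c_{ki}^{h+1} \tag{7}$$

and the weights $c_{ki}^{h+1}$ are random and fixed. The boundary condition at the top remains the same:

$$R_i^L = T_i - O_i^L \tag{8}$$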

Thus in RBP the weights in the top layer of the architecture are updated by gradient descent, identically to the BP case.
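As a hedged sketch of the RBP rule just described, the code below differs from a standard backward pass in one respect only: a fixed random matrix takes the place of each transposed forward matrix. The name `rbp_updates`, the tanh hidden units, the identity output units, and the Gaussian sampling of the backward matrices are illustrative assumptions.

```python
import numpy as np

def rbp_updates(weights, backward, x, target, eta=0.1):
    """One-example RBP updates; backward[h] replaces weights[h].T in the backward pass."""
    outputs = [x]
    for h, W in enumerate(weights):              # forward pass, identical to BP
        s = W @ outputs[-1]
        outputs.append(s if h == len(weights) - 1 else np.tanh(s))
    R = target - outputs[-1]                     # same boundary condition as BP: T - O^L
    updates = [None] * len(weights)
    for h in reversed(range(len(weights))):
        updates[h] = eta * np.outer(R, outputs[h])
        if h > 0:
            f_prime = 1.0 - outputs[h] ** 2      # tanh derivative at layer h
            R = f_prime * (backward[h] @ R)      # fixed random weights, no transpose of W
    return updates

rng = np.random.default_rng(0)
sizes = [4, 5, 3, 2]                             # illustrative layer sizes
weights = [0.5 * rng.normal(size=(m, n)) for n, m in zip(sizes[:-1], sizes[1:])]
# backward[h] has the shape of weights[h].T; it is sampled once and never updated.
# backward[0] is never used because no error is sent to the input layer.
backward = [rng.normal(size=W.T.shape) for W in weights]
updates = rbp_updates(weights, backward, rng.normal(size=4), rng.normal(size=2))
```

Passing `backward = [W.T for W in weights]` recovers standard backpropagation, which makes the function easy to check against a numerical gradient.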

2.3 The Critical Equations

Within the supervised learning framework considered here, the goal is to find an optimal set of weights $w_{ij}^h$. The equations that the weights must satisfy at any critical point are simply:

$$\frac{\partial \mathcal{E}}{\partial w_{ij}^h} = -\sum_t B_i^h(t)\, O_j^{h-1}(t) = 0 \tag{9}$$

Thus in general the optimal weights must depend on both the input and the targets, as well as the other weights in the network. And learning can be viewed as a lossy storage procedure for transferring the information contained in the training set into the weights of the architecture.

The critical Equation 9 shows that all the necessary forward information about the inputs and the lower weights leading up to layer h − 1 is subsumed by the term $O_j^{h-1}$. Thus in this framework a separate channel for communicating information about the inputs to the deep weights is not necessary. Here we focus on the feedback information about the targets, contained in the term $B_i^h$ which, in a physical neural system, must be transmitted through a dedicated channel.

Note that $B_i^h$ depends on the output $O^L(t)$, the target T(t), as well as all the weights in the layers above h in the fully connected case (otherwise just those weights which are on a path from unit i in layer h to the output units), and in two ways: through $O^L(t)$ and through the backpropagation process. In addition, $B_i^h$ also depends on all the upper derivatives, i.e. the derivatives of the activation functions for all the neurons above unit i in layer h in the fully connected case (otherwise just those derivatives which are on a path from unit i in layer h to the output units). Thus in general, in a solution of the critical equations, the weights $w_{ij}^h$ must depend on $O_j^{h-1}$, the outputs, the targets, the upper weights, and the upper derivatives. Backpropagation shows that it is sufficient for the weights to depend on $O_j^{h-1}$, T − O, the upper weights, and the upper derivatives.

2.4 Local Learning

Ultimately, for optimal learning, all the information required to reach a critical point of ℰ must appear in the learning rule of the deep weights. In a physical neural system, learning rules must also be local [6], in the sense that they can only involve variables that are available locally in both space and time, although for simplicity here we will focus only on locality in space. Thus typically, in the present formalism, a local learning rule for a deep layer must be of the form

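$$\Delta w_{ij}^h = F(O_i^h, O_j^{h-1}, w_{ij}^h) \tag{10}$$

and

$$\Delta w_{ij}^L = F(T_i, O_i^L, O_j^{L-1}, w_{ij}^L) \tag{11}$$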

assuming that the targets are local variables for the top layer. Among other things, this allows one to organize and stratify learning rules, for instance by considering polynomial learning rules of degree one, two, and so forth.

Deep local learning is the term we use to describe the use of local learning in all the adaptive layers of a feedforward architecture. Note that Hebbian learning [15] is a form of local learning, and deep local learning has been proposed for instance by Fukushima [10] to train the neocognitron architecture, essentially a feedforward convolutional neural network inspired by the earlier neurophysiological work of Hubel and Wiesel [18]. However, in deep local learning, information about the targets is not propagated to the deep layers. Therefore, in general, deep local learning cannot find solutions of the critical equations, and thus cannot succeed at learning complex functions [6].

2.5 The Deep Learning Channel

From the critical equations, any optimal neural network learning algorithm must be capable of communicating some information about the outputs, the targets, and the upper weights to the deep weights and, in a physical neural system, a communication channel [28, 27] must exist to communicate this information. This is the deep learning channel, or learning channel in short [6], which can be studied using tools from information and complexity theory. In physical systems the learning channel must correspond to a physical channel and this leads to important considerations regarding its nature, for instance whether it uses the forward connections in the reverse direction or a different set of connections. Here, we focus primarily on how information is coded and sent over this channel.

In general, the information about the outputs and the targets communicated through this channel to $w_{ij}^h$ is denoted by $I_{ij}^h(T, O^L)$. Although backpropagation propagates this information from the top layer to the deep layers in a staged way, this is not necessary: the information could be sent directly to the deep layer h, somehow skipping all the layers above. This observation leads immediately to the skipped variant of RBP described in the next section. It is also important to note that in principle this information should have the form $I_{ij}^h(T, O^L)$. However, standard backpropagation shows that it is possible to send the same information to all the synapses impinging onto the same neuron, and thus it is possible to learn with a simpler type of information of the form $I_i^h(T, O^L)$ targeting the postsynaptic neuron i. This class of algorithms or channels is what we call deep targets algorithms, as they are equivalent to providing a target for each deep neuron. Furthermore, backpropagation shows that all the necessary information about the outputs and the targets is contained in the term $T - O^L$, so that we only need $I_i^h(T - O^L)$. Standard backpropagation uses information about the upper weights in two ways: (1) through the output $O^L$, which appears in the error terms $T - O^L$; and (2) through the backpropagation process itself. Random backpropagation crucially shows that the information about the upper weights contained in the backpropagation process is not necessary. Thus ultimately we can focus exclusively on information which has the simple form $I_i^h(T - O^L, r)$, where r denotes a set of fixed random weights.

Thus, using the learning channel, we are interested in local learning rules of the form:

$$\Delta w_{ij}^h = F\big(O_i^h, O_j^{h-1}, w_{ij}^h, I_i^h(T - O^L, r)\big) \tag{12}$$

In fact, here we shall focus exclusively on learning rules with the multiplicative form:

$$\Delta w_{ij}^h = \eta\, I_i^h(T - O^L, r)\, O_j^{h-1} \tag{13}$$

corresponding to a product of the presynaptic activity with some kind of backpropagated error information, with standard BP and RBP as special cases. Obvious important questions, for which we will seek full or partial answers, include: (1) what kinds of forms can $I_i^h$ take (as we shall see there are multiple possibilities)? (2) what are the corresponding tradeoffs among these forms, for instance in terms of computational complexity or information transmission? and (3) are the upper derivatives necessary and why?

3 Random Backpropagation Algorithms and Their Computational Complexity

We are going to focus on algorithms where the information required for the deep weight updates is produced essentially through a linear process whereby the vector T(t) − O(t), computed in the output layer, is processed through linear operations, i.e. additions and multiplications by constants (which can include multiplication by the upper derivatives). Standard backpropagation is such an algorithm, but there are many other possible ones. We are interested in the case where the matrices are random. However, even within this restricted setting, there are several possibilities, depending for instance on: (1) whether the information is progressively propagated through the layers (as in the case of BP), or broadcasted directly to the deep layers; (2) whether multiplication by the derivatives of the forward activations is included or not; and (3) the properties of the matrices in the learning channel (e.g. sparse vs dense). This leads to several new algorithms. Here we will use the following notations:

BP = (standard) backpropagation.

RBP = random backpropagation, where the transposes of the feedforward matrices are replaced by random matrices.

SRBP = skipped random backpropagation, where the backpropagated signal arriving onto layer h is given by Ch(T − O) with a random matrix Ch directly connecting the output layer L to layer h, and this for each layer h.

ARBP = adaptive random backpropagation, where the matrices in the learning channel are initialized randomly, and then progressively adapted during learning using the product of the corresponding forward and backward signals, so that , where R denotes the randomly backpropagated error. In this case, the forward channel becomes the learning channel for the backward weights.

ASRBP = adaptive skipped random backpropagation, which combines adaptation with skipped random backpropagation.

The default for each algorithm involves the multiplication at each layer by the derivative of the forward activation functions. The variants where this multiplication is omitted will be denoted by: “(no f′)”.

The default for each algorithm involves dense random matrices, generated for instance by sampling from a normalized Gaussian for each weight. But one can consider also the case of random ±1 (or (0,1)) binary matrices, or other distributions, including sparse versions of the above.

As we shall see, using random weights that have the same sign as the forward weights is not essential, but can lead to improvements in speed and stability. We will use the term “congruent weights” to describe this case. Note that with fixed random matrices in the learning channel initialized congruently, congruence can be lost during learning when the sign of a forward weight changes.
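A small sketch of the congruent case may help. Taking the absolute value of Gaussian samples for the magnitudes is an assumption made here for illustration, and the helper names `congruent_backward` and `is_congruent` are hypothetical.

```python
import numpy as np

def congruent_backward(W, rng):
    """Random backward matrix shaped like W.T whose entries share the sign of W.T."""
    magnitudes = np.abs(rng.normal(size=W.T.shape))
    return magnitudes * np.sign(W.T)

def is_congruent(W, C):
    """True when every backward weight has the same sign as its forward counterpart."""
    return bool(np.all(np.sign(C) == np.sign(W.T)))

rng = np.random.default_rng(0)
W = rng.normal(size=(3, 5))
C = congruent_backward(W, rng)
print(is_congruent(W, C))        # True at initialization
W[0, 0] = -W[0, 0]               # a forward weight changes sign during learning
print(is_congruent(W, C))        # False: C is fixed, so congruence is lost
```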

SRBP is introduced both for information-theoretic reasons (what happens if the error information is communicated directly?) and because it may facilitate the mathematical analyses since it avoids the backpropagation process. However, in one of the next sections, we will also show empirically that SRBP is a viable learning algorithm, which in practice can work even better than RBP. Importantly, these simulation results suggest that when learning the synaptic weight $w_{ij}^h$, the information about all the upper derivatives ($(f_k^l)'$ for $l > h$) is not needed. However, the immediate ($l = h$) derivative is needed.
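The skipped variant can be sketched as follows, under the same illustrative assumptions as before (tanh hidden units, identity outputs, hypothetical function name `srbp_updates`). Each hidden layer h receives $C_h(T - O)$ directly from the output layer and multiplies it only by its own activation derivative.

```python
import numpy as np

def srbp_updates(weights, skip, x, target, eta=0.1):
    """One-example SRBP updates; skip[h] maps the output error straight to hidden layer h."""
    L = len(weights)
    outputs = [x]
    for h, W in enumerate(weights):
        s = W @ outputs[-1]
        outputs.append(s if h == L - 1 else np.tanh(s))
    error = target - outputs[-1]                            # T - O, computed once at the top
    updates = [None] * L
    updates[L - 1] = eta * np.outer(error, outputs[L - 1])  # top layer: plain gradient descent
    for h in range(1, L):                                   # hidden layers 1..L-1
        f_prime = 1.0 - outputs[h] ** 2                     # only the immediate derivative
        R = f_prime * (skip[h] @ error)                     # C_h (T - O)
        updates[h - 1] = eta * np.outer(R, outputs[h - 1])
    return updates

rng = np.random.default_rng(0)
sizes = [4, 5, 3, 2]                                        # illustrative layer sizes
weights = [0.5 * rng.normal(size=(m, n)) for n, m in zip(sizes[:-1], sizes[1:])]
# skip[h] has shape (N_h, N_L); index 0 is a placeholder because no error reaches the input layer.
skip = [None] + [rng.normal(size=(n, sizes[-1])) for n in sizes[1:-1]]
updates = srbp_updates(weights, skip, rng.normal(size=4), rng.normal(size=2))
```

Because the hidden-layer updates no longer depend on one another, the loop over h could run in any order or in parallel.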

Note this suggests yet another possible algorithm, skipped backpropagation (SBP). In this case, for each training example and at each epoch, the matrix used in the feedback channel is the product of the corresponding transposed forward matrices, ignoring multiplication by the derivative of the forward transfer functions in all the layers above the layer under consideration. Multiplication by the derivative of the forward transfer functions is applied to the layer under consideration. Another possibility is to have a combination of RBP and SRBP in the learning channel, implemented by a combination of long-range connections carrying SRBP signals with short-range connections carrying a backpropagation procedure, when no long-range signals are available. This may be relevant for biology since combinations of long-range and short-range feedback connections are common in biological neural systems.

In general, in the case of linear networks, f′ = 1 and therefore including or excluding derivative terms makes no difference. Furthermore, for any linear architecture 𝒜[N, …, N, …, N] where all the layers have the same size, RBP is equivalent to SRBP. However, if the layers do not have the same size, then the layer sizes introduce rank constraints on the information that is backpropagated through RBP that may differ from the information propagated through SRBP. In both the linear and non-linear cases, for any network of depth 3 (L = 3), RBP is equivalent to SRBP, since there is only one random matrix.
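The linear case can be made explicit with a short calculation. The notation here is introduced for illustration only: $\mathbf{C}^{l}$ stands for the $N_{l-1} \times N_l$ matrix of fixed random weights that carries the RBP signal from layer $l$ to layer $l-1$, and $\mathbf{C}_h$ for the SRBP matrix connecting the output layer to layer $h$. With $f' = 1$, unrolling the RBP recurrence from the output layer gives

$$R^h = \mathbf{C}^{h+1}\,\mathbf{C}^{h+2} \cdots \mathbf{C}^{L}\,(T - O^L), \qquad \operatorname{rank}\big(\mathbf{C}^{h+1} \cdots \mathbf{C}^{L}\big) \le \min(N_h, N_{h+1}, \ldots, N_L).$$

So linear RBP behaves like SRBP with the effective matrix $\mathbf{C}_h = \mathbf{C}^{h+1} \cdots \mathbf{C}^{L}$. A directly sampled $\mathbf{C}_h$ can have rank up to $\min(N_h, N_L)$, so a narrow intermediate layer constrains RBP but not SRBP. When all layers have size $N$ and the entries are drawn from a continuous distribution such as the Gaussian, the product is almost surely of full rank $N$, which is presumably the sense of the equivalence stated above. The entries of the product are not distributed like those of a single Gaussian matrix, however.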

Additional variations can be obtained by using dropout, or multiple sets of random matrices, in the learning channel, for instance for averaging purposes. Another variation in the skipped case is cascading, i.e. allowing backward matrices in the learning channel between all pairs of layers. Note that the notion of cascading increases the number of weights and computations, yet it is still interesting from an exploratory and robustness point of view.

3.1 Computational Complexity Considerations

The number of computations required to send error information over the learning channel is a fundamental quantity which, however, depends on the computational model used and the cost associated with various operations. Obviously, everything else being equal, the computational costs of BP and RBP are basically the same since they differ only by the value of the weights being used. However, more subtle differences can appear with some of the other algorithms, such as SRBP.

To illustrate this, consider an architecture 𝒜[N0, …, Nh, …, NL], fully connected, and let W be the total number of weights. In general, the primary cost of BP is the multiplication of each synaptic weight by the corresponding signal in the backward pass. Thus it is easy to see that the bulk of the operations required for BP to compute the backpropagated signals scale like O(W) (in fact Θ(W)) with:

$$W = \sum_{h=0}^{L-1} N_h N_{h+1} \tag{14}$$

Note that whether biases are added separately or, equivalently, implemented by adding a unit clamped to one to each layer, does not change the scaling. Likewise, adding the costs associated with the sums computed by each neuron and the multiplications by the derivatives of the activation functions does not change the scaling, as long as these operations have costs that are within a constant multiplicative factor of the cost for multiplications of signals by synaptic weights.

As already mentioned, the scaling for RBP is obviously the same, just using different matrices. However the corresponding term for SRBP is given by

$$W' = N_L \sum_{h=1}^{L-1} N_h \tag{15}$$

In this sense, the computational complexity of BP and SRBP is identical if all the layers have the same size, but it can be significantly different otherwise, especially taking into consideration the tapering off associated with most architectures used in practice. In a classification problem, for instance, NL = 1 and all the random matrices in SRBP have rank 1, and W′ scales like the total number of neurons, rather than the total number of forward connections. Thus, provided it leads to effective learning, SRBP could lead to computational savings in a digital computer. However, in a physical neural system, in spite of these savings, the scaling complexity of BP and SRBP could end up being the same. This is because in a physical neural system, once the backpropagated signal has reached neuron i in layer h it still has to be communicated to the synapse. A physical model would have to specify the cost of such communication. Assuming one unit cost, both BP and SRBP would require Θ(W) operations across the entire architecture. Finally, a full analysis in a physical system would have to take into account also costs associated with wiring, and possibly differential costs between long and short wires as, for instance, SRBP requires longer wires than standard BP or RBP.
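To make the difference concrete, the two counts can be evaluated for the fully connected MNIST architecture used in Section 4.1 (784 inputs, four hidden layers of 100 units, 10 outputs). The sketch assumes that W is the sum of the products of consecutive layer sizes and that W′ is the output size times the total number of hidden units; the function names are hypothetical.

```python
def bp_channel_cost(sizes):
    """W: one multiplication per forward weight, summed over consecutive layer pairs."""
    return sum(a * b for a, b in zip(sizes[:-1], sizes[1:]))

def srbp_channel_cost(sizes):
    """W': each hidden unit receives one weighted signal from each output unit."""
    return sizes[-1] * sum(sizes[1:-1])

mnist = [784, 100, 100, 100, 100, 10]
print(bp_channel_cost(mnist))     # 109400
print(srbp_channel_cost(mnist))   # 4000
```

With a single output unit, `srbp_channel_cost` reduces to the number of hidden neurons, as noted in the text.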

4 Algorithm Simulations

In this section, we simulate the various algorithms using standard benchmark datasets. The primary focus is not on achieving state-of-the-art results, but rather on better understanding these new algorithms and where they break down. The results are summarized in Table 1 at the end.

Table 1.

Summary of experimental results showing the final test accuracy (in percentages) for the RBP algorithms after 100 epochs of training on MNIST and CIFAR-10. For the experiments in this section, training was repeated five times with different weight initializations; in these cases the mean is provided, with the sample standard deviation in parentheses. Also included are the quantization results from Section 5, and the experiments applying dropout to the learning channel from Section 6.

| Dataset | Variant | BP | RBP | SRBP | Top layer only |
|---|---|---|---|---|---|
| MNIST | Baseline | 97.9 (0.1) | 97.2 (0.1) | 97.2 (0.2) | 84.7 (0.7) |
| MNIST | No-f′ | 89.9 (0.3) | 88.3 (1.1) | 88.4 (0.7) | |
| MNIST | Adaptive | | 97.3 (0.1) | 97.3 (0.1) | |
| MNIST | Sparse-8 | | 96.0 (0.4) | 96.9 (0.1) | |
| MNIST | Sparse-2 | | 96.3 (0.5) | 95.8 (0.2) | |
| MNIST | Sparse-1 | | 90.3 (1.1) | 94.6 (0.6) | |
| MNIST | Quantized error 5-bit | 97.6 | 95.4 | 95.1 | |
| MNIST | Quantized error 3-bit | 96.5 | 92.5 | 93.2 | |
| MNIST | Quantized error 1-bit | 94.6 | 89.8 | 91.6 | |
| MNIST | Quantized update 5-bit | 95.2 | 94.0 | 93.3 | |
| MNIST | Quantized update 3-bit | 96.5 | 91.0 | 92.2 | |
| MNIST | Quantized update 1-bit | 92.5 | 9.6 | 90.7 | |
| MNIST | LC Dropout 10% | 97.7 | 96.5 | 97.1 | |
| MNIST | LC Dropout 20% | 97.8 | 96.7 | 97.2 | |
| MNIST | LC Dropout 50% | 97.7 | 96.7 | 97.1 | |
| CIFAR-10 | Baseline | 83.4 (0.2) | 70.2 (1.1) | 72.7 (0.8) | 47.9 (0.4) |
| CIFAR-10 | No-f′ | 54.8 (3.6) | 32.7 (6.2) | 39.9 (3.9) | |
| CIFAR-10 | Sparse-8 | | 46.3 (4.3) | 70.9 (0.7) | |
| CIFAR-10 | Sparse-2 | | 62.9 (0.9) | 65.7 (1.9) | |
| CIFAR-10 | Sparse-1 | | 56.7 (2.6) | 62.6 (1.8) | |

4.1 MNIST

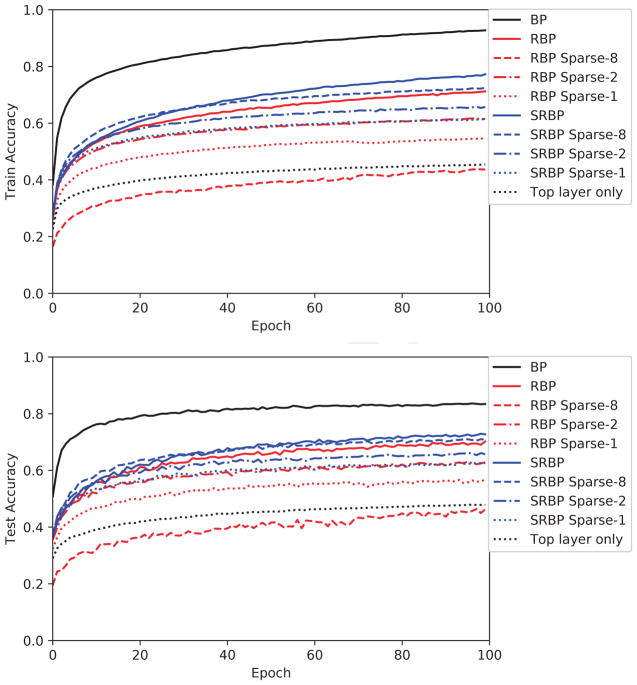

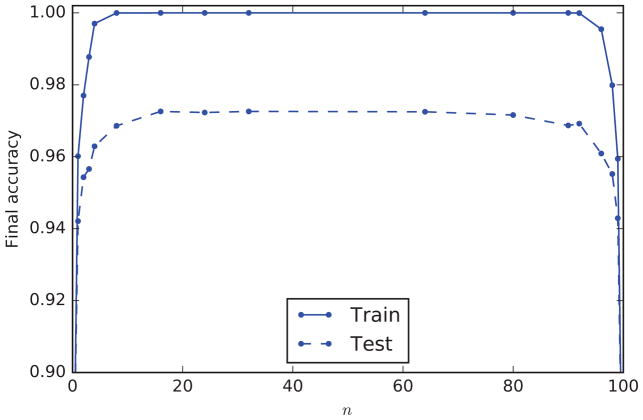

Several learning algorithms were first compared on the MNIST [22] classification task. The neural network architecture consisted of 784 inputs, four fully-connected hidden layers of 100 tanh units, followed by 10 softmax output units. Weights were initialized by sampling from a scaled normal distribution [11]. Training was performed for 100 epochs using mini-batches of size 100 with an initial learning rate of 0.1, decaying by a factor of $10^{-6}$ after each update, and no momentum. In Figure 1, the performance of each algorithm is shown on both the training set (60,000 examples) and test set (10,000 examples). Results for the adaptive versions of the random propagation algorithms are shown in Figure 2, and results for the sparse versions are shown in Figure 3.

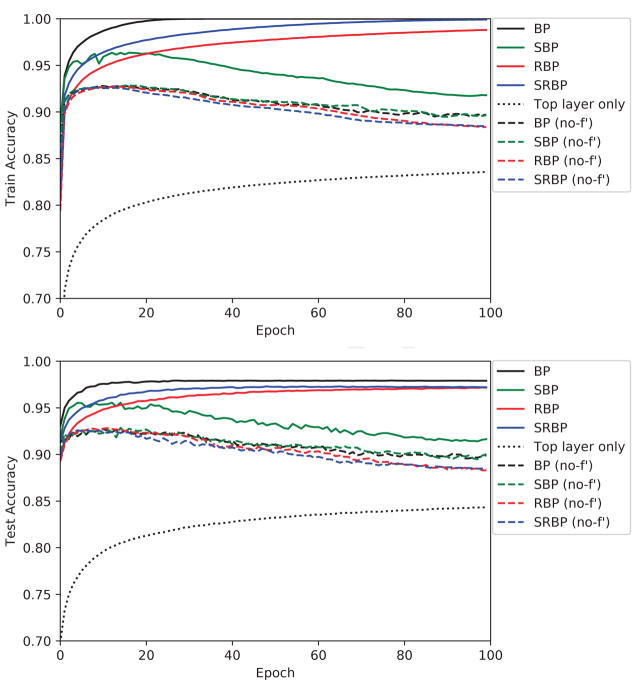

Figure 1.

MNIST training (upper) and test (lower) accuracy, as a function of epoch, for nine different learning algorithms: backpropagation (BP), skip BP (SBP), random BP (RBP), skip random BP (SRBP), the version of each algorithm in which the error signal is not multiplied by the derivative of the post-synaptic transfer function (no-f′), and the case where only the top layer is trained while the lower layer weights are fixed (Top Layer Only). Note that these algorithms differ only in how they backpropagate error signals to the lower layers; the top layer is always updated according to the typical gradient descent rule. Models are trained five times with different weight initializations; the trajectory of the mean is shown here.

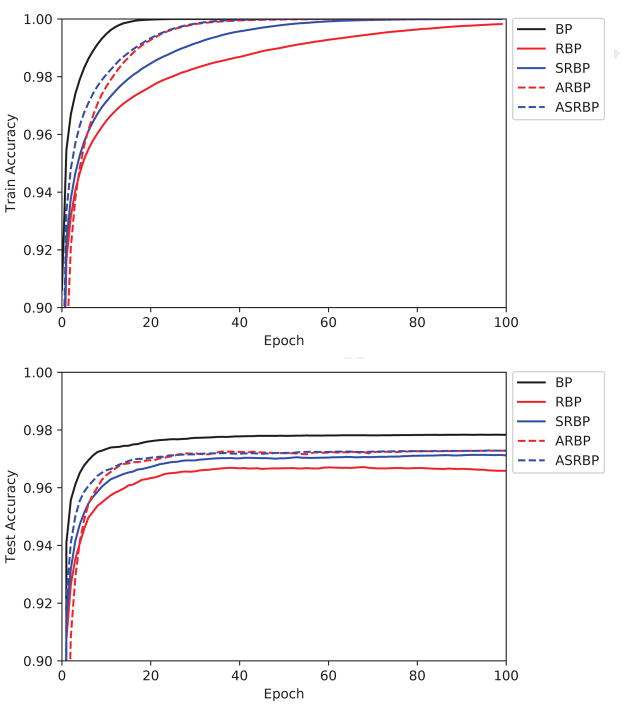

Figure 2.

MNIST training (upper) and test (lower) accuracy, as a function of training epoch, for the adaptive versions of the RBP algorithm (ARBP) and SRBP algorithm (ASRBP). In these simulations, adaptation slightly improves the performance of SRBP and speeds up training. For the ARBP algorithm, the learning rate was reduced by a factor of 0.1 in these experiments to keep the weights from growing too quickly. Models are trained five times with different weight initializations; the trajectory of the mean is shown here.

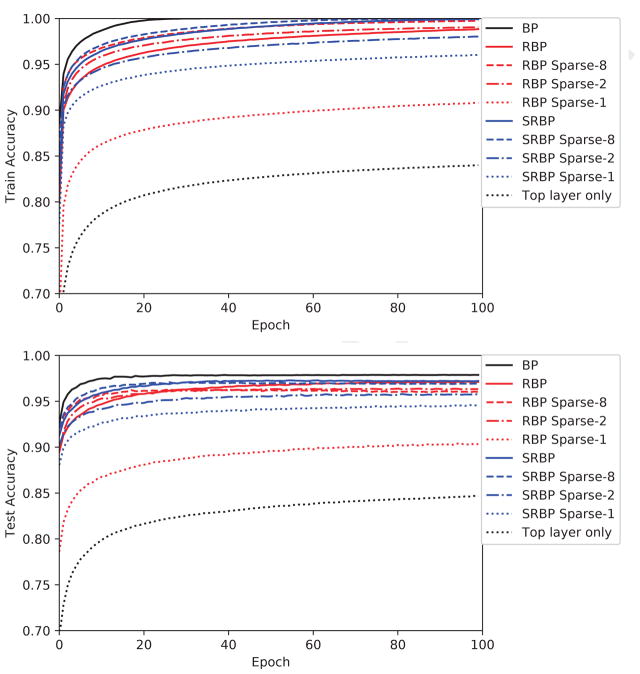

Figure 3.

MNIST training (upper) and test (lower) accuracy, as a function of training epoch, for the sparse versions of the RBP and SRBP algorithms. Experiments are run with different levels of sparsity by controlling the expected number n of non-zero connections sent from one neuron to any other layer it is connected to in the backward learning channel. The random back-propagation matrix connecting any two layers is created by sampling each entry using a (0,1) Bernoulli distribution, where each element is 1 with probability p = n/fan-in and 0 otherwise. For example, in SRBP (Sparse-1), each of the 10 softmax outputs sends a non-zero (hence with a weight equal to 1) connection to an average of one neuron in each of the hidden layers. We compare to the (Normal) versions of RBP and SRBP, where the elements of these matrices are initialized from a standard Normal distribution scaled in the same way as the forward weight matrices [11]. Models are trained five times with different weight initializations; the trajectory of the mean is shown here.

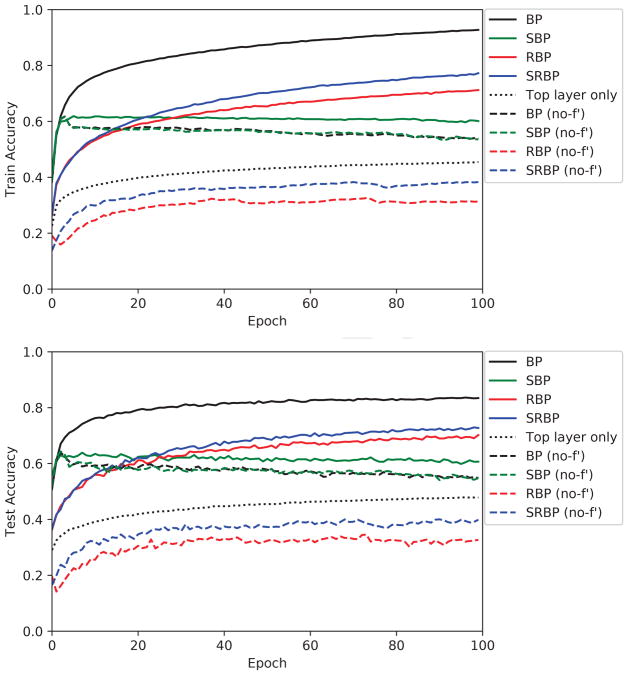

The main conclusion is that the general concept of RBP is very robust and works almost as well as BP. Performance is unaffected or degrades gracefully when the random backward weights are initialized from different distributions or even change during training. The skipped versions of the algorithms seem to work slightly better than the non-skipped versions. Finally, RBP can be used with different neuron activation functions, though multiplying by the derivative of the activations seems to play an important role.
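The sparse learning-channel matrices described in the Figure 3 caption can be generated as in the following sketch. It reads "fan-in" as the size of the receiving layer, which is what the Sparse-1 example implies (each of 10 outputs reaching on average one of 100 hidden neurons); that reading, and the function name, are assumptions.

```python
import numpy as np

def sparse_backward_matrix(n_receiving, n_sending, n, rng):
    """(0,1) matrix in which each sending neuron reaches about n receiving neurons."""
    p = min(1.0, n / n_receiving)                # Bernoulli probability for each entry
    return (rng.random((n_receiving, n_sending)) < p).astype(float)

rng = np.random.default_rng(0)
C = sparse_backward_matrix(n_receiving=100, n_sending=10, n=8, rng=rng)
print(C.shape, C.sum(axis=0).mean())             # column sums average roughly n
```

Note that these matrices have a non-zero mean, unlike the Gaussian matrices used in the Normal versions.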

4.2 Additional MNIST Experiments

In addition to the experiments presented above, the following observations were made by training on MNIST with other variations of these algorithms:

If the matrices of the learning channel in RBP are randomly changed at each stochastic mini-batch update, sampled from a distribution with mean 0, performance is poor and similar to training only the top layer.

If the matrices of the learning channel in RBP are randomly changed at each stochastic mini-batch update, but each backwards weight is constrained to have the same sign as the corresponding forward weight, then training error goes to 0%. This is the sign-concordance algorithm explored by Liao, et al. [23].

If the elements of the matrices of the learning channel in RBP or SRBP are sampled from a uniform or normal distribution with non-zero mean, performance is unchanged. This is also consistent with the sparsity experiments above, where the means of the sampling distributions are not zero.

Updates to a deep layer with RBP or SRBP appear to require updates in the preceding layers in the learning channel. If we fix the weights in layer h, while updating the rest of the layers with SRBP, performance is often worse than if we fix layers l ≤ h.

If we remove the magnitude information from the SRBP updates, keeping only the sign, performance is better than the Top Layer Only algorithm, but not as good as SRBP. This is further explored in the next section.

If we remove the sign information from the SRBP updates, keeping only the absolute value, things do not work at all.

If a different random backward weight is used to send an error signal to each individual weight, rather than to a hidden neuron which then updates all its incoming weights, things do not work at all.

The RBP learning rules work with different transfer functions as well, including linear, logistic, and ReLU (rectified linear) units.

4.3 CIFAR-10

To further test the validity of these results, we performed similar simulations with a convolutional architecture on the CIFAR-10 dataset [20]. The specific architecture was based on previous work [16], and consisted of 3 sets of convolution and max-pooling layers, followed by a densely-connected layer of 1024 tanh units, then a softmax output layer. The input consists of 32-by-32 pixel 3-channel images; each convolution layer consists of 64 tanh channels with 5×5 kernel shape and 1×1 strides; max-pooling layers have 3×3 receptive fields and 2×2 strides. All weights were initialized by sampling from a scaled normal distribution [11], and updated using stochastic gradient descent on mini-batches of size 128 and a momentum of 0.9. The learning rate started at 0.01 and decreased by a factor of $10^{-5}$ after each update. During training, the training images are randomly translated up to 10% in either direction, horizontally and vertically, and flipped horizontally with probability p = 0.5.

Examples of results obtained with these 2D convolutional architectures are shown in Figures 5 and 6. Overall they are very similar to those obtained on the MNIST dataset.

Figure 5.

CIFAR-10 training (upper) and test (lower) accuracy, as a function of training epoch, for nine different learning algorithms: backpropagation (BP), skip BP (SBP), random BP (RBP), skip random BP (SRBP), the version of each algorithm in which the error signal is not multiplied by the derivative of the post-synaptic transfer function (no-f′), and the case where only the top layer is trained while the lower layer weights are fixed (Top Layer Only). Models are trained five times with different weight initializations; the trajectory of the mean is shown here.

Figure 6.

CIFAR-10 training (upper) and test (lower) accuracy for the sparse versions of the RBP and SRBP algorithms. Experiments are run with different levels of sparsity by controlling the expected number n of non-zero connections sent from one neuron to any other layer it is connected to in the backward learning channel. The random backpropagation matrix connecting any two layers is created by sampling each entry using a (0,1) Bernoulli distribution, where each element is 1 with probability p = n/fan-in and 0 otherwise. We compare to the (Normal) versions of RBP and SRBP, where the elements of these matrices are initialized from a standard Normal distribution scaled in the same way as the forward weight matrices [11]. Models are trained five times with different weight initializations; the trajectory of the mean is shown here.

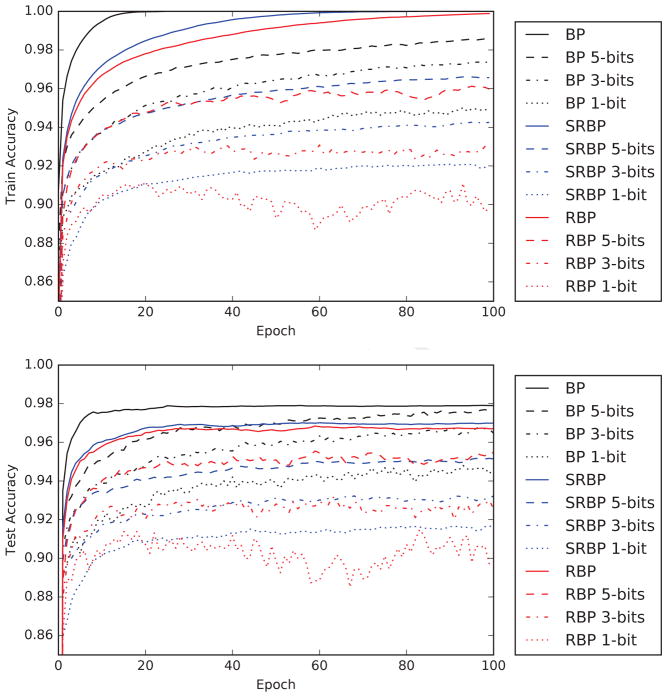

5 Bit Precision in the Learning Channel

5.1 Low-Precision Error Signals

In the following experiment, we investigate the nature of the learning channel by quantizing the error signals in the BP, RBP, and SRBP algorithms. This is distinct from other work that uses quantization to reduce computation [17] or memory [13] costs. Quantization is not applied to the forward activations or weights; quantization is only applied to the backpropagated signal received by each hidden neuron, , where each weight update after quantization is given by

| (16) |

| (17) |

where is the derivative of the activation function and

| (18) |

in the non-quantized update. We define the quantization formula used here as

| (19) |

where bits is the number of bits needed to represent $2^{\text{bits}}$ possible values and α is a scale factor such that the quantized values fall in the range [−α, α]. Note that this definition is identical to the quantization function defined in Hubara, et al. [17], except that this definition is more general in that α is not constrained to be a power of 2.

In BP and RBP, the quantization occurs before the error signal is backpropagated to previous layers, so the quantization errors accumulate. In experiments, we used a fixed scale parameter α = $2^{-3}$ and varied the bit width bits. Figure 7 shows that the performance degrades gracefully as the precision of the error signal decreases to small values; for larger values, e.g. bits = 10, the performance is indistinguishable from the unquantized updates with 32-bit floats.
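To make the role of the two parameters concrete, the following Python sketch implements one simple uniform quantizer that maps a value onto $2^{\text{bits}}$ evenly spaced levels in [−α, α]. This is an illustrative assumption and not necessarily the formula of Equation 19; the name `quantize` and the rounding convention are hypothetical.

```python
def quantize(x, bits, alpha):
    """Snap x to one of 2**bits evenly spaced levels spanning [-alpha, alpha].

    Illustrative uniform quantizer (an assumption, not the paper's formula).
    Requires bits >= 1 and alpha > 0.
    """
    levels = 2 ** bits
    step = 2.0 * alpha / (levels - 1)          # spacing between adjacent levels
    clipped = max(-alpha, min(alpha, x))       # saturate outside the range
    index = round((clipped + alpha) / step)    # nearest level index
    return -alpha + index * step


if __name__ == "__main__":
    alpha = 2.0 ** -3
    for bits in (1, 2, 4, 10):
        print(bits, quantize(0.03, bits, alpha))
```

With few bits the error signal is reduced to a handful of values; with bits = 10 the level spacing under α = $2^{-3}$ is roughly 0.00024, so the quantized signal is already close to the original.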

Figure 7.

MNIST training (upper) and test (lower) accuracy, as a function of training epoch, for the sparse versions of the RBP and SRBP algorithms. Experiments are run with different levels of quantization of the error signal by controlling the bitwidth bits, according to the formula given in the text (Equation 19).

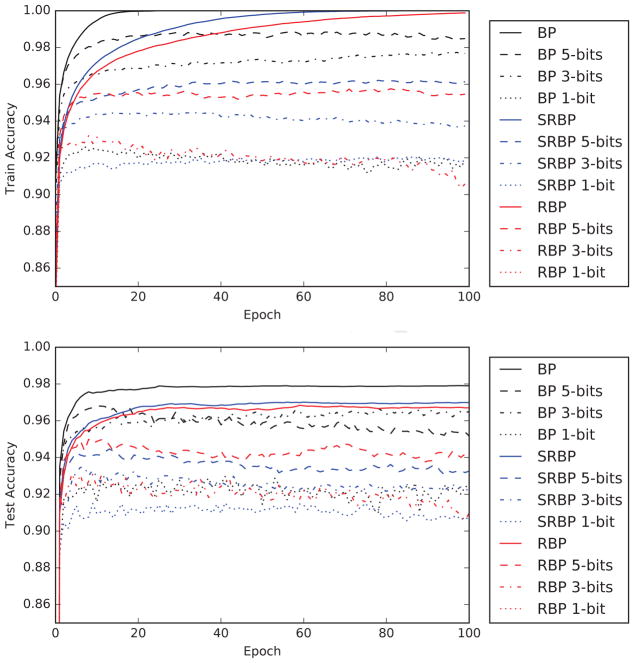

5.2 Low-Precision Weight Updates

The idea of using low-precision weight updates is not new [25], and Liao, et al. [23] recently explored the use of low-precision updates with RBP. In the following experiment, we investigate the robustness of both RBP and SRBP to low-precision weight updates by controlling the degree of quantization. Equation 19 is again used for quantization, with the scale factor reduced to α = $2^{-6}$ since weight updates need to be small. The quantization is applied after the error signals have been backpropagated to all the hidden layers, but before summing over the minibatch; as in the previous experiments, we use minibatch updates of size 100, a non-decaying learning rate of 0.1, and no momentum term (Figure 8). The main conclusion is that even very low-precision updates to the weights can be used to train an MNIST classifier to 90% accuracy, and that low-precision weight updates appear to degrade the performance of BP, RBP, and SRBP in roughly the same way.

Figure 8.

MNIST training (upper) and test (lower) accuracy, as a function of training epoch, for the sparse versions of the RBP and SRBP algorithms. Experiments are carried out with different levels of quantization of the weight updates by controlling the bitwidth bits, according to the formula given in the text (Equation 19). Quantization is applied to each example-specific update, before summing the updates within a minibatch.

6 Observations

In this section, we provide a number of simple observations that provide some intuition for some of the previous simulation results and why RBP and some of its variations may work. Some of these observations are focused on SRBP which in general is easier to study than standard RBP.

Fact 1: In all these RBP algorithms, the top layer (layer L) follows the gradient, since it is trained just as in BP: no random feedback weights are used for learning in the top layer. In other words, BP=RBP=SRBP for the top layer.

Fact 2: For a given input, if the sign of T − O is changed, all the weight updates change to the opposite direction. This is true of all the algorithms considered here (BP, RBP, and their variants), even when the derivatives of the activations are included.

Fact 3: In all RBP algorithms, if T − O = 0 (online or in batch mode) then the update Δw is 0 for all the weights w (online or in batch mode, respectively).

Fact 4: Congruence of weights is not necessary. However, it can sometimes be helpful and speed up learning. This can easily be seen in simple cases. For instance, consider a linear or non-linear 𝒜[N0, N1, 1] architecture with coherent weights, and denote by a the weights in the bottom layer, by b the weights in the top layer, and by c the weights in the learning channel. Then, for all variants of RBP, every weight update has the same sign as the corresponding component of the gradient update. This is obvious for the top layer (Fact 1 above). For the first layer of weights, the changes are those of gradient descent with each bi replaced by ci, and are therefore very similar to the changes produced by gradient descent, since ci and bi are assumed to be coherent. So while the dynamics of the lower layer is not exactly in the gradient direction, it is always in the same orthant as the gradient and thus downhill with respect to the error function. Additional examples showing the positive but not necessary effect of coherence are given in Section 7.

Fact 5: SRBP seems to perform well, showing that the upper derivatives are not needed. However, the derivative of the corresponding layer seems to matter. In general, for the activation functions considered here, these derivatives tend to be between 0 and 1. Thus learning is attenuated for neurons that are saturated. So an ingredient that seems to matter is to let the synapses of neurons that are not saturated change more than the synapses of neurons that are saturated (f′ close to 0).

Fact 6: Consider a multi-class classification problem, such as MNIST. All the elements in the same class tend to receive the same backpropagated signal and tend to move in unison. For instance, consider the beginning of learning, with small random weights in the forward network. Then all the images will tend to produce a more or less uniform output vector similar to (0.1, 0.1, …, 0.1). Thus all the images in the “0” class will tend to produce a more or less uniform error vector similar to (0.9, −0.1, …, −0.1). All the images in the “1” class will tend to produce a more or less uniform error vector similar to (−0.1, 0.9, …, −0.1), which is essentially orthogonal to the previous error vector, and so forth. In other words, the 10 classes can be associated with 10 roughly orthogonal error vectors. When these vectors are multiplied by a fixed random matrix, as in SRBP, they will tend to produce 10 approximately orthogonal vectors in the corresponding hidden layer. Thus the backpropagated error signals tend to be similar within one digit class, and orthogonal across different digit classes. At the beginning of learning, we can expect roughly half of them (5 digits out of 10 in the MNIST case) to be in the same direction as BP.
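The claim that these error vectors are "essentially orthogonal" can be checked directly. The short Python sketch below (helper names are ours, for illustration) builds the idealized error vectors T − O for a one-hot target and a uniform output of 0.1, and computes the cosine of the angle between the vectors of two different classes.

```python
import math


def error_vector(true_class, n_classes=10, output=0.1):
    """T - O for a one-hot target T and a uniform output O = (output, ..., output)."""
    return [(1.0 if k == true_class else 0.0) - output for k in range(n_classes)]


def cosine(u, v):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


if __name__ == "__main__":
    e0, e1 = error_vector(0), error_vector(1)
    print(cosine(e0, e1))  # equals -1/9, i.e. about -0.11
```

The inner product is 2(0.9)(−0.1) + 8(0.01) = −0.10 and each squared norm is 0.90, so the cosine is −1/9: the vectors are nearly, though not exactly, orthogonal.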

Thus, in conclusion, an intuitive picture of why RBP may work is that: (1) the random weights introduce a fixed coupling between the learning dynamics of the forward weights (see also mathematical analyses below); (2) the top layer of weights always follows gradient descent and steers the learning dynamic in the right direction; and (3) the learning dynamic tends to cluster inputs associated with the same response and move them away from other similar clusters. Next we discuss a possible connection to dropout.

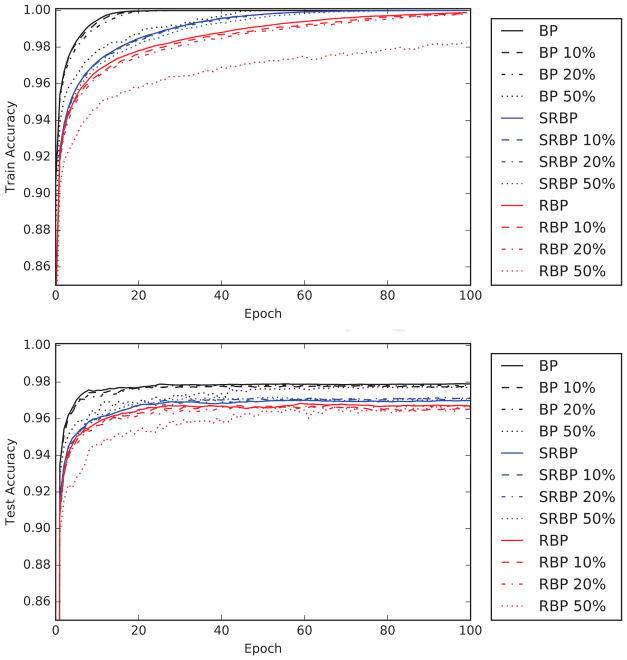

6.1 Connections to Dropout

Dropout [16, 5] is a very different training algorithm which, however, is also based on using some form of randomness. Here we explore some possible connections to RBP.

First observe that the BP equations can be viewed as a form of dropout averaging equations, in the sense that, for a fixed example, they compute the ensemble average activity of all the units in the learning channel. The ensemble average is taken over all the possible backpropagation networks where each unit is dropped stochastically, unit i in layer h being dropped with probability 1 − f′, where f′ is the derivative of the unit's transfer function evaluated at its activation for that example [assuming the derivatives of the transfer functions are always between 0 and 1 inclusively, which is the case for the standard transfer functions, such as the logistic or the rectified linear transfer functions–otherwise some rescaling is necessary]. Note that in this way the dropout probabilities change with each example and units that are more saturated are more likely to be dropped, consistent with the remark above that saturated units should learn less.

In this view there are two kinds of noise: (1) choice of the dropout probabilities which vary with each example; (2) the actual dropout procedure. Consider now adding a third type of noise on all the symmetric weights in the backward pass in the form

| (20) |

and assume for now that . The distribution of the noise could be Gaussian for instance, but this is not essential. The important point is that the noise on a weight is independent of the noise on the other weights, as well as independent of the dropout noise on the units. Under these assumptions, as shown in [5], the expected value of the activity of each unit in the backward pass is exactly given by the standard BP equations and equal to for unit i in layer h. In other words, standard backpropagation can be viewed as computing the exact average over all backpropagation processes implemented on all the stochastic realizations of the backward network under the three forms of noise described above. Thus we can reverse this argument and consider that RBP approximates this average or BP by averaging over the first two kinds of noise, but not the third one where, instead of averaging, a random realization of the weights is selected and then fixed at all epochs. This connection suggests other intermediate RBP variants where several samples of the weights are used, rather than a single one.

Finally, it is possible to use dropout in the backward pass. The forward pass is robust to dropping out neurons, and in fact the dropout procedure can be beneficial [16, 5]. Here we apply the dropout procedure to neurons in the learning channel during the backward pass. The results of simulations are reported in Figure 9 and confirm that BP, RBP, and SRBP are robust with respect to dropout.

Figure 9.

MNIST training (upper) and test (lower) accuracy, as a function of training epoch, for BP, RBP, and SRBP with different dropout probabilities in the learning channel: 0% (no dropout), 10%, 20%, and 50%. For dropout probability p, the error signals that are not dropped out are scaled by 1/(1− p). As with dropout in the forward propagation, large dropout probabilities lead to slower training without hurting final performance.
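The dropout rule described in the caption can be sketched as follows. This is a minimal illustration in Python, not the experimental code: the function name `dropout_error_signal` and the representation of the error signal as a list of floats are assumptions.

```python
import random


def dropout_error_signal(error, p, rng):
    """Drop each component of a backpropagated error vector with probability p.

    Surviving components are scaled by 1 / (1 - p), so the expected value of
    each component is unchanged.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must lie in [0, 1)")
    scale = 1.0 / (1.0 - p)
    return [0.0 if rng.random() < p else e * scale for e in error]


if __name__ == "__main__":
    rng = random.Random(0)
    signal = [0.9, -0.1, -0.1, -0.1]
    print(dropout_error_signal(signal, 0.5, rng))
```

The rescaling keeps the mean error signal equal to the undropped one, which is consistent with the observation that dropout in the learning channel slows training without changing where it converges.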

7 Mathematical Analysis

7.1 General Considerations

The general strategy for deriving more precise mathematical results is to proceed from simple architectures to more complex architectures, and from the linear case to the non-linear case. The linear case is more amenable to analysis and, in this case, RBP and SRBP are equivalent when there is only one hidden layer, or when all the layers have the same size. Thus we study the convergence of RBP to optimal solutions in linear architectures of increasing complexity: 𝒜[1, 1, 1], 𝒜[1, 1, 1, 1], 𝒜[1, 1, …, 1], 𝒜[1, N, 1], 𝒜[N, 1, N], and then the general 𝒜[N0, N1, N2] case with a single hidden layer. This is followed by the study of a non-linear 𝒜[1, 1, 1] case.

For each kind of linear network, under a set of standard assumptions, one can derive a set of non-linear–in fact polynomial–autonomous, ordinary differential equations (ODEs) for the average (or batch) time evolution of the synaptic weights under the RBP or SRBP algorithm. As soon as there is more than one variable and the system is non-linear, there is no general theory to understand the corresponding behavior. In fact, even in two dimensions, the problem of understanding the upper bound on the number and relative position of the limit cycles of a system of the form dx/dt = P(x, y) and dy/dt = Q(x, y), where P and Q are polynomials of degree n, is open–in fact this is Hilbert’s 16th problem in the field of dynamical systems [29, 19].

When considering the specific systems arising from the RBP/SRBP learning equations, one must first prove that these systems have a long-term solution. Note that polynomial ODEs may not have long-term solutions (e.g. $dx/dt = x^{\alpha}$, with x(0) ≠ 0, does not have long-term solutions for α > 1). If the trajectories are bounded, then long-term solutions exist. We are particularly interested in long-term solutions that converge to a fixed point, as opposed to limit cycles or other behaviors.

A number of interesting cases can be reduced to polynomial differential equations in one dimension. These can be understood using the following theorem.

Theorem 1

Let $dx/dt = Q(x) = k_0 + k_1 x + \dots + k_n x^n$ be a first-order polynomial differential equation in one dimension of degree n > 1, and let $r_1 < r_2 < \dots < r_k$ (k ≤ n) be the ordered list of distinct real roots of Q (the fixed points). If $x(0) = r_i$ then $x(t) = r_i$ and the solution is constant. If $r_i < x(0) < r_{i+1}$ then $x(t) \to r_i$ if Q < 0 in $(r_i, r_{i+1})$, and $x(t) \to r_{i+1}$ if Q > 0 in $(r_i, r_{i+1})$. If $x(0) < r_1$ and Q > 0 in the corresponding interval, then $x(t) \to r_1$. Otherwise, if Q < 0 in the corresponding interval, there is no long time solution and x(t) diverges to −∞ within a finite horizon. If $x(0) > r_k$ and Q < 0 in the corresponding interval, then $x(t) \to r_k$. Otherwise, if Q > 0 in the corresponding interval, there is no long time solution and x(t) diverges to +∞ within a finite horizon. A necessary and sufficient condition for the dynamics to always converge to a fixed point is that the degree n be odd, and the leading coefficient be negative.

Proof

The proof of this theorem is easy and can be visualized by plotting the function Q.
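As a numerical illustration of Theorem 1, the following Python sketch integrates dx/dt = Q(x) with a forward-Euler scheme for the example Q(x) = x − x³, which has odd degree, a negative leading coefficient, and real roots −1, 0, and 1. The example polynomial, the step size, and the function names are our choices, not part of the original analysis.

```python
def integrate(Q, x0, dt=1e-3, steps=20000):
    """Forward-Euler integration of dx/dt = Q(x) starting from x(0) = x0."""
    x = x0
    for _ in range(steps):
        x += dt * Q(x)
    return x


def Q(x):
    # Odd degree with negative leading coefficient; real roots at -1, 0 and 1.
    return x - x ** 3


if __name__ == "__main__":
    for x0 in (-3.0, -0.5, 0.0, 0.5, 3.0):
        print(x0, "->", round(integrate(Q, x0), 6))
```

Starting points in (0, 1), where Q > 0, move up to the root 1; starting points above 1, where Q < 0, move down to 1; the symmetric statements hold for −1. The root 0 is a fixed point that is reached only when x(0) = 0.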

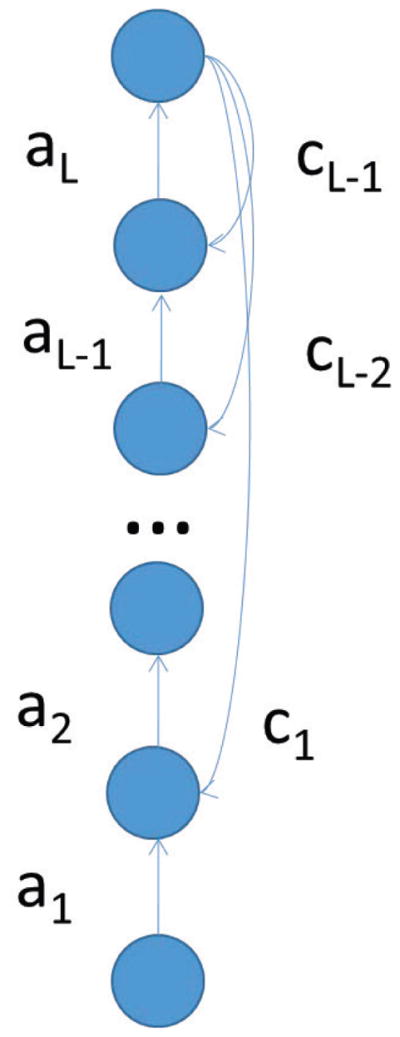

Finally, in general the matrices in the forward channel are denoted by A1, A2, …, and the matrices in the learning channel are denoted by C1, C2, … Theorems are stated in concise form and additional important facts are contained in the proofs.

7.2 The Simplest Linear Chain: 𝒜[1, 1, 1]

Derivation of the System

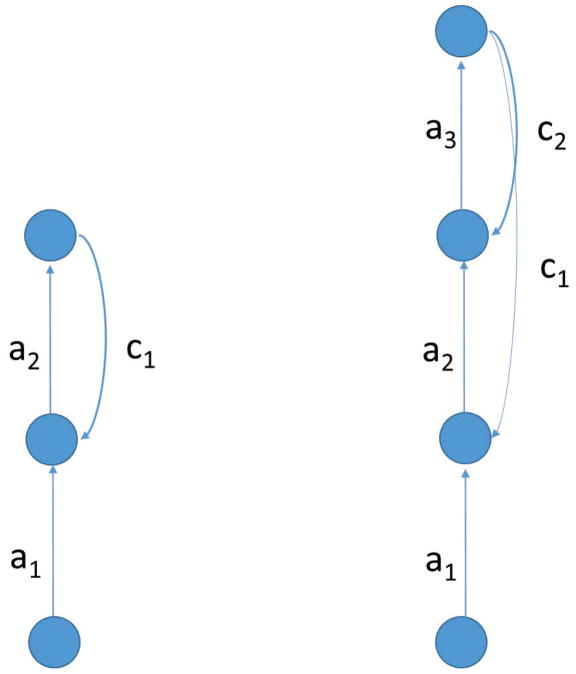

The simplest case corresponds to a linear 𝒜[1, 1, 1] architecture (Figure 10). Let us denote by a1 and a2 the weights in the first and second layer, and by c1 the random weight of the learning channel. In this case, we have O(t) = a1a2I(t) and the learning equations are given by:

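$$\Delta a_2 = \eta\,(T - O)\,a_1 I = \eta\,(T - a_1 a_2 I)\,a_1 I, \qquad \Delta a_1 = \eta\,c_1 (T - O)\,I = \eta\,c_1 (T - a_1 a_2 I)\,I \tag{21}$$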

Figure 10.

Left: 𝒜[1, 1, 1] architecture. The weights a1 and a2 are adjustable, and the feedback weight c1 is constant. Right: 𝒜[1, 1, 1, 1] architecture. The weights a1, a2, and a3 are adjustable, and the feedback weights c1 and c2 are constant.

When averaged over the training set:

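$$E(\Delta a_2) = \eta\,a_1(\alpha - \beta a_1 a_2), \qquad E(\Delta a_1) = \eta\,c_1(\alpha - \beta a_1 a_2) \tag{22}$$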

where α = E(IT) and β = E(I2). With the proper scaling of the learning rate (η = Δt) this leads to the non-linear system of coupled differential equations for the temporal evolution of a1 and a2 during learning:

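$$\frac{da_1}{dt} = c_1(\alpha - \beta a_1 a_2) = c_1(\alpha - \beta P), \qquad \frac{da_2}{dt} = a_1(\alpha - \beta a_1 a_2) = a_1(\alpha - \beta P) \tag{23}$$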

Note that the dynamic of P = a1a2 is given by:

$$\frac{dP}{dt} = a_1\frac{da_2}{dt} + a_2\frac{da_1}{dt} = (a_1^2 + a_2 c_1)(\alpha - \beta P) \tag{24}$$

The error is given by:

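$$\mathcal{E} = \frac{1}{2}E\big[(T - PI)^2\big] = \frac{1}{2}E(T^2) - \alpha P + \frac{1}{2}\beta P^2 \tag{25}$$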

and:

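$$\frac{d\mathcal{E}}{dP} = -\alpha + \beta P, \qquad \frac{\partial \mathcal{E}}{\partial a_i} = (-\alpha + \beta P)\frac{\partial P}{\partial a_i} = (-\alpha + \beta P)\frac{P}{a_i} \tag{26}$$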

the last equality requires ai ≠ 0.
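The behavior of this system is easy to explore numerically. The Python sketch below integrates the averaged dynamics with a forward-Euler scheme, assuming they take the form da1/dt = c1(α − βP) and da2/dt = a1(α − βP) with P = a1a2, which is what the update rules above give after averaging. The function name, step size, and starting points are illustrative choices.

```python
def simulate_chain_111(a1, a2, c1, alpha=1.0, beta=1.0, dt=1e-3, steps=20000):
    """Forward-Euler integration of the averaged RBP dynamics for A[1, 1, 1].

    Assumed system: da1/dt = c1 * (alpha - beta * a1 * a2)
                    da2/dt = a1 * (alpha - beta * a1 * a2)
    """
    for _ in range(steps):
        g = alpha - beta * a1 * a2
        # Simultaneous update: both right-hand sides use the old a1.
        a1, a2 = a1 + dt * c1 * g, a2 + dt * a1 * g
    return a1, a2


if __name__ == "__main__":
    for start in ((0.5, 0.5), (1.0, -2.0)):
        a1, a2 = simulate_chain_111(*start, c1=1.0)
        print(start, "->", (round(a1, 4), round(a2, 4)), "P =", round(a1 * a2, 6))
```

In both runs the product P approaches α/β = 1, including the run that starts in the lower half plane, where a2 and c1 initially have opposite signs.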

Theorem 2

The system in Equation 23 always converges to a fixed point. Furthermore, except for trivial cases associated with c1 = 0, starting from any initial conditions the system converges to a fixed point corresponding to a global minimum of the quadratic error function. All the fixed points are located on the hyperbolas given by α − βP = 0 and are global minima of the error function. All the fixed points are attractors except those that are interior to a certain parabola. For any starting point, the final fixed point can be calculated by solving a cubic equation.

Proof

As this is the first example, we first deal with the trivial cases in detail. For subsequent systems, we will skip the trivial cases entirely.

Trivial Cases

If β = 0 then we must have I = 0 and thus α = 0. As a result the activity of the input, hidden, and output neurons will always be 0. Therefore the weights a1 and a2 will remain constant (da1/dt = da2/dt = 0) and equal to their initial values a1(0) and a2(0). The error will also remain constant, and equal to 0 if and only if T = 0. Thus from now on we can assume that β > 0.

If c1 = 0 then the lower weight a1 never changes and remains equal to its initial value. If this initial value satisfies a1(0) = 0, then the activity of the hidden and output unit remains equal to 0 at all times, and thus a2 remains constant and equal to its initial value a2 = a2(0). The error remains constant and equal to 0 if and only if T is always 0. If a1(0) ≠ 0, then the error is a simple quadratic convex function of a2 and since the rule for adjusting a2 is simply gradient descent, the value of a2 will converge to its optimal value given by: a2 = α/(βa1(0)).

General Case

Thus from now on, we can assume that β > 0 and c1 ≠ 0. Furthermore, it is easy to check that changing the sign of α corresponds to a reflection about the a2-axis. Likewise, changing the sign of c1 corresponds to a reflection about the origin (i.e. across both the a1 and a2 axes). Thus in short, it is sufficient to focus on the case where: α > 0, β > 0, and c1 > 0. In this case, the critical points for a1 and a2 are given by:

$$\alpha - \beta P = 0 \quad\Longleftrightarrow\quad a_1 a_2 = \frac{\alpha}{\beta} \tag{27}$$

which corresponds to two hyperbolas in the two-dimensional (a1, a2) plane, in the first and third quadrant for α = E(IT) > 0. Note that these critical points do not depend on the feedback weight c1. All these critical points correspond to global minima of the error function. Furthermore, the critical points of P also include the parabola:

$$a_1^2 + c_1 a_2 = 0 \quad\Longleftrightarrow\quad a_2 = -\frac{a_1^2}{c_1} \tag{28}$$

(Figure 11). These critical points are dependent on the weights in the learning channel. This parabola intersects the hyperbola a1a2 = P = α/β at one point with coordinates: $a_1 = (-c_1\alpha/\beta)^{1/3}$ and $a_2 = -c_1^{-1/3}(\alpha/\beta)^{2/3}$.

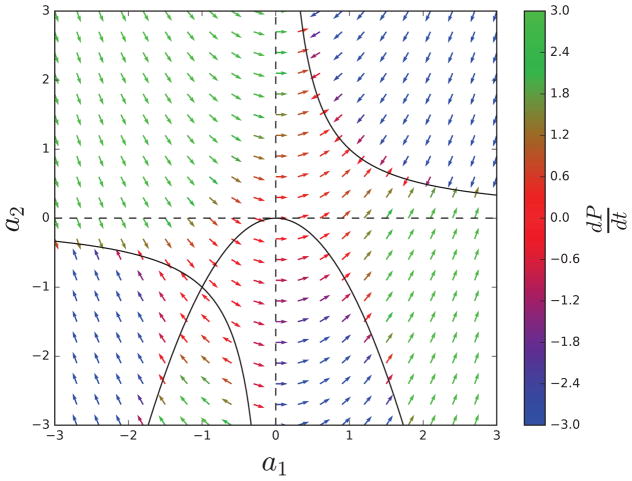

Figure 11.

Vector field for the 𝒜[1, 1, 1] linear case with c1 = 1, α = 1, and β = 1. a1 corresponds to the horizontal axis and a2 corresponds to the vertical axis. The critical points correspond to the two hyperbolas, and all critical points are fixed points and global minima of the error function. Arrows are colored according to the value of dP/dt, showing how the critical points inside the parabola are unstable. All other critical points are attractors. Reversing the sign of α leads to a reflection across the a2-axis; reversing the sign of c1 leads to a reflection across both the a1 and a2 axes.

In the upper half plane, where a2 and c1 are congruent and both positive, the dynamics is simple to understand. For instance in the first quadrant where a1, a2, c1 > 0, if α − βP > 0 then da1/dt > 0, da2/dt > 0, and dP/dt > 0 everywhere and therefore the gradient vector flow is directed towards the hyperbola of critical points. If started in this region, a1, a2, and P will grow monotonically until a critical point is reached and the error will decrease monotonically towards a global minimum. If α − βP < 0 then da1/dt < 0, da2/dt < 0, and dP/dt < 0 everywhere and again the vector flow is directed towards the hyperbola of critical points. If started in this region, a1, a2, and P will decrease monotonically until a critical point is reached and the error will decrease monotonically towards a global minimum. A similar situation is observed in the second quadrant where a1 < 0 and a2 > 0.

More generally, if a2 and c1 have the same sign, i.e. are congruent as in BP, then $a_1^2 + a_2 c_1 > 0$ and P will increase if α − βP > 0, and decrease if α − βP < 0. Note however that this is also true in general when c1 is small regardless of its sign, relative to a1 and a2, since in this case it is still true that $a_1^2 + a_2 c_1$ is positive. This remains true even if c1 varies, as long as it is small. When c1 is small, the dynamics is dominated by the top layer. The lower layer changes slowly and the top layer adapts rapidly so that the system again converges to a global minimum. When a2 = c1 one recovers the convergent dynamic of BP, as dP/dt always has the same sign as α − βP. However, in the lower half plane, the situation is slightly more complicated (Figure 11).

To solve the dynamics in the general case, from Equation 23 we get:

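$$\frac{da_2}{da_1} = \frac{a_1}{c_1} \tag{29}$$

which gives $a_2 = a_1^2/(2c_1) + C$ for some constant C, so that finally:

$$a_2 = \frac{a_1^2}{2c_1} + a_2(0) - \frac{a_1(0)^2}{2c_1} \tag{30}$$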

Given a starting point a1(0) and a2(0), the system will follow a trajectory given by the parabola in Equation 30 until it converges to a critical point (global optimum) where da1/dt = da2/dt = 0. To find the specific critical point to which it converges, Equations 30 and 27 must be satisfied simultaneously, which leads to the depressed cubic equation:

$$a_1^3 + \big(2c_1 a_2(0) - a_1(0)^2\big)\,a_1 - \frac{2c_1\alpha}{\beta} = 0 \tag{31}$$

which can be solved using the standard formula for the roots of cubic equations. Note that the parabolic trajectories contained in the upper half plane intersect the critical hyperbola in only one point and therefore the equation has a single real root. In the lower half plane, the parabolas associated with the trajectories can intersect the hyperbolas in 1, 2, or 3 distinct points corresponding to 1 real root, 2 real roots (1 being double), and 3 real roots. The double root corresponds to the point $-(c_1\alpha/\beta)^{1/3}$ associated with the intersection of the parabola of Equation 30 with both the hyperbola of critical points a1a2 = α/β and the parabola of additional critical points for P given by Equation 28.
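The fixed point reached from a given starting point can also be located numerically rather than with the closed-form cubic formula. The Python sketch below assumes that the trajectory is the parabola a2 = a1²/(2c1) + k, with k fixed by the initial condition, and that along it da1/dt = −(β/2)(a1³ + p a1 + q) with p = 2c1k and q = −2c1α/β (this follows from substituting the parabola into da1/dt = c1(α − βa1a2)). It then walks from a1(0) in the direction of motion to the first sign change of the cubic and refines the root by bisection. The function name and the scanning step are illustrative assumptions; tangential (double) roots and pairs of roots closer than the scanning step are not handled.

```python
def predicted_fixed_point(a1_0, a2_0, c1, alpha, beta, step=1e-3):
    """Fixed point reached by the A[1, 1, 1] RBP dynamics from (a1_0, a2_0).

    Assumes beta > 0 and c1 != 0. Returns the pair (a1, a2) at convergence.
    """
    k = a2_0 - a1_0 ** 2 / (2.0 * c1)       # constant of the parabolic trajectory
    p = 2.0 * c1 * k
    q = -2.0 * c1 * alpha / beta

    def cubic(x):
        return x ** 3 + p * x + q

    f0 = cubic(a1_0)
    if f0 == 0.0:
        return a1_0, a2_0                   # already at a fixed point
    # da1/dt = -(beta / 2) * cubic(a1): a1 increases where the cubic is negative.
    direction = 1.0 if f0 < 0.0 else -1.0
    lo, hi = a1_0, a1_0 + direction * step
    while cubic(hi) * f0 > 0.0:             # scan until the cubic changes sign
        lo, hi = hi, hi + direction * step
    for _ in range(60):                     # bisection on the bracketing interval
        mid = 0.5 * (lo + hi)
        if cubic(mid) * f0 > 0.0:
            lo = mid
        else:
            hi = mid
    a1 = 0.5 * (lo + hi)
    return a1, a1 ** 2 / (2.0 * c1) + k


if __name__ == "__main__":
    print(predicted_fixed_point(1.0, -2.0, c1=1.0, alpha=1.0, beta=1.0))
```

For the starting point (1, −2) with c1 = α = β = 1, the cubic is a1³ − 5a1 − 2 = (a1 + 2)(a1² − 2a1 − 1), with roots −2, 1 − √2, and 1 + √2. Since a1 initially increases, the trajectory ends at a1 = 1 + √2 and a2 = √2 − 1, whose product is 1 = α/β.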

When there are multiple roots, the convergence point of each trajectory is easily identified by looking at the derivative vector flow (Figure 11). Note on the figure that all the points on the critical hyperbolas are stable attractors, except for those in the lower half-plane that satisfy both a1a2 = α/β and $a_1^2 + c_1 a_2 < 0$. This can be shown by linearizing the system around its critical points.

Linearization Around Critical Points

If we consider a small deviation a1 + u and a2 + v around a critical point a1, a2 (satisfying α − βa1a2 = 0) and linearize the corresponding system, we get:

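$$\frac{du}{dt} = -\beta c_1\,(a_2 u + a_1 v), \qquad \frac{dv}{dt} = -\beta a_1\,(a_2 u + a_1 v) \tag{32}$$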

with a1a2 = α/β. If we let w = a2u + a1v we have:

$$\frac{dw}{dt} = a_2\frac{du}{dt} + a_1\frac{dv}{dt} = -\beta\,(a_1^2 + c_1 a_2)\,w \tag{33}$$

Thus if $a_1^2 + c_1 a_2 > 0$, w converges to zero and a1, a2 is an attractor. In particular, this is always the case when c1 is very small, or c1 has the same sign as a2. If $a_1^2 + c_1 a_2 < 0$, w diverges to +∞, and corresponds to unstable critical points as described above. If $a_1^2 + c_1 a_2 = 0$, w is constant.

Finally, note that in many cases, for instance for trajectories in the upper half plane, the value of P along the trajectories increases or decreases monotonically towards the global optimum value. However this is not always the case and there are trajectories where dP/dt changes sign, but this can happen only once.

7.3 Adding Depth: the Linear Chain 𝒜[1, 1, 1, 1]

Derivation of the System

In the case of a linear 𝒜[1, 1, 1, 1] architecture, for notational simplicity, let us denote by a1, a2 and a3 the forward weights, and by c1 and c2 the random weights of the learning channel (note the index is equal to the target layer). In this case, we have O(t) = a1a2a3I(t) = PI(t). The learning equations are:

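$$\Delta a_3 = \eta\,(T - O)\,a_1 a_2 I, \qquad \Delta a_2 = \eta\,c_2 (T - O)\,a_1 I, \qquad \Delta a_1 = \eta\,c_1 (T - O)\,I \tag{34}$$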

When averaged over the training set:

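$$E(\Delta a_3) = \eta\,a_1 a_2(\alpha - \beta P), \qquad E(\Delta a_2) = \eta\,c_2 a_1(\alpha - \beta P), \qquad E(\Delta a_1) = \eta\,c_1(\alpha - \beta P) \tag{35}$$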

where P = a1a2a3. With the proper scaling of the learning rate (η = Δt) this leads to the non-linear system of coupled differential equations for the temporal evolution of a1, a2 and a3 during learning:

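$$\frac{da_1}{dt} = c_1(\alpha - \beta P), \qquad \frac{da_2}{dt} = c_2 a_1(\alpha - \beta P), \qquad \frac{da_3}{dt} = a_1 a_2(\alpha - \beta P) \tag{36}$$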

The dynamic of P = a1a2a3 is given by:

$$\frac{dP}{dt} = \big(c_1 a_2 a_3 + c_2 a_1^2 a_3 + a_1^2 a_2^2\big)(\alpha - \beta P) \tag{37}$$

Theorem 3

Except for trivial cases (associated with c1 = 0 or c2 = 0), starting from any initial conditions the system in Equation 36 converges to a fixed point, corresponding to a global minimum of the quadratic error function. All the fixed points are located on the hypersurface given by α − βP = 0 and are global minima of the error function. Along any trajectory, and for each i, ai+1 is a quadratic function of ai. For any starting point, the final fixed point can be calculated by solving a polynomial equation of degree seven.

Proof

If c1 = 0, a1 remains constant and thus we are back to the linear case of an 𝒜[1, 1, 1] architecture where the inputs I are replaced by a1I. Likewise, if c2 = 0, a2 remains constant and the problem can again be reduced to the 𝒜[1, 1, 1] case with the proper adjustments. Thus for the rest of this section we can assume c1 ≠ 0 and c2 ≠ 0.

The critical points of the system correspond to α − βP = 0 and do not depend on the weights in the learning channel. These critical points correspond to global minima of the error function. These critical points are also critical points for the product P. Additional critical points for P are provided by the hypersurface: $c_1 a_2 a_3 + c_2 a_1^2 a_3 + a_1^2 a_2^2 = 0$ with (a1, a2, a3) in $\mathbb{R}^3$.

The dynamics of the system can be solved by noting that Equation 36 yields:

$$c_1\frac{da_2}{dt} = c_2 a_1\frac{da_1}{dt}, \qquad c_2\frac{da_3}{dt} = a_2\frac{da_2}{dt} \tag{38}$$

As a result:

$$a_2 = \frac{c_2}{2c_1}a_1^2 + C_1 \tag{39}$$

and:

$$a_3 = \frac{1}{2c_2}a_2^2 + C_2 \tag{40}$$

Substituting these results in the first equation of the system gives:

| (41) |

and hence:

| (42) |

In short da1/dt = Q(a1) where Q is a polynomial of degree 7 in a1. By expanding and simplifying Equation 42, it is easy to see that the leading term of Q is negative and given by $-\beta c_2^2 a_1^7/(16 c_1^2)$. Therefore, using Theorem 1, for any initial conditions a1(0), a1(t) converges to a finite fixed point. Since a2 is a quadratic function of a1 it also converges to a finite fixed point, and similarly for a3. Thus in the general case the system always converges to a global minimum of the error function satisfying α − βP = 0. The hypersurface depends on c1, c2 and provides additional critical points for the product P. It can be shown again by linearization that this hypersurface separates stable from unstable fixed points.

As in the previous case, small weights and congruent weights can help learning but are not necessary. In particular, if c1 and c2 are small, or if c1 is small and c2 is congruent (with a3), then $c_1 a_2 a_3 + c_2 a_1^2 a_3 + a_1^2 a_2^2 > 0$ and dP/dt has the same sign as α − βP.

7.4 The General Linear Chain: 𝒜[1, …, 1]

Derivation of the System

The analysis can be extended immediately to a linear chain architecture 𝒜[1, …, 1] of arbitrary length (Figure 12). In this case, let a1, a2, …, aL denote the forward weights and c1, …, cL−1 denote the feedback weights. Using the same derivation as in the previous cases and letting O = PI = a1a2 … aLI gives the system:

$$\Delta a_i = \eta\,c_i\,(T - O)\,a_1 a_2 \cdots a_{i-1}\,I \tag{43}$$

for i = 1, …, L. Taking expectations as usual leads to the set of differential equations:

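$$\frac{da_1}{dt} = c_1(\alpha - \beta P), \qquad \frac{da_2}{dt} = c_2 a_1(\alpha - \beta P), \qquad \dots, \qquad \frac{da_L}{dt} = a_1 \cdots a_{L-1}(\alpha - \beta P) \tag{44}$$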

or, in more compact form:

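$$\frac{da_i}{dt} = c_i \Big(\prod_{k=1}^{i-1} a_k\Big)(\alpha - \beta P), \qquad i = 1, \dots, L \tag{45}$$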

with cL = 1. As usual, $P = a_1 a_2 \cdots a_L$, α = E(TI), and β = E(I²). A simple calculation yields:

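$$\frac{dP}{dt} = \sum_{i=1}^{L} \frac{\partial P}{\partial a_i}\frac{da_i}{dt} = (\alpha - \beta P)\sum_{i=1}^{L} c_i \Big(\prod_{k<i} a_k\Big)\Big(\prod_{k \neq i} a_k\Big) = (\alpha - \beta P)\sum_{i=1}^{L} c_i \Big(\prod_{k<i} a_k\Big)\frac{P}{a_i} \tag{46}$$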

the last equality requiring ai ≠ 0 for every i.

Figure 12.

Left: 𝒜[1, …, 1] architecture. The weights ai are adjustable, and the feedback weight ci are fixed. The index of each parameter is associated with the corresponding target layer.

Theorem 4

Except for trivial cases, starting from any initial conditions the system in Equation 44 converges to a fixed point, corresponding to a global minimum of the quadratic error function. All the fixed points are located on the hypersurface given by α − βP = 0 and are global minima of the error function. Along any trajectory, and for each i, $a_{i+1}$ is a quadratic function of $a_i$. For any starting point, the final fixed point can be calculated by solving a polynomial equation of degree $2^L - 1$.

Proof

Again, when all the weights in the learning channel are non zero, the critical points correspond to the curve α − βP = 0. These critical points are independent of the weights in the learning channel and correspond to global minima of the error function. Additional critical points for the product P = a1 … aL are given by the surface S = 0, where $S = \sum_{i=1}^{L} c_i \big(\prod_{k<i} a_k\big)\big(\prod_{k \neq i} a_k\big)$ and $c_L = 1$. These critical points are dependent on the weights in the learning channel. If the ci are small or congruent with the respective feedforward weights, then S > 0 and dP/dt has the same sign as α − βP. Thus small or congruent weights can help the learning but they are not necessary.

To see the convergence, from Equation 45, we have:

$$c_i\frac{da_{i+1}}{dt} = c_{i+1}\,a_i\,\frac{da_i}{dt}, \qquad i = 1, \dots, L-1 \tag{47}$$

Note that if one of the derivatives dai/dt is zero, then they are all zero and thus there cannot be any limit cycles. Since in the general case all the ci are non zero, we have:

$$a_{i+1} = \frac{c_{i+1}}{2c_i}\,a_i^2 + C_i \tag{48}$$

showing that there is a quadratic relationship between $a_{i+1}$ and $a_i$, with no linear term, for every i. Thus every $a_i$ can be expressed as a polynomial function of $a_1$ of degree $2^{i-1}$, containing only even terms:

| (49) |

and:

| (50) |

By substituting these relationships in the equation for the derivative of a1, we get da1/dt = Q(a1) where Q is a polynomial with an odd degree n given by:

$$n = 1 + 2 + 4 + \dots + 2^{L-1} = 2^L - 1 \tag{51}$$

Furthermore, from Equation 50 it can be seen that the leading coefficient is negative. Therefore, using Theorem 1, for any set of initial conditions the system must converge to a finite fixed point. For a given initial condition, the point of convergence can be found by looking at the nearby roots of the polynomial Q of degree n.
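The convergence result for chains can be illustrated numerically. The Python sketch below integrates, with forward Euler, dynamics of the form dai/dt = ci a1⋯a(i−1) (α − βP) with cL = 1, which is our reading of the compact form of the system above, and also returns the degree 2^L − 1 of the polynomial Q. Function names, step size, and initial values are illustrative assumptions.

```python
def fixed_point_degree(L):
    """Degree 1 + 2 + ... + 2**(L - 1) of the polynomial Q for a chain with L weights."""
    return 2 ** L - 1


def simulate_linear_chain(a, c, alpha=1.0, beta=1.0, dt=1e-3, steps=50000):
    """Forward-Euler integration of the averaged RBP dynamics of a linear chain.

    a: forward weights a_1..a_L; c: feedback weights c_1..c_{L-1}.
    Assumed system: da_i/dt = c_i * a_1 * ... * a_{i-1} * (alpha - beta * P),
    with c_L = 1 and P = a_1 * ... * a_L.
    """
    a = list(a)
    c = list(c) + [1.0]                      # convention c_L = 1
    for _ in range(steps):
        product = 1.0
        for w in a:
            product *= w
        g = alpha - beta * product
        prefix = 1.0                         # running product a_1 * ... * a_{i-1}
        deltas = []
        for a_i, c_i in zip(a, c):
            deltas.append(dt * c_i * prefix * g)
            prefix *= a_i
        a = [a_i + d for a_i, d in zip(a, deltas)]
    return a


if __name__ == "__main__":
    weights = simulate_linear_chain([0.5, 0.5, 0.5], [1.0, 1.0])
    P = weights[0] * weights[1] * weights[2]
    print(weights, "P =", round(P, 6), "degree of Q:", fixed_point_degree(3))
```

For L = 2 and L = 3 the degree formula gives 3 and 7, matching the cubic of the 𝒜[1, 1, 1] case and the degree-seven polynomial of the 𝒜[1, 1, 1, 1] case.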

Gradient Descent Equations

For comparison, the gradient descent equations are:

| (52) |

(the equality in the middle requires that ai ≠ 0). In this case, the coupling between neighboring terms is given by:

| (53) |

Solving this equation yields:

| (54) |

7.5 Adding Width (Expansive): 𝒜[1,N, 1]

Derivation of the System

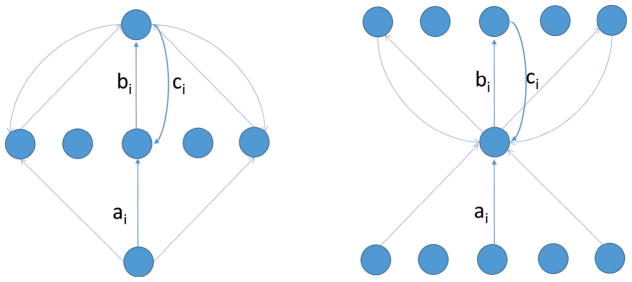

Consider a linear 𝒜[1,N, 1] architecture (Figure 13). For notational simplicity, we let a1, …, aN be the weights in the lower layer, b1, …, bN be the weights in the upper layer, and c1, …, cN the random weights of the learning channel. In this case, we have O(t) = Σi aibiI(t). We let P = Σi aibi. The learning equations are:

$$\Delta b_i = \eta\,(T - O)\,a_i I, \qquad \Delta a_i = \eta\,c_i (T - O)\,I \tag{55}$$

Figure 13.

Left: Expansive 𝒜[1, N, 1] Architecture. Right: Compressive 𝒜[N, 1, N] Architecture. In both cases, the parameters ai and bi are adjustable, and the parameters ci are fixed.

When averaged over the training set:

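$$E(\Delta b_i) = \eta\,a_i(\alpha - \beta P), \qquad E(\Delta a_i) = \eta\,c_i(\alpha - \beta P) \tag{56}$$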

where α = E(IT) and β = E(I2). With the proper scaling of the learning rate (η = Δt) this leads to the non-linear system of coupled differential equations for the temporal evolution of ai and bi during learning:

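$$\frac{da_i}{dt} = c_i(\alpha - \beta P), \qquad \frac{db_i}{dt} = a_i(\alpha - \beta P), \qquad i = 1, \dots, N \tag{57}$$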

The dynamic of P = Σi aibi is given by:

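$$\frac{dP}{dt} = \sum_{i=1}^{N}\Big(a_i\frac{db_i}{dt} + b_i\frac{da_i}{dt}\Big) = (\alpha - \beta P)\sum_{i=1}^{N}\big(a_i^2 + b_i c_i\big) \tag{58}$$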

Theorem 5

Except for trivial cases, starting from any initial conditions the system in Equation 57 converges to a fixed point, corresponding to a global minimum of the quadratic error function. All the fixed points are located on the hypersurface given by α − βP = 0 and are global minima of the error function. Along any trajectory, each bi is a quadratic polynomial function of ai. Each ai is an affine function of any other aj. For any starting point, the final fixed point can be calculated by solving a polynomial differential equation of degree 3.

Proof

Many of the features found in the linear chain are found again in this system using similar analyses. In the general case where the weights in the learning channel are non zero, the critical points are given by the surface α − βP = 0 and correspond to global optima. These critical points are independent of the weights in the learning channel. Additional critical points for the product P = Σi aibi are given by the surface $\sum_i (a_i^2 + b_i c_i) = 0$, which depends on the weights in the learning channel. If the ci’s are small, or congruent with the respective bi’s, then $\sum_i (a_i^2 + b_i c_i) > 0$ and dP/dt has the same sign as α − βP.

To address the convergence, Equation 57 leads to the vertical coupling between ai and bi:

$$\frac{db_i}{da_i} = \frac{a_i}{c_i} \quad\Longrightarrow\quad b_i = \frac{a_i^2}{2c_i} + C_i \tag{59}$$

for each i = 1, …, N. Thus the dynamics of the ai variables completely determines the dynamics of the bi variables, and one only needs to understand the behavior of the ai variables. In addition to the vertical coupling between ai and bi, there is a horizontal coupling between the ai variables given again by Equation 57 resulting in:

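$$\frac{1}{c_{i+1}}\frac{da_{i+1}}{dt} = \frac{1}{c_i}\frac{da_i}{dt} \quad\Longrightarrow\quad \frac{a_{i+1}}{c_{i+1}} - \frac{a_i}{c_i} = \text{constant} \tag{60}$$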

Thus, iterating, all the variables ai can be expressed as affine functions of a1 in the form:

$$a_i = \frac{c_i}{c_1}\,a_1 + K_i, \qquad K_i = a_i(0) - \frac{c_i}{c_1}\,a_1(0) \tag{61}$$

Thus solving the entire system can be reduced to solving for a1. The differential equation for a1 is of the form da1/dt = Q(a1) where Q is a polynomial of degree 3. Its leading term is the leading term of −c1βP. To find its leading term we have:

| (62) |

and thus the leading term of Q is given by where:

| (63) |

Thus the leading term of Q has a negative coefficient, and therefore a1 always converges to a finite fixed point, and so do all the other variables.
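The two couplings used in this proof can be observed in a simulation. The Python sketch below integrates, with forward Euler, dynamics of the form dai/dt = ci(α − βP) and dbi/dt = ai(α − βP) with P = Σi aibi, which is what the learning equations give after averaging, and reports the product P at the end. The function name and the numerical values are illustrative assumptions.

```python
def simulate_expansive(a, b, c, alpha=1.0, beta=1.0, dt=1e-3, steps=20000):
    """Forward-Euler integration of the averaged RBP dynamics for A[1, N, 1].

    Assumed system: da_i/dt = c_i * (alpha - beta * P)
                    db_i/dt = a_i * (alpha - beta * P),  P = sum_i a_i * b_i
    """
    a, b = list(a), list(b)
    for _ in range(steps):
        g = alpha - beta * sum(x * y for x, y in zip(a, b))
        # Both new lists are built from the old values of a and b.
        a, b = ([x + dt * ci * g for x, ci in zip(a, c)],
                [y + dt * x * g for x, y in zip(a, b)])
    return a, b


if __name__ == "__main__":
    a0, b0, c = [0.1, -0.2, 0.3], [0.2, 0.1, -0.1], [1.0, -0.5, 2.0]
    a, b = simulate_expansive(a0, b0, c)
    print("P =", round(sum(x * y for x, y in zip(a, b)), 6))
    # Horizontal coupling: (a_i - a_i(0)) / c_i is the same for every i.
    print([round((x - x0) / ci, 6) for x, x0, ci in zip(a, a0, c)])
```

Because every ai is driven by the same scalar factor α − βP, the quantities (ai − ai(0))/ci remain equal throughout, which is the affine relation between the ai used to reduce the system to a single equation in a1.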

7.6 Adding Width (Compressive): 𝒜[N, 1,N]

Derivation of the System

Consider a linear 𝒜[N, 1,N] architecture (Figure 13). The on-line learning equations are given by:

| (64) |

for i = 1, …, N. As usual taking expectations, using matrix notation and a small learning rate, leads to the system of differential equations:

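$$\frac{dA}{dt} = C\,(\Sigma_{TI} - BA\,\Sigma_{II}), \qquad \frac{dB}{dt} = (\Sigma_{TI} - BA\,\Sigma_{II})\,A^{t} \tag{65}$$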

Here A is a 1 × N matrix, B is an N × 1 matrix, and C is a 1 × N matrix, and Mt denotes the transpose of the matrix M. ΣII = E(IIt) and ΣTI = E(TIt) are N × N matrices associated with the data.

Lemma 1

Along the flow of the system defined by Equation 65, the solution satisfies:

| (66) |

where K is a constant depending only on the initial values.

Proof

The proof is immediate since:

| (67) |

where . The theorem is obtained by integration.

Theorem 6

In the case of an autoencoder with uncorrelated normalized data (Equation 68), the system converges to a fixed point satisfying A = βC, where β is a positive root of a particular cubic equation. At the fixed point, B = Ct/(β||C||²) and the product P = BA converges to CtC/||C||².

Proof

For an autoencoder with uncorrelated and normalized data, ΣTI = ΣII = Id. In this case the system can be written as:

$$\frac{dA}{dt} = C\,(\mathrm{Id} - BA), \qquad \frac{dB}{dt} = (\mathrm{Id} - BA)\,A^{t} \tag{68}$$

We define

| (69) |

and let A0 = A(0). Note that σ(t) ≥ K. We assume that C and A0 are linearly independent, otherwise the proof is easier. Then we have:

| (70) |

Therefore the solution A(t) must have the form:

| (71) |

which yields:

| (72) |

or:

| (73) |

From the above expressions, we know that both f and g are nonnegative. We also have

| (74) |

Since σ(t) ≥ K, g(t) is bounded, and thus

| (75) |

By a more general theorem shown in the next section, we know also that ||A|| is bounded and therefore f is also bounded. Using Equation 74, this implies that g(t) → 0 as t → ∞. Now we consider again the equation:

| (76) |

Now consider the cubic equation:

| (77) |

For t large enough, since g(t) → 0, we have:

| (78) |

Thus Equation 76 is close to the polynomial differential equation:

| (79) |

By Theorem 1, this system is always convergent to a positive root of Equation 77, and by comparison the system in Equation 76 must converge as well. This proves that f(t) → β as t → ∞, and in combination with g(t) → 0 as t → ∞, shows that A converges to βC. As A converges to a fixed point, the error function converges to a convex function and B performs gradient descent on this convex function and thus must also approach a fixed point. By the results in [2, 3], the solution must satisfy BAAt = At. When A = βC this gives: B = Ct/(βCCt) = Ct/(β||C||²). In this case, the product P = BA converges to the fixed point: CtC/||C||². The proof can easily be adapted to the slightly more general case where ΣII is a diagonal matrix.
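The statement of Theorem 6 can be illustrated with a short simulation. This is a sketch under stated assumptions rather than a definitive implementation: it takes the autoencoder system to be dA/dt = C(Id − BA), dB/dt = (Id − BA)At, which is what the general learning equations reduce to when ΣTI = ΣII = Id. The function name, the matrix C, and the initial values are hypothetical.

```python
import numpy as np

def simulate_autoencoder_n1n(A0, B0, C, dt=0.01, steps=5000):
    """Forward-Euler integration of dA/dt = C (Id - B A), dB/dt = (Id - B A) A^t."""
    A = np.array(A0, dtype=float)   # 1 x N forward weights into the hidden unit
    B = np.array(B0, dtype=float)   # N x 1 forward weights out of the hidden unit
    Id = np.eye(A.shape[1])
    for _ in range(steps):
        E = Id - B @ A              # N x N error term Id - P
        A, B = A + dt * (C @ E), B + dt * (E @ A.T)
    return A, B

# Hypothetical fixed learning-channel matrix and initial weights (N = 3).
C = np.array([[1.0, -0.5, 2.0]])
A0 = np.array([[0.1, 0.2, -0.1]])
B0 = np.array([[0.05], [-0.1], [0.1]])
A, B = simulate_autoencoder_n1n(A0, B0, C)

norm_C_sq = (C @ C.T).item()
beta = (A @ C.T).item() / norm_C_sq   # coefficient of A along C
P_limit = C.T @ C / norm_C_sq         # limit of P = BA predicted by Theorem 6
```

The value of `beta` depends on the initial conditions through the constant of Lemma 1, whereas the limit of the product P = BA depends only on C.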

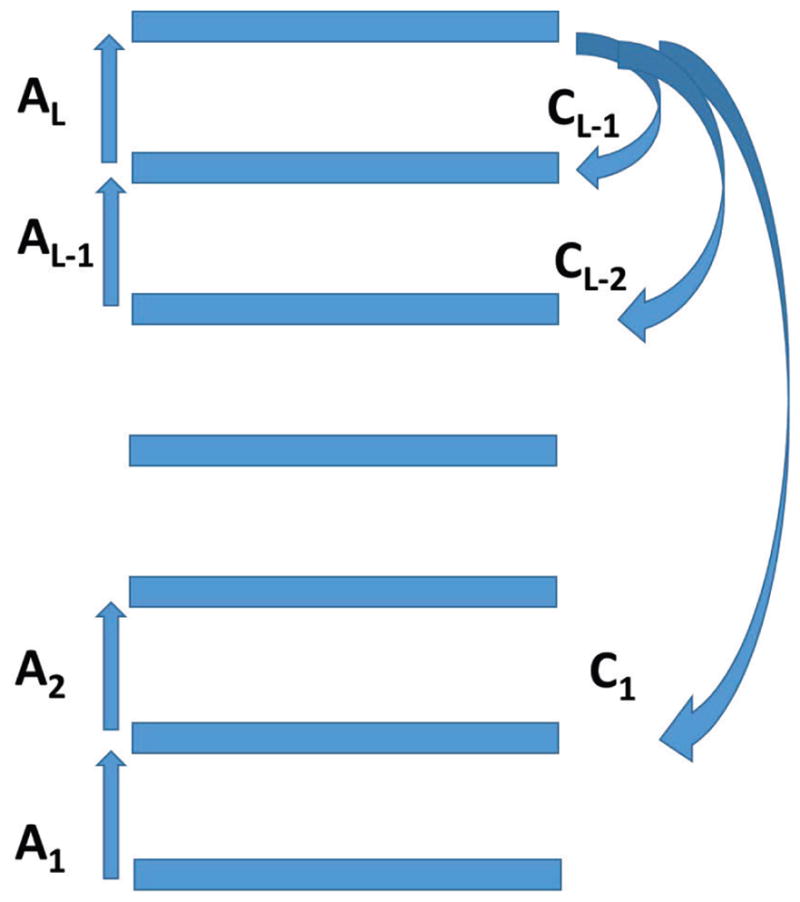

7.7 The General Linear Case: 𝒜[N0,N1, …, NL]

Derivation of the System

Although we cannot yet provide a solution for this case, it is still useful to derive its equations. We assume a general feedforward linear architecture (Figure 14) 𝒜[N0,N1, …, NL] with adjustable forward matrices A1, …, AL and fixed feedback matrices C1, …, CL−1 (and CL = Id). Each matrix Ai is of size Ni × Ni−1 and, in SRBP, each matrix Ci is of size Ni × NL. As usual, O(t) = PI(t) = AL ⋯ A1I(t).

Figure 14.

General linear case with an architecture 𝒜[N0, …, NL]. Each forward matrix Ai is adjustable and of size Ni × Ni−1. In SRBP, each feedback matrix Ci is fixed and of size Ni × NL.

Assuming the same learning rate everywhere, using matrix notation we have:

| (80) |

which, after taking averages, leads to the system of differential equations

$$\frac{dA_i}{dt} = C_i\,(\Sigma_{TI} - P\,\Sigma_{II})\,(A_{i-1} \cdots A_1)^{t} \tag{81}$$

with P = ALAL−1 …A1, ΣTI = E(TIt), and ΣII = E(IIt). ΣTI is an NL × N0 matrix and ΣII is an N0 × N0 matrix. In the case of an autoencoder, T = I and therefore ΣTI = ΣII. Equation 81 is true also for i = 1 and i = L with CL = Id, where Id is the identity matrix. These equations establish a coupling between the layers so that:

| (82) |

When the layers have the same sizes, the coupling can be written as:

$$C_{i+1}^{-1}\,\frac{dA_{i+1}}{dt} = C_i^{-1}\,\frac{dA_i}{dt}\,A_i^{t} \tag{83}$$

where we can assume that the random matrices Ci are invertible square matrices.

Gradient Descent Equations

For comparison, the gradient descent equations are given by:

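$$\frac{dA_i}{dt} = (A_L \cdots A_{i+1})^{t}\,(\Sigma_{TI} - P\,\Sigma_{II})\,(A_{i-1} \cdots A_1)^{t} \tag{84}$$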

resulting in the coupling:

$$A_{i+1}^{t}\,\frac{dA_{i+1}}{dt} = \frac{dA_i}{dt}\,A_i^{t} \tag{85}$$

and, by definition:

$$\frac{dA_i}{dt} = -\frac{\partial \mathcal{E}}{\partial A_i} \tag{86}$$

where ℰ = E(T − PI)²/2.

RBP Equations

Note that in the case of RBP with backward matrices C1, …, CL−1, as opposed to SRBP, one has the system of differential equations:

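$$\frac{dA_i}{dt} = C_i\,C_{i+1} \cdots C_{L-1}\,(\Sigma_{TI} - P\,\Sigma_{II})\,(A_{i-1} \cdots A_1)^{t} \tag{87}$$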

By letting Bi = Ci … CL−1 one obtains the SRBP equations; however, the size of the layers may impose constraints on the rank of the matrices Bi.
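The three sets of equations in this section differ only in the matrix that carries the error from the output layer back to layer i. The sketch below makes that explicit. It assumes the averaged update for layer i is Fi(ΣTI − PΣII)(Ai−1 … A1)t, with Fi = (AL … Ai+1)t for gradient descent, Fi = Ci for SRBP, and Fi = Ci … CL−1 for RBP. The helper names, layer sizes, and random matrices are hypothetical and chosen only for illustration.

```python
import numpy as np

def chain(mats, dim):
    """Return M_k ... M_1 for mats = [M_1, ..., M_k]; identity of size dim if empty."""
    out = np.eye(dim)
    for M in mats:
        out = M @ out
    return out

def expected_updates(A, feedback, S_TI, S_II):
    """dA_i/dt = F_i (S_TI - P S_II) (A_{i-1} ... A_1)^t, with F_i = feedback[i-1]."""
    n0 = A[0].shape[1]
    err = S_TI - chain(A, n0) @ S_II
    return [F @ err @ chain(A[:i], n0).T for i, F in enumerate(feedback)]

def bp_feedback(A):
    """Gradient descent: F_i = (A_L ... A_{i+1})^t, and F_L = Id."""
    return [chain(A[i + 1:], A[i].shape[0]).T for i in range(len(A))]

def rbp_feedback(Cs, n_out):
    """RBP: F_i = C_i C_{i+1} ... C_{L-1}, F_L = Id (C_i of size N_i x N_{i+1})."""
    F = [np.eye(n_out)]
    for Ci in reversed(Cs):
        F.insert(0, Ci @ F[0])
    return F

rng = np.random.default_rng(0)
sizes = [4, 3, 5, 2]                      # hypothetical N_0, ..., N_L
A = [rng.normal(size=(sizes[i + 1], sizes[i])) for i in range(3)]
S_II = np.eye(4)
S_TI = rng.normal(size=(2, 4))

# SRBP feedback matrices C_i are N_i x N_L; C_L = Id.
srbp = [rng.normal(size=(3, 2)), rng.normal(size=(5, 2)), np.eye(2)]
rbp = rbp_feedback([rng.normal(size=(3, 5)), rng.normal(size=(5, 2))], 2)

dA_bp = expected_updates(A, bp_feedback(A), S_TI, S_II)
dA_srbp = expected_updates(A, srbp, S_TI, S_II)
dA_rbp = expected_updates(A, rbp, S_TI, S_II)
```

Because the RBP products Bi = Ci … CL−1 pass through the intermediate layers, their rank is limited by the narrowest layer above layer i, which is the rank constraint mentioned in the text.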

7.8 The General Three-Layer Linear Case 𝒜[N0,N1,N2]

Derivation of the System

Here we let A1 be the N1 × N0 matrix of weights in the lower layer, A2 be the N2 × N1 matrix of weights in the upper layer, and C1 the N1 × N2 random matrix of weights in the learning channel. In this case, we have O(t) = A2A1I(t) = PI(t), ΣII = E(IIt) (N0 × N0), and ΣTI = E(TIt) (N2 × N0). The learning equations are given by:

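$$\frac{dA_1}{dt} = C_1\,(\Sigma_{TI} - P\,\Sigma_{II}), \qquad \frac{dA_2}{dt} = (\Sigma_{TI} - P\,\Sigma_{II})\,A_1^{t} \tag{88}$$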

resulting in the coupling:

$$C_1\,\frac{dA_2}{dt} = \frac{dA_1}{dt}\,A_1^{t} \tag{89}$$

The corresponding gradient descent equations are obtained immediately by replacing C1 with A2t, the transpose of A2.

Note that the two-layer linear case corresponds to the classical least squares method, which is well understood. The general theory of the three-layer linear case, however, is not well understood. In this section, we take a significant step towards providing a complete treatment of this case. One of the main results is that the system defined by Equation 88 has long-term existence, and C1P = C1A2A1 is convergent; thus, in short, the system is able to learn. However, this alone does not imply that the matrix-valued functions A1(t), A2(t) are individually convergent. We can prove the latter in special cases like 𝒜[N, 1,N] and 𝒜[1,N, 1] studied in the previous sections, as well as 𝒜[2, 2, 2].

We begin with the following theorem.

Theorem 7

The general three-layer linear system (Equation 88) always has long-term solutions. Moreover, ||A1|| is bounded.

Proof

As in Lemma 1, we have:

| (90) |

Thus we have:

| (91) |

It follows that:

| (92) |

where C0 is a constant matrix. Let:

| (93) |

Using Lemma 2 below, we have:

| (94) |

Since:

| (95) |

or:

| (96) |

using Equation 92, we have:

| (97) |

Using the second inequality in Lemma 2 below, we have:

| (98) |

for positive constants c1, …, c5. Since A1 has long-term existence, so does f. Note that it is not possible for f to be increasing as t → ∞ because if we had f′(t) ≥ 0, then we would have and thus f would be bounded ( ). But if f is not always increasing, at each local maximum point of f we have , which implies everywhere.

Lemma 2

There is a constant c1 > 0 such that

f ≥ c1||A1||²,

.

Proof

The first statement is obvious. To prove the second one, we observe that:

| (99) |

for some constants c1, c2 > 0.

To complete the proof of Theorem 7, we must estimate A2 to make sure it does not diverge at a finite time. Let

| (100) |

Then:

| (101) |

and thus:

| (102) |

Since we have shown that ||A1|| is bounded:

| (103) |

for some constant K. As a result, h ≤ K1t² + K2 or

| (104) |

Since for every t, 1/||A2|| is bounded, the system has long-term solutions.
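The conserved quantity that drives this proof can be checked numerically. The sketch below assumes the three-layer system has the form dA1/dt = C1(ΣTI − PΣII), dA2/dt = (ΣTI − PΣII)A1t, so that C1 dA2/dt = (dA1/dt)A1t and the symmetric matrix A1A1t − C1A2 − (C1A2)t stays constant along the flow. The function names, the 𝒜[3, 2, 3] sizes, and all numerical values are hypothetical.

```python
import numpy as np

def srbp_three_layer_rhs(A1, A2, C1, S_TI, S_II):
    """Right-hand side of the assumed three-layer SRBP system."""
    err = S_TI - A2 @ A1 @ S_II
    return C1 @ err, err @ A1.T

def integrate(A1, A2, C1, S_TI, S_II, dt=1e-3, steps=5000):
    """Forward-Euler integration over a time horizon of dt * steps."""
    for _ in range(steps):
        dA1, dA2 = srbp_three_layer_rhs(A1, A2, C1, S_TI, S_II)
        A1, A2 = A1 + dt * dA1, A2 + dt * dA2
    return A1, A2

def invariant(A1, A2, C1):
    """A1 A1^t - C1 A2 - (C1 A2)^t, constant along the exact flow."""
    M = C1 @ A2
    return A1 @ A1.T - M - M.T

# Hypothetical A[3, 2, 3] autoencoder with normalized data.
S_II = np.eye(3)
S_TI = np.eye(3)
C1 = np.array([[1.0, 0.0, 0.5], [0.0, 1.0, -0.5]])
A1_0 = 0.1 * np.array([[1.0, 0.0, -1.0], [0.5, 1.0, 0.0]])
A2_0 = 0.1 * np.array([[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]])

dA1, dA2 = srbp_three_layer_rhs(A1_0, A2_0, C1, S_TI, S_II)
A1_T, A2_T = integrate(A1_0, A2_0, C1, S_TI, S_II)
drift = np.max(np.abs(invariant(A1_T, A2_T, C1) - invariant(A1_0, A2_0, C1)))
```

Under forward Euler the invariant is only approximately conserved; the drift scales with the step size, so it shrinks as `dt` is reduced.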

The main result of this section is as follows.

Theorem 8: [Partial Convergence Theorem]

Along the flow of the system in Equation 88, A1 and C1A2 are uniformly bounded. Moreover, as t→∞ and:

| (105) |

Proof

Let:

| (106) |

Then:

| (107) |

It follows that:

Thus we have:

| (108) |

Here, for two matrices X and Y, we write X ≤ Y if and only if Y − X is a positive semidefinite matrix. Let:

Then:

By Theorem 7, there is a lower bound on the matrix V

for a constant matrix C. Thus as t → ∞, V = V (t) is convergent. Using the inequality above, the expression

| (109) |

is monotonically decreasing. Since A1 is bounded by Theorem 7, and is nonnegative, the expression is convergent. In particular, C1A2 is also bounded along the flow. By Equation 108, both A1 and C1A2 are L² integrable. Thus in fact we have pointwise convergence of C1A2A1. Since C1 may not be full rank, we call it partial convergence. If C1 has full rank (which in general is the case of interest), then as C1A2A1 is convergent, so is A2A1.

When does partial convergence imply the convergence of the solution (A1(t), A2(t))? The following result gives a sufficient condition.

Theorem 9

If the set of matrices A1, A2 satisfying:

| (110) |

is discrete, then A1(t) and C1A2(t) are convergent.

Proof

By the proof of Theorem 8, we know that A1(t), C1A2(t) are bounded, and the limiting points of the pair (A1(t), C1A2(t)) satisfy the relationships in Equation 110. If the set is discrete, then the limit must be unique and A1(t) and C1A2(t) converge.

If C1 has full rank, then the system in Equation (88) is convergent, provided the assumptions in Theorem 9 are satisfied. Applying this result to the 𝒜[1, N, 1] and 𝒜[N, 1, N] cases provides alternative proofs for Theorem 3 and Theorem 6. The details are omitted. Beyond these two cases, the algebraic set defined by Equation (110) is quite complicated to study. The first non-trivial case that can be analyzed corresponds to the 𝒜[2, 2, 2] architecture. In this special case, we can solve the convergence problem entirely as follows.

For the sake of simplicity, we assume that ΣII = ΣTI = C1 = I. Then the system associated with Equation (88) can be simplified to:

| (111) |

where A(t), B(t) are 2 × 2 matrix functions. By Theorem 8, we know that B(t)A(t) is convergent. In order to prove that B(t) and A(t) are individually convergent, we prove the following result.

Theorem 10

Let ℱ be the set of 2 × 2 matrices A, B satisfying the equations:

| (112) |

where K, L are fixed matrices. Then ℱ is a discrete set and the system defined by Equation 111 is convergent.

Proof

The proof is somewhat long and technical and thus is given in the Appendix. It uses basic tools from algebraic geometry.

Theorem 10 provides evidence that in general the algebraic set defined by Equation (110) might be discrete. Although at this moment we are not able to prove discreteness in the general case, this is a question of separate interest in mathematics (real algebraic geometry). The system defined by Equation (110) is an over-determined system of algebraic equations. For example, if A(t), B(t) are n × n matrices, and if C is non-singular, then the system contains n(n + 1) equations with n² unknowns. One can define the Koszul complex [9] associated with these equations. Using the complex, given specific matrices C, ΣTI, ΣII, K, L, there is a constructive algorithmic way to determine whether the set is discrete. If it is, then the corresponding system of ODE is convergent.1

7.9 A Non-Linear Case

As can be expected, the case of non-linear networks is challenging to analyze mathematically. In the linear case, the transfer functions are the identity and thus all the derivatives of the transfer functions are equal to 1 and thus play no role. The simulations reported above provide evidence that in the non-linear case the derivatives of the activation functions play a role in both RBP and SRBP. Here we study a very simple non-linear case which provides some further evidence.

We consider a simple 𝒜[1, 1, 1] architecture, with a single power function non-linearity with power μ ≠ 1 in the hidden layer, so that O1(S1) = (S1)^μ. The final output neuron is linear, O2(S2) = S2, and thus the overall input-output relationship is: O = a2(a1I)^μ. Setting μ to 1/3, for instance, provides an S-shaped transfer function for the hidden layer, and setting μ = 1 corresponds to the linear case analyzed in a previous section. The weights are a1 and a2 in the forward network, and c1 in the learning channel.

Derivation of the System Without Derivatives

When no derivatives are included, one obtains:

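$$\frac{da_1}{dt} = c_1\,(\gamma - \delta\,a_2 a_1^{\mu}), \qquad \frac{da_2}{dt} = a_1^{\mu}\,(\alpha - \beta\,a_2 a_1^{\mu}) \tag{113}$$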

where here α = E(TI^μ), β = E(I^(2μ)), γ = E(TI), and δ = E(I^(μ+1)). Except for trivial cases, such a system cannot have fixed points since in general one cannot have α − βa2a1^μ = 0 and γ − δa2a1^μ = 0 at the same time.

Derivation of the System With Derivatives

In contrast, when the derivative of the forward activation is included the system becomes:

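$$\frac{da_1}{dt} = \mu\,c_1\,a_1^{\mu-1}\,(\alpha - \beta\,a_2 a_1^{\mu}), \qquad \frac{da_2}{dt} = a_1^{\mu}\,(\alpha - \beta\,a_2 a_1^{\mu}) \tag{114}$$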

This leads to the coupling:

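$$\frac{a_1}{\mu c_1}\,\frac{da_1}{dt} = \frac{da_2}{dt} \quad\text{or}\quad a_2 = \frac{a_1^2}{2\mu c_1} + K \tag{115}$$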

excluding as usual the trivial cases where c1 = 0 or μ = 0. Here K is a constant depending only on a1(0) and a2(0). The coupling shows that if da1/dt = 0 then da2/dt = 0 and therefore in general limit cycles are not possible. The critical points are given by the equation:

$$\alpha - \beta\,a_2 a_1^{\mu} = 0 \tag{116}$$

and do not depend on the weight in the learning channel. Thus, in the non-trivial cases, a2 is a hyperbolic function of a1^μ. It is easy to see, at least in some cases, that the system converges to a fixed point. For instance, when α > 0, c1 > 0, μ > 1, and a1(0) and a2(0) are small and positive, then da1/dt > 0 and da2/dt > 0, so both weights increase monotonically and α − βa2a1^μ decreases monotonically until convergence to a critical point. Thus in general the system including the derivatives of the forward activations is simpler and better behaved. In fact, we have a more general theorem.
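The example in the preceding paragraph can be reproduced with a few lines of code. This is a minimal sketch that assumes the system with derivatives takes the form da1/dt = μc1a1^(μ−1)(α − βa2a1^μ), da2/dt = a1^μ(α − βa2a1^μ), which follows from the definitions α = E(TI^μ) and β = E(I^(2μ)) given above. The function name and the values μ = 2, c1 = α = β = 1, and a1(0) = a2(0) = 0.1 are hypothetical.

```python
def simulate_power_unit(a1, a2, c1=1.0, mu=2.0, alpha=1.0, beta=1.0,
                        dt=1e-3, steps=20000):
    """Forward-Euler integration of the assumed A[1, 1, 1] system with a
    power-function hidden unit and the activation derivative included."""
    for _ in range(steps):
        r = alpha - beta * a2 * a1**mu          # shared factor alpha - beta*a2*a1^mu
        a1, a2 = (a1 + dt * mu * c1 * a1**(mu - 1) * r,
                  a2 + dt * a1**mu * r)         # both updates use the old a1
    return a1, a2

# Small positive initial weights, as in the example discussed in the text.
a1_0, a2_0 = 0.1, 0.1
a1_T, a2_T = simulate_power_unit(a1_0, a2_0)

# Distance to the critical set alpha - beta * a2 * a1^mu = 0 (mu = 2 here).
residual = 1.0 - a2_T * a1_T**2
# Coupling constant K = a2 - a1^2 / (2 * mu * c1), with mu = 2 and c1 = 1.
K_start = a2_0 - a1_0**2 / 4.0
K_end = a2_T - a1_T**2 / 4.0
```

Both weights grow from their initial values and the coupling constant is preserved up to the discretization error of the Euler scheme.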

Theorem 12

Assume that α > 0, β > 0, c1 > 0, and μ ≥ 1. Then for any nonnegative initial values a1(0) ≥ 0 and a2(0) ≥ 0, the system described by Equation 114 is convergent to one of the positive roots of the equation for t:

| (117) |

Proof