Abstract

Introduction

This study describes the development of a district health system self-audit tool, and the associated methodology for implementing it, that can provide quantitative data on how district governments perceive their own performance of the essential public health functions.

Methods

Development began with a consensus building process to engage Ministry of Health and provincial health officers in Mozambique and Botswana. We then worked with lists of relevant public health functions as determined by these stakeholders to adapt a self-audit tool describing essential public health functions to each country’s health system. We then piloted the tool across districts in both countries and conducted interviews with district health personnel to determine health workers’ perception of the usefulness of the approach.

Results

Country stakeholders were able to develop consensus around eleven essential public health functions that were relevant in each country. Pilots of the self-audit tool enabled the tool to be effectively shortened. Pilots also revealed a tendency to up-code during self-audits that was checked by group deliberation. Convening sessions at the district enabled better attendance and representative deliberation. Instant feedback from the audit was a feature that 100% of pilot respondents found most useful.

Conclusions

The development of metrics that provide feedback on public health performance can be used as an aid in the self-assessment of health system performance at the district level. Measurements of practice can open the door to future applications for practice improvement and research into the determinants and consequences of better public health practice. The current tool can be assessed for its usefulness to district health managers in improving their public health practice. The tool can also be used by ministry of health or external donors in the African region for monitoring the district level performance of the essential public health functions.

Keywords: Essential public health functions, Mozambique, Botswana, District Health Management, public health practice, Performance improvement, Africa

Introduction

As health systems across the developing world continue to struggle with the double burden of communicable and non-communicable disease, many countries have focused on service delivery as the key to improving the health of nations. Historically, however, increases in life span and drops in death rates were achieved through traditional public health practices, which saved millions of lives even before the advent of modern medicine 1,2. Efforts to codify these public health practices occurred throughout the 20th century 3. As early as 1925, the American Public Health Association developed an Appraisal Form and later an Evaluation Schedule for public health departments to document their performance 4. Following the 1988 Institute of Medicine report, “The Future of Public Health”, development of standards and measures of public health practice accelerated 5.

The CDC formed a workgroup in 1989 to develop what was initially called “ten public health practices” 6. Later in 1994, the US Public Health Service built on this work to produce a very similar list called the “ten essential services” 4. The 1994 list is the one that most people think of today. The CDC’s Essential Public Health Functions List has been used to benchmark practice in the US.

The word “services” sometimes leads people to confuse the type of work that public health agencies do with what clinical providers do. To avoid this confusion, the “ten essential public health services” list is often referred to as “essential public health functions” (EPHF). In the US, the CDC developed and promoted a set of measurement instruments for the EPHF called the National Public Health Performance Standards Program (NPHPSP), which are used in many local and state health departments. The measurements of performance are the foundation of performance improvement 7,8,9.

In the late 1990s, the World Health Organization developed a task force to examine the concept of EPHF. A group of 145 public health experts from all of the world’s regions were queried using a Delphi Process to determine priorities and make recommendations for member countries. The consensus was that country health ministries and regional offices need to work to define national level lists of what is deemed essential and that the list should vary from country to country 10. The World Health Organization has encouraged its regional offices to develop context specific measurements of essential public health functions, and these have been carried out in regional offices for all of the Americas 10, Europe 11, Western Pacific 12 and Eastern Mediterranean 13. Some WHO regional offices have developed measurement tools to assist ministries of health in assessing their performance.

The largest effort came from the WHO regional office for the Americas, the Pan American Health Organization (PAHO), which developed detailed essential public health functions measurement instruments and charged every member state in the Americas to measure their performance along the same dimensions as the US’s essential public health services/functions as shown in Table 1 14. The PAHO initiative spread measurement tools in English, Spanish, and Portuguese to every country in the region and led to a publication of cross country benchmarks on how public health systems were performing in the Americas 14. Many countries continued their regular assessments at the national and sub-national level 15,16. Among the Americas, the U.S. and Canada have continued to invest heavily in public health practice assessments at provincial, state, or county level using instruments developed for this purpose 17-22. Roughly 82% of US State Health departments now use measurement instruments systematically to improve quality 23. In the US as of 2008, 55% of 545 local health departments surveyed by NACCHO were conducting QI activities, and of these 78% reported that managers had received QI training 24,25.

Table 1.

Final stakeholders’ list of EPHF for Botswana and Mozambique from August 2012

In India, the World Bank worked with the Ministry of Health in Karnataka state to develop a locally relevant measurement tool for national and sub-national use 26,27. Unlike the situation in North America, where regular performance assessments produce data for local use in performance assessment, in India the data flowed to researchers and never led to an on-going program of continuous quality improvement. The essential public health functions (EPHF) list served as a framework to guide both health department improvement and personal competencies for leaders in public health 28. Australia developed its own list called “National Public Health Partnership Public Health Practices List” and used it to assess the public health workforce in rural western Australia 29.

Health systems in low and middle income countries today have scope to improve in ensuring that public health functions are occurring. One survey conducted by researchers from International Association of Public Health Institutes found room for improvement in responses on performance from low and middle income countries 30. A useful step would be to develop local ownership of tools that can assess the performance of public health functions in districts. This paper describes one approach to doing this in Botswana and Mozambique.

To date, the African Regional Office of the WHO (AFRO) has yet to assess health systems in Africa using the essential public health functions approach, partly because prior self-assessment tools and methods for public health practice improvement were not adapted for use in the local context. Guidelines for public health practice would be particularly timely because many countries, including Mozambique and Botswana, are decentralizing their health systems, thus increasing the complexity of operations at the district level. In Botswana, the “Integrated Health Services Plan” (IHSP) established in 2010 presented a 10-year strategy to redesign Botswana’s health system with goals of creating a more effective, efficient, and decentralized system to improve health outcomes across the country. As part of the implementation of the IHSP, the Ministry of Health was reorganized, and district public health offices were delegated more power and decision making responsibilities to address the public health needs of their respective geographic areas. Whereas the Department of Local Government had previously overseen district public health offices, under the IHSP redesign the Ministry of Health took over the responsibility of managing the district offices 31. In Mozambique, the Ministry of Health’s Strategic Plan for the Instituto Nacional de Saúde (INS) 2000-2014 was organized around eight strategic areas, the majority of which were directly associated with one or more Essential Public Health Functions. Given the demographic, geographic and socioeconomic characteristics of Mozambique, the decentralization and the improvement of public health offices at the district (subprovincial) level, the so-called “Serviços Distritais de Saúde, Mulher e Acção Social (SDSMAS)”, formerly “Direcções Distritais”, were deemed to be of extreme importance for reaching the goals contained in the strategic plan.

This situation jeopardizes attention to public health functions. During the decentralization process, Ministry of Health officials tend to delegate responsibility to district health offices without providing adequate resources 32. District health officers subsequently struggle to execute the new responsibilities within the limited authority they are given, and donors struggle to understand whom to approach in this complex system. Decentralization without proper support from the central ministry of health can therefore lead to a deterioration of performance for public health functions.

These problems are compounded because personnel are often assigned to the role of district health officer with little or no training in public health. Formal job descriptions outlining the necessary public health tasks are seldom available. Furthermore, data collection of essential indicators for planning and financing is jeopardized when public health officers do not have appropriate knowledge of what they should be measuring and how to best keep track of key indicators. Also, when personnel do not receive adequate training, the task of informing, educating and empowering individuals about health issues and of enforcing health laws and regulations becomes daunting. Overall, there are few resources to guide these officers in public health practice. As a result, the Centers for Disease Control (CDC) funded a pilot study in partnership with Johns Hopkins University and Johns Hopkins Program in Education in Gynecology and Obstetrics (JHPIEGO) in order to develop an EPHF self-assessment tool adapted to the low and middle-income country context that could help monitor and strengthen public health in systems across Africa.

Several approaches for performance improvement are possible, and they vary on a continuum from those that rely heavily on external inspection and externally applied incentives to those that rely more on self-inspection and intrinsic motivation 33. Self-audit with feedback approaches appeal to the intrinsic desire to continuously improve one’s own performance 7. Rather than having an authority figure administer an assessment, this method allows district health teams composed of public health workers and managers to evaluate themselves to determine where improvements are needed 34. Our approach chose to rely more on self-audit and intrinsic motivation. As prior self-assessment tools and methods for public health practice improvement had not been tailored for the African context, the team adapted existing tools to fit into a self-audit and feedback framework, from which district health management teams would be empowered to evaluate themselves on performance of public health.

The purpose of this paper is to describe the methods used to develop and pilot the self-assessment tool for public health improvement at the district level.

Methods

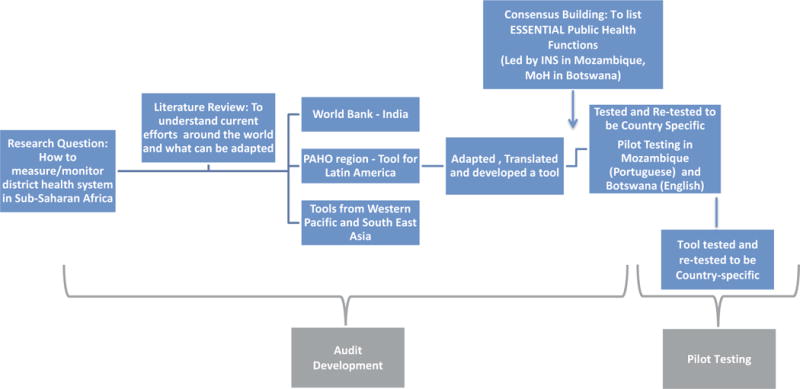

The three phases we will describe are: stakeholders meetings, instrument development, and pilot testing the instruments in the field. This study was conducted from January 2012 to August 2014, and was led by a team from Johns Hopkins University and Johns Hopkins Program in Education in Gynecology and Obstetrics (JHPIEGO), with funding and oversight by the Centers for Disease Control (CDC) in partnership with the Ministries of Health in Botswana and Mozambique.

Stakeholders meetings

Development began with introductory meetings at ministry headquarters in the capitals, Gaborone and Maputo, to identify any existing efforts aimed at addressing the performance of districts in order to avoid any duplication. Representatives from all Departments in the Ministry of Health at headquarters, staff from district health offices, representatives from key partnering organizations, and high level Ministry of Health officials were invited to the meetings. Eligibility to be included as a stakeholder was determined by the chief of public health in each country and was based on familiarity with public health practice. In Gaborone, these meetings were attended by staff from the Departments of Public Health, Clinical Services, HIV/AIDS, Health Inspectorate, Policy, Planning, and Monitoring and Evaluation. In Maputo, these meetings were attended by representatives of the Instituto Nacional de Saúde (INS), Provincial directors, and the National Ministry of Health (MISAU). In August 2012, representatives from these entities came together to attend a full day workshop devoted to identifying which public health functions were indeed essential at the district level in Botswana and Mozambique. Meetings were recorded by an audio recorder as well as by note takers from Johns Hopkins University and JHPIEGO, and were facilitated by the team from Johns Hopkins University and the Ministries of Health in each respective country.

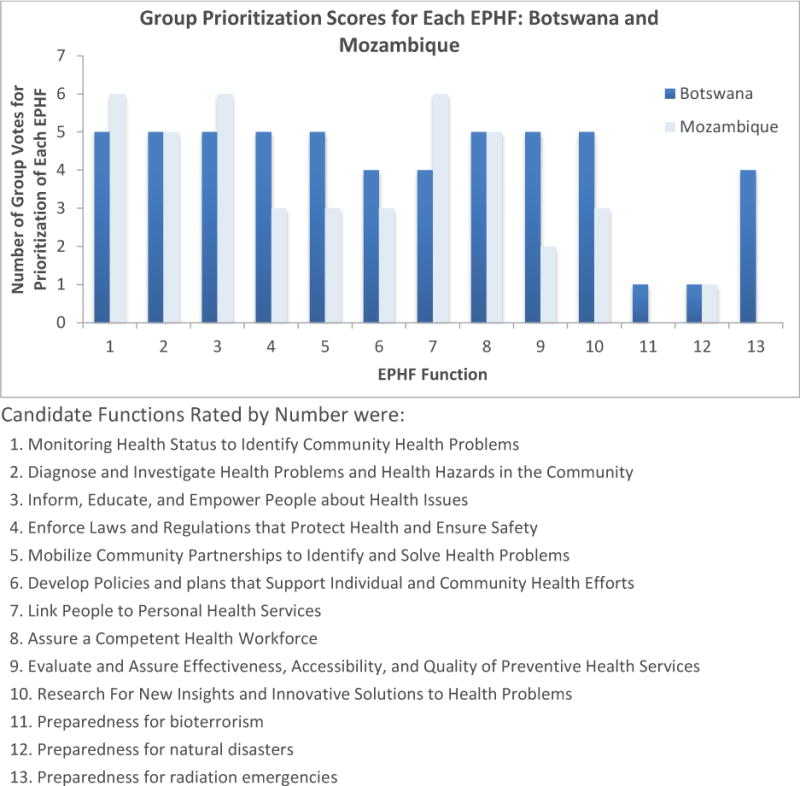

At the partners’ workshops, a set of candidate public health functions was distributed to each small group of five attendees. Small groups were charged with rank ordering a list of possible candidates in order of priority, adding new functions if necessary, and possibly deleting any functions judged to be inessential at the district level. Small groups reported to the whole group and through these group discussions, a consensus list of essential public health functions at the district level emerged. Items chosen for inclusion as essential public health functions were then incorporated in the self-audit tool.

Audit instrument development

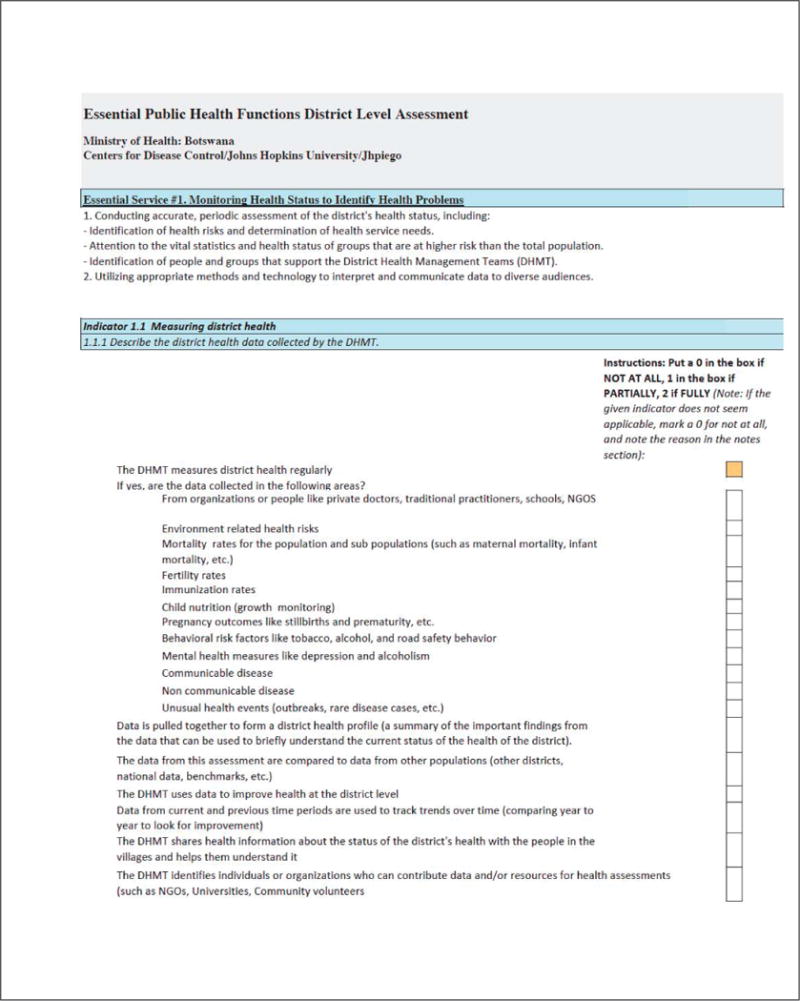

Existing EPHF assessment tools have been developed by the World Bank, the Pan American Health Organization (PAHO), the Centers for Disease Control and Prevention (CDC), specifically the National Public Health Performance Standards Program (NPHPSP), and the Public Health Accreditation Board (PHAB). Each was reviewed for its appropriateness for African districts and for its ability to generate immediate feedback for practice improvement. The CDC’s NPHPSP had been used in Israel 35 and Indonesia 36. The World Bank tool had been developed with reference to NPHPSP for use in Karnataka state in India 27. The World Bank tool was selected as an appropriate model because it focused on auditing the district level in a developing country setting. Prior to the first pilot test, the World Bank form was extensively revised to adapt it for an African application and a Portuguese translation was prepared. Initial edits mostly focused on wording, with changes to reflect the African context rather than the Indian context. The tool was adapted into an interactive Excel spreadsheet in which responses can be entered directly at the site and summary scores are automatically passed to a linked sheet that produces instantaneous graphs. As in other regions such as Latin America, the Botswanan and Mozambican stakeholders also elected an eleventh EPHF: Disaster and Emergency Preparedness. The final format of the tool included a section for each of the 11 EPHF, consisting of indicators and sub indicators along with a definition of the EPHF and an explanation of each indicator. Sub indicators in each section rolled up into the indicators in that section, which together rolled up to create a score for each EPHF. See Figure 2 for an example of the tool format.

A second round of revisions shortened the number of questions in the self-audit tool by almost 50% after the first pilot test was completed in Mozambique in November 2012, when participants complained that the self-audit tool was too long and time consuming. The final tool retained all ten EPHF domains of the original World Bank tool. Shortening occurred by cutting the number of items in each domain and by streamlining the language used to describe each item. Lengthening occurred when stakeholders asked for one new domain to be added to cover emergency preparedness.

Figure 2.

Pilot tests

The package of tools was pilot-tested in district health offices across Mozambique and Botswana, as both countries were in the process of decentralizing. Both were at a point where assistance was needed in public health strengthening. In Mozambique, the Instituto Nacional de Saúde (INS) had recently switched its focus from basic science research to public health and had been reorganized around supporting essential public health functions 37, so it welcomed assistance in actually measuring and strengthening the EPHF at the district level. In Botswana, the decentralization of the health systems took place in 2010, and authority was moving from the Ministry of Local Government to the Ministry of Health. Under the reorganization, the Ministry of Health provided oversight to district public health offices; however, the transition from the Ministry of Local Government did not come without challenges. The need to define duties and responsibilities for public health officers, a lack of resources at the district offices, and the need for leadership from the Ministry of Health to the district public health officers left the MOH requiring assistance in how to support and monitor district health officers (DHOs).

Sites were selected by the Ministry of Health to include both strong performing and weak performing local health departments, and the team from JHPIEGO and the MOH invited representatives from all divisions of the local health departments, as well as the chief public health officers and other local health department leadership to attend the workshops. Central MOH staff were responsible for facilitating the workshops to the local health staff by both describing and administering the tool, while CDC and JHPIEGO researchers observed implementation. Respondents were asked to rate their experience during the pilot tests and comment on the most useful parts of the exercises as well as the elements that were most confusing. CDC staff, JHPIEGO staff and MOH representatives led follow up discussion sessions together after going through the tool with the local health department. The toolkit was piloted in 5 districts in Botswana, and 5 in Mozambique.

In Mozambique, the assessment tool was also translated from English to Portuguese by a Mozambican translation company after the initial tool was created, and again after improvements were made. After the translation process, personnel from the Ministry of Health held a meeting with public health personnel with experience at the district level in order to do a pre-test of the assessment tool, to assure the translation was appropriate to the Mozambican public health context. Minor changes were made during that meeting.

During the pilot exercises in Mozambique, an INS representative would lead the process of asking the questions contained in the assessment exercise (Excel format). The spreadsheet was also projected on the wall, so all participants could also see the questions and not just listen. The team made it clear to participants that the self-audit was a pilot exercise and encouraged them to ask questions and clarify any words or sentences that were unclear or confusing. During the pilot tests one researcher was always in charge of writing down any sentences that were not understood or that generated any confusion. At the moment of misunderstanding, the researcher would intervene and ask what about the sentence made it difficult to understand. For example, after a problem was identified on a question in the self-audit tool, the researcher wrote the note, “this question required further explanation for full understanding”. Or similarly, “it was necessary to explain the difference between threat (ameaça) and epidemic (surto) in order for participants to grasp meaning of the assessment question”. All unclear and confusing questions were marked in red, and a note next to the question was written with the reason for the mark and reactions were written in quotes.

The process in Botswana followed the same methods, with an MOH representative leading the process and a researcher taking notes at all times on which words, questions, or phrasings needed edits. The researcher also noted feedback from each team related to their perceptions of the pilot exercise and self-audit tool. Two rounds of pilot tests (see Figure 1) were conducted in each country, and revisions were made after each round to change questions that were confusing or poorly worded.

Figure 1.

Flow chart for project development.

Pilot and Post-Piloting Optimization of Questions

After each pilot, the entire team, consisting of CDC, JHPIEGO, and MOH team members, would meet and discuss each problematic question in order to seek the best change and implement it. They would incorporate the feedback from respondents as they analyzed the tool sentence by sentence in order to develop easier and less confusing sentences for substitution. Repetitious questions were removed, and the team looked carefully at the time the local health department teams spent on each function to ensure it was not too time consuming. In the first version, the Yes/No choice indicated whether the district health office performed or did not perform each item. In the second version, the choice was widened to 0 = “Not at all”; 1 = “Partially”; 2 = “Fully” for the performance of each item. This change responded to early feedback from multiple respondents, who said that an intermediate response was needed for functions that were being performed to some extent but not fully.

Each time the assessment was piloted, the newest improved version was used. Regularly updating the instrument follows survey development methods from cognitive interviewing 38-40. For the most part, substituting synonyms for unclear words enabled better understanding during the subsequent pilot. A full account of all notes was kept for any future reference.

The protocol for this research was reviewed by the CDC’s Center for Global Health and was determined to be not human subjects research.

Results

National Stakeholder Results

National stakeholder meetings were attended by 40 people in Mozambique and 29 in Botswana. The priority areas were different in each country. Extensive discussion took place at the meetings in both countries around inclusion of two EPHFs in particular: the district health officers’ roles in regulatory enforcement and in development of a local disaster preparedness plan. Some participants felt that these areas were not proper to be left in the authority of district health officers, but should be left in provincial or national control. After discussion on the roles of public health related to these EPHF, both sets of country stakeholders independently reached a consensus to include both disaster preparedness and regulatory enforcement in the essential public health functions lists (Table 1). Participants in both countries also chose to single out bioterrorism and radiation as unique types of natural disaster which they may face, due to proximity to nuclear power plants and the recent increase in the threat of terrorism across parts of Africa. Figure 2 shows the prioritization scores for the various public health functions. Table 1 shows the final list of public health functions endorsed by the stakeholders.

Engagement of Local Health Departments in Pilot Testing

At the time of the first pilot test, the self-audit tool required four hours in order to respond to 288 separate yes/no questions. One reason that the self-audit required so much time was the cognitive engagement and discussion that spontaneously arose among the public health teams participating in the exercise. While holding the sessions at the district health office enabled more of the public health team to attend and was therefore more inclusive of various sectors of the local health department, it also added to the time burden as it facilitated a wider discussion.

During initial district health management team (DHMT) meetings, none of the district health officers expressed any familiarity with the term “Essential Public Health Functions”. One officer remarked, “This was a good eye opener; it made me wake up and say wow we are not doing a good job of this. We can use it to find public health gaps and look to the areas we uncover to discover where public health improvements are needed”. Another public health officer mentioned, “The DHMT sometimes feel like they are just clinical services and this helps remind DHMT that they also have public health activities that they must be doing.” The tool also provided an opportunity to identify barriers to improvement that could be addressed by central ministry personnel. For example, one officer remarked, “It is difficult for us to get resources such as transportation, because vehicles are needed as ambulances. Then we cannot visit other villages to see how they are doing because we do not have a way to get there.”

Adapting to Respondent Burden

The self-audit tool was shortened after initial pilot testing by taking out items that were perceived by local health department teams as repetitive, shortening wordy sentences, and removing summaries for each indicator that were perceived as repetitive of the items following each indicator. Definitions of each essential public health function were not removed. The final version required between 90 and 140 minutes to complete. Adding a notes section on the assessment helped teams come to faster agreement on scores, as they could note any disagreements for later reference and move on.

Feedback on Self-Audit Tool Pilot

The public health teams in both countries tended to rate themselves highly, with a large number of 2s. This up-coding behavior did not seem to be reduced by repeated explanations that the exercise was not a provincial site inspection or a supervision visit that would be followed by praise, blame, or sanctions. Up-coding was more frequent at the beginning of a session. As rapport developed, more deliberation would precede each rating and the group would begin to question the validity of responding that an item was being performed “fully”. The need to comment and deliberate was also seen in an evaluation of how respondents approached the NPHPSP 20.

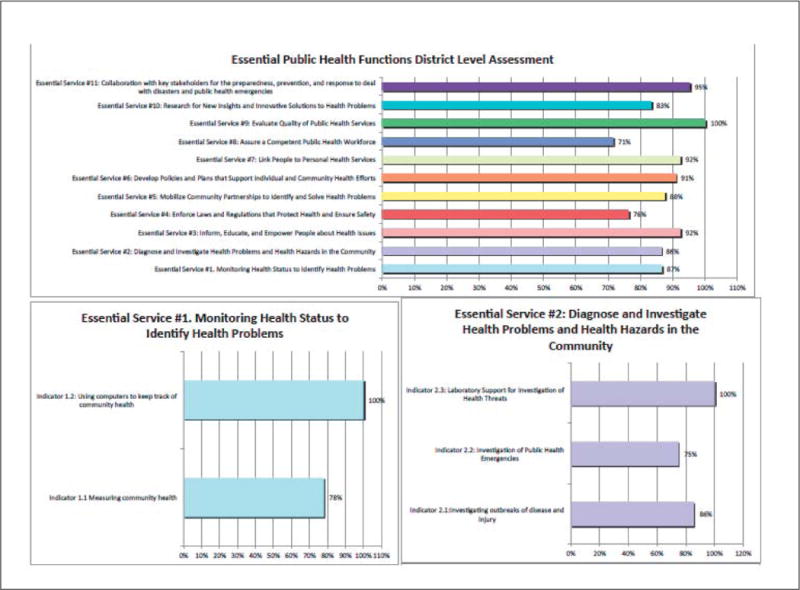

Of the districts visited in Botswana and Mozambique, 100% shared a consensus that the most useful part of the pilot was not the self-audit itself, but the ability to use the graphical output from the self-audit to set priorities and a strategy for performance improvement. The self-audit tool automatically produced horizontal bar graphs displaying the summary score for each public health function and sub-function, calculated as an unweighted sum divided by the maximum score. These were reviewed and discussed by all the participants of the exercise. The teams were encouraged not to attempt to develop plans to improve all eleven public health functions at once, but to choose areas for immediate attention.
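As an illustration only, the scoring described above can be sketched in a few lines of Python. This is not the Excel workbook used in the pilots; the function names, the example indicator names, and the example responses are hypothetical. The sketch assumes that each item is scored on the 0 to 2 scale, that an indicator score is the unweighted sum of its item scores divided by the maximum possible sum, and that a function-level score pools all items under that function in the same way.

```python
from typing import Dict, List

# Response scale from the second version of the tool:
# 0 = "Not at all", 1 = "Partially", 2 = "Fully"
MAX_ITEM_SCORE = 2


def normalized_score(item_scores: List[int]) -> float:
    """Unweighted sum of item scores divided by the maximum possible sum."""
    if not item_scores:
        raise ValueError("at least one item score is required")
    for score in item_scores:
        if score not in (0, 1, 2):
            raise ValueError(f"item score must be 0, 1 or 2, got {score!r}")
    return sum(item_scores) / (MAX_ITEM_SCORE * len(item_scores))


def function_score(indicators: Dict[str, List[int]]) -> float:
    """Pool every item under one function (assumed roll-up rule)."""
    all_items = [s for items in indicators.values() for s in items]
    return normalized_score(all_items)


def text_bar(label: str, score: float, width: int = 20) -> str:
    """Render a score between 0 and 1 as a simple horizontal text bar."""
    filled = round(score * width)
    return f"{label:<30} {'#' * filled}{'.' * (width - filled)} {score:.0%}"


# Hypothetical responses for one function; indicator names are illustrative.
responses = {
    "Written preparedness plan": [2, 1, 0],
    "Drills and simulations": [1, 1],
}

print(text_bar("Disaster preparedness", function_score(responses)))
for name, items in responses.items():
    print(text_bar("  " + name, normalized_score(items)))
```

Because every item carries the same weight, an indicator with many items influences the function-level score more than one with few items; a team wanting equal weight per indicator would average the indicator scores instead.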

It was a subjective impression that younger DHOs and district medical officers seemed more interested in participating in the assessment than those who had been in their positions for a longer time. Many of the older DHOs expressed skepticism about their ability to change the way things were running at the district level. Younger DHOs who had more recently joined the DHMTs did not list as many immutable constraints to improvement as those who had been at the district office longer.

The public health teams sometimes broke into two or three smaller groups to use results from the self-audit tool to set priorities for improvement on certain EPHFs. In sessions where there were larger numbers of small groups, there was a tendency for disagreement over priorities. Talking through the task of how to set priorities involved the team trading off between areas that were most feasible to improve and areas that potentially had the highest impact. The teams requested and received an opportunity to review the specific items in the self-audit tool that had led to lower scores, and these were incorporated in the performance improvement plan. One example of a performance improvement plan that was created using the feedback provided by the self-audit tool took place in a district in Botswana where the team identified a problem with oversight of data collectors during the self-audit exercise. They discovered that a lack of oversight meant that community health data was not being collected appropriately and there was a serious issue with missing data for community health assessments. The district chose to improve data collection in their communities by creating quarterly supervision meetings for managers to meet with frontline staff to hold them accountable for data collection. At the time of the three month follow up visit, this district had held their first supervisory meeting and planned to continue these in order to improve data collection in the next year. They also built lessons learned from their EPHF performance assessment into their yearly plan to ensure that areas which they had identified as weak during the self-audit would be strengthened in the following year.

Conclusion

This project developed and pilot tested a tool to be used for self-audit and feedback around public health practice at the district level in Mozambique and Botswana. Pilot tests showed that asking respondents Yes/No questions about their performance was not preferred, and that there needed to be space for comment. Studies of the NPHPSP tool, which is a progenitor of ours, also garnered this feedback 20. Our approach to contextualizing the tool centered on having African stakeholders from each country define essential public health functions and visiting public health officers in districts to engage them in an exercise to evaluate self-assessed performance of these functions. The initial visit for the EPHF self-assessment exercise can now be conducted comfortably during a half-day visit, with 90 to 140 minutes for the self-audit and the rest of the time spent on priority setting.

The virtue of a self-audit and feedback approach is that it forsakes a command-and-control approach where standards are enforced by an external supervisor or international funding agency. It instead relies on the intrinsic motivation of the professional to want to know what is expected and to meet standards that are subsequently tracked. It takes maximal advantage of the district health officers’ superior knowledge of their local resources, strengths, weaknesses and opportunities. By designing onsite visits to districts, the program may provide an opportunity for broader inclusion of front line workers on district health teams whose views are less heard by the central ministry.

The development of the self-audit and feedback exercise for self-assessment of public health performance represents a way forward for public health at the district level in the African context, even given the many challenges this entails. As evidenced by the results of this study, public health systems in Africa may suffer from a lack of well-trained public health officers, a lack of strong public health leadership, and a lack of funding and resources. When resources are limited, more urgent medical services and pharmaceuticals are often prioritized over public health investments, even though public health services may reduce the burden on the medical system in the longer term. While there are many challenges to the development of strong public health systems in developing countries, putting the measurement of public health practice performance in the hands of public health staff offers a way to identify and prioritize areas needing improvement and, subsequently, to create improvement plans that enhance performance of the EPHFs.

The Ebola outbreak has given new urgency to strengthening a health system’s performance of public health assessment, community-engaged policy development, and assurance [41]. These tools can continue to be adapted to meet the needs of other countries in strengthening public health practice in their health systems.

Figure 3. Image of page 1 of the self-audit tool

Figure 4. Example results

Key Points.

- Collaborative self-audit and feedback is a method for encouraging group self-assessment of performance in order to identify weaknesses and create improvement plans that address them. It works by drawing on participants’ own intrinsic motivation for self-improvement.

- The self-audit process allows performance to be compared against expectations, and the instant provision of feedback fosters a performance improvement partnership between the group and a coach.

- This paper describes the development and piloting of an essential public health function self-audit tool in districts in Mozambique and Botswana.

- Key Ministry of Health stakeholders achieved consensus on country-specific lists of public health functions deemed essential at the district level, in meetings supported by central health ministries.

- Conducting exercises at the district office maximizes participant attendance.

- Districts placed the most value on being able to prioritize the public health functions they most wanted to work on.

- Audit and feedback exercises help district health officers and staff identify priority goals for improvement and monitor their progress toward those goals.

Acknowledgments

The United States President’s Emergency Plan for AIDS Relief (PEPFAR) and Centers for Disease Control and Prevention (CDC) Division of Global HIV/AIDS Health Systems offered grant support for this research. The findings and conclusions in this paper are those of the authors and do not necessarily represent the views of the Centers for Disease Control and Prevention.

References

- 1. Szreter S. The Importance of Social Intervention in Britain’s Mortality Decline c. 1850-1914: a Reinterpretation of the Role of Public Health. Social History of Medicine. 1988;1(1):1–38.

- 2. Colgrove J, Fairchild A, Rosner D. The history of structural approaches in public health. In: Sommer M, Parker R, editors. Structural Approaches in Public Health. 2013.

- 3. Winslow CE. Municipal Health Department Practice. Am J Public Health (N Y). 1925 Jan;15(1):39–44. doi: 10.2105/ajph.15.1.39.

- 4. Derose SF, Schuster MA, Fielding JE, Asch SM. Public health quality measurement: Concepts and challenges. Annual Review of Public Health. 2002;23:1–21. doi: 10.1146/annurev.publhealth.23.092601.095644.

- 5. Beitsch LM, Landrum LB, Turnock B, Handler AS. Performance Management in Public Health. In: Shi L, Johnson JA, editors. Novick & Morrow’s Public Health Administration. 2013. p. 357.

- 6. Dyal WW. Ten organizational practices of public health: a historical perspective. American Journal of Preventive Medicine. 1995 Nov-Dec;11(6 Suppl):6–8.

- 7. Corso LC, Wiesner PJ, Halverson PK, Brown CK. Using the essential services as a foundation for performance measurement and assessment of local public health systems. J Public Health Manag Pract. 2000 Sep;6(5):1–18. doi: 10.1097/00124784-200006050-00003.

- 8. Corso LC, Lenaway D, Beitsch LM, Landrum LB, Deutsch H. The national public health performance standards: driving quality improvement in public health systems. Journal of Public Health Management and Practice. 2010;16(1):19–23. doi: 10.1097/PHH.0b013e3181c02800.

- 9. Upshaw V. The National Public Health Performance Standards Program: will it strengthen governance of local public health? J Public Health Manag Pract. 2000 Sep;6(5):88–92. doi: 10.1097/00124784-200006050-00013.

- 10. Bettcher DW, Sapirie S, Goon EH. Essential public health functions: results of the international Delphi study. World Health Stat Q. 1998;51(1):44–54.

- 11. WHO, editor. European Action Plan for Strengthening Public Health Capacities and Services-DRAFT. Copenhagen: WHO; 2012.

- 12. World Health Organization, editor. Essential public health functions: a three-country study in the Western Pacific Region. WHO; 2003.

- 13. WHO Regional Office for the Eastern Mediterranean (EMRO). Assessment of essential public health functions in countries of the Eastern Mediterranean Region. 2014. http://www.emro.who.int/about-who/public-health-functions/index.html. Accessed June 30, 2015.

- 14. PAHO, editor. Public Health in the Americas. PAHO; 2001.

- 15. PAHO, editor. The essential public health functions as a strategy for improving overall health systems performance: trends and challenges since the Public Health in the Americas Initiative, 2000-2007. Washington, DC: PAHO; 2008.

- 16. Ramagem C, Ruales J. The Essential Public Health Functions as a Strategy for Improving Overall Health Systems Performance: Trends and Challenges since the Public Health in the Americas Initiative, 2000-2007. 2008. http://www.nwph.net/hawa/writedir/15b0WHO%20Pan%20American%20Health%20Organisation.pdf.

- 17. Mays GP, McHugh MC, Shim K, et al. Getting what you pay for: public health spending and the performance of essential public health services. Journal of Public Health Management and Practice. 2004 Sep-Oct;10(5):435–443. doi: 10.1097/00124784-200409000-00011.

- 18. Erwin PC, Hamilton CB, Welch S, Hinds B. The Local Public Health System Assessment of MAPP/The National Public Health Performance Standards Local Tool: a community-based, public health practice and academic collaborative approach to implementation. Journal of Public Health Management and Practice. 2006;12(6):528–532. doi: 10.1097/00124784-200611000-00005.

- 19. Ellison JH. National Public Health Performance Standards: are they a means of evaluating the local public health system? Journal of Public Health Management and Practice. 2005;11(5):433–436. doi: 10.1097/00124784-200509000-00011.

- 20. Beaulieu J, Scutchfield FD, Kelly AV. Content and criterion validity evaluation of National Public Health Performance Standards measurement instruments. Public Health Reports. 2003;118(6):508–517. doi: 10.1093/phr/118.6.508.

- 21. Barry MA. How can performance standards enhance accountability for public health? Journal of Public Health Management and Practice. 2000;6(5):78–84. doi: 10.1097/00124784-200006050-00011.

- 22. Barron G, Glad J, Vukotich C. The use of the National Public Health Performance Standards to evaluate change in capacity to carry out the 10 essential services. Journal of Environmental Health. 2007;70(1):29–31.

- 23. Madamala K, Sellers K, Pearsol J, Dickey M, Jarris PE. State landscape in public health planning and quality improvement: results of the ASTHO survey. Journal of Public Health Management and Practice. 2010;16(1):32–38. doi: 10.1097/PHH.0b013e3181c029cc.

- 24. Beitsch LM, Leep C, Shah G, Brooks RG, Pestronk RM. Quality improvement in local health departments: results of the NACCHO 2008 survey. Journal of Public Health Management and Practice. 2010;16(1):49–54. doi: 10.1097/PHH.0b013e3181bedd0c.

- 25. Leep C, Beitsch LM, Gorenflo G, Solomon J, Brooks RG. Quality improvement in local health departments: progress, pitfalls, and potential. Journal of Public Health Management and Practice. 2009;15(6):494–502. doi: 10.1097/PHH.0b013e3181aab5ca.

- 26. World Bank, editor. Second Essential Public Health Functions Project (FESP II). Washington, DC: World Bank; 2010.

- 27. Khaleghian P, Das Gupta M. Public Management and the Essential Public Health Functions. World Development. 2005;33(7):1083–1099.

- 28. Brownson R, Fielding J, Maylahn C. Evidence-based Decision Making to Improve Public Health Practice. Frontiers in Public Health Services and Systems Research. 2013;2.

- 29. Lower T, Durham G, Bow D, Larson A. Implementation of the Australian core public health functions in rural Western Australia. Australian and New Zealand Journal of Public Health. 2004 Oct;28(5):418–425. doi: 10.1111/j.1467-842x.2004.tb00023.x.

- 30. Binder S, Adigun L, Dusenbury C, Greenspan A, Tanhuanpää P. National public health institutes: contributing to the public good. Journal of Public Health Policy. 2008;29(1):3–21. doi: 10.1057/palgrave.jphp.3200167.

- 31. Government of Botswana. The Essential Health Service Package for Botswana. 2010.

- 32. Khaleghian P. Decentralization and public services: the case of immunization. Social Science and Medicine. 2004 Jul;59(1):163–183. doi: 10.1016/j.socscimed.2003.10.013.

- 33. Epstein A. In: Smith P, Mossialos E, Papanicolas I, Leatherman S, editors. Performance Measurement for Health System Improvement. Cambridge: Cambridge Press; 2010.

- 34. Ivers N, Jamtvedt G, Flottorp S, et al. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2012;6:CD000259. doi: 10.1002/14651858.CD000259.pub3.

- 35. Scutchfield FD, Miron E, Ingram RC. From service provision to function based performance-perspectives on public health systems from the USA and Israel. Isr J Health Policy Res. 2012. doi: 10.1186/2045-4015-1-46.

- 36. Sapirie S, Timmons R. Managing Performance Improvement of Decentralized Health Services. The Manager. 2004;13(1):2–26.

- 37. República de Moçambique MdS, Instituto Nacional de Saúde. Plano Estratégico 2010-2014. 2010.

- 38. Jobe JB, Mingay DJ. Cognitive Research Improves Questionnaires. American Journal of Public Health. 1989;79(8). doi: 10.2105/ajph.79.8.1053.

- 39. Stussman B. Questionnaire Design Research Laboratory: Cognitive Laboratory Testing of the 1993 Teenage Attitudes and Practices Survey II. Washington, DC: National Center for Health Statistics; Mar 1994.

- 40. Willis GB. Cognitive Interviewing and Questionnaire Design: A Training Manual. Office of Research Methodology Centers for Health Statistics; 1994. p. 7.

- 41. Osterholm MT, Moore KA, Gostin LO. Public health in the age of Ebola in West Africa. JAMA Internal Medicine. 2015 Jan;175(1):7–8. doi: 10.1001/jamainternmed.2014.6235.