Abstract

Infants increasingly attend to the mouths of others during the latter half of the first postnatal year, and individual differences in selective attention to talking mouths during infancy predict verbal skills during toddlerhood. There is some evidence suggesting that trajectories in mouth-looking vary by early language environment, in particular monolingual or bilingual language exposure, which may have differential consequences in developing sensitivity to the communicative and social affordances of the face. Here, we evaluated whether 6- to 12-month-olds’ mouth-looking is related to skills associated with concurrent social communicative development, including early language functioning and emotion discriminability. We found that attention to the mouth of a talking face increased with age but that mouth-looking was more strongly associated with concurrent expressive language skills than with chronological age for both monolingual and bilingual infants. Mouth-looking was not related to emotion discrimination. These data suggest that selective attention to a talking mouth may be one important mechanism by which infants learn language regardless of home language environment.

Keywords: Bilingualism, Language development, Selective attention, Face perception, Infant, Eye-tracking, Social attention

Introduction

Language learning is a multimodal process that builds on infants’ attention to the face as a source of both social communicative information (Brooks & Meltzoff, 2005; Kuhl, 2007; Munhall & Johnson, 2012) and audiovisual speech cues (Lewkowicz & Pons, 2013; Rosenblum, Schmuckler, & Johnson, 1997; Teinonen, Aslin, Alku, & Csibra, 2008). For infants learning two languages, the audiovisual speech cues afforded by the face may be particularly informative and relevant for visually differentiating the languages being spoken (Pons, Bosch, & Lewkowicz, 2015; Sebastián-Gallés, Albareda-Castellot, Weikum, & Werker, 2012; Weikum et al., 2007). However, the precise mechanisms by which visual social attention to faces and language environment support language acquisition and early social communicative skills remain unclear. Here, we examined the possibility that developmental changes in face perception reflect an increased sensitivity to social and communicative affordances of the face, which in turn contributes to skills necessary for language and social development. In particular, the current study focused on selective attention to mouths and its association with concurrent language skills in 6- to 12-month-olds from monolingual and bilingual households. We also examined the relation between mouth-looking and emotion discriminability in our sample to evaluate a potential additional effect of mouth-looking on social development in monolingual and bilingual infants.

Mouth-looking and language development

During the latter half of the first postnatal year, infants, regardless of language background, gradually shift from preferentially looking at the eyes toward preferentially looking at the mouths of others (Ayneto & Sebastián-Gallés, 2017; Frank, Vul, & Saxe, 2011; Hunnius & Geuze, 2004; Lewkowicz & Hansen-Tift, 2012; Pons et al., 2015; Tenenbaum, Shah, Sobel, Malle, & Morgan, 2013; Wagner, Luyster, Yim, Tager-Flusberg, & Nelson, 2013). The timing of this shift coincides with the approximate onset of babbling (~6 months), an early expressive language skill. Studies examining this phenomenon have found that the developmental trajectory for mouth-looking may differ between monolingual and bilingual infants. For monolingual infants, some studies reported that mouth-looking continues to increase over the latter half of the first postnatal year (Frank et al., 2011; Tenenbaum et al., 2013), whereas other work found that monolingual infants’ mouth-looking follows an inverted U-shaped trajectory, such that preferential attention to the eyes is reestablished after the first birthday (e.g., Lewkowicz & Hansen-Tift, 2012). In contrast, bilingual infants have consistently been found to exhibit protracted mouth-looking trajectories and to continue attending increasingly to the mouth at 12 months (Ayneto & Sebastián-Gallés, 2017; Pons et al., 2015). Thus, although monolingual and bilingual infants exhibit similar mouth-looking behaviors at younger ages, their developmental trajectories for mouth-looking may diverge by 12 months.

The protracted developmental trajectory of mouth-looking in bilingual infants may have consequences for language learning. Developmental increases in mouth-looking, especially during the establishment of initial language expertise, are believed to facilitate the detection of redundant audiovisual speech cues (Hillairet de Boisferon, Tift, Minar, & Lewkowicz, 2017; Lewkowicz & Hansen-Tift, 2012; Pons et al., 2015). Young infants are able to disambiguate speech cues from mouth movements (e.g., Rosenblum et al., 1997), and, importantly, bilingual infants have been shown to visually discriminate between two languages even at ages when monolingual infants are no longer able to do so (Sebastián-Gallés et al., 2012; Weikum et al., 2007). Developmental differences in sensitivity to redundant audiovisual speech cues and attunement to mouths may, to some extent, underlie individual differences in specific aspects of language acquisition (e.g., language production vs. comprehension skills). For instance, longitudinal studies of monolingual infants have found that greater attention to the mouth during the first postnatal year is associated with faster expressive language growth during the second postnatal year (Tenenbaum, Sobel, Sheinkopf, Malle, & Morgan, 2015; Young, Merin, Rogers, & Ozonoff, 2009). What remains unknown is whether differences in mouth-looking between monolingual and bilingual infants also reflect individual differences in early language profiles, including differences in expressive versus receptive language skills. Given that monolingual and bilingual infants have been found to differ in their language learning rates by 30 months of age (e.g., Hoff et al., 2012), differences in their mouth-looking trajectories may also be associated with differences in their language learning.
Moreover, to our knowledge, the direct association between mouth-looking and language skills as a function of second language exposure has not been previously examined.

Mouth-looking and development of emotion perception

It has been hypothesized that bilingual infants may be less adept at perceiving emotion cues because greater attention to the mouth may impede the uptake of relevant social communicative signals from other parts of the face, including socioemotional information from the eyes (Ayneto & Sebastián-Gallés, 2017). Differences in the way monolingual and bilingual infants view nonlinguistic social stimuli may underlie observed variability in subsequent social communicative development. Thus, in addition to affecting language learning, prolonged preferential attention to the mouth may affect social communicative development (e.g., Jones, Carr, & Klin, 2008)—emotion perception in particular.

Attention to the eye region appears to facilitate the development of face expertise in infants (e.g., Gliga & Csibra, 2007), including emotion discrimination. For example, in 6- to 11-month-olds, greater attention to the eyes of still images of faces with fearful expressions was associated with a larger posthabituation novelty preference for faces with happy expressions, implying that selective attention to the eyes supports emotion discrimination (Amso, Fitzgerald, Davidow, Gilhooly, & Tottenham, 2010). In addition, infants have been found to attend more to the eyes when a face is smiling than when it is talking (Tenenbaum et al., 2013). To our knowledge, visual discrimination of emotional expressions and its relation to mouth-looking have not been previously examined in monolingual and bilingual infants. As a secondary aim, the current study examined the possibility of a trade-off between language development and emotion discriminability as a function of increased mouth-looking. We hypothesized that emotion discriminability is diminished in bilingual infants, perhaps as a consequence of reduced attention to the eyes in favor of the mouth.

The current study

The current study examined how attention to a speaking mouth in 6- to 12-month-old monolingual and bilingual infants is associated with concurrent verbal skills and visual discrimination of emotional expressions. Infants participated in two eye-tracking tasks: (a) a free-viewing task using naturalistic video stimuli of a woman engaging in infant-directed speech to measure visual attention to eyes and mouth in a talking face and (b) a visual target search task to evaluate discrimination of facial expressions. Infants also participated in a developmental assessment, the Mullen Scales of Early Learning (MSEL; Mullen, 1995), to gauge early language comprehension and production skills as well as general nonverbal cognitive skills given evidence that bilingual exposure affects infants’ nonverbal cognitive development (Brito & Barr, 2012; Brito & Barr, 2014; Brito, Grenell, & Barr, 2014; Kovács & Mehler, 2009a, 2009b; Poulin-Dubois, Blaye, Coutya, & Bialystok, 2011; Singh et al., 2015; cf. Schonberg, Sandhofer, Tsang, & Johnson, 2014).

Given reported differences in mouth-looking trajectories between monolingual and bilingual infants (e.g., Ayneto & Sebastián-Gallés, 2017; Pons et al., 2015), we were particularly interested in disentangling developmental changes in mouth-looking from chronological age and, thus, used age-normed measures of language skills. That is, we hypothesized that mouth-looking plays a functional role in early language acquisition and would be more closely related to language level than chronological age. We predicted that increased mouth-looking would support language development—potentially supporting expressive language skills more than receptive language skills (e.g., Tenenbaum et al., 2015; Young et al., 2009)—but would be negatively associated with discriminability of emotional expressions.

Method

Participants

A total of 60 6- to 12-month-old monolingual and bilingual infants completed the experimental protocol, which included two eye-tracking tasks and a behavioral assessment (Mage = 8.55 months, SD = 1.91, range = 5.75–12.10). An additional 21 infants were tested but excluded from the final sample because of fussiness that prevented completion of one or more parts of the experimental paradigm (n = 9), excessive movement resulting in insufficient point of gaze (POG) data (i.e., more than 50% of data lost in a session; n = 9), or unreliable POG calibration (n = 3). Thus, all infants included in the final sample provided data from both eye-tracking tasks and the developmental assessment.

Infants were categorized as monolingual or bilingual based on a parent-completed questionnaire of language exposure, which specified the percentage of waking hours their infants were exposed to each language. Infants were considered monolingual if they were exposed to their primary language 90–100% of the day (n = 27, 17 female; Mage = 8.62 months, SD = 2.09; M = 95.33%, SD = 17.36) as reported by their primary caregivers. All bilingual infants were simultaneous bilinguals who had been exposed to English and another language and were exposed to English 20–85% of the day (n = 33, 16 female; Mage = 8.49 months, SD = 1.76; M = 49.39%, SD = 22.63), with 21 of the 33 bilingual infants being exposed to English more than 50% of the day (MEnglish_exposure = 63.81%, SD = 14.13). Because all infants lived in a linguistically diverse city, inclusion of a broad range of non-English exposure in the bilingual sample was intentional and served to examine how visual attention may quantitatively vary by early language exposure. This approach is in line with a recent trend in the adult bilingualism literature that characterizes bilingualism continuously rather than categorically (e.g., Luk & Bialystok, 2013) and with developmental studies that include bilingual participants from other linguistically diverse cities (e.g., Poulin-Dubois et al., 2011). Inclusion of a broad range of heterogeneous non-English exposure may add ecological validity to examinations of the impact of early language environment on social communicative developmental domains. The non-English languages that bilingual infants were exposed to included Spanish (n = 9), Korean (n = 3), Russian (n = 3), Chinese (n = 2), Farsi (n = 2), German (n = 2), Mandarin (n = 2), Romanian (n = 2), and Bengali, Catalan, Persian, Polish, Punjabi, Swedish, and Thai (n = 1 each); one bilingual infant was reported to have exposure to a non-English language that was not specified (see Table 1 for a full demographic description).
Monolingual and bilingual infants’ mothers did not differ statistically in educational attainment (Mann–Whitney U = 337.5, Z = −0.28, p = .78).

Table 1.

Demographic information by language background.

| | Monolingual (%) | Bilingual (%) |
|---|---|---|
| Race/ethnicity | | |
| White | 42 | 36 |
| Black | 4 | 0 |
| Hispanic | 0 | 18 |
| Asian | 13 | 12 |
| Mixed | 42 | 33 |
| Non-English language | | |
| Spanish | 22 | 28 |
| Chinese | 11 | 13 |
| Korean | 7 | 9 |
| Russian | 0 | 9 |
| Farsi | 4 | 6 |
| German | 4 | 6 |
| Romanian | 0 | 6 |
| Persian | 0 | 3 |
| Catalan | 0 | 3 |
| Polish | 0 | 3 |
| Thai | 0 | 3 |
| Swedish | 0 | 3 |
| Bengali | 0 | 3 |
| Punjabi | 0 | 3 |
| None | 52 | |

Four infants (1 monolingual and 3 bilingual) had slightly different language backgrounds from the majority of the monolinguals and bilinguals, respectively. English was the primary language for all but 1 monolingual infant, whose primary language was Chinese. Three of the bilingually exposed infants were also exposed to a third language, Spanish (10–30% of the day). We used Mahalanobis distance to identify potential multivariate outliers in the eye-tracking and behavioral data. No data point was a statistical multivariate outlier, including those from the infants with different language backgrounds (Mahalanobis distances = 0.63–16.93, all below the critical value of χ²(5) = 20.52). The reported analyses include the full sample of 60 infants.
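The multivariate outlier screen described above can be sketched as follows. This is a minimal, dependency-free illustration, not the authors' analysis code. It assumes that the reported distances are squared Mahalanobis distances (D²), which is the quantity conventionally compared with a χ² critical value whose degrees of freedom equal the number of variables. The cutoff of 20.52 is taken from the text and corresponds to χ²(5) at α = .001. The function names are hypothetical.

```python
from statistics import mean

# chi-square critical value with df = 5, as reported in the text (alpha = .001)
CHI2_CRIT_DF5 = 20.52


def covariance(rows):
    """Column means and sample covariance matrix (n - 1 denominator)."""
    n, p = len(rows), len(rows[0])
    means = [mean(r[j] for r in rows) for j in range(p)]
    cov = [[sum((r[i] - means[i]) * (r[j] - means[j]) for r in rows) / (n - 1)
            for j in range(p)] for i in range(p)]
    return means, cov


def invert(matrix):
    """Gauss-Jordan inversion with partial pivoting (adequate for small p)."""
    p = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(p)]
           for i, row in enumerate(matrix)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(p):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[p:] for row in aug]


def mahalanobis_d2(rows):
    """Squared Mahalanobis distance of each participant from the sample centroid."""
    means, cov = covariance(rows)
    inv = invert(cov)
    p = len(means)
    d2 = []
    for r in rows:
        d = [x - m for x, m in zip(r, means)]
        d2.append(sum(d[i] * inv[i][j] * d[j] for i in range(p) for j in range(p)))
    return d2


def flag_outliers(rows, critical=CHI2_CRIT_DF5):
    """Indices of participants whose D^2 exceeds the chi-square critical value."""
    return [i for i, v in enumerate(mahalanobis_d2(rows)) if v > critical]
```

Each row would hold one infant's scores on the five screened variables. The text does not list which five eye-tracking and behavioral variables were entered, so any particular choice is an assumption.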

Apparatus

Stimuli were presented on a ViewSonic VX2268wm monitor with a 47.4 × 29.6-cm display. Participants were seated approximately 60 cm from the display. Eye-movement data were collected via an EyeLink 1000 eye tracker (SR Research, Kanata, Ontario, Canada). Eye movements were recorded at 500 Hz with a spatial accuracy of approximately 0.5°–1° visual angle and downsampled to 60 Hz to match the maximum refresh rate of the computer monitor. Experimental stimuli were generated with Experiment Builder software.
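Because tracker accuracy and stimulus sizes are expressed in degrees of visual angle, it can help to see the corresponding on-screen extents at the approximately 60-cm viewing distance. The sketch below uses the standard relation size = 2·d·tan(θ/2). It assumes a flat screen viewed head-on at exactly 60 cm, so the resulting numbers are approximate.

```python
import math

VIEWING_DISTANCE_CM = 60.0  # approximate viewing distance reported in the text


def size_cm(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """On-screen extent (cm) subtending angle_deg, centered on the line of sight."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


def angle_deg(extent_cm, distance_cm=VIEWING_DISTANCE_CM):
    """Visual angle (degrees) subtended by an on-screen extent in cm."""
    return math.degrees(2 * math.atan(extent_cm / (2 * distance_cm)))


if __name__ == "__main__":
    print(f"display: {angle_deg(47.4):.1f} x {angle_deg(29.6):.1f} deg")
    print(f"1 deg of gaze error ~ {size_cm(1.0):.2f} cm on screen")
    print(f"face stimulus: {size_cm(6.3):.1f} x {size_cm(9.9):.1f} cm")
```

Under these assumptions, the 47.4 × 29.6-cm display subtends roughly 43.1° × 27.7°. A 1° calibration criterion corresponds to about 1.05 cm on screen, and each 6.3° × 9.9° face stimulus measures about 6.6 × 10.4 cm.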

Procedure

Prior to data collection, a five-point calibration scheme was used to calibrate each infant’s POG and was repeated until the POG was within 1° of the center of the target. The experimental session began only after the calibration criterion had been reached. The visual search task always preceded the free-viewing task. This fixed task order was chosen because previous work suggests that infants prefer social free-viewing tasks to visual search tasks and that visual search performance is poor when tested after free-viewing tasks (Frank, Amso, & Johnson, 2014). Infants were recalibrated between eye-tracking tasks using the same initial calibration procedure. The MSEL was administered after the eye-tracking tasks were completed.

Visual search task

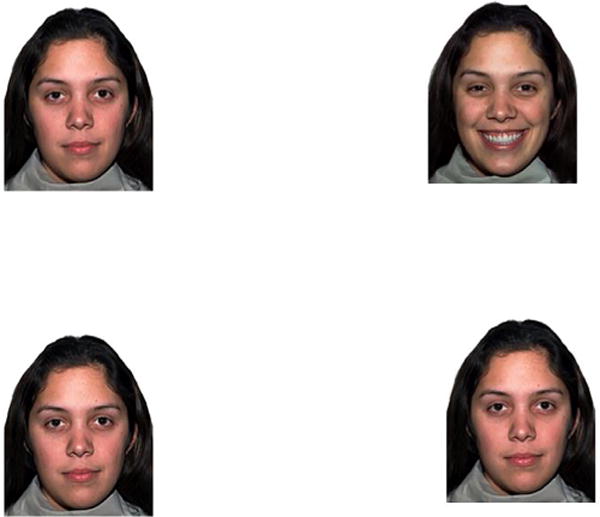

The visual search task examined whether monolingual and bilingual infants’ ability to detect emotional faces is affected by individual differences in mouth-looking. Four static female faces were presented in a 2 × 2 matrix, with three “distractor” faces conveying a calm expression and one target face conveying happiness, fear, sadness, or surprise (see Fig. 1). Each face was in full color and subtended 6.3° × 9.9° of visual angle. All four faces within a trial depicted the same model (Model 02, 05, 07, or 10 from the NimStim set of facial expressions; Tottenham et al., 2009). Each of the four models conveyed each of the four target emotions three times, for a total of 48 trials. Model, target location, and facial expression were pseudorandomized across trials such that no combination was repeated on more than 2 consecutive trials. To increase uncertainty about target location across trials and to reduce anticipatory looking, the centroids of the face stimuli within the four quadrants of the monitor varied across trials but remained equidistant from the center of the monitor.
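One way to generate a trial order that satisfies the counterbalancing constraint described above is sketched below. The sketch reads the constraint as "no model, target location, or expression appears on more than 2 consecutive trials". It also assumes that each quadrant serves as the target location on 12 of the 48 trials. Both readings are assumptions, as is the restart-on-dead-end strategy. The location labels and function names are hypothetical.

```python
import random
from itertools import product

MODELS = ["02", "05", "07", "10"]                # NimStim models named in the text
EMOTIONS = ["happy", "fearful", "sad", "surprised"]
LOCATIONS = ["top-left", "top-right", "bottom-left", "bottom-right"]  # hypothetical labels
MAX_RUN = 2  # no attribute value on more than 2 consecutive trials


def breaks_run_limit(seq, trial):
    """True if appending `trial` would give some attribute a run longer than MAX_RUN."""
    if len(seq) < MAX_RUN:
        return False
    recent = seq[-MAX_RUN:]
    return any(all(prev[k] == trial[k] for prev in recent) for k in range(len(trial)))


def make_sequence(seed=None, max_restarts=1000):
    """Return 48 (model, emotion, location) trials meeting the run-length limit."""
    rng = random.Random(seed)
    for _ in range(max_restarts):
        # Each model shows each target emotion 3 times: 4 x 4 x 3 = 48 trials.
        pairs = [pair for pair in product(MODELS, EMOTIONS) for _ in range(3)]
        locations = LOCATIONS * 12  # assumption: each quadrant used equally often
        rng.shuffle(locations)
        pool = [(m, e, loc) for (m, e), loc in zip(pairs, locations)]
        seq = []
        while pool:
            options = [t for t in pool if not breaks_run_limit(seq, t)]
            if not options:
                break  # dead end: start over with a new shuffle
            choice = rng.choice(options)
            pool.remove(choice)
            seq.append(choice)
        if not pool:
            return seq
    raise RuntimeError("no valid order found; increase max_restarts")
```

Building the order one trial at a time and restarting on a dead end is simpler than enumerating all valid orders. Because each attribute has four equally frequent values, dead ends are uncommon.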

Fig. 1.

An example stimulus from the visual search task with “happy” as the target emotional face. Calm faces of the same actress were selected as the “neutral” emotion. All faces were selected from the NimStim collection.

Individual trials terminated either when the infant fixated on the target face for at least 300 ms (in a single fixation) or after 4 s had elapsed. A small attention-grabbing fixation stimulus appeared between trials to reorient infants to the center of the monitor. Infants were presented with a total of 48 trials; visual discrimination of emotional expressions on the visual search task was operationalized as the percentage of trials in which the target emotional face was fixated for at least 300 ms (i.e., accuracy).
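The trial-termination rule and accuracy score described above can be expressed compactly. In this sketch, each trial is a list of fixations, each with an onset and duration relative to trial onset and a flag indicating whether it landed on the target face. The data structure, the field names, and the decision to divide by the number of trials with usable data are assumptions, not details given in the text.

```python
from dataclasses import dataclass

MIN_TARGET_FIXATION_MS = 300  # single-fixation criterion from the text
TRIAL_LIMIT_MS = 4000         # trials ended after 4 s


@dataclass
class Fixation:
    onset_ms: float      # time since trial onset
    duration_ms: float
    on_target: bool      # hypothetical flag: fixation fell on the target face


def target_found(fixations):
    """True if one fixation on the target lasted >= 300 ms before the 4-s limit.

    Fixations are assumed to be sorted by onset.
    """
    for fix in fixations:
        if fix.onset_ms >= TRIAL_LIMIT_MS:
            break
        # Only the part of a fixation that falls before the time limit counts.
        counted_ms = min(fix.duration_ms, TRIAL_LIMIT_MS - fix.onset_ms)
        if fix.on_target and counted_ms >= MIN_TARGET_FIXATION_MS:
            return True
    return False


def search_accuracy(trials):
    """Percentage of trials in which the target face was found."""
    if not trials:
        raise ValueError("no usable trials")
    return 100.0 * sum(target_found(t) for t in trials) / len(trials)
```

Note that a fixation beginning at 3,800 ms counts for only 200 ms, because the trial ends at 4 s. Under this scoring rule, such a fixation therefore cannot meet the 300-ms criterion.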

Free-viewing task

The free-viewing task gauged visual social attention to a dynamic talking face. Infants viewed ten videos (17–23 s each) of a woman seated in a playroom, looking directly into the camera and engaging in infant-directed speech (see Fig. 2). The verbal content of the videos involved scenarios familiar to infants (e.g., mealtime and bath-time routines). Video frames were 8-bit color images with a resolution of 1280 × 720 pixels. Between trials, an attention-grabbing fixation stimulus appeared on either the left or right side of the monitor. Because the woman was always in the center of the scene, this off-center target ensured that attention to the face reflected a reorientation rather than a persistence of gaze at a central location.

Fig. 2.

A frame from the free-viewing task with areas of interest overlaid. Fixations directed within the white region were classified as attention to the eyes; fixations directed within the black region were classified as attention to the mouth. Fixations to all other regions were classified as “other”. Relative attention to the mouth was calculated as the difference in amount of time looking at the mouth versus the eyes, normed by total time spent viewing the woman’s face.

Each frame was hand-traced for the woman’s face, which was then divided into two “areas of interest” (AOIs) encompassing the eyes and the mouth. All other parts of the scene were coded as a separate AOI (see Fig. 2). These AOIs were drawn to accommodate the spatial and temporal precision of the SR Research eye tracker, and similarly drawn AOIs have been used in previous dynamic free-viewing studies of infant social attention (e.g., Chawarska, Macari, & Shic, 2012). The eye AOI occupied 7.17% of the screen area, and the mouth AOI occupied 4.47%. Fixations directed at each of the three AOIs were calculated using software written in MATLAB (MathWorks, Natick, MA, USA). Individual trials of the free-viewing task were included in analyses if infants viewed at least 50% of the clip. Relative attention to the mouth versus the eyes was calculated as the ME Index: the difference in total accumulated dwell time between the mouth and eye AOIs, normed by total attention to the face. This is similar to the proportion total looking time (PTLT) metric used by Lewkowicz and Hansen-Tift (2012), except that the numerator of our metric subtracts dwell time to the eyes from dwell time to the mouth. This better reflects our primary interest in the behavioral correlates of mouth-looking. Thus, positive ME Index values indicate greater attention to the mouth, and negative values indicate greater attention to the eyes.
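The following is a minimal sketch of the ME Index and of the mouth-looker/eye-looker split used in the categorical analyses. It makes three assumptions: that "total attention to the face" is the summed dwell time in the eye and mouth AOIs, that dwell times are accumulated across included clips, and that the index is scaled to −100 to 100 (suggested by the magnitudes reported in Table 2). The data structure and field names are hypothetical.

```python
from typing import NamedTuple, Optional


class ClipLooking(NamedTuple):
    mouth_s: float   # dwell time in the mouth AOI (s)
    eyes_s: float    # dwell time in the eye AOI (s)
    viewed: float    # hypothetical: proportion of the clip the infant viewed


def me_index(clips, min_viewed=0.5) -> Optional[float]:
    """(mouth - eyes) / (mouth + eyes), scaled to -100..100, over included clips."""
    included = [c for c in clips if c.viewed >= min_viewed]  # >= 50% of clip viewed
    mouth = sum(c.mouth_s for c in included)
    eyes = sum(c.eyes_s for c in included)
    if mouth + eyes == 0:
        return None  # no face looking on included clips
    return 100.0 * (mouth - eyes) / (mouth + eyes)


def looker_group(index: Optional[float]) -> Optional[str]:
    """Positive ME Index -> mouth-looker; negative -> eye-looker."""
    if index is None or index == 0:
        return None  # a value of exactly 0 is not covered by the text
    return "mouth-looker" if index > 0 else "eye-looker"
```

For example, an infant with 7 s on the mouth and 3 s on the eyes across included clips has an ME Index of 40 and would be classed as a mouth-looker. A clip viewed for less than half its length is ignored.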

Mullen Scales of Early Learning

A trained experimenter administered the MSEL (Mullen, 1995). The MSEL is a standardized, play-based developmental assessment of nonverbal cognitive and verbal skills that is administered in English. It was originally developed for and normed with children from birth to 68 months of age whose primary language was English (Shank, 2011). The MSEL captures functioning in several developmental domains, including expressive language, receptive language, visual reception, and fine motor skills. The age-equivalent scores for each of these subscales can be used to calculate age-normed developmental quotients of verbal function (VDQ: average of expressive and receptive language scores divided by chronological age; e.g., Campbell, Shic, Macari, & Chawarska, 2014) and nonverbal function (NVDQ: average of fine motor and visual reception scores divided by chronological age). These age-normed developmental quotients provide a metric of skill level relative to what is expected given an infant’s chronological age.
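The developmental quotients can be computed directly from MSEL age-equivalent scores. The sketch assumes that all ages are in months and that quotients are multiplied by 100, the usual scaling for developmental quotients and consistent with the NVDQ means near 100 in Table 2. The function and argument names are hypothetical.

```python
def developmental_quotient(age_equiv_1, age_equiv_2, chronological_age):
    """Mean of two MSEL age-equivalent scores divided by chronological age, x 100.

    All ages in months (assumption).
    """
    if chronological_age <= 0:
        raise ValueError("chronological age must be positive")
    return 100.0 * ((age_equiv_1 + age_equiv_2) / 2) / chronological_age


def vdq(expressive_ae, receptive_ae, age):
    """Verbal DQ from expressive and receptive language age equivalents."""
    return developmental_quotient(expressive_ae, receptive_ae, age)


def nvdq(fine_motor_ae, visual_reception_ae, age):
    """Nonverbal DQ from fine motor and visual reception age equivalents."""
    return developmental_quotient(fine_motor_ae, visual_reception_ae, age)
```

Consider a hypothetical 8-month-old with expressive and receptive age equivalents of 8 and 7 months. For this infant, `vdq(8, 7, 8)` returns 93.75, slightly below age expectations. Fine motor and visual reception age equivalents of 9 and 8 months give `nvdq(9, 8, 8)` = 106.25, slightly above them.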

Age-normed expressive language and receptive language subscale scores from the MSEL were used as separate dependent measures in our analyses. These inform relative skill level in language production and comprehension, respectively, as compared with age-expected norms. Expressive language skills evaluated in the 6- to 12-month age range include producing consonant sounds, babbling, jabbering with inflection, jabbering with gestures, and first word approximations. Receptive language skills include orienting to peripheral sounds, responding to parent-reported familiar words (e.g., mama, bottle, toy), pointing to objects (e.g., ball, chair), and recognizing one’s own name.

In addition to measuring prelinguistic verbal skills, the MSEL provides a measure of infants’ overall early nonverbal cognitive functioning (i.e., NVDQ), which we took into account when examining language development. Nonverbal cognitive skills assessed in the 6- to 12-month age range include those related to object permanence (e.g., A-not-B error, hiding with displacement), shape matching, and imitation.

Plan of statistical analyses

The current study aimed to examine how monolingual and bilingual infants’ visual attention to dynamic talking faces modulates early verbal skills and visual discrimination of emotional expressions. Similar to our categorical versus continuous conceptualization of early language environment (e.g., monolingual/bilingual vs. non-English language exposure), we were also interested in examining mouth-looking as both a categorical variable and a continuous variable. These complementary sets of analyses inform the extent to which mouth-looking yields differences of kind or degree in the outcome variables.

As a categorical variable, mouth-looking informs whether preferential attention to the mouth, in general, affects infants’ concurrent language skills and visual attention to nonlinguistic social stimuli (Ayneto & Sebastián-Gallés, 2017). Infants were categorized as either mouth-lookers or eye-lookers based on whether they preferentially attended to the mouth (positive ME Index value, n = 25) or the eyes (negative ME Index value, n = 35) on the free-viewing task. We used three 2 (monolingual vs. bilingual) × 2 (mouth-looker vs. eye-looker) analyses of covariance (ANCOVAs) to examine group differences in (a) age-normed receptive language skills from the MSEL, (b) age-normed expressive language skills from the MSEL, and (c) accuracy at detecting the emotional face on the visual search task while covarying for age and NVDQ. These categorical analyses inform whether preferential attention to the mouth and language background confer differences in either language development or visual discrimination of emotional expressions.

As a continuous variable, mouth-looking reveals how individual differences in mouth-looking may account for differences in communicative and social development. The full range of the ME Index (both positive and negative values) was used as the continuous metric of mouth-looking. Multiple linear regressions quantified the relative effects of mouth-looking and non-English exposure on expressive and receptive language skills and visual search accuracy while controlling for any effects of age and nonverbal cognitive ability. This approach identifies the proportion of variance in language skills and emotion perception that can be uniquely explained by relative attention to the mouth, and whether that relation is moderated by non-English exposure. The primary predictors of interest in the regression analyses were the ME Index, the percentage of non-English exposure, and their interaction.

Power analyses were conducted with G*Power to determine whether our sample was large enough to detect the effects of interest. For our categorical analyses, a sample size of 60 infants provided power of 1 − β = 0.80 to detect effects of partial η² = 0.50 at an alpha of 0.05 in an ANCOVA model with two covariates (i.e., age and nonverbal cognitive skill) and four groups (mouth- vs. eye-lookers × monolingual vs. bilingual). Similarly, for our continuous analyses, a sample size of 60 infants provided power of 1 − β = 0.80 to detect effects of f² = 0.40 at an alpha of 0.05 in a linear regression with five predictors (age, nonverbal skill, non-English exposure, ME Index, and non-English exposure × ME Index). Age and NVDQ were chosen as covariates to statistically control for any effects that chronological age or nonverbal cognitive skill level may have on our measures of visual social attention.
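G*Power takes Cohen's f as the effect-size input for ANCOVA and Cohen's f² for multiple regression, so converting between scales can help interpret the targets above. The sketch uses the standard conversions R² = f² / (1 + f²) and f = √(η²p / (1 − η²p)). It does not reproduce the power computation itself, which requires the noncentral F distribution.

```python
import math


def f2_to_r2(f2):
    """Cohen's f^2 for regression -> proportion of variance explained (R^2)."""
    return f2 / (1 + f2)


def partial_eta2_to_f(partial_eta2):
    """Partial eta squared -> Cohen's f."""
    if not 0 <= partial_eta2 < 1:
        raise ValueError("partial eta squared must be in [0, 1)")
    return math.sqrt(partial_eta2 / (1 - partial_eta2))
```

Here `f2_to_r2(0.40)` is about 0.29, so the regression target corresponds to the predictors jointly explaining roughly 29% of the variance. `partial_eta2_to_f(0.50)` equals 1.0. For comparison, Cohen's conventional benchmarks classify f² = 0.35 and f = 0.40 as large effects.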

Results

The aims of this study were to examine (a) relations between infants’ mouth-looking and early verbal skills in the context of monolingual or bilingual early language environments and (b) relations between infants’ mouth-looking and visual discrimination of emotional expressions, again in the context of monolingual or bilingual early language environments. We first report preliminary analyses of data from the free-viewing eye-tracking task, the MSEL, and the visual search task, which quantify mouth-looking, verbal skills, and visual discrimination of emotional expressions, respectively. A correlation matrix of performance across both eye-tracking tasks and demographic information is provided in Supplemental Table 1 of the online supplementary material. Next, we report categorical analyses examining group differences in verbal skills and visual discrimination of emotional expressions by mouth-looking versus eye-looking and by monolingual versus bilingual language background (Table 2). Finally, we report continuous analyses examining whether individual differences in relative attention to a talking mouth predict verbal skills and visual discrimination of emotional expressions, and whether these relations vary as a function of non-English language exposure.

Table 2.

Sample characteristics by language background.

| | Monolingual (n = 27; 10 male) | Bilingual (n = 33; 17 male) | t(58) | p |
|---|---|---|---|---|
| Age (months) | 8.62 (2.09) | 8.49 (1.76) | 0.26 | .80 |
| MSEL | | | | |
| Expressive language | 94.62 (17.39) | 91.47 (16.04) | 0.73 | .47 |
| Receptive language | 89.64 (13.29) | 82.51 (19.50) | 1.62 | .11 |
| NVDQ | 106.51 (13.14) | 107.21 (18.00) | −0.17 | .87 |
| Visual search task | | | | |
| Accuracy (%) | 50.08 (11.26) | 45.73 (11.59) | 1.46 | .15 |
| Free-viewing task | | | | |
| ME Index | −1.16 (54.39) | −13.30 (56.29) | 0.84 | .40 |
| Viewing time (s) | 91.30 (42.00) | 95.58 (44.15) | −0.38 | .70 |

Note. Values are means with standard deviations in parentheses. MSEL, Mullen Scales of Early Learning; NVDQ, nonverbal developmental quotient; ME Index, relative attention to the mouth.

Preliminary analyses in task performance

Free-viewing task: age-related changes in mouth-looking by language background

Infants provided data for an average of 7.21 video clips (range = 3–10). Across all infants, total time spent viewing the videos did not vary significantly with age (r = 0.11, p = .42; Mdwell_time = 93.65 s, SD = 42.89). Infants looked primarily at the face (i.e., eyes + mouth) rather than the background (Mface = 85.82%, SD = 11.72).

Age-related effects of mouth-looking were examined categorically, with a binomial logistic regression predicting the likelihood that an infant would be a mouth-looker versus an eye-looker by age and language background (i.e., monolingual vs. bilingual). The interaction between language background and age was not significant (odds ratio = 1.05, Wald = 0.035, p = .85). The results from the model indicate that mouth-lookers and eye-lookers did not significantly differ in age (mouth-lookers: Mage = 8.84 months, SD = 2.17; eye-lookers: Mage = 8.33 months, SD = 1.67; odds ratio = 1.122, Wald = 0.306, p = .58), and bilingual infants were not more likely to be mouth-lookers than monolingual infants (mouth-lookers: monolingual = 13, bilingual = 12; eye-lookers: monolingual = 14, bilingual = 21; odds ratio = 1.02, Wald <0.001, p = .99). The model suggests that neither age nor language background influences the odds that an infant would preferentially attend to a talking mouth.

Age-related changes in mouth-looking were also examined continuously, using a linear regression predicting ME Index from age, non-English exposure, and their interaction. The model fit was significant (R² = 0.138), F(3, 56) = 2.989, p = .039, and there was a significant interaction between non-English exposure and age (β = −1.465, p = .013), indicating that greater non-English exposure was associated with a weaker (less positive) relation between age and mouth-looking. There were also main effects of age (β = 0.474, p = .007) and non-English exposure (β = 1.399, p = .017), such that mouth-looking increased with age and with greater non-English exposure. The age-related increase corroborates prior eye-tracking studies examining mouth-looking across development during infancy and childhood (Frank et al., 2011; Tenenbaum et al., 2013; but see Lewkowicz & Hansen-Tift, 2012, and findings from monolingual infants in Pons et al., 2015).
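The overall F test for a regression model follows from R², the number of predictors k, and the sample size n, with degrees of freedom (k, n − k − 1). The small helper below makes this relation explicit; its name is hypothetical.

```python
def overall_f(r2, k, n):
    """Overall F statistic and degrees of freedom for an OLS model with k predictors."""
    df_model, df_resid = k, n - k - 1
    if df_resid <= 0:
        raise ValueError("need more observations than predictors + 1")
    f_stat = (r2 / df_model) / ((1 - r2) / df_resid)
    return f_stat, df_model, df_resid
```

With R² = 0.138, k = 3 predictors (age, non-English exposure, and their interaction), and n = 60 infants, `overall_f(0.138, 3, 60)` gives F ≈ 2.99 on (3, 56) degrees of freedom.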

Developmental assessment of verbal and nonverbal skills

Early verbal skills and nonverbal cognitive skills were gauged with the MSEL. Although the MSEL was administered in English, we found no evidence that this affected monolingual versus bilingual infants’ performance. Monolingual and bilingual infants did not differ in their nonverbal scores, t(58) = −0.17, p = .87, or verbal scores [receptive language: t(58) = 1.62, p = .11; expressive language: t(58) = 0.73, p = .47], nor was non-English exposure significantly associated with nonverbal scores (r = −0.10, p = .43) or verbal scores (receptive language: r = −0.23, p = .07; expressive language: r = −0.12, p = .35). A repeated-measures ANCOVA revealed that infants had relatively similar language profiles: age-normed expressive and receptive language scores did not differ, F(1, 58) = 2.36, p = .13, and this comparison did not vary by non-English language exposure, F(1, 58) = 0.68, p = .41.

Visual search task

Infants provided data for an average of 45.02 trials (range = 35–48). A one-sample t test confirmed that infants as a group were above chance (25%) at fixating the target emotional face (M = 48.31%, SD = 9.84), t(59) = 37.83, p < .001. Visual search performance was positively but not significantly associated with age (r = 0.23, p = .07).

Relating free-viewing task with MSEL and visual search task performance

Group differences in verbal skills and emotion discriminability by language background and mouth-looking

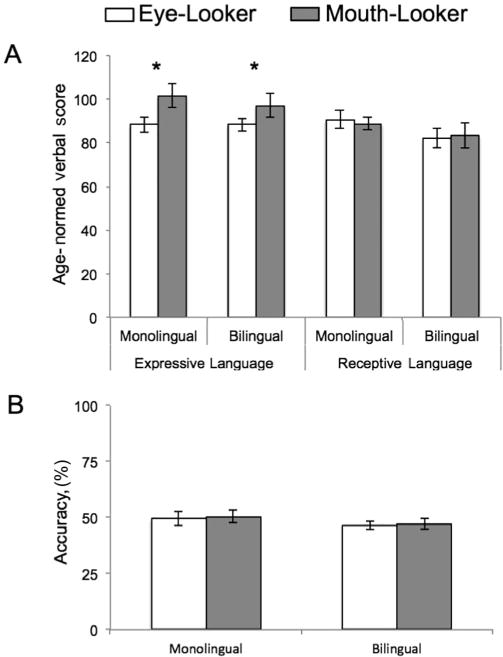

Three 2 × 2 ANCOVAs separately evaluated (a) age-normed expressive language skills, (b) age-normed receptive language skills, and (c) visual search accuracy, with language background (monolingual vs. bilingual) and facial feature preference (mouth-looker vs. eye-looker) as between-group factors. There were no significant interactions between language group and facial feature preference on expressive language, receptive language, or visual search accuracy (all ps > .51). Instead, there was a main effect of facial feature preference on expressive language: mouth-lookers had significantly higher age-normed expressive language scores than eye-lookers, F(1, 54) = 6.27, p = .015, partial η² = 0.10. Eye-lookers and mouth-lookers did not differ in receptive language skills, F(1, 54) = 0.049, p = .83, partial η² = .001, or in their ability to detect an emotional face, F(1, 54) = 0.145, p = .71, partial η² = 0.003. These results suggest that, regardless of language background, preferential attention to a talking mouth is associated with expressive language development. However, we found no evidence that preferential attention to a talking mouth was associated with the ability to detect emotional expressions (see Fig. 3).
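Partial η² for each ANCOVA effect can be recovered from the F statistic and its degrees of freedom, because η²p = SS_effect / (SS_effect + SS_error) = F × df_effect / (F × df_effect + df_error). The sketch below applies this identity to the three mouth-looker versus eye-looker comparisons reported above; rounded, it gives 0.10, .001, and 0.003, matching the reported effect sizes. The function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom.

    eta_p^2 = SS_effect / (SS_effect + SS_error) = F * df_effect / (F * df_effect + df_error)
    """
    return f_value * df_effect / (f_value * df_effect + df_error)


# F values reported above for mouth-lookers vs. eye-lookers, each with df = (1, 54).
for label, f in [("expressive", 6.27), ("receptive", 0.049), ("visual search", 0.145)]:
    print(label, round(partial_eta_squared(f, 1, 54), 3))
```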

Fig. 3.

Mean verbal scores and visual search accuracy by facial feature preference. (A) Infants who primarily attended to the mouth had higher age-normed expressive language scores in comparison with infants who primarily attended to the eyes of the woman in the free-viewing task regardless of language background. (B) Receptive language scores and visual search accuracy did not differ between mouth-lookers and eye-lookers or between monolingual and bilingual infants. *p < .05.

Individual differences in verbal skills and emotion discriminability by non-English language exposure and mouth-looking

Three separate linear regression models were evaluated to determine the effects of mouth-looking on expressive language scores, receptive language scores, and visual search accuracy while controlling for age and nonverbal cognitive scores (NVDQ). These analyses examined how infants’ visual attention to the mouth relates to face perception and early verbal skills and whether this relation is moderated by non-English exposure (range = 0–95%). Each model included age, NVDQ, non-English exposure, ME Index, and the ME Index × non-English exposure interaction as predictors; Table 3 summarizes the three models. Model fit was significant for expressive language scores (R² = 0.20), F(5, 59) = 2.75, p = .028, and receptive language scores (R² = 0.30), F(5, 59) = 4.58, p = .001, but not for visual search accuracy (R² = 0.14), F(5, 59) = 1.70, p = .15.

Table 3.

Summary of regression analyses.

| | Expressive language | | | Receptive language | | | Visual search accuracy | | |
|---|---|---|---|---|---|---|---|---|---|
| Predictor | B | SE B | β | B | SE B | β | B | SE B | β |
| Age | 0.90 | 1.22 | 0.10 | −0.70 | 1.19 | −0.08 | 0.83 | 0.89 | 0.14 |
| NVDQ | 0.16 | 0.14 | 0.16 | 0.55 | 0.13 | 0.51** | 0.19 | 0.10 | 0.27 |
| Non-English exposure | −0.05 | 0.07 | −0.09 | −0.10 | 0.07 | −0.17 | −0.04 | 0.05 | −0.11 |
| ME Index | 0.13 | 0.06 | 0.45* | −0.02 | 0.06 | −0.06 | −0.006 | 0.04 | −0.03 |
| ME Index × non-English exposure | −0.001 | 0.001 | −0.16 | 0.001 | 0.001 | 0.15 | <0.001 | 0.001 | −0.02 |
| R² | 0.20 | | | 0.30 | | | 0.14 | | |
| F | 2.75* | | | 4.58** | | | 1.70 | | |

Note. NVDQ, nonverbal developmental quotient; ME Index, relative attention to the mouth.

*p < .05.

**p < .01.
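Table 3 reports both unstandardized (B) and standardized (β) coefficients. Under the usual convention, β rescales B by the ratio of the predictor's standard deviation to the outcome's, so β expresses the change in the outcome (in SD units) per SD change in the predictor; in a one-predictor model, β reduces to Pearson's r. The sketch below illustrates the conversion; the function names and data are hypothetical, and the sample SDs needed to convert the table's own rows are not reported in this section.

```python
from statistics import mean, stdev


def ols_slope(x, y):
    """Unstandardized slope B from a simple (one-predictor) OLS regression."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def standardized_beta(b, sd_x, sd_y):
    """Convert an unstandardized coefficient B into a standardized beta."""
    return b * sd_x / sd_y


# Hypothetical data: in a one-predictor model the resulting beta equals Pearson's r.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
beta = standardized_beta(ols_slope(x, y), stdev(x), stdev(y))
```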

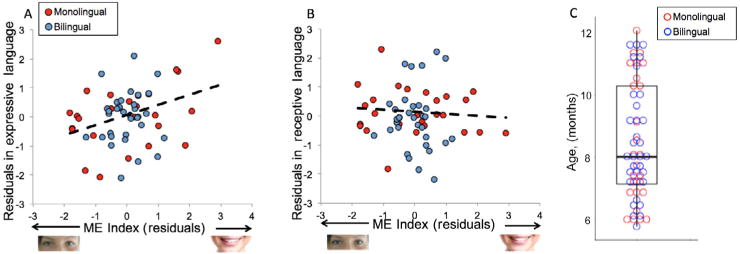

There was no significant interaction between relative attention to the mouth and non-English exposure on expressive language scores (β = −0.16, p = .39), receptive language scores (β = 0.15, p = .39), or visual search accuracy (β = −0.02, p = .90). Instead, corroborating the ANCOVA results, greater attention to the talking mouth was associated only with higher age-normed expressive language scores (β = 0.45, p = .02), over and above the effects of age (β = 0.10, p = .47), NVDQ (β = 0.16, p = .24), and non-English exposure (β = −0.09, p = .45). NVDQ was a significant predictor of receptive language scores (β = 0.51, p < .001) and a marginal predictor of visual search accuracy (β = 0.27, p = .054). These data suggest that, regardless of type of language exposure, selective attention to the mouth may be a sensitive marker of expressive language development but not of receptive language development or emotion discrimination (see Fig. 4).
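The residual scatter plots in Fig. 4 show partial correlations. One standard way to compute a partial correlation is to regress both variables on the covariates (per the figure caption, age, nonverbal cognitive development, and percentage of non-English exposure) and then correlate the two sets of residuals. The sketch below does this with the Python standard library; the function names are hypothetical, and it assumes ordinary least squares residualization rather than reproducing the authors' software.

```python
from statistics import mean


def solve(a, b):
    """Solve the square linear system a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def residualize(y, covariates):
    """Residuals of y after OLS regression on an intercept plus the covariates."""
    rows = [[1.0] + [c[i] for c in covariates] for i in range(len(y))]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    coefs = solve(xtx, xty)
    return [yi - sum(b * v for b, v in zip(coefs, r)) for r, yi in zip(rows, y)]


def pearson_r(x, y):
    """Pearson correlation coefficient."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def partial_correlation(x, y, covariates):
    """Correlation between x and y after partialling the covariates out of both."""
    return pearson_r(residualize(x, covariates), residualize(y, covariates))
```

Plotting the two residual series against each other gives a scatter plot of the kind shown in Fig. 4A and 4B.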

Fig. 4.

(A, B) Scatter plots of residuals from regression analyses showing partial correlations between mouth-looking and expressive (A) and receptive (B) language skills by language background. (C) Distribution of age in the sample. Mouth-looking was significantly associated only with expressive language skills over and above the effects of nonverbal cognitive development, age, and percentage of non-English exposure.

We followed up these findings with additional regression analyses restricted to monolingual infants and the subset of bilingual infants who met a narrower definition of bilingualism (i.e., 25–75% exposure to a non-English language; cf. Pearson, Fernandez, Lewedeg, & Oller, 1997) to examine whether heterogeneity within our sample partly accounted for the null effects of non-English exposure on verbal skills and on their relation to mouth-looking (see Supplemental Tables 2 and 3 in the supplementary material). These analyses similarly indicated a positive association between mouth-looking and expressive language scores (β = 0.45, p = .03), which again was not moderated by non-English language exposure (ME Index × non-English exposure: β = −0.25, p = .29). Interestingly, after excluding infants whose English and non-English exposure was less balanced (i.e., infants with 10–25% or 75–85% non-English exposure), we observed a significant effect of language exposure on visual search performance: greater non-English language exposure was associated with lower visual discrimination of facial expressions (β = −0.37, p = .03). This may indicate a nonlinear effect of second language exposure on visual discrimination of facial expressions, but not necessarily on mouth-looking (Fig. 5).
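This follow-up combines two steps: restricting the sample by a non-English exposure criterion, and asking whether the relation between exposure and visual search accuracy is better described by a curve than by a line. The text does not state which nonlinear form underlies Fig. 5, so the sketch below assumes a quadratic term and simply compares linear and quadratic R². The record fields (`group`, `exposure`) and function names are hypothetical; this is a minimal illustration, not the authors' procedure.

```python
from statistics import mean


def solve(a, b):
    """Solve the square linear system a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def narrow_bilingual_subset(records, low=25.0, high=75.0):
    """Keep monolingual infants plus infants whose non-English exposure (%) lies within [low, high]."""
    return [r for r in records if r["group"] == "monolingual" or low <= r["exposure"] <= high]


def polynomial_r_squared(x, y, degree):
    """R^2 of an OLS polynomial fit of y on x (degree 1 = linear, 2 = quadratic)."""
    rows = [[xi ** d for d in range(degree + 1)] for xi in x]  # columns 1, x, x^2, ...
    k = degree + 1
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    coefs = solve(xtx, xty)
    fitted = [sum(b * v for b, v in zip(coefs, r)) for r in rows]
    my = mean(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot
```

A U-shaped or inverted-U pattern can yield a near-zero linear R² alongside a substantial quadratic R², which is one way a linear effect can appear in a restricted range yet fail to describe the whole sample.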

Fig. 5.

Scatterplot of the standardized residuals from the regression examining the effects of non-English language exposure on visual search accuracy over and above the effects of age, nonverbal cognitive performance, ME Index, and the interaction between ME Index and non-English language exposure. Although the regression that excluded infants whose non-English language exposure fell outside a more conventional operationalization of bilingualism showed a significant linear relation between language exposure and visual search accuracy, this relation is nonlinear across the whole sample (R² = 0.18, p = .02).

Discussion

This study investigated how infants’ visual attention to faces—specifically, mouth-looking—might be related to early language skills and how this relation may be affected by infants’ early language environment. As expected, infants’ relative attention to the mouth of a talking face increased with age. More important, individual differences in mouth-looking were positively correlated with expressive—but not receptive—language skills regardless of early language exposure. In addition, infants’ detection of emotional faces as targets among neutral distractors did not differ by language environment and was not related to infants’ mouth-looking. These results suggest that mouth-looking may be a context-dependent, goal-directed means for infants to learn about relevant linguistic information in their environment (Amso et al., 2010; Elsabbagh et al., 2014).

To our knowledge, the current study is the first to test the possibility that selective attention to a talking mouth facilitates concurrent language learning. Several researchers have found that early attention to the mouth predicts later expressive language development (Elsabbagh et al., 2014; Tenenbaum et al., 2013; Young et al., 2009) and have posited that increased mouth-looking may allow infants to capitalize on redundant audiovisual speech cues to learn language (e.g., Hillairet de Boisferon et al., 2017; Lewkowicz & Hansen-Tift, 2012; Pons et al., 2015). Corroborating previous findings, we observed developmental increases in relative attention to a talking mouth between 6 and 12 months of age in both monolingual infants (Frank et al., 2011; Tenenbaum et al., 2013; but see Lewkowicz & Hansen-Tift, 2012) and bilingual infants (Pons et al., 2015). Moreover, we showed that—regardless of infants’ language background and degree of non-English language exposure—attention to the mouth is related to concurrent expressive language development beyond what is expected for an infant’s chronological age; infants who preferentially attended to the speaker’s mouth had better age-normed expressive language scores than those who preferentially attended to the speaker’s eyes.

Notably, there were no age-related differences in mouth-looking when it was analyzed categorically: infants in our study who preferentially looked at the mouth (i.e., mouth-lookers) were not older than those who preferentially looked at the woman’s eyes (i.e., eye-lookers). This suggests that variability in mouth-looking tracks more closely with expressive language than with chronological age. The overall developmental increase in mouth-looking observed in other studies (e.g., Frank et al., 2011; Pons et al., 2015; Tenenbaum et al., 2013) may therefore reflect the use of age as a proxy for developmental skill. Our findings are consistent with this suggestion, showing that mouth-looking is associated with infants’ development of early expressive language skills.

Our results revealed that infants who looked more at the talking mouth had more advanced repertoires of vocalizations but not necessarily more advanced receptive language skills. The expressive verbal skills measured in our infants included preverbal vocalizations such as babbling, phonemic utterances, and jabbering (Mullen, 1995). More advanced vocal production may create more opportunities to elicit contingent vocal responses from caregivers during face-to-face interactions (Gros-Louis, West, Goldstein, & King, 2006; Wu & Gros-Louis, 2014). This may iteratively scaffold expressive language development in a feed-forward fashion; for instance, 9.5-month-olds produce more mature vocalizations in response to their mothers’ contingent vocal feedback (Goldstein & Schwade, 2008). Attending to the mouth for speech cues during face-to-face interactions may also support the development of sensorimotor maps necessary for speech production (e.g., Imada et al., 2006). In contrast, mouth-looking may not have been related to receptive language because the receptive language skills measured in our infants largely reflected early communicative skills that are not necessarily linguistic in nature, such as orienting to peripheral sounds, pointing to objects, and recognizing one’s own name (Mullen, 1995).

It should be noted that mouth-looking is only one means by which infants develop expressive language. No infant in our study looked at the woman’s mouth 100% of the time, and the association between mouth-looking and expressive language appeared closely coupled for both bilingual and monolingual infants. Although mouth-looking during the first postnatal year is particularly adaptive for speech acquisition (e.g., Tenenbaum et al., 2013; Young et al., 2009), attention to the mouth alone is not sufficient to support expressive language development (Elsabbagh et al., 2014). Making appropriate attentional shifts during dyadic interactions, for example, may play an equally important role in early language development. Thus, understanding the mechanisms underlying the temporal dynamics of social attention and context-dependent viewing behavior, as well as the developmental factors that predict increased mouth-looking in both monolingual and bilingual infants, is a critical next step in early language research.

Infants’ ability to detect emotional faces did not vary by non-English language exposure when considering the whole sample, contrary to the hypothesis that bilingual infants’ protracted attention to the mouth region may hinder sensitivity to nonlinguistic communicative information such as emotion (Ayneto & Sebastián-Gallés, 2017). Moreover, although recent work demonstrates that bilingual infants attend more to the mouth region of dynamic, nonlinguistic, emotional faces than do monolingual infants (Ayneto & Sebastián-Gallés, 2017), our findings show that infants’ ability to detect emotional faces was not related to how much infants attended to the eye or mouth region of a talking face. Rather, our data suggest that visual detection of emotional expressions during infancy may involve processes in face perception that are distinct from visual attention to dynamic faces (e.g., nonverbal cognitive skills).

In contrast to prior studies, the monolingual and bilingual infants in the current study did not differ on our measures of verbal and cognitive development. Although non-English exposure did appear to moderate age-related changes in mouth-looking, this effect seems to be largely accounted for by expressive language skills. When considering either verbal skill level or emotion discrimination, non-English exposure did not moderate the effects of mouth-looking. One possible explanation for these null effects lies in the characteristics of our sample. Prior studies categorized infants as bilingual if they received 25% to 75% daily exposure to a second language (Ayneto & Sebastián-Gallés, 2017; Pons et al., 2015); in contrast, second language exposure in our bilingual sample spanned a wider range (20–85%). However, if familiarity with the speaker’s language (i.e., English) influenced viewing behaviors, we would have expected non-English exposure to moderate the relation between mouth-looking and verbal skills, which it did not.

Prior studies have also examined combinations of two related Indo-European languages such as Spanish/Catalan (Ayneto & Sebastián-Gallés, 2017; Pons et al., 2015) and French/English (Weikum et al., 2007). In contrast, our bilingual sample was far more diverse in the non-English languages to which infants were exposed, ranging from languages related to English (e.g., German) to unrelated languages (e.g., Chinese). This heterogeneity may better reflect bilingual language exposure in the general population. Although it is not well understood how the distance between bilinguals’ two languages affects infants’ mouth-looking, language distance may have played a role in our findings. Another possibility is that degree of second language exposure does not have a linear effect on either language or social communicative development (e.g., Thordardottir, 2011). Indeed, when we restricted our bilingual sample to be consistent with a more restrictive operationalization of “bilingual exposure” (e.g., Pearson et al., 1997), we observed a significant effect of non-English language exposure on performance on the visual search task. This suggests that the “dosage” effect of language exposure on developmental domains associated with nonlinguistic social communication may vary by language distance and exposure. Notably, the null effects of non-English language exposure on our measures of language development and mouth-looking may be due to the diversity of the bilingual sample rather than to sample size. Our bilingual infants may have been qualitatively more diverse than our parent-report measures were able to capture. This highlights the complexity inherent to bilingualism, which warrants further investigation.

There are additional complexities of studying bilingual populations that should also be taken into account. First, many of the commonly used measures of language development for English-speaking populations have been developed for and normed with English monolingual children (e.g., Dunn & Dunn, 2007; Fenson et al., 1994; Martin & Brownell, 2010a; Martin & Brownell, 2010b) or Spanish–English bilingual children (e.g., Martin, 2012a; Martin, 2012b; Peña, Gutiérrez-Clellen, Iglesias, Goldstein, & Bedore, 2014). Measures normed with English monolinguals might not be appropriate for use with bilingual populations (but see Marchman & Martínez-Sussman, 2002), and measures made for Spanish–English bilingual children might not be appropriate for English-speaking bilinguals exposed to languages other than Spanish. Although the MSEL was normed with children whose primary language was English (Shank, 2011), it measures proto-language skills (e.g., babbling, recognizing one’s own name) that are largely independent of the particular language or languages to which infants are exposed. Nevertheless, the MSEL might not capture the full range of early language skills in infants exposed to multiple languages. Second, bilingual families are typically also bicultural families, and there may be cultural differences in how parents perceive and report their children’s language exposure. Cultural differences have been reported in adult bilinguals’ self-reported language proficiency (e.g., Japanese–English vs. Spanish–English bilinguals’ self-rated second language proficiency; Hoshino & Kroll, 2008). Given that we tested a diverse group of infants, such cultural differences may have affected how parents reported their infants’ language exposure.

The impact of linguistically diverse environments should also be taken into account when testing monolingual infants. Our participants were recruited from a linguistically diverse city in the United States where more than 50% of the city’s population is multilingual (U.S. Census Bureau, 2016). Recent research has found that monolingual infants who reside in linguistically diverse neighborhoods more often imitate and learn from diverse informants than do monolingual infants who reside in more linguistically homogeneous neighborhoods (Howard, Carrazza, & Woodward, 2014). Such research suggests that infants’ linguistic environments, beyond their home and family environments, may affect social attention and social learning. Although the linguistic environments of monolingual samples are rarely described in as much detail as those of bilingual samples, the monolingual infants in our sample may have come from linguistic environments that differed from those of monolingual infants in other studies.

Conclusion

Increased attention to the mouth may facilitate detection of redundant audiovisual speech cues to language (e.g., Lewkowicz & Hansen-Tift, 2012; Pons et al., 2015). Our findings are consistent with this assertion, suggesting that mouth-looking may play a prominent role in supporting expressive language development in infants regardless of early language environment. Thus, selective attention to relevant speech cues from the face may be an important means by which infants acquire language production skills.

Acknowledgments

The authors thank all the families who participated as well as the UCLA Baby Lab for helpful feedback and comments. This work was supported by grants from the National Defense Science and Engineering Graduate Fellowship to TT, National Science Foundation Graduate Research Fellowship (DGE-0707424) to NA, and National Institutes of Health (R01 HD082844) to SPJ.

Appendix A. Supplementary material

Supplementary data associated with this article can be found, in the online version, at https://doi.org/10.1016/j.jecp.2018.01.002.

References

- Amso D, Fitzgerald M, Davidow J, Gilhooly T, Tottenham N. Visual exploration strategies and the development of infants’ facial emotion discrimination. Frontiers in Psychology. 2010;1. doi: 10.3389/fpsyg.2010.00180.

- Ayneto A, Sebastián-Gallés N. The influence of bilingualism on the preference for the mouth region of dynamic faces. Developmental Science. 2017;20. doi: 10.1111/desc.12446.

- Brito N, Barr R. Influence of bilingualism on memory generalization during infancy. Developmental Science. 2012;15:812–816. doi: 10.1111/j.1467-7687.2012.1184.x.

- Brito N, Barr R. Flexible memory retrieval in bilingual 6-month-old infants. Developmental Psychobiology. 2014;56:1156–1163. doi: 10.1002/dev.21188.

- Brito NH, Grenell A, Barr R. Specificity of the bilingual advantage for memory: Examining cued recall, generalization, and working memory in monolingual, bilingual, and trilingual toddlers. Frontiers in Psychology. 2014;5. doi: 10.3389/fpsyg.2014.01369.

- Brooks R, Meltzoff AN. The development of gaze following and its relation to language. Developmental Science. 2005;8:535–543. doi: 10.1111/j.1467-7687.2005.00445.x.

- Campbell DJ, Shic F, Macari S, Chawarska K. Gaze response to dyadic bids at 2 years related to outcomes at 3 years in autism spectrum disorders: A subtyping analysis. Journal of Autism and Developmental Disorders. 2014;44:431–442. doi: 10.1007/s10803-013-1885-9.

- Chawarska K, Macari S, Shic F. Context modulates attention to social scenes in toddlers with autism. Journal of Child Psychology and Psychiatry. 2012;53:903–913. doi: 10.1111/j.1469-7610.2012.02538.x.

- Dunn LM, Dunn DM. PPVT-4: Peabody picture vocabulary test. Bloomington, MN: Pearson Assessments; 2007.

- Elsabbagh M, Bedford R, Senju A, Charman T, Pickles A, Johnson MH, BASIS Team. What you see is what you get: Contextual modulation of face scanning in typical and atypical development. Social Cognitive and Affective Neuroscience. 2014;9:538–543. doi: 10.1093/scan/nst012.

- Fenson L, Dale PS, Reznick JS, Bates E, Thal DJ, Pethick SJ, … Stiles J. Variability in early communicative development. Monographs of the Society for Research in Child Development. 1994;59(5), Serial No. 242.

- Frank M, Amso D, Johnson SP. Visual search and attention to faces during early infancy. Journal of Experimental Child Psychology. 2014;118:13–26. doi: 10.1016/j.jecp.2013.08.012.

- Frank M, Vul E, Saxe R. Measuring the development of social attention using free-viewing. Infancy. 2011;17:355–375. doi: 10.1111/j.1532-7078.2011.00086.x.

- Gliga T, Csibra G. Seeing the face through the eyes: A developmental perspective on face expertise. Progress in Brain Research. 2007;164:323–339. doi: 10.1016/S0079-6123(07)64018-7.

- Goldstein MH, Schwade JA. Social feedback to infants’ babbling facilitates rapid phonological learning. Psychological Science. 2008;19:515–523. doi: 10.1111/j.1467-9280.2008.02117.x.

- Gros-Louis J, West MJ, Goldstein MH, King AP. Mothers provide differential feedback to infants’ prelinguistic sounds. International Journal of Behavioral Development. 2006;30:509–516.

- Hillairet de Boisferon A, Tift AH, Minar NJ, Lewkowicz DJ. Selective attention to a talker’s mouth in infancy: Role of audiovisual temporal synchrony and linguistic experience. Developmental Science. 2017;20. doi: 10.1111/desc.12381.

- Hoff E, Core C, Place S, Rumiche R, Señor M, Parra M. Dual language exposure and early bilingual development. Journal of Child Language. 2012;39:1–27. doi: 10.1017/S0305000910000759.

- Hoshino N, Kroll JF. Cognate effects in picture naming: Does cross-language activation survive a change of script? Cognition. 2008;106:501–511. doi: 10.1016/j.cognition.2007.02.001.

- Howard LH, Carrazza C, Woodward AL. Neighborhood linguistic diversity predicts infants’ social learning. Cognition. 2014;133:474–479. doi: 10.1016/j.cognition.2014.08.002.

- Hunnius S, Geuze RH. Developmental changes in visual scanning of dynamic faces and abstract stimuli in infants: A longitudinal study. Infancy. 2004;6:231–255. doi: 10.1207/s15327078in0602_5.

- Imada T, Zhang Y, Cheour M, Taulu S, Ahonen A, Kuhl PK. Infant speech perception activates Broca’s area: A developmental magnetoencephalography study. NeuroReport. 2006;17:957–962. doi: 10.1097/01.wnr.0000223387.51704.89.

- Jones W, Carr K, Klin A. Absence of preferential looking to the eyes of approaching adults predicts level of social disability in 2-year-old toddlers with autism spectrum disorder. Archives of General Psychiatry. 2008;65:946–954. doi: 10.1001/archpsyc.65.8.946.

- Kovács ÁM, Mehler J. Cognitive gains in 7-month-old bilingual infants. Proceedings of the National Academy of Sciences of the United States of America. 2009;106:6556–6560. doi: 10.1073/pnas.0811323106.

- Kovács ÁM, Mehler J. Flexible learning of multiple speech structures in bilingual infants. Science. 2009;325:611–612. doi: 10.1126/science.1173947.

- Kuhl PK. Is speech learning “gated” by the social brain? Developmental Science. 2007;10:110–120. doi: 10.1111/j.1467-7687.2007.00572.x.

- Lewkowicz DJ, Hansen-Tift AM. Infants deploy selective attention to the mouth of a talking face when learning speech. Proceedings of the National Academy of Sciences of the United States of America. 2012;109:1431–1436. doi: 10.1073/pnas.1114783109.

- Lewkowicz DJ, Pons F. Recognition of amodal language identity emerges in infancy. International Journal of Behavioral Development. 2013;37:90–94. doi: 10.1177/0165025412467582.

- Luk G, Bialystok E. Bilingualism is not a categorical variable: Interaction between language proficiency and usage. Journal of Cognitive Psychology. 2013;25:605–621. doi: 10.1080/20445911.2013.795574.

- Marchman VA, Martínez-Sussman C. Concurrent validity of caregiver/parent report measures of language for children who are learning both English and Spanish. Journal of Speech, Language, & Hearing Research. 2002;45:983–997. doi: 10.1044/1092-4388(2002/080).

- Martin NA. Expressive one-word picture vocabulary test: Spanish-English (bilingual edition). Novato, CA: Academic Therapy; 2012a.

- Martin NA. Receptive one-word picture vocabulary test: Spanish-English (bilingual edition). Novato, CA: Academic Therapy; 2012b.

- Martin NA, Brownell R. Expressive one-word picture vocabulary test. 4th ed. Novato, CA: Academic Therapy; 2010a.

- Martin NA, Brownell R. Receptive one-word picture vocabulary test. 4th ed. Novato, CA: Academic Therapy; 2010b.

- Mullen EM. Mullen scales of early learning. Circle Pines, MN: American Guidance Service; 1995.

- Munhall KG, Johnson EK. Speech perception: When to put your money where the mouth is. Current Biology. 2012;22:R190–R192. doi: 10.1016/j.cub.2012.02.026.

- Pearson BZ, Fernandez SC, Lewedeg V, Oller DK. The relation of input factors to lexical learning by bilingual infants. Applied Psycholinguistics. 1997;18:41–58.

- Peña ED, Gutiérrez-Clellen VF, Iglesias A, Goldstein BA, Bedore LM. Bilingual English Spanish assessment. San Rafael, CA: AR Clinical; 2014.

- Pons F, Bosch L, Lewkowicz DJ. Bilingualism modulates infants’ selective attention to the mouth of a talking face. Psychological Science. 2015;26:490–498. doi: 10.1177/0956797614568320.

- Poulin-Dubois D, Blaye A, Coutya J, Bialystok E. The effects of bilingualism on toddlers’ executive functioning. Journal of Experimental Child Psychology. 2011;108:567–579. doi: 10.1016/j.jecp.2010.10.009.

- Rosenblum LD, Schmuckler MA, Johnson JA. The McGurk effect in infants. Perception & Psychophysics. 1997;59:347–357. doi: 10.3758/bf03211902.

- Schonberg C, Sandhofer CM, Tsang T, Johnson SP. Does bilingual experience affect early visual perceptual development? Frontiers in Psychology. 2014;5. doi: 10.3389/fpsyg.2014.01429.

- Sebastián-Gallés N, Albareda-Castellot B, Weikum WM, Werker JF. A bilingual advantage in visual language discrimination in infancy. Psychological Science. 2012;23:994–999. doi: 10.1177/0956797612436817.

- Shank L. Mullen scales of early learning. In: Kreutzer J, DeLuca J, Caplan B, editors. Encyclopedia of clinical neuropsychology. New York: Springer; 2011. pp. 1669–1671.

- Singh L, Fu CS, Rahman AA, Hameed WB, Sanmugam S, Agarwal P, GUSTO Research Team. Back to basics: A bilingual advantage in infant visual habituation. Child Development. 2015;86:294–302. doi: 10.1111/cdev.12271.

- Teinonen T, Aslin RN, Alku P, Csibra G. Visual speech contributes to phonetic learning in 6-month-old infants. Cognition. 2008;108:850–855. doi: 10.1016/j.cognition.2008.05.009.

- Tenenbaum EJ, Shah RJ, Sobel DM, Malle BF, Morgan JL. Increased focus on the mouth among infants in the first year of life: A longitudinal eye-tracking study. Infancy. 2013;18:534–553. doi: 10.1111/j.1532-7078.2012.00135.x.

- Tenenbaum EJ, Sobel DM, Sheinkopf SJ, Malle BF, Morgan JL. Attention to the mouth and gaze following in infancy predict language development. Journal of Child Language. 2015;42:1173–1190. doi: 10.1017/S0305000914000725.

- Thordardottir E. The relationship between bilingual exposure and vocabulary development. International Journal of Bilingualism. 2011;15:426–445. doi: 10.3109/17549507.2014.923509.

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, Nelson C. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research. 2009;168:242–249. doi: 10.1016/j.psychres.2008.05.006.

- U.S. Census Bureau. American Community Survey 5-Year Estimates, 2012–2016. Table S1601. 2016. Retrieved from https://factfinder.census.gov/faces/tableservices/jsf/pages/productview.xhtml?src=bkmk.

- Wagner JB, Luyster RJ, Yim JY, Tager-Flusberg H, Nelson CA. The role of early visual attention in social development. International Journal of Behavioral Development. 2013;37:118–124. doi: 10.1177/0165025412468064.

- Weikum WM, Vouloumanos A, Navarra J, Soto-Faraco S, Sebastián-Gallés N, Werker JF. Visual language discrimination in infancy. Science. 2007;316:1159. doi: 10.1126/science.1137686.

- Wu Z, Gros-Louis J. Infants’ prelinguistic communicative acts and maternal responses: Relations to linguistic development. First Language. 2014;34:72–90.

- Young GS, Merin N, Rogers SJ, Ozonoff S. Gaze behavior and affect at 6 months: Predicting clinical outcomes and language development in typically developing infants and infants at risk for autism. Developmental Science. 2009;12:798–814. doi: 10.1111/j.1467-7687.2009.00833.x.
