Abstract

Preventive services required for performance measurement often are completed in outside health systems and not captured in electronic medical records (EMRs). A before–after study was conducted to examine the ability of clinical decision support (CDS) to improve performance on preventive quality measures and to capture clinician-reported services completed elsewhere and patient/medical exceptions, and to describe the impact of these data on quality measurement. CDS improved performance on colorectal cancer screening, osteoporosis screening, and pneumococcal vaccination measures (P < .05) but not breast or cervical cancer screening. CDS captured clinician-reported services completed elsewhere (2% to 10%) and patient/medical exceptions (<3%). Compared with measures using only within-system data, including services completed elsewhere in the numerator improved performance: pneumococcal vaccine (73% vs 82%); breast (69% vs 75%), colorectal (58% vs 70%), and cervical cancer (53% vs 62%); and osteoporosis (72% vs 75%) screening (P < .05). Visit-based CDS can capture clinician-reported preventive services, and accounting for services completed elsewhere improves performance on quality measures.

Keywords: clinical decision support, preventive care, quality measurement, primary care

Performance measurement is critical to assessing the quality of health care delivered by clinicians, health plans, and accountable care organizations (ACOs) and incentivizing improvements in clinical care.1 In the past decade, reimbursement has become increasingly linked to performance on quality measures. The Medicare Access and CHIP Reauthorization Act of 2015 (MACRA) further strengthens the link between quality measurement and reimbursement through its Quality Payment Program.2 As a result, clinicians, medical groups, and ACOs face increasing financial gains (and losses) based on their performance on quality metrics, and underperformance, by even a small amount, can have major financial ramifications.

Given the high stakes of performance measurement, the availability of accurate data is critical to measure calculations. Although administrative claims data and manual chart abstraction are commonly used to calculate quality measures, the time, costs, delays, and inaccuracies intrinsic in these approaches pose significant limitations.3–5 Electronic quality measures (eMeasures), which utilize clinical data captured and entered directly into an electronic medical record (EMR), are becoming increasingly common.3 In addition to saving time and costs, eMeasures more accurately identify the proper numerator and denominator for metrics.6 However, suboptimal or nonexistent EMR standards, interoperability, and data exchange pose significant challenges to eMeasure calculation. As a result, much of the data needed for quality measures must be obtained from patients, outside health systems, consulting clinicians, and laboratory interfaces.3

While we wait for significant and consistent advances in EMR standards, interoperability, and data exchange for sharing of structured data for quality measurement, new EMR functionalities are needed to support structured data capture and facilitate quality measurement. EMR-driven clinical decision support (CDS) can improve performance on quality measures7 by providing clinicians with timely, intelligently filtered, patient-specific information to enhance health care delivery and outcomes.8 However, the accuracy and effectiveness of CDS are limited by the availability of structured data in the local EMR.9,10 In open health care systems, where patients often see providers in multiple health systems that use different EMRs, data exchange is uncommon. This results in inaccurate eMeasure calculations and limits the effectiveness of CDS to improve performance.5,6 For example, one-time preventive services (osteoporosis screening and pneumococcal vaccination) or infrequent services (colonoscopy every 10 years) are likely to be completed elsewhere and not available in structured data fields within the local EMR. Similarly, clinicians are often aware of medical reasons (limited life expectancy) or patient reasons (declining vaccination) for not completing preventive services, but this information, if documented at all, resides in free-text notes. As a result, performance on quality measures may be underestimated, and providers may be penalized for practicing tailored, patient-centered, cost-conscious care.

To help address data gaps in the local EMR and improve performance on preventive service quality measures, the research team designed EMR-enabled, visit-based, interactive CDS to facilitate the recording of preventive services completed in outside health systems and patient and medical exceptions to service completion. This study examines the effect of visit-based CDS reminders on the performance of national preventive care measures using National Quality Forum (NQF) measure specifications, and quantifies the incremental change in results seen when care delivered elsewhere and patient/medical exceptions are included in measure calculations from 3 different measurement perspectives.

Methods

Design and Population

The research team conducted a before–after study to assess the effectiveness of point-of-care, EMR-based CDS reminders for preventive health services and the incremental impact of adding features to CDS to allow reporting of preventive services completed in outside health systems and medical and patient exceptions to recommended services. Established primary care patients eligible for 1 or more preventive services were included in the study. An established patient was defined as having 2 or more office visits with the same primary care clinician over 2 years with at least 1 visit in the past 12 months.
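The established-patient definition above can be expressed as a short rule. The following is a minimal sketch, not the study's implementation: the visit record shape (a list of date and clinician ID pairs), the function name, and the use of 730-day and 365-day windows to approximate "2 years" and "12 months" are all assumptions made for illustration.

```python
from datetime import date, timedelta


def is_established(visits, clinician_id, as_of):
    """Return True if the patient meets the established-patient rule.

    visits: list of (visit_date, clinician_id) tuples (hypothetical shape).
    Rule: 2 or more visits with the same primary care clinician over 2 years,
    with at least 1 of those visits in the past 12 months.
    """
    two_years_ago = as_of - timedelta(days=730)   # assumed approximation of 2 years
    one_year_ago = as_of - timedelta(days=365)    # assumed approximation of 12 months
    dates = [
        d for d, c in visits
        if c == clinician_id and two_years_ago <= d <= as_of
    ]
    return len(dates) >= 2 and any(d >= one_year_ago for d in dates)


# Hypothetical visit history for one patient.
example_visits = [
    (date(2010, 3, 1), "dr_a"),
    (date(2011, 5, 1), "dr_a"),
    (date(2011, 6, 1), "dr_b"),
]
print(is_established(example_visits, "dr_a", date(2011, 7, 1)))
```

In this example the patient is established with clinician `dr_a` (two visits in the window, one within the past year) but not with `dr_b` (a single visit).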

CDS reminders were implemented as part of routine clinical practice in 3 general internal medicine clinics at an academic medical center in July 2011. Study clinics were National Committee for Quality Assurance–accredited Level 3 patient-centered medical homes (PCMHs) with more than 10 000 unique patients cared for by attending physicians. At the time of implementation, clinics had more than 10 years of experience using the ambulatory Epic EMR (Epic Systems Corporation, Verona, Wisconsin).

CDS Design and Implementation

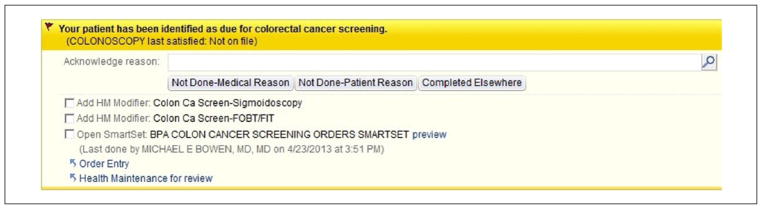

A multidisciplinary team of primary care clinicians, institutional quality officers, health services researchers, and clinical informaticians implemented visit-based CDS in the Epic EMR. CDS utilized age, sex, and result data in the EMR to identify patients eligible for preventive services. International Classification of Diseases, Ninth Revision (ICD-9) codes were used to identify exclusions for each performance measure using NQF criteria.11 At each office visit, CDS automatically identified patients who were not up-to-date on each measure and presented clinicians with a yellow, highlighted alert showing the date the measure was last satisfied and a direct link to appropriate orders and associated ICD-9 codes (Figure 1). CDS was designed as a passive, noninterruptive alert integrated into provider workflow to minimize interruptions and alert fatigue and promote real-time discussion and ordering of preventive services during the visit.7 CDS prompts included user-friendly exception reporting buttons to electronically capture discrete, clinician-reported data on tests completed elsewhere and patient and medical reasons the recommended action was not appropriate. The CDS intervention was targeted toward attending physicians, who were trained to use CDS through demonstrations at clinical practice meetings and brief, email-delivered tutorials.

Figure 1.

Visit-based, clinical decision support reminder.

Performance Measure Selection and Measurement

The research team focused on 5 common NQF preventive health measures: breast, colorectal, and cervical cancer screening; osteoporosis screening; and pneumococcal vaccination (Table 1).11 The team selected these measures because they are well-established, national primary care performance measures endorsed by the National Quality Forum and utilized by the Centers for Medicare & Medicaid Services and managed care plans to assess ambulatory quality.

Table 1.

Preventive Health Quality Measures and Approaches to Measure Calculations.

| Measure | NQF Approach: Numerator Criteria | NQF Approach: Denominator Criteria | Informed-Clinician Approach: Numerator Criteria | Informed-Clinician Approach: Denominator Criteria | Patient-Centered Approach: Numerator Criteria | Patient-Centered Approach: Denominator Criteria |
|---|---|---|---|---|---|---|
| Breast cancer screening | Mammogram (2 years) | Women (ages 40–69)<sup>a</sup> | (NQF criteria + completed elsewhere) | (NQF criteria − patient reasons − medical reasons) | (NQF criteria + completed elsewhere + patient reason + medical reason) | NQF criteria |
| Colorectal cancer screening | FOBT/FIT (annual); sigmoidoscopy/barium enema (5 years); colonoscopy (10 years) | Ages 50–75<sup>b</sup> | (NQF criteria + completed elsewhere) | (NQF criteria − patient reasons − medical reasons) | (NQF criteria + completed elsewhere + patient reason + medical reason) | NQF criteria |
| Cervical cancer screening | Cervical cytology/Pap test (3 years) | Women (ages 21–64)<sup>c</sup> | (NQF criteria + completed elsewhere) | (NQF criteria − patient reasons − medical reasons) | (NQF criteria + completed elsewhere + patient reason + medical reason) | NQF criteria |
| Osteoporosis screening or therapy | DEXA or medical therapy for osteoporosis | Women (ages 65–85)<sup>d</sup> | (NQF criteria + completed elsewhere) | (NQF criteria − patient reasons − medical reasons) | (NQF criteria + completed elsewhere + patient reason + medical reason) | NQF criteria |
| Pneumococcal vaccination | Pneumococcal vaccine (ever) | Ages ≥65<sup>e</sup> | (NQF criteria + completed elsewhere) | (NQF criteria − patient reasons − medical reasons) | (NQF criteria + completed elsewhere + patient reason + medical reason) | NQF criteria |

Abbreviations: DEXA, dual energy X-ray absorptiometry; FOBT/FIT, fecal occult blood testing/fecal immunochemical testing; NQF, National Quality Forum.

<sup>a</sup>Denominator exclusions for breast cancer screening: bilateral mastectomy or 2 unilateral mastectomies.

<sup>b</sup>Denominator exclusions for colorectal cancer screening: diagnosis of colorectal cancer or total colectomy.

<sup>c</sup>Denominator exclusions for cervical cancer screening: hysterectomy with no residual cervix.

<sup>d</sup>Denominator exclusions for osteoporosis screening: documentation of medical, patient, or system reason for not ordering DEXA or prescribing treatment.

<sup>e</sup>Denominator exclusions for pneumococcal vaccination: does not meet age criteria.
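The lookback windows in Table 1 suggest how a reminder could decide whether a service is up-to-date at a visit. The sketch below is illustrative only and is not the Epic CDS logic used in the study: the service keys, the `LOOKBACK_YEARS` mapping, and both function names are hypothetical, and a window of `None` is an assumed way to encode "ever" (pneumococcal vaccine) or services with no stated interval (DEXA).

```python
from datetime import date

# Lookback windows in years, read from the numerator criteria in Table 1.
# None means any prior completion counts (assumed encoding for "ever").
LOOKBACK_YEARS = {
    "mammogram": 2,
    "fobt_fit": 1,
    "sigmoidoscopy": 5,
    "barium_enema": 5,
    "colonoscopy": 10,
    "pap_test": 3,
    "dexa": None,
    "pneumococcal_vaccine": None,
}


def years_before(d, years):
    """Return the date `years` calendar years before `d`."""
    try:
        return d.replace(year=d.year - years)
    except ValueError:
        # d is Feb 29 and the target year is not a leap year.
        return d.replace(year=d.year - years, day=28)


def is_up_to_date(service, last_done, visit_date):
    """True if the most recent completion falls inside the lookback window."""
    if last_done is None:
        return False
    window = LOOKBACK_YEARS[service]
    if window is None:
        return True
    return last_done >= years_before(visit_date, window)


visit = date(2011, 7, 1)
print(is_up_to_date("colonoscopy", date(2003, 1, 15), visit))  # inside 10-year window
print(is_up_to_date("mammogram", date(2009, 6, 30), visit))    # outside 2-year window
```

For colorectal cancer screening, a reminder built this way would be suppressed if any one of the listed modalities is up-to-date.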

Baseline Performance

Baseline performance was calculated from electronic clinical data using NQF measure specifications.11 Vaccination records, procedure reports, laboratory data, imaging data, and billing codes were electronically abstracted from the EMR to determine the numerator, denominator, and exclusions for each measure. Baseline performance was calculated as follows: (NQF criteria/[NQF measure eligible - NQF specified exclusions]); this was termed the “NQF approach.” Although specifications allow for data identified during manual chart abstraction (both paper and electronic) to satisfy measure criteria, the research team did not include manually abstracted data because the study institution utilizes a comprehensive EMR and relies on electronic reporting.

Outcomes

The research team calculated the proportion of patients satisfying performance measures 12 months after CDS implementation using the NQF approach. To examine the potential impact of care completed elsewhere and patient/medical exceptions to preventive services, the team calculated 2 additional approaches to performance measurement using additional data reported using CDS.

Informed-Clinician Approach to Performance Measurement

The “Informed-Clinician” approach was designed to reflect how clinicians use data in real-world practice to decide if preventive services are indicated. This approach included information known and reported by clinicians through CDS about tests done elsewhere. The informed-clinician approach was defined as follows: ([NQF criteria + completed elsewhere]/[measure eligible − NQF exclusions − patient exceptions − medical exceptions]). Care done elsewhere was ascertained from patients during clinical encounters and recorded by physicians using CDS. The research team did not require documentation of preventive services from outside health systems.

Patient-Centered Approach to Performance Measurement

The “Patient-Centered” approach was designed to credit clinicians for engaging patients in informed conversations about preventive care and customizing delivery of preventive services based on the patient’s medical conditions, preferences, and previously completed services. For example, colorectal cancer screening might not be indicated in a patient with limited life expectancy. This medically appropriate decision is considered a “screening failure” in the NQF approach. However, the Patient-Centered approach would count this as a success in the numerator as follows: ([NQF criteria + completed elsewhere + patient reasons + medical reasons]/[measure eligible − NQF exclusions]).
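The three measurement approaches differ only in where services completed elsewhere and exceptions enter the fraction. The following minimal sketch restates the three formulas side by side. The class and function names are hypothetical, the counts are invented for illustration rather than taken from the study, and the sketch assumes each patient falls into at most one of the counted categories.

```python
from dataclasses import dataclass


@dataclass
class MeasureCounts:
    eligible: int            # measure-eligible patients
    nqf_exclusions: int      # NQF-specified denominator exclusions
    met_nqf: int             # numerator criteria met using local EMR data
    done_elsewhere: int      # clinician-reported services completed elsewhere
    patient_exceptions: int  # clinician-reported patient reasons
    medical_exceptions: int  # clinician-reported medical reasons


def nqf_rate(c):
    return c.met_nqf / (c.eligible - c.nqf_exclusions)


def informed_clinician_rate(c):
    numerator = c.met_nqf + c.done_elsewhere
    denominator = (c.eligible - c.nqf_exclusions
                   - c.patient_exceptions - c.medical_exceptions)
    return numerator / denominator


def patient_centered_rate(c):
    numerator = (c.met_nqf + c.done_elsewhere
                 + c.patient_exceptions + c.medical_exceptions)
    return numerator / (c.eligible - c.nqf_exclusions)


# Invented counts, for illustration only.
example = MeasureCounts(eligible=1050, nqf_exclusions=50, met_nqf=600,
                        done_elsewhere=80, patient_exceptions=10,
                        medical_exceptions=10)
for label, fn in [("NQF", nqf_rate),
                  ("Informed-Clinician", informed_clinician_rate),
                  ("Patient-Centered", patient_centered_rate)]:
    print(f"{label}: {100 * fn(example):.1f}%")
```

With these invented counts, most of the difference between the NQF rate and either alternative comes from the services completed elsewhere, because the exception counts are small relative to the denominator.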

Statistical Analysis

Descriptive statistics including frequency, mean, and cross-tabulations were used to describe patient characteristics at baseline. A χ2 analysis with Bonferroni adjustment was used to compare performance on preventive health quality measures in the 12 months before (June 2010 to June 2011) and 12 months after (July 2011 to July 2012) CDS implementation. Baseline performance was assessed using the NQF approach for all comparisons. In the post-CDS implementation period, χ2 analysis with a Bonferroni adjustment was used to compare the proportion of patients satisfying performance measures with the NQF, Informed-Clinician, and Patient-Centered measurement approaches. Statistical analyses were conducted using SAS version 9.1 (SAS Institute, Inc., Cary, North Carolina). The research team had full access to the data and takes responsibility for its integrity. This study was reviewed by the University of Texas Southwestern Medical Center Institutional Review Board and Quality Office and considered exempt as a quality improvement project.
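To make the χ2 comparison with Bonferroni adjustment concrete, the sketch below computes a Pearson χ2 test for two proportions and then adjusts a set of P values. It is not the SAS code used in the study. It assumes a 2 × 2 table with no continuity correction and positive expected counts in every cell, the function names are hypothetical, and the counts are invented. For 1 degree of freedom, the upper-tail probability of the χ2 distribution equals `erfc(sqrt(x / 2))`, which keeps the example within the standard library.

```python
import math


def chi2_two_proportions(success_a, n_a, success_b, n_b):
    """Pearson chi-square test (1 df, no continuity correction).

    Returns (statistic, p_value) for comparing success_a/n_a with success_b/n_b.
    """
    observed = [
        [success_a, n_a - success_a],
        [success_b, n_b - success_b],
    ]
    row_totals = [n_a, n_b]
    col_totals = [observed[0][j] + observed[1][j] for j in range(2)]
    total = n_a + n_b
    statistic = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / total
            statistic += (observed[i][j] - expected) ** 2 / expected
    # Survival function of the chi-square distribution with 1 df.
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


def bonferroni(p_values):
    """Multiply each P value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Invented counts: 60/100 satisfied after vs 40/100 before.
stat, p = chi2_two_proportions(60, 100, 40, 100)
print(f"chi-square = {stat:.2f}, unadjusted P = {p:.4f}")
print(bonferroni([p, 0.5]))
```

The Bonferroni step is deliberately conservative: with 5 measures compared, an unadjusted P value must fall below .01 to remain below .05 after adjustment.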

Results

At baseline, 10 917 established patients were cared for by the 3 study clinics and 9780 were eligible for at least 1 preventive service. Those eligible for preventive care completed 23 805 office visits during the 12-month study period. One or more CDS reminders were activated in 59% of office visits for a total of 21 757 preventive service reminders. On average, patients were 62 years old, 60% were female, and 62% were non-Hispanic white. Nearly all patients were covered by commercial insurance (53%) or Medicare (44%).

Baseline Performance on Preventive Health Quality Measures

The number of eligible patients and baseline performance according to NQF measure specifications are shown in Table 2. At baseline, local performance exceeded the national average on only 2 of the 5 performance measures targeted by CDS.

Table 2.

Absolute and Relative Change in Performance on Preventive Health Performance Measures After CDS Implementation Using Standard and Alternate Approaches to Measure Calculations.

| Measure | Eligible, n | NQF National Benchmark<sup>a</sup> (%) | Local Performance at Baseline With NQF Approach (%) | Absolute (Relative) Change From Baseline With CDS Reminders (%) | Absolute (Relative) Change From Baseline With Informed-Clinician Approach (%) | Absolute (Relative) Change From Baseline With Patient-Centered Approach (%) |
|---|---|---|---|---|---|---|
| Breast cancer screening | 3699 | 67.2 | 69.2 | 0 (0) | 5.6 (8.1)<sup>b</sup> | 6.1 (8.8)<sup>b</sup> |
| Colorectal cancer screening | 6441 | 49.3 | 53.7 | 4.3 (8.0)<sup>b</sup> | 16.4 (30.5)<sup>b</sup> | 16.7 (31.1)<sup>b</sup> |
| Cervical cancer screening | 3445 | 67.2 | 52.5 | 0.7 (1.3) | 9.2 (17.5)<sup>b</sup> | 10.1 (19.2)<sup>b</sup> |
| Osteoporosis screening | 2868 | 71.0 | 67.1 | 4.7 (7.0)<sup>b</sup> | 8.3 (12.4)<sup>b</sup> | 8.7 (13.1)<sup>b</sup> |
| Pneumococcal vaccination | 4876 | 69.5 | 67.3 | 6.1 (9.1)<sup>b</sup> | 14.2 (21.1)<sup>b</sup> | 14.5 (21.5)<sup>b</sup> |

Abbreviations: CDS, clinical decision support; NQF, National Quality Forum.

<sup>a</sup>National benchmarks defined by 2010 Medicare (breast and colorectal cancer screening, osteoporosis screening, and pneumococcal vaccination) and Medicaid (cervical cancer screening) data.34

<sup>b</sup>P < .001 for comparison with local performance at baseline using the NQF approach.
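Table 2 reports each change both as an absolute difference in percentage points and as a relative change, which is the absolute change divided by baseline performance. The short sketch below recomputes the relative changes for the Informed-Clinician column from the published baseline and absolute values. The function name is hypothetical, and because the table entries are already rounded, recomputing other cells this way may differ from the published figure by 0.1 in places.

```python
def relative_change(baseline_pct, absolute_change_pts):
    """Relative change (%) = absolute change / baseline, rounded to 1 decimal."""
    return round(100 * absolute_change_pts / baseline_pct, 1)


# (baseline %, absolute change with the Informed-Clinician approach), from Table 2.
informed_clinician = {
    "Breast cancer screening": (69.2, 5.6),
    "Colorectal cancer screening": (53.7, 16.4),
    "Cervical cancer screening": (52.5, 9.2),
    "Osteoporosis screening": (67.1, 8.3),
    "Pneumococcal vaccination": (67.3, 14.2),
}

recomputed = {
    measure: relative_change(baseline, change)
    for measure, (baseline, change) in informed_clinician.items()
}
for measure, value in recomputed.items():
    print(f"{measure}: {value}%")
```

The recomputed values match the Informed-Clinician column of Table 2 (8.1, 30.5, 17.5, 12.4, and 21.1).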

Performance on Preventive Health Quality Measures 12 Months After CDS Implementation

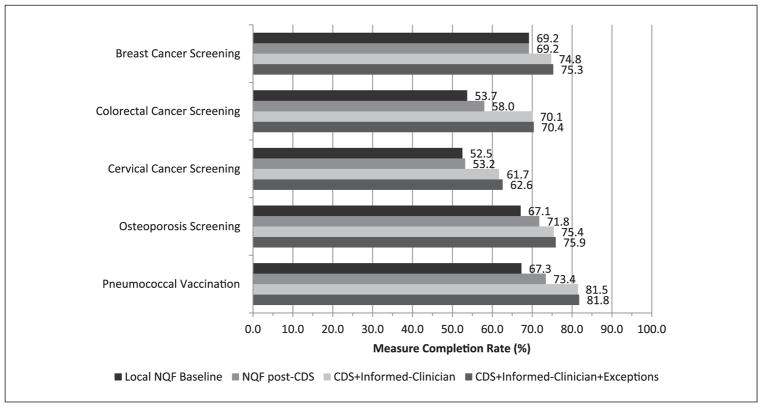

The reminder component of CDS improved the percentage of patients completing colorectal cancer screening, pneumococcal vaccination, and osteoporosis screening (P < .001 for all) using NQF specifications. However, reminders alone did not increase rates of breast cancer screening or cervical cancer screening (Figure 2).

Figure 2.

Absolute performance on preventive health quality measures using different approaches to measure calculation. CDS, clinical decision support; NQF, National Quality Forum.

Frequency of Preventive Services Done Elsewhere and Patient and Medical Exceptions Prompted by CDS

Using provider-reported data captured by CDS, the frequency of patients completing preventive health services in outside health care settings ranged from 2% to 9% (Table 3). Colorectal cancer screening was most frequently completed elsewhere followed by cervical cancer screening, pneumococcal vaccination, and breast cancer screening. Clinicians rarely recorded patient or medical reasons for not completing indicated preventive services. Only a small percentage of patients eligible for cervical cancer screening, breast cancer screening, colorectal cancer screening, and pneumococcal vaccination had exceptions reported (Table 3).

Table 3.

Frequency of Preventive Health Services Completed Elsewhere and Patient/Medical Reasons for Not Completing Recommended Services.

| Measure | Eligible, n | Completed Elsewhere, n (%) | Patient or Medical Exception, n (%) |
|---|---|---|---|
| Breast cancer screening | 3699 | 155 (4.2) | 70 (1.9) |
| Colorectal cancer screening | 6441 | 605 (9.4) | 45 (0.7) |
| Cervical cancer screening | 3445 | 241 (7.0) | 83 (2.4) |
| Osteoporosis screening | 2868 | 57 (2.0) | 49 (1.7) |
| Pneumococcal vaccination | 4876 | 332 (6.8) | 78 (1.6) |

Impact of Accounting for Care Done Elsewhere and Patient and Medical Exceptions to Preventive Care on Performance Measurement

When preventive services completed in outside health systems were included in performance calculations (Informed-Clinician approach), significant absolute improvements ranging from 5.6% to 16.4% were seen in all 5 measures (P < .001 for all; Table 2; Figure 2). Corresponding relative improvements in care ranged from 8.1% to 30.5% (P < .001 for all; Table 2). Similarly, the Patient-Centered approach, which credits patient exceptions, medical exceptions, and services completed elsewhere to the numerator, demonstrated significant absolute and relative improvements in all 5 performance measures (P < .001 for all; Figure 2). However, the incremental gains of accounting for patient and medical exceptions in the numerator were small compared with those resulting from including preventive services completed in outside health systems (Table 2).

Cross-sectional comparison of the different approaches to performance measurement in the post-implementation period demonstrated significant improvements in performance when preventive services completed elsewhere were included in measures. The Informed-Clinician approach yielded significantly better performance than the NQF approach on all measures (breast cancer screening +5.6%, P < .001; pneumococcal vaccination +8.1%, P < .001; colorectal cancer screening +12.1%, P < .001; osteoporosis screening +3.6%, P = .014; and cervical cancer screening +8.5%, P < .001). Similarly, performance was better with the Patient-Centered approach than the NQF approach for breast cancer screening, pneumococcal vaccination, colorectal cancer screening, cervical cancer screening (P < .001 for all), and osteoporosis screening (P = .003). However, there were no differences between the Informed-Clinician approach and the Patient-Centered approach, largely because of the very low rates of medical and patient exceptions reported (P = 1.0 for all; Figure 2).
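The cross-sectional differences quoted above follow directly from Table 2: because both approaches are compared against the same baseline, the post-implementation gap between them equals the difference between their absolute changes from baseline. The sketch below performs that subtraction on the published values; the variable names are illustrative only.

```python
# Absolute change from baseline (percentage points) after CDS, from Table 2.
nqf_change = {
    "breast": 0.0,
    "colorectal": 4.3,
    "cervical": 0.7,
    "osteoporosis": 4.7,
    "pneumococcal": 6.1,
}
informed_change = {
    "breast": 5.6,
    "colorectal": 16.4,
    "cervical": 9.2,
    "osteoporosis": 8.3,
    "pneumococcal": 14.2,
}

# Post-implementation gap between the Informed-Clinician and NQF approaches.
gap = {
    measure: round(informed_change[measure] - nqf_change[measure], 1)
    for measure in nqf_change
}
for measure, points in gap.items():
    print(f"{measure}: +{points} percentage points")
```

The results (5.6, 12.1, 8.5, 3.6, and 8.1 percentage points) agree with the differences reported in the text.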

Discussion

This study examined the impact of CDS reminders with customized features to capture clinician-reported data on the quality of preventive care in 3 large academic primary care practices. The study found that a passive, visit-based CDS reminder alone improved performance on 3 of 5 preventive health quality measures using NQF criteria. CDS customization can prompt clinicians to report information not available in the local EMR. Including preventive services completed elsewhere that were reported through CDS markedly improved performance on all 5 measures. Patient and medical exceptions to preventive services were infrequent and including them in calculations did not significantly change performance. Preventive health quality measures should account for services completed in outside health systems to accurately measure performance and reflect real-world practice.

Overall improvement with visit-based CDS reminders alone was modest, with absolute improvements ranging from 0% to 6.1% using NQF measure calculations. However, these improvements were large enough for the study practice to go from meeting benchmarks on 2 measures at baseline (breast and colorectal cancer screening) to 3 measures (breast cancer screening, colorectal cancer screening, and pneumococcal vaccination) after CDS implementation. When preventive services completed elsewhere were included in metrics (Informed-Clinician approach), the largest improvements in performance were observed, with absolute improvements ranging from 5.6% to 16.4%, and performance exceeded NQF benchmarks on 4 of the 5 measures examined.

In spite of substantial efforts to improve the quality of outpatient care, improvements have been modest and care deficits persist.12 Previous studies examining visit-based CDS reminders to improve preventive health care have shown mixed results.13,14 The present study results using CDS reminders alone (NQF approach) are similar to those observed in other studies using reminder-based interventions.15,16 The change in performance observed in the present study when care delivered elsewhere and patient/medical exceptions were included in measure calculations was greater than that observed in a similarly designed CDS study.7 However, baseline performance in the present study was lower, providing greater opportunity for improvement.

Current performance measurement strategies do not consistently account for the fragmentation of health care delivery and are not designed to include preventive services completed elsewhere in measure calculations.6 When patients receive care from multiple providers in different health care systems with different EMRs,17 data needed to calculate measures are frequently missing. As a result, performance is underestimated and open health care systems appear systematically worse than closed systems. Given the one-time or infrequent nature of many preventive health services, even all-payer, all-site claims data will underestimate the true rate of preventive service delivery.18 As a consequence, the NQF preventive measures may “miss” up to 10% of preventive care that is in reality up-to-date. In community settings, the “miss” rate could be even greater than that observed in the present study, which was conducted at an academic center with comprehensive preventive screenings available and captured in the same EMR. In this study, performance on 2 measures (breast and cervical cancer screening) improved only after accounting for services completed elsewhere. Although providers could obtain outside records, the logistical barriers are overwhelming given that primary care providers coordinate care with up to 99 physicians and 53 practices for every 100 Medicare beneficiaries.19 Thus, the current NQF measurement approach may penalize providers for underperformance or incentivize them to “choose unwisely” and reorder unnecessary screening tests or vaccinations to close perceived quality gaps.

Although ACOs are designed to share potential savings resulting from improved quality of care, mechanisms to promote data capture and sharing across institutions are limited and may limit the actualization of shared savings.20,21 Innovative, automated approaches to data capture and exchange across diverse health care systems and EMRs are needed for more accurate calculation of ambulatory quality.22,23 The present study’s visit-based CDS reminders were designed to help bridge this gap by engaging clinicians and patients in an informed discussion about guideline-indicated services and capture exceptions.24,25 The addition of user-friendly “done elsewhere” or “not done patient/medical reasons” exception reporting buttons to CDS reminders is a promising, pragmatic approach to capturing key data not readily available in the EMR from the clinicians who know the patient best.

From a policy perspective, as health information exchanges mature and data sharing across EMRs becomes more feasible, quality measures should more closely approximate the Informed-Clinician perspective that this study has shown yields superior performance. This will become even more important in the new MACRA era where providers have much to gain and lose financially based on their performance on preventive service delivery measures.

Although patient and medical exceptions to preventive health services are perceived to be common in clinical practice, they were uncommon in this study and did not affect performance significantly. Patient refusal rates in this study were lower than those reported in other studies for pneumococcal vaccination26,27 and colorectal cancer screening28,29 and may underestimate the true frequency. However, they approximate those reported in a similarly designed CDS reminder study.7,30 Although the impact of these exceptions was small, the research team believes that factoring patient preferences into quality assessments is important from a shared decision-making perspective because it reflects the realities of clinical practice and may bolster clinician engagement with CDS and quality improvement initiatives.28,31,32

A few study strengths are worth noting. First, the passive EMR reminders utilized in this study incorporated key features of successful CDS interventions.7,14 Second, this study included nearly 10 000 patients in 3 different practices within an open health care system that utilizes the most commonly used commercial EMR. Third, the CDS harvested important data from clinicians that are not readily available in the EMR or captured in standard quality measures.

However, this study is not without limitations. First, the findings may have been influenced by underlying secular trends. Second, the study was conducted in 3 academic, Level 3 PCMHs with well-established EMRs, which may limit generalizability. However, baseline performance was similar to the national average on most measures. Third, preventive services completed elsewhere and medical/patient exceptions were reported by patients and documented by clinicians during routine clinical care but were not validated by chart review. However, physician-reported exceptions to quality measures are appropriate more than 90% of the time.30,33 Last, because the CDS was passive and noninterruptive, the research team was unable to determine the frequency with which clinicians interacted with CDS to order preventive services versus used the CDS as a “reminder” to order services outside of CDS workflows.

Conclusions

Visit-based, EMR-enabled CDS can improve performance on preventive service quality measures through several mechanisms. Beyond simple reminder functions, CDS that prompts the recording (in structured fields) of care completed elsewhere and allows inclusion of these data in performance measures can markedly improve performance. Developing better systems to capture and track patient-specific receipt of preventive health services delivered anywhere and over time will be critical to optimizing performance measurement and reducing unnecessary duplication of care. Improvements in EMR interoperability and/or the emergence of functional health information exchanges would be positive developments toward these goals.

Acknowledgments

We would like to thank David Baker, MD, MPH, Kim Batchelor, MPH, Heather Schneider, Darren Kaiser, MS, and Jason Thompson. We also greatly appreciate the support of the UT Southwestern Information Resources team and all the physicians in the 3 participating practices.

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This study was supported by a grant from the University of Texas Health System Chancellor’s Health Fellow for Information Technology initiative. Additional resources were provided by the UT Southwestern Center for Patient-Centered Outcomes Research (AHRQ R24 HS022418) and the Dedman Family Scholars in Clinical Care. Dr Bowen was supported by the National Center for Advancing Translational Sciences of the NIH (KL2TR001103) and the NIH/NIDDK K23 DK104065. Dr Halm was supported in part by AHRQ R24 HS022418.

Footnotes

Preliminary data from this study were presented at the 2013 Society of General Internal Medicine National Meeting (Denver, Colorado).

Declaration of Conflicting Interests

The authors declared the following potential conflicts of interest with respect to the research, authorship, and/or publication of this article: Dr Bowen and other authors do not have any conflicts of interest. Dr Persell has received support for unrelated research from Pfizer, Inc. and Omron Healthcare, Inc.

References

- 1.Schneider EC, Riehl V, Courte-Wienecke S, Eddy DM, Sennett C. Enhancing performance measurement: NCQA’s road map for a health information framework. National Committee for Quality Assurance. JAMA. 1999;282:1184–1190. doi: 10.1001/jama.282.12.1184. [DOI] [PubMed] [Google Scholar]

- 2.Centers for Medicare & Medicaid Services. [Accessed September 20 2016];MACRA: Delivery System Reform, Medicare Payment Reform. https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/Value-Based-Programs/MACRA-MIPS-and-APMs/MACRA-MIPS-and-APMs.html.

- 3.Anderson KM, Marsh CA, Flemming AC, Isenstein H, Reynolds J. Quality Measurement Enabled by Health IT: Overview, Possibilities, Challenges. Rockville, MD: Agency for Healthcare Research and Quality; 2012. (AHRQ Publication No. 12-0061-EF) [Google Scholar]

- 4.Tang PC, Ralston M, Arrigotti MF, Qureshi L, Graham J. Comparison of methodologies for calculating quality measures based on administrative data versus clinical data from an electronic health record system: implications for performance measures. J Am Med Inform Assoc. 2007;14:10–15. doi: 10.1197/jamia.M2198. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Schneider EC, Nadel MR, Zaslavsky AM, McGlynn EA. Assessment of the scientific soundness of clinical performance measures: a field test of the National Committee for Quality Assurance’s colorectal cancer screening measure. Arch Intern Med. 2008;168:876–882. doi: 10.1001/archinte.168.8.876. [DOI] [PubMed] [Google Scholar]

- 6.American College of Physicians. [Accessed October 26, 2016];EHR-Based Quality Measurement & Reporting: Critical for Meaningful Use and Health Care Improvement. https://www.acponline.org/advocacy/where-we-stand/health-information-technology.

- 7.Persell SD, Kaiser D, Dolan NC, et al. Changes in performance after implementation of a multifaceted electronic-health-record-based quality improvement system. Med Care. 2011;49:117–125. doi: 10.1097/MLR.0b013e318202913d. [DOI] [PubMed] [Google Scholar]

- 8. Berner E. Clinical Decision Support Systems: State of the Art. Rockville, MD: Agency for Healthcare Research and Quality; 2009. (AHRQ Publication No. 09-0069-EF)

- 9. Baker DW, Persell SD, Thompson JA, et al. Automated review of electronic health records to assess quality of care for outpatients with heart failure. Ann Intern Med. 2007;146:270–277. doi: 10.7326/0003-4819-146-4-200702200-00006.

- 10. Persell SD, Wright JM, Thompson JA, Kmetik KS, Baker DW. Assessing the validity of national quality measures for coronary artery disease using an electronic health record. Arch Intern Med. 2006;166:2272–2277. doi: 10.1001/archinte.166.20.2272.

- 11. The National Committee for Quality Assurance. HEDIS and performance measurement. http://www.ncqa.org/HEDISQualityMeasurement/HEDISMeasures/HEDISArchives.aspx. Accessed March 1, 2016.

- 12. Levine DM, Linder JA, Landon BE. The quality of outpatient care delivered to adults in the United States, 2002 to 2013. JAMA Intern Med. 2016;176:1778–1790. doi: 10.1001/jamainternmed.2016.6217.

- 13. Garg AX, Adhikari NK, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA. 2005;293:1223–1238. doi: 10.1001/jama.293.10.1223.

- 14. Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005;330:765. doi: 10.1136/bmj.38398.500764.8F.

- 15. Baron RC, Melillo S, Rimer BK, et al. Intervention to increase recommendation and delivery of screening for breast, cervical, and colorectal cancers by healthcare providers: a systematic review of provider reminders. Am J Prev Med. 2010;38:110–117. doi: 10.1016/j.amepre.2009.09.031.

- 16. Holt TA, Thorogood M, Griffiths F. Changing clinical practice through patient specific reminders available at the time of the clinical encounter: systematic review and meta-analysis. J Gen Intern Med. 2012;27:974–984. doi: 10.1007/s11606-012-2025-5.

- 17. Pham HH, Schrag D, O’Malley AS, Wu B, Bach PB. Care patterns in Medicare and their implications for pay for performance. N Engl J Med. 2007;356:1130–1139. doi: 10.1056/NEJMsa063979.

- 18. Freeman JL, Klabunde CN, Schussler N, Warren JL, Virnig BA, Cooper GS. Measuring breast, colorectal, and prostate cancer screening with Medicare claims data. Med Care. 2002;40(8 suppl):IV-36–42. doi: 10.1097/00005650-200208001-00005.

- 19. Pham HH, O’Malley AS, Bach PB, Saiontz-Martinez C, Schrag D. Primary care physicians’ links to other physicians through Medicare patients: the scope of care coordination. Ann Intern Med. 2009;150:236–242. doi: 10.7326/0003-4819-150-4-200902170-00004.

- 20. Singer S, Shortell SM. Implementing accountable care organizations: ten potential mistakes and how to learn from them. JAMA. 2011;306:758–759. doi: 10.1001/jama.2011.1180.

- 21. Shortell SM, Casalino LP, Fisher ES. How the Center for Medicare and Medicaid Innovation should test accountable care organizations. Health Aff (Millwood). 2010;29:1293–1298. doi: 10.1377/hlthaff.2010.0453.

- 22. Roski J, McClellan M. Measuring health care performance now, not tomorrow: essential steps to support effective health reform. Health Aff (Millwood). 2011;30:682–689. doi: 10.1377/hlthaff.2011.0137.

- 23. Pronovost PJ, Lilford R. Analysis & commentary: a road map for improving the performance of performance measures. Health Aff (Millwood). 2011;30:569–573. doi: 10.1377/hlthaff.2011.0049.

- 24. Schroy PC, Emmons KM, Peters E, et al. Aid-assisted decision making and colorectal cancer screening: a randomized controlled trial. Am J Prev Med. 2012;43:573–583. doi: 10.1016/j.amepre.2012.08.018.

- 25. Rimer BK, Briss PA, Zeller PK, Chan EC, Woolf SH. Informed decision making: what is its role in cancer screening? Cancer. 2004;101(5 suppl):1214–1228. doi: 10.1002/cncr.20512.

- 26. Miller LS, Kourbatova EV, Goodman S, Ray SM. Brief report: risk factors for pneumococcal vaccine refusal in adults: a case control study. J Gen Intern Med. 2005;20:650–652. doi: 10.1111/j.1525-1497.2005.0118.x.

- 27. Jacobson TA, Thomas DM, Morton FJ, Offutt G, Shevlin J, Ray S. Use of a low-literacy patient education tool to enhance pneumococcal vaccination rates: a randomized controlled trial. JAMA. 1999;282:646–650. doi: 10.1001/jama.282.7.646.

- 28. Walter LC, Davidowitz NP, Heineken PA, Covinsky KE. Pitfalls of converting practice guidelines into quality measures: lessons learned from a VA performance measure. JAMA. 2004;291:2466–2470. doi: 10.1001/jama.291.20.2466.

- 29. Denny JC, Choma NN, Peterson JF, et al. Natural language processing improves identification of colorectal cancer testing in the electronic medical record. Med Decis Making. 2012;32:188–197. doi: 10.1177/0272989X11400418.

- 30. Persell SD, Dolan NC, Friesema EM, Thompson JA, Kaiser D, Baker DW. Frequency of inappropriate medical exceptions to quality measures. Ann Intern Med. 2010;152:225–231. doi: 10.7326/0003-4819-152-4-201002160-00007.

- 31. Sox HC, Greenfield S. Quality of care: how good is good enough? JAMA. 2010;303:2403–2404. doi: 10.1001/jama.2010.810.

- 32. Hofer TP, Hayward RA, Greenfield S, Wagner EH, Kaplan SH, Manning WG. The unreliability of individual physician “report cards” for assessing the costs and quality of care of a chronic disease. JAMA. 1999;281:2098–2105. doi: 10.1001/jama.281.22.2098.

- 33. Kmetik KS, O’Toole MF, Bossley H, et al. Exceptions to outpatient quality measures for coronary artery disease in electronic health records. Ann Intern Med. 2011;154:227–234. doi: 10.7326/0003-4819-154-4-201102150-00003.

- 34. National Committee for Quality Assurance. Improving quality and patient experience: the state of health care quality. 2013. https://www.ncqa.org/Portals/0/Newsroom/SOHC/2013/SOHC-web_version_report.pdf. Accessed October 26, 2016.