Abstract

Background

The estimation of an effect size is an important step in designing an adequately powered, feasible clinical trial intended to change clinical practice. During the planning phase of VA Cooperative Study #590, “Double-Blind Placebo-Controlled Study of Lithium for Preventing Repeated Suicidal Self-Directed Violence in Patients with Depression or Bipolar Disorder (Li+),” it was not clear what effect size would be considered large enough to influence prescribing behavior among practicing clinicians.

Methods

We conducted an online survey of VA psychiatrists to assess their interest in the study question, their clinical experience with lithium, and their opinion about what suicide reduction rate would change their prescribing habits. The 9-item survey was hosted on SurveyMonkey© and VA psychiatrists were individually emailed an invitation to complete an anonymous online survey. Three email waves were sent over three weeks.

Results

Overall, 862 of 2713 VA psychiatrists (response rate = 31.8%) responded to the anonymous survey. Of the respondents, 74% would refer a patient to the proposed trial, 9% would not, and 17% were unsure. Respondents were presented with suicide reduction rates in 10% increments from 10 to 100%. Among those who chose a numerical threshold, 61% indicated that they would use lithium if suicide attempts were reduced by at least 40%, and 83% would use lithium if it reduced attempts by at least 50%.

Conclusions

Despite the limitations of potential response bias and the uncertain reliability of self-reported future prescribing behavior, a survey of potential users of a clinical trial's results offers a convenient, empirical method for determining and justifying clinically relevant effect sizes.

Keywords: Controlled clinical trial, Effect size, Clinical survey, Clinicians' opinions, Sample size estimation

1. Introduction

Randomized controlled trials face numerous obstacles to successful completion [1], [2]. Thoughtful pre-trial planning is necessary to determine feasibility and to balance rigor, relevance, and available resources. The estimation of an effect size is an important step in designing an adequately powered, feasible clinical trial that has the potential to change clinical practice and to improve patient outcomes.

The Veterans Affairs Cooperative Studies Program (CSP) has unique characteristics that make investigator-initiated multicenter studies possible: an integrated healthcare system, a large and stable patient population, national databases, the oldest functioning universal electronic medical record, core infrastructure with statistical, epidemiological, pharmaceutical, and health economics expertise, and resources for planning studies [3]. CSP #590, “Double-Blind Placebo-Controlled Study of Lithium for Preventing Repeated Suicidal Self-Directed Violence in Patients with Depression or Bipolar Disorder (Li+),” is a multicenter study that has since been funded by the CSP. During its planning phase, experts and outside consultants disagreed about whether the proposed study had sufficient support in the field. In addition, it was not clear what effect size would be considered large enough to influence prescribing behavior among practicing clinicians. In response, we designed a provider survey to gather input on these questions from as many potential users of the trial results as possible. CSP #590 would be the first adequately powered randomized clinical trial to evaluate the effectiveness of lithium in preventing episodes of suicidal self-directed violence.

The survey had three primary goals. The first was to understand current experience and practice with regard to lithium. The second was to determine whether psychiatrists would support randomizing their patients to lithium or placebo and would endorse the study design. The third was to determine what effect size would be considered large enough to influence prescribing behavior among practicing psychiatrists.

2. Methods

2.1. Overview of survey design

The study team developed a nine-item survey to address the three goals outlined above (see Appendix A for survey questions). Before conducting the survey, we consulted extensively with our Quality Assurance department and the Institutional Review Board (IRB) at VA Boston Healthcare System. We were advised that IRB approval was not necessary because the survey was a preparatory-to-research activity intended to facilitate the planning of a study. After completing the survey, we realized that the methods and results might be of interest to the scientific community, and we again sought guidance from the local VA IRB. The VA Boston Healthcare System IRB Committee certified that the provider survey met all published guidelines for “exempt research” as defined in 38 CFR §16.101 and Veterans Health Administration (VHA) Handbook 1200.05 [4], [5]. The VA Boston Healthcare System Research and Development Committee subsequently approved this research study (i.e., publication of the survey results).

The survey targeted the entire population of VA psychiatrists. Specifically, we used an informatics-based approach to identify all actively prescribing psychiatrists in the VA system. Information from several VHA databases was merged and compiled to assemble an email distribution list of all survey recipients. Prescribing psychiatrists were surveyed at approximately 129 VA medical centers and VA healthcare systems across the 50 U.S. states and Puerto Rico. The final survey was hosted on SurveyMonkey© and took approximately 5 minutes to complete. The CSP Study Director (MHL) emailed 2713 individual VA psychiatrists, inviting them to complete an anonymous online survey that would help with the planning of a VA Cooperative Study. The survey was conducted between April 16 and May 16, 2012. After the initial email, two reminder emails were sent to encourage individuals to complete the survey (see Appendix A for the invitation letter). This yielded three waves of data collection, 9 to 14 days apart.

2.2. Identifying study population and creating the distribution list

Assembling 2713 active VA email addresses was the most labor-intensive and time-consuming part of implementing the survey. In brief, the VHA Outpatient Encounter file was used to identify all physicians who completed encounter forms for outpatient visits to VA Mental Health clinics during Fiscal Year (FY) 2011. Next, this list was narrowed to the most relevant Provider Type in the database: Allopathic and Osteopathic Physicians; Psychiatry and Neurology; Psychiatry providers. Scrambled Social Security Numbers (SSNs), VA station codes, and names of the selected providers were retrieved from the Decision Support System (DSS) Providers file. This list of providers was then merged with the Staff Table of the Corporate Data Warehouse (CDW) database. The automated Lightweight Directory Access Protocol (LDAP) lookup system was then used to retrieve email addresses for all providers.

Finally, duplicate records were manually reviewed. Records with missing or obviously incorrect position titles (e.g., incorrect provider type, non-MDs) and/or non-VA email addresses were excluded from the final list. Psychiatry residents and fellows were included. The total number of psychiatry providers identified from the VHA databases was validated against the number identified by the VA Office of Mental Health. The resulting list was placed in the blind carbon copy (BCC) field of the invitation email. Each individual therefore received a personal letter without seeing the other recipients' addresses.
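The filtering and deduplication steps described above can be sketched in code. Much of the study team's actual procedure was manual or used VHA-internal tools, so this minimal Python illustration shows only the filtering logic, under stated assumptions. The `Provider` record layout, the `"Psychiatry"` provider-type label, the `"@va.gov"` domain check, and the function name `build_distribution_list` are all hypothetical. The input IDs are also assumed to be already restricted to FY2011 mental health clinic encounters.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional

# Hypothetical record layout; the real VHA encounter, DSS, and CDW tables
# use different field names and many more columns.
@dataclass(frozen=True)
class Provider:
    provider_id: str              # e.g. a scrambled identifier
    provider_type: str
    position_title: Optional[str]

PSYCHIATRY_TYPE = "Psychiatry"    # assumed label for the target provider type
VA_DOMAIN = "@va.gov"             # assumed institutional email domain


def build_distribution_list(
    encounter_provider_ids: Iterable[str],
    providers: Dict[str, Provider],
    emails: Dict[str, str],
) -> List[str]:
    """Return unique, validated email addresses for the target providers."""
    seen = set()
    result = []
    for pid in encounter_provider_ids:
        provider = providers.get(pid)
        # Keep only providers of the target type.
        if provider is None or provider.provider_type != PSYCHIATRY_TYPE:
            continue
        # Drop records with a missing (empty) position title.
        if not provider.position_title:
            continue
        email = emails.get(pid, "").strip().lower()
        # Drop missing and non-institutional addresses.
        if not email.endswith(VA_DOMAIN):
            continue
        # Deduplicate: one invitation per address, first occurrence kept.
        if email in seen:
            continue
        seen.add(email)
        result.append(email)
    return result
```

Keeping only the first occurrence of each address reflects the goal of sending one invitation per psychiatrist. Records flagged by rules like these would still merit the manual review described above.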

2.3. Data collection

The final survey was hosted on SurveyMonkey© (https://www.surveymonkey.com/). This platform records all survey responses as well as the precise time (EST) at which each survey was completed. SurveyMonkey© can analyze data online in real time as responses accumulate. The data file can also be exported for analysis in any statistical software.

3. Results

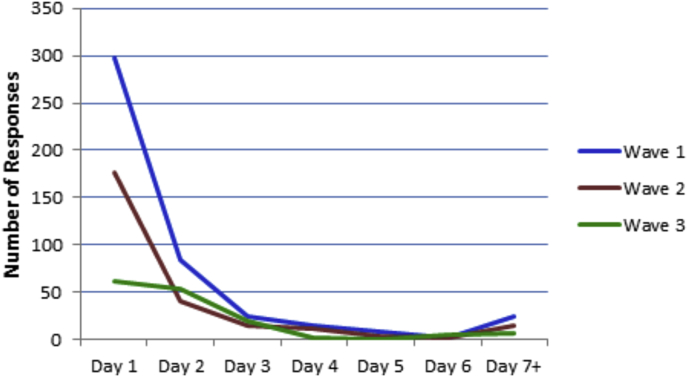

A total of 2713 email invitation letters were sent, and online survey responses were received from 862 psychiatrists at VA medical centers across the United States. After three waves, each 9 to 14 days apart, the overall response rate was 31.8%. Among respondents, item response rates ranged from 98.0 to 99.9%, with one exception: only 34.3% of respondents provided their three-digit VA station code. Fig. 1 shows the frequency of survey responses over time. The largest number of responses occurred on the first day the survey opened (n = 297). Responses on the first day of the first reminder (Wave 2, Day 1) were also strong (n = 177). However, by the second reminder (Wave 3, Day 1), responses had tapered off considerably (n = 61).

Fig. 1.

Provider survey response rate over time.
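The response-rate arithmetic above can be reproduced in a few lines of Python. This sketch uses only the totals and first-day counts reported in the text. The helper name `response_rate` is illustrative.

```python
def response_rate(responses: int, invited: int) -> float:
    """Percentage of invited individuals who responded."""
    if invited <= 0:
        raise ValueError("invited must be a positive count")
    return 100 * responses / invited


INVITED = 2713   # individual email invitations sent
RESPONDED = 862  # completed surveys received

# Responses on the first day of each wave, as reported in the text.
FIRST_DAY_BY_WAVE = {1: 297, 2: 177, 3: 61}

# prints: Overall response rate: 31.8%
print(f"Overall response rate: {response_rate(RESPONDED, INVITED):.1f}%")
for wave, n in FIRST_DAY_BY_WAVE.items():
    # Share of all responses that arrived on the first day of this wave.
    print(f"Wave {wave}, day 1: {n} responses ({100 * n / RESPONDED:.1f}% of total)")
```

The three first-day counts together make up more than 60% of all responses (535 of 862). This is consistent with the diminishing returns from later reminders.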

The first goal of the survey was to understand current experience and practice with respect to lithium use (see Appendix A, Questions 2 and 3). As shown in Table 1, 93.3% of respondents currently prescribed lithium for patients with bipolar disorder. Another 72.7% prescribed it for patients with major depression who had not responded to antidepressants. Among respondents who reported currently prescribing lithium for bipolar disorder, approximately three-quarters (73.9%) prescribed it for 10 to 50% of their patients with bipolar disorder. Among respondents who reported currently prescribing lithium for major depression, the majority (60.3%) did so only rarely (i.e., for <10% of patients with major depression who had not responded to antidepressants).

Table 1.

VA psychiatrists' report of lithium use for the treatment of bipolar disorder and treatment-resistant major depression.

| Response options | Prescribe lithium for bipolar disorder, n (%) | Prescribe lithium for treatment-resistant major depression, n (%) |
|---|---|---|
| Yes | 804 (93.3%)ᵃ | 625 (72.7%)ᶜ |
| Rarely (<10% of patients) | 115 (14.3%)ᵇ | 377 (60.3%)ᵈ |
| Occasionally (10–25% of patients) | 309 (38.4%)ᵇ | 215 (34.4%)ᵈ |
| Fairly often (25–50% of patients) | 285 (35.4%)ᵇ | 31 (5.0%)ᵈ |
| Very often (>50% of patients) | 95 (11.8%)ᵇ | 2 (0.3%)ᵈ |

Note: Superscripts denote the number of respondents used as the denominator for each percentage: ᵃ = 862, ᵇ = 804, ᶜ = 860, ᵈ = 625.
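Table 1 mixes two denominators, and a small check makes the note concrete. The Python sketch below recomputes each column from the counts in the table. The "Yes" row is divided by everyone who answered the item, and the frequency rows are divided by prescribers only. The function name `column_percentages` is illustrative.

```python
from typing import Dict


def column_percentages(yes: int, item_total: int,
                       frequencies: Dict[str, int]) -> Dict[str, float]:
    """Percentages for one Table 1 column, rounded to one decimal.

    'Yes' is divided by all respondents to the item; the frequency rows
    are divided by prescribers only (those who answered 'Yes').
    """
    if sum(frequencies.values()) != yes:
        raise ValueError("frequency counts must sum to the number of prescribers")
    result = {"Yes": round(100 * yes / item_total, 1)}
    for label, n in frequencies.items():
        result[label] = round(100 * n / yes, 1)
    return result


bipolar = column_percentages(
    804, 862, {"Rarely": 115, "Occasionally": 309, "Fairly often": 285, "Very often": 95})
depression = column_percentages(
    625, 860, {"Rarely": 377, "Occasionally": 215, "Fairly often": 31, "Very often": 2})
print(bipolar)
print(depression)

# Share of bipolar prescribers using lithium in 10-50% of patients.
print(round(100 * (309 + 285) / 804, 1))
```

The consistency check (frequency counts summing to the "Yes" count) holds for both columns, which confirms that the frequency rows describe prescribers only.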

The second goal of the survey was to determine whether VA psychiatrists would be willing to refer their patients to the proposed study and would endorse the study design (see Appendix A, Question 6). Respondents were given the major assumptions of the study design and asked: “Would you refer such a patient to a randomized, double-blind, placebo-controlled study of adjunctive lithium treatment for suicide prevention? (Lithium will be carefully monitored).” In response, 74.2% answered “Yes”, 16.8% were unsure, and 8.9% answered “No”.

The third goal was to determine what effect size would be considered large enough to influence prescribing behavior among practicing psychiatrists (see Appendix A, Question 7). Respondents were asked, “What overall percentage of reduction in suicide attempts would convince you to prescribe lithium for your own patient after a recent attempt?” Options from 10 to 100% were given in 10% increments. Fig. 2a displays the distribution of raw responses to the effect size question. The option “need more information” was selected by 18.5% of respondents. Another 17.8% said they would be convinced to prescribe lithium for suicide prevention if a randomized controlled trial found a 50% reduction in suicide reattempts. A further 17.4% endorsed a 30% reduction. Among those who selected a numerical response (n = 690), the largest share (21.9%) selected a 50% reduction, 21.3% selected a 30% reduction, and 19.6% selected a 20% reduction. Fig. 2b shows the cumulative response percent for the numerical response options in ascending order from 10 to 100%. Taken together, among respondents who selected a numerical threshold, a majority (61%) would prescribe lithium if it reduced suicide attempts by at least 40%. An even greater majority (83%) would do so if it reduced suicide attempts by at least 50%.

Fig. 2.

VA psychiatrists' opinions about the reduction in suicide reattempts that would be needed to change prescribing practice: (a) distribution of raw response percent; (b) cumulative distribution of response percent.
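The cumulative curve in Fig. 2b follows from a simple rule. A respondent whose threshold is t would be convinced by any observed reduction of t or more, so the share convinced by a reduction r is the share of numerical responses with t ≤ r. The Python sketch below implements that rule. The counts in the usage example are made-up toy numbers for illustration, not the survey data. "Need more information" responses are assumed to have been removed beforehand, matching the numerical-response subset used for Fig. 2b. The function names are illustrative.

```python
from itertools import accumulate
from typing import Dict, Optional


def cumulative_percent(counts: Dict[int, int]) -> Dict[int, float]:
    """Map each threshold (percent reduction) to the cumulative percentage
    of respondents whose threshold is at or below it."""
    thresholds = sorted(counts)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no numerical responses")
    running = accumulate(counts[t] for t in thresholds)
    return {t: 100 * c / total for t, c in zip(thresholds, running)}


def smallest_convincing_reduction(cumulative: Dict[int, float],
                                  min_percent: float) -> Optional[int]:
    """Smallest reduction that would convince at least `min_percent`
    of respondents, or None if no option reaches that share."""
    for threshold in sorted(cumulative):
        if cumulative[threshold] >= min_percent:
            return threshold
    return None


# Toy counts for illustration only (threshold -> number of respondents).
toy_counts = {10: 5, 20: 10, 30: 10, 40: 5, 50: 10, 100: 10}
toy_curve = cumulative_percent(toy_counts)
print(toy_curve)
print(smallest_convincing_reduction(toy_curve, 60))
```

Excluding the non-numerical option before computing the curve explains how the cumulative share at 50% (83%) can exceed the 81.5% of all respondents who chose a number.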

4. Discussion

During the planning phase of CSP #590 (Li+), experts and consultants could not reach consensus on two key issues. First, it was unclear whether the proposed study had sufficient support in the field to warrant the large expenditure of resources needed to conduct the trial. Second, there was uncertainty about what effect size would be large enough to change prescribing behavior among practicing clinicians. The results of the survey helped to resolve these concerns and, we believe, played an important role in the study sponsor's funding decision.

This survey was completed by 862 psychiatrists at at least 107 VA medical centers across the United States; 107 is the number of unique centers identified among respondents who reported a station code. The overall response rate was 31.8%. The largest number of responses occurred on the first day the survey opened. Each reminder email produced an additional surge, albeit with diminishing returns (see Fig. 1). Little was gained by allowing a longer interval between reminders, as indicated by the relatively flat line from day three onward. This pattern is consistent with other online surveys, which have found that reminder notices are associated with higher response rates but that returns diminish with larger numbers of contacts (see meta-analysis [6]). Reported response rates for online surveys range widely, from 5 to 85% [7]. Our response rate of 31.8% is consistent with the average rate achieved in online surveys (mean = 33% and 35% in two meta-analyses [7], [6], respectively). Notably, the meta-analyses included diverse types of online surveys conducted in a variety of fields, such as medicine, management, market research, policy research, education, and telecommunication. A review of internet-based surveys of health professionals in the United States reported response rates ranging from 9 to 75% (n = 8; mean = 45%) [8]. Our response rate fell within this range, though below its mean.

The first goal of this provider survey was to understand current experience and practice with lithium among VA prescribing clinicians. Survey results revealed that VA psychiatrists use lithium to treat bipolar disorder (93%) as well as treatment-resistant major depressive disorder (73%). As of 2012, the majority of VA psychiatrists who prescribed lithium reported using it in 10 to 50% of their patients with bipolar disorder and in fewer than 10% of their patients with refractory depression (see Table 1 for the complete breakdown of responses). We compared the psychiatrists' reports of their lithium use with contemporaneous pharmacy data from the VA's Serious Mental Illness Treatment Resource and Evaluation Center (SMITREC), a program evaluation center in the VA Office of Mental Health Operations. SMITREC found that 14.8% of VHA patients with a recorded diagnosis of bipolar disorder in FY2013 received outpatient lithium. Some individuals with a bipolar disorder diagnosis in FY2013 had used VHA services in at least three of the prior five fiscal years. Among them, the prevalence of outpatient lithium receipt over the six-year period FY2008–FY2013 was 26.5% (J.F. McCarthy, personal communication, September 29, 2014). These data suggest that a substantial subset of survey respondents reported higher rates of lithium prescription than are reflected in VHA pharmacy records. The gap between reported and recorded prescribing may be partially attributable to provider factors, such as individual decision-making and geographic variation in medication choices [9]. Response bias may also contribute.

By comparison, a non-VA study analyzed U.S. computerized medical benefits data from 2002 to 2003 for psychotropic drug use. It found that lithium was prescribed as initial monotherapy in only 7.5% of individuals diagnosed with bipolar disorder [10]. Use of lithium for bipolar disorder has been declining overall in the United States [11]. Even so, our survey results suggest that a majority of VA psychiatrists continue to prescribe lithium for appropriate patients with bipolar disorder on a regular basis (i.e., occasionally to fairly often). SMITREC's analysis of VHA pharmacy records provides corroborating evidence: lithium prescription rates among VA providers appear to be roughly double the overall U.S. rate (14.8% vs. 7.5%). The two figures are not strictly comparable, however, since one counts any outpatient receipt and the other counts initial monotherapy only. Proponents of lithium have argued that its underuse, despite its proven efficacy in bipolar disorder, may be due in part to extensive marketing of newer FDA-approved drugs. Other proposed factors are concerns about adverse effects and tolerability and the perception that regular toxicity monitoring is difficult [11], [12]. Indeed, a recent analysis of VA administrative data covered Veterans diagnosed with bipolar disorder from 2003 to 2010. It showed that second-generation antipsychotics have supplanted lithium, valproate, and carbamazepine/oxcarbazepine as frontline antimanic treatment for bipolar disorder [9].

The second goal was to determine whether psychiatrists would support randomizing their patients to lithium or placebo and would endorse the study design. Three out of four respondents indicated that they would refer patients who had recently survived a suicide attempt to the proposed randomized controlled trial. This result suggested strong field support for a randomized, double-blind, placebo-controlled study of adjunctive lithium treatment for suicide prevention. The results also helped to confirm that the research question is clinically important and compelling to the potential end-users of the trial.

The third objective was to determine what effect size would be considered large enough to influence prescribing behavior among practicing psychiatrists. Among respondents who selected a numerical threshold, 61% would prescribe lithium if it reduced subsequent suicide attempts by at least 40%, and 83% would do so if it reduced reattempts by at least 50%. These findings provided additional support for the hypothesized effect size. That effect size was based on conventional methods of effect size estimation (i.e., a weighted average of effects observed in published lithium studies). Previous lithium studies observed an effect size of approximately 43%. Allowing for attenuation due to non-adherence, the CSP #590 study is powered to detect a reduction of 37% or greater in the one-year rate of repeated suicide attempts. These survey findings therefore support the effect size used in the trial design. They suggest that it is clinically meaningful and would convince a majority of psychiatrists to change their practice. In practice, this means prescribing lithium for appropriate patients with bipolar disorder or treatment-resistant major depression who have survived a suicide attempt.
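To show how an effect-size choice like this feeds into sample size, the following Python sketch applies the standard normal-approximation formula for comparing two proportions with a two-sided test. It is a generic illustration, not the CSP #590 power calculation. The control-arm event rate in the usage example is hypothetical, and the function name `n_per_group` is illustrative. Non-adherence is handled only by passing in an already-attenuated relative reduction (e.g., 37% rather than 43%), since the trial's actual attenuation method is not described here.

```python
import math
from statistics import NormalDist


def n_per_group(p_control: float, relative_reduction: float,
                alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-arm sample size to detect a relative reduction in an event rate.

    Normal-approximation formula for two independent proportions
    (two-sided test, equal allocation, no continuity correction).
    """
    if not 0 < p_control < 1 or not 0 < relative_reduction < 1:
        raise ValueError("rates and reductions must lie strictly between 0 and 1")
    p_treat = p_control * (1 - relative_reduction)  # event rate on treatment
    p_bar = (p_control + p_treat) / 2               # pooled rate under H0
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p_control * (1 - p_control)
                                      + p_treat * (1 - p_treat)))
    return math.ceil(numerator ** 2 / (p_control - p_treat) ** 2)


# Hypothetical one-year reattempt rate in the placebo arm (illustration only).
P_CONTROL = 0.20
for reduction in (0.43, 0.37):
    print(f"{reduction:.0%} reduction: {n_per_group(P_CONTROL, reduction)} per arm")
```

Required size scales roughly with the inverse square of the absolute difference in event rates. Planning for a 37% rather than a 43% reduction therefore raises the per-arm sample size noticeably. This is why an empirical check that the smaller effect is still clinically persuasive is useful.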

Estimating, a priori, the effect size on which a rigorous clinical trial's sample size is based has received relatively little study and discussion. It is nonetheless a critical step in trial planning. The usual gambits for estimating an effect size include using observed differences from previous trials, expert opinion, or, when in doubt, the customary 20% difference. This ad hoc approach is reflected in the varied terms used to describe it: minimal clinically important difference, meaningful difference, clinically worthwhile difference, minimum responsiveness, et cetera. In this survey, we reasoned that the consumers of trial information are patients and the clinicians who treat people with the condition (in this example, individuals with mood disorders at potential suicide risk). They are best qualified to state what treatment difference would influence their practice and how likely they would be to change it.

To the best of our knowledge, this represents the first survey of clinicians' beliefs conducted during the planning of a VA Cooperative Study with the primary goal of informing sample size estimates as well as gauging field support. Several other research groups have administered structured questionnaires to elicit expert opinions and gather a priori information for the purpose of informing trial design and sample size estimates, including assessing the level of treatment benefit that was deemed to be sufficient for causing clinicians to change their practice [13], [14], [15]. This approach emphasizes an important but sometimes overlooked aim of clinical trials, specifically that the product of a clinical trial should not be merely to establish treatment differences, but should be to meaningfully influence medical practice [13].

4.1. Limitations and conclusions

This method has several limitations that deserve comment, some of which are inherent to any survey. First, the survey was distributed by email, and email surveys may yield lower response rates than mailed paper surveys [7], [16], [17]. Nonetheless, deploying an internet survey requires considerably fewer resources than a traditional paper-based mail survey. The design and implementation of the current survey were carried out over several weeks and at little cost. Furthermore, personalized correspondence and follow-up reminders proved effective in boosting the overall response rate, a finding consistent with previous studies [6], [8].

Second, because the survey was completely anonymous, responders could not be compared with non-responders, and the direction and extent of response bias cannot be assessed. Responders may differ from non-responders, which would limit the generalizability of the results to the entire population of VA psychiatrists. Response bias could arguably go in either direction, but responses generally indicated that the majority of respondents were supportive of the trial. Anonymity also precludes a full evaluation of the representativeness of survey responses. However, one indicator of sample representativeness is the range of participation by providers at different VA medical centers. Providers could volunteer their site code on the survey, and 34% (n = 295) of respondents provided their three-digit VA station code. These station codes showed that survey participants represented 107 unique VA medical centers in 44 U.S. states as well as Puerto Rico (see Fig. 3, which depicts the geographic distribution of survey respondents). Among those who reported station codes, there were no confirmed respondents from the following states: Alaska, Kentucky, Maine, New Hampshire, North Dakota, and Utah. Only a third of respondents provided valid station codes, so we do not have a full picture of how representative respondents are of the whole VA population. However, the survey results reflect the opinions of psychiatrists from many VA medical centers across a large majority of the United States, which is reassuring. The geographic distribution of respondents also appears to correspond generally with the volume of Veterans served by the various VA facilities. A related drawback of the anonymous design was that we could not identify responders or non-responders. We therefore sent reminder emails to the entire distribution list rather than only to non-responders.

Fig. 3.

Geographic distribution of survey respondents who reported VA station code (n = 295).

Third, the primary rate- and time-limiting step was assembling a distribution list of appropriate clinicians from available data sources. This highlighted that comprehensive lists of providers by specialty are not always available. We estimate that approximately 50 hours were required to assemble the target population of physicians involved in providing mental health services. The criteria were specialty (psychiatry), credentials (medical degree), position title, and a valid VA email address. For future studies, access to a national listserv for VA providers in the specialty of interest (if available) would be a much more efficient way to reach the target population.

Finally, what clinicians say they would do in hypothetical situations might not reflect their actual behavior. On the other hand, confidentiality may increase the reliability of responses on sensitive topics where respondents might otherwise feel judged, and it may also increase the response rate. In conclusion, potential response bias and the uncertain reliability of stated future prescribing behavior are real limitations. Even so, a survey of targeted users of a trial's results may add a convenient, empirical method to the standard ways of estimating and justifying clinically relevant effect sizes for clinical trials.

Declaration of conflicting interests

The views expressed in this article are those of the authors and do not represent the official views of the Department of Veterans Affairs or the U.S. government.

Funding

This work was supported by the Cooperative Studies Program, Department of U.S. Veterans Affairs, Office of Research and Development.

Footnotes

Supplementary data related to this article can be found at http://dx.doi.org/10.1016/j.conctc.2016.08.004.

Appendix A. Supplementary data


References

1. IOM (Institute of Medicine). Transforming Clinical Research in the United States: Challenges and Opportunities: Workshop Summary. Washington, DC: The National Academies Press; 2010.

2. Reith C., Landray M., Devereaux P.J. Randomized clinical trials—removing unnecessary obstacles. N. Engl. J. Med. 2013;369:1061. doi: 10.1056/NEJMsb1300760.

3. Peduzzi P., Kyriakides T., O'Connor T.Z. Methodological issues in comparative effectiveness research: clinical trials. Am. J. Med. 2010;123(12):e8–e15. doi: 10.1016/j.amjmed.2010.10.003.

4. 38 CFR §16.101. Pensions, Bonuses, and Veterans' Relief: Protection of Human Subjects. 2008.

5. VHA Handbook 1200.05. Requirements for the Protection of Human Subjects in Research. 2012.

6. Cook C., Heath F., Thompson R.L. A meta-analysis of response rates in web- or internet-based surveys. Educ. Psychol. Meas. 2000;60(6):821–836.

7. Shih T.H., Fan X. Comparing rates in e-mail and paper surveys: a meta-analysis. Educ. Res. Rev. 2009;4:26–40.

8. Braithwaite D., Emery J., de Lusignan S. Using the internet to conduct surveys of health professionals: a valid alternative? Fam. Pract. 2003;20(5):545–551. doi: 10.1093/fampra/cmg509.

9. Miller C.J., Li M., Penfold R.B. The ascendency of second-generation antipsychotics as frontline antimanic agents. J. Clin. Psychopharmacol. 2015;35(6):645–653. doi: 10.1097/JCP.0000000000000405.

10. Baldessarini R., Leahy L., Arcona S. Patterns of psychotropic drug prescription for U.S. patients with diagnoses of bipolar disorder. Psychiatr. Serv. 2007;58(1):85–91. doi: 10.1176/ps.2007.58.1.85.

11. Watts V. Lithium remains a viable option for people with bipolar disorder. Psychiatric News. 2015. Retrieved from: http://psychnews.psychiatryonline.org/doi/full/10.1176/appi.pn.2015.7a15

12. Malhi G.S., Tanious M., Bargh D. Safe and effective use of lithium. Aust. Prescr. 2013;36(1):18–21.

13. Fayers P.M., Cuschieri A., Fielding J. Sample size calculation for clinical trials: the impact of clinician beliefs. Br. J. Cancer. 2000;82(1):213–219. doi: 10.1054/bjoc.1999.0902.

14. Parmar M.K.B., Spiegelhalter D.J., Freedman L.S. The CHART trials: Bayesian design and monitoring in practice. Stat. Med. 1994;13:1297–1312. doi: 10.1002/sim.4780131304.

15. Tan S.B., Chung Y.F.A., Tai B.C. Elicitation of prior distributions for a phase III randomized controlled trial of adjuvant therapy with surgery for hepatocellular carcinoma. Control. Clin. Trials. 2003;24:110–121. doi: 10.1016/s0197-2456(02)00318-5.

16. Dillman D.A., Smyth J.D., Christian L.M. Internet, Mail, and Mixed-mode Surveys: the Tailored Design Method. 3rd ed. John Wiley & Sons, Inc.; 2008.

17. Nulty D.D. The adequacy of response rates to online and paper surveys: what can be done? Assess. Eval. High. Educ. 2008;33(3):301–314.
