Abstract

Objectives

An estimated 14% to 25% of all scientific studies in peer-reviewed emergency medicine journals are medical record reviews. The majority of the chart reviews in these studies are performed manually, a process that is both time-consuming and error-prone. Computer-based text search engines have the potential to enhance chart reviews of electronic emergency department (ED) medical records. The authors compared the efficiency and accuracy of a computer-facilitated medical record review of ED clinical records of geriatric patients with a traditional manual review of the same data, and describe the process by which this computer-facilitated review was completed.

Methods

Clinical data from consecutive ED patients age 65 years or older were collected retrospectively by manual and computer-facilitated medical record review. The frequency of three significant ED interventions in older adults was determined using each method. Performance characteristics of each search method, including sensitivity and positive predictive value, were determined, and the overall sensitivities of the two search methods were compared using McNemar’s test.

Results

For 665 patient visits, there were 49 (7.4%) Foley catheters placed, 36 (5.4%) sedative medications administered, and 15 (2.3%) patients who received positive pressure ventilation. The computer-facilitated review identified more of the targeted procedures (99 of 100, 99%), compared to manual review (74 of 100 procedures; 74%) (p < 0.0001).

Conclusions

A practical, non–resource intensive, computer-facilitated free-text medical record review was completed and was more efficient and accurate than manually reviewing ED records.

INTRODUCTION

As recently as 2009, the rate of electronic health record (EHR) adoption in the United States was only 10% to 19%.1 The passage of the Health Information Technology for Economic and Clinical Health (HITECH) Act in 2009, and its associated financial incentives, resulted in an abrupt increase in the rate of EHR adoption.2 It is hoped that the data accumulated in electronic patient records will facilitate advanced medical record reviews that foster the rapid development of both personalized and evidence-based care strategies.3 Medical record review is also an important research technique for hypothesis development4,5 and a potential component of comparative effectiveness research.5 In the emergency medicine (EM) literature, an estimated 14% to 25% of all scientific studies in peer-reviewed EM journals are medical record reviews.6-8

However, even with the adoption of EHRs, clinical data can be difficult to search. Text is often entered in a difficult-to-search free-text format, because entering data in a more accessible structured format can be time-consuming for clinicians. The ability to search for specific medical concepts in the free text of clinical notes is rarely available directly to clinical researchers, and natural language processing tools are not available to most clinical researchers either. Thus, electronic searching frequently requires knowledge of proprietary search languages and programming skills beyond the capability of many clinical researchers. Furthermore, the usual method of screening records by discharge diagnosis code is particularly ineffective for emergency department (ED) records, because these administrative codes often agree with neither the actual clinical diagnosis nor the initial complaint.9-11 As a result of these difficulties, retrospective reviews are still often performed manually. A more efficient method of extracting information from large numbers of free-text medical records is needed.

Literature regarding automated extraction of clinical information from free-text portions of EHRs dates back to the early 1970s.12,13 In addition, numerous widely used commercial systems process free text to generate ICD-9 codes for billing purposes. However, the performance of these systems in identifying research cohorts has not been studied systematically, and the details of their algorithms are proprietary.

We set out to develop a secure, minimally resource-intensive, computer-facilitated system for chart review. We transferred the raw data from the local hospital information system (HIS) into our own secure workspace, which allowed researchers to perform ad hoc free-text queries on ED records directly using a familiar “search box” interface. The accuracy of these searches was then compared with the accuracy of manual review of a subset of these medical records, to determine whether simple string searches using Boolean operators would improve sensitivity without an unacceptable loss of positive predictive value. Our goal was to improve the efficiency of manual chart review by decreasing the total number of charts requiring manual review while increasing the overall number of pertinent cases retrieved.

Criterion Standard

The criterion standard was defined as the integrated results of the manual chart review and the computer-facilitated chart review, with manual re-review of all cases in which the two reviews disagreed. An explicitly defined criterion standard was necessary because this study reassesses the accuracy of manual chart review, the traditional method for gathering data in retrospective research, so manual review alone could not serve as the reference.

METHODS

Study Design

We compared the results of a manual medical record review, which looked for specific low-frequency ED interventions, to a computer-facilitated review, which we define as an initial computer search followed by a manual search of the limited number of charts identified in the computer search. The manual medical record review was part of an observational study on consecutively enrolled geriatric patients (age ≥ 65 years) who presented to the ED over a one-month period.14 The computer-facilitated search was a retrospective review of the same data set. The ED charting at our institution is performed using the T-System EV v.2.5, 2001-2005 (T-System Technologies, Ltd., Dallas, TX) ED information system (EDIS). T-System uses a combination of structured data fields and free-text entry to generate a final free-text document. The information recorded in T-System is then transferred to the main hospital EHR (WebCIS, University of North Carolina. WebCIS v 3.0.1. Chapel Hill, NC 2011). With the exception of demographics, all clinical documentation is combined into a single large text field during the transfer process, which makes both manual and computer review challenging. For both the manual medical record review and the computer-facilitated medical record review, strategies to improve accuracy and minimize inconsistencies in medical chart reviews as advocated by Gilbert et al.7 were used wherever applicable, including training of chart abstractors, explicit case selection and variables, abstraction forms, periodic meetings, monitoring, blinding, and testing interrater agreement. The study received institutional review board approval with a waiver of informed consent requirements.

Study Setting and Population

We conducted our study at a university teaching hospital and Level I trauma center ED with approximately 65,000 patient visits per year. All patients aged 65 years or older who visited the ED between June 19th and July 17th of 2008 were included in the study. A study nurse enrolled consecutive ED patients in the study by identifying their presence in the T-System log.

Study Protocol and Measurements

Manual Medical Record Review

An experienced study nurse trained in medical record abstraction methodology for this study reviewed each patient’s medical record in the EDIS within 48 hours of the patient’s ED visit. The purpose of collecting these data was to identify the rates of key clinical interventions associated with the ED care of older adult patients, including Foley catheter placement, sedation of older adults in the ED, and use of positive pressure ventilation. These interventions were targeted because of concerns about increased use, iatrogenic infection, or increased mortality in geriatric patients.15,16 The study chart abstractor was blinded to the aims of our computer-facilitated medical record review study. Information was recorded from the EHR onto a chart abstraction spreadsheet by reviewing the charts online without the assistance of a search function, because our EHR does not offer this feature. Information collected by the study nurse included patient demographics, triage score, presence of family at triage, chief complaint, advance directives, ED resource use, occurrence of an immediate intervention in the ED, and admission and discharge information. Study variables were pre-defined using explicit criteria based on a two-week trial data collection period, during which the study nurse and study authors reviewed the results daily and inter-rater reliability was assessed.

The targeted intervention variables were defined explicitly. Placement of an indwelling catheter was defined as a new urinary catheter placed in the ED that remained in place at the time of the patient’s departure from the ED. The medications considered sedatives were diazepam, lorazepam, midazolam, alprazolam, haloperidol, trazodone (Desyrel), olanzapine (Zyprexa), and ziprasidone. Positive pressure ventilation was considered present for any patient who received intubation or noninvasive positive pressure ventilation during any portion of the ED visit.

Computer-Facilitated Medical Record Review

Data were obtained from the University of North Carolina hospital EHR system (WebCIS) as a text file (WebCIS, Chapel Hill, NC). This information was imported into a single Microsoft Access 2003 database table (Microsoft Corp, Redmond, WA). Each database record contained patient demographic information and visit-specific data (date and time of visit, method of arrival, etc.) as well as a single text field containing all of the clinical notes (nursing, resident physician, and attending physician notes) entered into T-System.

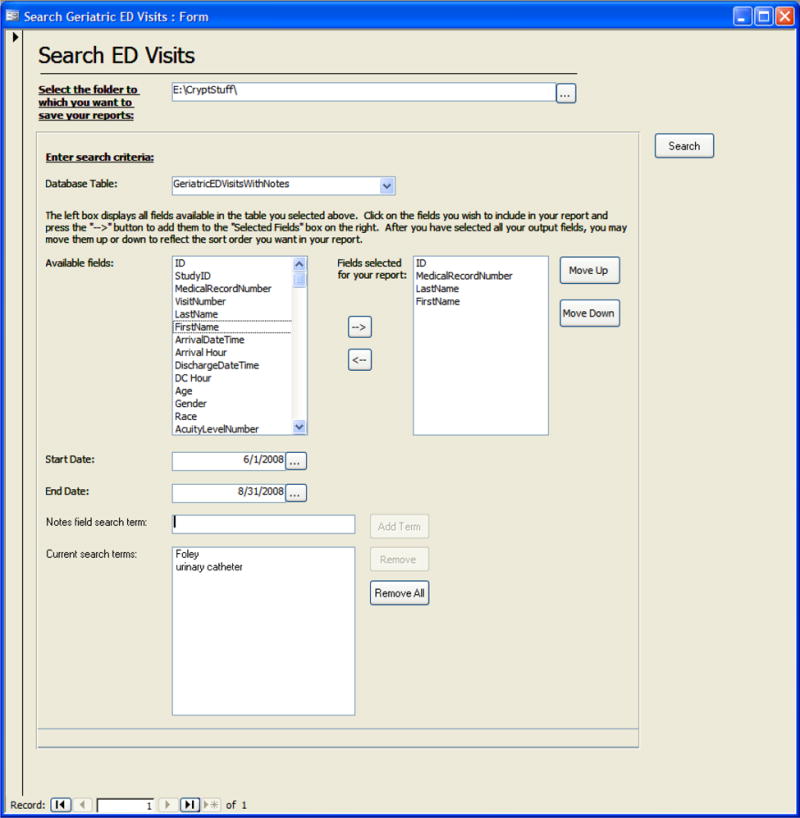

In contrast to the resource-intensive techniques used in previous studies,10 our system used simple text string searches and Boolean operators, implemented in Microsoft Access. An Access form was designed to provide an interface for creating easily modifiable and reproducible database queries (Figure 1). The form accepted as input the date range of records to search, as well as one or more keywords to search for in the notes field. When the user initiated a search, the form generated and executed a simple structured query language (SQL) query on the database. Results were formatted as locally stored hypertext markup language (HTML) documents, with the search terms highlighted for quick identification by the researchers.
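The query-and-highlight workflow described above can be sketched in Python. This is not the authors' Access implementation: it assumes a hypothetical SQLite table `visits(visit_id, visit_date, notes)` standing in for the Access table, ISO-formatted date strings, and a hypothetical `<mark>` tag for highlighting. SQLite's `LIKE` is case-insensitive for ASCII text by default, which mirrors the case-insensitive keyword searches the study used.

```python
import html
import re
import sqlite3


def build_keyword_query(terms):
    """Build (sql, params) selecting visits whose notes contain ANY term (Boolean OR)."""
    if not terms:
        raise ValueError("at least one search term is required")
    # One LIKE clause per term; each term becomes a %term% substring pattern.
    clauses = " OR ".join("notes LIKE ?" for _ in terms)
    sql = ("SELECT visit_id FROM visits "
           "WHERE visit_date BETWEEN ? AND ? AND (" + clauses + ") "
           "ORDER BY visit_id")
    return sql, ["%" + t + "%" for t in terms]


def search(conn, start, end, terms):
    """Run the keyword query for visits dated start..end (inclusive)."""
    sql, term_params = build_keyword_query(terms)
    return [row[0] for row in conn.execute(sql, [start, end, *term_params])]


def highlight_html(text, terms):
    """Escape note text as HTML and wrap every case-insensitive term hit in <mark>."""
    if not terms:
        return html.escape(text)
    # Longest terms first so "urinary catheter" wins over "catheter" at the same position.
    ordered = sorted(terms, key=len, reverse=True)
    pattern = re.compile("|".join(re.escape(t) for t in ordered), re.IGNORECASE)
    out, last = [], 0
    for m in pattern.finditer(text):
        out.append(html.escape(text[last:m.start()]))
        out.append("<mark>" + html.escape(m.group(0)) + "</mark>")
        last = m.end()
    out.append(html.escape(text[last:]))
    return "".join(out)


def make_demo_db():
    """In-memory database with hypothetical notes (not study data)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE visits (visit_id INTEGER, visit_date TEXT, notes TEXT)")
    conn.executemany("INSERT INTO visits VALUES (?, ?, ?)", [
        (1, "2008-06-20", "Foley placed for urinary retention"),
        (2, "2008-06-21", "Given Ativan 1 mg IV"),
        (3, "2008-07-30", "FOLEY removed"),  # outside the date range searched below
        (4, "2008-07-01", "Discharged home"),
        (5, "2008-07-02", "COUDE catheter inserted"),
    ])
    return conn
```

For example, `search(make_demo_db(), "2008-06-19", "2008-07-17", ["foley", "coude"])` returns visits 1 and 5: the uppercase "COUDE" still matches, and visit 3 is excluded by the date range. Passing terms as query parameters rather than pasting them into the SQL string keeps user-typed keywords from altering the query itself.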

Figure 1.

Access interface form

The researcher running the database queries was blinded to the results of the manual medical record review. The initial keywords used to search for each intervention were identified by consensus, based on the clinical experience of the investigators. Terms were selected by considering the interventions in question and deciding which terms would most likely be recorded in our EHR when those interventions occurred. Keyword searches were not case-sensitive, and a record was counted as positive if it contained documentation supporting the intervention of interest. A small sample (< 10%) of charts not identified by the keyword search was reviewed manually to ensure that charts with the intervention of interest were being identified by the search terms. A sample of charts identified by the keyword search was also reviewed manually to determine whether the search was identifying mostly charts of interest, or whether the initial search terms lacked positive predictive value (that is, frequently identified charts that did not include the intervention of interest). If needed, the search terms were then refined, based on the consensus of the reviewers, to ensure that no charts with the interventions of interest were missed while maximizing the overall positive predictive value, in order to limit the number of charts that would eventually require manual review. Table 1 lists the initial and revised search terms used to identify each intervention.
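The validation step above (reviewing a small sample of unflagged charts for misses and a sample of flagged charts for false positives) can be sketched as a reproducible random draw. The function name, the 5% default fraction for unflagged charts, and the default of 20 flagged charts are hypothetical choices; the source states only that fewer than 10% of unflagged charts were reviewed.

```python
import random


def validation_samples(all_ids, flagged_ids, unflagged_fraction=0.05, flagged_n=20, seed=0):
    """Draw random charts for manually checking one keyword search (hypothetical helper).

    Returns (unflagged_sample, flagged_sample):
    - unflagged_sample: charts the search did NOT flag, checked for missed cases
      (the fraction is kept below 10%, as in the source);
    - flagged_sample: charts the search DID flag, checked to gauge positive predictive value.
    """
    if not 0 < unflagged_fraction < 0.10:
        raise ValueError("unflagged_fraction must be between 0 and 0.10")
    flagged = set(flagged_ids)
    unflagged = [i for i in all_ids if i not in flagged]
    rng = random.Random(seed)  # fixed seed makes the audit sample reproducible
    k_unflagged = int(len(unflagged) * unflagged_fraction)
    k_flagged = min(flagged_n, len(flagged))
    return rng.sample(unflagged, k_unflagged), rng.sample(sorted(flagged), k_flagged)
```

With 665 visits of which 79 are flagged, the default settings return 29 unflagged and 20 flagged charts for manual review. Fixing the seed means a second reviewer can re-draw exactly the same audit sample.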

Table 1.

Search terms used to identify each study intervention.

| Intervention | Search terms (initial) | Search terms (revised) |
|---|---|---|
| Placement of indwelling urinary catheter | foley, catheter, coude | foley, urinary catheter, coude |
| Sedatives administered in the ED | diazepam, valium, lorazepam, ativan, midazolam, versed, haloperidol, haldol, xanax, alprazolam, trazodone, desyrel, olanzepine, zyprexa, ziprasidone, geodon | No revised search |
| Positive pressure ventilation | bag valve mask, ventilation, vent, intubation, intubated, endotracheal tube, laryngoscope, surgical airway, cricothyrotomy, cricoidotomy, CPAP, BiPAP, positive pressure, pressure support | Same as initial search terms, except that the term “vent” was excluded |

CPAP = continuous positive airway pressure; BiPAP = bi-level positive airway pressure
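One plausible reason that dropping “vent” helped (our interpretation, not stated by the authors) is that, with plain substring matching, the stem also occurs inside unrelated words such as “prevent” and “event”. The sketch assumes case-insensitive substring matching like the study's keyword searches; the note fragments are hypothetical, not study data.

```python
# Positive pressure ventilation terms from Table 1.
INITIAL_TERMS = ["bag valve mask", "ventilation", "vent", "intubation", "intubated",
                 "endotracheal tube", "laryngoscope", "surgical airway", "cricothyrotomy",
                 "cricoidotomy", "CPAP", "BiPAP", "positive pressure", "pressure support"]
# Revised search: identical except that "vent" is dropped.
REVISED_TERMS = [t for t in INITIAL_TERMS if t != "vent"]


def matches_any(note, terms):
    """Case-insensitive substring match: True if any term occurs anywhere in the note."""
    lowered = note.lower()
    return any(term.lower() in lowered for term in terms)


# Hypothetical note fragments (not study data).
NOTES = ["Zofran given to prevent nausea",
         "No acute events overnight",
         "Placed on BiPAP 12/5",
         "Tolerating regular diet"]

for note in NOTES:
    print(f"{note!r}: initial={matches_any(note, INITIAL_TERMS)} "
          f"revised={matches_any(note, REVISED_TERMS)}")
```

Both of the first two fragments are flagged by the initial terms only because “vent” sits inside “prevent” and “events”; the revised terms flag only the BiPAP note. Because “ventilation” stays in the list, the revision loses no true matches that “vent” would have found through that word.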

Data Analysis

The computer-facilitated and manual search results were compared using frequency counts for each targeted intervention. The medical records of all cases in which discrepancies existed between the results of the manual and the computer-facilitated searches were adjudicated by two emergency physicians and a decision was made as to whether these cases met study definitions for the given intervention. These results were used to define a “criterion standard” document set for assessing the accuracy of the searches. The primary outcome was to determine performance characteristics of each search method including sensitivity and positive predictive value, and compare overall sensitivity of the two search methods using McNemar’s test.

Standard summary statistics (frequencies and percentages for categorical data, medians with ranges for continuous data) were used to describe the population. To assess the reliability of the intervention classifications, a sample of 30 medical records for which the manual and computer searches were in agreement was reviewed independently by two physicians blinded to the results of both the manual and computer-facilitated searches. Cohen’s kappa statistic was calculated to assess inter-rater reliability (SAS, version 9.2, Cary, NC).
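The study computed Cohen’s kappa in SAS. For readers reproducing the calculation elsewhere, a minimal Python sketch of the standard two-rater formula follows: kappa = (observed agreement − chance agreement) / (1 − chance agreement), with chance agreement taken from each rater's marginal label frequencies. It applies to item-level judgments such as “intervention present/absent”; the example ratings in the note below are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items (any hashable labels)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(count_a[label] * count_b[label] for label in count_a) / n ** 2
    if p_e == 1:
        return 1.0  # both raters used one identical label throughout (kappa is undefined)
    return (p_o - p_e) / (1 - p_e)
```

For hypothetical ratings `[1, 1, 0, 0]` and `[1, 0, 0, 0]`, observed agreement is 0.75 and chance agreement is 0.5, giving a kappa of 0.5. This shows why kappa is lower than crude percent agreement.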

To evaluate the search methods as information retrieval systems, we also calculated recall, precision, and F-score. Recall and precision are equivalent to sensitivity and positive predictive value, respectively. The F-score is the harmonic mean of precision and recall ([2 × precision × recall]/[precision + recall]) and is a measure of a system’s overall accuracy.17
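A short Python sketch makes these definitions concrete. The function name and argument names are ours; the example uses the computer-only sedative search reported in the Results (93 charts flagged, containing 35 of the 36 criterion-standard administrations).

```python
def retrieval_metrics(true_positives, flagged, reference):
    """Sensitivity (recall), PPV (precision), and F-score for one search.

    true_positives: flagged charts confirmed to contain the intervention
    flagged:        charts the search returned
    reference:      cases of the intervention in the criterion standard
    """
    sensitivity = true_positives / reference if reference else 0.0
    ppv = true_positives / flagged if flagged else 0.0
    if sensitivity + ppv == 0:
        return sensitivity, ppv, 0.0
    f_score = 2 * ppv * sensitivity / (ppv + sensitivity)  # harmonic mean
    return sensitivity, ppv, f_score


sens, ppv, f = retrieval_metrics(35, 93, 36)
print(f"sensitivity={sens:.2f} ppv={ppv:.2f} F={f:.2f}")
# prints: sensitivity=0.97 ppv=0.38 F=0.54
```

These values match the computer-only sedative row of Table 3. Because the harmonic mean is dominated by the smaller of its two inputs, a search with near-perfect recall but low precision still receives a modest F-score.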

RESULTS

Characteristics of Study Subjects

During the one-month enrollment period, 665 ED visit records of 595 patients age 65 years or older were identified and eligible for review. The median age of these patients was 76 years (range 65 to 99 years). Three hundred forty-one patients (57%) were female and 254 (43%) were male. There were 403 white patients (68%) and 187 African American patients (31%); 5 patients (1%) were otherwise classified.

Main Results

Of the 665 patient ED visits, adjudicated results based on review of both manual and computer-facilitated searches identified 49 (7.4%) visits in which a Foley catheter was placed, 36 (5.4%) visits in which a sedative medication was administered, and 15 (2.3%) visits in which a patient received positive pressure ventilation. Manual medical record review without computer assistance identified 33 of the 49 Foley catheter placements (67%), 29 of the 36 sedative medication administrations (81%), and 12 of the 15 instances of positive pressure ventilation (80%). Thus, the manual review identified 74 of 100 (74%) targeted procedures.

The initial computer search (without refinement of terms or manual review of identified charts) flagged 250, 93, and 613 charts for further review as possibly involving Foley catheter placement, sedative medications, or positive pressure ventilation, respectively. The refined computer search then flagged 79, 93 (the sedative search was not revised), and 29 charts for further review for these interventions. The computer-facilitated search (initial computer search, followed by a refined search and then manual review of the identified records) identified 49 of 49 Foley catheter placements (100%), 35 of the 36 sedative medication administrations (97%), and 15 of the 15 instances of positive pressure ventilation (100%), while requiring far fewer charts to be reviewed manually (Table 2). The computer-facilitated search missed one visit in which a sedative medication was administered because the name of the medication, “trazodone,” was misspelled in the medical record. Overall, the computer-facilitated review identified more of the targeted procedures (99 of 100, 99%) than the manual search (74 of 100, 74%) (p < 0.0001 by McNemar’s test).
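The text does not report the paired 2 × 2 table or which variant of McNemar’s test was used, so the sketch below shows both the exact binomial form and the continuity-corrected chi-square form. The discordant counts of 25 and 0 are an assumption: they are the split implied if every procedure found manually was also found by the computer-facilitated review, which is consistent with the totals of 99 and 74 and with the 25 additional cases listed in Table 2.

```python
from math import comb, erfc, sqrt


def mcnemar_exact(b, c):
    """Two-sided exact McNemar p-value from the two discordant-pair counts."""
    n = b + c
    if n == 0:
        return 1.0
    # Under H0 each discordant pair is equally likely to fall either way: Binomial(n, 0.5).
    tail = sum(comb(n, i) for i in range(min(b, c) + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def mcnemar_chi2(b, c):
    """McNemar chi-square with continuity correction (1 df) and its p-value."""
    if b + c == 0:
        return 0.0, 1.0
    stat = max(abs(b - c) - 1, 0) ** 2 / (b + c)
    return stat, erfc(sqrt(stat / 2))  # survival function of chi-square with 1 df


# Assumed discordant counts: 25 procedures found only by computer-facilitated
# review, 0 found only by manual review (see lead-in).
print(mcnemar_exact(25, 0))
print(mcnemar_chi2(25, 0))
```

Only the discordant pairs enter the test; procedures found by both methods (or by neither) carry no information about which method is more sensitive. Under the assumed counts, both variants give p-values well below 0.0001.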

Table 2.

Results of manual and computer-facilitated medical record review.

| Intervention | Foley catheter | Sedation medications | Positive pressure ventilation |
|---|---|---|---|
| Total number of medical records in cohort | 665 | 665 | 665 |
| Cases for further review identified by initial computer search | 250 | 93 | 613 |
| Cases for further review identified by revised computer search | 79 | No revised search | 29 |
| Cases identified by computer-facilitated review (manual review of records identified by revised computer search) | 49 | 35 | 15 |
| Cases identified by manual medical record review without computer assistance | 33 | 29 | 12 |
| Adjudicated (final) results | 49 | 36 | 15 |
| Charts not requiring manual review because of computer-facilitated search | 88.1% | 86.0% | 95.6% |
| Cases identified by computer-facilitated review that were not identified by manual review (% increase over manual review) | 16 (48%) | 6 (21%) | 3 (25%) |
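The last two rows of Table 2 are derived from the rows above them. A short Python sketch of that arithmetic follows; the function name is ours, and the inputs are the Table 2 counts. For the sedative search, the flagged count is the unrevised search (93 charts).

```python
COHORT = 665  # visits in the cohort


def derived_percentages(flagged, manual, only_computer, cohort=COHORT):
    """Return (% of charts spared manual review, % increase over manual review)."""
    spared = 100 * (1 - flagged / cohort)     # charts the search never flagged
    increase = 100 * only_computer / manual   # extra cases relative to manual review
    return spared, increase


# (charts flagged by the final computer search, manual-review count,
#  cases found by computer-facilitated but not manual review), from Table 2.
TABLE2 = {
    "Foley catheter": (79, 33, 16),
    "Sedation medications": (93, 29, 6),
    "Positive pressure ventilation": (29, 12, 3),
}

for name, counts in TABLE2.items():
    spared, increase = derived_percentages(*counts)
    print(f"{name}: {spared:.1f}% spared, +{increase:.0f}% cases")
```

This reproduces the 88.1%, 86.0%, and 95.6% chart reductions and the 48%, 21%, and 25% increases shown in the table.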

As illustrated in Table 3, the manual-only search and the computer-only search (computer search without manual review of identified charts) had different search characteristics. The manual-only search had a high positive predictive value but relatively low sensitivity, whereas the computer-only search had high sensitivity but a relatively low positive predictive value. The computer-facilitated review combined the strengths of both: an initial (and then refined) computer search maximized sensitivity while eliminating the need to manually review most of the charts, and a subsequent manual review of the identified charts optimized positive predictive value. The computer-facilitated search thus missed far fewer interventions of interest and removed the need to manually review between 86% and 96% of the charts for each search.

Table 3.

Results of manual and computer-only medical record review

| Intervention | Manual search: sensitivity* | Manual search: positive predictive value† | Manual search: F-score | Computer-only search: sensitivity* | Computer-only search: positive predictive value† | Computer-only search: F-score | Proportion of charts requiring manual review |
|---|---|---|---|---|---|---|---|
| Foley catheter placement | 0.66 | 1.0 | 0.80 | 1.0 | 0.63 | 0.77 | 0.12 |
| Sedative medication administration | 0.81 | 1.0 | 0.89 | 0.97 | 0.38 | 0.54 | 0.14 |
| Positive pressure ventilation | 0.80 | 1.0 | 0.88 | 1.0 | 0.52 | 0.68 | 0.04 |

*Sensitivity = recall.

†Positive predictive value = precision.

In a sample of 30 medical records (90 potential interventions of interest), independent review by the two physicians blinded to the search results showed a crude agreement of 97.8% on the occurrence or non-occurrence of the three interventions, with a kappa of 0.94.

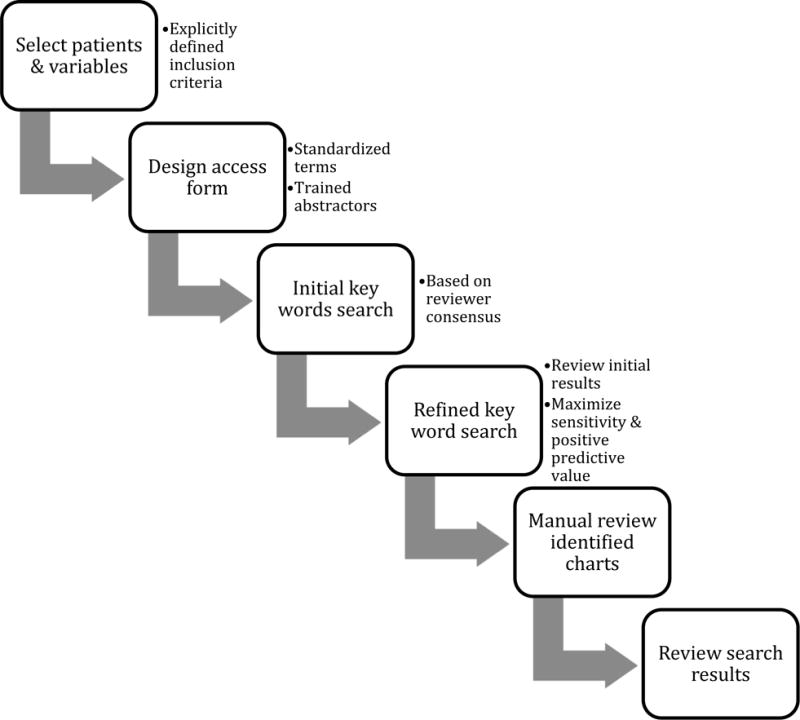

DISCUSSION

Computer-based searches for specific terms of interest have become commonplace, whether in internet search engines, in literature databases (PubMed), or through the “Find” function of a word processing program. Extending these searches to the growing pool of EHRs creates the opportunity for efficient review of medical records, including studies of uncommon events. Yet search functions are relatively rare in most deployed EHRs. Additionally, imperfect computer-facilitated searches have the potential to introduce bias, so it is important that clinical researchers begin to define the performance characteristics of computer-facilitated medical record search systems. This study demonstrated that a relatively simple computer-facilitated review can outperform manual review. The steps of this computer-facilitated review process are illustrated in Figure 2.

Figure 2.

Computer-facilitated review

Most systems for general clinical text processing require extensive customization based on specialty, institution, and type of document.8,18,19 The goal of this project was to develop a system that was practical yet effective for identifying low-frequency clinical events. When searching for rare events, we sought to balance practicality and precision in order to improve recall. The resulting approach yielded a 30% true positive rate for the refined computer-only searches while keeping the absolute number of records requiring manual review manageable (4% to 14% of charts). This approach worked best with unambiguous terms and suggests that Boolean searches can perform extremely well on clinical records.20,21 Our computer algorithm identified all but one item of interest; the missed item was due to a misspelling in the medical record. Our analysis suggests, however, that a single manual review by a trained reviewer will miss between 20% and 33% of targeted items (sensitivities of 67%, 81%, and 80% for our three manual searches). These sensitivities of manual review are similar to those reported in a study for the National Surgical Quality Improvement Program (68% and 80%).22

The critical aspect of computerized searches lies not in the computer’s ability to recognize strings, which it does unfailingly, but in the specificity of the findings. The three searches selected for this study (urinary catheterization, sedative medication administration, and use of positive pressure ventilation) were chosen both because they were subjects of interest and because their keywords are good candidates for computer-facilitated medical record review. Each is a specific intervention with associated keywords that are relatively specific and uncommon in medical records in which the intervention did not occur (e.g., “Foley,” “Haldol,” and “intubation”). In contrast, a search for all geriatric patients who suffered a clinically significant fall performs poorly, because the word “fall” can be ambiguous in clinical documentation. WordNet gives 12 different noun senses and 32 different verb senses for “fall,” and its verb senses have more than 40 synonyms.23 When we ran an initial computer-only search of our clinical records for one or more of the terms “fall,” “fell,” “faint,” or “syncop” to identify patients who fell, the program flagged 582 of the 665 medical records for review, indicating a very poor positive predictive value for these unrefined terms.
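A small illustration of why these fall terms flag so many records (our interpretation; the authors do not break down the 582 flagged records): with case-insensitive substring matching, the truncated stem “syncop” deliberately catches both “syncope” and “syncopal”. The same behavior, however, lets “fell” match “fellow” and “fall” match routine fall-risk screening text. The note fragments are hypothetical, not study data.

```python
FALL_TERMS = ["fall", "fell", "faint", "syncop"]  # unrefined terms from the text


def matched_terms(note, terms):
    """Return the terms found as case-insensitive substrings of the note."""
    lowered = note.lower()
    return [t for t in terms if t in lowered]


# Hypothetical note fragments (not study data).
for note in ["Pt had syncopal episode at home",
             "Seen with ED fellow",
             "Fall risk screen negative",
             "Fell from standing height",
             "Chest pain, resolved"]:
    print(f"{note!r} -> {matched_terms(note, FALL_TERMS)}")
```

Only the fourth fragment clearly documents a fall, yet four of the five are flagged. This is the low-specificity pattern that makes the fall search impractical without extensive refinement.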

The power of computer-facilitated medical record review lies in its ability to accurately identify the conditions of interest and thus eliminate manual review of a large number of irrelevant charts. We reduced the number of charts requiring review by 88% for Foley catheter insertion, by 86% for chemical sedation, and by 96% for positive pressure ventilation. This substantial reduction in the number of records requiring manual review, together with the computer’s ability to highlight the term of interest in flagged medical records, suggests that computer-facilitated medical record search can save substantial time. Potential advantages and disadvantages of computer-facilitated medical record review are listed in Table 4.

Table 4.

Advantages and disadvantages of computer-facilitated medical record review

| Advantages | Disadvantages |
|---|---|
| Identifies more outcomes of interest | May miss atypical cases |
| Time saving | Still requires initial comparison with a manually reviewed subset of medical records to confirm that search terms are appropriate, as well as review of computer-identified charts by medically trained personnel |
| Effective for searches with easily targeted terms and low-prevalence events | May not work well for events with difficult-to-target search terms |

LIMITATIONS

An important limitation is that computer-facilitated chart review is useful only when search terms with very high sensitivity and at least moderate positive predictive value can be identified. Hence, as illustrated above, using computer-facilitated chart review to identify patients who fell would not be practical at our institution. Before relying on the results of a computer-facilitated chart review, it is important to choose initial search terms and examine a small cohort of charts, including those in which the search terms are found and those in which they are not, to determine whether the sensitivity and positive predictive value of these terms are adequate for the computer-facilitated review process.

Additionally, our approach, while practical, required the development of a custom software solution, as well as the training of research personnel in the computer-facilitated chart review process. We recognize that the effort put into software development can be substantial; however, the effort needed to implement this system by users with average computer skills was not unreasonable, and it was more time-efficient than employing additional manual reviewers.

The study examined only patients age 65 years and over at a single institution with a specific computerized medical record system. Search engines designed for other types of EHRs may have different performance characteristics based on search functionality and database structure.

Of note, we did not correlate the accuracy of the manual review with the number of variables to be extracted. It is possible that the more variables there are to extract, the more likely a manual reviewer is to become fatigued or distracted and therefore fail to retrieve relevant records. Nevertheless, the similarity of our results to those of other studies suggests that a single manual review is likely to be both less accurate and more time-consuming than the same review performed with a search engine. It is also likely that the sensitivity of the manual chart review would have increased had we used multiple reviewers. This would require additional resources, however, which further underscores the comparative efficiency of computer-facilitated chart review.

Finally, as noted above, because we reassess the accuracy of manual chart review, the traditional method for gathering data in retrospective research, the question arises of how to define our criterion standard. We defined the criterion standard as the integrated results of a manual chart review and a computer-facilitated chart review, with manual re-review of all cases in which the initial manual review and the computer-facilitated review disagreed. While it is still possible that some ED interventions of interest were identified by neither review, we believe the number of missed items, if any, to be very low, because both reviews were performed independently and rigorously, and the results of the manual review are consistent with those of other manual chart reviews cited in the literature.

CONCLUSIONS

Our findings support the hypothesis that computer-facilitated medical record review can be an efficient and accurate alternative to manual medical record review when searching for specific interventions or medications. A simplified approach, with minimal software development, greatly reduced the effort needed to conduct retrospective research in medical records while simultaneously improving accuracy.

Footnotes

Prior Presentations: Society for Academic Emergency Medicine, New Orleans, May 2009

Disclosures: Dr. LaMantia was a fellow of the John A. Hartford Foundation when his work on this project was initiated, and was a post-doctoral fellow on a T32 award from the NIH when his work on this project was completed.

Supervising Editor: Manish N. Shah, MD, MPH

References

- 1. Jha AK, DesRoches CM, Campbell EG, et al. Use of electronic health records in U.S. hospitals. N Engl J Med. 2009;360:1628–38. doi: 10.1056/NEJMsa0900592.

- 2. Hsiao CJ, Hing E, Socey TC, Cai B. Electronic health records systems and intent to apply for meaningful use incentives among office-based physician practices: United States, 2001–2011. NCHS Data Brief. 2011;79:1–8.

- 3. Murphy S, Patlak M. A foundation for evidence-driven practice: a rapid learning system for cancer care: workshop summary. Washington, DC: National Academies Press; 2010.

- 4. Timmermans S, Mauck A. The promises and pitfalls of evidence-based medicine. Health Aff (Millwood). 2005;24:18–28. doi: 10.1377/hlthaff.24.1.18.

- 5. Tinetti ME, Studenski SA. Comparative effectiveness research and patients with multiple chronic conditions. N Engl J Med. 2011;364(26):2478–81. doi: 10.1056/NEJMp1100535.

- 6. Worster A, Bledsoe RD, Cleve P, Fernandes CM, Upadhye S, Eva K. Reassessing the methods of medical record review studies in emergency medicine research. Ann Emerg Med. 2005;45:448–51. doi: 10.1016/j.annemergmed.2004.11.021.

- 7. Gilbert EH, Lowenstein SR, Koziol-McLain J, Barta DC, Steiner J. Chart reviews in emergency medicine research: where are the methods? Ann Emerg Med. 1996;27:305–8. doi: 10.1016/s0196-0644(96)70264-0.

- 8. Corriol C, Daucourt V, Grenier C, Minvielle E. How to limit the burden of data collection for quality indicators based on medical records? The COMPAQH experience. BMC Health Serv Res. 2008;8:215. doi: 10.1186/1472-6963-8-215.

- 9. Aronsky D. Accuracy of administrative data for identifying patients with pneumonia. Am J Med Qual. 2005;20:319–28. doi: 10.1177/1062860605280358.

- 10. Chapman W, Dowling JN, Cooper G, Hauskrecht M, Valko M. A comparison of chief complaints and emergency department reports for identifying patients with acute lower respiratory syndrome [Abstract]. Adv Dis Surveill. 2007;2:195.

- 11. Ratcliffe A, Barnett C, Ising A, Waller A. Evaluating the validity of ED visit data for biosurveillance [Abstract]. Adv Dis Surveill. 2008;5:57.

- 12. Stanfill MH, Williams M, Fenton SH, Jenders RA, Hersh WR. A systematic literature review of automated clinical coding and classification systems. J Am Med Inform Assoc. 2010;17:646–51. doi: 10.1136/jamia.2009.001024.

- 13. Sager N, Lyman M, Bucknall C, Nhan N, Tick LJ. Natural language processing and the representation of clinical data. J Am Med Inform Assoc. 1994;1:142–60. doi: 10.1136/jamia.1994.95236145.

- 14. Platts-Mills T, Travers D, Biese K, et al. Accuracy of the Emergency Severity Index triage instrument for identifying elderly emergency department patients receiving an immediate life-saving intervention. Acad Emerg Med. 2010;17:238–43. doi: 10.1111/j.1553-2712.2010.00670.x.

- 15. Hazelett SE, Tsai M, Gareri M, Allen K. The association between indwelling urinary catheter use in the elderly and urinary tract infection in acute care. BMC Geriatr. 2006;6:15. doi: 10.1186/1471-2318-6-15.

- 16. Beers MH. Explicit criteria for determining potentially inappropriate medication use by the elderly. An update. Arch Intern Med. 1997;157:1531–6.

- 17. Powers DMW. Evaluation: from precision, recall and F-measure to ROC, informedness, markedness & correlation. J Machine Learn Technol. 2011;2:37–63.

- 18. Crowley RS, Castine M, Mitchell K, Chavan G, McSherry T, Feldman M. caTIES: a grid based system for coding and retrieval of surgical pathology reports and tissue specimens in support of translational research. J Am Med Inform Assoc. 2010;17:253–64. doi: 10.1136/jamia.2009.002295.

- 19. Savova GK, Masanz JJ, Ogren PV, et al. Mayo clinical text analysis and knowledge extraction system (cTAKES): architecture, component evaluation and applications. J Am Med Inform Assoc. 2010;17:507–13. doi: 10.1136/jamia.2009.001560.

- 20. Barrows RC Jr, Busuioc M, Friedman C. Limited parsing of notational text visit notes: ad-hoc vs. NLP approaches. Proc AMIA Symp. 2000:51–5.

- 21. Lussier Y, Shagina L, Friedman C. Automating SNOMED coding using medical language understanding: a feasibility study. Proc AMIA Symp. 2001:418–22.

- 22. Hanauer DA, Englesbe MJ, Cowan JA Jr, Campbell DA. Informatics and the American College of Surgeons National Surgical Quality Improvement Program: automated processes could replace manual record review. J Am Coll Surg. 2009;208(1):37–41. doi: 10.1016/j.jamcollsurg.2008.08.030.

- 23. Miller GA, Beckwith R, Fellbaum C, Gross D, Miller K. WordNet Homepage. Available at: http://wordnet.princeton.edu. Accessed Mar 18, 2013.