Abstract

Semantic cognition requires conceptual representations shaped by verbal and nonverbal experience and executive control processes that regulate activation of knowledge to meet current situational demands. A complete model must also account for the representation of concrete and abstract words, of taxonomic and associative relationships, and for the role of context in shaping meaning. We present the first major attempt to assimilate all of these elements within a unified, implemented computational framework. Our model combines a hub-and-spoke architecture with a buffer that allows its state to be influenced by prior context. This hybrid structure integrates the view, from cognitive neuroscience, that concepts are grounded in sensory-motor representation with the view, from computational linguistics, that knowledge is shaped by patterns of lexical co-occurrence. The model successfully codes knowledge for abstract and concrete words, associative and taxonomic relationships, and the multiple meanings of homonyms, within a single representational space. Knowledge of abstract words is acquired through (a) their patterns of co-occurrence with other words and (b) acquired embodiment, whereby they become indirectly associated with the perceptual features of co-occurring concrete words. The model accounts for executive influences on semantics by including a controlled retrieval mechanism that provides top-down input to amplify weak semantic relationships. The representational and control elements of the model can be damaged independently, and the consequences of such damage closely replicate effects seen in neuropsychological patients with loss of semantic representation versus control processes. Thus, the model provides a wide-ranging and neurally plausible account of normal and impaired semantic cognition.

Keywords: semantic diversity, imageability, parallel distributed processing, semantic dementia, semantic aphasia

Our interactions with the world are suffused with meaning. Each of us has acquired a vast collection of semantic knowledge—including the meanings of words and the properties of objects—which is constantly called upon as we interpret sensory inputs and plan speech and action. In addition to storing such conceptual information in a readily accessible form, we must call upon different aspects of knowledge to guide behavior under different circumstances. The knowledge that books are heavy, for example, is irrelevant to most of our interactions with them but becomes important when one is arranging a delivery to a library. These twin, intertwined abilities—the representation of acquired knowledge about the world and the controlled, task-oriented use of this knowledge—we refer to as semantic cognition.

The representation of semantic knowledge has long been the target of statistical and computational modeling approaches. One popular perspective, prevalent in cognitive neuroscience, holds that representations of object concepts arise from associations between their key verbal and nonverbal properties (Barsalou, 1999; Damasio, 1989; Martin, 2016; Patterson, Nestor, & Rogers, 2007; Pulvermuller, 2001; Simmons & Barsalou, 2003; Tyler & Moss, 2001). Another, rooted in computational linguistics, holds that semantic representation develops through sensitivity to the distributional properties of word usage in language (Andrews, Vigliocco, & Vinson, 2009; Firth, 1957; Griffiths, Steyvers, & Tenenbaum, 2007; Jones & Mewhort, 2007; Landauer & Dumais, 1997; Lund & Burgess, 1996; Rohde, Gonnerman, & Plaut, 2006). To date, these two approaches have made limited contact with one another. However, as we will demonstrate in the present work, these approaches are mutually compatible and considerable theoretical leverage can be gained by combining them. The second element of semantic cognition—its flexible and controlled use—has been investigated extensively in functional neuroimaging, transcranial magnetic stimulation and neuropsychological studies (Badre & Wagner, 2002; Gold et al., 2006; Jefferies, 2013; Jefferies & Lambon Ralph, 2006; Robinson, Shallice, Bozzali, & Cipolotti, 2010; Thompson-Schill, D’Esposito, Aguirre, & Farah, 1997) but has rarely been incorporated formally into computational models.

In this article, we present an implemented computational model that synthesizes the two distinct approaches to semantic representation and, furthermore, we propose a mechanism by which control processes interact with the knowledge store. Our primary tests of this model were its ability: (a) to generate a unified account of semantic representation and control spanning concrete and abstract items; and (b) to account for the contrastive impairments observed in two neuropsychological syndromes, semantic dementia and semantic aphasia, which have been attributed to representational and control damage, respectively (Jefferies & Lambon Ralph, 2006; Rogers, Patterson, Jefferies, & Lambon Ralph, 2015). The main strengths of our model are (a) its ability to represent a range of semantic information, including the meanings of abstract as well as concrete words, in a perceptually embodied and context-sensitive format, and (b) its ability to regulate activation of this knowledge in a way that meets changing task demands.

The article is structured as follows. We begin by considering the key challenges in knowledge representation that motivated this work. We describe the architecture of the model and illustrate how it meets these challenges. We then move on to consider the important but neglected issue of semantic control and describe how we have implemented a controlled retrieval process, which interacts with the knowledge store to direct semantic processing in a task-appropriate fashion. With these representational and control elements in place, we next present three simulations of performance on semantic tasks. We demonstrate that damage to the model’s representations and control processes induces divergent patterns of performance that closely replicate those of patients with hypothesized deficits in these abilities. We conclude by considering implications for theories of the neural basis of semantic cognition and by noting some challenges for future work.

Part 1: Representation of Semantic Knowledge

In cognitive neuroscience, there is widespread agreement that verbal, sensory, and motor experience, and the brain regions that represent such information, play an integral role in conceptual representation (Allport, 1985; Barsalou, 2008; Binder & Desai, 2011; Kiefer & Pulvermuller, 2012; Martin, 2016; Paivio, 1986; Lambon Ralph, Jefferies, Patterson, & Rogers, 2017). This embodied semantics position is supported by functional neuroimaging studies indicating that particular sensory and motor processing regions are activated when people process concepts which are linked to them (Chao, Haxby, & Martin, 1999; Goldberg, Perfetti, & Schneider, 2006; Kellenbach, Brett, & Patterson, 2001; Martin, Haxby, Lalonde, Wiggs, & Ungerleider, 1995; Thompson-Schill, Aguirre, D’Esposito, & Farah, 1999) and by neuropsychological and neurostimulation studies that link impairments in sensory-motor (S-M) processing with deficits for particular classes of semantic knowledge (Campanella, D’Agostini, Skrap, & Shallice, 2010; Farah & McClelland, 1991; Pobric, Jefferies, & Lambon Ralph, 2010; Warrington & Shallice, 1984). For example, damage to frontoparietal regions involved in representing actions disproportionately affects the semantic representations of tools and other manipulable objects (Buxbaum & Saffran, 2002). The degree of embodiment varies across theories (Meteyard, Cuadrado, Bahrami, & Vigliocco, 2012), with the most strongly embodied approaches proposing little distinction between the processes involved in direct S-M experience and those involved in representing knowledge acquired from such experiences (e.g., Gallese & Lakoff, 2005). Other theories hold that activation of S-M information is necessary but not sufficient for semantic representation, and that an additional, transmodal layer of representation is also needed (Binder, 2016; Blouw, Solodkin, Thagard, & Eliasmith, 2015; Damasio, 1989; Mahon & Caramazza, 2008; Patterson et al., 2007; Simmons & Barsalou, 2003). This rerepresentation is thought to be necessary because the mapping between the observable properties of objects and their conceptual significance is complex and nonlinear. As such, the development of coherent, generalizable conceptual knowledge requires integration of information from multiple modalities through a shared transmodal hub (Lambon Ralph, Sage, Jones, & Mayberry, 2010).

Rogers et al. (2004) provided a demonstration of the importance of transmodal representation, in an implemented neural network model known as the hub-and-spoke model, which is the starting point for the present work. The model consisted of several sets of “spoke” units representing sensory and verbal elements of experience. There were also a set of hidden units (the hub) which did not receive external inputs but instead mediated between the various spokes. The model’s environment consisted of names, verbal descriptions, and visual properties for 48 different objects. When presented with a particular input (e.g., the name dog), it was trained to activate other forms of information associated with that concept (its visual characteristics and verbal description) by propagating activation through the hub. During training, a learning algorithm applied slow, incremental changes to the connections between units, such that over time the network came to activate the correct information for all of the stimuli. In so doing, it developed distributed patterns of activity over the hub units that represented each of the 48 concepts. The similarity structure among these representations captured the underlying, multimodal semantic structure present in the training set.

To test the model further, Rogers et al. (2004) progressively removed the hub unit connections, which resulted in increasingly impaired ability to activate the appropriate information for each concept. These impairments closely mimicked the deficits observed in patients with semantic dementia (SD). SD is a form of frontotemporal dementia in atrophy centered on the anterior temporal lobes accompanies a selective erosion of all semantic knowledge—verbal and nonverbal (Hodges & Patterson, 2007; Hodges, Patterson, Oxbury, & Funnell, 1992; Snowden, Goulding, & Neary, 1989). SD patients exhibit deficits across a wide range of tasks that require semantic knowledge, including naming pictures, understanding words, using objects correctly, and identifying objects from their tastes and smells (Bozeat, Lambon Ralph, Patterson, Garrard, & Hodges, 2000; Hodges, Bozeat, Lambon Ralph, Patterson, & Spatt, 2000; Luzzi et al., 2007; Piwnica-Worms, Omar, Hailstone, & Warren, 2010). Deficits in SD have long been considered to result from damage to a central store of semantic representations (Warrington, 1975). Damage to the hub component of the Rogers et al. (2004) model produced the same pattern of multimodal impairment.

The close correspondence between the deficits of SD patients and the performance of the damaged hub-and-spoke model suggest that damage to the transmodal “hub” is the root cause of these patients’ deficits. Indeed, the pervasive semantic deficits in SD have been linked with damage to, and hypometabolism of, one particular area of the cortex: the ventrolateral anterior temporal lobe (Butler, Brambati, Miller, & Gorno-Tempini, 2009; Mion et al., 2010). Investigations using functional neuroimaging, transcranial magnetic stimulation and intracranial recordings have all confirmed that this region is selectively involved in many forms of verbal and nonverbal semantic processing, as one would expect of a transmodal semantic hub (Humphreys, Hoffman, Visser, Binney, & Lambon Ralph, 2015; Marinkovic et al., 2003; Pobric, Jefferies, & Lambon Ralph, 2007; Shimotake et al., 2015; Visser, Jefferies, Embleton, & Lambon Ralph, 2012).

The hub-and-spoke model, with its commitment to the embodied view that S-M experience plays an important role in shaping semantic representation, provides a parsimonious account of a range of phenomena in normal and impaired semantic processing (Dilkina, McClelland, & Plaut, 2008; Patterson et al., 2007; Lambon Ralph et al., 2017; Rogers et al., 2004; Rogers & McClelland, 2004; Schapiro, McClelland, Welbourne, Rogers, & Lambon Ralph, 2013). Its core principle, that semantic knowledge requires interaction between modality-specific and supramodal levels of representation, is also integral to a number of other theories of semantic cognition (Allport, 1985; Binder & Desai, 2011; Damasio, 1989; Simmons & Barsalou, 2003) and has been employed in other connectionist models (Blouw et al., 2015; Garagnani & Pulvermüller, 2016; Plaut, 2002). There are, however, some critical and challenging aspects of semantic representation which have not been accommodated by these theories, and which we address in this work. First, the representation of abstract concepts is a significant challenge to embodied semantic theories (Binder & Desai, 2011; Leshinskaya & Caramazza, 2016; Meteyard et al., 2012; Shallice & Cooper, 2013). Because abstract words are not strongly linked with S-M experiences, it is unclear how a semantic system based on such experience would represent these concepts. A number of alternative accounts of abstract word knowledge have been put forward, which are not mutually exclusive. First, it is likely that some information about abstract words can be gleaned from the statistics of their use in natural language, an important mechanism that is central to our model and which we will consider in more detail shortly. Second, abstract words often refer to aspects of a person’s internal experiences, such as their emotions or cognitive states (Kousta, Vigliocco, Vinson, Andrews, & Del Campo, 2011; Vigliocco et al., 2014), and it is likely that these internally generated sensations make an important contribution to the representations of some abstract words. These influences were not a specific target of our model, though they are compatible with the approach we take. Finally, it has been suggested that, although abstract words do not represent S-M experiences directly, some abstract words might become grounded in this information through linkage with concrete situations with which they are associated (Barsalou, 1999; Pulvermüller, 2013). For example, the abstract word direction might become associated with S-M information related to pointing or to steering a car. However, it remains unclear exactly how abstract words might become associated with S-M experiences. In this study, we make an important advance on this issue by demonstrating how a neural network can learn to associate abstract words with S-M information indirectly, even if its training environment does not include such associations in any direct form.

The representation of associative relationships between items also represents a challenge to embodied semantic models that represent semantic structure in terms of similarity in S-M properties. Such models are highly sensitive to category-based taxonomic structure, because objects from the same taxonomic category (e.g., birds) typically share many S-M characteristics (e.g., have feathers, able to fly; Cree & McRae, 2003; Dilkina & Lambon Ralph, 2012; Garrard, Lambon Ralph, Hodges, & Patterson, 2001). In hub-and-spoke models, for example, as the units in the hub layer learn to mediate between different S-M systems, so objects with similar properties come to be represented by similar patterns of activation (Rogers et al., 2004). However, semantic processing is also strongly influenced by associative relationships between items that are encountered in similar contexts but may have very different properties (e.g., knife and butter; Alario, Segui, & Ferrand, 2000; Lin & Murphy, 2001; Perea & Gotor, 1997; Seidenberg, Waters, Sanders, & Langer, 1984). To represent these relationships, the semantic system must be sensitive to patterns of spatiotemporal co-occurrence among words and objects.

For this reason, some researchers have suggested that taxonomic and associative relations are represented in two distinct systems, rather than a single semantic hub (Binder & Desai, 2011; Mirman & Graziano, 2012; Schwartz et al., 2011). On this view, only the extraction of taxonomic, category-based semantic structure is served by the anterior temporal cortex (ATL). A separate system, linked with ventral parietal cortex (VPC), processes information about actions and temporally extended events and is therefore sensitive to associations between items. An alternative perspective, adopted in the present work, is that both types of relationship are represented within a single semantic space (Jackson, Hoffman, Pobric, & Lambon Ralph, 2015). To do so, the hub must be simultaneously sensitive to similarities in S-M properties and to temporal co-occurrence. As we shall go on to explain in more detail, this can be achieved by training the model to predict upcoming words on the basis of context, in addition to learning the S-M patterns associated with words. Previous computational work by Plaut and colleagues demonstrated that a single semantic system can simulate semantic priming effects for both taxonomic and associative relationships, through sensitivity to item co-occurrence as well as S-M similarity (Plaut, 1995; Plaut & Booth, 2000). That work focused on understanding the timing of access to semantic representations under different conditions. Our focus in the present work is on the structure of the learned semantic representations; we investigate whether the hub-and-spoke architecture develops sensitivity to both types of relationship within a single hub layer.

The final phenomenon we consider is that of context-sensitivity in the processing of meaning. Some words, termed homonyms, take on entirely different meanings when used in different situations (e.g., bark). Many more words are polysemous: Their meaning changes in a more subtle and graded fashion across the various contexts in which they appear (consider the change in the meaning of life in the two phrases “the mother gave him life” and “the judge gave him life”). While a number of implemented computational models have explored consequences of this ambiguity for lexical processing (Armstrong & Plaut, 2008; Hoffman & Woollams, 2015; Kawamoto, 1993; Rodd, Gaskell, & Marslen-Wilson, 2004), few have considered how context-dependent variation in meaning is acquired or how a contextually appropriate interpretation of a word is activated in any given instance. In order to address such issues, a model must have some mechanism for representing the context in which a particular stimulus is processed. Previous hub-and-spoke models were not developed with this in mind and thus has no such mechanism. Another class of connectionist models have, however, made progress on these issues. Simple recurrent networks process stimuli sequentially and include a buffering function, which allows the network to store the pattern of activity elicited by one input and use this to influence how the next input in the sequence is processed (Elman, 1990). In so doing, simple recurrent networks become highly sensitive to statistical regularities present in temporal streams of information, such as those found in artificial grammars or in sequences of letters taken from English sentences, and can make accurate predictions about upcoming items (Cleeremans, Servan-Schreiber, & McClelland, 1989; Elman, 1990). St. John and McClelland (1990) used a simple recurrent network to represent the meanings of sentences that were presented to the network as a series of individual constituents. Upon processing each constituent, the model was trained to make predictions about the content of the sentence as a whole. Following training, the same word could elicit radically different patterns of activity depending on the particular sentence in which it appeared. This model demonstrated that a simple recurrent network could acquire context-sensitive representations of the meanings of words. The potential value of recurrent networks in developing context-sensitive semantic representations has also been noted by other researchers (Yee & Thompson-Schill, 2016). In the present work, we harness this powerful computational mechanism by integrating it within a hub-and-spoke framework.

To summarize, a number of embodied semantic models hold that concepts are acquired as the semantic system learns to link various verbal and S-M elements of experience through an additional transmodal level of representation. This model is compatible with a range of empirical data but there are three key theoretical issues that remain unresolved. How does such a framework represent the meanings of abstract words? How does it represent associative relations between concepts? And what mechanisms would be necessary to allow its representations to vary depending on the context in which they occur? In tackling these questions, we took inspiration from a different tradition in semantic representation that provides a useful alternative perspective. The distributional semantics approach developed in computational linguistics and holds that patterns of lexical co-occurrence in natural language are key determinants of word meanings. Firth (1957) summarized this principle with the phrase “You shall know a word by the company it keeps.” Words that are frequently used in the same or similar contexts are assumed to have related meanings. Modern computing power has allowed this theory to be applied to large corpora of real-world language, with considerable success (Griffiths et al., 2007; Jones & Mewhort, 2007; Landauer & Dumais, 1997; Lund & Burgess, 1996). These statistical models represent words as high-dimensional semantic vectors, in which similarity between the vectors of words is governed by similarity in the contexts in which they are used. Similarity in contextual usage is assumed to indicate similarity in meaning. Representations derived in this way have been shown to be useful in predicting human performance across a range of verbal semantic tasks (Bullinaria & Levy, 2007; Jones & Mewhort, 2007; Landauer & Dumais, 1997).

The distributional semantics approach is well-suited to addressing the challenges in semantic representation we have already identified. Because it is based on linguistic and not S-M experiences, it is possible to code abstract words in exactly the same way as for concrete words. Because its representations are based on contextual co-occurrence, it is highly sensitive to associative relationships between concepts, irrespective of whether they share S-M properties (Hoffman, 2016). Finally, because its central tenet is that meaning is determined by context, it naturally allows for variation in meaning when the same words are used in different contexts (Kintsch, 2001; Landauer, 2001).

The distributional approach has come under heavy criticism because, unlike embodied approaches to semantics, it makes no connection with S-M experiences (Barsalou, 1999; Glenberg & Robertson, 2000). Because the representation of each word is determined solely by its relationships with other words, the system as a whole lacks grounding in the external world. The distributional account would thus seem to provide no insights into the considerable neuroscientific evidence for S-M embodiment of semantic knowledge. Recently, however, some promising efforts have been made to modify distributional models so that they take into account information about S-M properties as well as the statistics of lexical co-occurrence (Andrews et al., 2009; Durda, Buchanan, & Caron, 2009; Johns & Jones, 2012; Steyvers, 2010). These have, for example, shown that S-M properties of concrete words can be accurately inferred by analyzing their patterns of lexical co-occurrence with other words whose S-M characteristics are already known (Johns & Jones, 2012). In addition, a number of researchers have advocated a hybrid view of semantic representation in which embodied and distributional aspects both play a role (Barsalou, Santos, Simmons, & Wilson, 2008; Dove, 2011; Louwerse & Jeuniaux, 2008; Vigliocco, Meteyard, Andrews, & Kousta, 2009). We took a similar position in developing our model.

In the present study, one of our key goals was to develop a connectionist model that combined the distributional approach with the principle of embodiment in S-M experience. Critically, we implemented this synthesis within the hub-and-spoke conceptual framework, which has proved successful in addressing other aspects of semantic representation. In so doing, we addressed another, perhaps more basic limitation of the distributional approach, namely that it provides minimal insights into the mechanisms underpinning acquisition of conceptual knowledge. We will take the most well-known statistical model, latent semantic analysis (Landauer & Dumais, 1997), as an example. This technique involves the construction of a large matrix of word occurrence frequencies, aggregating data from a corpus of several million words. When this matrix has been fully populated, it is subjected to singular value decomposition in order to extract the latent statistical structure thought to underpin semantic knowledge. While the resulting representations appear to bear useful similarities to human semantic knowledge, this process by which they are derived bears little relation to the way in which conceptual knowledge is acquired by humans. Children do not accumulate vast reserves of data about which words they have heard in which contexts, only to convert these data into semantic representations once they have been exposed to several million words. In reality, acquisition of conceptual knowledge is a slow, incremental process, in which knowledge is constantly updated on the basis of new experiences (McClelland, McNaughton, & O’Reilly, 1995). Some researchers have addressed this concern, proposing distributional models in which representations are gradually updated online as linguistic information is processed (Jones & Mewhort, 2007; Rao & Howard, 2008). Nevertheless, the distributional approach to semantic knowledge has yet to be integrated with neurally inspired embodied approaches to semantic cognition.

In this article, we present a model that simultaneously assimilates the embodied and distributional approaches to semantic representation. The basic tenet of the model is that semantic knowledge is acquired as individuals learn to map between the various forms of information, verbal and nonverbal, that are associated with particular concepts (Patterson et al., 2007; Lambon Ralph et al., 2017; Rogers et al., 2004; Rogers & McClelland, 2004). The “hub” that mediates these interactions develops representations that code the deeper, conceptual relationships between items. To this framework, we have added the distributional principle, which holds that sensitivity to context and to the co-occurrence of items is an important additional source of semantic information. To achieve this synthesis, we added two ingredients to the model. The first was a training environment in which concepts are processed sequentially and in which the co-occurrence of concepts in the same sequence is indicative of a semantic relationship between them. The second was a buffering function, inspired by work with simple recurrent networks (Elman, 1990; St. John & McClelland, 1990), that allowed the model’s hub to be influenced by its own previous state. To encourage the model to become sensitive to item co-occurrences, upon processing each stimulus, it was trained to predict the next item in the sequence. This is in tune with the widely held view that prediction is an important mechanism in language processing (Altmann & Kamide, 2007; Dell & Chang, 2013; Pickering & Garrod, 2007) and with recent interest in the use of predictive neural networks to learn distributed representations of word meaning (e.g., Mikolov, Chen, Corrado, & Dean, 2013).

The Model

Overview

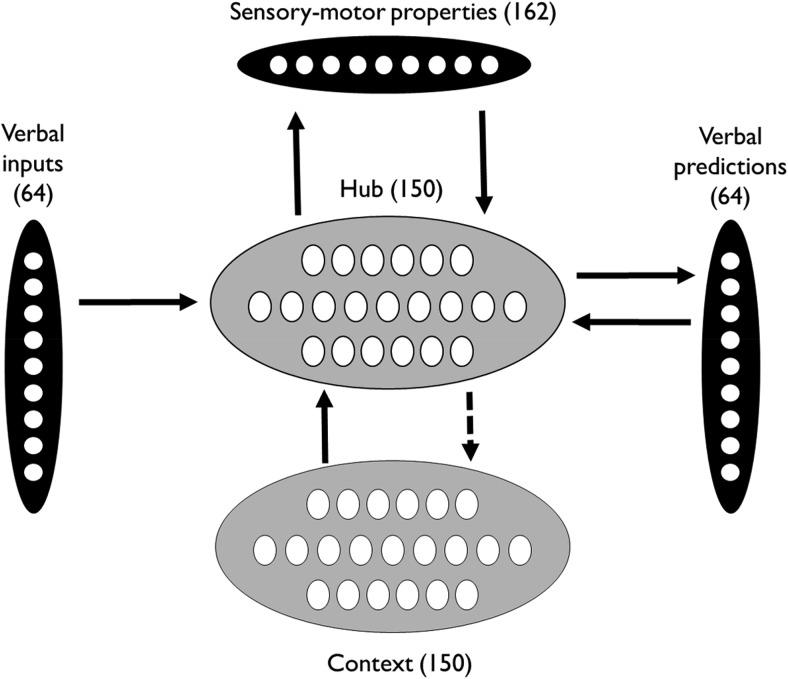

The model is shown in Figure 1. Inputs are presented to the model sequentially. Inputs may be verbal, analogous to hearing words, or they may be constellations of S-M properties, analogous to interaction with objects in the environment. The model learns to perform two tasks simultaneously in response to these inputs. First, following the presentation of each stimulus, it is required to make predictions about which word will appear next in the sequence, taking into account recent context. Second, when presented with a concrete word as a stimulus, it is also required to activate the S-M properties of the word’s referent.

Figure 1.

Architecture of the representational model. Black layers comprise visible units that receive inputs and/or targets from the environment. Gray layers represent hidden units. Solid arrows indicate full, trainable connectivity between layers. The dashed arrow represents a copy function whereby, following processing of a stimulus, the activation pattern over the hub layer is replicated on the context layer where it remains to act as the context for the next stimulus.

Architecture

The model is a fully recurrent neural network, consisting of 590 units organized into five pools. Sixty-four verbal input units represent the 64 words in the model’s vocabulary. Activation of these units is controlled by external input from the environment. In contrast, the 64 verbal prediction units never receive external inputs, but are used to represent the model’s predictions about the identity of the next word in the sequence. There are 162 units representing S-M properties. These can either activated externally, representing perception of an object in the environment, or they can be activated by the model in the course of processing a particular verbal input. This latter process can be thought of as a mental simulation of the properties of an object upon hearing its name.

The connections between the three layers are mediated by 150 hidden units, known collectively as the “hub.” Activation patterns over the hub layer are not specified directly by the modeler and are instead shaped by the learning process. As the hub is trained to map between verbal inputs, verbal predictions and S-M properties, it develops patterns of activation that reflect the statistics underlying these mappings. Words that are associated with similar verbal predictions and/or similar S-M properties come to be represented by similar activation patterns in the hub.

Finally, at each step in the sequence, the 150 context units are used to store a copy of the hub activations elicited by the previous input (see Processing section for more detail). This information is an additional source of constraint on the hub, allowing its processing of each input to be influenced by the context in which it occurs.

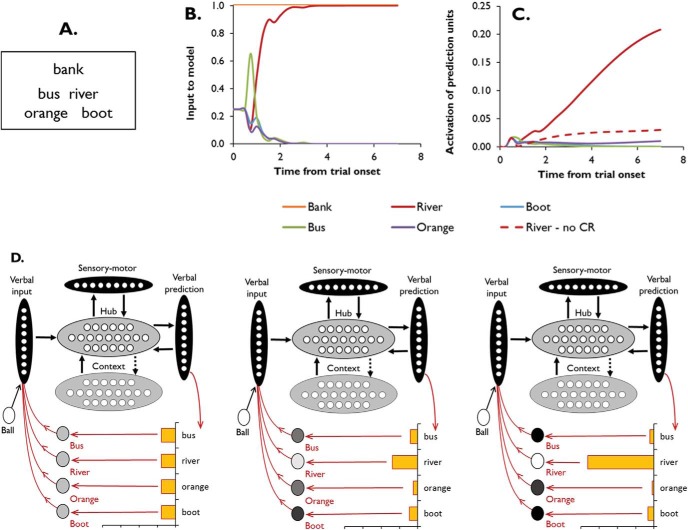

Processing

The model is presented with sequences of stimuli consisting of words and S-M properties, arranged in “episodes” of five inputs. An example episode is shown in Figure 2. As we were primarily concerned with comprehension of individual words, sequences have no syntactic structure and consist entirely of nouns. The word sequences therefore do not represent sentences as such; instead, they represent a series of concepts that one might encounter while listening to a description of an event or a scene. At some points in the sequence, a set of S-M properties representing a particular concrete object is presented in lieu of a word. This reflects the fact that when we are listening to a verbal statement, we often simultaneously observe objects in the environment that are relevant to the topic under discussion. In the model, this concurrent experience of verbal and nonverbal stimuli is implemented as a sequential process, with the nonverbal perceptions interspersed within the verbal stream.

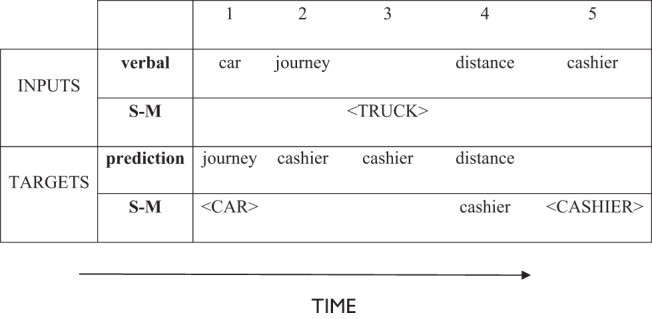

Figure 2.

An example episode. The 10 inputs for the episode are shown from left to right, along with the targets provided at each point. For example, at the first point in this sequence, the verbal input unit for car is activated and the model is trained to turn on the S-M units associated with cars and the prediction unit for journey (as this is the next item in the sequence). <ITEM > represents the S-M properties of a concrete item.

Each stimulus is processed for seven time steps, with unit activations updated four times in each time step. To present the model with a word, the corresponding verbal input unit is clamped on for the full seven time steps and activation is allowed to propagate through the rest of the network. No direct input is provided to the prediction or S-M units; instead, their activity develops as a consequence of the flow of activation through the network in response to the word. At the end of this process, the activation states of the prediction and S-M units can be read off as the model’s outputs. Once fully trained, the model produces a pattern of activation over its prediction units that represents its expectation about the identity of the next word, given the word just presented and the preceding context. Activation of S-M units represents the S-M properties that the model has come to associate with the presented word.

During the training phase, the model is presented with targets that are used to influence learning. During the final two time steps for each stimulus, it receives targets on the prediction layer and, optionally, on the S-M layer. The prediction unit representing the next word in the episode is given a target value of one (all other prediction units have targets of zero). When the input is a concrete word or homonym, the model is also given S-M targets corresponding to the S-M properties of the word’s referent. If the input is an abstract word, no S-M targets are provided and the model is free to produce any pattern of activity over the S-M units. The actual activation patterns over the prediction and S-M layers are compared with their targets so that errors can be calculated and the connection weights throughout the network adjusted by back-propagation (see training and other model parameters). When abstract words are presented, there are no targets on the S-M units.

When the model is presented with a S-M pattern as stimulus, the process is similar. The S-M units are clamped for the full seven time steps and the verbal input units are clamped at zero. Activation propagates through the network and targets are provided for the prediction layer during the final two time steps. The prediction target again represents the next word in the episode.

Following the processing of each stimulus, the activation values of the hub units are copied over to the context units. The context units are then clamped with this activation pattern for the duration of the next stimulus. The context units provide an additional input to the hub layer, allowing it to be influenced by its previous state. This recurrent architecture allows the model to develop representations that are sensitive to context.

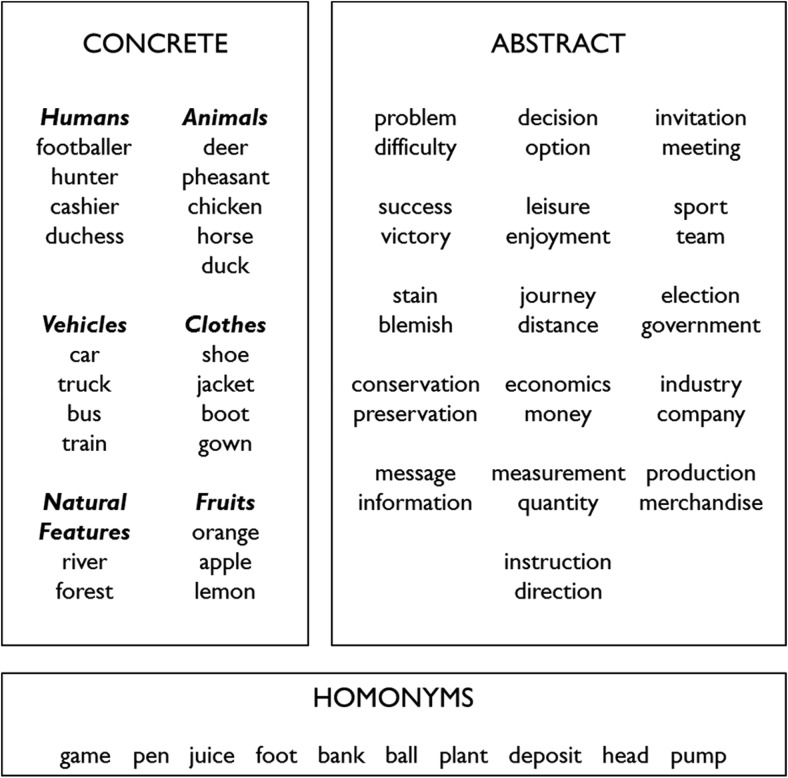

Model vocabulary

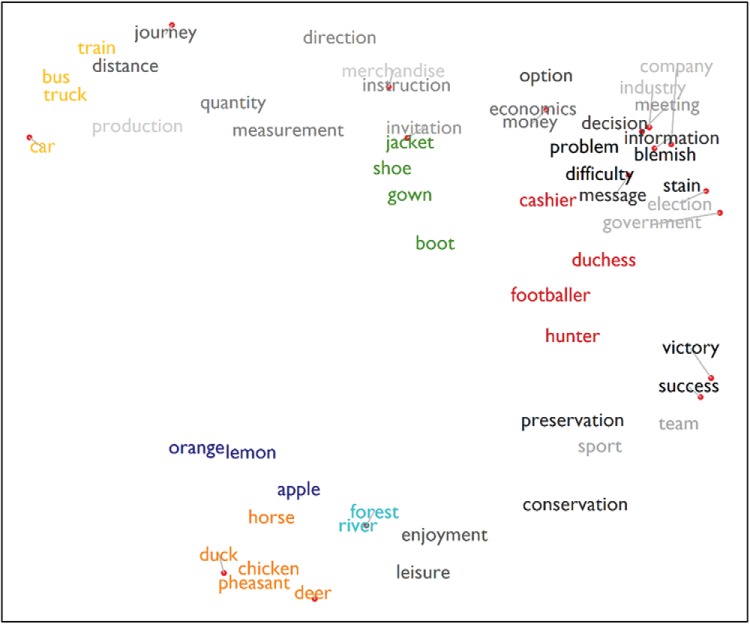

In common with other connectionist approaches to semantics, the model was trained in a simplified artificial environment designed to capture the key features of semantic processing that are relevant to our goals. The 64 concepts in the model’s vocabulary comprise 22 concrete concepts, 32 abstract concepts, and 10 homonyms (see Figure 3). The concrete and abstract words were used to investigate how knowledge for abstract concepts could become embodied in S-M experience (see below and Simulation 2). The concrete words were also used to explore the model’s ability to represent taxonomic and associative semantic relationships (Simulation 3). The homonyms, which we define as words that have two meanings associated with distinct contexts, were used to investigate the model’s sensitivity to context (Simulation 1).

Figure 3.

The model’s vocabulary.

S-M properties

The 162 S-M units represent the sensory and motor properties of objects. Many studies have investigated how the structure of S-M properties varies across different categories of object (e.g., Cree & McRae, 2003; Garrard et al., 2001) and insights from these studies have been incorporated into models that seek to explain dissociations between particular categories (e.g., Farah & McClelland, 1991; Tyler, Moss, Durrant-Peatfield, & Levy, 2000). Such effects were not germane in the present study so we only implemented the most robust general finding in this domain: that members of the same category tend to share more S-M properties than items from different categories. Concrete concepts were organized into six taxonomic categories (see Figure 3). Each item was associated with six properties that it shared with its category neighbors and three that were unique to that item. Abstract concepts were not assigned S-M properties, on the basis that these concepts are not linked directly with specific S-M experiences. In natural language, the meanings of homonyms can be either concrete or abstract. In the model, we assumed for simplicity that all homonyms had concrete meanings.1 We assigned two different sets of S-M properties to each homonym, corresponding to each of its meanings. Each set consisted of six properties shared with other concrete concepts and three properties unique to that meaning.

Training corpus

Our construction of a training corpus for the model was inspired by a particular class of distributional semantic models known as topic models (Griffiths et al., 2007). These models assume that samples of natural language can be usefully represented in terms of underlying topics, where a topic is a probability distribution over a particular set of semantically related words. To generate a training corpus for our model, we constructed 35 artificial topics. An example topic is shown in Figure 4. Each topic consisted of a list of between 10 and 19 concepts that might be expected to be used together in a particular context. There was also a probability distribution that governed their selection. The construction of topics was guided by the following constraints:

-

1

Topics were composed of a mixture of concrete, abstract and homonym concepts (although two topics, ELECTION and REFERENDUM, featured only abstract concepts).

-

2

Abstract concepts were organized in pairs with related meanings (see Figure 3). Word pairs with related meanings frequently occurred in the same topics, in line with the distributional principle. That is, words with related meanings had similar (but not identical) probability distributions across the 35 topics. For example, journey and distance could co-occur in seven different topics, but with differing probabilities, and there were an additional five topics in which one member of the pair could occur but the other could not.

-

3

Concrete concepts belonging to the same category frequently occurred in the same topics, in line with distributional data from linguistic corpora (Hoffman, 2016) and visual scenes (Sadeghi, McClelland, & Hoffman, 2015). In addition, particular pairs of concrete concepts from different categories co-occurred regularly in specific topics (e.g., deer and hunter both appeared with high probability in the HUNTING topic). This ensured that the corpus included associative relationships between items that did not share S-M properties.

-

4

Each homonym occurred in two disparate sets of topics. For example, bank regularly occurred in the FINANCIAL topic, representing its dominant usage, but also occasionally in the RIVERSIDE topic, representing its subordinate meaning.2

Figure 4.

Example topic distributions. Concepts with S-M features are shown in italics. The PETROL STATION topic was used to generate the episode shown in Figure 2.

Some additional constraints, required for Simulation 2, were also included and are described as they become relevant.

The topics were used to generate episodes consisting of 5 stimuli. To generate an episode, a topic was first chosen in a stochastic fashion, weighted such that eight particular topics were selected five or 10 times more often than the others. This weighting ensured that some concepts occurred more frequently than others (necessary for Simulation 2). Next, a concept was sampled from the probability distribution for the chosen topic. If a concrete concept or homonym was chosen, it was presented either verbally or as a S-M pattern (with equal probability). For concrete words, the S-M pattern used was always the same. For homonyms, the S-M pattern varied depending on whether the word was being used in its dominant or subordinate sense. Another concept was then sampled and the process continued until a sequence of five stimuli had been generated. The same concept could be sampled multiple times within an episode.

A total of 400,000 episodes were generated in this fashion; this served as the training corpus for the model. The corpus was presented as a continuous stream of inputs to the model, so there was no indication of when one episode ended and the next began. On the last stimulus for each episode, however, no prediction target was given to the model.

Training and other model parameters

Simulations were performed using the Light Efficient Network Simulator (Rohde, 1999). The network was initialized with random weights that varied between −0.2 and 0.2. All units were assigned a fixed, untrainable bias of −2, ensuring that they remained close to their minimum activation level in the absence of other inputs. Activation of the hub units and S-M units was calculated using a logistic function. Error on the S-M units was computed using a cross-entropy function. As the prediction units represented a probability distribution, their activation was governed by a soft-max function which ensured that their combined activity always summed to one. These units received a divergence error function.

The model was trained with a learning rate of 0.1 and momentum of 0.9, with the condition that the premomentum weight step vector was bounded so that its length could not exceed one (known as “Doug’s momentum”). Error derivatives were accumulated over stimuli and weight changes applied after every hundredth episode. Weight decay of 10−6 was applied at every update. The model was trained for a total of five passes through the corpus (equivalent to 20,000 weight updates, two million episodes, or 10 million individual stimuli).

Ten models were trained in this way, each with a different set of random starting weights. All the results we present are averaged over the 10 models.

Results: Representational Properties of the Model

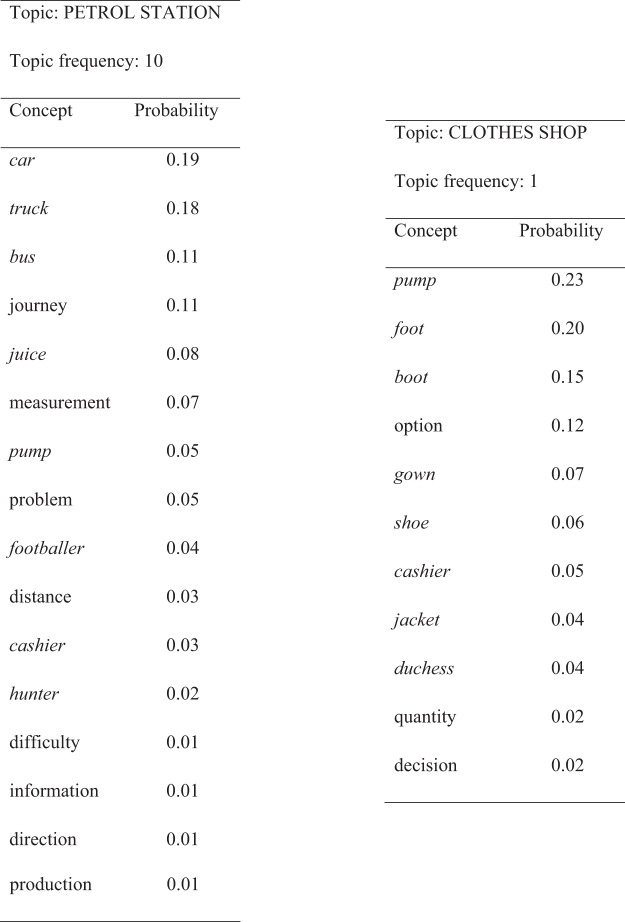

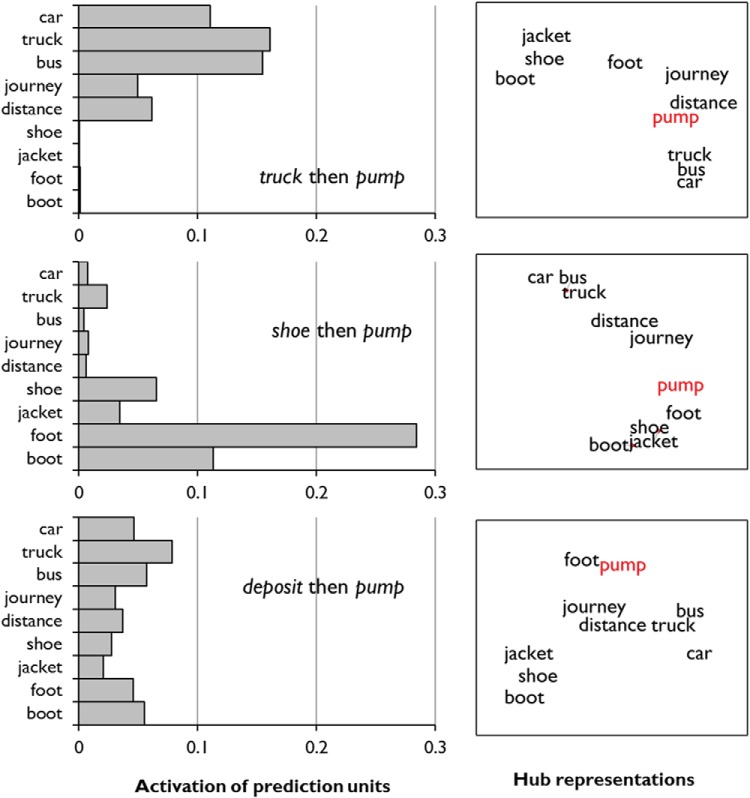

Context-sensitivity

Once trained, the model is able to take a word as input and predict which other words it is likely to encounter subsequently. Due to its recurrent architecture, these predictions are shaped by the context in which the word is presented. To illustrate this, we presented the word pump to the model immediately after one of three other words. Two of these words, truck and shoe, represent the two disparate types of context in which pump appeared during training. The third, deposit represents a novel context. The left-hand panel of Figure 5 shows activation of some of the network’s prediction units in each context. The model demonstrates context-sensitivity, appropriately biasing its predictions toward petrol-related words in the first case and clothing-related words in the second. When the word appears in a novel context, the model hedges its bets and assigns intermediate probabilities to both types of word.

Figure 5.

Context-sensitive representation of the word pump. The model was presented with pump immediately following either truck, shoe, or deposit. Results are averaged over 50 such presentations. Left: Activation of prediction units, indicating that the model’s expectations change when the word appears in these different contexts. Right: Results of multidimensional scaling analyses performed on the hub representations of words presented in each context. In these plots, the proximity of two words indicates the similarity of their representations over the hub units (where similarity is measured by the correlation between their activation vectors). The model’s internal representation of pump shifts as a function of context.

The model is able to shift its behavior in this way because the learned representations over the hub layer are influenced by prior context. This is illustrated in the right-hand panel of Figure 5, which represents graphically the relationships between the network’s representations of particular words in the three different contexts. We presented the network with various words, each time immediately after one of the three context words, and recorded the pattern of activity over the hub units. We performed multidimensional scaling on these representations, so that each word could be plotted in a two-dimensional space in which the proximities of words indicates the degree of similarity in their hub representations. When presented in the context of truck, the model’s representation of pump is similar to that of journey, distance and other petrol-related words. Conversely, when pump is presented after shoe, the model generates an internal representation that is similar to that of foot and other items of clothing. In a novel context, the pump representation lies in the midst of these two sets. In other words, by including context units that retain the network’s previous states, the model has developed semantic representations for words that take into account the context in which they are being used. This context-dependence is a key feature of models with similar recurrent architectures (Elman, 1990; St. John & McClelland, 1990).

It is worth noting that these context-dependent shifts in representation are graded and not categorical. In other words, the model’s representation of a word’s meaning varies continuously as a function of the context in which it is being used. This graded variation in representation is consistent with a proposition from the distributional semantics approach, which holds that any two uses of the same word are never truly identical in meaning. Instead, their precise connotation depends on their immediate linguistic and environmental context (Cruse, 1986; Landauer, 2001). This means that, in addition to homonyms, the model is well-suited to the representation of polysemous words, whose meanings change more subtly when they are used in different contexts. We consider this aspect of the model in more detail in Simulation 2, where we simulate the effects of semantic diversity on comprehension (Hoffman, Lambon Ralph, & Rogers, 2013b; Hoffman, Rogers, & Lambon Ralph, 2011b).

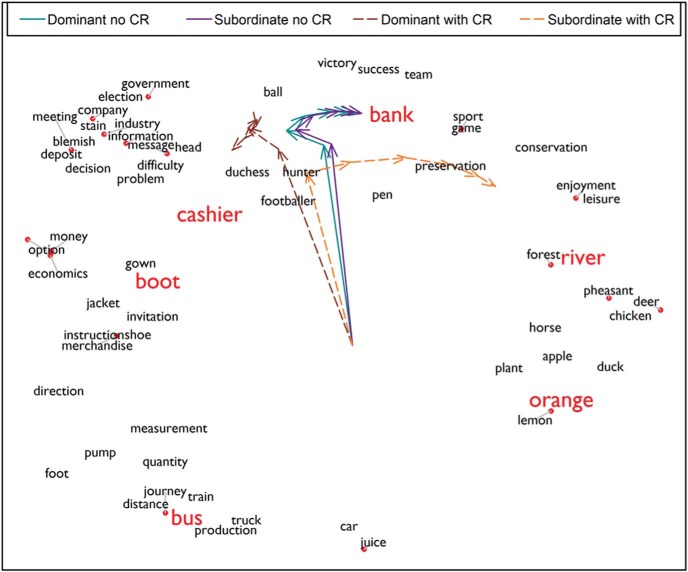

Representation of abstract words and taxonomic and associative semantic structure

A key feature of the model is that all concepts, concrete and abstract, are associated with characteristic patterns of activity over the same hub units and are therefore represented in a common semantic space. To explore the characteristics of this space, we performed multidimensional scaling on the hub’s representations of all concrete and abstract words. In this case, we were interested in the general structure of the semantic space, independent of any specific context. We therefore presented each word to the network 64 times, each time preceded by a different word from the model’s vocabulary. To obtain context-independent representations for each word, we averaged the activation patterns elicited on the hub units over these 64 presentations. The resulting activation patterns for all words were used to compute a pairwise distance matrix between words. The process was repeated 50 times and the averaged distance matrix was used to generate the multidimensional scaling plot shown in Figure 6.

Figure 6.

Hub representations of concrete and abstract concepts. Concrete concepts are color-coded by category. Abstract concepts are shown in greyscale, where shading indicates pairs of semantically related words.

The model acquires internal representations that allow it to generate appropriate patterns of activity over the S-M and prediction units. As a consequence, words that are associated with similar S-M features come to be associated with similar hub representations, as do those that elicit similar predictions about upcoming words. Several consequences of this behavior are evident in Figure 6.

-

1

Taxonomic structure emerges as an important organizational principle for concrete words. There are two reasons why the model learns this representational structure. First, concrete items from the same category share a number of S-M features. Second, items from the same category regularly occur in the same contexts and are therefore associated with similar predictions about which words are likely to appear next.

-

2

Abstract words that occur in similar contexts have similar representations. The corpus was designed such that particular pairs of abstract words frequently co-occurred (see Figure 2). In Figure 6, it is clear that these pairs are typically close to one another in the network’s learned semantic space. When the model is presented with abstract words, it is only required to generate predictions; therefore, the representation of abstract words is governed by the distributional principle. Words that frequently occur in the same contexts come to have similar semantic representations because they generate similar predictions.

-

3

The units in the hub make no strong distinction between concrete and abstract words. Concrete and abstract words can be represented as similar to one another if they occur in similar contexts (e.g., journey and distance and the vehicles). Of course, concrete and abstract words are more strongly distinguished in the S-M units, where only concrete words elicit strong patterns of activity (though abstract words come to generate some weaker activity here too; see below).

-

4

Associative relationships between concrete items are also represented. Although taxonomic category appears to be the primary organizing factor for concrete concepts, the structure of these items also reflects conceptual co-occurrence. For example, the fruits, plants, and animals are all close to one another because they regularly co-occur in contexts relating to the outdoors/countryside (in addition, some of the animals and fruits co-occur in cooking contexts).

To investigate the degree to which the model acquired associative as well as taxonomic relationships, we performed further analyses on pairwise similarities between the hub representations of the concrete items. The mean similarity between item pairs from the same category was 0.44 (SD = .061) while for between-category pairs it was 0.01 (SD = .056; t(229) = 40.5, p < .001). This confirms our assertion that items from the same category have much more similar representations than those from different categories. To investigate the effect of associative strength on representational similarity, we considered the between-category pairs in more detail. We defined the associative strength A between two words x and y as follows:

Where Nxy indicates the number of occasions x was immediately followed by y in the training corpus, Nyx is the number of times y was followed by x and Nx and Ny represent the total number of occurrences of x and y, respectively. There was a significant positive correlation between the associative strength of two items and the similarity of their hub representations, ρ(199) = 0.39, p < .001. In other words, the more frequently two items occur together during training, the more likely the model is to represent them with similar patterns in the hub. The average similarity for strongly associated between-category pairs (defined with an arbitrary threshold of A > 0.07) was 0.10 (SD = .08).

Acquired embodiment of abstract concepts

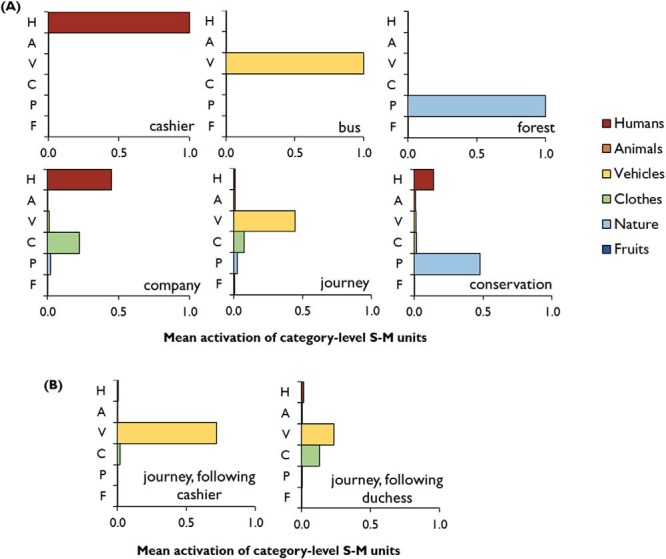

As discussed earlier, the representation of abstract concepts is a contentious issue. Some researchers have suggested that knowledge of abstract words is derived solely through their use in language. Others have argued that abstract concepts must be grounded in perceptual experience (e.g., Barsalou, 1999) but it is not clear how such grounding would take place. When being trained to process abstract words, our model only receives verbal distributional information; it is not trained to associate abstract words with S-M experiences. However, abstract words come to be linked to S-M information by virtue of their associations with concrete words—a process we refer to as “acquired embodiment.” Figure 7A provides some examples of this. We have plotted activations for the S-M features shared by all members of a category when the network is presented with some representative concrete and abstract words. For concrete words, the network is trained to activate the S-M features of the item whenever it is encountered. Each of the concrete words therefore elicits a clear, binary pattern of S-M activation. For abstract words, the S-M units do not receive any targets during training, in line with the idea that abstract concepts are not directly associated with S-M experiences. The activity of these units is entirely unconstrained by the learning process. As seen in Figure 7A, however, when presented with abstract words, the network comes to partially activate the S-M features of the concrete items with which they regularly co-occur. For example, journey elicits partial activation of the S-M features of vehicles and company partially activates the features of humans.

Figure 7.

S-M unit activations for a selection of concrete and abstract words. (A) Activations of S-M units shared by the members of each category, in response to a selection of words. Each word was presented to the network 50 times (with a different random pattern of activity on the context units) and the results averaged to generate this figure. (B) Activation of S-M units in response to the same abstract word in two different contexts.

This acquired embodiment is an emergent consequence of the requirement for the model to represent the statistics of conceptual co-occurrence and S-M experience in a single system. As we saw earlier, the model represents concrete and abstract words in a single semantic space and both can elicit similar patterns of activity on the hub layer if they are associated with similar verbal predictions. For example, journey has a similar representation to bus because both words are found in contexts in which words like car, distance, and pump are likely to occur. Because the activity of the S-M units is determined by the inputs they receive from the hub units, words with similar hub representations generate similar patterns of S-M activity. So journey comes to partially activate vehicular S-M features as a by-product of its regular co-occurrence with vehicle names.

A number of alternative modeling approaches have also merged S-M information with distributional statistics from natural language (Andrews et al., 2009; Durda et al., 2009; Steyvers, 2010) and have shown how S-M knowledge linked with a particular word can be indirectly extended to its lexical associates (Johns & Jones, 2012). One important way in which our model differs from these other approaches is that, in our model, the embodiment of abstract words is context-dependent. This is illustrated in Figure 7B, which shows the different S-M activations elicited by the same abstract words in two different contexts. When journey occurs immediately after cashier, vehicle S-M units are strongly activated because journey and cashier regularly co-occur in contexts in which modes of transport are discussed. In contrast, journey presented after duchess elicits only weak activation because in the topics in which these two words co-occur, vehicles are rarely. Thus, the type of S-M information activated by abstract words depends on the particular context in which they appear, which is consistent with data showing that context affects the types of S-M knowledge participants retrieve in response to words (Wu & Barsalou, 2009).

Summary

In this section, we have described how our model acquires semantic representations under the simultaneous pressure to predict upcoming words based on preceding context (thus learning the distributional properties of the language) and to associate concrete words with S-M experiences (thus embodying conceptual knowledge in the physical world). Importantly, both of these challenges are met by a single set of “hub” units, whose activation patterns come to represent the underlying semantic structure of the concepts processed by the model. We have demonstrated that this architecture has a number of desirable characteristics. The recurrent architecture allows the network’s predictions about upcoming words to be influenced by prior context. As a consequence, the model’s internal representations of specific concepts also vary with context. This is an important property, because most words are associated with context-dependent variation in meaning (Cruse, 1986; Klein & Murphy, 2001; Rodd, Gaskell, & Marslen-Wilson, 2002). Second, the model represents concrete and abstract words in a single representational space, and is sensitive to associative semantic relationships as well as those based on similarity in S-M features. This is consistent with neuroimaging and neuropsychological evidence indicating that all of these aspects of semantic knowledge are supported by the transmodal “hub” cortex of the ventral anterior temporal lobes (Hoffman, Binney, & Lambon Ralph, 2015; Hoffman, Jones, & Lambon Ralph, 2013a; Jackson et al., 2015; Jefferies, Patterson, Jones, & Lambon Ralph, 2009). Finally, the model provides an explicit account of how abstract words can become indirectly associated with S-M information by virtue of their co-occurrence with concrete words. This process of acquired embodiment demonstrates how representations of abstract words based on the distributional principle can become grounded in the physical world.

At the outset of this article, we stated that a comprehensive theory of semantic cognition requires not only an account of how semantic knowledge is represented but also how it is harnessed to generate task-appropriate behavior. In the next section, we turn our attention to this second major challenge: The need for control processes that regulate how semantic information is activated to complete specific tasks.

Part 2: Executive Regulation of Semantic Knowledge

The semantic system holds a great deal of information about any particular concept and different aspects of this knowledge are relevant in different situations. Effective use of semantic knowledge therefore requires that activation of semantic knowledge is shaped and regulated such that the most useful representation for the current situation comes to mind. An oft-quoted example is the knowledge required to perform different tasks with a piano (Saffran, 2000). When playing a piano, the functions of the key and pedals are highly relevant and must be activated in order to guide behavior. However, when moving a piano, this information is no longer relevant and, instead, behavior should be guided by the knowledge that pianos are heavy, expensive and often have wheels. The meanings of homonyms are another case that is germane to the present work. When a homonym is processed, its distinct meanings initially compete with one another for activation and this competition is thought to be resolved by top-down executive control processes, particularly when context does not provide a good guide to the appropriate interpretation (Noonan, Jefferies, Corbett, & Lambon Ralph, 2010; Rodd, Davis, & Johnsrude, 2005; Zempleni, Renken, Hoeks, Hoogduin, & Stowe, 2007).

These top-down regulatory influences are often referred to as semantic control (Badre & Wagner, 2002; Jefferies & Lambon Ralph, 2006) and are associated with activity in a neural network including left inferior frontal gyrus, inferior parietal sulcus and posterior middle temporal gyrus (Badre, Poldrack, Paré-Blagoev, Insler, & Wagner, 2005; Bedny, McGill, & Thompson-Schill, 2008; Noonan, Jefferies, Visser, & Lambon Ralph, 2013; Rodd et al., 2005; Thompson-Schill et al., 1997; Whitney, Kirk, O’Sullivan, Lambon Ralph, & Jefferies, 2011a, 2011b; Zempleni et al., 2007). One long-standing source of evidence for the importance of semantic control comes from stroke patients who display semantic deficits following damage to these areas (Harvey, Wei, Ellmore, Hamilton, & Schnur, 2013; Jefferies, 2013; Jefferies & Lambon Ralph, 2006; Noonan et al., 2010; Schnur, Schwartz, Brecher, & Hodgson, 2006). These patients, often termed semantic aphasics (SA, after Head, 1926), present with multimodal semantic impairments but, unlike the SD patients described earlier, their deficits have been linked with deregulated access to semantic knowledge rather than damage to the semantic store itself. Moreover, these patients’ performance on semantic tasks is strongly influenced by the degree to which the task requires executive regulation and the severity of their semantic impairments is correlated with their deficits on nonsemantic tests of executive function (which is not the case in SD; Jefferies & Lambon Ralph, 2006). Indeed, there is ongoing debate as to the degree to which semantic control recruits shared executive resources involved in other controlled processing in other domains (we consider this in the General Discussion).

Semantic control deficits have been linked with the following problems.

-

1

Difficulty tailoring activation of semantic knowledge to the task at hand. This is evident in picture naming tasks, in which SA patients frequently give responses that are semantically associated with the pictured object but are not its name (e.g., saying “nuts” when asked to name a picture of a squirrel; Jefferies & Lambon Ralph, 2006). In category fluency tasks, patients are also prone to name items from outside the category being probed (Rogers et al., 2015).

-

2

Difficulty selecting among competing semantic representations. SA patients perform poorly on semantic tasks that require selection among competing responses, particularly when the most obvious or prepotent response is not the correct one (Jefferies & Lambon Ralph, 2006; Thompson-Schill et al., 1998). This problem is also evident in the “refractory access” effects exhibited by this group, in which performance deteriorates when competition between representations is increased by presenting a small set of semantically related items rapidly and repeatedly (Jefferies, Baker, Doran, & Lambon Ralph, 2007; Warrington & Cipolotti, 1996). These deficits are thought to reflect impairment in executive response selection mechanisms.

-

3

Difficulty identifying weak or noncanonical semantic associations. SA patients find it difficult to identify weaker semantic links between concepts (they can identify necklace and bracelet as semantically related but not necklace and trousers; Noonan et al., 2010). They have difficulty activating the less frequent meanings of homonyms (see Simulation 1). In the nonverbal domain, SA patients have difficulty selecting an appropriate object to perform a task when the canonical tool is unavailable (e.g., using a newspaper to kill a fly in the absence of a fly swat; Corbett, Jefferies, & Lambon Ralph, 2011). These results may indicate deficits in top-down “controlled retrieval” processes that regulate semantic activation in the absence of strong stimulus-driven activity (see below).

-

4

High sensitivity to contextual cues. Performance on verbal and nonverbal semantic tasks improves markedly when patients are provided with external cues that boost bottom-up activation of the correct information, thus reducing the need for top-down control (Corbett et al., 2011; Hoffman, Jefferies, & Lambon Ralph, 2010; Jefferies, Patterson, & Lambon Ralph, 2008; Soni et al., 2009). For example, their comprehension of the less common meanings of homonyms (e.g., bank-river) improves when they are provided with a sentence that biases activation toward the appropriate aspect of their meaning (e.g., “They strolled along the bank;” Noonan et al., 2010). These findings indicate that these individuals retain the semantic representations needed to perform the task but lack the control processes necessary to activate them appropriately.

Despite the importance of control processes in regulating semantic activity, this aspect of semantic cognition has rarely been addressed in computational models. Where efforts have been made, these have been based on the “guided activation” approach to cognitive control (Botvinick & Cohen, 2014). On this approach, representations of the current goal or task, often assumed to be generated in prefrontal cortex, bias activation elsewhere in the system to ensure task-appropriate behavior. The best-known example of this approach is the connectionist account of the Stroop effect, in which task units represent the goals “name word” and “name color” and these potentiate activity in the rest of the network, constraining it to produce the appropriate response on each trial (Cohen, Dunbar, & McClelland, 1990). In the semantic domain, models with hub-and-spoke architectures have used task units to regulate the degree to which different spoke layers participate in the completion of particular tasks (Dilkina et al., 2008; Plaut, 2002). Although semantic control was not the focus of these models, they do provide a plausible mechanism by which control could be exercised in situations where the task-relevant information is signaled by an explicit cue. For example, one task known to have high semantic control demands is the feature selection task, in which participants are instructed to match items based on a specific attribute (e.g., their color) while ignoring other associations (e.g., salt goes with snow, not pepper; Thompson-Schill et al., 1997). SA patients have great difficulty performing this task (Thompson, 2012) and it generates prefrontal activation in a region strongly associated with semantic control (Badre et al., 2005). To simulate performance on this task in a hub-and-spoke architecture, a task representation could be used to bias activation toward units representing color and away from other attributes, thus biasing the decision-making process toward the relevant information for the task and avoiding the prepotent association.

In the present study, we consider a different aspect of semantic control which, to our knowledge, has yet to receive any attention in the modeling literature. It is well-known that detecting weak semantic associations (e.g., bee-pollen), compared with strong ones (bee-honey), activates frontoparietal regions linked with semantic control (Badre et al., 2005; Wagner, Paré-Blagoev, Clark, & Poldrack, 2001). SA patients with damage to the semantic control network also exhibit disproportionately severe deficits in identifying weak associations (Noonan et al., 2010). However, the cognitive demands of this task are rather different to the ones described in the previous paragraph. In the Stroop and feature selection tasks, participants are instructed to avoid a prepotent response option in favor of a less obvious but task-appropriate response. But in the weak association case, the difficulty arises from the fact that none of the response options has a strong, prepotent association with the probe word. For example, a participant may be asked whether bee is more strongly associated with knife, sand, or pollen. When one thinks of the concept of a bee, one may automatically bring to mind their most common properties, such as buzzing, flying, making honey, and living in hives. Because these dominant associations do not include any of the response options, the correct answer can only be inferred by activating the bees’ less salient role in pollinating flowers.

In this situation, when automatic, bottom-up processing of the stimuli has failed to identify the correct response, it has been proposed that participants engage in a top-down “controlled retrieval” process (Badre & Wagner, 2002; Gold & Buckner, 2002; Wagner et al., 2001; Whitney et al., 2011b). Badre and Wagner (2002) describe this process as follows:

Controlled semantic retrieval occurs when representations brought online through automatic means are insufficient to meet task demands or when some prior expectancy biases activation of certain conceptual representations. Hence, controlled semantic retrieval may depend on a top-down bias mechanism that has a representation of the task context, either in the form of a task goal or some expectancy and that facilitates processing of task-relevant information when that information is not available through more automatic means. (p. 207)

Although various authors have discussed the notion of a controlled retrieval mechanism for supporting the detection of weak associations, no attempts have been made to specify how such a process would actually operate. This is, we believe, a nontrivial issue. Task representations of the kind described earlier are unlikely to be helpful since the task instruction (“decide which option is most associated with this word”) provides no clue as to what aspect of the meaning of the stimulus will be relevant. In some cases, prior semantic context may provide a useful guide (e.g., the bee-pollen association may be detected more easily if one is first primed by reading “the bee landed on the flower”). Indeed, Cohen and Servan-Schreiber (1992) proposed a framework for cognitive control in which deficits in controlled processing stemmed from an inability to maintain internal representations of context. The same mechanism was used to maintain task context in the Stroop task and to maintain sentence context in a comprehension task. For these researchers, then, the role of top-down control in semantic tasks was to maintain a representation of prior context that can guide meaning selection. However, in most of the experiments that have investigated controlled retrieval, no contextual information was available and thus this account is not applicable. Furthermore, as we have stated, SA patients show strong positive effects of context, which suggests that an inability to maintain context representations is not the source of control deficits in this group.

How, then, do control processes influence activity in the semantic network in order to detect weak relationships between concepts? In the next section, we address this issue by describing an explicit mechanism for controlled retrieval in our model. The core assumption of our approach is that, in order to reach an appropriate activation state that codes the relevant semantic information, the semantic system must be simultaneously sensitive to the word being probed and to its possible associates. Controlled retrieval takes the form of a top-down mechanism that forces the network to be influenced by all of this information as it settles, and which iteratively adjusts the influence of each potential associate. In so doing, the network is able to discover an activation state that accommodates both the probe and the correct associate.

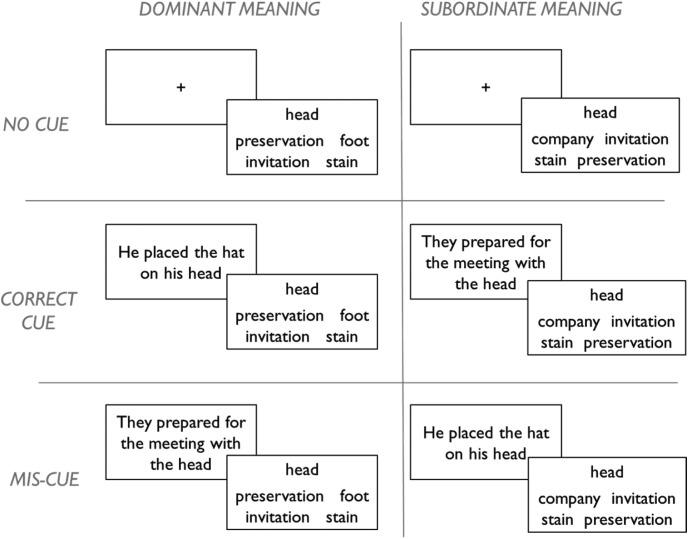

Controlled Retrieval of Semantic Information

To illustrate the controlled retrieval process, we need to introduce an experimental task (Noonan et al., 2010) that will later form the basis for Simulation 1. Figure 8 shows some example stimuli. The experiment probes comprehension of homonyms using a 2 (meaning dominance) × 3 (context) design. On each trial, participants are presented with a probe (head in Figure 8) and asked to select which of four alternatives has the strongest semantic relationship with it. Half of the trials probe the dominant meanings of the homonyms (e.g., head-foot) and half their subordinate meanings (head-company). The subordinate trials represent a case in which controlled retrieval is thought to be key in identifying the correct response, because bottom-up semantic activation in response to the probe will tend toward its dominant meaning. Furthermore, each trial can be preceded by one of three types of context: either a sentence that primes the relevant meaning of the word (correct cue), a sentence that primes the opposing meaning (miscue), or no sentence at all (no cue). These conditions are randomly intermixed throughout the task so that participants are not aware whether the cue they receive on each trial is helpful or not. The context manipulation allows us to explore how external cues can bias semantic processing toward or away from aspects of meaning relevant to the task.

Figure 8.

Example trials from the homonym comprehension task.

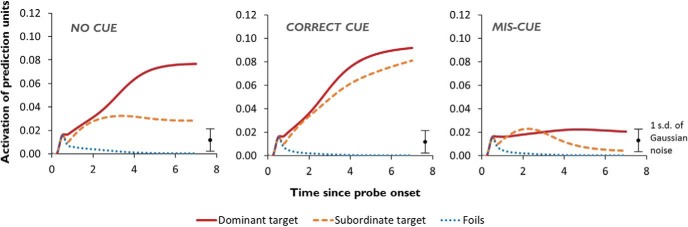

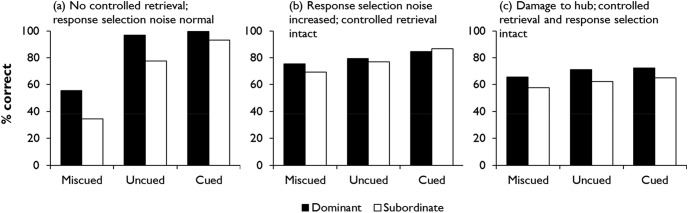

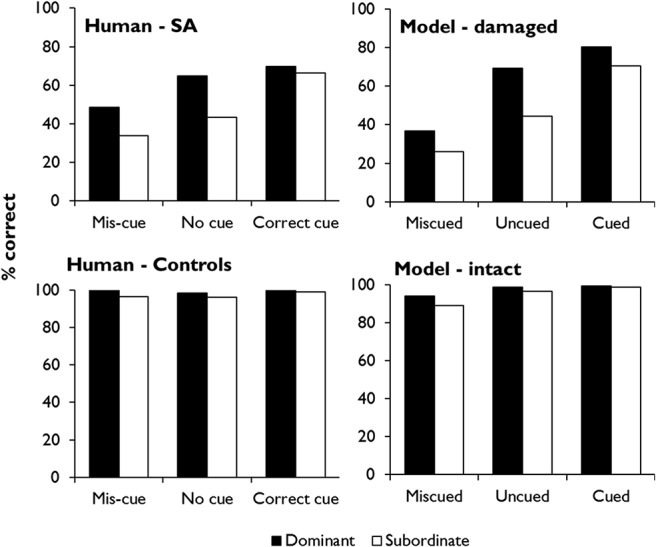

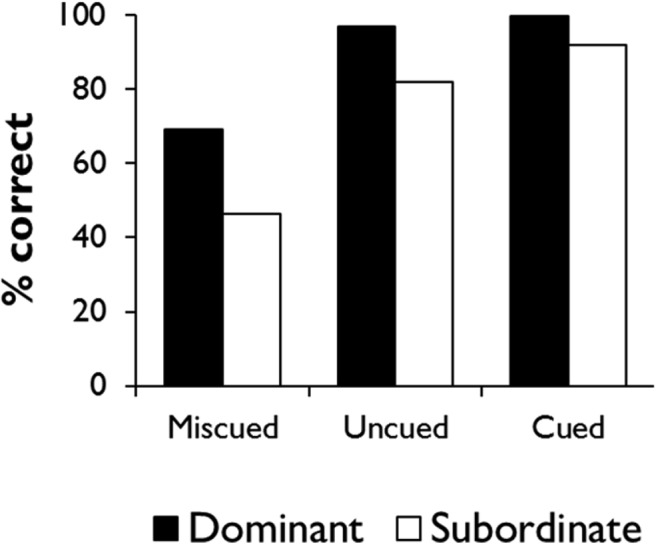

The top-left panel of Figure 9 shows performance on the task by seven SA patients studied by Noonan et al. (2010; see Simulation 1 for more further details). In the no-cue condition, patients were more successful when dominant, prepotent meanings were probed, relative to subordinate ones, and this result was attributed to impairment of controlled retrieval. Provision of correct contextual information improved performance for the subordinate meanings, so that these items reach a similar accuracy level to the dominant trials. This is thought to occur because the guiding context elicits strong, bottom-up activation of the trial-appropriate meaning, reducing the need for controlled retrieval. Incorrect contextual information, in contrast, had a negative effect.

Figure 9.

Target data and model performance for Simulation 1.

To explore the effects of these manipulations in our model, we must first adopt a procedure by which the network can complete the task. We believe that responses in lexical association tasks of this kind are heavily influenced by the co-occurrence rates of the various response options in natural language contexts (see Barsalou et al., 2008). In the model, this information is represented by the activations of the prediction units. To simulate the task in the model, we therefore present a probe word as input, allow the model to settle and then read off the activations of the prediction units representing the four response options. The option with the highest activation is the one that the model considers most likely to co-occur with the probe and should be selected as the response.

Response selection is, however, a complex process. Human decision-making processes are typically stochastic in nature (e.g., Usher & McClelland, 2001) and, in the semantic domain in particular, regions of prefrontal cortex have been linked with resolving competition between possible responses (Badre et al., 2005; Thompson-Schill et al., 1997). To simulate the potential for error at the response selection stage, we add a small amount of noise, sampled from a Gaussian distribution, to each of the activations before selecting the option with the highest activation. The effect of this step varies according to the difference in activation between the most active option and its competitors. When the most active option far exceeds its competitors, the small perturbation of the activations has no effect on the outcome. But when two options have very similar activation levels, the addition of a small amount of noise can affect which is selected as the response. Therefore, this stochastic element introduces a degree of uncertainty about the correct response when two options appear similarly plausible to the model.

Finally, we also manipulate context as in the original experiment. On no-cue trials, the context units are assigned a random pattern of activity. On cued and miscued trials, the model processes a context word prior to the probe, which is consistent with either the trial-appropriate or inappropriate meaning of the word. For example, a trial where the model is required to match bank with cashier could be preceded by either economics or plant.

What happens when we use this procedure to test the model’s abilities using stimuli analogous to those shown in Figure 8? Figure 10 shows the mean activations of the prediction units representing the dominant and subordinate targets in this task, as well as the alternative options (these results are averaged across trials probing all 10 of the model’s homonyms; for further details, see Simulation 1). The results from the uncued condition illustrate the limitations of the model (which, at this stage, has no mechanism for controlled retrieval). As expected, the target relating to the dominant meaning is strongly activated such that, even when the stochastic response selection process is applied, the model is likely to distinguish the correct response from the foils. The subordinate target, however, is much less differentiated from the foils, so there is a greater chance that one of the three foils might be incorrectly selected. Context can modulate these effects in either direction. On correctly cued trials, the model’s expectations are shifted toward the trial-appropriate interpretation of the probe. Subordinate targets therefore become just as strongly predicted as dominant targets and are unlikely to be confused with foils. Conversely, when context primes the incorrect meaning of the word, the model fails to activate the target very strongly for either trial type. Thus, like the SA patients, the model’s ability to discriminate the target from its competitors is highly dependent on the degree to which the target receives strong bottom-up activation.

Figure 10.

Activation of response options in the model with no control processes. The bars in the bottom right corner of each plot show the standard deviation of the Gaussian function used to add noise to each activation.