Abstract

Neural models of a distributed system for face perception implicate a network of regions in the ventral visual stream for recognition of identity. Here, we report a functional magnetic resonance imaging (fMRI) neural decoding study in humans that shows that this pathway culminates in the right inferior frontal cortex face area (rIFFA) with a representation of individual identities that has been disentangled from variable visual features in different images of the same person. At earlier stages in the pathway, processing begins in early visual cortex and the occipital face area with representations of head view that are invariant across identities, and proceeds to an intermediate level of representation in the fusiform face area in which identity is emerging but still entangled with head view. Three-dimensional, view-invariant representation of identities in the rIFFA may be the critical link to the extended system for face perception, affording activation of person knowledge and emotional responses to familiar faces.

Keywords: fusiform face area, inferior frontal face area, neural decoding, occipital face area, view-invariant face identity

Introduction

Humans arguably can recognize an unlimited number of face identities but the neural mechanisms underlying this remarkable capability are still unclear. Studies using functional magnetic resonance imaging (fMRI) have consistently shown that, as compared to non-face objects, faces elicit an increased response in a distributed set of brain areas. According to a functional model, originally proposed by Haxby et al. (2000), brain regions involved in face processing can be subdivided into 2 systems, the core and the extended systems. The occipital face area (OFA), the fusiform face area (FFA) and the posterior superior temporal sulcus (pSTS) are part of the core system, which plays a role in the visual analysis of faces. The extended system consists of brain areas that are not face-selective that can act in concert with the core system to extract meaning from faces. The distributed cortical fields in the core system for the visual analysis of faces and the computations performed in this system are a matter of intense investigation and controversy (Haxby et al. 2000; Ishai et al. 2005; Gobbini and Haxby 2007; Haxby and Gobbini 2011; Collins and Olson 2014; Duchaine and Yovel 2015), in particular the neural underpinnings for recognition of identity. Some studies with fMRI in humans have shown the importance of the FFA and more extended regions in the ventral temporal cortex for identity recognition (Natu et al. 2010; Nestor et al. 2011; Anzellotti et al. 2014; Axelrod and Yovel 2015), as well as face-identity discrimination in more anterior portions of the temporal lobes (Kriegeskorte et al. 2007; Anzellotti et al. 2014; Nestor et al. 2011), while the more posterior areas are tuned more to viewpoint perception of faces (Natu et al. 2010; Kietzmann et al. 2012, 2015). A coherent overview of the role of the different face processing brain regions is missing.

Freiwald and Tsao (2010) analysed the neural population responses in cortical face patches in macaque temporal lobes identified with fMRI. While population codes in the more posterior face areas, middle lateral (ML) and middle fundus (MF) represent face view that is invariant across identities, population codes in the most anterior face-responsive area, anterior medial (AM), represent face identity that is almost fully view-invariant. A face patch located intermediately, anterior lateral (AL), is tuned to mirror symmetric views of faces.

Here we show, for the first time, a progressive disentangling of the representation of face identity from the representation of head view in the human face processing system with a structure that parallels that of the macaque face patch system (Freiwald and Tsao 2010). While early visual cortex (EVC) and the OFA distinguish head views, view-invariant representation of identities in the human face perception system is fully achieved in the right inferior frontal face area (rIFFA). A frontal face area has been described in both the human and monkey brain (Tsao et al. 2008b; Rajimehr et al. 2009; Axelrod and Yovel 2015; Duchaine and Yovel 2015), but has not previously been linked to view-invariant representation of identity. The representation of faces in the FFA revealed an intermediate stage of processing at which identity begins to emerge but is still entangled with head view.

Materials and Methods

Subjects

We scanned 13 healthy right-handed subjects (6 females; mean age = 25.3 ± 3.0 years) with normal or corrected-to-normal vision. Participants gave written informed consent and the protocol of the study was approved by the local ethical committee.

Stimuli

Four undergraduates (2 females) from Dartmouth College served as models for face stimuli. We took still pictures and short videos of each model. The short videos were taken while we briefly explained the experiment to the models and asked them to look around the room so that we could capture their heads from all angles. The videos covered each model's head and shoulders. All models wore a black T-shirt provided by us. During the video, the models behaved normally while conversing and listening to us explain the experiment. We selected four 15 s clips for each identity to be used for the training session such that each 15 s clip contained at least one sweep of the model looking around the room. Still face images were color pictures taken with 5 different head views: left and right full profile, left and right half profile, and full-frontal view (see Supplementary Fig. S1). To ensure consistent image quality, all pictures were taken in the same studio with identical equipment and lighting conditions. All still images were cropped to include the hair. Each image was scaled to a resolution of 500×500 pixels. Before training, we confirmed that participants did not know any of the identities shown in the experiment.

Training Session before the fMRI Experiment

Subjects were visually familiarized with the identities of the 4 stimulus models on the day before the scanning session through a short training session held in our laboratory. Subjects passively watched four 15 s videos without audio of each identity, then performed a face identity matching task in which they saw 2 stimuli in succession with a 0.5 s interstimulus interval. Stimuli were still images of the 4 models at different head views (presented for 1 s) or 2 s video clips randomly selected from the aforementioned 15 s video clips. Subjects indicated whether the identity was the same or different using a keyboard. There were 360 trials in total, with matching identity in half, and subjects received their accuracy as feedback every 30 trials. All the subjects performed the task successfully and reported no difficulty in identifying the models from the images of different head views.

Scanning

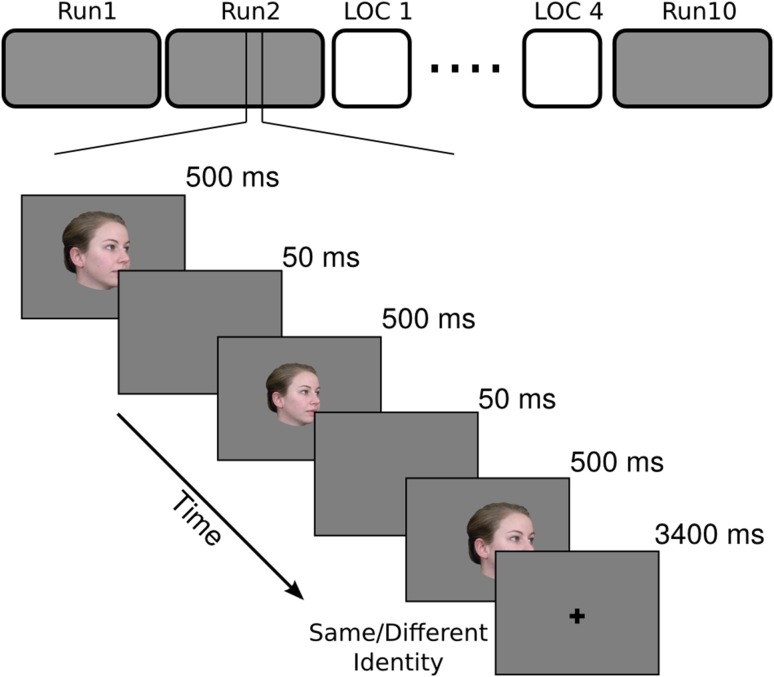

During scanning, each subject participated in 10 functional runs of the main experiment. Each run had 63 trials (60 stimulus trials and 3 fixation trials), and started and ended with 15 s of fixation on a black cross against a gray background. Each stimulus trial was 5 s long and started with a stimulus image presented for 500 ms followed by a 50 ms gray screen, repeated 3 times, followed by 3400 ms of fixation (Fig. 1). The 3 repetitions on each trial were of the same identity and head view but with the image size and location jittered (±50 pixel variations in image size, equivalent to 1.25°, and ±10 pixel variations in the horizontal and vertical location). Each face image subtended approximately 12.5° of visual angle. Subjects performed a one-back repetition detection task based on identity, pressing a button with the right index finger for ‘same’ and the right middle finger for ‘different’.
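The correspondence between pixel jitter and visual angle can be checked with a short calculation. This is only a sketch: it assumes that the 500-pixel image spans the stated 12.5° and that pixels map linearly onto degrees, which is an approximation; the function name is hypothetical.

```python
# Assumption: the 500-pixel image spans the stated 12.5 degrees of visual
# angle, and pixels map linearly onto degrees (an approximation).
IMAGE_SIZE_PX = 500
IMAGE_SIZE_DEG = 12.5


def pixels_to_degrees(pixels):
    """Convert a pixel extent to degrees of visual angle (linear mapping)."""
    return pixels * IMAGE_SIZE_DEG / IMAGE_SIZE_PX


size_jitter_deg = pixels_to_degrees(50)      # size jitter of +/-50 pixels
location_jitter_deg = pixels_to_degrees(10)  # location jitter of +/-10 pixels
print(size_jitter_deg, location_jitter_deg)
```

Under these assumptions the ±50 pixel size jitter corresponds to the 1.25° given in the text, and the ±10 pixel location jitter to 0.25°.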

Figure 1.

Schematic of the main experiment with an example of the trial structure. The fMRI study had 10 runs of the main experiment interspersed with 4 localizer runs. Each run of the main experiment had 63 trials (60 stimulus trials and 3 fixation trials). Each trial started with one of the 20 face images presented 3 times at variable size and location with a 50 ms ISI between presentations, and ended with a 3400 ms fixation. Subjects performed a 1-back identity matching task to maintain attention to the stimuli.

Localizer

In addition to the main experiment, 4 runs of a functional localizer were interleaved with the experimental runs. Each localizer run had 2 blocks each of faces, objects, and scenes with 8 s of fixation separating them. During each presentation block, subjects saw 16 still images from a category with 900 ms of image presentation and 100 ms of ISI. Subjects performed a one-back repetition detection task. Each run started and ended with 12 s of fixation. None of the faces used for the localizer were part of the set of stimuli used for the fMRI experiment on head view and identity. Stimuli and design for the localizer have been used previously (Guntupalli et al. 2016) and are published online (Sengupta et al. 2016).

fMRI protocol

Subjects were scanned using a Philips Intera Achieva 3T scanner with a 32 channel head coil at the Dartmouth Brain Imaging Center. Functional scans were acquired with an echo planar imaging sequence (TR = 2.5 s, TE = 30 ms, flip angle = 90°, 112 × 112 matrix, FOV = 224 mm × 224 mm, R-L phase encoding direction) every 2.5 s with a resolution of 2 × 2 mm covering the whole brain (49×2 mm thick interleaved axial slices). We acquired 140 functional scans in each of the 10 runs. We acquired a fieldmap scan after the last functional run and a T1-weighted anatomical (TR = 8.265 ms, TE = 3.8 ms, 256 × 256 × 220 matrix) scan at the end. The voxel resolution of anatomical scan was 0.938 mm × 0.938 mm × 1.0 mm.
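As a rough consistency check, the acquisition time per run can be compared with the length of the experimental design described under Scanning. The sketch below assumes that fixation trials lasted 5 s like stimulus trials, which the text does not state explicitly.

```python
TR_S = 2.5             # repetition time of the functional scans
VOLUMES_PER_RUN = 140  # functional scans acquired in each run
TRIALS_PER_RUN = 63    # 60 stimulus trials + 3 fixation trials
TRIAL_S = 5.0          # assumption: fixation trials last as long as stimulus trials
FIXATION_S = 15.0      # fixation at the start and again at the end of each run

acquired_s = VOLUMES_PER_RUN * TR_S
design_s = FIXATION_S + TRIALS_PER_RUN * TRIAL_S + FIXATION_S
unaccounted_s = acquired_s - design_s
print(acquired_s, design_s, unaccounted_s)
```

With these numbers the acquisition covers 350 s and the design 345 s per run; the remaining 5 s (2 volumes) is not accounted for in the description above.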

Data Preprocessing

Each subject's fMRI data were preprocessed using AFNI software (Cox 1996). Functional data were first corrected for the order of slice acquisition and then for head movement by aligning to the fieldmap scan. Functional volumes were corrected for distortion using the fieldmap with FSL-Fugue (Smith et al. 2004). Temporal spikes in the data were removed using 3dDespike in AFNI. The time series in each voxel was filtered using a high-pass filter with a cutoff at 0.00667 Hz, and the motion parameters were regressed out using 3dBandpass in AFNI. Data were then spatially smoothed using a 4 mm full-width at half-maximum Gaussian filter (3dmerge in AFNI). We ran a GLM analysis to estimate beta and t-statistic values for each of the 20 stimulus images using the TENT function of 3dDeconvolve in AFNI, resulting in 7 estimates per voxel per stimulus image in each subject, spanning 2.5 s to 17.5 s after stimulus onset. We used the first 5 of those 7 response t-statistic estimates in all our analyses (Misaki et al. 2010). Thus, in each searchlight or region of interest (ROI) we measured a pattern of response to each stimulus image as a vector whose features were 5 timepoints for each voxel. We extracted cortical surfaces from the anatomical scans of subjects using FreeSurfer (Fischl et al. 1999), aligned them to FreeSurfer's cortical template, and resampled the surfaces into a regular grid with 20 484 nodes using MapIcosahedron in AFNI. We implemented our methods and ran our analyses in PyMVPA (Hanke et al. 2009) unless otherwise specified (http://www.pymvpa.org).
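The construction of a response pattern from the TENT estimates can be illustrated as follows. This is a minimal sketch in plain Python rather than the PyMVPA code used for the analyses; the function name and the toy values are hypothetical, and the voxel-major ordering of features is an assumption.

```python
TR_S = 2.5
N_TENT = 7  # TENT estimates per voxel per stimulus image
N_USED = 5  # estimates retained for the analyses

# Post-stimulus times of the 7 estimates: 2.5 s, 5.0 s, ..., 17.5 s.
tent_times_s = [TR_S * (i + 1) for i in range(N_TENT)]
used_times_s = tent_times_s[:N_USED]


def pattern_vector(t_stats_by_voxel):
    """Concatenate the first N_USED t-statistics of every voxel into one
    feature vector (assumed voxel-major order; hypothetical helper)."""
    features = []
    for voxel_estimates in t_stats_by_voxel:
        if len(voxel_estimates) != N_TENT:
            raise ValueError("expected %d estimates per voxel" % N_TENT)
        features.extend(voxel_estimates[:N_USED])
    return features


# Toy values for 3 voxels, for illustration only (not real data).
toy_roi = [[0.1 * (v + t) for t in range(N_TENT)] for v in range(3)]
vector = pattern_vector(toy_roi)
print(used_times_s, len(vector))
```

A region with n voxels therefore yields a pattern vector of 5n features for each of the 20 stimulus images.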

Definition of Face-selective ROIs

We used the same preprocessing steps for the face localizer data and estimated the contrast for faces greater than objects using GLM analysis to define face-selective regions. Clusters of voxels with stronger responses to faces were assigned to the OFA, FFA, pSTS, and anterior temporal face area (ATFA) based on their anatomical locations (Haxby et al. 2000). We used the faces greater than objects contrast with a threshold of t = 2.5 to 3 for the bilateral FFA, OFA, and right pSTS face areas, and 2–2.5 for the right ATFA. For the ATFA and pSTS face areas, only the right hemisphere yielded robust ROIs in all subjects, whereas the FFA and OFA were identified bilaterally in all subjects but one, who had no identifiable OFA at our threshold range. These criteria, based on responses to still images of unfamiliar faces, did not identify a consistent face-selective cluster in the rIFFA even though MVPA (classification and similarity analyses) did reveal such a cluster based on face-identity-selective patterns of response. Post-hoc analysis of responses to the localizer stimuli in a searchlight centered on the cortical node with peak accuracy for classification of identity confirmed that the rIFFA also showed face-selectivity based on the univariate contrast between faces and objects (Supplementary Fig. S3). Table S1 lists the average number of voxels and volumes of face-selective ROIs.

Multivariate Pattern Classification

Searchlight

We performed MVPC analyses to localize representations of face stimuli in terms of head view, independent of identity, and identity, independent of head view, using surface-based searchlights (Oosterhof et al. 2011). We centered searchlights on each surface node, and included all voxels within a cortical disc of radius 10 mm. The thickness of each disc was extended by 50% into and outside the gray matter to account for differences between EPI and anatomical scans due to distortion. Identity classifications were performed using a leave-one-viewpoint-out cross-validation scheme, and viewpoint classifications were performed using a leave-one-identity-out cross-validation scheme. For example, each classifier for identity was built on responses to all head views but one (16 vectors: 4 head views of 4 identities) and tested on the left-out head view (1-out-of-4 classification). This was done for all 5 data folds on head view. Similarly, each classifier for head view was built on all identities but one (15 vectors: 5 head views of 3 identities) and tested on the left-out identity (1-out-of-5 classification). MVPC used a linear support vector machine (SVM) classifier (Cortes and Vapnik 1995). The SVM classifier used the default soft margin option in PyMVPA that automatically scales the C parameter according to the norm of the data. Classification accuracies from each searchlight were placed into their center surface nodes, resulting in one accuracy map per subject per classification type. We also performed an identical analysis using permuted labels, 20 per subject per classification type, for significance testing. To compute significant clusters across subjects, we performed a between-subject threshold-free cluster enhancement procedure (Smith and Nichols 2009) using our permuted label accuracy maps. We then thresholded the average accuracy map with correct labels across subjects at z > 1.96 (P < 0.05, two-tailed, corrected for multiple comparisons) for visualization.
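The two cross-validation schemes can be made concrete by enumerating their folds. The sketch below covers only the train/test partitioning, not the SVM itself; the identity and head view labels and the helper name are hypothetical.

```python
from itertools import product

# Hypothetical labels for the 4 identities and 5 head views.
IDENTITIES = ["id1", "id2", "id3", "id4"]
HEAD_VIEWS = ["left_full", "left_half", "frontal", "right_half", "right_full"]

# The 20 stimulus images as (identity, head view) pairs.
STIMULI = list(product(IDENTITIES, HEAD_VIEWS))


def leave_one_out_folds(stimuli, held_out_index):
    """Return (held_out_value, train, test) splits.

    held_out_index=1 holds out one head view per fold (identity classification);
    held_out_index=0 holds out one identity per fold (head view classification).
    """
    values = sorted({s[held_out_index] for s in stimuli})
    folds = []
    for value in values:
        train = [s for s in stimuli if s[held_out_index] != value]
        test = [s for s in stimuli if s[held_out_index] == value]
        folds.append((value, train, test))
    return folds


identity_folds = leave_one_out_folds(STIMULI, held_out_index=1)
view_folds = leave_one_out_folds(STIMULI, held_out_index=0)

for name, folds in (("identity", identity_folds), ("head view", view_folds)):
    _, train, test = folds[0]
    print(name, len(folds), "folds;", len(train), "train /", len(test), "test")
```

Because the test images always come from a head view (or identity) that the classifier never saw during training, above-chance accuracy requires a representation that generalizes across that held-out dimension.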

Face-selective ROIs

In each face-selective ROI of each subject, we performed classification of identity and head view as described above. We used a nested cross-validation scheme to perform feature selection using ANOVA scores. In each fold, the training data were used to compute ANOVA scores and to run classification using different numbers of top features within the training data. The set of features that gave the best accuracy on the training data was then used to classify the test data. Both the ANOVA scores and the choice of features were computed only from the training dataset. We performed significance testing in each ROI for each classification type with permutation testing, using 100 permutations in each subject and sampling them with replacement for 10 000 permutations across subjects to compute the null distribution.
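One way to read the group-level permutation procedure is sketched below: each group-level null sample draws one permuted-label accuracy per subject and averages across subjects. The function names, the synthetic null accuracies, the example observed value, and the +1 correction in the p-value are all assumptions made for illustration, not details given in the text.

```python
import random


def group_null_distribution(subject_nulls, n_samples=10000, seed=0):
    """Build a group null distribution of mean accuracies.

    subject_nulls holds one list per subject with the accuracies obtained
    under permuted labels. Each sample draws one value per subject (with
    replacement across samples) and averages the draws across subjects.
    """
    rng = random.Random(seed)
    n_subjects = len(subject_nulls)
    return [
        sum(rng.choice(null) for null in subject_nulls) / n_subjects
        for _ in range(n_samples)
    ]


def permutation_p_value(observed_mean, null_means):
    """One-sided p-value with a +1 correction (an assumption, see lead-in)."""
    exceed = sum(1 for m in null_means if m >= observed_mean)
    return (exceed + 1) / (len(null_means) + 1)


# Synthetic example: 13 subjects, 100 permuted accuracies each, scattered
# around the 25% chance level of identity classification. Not real data.
toy_rng = random.Random(1)
toy_nulls = [
    [toy_rng.choice([0.15, 0.20, 0.25, 0.30, 0.35]) for _ in range(100)]
    for _ in range(13)
]
null_means = group_null_distribution(toy_nulls, n_samples=1000)
p_value = permutation_p_value(0.40, null_means)  # 0.40 is a hypothetical observed mean
print(len(null_means), p_value)
```

The same scheme applies to the model coefficients of the similarity analyses by substituting permuted-label coefficients for accuracies.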

Representation Similarity Analyses

We performed an RSA (Kriegeskorte et al. 2008) to model the representational geometry, expressed as representational similarity matrices (RSMs), in each searchlight using 3 models of representation: 1) identity invariant to head view, 2) head view invariant to identity, and 3) head view with mirror symmetry (Supplementary Fig. S2). Correlation was used to compute similarities between patterns of response to different images. Both the neural and the model similarity matrices were rank ordered before performing a ridge regression with α = 0.1 to fit the 3 model similarity structures to the neural similarity structure. Beta values from the regression were assigned to the surface node at the center of each searchlight. We performed permutation testing to assess the significance of the beta values for each model regression at each surface node using permuted labels and the threshold-free cluster enhancement method as described above. We then thresholded the average beta maps with correct labels for each model at z > 1.96 (corrected) for visualization. We performed a similar modeling of representational geometry in each face-selective ROI. To remove any possible confounds between mirror symmetry and other models, we zeroed out any elements in the mirror symmetry model that overlapped with the other 2 models. We assessed the significance of model coefficients in each ROI using permutation testing. We computed 100 model coefficients in each subject using permuted labels, and sampled them with replacement for 10 000 samples across subjects to compute the null distribution for each model coefficient in each ROI.
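The three model similarity structures and the removal of overlap from the mirror symmetry model might look as follows. This sketch assumes binary models (1 for pairs predicted to be similar, 0 otherwise) and a mirror symmetry model that initially marks same-view and mirror-image-view pairs; the actual models are shown in Supplementary Fig. S2 and may be coded differently. The labels and helper names are hypothetical, and the rank ordering and ridge regression steps are not shown.

```python
from itertools import product

# Hypothetical labels for the 4 identities and 5 head views.
IDENTITIES = ["id1", "id2", "id3", "id4"]
HEAD_VIEWS = ["left_full", "left_half", "frontal", "right_half", "right_full"]
MIRROR_OF = {
    "left_full": "right_full", "right_full": "left_full",
    "left_half": "right_half", "right_half": "left_half",
}
STIMULI = list(product(IDENTITIES, HEAD_VIEWS))  # (identity, head view) pairs


def model_matrix(similar):
    """Binary 20 x 20 model: 1 where similar(a, b) holds, else 0."""
    return [[1 if similar(a, b) else 0 for b in STIMULI] for a in STIMULI]


identity_model = model_matrix(lambda a, b: a[0] == b[0])
view_model = model_matrix(lambda a, b: a[1] == b[1])
# Assumed raw mirror model: same view or its left-right mirror image.
mirror_raw = model_matrix(lambda a, b: b[1] in (a[1], MIRROR_OF.get(a[1])))

# Zero out mirror-model elements that overlap with either of the other 2 models.
n = len(STIMULI)
mirror_model = [
    [
        0 if (identity_model[i][j] or view_model[i][j]) else mirror_raw[i][j]
        for j in range(n)
    ]
    for i in range(n)
]

print(sum(map(sum, identity_model)), sum(map(sum, view_model)),
      sum(map(sum, mirror_raw)), sum(map(sum, mirror_model)))
```

Under these assumptions the cleaned mirror symmetry model keeps only pairs of different identities seen from mirror-image views, so its coefficient cannot be driven by identity or same-view similarity.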

Multidimensional Scaling

We performed multidimensional scaling in significant head view and identity classification clusters and face-selective ROIs to visualize the representation of face stimuli in each of those regions. Since classification clusters were defined on surface, we aggregated the data from all the voxels that participated in searchlights with their center nodes in those clusters in each subject. Data in each cluster of searchlights and each ROI were reduced to a 20 principal component space (all components) before computing distance matrices to account for variable sizes. Pairwise correlation distance matrices were computed for all 20 face stimuli for each cluster and ROI in each subject. Distance matrices were first normalized in each subject by dividing by the maximum correlation distance within that subject and were averaged across subjects to produce an average distance matrix in each cluster and ROI. A metric MDS was performed with 10 000 iterations to project the stimuli onto a 2-dimensional space. MDS solutions for face-selective ROIs were also computed using the same procedure. Supplementary Fig. S4 shows MDS plots for these ROIs.
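The normalization and averaging of distance matrices before MDS can be sketched as below. The helper names and the toy 2 × 2 matrices are hypothetical and serve only to show that subjects with different overall distance scales contribute equally after normalization; the principal component reduction and the MDS itself are not shown.

```python
def normalize_by_max(distance_matrix):
    """Divide every entry by the largest distance within one subject."""
    peak = max(max(row) for row in distance_matrix)
    if peak == 0:
        raise ValueError("distance matrix has no non-zero entries")
    return [[d / peak for d in row] for row in distance_matrix]


def average_matrices(matrices):
    """Element-wise mean of equally sized square matrices."""
    count = len(matrices)
    size = len(matrices[0])
    return [
        [sum(m[i][j] for m in matrices) / count for j in range(size)]
        for i in range(size)
    ]


# Toy correlation-distance matrices for 2 subjects and 2 stimuli (not real data).
subject_a = [[0.0, 0.5], [0.5, 0.0]]
subject_b = [[0.0, 2.0], [2.0, 0.0]]
group_matrix = average_matrices(
    [normalize_by_max(subject_a), normalize_by_max(subject_b)]
)
print(group_matrix)
```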

Results

Subjects viewed 4 visually familiar identities, 2 males and 2 females, with 5 different head views, while performing a one-back identity repetition detection task to ensure attention to the stimuli. We performed multivariate pattern classification and representational similarity analyses across the whole brain using searchlights and in face-selective ROIs.

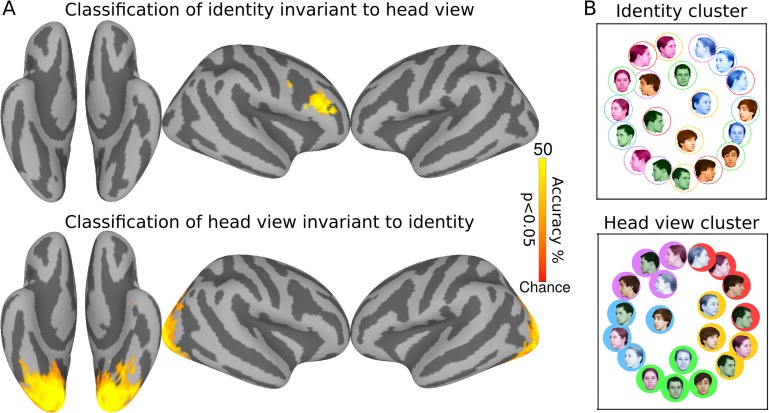

Classification Analyses

First, we used multivariate pattern classification (MVPC) in surface-based searchlights to identify cortical areas that encode faces in terms of view and identity (Haxby et al. 2001). For classification of identity invariant over face views, a classifier was trained to classify 4 identities over 4 views and tested on the left-out view of the 4 identities. For classification of head view invariant over identities, a classifier was trained to classify head views over 3 identities and tested on 5 head views of the left-out identity. Results (Fig. 2A) show robust representation of face identity invariant to head view in the right inferior frontal cluster, the rIFFA, with a peak classification accuracy of 41.2% (chance = 25%; 95% CI = [35.0%, 46.2%]), and representation of view invariant to face identity in a large expanse of EVC that includes the OFA and part of the right FFA with a peak classification accuracy of 65.4% (chance = 20%; 95% CI = [60.4%, 70.8%]). To visualize the representational geometries of responses to face images with different identities and head views, we performed a multidimensional scaling (MDS) analysis of the patterns of response in these 2 clusters. MDS of the head view cluster clearly shows a representation of faces arranged according to head view invariant to identity with a circular geometry in which adjacent head views are closer to each other and the left and right full profiles are closer to each other (Fig. 2B). In contrast, MDS of the rIFFA identity cluster shows a representation of faces arranged according to identity invariant to view with the responses to each of the 4 identities clustered together for most or all head views.

Figure 2.

Surface searchlight classification of faces. (A) Classification accuracies for face identity cross-validated over views (top) and head view cross-validated over identities (bottom). Chance accuracy is 25% for face identity and 20% for head view classifications. Maps are thresholded at P < 0.05 after correcting for multiple comparisons using permutation testing. (B) MDS plots of representational geometries of responses to face stimuli in the identity cluster (top) and the head view cluster (bottom). Faces of the same identity are colored the same, and faces with same head view have the same background or outline. Coloring based on the identity is emphasized on the top and coloring based on the head view emphasized on the bottom.

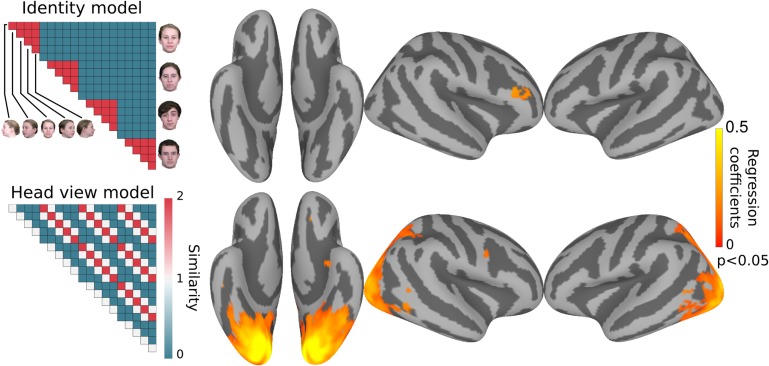

Representational Similarity Analyses

For an analysis of representational geometry across cortex, we next performed a searchlight representational similarity analysis (RSA) (Kriegeskorte et al. 2008). We constructed 3 model similarity structures capturing representation of 1) identity invariant to view, 2) mirror symmetry of views, and 3) view invariant to identity (Supplementary Fig. S2). We performed ridge regression to fit these 3 model similarity structures in surface-based searchlights, producing 3 coefficients in each searchlight. Figure 3 shows cortical clusters with coefficients for models capturing identity invariant to views and views invariant to identity. Consistent with the classification results, representational geometry in the right inferior frontal cluster is correlated significantly with the face identity similarity model and representational geometry in a large EVC cluster that included OFA and part of the right and left FFAs correlated significantly with the head view similarity model.

Figure 3.

Surface searchlight based modeling of representational geometry. Neural representation of faces in each searchlight was modeled with 3 model similarity structures as regressors using ridge regression. Representational geometry in the rIFFA correlated with the identity model (top), whereas representational geometry in EVC correlated with the head view model (bottom). Maps are thresholded at P < 0.05 after correcting for multiple comparisons using permutation testing. Correlation with the mirror symmetry model did not reveal any significant clusters.

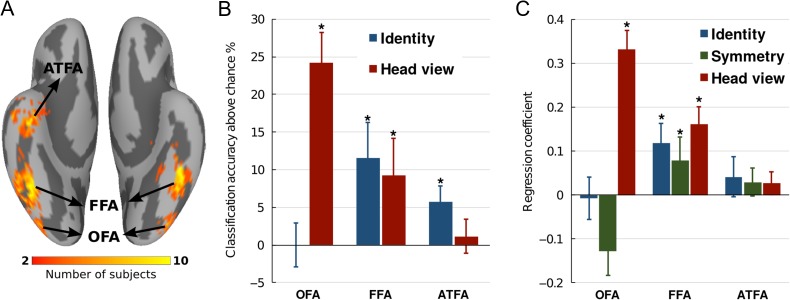

Classification Analyses and Representational Similarity Analyses in Face-selective ROIs

Searchlight analyses revealed representation of head view in posterior visual cortex and identity in the rIFFA but unlike other reports these searchlight analyses did not find representation of identity in ventral temporal (VT) face areas (Haxby et al. 1994; Kriegeskorte et al. 2007; Natu et al. 2010; Nestor et al. 2011; Anzellotti et al. 2014; Axelrod and Yovel 2015). To investigate further the representation of faces in the core face system, we defined face-selective regions in all subjects with a localizer. We defined the FFA, OFA, pSTS face area, and ATFA (Fig. 4A). We then performed MVPC and RSA in each of these ROIs. Classification of face identity, invariant to views, was significant in FFA and ATFA with average accuracies of 36.5% (Chance = 25%; 95% CI = [28.5%, 45.8%]) and 30.8% (95% CI = [27.3%, 34.2%]) respectively, but was not different from chance in OFA and pSTS (Fig. 4B). Classification of head view, invariant to identity, was successful in OFA and FFA with average accuracies of 44.2% (Chance = 20%; 95% CI = [35.0%, 50.4%]) and 29.2% (95% CI = [20.8%, 38.1%]) respectively, but was not different from chance in ATFA and pSTS (Fig. 4B). Analysis of neural representational geometry showed that representational geometry in the OFA correlated significantly only with head view model (beta = 0.33; P < 0.001) (Fig. 4C). Representational geometry in the FFA correlated significantly with head view (beta = 0.12; P < 0.001), mirror symmetry (beta = 0.08; P < 0.01), and identity models (beta = 0.16; P < 0.001) corroborating its intermediate role in disentangling identity from head view and mirror symmetry (Fig. 4C). Representational geometry in the ATFA did not significantly correlate with any model and representational geometry in the pSTS correlated only with the head view model (beta = 0.07; P < 0.01).

Figure 4.

Classification and representational similarity analyses in face-selective ROIs. (A) Anatomical locations of face-selective ROIs as determined by localizer. (B) Classification accuracies of face identity invariant to view and head view invariant to identity in regions of the core face system. (C) Modeling representational geometry in ROIs. Asterisks indicate accuracies that were significant with permutation. Error bars indicate standard error (SEM).

Discussion

Our results show a progressive disentangling of the representation of face identity from the variable visual features of different images of that face in a hierarchically organized distributed neural system in occipital, VT, and inferior frontal human cortices. Unlike previous reports (Kriegeskorte et al. 2007; Freiwald and Tsao 2010; Natu et al. 2010; Nestor et al. 2011; Anzellotti et al. 2014; Axelrod and Yovel 2015), we show that this disentangling process culminates in a face area in the right inferior frontal cortex, the rIFFA. The representational geometry of rIFFA responses to face images grouped images by identity and not by head view. By contrast, the representational geometry in EVC and the OFA grouped the same images by head view and not by identity. At an intermediate stage in the FFA, representational geometry reflected both identity and head view. A view-invariant representation of identity also was found in the ATFA but was not as strong as the representation in the rIFFA. The representation of identity in the FFA was detected only in the ROI analysis, presumably because definition of the FFA with a localizer in each individual restricted analysis to a representation that may be confined to this face-selective region. The representation of head view, by contrast, appears to be more widely distributed in visual cortices. Our results reveal an organization similar to that described in monkeys based on single unit recording (Freiwald and Tsao 2010) but these studies did not examine population codes in the frontal face patch.

A face-responsive area in the inferior frontal cortex was first reported in humans using functional brain imaging and monkeys using single unit recording (Haxby et al. 1994, 1995, 1996; Courtney et al. 1996, 1997; Scalaidhe et al. 1997). Further reports of this area followed in fMRI studies in humans (Ishai et al. 2002; Fox et al. 2009; Rajimehr et al. 2009; Pitcher et al. 2011; Axelrod and Yovel 2015) and monkeys (Tsao et al. 2008a, 2008b; Rajimehr et al. 2009; Dubois et al. 2015). The human neuroimaging studies have found this area to be face-responsive using perceptual matching of different views of the same identity (Haxby et al. 1994), face working memory (Haxby et al. 1995; Courtney et al. 1996, 1997), retrieval from long-term memory (Haxby et al. 1996), imagery from long-term memory (Ishai et al. 2002), repetition–suppression (Pourtois et al. 2005), release from adaptation (Rotshtein et al. 2005), and functional localizers with dynamic face stimuli (Fox et al. 2009; Pitcher et al. 2011). There is also evidence from patients that supports the role of the IFG in face processing. For example, a patient with implanted electrodes in inferior frontal cortex reported face-related hallucinations after direct stimulation in prefrontal cortex (Vignal et al. 2000). Other studies using intracerebral and depth electrodes in patients have reported face-selective activity in IFG as early as 117 ms, slightly lagging the activity in the fusiform gyrus (Halgren et al. 1994; Marinkovic et al. 2000; Barbeau et al. 2008). Patients with damage to frontal lobe regions have been reported to have face memory impairments even for novel faces (Rapcsak et al. 2001) and false recognition without prosopagnosia (Rapcsak et al. 1996). One study reported correlation of activation in right IFG with the perceptual distortion of faces in a prosopagnosic (Dalrymple et al. 2014). Another study using transcranial magnetic stimulation (TMS) implicated a more causal role of right IFG in configural face processing (Renzi et al. 2013). Anatomical connection strength between face-selective regions in the temporal cortex and IFG has been shown to correlate with face-selective activation in fusiform gyrus (Saygin et al. 2012) and age-related changes in face perception (Thomas et al. 2008).

As far as we know, a clear case of prosopagnosia with an isolated lesion of the inferior frontal cortex has not been reported in the literature. The main difficulty of having clear cases of prosopagnosia with a right inferior frontal lesion might arise from the fact that the face area in this location may be intertwined with other functions in such a way that a lesion there might not produce prosopagnosia without impairment of other functions. Therefore, patients with right inferior frontal lesions might have a constellation of symptoms that overshadow any impairment in face recognition. We hope that our findings will encourage careful investigation of patients with inferior frontal lesions for symptoms related to face-processing impairments such as prosopagnosia.

Previous fMRI studies of identity decoding using multivariate pattern classification, however, have mostly concentrated on the ventral visual pathway in temporal cortex, using imaging volumes or ROIs that excluded frontal areas (Kriegeskorte et al. 2007; Natu et al. 2010; Nestor et al. 2011; Anzellotti et al. 2014; Ghuman et al. 2014), with the exception of a recent report (Axelrod and Yovel 2015). Previous identity decoding studies found identity information in the posterior VT cortex including the FFA (Natu et al. 2010; Anzellotti et al. 2014; Axelrod and Yovel 2015) and anterior temporal areas, albeit with locations that are inconsistent across reports (Kriegeskorte et al. 2007; Natu et al. 2010; Nestor et al. 2011; Anzellotti et al. 2014) and absent in one (Axelrod and Yovel 2015). None of these reports analysed the representation of face view or how the representation of identity is progressively disentangled from the representation of face view in the face processing system. In separate studies, representation of head view and mirror symmetry has been reported in more posterior locations, both in face-responsive areas such as the OFA and in areas that are object-responsive such as the parahippocampal gyrus and in the dorsal visual pathway (Pourtois et al. 2005; Natu et al. 2010; Nestor et al. 2011; Kietzmann et al. 2012). Two studies reported representation of mirror symmetry in the FFA (Kietzmann et al. 2012; Axelrod and Yovel 2015). We find that view-invariance of the representation of identity in FFA is limited as it is entangled with the representation of face view, including mirror symmetry, suggesting that it may be more like the monkey face patch AL than ML/MF. Identity-invariant representation of head view in EVC and OFA suggests that ML/MF may be more like the OFA than FFA.

The rIFFA appears to be difficult to identify with localizers that use static images without multiple views of the same identity. It is more consistently activated by tasks that involve matching identity across views, dynamic images, or face memory. The increased sensitivity to dynamic face stimuli led Duchaine and Yovel (2015) to conclude that this area is part of a “dorsal face pathway” that is more involved in processing face movement, but the review of the literature and our current results suggest that this area plays a key role in the representation of identity that is integrated across face views. Dynamic stimuli may enhance the response in this area because they present changing views of the same identity in a natural sequence. Dynamic visual features that capture how face images change with natural movement may play an important role in building a three-dimensional view-invariant representation (Blanz and Vetter 2003; O'Toole et al. 2011). Prior to scanning, subjects saw dynamic videos of the 4 identities to afford learning a robust, three-dimensional representation of each identity.

We were able to replicate others’ findings in anterior temporal cortex generally, but only with the ROI analysis. Identity decoding accuracy in the ATFA was lower than accuracy in the FFA, but that may be due to the larger number of voxels in the FFA ROI and the greater reliability of identifying face-selective voxels there. The rIFFA was identified initially with multivariate pattern analyses but also showed face-selectivity with univariate contrasts (Supplementary Fig. S3), whereas the ATFA was identified only with the localizer. Both the ATFA and rIFFA showed significant decoding of identity and no trend towards decoding head view. The nature of further processing that is realized in the rIFFA, and how this area interacts with the ATFA, remains unclear. Single unit recording studies of the representation of identity in the monkey inferior frontal face patch (Tsao et al. 2008b) may further elucidate the role of this face patch in representing face identity. Such studies, however, may require familiarization with face identities using dynamic stimuli and/or a task that involves memory.

A view-invariant representation of a face's identity may be necessary to activate person knowledge about that individual and evoke an appropriate emotional response (Gobbini and Haxby 2007; O'Toole et al. 2011). Thus, the view-invariant representation in rIFFA may provide a link to the extended system for face perception, most notably regions in medial prefrontal cortex and temporoparietal junction for person knowledge and the anterior insula and amygdala for emotion (Haxby et al. 2000; Gobbini and Haxby 2007; Collins and Olson 2014), and thereby be critical for engaging the extended system in the successful recognition of familiar individuals.

Supplementary Material

Supplementary material can be found here.

Notes

We are grateful to Jim Haxby, Carlo Cipolli and Brad Duchaine for helpful discussion and comments on a previous draft of this paper and to Yu-Chien Wu for help with the scanning parameters. Conflict of Interest: None declared.

Funding

This research was supported by the CompX Faculty Grant from the William H Neukom 1964 Institute for Computational Science to MIG.

References

- Anzellotti S, Fairhall SL, Caramazza A. 2014. Decoding representations of face identity that are tolerant to rotation. Cereb Cortex. 24:1988–1995.

- Axelrod V, Yovel G. 2015. Successful decoding of famous faces in the fusiform face area. PLoS ONE. 10:e0117126.

- Barbeau EJ, Taylor MJ, Regis J, Marquis P, Chauvel P, Liégeois-Chauvel C. 2008. Spatio temporal dynamics of face recognition. Cereb Cortex. 18:997–1009.

- Blanz V, Vetter T. 2003. Face recognition based on fitting a 3D morphable model. IEEE Trans Pattern Anal Mach Intell. 25:1063–1074.

- Collins JA, Olson IR. 2014. Beyond the FFA: the role of the ventral anterior temporal lobes in face processing. Neuropsychologia. 61:65–79.

- Cortes C, Vapnik V. 1995. Support-vector networks. Mach Learn. 20:273–297.

- Courtney SM, Ungerleider LG, Keil K, Haxby JV. 1996. Object and spatial visual working memory activate separate neural systems in human cortex. Cereb Cortex. 6:39–49.

- Courtney SM, Ungerleider LG, Keil K, Haxby JV. 1997. Transient and sustained activity in a distributed neural system for human working memory. Nature. 386:608–611.

- Cox RW. 1996. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res Int J. 29:162–173.

- Dalrymple KA, Davies-Thompson J, Oruc I, Handy TC, Barton JJS, Duchaine B. 2014. Spontaneous perceptual facial distortions correlate with ventral occipitotemporal activity. Neuropsychologia. 59:179–191.

- Dubois J, de Berker AO, Tsao DY. 2015. Single-unit recordings in the macaque face patch system reveal limitations of fMRI MVPA. J Neurosci. 35:2791–2802.

- Duchaine B, Yovel G. 2015. A revised neural framework for face processing. Annu Rev Vis Sci. 1:393–416.

- Fischl B, Sereno MI, Tootell RBH, Dale AM. 1999. High-resolution intersubject averaging and a coordinate system for the cortical surface. Hum Brain Mapp. 8:272–284.

- Fox CJ, Iaria G, Barton JJS. 2009. Defining the face processing network: optimization of the functional localizer in fMRI. Hum Brain Mapp. 30:1637–1651.

- Freiwald WA, Tsao DY. 2010. Functional compartmentalization and viewpoint generalization within the macaque face-processing system. Science. 330:845–851.

- Ghuman AS, Brunet NM, Li Y, Konecky RO, Pyles JA, Walls SA, Destefino V, Wang W, Richardson RM. 2014. Dynamic encoding of face information in the human fusiform gyrus. Nat Commun. 5:5672.

- Gobbini MI, Haxby JV. 2007. Neural systems for recognition of familiar faces. Neuropsychologia, The Perception of Emotion and Social Cues in Faces. 45:32–41.

- Guntupalli JS, Hanke M, Halchenko YO, Connolly AC, Ramadge PJ, Haxby JV. 2016. A model of representational spaces in human cortex. Cereb Cortex. 26:2919–2934.

- Halgren E, Baudena P, Heit G, Clarke M, Marinkovic K, Chauvel P. 1994. Spatio-temporal stages in face and word processing. 2. Depth-recorded potentials in the human frontal and Rolandic cortices. J Physiol. 88:51–80.

- Hanke M, Halchenko YO, Sederberg PB, Hanson SJ, Haxby JV, Pollmann S. 2009. PyMVPA: a Python toolbox for multivariate pattern analysis of fMRI data. Neuroinformatics. 7:37–53.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. 2001. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 293:2425–2430.

- Haxby JV, Hoffman EA, Gobbini MI. 2000. The distributed human neural system for face perception. Trends Cogn Sci. 4:223–233.

- Haxby JV, Horwitz B, Ungerleider LG, Maisog JM, Pietrini P, Grady CL. 1994. The functional organization of human extrastriate cortex: a PET-rCBF study of selective attention to faces and locations. J Neurosci. 14:6336–6353.

- Haxby JV, Ungerleider LG, Horwitz B, Maisog JM, Rapoport SI, Grady CL. 1996. Face encoding and recognition in the human brain. Proc Natl Acad Sci USA. 93:922–927.

- Haxby JV, Ungerleider LG, Horwitz B, Rapoport SI, Grady CL. 1995. Hemispheric differences in neural systems for face working memory: a PET-rCBF study. Hum Brain Mapp. 3:68–82.

- Haxby JV, Gobbini MI. 2011. Distributed neural systems for face perception. In: Calder A, Rhodes G, Johnson M, Haxby J, editors. Oxford handbook of face perception. New York: Oxford University Press; p. 93–110.

- Ishai A, Haxby JV, Ungerleider LG. 2002. Visual imagery of famous faces: effects of memory and attention revealed by fMRI. NeuroImage. 17:1729–1741.

- Ishai A, Schmidt CF, Boesiger P. 2005. Face perception is mediated by a distributed cortical network. Brain Res Bull. 67:87–93.

- Kietzmann TC, Swisher JD, König P, Tong F. 2012. Prevalence of selectivity for mirror-symmetric views of faces in the ventral and dorsal visual pathways. J Neurosci. 32:11763–11772.

- Kietzmann TC, Poltoratski S, König P, Blake R, Tong F, Ling S. 2015. The occipital face area is causally involved in facial viewpoint perception. J Neurosci. 35:16398–16403.

- Kriegeskorte N, Formisano E, Sorger B, Goebel R. 2007. Individual faces elicit distinct response patterns in human anterior temporal cortex. Proc Natl Acad Sci USA. 104:20600–20605.

- Kriegeskorte N, Mur M, Bandettini P. 2008. Representational similarity analysis—connecting the branches of systems neuroscience. Front Syst Neurosci. 2:4.

- Marinkovic K, Trebon P, Chauvel P, Halgren E. 2000. Localised face processing by the human prefrontal cortex: face-selective intracerebral potentials and post-lesion deficits. Cogn Neuropsychol. 17:187–199.

- Misaki M, Kim Y, Bandettini PA, Kriegeskorte N. 2010. Comparison of multivariate classifiers and response normalizations for pattern-information fMRI. NeuroImage. 53:103–118.

- Natu VS, Jiang F, Narvekar A, Keshvari S, Blanz V, O'Toole AJ. 2010. Dissociable neural patterns of facial identity across changes in viewpoint. J Cogn Neurosci. 22:1570–1582.

- Nestor A, Plaut DC, Behrmann M. 2011. Unraveling the distributed neural code of facial identity through spatiotemporal pattern analysis. Proc Natl Acad Sci USA. 108:9998–10003.

- Oosterhof NN, Wiestler T, Downing PE, Diedrichsen J. 2011. A comparison of volume-based and surface-based multi-voxel pattern analysis. NeuroImage. 56:593–600.

- O'Toole AJ, Phillips PJ, Weimer S, Roark DA, Ayyad J, Barwick R, Dunlop J. 2011. Recognizing people from dynamic and static faces and bodies: Dissecting identity with a fusion approach. Vis Res. 51:74–83.

- Pitcher D, Dilks DD, Saxe RR, Triantafyllou C, Kanwisher N. 2011. Differential selectivity for dynamic versus static information in face-selective cortical regions. NeuroImage. 56:2356–2363.

- Pourtois G, Schwartz S, Seghier ML, Lazeyras F, Vuilleumier P. 2005. Portraits or people? Distinct representations of face identity in the human visual cortex. J Cogn Neurosci. 17:1043–1057.

- Rajimehr R, Young JC, Tootell RBH. 2009. An anterior temporal face patch in human cortex, predicted by macaque maps. Proc Natl Acad Sci USA. 106:1995–2000.

- Rapcsak SZ, Nielsen L, Littrell LD, Glisky EL, Kaszniak AW, Laguna JF. 2001. Face memory impairments in patients with frontal lobe damage. Neurology. 57:1168–1175.

- Rapcsak SZ, Polster MR, Glisky ML, Comers JF. 1996. False recognition of unfamiliar faces following right hemisphere damage: neuropsychological and anatomical observations. Cortex. 32:593–611.

- Renzi C, Schiavi S, Carbon C-C, Vecchi T, Silvanto J, Cattaneo Z. 2013. Processing of featural and configural aspects of faces is lateralized in dorsolateral prefrontal cortex: a TMS study. NeuroImage. 74:45–51.

- Rotshtein P, Henson RNA, Treves A, Driver J, Dolan RJ. 2005. Morphing Marilyn into Maggie dissociates physical and identity face representations in the brain. Nat Neurosci. 8:107–113.

- Saygin ZM, Osher DE, Koldewyn K, Reynolds G, Gabrieli JDE, Saxe RR. 2012. Anatomical connectivity patterns predict face selectivity in the fusiform gyrus. Nat Neurosci. 15:321–327.

- Ó Scalaidhe SP, Wilson FAW, Goldman-Rakic PS. 1997. Areal segregation of face-processing neurons in prefrontal cortex. Science. 278:1135–1138.

- Sengupta A, Kaule FR, Guntupalli JS, Hoffmann MB, Häusler C, Stadler J, Hanke M. 2016. A studyforrest extension, retinotopic mapping and localization of higher visual areas. Scientific Data. 3:160093.

- Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TEJ, Johansen-Berg H, Bannister PR, De Luca M, Drobnjak I, Flitney DE, et al. 2004. Advances in functional and structural MR image analysis and implementation as FSL. NeuroImage. 23:S208–S219.

- Smith SM, Nichols TE. 2009. Threshold-free cluster enhancement: addressing problems of smoothing, threshold dependence and localisation in cluster inference. NeuroImage. 44:83–98.

- Thomas C, Moya L, Avidan G, Humphreys K, Jung KJ, Peterson MA, Behrmann M. 2008. Reduction in white matter connectivity, revealed by diffusion tensor imaging, may account for age-related changes in face perception. J Cogn Neurosci. 20(2):268–284.

- Tsao DY, Moeller S, Freiwald WA. 2008a. Comparing face patch systems in macaques and humans. Proc Natl Acad Sci USA. 105:19514–19519.

- Tsao DY, Schweers N, Moeller S, Freiwald WA. 2008b. Patches of face-selective cortex in the macaque frontal lobe. Nat Neurosci. 11:877–879.

- Vignal JP, Chauvel P, Halgren E. 2000. Localised face processing by the human prefrontal cortex: stimulation-evoked hallucinations of faces. Cogn Neuropsychol. 17:281–291.
