Abstract

Purpose

The aim of this article is to summarize recent published and unpublished research from our 2 laboratories on improving speech understanding in complex listening environments by listeners fit with cochlear implants (CIs).

Method

CI listeners were tested in 2 listening environments. One was a simulation of a restaurant with multiple, diffuse noise sources, and the other was a cocktail party with 2 spatially separated point sources of competing speech. At issue was the value of the following sources of information, or interventions, on speech understanding: (a) visual information, (b) adaptive beamformer microphones and remote microphones, (c) bimodal fittings, that is, a CI and contralateral low-frequency acoustic hearing, (d) hearing preservation fittings, that is, a CI with preserved low-frequency acoustic hearing in the same ear plus low-frequency acoustic hearing in the contralateral ear, and (e) bilateral CIs.

Results

A remote microphone provided the largest improvement in speech understanding. Visual information and adaptive beamformers ranked next, while bimodal fittings, bilateral fittings, and hearing preservation provided significant but smaller benefits than the other interventions or sources of information. Only bilateral CIs allowed listeners high levels of speech understanding when signals were roved over the frontal plane.

Conclusions

The evidence supports the use of bilateral CIs and hearing preservation surgery for best speech understanding in complex environments. These fittings, when combined with visual information and microphone technology, should lead to high levels of speech understanding by CI patients in complex listening environments.

This research forum contains papers from the 2016 Research Symposium at the ASHA Convention held in Philadelphia, PA.

In this article, we review recent research from our two laboratories on speech understanding by cochlear implant (CI) recipients when speech signals are presented in complex listening environments or, more generally, environments involving noise. Our aim is to evaluate different approaches to improving speech understanding in these environments.

The Problem

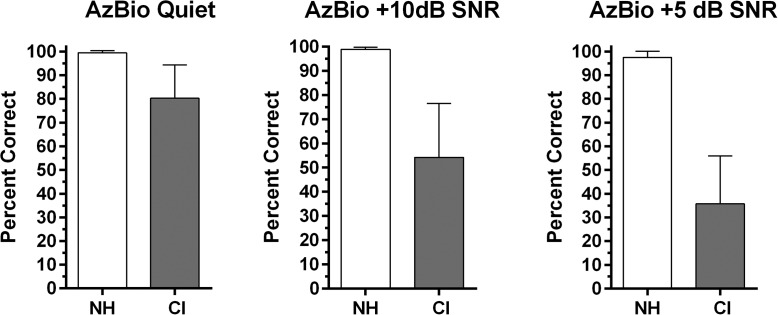

CIs can restore high levels of sentence understanding in quiet for a majority of listeners (e.g., Wilson, Dorman, Gifford, & McAlpine, 2016). However, this is not generally the case for signals presented in a noise background (e.g., Spahr, Dorman, & Loiselle, 2007). Moreover, even listeners who achieve a high level of performance in noise report increased listening difficulty and effort (Gifford et al., 2017). Figure 1 displays sentence understanding in quiet and in noise in a standard audiological test environment that used collocated speech and noise (multitalker babble) at 0° azimuth. The data in Figure 1 are from adults with normal hearing and adults with CIs. The listeners with normal hearing (n = 82) ranged in age from 20 to 70 years. The CI recipients (n = 65) had at least one year of experience with their devices and were a superset of patients described in Spahr et al. (2007). It is important to note that all had consonant-nucleus-consonant word scores of 50% correct or higher and, thus, were either average or better-than-average performers.

Figure 1.

Speech understanding in quiet and in noise (multitalker babble) by normal hearing listeners and by cochlear implant (CI) recipients. Error bars represent ±1 standard deviation. AzBio = sentence material; SNR = signal-to-noise ratio; NH = normal hearing.

For the listeners with normal hearing, noise presented with a signal-to-noise ratio (SNR) of +10 or +5 dB had very little effect on performance, with scores in quiet, +10 dB, and +5 dB SNR averaging 99%, 99%, and 97% correct, respectively. Statistical analysis revealed no difference in sentence recognition in the quiet and noise conditions. Noise, however, had a large effect on the CI listeners. Performance in quiet, although poorer than normal, was high with a mean score of 82% correct. In noise, performance plummeted to 54% correct at +10 dB SNR and 36% correct at +5 dB SNR. Statistical analysis revealed a significant effect of listening condition with scores in quiet, +10 dB, and +5 dB all being significantly different from one another. Thus, noise levels that cause no deficits in performance for listeners with normal hearing produce significant deficits for CI listeners.
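
The SNR values quoted above follow the usual definition: 10 times the base-10 logarithm of the ratio of speech power to noise power. As a minimal sketch (not the calibration procedure used in the studies reviewed here), the following shows how a noise signal could be scaled to reach a target SNR relative to a speech signal. The function names and the toy sample values in the usage note are assumptions made for illustration.

```python
import math

def mean_power(samples):
    """Mean squared amplitude of a sequence of samples."""
    return sum(s * s for s in samples) / len(samples)

def snr_db(speech, noise):
    """SNR in dB: 10 * log10(speech power / noise power)."""
    return 10.0 * math.log10(mean_power(speech) / mean_power(noise))

def scale_noise_to_snr(speech, noise, target_snr_db):
    """Return a copy of `noise` scaled so that the SNR re: `speech` equals the target."""
    current = snr_db(speech, noise)
    # Lowering the noise amplitude by g dB raises the SNR by g dB.
    gain = 10.0 ** ((current - target_snr_db) / 20.0)
    return [n * gain for n in noise]
```

For example, calling `scale_noise_to_snr(speech, noise, 5.0)` on two equal-length sample lists returns noise that, when measured with `snr_db`, sits 5 dB below the speech level.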

The Mechanism

In broad outline, signal processing for CIs involves stages of (a) bandpass filtering of the signal into n contiguous bands, (b) estimation of the energy in the bands, and (c) generation of pulses that are proportional to the energy in filter bands (for reviews, see Wilson et al., 2016; Zeng, Rebscher, Harrison, Sun, & Feng, 2008). The pulses are directed to electrodes in tonotopic fashion; that is, the outputs of filters with high center frequencies are directed to more basal electrodes and the outputs from filters with lower center frequencies are directed to more apical electrodes.
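
The three stages can be sketched for a single short frame of audio. This is an illustrative toy, not the processing strategy of any actual device: it uses a plain discrete Fourier transform in place of a bank of bandpass filters, and it omits details of real processors such as envelope smoothing and amplitude compression. The band limits (200 to 7000 Hz), the log spacing, and all function names are assumptions.

```python
import cmath
import math

def band_edges(n_bands, f_lo=200.0, f_hi=7000.0):
    """Log-spaced edges for n adjacent analysis bands (edge values are assumptions)."""
    ratio = (f_hi / f_lo) ** (1.0 / n_bands)
    return [f_lo * ratio ** i for i in range(n_bands + 1)]

def band_energies(frame, sample_rate, edges):
    """Stages (a) and (b): split one frame into bands via a DFT and sum the energy per band."""
    n = len(frame)
    energies = [0.0] * (len(edges) - 1)
    for k in range(1, n // 2):
        freq = k * sample_rate / n
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame))
        for b in range(len(energies)):
            if edges[b] <= freq < edges[b + 1]:
                energies[b] += abs(coeff) ** 2
    return energies

def pulse_amplitudes(energies, max_amplitude=1.0):
    """Stage (c): one pulse amplitude per electrode, proportional to band energy.
    Index 0 is the lowest band (most apical electrode); the last index is the most basal."""
    peak = max(energies)
    if peak == 0.0:
        return [0.0] * len(energies)
    return [max_amplitude * e / peak for e in energies]
```

With four bands and a 1000-Hz tone as input, nearly all of the energy falls in the second-lowest band, so the second most apical electrode in this toy layout would receive the largest pulse.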

Modern CIs have 12–22 physical electrodes and can create many more virtual pitch percepts and/or channels (e.g., Donaldson, Dawson, & Borden, 2011; Wilson, Lawson, Zerbi, & Finley, 1992). If cortical processing regions responded to the energy at each electrode as a separate channel of stimulation and information, then the problem of speech understanding in noise, illustrated in Figure 1, would very likely be much reduced. However, this is not the case.

For example, Fishman, Shannon, and Slattery (1997) configured a CI signal processor to output to one, two, four, seven, 10, or 20 electrodes. Sentence understanding reached approximately 70% correct with four electrodes activated, and performance did not increase significantly with seven, 10, or 20 electrodes activated. Replications and extensions of this experiment have reported asymptotic performance, most generally, with activation of four to eight electrodes (e.g., Friesen, Shannon, Baskent, & Wang, 2001; Kiefer, Von Ilberg, Rupprecht, Hubner-Egner, & Knecht, 2000; Lawson, Wilson, & Zerbi, 1996; Wilson, 1997). Thus, the number of functional or independent channels in a CI is far fewer than the physical number of electrodes. This is relevant to the problem of speech understanding in noise because tests in noise with listeners with normal hearing using vocoder simulations of CIs show that the fewer the channels, the greater the impact of a fixed level of noise (e.g., Dorman, Loizou, Spahr, & Maloff, 2002). If CI listeners have access to only four to eight channels of information, then, in the absence of other technologies, performance in noise will be poor.

In the following sections, we describe approaches to improving speech understanding in noise by CI recipients.

Add Visual Information

In the United States, speech understanding in quiet, or in noise, by CI listeners is evaluated, with very few exceptions, in auditory-only test environments. On the other hand, CI listeners report that, most of the time, they can see the face of the person with whom they are conversing (Dorman, Liss, et al., 2016). It is difficult to imagine any listener, especially one with hearing loss, purposely closing their eyes in a restaurant or cocktail party, indeed in any noisy environment, while attempting to understand speech.

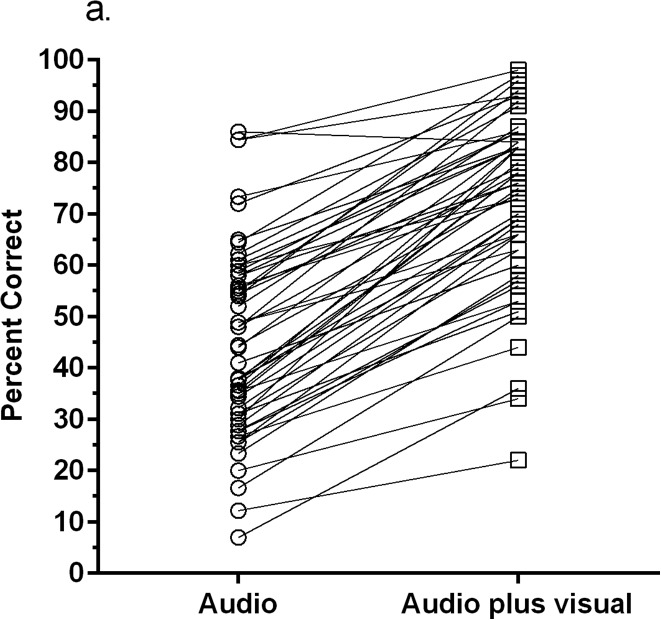

Visual information provides significant value for speech understanding in noise with improvements up to 15 dB in speech reception threshold even for listeners with normal hearing (Sumby & Pollack, 1954). Figure 2, using data from Dorman, Liss, et al. (2016), displays speech understanding in a multitalker babble environment (with collocated speech and babble) for unilateral and bilateral CI recipients in auditory-only and in audiovisual conditions. The data indicate that improvements of 30 to 40 percentage points in sentence understanding in noise can be obtained when visual information is added to the auditory information (e.g., Desai, Stickney, & Zeng, 2008; Gray, Quinn, Vanat, & Baguley, 1995; Kaiser, Kirk, Lachs, & Pisoni, 2003). It is important to note that visual information, as shown in Figure 2, can improve scores even when the scores in auditory-only test conditions are very high, for example, between 80% and 90% correct.

Figure 2.

Percent sentence recognition for cochlear implant listeners in noise (multitalker babble) in an audio-alone condition and in an audio-plus-visual condition (data from Dorman, Liss, et al., 2016).

Add a Noise Reduction Strategy

The signal processors in the most recent generation of CIs have access to the outputs of two omnidirectional microphones mounted on a single CI case. The difference in the time of arrival of a sound at the two microphone locations can be used to steer maximum sensitivity to the front of the listener and to attenuate inputs from the side and back. Devices of this type are termed beamformers and have long been available for hearing aids (e.g., Peterson, Wei, Rabinowitz, & Zurek, 1990) and more recently for CIs (e.g., Buechner, Dyballa, Hehrmann, Fredelake, & Lenarz, 2014; Spriet et al., 2007; Wolfe et al., 2012). In an adaptive beamformer, phase information is used to steer a null, or maximum attenuation, toward a noise source, and the location of the null will vary as a function of the noise location. For a review of noise reduction strategies for CIs, see Kokkinakis, Azimi, Hu, and Friedland (2012).
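
The time-of-arrival principle can be illustrated with a delay-and-subtract arrangement, a textbook first-order differential beamformer. The sketch below is a simplification under stated assumptions, not the algorithm implemented in any commercial CI processor: delays are whole numbers of samples, the source lies either straight ahead or directly behind, and all function names are hypothetical.

```python
def delay(signal, n_samples):
    """Delay a signal by a whole number of samples, padding the start with zeros."""
    if n_samples == 0:
        return list(signal)
    return [0.0] * n_samples + list(signal[:-n_samples])

def delay_and_subtract(front_mic, rear_mic, internal_delay):
    """Subtract the delayed rear-microphone signal from the front-microphone signal.
    A source whose rear-to-front travel time equals `internal_delay` is cancelled."""
    delayed_rear = delay(rear_mic, internal_delay)
    return [f - r for f, r in zip(front_mic, delayed_rear)]

def simulate_mics(source, travel_samples, from_front):
    """Return (front, rear) microphone signals for a source ahead of or behind the listener.
    The microphone nearer the source receives the sound first (hypothetical setup)."""
    late = delay(source, travel_samples)
    return (list(source), late) if from_front else (late, list(source))
```

When `internal_delay` matches the travel time between the microphones, a source directly behind the listener is cancelled while a source in front is passed. In an adaptive design, a parameter playing the role of `internal_delay` would be updated over time so that the null follows the dominant noise source.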

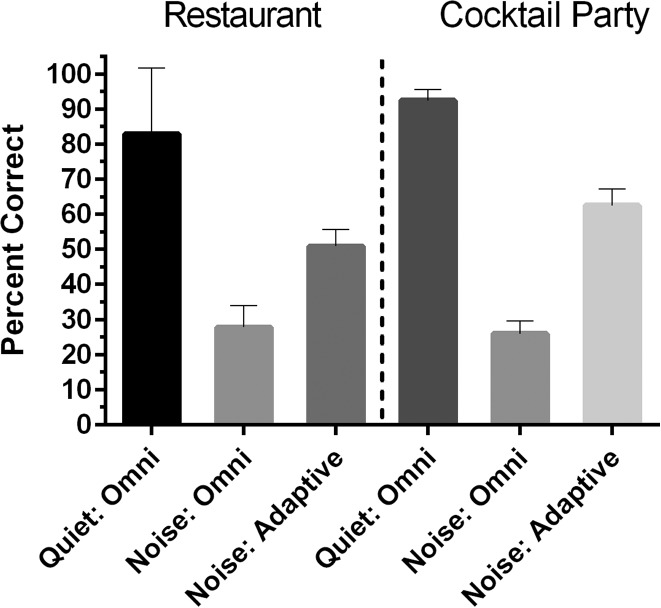

Adaptive beamformers are most effective with well-defined noise sources and less so with diffuse noise sources. This is shown in Figure 3, which displays the sentence understanding scores of 10 unilateral CI recipients using the MED-EL ASM 2.0 microphone system in two noise environments. Results from a diffuse noise environment are shown in the left-hand panel (redrawn from Dorman, Natale, & Loiselle, in press). In this environment (the R-SPACE listening environment; Revitronix, Braintree, VT), directionally appropriate noise (recorded in a restaurant) was output from eight loudspeakers surrounding the listener, including the speaker from which the target sentences were presented. Noise levels were adjusted for each listener to drive performance in the omnidirectional microphone condition down to less than 50% correct. Implementation of the adaptive beamformer produced a 23-percentage-point advantage in speech understanding relative to an omnidirectional microphone.

Figure 3.

Speech understanding in noise for cochlear implant listeners in a diffuse noise field (restaurant noise) and in a field with point sources for noise (different talkers) at ±90° to the listener (i.e., the cocktail party environment). Dotted line separates data collected in the two listening environments. Error bars represent ±1 standard deviation. Omni = omnidirectional microphone; Adaptive = adaptive beamformer.

In Figure 3 (right-side panel), data are shown for a complex environment in which two noise sources were used. In this environment, termed the cocktail party, target sentences (female talker) were output from the front loudspeaker, whereas continuous distracter sentences (two different male talkers) were output from speakers at ±90°. Implementation of the adaptive beamformer improved performance by 37 percentage points relative to an omnidirectional microphone.

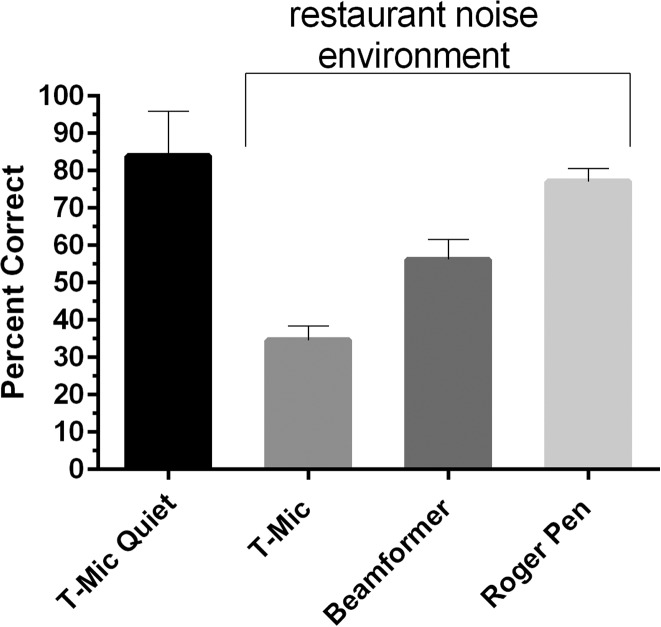

The largest gains in intelligibility in noise can be obtained when CI listeners have access to a remote microphone system. Figure 4 shows the performance of 10 experienced unilateral CI recipients fit with an adaptive beamformer (Phonak UltraZoom; Hehrmann, Fredelake, Hamacher, Dyballa, & Büchner, 2012) and a digital modulation microphone system (Roger Pen, Phonak AG). The R-SPACE restaurant environment was used but with no noise at 0°, that is, from the speaker from which the target sentences were output. Noise levels were adjusted for each listener to drive performance in the T-Mic condition down to less than 50% correct. At this noise level, implementation of the adaptive beamformer produced a 21-percentage-point improvement in performance. The Roger Pen afforded an impressive 42-percentage-point improvement—a score that doubled the benefit provided by the beamformer and was within 10 percentage points of the listeners' performance in quiet. For other reports using remote microphones, see, for example, Schafer and Thibodeau (2004) and Wolfe, Morais, Schafer, Agrawal, and Koch (2015).

Figure 4.

Speech understanding by cochlear implant listeners in a modified restaurant noise using three microphone systems. Unpublished data from Arizona State University. Error bars represent ±1 standard deviation.

Add Hearing in the Ear Contralateral to the CI

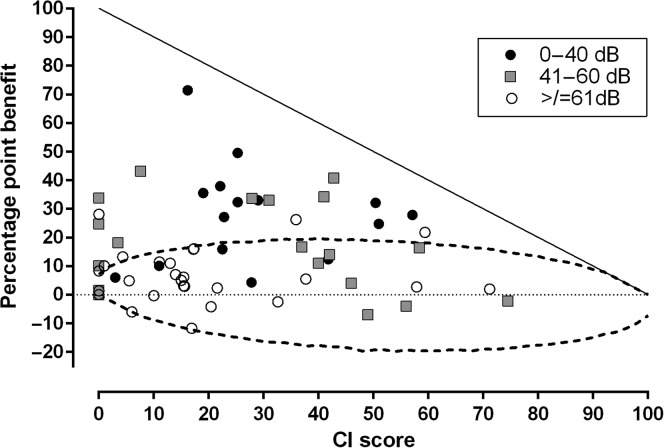

A very large body of literature attests to the value of low-frequency acoustic hearing in the ear contralateral to the CI, that is, a bimodal fitting (e.g., Ching, Incerti, & Hill, 2004; Gifford, Dorman, McKarns, & Spahr, 2007; Kong, Stickney, & Zeng, 2005; Mok, Grayden, Dowell, & Lawrence, 2006). For bimodal recipients, the magnitude of the improvement in performance is especially large for sentences in noise. Figure 5, adapted from data in Dorman et al. (2015), shows the percentage point gain in performance in the CI plus contralateral hearing aid condition relative to the CI-alone condition. The x-axis is the CI-alone score. The listeners were unilateral CI recipients and were tested at +5 dB SNR in multitalker babble, a common real-world SNR (Pearsons, Bennett, & Fidell, 1977; Smeds, Wolters, & Rung, 2015). One group had low-frequency hearing thresholds (average thresholds at 125, 250, and 500 Hz) of 40 dB HL or better. A second group had thresholds between 41 and 60 dB HL, whereas the third group had thresholds greater than 60 dB HL. For the group with low-frequency thresholds of 40 dB HL or better, the mean improvement in performance was 28 percentage points, and about two thirds of the recipients showed benefit. For the group with thresholds between 41 and 60 dB HL, the mean improvement was significantly less, 17 percentage points, whereas for the group with thresholds greater than 60 dB HL, the mean improvement was only 6 percentage points. Thus, if CI recipients have relatively good low-frequency hearing in the ear contralateral to the CI, and if they are appropriately aided, then speech understanding in noise will be significantly better.
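
The grouping by low-frequency threshold can be written out as a short sketch. The mean benefits are the values reported above; the function names, the dictionary layout, and the handling of averages that fall between whole-decibel boundaries (for example, 40.3 dB HL) are assumptions made for illustration.

```python
# Mean bimodal benefit (percentage points) reported above for each threshold group.
MEAN_BENEFIT_BY_GROUP = {
    "40 dB HL or better": 28,
    "41-60 dB HL": 17,
    "greater than 60 dB HL": 6,
}

def low_frequency_average(thresholds_db_hl):
    """Mean threshold (dB HL) across 125, 250, and 500 Hz."""
    return sum(thresholds_db_hl[f] for f in (125, 250, 500)) / 3.0

def threshold_group(average_db_hl):
    """Assign a group label; fractional averages are binned upward (an assumption)."""
    if average_db_hl <= 40:
        return "40 dB HL or better"
    if average_db_hl <= 60:
        return "41-60 dB HL"
    return "greater than 60 dB HL"
```

For a hypothetical listener with thresholds of 30, 35, and 45 dB HL at 125, 250, and 500 Hz, the average is about 37 dB HL, which places the listener in the group with the largest mean benefit. The group means describe the samples tested and should not be read as predictions for an individual.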

Figure 5.

Percentage point benefit in speech understanding in a bimodal test condition relative to a cochlear implant (CI)-alone condition. The x-axis is the CI-alone score. The parameter is the mean low-frequency threshold in the ear with acoustic hearing. The speech material was the AzBio sentences presented at +5 dB SNR. The noise signal was multitalker babble. The dotted function is the 95% confidence limit for the test material. Figure reproduced with permission from Dorman et al. (2015).

Add Low-Frequency Hearing in Both the Contralateral and the Operated Ear

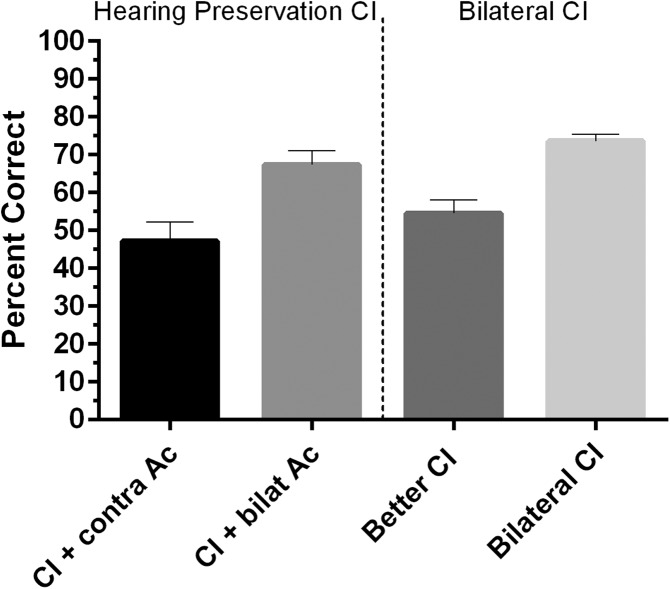

A recent advance in the CI field has been the design of electrodes and surgical procedures that allow the preservation of acoustic hearing in the cochlea in which the CI is inserted (Hunter et al., 2016; Lenarz et al., 2013; Skarzynski et al., 2014; von Ilberg et al., 1999). When hearing is preserved, the recipients commonly have low-frequency acoustic hearing in both the ear contralateral to the CI and the ear with the CI. In a recent experiment, Loiselle et al. (2016) tested a group of unilateral CI recipients with excellent hearing preservation in the operated ear (i.e., the mean threshold at 250 Hz was 30 dB HL and thresholds were within 15 dB of the thresholds in the ear contralateral to the CI). The test environment was the cocktail party described earlier (i.e., a female talker in front and continuous male talkers at ±90°). Figure 6 (left panel) shows the performance of the listeners in two conditions: (a) CI plus contralateral acoustic hearing, or bimodal hearing, and (b) the bimodal hearing configuration plus ipsilateral acoustic hearing (i.e., aiding the preserved acoustic hearing in the CI ear). Performance in the latter condition was significantly higher (by 18 percentage points) than performance in the bimodal condition. Thus, in a complex listening environment, hearing preservation surgery can be of significant value for speech understanding. The theorized mechanism underlying the benefit is the retention of low-frequency timing cues (e.g., Gifford et al., 2013, 2014). Retention of interaural time differences (ITDs) allows the listener to make a central comparison between the ITDs of the competing noises and the ITD of the target, which is 0 μs when the target is placed at 0°. This benefit is commonly referred to as squelch or binaural unmasking of speech.
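
To give a sense of the size of the ITDs involved, the sketch below uses Woodworth's spherical-head approximation, ITD = (r/c)(θ + sin θ), for a distant source at azimuth θ between -90° and +90°. This formula is a standard textbook model and is not taken from the studies reviewed here; the head radius (0.0875 m), the speed of sound (343 m/s), and the function name are assumed typical values chosen for illustration.

```python
import math

def woodworth_itd_us(azimuth_deg, head_radius_m=0.0875, speed_of_sound=343.0):
    """Approximate ITD in microseconds for a distant source (spherical-head model).
    Intended for azimuths between -90 and +90 degrees; 0 degrees is straight ahead."""
    theta = math.radians(azimuth_deg)
    return 1e6 * (head_radius_m / speed_of_sound) * (theta + math.sin(theta))
```

Under these assumptions, a target at 0° yields an ITD of 0 μs, whereas maskers at ±90° yield ITDs of several hundred microseconds with opposite signs, which is the contrast that binaural unmasking is thought to exploit.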

Figure 6.

Speech understanding in a cocktail party noise environment by hearing preservation recipients (left) in two conditions, cochlear implant (CI) plus contralateral acoustic hearing (contra Ac) and CI plus acoustic hearing in both ears (bilat Ac), and by bilateral CI recipients (right) in two conditions, better CI alone and bilateral CIs. Error bars represent ±1 standard error of mean. Figure redrawn from Loiselle et al. (2016).

Add a Second CI

Recipients who are first fit with a single CI and later receive a second CI report a significant improvement in quality of life (Bichey & Miyamoto, 2008). Part of this improvement is related to the improvement in speech understanding in complex listening environments, which is attributed to both summation (e.g., Buss et al., 2008; Litovsky et al., 2006; Schleich, Nopp, & D'Haese, 2004) and the value of interaural level differences (ILDs; Grantham, Ashmead, Ricketts, Haynes, & Labadie, 2008). Figure 6 (right panel) shows the gain in speech understanding for bilateral CI listeners in test conditions identical to those used for the hearing preservation recipients described earlier. The mean improvement in score from the single CI condition to the bilateral CI condition was 20 percentage points. Thus, as was the case for hearing preservation and bilateral, low-frequency acoustic hearing, bilateral CIs can be of significant value in noise. Note, however, that the bilateral CI recipients use different cues (ILDs) than the hearing preservation recipients (ITDs) to achieve this benefit.

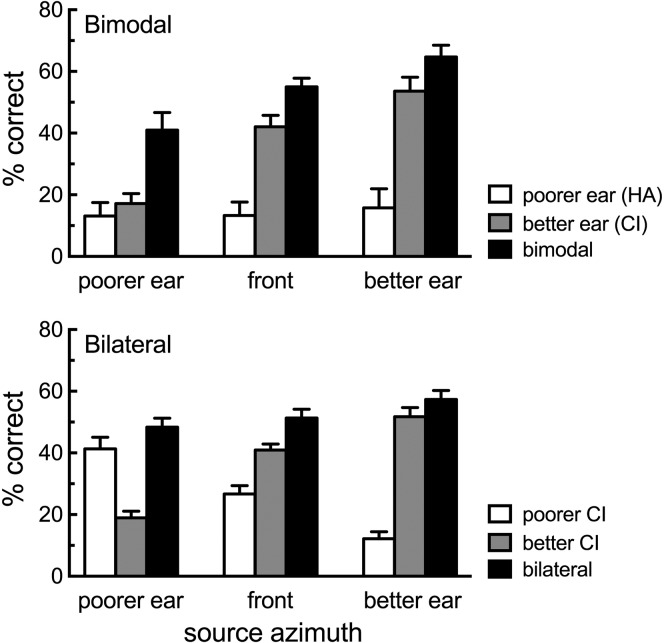

Another set of test conditions may more accurately demonstrate the value of bilateral CIs in real-world listening situations. These conditions test speech understanding in a diffuse restaurant noise with a roving target signal. In communicative environments involving group gatherings, there will rarely be a single talker. Rather, listeners participate in group conversation in which the target signal moves among the group participants.

We recently assessed the effect of a roving target on speech understanding for both bilateral and bimodal adult listeners. For each listener group, performance was assessed for the poorer ear alone, the better ear alone, and the bilateral, best-aided condition (bimodal or bilateral CI). The noise environment was provided by the R-SPACE system with restaurant noise originating from all speakers except the one presenting the target signal. SNR was individually determined to drive performance in the better ear condition to approximately 50% correct. Mean data are shown in Figure 7. For the better ear conditions, both groups demonstrated source effects, with the highest performance occurring when the signal was to the side of the better ear and significantly poorer performance when the signal originated from 0° or from the side of the contralateral ear. It is important to note that, for the bilateral CI listeners in the bilateral CI condition, speech understanding scores were not significantly different across the three source azimuths. That is, because the bilateral CI users had access to better ear listening on both sides, they overcame the deleterious effects of ear asymmetry and source azimuth that significantly influenced the bimodal listeners. One real-world implication of these data is that bilateral CI users will be less dependent upon preferential seating in group communicative environments.

Figure 7.

Mean TIMIT (Texas Instruments Massachusetts Institute of Technology) sentence recognition (in percent correct) as a function of the target signal azimuth for bimodal and bilateral cochlear implant (CI) listeners. Error bars represent ±1 standard error of mean. HA = hearing aid.

Conclusions

The opportunities for improving speech understanding for CI recipients in complex listening environments are much greater today than a decade ago. Beamforming technology and digital, remote microphone systems are now available for CIs. Clinicians routinely recommend a bimodal hearing configuration or bilateral cochlear implantation. In cases of both unilateral and bilateral implantation, recipients may have acoustic hearing preservation in the implanted ear. As a result, most CI recipients listen with two ears.

In less complex listening environments, that is, with low-level background noise and a stationary target at 0°, each of the interventions discussed earlier is capable of improving speech understanding. However, in more complex environments with nonstationary sound sources, there is a clear advantage to having two CIs or two ears with low-frequency acoustic hearing. Thus, bimodal listeners who have restricted high-frequency audibility and significant asymmetry in speech understanding across the ears will be better served by a second implant. In the bimodal configuration, these recipients will not have access to either ITDs or ILDs in both ears, placing them at a significant disadvantage in complex listening environments (e.g., Dorman, Loiselle, Cook, Yost, & Gifford, 2016; van Hoesel, 2015).

With advancements in (a) electrode design, (b) pharmaceutical interventions to ameliorate inflammation and for delivering neurotrophins to the inner ear, and (c) surgical techniques, the ability to preserve hearing in the operated ear will improve in the future. Indeed, the number of recipients with bilateral CIs and bilateral low-frequency acoustic hearing (e.g., Dorman et al., 2013) is already growing. This is the intervention of the future. It will afford listeners the greatest possibility for restoration of audibility, speech quality, speech understanding in various complex environments, and spatial hearing abilities. If fit with the latest microphone technology, these patients will achieve very high levels of speech understanding in many complex, real-world listening environments.

Acknowledgments

The Research Symposium is supported by the National Institute on Deafness and Other Communication Disorders of the National Institutes of Health under Award Number R13DC003383. This work was supported by Grant R01 DC 008329 awarded to Michael F. Dorman and Grants R01 DC 009404 and DC 010821 awarded to Rene H. Gifford from the National Institute on Deafness and Other Communication Disorders. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

References

- Bichey B. G., & Miyamoto R. T. (2008). Outcomes in bilateral cochlear implantation. Otolaryngology–Head and Neck Surgery, 138(5), 655–661. https://doi.org/10.1016/j.otohns.2007.12.020 [DOI] [PubMed] [Google Scholar]

- Buechner A., Dyballa K-H, Hehrmann P., Fredelake S., & Lenarz T. (2014). Advanced beamformers for cochlear implant users: Acute measurement of speech perception in challenging listening conditions. PLoS ONE, 9(4), e95542 https://doi.org/10.1371/journal.pone.0095542 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buss E., Pillsbury H. C., Buchman C. A., Pillsbury C. H., Clark M. S., Haynes D. S., … Barco A. L. (2008). Multicenter U.S. bilateral MED-EL cochlear implantation study: Speech perception over the first year of use. Ear and Hearing, 29(1), 20–32. https://doi.org/10.1097/AUD.0b013e31815d7467 [DOI] [PubMed] [Google Scholar]

- Ching T. Y. C., Incerti P., & Hill M. (2004). Binaural benefits for adults who use hearing aids and cochlear implants in opposite ears. Ear and Hearing, 25(1), 9–21. https://doi.org/10.1097/01.AUD.0000111261.84611.C8 [DOI] [PubMed] [Google Scholar]

- Desai S., Stickney G., & Zeng F.-G. (2008). Auditory-visual speech perception in normal-hearing and cochlear-implant listeners. The Journal of the Acoustical Society of America, 123(1), 428–440. https://doi.org/10.1121/1.2816573 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Donaldson G. S., Dawson P. K., & Borden L. Z. (2011). Within-subjects comparison of the HiRes and Fidelity120 speech processing strategies: Speech perception and its relation to place-pitch sensitivity. Ear and Hearing, 32(2), 238–250. https://doi.org/10.1097/AUD.0b013e3181fb8390 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dorman M. F., Cook S., Spahr A., Zhang T., Loiselle L., Schramm D., … Gifford R. (2015). Factors constraining the benefit to speech understanding of combining information from low-frequency hearing and a cochlear implant. Hearing Research, 322, 107–111. https://doi.org/10.1016/j.heares.2014.09.010 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dorman M. F., Liss J., Wang S., Berisha V., Ludwig C., &Natale S. C. (2016). Experiments on auditory-visual perception of sentences by users of unilateral, bimodal, and bilateral cochlear implants. Journal of Speech, Language, and Hearing Research, 59(6), 1505–1519. https://doi.org/10.1044/2016_JSLHR-H-15-0312 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dorman M. F., Loiselle L. H., Cook S. J., Yost W. A., & Gifford R. H. (2016). Sound source localization by normal-hearing listeners, hearing-impaired listeners and cochlear implant listeners. Audiology and Neurotology, 21(3), 127–131. https://doi.org/10.1159/000444740 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dorman M. F., Loizou P. C., Spahr A. J., & Maloff E. (2002). A comparison of the speech understanding provided by acoustic models of fixed-channel and channel-picking signal processors for cochlear implants. Journal of Speech, Language, and Hearing Research, 45(4), 783–788. https://doi.org/10.1044/1092-4388(2002/063) [DOI] [PubMed] [Google Scholar]

- Dorman M. F., Natale S., & Loiselle L. (in press). Speech understanding and sound source localization by cochlear implant listeners using a pinna-effect imitating microphone and an adaptive beamformer. Journal of the American Academy of Audiology. [DOI] [PubMed] [Google Scholar]

- Dorman M. F., Spahr A. J., Loiselle L., Zhang T., Cook S., Brown C., & Yost W. (2013). Localization and speech understanding by a patient with bilateral cochlear implants and bilateral hearing preservation. Ear and Hearing, 34(2), 245–248. https://doi.org/10.1097/AUD.0b013e318269ce70 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fishman K. E., Shannon R. V., & Slattery W. H. (1997). Speech recognition as a function of the number of electrodes used in the SPEAK cochlear implant speech processor. Journal of Speech, Language, and Hearing Research, 40(5), 1201–1215. https://doi.org/10.1044/jslhr.4005.1201 [DOI] [PubMed] [Google Scholar]

- Friesen L. M., Shannon R. V., Baskent D., & Wang X. (2001). Speech recognition in noise as a function of the number of spectral channels: Comparison of acoustic hearing and cochlear implants. The Journal of the Acoustical Society of America, 110(2), 1150–1163. [DOI] [PubMed] [Google Scholar]

- Gifford R. H., Davis T. J., Sunderhaus L. W., Menapace C., Buck B., Crosson J., … Segel P. (2017). Combined electric and acoustic stimulation with hearing preservation: Effect of cochlear implant low-frequency cutoff on speech understanding and perceived listening difficulty [epub ahead of print] Ear and Hearing. https://doi.org/10.1097/AUD.0000000000000418 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gifford R. H., Dorman M. F., McKarns S. A., & Spahr A. J. (2007). Combined electric and contralateral acoustic hearing: Word and sentence recognition with bimodal hearing. Journal of Speech, Language, and Hearing Research, 50(4), 835–843. https://doi.org/10.1044/1092-4388(2007/058) [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gifford R. H., Dorman M. F., Skarzynski H., Lorens A., Polak M., Driscoll C. L. W., … Buchman C. A. (2013). Cochlear implantation with hearing preservation yields significant benefit for speech recognition in complex listening environments. Ear and Hearing, 34(4), 413–425. https://doi.org/10.1097/AUD.0b013e31827e8163 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gifford R. H., Grantham D. W., Sheffield S. W., Davis T. J., Dwyer R., & Dorman M. F. (2014). Localization and interaural time difference (ITD) thresholds for cochlear implant recipients with preserved acoustic hearing in the implanted ear. Hearing Research, 312, 28–37. https://doi.org/10.1016/j.heares.2014.02.007 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Grantham D. W., Ashmead D. H., Ricketts T. A., Haynes D. S., & Labadie R. F. (2008). Interaural time and level difference thresholds for acoustically presented signals in post-lingually deafened adults fitted with bilateral cochlear implants using CIS+ processing. Ear and Hearing, 29(1), 33–44. https://doi.org/10.1097/AUD.0b013e31815d636f [DOI] [PubMed] [Google Scholar]

- Gray R. F., Quinn S. J., Vanat Z., & Baguley D. M. (1995). Patient performance over eighteen months with the Ineraid intracochlear implant. The Annals of Otology, Rhinology & Laryngology. Supplement, (166), 275–277. [PubMed] [Google Scholar]

- Hehrmann P., Fredelake S., Hamacher V., Dyballa K. H., & Büchner A. (2012). Improved speech intelligibility with cochlear implants using state-of-the-art noise reduction algorithms. ITG-Fachbericht, 236, 26–28. [Google Scholar]

- Hunter J. B., Gifford R. H., Wanna G. B., Labadie R. F., Bennett M. L., Haynes D. S., & Rivas A. (2016). Hearing preservation outcomes with a mid-scala electrode in cochlear implantation. Otology & Neurotology, 37(3), 235–240. https://doi.org/10.1097/MAO.0000000000000963 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kaiser A. R., Kirk K. I., Lachs L., & Pisoni D. B. (2003). Talker and lexical effects on audiovisual word recognition by adults with cochlear implants. Journal of Speech, Language, and Hearing Research, 46(2), 390–404. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kiefer J., Von Ilberg C., Rupprecht V., Hubner-Egner J., & Knecht R. (2000). Optimized speech understanding with the continuous interleaved sampling speech coding strategy in patients with cochlear implants: Effect of variations in stimulation rate and number of channels. Annals of Otology, Rhinology & Laryngology, 109(11), 1009–1020. https://doi.org/10.1177/000348940010901105 [DOI] [PubMed] [Google Scholar]

- Kokkinakis K., Azimi B., Hu Y., & Friedland D. R. (2012). Single and multiple microphone noise reduction strategies in cochlear implants. Trends in Amplification, 16(2), 102–116. https://doi.org/10.1177/1084713812456906 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kong Y.-Y., Stickney G. S., & Zeng F.-G. (2005). Speech and melody recognition in binaurally combined acoustic and electric hearing. The Journal of the Acoustical Society of America, 117(3, Pt 1), 1351–1361. https://doi.org/10.1121/1.1857526 [DOI] [PubMed] [Google Scholar]

- Lawson D. T., Wilson B. S., & Zerbi M. F. C. (1996). Speech processors for auditory prostheses: 22 electrode percutaneous study—Results for the first five subjects. Third Quarterly Progress Report, NIH Project N01-DC-5-2103 Bethesda, MD: Neural Prosthesis Program, National Institutes of Health. [Google Scholar]

- Lenarz T., James C., Cuda D., Fitzgerald O'Connor A., Frachet B., Frijns J. H. M., … Uziel A. (2013). European multi-centre study of the Nucleus Hybrid L24 cochlear implant. International Journal of Audiology, 52(12), 838–848. https://doi.org/10.3109/14992027.2013.802032

- Litovsky R. Y., Johnstone P. M., Godar S., Agrawal S., Parkinson A., Peters R., & Lake J. (2006). Bilateral cochlear implants in children: Localization acuity measured with minimum audible angle. Ear and Hearing, 27(1), 43–59. https://doi.org/10.1097/01.aud.0000194515.28023.4b

- Loiselle L., Dorman M. F., Yost W. A., Cook S. J., & Gifford R. H. (2016). Using ILD and ITD cues for sound source localization and speech understanding in a complex listening environment by listeners with bilateral and with hearing-preservation cochlear-implants. Journal of Speech, Language, and Hearing Research, 59(4), 810–818. https://doi.org/10.1044/2015_JSLHR-H-14-0355

- Mok M., Grayden D., Dowell R. C., & Lawrence D. (2006). Speech perception for adults who use hearing aids in conjunction with cochlear implants in opposite ears. Journal of Speech, Language, and Hearing Research, 49(2), 338–351. https://doi.org/10.1044/1092-4388(2006/027)

- Pearsons K. S., Bennett R. L., & Fidell S. (1977). Speech levels in various noise environments (Report No. EPA-600/1-77-025). Washington, DC: U.S. Environmental Protection Agency.

- Peterson P. M., Wei S. M., Rabinowitz W. M., & Zurek P. M. (1990). Robustness of an adaptive beamforming method for hearing aids. Acta Oto-Laryngologica. Supplementum, 469, 85–90. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/2356741

- Schafer E., & Thibodeau L. (2004). Speech recognition abilities of adults using cochlear implants with FM systems. Journal of the American Academy of Audiology, 15(10), 678–691.

- Schleich P., Nopp P., & D'Haese P. (2004). Head shadow, squelch, and summation effects in bilateral users of the MED-EL COMBI 40/40+ cochlear implant. Ear and Hearing, 25(3), 197–204. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/15179111

- Skarzynski H., Lorens A., Matusiak M., Porowski M., Skarzynski P. H., & James C. J. (2014). Cochlear implantation with the nucleus slim straight electrode in subjects with residual low-frequency hearing. Ear and Hearing, 35(2), e33–e43. https://doi.org/10.1097/01.aud.0000444781.15858.f1

- Smeds K., Wolters F., & Rung M. (2015). Estimation of signal-to-noise ratios in realistic sound scenarios. Journal of the American Academy of Audiology, 26(2), 183–196. https://doi.org/10.3766/jaaa.26.2.7

- Spahr A. J., Dorman M. F., & Loiselle L. H. (2007). Performance of patients using different cochlear implant systems: Effects of input dynamic range. Ear and Hearing, 28(2), 260–275. https://doi.org/10.1097/AUD.0b013e3180312607

- Spriet A., Van Deun L., Eftaxiadis K., Laneau J., Moonen M., van Dijk B., … Wouters J. (2007). Speech understanding in background noise with the two-microphone adaptive beamformer BEAM in the Nucleus Freedom Cochlear Implant System. Ear and Hearing, 28(1), 62–72. https://doi.org/10.1097/01.AUD.0000252470.54246.54

- Sumby W. H., & Pollack I. (1954). Visual contribution to speech intelligibility in noise. The Journal of the Acoustical Society of America, 26(2), 212–215. https://doi.org/10.1121/1.1907309

- van Hoesel R. J. M. (2015). Audio-visual speech intelligibility benefits with bilateral cochlear implants when talker location varies. Journal of the Association for Research in Otolaryngology, 16(2), 309–315. https://doi.org/10.1007/s10162-014-0503-7

- von Ilberg C., Kiefer J., Tillein J., Pfenningdorff T., Hartmann R., Stürzebecher E., & Klinke R. (1999). Electric-acoustic stimulation of the auditory system. New technology for severe hearing loss. ORL; Journal for Oto-Rhino-Laryngology and Its Related Specialties, 61(6), 334–340.

- Wilson B. S. (1997). The future of cochlear implants. British Journal of Audiology, 31(4), 205–225. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/9307818

- Wilson B. S., Dorman M., Gifford R., & McAlpine D. (2016). Cochlear implant design considerations. In Young N. & Kirk K. I. (Eds.), Cochlear implants in children: Learning and the brain (pp. 3–23). New York, NY: Springer.

- Wilson B. S., Lawson D., Zerbi M., & Finley C. (1992). Speech processors for auditory prostheses: Virtual channel interleaved sampling (VCIS) processors—Initial studies with subject SR-2. First Quarterly Progress Report, NIH Project N01-DC2-2401. Bethesda, MD: Neural Prosthesis Program, National Institutes of Health.

- Wolfe J., Morais E., Schafer E., Agrawal S., & Koch D. (2015). Evaluation of speech recognition of cochlear implant recipients using adaptive, digital remote microphone technology and a speech enhancement sound processing algorithm. Journal of the American Academy of Audiology, 26, 502–508. https://doi.org/10.3766/jaaa.14099

- Wolfe J., Parkinson A., Schafer E., Gilden J., Rehwinkel K., Mansanares J., … Gannaway S. (2012). Benefit of a commercially available cochlear implant processor with dual-microphone beamforming: A multi-center study. Otology & Neurotology, 33(4), 553–560. https://doi.org/10.1097/MAO.0b013e31825367a5

- Zeng F.-G., Rebscher S., Harrison W., Sun X., & Feng H. (2008). Cochlear implants: System design, integration and evaluation. IEEE Reviews in Biomedical Engineering, 1, 115–142. https://doi.org/10.1109/RBME.2008.2008250