Abstract

Electrocardiogram (ECG) signal analysis has received special attention from researchers in recent years because it can reveal crucial information about the electrophysiology of the heart and the activity of the autonomic nervous system in a noninvasive manner. ECG signals have been analyzed using both linear and nonlinear methods. However, nonlinear methods of ECG signal analysis are gaining popularity because of their robustness in feature extraction and classification. The current study presents a review of nonlinear signal analysis methods, namely, reconstructed phase space analysis, Lyapunov exponents, correlation dimension, detrended fluctuation analysis (DFA), recurrence plot, Poincaré plot, approximate entropy, and sample entropy, along with their recent applications in ECG signal analysis.

1. Introduction

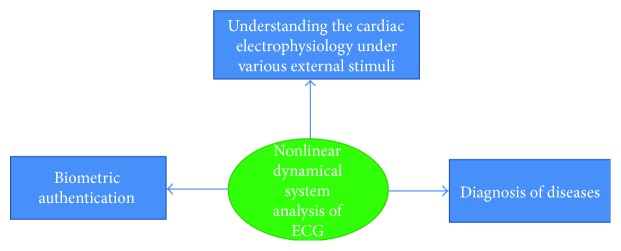

In the last few decades, ECG signals have been widely analyzed for the diagnosis of numerous cardiovascular diseases [1, 2]. In addition, ECG signals are processed to extract the RR intervals, which have been reported to reveal the influence of autonomic nervous system activity on the heart through heart rate variability (HRV) analysis [3, 4]. HRV refers to the study of the variation in the time interval between consecutive heartbeats and in the instantaneous heart rate [5]. An important step in the analysis of ECG signals is the extraction of clinically relevant features that retain the relevant information of the original ECG signal and can, hence, act as its representative for further analysis [6, 7]. Features can be extracted from ECG signals using time-domain, frequency-domain, and joint time-frequency domain methods, as well as nonlinear methods [7–9]. The analysis of ECG signals using nonlinear signal analysis methods has received special attention from researchers in recent years [7–9]. These methods derive their motivation from the concept of nonlinear dynamics [10, 11], since biomedical signals such as the ECG can be generated by nonlinear dynamical systems [12]. A dynamical system is a system that changes over time [9]. Alternatively, a dynamical system may be defined as an iterative physical system that evolves over time in such a way that its future states can be predicted from its preceding states [13]. Dynamical systems form the basis of the nonlinear methods of signal analysis [14]. The most widely explored nonlinear signal analysis methods include reconstructed phase space analysis, Lyapunov exponents, correlation dimension, detrended fluctuation analysis (DFA), recurrence plot, Poincaré plot, approximate entropy, and sample entropy.
This study attempts to provide a theoretical background on the above-mentioned nonlinear methods and their recent applications (last 5 years) in ECG signal analysis for the diagnosis of diseases, for understanding the effect of external stimuli (e.g., low-frequency noise and music), and for human biometric authentication (Figure 1).

Figure 1.

Various applications of nonlinear dynamical system analysis of the ECG.

2. Dynamical System

Dynamical systems form the basis of the nonlinear methods of signal analysis [15–17]. The study of dynamical systems has found applications in a number of fields such as physics [15–17], engineering [15], biology, and medicine [16]. A dynamical system can be defined as a system whose state can be described by a set of time-varying (continuous or discrete) variables governed by mathematical laws [17]. Such a system is said to be deterministic if the current values of time and the state variables exactly determine the state of the system at the next instant of time. On the other hand, the dynamical system is regarded as stochastic if the current values of time and the state variables describe only the probability of variation in the values of the state variables over time [18–20]. Dynamical systems can also be categorized as either linear or nonlinear. A system is regarded as linear when the change in one of its variables is proportional to the change in a related variable; otherwise, it is regarded as nonlinear [18]. A key practical difference between linear and nonlinear systems is that linear systems are easier to analyze. This is because a linear system, unlike a nonlinear one, can be broken down into parts, each part analyzed individually, and the parts finally recombined to obtain the solution of the whole system [21]. The evolution of a continuous time dynamical system is described mathematically by a set of coupled first-order autonomous differential equations (1) [22].

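$$\frac{d\mathbf{x}}{dt} = \mathbf{F}(\mathbf{x};\boldsymbol{\mu}) \tag{1}$$

where $\mathbf{x}$ = vector representing the dynamical variables of the system, $\boldsymbol{\mu}$ = vector corresponding to the parameters, and $\mathbf{F}$ = vector field whose components are the dynamical rules governing the evolution of the dynamical variables.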

A system involving any nonautonomous differential equation in $\mathbb{R}^n$ can be transformed into an autonomous differential equation in $\mathbb{R}^{n+1}$ by treating time as an additional dynamical variable [23]. The forced Duffing-Van der Pol oscillator is a well-known example of a nonlinear dynamical system described by a second-order nonautonomous differential equation [14, 23]:

$$\frac{d^2y}{dt^2} + \mu\,(y^2 - 1)\,\frac{dy}{dt} + y^3 = f\cos(wt) \tag{2}$$

where μ, f, and w represent the parameters.

This nonautonomous differential equation can be converted into a set of coupled first-order autonomous differential equations (3), (4), and (5) by defining three dynamical variables, that is, x1 = y, x2 = dy/dt, and x3 = wt [23].

$$\frac{dx_1}{dt} = x_2 \tag{3}$$

$$\frac{dx_2}{dt} = -\mu\,(x_1^2 - 1)\,x_2 - x_1^3 + f\cos x_3 \tag{4}$$

$$\frac{dx_3}{dt} = w \tag{5}$$

The discrete time dynamical systems are described by a set of coupled first-order autonomous difference equations [14, 23, 24].

$$\mathbf{x}_{n+1} = \mathbf{f}(\mathbf{x}_n;\boldsymbol{\mu}) \tag{6}$$

where $\mathbf{f}$ = vector describing the dynamical rules and n = integer representing time.

A discrete dynamical system can be obtained from a continuous one by sampling its solution at a regular time interval T; the dynamical rule relating consecutive sampled values of the dynamical variables is then called a time T map. Sampling the solution of a continuous dynamical system in $\mathbb{R}^n$ at its consecutive transverse intersections with an (n − 1)-dimensional surface of section also yields a discrete dynamical system. In this case, the dynamical rule relating consecutive sampled values of the dynamical variables is called a Poincaré map or a first return map. For the forced Duffing-Van der Pol oscillator, the Poincaré map is equivalent to the time T map with T = 2π/w when the surface of section is defined by x3 = θ0 (with x3 taken modulo 2π) and θ0 ∈ (0, 2π) [14, 22, 23].
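To make the time T map concrete, the sketch below integrates the autonomous form (3) to (5) of the forced Duffing-Van der Pol oscillator with a classical fourth-order Runge-Kutta scheme and records (x1, x2) once every T = 2π/w. The integrator, the step count per period, the function names, and the parameter values used in the usage line are illustrative assumptions, not values taken from the cited works.

```python
import math

def duffing_vdp(x, mu, f, w):
    """Right-hand side of (3)-(5); x = (x1, x2, x3) with x1 = y, x2 = dy/dt, x3 = w*t."""
    x1, x2, x3 = x
    return (
        x2,
        -mu * (x1 ** 2 - 1.0) * x2 - x1 ** 3 + f * math.cos(x3),
        w,
    )

def rk4_step(rhs, x, h, *params):
    """One classical fourth-order Runge-Kutta step of size h for dx/dt = rhs(x, *params)."""
    k1 = rhs(x, *params)
    k2 = rhs(tuple(xi + 0.5 * h * ki for xi, ki in zip(x, k1)), *params)
    k3 = rhs(tuple(xi + 0.5 * h * ki for xi, ki in zip(x, k2)), *params)
    k4 = rhs(tuple(xi + h * ki for xi, ki in zip(x, k3)), *params)
    return tuple(xi + h / 6.0 * (a + 2.0 * b + 2.0 * c + d)
                 for xi, a, b, c, d in zip(x, k1, k2, k3, k4))

def time_T_map(x0, mu, f, w, n_periods, steps_per_period=200):
    """Sample the flow once every T = 2*pi/w.

    Because dx3/dt = w, x3 advances by 2*pi per period, so the samples are the
    crossings of the surface of section x3 = theta0 (mod 2*pi), with theta0 = x0[2].
    """
    h = (2.0 * math.pi / w) / steps_per_period
    x = tuple(x0)
    samples = []
    for _ in range(n_periods):
        for _ in range(steps_per_period):
            x = rk4_step(duffing_vdp, x, h, mu, f, w)
        samples.append((x[0], x[1]))  # (y, dy/dt) on the surface of section
    return samples
```

For example, `time_T_map((0.1, 0.0, 0.0), mu=0.2, f=1.0, w=0.94, n_periods=500)` returns 500 points of the section x3 = 0 (mod 2π) for that hypothetical parameter set; a scatter plot of these points is a picture of the Poincaré map.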

Randomness is generally associated with noise (unwanted external disturbances such as power line interference). However, it has been widely reported over the last few decades that many dynamical systems are deterministic and nonlinear, and that their solutions can be as statistically random as the outcomes of tossing an unbiased coin (i.e., heads or tails) [23]. This statistical randomness is called deterministic chaos, and it allows models to be developed for characterizing the systems that produce such random-looking signals.

According to the reported literature, random signals produced by noise differ fundamentally from random signals produced by deterministic dynamical systems with a small number of dynamical variables [25]. These differences cannot be revealed by conventional statistical methods; phase space reconstruction-based dynamical system analysis has been recommended for this purpose [12].

3. Nonlinear Dynamical System Analysis Techniques

3.1. Reconstructed Phase Space Analysis of a Dynamical System

The phase space is an abstract multidimensional space used to graphically represent all the possible states of a dynamical system [23]. The dimension of the phase space is the number of variables required to completely describe the state of the system [19, 26], and its axes depict the values of the dynamical variables of the system [26]. If the actual number of variables governing the behaviour of the dynamical system is unknown, the phase space is reconstructed by time-delay embedding, which is based on Takens' theorem [19]. The theorem states that if the dynamics of a system is governed by a number of interdependent variables (i.e., its dynamics is multidimensional), and only one variable of the system, say x, is accessible (i.e., only one dimension can be measured), then it is possible to reconstruct the complete dynamics of the system from the single observed variable x by plotting its values against time-delayed copies of itself, using a fixed number of copies separated by a predefined time delay [27]. Fang et al. [28] have reported that reconstructed phase spaces can be regarded as topologically equivalent to the original system and can, hence, recover the nonlinear dynamics of the system.

Let us consider that all the values of the observed variable x are represented by the vector $\mathbf{x}$:

$$\mathbf{x} = [x_1, x_2, x_3, \ldots, x_n] \tag{7}$$

where n = number of points in the time series.

If d is the true/estimated embedding dimension of the system (i.e., the number of variables governing the dynamics of the system), then each state of the system can be represented in the phase space by a d-dimensional vector of the following form:

$$\mathbf{X}_i = [x_i, x_{i+\tau}, x_{i+2\tau}, \ldots, x_{i+(d-1)\tau}] \tag{8}$$

where τ = time lag, and 1 ≤ i ≤ n − (d − 1)τ.

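A total of n − (d − 1)τ such vectors are obtained, which can be arranged in a matrix V (9) [26, 27]. In matrix V, the row index signifies time, and the column index refers to a dimension of the phase space. This set of vectors forms the entire reconstructed phase space [12, 26].

$$V = \begin{bmatrix} x_1 & x_{1+\tau} & \cdots & x_{1+(d-1)\tau} \\ x_2 & x_{2+\tau} & \cdots & x_{2+(d-1)\tau} \\ \vdots & \vdots & \ddots & \vdots \\ x_{n-(d-1)\tau} & x_{n-(d-2)\tau} & \cdots & x_n \end{bmatrix} \tag{9}$$

where the rows correspond to the d-dimensional phase space vectors $\mathbf{X}_i$, and the columns are the time-delayed versions (by 0, τ, …, (d − 1)τ) of the first n − (d − 1)τ points of the vector $\mathbf{x}$.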
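A minimal sketch of the delay embedding in (8) and (9), written in plain Python; the function name `delay_embed` is hypothetical, and indices are 0-based in the code whereas the text counts from 1.

```python
def delay_embed(x, d, tau):
    """Build the matrix V of (9): row i is X_i = [x_i, x_{i+tau}, ..., x_{i+(d-1)tau}].

    Indices are 0-based here, whereas the text counts from 1.
    """
    n_vectors = len(x) - (d - 1) * tau  # n - (d - 1)tau phase space vectors
    if d < 1 or tau < 1 or n_vectors <= 0:
        raise ValueError("need d >= 1, tau >= 1 and len(x) > (d - 1) * tau")
    return [[x[i + k * tau] for k in range(d)] for i in range(n_vectors)]
```

For example, `delay_embed([1, 2, 3, 4, 5, 6], d=3, tau=2)` returns `[[1, 3, 5], [2, 4, 6]]`, that is, n − (d − 1)τ = 6 − 4 = 2 phase space vectors.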

Two factors, namely, the embedding dimension (d) and the time delay (τ), play an important role in the reconstruction of the phase space of a dynamical system [29, 30]. The embedding dimension is determined using the method of false nearest neighbours [12], Cao's method [29], or empirically [30]. The false nearest neighbour method is regarded as the most popular method for determining the optimal embedding dimension [31]. It is based on the principle that a pair of points located very close to each other at the optimal embedding dimension m will remain close to each other as the dimension increases further. However, if m is too small, points that are actually far apart may appear to be neighbours because they are projected into a lower-dimensional space. In this method, the neighbours are therefore checked at increasing embedding dimensions until a negligible number of false neighbours is found when moving from dimension m to m + 1. The resulting dimension m is taken as the optimal embedding dimension.
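The false nearest neighbour test described above can be sketched as follows. This assumes the distance-ratio criterion commonly attributed to Kennel and co-workers: a neighbour is declared false when adding the (m + 1)th coordinate increases its separation by more than a factor `r_tol`. The threshold of 10, the brute-force neighbour search, and the function name are illustrative choices, not the settings of the software used for Figure 3.

```python
import math

def false_nearest_fraction(x, m, tau, r_tol=10.0):
    """Fraction of false nearest neighbours when moving from dimension m to m + 1."""
    n = len(x) - m * tau  # vectors for which the (m + 1)th coordinate x[i + m*tau] exists
    vecs = [[x[i + k * tau] for k in range(m)] for i in range(n)]
    false_count, checked = 0, 0
    for i in range(n):
        # brute-force nearest neighbour of X_i in dimension m
        best_j, best_d = -1, math.inf
        for j in range(n):
            if j != i:
                dist = math.dist(vecs[i], vecs[j])
                if dist < best_d:
                    best_j, best_d = j, dist
        if best_j < 0 or best_d == 0.0:
            continue  # no neighbour or duplicate point: the ratio is undefined
        checked += 1
        # extra separation introduced by the (m + 1)th coordinate
        extra = abs(x[i + m * tau] - x[best_j + m * tau])
        if extra / best_d > r_tol:
            false_count += 1
    return false_count / checked if checked else 0.0
```

In practice the fraction is evaluated for m = 1, 2, 3, … and the smallest m at which it becomes negligible is taken as the embedding dimension. The O(N²) search is adequate for a few hundred points; longer series would call for a spatial index.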

The time delay is usually determined using either the first minimum of the average mutual information function (AMIF) [32] or first zero crossing of the autocorrelation function (ACF) [33] or empirically. The implementation of ACF is computationally convenient and does not require a large data set. However, it has been reported that the use of ACF is not appropriate for nonlinear systems, and hence AMIF should be used for the computation of the optimal time delay [34, 35]. For the discrete time signals, the AMIF can be defined as follows [36]:

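$$I(X;Y) = \sum_{i}\sum_{j} P_{XY}(x_i, y_j)\,\log\frac{P_{XY}(x_i, y_j)}{P_X(x_i)\,P_Y(y_j)} \tag{10}$$

where X = {xi} and Y = {yj} are discrete time variables (for time-delay selection, Y is the series delayed by τ, so that the AMIF is evaluated as a function of τ), PX(xi) is the probability that X takes the value xi, PY(yj) is the probability that Y takes the value yj, and PXY(xi, yj) is the joint probability that X = xi and Y = yj.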
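The AMIF of (10) can be estimated from data with a simple equal-width histogram, taking X = x_t and Y = x_{t+τ}. The estimator, the number of bins, and the base-2 logarithm below are assumptions made for illustration (other estimators, such as adaptive partitioning, are also used), and the function names are hypothetical.

```python
import math

def mutual_information(x, tau, bins=16):
    """Histogram (plug-in) estimate of I(X; Y) in (10), X = x_t, Y = x_{t+tau}, in bits."""
    a, b = x[:-tau], x[tau:]
    lo, hi = min(x), max(x)
    width = (hi - lo) / bins or 1.0  # guard against a constant series

    def bin_of(v):
        return min(int((v - lo) / width), bins - 1)

    n = len(a)
    joint, px, py = {}, {}, {}
    for u, v in zip(a, b):
        i, j = bin_of(u), bin_of(v)
        joint[(i, j)] = joint.get((i, j), 0) + 1
        px[i] = px.get(i, 0) + 1
        py[j] = py.get(j, 0) + 1
    mi = 0.0
    for (i, j), count in joint.items():
        p_ij = count / n
        mi += p_ij * math.log2(p_ij / ((px[i] / n) * (py[j] / n)))
    return mi

def first_minimum_delay(x, max_tau=50, bins=16):
    """Smallest tau >= 2 at which the AMIF has a local minimum, or None if there is none."""
    ami = [mutual_information(x, t, bins) for t in range(1, max_tau + 1)]  # ami[k] is tau = k + 1
    for k in range(1, len(ami) - 1):
        if ami[k] < ami[k - 1] and ami[k] <= ami[k + 1]:
            return k + 1
    return None
```

The result of `first_minimum_delay` depends on the bin count, so it is worth checking that the selected τ is stable when `bins` is varied.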

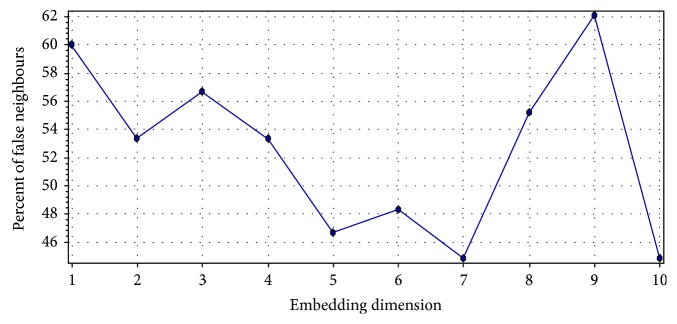

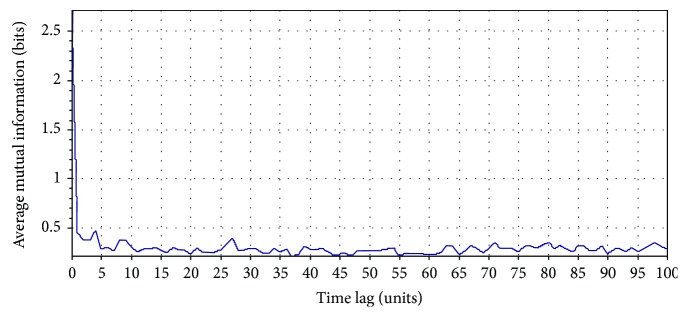

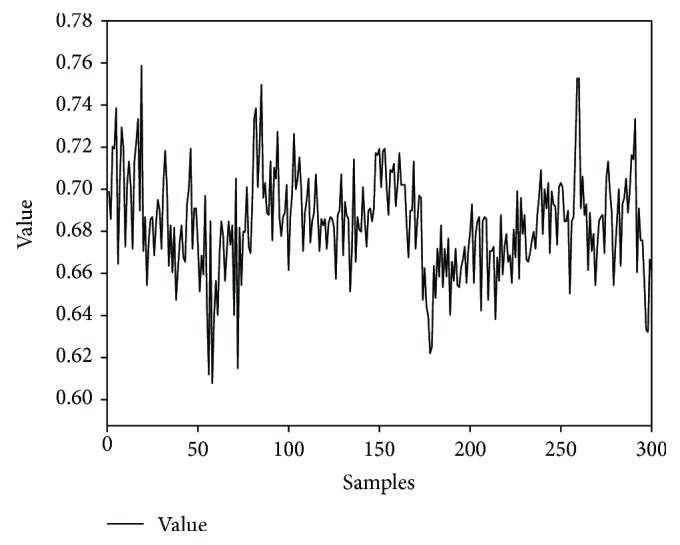

Let us consider an RR interval (RRI) time series extracted from a 5 min ECG recording of a 27-year-old Indian male volunteer who consumes cannabis (Figure 2). The ECG signal was acquired using a commercially available single-lead ECG sensor (Vernier Software & Technology, USA) and stored on a laptop using a data acquisition device (NI USB 6009, National Instruments, USA). The sampling rate of the device was set at 1000 Hz. The RRI time series was extracted from the acquired ECG signal using the Biomedical Workbench toolkit of LabVIEW (National Instruments, USA). The determination of the optimal embedding dimension for this RRI time series by the method of false nearest neighbours is shown in Figure 3, and the determination of the time delay by the first minimum of the AMIF is shown in Figure 4.

Figure 2.

A representative RRI time series obtained from a 5 min ECG signal.

Figure 3.

Computation of the optimal embedding dimension by the method of false nearest neighbours. The optimal embedding dimension was 7, and the corresponding percentage of false neighbours was 44.83%. The method of false nearest neighbours was implemented using Visual Recurrence Analysis freeware (V4.9, USA), developed by Kononov [37].

Figure 4.

Optimal time delay computation by the first minimum of the AMIF. The first minimum of the AMIF occurred at a time delay of 2. The AMIF was calculated using Visual Recurrence Analysis freeware (V4.9, USA), developed by Kononov [37].

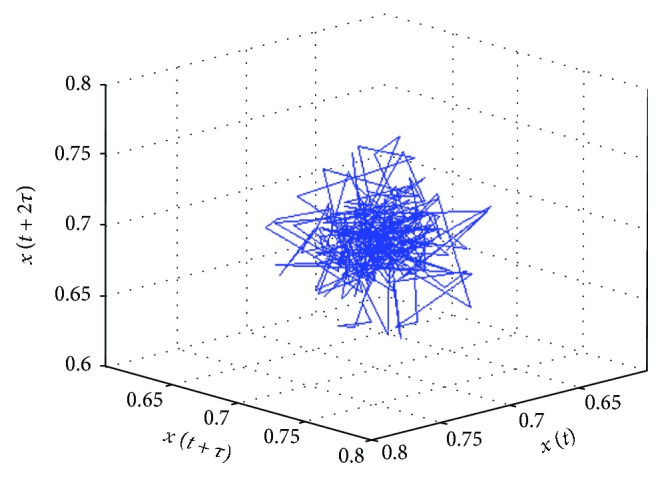

Each point in the reconstructed phase space of a system describes a potential state of the system. The system starts evolving from any point in the phase space (regarded as the initial state/condition of the system) and follows the dynamic trajectory determined by the equations of the system [19]. A dynamic trajectory describes how the state of the system changes with time. The trajectories arising from all possible initial conditions together form the flow of the system. Over time, trajectories may settle into a subregion of the phase space called an attractor. An attractor can also be defined as a set of points (indicating the steady states) in the phase space toward which the system evolves over time [38]. The 3D attractor of the RRI time series in Figure 2 is shown in Figure 5.

Figure 5.

3D phase space attractor of an RRI time series. The attractor was plotted using the MATLAB Toolbox developed by Yang [39].

Each attractor is associated with a basin of attraction, which comprises all the initial states/conditions of the system that evolve toward that particular attractor [38]. Attractors can be points, curves, manifolds, or complicated objects known as strange attractors. A strange attractor is an attractor having a noninteger (fractal) dimension.

3.2. Lyapunov Exponents

Chaotic nonlinear dynamical systems are highly sensitive to the initial conditions, that is, a small change in the state variables at one instant causes a large change in the behaviour of the system at a future instant. In the reconstructed phase space, this appears as adjacent trajectories that diverge widely from their initially close positions; along other directions, trajectories may converge. Lyapunov exponents quantify the average rate of this divergence or convergence [40]. They provide an estimate of how long the behaviour of a system remains predictable before chaotic behaviour prevails [9]. A positive Lyapunov exponent indicates that the phase space trajectories are diverging (i.e., points that are close together in the initial state separate rapidly from each other in the ith direction) and that the system is losing its predictability, exhibiting chaotic behaviour [41, 42]. Negative Lyapunov exponents, on the other hand, represent the average rate of convergence of the phase space trajectories. For example, in a three-dimensional system, the three Lyapunov exponents describe how the volume of a small cube evolves; more generally, the sum of the exponents specifies how the volume of a hypercube evolves on a multidimensional attractor. The sum of the positive Lyapunov exponents represents the rate at which the hypercube spreads, which, in turn, indicates the increase in unpredictability per unit time. The largest (dominant) positive Lyapunov exponent mainly governs the dynamics [43].

If ‖δxi(0)‖ and ‖δxi(t)‖ represent the Euclidean distance between two neighbouring points of the phase space in the ith direction at times 0 and t, respectively, then the Lyapunov exponent λi can be defined as the average exponential growth rate of the initial distance ‖δxi(0)‖ [23, 44]:

$$\lambda_i = \lim_{t\to\infty}\,\lim_{\|\delta x_i(0)\|\to 0}\,\frac{1}{t}\ln\frac{\|\delta x_i(t)\|}{\|\delta x_i(0)\|} \tag{11}$$

The dimensionality of the dynamical system determines the number of Lyapunov exponents, that is, a system defined in $\mathbb{R}^m$ possesses m Lyapunov exponents (λ1 ≥ λ2 ≥ … ≥ λm). The complete set of Lyapunov exponents can be described by considering an infinitesimally small m-dimensional sphere of initial conditions centred on a reference phase space trajectory. As the system evolves, the sphere is deformed into an ellipsoid. If Pi(t) represents the length of the ith principal axis of this ellipsoid, and the axes are ordered from the fastest to the slowest growing, then (12) gives the complete set of Lyapunov exponents, ordered from the largest to the smallest [23]:

$$\lambda_i = \lim_{t\to\infty}\frac{1}{t}\ln\frac{P_i(t)}{P_i(0)} \tag{12}$$

where i = 1, 2, …, m.

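The sum of all the Lyapunov exponents of a dynamical system equals the divergence of its vector field averaged along the trajectory (13). Hence, the sum of all the Lyapunov exponents is negative for dissipative systems, whose phase space volumes contract. Also, in a continuous time system, one of the Lyapunov exponents is zero for bounded trajectories that do not approach a fixed point.

$$\sum_{i=1}^{m}\lambda_i = \left\langle \nabla\cdot\mathbf{F}(\mathbf{x}) \right\rangle \tag{13}$$

where $\mathbf{F}$ represents the vector field of the dynamical system and ⟨·⟩ denotes the average along the trajectory.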

Lyapunov exponents can be calculated either from the mathematical equations describing the dynamical system (if known) or from the observed time series [45]. Two types of methods are usually used to obtain Lyapunov exponents from observed signals. The first is based on the time evolution of nearby points in the phase space [46]; however, it yields only the largest Lyapunov exponent. The second relies on the computation of local Jacobian matrices and estimates all the Lyapunov exponents [47]. All the Lyapunov exponents of a particular system, taken together in vector form, constitute its Lyapunov spectrum [45].
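As a small illustration of computing a Lyapunov exponent from known equations, the sketch below uses the logistic map x_{k+1} = r x_k (1 − x_k), a one-dimensional discrete system of the form (6) chosen only for illustration (it is not discussed in the text). For a 1-D map, the exponent reduces to the orbit average of ln|f′(x_k)|, here with f′(x) = r(1 − 2x); the function name and defaults are hypothetical.

```python
import math

def logistic_lyapunov(r, x0=0.2, n_transient=1000, n_iter=100_000):
    """Lyapunov exponent of x_{k+1} = r x_k (1 - x_k) from the known map equation.

    For a 1-D map, lambda = lim (1/N) * sum ln|f'(x_k)| with f'(x) = r (1 - 2x).
    Assumes the orbit never lands exactly on x = 0.5, where the log is undefined.
    """
    x = x0
    for _ in range(n_transient):  # discard the transient so the orbit is on the attractor
        x = r * x * (1.0 - x)
    total = 0.0
    for _ in range(n_iter):
        total += math.log(abs(r * (1.0 - 2.0 * x)))  # local stretching rate ln|f'(x_k)|
        x = r * x * (1.0 - x)
    return total / n_iter
```

With these defaults, `logistic_lyapunov(3.2)` is approximately ln(0.16)/2 ≈ −0.92, because the orbit settles on a stable period-2 cycle whose multiplier is 0.16 (nearby trajectories converge), whereas `logistic_lyapunov(3.9)` is positive, consistent with chaotic behaviour.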

3.3. Correlation Dimensions

Geometrical objects possess a definite dimension; for example, a point, a line, and a surface have dimensions of 0, 1, and 2, respectively [9]. Generalizing this notion has led to the concept of fractal dimension, which refers to a (generally noninteger) dimension possessed by a set of points (representing a dynamical system) in a Euclidean space. The determination of the fractal dimension plays a significant role in nonlinear dynamic analysis because strange attractors are fractal in nature, and their fractal dimension indicates the minimum number of dynamical variables required to describe their dynamics. It also quantitatively portrays the complexity of a nonlinear system: the higher the dimension, the greater the complexity. A commonly employed method for determining the dimension of a set is the measurement of its Kolmogorov capacity (i.e., box-counting dimension). This method covers the set with tiny cells/boxes of size ϵ (squares for sets embedded in 2D space and cubes for sets embedded in 3D space). The dimension D can then be defined as follows [23]:

$$D = \lim_{\epsilon\to 0}\frac{\ln M(\epsilon)}{\ln(1/\epsilon)} \tag{14}$$

where M(ϵ) is the number of tiny boxes containing a part of the set.

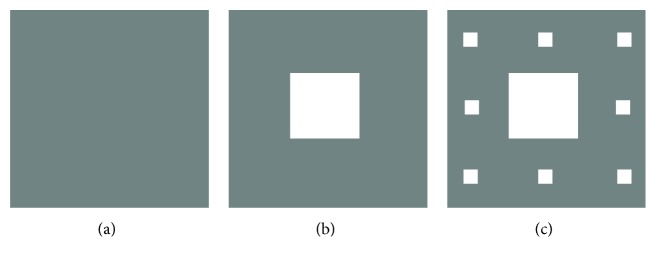

A classic mathematical example of a set possessing a noninteger fractal dimension is the Cantor set. A Cantor set can be defined as the limiting set of a sequence of sets [48]. Let us consider a Cantor set in 2D (this construction is also known as the Sierpiński carpet), characterized by the following sequence of sets. At stage n = 0 (Figure 6(a)), let S0 designate a square with sides of length l. At stage n = 1, the square S0 is divided into 9 uniform squares of side l/3, and the middle square is removed (Figure 6(b)); this set of squares is denoted S1. At stage n = 2, each square of S1 is further divided into 9 squares of side l/9, and the middle squares are removed, giving the set S2 (Figure 6(c)). When this process of subdivision and removal is continued to obtain the sequence of sets S0, S1, S2, …, the Cantor set is the limiting set $S_\infty = \lim_{n\to\infty} S_n$ (equivalently, $\bigcap_{n\ge 0} S_n$, since the sets are nested). The Kolmogorov capacity-based dimension of this Cantor set can be calculated easily by induction, as described below. When n = 0, S0 consists of one square of size l; hence, ϵ = l and M(ϵ) = 1. When n = 1, S1 comprises 8 squares of size l/3; therefore, ϵ = l/3 and M(ϵ) = 8. At n = 2, S2 is made of 64 squares of size l/9; therefore, ϵ = l/3² and M(ϵ) = 8². In general, at stage n, ϵ = l/3ⁿ and M(ϵ) = 8ⁿ. Thus, the fractal dimension of the Cantor set is given as follows:

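$$D = \lim_{n\to\infty}\frac{\ln 8^{n}}{\ln\left(3^{n}/l\right)} = \frac{\ln 8}{\ln 3} \approx 1.89 \tag{15}$$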

Figure 6.

Illustration of the first 3 stages during the construction of a Cantor set in 2D: (a) n = 0, (b) n = 1, and (c) n = 2 [48].

The fractal dimension D ≈ 1.89 < 2 obtained in (15) indicates that the Cantor set does not completely fill an area in the 2D space.
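The induction argument can be checked numerically. The sketch below builds the squares that remain at each stage with l = 1, counts them as M(ϵ) with ϵ = 3⁻ᵏ (the surviving stage-k squares are the boxes of that size that cover the set), and fits the slope of ln M(ϵ) against ln(1/ϵ), as in (14). The function names are hypothetical, and `n_max` must be at least 2 for the fit.

```python
import math

def cantor_2d_cells(n):
    """(row, col) indices of the squares of side 3**-n (with l = 1) remaining at stage n."""
    cells = {(0, 0)}
    for _ in range(n):
        # split every square into a 3 x 3 grid and drop the middle sub-square
        cells = {(3 * r + a, 3 * c + b)
                 for (r, c) in cells
                 for a in range(3)
                 for b in range(3)
                 if (a, b) != (1, 1)}
    return cells

def box_counting_dimension(n_max):
    """Least-squares slope of ln M(eps) against ln(1/eps) for eps = 3**-k, k = 1..n_max."""
    xs = [k * math.log(3) for k in range(1, n_max + 1)]                     # ln(1/eps)
    ys = [math.log(len(cantor_2d_cells(k))) for k in range(1, n_max + 1)]  # ln M(eps)
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((u - mx) * (v - my) for u, v in zip(xs, ys))
    den = sum((u - mx) ** 2 for u in xs)
    return num / den
```

`box_counting_dimension(4)` returns ln 8/ln 3 ≈ 1.893 up to rounding, matching (15), because M(3⁻ᵏ) = 8ᵏ exactly at every stage.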

However, the Kolmogorov capacity-based dimension measurement does not describe whether a box contains many points or few points of the set. To describe the inhomogeneities or correlations in the set, Hentschel and Procaccia defined the dimension spectrum [49].

$$D_q = \frac{1}{q-1}\lim_{r\to 0}\frac{\ln\sum_{i=1}^{M(r)} p_i^{\,q}}{\ln r} \tag{16}$$

where M(r) = number of m-dimensional boxes of size r required to cover the set, $p_i = N_i/N$ is the probability that a point of the set lies in the ith box, N is the total number of points in the set, and $N_i$ is the number of points of the set contained in the ith box.

It can readily be seen from (16) that the Kolmogorov capacity is equivalent to D0. The dimension D1, defined by taking the limit q → 1 in (16), is called the information dimension:

$$D_1 = \lim_{r\to 0}\frac{\sum_{i=1}^{M(r)} p_i\ln p_i}{\ln r} \tag{17}$$

The dimension D2 is known as the correlation dimension.

The correlation dimension can be expressed as follows:

$$D_2 = \lim_{r\to 0}\frac{\ln C(r)}{\ln r} \tag{18}$$

where $C(r) = \sum_{i=1}^{M(r)} p_i^2$ is the correlation sum. It represents the probability that two points of the set lie in the same box.

The correlation dimension signifies the number of independent variables required to describe the dynamical system [50]. A widely used algorithm for computing the correlation dimension (D2) from a finite, discrete time series was introduced by Grassberger and Procaccia [51]. It is based on the assumption that the probability of two points of the set lying in the same box of size r is approximately the same as the probability that the two points are separated by a distance ρ ≤ r. Using this assumption, the correlation sum can be computed as follows:

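$$C(r) = \frac{2}{N(N-1)}\sum_{i=1}^{N}\sum_{j=i+1}^{N}\Theta\left(r - \|\mathbf{X}_i - \mathbf{X}_j\|\right) \tag{19}$$

where N is the number of phase space vectors $\mathbf{X}_i$, and Θ is the Heaviside function, defined as

$$\Theta(x) = \begin{cases} 1, & x \ge 0 \\ 0, & x < 0 \end{cases} \tag{20}$$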

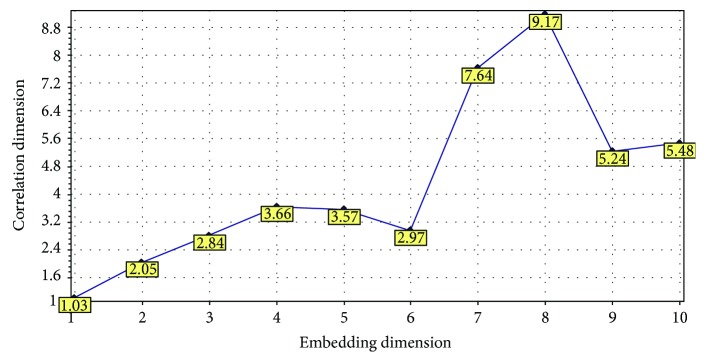

In practice, the limit r → 0 used in the definition of the correlation dimension (18) cannot be reached with a finite amount of data. Hence, Grassberger and Procaccia [51] proposed computing the correlation sum C(r) (19) for a number of values of r and then deducing the correlation dimension from the slope of a linear fit to the linear (scaling) region of the plot of log(C(r)) versus log(r). The correlation dimension of the reconstructed phase space of a dynamical system varies with the embedding dimension. The correlation dimensions of the reconstructed phase space of the aforementioned RRI time series at different embedding dimensions are shown in Figure 7.

Figure 7.

Correlation dimensions of the reconstructed phase space plot of RRI time series at different embedding dimensions. The correlation dimensions were calculated using Visual Recurrence Analysis freeware (V4.9, USA), developed by Kononov [37].
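The Grassberger-Procaccia procedure can be sketched as below: (19) is evaluated by brute force over all distinct pairs of phase space vectors, and D2 is taken as the least-squares slope of ln C(r) against ln r. Choosing `radii` inside the linear scaling region (and large enough that C(r) > 0) is left to the user, which is an assumption of this sketch; the vectors would typically come from a delay embedding such as (9), and the function names are hypothetical.

```python
import math

def correlation_sum(vectors, r):
    """C(r) of (19): fraction of distinct pairs (i < j) whose distance is at most r."""
    n = len(vectors)
    close = 0
    for i in range(n):
        for j in range(i + 1, n):
            if math.dist(vectors[i], vectors[j]) <= r:  # Theta(r - ||X_i - X_j||) = 1
                close += 1
    return 2.0 * close / (n * (n - 1))

def correlation_dimension(vectors, radii):
    """D2 estimate: least-squares slope of ln C(r) against ln r over the given radii."""
    pts = [(math.log(r), math.log(correlation_sum(vectors, r))) for r in radii]
    mx = sum(p[0] for p in pts) / len(pts)
    my = sum(p[1] for p in pts) / len(pts)
    num = sum((u - mx) * (v - my) for u, v in pts)
    den = sum((u - mx) ** 2 for u, _ in pts)
    return num / den
```

As a sanity check, points spread evenly along a line segment give a slope close to 1, as expected for a one-dimensional set.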

3.4. Detrended Fluctuation Analysis (DFA)

The detection of long-range correlations in nonstationary time series data requires distinguishing between trends and the long-range fluctuations intrinsic to the data. Trends result from external effects, for example, seasonal changes in environmental temperature, and exhibit smooth, monotonic, or slowly oscillating behaviour. Strong trends in a time series can lead to the false detection of long-range correlations if only a single non-detrending technique is used for its analysis or if the outcomes of a method are misinterpreted. In recent years, DFA has been explored for identifying long-range correlations (autocorrelations) in nonstationary time series data (or the corresponding dynamical systems) [52], owing to its ability to systematically eliminate trends of different orders embedded in the data [52]. It provides insight into both the natural fluctuations of the data and the trends in the data. DFA estimates the inherent fractal-type correlation characteristics of dynamical systems, where fractal behaviour corresponds to scale invariance (or self-similarity) across scales [9]. DFA was first proposed by Peng et al. [53] for the identification and quantification of long-range correlations in DNA sequences. It was developed for detrending the variability in a sequence of events, which, in turn, can reveal long-term variations in the dataset. Since its inception, DFA has found applications in the study of HRV [54], gait analysis [55, 56], stock market prediction [57, 58], meteorology [59], and geology [60–62]. The DFA method has also been given alternative names [61] by various researchers, such as “linear regression detrended scaled windowed variance” [63] and “residuals of regression” [64].

To implement DFA, the bounded time series xt (t ∈ ℕ) is first converted into an unbounded series Xt (the profile) [65]:

$$X_t = \sum_{i=1}^{t}\left(x_i - \langle x\rangle\right) \tag{21}$$

where Xt = cumulative sum and ⟨x⟩ = mean of the time series xt.

The unbounded time series Xt is then divided into non-overlapping segments of equal length n, and a straight line is fitted to the data in each segment by the method of least squares. The fluctuation (i.e., the root-mean-square deviation) of Xt from these local trends is calculated using [9]

$$F(n) = \sqrt{\frac{1}{N}\sum_{t=1}^{N}\left(X_t - a_i t - b_i\right)^2} \tag{22}$$

where N is the number of points in the series, $a_i$ and $b_i$ are the slope and intercept of the straight line fitted in the segment i that contains t, and n is the segment length.

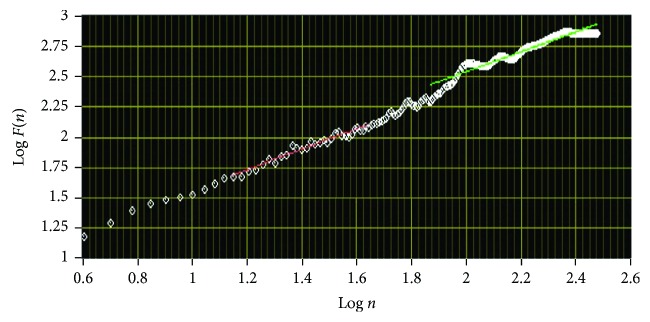

Finally, F(n) is computed for a range of segment lengths n, and the log-log graph of F(n) versus n is drawn (Figure 8). Statistical self-similarity of the signal appears as a straight line on this graph, and the scaling exponent α is obtained from the slope of the line, i.e., F(n) ∝ n^α. The scaling exponent α takes different values for different types of data (e.g., α ≈ 1/2 for uncorrelated white noise and α > 1/2 for correlated processes) [66, 67].

Figure 8.

Log-log graph of F(n) versus n for RRI time series. The graph was plotted using Biomedical Workbench toolkit of LabVIEW (National Instruments, USA).

3.5. Recurrence Plot and Recurrence Quantification Analysis

Dynamical features (e.g., entropy, information dimension, dimension spectrum, and Lyapunov exponents) of a time series can be computed using various methods [68]. However, most of these methods assume that the time series data are obtained from an autonomous dynamical system, that is, the evolution equation of the time series does not involve time explicitly. Further, the time series should be longer than the characteristic time of the underlying dynamical system. In this regard, the recurrence plot introduced by Eckmann et al. [68] has emerged as an important method for the analysis of dynamical systems and provides useful information even when these assumptions are not satisfied. If $\{\mathbf{X}_i\}_{i=1}^{N}$ represents the phase space trajectory of a dynamical system in a d-dimensional space, then the recurrence plot can be defined as an array of points placed at the positions (i, j) of an N × N square matrix (23) for which $\mathbf{X}_i$ is approximately equal to $\mathbf{X}_j$, as described by (24) [68–70].

$$R_{i,j}(\varepsilon) = \Theta\left(\varepsilon - \|\mathbf{X}_i - \mathbf{X}_j\|\right), \quad i, j = 1, \ldots, N \tag{23}$$

$$\mathbf{X}_i \approx \mathbf{X}_j \iff R_{i,j}(\varepsilon) = 1 \tag{24}$$

where Θ is the Heaviside function and ε = acceptable distance (error) between $\mathbf{X}_i$ and $\mathbf{X}_j$. This ε is required because many systems do not recur exactly to a previous state but only approximately.

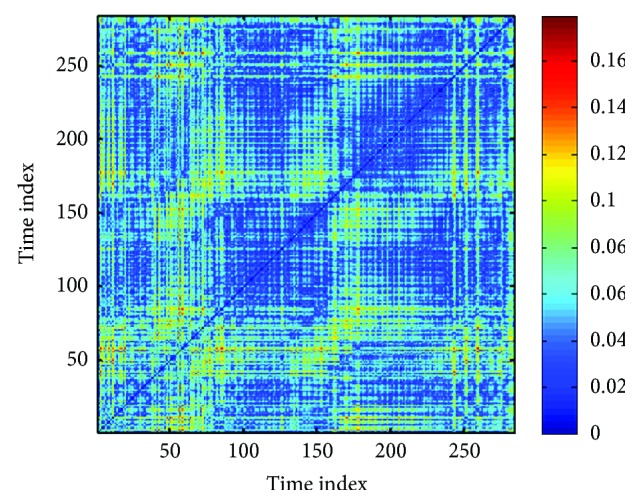

A recurrence plot reveals the time correlation between the states of a system at times i and j: it compares the states at times i and j and indicates their similarity by placing a dot (corresponding to Ri,j = 1) in the plot. The recurrence plot of the RRI time series in Figure 2 is presented in Figure 9.

Figure 9.

Recurrence plot of an RRI time series. The recurrence plot was generated using the MATLAB Toolbox developed by Yang [39].
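The recurrence matrix (23) can be computed directly from a set of phase space vectors. This sketch uses the Euclidean norm (the maximum norm is another common choice) and a crude text rendering in place of a graphical plot; `recurrence_matrix` and `render` are hypothetical names.

```python
import math

def recurrence_matrix(vectors, eps):
    """R_{i,j} = Theta(eps - ||X_i - X_j||) of (23), using the Euclidean norm."""
    n = len(vectors)
    return [[1 if math.dist(vectors[i], vectors[j]) <= eps else 0 for j in range(n)]
            for i in range(n)]

def render(matrix):
    """Text rendering of a recurrence plot: '#' marks a recurrence point (R_ij = 1)."""
    return "\n".join("".join("#" if v else "." for v in row) for row in matrix)
```

For the alternating sequence 0, 1, 0, 1 embedded with d = 1 and ε = 0.1, `render(recurrence_matrix([[0.0], [1.0], [0.0], [1.0]], 0.1))` produces a checkerboard whose diagonal lines reflect the period-2 recurrence.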

The main advantage of the recurrence plot is that it does not require any mathematical transformation or assumption [69]. However, the information it provides is qualitative. To overcome this limitation, several measures of complexity that quantify the small-scale structures in the recurrence plot have been proposed, collectively known as recurrence quantification analysis (RQA) [71]. These measures are derived from the density of recurrence points and from the diagonal and vertical line structures of the recurrence plot. Computing these measures in small windows moving along the line of identity (LOI) of the recurrence plot reveals their time-dependent behaviour. Several studies have reported that RQA variables can detect bifurcation points such as chaos-order transitions [72]. The vertical structures in the recurrence plot have been reported to represent intermittency and laminar states, and the RQA variables based on these vertical structures enable the detection of chaos-chaos transitions [71]. The following discussion introduces the RQA parameters along with their potential for identifying changes in the recurrence plot.

-

(i)Recurrence rate (RR) or percent recurrences: RR is the simplest variable of the RQA. It is a measure of the density of the recurrence points in the recurrence plot. Mathematically, it can be defined as 25, which is related to the correlation sum (19) except LOI, which is not included.

where Ri,j(ε) is the recurrence matrix and N is the length of the data series.(25) -

(ii)Average number of neighbours: It is defined by 26 and represents the average number of neighbours possessed by each point of the trajectory in its ε-neighbourhood.

where Nn is the number of (nearest) neighbours.(26) -

(iii)Determinism: The recurrence plot comprises of diagonal lines. The uncorrelated, stochastic, or chaotic processes exhibit either no diagonal lines or very short diagonal lines. On the other hand, the deterministic processes are associated with longer diagonals and less number of isolated recurrence points. The ratio of the number of recurrence points forming diagonal structures (having length ≥ lmin) to the total number of recurrence points is regarded as determinism (DET) or predictability of the system (27). The threshold lmin is used to exclude the diagonal lines which are produced by the tangential motion of the phase space trajectory.

where P(l) = ∑i,j=1N(1 − Ri−1,j−1(ε))(1 − Ri+l,j+l(ε))∏k=0l−1Ri+k,j+k(ε) represents the histogram of diagonal lines of length l.(27) -

(iv) Divergence: Divergence (DIV) is the inverse of the length of the longest diagonal line in the recurrence plot:

$$\mathrm{DIV} = \frac{1}{L_{\max}}, \qquad L_{\max} = \max\left(\{l_i\}_{i=1}^{N_l}\right), \tag{28}$$

where $L_{\max}$ is the length of the longest diagonal line and $N_l$ is the total number of diagonal lines. DIV is related to the exponential divergence of the phase space trajectory: the faster the trajectory diverges, the shorter the diagonal lines and the larger the DIV.

(v) Entropy: Entropy (ENTR) is the Shannon entropy of the probability $p(l)$ of finding a diagonal line of length $l$ in the recurrence plot:

$$\mathrm{ENTR} = -\sum_{l=l_{\min}}^{N} p(l)\,\ln p(l), \qquad p(l) = \frac{P(l)}{\sum_{l=l_{\min}}^{N} P(l)}, \tag{29}$$

where $p(l)$ is the probability of finding a diagonal line of length $l$. ENTR reflects the complexity of the recurrence plot with respect to the diagonal lines; uncorrelated noise, for example, yields a small ENTR, indicating low complexity.

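(vi) RATIO: RATIO is the ratio of DET to RR:

$$\mathrm{RATIO} = \frac{\mathrm{DET}}{\mathrm{RR}} = N^{2}\,\frac{\sum_{l=l_{\min}}^{N} l\,P(l)}{\left(\sum_{l=1}^{N} l\,P(l)\right)^{2}}, \tag{30}$$

where $P(l)$ is the number of diagonal lines of length $l$. RATIO has been reported to be useful for identifying transitions in the dynamics of the system.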

(vii) Laminarity: Laminarity (LAM) is the ratio of the number of recurrence points forming vertical lines of length $v \geq v_{\min}$ to the total number of recurrence points in the recurrence plot:

$$\mathrm{LAM} = \frac{\sum_{v=v_{\min}}^{N} v\,P(v)}{\sum_{v=1}^{N} v\,P(v)}, \tag{31}$$

where

$$P(v) = \sum_{i,j=1}^{N} \bigl(1 - R_{i,j-1}(\varepsilon)\bigr)\bigl(1 - R_{i,j+v}(\varepsilon)\bigr) \prod_{k=0}^{v-1} R_{i,j+k}(\varepsilon)$$

is the number of vertical lines of length $v$. LAM has been reported to indicate the occurrence of laminar states in the system, but it does not describe the length of these states. LAM decreases when the recurrence plot contains more single recurrence points than vertical structures.

(viii) Trapping time: Trapping time (TT) estimates the average length of the vertical structures:

$$\mathrm{TT} = \frac{\sum_{v=v_{\min}}^{N} v\,P(v)}{\sum_{v=v_{\min}}^{N} P(v)}, \tag{32}$$

where $v_{\min}$ is the predefined minimum length of a vertical line. TT indicates the average time for which the system remains trapped in a specific state.

(ix) Maximum length of the vertical lines: The maximum length of the vertical lines ($V_{\max}$) in the recurrence plot is defined as

$$V_{\max} = \max\left(\{v_l\}_{l=1}^{N_v}\right), \tag{33}$$

where $N_v$ is the total number of vertical lines.
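To make the RQA definitions concrete, the sketch below computes a subset of them from a recurrence matrix in plain Python. It is a minimal illustration, not a reference implementation, and it rests on several assumptions: the states are already embedded (plain numbers are treated as one-dimensional states), recurrences use the maximum norm, and the LOI is excluded throughout, so RR is normalised by N(N − 1) rather than N² as in the usual form of Eq. (25). The function `rqa` and its parameters are hypothetical names.

```python
import math
from collections import Counter


def _dist(a, b):
    """Maximum (Chebyshev) norm; plain numbers are treated as 1-D states."""
    if isinstance(a, (int, float)):
        return abs(a - b)
    return max(abs(p - q) for p, q in zip(a, b))


def _run_lengths(bits):
    """Lengths of the maximal runs of 1s in a 0/1 sequence."""
    runs, current = [], 0
    for b in bits:
        if b:
            current += 1
        elif current:
            runs.append(current)
            current = 0
    if current:
        runs.append(current)
    return runs


def rqa(states, eps, l_min=2, v_min=2):
    """Hypothetical helper: a subset of RQA measures with the LOI excluded."""
    n = len(states)
    # Recurrence matrix with the LOI (i == j) set to zero.
    R = [[1 if i != j and _dist(states[i], states[j]) <= eps else 0
          for j in range(n)] for i in range(n)]
    rr = sum(map(sum, R)) / (n * (n - 1))

    # Diagonal lines from the upper triangle only; the plot is symmetric,
    # so ratios such as DET and ENTR are unchanged by counting it once.
    diag = [l for k in range(1, n)
            for l in _run_lengths(R[i][i + k] for i in range(n - k))]
    # Vertical lines: runs of recurrences down each column.
    vert = [v for j in range(n)
            for v in _run_lengths(R[i][j] for i in range(n))]

    diag_long = [l for l in diag if l >= l_min]
    vert_long = [v for v in vert if v >= v_min]

    det = sum(diag_long) / sum(diag) if diag else 0.0
    l_max = max(diag, default=0)
    # ENTR: Shannon entropy of the line-length histogram for l >= l_min.
    counts = Counter(diag_long)
    entr = -sum((c / len(diag_long)) * math.log(c / len(diag_long))
                for c in counts.values())
    lam = sum(vert_long) / sum(vert) if vert else 0.0
    tt = sum(vert_long) / len(vert_long) if vert_long else 0.0
    return {
        "RR": rr, "DET": det, "L_max": l_max,
        "DIV": 1.0 / l_max if l_max else math.inf,
        "ENTR": entr, "RATIO": det / rr if rr else math.inf,
        "LAM": lam, "TT": tt, "V_max": max(vert, default=0),
    }
```

For the strictly periodic series `[0, 1, 2, 3] * 10` with `eps=0.5`, every recurrence lies on a diagonal, so DET is 1 and LAM is 0. With a real RRI series, the states would normally be delay vectors and ε would be chosen relative to the spread of the data; both choices are left to the analyst here.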

3.6. Poincaré Plot

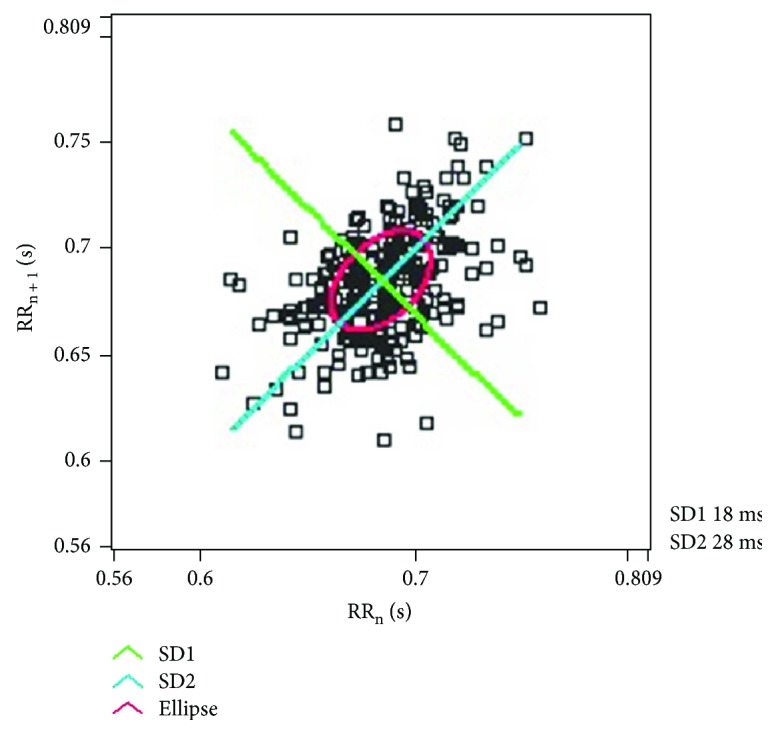

A Poincaré plot enables the visualization of the evolution of a dynamical system in the phase space and is useful for identifying hidden patterns. It reduces the dimensionality of the phase space and simultaneously converts the continuous-time flow into a discrete-time map [9]. The Poincaré plot differs from the recurrence plot in that the Poincaré plot is defined in the phase space, whereas the recurrence plot is constructed in the time space. In the recurrence plot, the points represent the instances when the dynamical system traverses approximately the same region of the phase space [9]. The Poincaré plot of an RRI time series, on the other hand, is generated by plotting each RR interval ($RR_{n+1}$) against the preceding RR interval ($RR_n$) [73, 74]. Hence, the Poincaré plot reflects the lengths of the RR intervals but not how often particular intervals occur [75]. The Poincaré plot is also called a scatter plot or scattergram, a return map, or a Lorenz plot [76]. The Poincaré plot of the aforementioned RRI time series is shown in Figure 10.

Figure 10.

The Poincaré plot of the RRI time series represented in Figure 2. The plot was generated using Biomedical Workbench toolkit of LabVIEW (National Instruments, USA).

Two important descriptors of the Poincaré plot are SD1 and SD2. SD1 is the standard deviation of the projection of the Poincaré plot onto the line perpendicular to the line of identity (i.e., y = −x), whereas SD2 is the standard deviation of the projection onto the line of identity (i.e., y = x) [77]. The ratio of SD1 to SD2 is called SD12. The Poincaré plot has been reported to provide information about cardiac autonomic activity [78, 79], because SD1 reflects parasympathetic activity, whereas SD2 is inversely related to sympathetic activity [80].
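The projections that define SD1 and SD2 amount to rotating each Poincaré point $(RR_n, RR_{n+1})$ by 45°. The sketch below shows this under two assumptions: the input is a clean list of RR intervals, and the sample standard deviation (`statistics.stdev`) is used; some tools use the population standard deviation, which gives slightly different values for short recordings. The function name is hypothetical.

```python
import math
import statistics


def poincare_descriptors(rr):
    """Return (SD1, SD2, SD12) for a sequence of RR intervals.

    Each Poincare point is (RR_n, RR_{n+1}). Rotating it by 45 degrees gives
    its coordinate across the LOI (y = -x direction) and along it (y = x).
    """
    if len(rr) < 3:
        raise ValueError("need at least three RR intervals")
    x, y = rr[:-1], rr[1:]
    across = [(b - a) / math.sqrt(2) for a, b in zip(x, y)]
    along = [(b + a) / math.sqrt(2) for a, b in zip(x, y)]
    sd1 = statistics.stdev(across)   # short-term, beat-to-beat spread
    sd2 = statistics.stdev(along)    # longer-term spread along the LOI
    return sd1, sd2, sd1 / sd2
```

A steadily lengthening series such as `[800, 810, 820, ...]` has a constant beat-to-beat difference, so every point lies on a line parallel to the LOI and SD1 is zero.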

Apart from the dynamical system analysis methods described above, entropy-based measures such as approximate entropy (ApEn) and sample entropy (SaEn) have also been studied for the analysis of nonstationary signals [9]. These measures were proposed to reduce the number of data points required to estimate the dimension or entropy of low-dimensional chaotic systems and to quantify changes in the process entropy. However, methodological drawbacks of ApEn have been pointed out by Richman and Moorman and by Costa et al. [9, 81, 82], and SaEn has in turn been criticized for not completely characterizing the complexity of a signal [9, 83].
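As an illustration of how SaEn is computed, the sketch below follows the usual Richman and Moorman definition: B counts pairs of templates of length m that match within a tolerance r (Chebyshev distance), A counts the pairs that still match at length m + 1, self-matches are excluded, and SaEn = −ln(A/B). The defaults m = 2 and r = 0.2 × SD are common choices in the literature rather than values prescribed by this review, and the function name is hypothetical. The pairwise loop is O(N²), which is adequate for short HRV segments.

```python
import math
import statistics


def sample_entropy(x, m=2, r=None):
    """SaEn(m, r): -ln(A / B) with self-matches excluded."""
    n = len(x)
    if r is None:
        r = 0.2 * statistics.pstdev(x)  # common, not universal, tolerance

    def matching_pairs(length):
        # The same N - m starting points are used for both template lengths,
        # so the counts for m and m + 1 are directly comparable.
        templates = [x[i:i + length] for i in range(n - m)]
        count = 0
        for i in range(len(templates)):
            for j in range(i + 1, len(templates)):
                if max(abs(p - q) for p, q in zip(templates[i], templates[j])) <= r:
                    count += 1
        return count

    b = matching_pairs(m)
    a = matching_pairs(m + 1)
    if a == 0 or b == 0:
        return math.inf  # undefined: no matches at one of the lengths
    return -math.log(a / b)
```

A perfectly periodic sequence gives SaEn = 0 (every match of length m persists at m + 1), while uncorrelated noise gives a markedly larger value.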

4. Applications of Nonlinear Dynamical System Analysis Methods in ECG Signal Analysis

4.1. Applications of Phase Space Reconstruction in ECG Signal Analysis

Phase space reconstruction has found a wide range of research applications, such as wind speed forecasting for wind farms [84], analysis of the molecular dynamics of polymers [85], river flow prediction in urban areas [86], and biosignal (e.g., ECG and EEG) analysis [28]. Among the biosignal applications, the analysis of ECG signals has been studied extensively [87].

Cardiac arrhythmias include ventricular tachycardia, atrial fibrillation, and ventricular fibrillation, among others. Al-Fahoum and Qasaimeh [12] reported a simple ECG signal processing algorithm that employs the reconstructed phase space to classify different types of arrhythmia. The regions of the reconstructed phase space occupied by ECG signals belonging to the different arrhythmias were used to extract features for classification. The authors observed 3 regions in the reconstructed phase space that were representative of the arrhythmias concerned, and hence computed 3 simple features for arrhythmia classification. The algorithm was validated on datasets from the MIT database and achieved a sensitivity of 85.7–100%, a specificity of 86.7–100%, and an overall efficiency of 95.55%. Sayed et al. [88] proposed a novel distance series transform domain, derived from the reconstructed phase space of the ECG signals, for classifying five types of arrhythmias. The transform describes how successive points of the original reconstructed phase space move nearer to or farther from the origin of the phase space. The raw distance series values, combined with the parameters of an autoregressive (AR) model, the amplitudes of the discrete Fourier transform (DFT), and the wavelet transform coefficients, were used as features for a K-nearest neighbour (K-NN) classifier. The authors reported that their method outperformed state-of-the-art classification methods, with an accuracy of 98.7%, a sensitivity of 99.42%, and a specificity of 98.19%.
Based on these results, the authors suggested that their method can be used for ECG signal classification. Recent studies (from the last 5 years) on arrhythmia detection using phase space analysis of ECG signals are listed in Table 1.
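The idea behind the distance series can be illustrated with a time-delay reconstruction followed by the distance of each reconstructed point from the origin. This is a sketch of the general concept as described above, not the implementation of Sayed et al. [88]: the embedding dimension `dim`, the delay `tau`, the Euclidean norm, and the function names are illustrative assumptions.

```python
import math


def delay_embed(x, dim, tau):
    """Time-delay reconstruction: rows are (x[i], x[i + tau], ..., x[i + (dim - 1) * tau])."""
    n_vectors = len(x) - (dim - 1) * tau
    if n_vectors <= 0:
        raise ValueError("series too short for the chosen dim and tau")
    return [tuple(x[i + k * tau] for k in range(dim)) for i in range(n_vectors)]


def distance_series(vectors):
    """Euclidean distance of each reconstructed point from the phase-space origin."""
    return [math.sqrt(sum(c * c for c in v)) for v in vectors]
```

As a check, a sine wave with a period of 20 samples, embedded with `dim=2` and `tau=5` (a quarter period), traces a unit circle, so its distance series is constant at 1; an ECG segment would instead give a series that rises and falls with each beat.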

Table 1.

Recent studies performed for arrhythmia detection using phase space analysis of ECG.

| Types of arrhythmia | Classification method | Performance | Ref. |
|---|---|---|---|
| Atrial fibrillation, ventricular tachycardia, and ventricular fibrillation | Distribution of the attractor in the reconstructed phase space | 85.7–100% sensitivity, 86.7–100% specificity, and 95.55% overall efficiency | [12] |
| Ventricular tachycardia, ventricular fibrillation, and ventricular tachycardia followed by ventricular fibrillation | Box-counting in phase space diagrams | 96.88% sensitivity, 100% specificity, and 98.44% accuracy | [89] |
| Ventricular fibrillation and normal sinus rhythm | Neural network with weighted fuzzy membership functions | 79.12% sensitivity, 89.58% specificity, and 87.51% accuracy | [90] |
| Atrial premature contraction, premature ventricular contraction, normal sinus rhythm, left bundle branch block, and right bundle branch block | K-nearest neighbour | 99.42% sensitivity, 98.19% specificity, and 98.7% accuracy | [88] |
| Soon-terminating atrial fibrillation and immediately terminating atrial fibrillation | A genetic algorithm in combination with SVM | 100% sensitivity, 100% specificity, and 100% accuracy | [91] |

Sleep apnoea is a sleep disorder in which breathing stops for short periods (>10 s) while the person is asleep [92]. It is classified into 3 types, namely, obstructive, central, and mixed sleep apnoea. Sleep apnoea results in symptoms such as daytime sleepiness, irritability, and poor concentration [93]. Jafari extracted features from the reconstructed phase space of the ECG signals and from the frequency components of heart rate variability (HRV) (i.e., the very low-frequency (VLF), low-frequency (LF), and high-frequency (HF) components) for the detection of sleep apnoea [93]. The extracted features were classified using an SVM. On the sleep apnoea dataset provided by the PhysioNet database, the proposed feature set achieved a classification accuracy of 94.8%. Based on the results, the author concluded that the method can help improve the efficiency of sleep apnoea detection systems.

Syncope, also known as fainting, is an unanticipated, temporary loss of consciousness [94]. It results from a malfunction of the autonomic nervous system (ANS), which regulates heart rate and blood pressure [95]. Syncope is characterized by a reduction in blood pressure and by bradycardia [95]. It is diagnosed using the head-up tilt test (HUTT), which lasts 45 to 60 min [96]. Because of this long duration, the test is unsuitable for physically weak patients, who may be unable to complete it. Methods have therefore been proposed to shorten the test by predicting the HUTT outcome from cardiovascular signals (e.g., ECG and blood pressure) acquired during the HUTT. Khodor et al. [96] proposed a novel phase space analysis algorithm for the detection of syncope. The HUTT was carried out for 12 min while ECG signals were acquired. RR intervals were extracted from the ECG signals, and the phase space plots were reconstructed. Features were extracted from the phase space plots (e.g., phase space density) and from recurrence quantification analysis. Statistically significant parameters were identified using the Mann–Whitney test and used for SVM-based classification, which achieved a sensitivity of 95% and a specificity of 47%. In 2015, the same group additionally acquired the arterial blood pressure signal along with the ECG signal during the HUTT for the detection of syncope [95]. Features were derived from phase space analysis of the acquired signals, and important predictors were identified using the Relief method [97]. K-NN-based classification achieved a sensitivity of 95% and a specificity of 87%. Based on these results, the authors suggested that bivariate rather than univariate analysis be performed to predict the outcome of the HUTT with improved performance.

In recent years, ECG has been widely explored as a biometric for securing body sensor networks and for human identification and verification [98]. Compared with other biometrics, ECG has the advantage that it can only be acquired from a living body. In many previous ECG biometric studies, the features extracted from the ECG signals were the amplitudes, durations, and areas of the P, Q, R, S, and T waves [99–101]. However, these features become difficult to extract when the ECG is contaminated by noise [102]. Wavelet analysis of the ECG signals has also been used to extract features for person identification [103], but it required shifting one ECG waveform with respect to another to obtain the best fit [104]. Recently, Fang and Chan proposed an ECG biometric based on phase space analysis of the ECG signals [102]. The phase space plots were reconstructed from 5 s ECG segments, and the trajectories were condensed into a single coarse-grained structure. The coarse-grained structures were compared using the normalized spatial correlation (nSC), mutual nearest point match (MNPM), and mutual nearest point distance (MNPD) methods. The strategy was tested on 100 volunteers using both single-lead and 3-lead ECG signals. Single-lead ECG signals yielded person identification accuracies of 96%, 95%, and 96% for the MNPD, nSC, and MNPM methods, respectively, whereas 3-lead ECG signals increased the accuracies to 99%, 98%, and 98%. Earlier, the same group had proposed ECG biometric-based identification of humans by measuring the similarity or dissimilarity among the phase space portraits of the ECG signals [105]. In an experiment involving 100 volunteers, person identification accuracies of 93% and 99% were achieved for single-lead and 3-lead ECG, respectively.

4.2. Applications of Lyapunov Exponents in ECG Signal Analysis

The concept of Lyapunov exponents has been employed to describe the dynamics of many nonlinear biological systems, including the cardiovascular system. Valenza et al. [43] applied the dominant Lyapunov exponents (DLEs) to characterize the nonlinear complexity of HRV over stipulated time intervals. Their study evaluated the HRV signal during emotional visual elicitation using approximate entropy (ApEn) and DLEs, adopting a two-dimensional (valence and arousal) conceptualization of emotion derived from the circumplex model of affect (CMA). A distinct switching between regular and chaotic dynamics was observed when moving from neutral to arousal elicitation states [43]. Valenza et al. [106] also used Lyapunov exponents to characterize the instantaneous complex dynamics of the heart from RR interval signals. Their method employed a high-order point-process nonlinear model in which the linear, quadratic, and cubic Volterra kernels were expanded using orthonormal Laguerre basis functions. The instantaneous dominant Lyapunov exponents (IDLEs) were estimated and tracked for the RRI time series, and the results suggested that the method could track the nonlinear dynamics of the ANS-based control of the heart. Du et al. [107] developed a novel Lyapunov exponent-based diagnostic method for distinguishing premature ventricular contractions from other types of ECG beats.

HRV has been reported to be sensitive to both physiological and psychological disorders [108], and in recent years it has been used as a tool in the diagnosis of cardiac diseases. HRV is estimated by analyzing the RR intervals extracted from the ECG signals. Because the RR interval signal is chaotic and stochastic in nature, and its characterization remains controversial, HRV analysis requires a sensitive tool [108]. Researchers have proposed Lyapunov exponents as a means of improving the sensitivity of HRV analysis. In earlier studies, Wolf et al. and Tayel and AlSaba developed two algorithms for estimating Lyapunov exponents [46, 108]; however, these methods were found to diverge when determining HRV sensitivity. Recently, Tayel and AlSaba [108] proposed the Mazhar-Eslam algorithm, which increases the sensitivity of HRV analysis with improved accuracy; the accuracy increased by up to 14.34% compared with Wolf's method. Ye and Huang [109] estimated the Lyapunov exponents of ECG signals to develop an image encryption algorithm that can protect images against differential attacks. In the same year, Silva et al. [110] proposed a largest Lyapunov exponent-based analysis of RR interval time series extracted from ECG signals to predict the outcomes of the HUTT.
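The Wolf and Mazhar-Eslam algorithms mentioned above are not reproduced here. As a hedged illustration of how a largest Lyapunov exponent can be estimated from a time series, the sketch below follows the nearest-neighbour divergence approach of Rosenstein et al., which is a different, widely used method: each point is paired with its nearest neighbour (excluding temporally close points), the pair is followed forward, and the exponent is the slope of the average log-separation over time. The embedding parameters, the temporal exclusion `min_sep`, the fitting range `steps`, and the function name are assumptions that would need tuning for real RRI data.

```python
import math


def largest_lyapunov(x, dim=1, tau=1, min_sep=10, steps=5, dt=1.0):
    """Rosenstein-style estimate of the largest Lyapunov exponent (per unit dt)."""
    m = len(x) - (dim - 1) * tau
    vecs = [[x[i + k * tau] for k in range(dim)] for i in range(m)]
    usable = m - steps  # each reference point must be followable for `steps` steps

    def dist(a, b):
        return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))

    log_div = [[] for _ in range(steps + 1)]
    for j in range(usable):
        # Nearest neighbour, excluding temporally close points (Theiler window).
        best, best_d = None, math.inf
        for k in range(usable):
            if abs(j - k) > min_sep:
                d = dist(vecs[j], vecs[k])
                if 0.0 < d < best_d:
                    best, best_d = k, d
        if best is None:
            continue
        # Follow the pair forward and record the log of their separation.
        for i in range(steps + 1):
            d = dist(vecs[j + i], vecs[best + i])
            if d > 0.0:
                log_div[i].append(math.log(d))

    # Average divergence curve <ln d(i)> and its least-squares slope.
    t = [i * dt for i in range(steps + 1)]
    y = [sum(v) / len(v) for v in log_div]
    t_mean, y_mean = sum(t) / len(t), sum(y) / len(y)
    num = sum((ti - t_mean) * (yi - y_mean) for ti, yi in zip(t, y))
    den = sum((ti - t_mean) ** 2 for ti in t)
    return num / den
```

For the logistic map $x_{n+1} = 4x_n(1 - x_n)$, whose largest Lyapunov exponent is known to be $\ln 2 \approx 0.693$, this sketch should return a value roughly in that neighbourhood; the fitting range must stay within the region where the divergence curve is linear, before it saturates at the size of the attractor.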

4.3. Applications of Correlation Dimension in ECG Signal Analysis

The correlation dimension provides a measure of the amount of correlation contained in a signal. It has been used by a number of researchers to analyze the ECG and the derived RRI time series in order to detect various pathological conditions [111, 112]. Bolea et al. proposed a methodological framework for the robust computation of the correlation dimension of RRI time series [113]. Chen et al. [114] used the correlation dimension and Lyapunov exponents to extract features from ECG signals for ECG-based biometric applications; the extracted features were classified with an accuracy of 97% using multilayer perceptron (MLP) neural networks [114]. Rawal et al. [115] analyzed HRV during the menstrual cycle using an adaptive correlation dimension method. In the conventional correlation dimension method, the time delay is calculated using the autocorrelation function, which does not provide the optimum time delay; in the proposed method, the authors calculated the time delay from the information content of the RR interval signal. The adaptive method detected HRV variations in 74 young women during the different stages of the menstrual cycle, in the lying and standing positions, with better accuracy than the conventional correlation dimension and detrended fluctuation analysis methods. Lerma et al. [50] investigated the relationship between abnormal ECG and reduced HRV complexity using the correlation dimension. ECG signals (24 h Holter recordings as well as standard ECGs) were acquired from 100 volunteers (university workers), of whom 10 recordings were excluded because more than 5% of the RR intervals were detected as false. Examination of the remaining 90 standard ECG signals by two cardiologists identified 29 as abnormal.
Estimation of the correlation dimension showed that the abnormal ECG signals were associated with reduced HRV complexity. Moeynoi and Kitjaidure studied the dimensional reduction of sleep apnea features using canonical correlation analysis (CCA). The features were extracted from single-lead ECG signals by applying linear and nonlinear techniques that estimate the variability of the heart rhythm and HRV. The study reported a noninvasive way of evaluating sleep apnea and used CCA to establish a relationship between paired data sets. Classification of the extracted features derived from the apnea annotations was better than with classical techniques [116].
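The correlation dimension is usually estimated in the Grassberger–Procaccia manner: compute the correlation sum C(r), the fraction of distinct pairs of reconstructed points closer than r, and take the slope of ln C(r) against ln r over a scaling region. The sketch below assumes the maximum norm and a scaling region chosen by hand through the `radii` argument; both, and the function names, are illustrative assumptions. Every radius must give C(r) > 0, otherwise the logarithm is undefined.

```python
import math


def correlation_sum(vectors, r):
    """Fraction of distinct pairs closer than r (maximum norm)."""
    n = len(vectors)
    close = 0
    for i in range(n):
        for j in range(i + 1, n):
            if max(abs(p - q) for p, q in zip(vectors[i], vectors[j])) < r:
                close += 1
    return 2.0 * close / (n * (n - 1))


def correlation_dimension(vectors, radii):
    """Least-squares slope of ln C(r) versus ln r over the chosen scaling region."""
    pts = [(math.log(r), math.log(correlation_sum(vectors, r))) for r in radii]
    mx = sum(p for p, _ in pts) / len(pts)
    my = sum(q for _, q in pts) / len(pts)
    num = sum((p - mx) * (q - my) for p, q in pts)
    den = sum((p - mx) ** 2 for p, _ in pts)
    return num / den
```

Points sampled along a closed curve, such as a sine wave embedded in two dimensions, should give a value close to 1, since the attractor is a one-dimensional loop; more complex dynamics yield larger, typically noninteger values.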

4.4. Applications of DFA in ECG Signal Analysis

Exposure to environmental noise is well known to cause annoyance, anxiety, depression, and various psychiatric disorders [117, 118], and it has also been reported to cause cardiovascular problems [118]. Chen et al. [114] applied DFA to the RR intervals recorded during 5 min of exposure to low-frequency noise to detect changes in cardiovascular activity [119]. The results suggested that exposure to low-frequency noise might alter the temporal correlation of HRV, although there was no significant change in the mean blood pressure or the mean RR interval variability. Kamath et al. implemented DFA for the classification of congestive heart failure (CHF) [120]. Short-term ECG signals of 20 s duration from normal subjects and CHF patients were analyzed using DFA, and the receiver operating characteristic (ROC) curve indicated the suitability of the method, with an average efficiency of 98.2%. Ghasemi et al. applied DFA to RR interval time series to predict the mortality of patients with sepsis in intensive care units (ICUs) [121]. DFA was performed on the RR interval time series from the last 25 h of the surviving and nonsurviving patients admitted to the ICUs. The scaling exponent (α) differed significantly between survivors and nonsurvivors from 9 h before death and could be used to predict mortality. Chiang et al. tested the hypothesis that cardiac autonomic dysfunction estimated by DFA is also a potential prognostic factor in patients with end-stage renal disease undergoing peritoneal dialysis. Total and cardiac mortality increased significantly as the prognostic predictor DFAα1 decreased.
DFAα1 (≥95%) was associated with lower cardiac mortality (hazard ratio (HR) 0.062, 95% CI = 0.007–0.571, P = 0.014) and lower total mortality [122].
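The DFA steps are short enough to sketch directly: integrate the mean-removed series into a profile, split it into boxes of size n, remove a least-squares line in each box, compute the root-mean-square fluctuation F(n), and take α as the slope of ln F(n) against ln n. The sketch below uses first-order (linear) detrending and non-overlapping boxes; the box sizes are the caller's choice, and the function name is hypothetical. In HRV studies, the short-term exponent DFAα1 is commonly estimated over box sizes of roughly 4 to 16 beats.

```python
import math


def dfa(x, scales):
    """First-order DFA. Returns (alpha, [(n, F(n)), ...]).

    Each box size n in `scales` must be at least 3 and at most len(x).
    """
    mean = sum(x) / len(x)
    # 1. Integrated profile of the mean-removed series.
    profile, acc = [], 0.0
    for v in x:
        acc += v - mean
        profile.append(acc)

    fluct = []
    for n in scales:
        n_boxes = len(profile) // n
        t_mean = (n - 1) / 2.0
        sxx = sum((t - t_mean) ** 2 for t in range(n))
        sq = 0.0
        for b in range(n_boxes):
            seg = profile[b * n:(b + 1) * n]
            # 2. Least-squares linear trend within the box.
            y_mean = sum(seg) / n
            slope = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(seg)) / sxx
            # 3. Squared residuals about the local trend.
            sq += sum((y - y_mean - slope * (t - t_mean)) ** 2 for t, y in enumerate(seg))
        fluct.append(math.sqrt(sq / (n_boxes * n)))

    # 4. alpha is the slope of ln F(n) against ln n.
    lx = [math.log(n) for n in scales]
    ly = [math.log(f) for f in fluct]
    mx, my = sum(lx) / len(lx), sum(ly) / len(ly)
    alpha = sum((a - mx) * (b - my) for a, b in zip(lx, ly)) / sum((a - mx) ** 2 for a in lx)
    return alpha, list(zip(scales, fluct))
```

As a sanity check, uncorrelated white noise should give α close to 0.5, and its cumulative sum (a random walk) should give α close to 1.5; healthy RRI series typically fall between these extremes.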

4.5. Applications of RQA in ECG Signal Analysis

RQA has found many applications in ECG signal analysis [123–125]. Chen et al. used recurrence plot analysis to investigate the effect of 5 min exposure to low-frequency noise of different intensities on cardiovascular activity [126]. RR intervals were extracted from ECG signals acquired during noise exposure at intensities of 70 dBC, 80 dBC, and 90 dBC, and changes in cardiovascular activity were estimated using RQA of the RR intervals. The authors concluded that RQA-based parameters can be an effective tool for analyzing the effect of low-frequency noise, even with short-term RR interval time series.

Acharya et al. used RQA and Kolmogorov complexity analysis of RRI time series for the automated prediction of sudden cardiac death (SCD) risk [127]. The authors designed a sudden cardiac death index (SCDI) from the RQA and Kolmogorov complexity parameters for the prediction of SCD. Statistically significant parameters were identified using the t-test and used as inputs to K-NN, SVM, decision tree, and probabilistic neural network (PNN) classifiers. The K-NN classifier distinguished the normal and SCD classes with 86.8% accuracy, 80% sensitivity, and 94.4% specificity, while the PNN also provided 86.8% accuracy, with 85% sensitivity and 88.8% specificity. Based on these results, the authors proposed that RQA and Kolmogorov complexity analysis can be used for the efficient detection of SCD. Beyond these studies, RQA of ECG signals has been widely studied for the detection of different diseases; a few RQA-based studies from the last 5 years for the diagnosis of different clinical conditions are summarized in Table 2.

Table 2.

Recent studies performed for the diagnosis of clinical conditions using RQA-based ECG analysis.

| Clinical conditions | Classification method | Performance | Ref. |
|---|---|---|---|
| Atrial fibrillation, atrial flutter, ventricular fibrillation, and normal sinus rhythm | Decision tree, random forest, and rotation forest | 98.37%, 96.29%, and 94.14% accuracy for rotation forest, random forest, and decision tree, respectively | [123] |
| Effect of the exposure to low-frequency noise of different intensities on the cardiovascular activities | Statistical analysis of RQA-based measures | Statistically significant parameters obtained with p value ≤ 0.05 | [126] |
| Obstructive sleep apnea | A soft decision fusion rule combining SVM and neural network | 86.37% sensitivity, 83.47% specificity, and 85.26% accuracy | [128] |
| Arrhythmia | Joint probability density classifier | 94.83 ± 0.37% accuracy | [129] |
| Sudden cardiac death | K-NN, SVM, decision tree, and probabilistic neural network | 86.8% accuracy, 80% sensitivity, and 94.4% specificity with K-NN classifier and 86.8% accuracy, 85% sensitivity, and 88.8% specificity with PNN | [127] |
| Atrial fibrillation | Unthresholded recurrence plots | 72% accuracy | [130] |

4.6. Applications of Poincaré Plot in ECG Signal Analysis

Ventricular fibrillation has been reported to be the most severe type of cardiac arrhythmia [131]. It results from cardiac impulses that have gone berserk within the ventricular muscle mass and is indicated by complex ECG patterns [131]. Electrical defibrillation is an effective treatment for ventricular fibrillation. Gong et al. applied the Poincaré plot to predict successful defibrillation in patients with ventricular fibrillation [132]. The Euclidean distances between successive points in the Poincaré plot were used to calculate the stepping median increment, which in turn was used to estimate the probability of successful defibrillation. The method was evaluated using the ROC curve, and its performance was comparable to that of established methods for predicting successful defibrillation.

Polycystic ovary syndrome (PCOS) is a common endocrine disorder affecting 5–10% of women of reproductive age [133]. PCOS has been reported to be associated with cardiovascular risk because of its connection with obesity [134]. Saranya et al. performed Poincaré plot-based nonlinear analysis of HRV signals acquired from PCOS patients to predict the associated cardiovascular risk [135]. The authors found that PCOS patients had reduced HRV and autonomic dysfunction (increased sympathetic activity and reduced vagal activity), which might herald cardiovascular risk. Based on the results, the authors suggested that Poincaré plot analysis may be used on its own to measure the extent of autonomic dysfunction in PCOS patients. Some Poincaré plot-based studies from the last 5 years for the diagnosis of different clinical conditions are listed in Table 3.

Table 3.

Recent studies performed for the diagnosis of clinical conditions using Poincaré plot analysis.

| Clinical conditions | Classification method | Performance | Ref. |
|---|---|---|---|
| Dilated cardiomyopathy | Multivariate discriminant analysis | 92.9% sensitivity, 85.7% specificity, and 92.1% AUC | [136] |
| Preeclampsia | Multivariate discriminant analysis | 91.2% accuracy | [137] |
| Polycystic ovary syndrome | Statistical analysis of Poincaré plot-based measures | Statistically significant parameters obtained with p value ≤ 0.05 | [135] |
| Atrial fibrillation | SVM optimized with particle swarm optimization | 92.9% accuracy | [138] |

4.7. Applications of Multiple Nonlinear Dynamical System Analysis Methods in ECG Signal Analysis

In the last few years, some researchers have also applied multiple nonlinear methods simultaneously for the analysis of ECG signals [42], in some cases in combination with linear methods [139]. Acharya et al. analyzed ECG signals using time-domain, frequency-domain, and nonlinear techniques (i.e., Poincaré plot, RQA, DFA, Shannon entropy, ApEn, SaEn, higher-order spectra (HOS), empirical mode decomposition (EMD), cumulants, and correlation dimension) for the diagnosis of coronary artery disease [140]. Goshvarpour et al. studied the effect of pictorial stimuli on the emotional autonomic response by applying nonlinear methods (DFA, ApEn, and Lyapunov exponent-based parameters), along with statistical measures, to ECG, pulse rate, and galvanic skin response signals [141]. Karegar et al. extracted nonlinear ECG features using rescaled range analysis, Higuchi's fractal dimension, DFA, the generalized Hurst exponent (GHE), and RQA for ECG-based biometric authentication [142]. Although the literature shows that different nonlinear methods have been combined to obtain better performance, no studies establishing the superiority of any one method over the others could be found.

5. Conclusion

Most biosignals are nonstationary, which often makes their analysis with conventional linear methods cumbersome. This has led to the development of nonlinear methods capable of robust biosignal analysis [9]. Among biosignals, ECG signals have been analyzed extensively with nonlinear methods, which many researchers have investigated for early diagnosis of diseases, human identification, and understanding the effects of different stimuli on the heart and the ANS. This review has covered the relevant theory, potential, and recent applications of nonlinear ECG signal analysis methods. Although these methods have shown promising results, it is envisaged that the existing methods may be extended, and new methods proposed, to improve performance and to handle large and complex datasets.

Acknowledgments

The authors thank the National Institute of Technology Rourkela, India, for the facilities provided for the successful completion of the manuscript.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- 1.Birnbaum Y., Wilson J. M., Fiol M., de Luna A. B., Eskola M., Nikus K. ECG diagnosis and classification of acute coronary syndromes. 2014;19(1):4–14. doi: 10.1111/anec.12130. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Stengaard C., Sørensen J. T., Rasmussen M. B., Bøtker M. T., Pedersen C. K., Terkelsen C. J. Prehospital diagnosis of patients with acute myocardial infarction. 2016;3(4) doi: 10.1515/dx-2016-0021. [DOI] [PubMed] [Google Scholar]

- 3.Koenig J., Jarczok M. N., Warth M., et al. Body mass index is related to autonomic nervous system activity as measured by heart rate variability — a replication using short term measurements. 2014;18(3):300–302. doi: 10.1007/s12603-014-0022-6. [DOI] [PubMed] [Google Scholar]

- 4.Petković D., Ćojbašić Ž., Lukić S. Adaptive neuro fuzzy selection of heart rate variability parameters affected by autonomic nervous system. 2013;40(11):4490–4495. doi: 10.1016/j.eswa.2013.01.055. [DOI] [Google Scholar]

- 5.Task Force of the European Society of Cardiology the North American Society of Pacing Electrophysiology. Heart rate variability: standards of measurement, physiological interpretation, and clinical use. 1996;93(5):1043–1065. doi: 10.1161/01.CIR.93.5.1043. [DOI] [PubMed] [Google Scholar]

- 6.Guyon I., Elisseeff A. An introduction to feature extraction. 2006;207:1–25. doi: 10.1007/978-3-540-35488-8_1. [DOI] [Google Scholar]

- 7.Li T., Zhou M. ECG classification using wavelet packet entropy and random forests. 2016;18(12):p. 285. doi: 10.3390/e18080285. [DOI] [Google Scholar]

- 8.Poli S., Barbaro V., Bartolini P., Calcagnini G., Censi F. Prediction of atrial fibrillation from surface ECG: review of methods and algorithms. 2003;39(2):195–203.

- 9.Blinowska K. J., Zygierewicz J. Boca Raton, FL, USA: CRC Press; 2011.

- 10.Schumacher A. Linear and nonlinear approaches to the analysis of R-R interval variability. 2004;5(3):211–221. doi: 10.1177/1099800403260619.

- 11.Acharya U. R., Chua E. C. P., Faust O., Lim T. C., Lim L. F. B. Automated detection of sleep apnea from electrocardiogram signals using nonlinear parameters. 2011;32(3):287–303. doi: 10.1088/0967-3334/32/3/002.

- 12.Al-Fahoum A. S., Qasaimeh A. M. A practical reconstructed phase space approach for ECG arrhythmias classification. 2013;37(7):401–408. doi: 10.3109/03091902.2013.819946.

- 13.Thelen E., Smith L. B. Hoboken, NJ, USA: John Wiley & Sons, Inc.; 1998. Dynamic systems theories; pp. 258–312.

- 14.Toor A. A., Sabo R. T., Roberts C. H., et al. Dynamical system modeling of immune reconstitution after allogeneic stem cell transplantation identifies patients at risk for adverse outcomes. 2015;21(7):1237–1245. doi: 10.1016/j.bbmt.2015.03.011.

- 15.Gintautas V., Foster G., Hübler A. W. Resonant forcing of chaotic dynamics. 2008;130(3):617–629. doi: 10.1007/s10955-007-9444-4.

- 16.Kreyszig E. Hoboken, NJ, USA: John Wiley & Sons, Inc.; 2010.

- 17.Jackson T., Radunskaya A. Vol. 158. Hoboken, NJ, USA: John Wiley & Sons, Inc.; 2015.

- 18.Din Q. Stability analysis of a biological network. 2014;4(3):123–129.

- 19.Jaeger J., Monk N. Bioattractors: dynamical systems theory and the evolution of regulatory processes. 2014;592(11):2267–2281. doi: 10.1113/jphysiol.2014.272385.

- 20.Stewart I. New York, NY, USA: John Wiley & Sons, Inc.; 2012. pp. 283–294.

- 21.Pham T. D. Possibilistic nonlinear dynamical analysis for pattern recognition. 2013;46(3):808–816. doi: 10.1016/j.patcog.2012.09.025.

- 22.Strogatz S. H. New York, NY, USA: Harper-Collins Publishers; 2014.

- 23.Henry B., Lovell N., Camacho F. Nonlinear dynamics time series analysis. 2012;2:1–39.

- 24.Szemplińska-Stupnicka W., Rudowski J. Neimark bifurcation, almost-periodicity and chaos in the forced van der Pol-Duffing system in the neighbourhood of the principal resonance. 1994;192(2-4):201–206. doi: 10.1016/0375-9601(94)90244-5.

- 25.Ford J. How random is a coin toss? 1983;36(4):40–47. doi: 10.1063/1.2915570.

- 26.Wallot S., Roepstorff A., Mønster D. Multidimensional recurrence quantification analysis (MdRQA) for the analysis of multidimensional time-series: a software implementation in MATLAB and its application to group-level data in joint action. 2016;7:1835. doi: 10.3389/fpsyg.2016.01835.

- 27.Takens F. Detecting strange attractors in turbulence. 1981;898:366–381. doi: 10.1007/BFb0091924.

- 28.Fang Y., Chen M., Zheng X. Extracting features from phase space of EEG signals in brain–computer interfaces. 2015;151:1477–1485. doi: 10.1016/j.neucom.2014.10.038.

- 29.Krakovská A., Mezeiová K., Budáčová H. Use of false nearest neighbours for selecting variables and embedding parameters for state space reconstruction. 2015;2015:12. Article ID 932750. doi: 10.1155/2015/932750.

- 30.Chai S. H., Lim J. S. Forecasting business cycle with chaotic time series based on neural network with weighted fuzzy membership functions. 2016;90:118–126. doi: 10.1016/j.chaos.2016.03.037.

- 31.Ye H., Deyle E. R., Gilarranz L. J., Sugihara G. Distinguishing time-delayed causal interactions using convergent cross mapping. 2015;5(1):14750. doi: 10.1038/srep14750.

- 32.Glushkov A. V., Khetselius O. Y., Brusentseva S. V., Zaichko P. A., Ternovsky V. B. Studying interaction dynamics of chaotic systems within a non-linear prediction method: application to neurophysiology. 2014;21:69–75.

- 33.Paul B., George R. C., Mishra S. K. Phase space interrogation of the empirical response modes for seismically excited structures. 2017;91:250–265. doi: 10.1016/j.ymssp.2016.12.008.

- 34.Fraser A. M., Swinney H. L. Independent coordinates for strange attractors from mutual information. 1986;33(2):1134–1140. doi: 10.1103/PhysRevA.33.1134.

- 35.Kim H. S., Eykholt R., Salas J. D. Nonlinear dynamics, delay times, and embedding windows. 1999;127(1-2):48–60. doi: 10.1016/S0167-2789(98)00240-1.

- 36.Mars N., Van Arragon G. Time delay estimation in nonlinear systems. 1981;29(3):619–621. doi: 10.1109/TASSP.1981.1163571.

- 37.Kononov E. Visual recurrence analysis. 2006. http://visual-recurrence-analysis.software.informer.com/download/

- 38.Colombelli A., von Tunzelmann N. Cheltenham, UK: Edward Elgar Publishing Limited; 2011. The persistence of innovation and path dependence; pp. 105–119.

- 39.Yang H. Tool box of recurrence plot and recurrence quantification analysis. http://visual-recurrence-analysis.software.informer.com/download/

- 40.Übeyli E. D. Adaptive neuro-fuzzy inference system for classification of ECG signals using Lyapunov exponents. 2009;93(3):313–321. doi: 10.1016/j.cmpb.2008.10.012.

- 41.Haykin S., Li X. B. Detection of signals in chaos. 1995;83(1):95–122. doi: 10.1109/5.362751.

- 42.Owis M. I., Abou-Zied A. H., Youssef A. B. M., Kadah Y. M. Study of features based on nonlinear dynamical modeling in ECG arrhythmia detection and classification. 2002;49(7):733–736. doi: 10.1109/TBME.2002.1010858.

- 43.Valenza G., Allegrini P., Lanatà A., Scilingo E. P. Dominant Lyapunov exponent and approximate entropy in heart rate variability during emotional visual elicitation. 2012;5:3. doi: 10.3389/fneng.2012.00003.

- 44.Übeyli E. D. Recurrent neural networks employing Lyapunov exponents for analysis of ECG signals. 2010;37(2):1192–1199. doi: 10.1016/j.eswa.2009.06.022.

- 45.Güler N. F., Übeyli E. D., Güler I. Recurrent neural networks employing Lyapunov exponents for EEG signals classification. 2005;29(3):506–514. doi: 10.1016/j.eswa.2005.04.011.

- 46.Wolf A., Swift J. B., Swinney H. L., Vastano J. A. Determining Lyapunov exponents from a time series. 1985;16(3):285–317. doi: 10.1016/0167-2789(85)90011-9.

- 47.Sano M., Sawada Y. Measurement of the Lyapunov spectrum from a chaotic time series. 1985;55(10):1082–1085. doi: 10.1103/PhysRevLett.55.1082.

- 48.Henry B., Lovell N., Camacho F. Nonlinear dynamics time series analysis. 2012;2:1–39.

- 49.Hentschel H. G. E., Procaccia I. The infinite number of generalized dimensions of fractals and strange attractors. 1983;8(3):435–444. doi: 10.1016/0167-2789(83)90235-X.

- 50.Lerma C., Reyna M. A., Carvajal R. Association between abnormal electrocardiogram and less complex heart rate variability estimated by the correlation dimension. 2015;36:55–64.

- 51.Grassberger P., Procaccia I. Measuring the strangeness of strange attractors. 1983;9(1-2):189–208. doi: 10.1016/0167-2789(83)90298-1.

- 52.Kantelhardt J. W., Koscielny-Bunde E., Rego H. H. A., Havlin S., Bunde A. Detecting long-range correlations with detrended fluctuation analysis. 2001;295(3-4):441–454. doi: 10.1016/S0378-4371(01)00144-3.

- 53.Peng C.-K., Buldyrev S. V., Havlin S., Simons M., Stanley H. E., Goldberger A. L. Mosaic organization of DNA nucleotides. 1994;49(2):1685–1689. doi: 10.1103/PhysRevE.49.1685.

- 54.Perkins S. E., Jelinek H. F., al-Aubaidy H. A., de Jong B. Immediate and long term effects of endurance and high intensity interval exercise on linear and nonlinear heart rate variability. 2017;20(3):312–316. doi: 10.1016/j.jsams.2016.08.009.

- 55.Hausdorff J. M., Mitchell S. L., Firtion R., et al. Altered fractal dynamics of gait: reduced stride-interval correlations with aging and Huntington’s disease. 1997;82(1):262–269. doi: 10.1152/jappl.1997.82.1.262.

- 56.Stout R. D., Wittstein M. W., LoJacono C. T., Rhea C. K. Gait dynamics when wearing a treadmill safety harness. 2016;44:100–102. doi: 10.1016/j.gaitpost.2015.11.012.

- 57.Gu R., Xiong W., Li X. Does the singular value decomposition entropy have predictive power for stock market?—evidence from the Shenzhen stock market. 2015;439:103–113. doi: 10.1016/j.physa.2015.07.028.

- 58.Auer B. R. Are standard asset pricing factors long-range dependent? 2018;42(1):66–88. doi: 10.1007/s12197-017-9385-y.

- 59.Ivanova K., Ausloos M. Application of the detrended fluctuation analysis (DFA) method for describing cloud breaking. 1999;274(1-2):349–354. doi: 10.1016/S0378-4371(99)00312-X.

- 60.Malamud B. D., Turcotte D. L. Self-affine time series: measures of weak and strong persistence. 1999;80(1-2):173–196. doi: 10.1016/S0378-3758(98)00249-3.

- 61.Heneghan C., McDarby G. Establishing the relation between detrended fluctuation analysis and power spectral density analysis for stochastic processes. 2000;62(5):6103–6110. doi: 10.1103/PhysRevE.62.6103.

- 62.Ménard S., Darveau M., Imbeau L. The importance of geology, climate and anthropogenic disturbances in shaping boreal wetland and aquatic landscape types. 2013;20(4):399–410. doi: 10.2980/20-4-3628.

- 63.Cannon M. J., Percival D. B., Caccia D. C., Raymond G. M., Bassingthwaighte J. B. Evaluating scaled windowed variance methods for estimating the Hurst coefficient of time series. 1997;241(3-4):606–626. doi: 10.1016/S0378-4371(97)00252-5.

- 64.Taqqu M. S., Teverovsky V., Willinger W. Estimators for long-range dependence: an empirical study. 1995;3(4):785–798. doi: 10.1142/S0218348X95000692.

- 65.Pranata A. A., Lee J. M., Kim D. S. Detecting smoking effects with detrended fluctuation analysis on ECG device. Proceedings of the Korean Institute of Communications and Information Sciences (KICS) Conference; 2017; Korea. pp. 425–426.

- 66.Kantelhardt J. W., Zschiegner S. A., Koscielny-Bunde E., Havlin S., Bunde A., Stanley H. E. Multifractal detrended fluctuation analysis of nonstationary time series. 2002;316(1-4):87–114. doi: 10.1016/S0378-4371(02)01383-3.

- 67.Penzel T., Kantelhardt J. W., Grote L., Peter J., Bunde A. Comparison of detrended fluctuation analysis and spectral analysis for heart rate variability in sleep and sleep apnea. 2003;50(10):1143–1151. doi: 10.1109/TBME.2003.817636.

- 68.Eckmann J.-P., Oliffson Kamphorst S., Ruelle D. Recurrence plots of dynamical systems. 1987;4(9):973–977. doi: 10.1209/0295-5075/4/9/004.

- 69.Zbilut J. P., Thomasson N., Webber C. L. Recurrence quantification analysis as a tool for nonlinear exploration of nonstationary cardiac signals. 2002;24(1):53–60. doi: 10.1016/S1350-4533(01)00112-6.

- 70.Firooz S. G., Almasganj F., Shekofteh Y. Improvement of automatic speech recognition systems via nonlinear dynamical features evaluated from the recurrence plot of speech signals. 2017;58:215–226. doi: 10.1016/j.compeleceng.2016.07.006.

- 71.Marwan N., Wessel N., Meyerfeldt U., Schirdewan A., Kurths J. Recurrence-plot-based measures of complexity and their application to heart-rate-variability data. 2002;66(2):026702. doi: 10.1103/PhysRevE.66.026702.

- 72.Trulla L. L., Giuliani A., Zbilut J. P., Webber C. L., Jr. Recurrence quantification analysis of the logistic equation with transients. 1996;223(4):255–260. doi: 10.1016/S0375-9601(96)00741-4.

- 73.Chandra S., Jaiswal A. K., Singh R., Jha D., Mittal A. P. Mental stress: neurophysiology and its regulation by Sudarshan Kriya yoga. 2017;10(2):67–72. doi: 10.4103/0973-6131.205508.

- 74.Nardelli M., Greco A., Bolea J., Valenza G., Scilingo E. P., Bailon R. Reliability of lagged Poincaré plot parameters in ultra-short heart rate variability series: application on affective sounds. 2017:p. 1. doi: 10.1109/jbhi.2017.2694999.

- 75.Das M., Jana T., Dutta P., et al. Study the effect of music on HRV signal using 3D Poincare plot in spherical co-ordinates - a signal processing approach. 2015 International Conference on Communications and Signal Processing (ICCSP); April 2015; Melmaruvathur, India. pp. 1011–1015.

- 76.Muralikrishnan K., Balasubramanian K., Ali S. M., Rao B. V. Poincare plot of heart rate variability: an approach towards explaining the cardiovascular autonomic function in obesity. 2013;57(1):31–37.

- 77.Roy B., Choudhuri R., Pandey A., Bandopadhyay S., Sarangi S., Kumar Ghatak S. Effect of rotating acoustic stimulus on heart rate variability in healthy adults. 2012;6(1):71–77. doi: 10.2174/1874205X01206010071.

- 78.Kamen P. W., Krum H., Tonkin A. M. Poincaré plot of heart rate variability allows quantitative display of parasympathetic nervous activity in humans. 1996;91(2):201–208. doi: 10.1042/cs0910201.

- 79.Toichi M., Sugiura T., Murai T., Sengoku A. A new method of assessing cardiac autonomic function and its comparison with spectral analysis and coefficient of variation of R–R interval. 1997;62(1-2):79–84. doi: 10.1016/S0165-1838(96)00112-9.

- 80.Corrales M. M., Torres B. . . C., Esquivel A. G., Salazar M. A. G., Naranjo Orellana J. Normal values of heart rate variability at rest in a young, healthy and active Mexican population. 2012;4(07):377–385. doi: 10.4236/health.2012.47060.

- 81.Richman J. S., Moorman J. R. Physiological time-series analysis using approximate entropy and sample entropy. 2000;278(6):H2039–H2049. doi: 10.1152/ajpheart.2000.278.6.h2039.

- 82.Costa M., Goldberger A. L., Peng C.-K. Multiscale entropy analysis of complex physiologic time series. 2002;89(6):068102. doi: 10.1103/PhysRevLett.89.068102.

- 83.Govindan R. B., Wilson J. D., Eswaran H., Lowery C. L., Preißl H. Revisiting sample entropy analysis. 2007;376:158–164. doi: 10.1016/j.physa.2006.10.077.

- 84.Wang Y., Wang J., Wei X. A hybrid wind speed forecasting model based on phase space reconstruction theory and Markov model: a case study of wind farms in northwest China. 2015;91:556–572. doi: 10.1016/j.energy.2015.08.039.