Abstract

Visual stimuli are known to activate the auditory cortex of deaf people, presenting evidence of cross-modal plasticity. However, the mechanisms underlying such plasticity are poorly understood. In this functional MRI study, we presented two types of visual stimuli, language stimuli (words, sign language, and lip-reading) and a general stimulus (checkerboard) to investigate neural reorganization in the superior temporal cortex (STC) of deaf subjects and hearing controls. We found that only in the deaf subjects, all visual stimuli activated the STC. The cross-modal activation induced by the checkerboard was mainly due to a sensory component via a feed-forward pathway from the thalamus and primary visual cortex, positively correlated with duration of deafness, indicating a consequence of pure sensory deprivation. In contrast, the STC activity evoked by language stimuli was functionally connected to both the visual cortex and the frontotemporal areas, which were highly correlated with the learning of sign language, suggesting a strong language component via a possible feedback modulation. While the sensory component exhibited specificity to features of a visual stimulus (e.g., selective to the form of words, bodies, or faces) and the language (semantic) component appeared to recruit a common frontotemporal neural network, the two components converged to the STC and caused plasticity with different multivoxel activity patterns. In summary, the present study showed plausible neural pathways for auditory reorganization and correlations of activations of the reorganized cortical areas with developmental factors and provided unique evidence towards the understanding of neural circuits involved in cross-modal plasticity.

1. Introduction

Cortical structures that are deprived of their normal sensory input may become responsive to the stimulation of adjacent receptors, a process that is generally known as cross-modal plasticity or cross-modal reorganization [1]. In human brain imaging studies, there is growing evidence showing that, in early bilaterally deaf adults, the superior temporal cortex (STC) may experience cross-modal recruitment of different visual inputs, such as visual motion [2–8], biological motion [9–11], sign language [11–19], and silent speech reading [15, 20–23]. Animal models have also confirmed the dystrophic change that occurs when the auditory cortex fails to develop typically due to the absence of auditory input [24–28].

Visual-related responses in the STC of deaf subjects could result from long-term auditory deprivation (e.g., missing auditory sensory input) but could also be caused by other dynamic cognitive functions (e.g., sign language learning) [1, 12, 16, 19, 29, 30]. In the previous studies, STC activity was found to positively correlate with the duration of deafness or the age at cochlear implantation [2, 18, 31–35], suggesting that functional reorganization was likely to take place in the auditory cortex over a considerable period of time. A functional magnetic resonance imaging (fMRI) study showed that STC activation was highly correlated with speech reading fluency, but not with the duration of sensory deprivation [36], indicating that functional compensation of sensory deprivation did not require slow progressive colonization of the STC by visual inputs, but instead rapidly modulated by the preexisting latent connectivity from high-level language-related cortical areas. Thus, for the reorganization of STC, potentially both bottom-up signals (e.g., from the visual cortex) and top-down modulation (e.g., from the associative frontal-temporal areas) could contribute to such cross-modal activity [30]. Meanwhile, a magnetoencephalography study showed that the left frontotemporal network, including the STG, was activated during lexicosemantic processing in the congenitally deaf individuals, but not responsive to the early sensory visual processing, suggesting a more top-down modulation from high-level language-related regions [37].

Although it is clearly known that the STC responds to various visual stimuli in deaf people, the neural mechanisms underlying this cross-modal plasticity are still not fully understood. There are questions remaining to be answered. First, how do developmental factors (e.g., the duration of deafness or the learning of sign languages) in deaf people constrain or promote the reorganized activity in the auditory cortex? Second, how do the bottom-up and top-down two neural pathways contribute to cross-modal activation? Third, does the STC integrate inputs from different pathways, or does it keep them functionally segregated?

In the present study, using fMRI, we aimed to directly compare cross-modal activity and whole-brain functional connectivity in subjects when they were viewing a general stimulus (checkerboard) representing the bottom-up input from the visual cortex and language-related stimuli (words, sign language, and lip-reading) denoting the both bottom-up from visual regions and top-down signals from associative cortical areas. Nineteen profoundly deaf (congenital) subjects, 15 residual hearing subjects with a hearing aid, and 15 hearing subjects were recruited to investigate how behavioral factors (e.g., the duration of hearing loss and age at sign language learning) affected cross-modal activity. This study also aimed to investigate possible sources of cross-modal activation by applying dynamic causal modeling (DCM) [38] and representational similarity analysis (RSA) [39]. We hypothesized that the reorganized STC activity by a checkerboard was mainly induced through a feed-forward network and that activity provoked by language-related stimuli was instigated from both feed-forward and feedback components, but relied more on the feedback regulation. Furthermore, it was considered that the STC activities responsive to the two pathways were likely to be functionally segregated.

2. Materials and Methods

2.1. Participants

Thirty-four early-deaf subjects (14 males; mean age: 20.8 years old) and 15 hearing controls (7 males; mean age: 20.3 years old) participated in the study. The deaf participants were from the Shanghai Youth Technical School for the Deaf (http://www.shlqj.net/longxiao), and their information on the history of hearing loss, hearing aid use, and sign language use was documented through an individual interview (Table 1). All participants were healthy, had a normal or corrected-to-normal vision, were not taking psychoactive medications, did not have a history of neurological or psychiatric illness, took classes at the high-school level, and had normal cognitive functions. In the residual hearing group, most participants communicated by a combination of two or three strategies, which included spoken language (13 out of 15), lip-reading (8 out of 15), and sign language (11 out of 15), while most of the profound deaf (15 out of 19) communicated only via sign language. The ethical committee at East China Normal University in China approved the experimental procedure. All participants gave their informed and written consent according to the Declaration of Helsinki and were paid for their participation. The 15 hearing subjects were recruited from East China Normal University in China and had no learning experience of sign language or lip reading. The groups were matched for age, gender, handedness, and education.

Table 1.

Demographic data of the participants.

(a) Profoundly deaf

| Number | Age (years) | Sex | Cause of deafness | Hearing threshold (dB) | Age of SL (years) | Duration of deafness (years) | |

|---|---|---|---|---|---|---|---|

| Left | Right | ||||||

| 1 | 17 | M | Hereditary | 104 | 106 | N/A | 16 |

| 2 | 20 | M | Ototoxic drugs | 110 | 110 | 6 | 19 |

| 3 | 20 | M | Ototoxic drugs | 84 | 79 | 8 | 18 |

| 4 | 24 | M | Ototoxic drugs | 119 | 118 | 13 | 22 |

| 5 | 21 | F | Ototoxic drugs | 112 | 108 | 10 | 20 |

| 6 | 22 | F | Ototoxic drugs | 118 | 120 | 11 | 15 |

| 7 | 18 | F | Ototoxic drugs | 119 | 120 | 7 | 15 |

| 8 | 20 | M | Ototoxic drugs | 110 | 93 | 9 | 19 |

| 9 | 19 | F | Hereditary | 109 | 109 | N/A | 18 |

| 10 | 20 | M | Hereditary | 94 | 93 | N/A | 20 |

| 11 | 23 | F | Meningitis | 103 | 108 | 6 | 21 |

| 12 | 22 | F | Hereditary | 81 | 95 | N/A | 22 |

| 13 | 21 | F | Unknown | 110 | 109 | 7 | 19 |

| 14 | 22 | F | Ototoxic drugs | 108 | 117 | 7 | 21 |

| 15 | 21 | M | Ototoxic drugs | 110 | 110 | 6 | 21 |

| 16 | 21 | F | Meningitis | 104 | 103 | 6 | 20 |

| 17 | 22 | M | Ototoxic drugs | 105 | 110 | 7 | 20 |

| 18 | 22 | M | Ototoxic drugs | >120 | 109 | 6 | 21 |

| 19 | 21 | M | Ototoxic drugs | >110 | 110 | 13 | 19 |

(b) Residual hearing

| Number | Age (years) | Sex | Cause of deafness | Hearing threshold (dB) | Duration of deafness (years) | Duration of hearing aid use (years) | |

|---|---|---|---|---|---|---|---|

| Left (without aid) | Right (without aid) | ||||||

| 1 | 18 | F | Meningitis | 60 (105) | 55 (101) | 18 | 15 |

| 2 | 19 | M | Ototoxic drugs | 100 | 75 (75) | 17 | 14 |

| 3 | 19 | F | Ototoxic drugs | 48 (73) | 35 (70) | 19 | 10 |

| 4 | 20 | F | Ototoxic drugs | 105 | 79 (106) | 18 | 10 |

| 5 | 19 | M | Meningitis | 41 (88) | 64 (103) | 19 | 15 |

| 6 | 24 | M | Ototoxic drugs | 70 (76) | 93 (101) | 23 | 11 |

| 7 | 24 | F | Head injury | 66 (107) | 94 (98) | 22 | 22 |

| 8 | 24 | F | Ototoxic drugs | 47 (68) | 65 (93) | 23 | 20 |

| 9 | 21 | F | Ototoxic drugs | 75 (86) | 89 | 19 | 14 |

| 10 | 20 | M | Ototoxic drugs | 69 (99) | 75 (103) | 18 | 17 |

| 11 | 21 | F | Ototoxic drugs | 86 (108) | 66 (88) | 19 | 18 |

| 12 | 19 | F | Ototoxic drugs | 69 (88) | 84 (99) | 18 | 15 |

| 13 | 20 | F | Ototoxic drugs | 96 | 83 (95) | 18 | 14 |

| 14 | 20 | F | Ototoxic drugs | 108 | 84 (110) | 18 | 11 |

| 15 | 23 | F | Ototoxic drugs | 65 (101) | 101 | 20 | 19 |

(c) Hearing participants

| Number | Age (years) | Sex |

|---|---|---|

| 1 | 19 | M |

| 2 | 23 | M |

| 3 | 19 | F |

| 4 | 17 | F |

| 5 | 19 | F |

| 6 | 21 | F |

| 7 | 20 | F |

| 8 | 20 | F |

| 9 | 19 | M |

| 10 | 19 | F |

| 11 | 19 | F |

| 12 | 24 | M |

| 13 | 22 | M |

| 14 | 22 | M |

| 15 | 22 | M |

The hearing loss of deaf participants was confirmed by testing hearing thresholds with audiometry (see Methods and Materials) in the Institute of Speech and Hearing Science at East China Normal University. The averaged hearing thresholds in decibels of each participant are reported in the table. Profoundly deaf group: n = 19, 9 females, mean age = 20.84 ± 1.68 years; residual hearing group: n = 15, 11 females, mean age = 20.73 ± 2.05 years. SL: sign language. Note: ototoxic drugs mean the misuse of antibiotics.

Suitable deaf participants were selected by means of hearing threshold pretests, conducted within the 2 weeks preceding the fMRI experiment. To facilitate a preliminary screening of the subjects, deaf participants self-reported their level of hearing loss on the basis of their audiologists' diagnoses. Hearing thresholds of all the participants were then measured at the Institute of Speech and Hearing Science, Shanghai. Thresholds were assessed monaurally for both ears, either with or without a hearing aid, at 250, 500, 1000, 2000, 4000, and 8000 Hz, using steps of 5 dB. According to the International Hearing Impairment Classification Standard [40], we divided the 34 deaf participants into two groups in terms of their hearing loss level: profoundly deaf (>90 dB, n = 19; in average, the left hearing is 106.8 ± 2.5 dB; the right hearing is 106.7 ± 2.4 dB) and residual hearing (<75 dB, n = 15; the left hearing is 73.6 ± 5.5 dB; the right hearing is 76.1 ± 4.4 dB) (Table 1).

2.2. Visual Stimuli

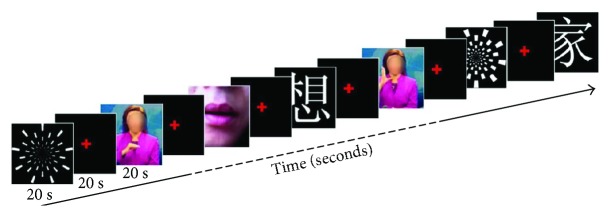

Four different visual materials were presented to participants, a checkerboard pattern to act as a general visual stimulus, and three visual stimuli with language content: words, sign language, and lip-reading (Figure 1, which also see details in Supporting information (available here)). All stimuli were pseudorandomly presented using a block design (Figure 1). Within each block, only one type of stimulus was presented. Each block lasted 20 s and was followed by a 20 s interblock interval. During the 20 s visual presentation, the stimuli were played at a similar rate. During the 20 s interval, a red cross with a black background was presented at the center of the screen and participants were asked to maintain their gaze on the cross. Per subject, twenty blocks in total were included. That is, each type of stimulus was repeated for five times. The blocks were separated into three sessions (6 or 7 blocks per session), with a 5 min intersession interval for rest.

Figure 1.

An example session of experimental paradigm. Subjects were presented alternating blocks of four different visual stimuli: checkerboard, words, sign language, and lip-reading. The order of presentation was pseudorandomly assigned. For each subject, there were 20 blocks (four stimuli × five repetitions), with each stimulus presented for 20 s and the black screen sustained for 20 s as an interval. The whole experiment was separated into three sessions for the purpose of avoiding subject fatigue. Throughout a block, subjects were asked to either fixate on a red cross at the center of the screen or concentrate on the visual stimuli. Questions were asked at the end of the experiment to ensure that subjects had paid attention to the visual stimuli (Supporting information).

Checkerboard stimuli were presented at 1280 × 1024 pixels. Each image was presented for 1 s. Word stimuli were composed of 80 Chinese characters (monosyllable) chosen from the List of Frequently Used Characters in Modern Chinese written by the State Language Commission of China. Each character was written in white on a black background and presented as a stimulus for 1 s using a font and size of SimSun 36. For sign language stimuli, five sentences were chosen and expressed by a female presenter moving her hands and arms without facial expression. The video was presented at a resolution of 1024 × 768 pixels, and each sentence lasting 10 seconds was repeated twice within the same block (20 seconds). The presenter's face in the video was masked to avoid potential interference from the lip-reading. For lip-reading stimuli, consecutive frames of a feminine face pronouncing disyllable Chinese words were presented at a moderate speed. The disyllable words were chosen from the Lexicon of Common Words in Contemporary Chinese by the Commercial Press. Both sign language and lip-reading stimuli were displayed at a rate similar to that used for the word and checkerboard stimuli (~1 Hz). The questionnaire data after scanning showed that all the participants were able to view the stimuli clearly and understand the content of each stimulus (Supporting information).

2.3. Experiment Procedure

The fMRI experiment was approved by the Shanghai Key Laboratory of Magnetic Resonance at East China Normal University. Before scanning, the experimental paradigm and scanning procedures were introduced to the deaf participants through a professional signer. They were asked to stay focused on stimuli and were told that they would be asked questions later after the scan to ensure that attention had been paid to the stimuli. Visual stimuli were displayed on a half-transparent screen hung around 285 cm away from the participant's eyes and displayed via a LCD projector (Epson ELP-7200L, Tokyo, Japan). The participant viewed the screen through a mirror. The participant's right hand was placed on a button box connected to a computer so that the participant was able to press a button as a sign that he/she wished to withdraw at any stage of the experiment or scan, without having to give a reason.

After scanning, all participants were asked to complete a feedback questionnaire about the content of the experiment and their subjective experiences, to ensure that they were paying attention during the experimental sessions. They were also asked to give ratings on a 3-point scale (3 = all stimuli were very clear, 1 = all stimuli were not clear) to ensure both the clarity of visual stimuli presented and their full engagement in the experiment. Additionally, participants had to describe what they had just seen between trials, the frequency of checkerboard flashing, and the meaning of simple words, sign language, and lip-reading sentences used during the experiment. We did not intend to control the complexity of the language stimuli. The rating scores from stimulus categories did not significantly differ from each other (one-way ANOVA, p > 0.3).

2.4. Data Acquisition

The fMRI was performed on a 3-T TimTrio (Siemens, Erlangen, Germany) scanner. During scanning, the participant's head was immobilized using a tight but comfortable foam padding. To avoid nonspecific activation, the participant was asked not to make any sort of response or read aloud during the scan. When presented with visual stimuli, the participant was required to concentrate on the presentation but was not required to perform any mental task or physical operation. Ear defenders were used for all residual and hearing participants throughout the whole procedure. Each participant underwent a T1-weighted structural MR scan (3-D FLASH), with 1 mm-thick slices, a repetition time (TR) of 1900 ms, an echo time (TE) of 3.42 ms, a flip angle of 9°, and a field of view (FOV) of 240 × 240 mm. FMRI was performed using echo planar imaging (EPI) sequences with the following parameters: 32 axial slices acquired in an interleaved order; TR, 2000 ms; TE, 30 ms; voxel size, 3.75 × 3.75 × 3.75 mm; flip angle, 70°; and FOV, 240 × 240 mm. A total of 147 sessions (78,400 volumes) were collected from 49 participants.

2.5. Preprocessing

The first two volumes of each run were discarded to allow for T1 equilibration effects. Data were analyzed using SPM8 (Wellcome Trust Centre for Neuroimaging, London, UK) running within Matlab 7.10 (Mathworks Inc., Natick, MA, USA). The image data preprocessing followed standard SPM8 preprocessing procedures and included slice timing correction, realignment for the correction of motion artifacts, coregistration to the participant's structural T1 image, normalization to the Montreal Neurological Institute (MNI) template, and smoothing with a Gaussian kernel of [8 8 8] full width at half maximum. No participants were discarded from the analysis. The head movements were less than 3.75 mm.

2.6. Cross-Modal Activation Analysis

A first-level analysis approach was adopted for the block-design fMRI data using SPM8. In this step, a general linear model encompassing the design and contrasts at the individual subject level was created. The model contained all the information on different conditions, onsets, and durations for all the scans combined across a subject. The twelve predictors included [1–4] the onsets of the four conditions (checkerboard, words, sign language, and lip-reading) in the profoundly deaf group, [5–8] the onsets of the four conditions in the residual deaf group, and [9–12] the onsets of the four conditions in the hearing group. These twelve events were modeled as delta functions convolved with the canonical hemodynamic response function and its temporal and dispersion derivatives. Head motion parameters derived from realignment were also included in the model as covariates of no interest.

The weighted sum of the parameter estimates from the individual analysis was represented as contrast images that were used for the group analysis using a random effect model. The contrast images obtained from the individual analyses represented the normalized condition-related increment of the MR signal of each subject, with the visual stimulus presentations compared with the resting baseline period (stimuli > baseline). The second-level group analysis of the three participant groups (Group: profoundly deaf, residual hearing, and hearing) in the four experimental conditions (Condition: checkerboard, words, sign language, and lip-reading) was performed using SPM. Each contrast image from the relevant condition was firstly submitted to a one-sample t-test at the group level for the whole brain to examine the cross-modal activations in the auditory cortex in individual groups. Then, to identify the differences between groups and conditions, a two-way ANOVA with two main factors: Group and Condition, was conducted for the whole brain using a general linear model. To define the regions of interests (ROIs) for following analyses, the peak voxels were selected within the STC (Brodmann areas 41 and 42) in the right hemisphere of the whole-brain map showing a significant main effect of Group (peak at ) and within language-related brain regions: the left anterior temporal cortex (ATC, peak at ) and left inferior frontal gyrus (IFG, peak at ) in the map showing the Condition effect. A spherical ROI with a 10 mm radius was then generated and centered on the peak voxel. The mean percent signal change for each participant was extracted from the first-level analysis using the Marsbar software tool (http://marsbar.sourceforge.net).

2.7. Correlation Analysis

In the residual hearing group, most participants communicated by a combination of two or three strategies, which made the analysis of their language learning experience complicated. In the profoundly deaf group, language experience of four participants was not available. Therefore, only 15 profoundly deaf participants were included in the correlation analysis. For the same reason, only the profoundly deaf group was examined to be compared with the hearing group in the functional connectivity analysis and dynamic casual modeling (descripted below). To test the hypothesis that the sign language experience would modulate cross-modal reorganization, we examined the activity in the right superior temporal cortex (STC; using the ROIs defined in the STC showing the Group effect). Spearman's rank tests for correlations between STC activity and the duration of deafness or between STC activity and the age of learning sign language were performed.

2.8. Functional Connectivity Analysis

A functional connectivity analysis was performed to search for brain areas showing significant differences between the profoundly deaf and hearing groups, with the right STC as a seed region (the same ROI in the above analyses). Functional connectivity analyses were performed using CONN-fMRI Functional Connectivity SPM [41]. EPI images that had been preprocessed as described but had undergone no further statistical analysis were used. Connectivity strength was calculated over the visual presentation period. Before the subject-level analysis, standard preprocessing and depositing procedures using the default settings of the CONN toolbox were performed on the EPI data using the BOLD signal derived from white matter masks and cerebrospinal fluid, as well as motion correction parameters from the realignment stage of the spatial preprocessing as covariates of no interest. The data were further band-pass filtered between 0.008 and 0.09 Hz. For each subject, bivariate regression coefficients were estimated to represent the total linear temporal association between the BOLD signal of the ROIs and the rest of the brain. The subsequent analysis compared correlation strengths by a two-sample t-test (FDR, p < 0.05 corrected) on the beta images from the group analysis to examine the differences between the profoundly deaf and the hearing groups at a whole-brain level. To identify the task specificity in each stimulus condition, a further two-sample t-test (FDR, p < 0.05 corrected) on the beta images of differences between the groups was performed to examine the differences between the checkerboard condition and the three language conditions.

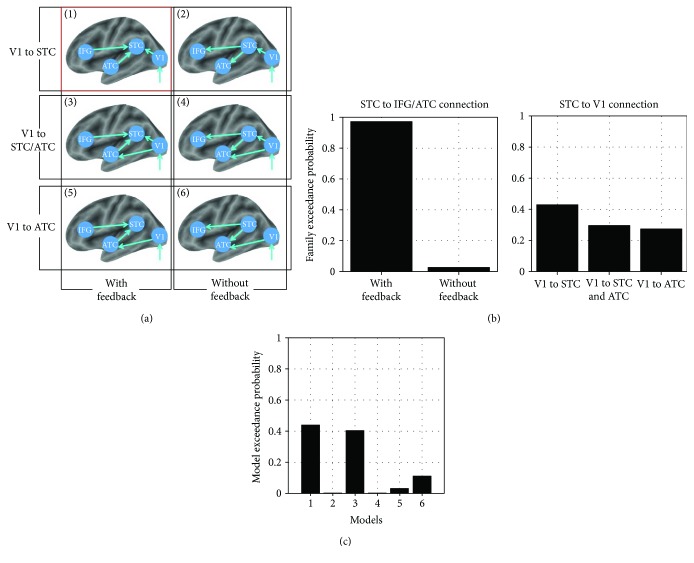

2.9. Dynamic Causal Modeling

Six different models regarding the language-related visual inputs in deaf participants were compared. These models mainly tested whether STC activations were induced by language stimuli receiving the feedback modulation from IFG and ATC and the feed-forward signal from the primary visual cortex (V1) (see Results). Each model was composed of four regions: IFG, ATC, STC, and V1. The extrinsic input (visual stimulation) always entered the system via the V1. The main differences among the models involved the connections among brain regions: specifically, (1) a model with feedback or feed-forward connections between IFG/ATC and STC, (2) a model with both feed-forward connections between V1 and STC and between V1 and ATC, and (3) a model with only feed-forward connections between V1 and STC or between V1 and ATC. The models were split into two classes of families. The first class tested if models with or without feedback (IFG/ATC to STC) were more likely to explain the data. The family with feedback from IFG/ATC to STC included models [1], [3], and [5], and the family without feedback included models [2], [4], and [6]. The second class tested if models fitted the data which explained the connections between V1 and STC, including V1 to STC (models [1] and [2]), V1 to both STC and ATC (models [3] and [4]), or V1 to only ATC (models [5] and [6]). A group analysis (p < 0.001, FDR p < 0.05 corrected) of deaf participants (profoundly deaf and residual hearing groups) was conducted to investigate the voxels most significantly activated across all three language-related stimuli in areas of left V1, STC, ATC, and IFG. Specifically, the peak intensities of four regions were identified at V1 , STC , ATC , and IFG . The principle eigenvariety (time series) was extracted from the volumes of interest that centered at the coordinates of the nearest voxels within a sphere of 8 mm radius (ROI). Based on the estimated model evidence of each model, using SPM8, random effect Bayesian model selection then calculated the “exceedance probability.” When comparing model families, all models within a family were averaged using Bayesian model averaging and the exceedance probabilities were calculated for each model family.

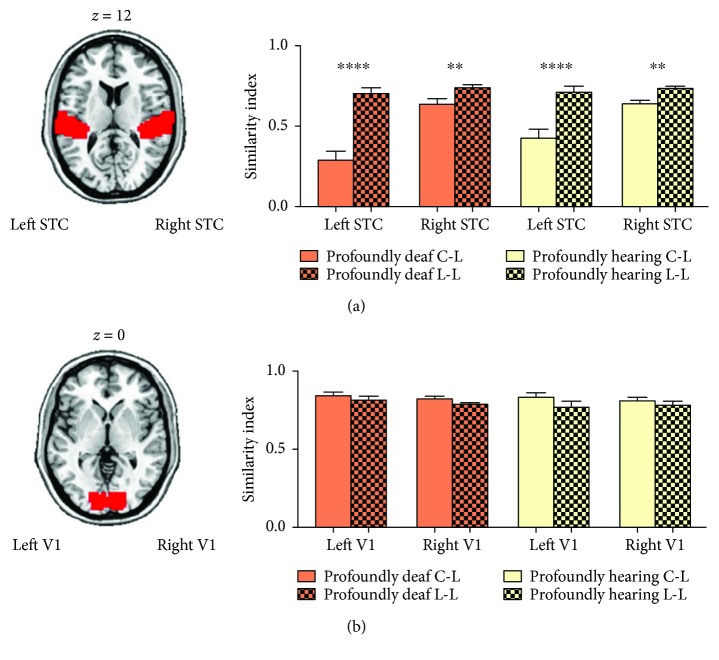

2.10. Representational Similarity Analysis (RSA)

The analysis of neural activity within ROIs was conducted with the RSA toolbox (http://www.mrc-cbu.cam.ac.uk/methods-and-resources/toolboxes/) [39]. Both the primary visual area and STC were selected as ROIs defined anatomically by using WFU PickAtlas [42]. We compared the condition-wise patterns amongst fMRI t-maps for the four types of visual stimuli: checkerboard (nonlanguage), words, sign language, and lip-reading (language). Per subject, the representational dissimilarity matrixes (RDMs) comprised correlation distances (1 correlation coefficient) between the images from the blocks for each condition in both the profoundly deaf and the residual hearing group, which yielded a 4 × 4 matrix. The four conditions were separated into two categories: nonlanguage (checkerboard) and language (words, sign language, and lip-reading). We then compared the correlation coefficient in the three pairs between the nonlanguage and the language conditions (category C-L: checkerboard versus words, checkerboard versus sign language, and checkerboard versus lip-reading) with the three pairs within the language conditions (category L-L: words versus sign language, words versus lip-reading, and sign language versus lip-reading) for each subject. In the individual ROIs, the similarities of the two categories were tested statistically (t-test, p < 0.05) in both the profoundly deaf and the residual hearing groups. As there was no plasticity in the auditory cortex with most of visual stimuli in the hearing group, the RSA analysis did not include such group of participants.

3. Results

3.1. Brain Activations in Auditory Areas in Response to Visual Stimuli

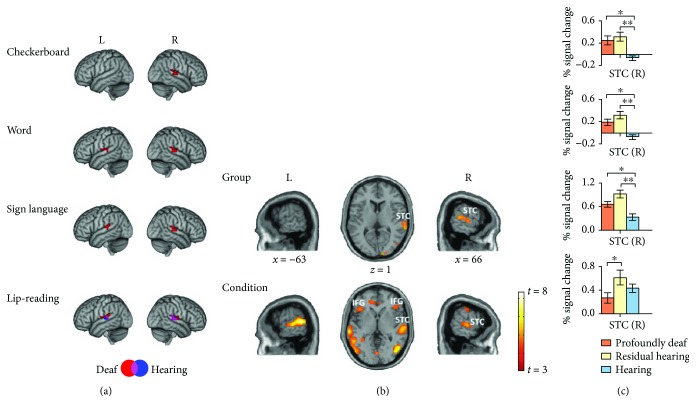

We first examined cross-modal activation in the STC of both the deaf and the hearing groups at the group level for each condition (Table 2). We found that the STC was significantly activated by all of the visual stimuli (p < 0.001, cluster level p FDR < 0.05 corrected; Figure 2(a)) in the deaf participants. The visual stimuli with language content activated the STC bilaterally, and the checkerboard only induced the STC activation in the right hemisphere (Figure 2(a)). The hearing subjects did not show such cross-modal activity, except for the lip-reading condition. Then, we conducted a two-way ANOVA to identify the difference in brain activity between the profoundly deaf, the residual hearing, and the hearing groups and between four visual conditions (Figure 2(b) and Table 3). Results demonstrated that the activations in the right STC had a significant main effect of both Group (p < 0.001, p FDR < 0.05 corrected, Figure 2(b)) and Condition (p < 0.001, p FDR < 0.05 corrected, Figure 2(b)). Other brain areas, including the bilateral middle lateral occipital gyrus, bilateral anterior temporal cortex (ATC), and inferior frontal gyrus (IFG), were also activated to the main effect of Condition (Table 3).

Table 2.

Peak activations for BA41 and BA42: profoundly deaf, residual hearing, and hearing groups (p < 0.001, uncorrected, minimum cluster size = 10).

| Group | Peak coordinates (BA41) | Number of voxels | Peak Z statistic | Peak coordinates (BA42) | Number of voxels | Peak Z statistic | ||||

|---|---|---|---|---|---|---|---|---|---|---|

| X | Y | Z | X | Y | Z | |||||

| Checkerboard | ||||||||||

| Profound | / | / | / | / | / | 66 | −27 | 15 | 19 | 4.47 |

| Residual | / | / | / | / | / | 69 | −24 | 9 | 30 | 3.87 |

| Hearing | / | / | / | / | / | / | / | / | / | |

| Word | ||||||||||

| Profound | −48 | −33 | 12 | 23 | 4.49 | 60 | −30 | 15 | 29 | 3.78 |

| Residual | / | / | / | / | / | 69 | −27 | 9 | 12 | 3.64 |

| Hearing | / | / | / | / | / | / | / | / | / | |

| Sign language | ||||||||||

| Profound | 54 | −24 | 6 | 20 | 4.54 | 66 | −30 | 12 | 34 | 5.54 |

| / | / | / | / | / | −66 | −30 | 6 | 11 | 4.75 | |

| Residual | 48 | −33 | 9 | 24 | 5.07 | 66 | −36 | 18 | 31 | 4.62 |

| / | / | / | / | / | −63 | −30 | 6 | 18 | 4.75 | |

| Hearing | / | / | / | / | / | / | / | / | / | |

| Lip-reading | ||||||||||

| Profound | 54 | −24 | 6 | 16 | 4.72 | 66 | −24 | 12 | 39 | 4.70 |

| / | / | / | / | / | −66 | −30 | 6 | 13 | 3.92 | |

| Residual | 57 | −27 | 12 | 18 | 4.41 | 63 | −24 | 12 | 45 | 5.10 |

| / | / | / | / | / | −69 | −27 | 6 | 38 | 5.36 | |

| Hearing | 54 | −24 | 6 | 13 | 4.44 | 66 | −30 | 12 | 33 | 4.40 |

| / | / | / | / | / | −69 | −27 | 6 | 14 | 4.26 | |

Peak coordinates refer to stereotactic coordinates in MNI (Montreal Neurological Institute) space. BA: Brodmann area.

Figure 2.

Activations in the superior temporal cortex in response to visual stimuli. (a) Group-level activities for the four visual stimuli are displayed in MNI (Montreal Neurological Institute) standard space. Lateral views of left and right hemispheres showing activity for the checkerboard, word, sign language, and lip-reading stimuli in deaf (red, including both the profoundly deaf and the residual hearing groups, n = 34) and hearing (blue, n = 15) groups within the brain regions of Brodmann areas (BAs) 41 and 42. The deaf group showed a response to the checkerboard in the right STC region (p < 0.001, FDR p < 0.05 corrected). Bilateral STC activations were found under the word and sign language conditions in deaf subjects (p < 0.001, FDR p < 0.05 corrected). Both the deaf and the hearing groups showed bilateral activations for the lip-reading stimuli (p < 0.001, FDR p < 0.05 corrected). The coordinates and voxel sizes are listed in detail in Table 2. (b) Brain activation to the main effect of Group and Condition are projected on lateral and top views of the brain (t > 3.0, p < 0.001, FDR p < 0.05 corrected). (c) Percent signal change in the regions of interest (10 mm sphere surrounding the peak voxel at in the right STC of the brain map showing the Group effect) of the profoundly deaf (orange, n = 19), residual hearing (yellow, n = 15), and hearing (blue, n = 15) groups. ∗ p < 0.05 (Student's t-test), ∗∗ p < 0.01. Error bars indicate 1 standard error. L: left hemisphere; R: right hemisphere; STC: superior temporal cortex; IFG: inferior frontal gyrus.

Table 3.

Peak activations for the main effect of Group and Condition (two-way ANOVA, p < 0.001, FDR p < 0.05 corrected).

| Main effect | Brain region | Number of voxels | Peak coordinates | Z score | ||

|---|---|---|---|---|---|---|

| X | Y | Z | ||||

| Group | Temporal_Superior_Right | 151 | 66 | −27 | 13 | 4.16 |

| Occipital_Middle_Left | 53 | −36 | −81 | 0 | 4.14 | |

| Occipital_Middle_Right | 77 | 24 | −96 | 3 | 5.49 | |

| Anglar_Left | 34 | −54 | −60 | 39 | 4.02 | |

| Frontal_Middle_Right | 21 | −33 | 6 | 45 | 4.01 | |

| Precentral_Left | 56 | −24 | −3 | 36 | 3.76 | |

| Thalamus_Right | 10 | 24 | −27 | 21 | 3.92 | |

| Cerebelum_7b_Left | 17 | −36 | −48 | −42 | 3.47 | |

| SupraMarginal_Right | 19 | 42 | −39 | 33 | 3.35 | |

| Cingulum_Anterior_Right | 10 | 6 | 42 | 15 | 3.17 | |

|

| ||||||

| Condition | Temporal_Superior_Right | 426 | 66 | −25 | 10 | 6.75 |

| Temporal_Superior_Left | 305 | −63 | −48 | 9 | 7.54 | |

| Frontal_Inferior_Tri_Left | 249 | −48 | 22 | 13 | 7.58 | |

| Frontal_Inferior_Tri_Right | 45 | 42 | 30 | 0 | 5.85 | |

| Temporal_Middle_Left | 234 | 54 | −3 | 45 | 6.37 | |

| Temporal_Middle_Right | 261 | 51 | −66 | 3 | 5.75 | |

| Temporal_Pole_Left | 34 | −57 | 7 | −9 | 4.47 | |

| Temporal_Pole_Right | 50 | 57 | 6 | −12 | 5.19 | |

| Cingulum_Anterior | 123 | −12 | 42 | 0 | 7.36 | |

| ParaHippocampal_Right | 56 | 30 | −36 | −9 | 7.05 | |

| Fusiform_Left | 30 | −30 | −39 | −12 | 6.44 | |

| Frontal_Middle_Right | 23 | 30 | 24 | 39 | 4.12 | |

| Precentral_Left | 54 | −51 | −6 | 48 | 5.41 | |

| Precentral_Right | 12 | 54 | −3 | 45 | 5.19 | |

| Precuneus_Right | 49 | 9 | −48 | 48 | 6.22 | |

| Lingual_Left | 29 | 0 | −78 | −3 | 4.68 | |

Note: peak coordinates refer to stereotactic coordinates in MNI space.

We next studied the STC activation in the right hemisphere that was induced by all four visual stimuli (Figures 2(a) and 2(c) and Table 2). For the checkerboard stimulus, we found that the right STC was significantly activated, and the post hoc region of interest (ROI, selected from the map showing the main effect of Group) analysis showed that the cross-modal activation was significantly higher in both the profoundly deaf (t-test, p < 0.017) and the residual hearing groups (t-test, p < 0.002) than in the hearing group (Figure 2(c), first row). For the visual word stimulus, the activation in the right STC showed significant differences between the profoundly deaf and the hearing groups (t-test, p < 0.002) and between the residual- and hearing groups (t-test, p < 0.001) (Figure 2(c), second row). For the sign language stimulus, the STC showed enhanced responses in both the profoundly deaf (t-test, p < 0.003) and the residual hearing groups (t-test, p < 0.02) in comparison with the hearing subjects (Figure 2(c), third row). For the lip-reading stimulus, cross-modal activations were found in the right STC in all subject groups, with no significant differences being found between the profoundly deaf and the hearing groups and between the residual hearing and the hearing groups (t-test, all p > 0.2; Figure 2(c), last row).

3.2. Correlations between Developmental Parameters and STC Activations

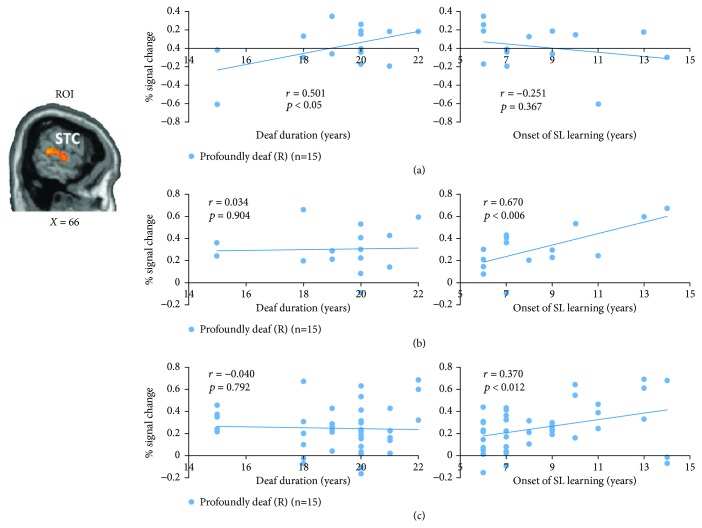

We then wished to investigate whether activations in auditory regions showed a stronger correlation with the duration of hearing loss or with the age of starting to learn sign language. Most of the residual hearing subjects had a reasonably similar learning duration in reading Chinese words and frequently used multiple language strategies (sign language, speech reading, and spoken language) in their communications. Thus, it is difficult to determine the accurate duration of language learning in the residual hearing group. In the correlation analysis, we only included profoundly deaf subjects and the developmental factors of duration of deafness and the age of learning sign language (Table 1). We first confirmed that the two developmental parameters were not significantly correlated with each other (Spearman's rank, r = −0.238, p > 0.392).

For profoundly deaf individuals, we found that the right STC activation resulting from the checkerboard was positively correlated with the duration of deafness (Spearman's rank, r = 0.501, p < 0.05), but not with the age of sign language learning (Spearman's rank, r = −0.251, p > 0.366; Figure 3(a)). In contrast, the STC activation evoked by sign language stimuli was positively associated with the onset of sign language learning (r = 0.670, p < 0.006), but not with the duration of deafness (r = 0.034, p > 0.903; Figure 3(b)). Similar correlations were also found for all the visual stimuli that contained language content. That is, STC activity induced by all of the language stimuli was highly correlated with the onset of sign language learning (Spearman's rank, r = 0.370, p < 0.012), but not with the duration of deafness (Spearman's rank, r = −0.04, p > 0.792; Figure 3(c)). Further analyses showed that the activation in the left ATC and left IFG during the presentation of sign language was highly correlated with the onset of sign language learning (ATC: r = 0.642, p < 0.01; IFG: r = 0.703, p < 0.003; Figure S1). Interestingly, the activation in the same IFG region under the word condition also demonstrated a significant correlation with the onset of sign language learning (IFG: r = 0.501, p < 0.05) (Figure S1). However, no areas showing significant correlation with the onset of sign language were found under the checkerboard condition.

Figure 3.

Correlations between superior temporal cortex (STC) activations and duration of deafness and onset of sign language learning in the profoundly deaf group. (a) A sagittal slice depicting the STC region of interest (ROI, selected based on the brain map showing the Group effect, Figure 2(b)) in the right hemisphere. Significant correlations were found in the profoundly deaf group (n = 15) between right STC activity for the checkerboard and duration of deafness (r = 0.501, p < 0.05, Bonferroni corrected), but not between this activation and the onset of sign language learning (the age of starting to learn sign language) (r = −0.251, p = 0.367). (b) In contrast, significant positive correlation was found between the activation for sign language and the onset of sign language learning (r = 0.670, p < 0.006, corrected), but not between the activation and hearing loss duration (r = 0.034, p = 0.904). (c) In the profoundly deaf group (n = 15), the STC activity induced by all the visual language stimuli (including words, sign language, and lip-reading) was correlated with the onset of sign language learning (r = 0.370, p < 0.012, corrected), but not with the duration of deafness (r = −0.04, p = 0.792).

3.3. Whole-Brain Functional Connectivity with Cross-Modal Activity in the STC

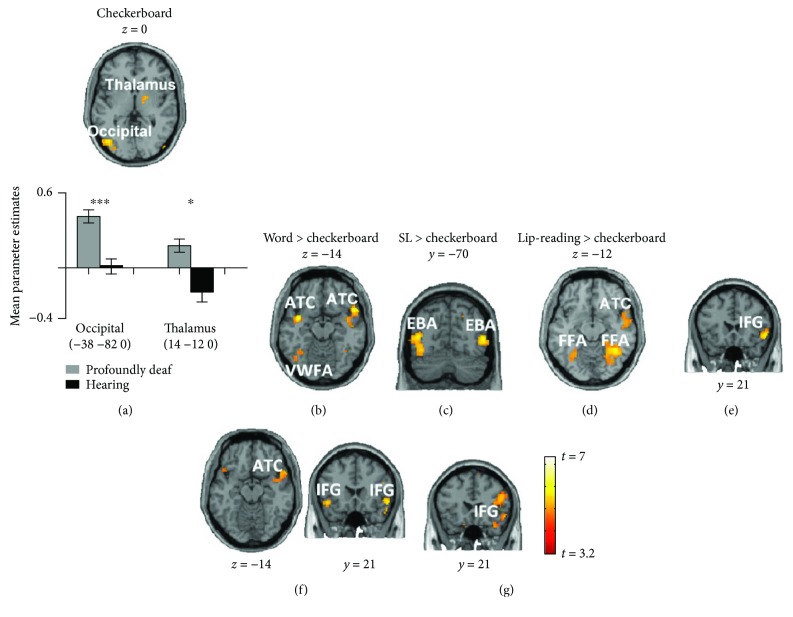

We next examined the neural sources of cross-modal plasticity in the auditory cortex. We placed a seed region in the reorganized right STC and examined the difference in whole-brain functional connectivity between the profoundly deaf and the hearing subjects (p < 0.001, FDR p < 0.05 corrected) under the checkerboard condition. We identified significantly greater connection strengths to the STC in the occipital cortex (peak at , t-test, p < 0.001) and right thalamus (peak at , t-test, p < 0.01) of deaf subjects in comparison with hearing subjects (Figure 4(a)).

Figure 4.

Differences in functional connectivity of the superior temporal cortex (STC) between the profoundly deaf and the hearing groups and between the checkerboard and the language conditions. Brain slices depicting the significant differences in functional connectivity between the profoundly deaf and the hearing groups (p < 0.001, FDR p < 0.05 corrected) with the seed region set in the right STC. For the checkerboard stimulus (a), significantly enhanced functional connectivity between profoundly deaf and hearing subjects was found in the left occipital cortex and right thalamus. In comparison with the checkerboard (whole brain, p < 0.001, FDR p < 0.05 corrected), for the word condition (b, e), greater connectivity was shown in the visual word form area, bilateral anterior temporal cortex, and right inferior frontal gyrus. For sign language (c, f), the bilateral extrastriate body area, right ATC, the bilateral FFA, and the bilateral IFG were identified as having strong functional connectivity with the right STC. For lip-reading stimuli (d, g), the bilateral FFA, right ATC and right IFG showed higher functional connectivity with the right STC. Asterisks denote a significant difference between the profoundly deaf group and the hearing group. Error bars indicate 1 standard error. ∗∗∗ p < 0.001 and ∗ p < 0.05.

To explore the difference in functional connectivity between the language stimuli and the checkerboard, we further compared the connectivity contrast (profoundly deaf versus hearing) of each language stimulus with the checkerboard contrast at the whole-brain level (p < 0.001, FDR p < 0.05 corrected) (Figure 4 and Table 4). For the word stimuli, compared with the checkerboard, we found enhanced connection strengths not only in the left occipital cortex (left hemisphere, peak at , t = 3.89) but also in the bilateral ATC (the left hemisphere: peak at , t = 5.67; the right hemisphere: peak at , t = 4.97) and right IFG (peak at , t = 8.73; Figures 4(b) and 4(e)). The connected area in the left occipital cortex for the word condition was located precisely in the classical visual word form area, which is specific to the processing of visual word information [43–45]. For the sign language stimuli, we identified significantly stronger connections in the bilateral middle temporal areas (the right hemisphere: peak at , t = 6.84; the left hemisphere: peak at , t = 5.74), the bilateral FFA (the right hemisphere: peak at , t = 5.70; the left hemisphere: peak at , t = 4.20), right ATC (peak at , t = 5.63), and bilateral IFG (the right hemisphere: peak at , t = 5.83; the left hemisphere: peak at , t = 5.33; Figures 4(c) and 4(f)). The activated bilateral visual areas were identified to be selective for visual processing of the human body (extrastriate body area, EBA) [46]. For the lip-reading condition, we found significantly greater connection strengths in the bilateral FFA (the right hemisphere: peak at , t = 7.38; the left hemisphere: peak at , t = 5.88, Figure 4(d)), right ATC (peak at , t = 6.13), and right IFG (peak at , t = 3.80; Figure 4(g)). The FFA, which is well known as an area involved in the processing of face information [47], was activated in both the sign language and the lip-reading conditions. In short, in comparison with the checkerboard stimulus, the STC activity induced by language stimuli received extra and common connections from the ATC (e.g., the temporal pole) and frontal (e.g., IFG) regions. Additionally, the sensory component was mainly from visual areas (including the VWFA, EBA, and FFA) that seemed highly selective to stimulus features.

Table 4.

Difference in functional connections with the right STC as a seed region.

| Contrast | Brain region | Number of voxels | Peak coordinates | T value | ||

|---|---|---|---|---|---|---|

| X | Y | Z | ||||

| Checkerboard: profoundly deaf versus hearing group | Occipital_Middle_Left | 399 | −38 | −82 | 0 | 5.23 |

| Occipital_Middle_Left | 318 | −24 | −94 | 8 | 5.21 | |

| Cerebellum_Left | 40 | −32 | −60 | −48 | 4.71 | |

| ParaHippocampal_Right | 15 | 30 | −16 | −28 | 4.38 | |

| Cingulum_Middle_Right | 58 | 10 | 26 | 32 | 4.15 | |

| Thalamus_Right | 35 | 14 | −12 | 0 | 3.89 | |

| Supplementary_Motor_Right | 11 | 12 | 18 | 60 | 3.73 | |

| Cerebellum_Left | 28 | −30 | −58 | −24 | 3.71 | |

|

| ||||||

| Word: (word: profoundly deaf versus hearing groups) versus (checkerboard: profoundly deaf versus hearing) | Frontal_Inferior_Orb_Right | 74 | 54 | 21 | 3 | 6.67 |

| Temporal_Pole_Superior_Right | 54 | 58 | 14 | −16 | 6.02 | |

| Temporal_Pole_Superior_Left | 49 | −40 | 2 | −16 | 4.95 | |

| Visual_word_form_area_Left | 47 | −42 | −58 | −14 | 4.71 | |

| Occipital_Middle_Right | 32 | 42 | −74 | 14 | 4.06 | |

| Cingulum_Middle_Right | 23 | 6 | 4 | 40 | 3.88 | |

|

| ||||||

| Sign language: (sign language: profoundly deaf versus hearing groups) versus (checkerboard: profoundly deaf versus hearing) | Occipital_Middle_Left | 253 | −44 | −70 | 8 | 5.98 |

| Occipital_Middle_Right | 124 | 52 | −68 | 6 | 6.84 | |

| Fusiform_Left | 67 | −32 | −60 | −16 | 4.20 | |

| Fusiform_Right | 51 | 42 | −52 | −16 | 5.70 | |

| Temporal_Pole_Superior_Right | 44 | 50 | 12 | −14 | 5.89 | |

| Temporal_Pole_Superior_Left | 19 | −44 | 14 | −16 | 4.13 | |

| Frontal_Inferior_Orb_Left | 37 | 54 | 21 | −6 | 4.31 | |

| Frontal_Inferior_Orb_Right | 30 | −48 | 21 | −10 | 5.83 | |

| Supplementary_Motor_Area | 39 | 6 | 10 | 64 | 4.39 | |

| Parietal_Superior_Left | 12 | −20 | −54 | 50 | 4.23 | |

|

| ||||||

| Lip-reading: (lip-reading: profoundly deaf versus hearing groups) versus (checkerboard: profoundly deaf versus hearing) | Fusiform_Left | 122 | −26 | −56 | −10 | 5.45 |

| Fusiform_Right | 146 | 38 | −58 | −12 | 7.85 | |

| Temporal_Pole_Superior_Right | 97 | 58 | −2 | −10 | 5.77 | |

| Frontal_Inferior_Tri_Right | 43 | 48 | 22 | 18 | 3.79 | |

| Frontal_Inferior_Orb_Right | 21 | 52 | 21 | 20 | 3.53 | |

| Precentral_Left | 19 | −44 | −6 | −48 | 4.25 | |

| Precentral_Right | 22 | 52 | 4 | 46 | 6.87 | |

| Supplementary_Motor_Area | 13 | −4 | 6 | 60 | 5.13 | |

Note: peak coordinates refer to stereotactic coordinates in MNI space; p < 0.001, FDR p < 0.05 corrected.

3.4. Dynamic Causal Modeling

Although we found that the visual cortical areas, ATC, and IFG showed functional connections with STC under the language condition, we still do not know the causal direction between these brain regions. Dynamic causal modeling (DCM) is a generic Bayesian framework for inferring interactions among hidden neural states from measurements of brain activity and has been used in early blind individuals [48, 49]. Thus, we used DCM and Bayesian model selection to explore how language components reach the STC in deaf subjects by comparing six plausible models (Figure 5(a)). Random effects Bayesian model selection showed that cross-modal activity observed in the STC of deaf subjects was best explained by the feedback connection from IFG/ATC (Figure 5(b), left, with feedback; exceedance probability of 0.97) and feed-forward connection from V1 (Figure 5(b) right, V1 to STC; exceedance probability of 0.43; and V1 to STC/ACT; exceedance probability of 0.30) (in model 1, Figure 5(c); exceedance probability of 0.44). The result strongly suggested that the feedback component from language circuit (ATC and IFG) and the feed-forward component from the sensory region were both involved in the induction of cross-modal plasticity in the STC under the language condition.

Figure 5.

Dynamic causal modeling. (a) The six dynamic causal models used for Bayesian model comparison. Each model receives (parameterized) input at primary visual cortex (V1) source under the language condition in deaf participants. (b) Family-wise Bayesian model selection was used to establish the best neural network architecture for the feedback and feed-forward effect to the STC. Families of models with feedback from IFG/ATC to STC and with feed-forward from V1 to STC/ATC best explained the data. (c) Random effects Bayesian model selection showed model 1 (marked with the red box in (a)) best fits the data for the language condition in deaf individuals.

3.5. Representational Similarity of Cross-Modal Activation in the STC

We finally wanted to explore whether cross-modal activities in the STC shared the same spatial activity pattern when in receipt of distinct contributions from occipital and temporal-frontal areas. We used a multivariate pattern analysis technique known as representational similarity analysis (RSA) [39] to examine how the spatial pattern of BOLD signals over voxels varied in response to different visual stimuli. Per subject, the representational dissimilarity matrixes (RDMs) comprised correlation distances (1 correlation coefficient) between the images from the blocks for each condition in both the profoundly deaf and the residual hearing groups, which yielded a 4 × 4 matrix. The four conditions were separated into two categories: nonlanguage (checkerboard) and language (words, sign language, and lip-reading). We then compared the correlation coefficient in the three pairs between the nonlanguage and the language conditions (category C-L) with the three pairs within the language conditions (category L-L) for each subject. Results showed that correlation coefficients between the checkerboard and any of the language-related stimuli in the bilateral STC were significantly lower than those between any two language-related stimuli in both the profoundly deaf (Figure 6(a), the left hemisphere, t-test, p < 0.0001; the right hemisphere, t-test, p < 0.005) and the residual hearing groups (Figure 6(a), the left hemisphere, p < 0.0001; the right hemisphere, p < 0.05). As a control comparison, no significant differences in RSA were found in the primary visual cortex in either the profoundly deaf (Figure 6(b), the left hemisphere, t-test, p > 0.65; the right hemisphere, t-test, p > 0.81) or the residual hearing individuals (Figure 6(b), the left hemisphere, t-test, p > 0.72; the right hemisphere, t-test, p > 0.87).

Figure 6.

Representational similarities in activation patterns in the superior temporal cortex (STC) and primary visual cortex. (a) Axial slice depicting the auditory region of interest (ROI; Brodmann areas (BAs) 41 and 42) in red. The bars indicate the similarity (correlation coefficient index) of the spatial activation patterns between visual stimuli within the ROI. The solid bars depict the averaged similarities between checkerboard and language-related stimuli (C-L), including sign language, words, and lip-reading, in both the profoundly deaf group (in orange) and the residual hearing group (in yellow). The grid bars depict the average correlation coefficients between language-related stimuli (L-L). The correlation coefficient between language-related stimuli in the bilateral auditory ROIs was significantly higher than that between checkerboard and language-related stimuli in both the profoundly deaf group (left hemisphere: p < 0.0001, right hemisphere: p < 0.001) and the residual hearing group (left hemisphere: p < 0.0001, right hemisphere: p < 0.0016). (b) Such differences in representational similarity were not observed in the primary visual cortex (V1; BA17). ∗∗ p < 0.01; ∗∗∗∗ p < 0.0001. Error bars represent 1 standard error.

4. Discussion

Relative to hearing subjects, both profoundly deaf and residual hearing subjects showed enhanced STC responses to checkerboard, word, and sign language stimuli, which confirmed the existence of cross-modal plasticity after auditory deprivation [2, 14, 35, 50, 51]. While Lambertz et al. [51] reported that cortical reorganization of the auditory cortex was only present in profoundly deaf subjects not in subjects with residual hearing ability, our results showed that such plasticity existed in both groups of hearing-impaired subjects. One possible interpretation could be that intensive behavioral and perceptual training caused neuroplasticity in the late-onset sensory deprivation [30]. Despite the fact that there are differences between pre- and postlingually deaf individuals, cross-modal activity is consistently found in postlingually deaf CI patients as well as in mild to moderately hearing impaired individuals [33, 52–54]. Hearing subjects also showed significant STC responses to lip-reading in the present study, which is compatible with previous observations indicating that silent speech reading activates lateral parts of the superior temporal plane in hearing adults [15, 20–23].

Although sensory deprivation triggers cortical reorganization, the origin of anatomical and functional changes observed in the STC of deaf individuals is not only sensory (feed-forward) but also cognitive (feedback), such as in the use of sign language and speech reading [30]. The purely visual stimulus (checkerboard) provoked activations in the right STC, which showed correlations only with the duration of deafness [52], and strong functional connectivity with the visual cortex and thalamus, implying the contribution of sensory components to the plasticity. However, the cognitive stimuli with language content induced activations in both the right and the left STC, which exhibited strong association only with the experience of sign language learning, and enhanced functional connections with not only visual cortical areas but also the ATC and IFG, suggesting a strong potential top-down modulation of plasticity induced by the linguistic components of the cognitive stimuli. The DCM analysis further confirmed the information flow triggered by the visual stimuli with language content, by showing a strong feedback effect from IFG/ATC and a feed-forward effect from V1 to STC.

In deaf humans, it was found that auditory areas preserved the task-specific activation pattern independent of input modality (visual or auditory), suggesting the task-specific reorganization during the cortical plasticity [55]. Cardin et al. [18] showed that auditory deprivation and language experience cause activations of different areas of the auditory cortex when two groups of deaf subjects with different language experience are watching sign language. In the auditory cortical areas of deaf animals, Lomber with his colleagues showed that the neural basis for enhanced visual functions was located to specific auditory cortical subregions. The improved localization of visual stimuli was eliminated by deactivating the posterior auditory cortex, while the enhanced sensitivity to visual motion was blocked by disabling the dorsal auditory cortex [25, 56, 57]. Land et al. [58] demonstrated that visually responsive and auditory-responsive neurons in the higher-order auditory cortex of deaf cats form two distinct populations that do not show bimodal interactions. However, we still know little of how other brain regions contribute to the task-specific activations in the auditory cortex. In the present study, although neural reorganization in deaf individuals permits both the sensory and language inputs to reach the STC, the RSA result suggested that the functions of the two components were segregated within the reorganized auditory area, which confirmed the functional segregation hypothesis. Furthermore, our functional connectivity analysis suggested that the stimulus-specific activation in STC was probably developed via different neural pathways. Specifically, the sensory component of stimuli was found to be highly stimulus-specific. During the word presentation, visual areas functionally connected with the STC were located exactly within the visual word form area (only in the left hemisphere, Figure 4(b)) which is a region demonstrated to be involved in the identification of words and letters from lower-level shape images prior to association with phonology or semantics [44, 45, 59]. During the sign language and lip-reading stimuli, the functionally connected visual areas were identified as being in the extrastriate body area and fusiform face area, which is known to be especially involved in facial recognition [47, 60] and human body representation [46]. In contrast, for the language component, the cross-modal plasticity shaped by sign language could also be generalized to the responses to other language stimuli (Figure 3(c) and Figure S1). Additionally, STC activities induced by the word, sign language, and lip-reading stimuli were functionally connected with a similar neural network consisting of the temporal pole areas and inferior frontal regions (part of Broca's area) (Figure 4), which was shown to be involved in semantic processing in the language system [61]. These results may suggest that the language components from different visual stimuli share a common language circuit for top-down visual-auditory reorganization.

The difference between checkerboard and language-related stimuli (Figure 6(a)) cannot be interpreted by other experimental accounts. For example, one may argue that the language stimuli have a higher visual richness than the purely visual stimuli, which may therefore have induced higher similarity within language stimuli. This seems unlikely, as such a difference in similarity was not shown in the primary visual cortex (Figure 6(b)).

Previous studies on prelingual deaf groups have proposed a link between poor speech outcomes and exposure to a visual language and indicate that the incapacity for processing auditory signals (poorer outcomes of cochlear implant) is due to usurping of the auditory cortex functionality by visual language [31, 32, 62–64]. However, to the contrary, some studies indicate that proficiency with speech reading is linked to better outcomes of cochlear implant [31, 33, 65–68]. Thus, together with other animal studies, our human imaging results on functional segregation suggest that, although exposure to sign language may indeed partially take over the auditory cortex, the auditory regions could still preserve the ability to process auditory signals following cochlear implants, with this being facilitated through the recruitment of new populations of neurons or different spatial activity patterns.

In conclusion, both language and sensory components contribute to the cross-modal plasticity of the STC in deaf people; these are associated with hearing loss duration and language experience, respectively. The feed-forward signal of sensory input is highly stimulus-specific, while the feedback signal from language input is more associated with a common neural network. Finally, even though both pathways activate auditory areas in deaf people, they seem functionally segregated in respect to cross-modal plasticity. In summary, this study provides important and unique evidence for understanding the neural circuits involved in cross-modal plasticity in deaf people and may guide clinicians in consideration of cochlear implants or hearing recovery.

Acknowledgments

The authors are grateful to all participants. They thank the Institute of Speech and Hearing Science, the Shanghai Deaf Youth Technical School, and the Special Education Department of Shanghai Applied Technology Institute. They thank Dr. Yixuan Ku for the initial discussion on this study. They also thank Haidan Lu, Chunyan Lin, Hang Zhao, and Yan Xiu for the recruitment of participants; Di Zhao for the help with data collection; and Dr. Qing Cai, Lei Li, and Shuai Wang for the suggestions on data analysis. The research leading to these results received funding from the National Key Fundamental Research (973) Program of China (Grant 2013CB329501), the National Natural Science Foundation of China (31571084), and Shanghai Pujiang Plan (15PJ1402000) to Liping Wang.

Conflicts of Interest

The authors have no financial conflict of interests.

Authors' Contributions

Mochun Que and Xinjian Jiang contributed equally to this work.

Supplementary Materials

Supplemental information includes one figure, Supplemental visual materials, and Supplemental questionnaire (translated to English) after scanning.

References

- 1.Kujala T., Alho K., Naatanen R. Cross-modal reorganization of human cortical functions. 2000;23(3):115–120. doi: 10.1016/S0166-2236(99)01504-0. [DOI] [PubMed] [Google Scholar]

- 2.Finney E. M., Fine I., Dobkins K. R. Visual stimuli activate auditory cortex in the deaf. 2001;4(12):1171–1173. doi: 10.1038/nn763. [DOI] [PubMed] [Google Scholar]

- 3.Armstrong B. A., Neville H. J., Hillyard S. A., Mitchell T. V. Auditory deprivation affects processing of motion, but not color. 2002;14(3):422–434. doi: 10.1016/S0926-6410(02)00211-2. [DOI] [PubMed] [Google Scholar]

- 4.Fine I., Finney E. M., Boynton G. M., Dobkins K. R. Comparing the effects of auditory deprivation and sign language within the auditory and visual cortex. 2005;17(10):1621–1637. doi: 10.1162/089892905774597173. [DOI] [PubMed] [Google Scholar]

- 5.Sadato N., Okada T., Honda M., et al. Cross-modal integration and plastic changes revealed by lip movement, random-dot motion and sign languages in the hearing and deaf. 2005;15(8):1113–1122. doi: 10.1093/cercor/bhh210. [DOI] [PubMed] [Google Scholar]

- 6.Vachon P., Voss P., Lassonde M., et al. Reorganization of the auditory, visual and multimodal areas in early deaf individuals. 2013;245:50–60. doi: 10.1016/j.neuroscience.2013.04.004. [DOI] [PubMed] [Google Scholar]

- 7.Bottari D., Heimler B., Caclin A., Dalmolin A., Giard M. H., Pavani F. Visual change detection recruits auditory cortices in early deafness. 2014;94:172–184. doi: 10.1016/j.neuroimage.2014.02.031. [DOI] [PubMed] [Google Scholar]

- 8.Shiell M. M., Champoux F., Zatorre R. J. Reorganization of auditory cortex in early-deaf people: functional connectivity and relationship to hearing aid use. 2015;27(1):150–163. doi: 10.1162/jocn_a_00683. [DOI] [PubMed] [Google Scholar]

- 9.Allison T., Puce A., McCarthy G. Social perception from visual cues: role of the STS region. 2000;4(7):267–278. doi: 10.1016/S1364-6613(00)01501-1. [DOI] [PubMed] [Google Scholar]

- 10.MacSweeney M., Campbell R., Woll B., et al. Dissociating linguistic and nonlinguistic gestural communication in the brain. 2004;22(4):1605–1618. doi: 10.1016/j.neuroimage.2004.03.015. [DOI] [PubMed] [Google Scholar]

- 11.Corina D., Chiu Y. S., Knapp H., Greenwald R., San Jose-Robertson L., Braun A. Neural correlates of human action observation in hearing and deaf subjects. 2007;1152:111–129. doi: 10.1016/j.brainres.2007.03.054. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Neville H. J., Bavelier D. Neural organization and plasticity of language. 1998;8(2):254–258. doi: 10.1016/S0959-4388(98)80148-7. [DOI] [PubMed] [Google Scholar]

- 13.Petitto L. A., Zatorre R. J., Gauna K., Nikelski E. J., Dostie D., Evans A. C. Speech-like cerebral activity in profoundly deaf people processing signed languages: implications for the neural basis of human language. 2000;97(25):13961–13966. doi: 10.1073/pnas.97.25.13961. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.MacSweeney M., Woll B., Campbell R., et al. Neural systems underlying British sign language and audio-visual English processing in native users. 2002;125(7):1583–1593. doi: 10.1093/brain/awf153. [DOI] [PubMed] [Google Scholar]

- 15.Capek C. M., MacSweeney M., Woll B., et al. Cortical circuits for silent speechreading in deaf and hearing people. 2008;46(5):1233–1241. doi: 10.1016/j.neuropsychologia.2007.11.026. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.MacSweeney M., Capek C. M., Campbell R., Woll B. The signing brain: the neurobiology of sign language. 2008;12(11):432–440. doi: 10.1016/j.tics.2008.07.010. [DOI] [PubMed] [Google Scholar]

- 17.Emmorey K., Xu J., Braun A. Neural responses to meaningless pseudosigns: evidence for sign-based phonetic processing in superior temporal cortex. 2011;117(1):34–38. doi: 10.1016/j.bandl.2010.10.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Cardin V., Orfanidou E., Rönnberg J., Capek C. M., Rudner M., Woll B. Dissociating cognitive and sensory neural plasticity in human superior temporal cortex. 2013;4:p. 1473. doi: 10.1038/ncomms2463. [DOI] [PubMed] [Google Scholar]

- 19.Moreno A., Limousin F., Dehaene S., Pallier C. Brain correlates of constituent structure in sign language comprehension. 2017;167:151–161. doi: 10.1016/j.neuroimage.2017.11.040. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Calvert G. A., Bullmore E. T., Brammer M. J., et al. Activation of auditory cortex during silent lipreading. 1997;276(5312):593–596. doi: 10.1126/science.276.5312.593. [DOI] [PubMed] [Google Scholar]

- 21.Calvert G. A., Campbell R. Reading speech from still and moving faces: the neural substrates of visible speech. 2003;15(1):57–70. doi: 10.1162/089892903321107828. [DOI] [PubMed] [Google Scholar]

- 22.MacSweeney M., Calvert G. A., Campbell R., et al. Speechreading circuits in people born deaf. 2002;40(7):801–807. doi: 10.1016/S0028-3932(01)00180-4. [DOI] [PubMed] [Google Scholar]

- 23.Reale R. A., Calvert G. A., Thesen T., et al. Auditory-visual processing represented in the human superior temporal gyrus. 2007;145(1):162–184. doi: 10.1016/j.neuroscience.2006.11.036. [DOI] [PubMed] [Google Scholar]

- 24.Kral A., Hartmann R., Tillein J., Heid S., Klinke R. Hearing after congenital deafness: central auditory plasticity and sensory deprivation. 2002;12(8):797–807. doi: 10.1093/cercor/12.8.797. [DOI] [PubMed] [Google Scholar]

- 25.Lomber S. G., Meredith M. A., Kral A. Cross-modal plasticity in specific auditory cortices underlies visual compensations in the deaf. 2010;13(11):1421–1427. doi: 10.1038/nn.2653. [DOI] [PubMed] [Google Scholar]

- 26.Meredith M. A., Clemo H. R., Corley S. B., Chabot N., Lomber S. G. Cortical and thalamic connectivity of the auditory anterior ectosylvian cortex of early-deaf cats: implications for neural mechanisms of crossmodal plasticity. 2016;333:25–36. doi: 10.1016/j.heares.2015.12.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Chabot N., Butler B. E., Lomber S. G. Differential modification of cortical and thalamic projections to cat primary auditory cortex following early- and late-onset deafness. 2015;523(15):2297–2320. doi: 10.1002/cne.23790. [DOI] [PubMed] [Google Scholar]

- 28.Butler B. E., Chabot N., Lomber S. G. Quantifying and comparing the pattern of thalamic and cortical projections to the posterior auditory field in hearing and deaf cats. 2016;524(15):3042–3063. doi: 10.1002/cne.24005. [DOI] [PubMed] [Google Scholar]

- 29.Lyness C. R., Woll B., Campbell R., Cardin V. How does visual language affect crossmodal plasticity and cochlear implant success? 2013;37(10):2621–2630. doi: 10.1016/j.neubiorev.2013.08.011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Merabet L. B., Pascual-Leone A. Neural reorganization following sensory loss: the opportunity of change. 2010;11(1):44–52. doi: 10.1038/nrn2758. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Lee H. J., Giraud A. L., Kang E., et al. Cortical activity at rest predicts cochlear implantation outcome. 2007;17(4):909–917. doi: 10.1093/cercor/bhl001. [DOI] [PubMed] [Google Scholar]

- 32.Sharma A., Campbell J., Cardon G. Developmental and cross-modal plasticity in deafness: evidence from the P1 and N1 event related potentials in cochlear implanted children. 2015;95(2):135–144. doi: 10.1016/j.ijpsycho.2014.04.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Stropahl M., Plotz K., Schönfeld R., et al. Cross-modal reorganization in cochlear implant users: auditory cortex contributes to visual face processing. 2015;121:159–170. doi: 10.1016/j.neuroimage.2015.07.062. [DOI] [PubMed] [Google Scholar]

- 34.Lee J. S., Lee D. S., Oh S. H., et al. PET evidence of neuroplasticity in adult auditory cortex of postlingual deafness. 2003;44(9):1435–1439. [PubMed] [Google Scholar]

- 35.Finney E. M., Clementz B. A., Hickok G., Dobkins K. R. Visual stimuli activate auditory cortex in deaf subjects: evidence from MEG. 2003;14(11):1425–1427. doi: 10.1097/00001756-200308060-00004. [DOI] [PubMed] [Google Scholar]

- 36.Lee H. J., Truy E., Mamou G., Sappey-Marinier D., Giraud A. L. Visual speech circuits in profound acquired deafness: a possible role for latent multimodal connectivity. 2007;130(11):2929–2941. doi: 10.1093/brain/awm230. [DOI] [PubMed] [Google Scholar]

- 37.Leonard M. K., Ferjan Ramirez N., Torres C., et al. Signed words in the congenitally deaf evoke typical late lexicosemantic responses with no early visual responses in left superior temporal cortex. 2012;32(28):9700–9705. doi: 10.1523/JNEUROSCI.1002-12.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Friston K. J., Dolan R. J. Computational and dynamic models in neuroimaging. 2010;52(3):752–765. doi: 10.1016/j.neuroimage.2009.12.068. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Kriegeskorte N., Mur M., Bandettini P. Representational similarity analysis - connecting the branches of systems neuroscience. 2008;2:p. 4. doi: 10.3389/neuro.06.004.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.World Health Organization. Geneva: World Health Organization; 1980. [Google Scholar]

- 41.Whitfield-Gabrieli S., Nieto-Castanon A. Conn: a functional connectivity toolbox for correlated and anticorrelated brain networks. 2012;2(3):125–141. doi: 10.1089/brain.2012.0073. [DOI] [PubMed] [Google Scholar]

- 42.Lancaster J. L., Woldorff M. G., Parsons L. M., et al. Automated Talairach atlas labels for functional brain mapping. 2000;10(3):120–131. doi: 10.1002/1097-0193(200007)10:3<120::AID-HBM30>3.0.CO;2-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Brem S., Bach S., Kucian K., et al. Brain sensitivity to print emerges when children learn letter–speech sound correspondences. 2010;107(17):7939–7944. doi: 10.1073/pnas.0904402107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Cohen L., Dehaene S. Specialization within the ventral stream: the case for the visual word form area. 2004;22(1):466–476. doi: 10.1016/j.neuroimage.2003.12.049. [DOI] [PubMed] [Google Scholar]

- 45.Dehaene S., Cohen L. The unique role of the visual word form area in reading. 2011;15(6):254–262. doi: 10.1016/j.tics.2011.04.003. [DOI] [PubMed] [Google Scholar]

- 46.Downing P. E., Jiang Y., Shuman M., Kanwisher N. A cortical area selective for visual processing of the human body. 2001;293(5539):2470–2473. doi: 10.1126/science.1063414. [DOI] [PubMed] [Google Scholar]

- 47.Kanwisher N., McDermott J., Chun M. M. The fusiform face area: a module in human extrastriate cortex specialized for face perception. 1997;17(11):4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Collignon O., Dormal G., de Heering A., Lepore F., Lewis T. L., Maurer D. Long-lasting crossmodal cortical reorganization triggered by brief postnatal visual deprivation. 2015;25(18):2379–2383. doi: 10.1016/j.cub.2015.07.036. [DOI] [PubMed] [Google Scholar]

- 49.Klinge C., Eippert F., Roder B., Buchel C. Corticocortical connections mediate primary visual cortex responses to auditory stimulation in the blind. 2010;30(38):12798–12805. doi: 10.1523/JNEUROSCI.2384-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Nishimura H., Hashikawa K., Doi K., et al. Sign language ‘heard’ in the auditory cortex. 1999;397(6715):p. 116. doi: 10.1038/16376. [DOI] [PubMed] [Google Scholar]

- 51.Lambertz N., Gizewski E. R., de Greiff A., Forsting M. Cross-modal plasticity in deaf subjects dependent on the extent of hearing loss. 2005;25(3):884–890. doi: 10.1016/j.cogbrainres.2005.09.010. [DOI] [PubMed] [Google Scholar]

- 52.Sandmann P., Dillier N., Eichele T., et al. Visual activation of auditory cortex reflects maladaptive plasticity in cochlear implant users. 2012;135(2):555–568. doi: 10.1093/brain/awr329. [DOI] [PubMed] [Google Scholar]

- 53.Stropahl M., Debener S. Auditory cross-modal reorganization in cochlear implant users indicates audio-visual integration. 2017;16:514–523. doi: 10.1016/j.nicl.2017.09.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Campbell J., Sharma A. Cross-modal re-organization in adults with early stage hearing loss. 2014;9(2, article e90594) doi: 10.1371/journal.pone.0090594. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Bola Ł., Zimmermann M., Mostowski P., et al. Task-specific reorganization of the auditory cortex in deaf humans. 2017;114(4):E600–E609. doi: 10.1073/pnas.1609000114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Lomber S. G. What is the function of auditory cortex when it develops in the absence of acoustic input? 2017;42:49–61. doi: 10.1016/j.cogdev.2017.02.007. [DOI] [Google Scholar]

- 57.Meredith M. A., Clemo H. R., Lomber S. G. Is territorial expansion a mechanism for crossmodal plasticity? 2017;45(9):1165–1176. doi: 10.1111/ejn.13564. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Land R., Baumhoff P., Tillein J., Lomber S. G., Hubka P., Kral A. Cross-modal plasticity in higher-order auditory cortex of congenitally deaf cats does not limit auditory responsiveness to cochlear implants. 2016;36(23):6175–6185. doi: 10.1523/JNEUROSCI.0046-16.2016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Liu C., Zhang W. T., Tang Y. Y., et al. The visual word form area: evidence from an fMRI study of implicit processing of Chinese characters. 2008;40(3):1350–1361. doi: 10.1016/j.neuroimage.2007.10.014. [DOI] [PubMed] [Google Scholar]

- 60.Kanwisher N., Yovel G. The fusiform face area: a cortical region specialized for the perception of faces. 2006;361(1476):2109–2128. doi: 10.1098/rstb.2006.1934. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Bookheimer S. Functional MRI of language: new approaches to understanding the cortical organization of semantic processing. 2002;25(1):151–188. doi: 10.1146/annurev.neuro.25.112701.142946. [DOI] [PubMed] [Google Scholar]

- 62.Hirano S., Naito Y., Kojima H., et al. Functional differentiation of the auditory association area in prelingually deaf subjects. 2000;27(4):303–310. doi: 10.1016/S0385-8146(00)00072-9. [DOI] [PubMed] [Google Scholar]

- 63.Lee D. S., Lee J. S., Oh S. H., et al. Cross-modal plasticity and cochlear implants. 2001;409(6817):149–150. doi: 10.1038/35051653. [DOI] [PubMed] [Google Scholar]

- 64.Doucet M. E., Bergeron F., Lassonde M., Ferron P., Lepore F. Cross-modal reorganization and speech perception in cochlear implant users. 2006;129(12):3376–3383. doi: 10.1093/brain/awl264. [DOI] [PubMed] [Google Scholar]

- 65.Lee H. J., Kang E., Oh S. H., et al. Preoperative differences of cerebral metabolism relate to the outcome of cochlear implants in congenitally deaf children. 2005;203(1-2):2–9. doi: 10.1016/j.heares.2004.11.005. [DOI] [PubMed] [Google Scholar]

- 66.Bergeson T. R., Pisoni D. B., Davis R. A. O. Development of audiovisual comprehension skills in prelingually deaf children with cochlear implants. 2005;26(2):149–164. doi: 10.1097/00003446-200504000-00004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Hassanzadeh S. Outcomes of cochlear implantation in deaf children of deaf parents: comparative study. 2012;126(10):989–994. doi: 10.1017/S0022215112001909. [DOI] [PubMed] [Google Scholar]

- 68.Anderson C. A., Wiggins I. M., Kitterick P. T., Hartley D. E. H. Adaptive benefit of cross-modal plasticity following cochlear implantation in deaf adults. 2017;114(38):10256–10261. doi: 10.1073/pnas.1704785114. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Supplemental information includes one figure, Supplemental visual materials, and Supplemental questionnaire (translated to English) after scanning.