Abstract

Objective The purpose of this study was to further explore the effect of EHRs on emergency department (ED) attending and resident physicians' perceived workload, satisfaction, and productivity through the completion of six EHR patient scenarios combined with workload, productivity, and satisfaction surveys.

Methods To examine EHR usability, we used a live observational design combined with postobservation surveys conducted over 3 days, observing emergency physicians' interactions with the EHR during a 1-hour period. Physicians were asked to complete six patient scenarios in the EHR, and participants then filled out two surveys to assess perceived workload and satisfaction with the EHR interface.

Results Fourteen physicians participated, equally distributed by gender (50% female) and experience (43% residents, 57% attendings). Frustration levels associated with the EHR were significantly higher for attending physicians than for residents. Factors associated with high EHR frustration included (1) remembering menu and button names and command use; (2) performing tasks that are not straightforward; (3) system speed; and (4) system reliability. Attending and resident physicians differed significantly in the time needed to complete half of the cases and in their overall reaction to the EHR.

Conclusion ED physicians already have the highest levels of burnout and the fourth-lowest level of satisfaction among physicians; hence, particular attention is needed to study the impact of the EHR on ED physicians. This study investigated key EHR usability barriers in the ED, particularly by assessing frustration levels among physicians based on experience and identifying factors affecting those levels of frustration. We highlight the most favorable and most frustrating EHR functionalities for both groups of physicians.

Keywords: electronic health records and systems, interfaces and usability, physician, emergency and disaster care, satisfaction

Background and Significance

The increased adoption of electronic health records (EHRs) has prompted many investigations of their implementation costs and accrued benefits. Hospitals adopting an EHR have been reported to be more productive, to have improved diagnostic accuracy, and to have increased quality of care; 1 2 3 4 5 6 one study conducted in a single region suggested that an interoperable EHR system could reduce costs in an emergency department (ED) by $1.9 million. 7

As EHRs become standard in hospitals, usability (the ease with which a user can accurately and efficiently accomplish a task) has gained increased attention. 8 9 EHR usability has many deficiencies; for example, poor interface design (e.g., when clinicians' needs are not taken into account or the information display does not match user workflow) can lead to human error. 10 11 12 13 Other usability challenges include limitations on a user's ability to navigate an EHR, enter information correctly, or extract the information needed to complete a task. For EHRs to be effective in assisting with clinical reasoning and decision-making, they should be designed and developed with consideration of physicians, their tasks, and their environment. 14

Physicians may approve of EHRs in concept and appreciate the ability to provide care remotely at a variety of locations; however, in an assessment of physicians' opinions of EHR technologies in practice, the American Medical Association (AMA) found that EHRs significantly eroded professional satisfaction: 42% of physicians thought their EHR systems did not improve efficiency, and 72% thought EHR systems did not improve workload. 15 In an Association of American Physicians and Surgeons (AAPS) survey, more than half of physicians felt burned out from using EHRs. 16 Emergency medicine physicians have been found to spend almost half of their shift on data entry, with 4,000 EHR clicks per day. 17 In a survey of 1,793 physicians, Jamoom et al found that more than 75% reported that EHRs increase the time needed to plan, review, order, and document care. 18 Physician frustration is associated with lower patient satisfaction and negative clinical outcomes. 19 20 21

EHR dissatisfaction among physicians may be damaging to medicine. 15 22 Providing care in the era of EHRs leads to high levels of work-related stress and burnout among physicians; 23 ED physicians specifically already have the highest levels of burnout and the fourth-lowest level of satisfaction among physicians. Particular attention, then, is needed to examine the impact of using an EHR on ED physicians. 24 Increasing physician age has been negatively correlated with EHR adoption levels; 25 however, only modest, if any, attempts have been made to understand whether reported usability dissatisfaction will decrease with the changing of the guard, that is, as younger physicians with lifelong experience using information technologies develop and mature in the medical profession.

Objective

The purpose of this study was to explore the effect of EHRs on ED attending and resident physicians' perceived workload, satisfaction, and productivity through the completion of six EHR patient scenarios combined with workload, productivity, and satisfaction surveys. We hypothesized that attending physicians, who are traditionally older, more experienced, and further removed from (or lacking) medical training with robust EHR integration, would be more frustrated with the EHR than resident physicians, whom we expected to have more training with and experience using EHRs and, thus, to be more comfortable with electronic platforms.

Methods

Study Design

To examine EHR usability, we used a live observational design combined with immediate postobservation surveys conducted over 3 days, observing emergency physicians' interactions with the EHR during a 1-hour period. We created six EHR patient scenarios in the training environment of a commercial EHR—Epic version 2014—to mimic standard ED cases.

Participants and Setting

We conducted the study at a large, tertiary academic medical center. We recruited emergency medicine attending physicians (“attendings”; n = 8) and resident physicians (“residents”; n = 6) in their third and fourth years of training to participate in this study, which took place in the Emergency Medicine Department office space. Participants sat in a private office equipped with a workstation and interacted with the training environment of the Epic 2014 system used at that medical center. At the time of the study, all participants used Epic 2014 to deliver care, with varying degrees of exposure to the EHR. We obtained Institutional Review Board approval. All participants were given an information sheet describing the study procedure and objective, time commitment, and compensation, and had an opportunity to ask questions. We obtained verbal consent for participation in the study and for video recording.

Procedure

Our research team comprised two investigators with PhDs in health informatics and health services, one research coordinator for usability services, a project manager on human factors, and an ED physician. We designed six patient cases that were incorporated into the training environment of the Epic 2014 system used by the hospital. An EHR expert consultant from the hospital team—an Epic builder with a nursing background—created a patient record in the EHR for each of the six cases, entering information about each patient (e.g., name, date of birth (DOB), height, weight, chief complaint, triage note, vital signs, history of present illness, pertinent exam, past medical history, medications, allergies, social history). For each patient record, the team designed a case scenario for participants to follow and execute in the EHR. The study sessions were conducted separately for each participant in a clinical simulation setting.

We asked participants to complete the six comprehensive ED scenarios that included tasks to ensure that participants were exposed to various aspects of the EHR. Every participant had an hour to complete all six scenarios sequentially and was expected to approach each scenario with similar thoroughness, which was evaluated by successful task completion. We conducted the study in a simulated setting to minimize bias; all participants were presented with the same scenarios in the same environment on days during which they did not have scheduled work. First, participants identified the fictitious patients' cases in the EHR. Next, a research assistant read the scenarios, which included a set of initial actions and evaluations, follow-up actions, and disposition, which the participants were to complete in the EHR. Participants could make handwritten notes about the scenario, actions, and disposition. During the encounter, participants could ask the research assistant to repeat the scenario or task as needed.

We measured how managing six different EHR scenarios affected user experience and satisfaction ( Table 1 ). We designed the patient cases to include common EHR challenges such as cognitive overload, patient safety and medication errors, and information management and representation; furthermore, we designed the patient cases to reflect standard EHR tasks done by ED physicians ( Table 1 ).

Table 1. Study scenarios, EHR function, and built-in usability issues.

| Case | EHR functions and built-in usability issues |
|---|---|
| 1. Pediatric forearm fracture | 1. Method of calculating dosing; 2. How physician refers to patient weight; 3. Process of ordering morphine; 4. Process of ordering facility transfer |
| 2. Back pain | 1. EHR clinical support; 2. Process of ordering MRI; 3. Discharge process; 4. Time-sensitive protocol but not easily ordered |
| 3. Chest pain | 1. Situational awareness (How will physician relay need to monitor patient blood pressure?); 2. How does physician view patient's blood pressure?; 3. Process of ordering test to be completed at future time; 4. Process of admitting patient for telemetry |
| 4. Abdominal pain | 1. Process of ordering specific CT scan; 2. Process of reviewing CT scan; 3. Process of discharge; 4. Ordering over 4–6 h |
| 5. Asthma | 1. Process of delivering nebulizer treatment; 2. Process of ordering medication taper; 3. Is SureScripts system tied in? |
| 6. Sepsis | 1. Process of renal dosing for appropriate antibiotics; 2. Process of ordering weight-based fluids; how does system calculate (if at all)?; 3. Process of ordering laboratories to be completed at future time; 4. Does EHR provide guidance on appropriate rate of medication? |

Abbreviations: CT, computed tomography; EHR, electronic health record; MRI, magnetic resonance imaging.

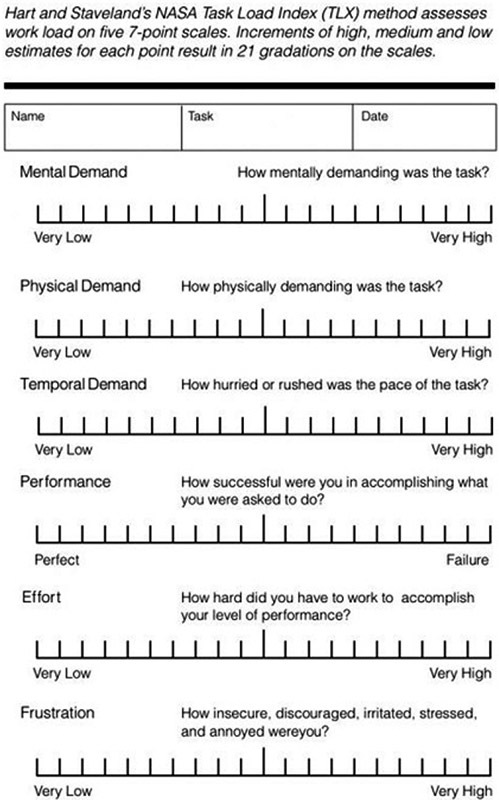

Following completion of the six scenarios, we asked participants to complete two survey questionnaires: the National Aeronautics and Space Administration Task Load Index (NASA-TLX) (Appendix Fig. A1) and the Questionnaire for User Interaction Satisfaction (QUIS).

Appendix Fig. A1.

NASA-Task Load Index (TLX) used to assess physicians' EHR workload.

Measurement

We recorded usability data, including time to complete each task, using the usability software Morae Recorder (TechSmith, Okemos, Michigan, United States) installed on the study workstation. The user experience surveys, TLX and QUIS, were administered immediately following the EHR testing sessions to evaluate the user's perception of the EHR experience and the EHR interface design.

The TLX is a subjective workload assessment tool for human–computer interface designs that measures users' perceived workload in six dimensions: mental demand, physical demand, temporal demand, performance, effort, and frustration. 26 27 Each dimension is rated on a 20-step bipolar scale, yielding scores from 0 to 100, and the scores for all dimensions are then combined into an overall workload score (0–100). The QUIS assesses users' subjective satisfaction with specific aspects of the human–computer interface. 28 According to the Agency for Healthcare Research and Quality (AHRQ), the QUIS is valid even with a small sample size and provides useful feedback on user opinions and attitudes about the system, 29 specifically about the usability and user acceptance of a human–computer interface. The tool contains a demographic questionnaire; a measure of overall system satisfaction along five subscales (overall reaction, screen factors, terminology and system feedback, learning factors, and system capabilities); and open-ended questions about the three most positive and three most negative aspects of the system. Each subscale measures the users' overall satisfaction with that facet of the interface, as well as with the factors that make up that facet, on a 9-point scale. A detailed description of the QUIS tool and evaluation items is shown in Table 2.
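As an illustration of the TLX scoring described above, the following Python sketch assumes the unweighted ("raw TLX") variant, in which each 20-step mark (0–20) is multiplied by 5 to give a 0–100 dimension score and the overall workload is the unweighted mean of the six dimensions. The text does not state whether the weighted (pairwise-comparison) variant was used; the function name, dimension keys, and example ratings are hypothetical.

```python
# Minimal sketch of raw (unweighted) NASA-TLX scoring.
# Assumption: each dimension is marked on a 0-20 step scale; weighted TLX is not modeled.
DIMENSIONS = (
    "mental_demand",
    "physical_demand",
    "temporal_demand",
    "performance",
    "effort",
    "frustration",
)


def raw_tlx(marks: dict) -> float:
    """Return the overall workload score (0-100) from six 0-20 step marks."""
    missing = set(DIMENSIONS) - set(marks)
    if missing:
        raise ValueError(f"missing dimensions: {sorted(missing)}")
    scores = []
    for dim in DIMENSIONS:
        step = marks[dim]
        if not 0 <= step <= 20:
            raise ValueError(f"{dim}: step {step} is outside 0-20")
        scores.append(step * 5)  # each of the 20 steps is worth 5 points
    return sum(scores) / len(scores)  # unweighted mean of the six dimensions


# Hypothetical ratings for one participant (not study data).
example = {
    "mental_demand": 10,
    "physical_demand": 2,
    "temporal_demand": 6,
    "performance": 5,
    "effort": 7,
    "frustration": 8,
}
print(round(raw_tlx(example), 1))  # 31.7
```

Dividing the result by 5 converts it back to the 0–20 step scale, which is the scale on which TLX scores are reported in the Results.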

Table 2. QUIS tool subscales and the corresponding items to be evaluated.

| QUIS subscale | Description | Evaluation items |
|---|---|---|
| Overall reaction to the EHR | Users assess the overall user experience with the EHR | • Navigation • Satisfaction • Power • Stimulation |
| Screen | Users rate the screen/interface design of the EHR | • The ability to read characters on the screen • Information overload • Organization of information • Sequence of screens |
| Terminology and system information | Users rate the consistency of terminology, frequency and clarity of hard stops, and system feedback on tasks | • Use of terms throughout the system • Terminology related to task • Position of message on screen • Prompts for input • Computer informs about its progress • Error messages |
| Learning | Users evaluate their ability to use the system, the effort and time to learn the system, knowledge on how to perform tasks, and the availability of support | • Learning to operate the system • Exploring new features by trial and error • Remembering names and use of commands • Performing tasks is straightforward • Help messages on the screen • Supplemental reference materials |
| System capability | Users rate the performance and usability of the EHR | • System speed • System reliability/System down • Ability to correct mistakes • System designed for all levels of users |

Abbreviations: EHR, electronic health record; QUIS, Questionnaire for User Interaction Satisfaction.

Statistical Analysis

Using SAS software, version 9.4 (SAS Institute, Cary, North Carolina, United States), we analyzed data using descriptive statistics (means and standard deviations for continuous variables; frequencies and percentages for categorical variables). Bivariate associations between pairs of continuous variables were computed via Pearson's correlation coefficients. Means were compared between groups via two-sample Satterthwaite t-tests, separately by gender, role (attending physician vs. resident physician), and hours working in the ED (low vs. high). Although hours working in the ED were captured as a continuous variable, we dichotomized the variable, on the suggestion of an ED physician expert, as “low” for residents who worked 50 or fewer hours per week or attendings who worked 30 or fewer hours, and as “high” for residents who worked more than 50 hours or attendings who worked more than 30 hours. We used a two-sided significance level of 0.05 and did not adjust p-values for multiple comparisons.
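The group comparisons and the hours dichotomization described above can be sketched in Python as follows. This is an illustrative sketch, not the SAS code used in the study: the function names are hypothetical, and it computes only the Satterthwaite (Welch) t statistic and its approximate degrees of freedom. A p-value would then come from the t distribution; for example, `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the full Welch test.

```python
import math
from statistics import mean, variance

# Role-specific cutoffs (hours per week) taken from the text above.
HOURS_CUTOFF = {"resident": 50, "attending": 30}


def classify_hours(role: str, hours_per_week: float) -> str:
    """Dichotomize weekly ED hours as 'low' (<= cutoff) or 'high' (> cutoff)."""
    return "low" if hours_per_week <= HOURS_CUTOFF[role] else "high"


def satterthwaite_t(a: list, b: list) -> tuple:
    """Two-sample t statistic with Satterthwaite (Welch) degrees of freedom.

    Unlike the pooled t-test, this does not assume equal group variances.
    """
    se2_a = variance(a) / len(a)  # squared standard error of mean(a)
    se2_b = variance(b) / len(b)  # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    return t, df


# Hypothetical data, not study results.
print(classify_hours("resident", 54.2), classify_hours("attending", 27.5))  # high low
t, df = satterthwaite_t([1, 2, 3, 4], [2, 4, 6, 8, 10])
print(round(t, 2), round(df, 1))  # -2.25 5.5
```

The Satterthwaite approximation is the natural choice here because the two groups are small and unequal in size (6 residents vs. 8 attendings), so a pooled-variance test would rest on an assumption the data cannot easily check.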

Results

Fourteen physicians participated, equally distributed by gender (50% female) and experience (43% residents, 57% attendings) (Table 3). No resident had postresidency clinical practice experience, whereas all attendings had 3 or more years of clinical experience since residency; however, 75% of each group had 3 to 5 years or > 5 years of experience using Epic prior to the study. Residents worked in the ED for an average of 54.2 ± 3.7 hours per week, and attendings for 27.5 ± 7.2 hours per week. Across all physicians, the mean TLX score was 6.3 ± 2.3 out of 20. Participants took an average of 21.7 ± 7.0 minutes to finish all six scenarios, with completion times ranging from 12.6 to 36.3 minutes.

Table 3. Demographics.

| Characteristic | Category | Resident, n (%) | Attending, n (%) | Total |
|---|---|---|---|---|
| Gender | Male | 5 (83.3) | 2 (25) | 7 |
| | Female | 1 (16.7) | 6 (75) | 7 |
| Age (y) | 18–34 | 6 (100) | 0 | 6 |
| | 35–50 | 0 | 7 (87.5) | 7 |
| | 51–69 | 0 | 1 (12.5) | 1 |
| Ethnicity | Asian | 0 | 1 (12.5) | 1 |
| | White | 6 (100) | 7 (87.5) | 13 |
| Years of clinical practice (postresidency) | 0 | 6 (100) | 0 | 6 |
| | 1–2 y | 0 | 0 | 0 |
| | 3–5 y | 0 | 2 (25) | 2 |
| | More than 5 y | 0 | 6 (75) | 6 |
| Years of experience in Epic prior to the study | 1–2 y | 1 (25) | 2 (25) | 3 |
| | 3–5 y | 5 (75) | 5 (62.5) | 10 |
| | > 5 y | 0 | 1 (12.5) | 1 |
| Average number of hours worked in Epic per week | < 30 h | 0 | 6 (75) | 6 |
| | 30–50 h | 2 (50) | 2 (25) | 4 |
| | > 50 h | 4 (50) | 0 | 4 |
| Total | | 6 | 8 | 14 |

Perceived Workload in the EHR

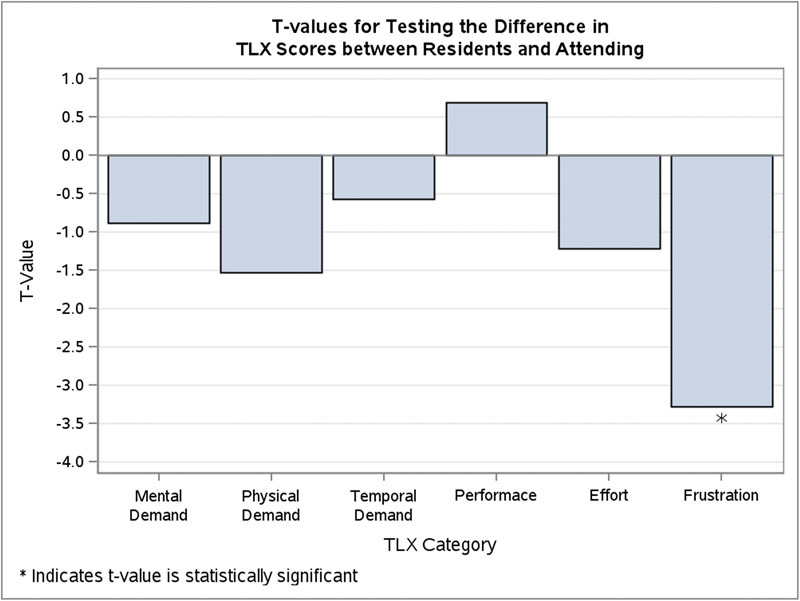

In two-sample t-tests comparing the NASA-TLX total score and its items by role (residents vs. attendings), by gender, and by category of hours worked, we found only one significant difference: the frustration item was significantly lower for residents than for attendings (3.3 vs. 8.0, p < 0.01) (Fig. 1).

Fig. 1.

t-Values for testing the difference in Task Load Index (TLX) scores between residents and attendings.

Bivariate associations between the TLX total score and the QUIS items are shown in Table 4 . The TLX total score was significantly correlated with the screen item (Pearson's r = 0.62, p = 0.02), such that higher ratings on the screen were associated with higher TLX total scores. No other significant correlations were found for any TLX item with the QUIS ratings.
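To make the reported correlation tests concrete, the sketch below computes Pearson's r and the standard t statistic for testing a zero correlation, t = r·√((n − 2)/(1 − r²)) with n − 2 degrees of freedom. It assumes that all 14 participants contributed to each correlation, which the text implies but does not state explicitly; the function names are hypothetical. Under that assumption, r = 0.62 gives t ≈ 2.74 on 12 degrees of freedom, above the two-sided 0.05 critical value of about 2.18, which is consistent with the reported p = 0.02.

```python
import math


def pearson_r(x: list, y: list) -> float:
    """Pearson's product-moment correlation between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length samples with at least 3 pairs")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))  # co-deviation
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r: float, n: int) -> float:
    """t statistic (df = n - 2) for testing H0: population correlation = 0."""
    return r * math.sqrt((n - 2) / (1 - r * r))


print(round(r_to_t(0.62, 14), 2))  # 2.74
print(pearson_r([1, 2, 3], [3, 2, 1]))  # -1.0
```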

Table 4. Correlation coefficients (p-values in parentheses) between NASA-TLX and QUIS items.

| | Overall reaction | Screen | Terminology and information | Learning |
|---|---|---|---|---|
| Terminology and information | 0.538 (0.047)ᵃ | 0.333 (0.245) | NA | |
| Learning | 0.440 (0.115) | 0.314 (0.274) | 0.616 (0.019)ᵃ | NA |
| System capabilities | 0.336 (0.240) | 0.600 (0.023)ᵃ | 0.374 (0.188) | 0.805 (0.001)ᵃ |
| Total number of minutes to complete all scenarios | –0.725 (0.003)ᵃ | –0.395 (0.162) | –0.324 (0.259) | –0.291 (0.312) |

Abbreviations: NA, not applicable; NASA-TLX, National Aeronautics and Space Administration Task Load Index; QUIS, Questionnaire for User Interaction Satisfaction.

ᵃ Statistically significant values.

EHR Satisfaction

We found significant correlations among the NASA-TLX and the QUIS survey results (Table 4). All participants completed both surveys, and data were matched using an assigned unique participant identifier. Higher overall reaction, measured by evaluating navigation, satisfaction, power, and stimulation, was associated with higher ratings for terminology and information (r = 0.54, p < 0.05) and with shorter times to completion of all scenarios (r = –0.73, p < 0.01). Higher system capability ratings were associated with higher ratings for the screen item (r = 0.60, p = 0.02) and with higher learning ratings (r = 0.80, p < 0.01). Also, higher terminology and information ratings were positively associated with higher learning ratings (r = 0.62, p = 0.02). Although not statistically significant, higher ratings of the system capabilities tended to accompany shorter times to completion of all scenarios (r = –0.44).

We computed Pearson's correlation coefficients between frustration and each individual QUIS score (Table 5). Within the context of learning the system, lower scores for remembering commands and for performing straightforward tasks were associated with greater frustration with the system. Within the items describing system capabilities, lower scores for system speed and for system reliability were also significantly associated with greater frustration with the system.

Table 5. Correlation coefficients (p-values in parentheses) between frustration levels and EHR characteristics.

| | Remembering names and commands use | Performing tasks is straightforward | System speed | System reliability |
|---|---|---|---|---|
| Frustration levels | –0.555 (0.039)ᵃ | –0.600 (0.023)ᵃ | –0.709 (0.004)ᵃ | –0.633 (0.015)ᵃ |

Abbreviation: EHR, electronic health record.

ᵃ Statistically significant values.

EHR Productivity

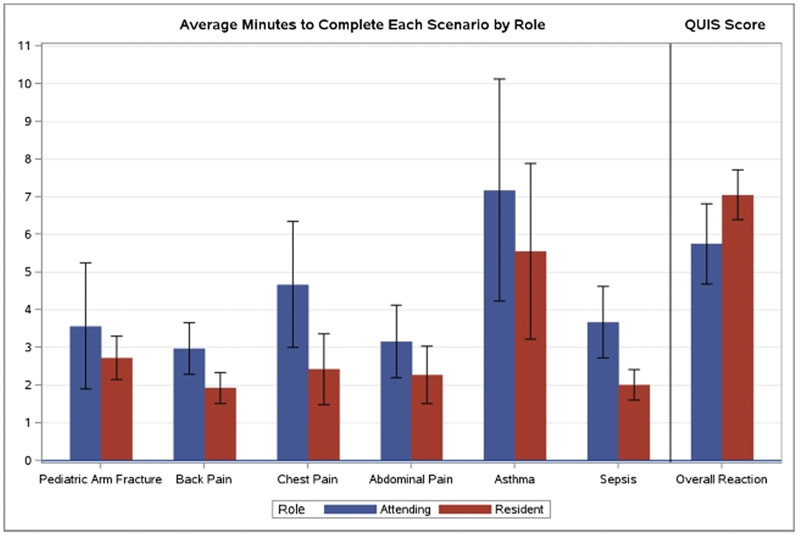

Furthermore, in comparisons between resident and attending physicians, time to complete the back pain, chest pain, and sepsis scenarios, as well as overall reaction (based on QUIS scores), differed significantly (Fig. 2). In comparisons between high and low average numbers of hours worked, the time to complete the sepsis scenario and overall reaction showed significant differences (Fig. 3).

Fig. 2.

Average minutes to complete each scenario by role.

Fig. 2 shows separate assessments for each scenario made via Satterthwaite t-tests by role (resident vs. attending). Residents had significantly shorter mean durations than attendings to complete the back pain (1.9 vs. 3.0 minutes, p < 0.01), chest pain (2.4 vs. 4.7 minutes, p < 0.01), and sepsis (2.0 vs. 3.7 minutes, p < 0.01) scenarios. Although the difference was not statistically significant, residents also took less time, on average, to complete the abdominal pain scenario (2.3 vs. 3.2 minutes). Fig. 2 also shows results of two-sample t-tests demonstrating significant differences for the QUIS items by role: the mean score for overall reaction was significantly higher for residents than for attendings (7.1 vs. 5.8, p = 0.02).

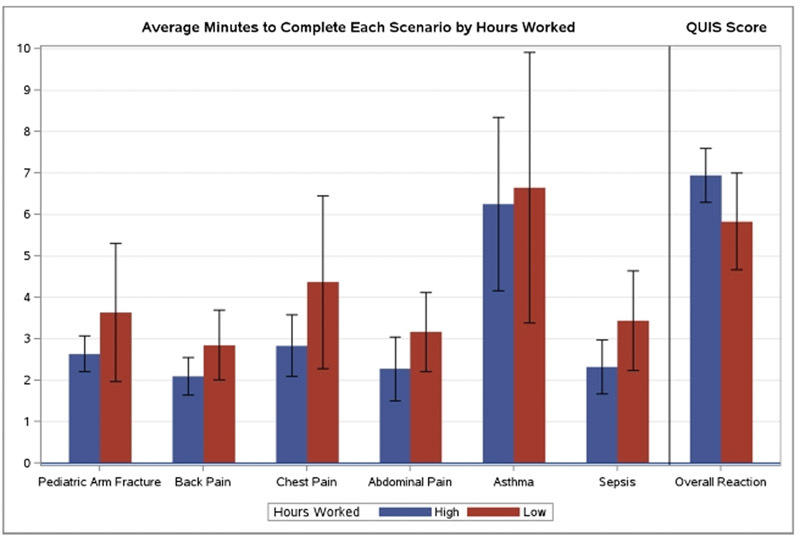

Comparisons by hours worked in the EHR indicated that those working fewer hours took significantly longer to complete the sepsis and back pain scenarios than those working more hours (3.4 vs. 2.3 minutes, p < 0.05, and 2.9 vs. 2.1 minutes, p = 0.05, respectively). Although the differences were not statistically significant, Fig. 3 shows the same pattern for the abdominal pain (3.2 vs. 2.3 minutes) and chest pain (4.4 vs. 2.8 minutes, p = 0.09) scenarios. Overall reaction ratings were significantly lower for those working fewer hours than for those working more hours (5.8 vs. 6.9, p < 0.05).

Fig. 3.

Average minutes to complete task by electronic health record (EHR) hours worked. Difference in satisfaction levels based on EHR hours worked.

EHR Preferences and Frustrations

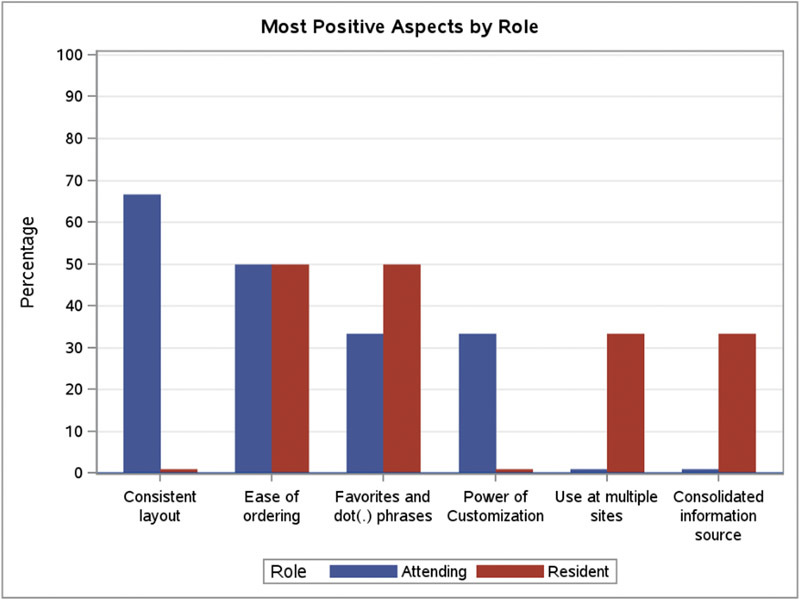

To understand differences in EHR preferences and frustrations, we asked participants at the end of the exercise, on the QUIS survey, to identify the three most favorable and three most frustrating functions of the EHR; 12 (86%) responded, equally distributed between roles. Common preferences emerged in both groups, such as appreciation for “click” shortcuts available when placing an order and the ability to autopopulate smart phrases in notes using the dot (.) phrase functionality. Two-thirds of attendings complimented the EHR design, noting its reactive speed as well as its clean, consistent, and reliable layout. Residents particularly liked the flexibility of providing care through EHR access at multiple sites, and they also expressed satisfaction with the comprehensive and consolidated representation of patient information (Fig. 4).

Fig. 4.

Most positive aspects of the electronic health record (EHR) by roles.

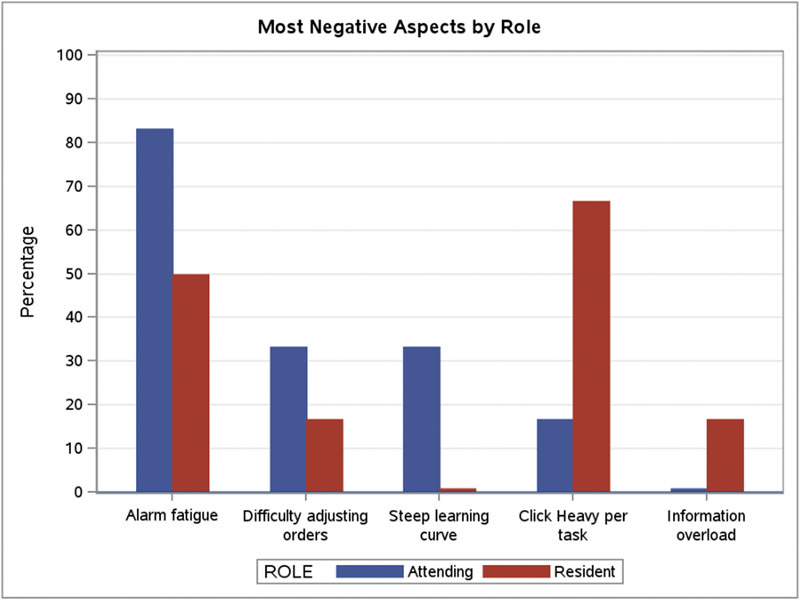

With regard to frustrations, attendings complained about the frequency of and rationale behind warning and error messages, such as alerts when ordering tests, medications, or computed tomography (CT) for patients with allergies. While half of the attendings found it easy to place orders, one-third found it difficult to adjust orders once placed. Attendings also expressed concern about the steep learning curve associated with the EHR, which they often found mentally taxing. Residents' main complaint was the number of mouse clicks per task in the EHR. Like attendings, residents noted that orders were difficult to adjust once placed and that alert fatigue remains a significant challenge of EHR use. Residents were also dissatisfied with information overload when reviewing patient charts (Fig. 5).

Fig. 5.

Most negative aspects of the electronic health record (EHR) by roles.

Discussion

In this study, we found a significant disparity in overall satisfaction with the EHR between residents and attending physicians, and attendings reported significantly higher frustration levels with the EHR. This difference may be related to the time it took participants to complete the six scenarios: residents completed half of the scenarios significantly faster than attendings. Aside from computer skills, longer completion times may reflect attending physicians' wider repertoire of clinical experience and judgment; attendings may look for more patient data and consider alternative explanations more than residents do and, as a result, may take longer to complete a task if more data are required to make a clinical judgment. We therefore question whether shorter completion time is a valid indicator of better usability, and what effect faster interaction has on clinical outcomes. A quicker, error-free completion that maintains thoroughness would be ideal; nonetheless, this topic should be explored and evaluated in future work.

The workflow discrepancy between residents and attendings must also be considered. At many institutions, particularly academic ones, attendings' responsibilities are often supervisory, while residents do much of the documentation work, including entering orders and notes. Attending physicians oversee and supplement direct patient care and documentation, and they ultimately sign off on documentation completed by residents; nonetheless, if residents perform most of the order entry and clinical note writing, they may be more comfortable with EHRs and, thus, quicker to complete the scenarios than their attending physicians.

EHR screen design showed a significant relationship with the effort exerted to find information, temporal demand, and overall perceived workload. Most participants noted a relationship between screen design and how much effort they spent on a task, and they tended to be satisfied if the design did not require much effort to interact with. Finally, we found a relationship between physicians' EHR experience (i.e., the number of hours spent working in the EHR) and their reported satisfaction: no attending worked more than 50 hours per week in Epic, whereas half of the residents worked 30 to 50 hours and half worked more than 50 hours weekly in Epic (Table 3). Together with the significantly higher mean overall reaction score for residents (7.1 vs. 5.8, p = 0.02), this suggests that physicians who spent more time working in the EHR reported higher levels of satisfaction.

Our results showed that participants were satisfied with the broad array of functions provided through the system; however, navigating the system involved a substantial learning curve. Participants rated mental demand as the highest perceived EHR workload dimension and physical demand as the lowest. Among aspects of the EHR interface design, system capabilities received the highest satisfaction ratings, while learning factors were the lowest-rated category. On average, participants completed the six scenarios in 21.7 minutes, although completion times varied widely among participants (SD, 7.0 minutes; range, 12.6–36.3 minutes).

We found a concerning disconnect between the theory behind EHR systems and the reality of using them in practice. EHRs were designed to make the administration of medical care easier for physicians and safer for patients, 30 but frustrations with the technology have, in many cases, had the opposite effect. Physician frustration with the EHR has been associated with high burnout levels, lower patient satisfaction, and negative clinical outcomes. 19 20 21 For instance, in 2013, a 16-year-old boy received more than 38 trimethoprim/sulfamethoxazole (trade names Septra, Bactrim) tablets while hospitalized. 31 Poor interface design within the EHR system was ultimately blamed for the massive antibiotic overdose, which was nearly fatal for the young patient. This impact of poor interface design and human factors is not unique to health care; in other disciplines, a lack of user-centered interface design has led to critical and fatal errors. For example, the Air Canada incident known as the Gimli Glider illustrates the importance of human–computer interaction: the accident occurred because of pilot error involving the cockpit Fuel Quantity Indicator System (FQIS). 32 Human factors research has demonstrated similar relationships between interface design and the usability of complex systems in fields such as aviation, psychology, education, and computer science. 33 34 35 The usability findings in this article align with findings in these disciplines. In aviation, for instance, workers are under extreme stress while attempting to access considerable amounts of data; information overload, a contributing factor to degraded decision-making ability, affects these workers by delaying the correct response. 36

To improve EHR usability, we need to assess and address user “personas” that take into account users' clinical experience as well as their technical abilities. Tailoring the EHR interface by clinical role (e.g., nurse, program coordinator, or physician) is insufficient; rather, we should specify the individual personas associated with each clinical role. Personas are realistic representations of key users and are commonly used in disciplines such as marketing, information architecture, and user experience. 37 38 39 40 The interface needs to be tailored to the different personas within the same role. For example, attending physicians are domain experts who may have received their medical training prior to EHR adoption; therefore, their expectations of the EHR will differ from those of a resident who trained after EHR adoption. This study shows that not all physicians are dissatisfied with the EHR and that levels of satisfaction vary with seniority: attending physicians were notably more frustrated than resident physicians when using the EHR. We expect that frustration with the EHR among providers may diminish as more physicians with lifelong exposure to information technologies advance in their careers. To meet the expectations of all users, however, current EHR designs require major revisions with input from clinicians representing a range of roles, levels of experience, and expertise with electronic platforms.

Although the simulation-based environment we used in this research served the purpose of understanding the perceived workload and satisfaction of ED physicians regarding EHRs, it also had limitations. Conducting the study in the ED in real time could reveal new patterns and yield different outcomes for time to task completion, perceived workload, and satisfaction because of the intensity and interruptions inherent in the ED environment; however, implementing such a study in real-life settings is challenging for several reasons. First, the risk of disclosing protected patient information is significant. Second, administering a survey at the end of a physician's ED shift may introduce bias related to fatigue or to the salience of the last few patients seen.

Finally, the small number of participants (8 attending physicians and 6 resident physicians) may be viewed as a limitation because it reduces the statistical power of the analysis; however, about five participants are usually considered sufficient to uncover most problems in usability studies such as this one. 41
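The sample-size argument rests on the problem-discovery model in reference 41: if each participant independently uncovers a given usability problem with probability λ, the expected share of problems found by n participants is 1 - (1 - λ)^n. The minimal sketch below evaluates that curve. The default λ = 0.31 is the cross-project average commonly cited from Nielsen's work and is an assumption here, not a value estimated from this study's data; the function name `proportion_found` is hypothetical.

```python
def proportion_found(n_users: int, detection_rate: float = 0.31) -> float:
    """Expected share of usability problems found by n_users participants.

    Implements 1 - (1 - detection_rate) ** n_users, assuming every problem is
    detected independently by each participant with the same probability.
    The 0.31 default is an assumed, commonly cited average, not a study result.
    """
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    if not 0.0 <= detection_rate <= 1.0:
        raise ValueError("detection_rate must lie in [0, 1]")
    return 1.0 - (1.0 - detection_rate) ** n_users


if __name__ == "__main__":
    # 5 = rule-of-thumb sample; 6 and 8 = resident and attending groups; 14 = total.
    for n in (1, 5, 6, 8, 14):
        print(f"{n:>2} participants: {proportion_found(n):.0%} of problems expected")
```

Under these assumptions, five participants are expected to surface roughly 84% of problems, with sharply diminishing returns after that. Because the model treats participants as interchangeable, it is best read as a rough guide when users fall into distinct personas, such as attending and resident physicians.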

The NASA-TLX and QUIS were developed by researchers and scientists outside health care; therefore, these tools were not specifically designed to assess EHR interfaces. We suggest that future efforts include developing tailored and validated qualitative and quantitative tools to assess the usability of the health information technology applications used by health care providers and researchers.

Furthermore, eye-tracking methodologies have shown promise in the sociobehavioral and engineering fields. 42 43 44 However, despite modest attempts to build a framework for using eye-tracking devices in health services research, in particular for integrating eye tracking into a study design, a health-specific manual explaining how to use these devices is still needed. For such devices to benefit medical research, medical personnel must also receive formal training in their use. 45 46 Importantly, this effort would support the development of creative ways of analyzing the large data files produced by eye trackers through automation and algorithms.

Conclusion

In this article, we presented findings from an EHR usability study of physicians in the emergency department of a tertiary academic medical center. This study builds on previous research 16 18 47 but differs from it by focusing on how physicians at two career levels respond to the EHR, particularly their frustration, and on the factors leading to that frustration. We found that EHR frustration levels were significantly higher among more senior attending physicians than among more junior resident physicians. Among the factors causing high EHR frustration were: (1) remembering menu and button names and command usage; (2) performing tasks that are not straightforward; (3) system speed; and (4) system reliability.

In our findings, we highlight the most favorable and most frustrating EHR functionalities reported by both groups of physicians. Attending physicians appreciated the consistency of the EHR user interface and the ease of entering orders in the system, but were frustrated by the frequency of hard stops. Residents also appreciated the ease of entering orders, as well as the ability to use dot phrases and autocomplete functions, but found the number of clicks required and alert fatigue to be the most frustrating aspects of the EHR.

The findings of this study can inform future usability studies, particularly those targeting more specific usability areas such as information representation, standardized pathways for accomplishing tasks, and ways to reduce the steep learning curve for users. Finally, today's resident physicians will become tomorrow's attending physicians, faced with new duties and demands. In this never-ending technological cycle, the only certainty is that nothing stays the same. More research is needed on ways to improve satisfaction with the EHR by meeting the varied expectations of its users, from tech-savvy residents to domain-expert attending physicians.

Clinical Relevance Statement

Electronic health records may contribute to higher levels of physician burnout and dissatisfaction if they do not meet users' expectations. We demonstrate differences in EHR expectations, preferences, and use between resident and attending physicians, suggesting that within the medical field there are distinct user characteristics that need to be further dissected and analyzed.

Multiple Choice Question

Users have distinct EHR personas. Which of the following stages of persona creation is likely to take the most time?

a. Stage I: Gathering data for personas

b. Stage II: Analyzing the data gathered in stage I

c. Stage III: Crafting the actual personas

d. All stages typically take the same amount of time

Correct Answer: The correct answer is option a. Personas are representations of a cluster of users with similar behaviors, goals, and motivations. Personas can be a powerful tool for focusing teams and creating user-centered interfaces because they embody the characteristics and behaviors of actual users. However, gathering the data needed to identify and create personas consumes the most time. This stage includes empirical research, as well as gathering assumptions and existing insights from stakeholders and others with a deep understanding of the target users.

Acknowledgments

The authors thank Dr. Beth Black, PhD, RN, and Samantha Russomagno, DNP, CPNP for their contribution during manuscript preparation. This study was sponsored by the American Medical Association (AMA).

Conflict of Interest None.

Protection of Human and Animal Subjects

The study was performed in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects, and was reviewed and approved by the University of North Carolina at Chapel Hill (UNC) Institutional Review Board.

References

- 1. Huerta T R, Thompson M A, Ford E W, Ford W F. Electronic health record implementation and hospitals' total factor productivity. Decis Support Syst. 2013;55(02):450–458.

- 2. Cebul R D, Love T E, Jain A K, Hebert C J. Electronic health records and quality of diabetes care. N Engl J Med. 2011;365(09):825–833. doi: 10.1056/NEJMsa1102519.

- 3. Da've D. Benefits and barriers to EMR implementation. Caring. 2004;23(11):50–51.

- 4. Walsh S H. The clinician's perspective on electronic health records and how they can affect patient care. BMJ. 2004;328(7449):1184–1187.

- 5. Clayton P D, Naus S P, Bowes W A, III et al. Physician use of electronic medical records: issues and successes with direct data entry and physician productivity. AMIA Annu Symp Proc. 2005;2005:141–145.

- 6. Linder J A, Schnipper J L, Middleton B. Method of electronic health record documentation and quality of primary care. J Am Med Inform Assoc. 2012;19(06):1019–1024. doi: 10.1136/amiajnl-2011-000788.

- 7. Frisse M E, Johnson K B, Nian H et al. The financial impact of health information exchange on emergency department care. J Am Med Inform Assoc. 2012;19(03):328–333. doi: 10.1136/amiajnl-2011-000394.

- 8. Zhang J, Walji M F. TURF: toward a unified framework of EHR usability. J Biomed Inform. 2011;44(06):1056–1067. doi: 10.1016/j.jbi.2011.08.005.

- 9. Sittig D F, Belmont E, Singh H. Improving the safety of health information technology requires shared responsibility: it is time we all step up. Healthc (Amst). 2017:S2213-0764(17)30020-9. doi: 10.1016/j.hjdsi.2017.06.004.

- 10. Tang P C, Patel V L. Major issues in user interface design for health professional workstations: summary and recommendations. Int J Biomed Comput. 1994;34(1-4):139–148.

- 11. Linder J A, Schnipper J L, Tsurikova R, Melnikas A J, Volk L A, Middleton B. Barriers to electronic health record use during patient visits. AMIA Annu Symp Proc. 2006;2006:499–503.

- 12. Zhang J. Human-centered computing in health information systems. Part 1: analysis and design. J Biomed Inform. 2005;38(01):1–3. doi: 10.1016/j.jbi.2004.12.002.

- 13. Ratwani R M, Fairbanks R J, Hettinger A Z, Benda N C. Electronic health record usability: analysis of the user-centered design processes of eleven electronic health record vendors. J Am Med Inform Assoc. 2015;22(06):1179–1182. doi: 10.1093/jamia/ocv050.

- 14. Terazzi A, Giordano A, Minuco G. How can usability measurement affect the re-engineering process of clinical software procedures? Int J Med Inform. 1998;52(1-3):229–234.

- 15. American Medical Association. Physicians Use of EHR Systems 2014. Research; 2015.

- 16. Association of American Physicians and Surgeons. Physician Results EHR Survey. 2015. Available at: https://aaps.wufoo.com/reports/physician-results-ehr-survey/. Accessed August 25, 2017.

- 17. Hill R G, Jr, Sears L M, Melanson S W. 4000 clicks: a productivity analysis of electronic medical records in a community hospital ED. Am J Emerg Med. 2013;31(11):1591–1594. doi: 10.1016/j.ajem.2013.06.028.

- 18. Jamoom E, Patel V, King J, Furukawa M F. Physician experience with electronic health record systems that meet meaningful use criteria: NAMCS physician workflow survey, 2011. NCHS Data Brief. 2013;(129):1–8.

- 19. McHugh M D, Kutney-Lee A, Cimiotti J P, Sloane D M, Aiken L H. Nurses' widespread job dissatisfaction, burnout, and frustration with health benefits signal problems for patient care. Health Aff (Millwood). 2011;30(02):202–210. doi: 10.1377/hlthaff.2010.0100.

- 20. Haas J S, Cook E F, Puopolo A L, Burstin H R, Cleary P D, Brennan T A. Is the professional satisfaction of general internists associated with patient satisfaction? J Gen Intern Med. 2000;15(02):122–128. doi: 10.1046/j.1525-1497.2000.02219.x.

- 21. DiMatteo M R, Sherbourne C D, Hays R D et al. Physicians' characteristics influence patients' adherence to medical treatment: results from the Medical Outcomes Study. Health Psychol. 1993;12(02):93–102. doi: 10.1037/0278-6133.12.2.93.

- 22. Friedberg M W, Chen P G, Van Busum K R et al. Factors affecting physician professional satisfaction and their implications for patient care, health systems, and health policy. Rand Health Q. 2014;3(04):1.

- 23. Bodenheimer T, Sinsky C. From triple to quadruple aim: care of the patient requires care of the provider. Ann Fam Med. 2014;12(06):573–576. doi: 10.1370/afm.1713.

- 24. Shanafelt T D, Hasan O, Dyrbye L N et al. Changes in burnout and satisfaction with work-life balance in physicians and the general US working population between 2011 and 2014. Mayo Clin Proc. 2015;90(12):1600–1613. doi: 10.1016/j.mayocp.2015.08.023.

- 25. Hamid F, Cline T W. Providers' Acceptance Factors and Their Perceived Barriers to Electronic Health Record (EHR) Adoption. Online Journal of Nursing Informatics. 2013;17(3). Available at: http://ojni.org/issues/?p=2837. Accessed May 1, 2018.

- 26. Hart S G, Staveland L E. Development of NASA-TLX (Task Load Index): results of empirical and theoretical research. Los Angeles, California: North-Holland; 1988:139–183.

- 27. Hart S G. NASA-Task Load Index (NASA-TLX); 20 years later. Proceedings of the Human Factors and Ergonomics Society Annual Meeting. 2006;50(9):904–908.

- 28. Chin J P, Diehl V A, Norman K L. Development of an instrument measuring user satisfaction of the human-computer interface. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. Washington, D.C., USA: ACM; 1988:213–218.

- 29. AHRQ. Questionnaire for User Interface Satisfaction. 2017. Available at: https://healthit.ahrq.gov/health-it-tools-and-resources/evaluation-resources/workflow-assessment-health-it-toolkit/all-workflow-tools/questionnaire. Accessed September 25, 2017.

- 30. Kern L M, Barrón Y, Dhopeshwarkar R V, Edwards A, Kaushal R; HITEC Investigators. Electronic health records and ambulatory quality of care. J Gen Intern Med. 2013;28(04):496–503.

- 31. Wachter R. The Digital Doctor: Hope, Hype, and Harm at the Dawn of Medicine's Computer Age. New York, NY: McGraw-Hill Education; 2015.

- 32. Nelson W. The Gimli Glider. Soaring Magazine. 1997.

- 33. Ng A K T. Cognitive psychology and human factors engineering of virtual reality. 2017 IEEE Virtual Reality (VR). 2017.

- 34. Singun A P. The usability evaluation of a web-based test blueprint system. 2016 International Conference on Industrial Informatics and Computer Systems (CIICS). 2016.

- 35. Weyers B, Burkolter D, Kluge A, Luther W. User-centered interface reconfiguration for error reduction in human-computer interaction. Presented at: Third International Conference on Advances in Human-Oriented and Personalized Mechanisms, Technologies and Services; August 22-27, 2010; Nice, France.

- 36. Harris D. Human Factors for Civil Flight Deck Design. New York, NY: Taylor & Francis; 2017.

- 37. Brown D M. Communicating Design: Developing Web Site Documentation for Design and Planning. San Francisco, CA: Pearson Education; 2010.

- 38. O'Connor K. Personas: The Foundation of a Great User Experience. 2011. Available at: http://uxmag.com/articles/personas-the-foundation-of-a-great-user-experience. Accessed March 2, 2018.

- 39. Friess E. Personas in heuristic evaluation: an exploratory study. IEEE Trans Prof Commun. 2015;58(02):176–191.

- 40. Gothelf J. Using Proto-Personas for Executive Alignment. UX Magazine. 2012.

- 41. Nielsen J, Landauer T K. A mathematical model of the finding of usability problems. Proceedings of the INTERACT '93 and CHI '93 Conference on Human Factors in Computing Systems. Amsterdam, The Netherlands: ACM; 1993:206–213.

- 42. Duque A, Vázquez C. Double attention bias for positive and negative emotional faces in clinical depression: evidence from an eye-tracking study. J Behav Ther Exp Psychiatry. 2015;46:107–114. doi: 10.1016/j.jbtep.2014.09.005.

- 43. Pierce K, Marinero S, Hazin R, McKenna B, Barnes C C, Malige A. Eye tracking reveals abnormal visual preference for geometric images as an early biomarker of an autism spectrum disorder subtype associated with increased symptom severity. Biol Psychiatry. 2016;79(08):657–666. doi: 10.1016/j.biopsych.2015.03.032.

- 44. Kassner M, Patera W, Bulling A. Pupil: an open source platform for pervasive eye tracking and mobile gaze-based interaction. Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct Publication. Seattle, WA: ACM; 2014:1151–1160.

- 45. Hersh W. Who are the informaticians? What we know and should know. J Am Med Inform Assoc. 2006;13(02):166–170. doi: 10.1197/jamia.M1912.

- 46. Khairat S et al. A review of biomedical and health informatics education: a workforce training framework. J Hosp Adm. 2016;5(05):10.

- 47. Ajami S, Bagheri-Tadi T. Barriers for adopting electronic health records (EHRs) by physicians. Acta Inform Med. 2013;21(02):129–134. doi: 10.5455/aim.2013.21.129-134.