Abstract

Objective

Neurocognitive functions, specifically verbal working memory (WM), contribute to speech recognition in postlingual adults with cochlear implants (CIs) and normal-hearing (NH) listeners hearing degraded speech. Three hypotheses were tested: (1) WM accuracy as assessed using three visual span measures (digits, objects, and symbols) would correlate with recognition scores for spectrally degraded speech (through a CI or when noise-vocoded); (2) WM accuracy would be best for digit span, intermediate for object span, and lowest for symbol span, due to the increasing cognitive demands across these tasks. Likewise, response times, relating to processing demands, would be shortest for digit span, intermediate for object span, and longest for symbol span; (3) CI users would demonstrate poorer and slower performance than NH peers on WM tasks, as a result of less efficient verbally mediated encoding strategies associated with a period of prolonged auditory deprivation.

Methods

Cross-sectional study of 30 postlingually deaf adults with CIs and 34 NH controls. Participants were tested for sentence recognition in quiet (CI users) or after noise-vocoding (NH peers), along with WM using visual measures of digit span, object span, and symbol span.

Results

Of the three measures of WM, digit span scores alone correlated with sentence recognition for CI users; no correlations were found using these three measures for NH peers. As predicted, WM accuracy (and response times) were best (and fastest) for digit span, intermediate for object span, and worst (and slowest) for symbol span. CI users and NH peers demonstrated equivalent WM accuracy and response time for digit span and object span, and similar response times for symbol span, but contrary to our original predictions, CI users demonstrated better accuracy on symbol span than NH peers.

Conclusions

Verbal WM assessed using visual tasks relates weakly to sentence recognition for degraded speech. CI users performed equivalently to NH peers on most visual tasks of WM, but they outperformed NH peers on symbol span accuracy. This finding deserves further exploration but may suggest that CI users develop alternative or compensatory strategies associated with rapid verbal coding, as a result of their prolonged experience of auditory deprivation.

Keywords: Cochlear implants, Sensorineural hearing loss, Speech perception, Verbal working memory, Digit span

Introduction

Cochlear implants (CIs) successfully restore audibility to postlingually deaf adults with moderate-to-profound sensorineural hearing loss; however, a large degree of variability exists in the speech and language processing skills among these patients. Much of this variability cannot be predicted or explained. A focus of our lab is investigating this outcome variability, in hope of developing better methods to prognosticate performance, explaining why some patients demonstrate poor performance with their devices, and improving rehabilitative efforts for this expanding patient population.

To achieve these goals, we have sought to identify and understand the complex neurocognitive mechanisms that underlie speech and language processing in adult CI users. Neurocognitive functions have been demonstrated to be a major source of individual differences among older adult CI users, in part because these functions generally show aging-related declines in the elderly.1 Moreover, a wealth of literature demonstrates the impact of neurocognitive functions on speech recognition performance in adults with milder degrees of hearing loss.2, 3

Working memory (WM) is one specific neurocognitive function that has been targeted for its contributions to speech and language outcomes in both pediatric and adult CI users. WM is a temporary storage and processing mechanism whereby information is held in conscious awareness while additional manipulation of that information occurs (i.e., perceptual processing, retrieval of information from long term memory).4, 5, 6 WM serves a vital role in maintaining information for recognizing and comprehending spoken language. There is abundant evidence that WM capacity has a critical role in speech and language skills in pediatric CI users.7, 8, 9 The role that working memory plays in speech skills among adult CI users has received some attention with regard to word and sentence recognition and sentence comprehension, but remains poorly understood.10, 11, 12

A barrier to further progress in our understanding of how WM relates to speech skills in adults and how it may be therapeutically targeted is that we do not know what the optimal method is to assess WM in this special population. The traditional method of assessing WM is through the use of measures that assess the participant's ability to recall a number of familiar items in correct serial order, known as span tasks. The most widely used version of this methodology is digit span, in which the participant is provided a list of digits (either visually or auditorily) and is asked to recall those digits in the correct forward order (“forward” digit span) or in reverse order (“backward” digit span).13 Previous studies in adults with CIs have demonstrated inconsistent relations of digit span with speech recognition abilities. Tao et al10 investigated WM in a mixed group of prelingual and postlingual CI users, some of whom were young adults. Scores on an auditory digit span task correlated with disyllable speech recognition in that study. Moberly et al11 examined a group of 30 postlingual adult CI users using a similar auditory task of forward digit span. Digit span scores did not correlate significantly with recognition scores for sentences in speech-shaped noise. When it comes to the use of non-auditory measures of WM in adults with CIs, disparate findings also exist. Early studies by Lyxell et al14, 15 demonstrated relations between WM as assessed using a visual Reading Span measure and speech recognition for adult CI users.
Moberly et al16 failed to demonstrate a relation between speech recognition and WM assessed using a non-auditory visual measure of Forward and Reverse Memory taken from the Leiter-3 performance scale.17 Thus, the first goal of the current study was to further evaluate the relation between WM using visual measures and speech recognition ability in postlingual adults with CIs, as well as normal-hearing (NH) peers listening to spectrally degraded (noise-vocoded) speech.

Previous studies investigating WM in CI users have identified deficits in WM capacity for patients with CIs, as compared to their NH peers. Again, most of this work has been done in pediatric CI users, who typically demonstrate poorer WM when tested with auditory as well as visual measures of WM capacity.7, 8 In contrast, studies in adults have shown equivalent performance in CI users and NH peers using visual Reading Span,15 and only slightly poorer performance on visual versions of Forward and Reverse Memory tasks from the Leiter-3 and auditory measures of digit span and serial recall of monosyllabic words.11, 16 It is likely that discrepancies in findings between studies are a result of the particular clinical populations examined, but also the specific WM measures chosen. This concern motivated the second goal of this study: to compare WM capacity between adult CI users and NH age-matched peers using three different measures of WM. Visual measures of WM were selected to avoid the confounding factor of variability in audibility and spectro-temporal resolution among participants.

Three visual measures of WM were selected for inclusion in this study. First was a visual version of the traditional digit span measure, delivered using a computer touch screen. Based on previous findings, we predicted that visual digit span measures would be equivalent between CI and NH groups. The other two visual span measures were predicted to be more cognitively challenging, which we hypothesized would be reflected in poorer accuracy and longer response times during testing. Thus, the second span test was a visual object span task. In this task, pictures of common items that could easily be labeled verbally were shown on a computer touch screen. A series of objects was shown, one at a time, and the participant was then asked to touch the items on the touch screen in the correct serial order using a 3 × 3 display of the test items. Because this task requires more processing to assign labels to the items and then encode them in memory, we predicted that accuracy and response time on this task would be poorer and slower than for digit span, for which the items should be rapidly identified and labeled. We also predicted that the experience of prolonged auditory deprivation might lead to less efficient verbal encoding of the object names by CI users, as compared with NH peers, resulting in poorer object span accuracy scores. The third and most challenging WM task was a visual symbol span task, in which pictures of nonsense symbols, which did not have readily available verbal labels, were shown in a similar fashion. Again, participants were asked to reproduce sequences of these items on a touch screen in the correct serial order. We predicted that this task would be the most difficult for both groups, as evidenced by poorer accuracy scores and longer response times as compared with digit span and object span. 
We also predicted that, again as a result of prolonged auditory deprivation, CI users may be less efficient at assigning verbal labels to nonsense symbols, which would result in poorer performance on the symbol span task when compared with NH controls.

In summary, the current study examined relations between WM and speech recognition in adult CI users, as well as NH peers listening to noise-vocoded speech materials, which serve as a reasonable simulation of the spectrally degraded signals CI users hear through their speech processors. Participants were tested using three visual measures of WM: digit span, object span, and symbol span. These measures of WM span were correlated with speech recognition scores, and were compared between CI and NH groups.

Material and methods

Participants

Thirty participants with CIs and 34 adults with NH were enrolled. CI users were between the ages of 50 and 81 years (mean age of 67.6 years, SD 8.1), and NH peers were also between the ages of 50 and 81 years (mean age of 67.3 years, SD 6.7). All CI users had at least one year of CI experience (mean CI use of 7.5 years, SD 7.2). Participants were recruited via flyers posted at The Ohio State University Department of Otorhinolaryngology and through a national research recruitment database, Research Match. Each participant received $15 as compensation. All participants underwent audiological assessment immediately prior to testing. Normal hearing was defined as a four-tone (0.5, 1, 2, and 4 kHz) pure-tone average (PTA) better than 25 dBHL in the better ear. Because many of these participants were elderly, this criterion was relaxed to 30 dBHL PTA, but only two participants had a PTA poorer than 25 dBHL. All participants also demonstrated scores within normal limits on the Mini-Mental State Examination,18 a cognitive screening task, with raw scores all greater than 26 out of a possible 30, as well as word reading standard scores ≥80 on the word reading subtest of the Wide Range Achievement Test.19 Finally, a test of nonverbal IQ, the Raven's Progressive Matrices task, was administered, and its score was used as a covariate in analyses.20 For this task, all stimulus materials were presented on a touch screen, and participants completed each visual pattern by selecting the option that best fit it. Participants completed as many items as possible in 10 min, and scores were the total number of items answered correctly. Participant demographics, screening, and audiologic findings are shown in Table 1.

Table 1.

Participant demographics, screening measure, and audiologic findings for normal hearing and cochlear implant groups (Mean ± SD).

| Group | n | Age (years) | Reading (standard score) | MMSE (raw score) | Nonverbal IQ (raw score) | Pure-tone average (dBHL) | Duration of hearing loss (years) | Duration of CI use (years) |
|---|---|---|---|---|---|---|---|---|
| Normal hearing | 34 | 67.3 ± 6.7 | 100.5 ± 9.5 | 29.3 ± 0.9 | 11.1 ± 3.7 | 15.4 ± 6.1 | n/a | n/a |
| Cochlear implant | 30 | 67.6 ± 8.1 | 100.1 ± 11.4 | 28.8 ± 1.3 | 10.2 ± 4.5 | 99.0 ± 16.8 | 40.5 ± 20.5 | 7.5 ± 7.2 |
| t value | | 0.18 | 0.17 | 1.71 | 0.93 | 27.1 | | |
| p value | | 0.86 | 0.87 | 0.1 | 0.36 | <0.001 | | |

Equipment and materials

All testing for this study took place at The Ohio State University's Eye and Ear Institute. Visual stimuli were presented on a touch screen monitor, placed two feet in front of the participant. Auditory stimuli were presented via a loudspeaker positioned one meter from the listener at zero-degrees azimuth. Speech recognition test responses were audio-visually recorded for later off-line scoring. Participants wore FM transmitters held in specially designed vests, which fed their spoken responses directly into the camera for later scoring. Each task was scored by two separate individuals to ensure reliable results, with reliability found to be >95% between scorers for auditory tasks. WM tasks were scored immediately and automatically by the computer.

Subjects were tested over a single 1-h session. During testing, CI participants used their typical hearing prostheses, including any contralateral hearing aid, except during the unaided audiogram. Prior to the start of testing, examiners checked the integrity of the individual's hearing prostheses by administering a brief vowel and consonant repetition task.

Speech recognition measures

Speech recognition tasks were presented in quiet for CI users. For NH peers, 8-channel noise-vocoded versions of speech materials were presented. Two speech recognition measures were included.

Harvard (IEEE) Standard Sentences: Sentences were presented via loudspeaker, and participants were asked to repeat as much of the sentence as they could. The sentences were semantically and grammatically correct (e.g., “Never kill a snake in your bare hands”).21 Scores were percentage of total words and percentage of full sentences repeated correctly.

PRESTO Sentences: Participants were again asked to repeat sentences, but these sentences varied broadly in speaker regional dialect and accent (e.g., “He ate four extra eggs for breakfast”).22 Scores were again percentage of total words and full sentences correct.

Working memory measures

Three measures of WM were used. Scores based on accuracy and processing speed were collected. Accuracy was represented by the total number of items recalled correctly, and processing speed was represented by the average response time for items across trials for the task.

Visual Digit Span: Digits were presented visually one at a time in the center of the computer screen. Span length started at two and gradually increased in length up to seven digits. Once the numbers disappeared from the screen, the participant was asked to reproduce the numbers in the correct serial order on a 3 × 3 matrix that appeared on the touch screen monitor.

Visual Object Span: This task was completed in the same fashion as Digit Span, except that the stimuli were pictures of common objects displayed on the computer screen (comb, thumb, leaf, lamp, shirt, kite, fish, nail, and bag). Performance of this task likely requires verbal encoding of the words representing these objects. Participants were presented with a series of two to seven pictures on the computer screen, and were asked to touch the objects in the same serial order in which they were presented.

Visual Symbol Span: This task was similar to Object Span, but the visual stimuli were complex symbols that did not correspond directly to real-life objects. This task was included to examine participants' ability to reproduce in correct serial order visual items that would be difficult to encode phonologically, because these symbols do not have easily associated names or lexical representations in memory.
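The accuracy and response-time scores described for the three span tasks could be computed along the following lines. This is a minimal sketch, not the software used in the study: the `Trial` structure, the function names, and the example data are hypothetical, and it assumes that an item counts as correct only when it is reproduced in its correct serial position.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Sequence, Tuple


@dataclass
class Trial:
    """One span trial (hypothetical structure, not the study's data format)."""
    presented: Sequence[str]         # items in the order they were shown
    response: Sequence[str]          # items in the order they were touched
    response_times: Sequence[float]  # seconds taken for each touched item


def items_correct(trial: Trial) -> int:
    """Count items reproduced in the correct serial position (assumed rule)."""
    return sum(p == r for p, r in zip(trial.presented, trial.response))


def score_task(trials: List[Trial]) -> Tuple[int, float]:
    """Return (total items correct, mean response time per item) for one task."""
    accuracy = sum(items_correct(t) for t in trials)
    all_times = [rt for t in trials for rt in t.response_times]
    return accuracy, mean(all_times)


# Hypothetical object-span responses, for illustration only.
trials = [
    Trial(["comb", "fish"], ["comb", "fish"], [1.2, 0.9]),
    Trial(["leaf", "kite", "bag"], ["leaf", "bag", "kite"], [1.5, 1.8, 1.3]),
]
accuracy, mean_rt = score_task(trials)
print(accuracy, round(mean_rt, 2))  # prints "3 1.34"
```

In this example the second trial contributes one correct item, because only the first item was touched in its presented position.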

Results

Independent-samples t-test analyses comparing CI users and NH controls revealed no significant difference in mean age, MMSE cognitive screening scores, word reading, or nonverbal IQ between CI users and NH controls. These results are summarized in Table 1.
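As a worked illustration of these group comparisons, the t statistic can be recovered from summary statistics alone. The sketch below assumes the equal-variance (pooled) form of the independent-samples t-test, which the text does not state explicitly; the function name `pooled_t` is hypothetical. It uses the pure-tone average means and SDs from Table 1 together with the group sizes given in the Participants section (30 CI users, 34 NH peers).

```python
import math
from typing import Tuple


def pooled_t(mean1: float, sd1: float, n1: int,
             mean2: float, sd2: float, n2: int) -> Tuple[float, int]:
    """Independent-samples t statistic from summary data (pooled variance assumed)."""
    df = n1 + n2 - 2
    # Weighted average of the two sample variances.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    # Standard error of the difference between the two group means.
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


# Pure-tone average: CI users 99.0 ± 16.8 (n = 30), NH peers 15.4 ± 6.1 (n = 34).
t, df = pooled_t(99.0, 16.8, 30, 15.4, 6.1, 34)
print(f"t({df}) = {t:.1f}")  # prints "t(62) = 27.1"
```

Under these assumptions the result agrees with the t value of 27.1 listed for pure-tone average in Table 1.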

Before investigating whether WM span scores were associated with scores on speech recognition measures, we investigated whether the participant demographic, screening, or audiologic scores were associated with speech recognition outcomes, for each group separately. Results of bivariate Pearson's correlation analyses are shown in Table 2. Significant correlations were found between at least one speech recognition measure and both participant age and nonverbal IQ for CI users; similar correlations were also found for NH peers between speech recognition and age and nonverbal IQ, as well as for word reading ability and pure-tone average. Thus, age and nonverbal IQ were used as covariates in CI users, along with word reading and pure-tone average in NH peers, for the following sets of correlation analyses.

Table 2.

R values from bivariate correlation analyses of sentence recognition in quiet with demographic, screening, and audiologic scores.

| Group | Sentence measure | Age (years) | Nonverbal IQ (raw score) | Word reading (standard score) | Pure-tone average (dB HL) |
|---|---|---|---|---|---|
| NH | Harvard Standard sentences (% words correct) | −0.32∗ | 0.37∗ | 0.40∗ | −0.39∗ |
| NH | PRESTO Sentences (% words correct) | −0.08 | 0.19 | 0.29 | −0.43∗ |
| CI | Harvard Standard sentences (% words correct) | −0.42∗ | 0.53∗∗ | 0.21 | 0.15 |
| CI | PRESTO Sentences (% words correct) | −0.39∗ | 0.63∗∗ | 0.23 | 0.25 |

Note: Cochlear implant (CI) users were tested with unprocessed speech materials, and normal-hearing (NH) controls were tested with 8-channel noise-vocoded speech; ∗P < 0.05; ∗∗P < 0.01.

To address our first question of the relation between WM and speech recognition, separate partial correlation analyses were performed for each group between the WM scores and speech recognition scores, controlling for the above covariates (age and nonverbal IQ for CI users and age, nonverbal IQ, word reading, and PTA for NH peers). Across both groups, only a single significant partial correlation was identified between WM and speech recognition scores: for CI users, Harvard Standard full sentence scores were correlated with digit span scores, r (26) = 0.40, P = 0.035. No partial correlations were found between speech recognition scores and WM accuracy for NH listeners, nor were any significant partial correlations identified between speech scores and WM response times. Thus, our first hypothesis that WM and speech recognition would be correlated for CI users and NH peers was largely unsupported, because a significant correlation was found only for the CI group, and only for digit span.
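The partial correlation analysis described above can be sketched as follows. This is an illustrative implementation using the standard recursive formula for partial correlation, not the statistical package used in the study; all function names and the example scores are hypothetical. It also shows why the reported statistic for CI users has 26 degrees of freedom: with n = 30 listeners and k = 2 covariates, df = n − 2 − k.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_corr(x: Sequence[float], y: Sequence[float],
                 covariates: List[Sequence[float]]) -> float:
    """Correlation of x and y controlling for covariates (recursive formula)."""
    if not covariates:
        return pearson(x, y)
    z, rest = covariates[-1], covariates[:-1]
    r_xy = partial_corr(x, y, rest)
    r_xz = partial_corr(x, z, rest)
    r_yz = partial_corr(y, z, rest)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def partial_corr_df(n: int, k: int) -> int:
    """Degrees of freedom for a partial correlation with k covariates."""
    return n - 2 - k


def t_from_r(r: float, df: int) -> float:
    """t statistic corresponding to a (partial) correlation r."""
    return r * math.sqrt(df / (1 - r ** 2))


# Hypothetical scores for eight listeners, for illustration only.
digit_span = [28, 31, 25, 35, 30, 27, 33, 29]
sentences = [62, 70, 58, 77, 61, 66, 72, 69]
age = [72, 65, 78, 58, 69, 70, 61, 75]
nonverbal_iq = [9, 12, 8, 11, 13, 10, 14, 7]
r = partial_corr(digit_span, sentences, [age, nonverbal_iq])

# Reported CI result: n = 30, two covariates, r = 0.40.
df = partial_corr_df(30, 2)
print(f"df = {df}, t = {t_from_r(0.40, df):.2f}")  # prints "df = 26, t = 2.23"
```

A t value of about 2.23 on 26 degrees of freedom is consistent with the reported two-tailed P of 0.035.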

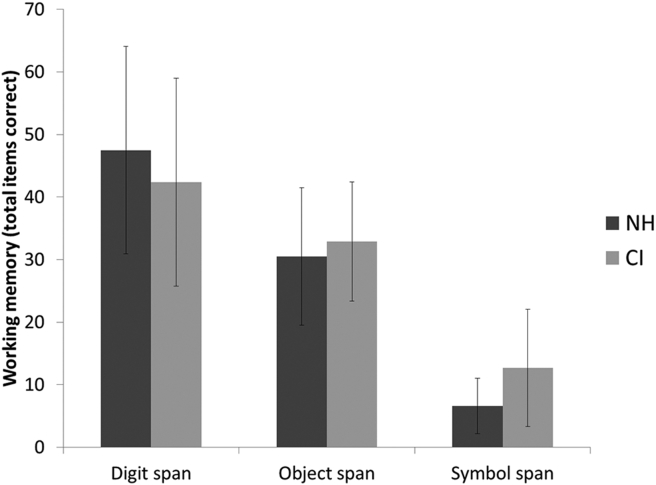

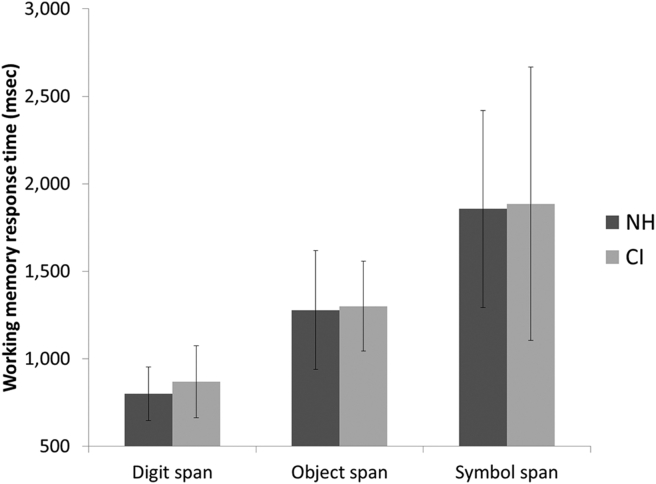

The second hypothesis, based on the increasing cognitive demands across the WM tasks, was that accuracy scores would be incrementally poorer for both CI users and NH listeners from digit span to object span to symbol span. Likewise, we expected that response times would increase in the same incremental fashion. WM accuracy and response times are shown in Figs. 1 and 2. A repeated measures analysis of variance (ANOVA) demonstrated a significant main effect for WM accuracy by WM task (digit span, object span, symbol span), F (2,62) = 197.65, P < 0.001. Pairwise comparisons demonstrated highly significant differences in WM accuracy when comparing digit and object span, object and symbol span, and digit and symbol span (all P < 0.001). Likewise, a repeated measures ANOVA demonstrated a significant main effect for WM response time by WM task (digit span, object span, symbol span), F (2,62) = 124.92, P < 0.001. Pairwise comparisons demonstrated highly significant differences in WM response time when comparing digit and object span, object and symbol span, and digit and symbol span (all P < 0.001). Thus, results supported our original prediction about accuracy being progressively poorer and response times being progressively longer for object span and symbol span, as compared with digit span.

Fig. 1.

Working memory accuracy scores for normal-hearing (NH) versus cochlear implant (CI) participants. Error bars represent standard deviations.

Fig. 2.

Working memory response times for normal-hearing (NH) versus cochlear implant (CI) participants. Error bars represent standard deviations.

The third question of interest was whether scores on the WM tasks would differ between CI and NH groups. It was predicted that WM accuracy and response times would be similar between CI and NH groups for digit span, but for the more challenging object span and symbol span tasks, CI users would perform more poorly and/or slowly than NH peers. This hypothesis was based on the prediction that a prolonged period of auditory deprivation might be associated with less efficient verbal labeling of the visual objects and symbols by CI users. Looking again at Figs. 1 and 2, accuracy and response times between groups appeared similar, except that symbol span accuracy was actually greater in CI users. A series of independent-samples t-tests was performed, comparing WM accuracy and response times between CI users and NH peers. As suggested by visual examination of the figures, only a single significant difference was found: CI users had better WM accuracy than their NH peers on the symbol span task, t (62) = 3.40, P = 0.001. No differences were identified between groups in WM response times.

Discussion

This study was designed to accomplish three objectives. The first was to investigate the relations between WM assessed using visual measures and speech recognition under degraded conditions (through a CI or when spectrally degraded by noise-vocoding). The second objective was to compare WM accuracy and response times on three different visual measures of WM span. Finally, performance on these three WM tasks was compared between CI users and a group of age-matched controls with NH.

Having a better understanding of how alternative approaches to the assessment of WM (i.e., through digit, object, and symbol span) relate to speech skills among CI users compared to NH listeners has important theoretical and clinical significance. While each of these three measures may tap WM, the extent to which components of WM such as encoding, storage, and retrieval are taxed may be different. Likewise, the influence of factors such as visuospatial integration and reorganization, proposed to follow prolonged periods of auditory deprivation, may show differential effects in these two populations.23, 24

The results of our first set of analyses revealed that WM assessed visually using measures of digit span, object span, and symbol span did not correlate strongly with performance on two sentence recognition tasks. Although auditory measures of WM have demonstrated relations to speech and language development in pediatric CI users,7, 8, 9 and there is some evidence that WM assessed auditorily correlates with speech recognition performance in adult CI users,10 the current study provides additional evidence that when assessed using visual tasks, WM is not strongly correlated with speech recognition performance. There are at least two possible explanations for this lack of a relation between these measures of WM and speech recognition. First, it could simply be that these relatively simple span measures do not sufficiently tap the neurocognitive WM processes that underlie recognition of spoken language. For example, a previous study identified relations between scores using a complex Reading Span task and speech recognition performance in adults with CIs.15 It could be argued that Reading Span, which can be considered a better measure of complex working memory capacity because it requires simultaneous storage and manipulation of verbal information, more appropriately taps the underlying neurocognitive processes required for making sense of degraded speech. A second explanation is that performance on modality-general measures of WM (i.e., visual) is not strongly tied to speech recognition, while modality-specific (i.e., auditory) WM tasks more specifically assess a listener's ability to store and manipulate auditory input. Thus, auditory-specific measures of WM processing would be more relevant to the recognition of degraded speech.

The second finding of this study using three visual measures of WM supported our predictions that object span and symbol span would be more difficult tasks than digit span, and that response times would also be slower. In both groups, evidence was provided to support these predictions, with symbol span being the most difficult and requiring the most cognitive processing, as evidenced by slower overall response times. Whether poorer performance on measures of object and symbol span relative to digit span is due to less effective encoding, maintenance, or retrieval operations cannot be determined from these data. Teasing apart the differences in these subprocesses of WM may motivate future studies.

The third finding of this study was that WM accuracy and response times were generally similar between CI and NH groups, who were equivalent in age and nonverbal IQ, with the exception of symbol span, on which CI users actually outperformed NH peers. This finding was unexpected and deserves further exploration. One potential explanation is that as a result of their prolonged auditory deprivation, CI users may have developed alternative compensatory visual strategies for encoding items in memory using visuospatial features. Cross-modal plasticity and cortical reorganization resulting from prolonged periods of auditory deprivation have received much attention in this population,23, 24 but their potential impact on behavioral measures such as those utilized here is largely unstudied. Additional research studies are currently underway to help elucidate this performance difference between groups.

Conclusions

Visual measures of WM are not strongly associated with recognition performance for spectrally degraded speech by experienced adult CI users or NH peers listening to noise-vocoded sentence stimuli. Accuracy and response times on visual tasks of WM were generally similar between groups on tasks of digit span and object span. However, CI users demonstrated better performance than NH peers on a task of symbol span, potentially reflecting alternative visual strategies used for encoding the visual symbols in WM, or more efficient verbal labeling of items for storage and processing in verbal WM.

Funding

Research reported in this publication was supported by the National Institutes of Health, National Institute on Deafness and Other Communication Disorders (NIDCD), Career Development Award 5K23DC015539-02 and the American Otological Society Clinician-Scientist Award to ACM, and NIDCD research grant DC-015257 to DBP. Normal-hearing participants were recruited through Research Match, which is funded by the NIH Clinical and Translational Science Award (CTSA) program, grants UL1TR000445 and 1U54RR032646-01.

Conflict of interest

The authors declare that they have no conflict of interest.

Acknowledgements

The authors would like to acknowledge Luis Hernandez, PhD, for providing testing materials used in this study, and Lauren Boyce, Natalie Safdar, Kara Vasil, and Taylor Wucinich for assistance in data collection and scoring.

Edited by Yi Fang

Footnotes

Peer review under responsibility of Chinese Medical Association.

References

- 1. Wingfield A., Tun P.A. Cognitive supports and cognitive constraints on comprehension of spoken language. J Am Acad Audiol. 2007;18:548–558. doi: 10.3766/jaaa.18.7.3. http://www.ncbi.nlm.nih.gov/pubmed/18236643

- 2. Akeroyd M.A. Are individual differences in speech reception related to individual differences in cognitive ability? A survey of twenty experimental studies with normal and hearing-impaired adults. Int J Audiol. 2008;47(suppl 2):S53–S71. doi: 10.1080/14992020802301142. http://www.ncbi.nlm.nih.gov/pubmed/19012113

- 3. Pichora-Fuller M.K., Souza P.E. Effects of aging on auditory processing of speech. Int J Audiol. 2003;42(suppl 2):2S11–2S16. http://www.ncbi.nlm.nih.gov/pubmed/12918623

- 4. Baddeley A. Working memory. Science. 1992;255:556–559. doi: 10.1126/science.1736359.

- 5. Daneman M., Carpenter P.A. Individual differences in working memory and reading. J Verb Learn Verb Behav. 1980;19:450–466.

- 6. Daneman M., Hannon B. What do working memory span tasks like reading span really measure? In: Osaka N., Logie R.H., D'Esposito M., editors. The Cognitive Neuroscience of Working Memory. Oxford University Press; New York, NY: 2007. pp. 21–42.

- 7. Pisoni D.B., Cleary M. Measures of working memory span and verbal rehearsal speed in deaf children after cochlear implantation. Ear Hear. 2003;24:106S–120S. doi: 10.1097/01.AUD.0000051692.05140.8E. http://www.ncbi.nlm.nih.gov/pubmed/12612485

- 8. Pisoni D.B., Geers A.E. Working memory in deaf children with cochlear implants: correlations between digit span and measures of spoken language processing. Ann Otol Rhinol Laryngol Suppl. 2000;185:92–93. doi: 10.1177/0003489400109s1240. http://www.ncbi.nlm.nih.gov/pubmed/11141023

- 9. Harris M.S., Kronenberger W.G., Gao S., Hoen H.M., Miyamoto R.T., Pisoni D.B. Verbal short-term memory development and spoken language outcomes in deaf children with cochlear implants. Ear Hear. 2013;34:179–192. doi: 10.1097/AUD.0b013e318269ce50. http://www.ncbi.nlm.nih.gov/pubmed/23000801

- 10. Tao D., Deng R., Jiang Y., Galvin J.J., Fu Q.J., Chen B. Contribution of auditory working memory to speech understanding in mandarin-speaking cochlear implant users. PLoS One. 2014;9. doi: 10.1371/journal.pone.0099096. http://www.ncbi.nlm.nih.gov/pubmed/24921934

- 11. Moberly A.C., Harris M.S., Boyce L., Nittrouer S. Speech recognition in adults with cochlear implants: the effects of working memory, phonological sensitivity, and aging. J Speech Lang Hear Res. 2017;60:1046–1061. doi: 10.1044/2016_JSLHR-H-16-0119. http://www.ncbi.nlm.nih.gov/pubmed/28384805

- 12. DeCaro R., Peelle J.E., Grossman M., Wingfield A. The two sides of sensory-cognitive interactions: effects of age, hearing acuity, and working memory span on sentence comprehension. Front Psychol. 2016;7:236. doi: 10.3389/fpsyg.2016.00236. http://www.ncbi.nlm.nih.gov/pubmed/26973557

- 13. Wechsler D. Manual for the Wechsler Intelligence Scale for Children (WISC-III). Psychological Corporation; San Antonio, TX: 1991.

- 14. Lyxell B., Andersson J., Andersson U., Arlinger S., Bredberg G., Harder H. Phonological representation and speech understanding with cochlear implants in deafened adults. Scand J Psychol. 1998;39:175–179. doi: 10.1111/1467-9450.393075. http://www.ncbi.nlm.nih.gov/pubmed/9800533

- 15. Lyxell B., Andersson U., Borg E., Ohlsson I.S. Working-memory capacity and phonological processing in deafened adults and individuals with a severe hearing impairment. Int J Audiol. 2003;42(suppl 1):S86–S89. doi: 10.3109/14992020309074628. http://www.ncbi.nlm.nih.gov/pubmed/12918614

- 16. Moberly A.C., Houston D.M., Castellanos I. Non-auditory neurocognitive skills contribute to speech recognition in adults with cochlear implants. Laryngoscope Invest Otolaryngol. 2016;1:154–162. doi: 10.1002/lio2.38. http://www.ncbi.nlm.nih.gov/pubmed/28660253

- 17. Roid G.H., Miller L.J., Pomplun M. Leiter International Performance Scale (Leiter-3). Western Psychological Services; Los Angeles: 2013.

- 18. Folstein M.F., Folstein S.E., McHugh P.R. "Mini-mental state". A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res. 1975;12:189–198. doi: 10.1016/0022-3956(75)90026-6. http://www.ncbi.nlm.nih.gov/pubmed/1202204

- 19. Wilkinson G.S., Robertson G.J. Wide Range Achievement Test (WRAT4). Psychological Assessment Resources; Lutz: 2006.

- 20. Raven J.C. Raven's Progressive Matrices. Oxford Psychologists Press; Oxford: 1998.

- 21. IEEE. IEEE recommended practice for speech quality measurements. IEEE Rep. 1969;297.

- 22. Gilbert J.L., Tamati T.N., Pisoni D.B. Development, reliability, and validity of PRESTO: a new high-variability sentence recognition test. J Am Acad Audiol. 2013;24:26–36. doi: 10.3766/jaaa.24.1.4. http://www.ncbi.nlm.nih.gov/pubmed/23231814

- 23. Glick H., Sharma A. Cross-modal plasticity in developmental and age-related hearing loss: clinical implications. Hear Res. 2017;343:191–201. doi: 10.1016/j.heares.2016.08.012. http://www.ncbi.nlm.nih.gov/pubmed/27613397

- 24. Sharma A., Glick H. Cross-modal re-organization in clinical populations with hearing loss. Brain Sci. 2016;6. doi: 10.3390/brainsci6010004. http://www.ncbi.nlm.nih.gov/pubmed/26821049