Abstract

This study evaluated spatial release from masking (SRM) in 2- to 3-year-old children who are deaf and were implanted with bilateral cochlear implants (BiCIs), and in age-matched normal-hearing (NH) toddlers. Here, we examined whether early activation of bilateral hearing has the potential to promote SRM that is similar to that of age-matched NH children. Listeners were 13 NH toddlers and 13 toddlers with BiCIs, ages 27 to 36 months. Speech reception thresholds (SRTs) were measured for target speech in front (0°) and for competitors that were either Colocated in front (0°) or Separated toward the right (+90°). SRM was computed as the difference between SRTs in the Colocated condition and SRTs in the Separated (asymmetrical) condition. Results show that SRTs were higher in the BiCI than NH group in all conditions. Both groups had higher SRTs in the Colocated and Separated conditions compared with Quiet, indicating masking. SRM was significant only in the NH group. In the BiCI group, the group effect of SRM was not significant, likely limited by the small sample size; however, all but two children had SRM values within the NH range. This work shows that to some extent, the ability to use spatial cues for source segregation develops by age 2 to 3 in NH children and is attainable in most of the children in the BiCI group. There is potential for the paradigm used here to be used in clinical settings to evaluate outcomes of bilateral hearing in very young children.

Keywords: bilateral cochlear implants, children, unmasking

Introduction

Young children typically spend numerous hours every day in noisy environments, where complex arrays of sounds co-occur, varying in content and direction. To perform well in complex auditory environments, children benefit from being able to segregate multiple sound sources, to make sense of the “what” and “where” aspects of the auditory environment. This subject has received notable attention in the context of evaluating the potential benefits of bilateral versus unilateral cochlear implantation (e.g., van Wieringen & Wouters, 2015; for review, see Litovsky, Goupell, Misurelli, & Kan, 2017) and to some extent also in evaluating outcomes in children who receive two cochlear implants (CIs) simultaneously versus sequentially (e.g., Chadha, Papsin, Jiwani, & Gordon, 2011; Cullington et al., 2017). Given that a growing number of young children with significant bilateral hearing loss are receiving bilateral cochlear implants (BiCIs), there is impetus to understand whether BiCIs promote spatial hearing, namely, sound localization and segregation of target sounds from background noise (Litovsky & Gordon, 2016). There is growing evidence that children who are bilaterally implanted can localize sounds significantly better with BiCIs than with a unilateral CI (Cullington et al., 2017; Grieco-Calub & Litovsky, 2010, 2012; Reeder, Firszt, Cadieux, & Strube, 2017) and that experience with BiCIs promotes improvement in sound localization skills (Zheng, Godar, & Litovsky, 2015).

The present study aimed to measure an aspect of spatial hearing known as spatial release from masking (SRM): improved speech understanding when target speech is spatially separated from competing sounds, relative to when the target and competing sounds are colocated. SRM has been studied extensively in normal-hearing (NH) adults (Bronkhorst & Plomp, 1988; Drullman & Bronkhorst, 2000; Festen & Plomp, 1990; Freyman, Helfer, McCall, & Clifton, 1999; Jones & Litovsky, 2011; Plomp, 1976; Yost, Dye, & Sheft, 1996). Effect sizes for SRM depend on numerous factors, including the task, stimuli, number of competing sounds and their content, as well as similarity in voices producing target and competing speech (for review, see Litovsky et al., 2017); SRM can be as small as a few dB or as large as 15 to 20 dB (Bronkhorst, 2000, 2015; Jones & Litovsky, 2011).

Studies that aim to measure SRM in children have also been conducted with varying tasks and competing sounds (for review, see Litovsky et al., 2017). In NH children, target stimuli have been single words or sentences, competing stimuli have consisted of speech or noise, and response methods vary as well. Overall, depending on the exact study, SRM can be adult-like by age 4 to 6 years (Garadat & Litovsky, 2007; Johnstone & Litovsky, 2006; Litovsky, 2005), 8 years (Cameron & Dillon, 2007), or immature earlier in childhood and adult-like by 12 years of age (Vaillancourt, Laroche, Giguere, & Soli, 2008). It is likely that SRM varies with task difficulty and reflects, in part, the ability of children to use spatial cues for source segregation (Werner, 2012).

To date, SRM has been studied in bilaterally implanted adults and in children who received their second CI by 4 to 5 years of age. Adult participants have often been postlingually deafened and had acoustic hearing early in life. SRM has nonetheless been shown to result from monaural head shadow, with little evidence for SRM due to binaural effects (e.g., Loizou et al., 2009; Schleich, Nopp, & D'haese, 2004; for review, see Litovsky et al., 2017). In the studies on bilaterally implanted children, most of the children were sequentially implanted; hence, the children first experienced several years of unilateral listening and subsequently transitioned to having bilateral hearing. Some studies report that SRM in these children has been smaller than SRM measured in NH children who are matched for chronological age or hearing age (Misurelli & Litovsky, 2012; Mok, Galvin, Dowell, & McKay, 2010; Murphy, Summerfield, O’Donoghue, & Moore, 2011), and recent work suggests that SRM in implanted children improves with additional listening experience (Killan, Killan, & Raine, 2015).

One possible explanation for smaller SRM in implanted children than NH children is that early unilateral hearing has the potential to promote asymmetries in auditory development, which can compromise responses to sounds from the opposite ear (Gordon, Jiwani, & Papsin, 2013; Jiwani, Papsin, & Gordon, 2016; Papsin & Gordon, 2008). Chadha et al. (2011) studied the issue of early unilateral hearing and its impact on spatial unmasking, in their case defined as the ability to detect a speech sound in the presence of noise. Spatial unmasking was greater in children who had been simultaneously implanted than in unilaterally implanted children, suggesting that early activation of bilateral hearing has the potential to enhance use of spatial cues for detecting the presence of speech sounds in noise. Although that study involved a detection task rather than word identification, it is nonetheless informative regarding the use of spatial cues in complex acoustic environments. The purpose of the current study was to investigate SRM in very young children, who receive BiCIs by 2 years of age, and age-matched toddlers who have NH.

CI devices in bilateral users provide access to bilateral hearing, but binaural stimulation such as that experienced by NH children is absent. Children who use BiCIs do not receive consistent binaural cues because the two devices work on their own time clocks and are programmed independently. BiCIs lack coordinated stimulation which means that the auditory system does not receive binaural cues with fidelity (for review, see Kan & Litovsky, 2015). The cues that are potentially available to BiCI users consist of a combination of monaural level cues, interaural differences in level, and possibly interaural differences in the timing of envelope cues for high-rate modulated signals (Dorman et al., 2014; Jones & Litovsky, 2011; Litovsky, 2011; van Hoesel & Litovsky, 2011; van Hoesel & Tyler, 2003). The consequence is that sound localization in bilaterally implanted children is generally poorer than in NH children (Grieco-Calub & Litovsky, 2010; Zheng et al., 2015). Regarding spatial segregation of target speech from competing sounds, BiCI users function well if they can use monaural head shadow, that is, if they can hear the target well with a good signal-to-noise ratio. However, children with BiCIs have not shown source segregation benefits that depend on binaural integration cues (such as binaural squelch) when presented with stimuli in the sound field through their clinical processors (Misurelli & Litovsky, 2015; Sheffield, Schuchman, & Bernstein, 2015; Van Deun, van Wieringen, & Wouters, 2010). Thus, the SRM measured to date has most likely resulted from monaural head shadow.

The test used here was designed to evaluate the children’s ability to identify words in a closed-set task, in quiet and in the presence of competitors that were either colocated with the target speech or spatially separated from the target. We were careful to select a task that tested word identification only for known words rather than testing vocabulary. This test is based on the “Mr. Potato Head” children’s game, which uses body parts and items, that is, using a vocabulary that was deemed appropriate for normally developing 2.5-year-olds. This test has previously been used in older children who have auditory neuropathy, and use a CI in one ear and a hearing aid in the opposite ear (Runge, Jensen, Friedland, Litovsky, & Tarima, 2011), but has otherwise only been used to test NH children from ages 3 to 5 years (Garadat & Litovsky, 2007). Here, we also compare our results from toddlers with results obtained in our prior work on SRM with older bilaterally implanted children who were tested using a similar task. We predicted that toddlers in the BiCI group would demonstrate similar SRM to their age-matched NH peers because of the early age at bilateral implantation.

Methods

Participants

Two groups of subjects were tested. One group (N = 13) ranged in age from 28 to 36 months (M = 32.38 ± 2.47) and had BiCIs; these subjects had a history of severe-to-profound sensorineural hearing loss, identified before the age of 11 months. Although some of the children had knowledge of sign language, all were primarily using auditory-verbal communication. Inclusionary criteria required that each subject was a native English speaker receiving speech-language therapy or auditory habilitation with a focus on listening, with at least 12 months of listening experience with their first CI at time of testing, but there was no minimum requirement for amount of bilateral CI use. Table 1 describes individual demographic information for each subject. The CIs were programmed by their clinical audiologist prior to arriving in the lab. During data collection, the setting that the child was accustomed to using in everyday listening, as indicated by parent report and audiologist recommendation, was used. Children were tested with both CIs activated. In this study, a unilateral condition was not included because this listening mode is not the one that the children use in their everyday listening.

Table 1.

Subject Demographics.

| Subject code | Sex | Age at test (mo.) | Etiology | Age at ID of HL (mo.) | Age of 1st CI (mo.) | Age of 2nd CI (mo.) | Hearing age (mo.) | Bilateral Exp. (mo.) | Device manufacturer; processor | Frequency of therapy (hr/week) |
|---|---|---|---|---|---|---|---|---|---|---|
| CIFQ | M | 28 | Unknown | Birth | 6 | 12 | 21 | 15 | Cochlear; N5 | 0.5 |
| CIFZ | M | 30 | Connexin | 1 | 8 | 8 | 22 | 22 | Cochlear; N5 | 3 |
| CIFJ | M | 30 | Connexin | Birth | 14 | 13 | 16 | 16 | Med-El; OPUS-2 | 1 |
| CIFK | M | 30 | Connexin | Birth | 14 | 14 | 16 | 16 | Med-El; OPUS-2 | 1 |
| CIFI | M | 31 | Connexin | 0.5 | 7 | 7 | 24 | 24 | Cochlear; N5 | 6 |
| CIGB | F | 32 | Connexin | Birth | 14 | 15 | 18 | 17 | AB; Neptune | 5 |
| CIFY | F | 34 | Unknown | Birth | 13 | 17 | 22 | 18 | Med-El; OPUS-2 | 4 |
| CIFX | M | 33 | Unknown | 11 | 15 | 16 | 19 | 17 | Med-El; OPUS-2 | 1 |
| CIFO | F | 34 | Unknown | Birth | 8 | 14 | 25 | 19 | Med-El; OPUS-2 | 2.5 |
| CIFN | M | 33 | Unknown | Birth | 13 | 13 | 21 | 21 | Med-El; OPUS-2 | 8 |
| CIGA | F | 34 | Unknown | Birth | 21 | 25 | 14 | 10 | Med-El; OPUS-2 | 1 |
| CIFU | F | 36 | Connexin | Birth | 12 | 15 | 24 | 21 | Med-El; OPUS-2 | 1 |
| CIFT | M | 36 | Connexin | Birth | 8 | 8 | 29 | 28 | Cochlear; N5 | 2.5 |
| Average | | 32.38 | | | 11.77 | 13.62 | 20.85 | 18.77 | | 2.8 |

Note. Hearing age = hearing age at time of testing; Bilateral Exp. = bilateral hearing experience at time of testing; ID = identification; CI = cochlear implant; HL = hearing loss.

A second group (N = 13) of subjects had NH and were matched by age (±2 months) and gender to the BiCI subjects. A t test revealed no significant difference in chronological age between the two groups, t(24) = −0.58, p = .57; however, hearing age significantly differed between the groups, t(24) = 8.15, p < .001, with the BiCI group having a significantly younger hearing age. For the BiCI group, hearing age is defined as the number of months since activation of at least one CI. For the NH group, hearing age is equivalent to chronological age. The NH subjects had hearing sensitivity within normal limits, as indicated by normal tympanograms and audiometric hearing screening at octave frequencies between 250 and 8000 Hz. None of the subjects had ear infections or known illness nor were they taking medication on the day of testing (as reported by the parent/guardian).

Stimuli

All stimuli for this study were digitized using a sampling rate of 44.1 kHz and stored on a computer as .wav files for presentation during testing. Target stimuli (Garadat & Litovsky, 2007) consisted of a closed set of 16 words within the receptive and expressive vocabulary of typically developing NH toddlers in the age range of 2.5 to 3.0 years. Stimuli were prerecorded using a male talker and set to equal root mean square levels.

For conditions with competing speech, stimuli consisted of sentences from the Harvard IEEE corpus (Rothauser, 1969), which were prerecorded using a female talker. Overlaying two recordings of the same voice created two-talker competitors, and the words were filtered to match the long-term average speech spectrum of the target words. Thirty such two-talker sentences were strung together into a repeating loop, and segments were randomly chosen and played during each trial in the conditions with competitors. The timing was such that the target words occurred approximately 1.5 s after the onset of the competing sentence. These stimuli are identical to those used in a prior experiment with 4- to 7-year-old children (Litovsky, 2005).

Apparatus and Task

Testing was conducted in a carpeted double-walled sound booth (2.75 m × 3.25 m) with reverberation time (T60) = 250 ms. Subjects were seated in the middle of the room with loudspeakers mounted on a stand at a distance of 1.2 m from the center of the subject’s head. The target was fed to one loudspeaker, and the competing stimuli were fed to a separate loudspeaker (thus using two audio channels of a laptop computer); the signals were amplified (Crown D-75 [Elkhart, Indiana, USA]) and played through separate loudspeakers (Cambridge Soundworks [Cambridge, MA, USA], Center/Surround IV). In the Colocated condition, the two loudspeakers were placed next to one another, centered on 0° azimuth, with a difference of 4° between the centers of the two loudspeakers. In the Separated condition, the loudspeaker with the target was centered at 0° azimuth, and the loudspeaker with the competing stimuli was placed at 90° azimuth. A computer monitor was placed directly below the loudspeaker at 0° azimuth. During testing, the child was engaged in a computerized “listening game” with visual stimuli (e.g., picture representations of the targets and reinforcing animations) presented from the center monitor. Visual stimuli presented from the center monitor helped to engage the child and maintain centering of the head during presentation of the auditory stimulus.

The testing paradigm was the same as has been previously used by Litovsky and colleagues (Garadat & Litovsky, 2007; Johnstone & Litovsky, 2006; Litovsky, 2005), involving a four-alternative, forced-choice task. On each trial, the auditory target was presented, after which four pictures appeared simultaneously on the screen. Three of the pictures were randomly selected, and one of the pictures matched the actual target. The pictures were arranged in a 2 × 2 grid of equal-size squares, and the square containing the target picture was randomly chosen from trial to trial. A set of rules was applied to eliminate the possibility of words with similar initial sounds occurring in the same interval (e.g., “hands” and “hat”). On each trial, a carrier phrase of “ready?” preceded the target presentation. Subjects were instructed to listen to the target word (e.g., “tell me what the man is saying”) and to identify the picture that matched the heard word. A verbal or pointing response was required to confirm subjects’ identification of the targets, and the answer was entered into the computer by an examiner. In the unlikely event that a child pointed to one picture but verbally reported another, the trial was repeated with a new stimulus. Feedback was provided for both correct and incorrect responses. Following each correct response, a brief musical clip was presented as reinforcement. Following incorrect responses, a phrase such as “that must have been difficult” or “let’s try a different one” was presented from the front speaker. In the presence of competing stimuli, subjects were told to “listen to the man’s voice, and ignore what the women (or ‘ladies’) are saying.” However, the experimenter did not confirm whether the child knew if the target was male and competitor was female.
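The picture selection described above can be illustrated with a minimal sketch. It is not the software used in the study: the word list contains only the five target words named in this article (the full 16-word set is not reproduced here), the function name `build_trial` is hypothetical, and "similar initial sounds" is approximated by comparing first letters, which is an assumption.

```python
import random

# Only the target words mentioned in this article; the full set had 16 words.
WORDS = ["hands", "hat", "mouth", "eyes", "feet"]

def build_trial(target, words=WORDS, rng=random):
    """Return four pictures (target plus three foils) in random grid order.

    Assumption: two words "sound similar" if they share a first letter.
    """
    used_initials = {target[0]}
    candidates = [w for w in words if w != target]
    rng.shuffle(candidates)            # foils are drawn at random

    foils = []
    for word in candidates:
        if word[0] not in used_initials:   # skip similar-sounding words
            foils.append(word)
            used_initials.add(word[0])
        if len(foils) == 3:
            break
    if len(foils) < 3:
        raise ValueError("not enough dissimilar foils for this target")

    grid = foils + [target]
    rng.shuffle(grid)                  # target position varies across trials
    return grid                        # read as a 2 x 2 grid, row by row

print(build_trial("hands"))
```

With the target "hands", the word "hat" can never appear as a foil, so the three foils are always "mouth", "eyes", and "feet" in some order.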

Familiarization

Prior to each test, the pictures of the target words were sent to the families via mail so that the parent could familiarize the toddler with the stimuli. Once in the testing room, toddlers underwent a familiarization procedure that lasted for 5 to 15 min. First, the target words, along with their associated pictures, were each presented such that they could be both seen and heard. Subsequently, a verification procedure was conducted to confirm that the toddler could correctly identify each target when the corresponding picture was displayed on the computer monitor in absence of sound. Words that were not correctly identified (i.e., if the target picture was shown to the child and the child did not recall the word associated with the picture) were eliminated from that subject’s target corpus. In a typical case, a toddler was familiar with all targets and could quickly associate the auditory stimulus with the matching picture. All NH toddlers could expressively label target words. The subjects with CIs ranged from being able to expressively label 16/16 target words (N = 7) to only being able to point with their hand to 8/16 (N = 2).

Speech Reception Threshold Estimation and SRM Computation

The target stimulus was always presented from the front (0° azimuth). For each subject, speech reception thresholds (SRTs) were obtained in three conditions: (a) Quiet, (b) Colocated with competitors also at 0° azimuth, and (c) Separated with competitors at 90° azimuth. In the Colocated and Separated conditions, the level of the competitor was fixed at 55 dB sound pressure level (SPL). As noted in Table 1, children with BiCIs were either implanted simultaneously or received the first CI in the right ear; placing the competitor on the right side therefore meant that, for sequentially implanted subjects, the ear with the first CI was closer to the competitor. During testing, subjects were instructed to attend to the target (male) talker at 0° azimuth and to ignore the female voices. In each of the three conditions, two SRTs were obtained, and the order of the conditions was randomized using a Latin square design. All NH subjects completed both repetitions of each condition. In the BiCI group, 12/13 subjects completed both repetitions of the Quiet condition, 10/13 subjects completed both repetitions of Colocated, and 9/13 of those completed the Separated condition. There are multiple factors that may have contributed to the lack of completion of all trials in each condition for the children in the BiCI group; this is explained further in the Discussion section. The average of the two SRTs from each condition was used in data analysis. Testing was completed in one session that lasted approximately 1 to 1.5 hr, including frequent breaks.

The test incorporated an adaptive tracking procedure to vary the level of the target signal, which was set to 60 dB SPL (+5 dB signal-to-noise ratio) at the beginning of each adaptive track. During the initial portion of the adaptive track, the target level was decreased by 8 dB following each correct response. After the first incorrect response, a modified adaptive three-down/one-up algorithm was used, with these rules: Following each reversal, the step size was halved, with the minimum step size set to 2 dB. If the same step size was used twice in a row in the same direction, the next step size was doubled in value (see Litovsky, 2005 for details on the hybrid adaptive algorithm). Testing was terminated following three reversals, and SRTs were estimated by taking the average of the level at which the last two reversals occurred.
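The tracking rules above can be sketched in code. This is one possible reading of the description, not the authors' implementation (see Litovsky, 2005, for the actual algorithm). Several details are assumptions: the first incorrect response is counted as the first reversal, the doubled step is capped at the initial 8 dB, and the listener is simulated by a hypothetical `respond` function.

```python
from statistics import mean

def run_adaptive_track(respond, start_level=60.0, initial_step=8.0,
                       min_step=2.0, n_reversals=3, max_trials=200):
    """Return an SRT estimate (dB SPL) from one simulated adaptive track.

    `respond(level)` is a hypothetical stand-in for the child: it returns
    True when the target presented at `level` dB SPL is identified correctly.
    """
    level, step = start_level, initial_step
    reversals = []        # levels at which the track changed direction
    last_direction = -1   # the track starts by descending
    correct_run = 0       # consecutive correct responses since the last move
    moves_at_step = 0     # same-direction moves made with the current step
    initial_phase = True

    for _ in range(max_trials):
        correct = respond(level)

        if initial_phase and correct:
            level -= initial_step        # drop 8 dB after each correct response
            continue
        initial_phase = False

        if correct:
            correct_run += 1
            if correct_run < 3:          # three-down: wait for 3 correct in a row
                continue
            correct_run, direction = 0, -1
        else:                            # one-up: any error raises the level
            correct_run, direction = 0, +1

        if direction != last_direction:  # direction change = reversal
            reversals.append(level)
            if len(reversals) == n_reversals:
                break
            step = max(step / 2, min_step)       # halve after each reversal
            moves_at_step = 0
        elif moves_at_step >= 2:                 # same step used twice in a row
            step = min(step * 2, initial_step)   # assumed cap at the initial step
            moves_at_step = 0

        level += direction * step
        moves_at_step += 1
        last_direction = direction

    if len(reversals) < 2:
        raise ValueError("track ended before two reversals were recorded")
    return mean(reversals[-2:])          # average of the last two reversals

# Idealized listener who is correct whenever the target is at or above 40 dB SPL
print(run_adaptive_track(lambda level: level >= 40))  # 39.0
```

For this idealized listener the track descends 60, 52, 44, 36, reverses at 36, 40, and 38 dB SPL, and the estimate is the mean of the last two reversals. Real responses are probabilistic, so actual tracks will be noisier than this deterministic example.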

SRM was computed for each listener by subtracting the average SRTs in the Separated condition from the average SRTs in the Colocated condition.
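As a small illustration of this computation, the sketch below averages the repeated SRTs within each condition and then takes the Colocated minus Separated difference. The function names and the example values are hypothetical and are not data from the study.

```python
from statistics import mean

def condition_srt(track_srts):
    """Average the SRTs (dB SPL) from the repeated tracks of one condition."""
    return mean(track_srts)

def spatial_release(colocated_srts, separated_srts):
    """SRM in dB: Colocated SRT minus Separated SRT (positive = benefit)."""
    return condition_srt(colocated_srts) - condition_srt(separated_srts)

# Hypothetical listener with two tracks per condition (illustrative values)
srm = spatial_release(colocated_srts=[54.0, 52.0], separated_srts=[48.0, 46.0])
print(srm)  # 6.0
```

A positive value means the threshold was lower (better) when the competitors were moved to the side; a value near zero or below means no benefit from spatial separation.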

Results

Speech Reception Thresholds

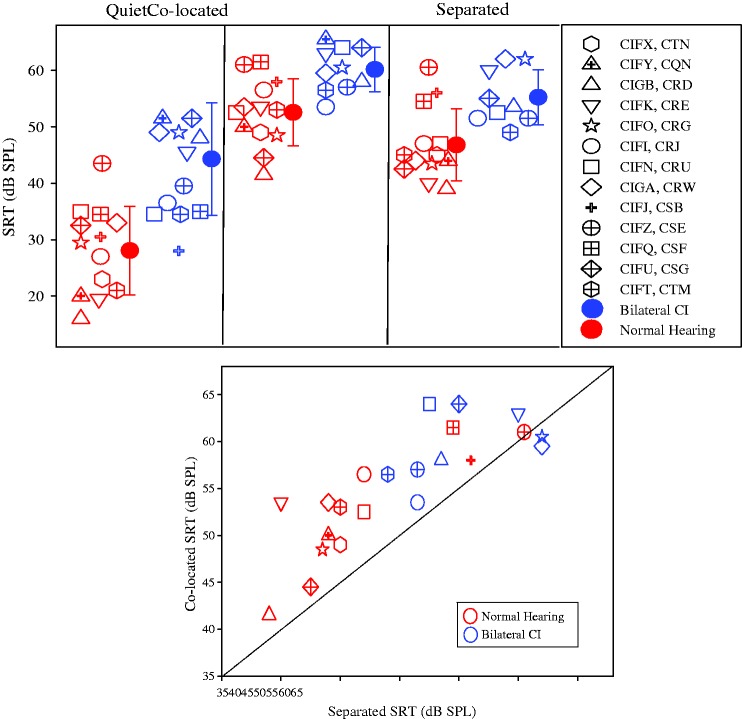

Figure 1 shows SRTs in the three conditions tested (Quiet, Colocated, Separated), for individual subjects in the two groups, along with group means (±SD) for each condition. In the NH group, mean SRTs (±SD) were the following: 28.5 dB (7.9) in Quiet, 52.8 dB (5.9) in the Colocated condition, and 46.9 dB (6.4) in the Separated condition. In the BiCI group, mean SRTs (±SD) were the following: 44.3 dB (10) in Quiet, 60.2 dB (3.4) in the Colocated condition, and 55.3 dB (5) in the Separated condition.

Figure 1.

(a) Speech reception thresholds (SRTs in dB SPL) are plotted such that data from each condition are shown in a different panel; from left to right, conditions are Quiet, Colocated, and Separated. Within each panel, SRTs are shown for all individual subjects who provided a data point, in open symbols, and group means (±SD) shown by the solid symbols. The NH group is represented by the gray symbols, and the BiCI group is represented by the black symbols. (b) SRTs are shown for the Colocated conditions as a function of SRTs for the Separated condition. Each data point corresponds to an individual listener, with subjects from the NH and BiCI groups shown in gray and black, respectively. The diagonal line represents the line of unity, that is, no SRM; data points falling above the diagonal line indicate SRM, that is, SRTs higher in the Colocated condition.

CI = cochlear implant; SPL = sound pressure level; NH = normal hearing; BiCI = bilateral cochlear implant; SRM = spatial release from masking.

A mixed-design analysis of variance (ANOVA) was conducted with one between-subjects factor (group: BiCI, NH) and one within-subjects factor (SRT: Quiet, Colocated, Separated). SRTs were analyzed only for listeners for whom SRTs could be obtained in all three conditions (NH, n = 13; BiCI, n = 9). There was a significant main effect of SRT, F(2, 40) = 170.86, p < .001, whereby SRTs in Quiet were the lowest, followed by SRTs in the Separated condition, and SRTs were highest in the Colocated condition. There was also a main effect of group, F(1, 20) = 18.75, p < .001, whereby SRTs were higher for the BiCI versus the NH group. Finally, there was a significant interaction of SRT × group, F(2, 40) = 97.19, p = .003. Due to the significant interaction, analyses were conducted to examine effects within the BiCI and NH groups individually.

A within-subject ANOVA was conducted for each group to investigate the effect of condition (three levels) on SRT. For the NH group, there was a main effect of condition, F(2, 24) = 148.76, p < .001. When further investigated with paired-sample t tests, using a Bonferroni correction for multiple comparisons (significance p < .0167), there were significant differences in the NH group between the following conditions: (a) Colocated and Separated (p < .001), (b) Quiet and Separated (p < .001), and (c) Quiet and Colocated (p < .001). For the BiCI group, there was also a main effect of condition, F(2, 16) = 47.04, p < .001. When further investigated with paired-sample t tests, using a Bonferroni correction for multiple comparisons (significance p < .0167), there were significant differences in the BiCI group between the following conditions: (a) Quiet and Colocated (p < .001) and (b) Quiet and Separated (p < .001). The difference between SRTs in the Colocated and Separated conditions for this group did not reach significance (p = .023). This finding suggests a marginal, but nonsignificant effect.
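The Bonferroni criterion used above can be made explicit with a short sketch: the family-wise alpha of .05 is divided by the three pairwise comparisons, giving the .0167 threshold. The function names are hypothetical, and the p values passed in are the ones reported in the text (with .0005 standing in for "p < .001").

```python
ALPHA = 0.05          # family-wise significance level
N_COMPARISONS = 3     # Quiet-Colocated, Quiet-Separated, Colocated-Separated

def bonferroni_threshold(alpha=ALPHA, n_comparisons=N_COMPARISONS):
    """Per-comparison significance threshold under a Bonferroni correction."""
    return alpha / n_comparisons

def is_significant(p_value):
    """True if a single comparison survives the corrected threshold."""
    return p_value < bonferroni_threshold()

print(round(bonferroni_threshold(), 4))  # 0.0167
print(is_significant(0.023))             # False: BiCI, Colocated vs. Separated
print(is_significant(0.0005))            # True: stand-in for p < .001
```

This shows why p = .023 is reported as nonsignificant here even though it would fall below an uncorrected .05 criterion.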

Figure 1(b) shows the relationship between SRTs in the Colocated condition and SRTs in the Separated condition for the two groups of subjects. The diagonal line indicates no difference in SRTs between the Colocated and Separated conditions. In general, the NH data are clustered toward the lower left of the graph. In contrast, the toddlers with BiCIs are clustered toward the upper right corner of the graph, which is another manner of showing the high SRTs (poorer performance) seen in Figure 1(a). Results from a linear regression analysis show that NH toddlers with lower SRTs in the Colocated condition also had lower SRTs in the Separated condition, F(1, 12) = 22.8, p = .001, suggesting that the amount of masking demonstrated by individual subjects with NH has consistent underlying mechanisms regardless of competitor location. No significant relationship between SRTs in the two conditions was found in the BiCI group. In addition, three toddlers with the highest SRTs (poorest performance) in the NH group, whose data overlapped most clearly with data from the toddlers with BiCIs, had some of the youngest chronological ages at the time of testing. This may indicate a possible developmental trend on the measures reported here.

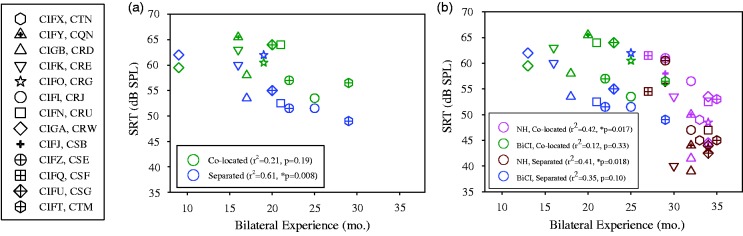

Figure 2(a) shows SRTs for the BiCI group as a function of number of months that the children had been exposed to bilateral hearing, for the Colocated and Separated conditions. These data were analyzed with regression analyses, revealing a significant negative relationship between SRTs in the Separated condition and bilateral listening experience, r² = .61, p = .008. There was no relationship between SRTs in the Colocated condition and bilateral listening experience. Figure 2(b) shows SRTs for both groups of children, as a function of hearing age. Regression analyses revealed that, for the NH group, hearing age was negatively correlated with SRTs in the Separated, r² = .41, p = .018, and Colocated, r² = .42, p = .017, conditions; that is, older children showed lower SRTs. However, there were no significant findings for either condition in the BiCI group.

Figure 2.

Individual SRTs are plotted. (a) Data from BiCI users are shown for Colocated (open) and Separated (filled) conditions. Data are plotted as a function of the bilateral hearing experience (in months). (b) Data from BiCI users (black outline) and NH listeners (gray outline) are shown for Colocated (open symbols) and Separated (filled symbols) conditions. Data are plotted as a function of the subjects’ hearing age (in months). The insets in each panel show results from the regression analyses.

SRTs = speech reception thresholds; BiCI = bilateral cochlear implant; NH = normal hearing.

It is possible that the difference in hearing age between the BiCI and NH groups (younger hearing age for the BiCI group) may have impacted the differences in SRTs between groups. To explore this idea, a repeated measures ANOVA was conducted, revealing a significant main effect of SRT (Colocated, Separated), F(1, 20) = 32.18, p < .001, with no SRT × Group interaction, and a between-subjects effect of group, F(1, 20) = 12.22, p = .002. That is, the BiCI group had higher SRTs in both conditions, which may be due to the differences in hearing age of the two groups. Therefore, hearing age was added as a covariate to examine whether these effects remained after controlling for hearing age. When controlling for hearing age, effects of SRT and group no longer remained significant. This suggests that development, specifically hearing age, does play a role in SRT.

Spatial Release From Masking

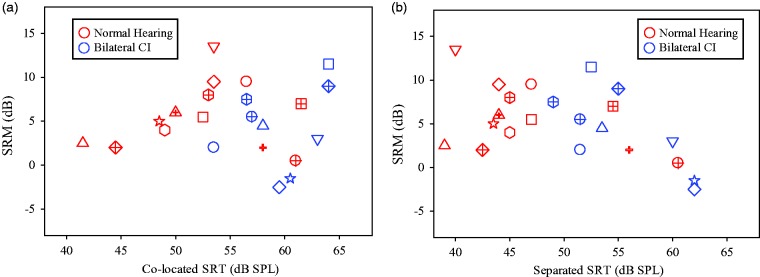

In the NH group, the mean SRM was 5.93 dB (±3.71), and in the BiCI group, the mean SRM was 4.28 dB (±4.72). The ranges were 0.5 dB to 13.5 dB and −2.5 dB to 11.5 dB in the NH and BiCI groups, respectively. There was no significant difference in SRM between the groups, t(20) = 0.80, p = .43. A regression analysis (see Figure 3) was used to explore the relationship between SRM and SRTs from each of the two conditions with competitors (Colocated or Separated) for the two groups of subjects. There was no significant relationship for either group of subjects between SRM and SRTs in the Colocated condition (Figure 3(a)), or between SRM and SRTs in the Separated condition (Figure 3(b)).

Figure 3.

Individual SRM data, in dB, are plotted as a function of SRTs in the Colocated (a) and Separated (b) conditions. Data from children with BiCIs and NH are shown in black and gray, respectively.

SRM = spatial release from masking; SPL = sound pressure level; CI = cochlear implant; SRTs = speech reception thresholds; BiCI = bilateral cochlear implant; NH = normal hearing.

Lastly, regression analyses were conducted to explore the effects of interimplant delay on SRTs and SRM. Results revealed no significant relationship between SRM or SRTs in the Colocated condition and the time between receiving the first and second CI. There was a significant relationship between interimplant delay and SRT in the Separated condition, r² = .56, p = .021, suggesting that SRTs in the Separated condition were lower for the children who had shorter delays between activation of their first and second CI; however, this result should be interpreted with caution given that five of the nine children included in the analyses received their CIs simultaneously, thus limiting the spread of interimplant delay.

Discussion

In this study, we asked whether toddlers who received BiCIs by 2 years of age would show similar SRTs and SRM as their NH age-matched peers. First, the BiCI group, on average, had higher (i.e., poorer) SRTs than the NH group in quiet and in the presence of competitors, regardless of whether the competitors were Colocated or Separated. Second, in both groups of subjects, there was evidence of masking, as shown by higher SRTs in conditions with competitors compared with the Quiet condition. Third, there was a negative relationship between SRTs in the Separated condition and bilateral experience (re: BiCI group), and between SRTs in the Separated condition and hearing age (re: NH group), suggesting that bilateral hearing experience is important for improving SRTs in conditions where spatial hearing is necessary. Fourth, when considering hearing age, there were no significant relationships for the BiCI group; however, the NH group showed a negative relationship between hearing age and SRTs in both the Separated and Colocated conditions; this result may be impacted by the difference in hearing age between the two groups. Fifth, significant SRM was seen only in the NH group. In the BiCI group, there were several children whose SRM values fell in the NH range, but the group, as a whole, did not show a significant effect.

Speech Reception Thresholds

The difference in SRTs between NH and BiCI groups may be due to a variety of factors, including the degraded input that a CI provides and the difference in hearing ages between the two groups. The signal provided through a CI has degraded qualities, to which many CI users, especially children who have had limited to no access to acoustic hearing, become accustomed. These individuals are often mainstreamed in classroom environments and are able to access sound and understand speech in quiet environments. However, the effect of the degraded signal on speech understanding is likely to have impacted performance of the BiCI toddlers tested here, as has been shown in other studies comparing children with NH and with CIs (e.g., Caldwell & Nittrouer, 2013; Misurelli & Litovsky, 2012). It may also be that these children who were implanted at a young age, many simultaneously, will continue to improve on tasks requiring spatial hearing as their hearing age increases.

The NH subjects could also be better at using “top-down” processing to identify the target word. For instance, they may be better at using phonological and lexical knowledge to guess which of the four options was the target in instances when they heard only a portion of the word. In this study, given that the vowel is the most salient and loudest portion of the target word, a listener who heard the “ee” sound and nothing else during one trial might be able to eliminate “mouth,” “eyes,” and “hands” as possible responses and by process of elimination choose “feet.” Central processing, including auditory, linguistic, and cognitive functions, determines the ability of subjects to implement expectations, linguistic knowledge, and context to perceptually restore inaudible portions of speech. Such top-down repair or restoration may enhance speech understanding in noisy environments, but the extent to which children in this study used such context effects is unclear. Buss, Leibold, and Hall (2016) investigated release from masking in children as young as 5 years of age and found that with a female target and female masker, children used context less than adults. The effect may have been limited in that study because same-sex target and masker stimuli may have invoked a high level of informational masking, but the relationship between contextual restoration and informational masking is not known. A recent study (Baskent, 2012) investigated the ability of young NH children to listen to degraded speech through a noise vocoder and found that linguistic restoration, or “top-down processing,” was observed at spectral resolutions of both 16 and 32 channels, but not at lower spectral resolutions (four or eight channels). Overall, the author interpreted these findings to mean that degradation in bottom-up signals alone (such as those in CIs) may reduce the top-down restoration of speech. In addition, although there was no statistically significant difference in chronological age between the two groups, the difference in hearing age between the groups may have slightly impacted the results. This study did not include children with NH who had a hearing age of less than 27 months, and therefore, we cannot isolate the effects of development prior to this age. These factors may account for a portion of the differences in the levels of SRTs for children with CIs in the present study as well as previous studies (Cullington et al., 2017; Garadat & Litovsky, 2007; Litovsky, 2005; Litovsky, Johnstone, & Godar, 2006; Misurelli & Litovsky, 2012; Murphy et al., 2011).

The variability in SRTs seen here is consistent with prior research in CI users and more generally with research in auditory development. Identifying the factors that contribute to variability is a ubiquitous issue (Eisenberg et al., 2016; Wilson & Dorman, 2008). In the present study, we considered the number of months since activation of hearing (i.e., hearing age; in the BiCI group, we considered number of months since hearing with at least one CI) as well as bilateral experience. The negative relationship between SRTs in the BiCI group in the Separated condition and bilateral experience suggests that more bilateral hearing experience may facilitate the ability to select the target word in the presence of competing speech when spatial cues are available for segregation. The current findings are consistent with prior reports in older bilaterally implanted children, noting a general improvement in speech discrimination scores with experience (Killan et al., 2015; Peters, Litovsky, Parkinson, & Lake, 2007; Sparreboom, Snik, & Mylanus, 2011; Strøm-Roum, Laurent, & Wie, 2012).

The NH group showed an effect of hearing age regardless of whether the spatial cues were available, suggesting a more generalized effect of hearing experience on the ability to select the target word in the presence of competing sounds. An interesting issue that the current study could not determine is whether individual-driven factors, such as cognition, attention, and memory, also affect this ability. In the NH group, hearing age cannot be separated from chronological age, suggesting a generalized developmental phenomenon, consistent with previous research showing protracted maturation of thresholds, including detecting changes in intensity (Buss, Hall, & Grose, 2009), frequency discrimination (Moore, Cowan, Riley, Edmondson-Jones, & Ferguson, 2011), and detecting tones in the context of complex and unpredictable tone bursts (Leibold & Neff, 2007; Lutfi, Kistler, Callahan, & Wightman, 2003; Oh, Wightman, & Lutfi, 2001).

SRTs obtained here can be compared most directly with results reported by Garadat and Litovsky (2007), where NH children from ages 3 to 4 and 4 to 5 years were tested using an identical paradigm. The children from ages 4 to 5 years had the lowest SRTs (averages approximately 18 dB, 50 dB, and 40 dB for Quiet, Colocated, and Separated conditions, respectively). In NH children of ages 3 to 4 years, SRTs averaged approximately 27 dB, 57 dB, and 48 dB for Quiet, Colocated, and Separated conditions, respectively. The NH toddlers tested here demonstrated similar SRTs to the 3- to 4-year-olds (averages of 28.5 dB, 52.8 dB, and 46.9 dB for Quiet, Colocated, and Separated conditions, respectively).
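As a rough illustration of how these group means relate to masking and SRM, the sketch below applies the definition used in this study (SRM as the Colocated SRT minus the Separated SRT) to the averages quoted above. It assumes that the toddler values are in dB like the others, that masking can be summarized as the Colocated SRT minus the Quiet SRT, and that differences of group means approximate the mean of individual differences, which holds only when the same children contribute to every condition. The names `MEAN_SRT_DB`, `masking_db`, `srm_db`, and `summarize` are hypothetical and are not taken from the study's analysis.

```python
# Approximate group-mean SRTs (dB) quoted in the text above.
# The two older groups use the rounded values attributed to
# Garadat and Litovsky (2007); they are not raw data.
MEAN_SRT_DB = {
    "NH toddlers (present study)": {"quiet": 28.5, "colocated": 52.8, "separated": 46.9},
    "NH 3-4 years": {"quiet": 27.0, "colocated": 57.0, "separated": 48.0},
    "NH 4-5 years": {"quiet": 18.0, "colocated": 50.0, "separated": 40.0},
}


def masking_db(srt):
    """Masking (assumed summary): Colocated SRT minus Quiet SRT."""
    return srt["colocated"] - srt["quiet"]


def srm_db(srt):
    """Spatial release from masking: Colocated SRT minus Separated SRT."""
    return srt["colocated"] - srt["separated"]


def summarize(table):
    """Return {group: (masking, SRM)} in dB, rounded to one decimal place."""
    return {
        group: (round(masking_db(srt), 1), round(srm_db(srt), 1))
        for group, srt in table.items()
    }


if __name__ == "__main__":
    for group, (masking, srm) in summarize(MEAN_SRT_DB).items():
        print(f"{group}: masking = {masking} dB, SRM = {srm} dB")
```

Because the inputs are rounded group averages, the resulting figures are only indicative and should not be read as the SRM values reported in the Results.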

The findings on SRTs in BiCI toddlers reported here are not directly comparable with data in older children with BiCIs tested with the same task because the stimuli used for each group of children were slightly different. The SRTs measured here were, on average, higher than SRTs reported in older children, using a similar task, who are fitted with BiCIs (Misurelli & Litovsky, 2012), but the reason for the difference is not easy to determine. The speech targets alone might account for this difference, as shown by Garadat and Litovsky (2007). SRTs are higher with the speech corpus used here (target words = objects and names of body parts) than the corpus used by Misurelli and Litovsky (2012, 2015; target words = spondees). There is growing evidence to suggest that experience with CIs is an important factor in determining improvement in many aspects of speech understanding and discrimination (e.g., Eisenberg et al., 2016; Killan et al., 2015; Niparko et al., 2010). In addition to experience with the stimulation provided by the CI, other factors likely contribute to the improvement in SRTs with age, including continued maturation throughout childhood of nonsensory, central auditory mechanisms and nonauditory factors involving memory and attention (Leibold & Bonino, 2009; Lutfi et al., 2003; McCreery, Spratford, Kirby, & Brennan, 2017).

A final note regarding SRTs in the present study relates to the fact that, of the four toddlers with BiCIs who could not be tested in the conditions with competitors, three had measurable SRTs in Quiet. Anecdotally, for these three toddlers, behavioral changes were noted following introduction of the competitors. The background noise led to a drastic change in their behavior and compliance with the task such that they would not participate in the task or provide a response. One toddler (CIFQ) could not be tested in any condition. He successfully participated in the familiarization task but was fatigued and removed his CIs when asked to provide responses. Although attrition is common in studies with toddlers, the fact that all NH subjects were able to complete the task suggests that there were other factors that caused 4/13 toddlers with BiCIs to be unable to complete the task. It is possible that the listening conditions were too confusing or difficult for them and that their limited exposure to complex auditory environments caused them to “shut down.” It is also possible that listening with the degraded input provided by CIs, and lower hearing age than the NH group, caused these toddlers to fatigue at a faster rate than their NH peers. Further research using reaction time and matching toddlers by bilateral listening age instead of chronological age or hearing age (as defined in this study) is important to help tease apart these issues and to aid in determining the trajectory of development.

Spatial Release From Masking

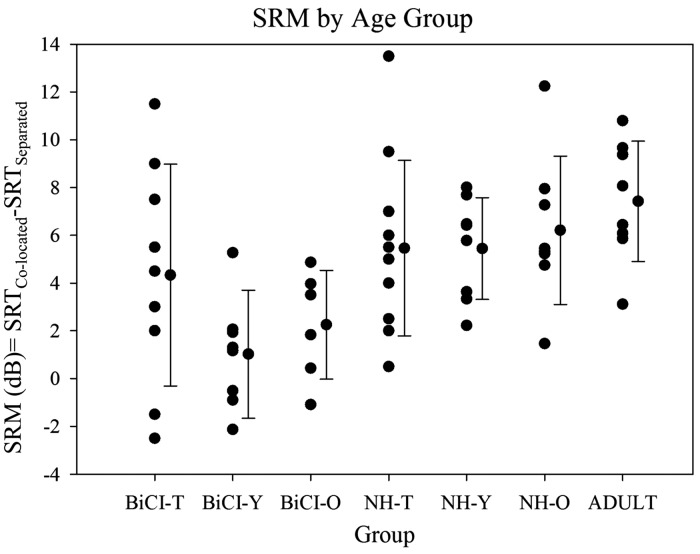

There is growing evidence showing that spatial separation of target and competitors results in improved ability to understand speech, in children as young as 3 to 4 years old (Ching, van Wanrooy, Dillon, & Carter, 2011; Garadat & Litovsky, 2007; Johnstone & Litovsky, 2006; Litovsky, 2005). The present study extended that age range down to 2 to 3 years. Figure 4 shows mean SRM and compares performance with previously published research (Misurelli & Litovsky, 2012) using identical listening conditions and loudspeaker configurations, but different target stimuli. The NH group in the present study shows approximately the same amount of SRM, suggesting that NH toddlers have the capacity to use spatial cues for source segregation in a manner that is similar to that seen in older children and adults when using one-word target stimuli. It should be noted that SRM may differ between groups when more complex stimuli are used.

Figure 4.

SRM values are plotted as a function of child group, from the present study and from previously published data (Misurelli & Litovsky, 2012). Results from the present study are labeled as BiCI-T (BiCI group who are toddlers) and NH-T (NH group who are toddlers). Results from a previous publication are labeled as BiCI-Y (“young,” ages 4–6); BiCI-O (“older,” ages 7–9); NH-Y (“young,” ages 4–6); NH-O (“older,” ages 7–9); and Adult. Individual data points are shown for each group, along with group means (±SD).

SRM = spatial release from masking; SRTs = speech reception thresholds; BiCI = bilateral cochlear implant; NH = normal hearing.

Unlike prior studies (e.g., Misurelli & Litovsky, 2012, 2015), in which SRM in children with BiCIs was smaller than in NH age-matched children, here we observed that SRM was not significantly different between the two groups of children. This novel finding suggests that some toddlers who are fitted with BiCIs by age 18 months may have the capacity to use spatial cues for source segregation similarly to their NH age-matched counterparts. In prior studies by Misurelli and Litovsky, children received their second CI by 4 to 5 years of age, nearly all were sequentially implanted, and unilateral listening experience preceded bilateral activation.

There is evidence to suggest that early unilateral experience can promote asymmetries in auditory development and can thus diminish the ability of the auditory system to respond properly to contralateral stimulation (Gordon, Henkin, & Kral, 2015). The issue of asymmetrical hearing has been explored in the context of spatial unmasking for detecting speech in the presence of competing sounds (Chadha et al., 2011). In that study, the ability to benefit from spatial cues to detect speech in children who were bilaterally implanted was better if the two CIs were implanted simultaneously than if the two CIs were received sequentially. Similarly, Killan et al. (2015) measured SRTs and SRM in children who received the two CIs sequentially, between 3 and 10 years of age. SRTs in that study were reported to improve with bilateral experience; the interesting component is that SRTs were measured separately for each ear, and with additional experience, the second-implanted ear improved, leading ultimately to a more symmetrical hearing status. Most recently, in a large-scale longitudinal study, Cullington et al. (2017) found that children who received their second CI sooner had greater improvement in their ability to understand speech in noise than those with a longer period between their first and second CI.

The present study did not test for asymmetries, and the competing sound was always placed closer to the side of the first-implanted ear (or toward the right). It is possible that asymmetries in audibility or speech intelligibility may have affected the SRM values that were measured here. For example, SRM in at least some of the toddlers with BiCIs might have been larger if the competing sound were placed toward the second-implanted ear, as was reported by Litovsky et al. (2006). Thus, a caveat in the present study is that potential effects of asymmetries in SRM were not investigated. Finally, the issue of similarity between children with BiCIs and NH children is important. Ching, Dillon, Leigh, and Cupples (2017) measured SRM in 84 children with CIs at 5 years of age, 56 of whom wore BiCIs; they reported that while overall SRTs were higher, SRM was equivalent to NH peers. In the current study with children 2.5 years of age, while there was overlap in the SRM observed in children with BiCIs and children with NH, it is premature to conclude that at this young age, early bilateral listening experience is responsible for normal development of bilateral hearing. First, the effects observed here are not necessarily due to binaural hearing, and it is well known that bilateral CIs do a poor job of preserving interaural timing cues (e.g., Kan & Litovsky, 2015). Second, it may be that developmental effects unrelated to bilateral hearing per se affect the children’s ability to use spatial cues that are provided by the speech processors; hence, these same children when tested at later ages might not display the same NH-like performance. Finally, because stimuli were presented in free field through clinical processors, little can be said about actual use of binaural cues. In older children with BiCIs, there is evidence that binaural unmasking occurs when research processors are used to deliver stimuli to single pairs of synchronized electrodes (Todd, Goupell, & Litovsky, 2016; Van Deun et al., 2010). Future work could benefit from a more systematic evaluation of these issues.

Conclusion

Little is known about the ability of young children who receive BiCIs during the toddler years (before age 2) to use spatial cues for segregating target speech from background maskers. Here, we used an age-appropriate task to evaluate SRM in 2- to 3-year-old children who use BiCIs and compared performance with age-matched NH toddlers. SRTs were higher in the BiCI than NH group in all conditions. All but two children with BiCIs had SRM values within the NH range. This work shows that to some extent, the ability to use spatial cues for source segregation develops by age 2 to 3 years in NH children and is attainable in most of the children in the BiCI group. Many issues remain to be better understood regarding the impact of early deprivation as well as early activation. The fact that these children do not hear binaural cues with fidelity due to the use of unsynchronized CI processors may limit their binaural unmasking abilities; however, early exposure to sound in both ears may activate neural circuits and symmetry of auditory function required for overall best performance in noisy environments.

Acknowledgments

The authors are grateful to the children and their families for their time and dedication to this study. The authors also thank Erica Ehlers, Shelly Godar, and Kayla Kristensen for help in data collection, and McKenzie Klein for her help with edits to the tables and graphs.

Declaration of Conflicting Interests

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This research is supported by the National Institute on Deafness and Other Communication Disorders of the National Institutes of Health under Grant No. R01DC008365 (R. Y. L., PI) and in part by a core grant to the Waisman Center from the Eunice Kennedy Shriver National Institute of Child Health and Human Development (P30 HD03352).

References

- Baskent D. (2012) Effect of speech degradation on top-down repair: Phonemic restoration with simulations of cochlear implants and combined electric-acoustic stimulation. Journal of the Association for Research in Otolaryngology 13: 683–692. doi: 10.1007/s10162-012-0334-3.

- Bronkhorst A. W. (2000) The cocktail party phenomenon: A review of research on speech intelligibility in multiple-talker conditions. Acta Acustica united with Acustica 86: 117–128.

- Bronkhorst A. W. (2015) The cocktail-party problem revisited: Early processing and selection of multi-talker speech. Attention, Perception, & Psychophysics 77: 1465–1487. doi: 10.3758/s13414-015-0882-9.

- Bronkhorst A. W., Plomp R. (1988) The effect of head-induced interaural time and level differences on speech intelligibility in noise. Journal of the Acoustical Society of America 83: 1508–1516.

- Buss E., Hall J. W., III, Grose J. H. (2009) Psychometric functions for pure tone intensity discrimination: Slope differences in school-aged children and adults. Journal of the Acoustical Society of America 125: 1050–1058.

- Buss E., Leibold L. J., Hall J. W., III (2016) Effect of response context and masker type on word recognition in school-age children and adults. Journal of the Acoustical Society of America 140: 968–977. doi: 10.1121/1.3050273.

- Caldwell A., Nittrouer S. (2013) Speech perception in noise by children with cochlear implants. Journal of Speech, Language, and Hearing Research 56: 13–30. doi: 10.1044/1092-4388(2012/11-0338).

- Cameron S., Dillon H. (2007) The listening in spatialized noise-sentences test (LISN-S): Test-retest reliability study. International Journal of Audiology 46: 145–153.

- Chadha N. K., Papsin B. C., Jiwani S., Gordon K. A. (2011) Speech detection in noise and spatial unmasking in children with simultaneous versus sequential bilateral cochlear implants. Otology & Neurotology 32: 1057–1064. doi: 10.1097/MAO.0b013e3182267de7.

- Ching T. Y., Dillon H., Leigh G., Cupples L. (2017) Learning from the Longitudinal Outcomes of Children with Hearing Impairment (LOCHI) study: Summary of 5-year findings and implications. International Journal of Audiology 11: 1–7. doi: 10.1080/14992027.

- Ching T. Y., van Wanrooy E., Dillon H., Carter L. (2011) Spatial release from masking in normal-hearing children and children who use hearing aids. Journal of the Acoustical Society of America 129: 368–375. doi: 10.1121/1.3523295.

- Cullington H., Bele D., Brinton J., Cooper S., Daft M., Harding J., Wilson K. (2017) United Kingdom national paediatric bilateral project: Demographics and results of localisation and speech perception testing. Cochlear Implants International 18: 2–22. doi: 10.1080/14670100.2016.1265189.

- Dorman M. F., Loiselle L., Stohl J., Yost W. A., Spahr A., Brown C., Cook S. (2014) Interaural level differences and sound source localization for bilateral cochlear implant patients. Ear and Hearing 35: 633. doi: 10.1097/AUD.0000000000000057.

- Drullman R., Bronkhorst A. W. (2000) Multichannel speech intelligibility and talker recognition using monaural, binaural, and three-dimensional auditory presentation. Journal of the Acoustical Society of America 107: 2224–2235.

- Eisenberg L. S., Fisher L. M., Johnson K. C., Ganguly D. H., Grace T., Niparko J. K., CDaCI Investigative Team (2016) Sentence recognition in quiet and noise by pediatric cochlear implant users: Relationships to spoken language. Otology & Neurotology 37: e75–e81. doi: 10.1097/MAO.0000000000000910.

- Festen J. M., Plomp R. (1990) Effects of fluctuating noise and interfering speech on the speech-reception threshold for impaired and normal hearing. Journal of the Acoustical Society of America 88: 1725–1736.

- Freyman R. L., Helfer K. S., McCall D. D., Clifton R. K. (1999) The role of perceived spatial separation in the unmasking of speech. Journal of the Acoustical Society of America 106: 3578–3588.

- Garadat S. N., Litovsky R. Y. (2007) Speech intelligibility in free field: Spatial unmasking in preschool children. Journal of the Acoustical Society of America 121: 1047–1055.

- Gordon K., Henkin Y., Kral A. (2015) Asymmetric hearing during development: The aural preference syndrome and treatment options. Pediatrics 136: 141–153. doi: 10.1542/peds.2014-3520.

- Gordon K. A., Jiwani S., Papsin B. C. (2013) Benefits and detriments of unilateral cochlear implant use on bilateral auditory development in children who are deaf. Frontiers in Psychology 4: 719. doi: 10.3389/fpsyg.2013.00719.

- Grieco-Calub T. M., Litovsky R. Y. (2010) Sound localization skills in children who use bilateral cochlear implants and in children with normal acoustic hearing. Ear and Hearing 31: 645. doi: 10.1097/AUD.0b013e3181e50a1d.

- Grieco-Calub T. M., Litovsky R. Y. (2012) Spatial acuity in 2-to-3-year-old children with normal acoustic hearing, unilateral cochlear implants, and bilateral cochlear implants. Ear and Hearing 31: 561–572. doi: 10.1097/AUD.0b013e31824c7801.

- Jiwani S., Papsin B. C., Gordon K. A. (2016) Early unilateral cochlear implantation promotes mature cortical asymmetries in adolescents who are deaf. Human Brain Mapping 37: 135–152. doi: 10.1002/hbm.23019.

- Johnstone P. M., Litovsky R. Y. (2006) Effect of masker type and age on speech intelligibility and spatial release from masking in children and adults. Journal of the Acoustical Society of America 120: 2177–2189.

- Jones G. L., Litovsky R. Y. (2011) A cocktail party model of spatial release from masking by both noise and speech interferers. Journal of the Acoustical Society of America 130: 1463–1474. doi: 10.1121/1.3613928.

- Kan A., Litovsky R. Y. (2015) Binaural hearing with electrical stimulation. Hearing Research 322: 127–137. doi: 10.1016/j.heares.2014.08.005.

- Killan C. F., Killan E. C., Raine C. H. (2015) Changes in children’s speech discrimination and spatial release from masking between 2 and 4 years after sequential cochlear implantation. Cochlear Implants International 16: 270–276. doi: 10.1179/1754762815Y.0000000001.

- Leibold L. J., Bonino A. Y. (2009) Release from informational masking in children: Effect of multiple signal bursts. Journal of the Acoustical Society of America 125: 2200–2208. doi: 10.1121/1.3087435.

- Leibold L. J., Neff D. L. (2007) Effects of masker-spectral variability and masker fringes in children and adults. Journal of the Acoustical Society of America 121: 3666–3676.

- Litovsky R. Y. (2005) Speech intelligibility and spatial release from masking in young children. Journal of the Acoustical Society of America 117: 3091–3099.

- Litovsky R. Y. (2011) Review of recent work on spatial hearing skills in children with bilateral cochlear implants. Cochlear Implants International 12(Suppl 1): S30–S34. doi: 10.1179/146701011X13001035752372.

- Litovsky R. Y., Gordon K. (2016) Bilateral cochlear implants in children: Effects of auditory experience and deprivation on auditory perception. Hearing Research 338: 76–87. doi: 10.1016/j.heares.2016.01.003.

- Litovsky R. Y., Goupell M. J., Misurelli S. M., Kan A. (2017) Hearing with cochlear implants and hearing aids in complex auditory scenes. In: Middlebrooks J., Simon J., Fay R. R., Popper A. N. (eds) The auditory system at the cocktail party, Springer, pp. 261–291.

- Litovsky R. Y., Johnstone P. M., Godar S. P. (2006) Benefits of bilateral cochlear implants and/or hearing aids in children. International Journal of Audiology 45(Suppl 1): S78–S91.

- Loizou P. C., Hu Y., Litovsky R., Yu G., Peters R., Lake J., Roland P. (2009) Speech recognition by bilateral cochlear implant users in a cocktail-party setting. Journal of the Acoustical Society of America 125(1): 372–383. doi: 10.1121/1.3036175.

- Lutfi R. A., Kistler D. J., Callahan M. R., Wightman F. L. (2003) Psychometric functions for informational masking. Journal of the Acoustical Society of America 114: 3273–3282.

- McCreery R. W., Spratford M., Kirby B., Brennan M. (2017) Individual differences in language and working memory affect children’s speech recognition in noise. International Journal of Audiology 56: 306–315. doi: 10.1080/14992027.2016.1266703.

- Misurelli S. M., Litovsky R. Y. (2012) Spatial release from masking in children with normal hearing and with bilateral cochlear implants: Effect of interferer asymmetry. Journal of the Acoustical Society of America 132: 380–391. doi: 10.1121/1.4725760.

- Misurelli S. M., Litovsky R. Y. (2015) Spatial release from masking in children with bilateral cochlear implants and with normal hearing: Effect of target-interferer similarity. Journal of the Acoustical Society of America 138: 319–331. doi: 10.1121/1.4922777.

- Mok M., Galvin K. L., Dowell R. C., McKay C. M. (2010) Speech perception benefit for children with a cochlear implant and a hearing aid in opposite ears and children with bilateral cochlear implants. Audiology & Neuro-otology 15: 44–56. doi: 10.1159/000219487.

- Moore D. R., Cowan J. A., Riley A., Edmondson-Jones A. M., Ferguson M. A. (2011) Development of auditory processing in 6- to 11-yr-old children. Ear and Hearing 32: 269–285. doi: 10.1097/AUD.0b013e318201c468.

- Murphy J., Summerfield A. Q., O’Donoghue G. M., Moore D. R. (2011) Spatial hearing of normally hearing and cochlear implanted children. International Journal of Pediatric Otorhinolaryngology 75: 489–494. doi: 10.1016/j.ijporl.2011.01.002.

- Niparko J. K., Tobey E. A., Thal D. J., Eisenberg L. S., Wang N. Y., Quittner A. L., CDaCI Investigative Team (2010) Spoken language development in children following cochlear implantation. Journal of the American Medical Association 303: 1498–1506. doi: 10.1001/jama.2010.451.

- Oh E. L., Wightman F., Lutfi R. A. (2001) Children’s detection of pure-tone signals with random multitone maskers. Journal of the Acoustical Society of America 109: 2888–2895.

- Papsin B. C., Gordon K. A. (2008) Bilateral cochlear implants should be the standard for children with bilateral sensorineural deafness. Current Opinion in Otolaryngology & Head and Neck Surgery 16: 69–74. doi: 10.1097/MOO.0b013e3282f5e97c.

- Peters B. R., Litovsky R., Parkinson A., Lake J. (2007) Importance of age and postimplantation experience on speech perception measures in children with sequential bilateral cochlear implants. Otology & Neurotology 28: 649–657.

- Plomp R. (1976) Binaural and monaural speech intelligibility of connected discourse in reverberation as a function of azimuth of a single competing sound source (speech or noise). Acta Acustica united with Acustica 34: 200–211.

- Reeder R. M., Firszt J. B., Cadieux J. H., Strube M. J. (2017) A longitudinal study in children with sequential bilateral cochlear implants: Time course for the second implanted ear and bilateral performance. Journal of Speech, Language, and Hearing Research 60: 276–287. doi: 10.1044/2016_JSLHR-H-16-0175.

- Rothauser E. (1969) IEEE recommended practice for speech quality measurements. IEEE Transactions on Audio and Electroacoustics 17: 225–246.

- Runge C. L., Jensen J., Friedland D. R., Litovsky R. Y., Tarima S. (2011) Aiding and occluding the contralateral ear in implanted children with auditory neuropathy spectrum disorder. Journal of the American Academy of Audiology 22: 567–577. doi: 10.3766/jaaa.22.9.2.

- Schleich P., Nopp P., D'haese P. (2004) Head shadow, squelch, and summation effects in bilateral users of the MED-EL COMBI 40/40+ cochlear implant. Ear and Hearing 25(3): 197–204.

- Sheffield B. M., Schuchman G., Bernstein J. G. (2015) Trimodal speech perception: How residual acoustic hearing supplements cochlear-implant consonant recognition in the presence of visual cues. Ear and Hearing 36: e99–e112. doi: 10.1097/AUD.0000000000000131.

- Sparreboom M., Snik A. F., Mylanus E. A. (2011) Sequential bilateral cochlear implantation in children: Development of the primary auditory abilities of bilateral stimulation. Audiology & Neuro-otology 16: 203–213. doi: 10.1159/000320270.

- Strøm-Roum H., Laurent C., Wie O. B. (2012) Comparison of bilateral and unilateral cochlear implants in children with sequential surgery. International Journal of Pediatric Otorhinolaryngology 76: 95–99. doi: 10.1016/j.ijporl.2011.10.009.

- Todd A. E., Goupell M. J., Litovsky R. Y. (2016) Binaural release from masking with single- and multi-electrode stimulation in children with cochlear implants. Journal of the Acoustical Society of America 140: 59–73. doi: 10.1121/1.4954717.

- Vaillancourt V., Laroche C., Giguere C., Soli S. D. (2008) Establishment of age-specific normative data for the Canadian French version of the hearing in noise test for children. Ear and Hearing 29: 453–466. doi: 10.1097/01.aud.0000310792.55221.0c.

- Van Deun L., van Wieringen A., Wouters J. (2010) Spatial speech perception benefits in young children with normal hearing and cochlear implants. Ear and Hearing 31: 702–713. doi: 10.1097/AUD.0b013e3181e40dfe.

- van Hoesel R. J., Litovsky R. Y. (2011) Statistical bias in the assessment of binaural benefit relative to the better ear. Journal of the Acoustical Society of America 130: 4082–4088. doi: 10.1121/1.3652851.

- van Hoesel R. J., Tyler R. S. (2003) Speech perception, localization, and lateralization with bilateral cochlear implants. Journal of the Acoustical Society of America 113: 1617–1630.

- van Wieringen A., Wouters J. (2015) What can we expect of normally-developing children implanted at a young age with respect to their auditory, linguistic and cognitive skills? Hearing Research 322: 171–179. doi: 10.1016/j.heares.2014.09.002.

- Werner L. A. (2012) Overview and issues in human auditory development. In: Werner L. A., Rubel E., Popper A., Fay R. (eds) Human auditory development, New York, NY: Springer, pp. 1–18.

- Wilson B. S., Dorman M. F. (2008) Cochlear implants: A remarkable past and a brilliant future. Hearing Research 242: 3–21. doi: 10.1016/j.heares.2008.06.005.

- Yost W. A., Dye R. H., Sheft S. (1996) A simulated “cocktail party” with up to three sound sources. Perception & Psychophysics 58: 1026–1036.

- Zheng Y., Godar S. P., Litovsky R. Y. (2015) Development of sound localization strategies in children with bilateral cochlear implants. PLoS One 10: e0135790. doi: 10.1371/journal.pone.0135790.