Abstract

Surface electromyography (sEMG) signals play an important role in hand function recovery training. In this paper, an IoT-enabled stroke rehabilitation system is introduced, based on a smart wearable armband (SWA), machine learning (ML) algorithms, and a 3-D printed dexterous robot hand. User comfort is one of the key issues that wearable devices must address. The SWA was developed by integrating a low-power, tiny-sized IoT sensing device with textile electrodes; it can measure, pre-process, and wirelessly transmit bio-potential signals. Distributing the surface electrodes evenly over the user’s forearm mitigates the problem of poor classification accuracy. A new method was put forward to find the optimal feature set. ML algorithms were leveraged to analyze and discriminate features of different hand movements, and their performance was appraised by classification complexity estimating algorithms and principal components analysis. According to the verification results, all nine gestures can be successfully identified with an average accuracy of up to 96.20%. In addition, a 3-D printed five-finger robot hand was implemented for hand rehabilitation training. The user’s hand movement intentions were extracted and converted into a series of commands that drive the motors assembled inside the dexterous robot hand. As a result, the robot hand can mimic the user’s gesture in real time, which shows that the proposed system can be used as a training tool to facilitate the rehabilitation process for patients after stroke.

Keywords: sEMG control, stroke rehabilitation, IoT-enabled wearable device, machine learning

An IoT-enabled stroke rehabilitation system was implemented which was based on a smart wearable armband, machine learning algorithms, and a 3D printed dexterous robot hand. The smart wearable armband was developed by integrating a low-power and tiny-sized IoT sensing device with textile electrodes, which can measure, pre-process, and wirelessly transmit bio-potential signals. Machine learning (ML) algorithms were leveraged to analyze and discriminate features of different hand movements. The patient's hand movement intentions were further extracted to control the dexterous robot hand and the robot hand can mimic the patient's gesture in a real-time manner which enables active response to patient's volition.

I. Introduction

For stroke survivors, motor recovery of the upper limbs is slower and more difficult than that of the lower extremities [1]. Moreover, the recovery of hand function is often limited [2]. Consequently, effectively restoring hand function is a challenging task.

Conventional therapy generally involves one-on-one interaction with a therapist who assists and motivates the patient, but the patient’s willingness to participate is low because of the passive training repeated day after day [3], [4]. By contrast, a robot-assisted active training system for neurorehabilitation can capture bio-potential signals from the patient, decode the patient’s motion volition, and then respond actively to that intention. Such robot-assisted active training has been shown to be more effective than passive methods [5], [6] and can enhance therapeutic effects [7]–[9].

Bio-potential signals can be measured invasively or non-invasively. Invasive techniques are effective, but their high cost and the inconsistent performance caused by tissue responses cannot be ignored [10]. Surface electromyography (sEMG) is therefore often the preferred scheme, as multi-channel sEMG can be collected on the skin surface at any time, according to the needs and the actual situation, without the assistance of a doctor or professional nursing staff [11].

In its early stage, sEMG control primarily focused on mapping each channel of the sEMG signal from a muscle group to a single corresponding degree of freedom (DOF) or to a variable such as speed or direction. Users therefore had to become familiar with these unnatural mappings in order to perform the desired actions, which is quite cumbersome. In recent years, machine learning (ML) has been reported as an efficient approach that could, to a large extent, address these restrictions and inconveniences. Here, ML-based control uses machine learning methods to infer hand gestures from myoelectric patterns. Compared with the conventional control strategy, the ML-based control strategy is more effective, as it maps the user’s limb motion volition to the function of the dexterous robot hand intuitively and naturally [12]–[14]. In addition, the clinical applications of myoelectric pattern recognition also include prosthetic control [15] and phantom limb pain treatment [16].

In the field of ML-based sEMG control, efforts have been devoted to investigating feature selection and classification methods, such as Linear Discriminant Analysis (LDA) [17], Multi-Layer Perceptron (MLP) [18], and Support Vector Machine (SVM) [19]–[22]. Nevertheless, few studies focus on the factors that influence the complexity of the whole classification process. For instance, although the relevance and redundancy of features were investigated in previous studies [23], [24], those analyses do not reveal which specific classes conflict. Thus, classification complexity estimating algorithms (CCEAs) [26] and principal components analysis (PCA) were applied in this work to estimate the class separability of different feature sets.

Additionally, the classifiers adopted in previous studies by BioPatRec [25], a modular open source research platform based on MATLAB for myoelectric control, are commonly LDA and MLP. It has been reported in previous works that SVM has the advantages in making pattern classification of high-dimensional, nonlinear, and small-sampled data [19], [29]. In this work, a comparative study was conducted among the above mentioned three algorithms, aiming to find the optimal algorithm with the highest classification accuracy and the shortest training time.

Some investigations have been made into the correlation between the number of collected sEMG channels and the offline classification accuracy (CA) [22], [31], where usually no more than four channels of sEMG are measured for pattern recognition. However, this approach requires professional knowledge to place the recording electrodes at specific positions on the user’s forearm, and improper placement may lead to a low recognition rate or even to classification failure. In addition, the recording units or measurement devices reported in previous works are usually bulky, which makes them inconvenient for the daily training of stroke patients. Furthermore, the accuracy of gesture recognition plays an important role in a stroke rehabilitation training system. The classification accuracy can be further improved by measuring extra channels of sEMG signals and processing them with LDA [32].

Though non-wearable and wired rehabilitation system can detect bio-potential signals, it suffers from a restricted working region, where the user has quite limited mobility. Due to the advantages of its counter-part, the wearable wireless system is preferred to collect health data [33]. In addition, emerging Internet of Things (IoT) technology has offered great opportunities for developing smart rehabilitation systems [34]–[40]. Therefore, in this work, a tiny-sized, easy-to-use, and comfortable IoT-enabled smart wearable armband was developed, with the miniaturized electronics integrated inside and flexible textile electrodes evenly distributed along the inner side of the band. Additionally, a 3D printed five-finger robot hand driven by ML was implemented for stroke recovery. The real-time assistance from the robot hand gave the users the feedback of muscle activities and helped them strengthen their motion patterns.

The rest of this paper is organized as follows. The architecture of the whole system is presented in Section II. The method of gesture recognition and control is given in Section III. Section IV presents the results of the proposed method. Finally, Section V discusses and summarizes the whole paper.

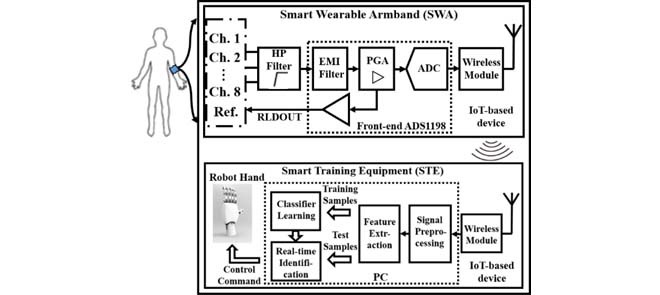

II. System Architecture

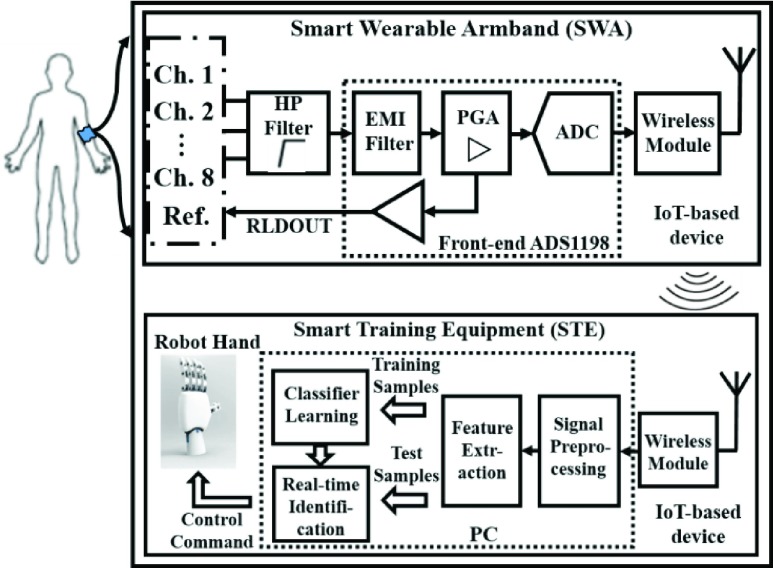

The architecture of the stroke rehabilitation system that has been implemented in this study is shown in Fig. 1. This proposed prototype consists of two parts: a smart wearable armband (SWA) and a smart training equipment (STE). Worn around user’s forearm, the smart wearable armband recorded, pre-processed, and transmitted the sEMG signals to the smart training equipment through wireless communication. After signal pre-processing and feature extraction, ML algorithms which had been off-line trained, were leveraged to discriminate features. Then the recognition outcome was mapped to dexterous robot hand function. As a result, the dexterous robot hand can mimic the user’s gesture in a real-time manner.

FIGURE 1.

Architecture of IoT-enabled stroke rehabilitation system.

A. Smart Wearable Armband

The SWA mainly consists of high pass filters, an analog front-end, a wireless module, and textile electrodes, which can collect, pre-process, and wirelessly transmit bio-potential signals of forearm.

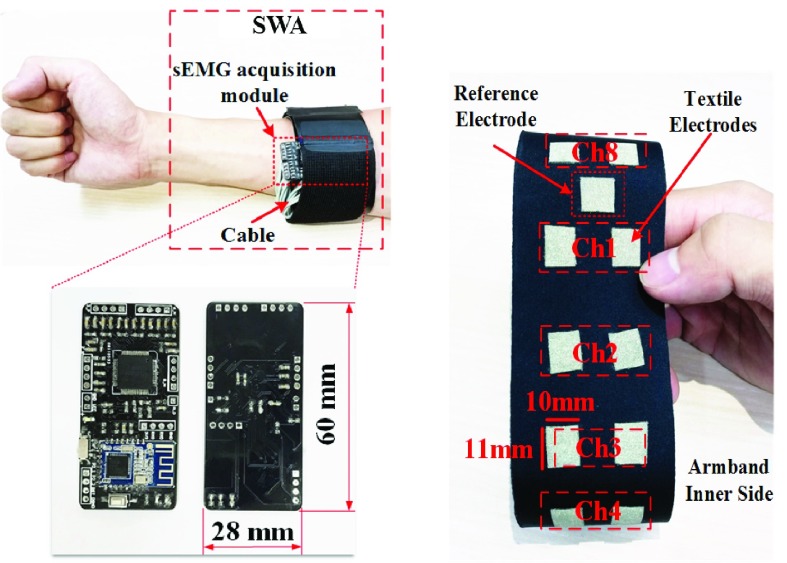

The design and realization of the SWA have two highlights. The first is softness. Textile electrodes and stretchable material were employed to fabricate the SWA, so it can be worn around the user’s forearm and remain comfortable and soft throughout the experiment. The details of the SWA are shown in Fig. 2; the center-to-center distance of the paired electrodes forming a differential structure was around 20 mm. The second is ease of use, together with wireless operation. Users generally lack anatomical knowledge, and inaccurate electrode placement hinders the effective identification of motion intentions. To mitigate this drawback, the surface electrodes were distributed evenly around the SWA, so that a relatively high recognition rate can be achieved without special attention to electrode position.

FIGURE 2.

Smart wearable armband (SWA).

The design concept of this SWA system as well as the signal processing flow is described as follows: In the first place, raw sEMG signal of each channel was filtered by a 10 Hz high pass filter circuit which can avoid saturation of the amplifier caused by motion artifact. Then, the signal was amplified and digitized by ADS1198, a TI low-power analog front-end with eight channels. This analog front-end contains built-in programmable gain amplifiers, 16-bit analog-to-digital converters and a built-in right leg drive amplifier, which was designed to suppress the common mode noise of the muscle electrical signal. Finally, the upper extremity pre-processed sEMG signals were transmitted in a dedicated packet structure by a wireless module to STE. The wireless communication was achieved by using TI transceiver CC2540 which supports Bluetooth Low Energy (BLE).

B. Smart Training Equipment

The smart training equipment mainly consists of machine learning algorithms and a 3D printed dexterous robot hand. First, the wireless module received pre-processed sEMG signals from SWA and transmitted them to PC host. In the PC environment, after signal pre-processing and feature extraction, off-line trained ML algorithms based on Matlab (Version R2015a, MathWorks, Natick, MA, USA) were leveraged to discriminate features. All nine gestures can be successfully identified. Meanwhile, the real time recognition results were converted to a series of appropriate commands which were used to drive motors integrated in a 3D printed dexterous robot hand, an assistive device for neurorehabilitation.

III. Gesture Recognition and Control

A. Subjects Information and Experiment

To verify the performance of the proposed prototype, experiments were conducted with two male able-bodied subjects (aged 22–24) and one female able-bodied subject (aged 22). Before the study was carried out, approval was obtained from the Ethics Committee of the 117th Hospital of People’s Liberation Army (PLA). The volunteer subjects signed informed consent forms prior to the experiment.

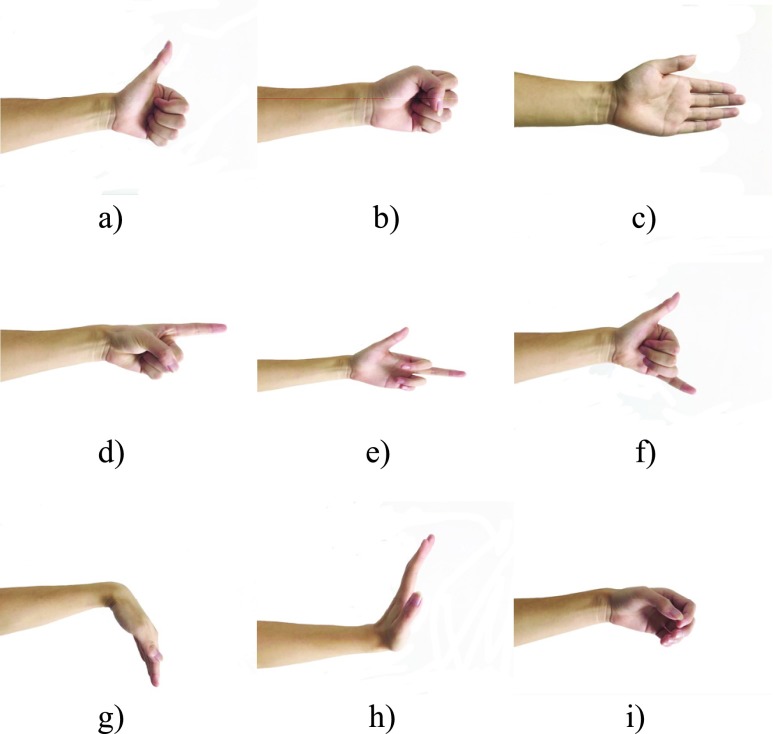

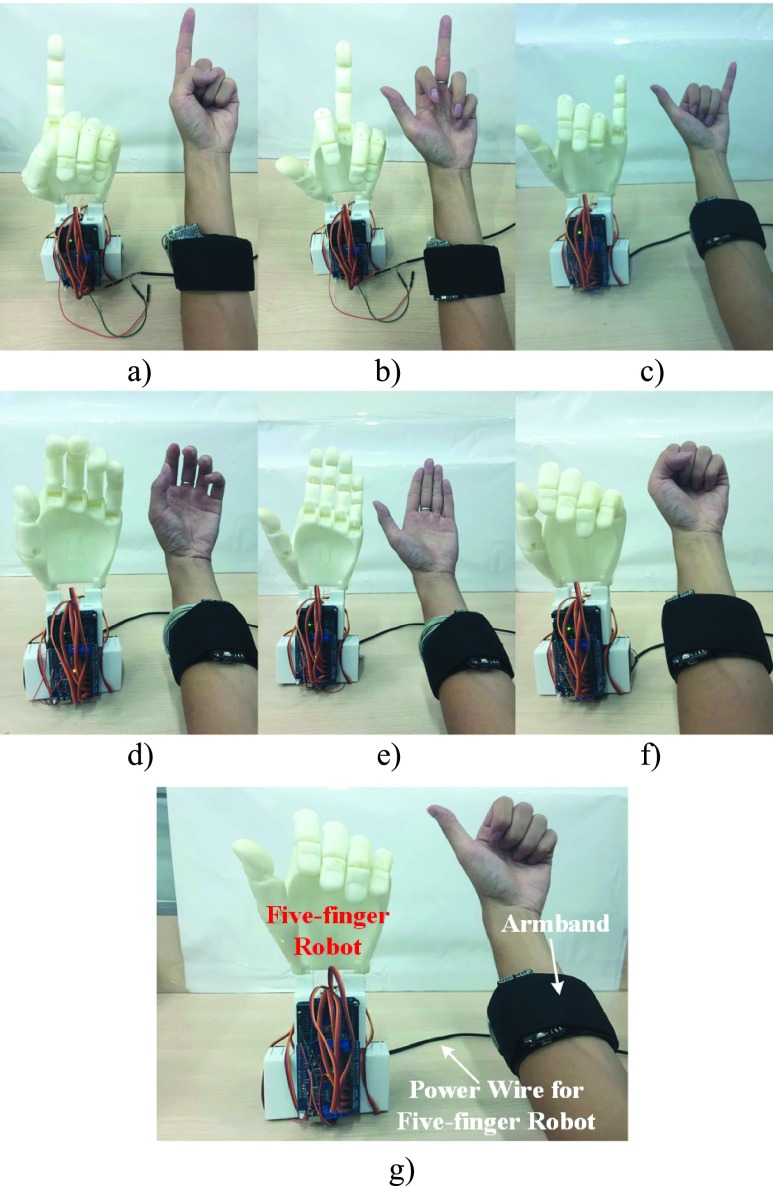

The experiment had two parts: a recording section and an identification section. In the recording section, subjects were seated in a comfortable height-adjustable chair, and the sEMG signals of each action were recorded in sequence. More specifically, after the skin was cleaned with medical alcohol wipes, the designed armband was placed on the right forearm. The instructed contraction time was 3 s, and there was sufficient rest time between every two consecutive contractions to avoid muscle fatigue. Each movement was repeated three times. When performing gestures, the fingers only needed to be fully extended or fully flexed, without excessive force. The target gesture set includes agree (AG), close hand (CH), open hand (OH), pointer (PT), thumb and middle finger (T&M), thumb and little finger (T&L), flex hand (FH), extend hand (EH), and relax (RE), as shown in Fig. 3. In the identification section, the subjects were free to perform any of the nine target gestures, with each gesture repeated 12 times in total. The real-time hand gesture recognition result then served as the instruction to control the five-finger dexterous robot hand.

FIGURE 3.

Target gesture set: a) AG, b) CH, c) OH, d) PT, e) T&M, f) T&L, g) FH, h) EH, i) RE.

B. Gesture Recognition

Building on BioPatRec, a modular open source research platform [20], [25], the specific innovations and main contributions of our work are as follows. First, an additional sEMG signal feature, third-order AR coefficients, was introduced. Second, a new method was put forward to find an optimal feature set. Third, a comparative study was conducted among the LDA, MLP, and SVM algorithms, which has rarely been done with BioPatRec. Finally, the outcomes of the above work were appraised by the combination of CCEAs and PCA.

1). Pre-Processing

To retain only the effective information, 15% of the contraction time was removed at both the beginning and the end of the recorded data. For each hand gesture of each subject, 6.3 seconds of sEMG recording in total entered the analysis. This 6.3 s section was segmented into 200-ms-long time windows with a time increment of 50 ms between consecutive windows (that is, an overlap of 150 ms), which divides it into (6300 − 200)/50 + 1 = 123 time windows.
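The window count can be checked with a short sketch. It assumes that the 50 ms figure is the step between consecutive window starts, which is the reading that reproduces the reported 123 windows; the function names `count_windows` and `window_bounds` are hypothetical and not part of BioPatRec.

```python
def count_windows(total_ms: int, window_ms: int, step_ms: int) -> int:
    """Number of full windows of length window_ms whose starts are step_ms apart."""
    if total_ms < window_ms:
        return 0
    return (total_ms - window_ms) // step_ms + 1


def window_bounds(total_ms: int, window_ms: int, step_ms: int):
    """(start, end) times in ms of every full window."""
    n = count_windows(total_ms, window_ms, step_ms)
    return [(k * step_ms, k * step_ms + window_ms) for k in range(n)]


# 6.3 s of valid signal per gesture, as stated in the text.
valid_ms = 6300

# Reading 1 (assumed here): 50 ms is the increment between window starts.
n_increment = count_windows(valid_ms, window_ms=200, step_ms=50)

# Reading 2: 50 ms is the literal overlap, so the step is 200 - 50 = 150 ms.
n_overlap = count_windows(valid_ms, window_ms=200, step_ms=150)

print(n_increment, n_overlap)
```

Only the first reading matches the 123 windows reported in the text; a literal 50 ms overlap would yield far fewer windows.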

2). Feature Extraction

Six sEMG features were employed: mean absolute value (MAV), standard deviation (SD), variance (VAR), waveform length (WL), root mean square (RMS), and zero crossing (ZC). As they are time-domain features, they are denoted “tmabs”, “tstd”, “tvar”, “twl”, “trms”, and “tzc”, respectively. WL and ZC are described in equations (1) and (2). In addition, third-order AR coefficients were introduced into BioPatRec as an additional sEMG signal feature, denoted “tar33”. The system function of the AR model is expressed by equation (3).

$$\mathrm{WL} = \sum_{i=1}^{N-1} \left| x_{i+1} - x_i \right| \tag{1}$$

where $N$ is the window size and $x_i$ is the sEMG signal.

$$\mathrm{ZC} = \sum_{i=1}^{N-1} f(x_i, x_{i+1}), \qquad f(x_i, x_{i+1}) = \begin{cases} 1, & x_i \, x_{i+1} < 0 \\ 0, & \text{otherwise} \end{cases} \tag{2}$$

where the restriction $\left| x_i - x_{i+1} \right| \ge \varepsilon$ should be taken into account, and $\varepsilon$ is the threshold to suppress the noise effect.

$$x_i = \sum_{k=1}^{p} a_k \, x_{i-k} + e_i \tag{3}$$

where $x_i$ is the sEMG signal, $p$ denotes the order of the AR model, and $a_k$ and $e_i$ are the AR coefficients and Gaussian white noise, respectively.

The feature vector used for classification was composed of the features extracted from a single time window. Every 8 consecutive elements of the feature vector were eight features of the same type which were extracted from 8 different channels. For instance, the first 8 elements of the feature vector were eight MAV features. Different features distribute differently in feature space, and thus the outcome of pattern classifier varies with different feature sets. For instance, MAV and RMS both describe the signal energy of sEMG, indicating that the motion information implied by some certain features is coincident. Therefore, in order to prevent redundant information in gesture recognition, it is necessary to find an optimal feature set [41], [42].
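The feature definitions and the layout of the feature vector can be sketched as follows. This is a minimal pure-Python illustration, not the BioPatRec implementation: the function names are hypothetical, the sample variance (N − 1 denominator) is an assumption, and the zero-crossing threshold defaults to zero because its value is not given in the text.

```python
import math


def mav(x):
    """Mean absolute value of one channel."""
    return sum(abs(v) for v in x) / len(x)


def var(x):
    """Sample variance (N - 1 in the denominator; an assumption)."""
    mean = sum(x) / len(x)
    return sum((v - mean) ** 2 for v in x) / (len(x) - 1)


def sd(x):
    """Standard deviation, the square root of the variance above."""
    return math.sqrt(var(x))


def wl(x):
    """Waveform length: summed absolute difference of consecutive samples."""
    return sum(abs(x[i + 1] - x[i]) for i in range(len(x) - 1))


def rms(x):
    """Root mean square."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def zc(x, threshold=0.0):
    """Sign changes whose amplitude step is at least `threshold`."""
    return sum(
        1
        for i in range(len(x) - 1)
        if x[i] * x[i + 1] < 0 and abs(x[i] - x[i + 1]) >= threshold
    )


FEATURES = {"tmabs": mav, "tstd": sd, "tvar": var,
            "twl": wl, "trms": rms, "tzc": zc}


def feature_vector(window, names=("tmabs", "tstd", "tvar", "twl", "trms", "tzc")):
    """window: list of channels, each a list of samples from one time window.

    Features are grouped by type, then by channel, so with eight channels
    the first eight elements are the eight MAV values.
    """
    return [FEATURES[name](channel) for name in names for channel in window]
```

With eight channels and all six features, `feature_vector` returns 48 elements; restricting `names` to a subset gives the vector for a reduced feature set.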

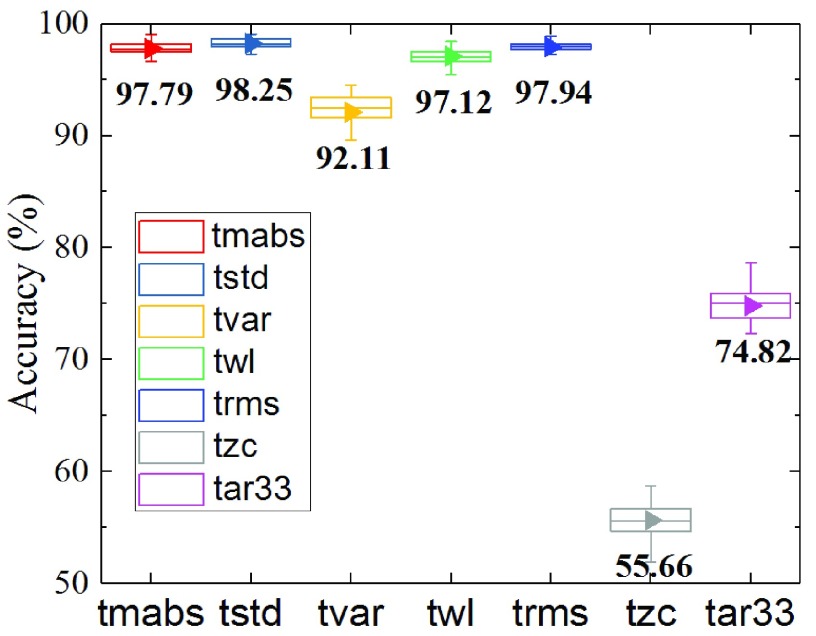

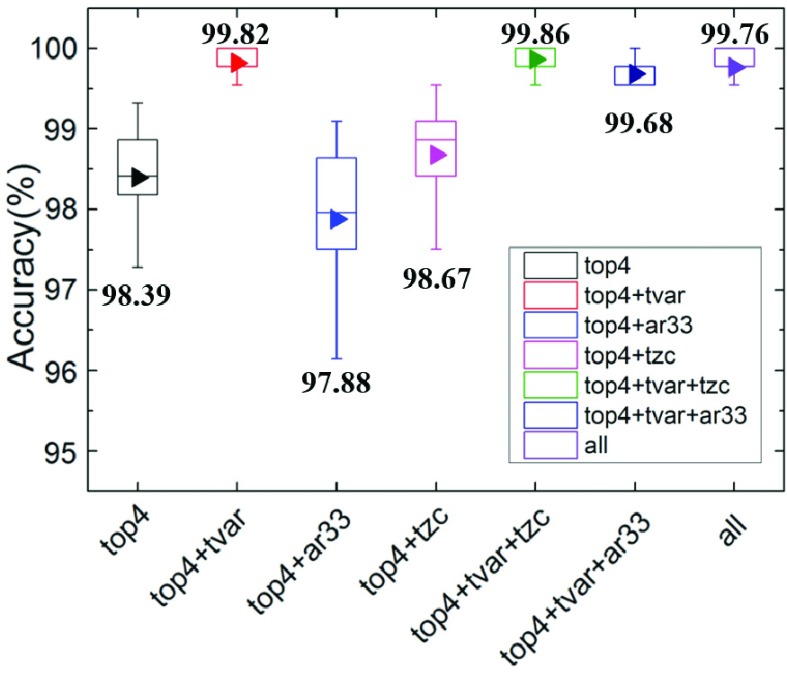

Univariate feature selection was proposed in this study to find the optimal feature set. It can be briefly described as follows. First, the offline classification accuracy of each individual feature was calculated using an ML algorithm; the result is shown in Fig. 4. The four features with the highest average CA were selected as the initial set. Then, at each step, each of the remaining features was added in turn to form a new feature combination, and the combination with the highest recognition rate was kept, until the combination contained six features. Finally, the best set of six features was obtained.

FIGURE 4.

Offline classification accuracy using individual feature.
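The selection procedure described above can be sketched as a ranking step followed by greedy forward selection. This is an illustrative sketch only: `select_features` and the `score` callback are hypothetical names, and `score` stands for whatever routine returns the average offline CA of a given feature combination (for example, a classifier evaluated by holdout validation).

```python
def select_features(features, score, seed_size=4, max_size=6):
    """Rank features individually, seed with the best `seed_size`,
    then greedily add the feature that maximizes `score`.

    features: iterable of feature names.
    score:    callable taking a tuple of feature names, returning accuracy.
    Returns a list of (combination, accuracy) pairs, one per step.
    """
    # Step 1: rank every feature by its individual accuracy.
    ranked = sorted(features, key=lambda f: score((f,)), reverse=True)

    # Step 2: the top-ranked features form the initial combination.
    current = tuple(ranked[:seed_size])
    history = [(current, score(current))]
    remaining = [f for f in ranked if f not in current]

    # Step 3: add one remaining feature at a time, keeping the best candidate.
    while remaining and len(current) < max_size:
        best = max(remaining, key=lambda f: score(current + (f,)))
        current = current + (best,)
        remaining.remove(best)
        history.append((current, score(current)))
    return history
```

Returning the whole history, rather than only the final combination, makes it possible to trade accuracy against dimensionality afterwards, for instance by preferring a smaller combination whose accuracy is nearly as high.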

3). Estimate the Result of Feature Selection

It is known that features with high class separability improve recognition accuracy. Therefore, the data analysis tools including CCEAs [26] and PCA were further used to evaluate class separabilities of different combinations of features.

Mahalanobis distance [27], [28], the average of the distances between all movements and their most conflicting neighbor [49], is one of the measures in CCEAs. Mahalanobis distance can be understood in the following equations.

$$J_d = \sum_{i=1}^{C} P_i \left[ \frac{1}{N_i} \sum_{k=1}^{N_i} \left( x_k^{(i)} - m_i \right)^{T} \left( x_k^{(i)} - m_i \right) + \left( m_i - m \right)^{T} \left( m_i - m \right) \right] = \operatorname{tr}(S_w) + \operatorname{tr}(S_b)$$

where $C$ is the number of classes, $N_i$ is the total number of samples of class $i$, $x_k^{(i)}$ and $P_i$ are the feature vectors and prior probability of class $i$, respectively, $m_i$ and $m$ are the mean of class $i$ and the overall mean, and $\operatorname{tr}(S_b)$ and $\operatorname{tr}(S_w)$ are the traces of the divergence matrices between categories and within categories, respectively.
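For reference, the textbook form of the Mahalanobis distance between two classes can be written as follows. This is a sketch under the assumption that both classes share one covariance matrix $\Sigma$ (for example, a pooled estimate); the symbols $m_i$, $m_j$, $\Sigma$, and $\bar{d}$ are introduced here for illustration, and the exact covariance estimate used by the CCEAs is not stated in this paper.

$$d_{ij} = \sqrt{\left( m_i - m_j \right)^{T} \Sigma^{-1} \left( m_i - m_j \right)}, \qquad \bar{d} = \frac{1}{C} \sum_{i=1}^{C} \min_{j \ne i} d_{ij}$$

Under this reading, the most conflicting neighbor of class $i$ is the class $j \ne i$ with the smallest $d_{ij}$, and $\bar{d}$ is the average of the distances between all movements and their most conflicting neighbors.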

PCA can present a lower-dimensional picture to view class separability by maintaining the first few principal components, resulting in the reduction of the transformed data dimensions. In this study, the cumulative contribution rate of the first three principal components was 95.86%. Therefore, three eigenvectors corresponding to the largest eigenvalues were selected as the PCA projection matrix in order to view the most informative three-dimensional picture.
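The cumulative contribution rate and the projection can be written compactly as below. The notation is introduced here for illustration and does not come from the paper: $\lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_d$ are the eigenvalues of the covariance matrix of the $d$-dimensional feature data, $v_1, v_2, v_3$ are the eigenvectors of the three largest eigenvalues, $X$ is the feature matrix with one feature vector per row, and $\bar{X}$ holds the column means.

$$\eta_3 = \frac{\lambda_1 + \lambda_2 + \lambda_3}{\sum_{j=1}^{d} \lambda_j}, \qquad Y = \left( X - \bar{X} \right) W, \qquad W = \begin{bmatrix} v_1 & v_2 & v_3 \end{bmatrix}$$

With the value reported above, $\eta_3 = 95.86\%$, so the three-dimensional projection $Y$ retains most of the variance of the original feature space.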

4). Classification

A comparative study was conducted among the LDA, MLP, and SVM algorithms, which have rarely been compared within BioPatRec. LDA, a statistical classification method, has low complexity and trains faster than other types of algorithms [15], [50]. Because it concentrates on the decision boundary, LDA is generally more robust against irrelevant outliers in the training data. Nevertheless, as LDA gives less insight into the structure of the data space, it has limited capacity when dealing with data containing missing entries. The goal of the SVM is to obtain the minimum classification error together with the maximum classification margin, to ensure maximum stability and excellent generalization ability. Take the two-class linear classification problem, the initial design concept of the SVM, as an example.

$$f(x) = \operatorname{sgn}\left( w^{T} \phi(x) + b \right)$$

where $w$ is a weight vector, $\phi$ is the kernel map, and $b$ is an offset.

The original optimization problem, which can be transformed into the corresponding dual optimization problem, is:

$$\min_{w,\, b,\, \xi} \ \frac{1}{2} w^{T} w + C \sum_{i=1}^{l} \xi_i \qquad \text{subject to} \quad y_i \left( w^{T} \phi(x_i) + b \right) \ge 1 - \xi_i, \quad \xi_i \ge 0, \quad i = 1, \dots, l$$

where $\xi_i$ is the slack variable, $C$ is the regularization parameter that balances the minimum amount of data point deviation against the maximum margin, $y$ is an indicator vector with $y_i \in \{1, -1\}$, and $l$ is the number of training vectors.

Theoretically, MLP provides a method for finding optimal solutions that can be applied to any kind of classification problem, and it can classify more complex patterns through self-learning.

Since the 6.3 seconds section was segmented into 200-ms-long time windows with a time increment of 50 ms, it was divided into 123 time windows, and 123 feature vectors were obtained, one from each time window. To assess the classification performance of different feature sets and different classifiers, holdout cross validation was adopted: the 123 feature vectors were randomized and split into three non-overlapping sets, with 40% of the feature vectors for training the classifiers, 20% for validation, and the remaining 40% for testing. The holdout cross validation was repeated twenty times to obtain the classification results. Classification accuracy (CA), a key indicator of the classification results, is defined below:

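$$\mathrm{CA} = \frac{\text{number of correctly classified samples}}{\text{total number of testing samples}} \times 100\%$$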
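The holdout split and the accuracy measure can be sketched as follows. The function names are hypothetical, and rounding the set sizes to the nearest integer is an assumption, since 40% and 20% of 123 are not whole numbers and the paper does not say how the sizes were rounded.

```python
import random


def holdout_split(n_samples, train_frac=0.4, val_frac=0.2, rng=None):
    """Shuffle sample indices and split them into three disjoint sets.

    Returns (train, validation, test) index lists; the test set takes
    whatever remains after the training and validation sets.
    """
    rng = rng or random.Random()
    indices = list(range(n_samples))
    rng.shuffle(indices)
    n_train = round(train_frac * n_samples)   # rounding is an assumption
    n_val = round(val_frac * n_samples)
    train = indices[:n_train]
    validation = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]
    return train, validation, test


def classification_accuracy(predicted, actual):
    """Percentage of test samples whose predicted label equals the true label."""
    correct = sum(1 for p, a in zip(predicted, actual) if p == a)
    return 100.0 * correct / len(actual)


# One repetition for the 123 feature vectors of a gesture; the text
# repeats the procedure twenty times and averages the results.
train, validation, test = holdout_split(123, rng=random.Random(0))
print(len(train), len(validation), len(test))
```

Passing a seeded `random.Random` makes a single repetition reproducible, while omitting it gives a fresh random split on every call, which is what repeated holdout requires.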

C. Control

The identified movement intention obtained by the steps described above served as the control input to a five-finger dexterous robot hand. Its working principle can be briefly described as follows. 3D printing technology was applied to fabricate the robot hand, and an Arduino Mega 2560 was chosen as the electronic control unit because it is a low-cost, open-source platform suited to fast prototyping. Each servo motor drove its corresponding reel to rotate, winding fishing line onto the reel. As the fishing line passed through the corresponding finger and its end was fixed at the fingertip, the 3D printed finger bent accordingly. Take the agree gesture as an example: to realize it on the five-finger dexterous robot hand, the servo motor corresponding to the thumb remained at its original angle, while the other four servo motors drove their reels to rotate, bending the remaining four 3D printed fingers.
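The mapping from a recognized gesture to servo commands can be sketched on the host side as below. Everything except the agree (AG) finger pattern is an assumption made for illustration: the rest and flex angles, the finger order, and the comma-separated command format are hypothetical and do not describe the authors' firmware or serial protocol.

```python
FINGERS = ("thumb", "index", "middle", "ring", "little")

REST_ANGLE = 0     # assumed servo angle with the fishing line unwound
FLEX_ANGLE = 180   # assumed servo angle with the fishing line fully wound

# Fingers to flex for each gesture label. Only "AG" is taken from the
# text (thumb stays, the other four fingers bend); any further gesture
# would need its own entry.
FLEXED_FINGERS = {
    "AG": {"index", "middle", "ring", "little"},
}


def servo_angles(gesture):
    """Target angle of each finger servo, in FINGERS order."""
    flexed = FLEXED_FINGERS[gesture]
    return tuple(FLEX_ANGLE if finger in flexed else REST_ANGLE
                 for finger in FINGERS)


def command_line(gesture):
    """Hypothetical text command, one comma-separated angle per servo."""
    return ",".join(str(angle) for angle in servo_angles(gesture)) + "\n"


print(command_line("AG"), end="")
```

Keeping the gesture table separate from the command formatting means that supporting another gesture only requires a new table entry, without touching the code that talks to the control unit.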

IV. Results

A. Feature Selection

Figure 4 illustrates the offline classification accuracy of each individual feature, with LDA employed as the classifier. “tmabs” (97.79 ± 0.49%), “tstd” (98.25 ± 0.25%), “twl” (97.12 ± 0.59%), and “trms” (97.94 ± 0.49%) had the four highest average classification accuracies and were defined as “top4”. Despite the adoption of AR in previous studies, the offline classification performance of “tar33” was clearly inferior to that of “tvar” (74.82 ± 4.20% versus 92.11 ± 2.57%); they ranked sixth and fifth, respectively. As sEMG signals are non-stationary, weak electrical signals, the rate at which the signal crosses zero differed only slightly among the nine movements, which means that “tzc” provides information of too low a spatial resolution to identify gestures independently. Consequently, the offline classification performance of “tzc” (55.66 ± 4.05%) was the worst among all the features.

The classification accuracies of different feature combinations were further investigated using LDA, as shown in Fig. 5. “top4” stands for “tmabs”, “tstd”, “twl” and “trms”, the four with the highest average CA, which has been described above. The offline classification performance of “top4+tvar” was just a little bit inferior to “top4+tvar+tzc” (99.81 ± 0.04% versus 99.86 ± 0.02%), and their performances ranked second and first, respectively.

FIGURE 5.

Offline classification accuracy using different combinations of features.

Taking the classification performance and computational complexity into account, “top4+tvar”, with its low dimension and high classification performance, was selected as the optimal feature set. It is worth noting that combining all the features (99.76 ± 0.10%) did not give the best outcome; it ranked only third.

B. Verify the Result of Feature Selection

Figure 6 displays the data analysis result using CCEAs with the feature extraction setting “top4+tvar”, that is, “tmabs”, “tstd”, “twl”, “trms”, and “tvar”. Section 1 shows nine scatter plots, each illustrating one class and its closest neighbor in feature space. In each subfigure, the red dot set is the movement of concern and the blue dot set is its closest neighbor; the movement of concern differs from subfigure to subfigure (see Table 1 for details). Section 2 shows one of the scatter plots more clearly: the red dot set is extend hand, the movement of concern, and the blue dot set is open hand, its closest neighbor. The Mahalanobis distance between the two classes is 13. Section 3 shows a ranked table, in which the movement that is most often the most conflicting neighbor of the other movements appears at the top. The overwhelming majority of the scatter plots of the nine movements were smooth curves, sufficiently demonstrating the effectiveness of the proposed feature selection method.

FIGURE 6.

Data analysis tool using CCEAs [26]. Section 1 illustrates the distance between each class and its closest neighbor in feature space (2D). Section 2 shows a table in the order of the number of times that a movement is the most conflicting neighbor for any of the other movements. Data from a recording session of nine movements are shown. The feature extraction setting is “tmabs”, “tstd”, “twl”, “trms”, and “tvar”. Section 3 shows a table of movement conflicts.

TABLE 1. Mahalanobis Distance Between Two Classes.

| | a | b | c | d | e | f | g | h | i |
|---|---|---|---|---|---|---|---|---|---|
| Red dot | OH | CH | FH | EH | AG | PT | T&L | T&M | RE |
| Blue dot | T&M | AG | RE | OH | CH | T&L | AG | AG | PT |
| Mahalanobis distance | 9.1 | 4.7 | 11.3 | 13 | 4.7 | 6.2 | 5.9 | 7.6 | 12.1 |
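The conflict ranking and the average neighbor distance can be derived directly from the entries of Table 1. The sketch below only re-tabulates those published values; the names `TABLE_1`, `conflict_ranking`, and `average_distance` are hypothetical.

```python
from collections import Counter

# Table 1: movement of concern -> (closest neighbor, Mahalanobis distance)
TABLE_1 = {
    "OH": ("T&M", 9.1),
    "CH": ("AG", 4.7),
    "FH": ("RE", 11.3),
    "EH": ("OH", 13.0),
    "AG": ("CH", 4.7),
    "PT": ("T&L", 6.2),
    "T&L": ("AG", 5.9),
    "T&M": ("AG", 7.6),
    "RE": ("PT", 12.1),
}


def conflict_ranking(table):
    """Movements ordered by how often they are another movement's closest neighbor."""
    counts = Counter(neighbor for neighbor, _ in table.values())
    return counts.most_common()


def average_distance(table):
    """Mean distance between each movement and its closest neighbor."""
    return sum(distance for _, distance in table.values()) / len(table)


print(conflict_ranking(TABLE_1)[0])
print(round(average_distance(TABLE_1), 2))
```

In these data, agree (AG) is the closest neighbor of three movements (CH, T&L, and T&M), more than any other movement, and the average distance to the closest neighbor is about 8.29.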

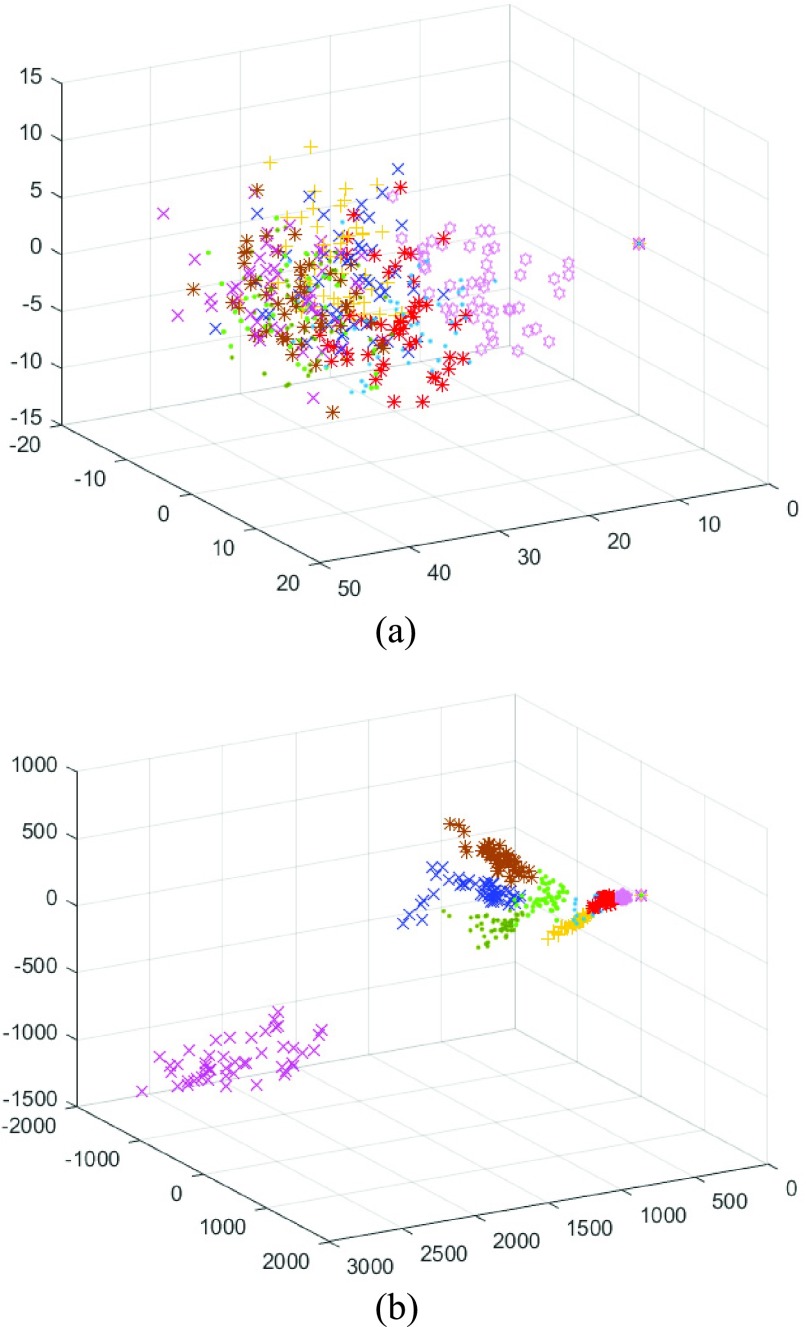

The distribution of nine classes in feature space (3D) by leveraging PCA is shown in Fig. 7. This is done by selecting the first three principal components from the PCA-reduced feature matrix from a typical data record. Each type of marker represented one movement. Selecting “tzc” feature as the only feature fed to classifier, it came out that nine movements were mixed together, which means the separability among classes was low (see Fig. 7 (a)). It vividly illustrates the relatively low offline CA employing the “tzc” feature individually, which is mentioned in the first part of this section (see Fig. 4). In contrast, having applied the proposed method, the optimal feature set of the highest accuracy was obtained, that is “tmabs”, “tstd”, “twl”, “trms”, and “tvar”. Obviously, every movement tends to cluster and there is relatively clear interval among nine movements, and also each kind of marker stands for one movement (see Fig. 7 (b)).

FIGURE 7.

The distribution of nine classes in feature space (3D) leveraging PCA: (a) the feature extraction setting is “tzc”; (b) the feature extraction setting is “tmabs”, “tstd”, “twl”, “trms”, and “tvar”.

The clusters for different classes slightly overlap in the reduced feature space, but this cannot be used to refute the high classification performance (99.81 ± 0.04%). That is because when the PCA transforms the original feature space into a new feature space whose components in turn have the highest variance possible, it merely produces a well-described coordinate system for the most informative projection, without consideration of class separation. In this case, the effect of signal compression by the PCA projection leads to low class separability of the PCA-reduced feature space.

C. Classification

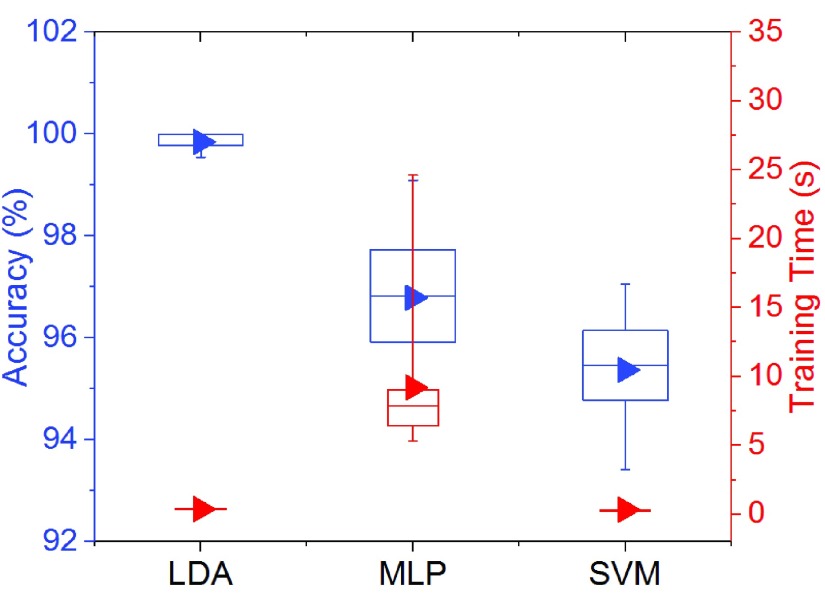

The offline classification accuracy using different classifiers is reported in Fig. 8. As before, the feature extraction setting is “tmabs”, “tstd”, “twl”, “trms”, and “tvar”. The classification performance of MLP was superior to that of SVM (96.79 ± 1.84% versus 95.7 ± 0.95%). However, MLP shows a distinct disadvantage in terms of training time (9.20 ± 6.12 s versus 0.33 ± 0.10 s). This illustrates that, despite the good approximation performance of the neural network, it has drawbacks such as slow convergence and a tendency to get stuck in local minima.

FIGURE 8.

Offline classification accuracy and training/validation time using different classifiers.

Although the classification ability of LDA in multi-class problems is limited, it performed well in classifying the nine gestures in this study, with a significantly high CA (99.85 ± 0.03%) and a short training time (0.37 ± 0.01 s). As it emphasizes the decision boundary, LDA is generally more robust against irrelevant outliers in the training data.

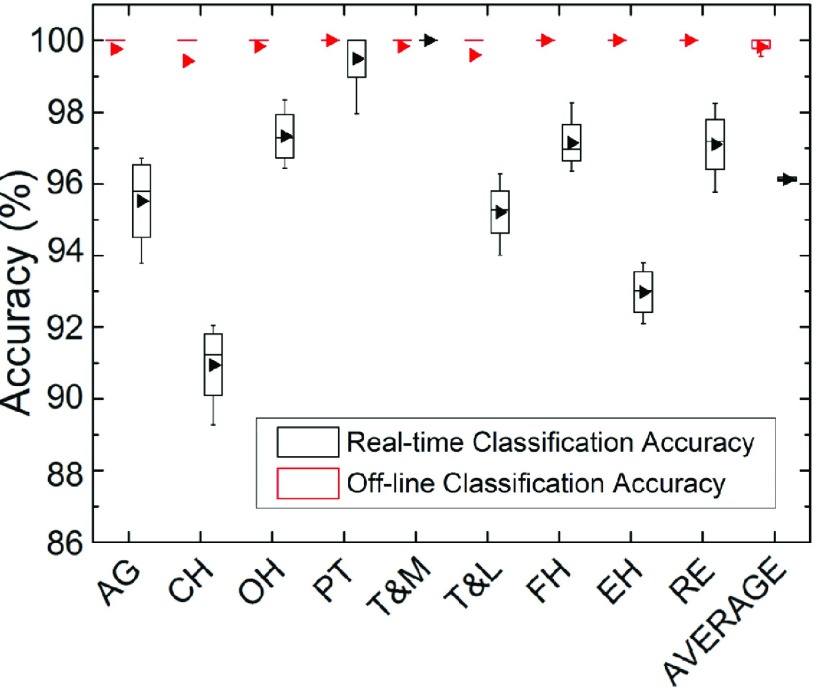

Figure 9 presents the offline and real-time CA of the nine movements, with “tmabs”, “tstd”, “twl”, “trms”, and “tvar” as the selected features and LDA as the classifier. The offline average CA of the nine movements (AG, CH, OH, PT, T&M, T&L, FH, EH, and RE) is significantly high (99.82 ± 0.43%). The real-time average CA of close hand was relatively low (90.95 ± 1.64%) compared with its offline CA (99.43 ± 1.50%). This results from the different ways in which the subjects held their fists in the recording section and in the real-time gesture recognition section. The real-time average CA of the nine movements (96.20 ± 0.47%) was superior to that of a commercial gesture input device named MYO, which can recognize five gestures.

FIGURE 9.

Offline and real time classification accuracy of nine movements.

D. Control

As an EMG-driven system that enables active response to the user’s intention is more effective than the passive mode, a five-finger dexterous robot hand to assist stroke rehabilitation therapy was designed in this study. The real-time gesture recognition result from the ML algorithm was mapped to instructions that control the 3D printed five-finger dexterous robot hand, as illustrated in Fig. 10. The response time of the system was around 190 ms, which is less than 300 ms, so the user performed rehabilitation training without being aware of the time delay.

FIGURE 10.

Real time gesture recognition results to control a five-finger dexterous robot.

The whole signal flow is as follows. First, the eight channels of raw sEMG signals from the forearm detected by the SWA were pre-processed by high-pass filters on the armband to largely remove motion artifact (usually below 20 Hz); the data were then amplified and digitized by the ADS1198 analog front-end. Second, the pre-processed sEMG data were wirelessly forwarded to the PC host via BLE, where they were streamed to BioPatRec for feature extraction and classification, as described in detail in Section III. Third, the real-time finger motion recognition result was used as the instruction to control the five-finger robot manipulator.

V. Discussion and Conclusion

This study presented a system for facilitating motor recovery. It demonstrated a significantly high average offline CA (99.82 ± 0.43%) and high real-time gesture recognition performance (96.20 ± 0.64%) in controlling a five-finger dexterous robot (Fig. 9). Compared with stroke rehabilitation systems based on virtual reality, the proposed prototype can give patients a more intuitive and authentic experience (Fig. 10). Worn around the user’s forearm, the smart wearable armband provides comfort and softness (Fig. 2) and can collect sEMG on the skin surface at any time, according to the needs and the actual situation. The third-order AR coefficients do not show an evident advantage, as shown in Fig. 5. The CCEAs (Fig. 6) and PCA (Fig. 7) evaluation results sufficiently demonstrate the effectiveness of our feature selection method and reveal the specific conflicting classes.

One limitation of this work is that it focused only on the user-specific condition, where the training data and the verification data come from the same subject. However, the user-independent condition is more essential: since the classifier does not need to be recalibrated when applied to a new user, it saves time. Related works were conducted in [45]–[48]. A representative work, [45], reported that the classification accuracy in the user-independent condition was 7.4% lower than in the user-specific condition. Part of the reason is that the strength exerted by different subjects varies; such individual differences affect the sEMG amplitudes and, in turn, the classification accuracy.

Additionally, although this design can recognize nine gestures in total by analyzing the muscle signals collected from the user’s forearm, more hand gestures need to be discriminated to achieve a more advanced post-stroke active rehabilitation training system. Experiments were conducted to recognize gestures other than the nine illustrated, and the results showed that their recognition accuracy was relatively low (70%–80%) compared with that of the nine. This is because the features extracted from the eight measured channels are insufficient to cover all hand gestures. One approach to identifying more gestures with high recognition accuracy is to increase the number of sEMG channels. However, this may lead to higher design requirements and computational load [51], [52]. In addition, BioPatRec can process a maximum of eight channels. Therefore, to accommodate more channels, the algorithm itself needs to be improved to balance observational latency and recognition accuracy.

Furthermore, the real-time data in this work were obtained immediately after the offline training. An experiment in which the armband was taken off and then put back on was also performed, and the real-time accuracy was observed to drop by 6%. This is because, when the armband is put back on, the electrodes may shift slightly from their previous positions, leading to an electrode deployment mismatch. An effective approach to ensuring proper electrode deployment is to design and fabricate an elbow-length glove with the developed smart armband integrated on its inner side at the forearm position. The proposed glove scheme would help prevent the armband from rotating around the user’s arm. When the user puts on the elbow-length glove, the electrodes are attached to the forearm. Because the electrodes are firmly embedded in the glove, their positions on the forearm remain unchanged across trials in which the glove is taken off and put back on. This mechanism would largely address the issue of electrode deployment mismatch.
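Why an electrode shift degrades recognition can be seen in a deliberately exaggerated toy example. The sketch below assumes that armband rotation by one electrode position acts as a circular shift of the eight channels, and it uses a nearest-centroid rule in place of the classifiers used in this work; the gesture names and amplitude values are made up for illustration.

```python
def rotate_channels(pattern, shift):
    """Circularly shift a channel pattern, mimicking armband rotation."""
    shift %= len(pattern)
    if shift == 0:
        return list(pattern)
    return list(pattern[-shift:]) + list(pattern[:-shift])

def nearest_centroid(pattern, centroids):
    """Return the gesture whose centroid is closest in squared Euclidean distance."""
    def dist2(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda gesture: dist2(pattern, centroids[gesture]))

# Hypothetical per-channel amplitude centroids learned before the armband
# was taken off: each gesture mainly activates one (neighboring) channel.
centroids = {
    "gesture_1": [0.9, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1],
    "gesture_2": [0.1, 0.9, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1],
}

# The same muscle activity, measured before and after a one-electrode rotation.
performed = [0.9, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1]
before = nearest_centroid(performed, centroids)
after = nearest_centroid(rotate_channels(performed, 1), centroids)
print(before, after)
```

The shifts observed in practice are much smaller than a full electrode position, which is consistent with a moderate accuracy drop rather than a systematic confusion, but the mechanism is the same: the trained model expects each channel to see the same muscle region as during training.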

In this work, an IoT-based stroke rehabilitation system was implemented, consisting of a smart wearable armband, machine learning algorithms, and a 3D printed robot hand. The smart wearable armband is unobtrusive, comfortable, and easy to use; it can be applied to the user’s forearm without professional knowledge or help from clinicians, which largely saves clinicians’ time. The utility of the CCEAs and PCA was demonstrated by the high classification accuracy obtained with the selected feature sets. A 3D printed five-finger robot hand driven by ML algorithms was developed for stroke recovery. Real-time assistance from the robot hand gives users feedback on their muscle activity and helps them strengthen their motion patterns, which demonstrates the feasibility of robot-assisted active training after stroke.

Acknowledgment

The authors would like to express their gratitude to all the participants in this experiment.

Funding Statement

This work was supported in part by the Fundamental Research Funds for the Central Universities and by the Science Fund for Creative Research Groups of the National Natural Science Foundation of China under Grant 51521064.

References

- [1] Muth C. C., “Recovery after stroke,” JAMA, vol. 316, no. 22, p. 2440, 2016.

- [2] Twitchell T. E., “The restoration of motor function following hemiplegia in man,” Brain, vol. 74, no. 4, pp. 443–480, 1951.

- [3] Krebs H. I. et al., “Robot-aided neurorehabilitation: A robot for wrist rehabilitation,” IEEE Trans. Neural Syst. Rehabil. Eng., vol. 15, no. 3, pp. 327–335, Sep. 2007.

- [4] Dipietro L. et al., “Changing motor synergies in chronic stroke,” J. Neurophysiol., vol. 98, no. 2, pp. 757–768, Aug. 2007.

- [5] Van Peppen R. P., Kwakkel G., Wood-Dauphinee S., Hendriks H. J., Van der Wees P. J., and Dekker J., “The impact of physical therapy on functional outcomes after stroke: What’s the evidence?” Clin. Rehabil., vol. 18, no. 8, pp. 833–862, Dec. 2004.

- [6] Zhang X. and Zhou P., “High-density myoelectric pattern recognition toward improved stroke rehabilitation,” IEEE Trans. Biomed. Eng., vol. 59, no. 6, pp. 1649–1657, Jun. 2012.

- [7] Song R., Tong K.-Y., Hu X., and Li L., “Assistive control system using continuous myoelectric signal in robot-aided arm training for patients after stroke,” IEEE Trans. Neural Syst. Rehabil. Eng., vol. 16, no. 4, pp. 371–379, Aug. 2008.

- [8] Lum P. S., Burgar C. G., Kenney D. E., and Van der Loos H. F. M., “Quantification of force abnormalities during passive and active-assisted upper-limb reaching movements in post-stroke hemiparesis,” IEEE Trans. Biomed. Eng., vol. 46, no. 6, pp. 652–662, Jun. 1999.

- [9] Hu X. L., Tong K.-Y., Song R., Zheng X. J., and Leung W. W. F., “A comparison between electromyography-driven robot and passive motion device on wrist rehabilitation for chronic stroke,” Neurorehabil. Neural Repair, vol. 23, no. 8, pp. 837–846, 2009.

- [10] Curran E. A. and Stokes M. J., “Learning to control brain activity: A review of the production and control of EEG components for driving brain–computer interface (BCI) systems,” Brain Cognit., vol. 51, no. 3, pp. 326–336, 2003.

- [11] Liarokapis M. V., Artemiadis P. K., Kyriakopoulos K. J., and Manolakos E. S., “A learning scheme for reach to grasp movements: On EMG-based interfaces using task specific motion decoding models,” IEEE J. Biomed. Health Informat., vol. 17, no. 5, pp. 915–921, Sep. 2013.

- [12] Lee S. W., Wilson K. M., Lock B. A., and Kamper D. G., “Subject-specific myoelectric pattern classification of functional hand movements for stroke survivors,” IEEE Trans. Neural Syst. Rehabil. Eng., vol. 19, no. 5, pp. 558–566, Oct. 2011.

- [13] Hudgins B., Parker P., and Scott R. N., “A new strategy for multifunction myoelectric control,” IEEE Trans. Biomed. Eng., vol. 40, no. 1, pp. 82–94, Jan. 1993.

- [14] Englehart K., Hudgins B., Parker P. A., and Stevenson M., “Classification of the myoelectric signal using time-frequency based representations,” Med. Eng. Phys., vol. 21, nos. 6–7, pp. 431–438, 1999.

- [15] Yin Y. H., Fan Y. J., and Xu L. D., “EMG and EPP-integrated human–machine interface between the paralyzed and rehabilitation exoskeleton,” IEEE Trans. Inf. Technol. Biomed., vol. 16, no. 4, pp. 542–549, Jul. 2012.

- [16] Ortiz-Catalan M., Sander N., Kristoffersen M. B., Håkansson B., and Brånemark R., “Treatment of phantom limb pain (PLP) based on augmented reality and gaming controlled by myoelectric pattern recognition: A case study of a chronic PLP patient,” Front. Neurosci., vol. 8, no. 8, p. 24, 2014.

- [17] Huang Y., Englehart K. B., Hudgins B., and Chan A. D. C., “A Gaussian mixture model based classification scheme for myoelectric control of powered upper limb prostheses,” IEEE Trans. Biomed. Eng., vol. 52, no. 11, pp. 1801–1811, Nov. 2005.

- [18] Khong L. M. D., Gale T. J., Jiang D., Olivier J. C., and Ortiz-Catalan M., “Multi-layer perceptron training algorithms for pattern recognition of myoelectric signals,” in Proc. IEEE 6th Biomed. Eng. Int. Conf., Oct. 2013, pp. 1–5.

- [19] Oskoei M. A. and Hu H., “Support vector machine-based classification scheme for myoelectric control applied to upper limb,” IEEE Trans. Biomed. Eng., vol. 55, no. 8, pp. 1956–1965, Aug. 2008.

- [20] Yuan R., Li Z., Guan X., and Xu L., “An SVM-based machine learning method for accurate internet traffic classification,” Inf. Syst. Frontiers, vol. 12, no. 2, pp. 149–156, 2010.

- [21] Wang X., Chen X., and Bi Z., “Support vector machine and ROC curves for modeling of aircraft fuel consumption,” J. Manage. Anal., vol. 2, no. 1, pp. 22–34, 2015.

- [22] Shen L., Wang H., Da Xu L., Ma X., Chaudhry S., and He W., “Identity management based on PCA and SVM,” Inf. Syst. Frontiers, vol. 18, no. 4, pp. 711–716, 2016.

- [23] Cheng Q., Zhou H., and Cheng J., “The Fisher–Markov selector: Fast selecting maximally separable feature subset for multiclass classification with applications to high-dimensional data,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 33, no. 6, pp. 1217–1233, Jun. 2011.

- [24] Liu J., Li X., Li G., and Zhou P., “EMG feature assessment for myoelectric pattern recognition and channel selection: A study with incomplete spinal cord injury,” Med. Eng. Phys., vol. 36, no. 7, pp. 975–980, 2014.

- [25] Ortiz-Catalan M., Brånemark R., and Håkansson B., “BioPatRec: A modular research platform for the control of artificial limbs based on pattern recognition algorithms,” Source Code Biol. Med., vol. 8, no. 1, pp. 11–28, 2013.

- [26] Nilsson N., Håkansson B., and Ortiz-Catalan M., “Classification complexity in myoelectric pattern recognition,” J. Neuroeng. Rehabil., vol. 14, no. 1, p. 68, 2017.

- [27] Xiang S., Nie F., and Zhang C., “Learning a Mahalanobis distance metric for data clustering and classification,” Pattern Recognit., vol. 41, no. 12, pp. 3600–3612, 2008.

- [28] Cowling B. J. and Hedley A. J., “The Mahalanobis distance,” Brit. Med. J., 2017.

- [29] Zheng X., Chen W., and Cui B., “Multi-gradient surface electromyography (SEMG) movement feature recognition based on wavelet packet analysis and support vector machine (SVM),” in Proc. 5th Int. Conf. IEEE Bioinformat. Biomed. Eng., May 2011, pp. 1–4.

- [30] Guo W., Sheng X., Liu H., and Zhu X., “Mechanomyography assisted myoeletric sensing for upper-extremity prostheses: A hybrid approach,” IEEE Sensors J., vol. 17, no. 10, pp. 3100–3108, May 2017.

- [31] Guo W., Sheng X., Liu H., and Zhu X., “Development of a multi-channel compact-size wireless hybrid SEMG/NIRS sensor system for prosthetic manipulation,” IEEE Sensors J., vol. 16, no. 2, pp. 447–456, Jan. 2016.

- [32] Farrell T. R. and Weir R. F., “A comparison of the effects of electrode implantation and targeting on pattern classification accuracy for prosthesis control,” IEEE Trans. Biomed. Eng., vol. 55, no. 9, pp. 2198–2211, Sep. 2008.

- [33] Yan H., Da Xu L., Bi Z., Pang Z., Zhang J., and Chen Y., “An emerging technology—Wearable wireless sensor networks with applications in human health condition monitoring,” J. Manage. Anal., vol. 2, no. 2, pp. 121–137, 2015.

- [34] Fan Y. J., Yin Y. H., Da Xu L., Zeng Y., and Wu F., “IoT-based smart rehabilitation system,” IEEE Trans. Ind. Informat., vol. 10, no. 2, pp. 1568–1577, May 2014.

- [35] Yang G. et al., “A health-IoT platform based on the integration of intelligent packaging, unobtrusive bio-sensor, and intelligent medicine box,” IEEE Trans. Ind. Informat., vol. 10, no. 4, pp. 2180–2191, Nov. 2014.

- [36] Xu B., Xu L., Cai H., Jiang L., Luo Y., and Gu Y., “The design of an m-health monitoring system based on a cloud computing platform,” Enterprise Inf. Syst., vol. 11, no. 1, pp. 17–36, 2017.

- [37] Yin Y., Zeng Y., Chen X., and Fan Y., “The Internet of Things in healthcare: An overview,” J. Ind. Inf. Integr., vol. 1, pp. 3–13, Mar. 2016.

- [38] Xu B., Da Xu L., Cai H., Xie C., Hu J., and Bu F., “Ubiquitous data accessing method in IoT-based information system for emergency medical services,” IEEE Trans. Ind. Informat., vol. 10, no. 2, pp. 1578–1586, May 2014.

- [39] Xu L. D., He W., and Li S., “Internet of Things in industries: A survey,” IEEE Trans. Ind. Informat., vol. 10, no. 4, pp. 2233–2248, Nov. 2014.

- [40] Chen Y., “Industrial information integration—A literature review 2006–2015,” J. Ind. Inf. Integr., vol. 2, pp. 30–64, Jun. 2016.

- [41] Li H., Da Xu L., Wang J. Y., and Mo Z. W., “Feature space theory in data mining: Transformations between extensions and intensions in knowledge representation,” Expert Syst., vol. 20, no. 2, pp. 60–71, 2003.

- [42] Li H. et al., “Feature space theory-a mathematical foundation for data mining,” Knowl.-Based Syst., vol. 14, pp. 253–257, 2001.

- [43] Ortiz-Catalan M. (Aug. 5, 2016). BioPatRec. [Online]. Available: https://github.com/biopatrec/biopatrec/wiki

- [44] Lu Z., Chen X., Zhao Z., and Wang K., “A prototype of gesture-based interface,” in Proc. Conf. Human-Comput. Interact. Mobile Devices Services (DBLP), Stockholm, Sweden, Aug./Sep. 2011, pp. 33–36.

- [45] Zhang X., Chen X., Li Y., Lantz V., Wang K., and Yang J., “A framework for hand gesture recognition based on accelerometer and EMG sensors,” IEEE Trans. Syst., Man, Cybern. A, Syst., Humans, vol. 41, no. 6, pp. 1064–1076, Nov. 2011.

- [46] Saponas T. S., Tan D. S., Morris D., and Balakrishnan R., “Demonstrating the feasibility of using forearm electromyography for muscle-computer interfaces,” in Proc. SIGCHI Conf. Hum. Factors Comput. Syst., 2008, pp. 515–524.

- [47] Kim J., Mastnik S., and André E., “EMG-based hand gesture recognition for realtime biosignal interfacing,” in Proc. Int. Conf. Intell. User Interfaces, Jan. 2008, pp. 30–39.

- [48] Kim J., Wagner J., Rehm M., and André E., “Bi-channel sensor fusion for automatic sign language recognition,” in Proc. IEEE Int. Conf. Autom. Face, Gesture Recognit., Sep. 2008, pp. 1–6.

- [49] Bunderson N. E. and Kuiken T. A., “Quantification of feature space changes with experience during electromyogram pattern recognition control,” IEEE Trans. Neural Syst. Rehabil. Eng., vol. 20, no. 3, pp. 239–246, May 2012.

- [50] Zhang H., Zhao Y., Yao F., Xu L., Shang P., and Li G., “An adaptation strategy of using LDA classifier for EMG pattern recognition,” in Proc. IEEE Eng. Med. Biol. Soc. Annu. Conf., Jul. 2013, pp. 4267–4270.

- [51] Wang D., Zhang X., Gao X., Chen X., and Zhou P., “Wavelet packet feature assessment for high-density myoelectric pattern recognition and channel selection toward stroke rehabilitation,” Front. Neurol., vol. 7, no. 3, p. s197–207, 2016.

- [52] Li Y., Chen X., Zhang X., and Zhou P., “Several practical issues toward implementing myoelectric pattern recognition for stroke rehabilitation,” Med. Eng. Phys., vol. 36, no. 6, pp. 754–760, 2014.