Abstract.

The ability to correlate anatomical knowledge and medical imaging is crucial to radiology and as such, should be a critical component of medical education. However, we are hindered in our ability to teach this skill because we know very little about what expert practice looks like, and even less about novices’ understanding. Using a unique simulation tool, this research conducted cognitive clinical interviews with experts and novices to explore differences in how they engage in this correlation and the underlying cognitive processes involved in doing so. This research supported what has been known in the literature, that experts are significantly faster at making decisions on medical imaging than novices. It also offers insight into the spatial ability and reasoning that is involved in the correlation of anatomy to medical imaging. There are differences in the cognitive processing of experts and novices with respect to meaningful patterns, organized content knowledge, and the flexibility of retrieval. Presented are some novice–expert similarities and differences in image processing. This study investigated extremes, opening an opportunity to investigate the sequential knowledge acquisition from student to resident to expert, and where educators can help intervene in this learning process.

Keywords: medical imaging, human anatomy, simulation, expert–novice, cognition

1. Introduction

Radiological anatomy knowledge is highly relevant to clinicians’ daily work as imaging studies are becoming more central to clinical pathways and clinical decision making.1 With the growing use of imaging studies,2,3 being able to correlate the elements of the physical exam and the medical imaging studies is an important clinical skill for physicians to have for patient care.

For example, breast cancer patients will often have image-guided biopsies of breast lesions and an axillary node prior to receiving neoadjuvant chemotherapy. Many times, the tumor lesions are no longer visible after the chemotherapy, but the markers placed at the time of image-guided biopsy remain visible. In the breast, the visible biopsy markers can be localized with mammography. However, the markers placed in the axillary nodes may not be visible for localization by ultrasound or mammography. A patient’s cross-sectional imaging studies [computed tomography (CT) or magnetic resonance] can be used to guide the surgeon to the location of the biopsy markers. This involves using prior imaging studies, the surrounding anatomical structures, and how they relate to the external skin surface. This work is challenging and complex but is a common practice for patients whose disease requires coordinated care of the radiologist and surgeon to aid in the removal of the entire tumor.

As this example demonstrates, interpreting radiology images and coordinating them with anatomy is a complex skill. It involves combining the information from visual pattern recognition, anatomical knowledge, knowledge of pathological processes, and patient-specific information.4 It goes beyond the task of searching, interpreting, and reading images and exemplifies the amalgamation of perceptual and cognitive skills.5 Given its importance and complexity, how can we teach this coordination of skills in medical education?

Rubin4 postulated that the cognitive sciences might help to provide insights into how radiologists interpret imaging studies and thus provide resources to trainees in radiology. To effectively teach students, cognitive science suggests that we should know the extremes of this skill—the final state of this skill (what expertise looks like) and where students start (the novice state).6–10 Understanding how experts reason through problems can provide a model toward which an effective educational process can aim. In addition, understanding how novices engage in similar situations provides information about the skills and knowledge upon which to build instruction.

But how can we study novice reasoning in a way that allows us to systematically compare it to expert reasoning? Studying how experts do these correlations in naturalistic, clinical settings is possible, but we cannot study novices in the same spaces. As a result, we need to simulate these activities outside of clinical settings and engage both experts and novices in the same acts of reasoning. Simulations have been used with great success both to support and study medical reasoning.7,11–16 The ability to evaluate a learner within an active engagement setting around a simulation allows researchers to view their direct performance and also ask questions in the moment about their performance (something that is not possible in naturalistic clinical settings).

In this study, we use a specially designed simulation tool to explore the thought processes of novices and experts as they make correlations between anatomy and medical imaging. Although there is a large body of research in radiology focused on image perception and lesion detection,17–27 we know little about how either experts or novices integrate the imaging studies commonly used in radiology and medical anatomy. Knowing how experts and novices reason about this correlation will support us as we consider how to redesign medical education to support this important clinical skill.

2. Background

2.1. Novice–Expert Paradigm

The expert–novice paradigm, long used in cognitive science research, seeks to understand the beginning and end points of a learning objective or a domain-specific expertise. An expert is someone who excels within a domain. Experts possess an organized body of knowledge that can be effortlessly accessed and used.28 In contrast, a novice is someone who is completely new to an area and who does not possess a significant amount of pre-existing knowledge of that discipline.29 These two groups can be viewed as extremes of mastery.

There are two main ways researchers study expertise.30 The first is to study experts within their domain and to understand how they perform. The goal here is to understand their superior performance, so the methods of assessment need to accurately portray their expertise. This method looks at how different these few are from the multitudes and makes the assumption that there is something inherently unique about these individuals (absolute approach).

The second is to approach the study of expertise by comparing experts with novices. This approach makes the assumption that expertise is an attainable goal for novices. Chi8,30 calls this latter approach the relative approach. An expert in this context is someone who is more advanced and measured within the domain of his or her expertise. The goal of the relative approach is to understand how experts excel, so that others can be helped to reach, or to reach more quickly, a level of expertise. This latter approach suggests that there is a continuum from novice to expert, and education/training can help people build on their prior knowledge to proceed along the continuum.

2.2. Expert–Novice Differences in Radiology

Most research of expertise in medicine has focused on expertise in internal medicine. Radiology differs from other areas of medicine in that it is highly reliant on visual input. It involves a substantial perceptual component, formalized medical knowledge, and knowledge gained in clinical experience. It also involves integrating bodies of medical knowledge that have distinct structures to them, including anatomy, physiology, pathology, and the projective geometry of radiography (medical physics).31 However, radiology also differs as a discipline of expertise as it needs to answer three questions with every image interpretation: what is it, where is it, and what is it that makes it what it is.32

Studies examining expertise in radiology have mainly focused on perceptual skills. For example, Berbaum and colleagues33 tested the perceptual phenomenon of “satisfaction of search” by adding simulated nodule distractors on chest x-rays. Similarly, eye-tracking patterns were studied and demonstrated that patterns vary with the level of expertise.34–36 Analogously, different search patterns with respect to visual attention and correct interpretation have been seen in experts and novices.34,37,38 These studies have looked only at the perception piece of radiology, not its full complexity.

Far fewer studies have examined how expert radiologists analyze imaging studies and relate them to a clinical situation. Two approaches have been described about how radiologist expertise relates the imaging findings to the clinical situation. First, expertise is an accumulation of specialized schemata that are sensitive to specific disease states (where schemata are the perception of a finding that is dependent upon the other surrounding indirect evidence).10,39 Second, expertise is the development of feature lists with accurate status values and appropriate combining weights to make a diagnosis.40 These two approaches ascribe generalizations. More research is needed to determine if these are domain-specific.

2.3. Simulation in the Study of Medical Reasoning

Studying experts and novices in real medical situations would be logistically challenging, and it would be unethical to allow novices to practice medicine without the authorized expertise. Thus, simulation can provide a safe “realistic” environment or situation that mimics the medical situation or scenario, without the risk to real patients. Simulation can be used to gain expertise in a specific technique (e.g., simulation for performance and training), but simulation can also be used to understand the process and reasoning that is the essence of expertise. There is a large and diverse body of research that has investigated the use of simulation in skill acquisition,41–50 and a smaller but growing body of literature on understanding reasoning and cognition that occurs during simulation.51–55 Researchers have used simulation to evaluate different facets of learning; some of these include image perceptual skills, knowledge application and critical thinking, and experiential learning to support prior knowledge.

Image perceptual skills are a growing interest in the education of trainees. A computer-based simulation system was used to study whether or not this simulated environment can determine preparedness of radiology residents for being on-call56–58 and reading mammograms.59 Additionally, simulations using radiology workstations have been used to explore perception differences in experts and novices, and explore reasons for missed diagnoses,60,61 search pattern training to improve nodule detection,62,63 and central venous line placement64 on chest x-rays.

Virtual patients have been studied in nursing students as they provide clinical assessments. These simulations demonstrated progressive clinical reasoning and critical thinking, and over time developed awareness in the nursing students of what to focus on in clinical practice.65,66 Similarly, high-fidelity patient simulations were used to develop critical thinking skills related to physiology in medical students,67 and virtual patient simulators to develop clinical reasoning in surgical oncology.68

Basic science concepts are difficult for many medical students to recognize as being clinically relevant. Simulations that provide experiential learning have been shown to strengthen those basic science concepts.69 Sheakley and colleagues70 used a high-fidelity cardiovascular simulation to bridge the gap between basic science and clinical knowledge using clinical scenarios that incorporated auscultation with a stethoscope and electrocardiogram tracings. Similarly, a thoracic ultrasound simulation with medical students increased their anatomical knowledge and clinical relevance of the thoracic anatomy.71

The simulation system used in this study embodies methods in which simulation has been used in medical reasoning. First, it offers an experiential learning by the immersion in an activity72,73 that correlates two domains (cross-sectional imaging and physical examination) that are known to the participants. Although the participants have some knowledge of cross-sectional imaging, they will be asked to interpret the image that they are presented and understand the anatomy that is portrayed in that image.23,27 Finally, they will have to use their prior knowledge7,8 of medical imaging and the physical examination anatomy, and evaluate how the two are related.

Our simulation system is unique in that it allows us to specifically study the ability of experts and novices to make the direct correlation of a cross-sectional image to the physical exam traits of a human torso. On a real patient, the correlation of the physical exam and the person’s CT scan could not directly happen unless this evaluation took place in the CT suite in a radiology department, which would potentially expose the patient and users to unwarranted radiation. Although we could perform direct anatomy correlation on a patient to the physical exam with ultrasound, most novices are not versed in ultrasound anatomy and have more exposure to cross-sectional CT imaging. The ability to move the probe over the body and directly manipulate the CT images and correlate the physical body landmarks exceeds the experience that can be offered with a computer screen-based simulation of a similar CT image set.

3. Study Objectives and Hypothesis

The objective of this study was to establish features of experts and novices when correlating medical anatomy and cross-sectional imaging within a specialized simulation system. Studies examining expertise in coordinating imaging and the physical exam are important because of the specialized nature of radiological expertise. However, research from cognitive science that looks at expertise across domains also indicates there are generic features of expertise which are likely present in some form in radiological expertise.

This study looked at the ways in which the general features of expertise show up in radiological expertise. In doing so, this study builds on existing research by investigating the differences between experts and novices when correlating medical anatomy and cross-sectional imaging within the framework of simulation.

4. Methods

Expert and novice volunteers participated in this Institutional Review Board (IRB) approved exempt study correlating cross-sectional medical imaging with the physical exam and anatomy of a specialized simulation tool. All participants signed an IRB approved consent form. Qualitative and quantitative data were collected during each simulation session with prescribed tasks. The data provide insight into the features of expertise in this subdomain of radiology.

4.1. Simulation Tool

As it would be difficult to collect controlled data of radiologists and novices looking at actual images in a clinical setting, we developed a simulation tool specifically for these types of scenario evaluations. The simulation tool allows the user to control a calibrated CT scan of the human torso by moving a handheld probe over the torso and viewing the “real-time” images on an iPad. The integration of the handheld probe controlling the images allows for direct correlation of the physical exam and the imaging at that location (Fig. 1).

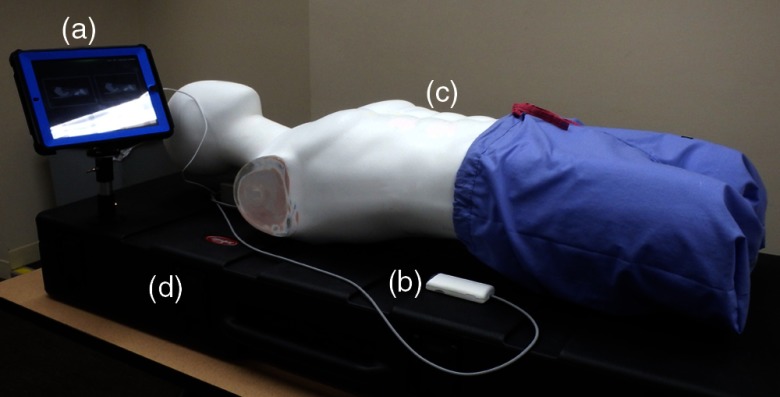

Fig. 1.

Components of the simulation system. (a) iPad image viewer and control panel; (b) handheld probe that moves image set in integration with the torso; (c) torso model for physical integration with the image set and handheld probe; and (d) mounted box housing all the controls.

The axial CT images are 3-mm-thick slices and the reconstructed sagittal images are 2.5-mm-thick slices. The handheld probe works through a magnetic motion-tracking sensor that is able to accurately measure position and orientation with six degrees of freedom. The system tracks the probe motion every 500 ms using epoch time. The axial and sagittal image matrices are to . These matrices maximize the 512-pixel imaging matrix of the CT images. The external landmarks facilitate the correlation of this image matrix to the internal anatomy.

The image set is an anonymized normal male CT scan of the abdomen and pelvis with intravenous contrast. This anatomical region is accessible to the physical examination and has important clinical and external landmarks commonly used to evaluate the underlying anatomy.

4.2. Participants

Ten radiologist experts, who interpret abdominal imaging as part of their practice (average age 47.4 years with 13.5 average years of experience; nine males, one female), participated. In this study, experts were defined by their skill and years of training in evaluating abdominal cross-sectional imaging. The novices, senior (fourth year) medical students, were defined by their degree of background knowledge, as people who have some exposure to medicine and medical imaging, but with limited imaging knowledge.

Research shows that expertise is heavily domain-dependent.10,31,74 Thus, the radiologists in this cohort interpret abdominal imaging to exclude nonexpert bias from radiologists that were subspecialized in other fields such as neuroradiology, musculoskeletal, chest or breast imaging. Six of the radiologists were community-based and four radiologists were academic-based within the same large midwest university radiology practice group.

Eleven senior medical students from a large midwest medical school (average age 27.4 years; five males, six females) were invited using a snowball invitation method.75–77 Students from the first author’s advanced anatomy course, who participated in a pilot study using the simulation tool, invited peers to participate. These potential participants’ contact information was sent to an administrative assistant, who arranged the session times and dates. These students represent the novice group for the study.

These novices, in this study, had a traditional course-based curriculum with a strong reliance on multiple-choice tests. The medical school curriculum had 2 years of basic science and 2 years of clinical-based medical rotations. This novice group has foundational knowledge of the basic sciences (including anatomy) and the applied clinical experiences to engage with the study tasks, but not enough knowledge and skill to be considered experts.

4.3. Study Activities

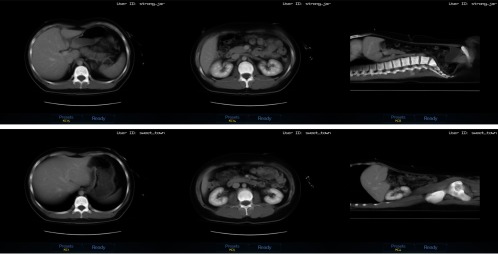

Participants had the same sequence of CT images (Figs. 2 and 3) to locate in the body. The CT images consisted of a practice case (not used in quantitative data analysis), then two axial images, a sagittal image, two more axial images, and finally, one more sagittal image. All participants had the same image set and order for equivalent comparisons across levels of expertise.

Fig. 2.

Location within the simulation torso where the image slices are located. (Axial images marked with pink tabs, sagittal images by yellow tabs, and purple tab represents the test image location.)

Fig. 3.

Axial and sagittal images of the abdomen, corresponding to the external simulation locations noted in Fig. 2, which the participants localized in the simulation. (Left to right and top to bottom; KC15, KC14, KC8, KC1, KC5, and KC4).

An “event” was defined as the presentation of one CT image to localize within the simulation body. The participants used the handheld probe to localize that specific CT image (slice) to the best of their ability within the human simulation torso. Once localized, they logged in that position of the probe by touching a button on the iPad. They visualized their success in localization with side-by-side images on the iPad screen of the target image and where they localized the image to be in the body. If the images did not match, they matched the two images by moving the probe in the appropriate direction. They finalized this step by touching a button on the iPad.

4.4. Data Collected

4.4.1. Simulation session data

The simulation session data record the performance of the participant’s ability to correlate a CT image to its location in the physical body through the assessment data aggregator for game environments computer program specifically developed for this simulation. The preassigned CT images for each event have a known coordinate axis in space. When the user places the probe on the model and sets that location, this records their start point. The computer then logs the movements of the probe until the user completes the task. For axial images, the probe only records superior and inferior movements, and for sagittal images, the probe only records the left and right movements.
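The plane-dependent logging rule described above can be illustrated with a short sketch. This is a hypothetical illustration rather than the study's software: the `ProbeSample` record, its field names and axis conventions, and the 1-mm movement threshold are all assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass
class ProbeSample:
    """One probe reading (hypothetical record layout)."""
    t_ms: int    # sample time in milliseconds
    x_mm: float  # left-right position (assumed axis convention)
    z_mm: float  # superior-inferior position (assumed axis convention)


def tracked_coordinate(sample: ProbeSample, plane: str) -> float:
    """Return the only coordinate that matters for the given image plane."""
    if plane == "axial":
        return sample.z_mm  # axial events: superior/inferior movement only
    if plane == "sagittal":
        return sample.x_mm  # sagittal events: left/right movement only
    raise ValueError(f"unknown plane: {plane!r}")


def count_movements(samples, plane, threshold_mm=1.0):
    """Count consecutive samples whose tracked coordinate changed by at
    least threshold_mm (the threshold is an assumption, not a study value)."""
    coords = [tracked_coordinate(s, plane) for s in samples]
    return sum(1 for a, b in zip(coords, coords[1:]) if abs(b - a) >= threshold_mm)
```

Keeping the plane-to-axis mapping in one function means the same movement counter can serve both axial and sagittal events.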

In addition to location, the simulation session recorded continuous time points. Probe movements are recorded every 500 ms. However, only three time points were used for analysis in this study: the moment the participant saw the target image (preset), the moment when the participant localized the target image with the probe on the simulation torso (ready), and finally, the moment when they completed the final matching of their original probe location to the target image (complete).
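As a minimal sketch of how the two analysis intervals follow from the three time points, the snippet below derives "preset to ready" and "ready to completion" durations from millisecond timestamps. The `EventTimes` record and its field names are assumptions made for illustration, and the timestamps in the usage example are invented.

```python
from dataclasses import dataclass


@dataclass
class EventTimes:
    """Three time points for one event, in epoch milliseconds (hypothetical layout)."""
    preset_ms: int    # target image shown to the participant
    ready_ms: int     # participant logs the initial probe location
    complete_ms: int  # participant finishes matching the images


def segment_durations(times: EventTimes) -> dict:
    """Return the two analysis intervals in seconds."""
    if not (times.preset_ms <= times.ready_ms <= times.complete_ms):
        raise ValueError("time points must be in preset, ready, complete order")
    return {
        "preset_to_ready_s": (times.ready_ms - times.preset_ms) / 1000,
        "ready_to_completion_s": (times.complete_ms - times.ready_ms) / 1000,
    }


# Invented timestamps, for illustration only.
print(segment_durations(EventTimes(1_000, 21_500, 39_000)))
```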

4.4.2. Think aloud data

While the participants were locating the position of the image within the simulation body, they were encouraged to verbalize their thoughts. Think aloud is a method of gathering information that provides rich verbal data about an individual’s reasoning during a problem task. It provides in-the-moment information about what is being concentrated on and how information is structured during a problem-solving task.78,79 This method provides valid data on the underlying thought processes that are occurring during the activity.80

Although think aloud can provide access to cognition, Fonteyn et al.78 found that when subjects are working under a heavy cognitive load, they tend to stop verbalizing or provide less complete verbalizations. To ensure capturing complete data in situations when participants neglect to verbalize, questions related to that event were asked right after completing the task. The sessions were audio-recorded with permission to allow transcription for analysis.

Despite the cognitive load of think alouds, think alouds remain a predominant methodology in the expert/novice research paradigm.81,82 Across a variety of domains, researchers use data comparing expert and novice performance during think alouds to make claims about differences in cognitive processing between experts and novices.79,80 In these studies, experts and novices are compared with one another but not to a “control” group that engages in the task without thinking aloud. Although we acknowledge the potential differing effects of cognitive load on expert and novices, we carried out this study in a way consistent with the norms of the expert–novice paradigm. Further research could unpack the potential impact of thinking aloud on participants’ performance.

4.5. Data Analysis

4.5.1. Quantitative data analysis

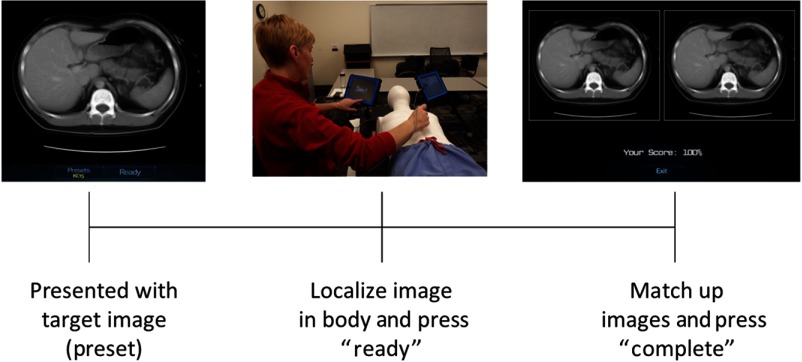

One-way analyses of variance (ANOVAs) were used to assess mean performance differences between experts and novices within different time segments of the simulation (preset to ready, ready to completion) (Fig. 4). Here, “preset” is the time point when the participant is presented with the target image on the iPad, “ready” is when they localized the target image by placing the probe on the simulation torso, and “completion” is when they made adjustments to match up the target image.

Fig. 4.

Simulation event demonstrates the interaction of a participant with the simulation tool. Schematic steps for each image (“preset to ready” and “ready to completion”) which correlate to the time points for analysis.
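For readers unfamiliar with the one-way ANOVA applied to each time segment, the following sketch computes the F-statistic from first principles. It is an illustration only: the study reports using SPSS, the function name is hypothetical, and the sample durations are invented.

```python
from statistics import fmean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = fmean([v for g in groups for v in g])
    group_means = [fmean(g) for g in groups]

    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Variation of observations around their own group mean.
    ss_within = sum((v - m) ** 2
                    for g, m in zip(groups, group_means) for v in g)

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Invented durations in seconds, for illustration only.
experts = [1.0, 2.0, 3.0]
novices = [2.0, 3.0, 4.0]
print(one_way_anova_f([experts, novices]))
```

With two groups, this F-statistic equals the square of the pooled-variance independent t-statistic, so the one-way ANOVA and the t-test lead to the same conclusion in that case.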

Two-way ANOVAs were used to calculate the interaction of two variables of the simulation activity and the level of expertise. To evaluate if the differences in performance were due to a learned effect over the course of the simulation session, a two-way mixed ANOVA with repeated measures was performed. Statistical analysis was performed using Statistical Package for the Social Sciences (SPSS) and Laerd Statistics.83

The percentage differences between individual groups were evaluated using the “” Chi-square test,84,85 and Pearson Chi-square test using SPSS. Significance was assessed at .

4.5.2. Qualitative data analysis

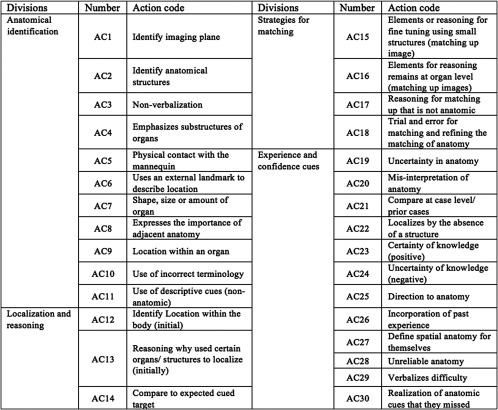

The transcripts from the simulation events were coded with codes modified (Fig. 5) from Crowley et al.,86 who investigated visual diagnostic performance expertise in pathology. Crowley and colleagues86 used large categories of data examination, data exploration, interpretation, control process, and operational verbalizations. Using this as a foundation, the action codes were divided based on anatomical identification (AC1-AC11), localization and reasoning (AC12-AC14), strategies for matching (AC15-AC18), and experience and confidence cues (AC19-AC30) (Fig. 6). The coding scheme closely followed the sequence of steps for the participants during the simulation events and provided a method for comparing the reasoning between experts and novices.

Fig. 5.

Qualitative coding schemata used for analyzing transcripts. The description of the codes and examples is included in the Appendix. Modified from Crowley et al.86

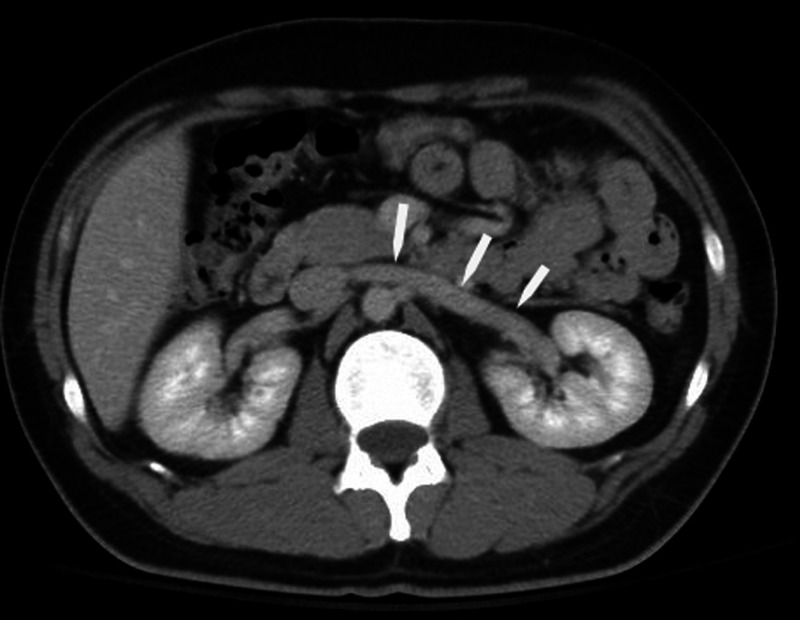

Fig. 6.

Axial CT image at the level of the right renal vein (white arrows). The renal vein has a narrow zone of transition, which was used by experts to fine-tune matching of images to the target image.

To evaluate the reliability of the coding scheme developed for this study, another researcher coded 10% of the data after initial instruction. According to Cohen’s κ,83 there is good agreement between the two researchers’ judgments, , 95% CI [0.574, 0.664], and . Good agreement for κ is between 0.61 and 0.80. However, there were codes that required heavy medical anatomy understanding. The research coder did not have expertise in medical anatomy, so the Cohen’s κ calculation was repeated after removing those medical anatomy codes. Cohen’s κ for this data is slightly higher and suggests good agreement between the two researchers’ judgments, , 95% CI [0.627, 0.721], and . The first author coded the remaining 90% of the transcripts.
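The inter-rater agreement statistic used above can be sketched as follows. This is a generic implementation of Cohen's kappa for two raters, not the study's SPSS procedure; the function name and the example labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items.

    kappa = (observed agreement - chance agreement) / (1 - chance agreement)
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same non-empty set of items")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    # Chance agreement: probability both raters pick the same label at random,
    # given each rater's own label frequencies.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)

    # Note: undefined (division by zero) if both raters use one identical label only.
    return (observed - expected) / (1 - expected)


# Invented presence/absence codes for four transcript lines.
print(cohens_kappa([1, 1, 0, 0], [1, 0, 0, 0]))
```

Kappa corrects raw percentage agreement for the agreement expected by chance, which is why it is preferred when some codes are used far more often than others.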

The transcripts of the test event and the following six simulation events were coded. There were 77 coded events for the novices (7 each), and 70 coded events for the experts (7 each). The lines of the transcript for each event were coded for the presence of the overarching action codes (Fig. 5), and if present in that line of the transcript, it would be scored a “1.” An action code could be used multiple times in an event.

For this study, the use of the action code within an event was counted as being present or not. The action codes were summed over all 77 events for novices (7 events for 11 novices) and 70 events for experts (7 events for 10 experts). The percentage of events using a specific action code was obtained by dividing the number of events in which experts/novices used that action code by the total number of events (77 for novices or 70 for experts). The percentages (proportions) of these codes used by novices and experts were compared using Chi-square analysis.
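The proportion comparison described above can be sketched in a few lines. The snippet computes the percentage of events in which a code appears and a plain Pearson Chi-square statistic for the resulting 2×2 table (code present/absent by expert/novice). It is a sketch under stated assumptions: the function names are hypothetical, no continuity or other correction is applied, and the counts in the usage example are invented rather than taken from the study.

```python
import math


def usage_percentage(events_with_code: int, total_events: int) -> float:
    """Percentage of events in which an action code was present."""
    return 100.0 * events_with_code / total_events


def pearson_chi_square_2x2(a_yes, a_total, b_yes, b_total):
    """Pearson Chi-square (1 df, uncorrected) comparing two proportions.

    Returns (chi2, p_value).
    """
    table = [[a_yes, a_total - a_yes], [b_yes, b_total - b_yes]]
    n = a_total + b_total
    row_totals = [a_total, b_total]
    col_totals = [a_yes + b_yes, n - (a_yes + b_yes)]

    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (table[i][j] - expected) ** 2 / expected

    # Upper-tail probability of a Chi-square variable with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Invented counts: a code present in 35 of 70 expert events and 20 of 77 novice events.
print(usage_percentage(35, 70), usage_percentage(20, 77))
print(pearson_chi_square_2x2(35, 70, 20, 77))
```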

5. Results

The qualitative and quantitative results of this study demonstrated similarities and differences between experts and novices. The results are organized according to the dimensions of expertise outlined in the expert/novice literature in cognitive science and education.6 The results are presented this way to give meaning to the extensive number of statistical differences identified between experts and novices in this study.

5.1. Experts are Faster Than Novices

Experts were faster than novices at localizing the cross-sectional images within the simulation torso. Experts are significantly faster than novices, independent of imaging plane presented for the event. In the axial plane, experts’ average time from ready to completion was 16.6 s compared with 26.6 s for novices (Table 1). Similarly, in the sagittal plane, experts’ average time was 19.99 s compared with 38.6 s for novices.

Table 1.

Time comparisons for novices and experts during specific segment intervals and imaging planes used during the simulation. The analysis of preset to ready and ready to completion combined both sagittal and axial events.

| Event Interval | Expert Average Time (s) | 95% Confidence Interval | Novice Average Time (s) | 95% Confidence Interval | F-statistic | Significance |
|---|---|---|---|---|---|---|
| Preset to ready | 20.48 | 18.137 to 22.822 | 44.11 | 38.540 to 49.684 | 54.741a | |
| Ready to completion | 17.76 | 15.328 to 20.189 | 30.57 | 24.797 to 36.346 | 14.978a | |
| Axial image (ready to completion) | 16.6 | 10.880 to 22.405 | 26.6 | 21.205 to 31.915 | 6.232b | |
| Sagittal image (ready to completion) | 19.99 | 11.842 to 28.141 | 38.6 | 31.021 to 46.168 | 10.693b | |

a One-way ANOVA with .

b Two-way ANOVA with using SPSS.

In our study, however, quickness was not associated with accuracy. Three data points are missing for the experts (two axial and one sagittal) due to a technical malfunction in data recording. The novices on average were more accurate at initial localization of the image within the simulation torso (Table 2). For axial images, both novices ( events) and experts ( events) tended to localize superior to the target image location, and for sagittal images (novices events; experts events) to the right of the target image location. Comparisons were made with independent t-tests using SPSS with significance of .

Table 2.

Average distance away for initial localization of the target image location by novices and experts. The negative value for axial images reflects that the localization is superior to the target image, and a negative value for sagittal images reflects localization to the right of the target image.

| Image plane | Expert (average distance away from the localization target) | Novice (average distance away from the localization target) | 95% Confidence Interval | Sig. (2-tailed) |
|---|---|---|---|---|
| Axial | | | to | 0.364 |
| Sagittal | | | to 14.110 | 0.028 |

However, knowing that experts are faster at localizing the image within the human torso provides very little information on “why” or “how” they perform the task faster than novices. As such, we probed deeper into the data to learn more about the features that contribute to these differences.

5.2. Experts Exhibit Flexible Retrieval with Little Effort

Experts can more flexibly retrieve their knowledge with little conscious effort,87 and frequently perform these tasks faster than novices.7,86,88,89 Experts can effortlessly retrieve knowledge to apply to an unknown problem. This automaticity frees up the ability to attend to portions of the problem that need more attention.6,90 This study corroborates these findings (Table 1), as assessed through both time and number of probe movements when matching up the target image to the location in the simulation torso. On average, when moving the probe to match up the position location to the target image, experts make 36 probe movements compared with the 61 made by novices [, ]. The probe movements of the novices were slower with more increments, and also had more movements away from the target when finally matching.

Experts were equally proficient in performing the task in both the axial and sagittal orientations ( and ). However, novices were significantly faster with axially oriented CT images compared with sagittal CT images ( and ). Sagittal images are not commonly used outside of radiology. A sagittal image requires not only the ability to identify the anatomical structures, but also the ability to process the image location on the right or left side of the body. Processing sagittal images requires more advanced retrieval in addition to the application of anatomical information. Images in the sagittal plane have an added order of complexity that most novices may not understand. Thus, it is not surprising that experts excel in processing sagittal images compared with novices.

Compared with studies in which participants perform the task in silence, the think-aloud process used in this study mildly inflates the time to localize the image. Novices talked during more of the events than experts did (83.3% compared with 70% of all events). However, there was no statistical difference in the number of events that the novices and experts verbalized, so verbalization does not appear to be a factor affecting speed. The complexity of the conversation and the number of words spoken by experts and novices were not factored into this comparison.

In addition to being significantly faster in all components of the simulation (Table 1), experts also matched the target image with their localized image using significantly fewer probe movements: an average of 36 movements compared with 61 for novices (one-way ANOVA). Experts are more intentional and direct in their movements than novices. This may also reflect confidence in knowing the anatomy and the direction of movement needed to reach the correct anatomical location. The time and movement data indicate that experts possess more flexible retrieval than novices when coordinating radiological images and physical anatomy.

Because the subjects performed similar repetitive tasks, event time could have improved from the first event to the last, which could account for differences in event times. A two-way mixed ANOVA with repeated measures determined that event order was not significant; rather, expertise was the greater factor in event time.

5.3. Experts Structure and Organize Their Content Knowledge

Expert knowledge is organized around core concepts or big ideas, and not as a list of facts or formulas within a domain.6,91 Experts in this study organize their knowledge by relating to prior experience, using external landmarks to describe the location and define the spatial anatomy. The qualitative think aloud data provide evidence of this difference between expert and novice radiologists in the present study.

Experts tended to structure their content knowledge around core concepts that make sense from the perspective of clinical experience; they approached each event as a clinical problem. Both novices and experts used references to physical landmarks to isolate the internal anatomy (AC5, p = 0.087; Table 3). Experts related each image to past experience and to how imaging differs between patients, relying on general anatomical principles when interpreting imaging exams rather than on specific image details, as novices did. They did not take the images at face value (literally) but related them back to experience and to how they would define the spatial relationship in the body.

Table 3.

Qualitative action codes related to anatomical identification used by novices and experts during the simulation events.

| Number | Action code | Events with action code: Expert | Events with action code: Novice | Chi-squared | p-value |
|---|---|---|---|---|---|
| AC1 | Identify imaging plane | 10 | 12 | 0.048 | 0.827 |
| AC2 | Identify anatomical structures | 65 | 77 | 5.653 | 0.017 |
| AC3 | Nonverbalization | 21 | 17 | 1.192 | 0.275 |
| AC4 | Emphasizes substructures of organs | 25 | 13 | 6.737 | 0.009 |
| AC5 | Physical contact with the mannequin | 4 | 11 | 2.926 | 0.087 |
| AC6 | Uses an external landmark to describe location | 24 | 26 | 0.004 | 0.947 |
| AC7 | Shape, size or amount of organ | 28 | 51 | 10.079 | 0.0015 |
| AC8 | Expresses the importance of adjacent anatomy | 9 | 18 | 2.688 | 0.101 |
| AC9 | Location within an organ | 31 | 29 | 0.663 | 0.416 |
| AC10 | Use of incorrect terminology | 6 | 33 | 21.967 | |
| AC11 | Use of descriptive cues (nonanatomic): “color, blobs, patterns” | 7 | 18 | 4.619 | 0.032 |
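The chi-squared comparisons in Table 3 can be illustrated with a minimal Python sketch. It computes a plain Pearson chi-squared statistic (no continuity correction) for a 2×2 table of events with and without a given action code. The group sizes of 70 expert events and 77 novice events are assumptions inferred from the percentages quoted in the text (for example, 25 expert events reported as 36% and 13 novice events as 17%); they are not stated in this section. The function name `chi_squared_2x2` is hypothetical, and because the exact test variant used in the study is not stated here, the result is expected to be close to, but not necessarily identical to, the tabulated value.

```python
import math


def chi_squared_2x2(a, b, c, d):
    """Pearson chi-squared (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    statistic = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 degree of freedom, the upper-tail probability is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Assumed group sizes, inferred from the reported percentages (not given in this section).
EXPERT_EVENTS = 70
NOVICE_EVENTS = 77

# AC4 (emphasizes substructures of organs): 25 expert events, 13 novice events.
stat, p = chi_squared_2x2(25, EXPERT_EVENTS - 25, 13, NOVICE_EVENTS - 13)
print(f"chi-squared = {stat:.3f}, p = {p:.4f}")
```

The same call can be repeated for any other action code by substituting its expert and novice counts from the table.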

Novices approached each case in a literal context. They keyed into identifying individual structures (AC2, p = 0.017; Table 3) and the size and shape of organs (AC7, p = 0.0015; Table 3). Similarly, novices were more likely to misinterpret the anatomy (AC20, p = 0.0009; Table 4) or to be uncertain about the anatomy that they were viewing (AC24; Table 4). A common example was misinterpreting the third segment of the duodenum as the pancreas. Another was misinterpreting the lumbar spine on an axial CT image as the thoracic spine; the novice did not notice the size and shape of the lumbar vertebral bodies or the lack of ribs. Additional errors in anatomy included identifying structures that were not in the image (e.g., the pancreas or renal arteries). These examples demonstrate that the novices organize knowledge around details rather than the larger concepts. By focusing on the individual details, they did not see the associations of the surrounding anatomy that would help distinguish the pancreas from the duodenum, or the other anatomical features that differentiate the lumbar from the thoracic spine.

Table 4.

Confidence in knowledge and judgments of experts and novices as they correlate physical examination and cross-sectional imaging.

| Number | Action code | Events with action code: Expert | Events with action code: Novice | Chi-squared | p-value |
|---|---|---|---|---|---|
| AC19 | Uncertainty in anatomy | 6 | 29 | 16.989 | |
| AC20 | Misinterpretation of anatomy | 4 | 20 | 10.944 | 0.0009 |
| AC21 | Compare at case level/prior cases | 17 | 22 | 0.342 | 0.558 |
| AC22 | Localizes by the absence of a structure | 9 | 17 | 2.126 | 0.145 |
| AC23 | Certainty of knowledge (positive) | 32 | 24 | 3.265 | 0.071 |
| AC24 | Uncertainty of knowledge (negative) | 9 | 40 | 25.04 | |
| AC25 | Direction to anatomy | 32 | 43 | 1.495 | 0.221 |
| AC26 | Incorporation of past experience | 19 | 17 | 0.504 | 0.478 |
| AC27 | Define spatial anatomy for themselves | 16 | 17 | 0.013 | 0.910 |
| AC28 | Unreliable anatomy | 8 | 6 | 0.560 | 0.454 |
| AC29 | Verbalizes difficulty | 7 | 12 | 1.001 | 0.316 |
| AC30 | Realization of anatomic cues that they missed | 3 | 7 | 1.323 | 0.250 |

Novices used nonanatomic descriptive cues (color, blobs, and patterns) to describe what they were viewing (AC11, p = 0.032; Table 3). For example, some used the pattern of the intestines to localize the image. Many of the experts voiced that this was not a reliable way to evaluate the abdomen, as the intestinal pattern is highly variable within and between patients. Novices would also use the air bubbles in the intestine to match images. They favored abstract black/white cues (gas in the intestine, “brightness of the bones”) over anatomical structures.

Novices organized their knowledge around nonanatomical, idiosyncratic features, whereas experts organized theirs around the regional anatomy presented and their prior clinical experience. Experts generalized their anatomical reasoning across all variations of clinical presentation, whereas novices concentrated on the literal presentation of the image (similar to how anatomy education is often presented). Novices, however, do not have vast experiential knowledge to draw upon. They would more often go through a “list” of structures (for example, naming all the structures that they saw rather than noting which part of the body the image most likely represented) than concentrate on the big concept of where the image was located.

5.4. Experts Recognize Complex Patterns

Experts are more likely to recognize meaningful configurations and realize the implications.6 Experts have the ability to chunk information into meaningful and familiar patterns and apply this to a situation. The qualitative data from the think alouds suggest that experts in this study demonstrate this ability in recognizing organ substructures, using small structures for fine-tuning, and using structural differences in the surrounding anatomy.

Experts notice features and patterns that novices do not. While novices focused on the presence of the larger structures (liver and kidney), the experts identified structures that would be more helpful for accurately localizing the image. Experts noted those larger structures but would drill down further. When localizing an image in the body, experts relied on organ substructures (experts 36%, novices 17%; p = 0.009; Table 3; AC4), whereas novices relied heavily on the size or amount of an organ in the image (experts 40%, novices 66%; p = 0.0015; Table 3; AC7).

Demonstrating their ability to recognize meaningful complex patterns, experts used small structures to fine-tune image matching significantly more often than novices (experts 44%, novices 18%; p = 0.0006; Table 5; AC15). Experts would use smaller anatomical findings to fine-tune their search, such as the consistent narrowing of the slope of the lungs with inferior scanning or the isolation of a vessel in the abdomen (Fig. 6), which has a narrow zone of transition in the given plane. They narrowed the complex problem by isolating a small and reliable structure with a dependable change. In contrast, during this same task, novices typically relied on matching at the organ level (size and shape) (experts 47%, novices 68%; p = 0.0127; Table 5; AC16), used nonanatomic cues (experts 17%, novices 36%; p = 0.0092; Table 5; AC17), or used trial-and-error methods (experts 1.4%, novices 18%; p = 0.0008; Table 5; AC18).

Table 5.

Strategies for fine-tuning and matching of anatomical structures performed by novices and experts.

| No. | Action code | Events with action code: Expert | Events with action code: Novice | Chi-squared | p-value |
|---|---|---|---|---|---|
| AC15 | Elements or reasoning for fine-tuning using small structures (matching up images) | 31 | 14 | 11.688 | 0.0006 |
| AC16 | Elements for reasoning remains at the organ level (matching up images) | 33 | 52 | 6.208 | 0.0127 |
| AC17 | Reasoning for matching up that is not anatomic | 12 | 28 | 6.793 | 0.0092 |
| AC18 | Trial and error for matching and refining the matching of anatomy | 1 | 14 | 11.151 | 0.0008 |

To provide a rich sense of these statistical effects, consider some extended qualitative excerpts from the think alouds. When localizing an image in the upper abdomen (image KC1, Fig. 4), which does contain the liver, novices concentrate on the presence of the organs. Here is an example from one novice interview.

“So I see the liver here. Um, and it’s quite large; so I know it’s probably a little bit near the diaphragm. I think this is the spleen right here, and I think that’s the pancreas. So I’m going to go where I think that all of that stuff is… I’m going to go down a little bit because I think I’m getting some of the like diaphragm here to where the spleen is a little bit larger… I was focusing in on like the smaller organ and the spleen because I felt like I could match that up better than using the liver which is larger. So it’s the size of the spleen is what I was using on that one. And I mean, the shape. Obviously if like the shape changed drastically, I think I would go the other way if I thought. But mostly the size”

For the localization of this same image, this expert notes the dome of the liver but uses the sharp change in the costophrenic shape of the lung as a more accurate landmark.

“when I was trying to localize, I was looking the dome of the liver, but when I’m trying to match the images, then the amount of costophrenic recess I have… the black space of the costophrenic recess is an easier—because it’s an AP dimension, I can just kind of go with that. If I’m doing the liver, you know, every liver is a little different, and so depending on how much like of the left hepatic lobe I’m including. I think it’s a little bit—it changes less than the size of a costophrenic recess when you get that low.”

In this example, the novice uses trial and error and even incorrect anatomical identification in their localization (there was no pancreas in this section). In contrast, experts isolate important landmarks that define the space and more specific cues to further define it. Experts demonstrated the ability to recognize meaningful patterns, whereas these patterns did not come to awareness for the novices.

A similar scenario occurred with the anatomical structures in the middle of the abdomen (image KC14, Fig. 4). The novice concentrated on the large structures and also based judgment on a structure that greatly changes over time (intestinal air).

“I see kidneys again, and then I see the bottom of the liver, what looks to me like small bowel, though I’m not great at distinguishing bowel…So I notice a little bit of difference in the bowel pattern, but otherwise I see kidney and the tip of the liver, so I’m just going try to match up the bowel pattern. But specifically like the air—”

An expert given this same image concentrated on the renal vessels, which have a horizontal orientation and a narrow zone of transition (see Fig. 6).

“—so I’m at the inferior margin of the ribs and the kidneys. So again I’m going to be about—I’m above the umbilicus.…—so I’m looking at the right renal vein—almost. And so I’m just trying to line that renal vein up there.”

This example demonstrates that the novice used unpredictable landmarks (intestinal air) and large structures to localize, whereas the expert identified a structure with a narrow transition to solve the complex problem of localizing in the mid-abdominal region. The expert was able to recognize the relationship and organization of the anatomy to do the final localization, rather than searching for what is in the region. The expert’s plan of execution was based on experience.

5.5. Experts Exhibit Situational Applicability

Experts can retrieve the knowledge appropriate for the task.6,28 Although this simulation session was not in the usual radiology reading room context, experts were able to apply the appropriate terminology and anatomy of the CT imaging to a simulation tool as though it were a standard situation. Experts were more likely than novices to use the correct terminology (AC10, Table 3). Novices tended to use lay terms to describe anatomical structures, such as “belly button” for umbilicus or “bowel” for large or small intestine, or references such as “hipbone,” “front end of the stomach,” “liver shadow,” or “stomach bubble.”

“Stomach bubble” and “liver shadow” are not terms that experts would use to describe findings or organs on CT images. The experts stayed in the context of the medical imaging study they were using and did not incorrectly interchange terminology. All the images provided in this exercise were CT images; “stomach bubble” is not a CT descriptor, yet it was a term used by novices. The term “stomach bubble” can be used on plain films of the chest or abdomen to identify the gas within the stomach. The reference to liver shadow is more appropriate to ultrasound imaging, where image characteristics can be described in terms of shadowing of the sound waves.

Experts know their discipline thoroughly and can apply knowledge to various situations appropriately. The novices may have learned these terms in their prior courses and clinical experiences, but they might not have had the time or practice to connect those terms to the appropriate situation (in this case the CT scan).

5.6. Experts Easily Adapt to a New Situation

Experts can “act in the moment.”92–94 Experts do this on a daily basis with each case and patient that they encounter. They approach new problems using their expertise and view them as opportunities to expand their current level of expertise. Doing so requires confidence that they can adapt to and succeed in a new situation.

During the study, participants commented about their level of confidence or their ability to incorporate past experience into their reasoning through each event. Experts favored more self-assured statements (46% compared with 31%; p = 0.071; Table 4, AC23). Both experts and novices incorporated past experience at the event level (AC26, 27% and 22%, respectively; p = 0.478; Table 4). However, counting every comment across all events, experts made 42 references to past experience compared with 27 made by novices. For example, experts made statements such as, “It’s experience now, I mean—,” “I am not sure how I know, but I just know,” “It’s a pattern I have in my head. So I suppose it is a concept that I’ve developed over years, you know,” and “from experience looking at lots, thousands of CTs of the belly.”

In contrast, novices expressed uncertainty in their knowledge significantly more often (experts 13%, novices 52%; Table 4, AC24). Common statements were: “Then I guess here,” “I’m not very familiar with this one,” and “I really don’t know.” Their verbalization of difficulty was at times more specific: “Sagittal is just much more difficult for me,” “I feel like we see the most axials so then we have to like switch everything up [referring to looking at sagittal images] to think about the anatomy, It’s hard” and “I don’t have a good sense of the 3-D—how the pelvis would change, so I’m like having to do little testing for that.” These quotes and the uncertainty in their knowledge demonstrate that novices have trouble adapting to new situations, whereas experts do not.

5.7. Similarities Between Experts and Novices

Although we have focused on the differences between experts and novices, there were also a number of ways in which they were similar. When orienting the image to the simulation torso, both experts and novices used external physical landmarks equally to describe the location in the body (AC6; p = 0.947). Similarly, both would describe the location of the image with respect to its position within an organ, e.g., at the superior/inferior pole of the kidney or the lower part of the liver (AC9; p = 0.416). Although these are not anatomic descriptors, both groups recognized the part of the organ where the image was located. Also, both referred to prior anatomy and to cases that they had seen or performed within the simulation system (AC21; p = 0.558). Each had also developed a method to define the spatial anatomy for themselves (AC27; p = 0.910). These similarities are important because they suggest ways of thinking and reasoning that medical education might build on to scaffold novices toward expertise.

6. Discussion

Given the differences in how experts and novices correlate radiology images with the physical body, how can we better support medical students in achieving expertise in this area? Ericsson proposed that expertise is a result of ‘deliberate practice,’ where the learner has full mental engagement that is goal-oriented and focused on overcoming current performance boundaries.95 He proposed that expertise is a product of 10,000 h of deliberate practice.96,97 Does the current state of medical education allow for this type of deliberate practice to support the development of expertise in correlation? If not, what changes could we make such that it does?

In medical school, students are presented with a great deal of facts about disease processes, but they have little experiential knowledge. Thus, when presented with a case, they can list with detail all the lab values, signs, and symptoms, whereas experts will have developed a list of differential diagnoses that apply to the case. How do we assist our novices to focus on critical facts (e.g., narrow zone of transition) and yet freely draw upon encapsulated knowledge (e.g., anatomical relationships) to solve clinical problems such as correlating anatomy with medical images?

6.1. Current State of Medical School Education

The current medical school curriculum has 2 years of basic science followed by 2 years of clinical experience. This separation of basic science and clinical experience creates barriers to the integration and correlation of anatomy and clinical practice. Furthermore, due to institutional pressures, “basic science professors” mainly teach the basic science content. This strains the content, which suffers from excessive detail that most physicians do not need to practice medicine and from a lack of emphasis on clinically important concepts. The terminology used in the basic sciences, especially in anatomy, often differs from that of clinical medicine, thus causing student confusion and the need to “relearn” material.98,99 The traditional medical school curriculum does not specifically address the correlation of the physical exam, medical anatomy, and medical imaging studies. It is more or less implied that this correlation will be made within the clinical setting or by working through a problem case.

Medical students are predominantly assessed with multiple-choice question (MCQ) exams, with no fill-in-the-blank or short-answer questions. MCQs provide convenient standardization and efficient testing for large classes.100 However, MCQs are often poorly written and test memory recall of individual facts rather than knowledge application.100 These types of tests do not assess critical thinking skills.

The current curriculum and methods of assessment in medical school do not adequately prepare students to become experts. The topics in medical school are presented to expose students to many disciplines; however, this does not allow enough exposure for the students to self-organize the content into meaningful “chunks,” which will later allow for more efficient retrieval. Without the time to organize their knowledge into meaningful chunks, the students are at a disadvantage in recognizing complex patterns when presented. Because medical students have not had the opportunity to develop a deeper knowledge of specific content, it is unlikely that they can exhibit “conditionalized” knowledge. This lack of deep understanding also impedes the medical student from being able to flexibly retrieve structured knowledge with little conscious effort in critical situations. Finally, the structure of the curriculum and assessment methods does not prepare the students to “act in the moment” and apply their knowledge to new situations. It does not promote the critical thinking that fosters this behavior.

6.2. Possibilities for Improving Medical Education

If the current state of medical education cannot support the development of expertise in correlating imaging with anatomical knowledge, what changes need to be made? Building upon what we observed in this study, we can adapt the elements of expertise and propose changes in medical education.

6.2.1. Improvement to support structure and organization of knowledge

Experts organize their knowledge around core concepts that make sense from clinical experience, treating each event as a clinical problem. In contrast, novices took each case in a literal context, listing findings, and even concentrated on details that were nonanatomical. Often, medical school exams focus too much on the recall of independent facts rather than the application of knowledge.100 This translates into students concentrating on details rather than learning critical problem-solving skills. To help students develop the skills of experts, the curriculum should focus not only on content but also on problem-solving using clinical cases. Critical thinking skills should be integrated into core concepts that are supported by the details and that illustrate the importance of these details to the core concepts. The assessment of this content should focus on the application of this knowledge using critical thinking skills. Within radiology, students should not only identify the salient features within an imaging exam, but also discuss a differential diagnosis and what imaging features support those diagnoses.

6.2.2. Improvements to support recognition of patterns

Experts are able to notice features and patterns that are not noticed by novices. They are able to chunk information into meaningful packets and apply this to a situation. Novices used large structures to localize, used nonanatomical cues, or resorted to trial-and-error methods. It is important to point out these salient features to students, but instruction should go beyond that. We should be showing them how to use these salient features and encouraging them to search for them in other disciplines. These features and patterns are not unique to radiology but are part of every discipline of medicine. Showing learners how to identify these patterns, and encouraging them to look for such patterns, will promote responsibility for their own learning. In radiology, students can work one-on-one through cases with radiologists and discuss these key findings. Later, this understanding of how to use these features and patterns can be evaluated by having students demonstrate how they used them in unknown cases.

6.2.3. Improvements to support development of situational applicability

Experts can retrieve the knowledge appropriate for the task and can “act in the moment.” They approach new problems using their expertise and view them as opportunities to expand their current level of expertise. Doing so requires confidence that they can adapt to and succeed in a new situation. The novices in this study were not always comfortable using the correct terminology, or they lacked confidence in applying their knowledge to new, difficult situations. In medical school, we need to encourage the students not to think like students but to think and act as professionals. We need to encourage students to think, make decisions, and solve problems through integration and assimilation of knowledge.101,102 There are many ways to accomplish this goal, but one way may be through simulation exercises that create real-world situations that force students to apply their knowledge and think in the moment.101 Simulation sessions also promote practicing and reinforcing experimental inquiry,101 which will lead to confidence when called to “act in the moment.” There will be failures in a simulation exercise, but it is an ideal method for discovering knowledge gaps without the detrimental consequences of a true clinical situation.

6.2.4. Improvements to support adaptation to new situations

Experts can easily adapt their knowledge to new situations. This is what occurs for expert physicians with each new clinical patient encounter, or in radiology with each new imaging study to be interpreted. The combination of knowledge structure and organization with the flexibility to retrieve this knowledge allows experts to apply it to new situations. Exposing students to new situations and scenarios will challenge them to adapt their knowledge to more and more complex situations. Without challenge and deliberate practice, this skill will not develop.

7. Conclusion

Experts and novices in radiology have similar features to experts and novices in other domains—inside and outside of medicine. The results from this simulation study on novice–expert differences demonstrate that experts are faster, use medical terminology appropriate to the specific imaging, identify cues (narrow zone of transition) to fine-tune image localization, and recognize meaningful patterns to focus on an anatomical region. In contrast, novices were more likely to identify anatomical structures in a list fashion, concentrate on organ-level anatomy, and compare locations by organ size and shape.

The expert–novice paradigm is useful for understanding these major differences, and this study has helped us identify the starting and ending points in learning to coordinate physical exams with imaging. However, there are likely many steps along the way, and further work is needed to understand the trajectory from novice to expert. One method would be to follow the Dreyfus and Dreyfus9,103 model of adult skill acquisition, which moves beyond the expert–novice dichotomy and instead looks at five (rather than two) stages of development. These five stages can be applied to medical education, from medical students to experienced clinicians. More investigation is needed to define these stages (novice, competent, proficient, expert, and master) within medicine and within a specific medical specialty. A deeper understanding of the knowledge and skills at each level can provide specific guidance for defining appropriate curriculum and evaluation processes for medical students and residents. The novice–expert research paradigm opens up targeted opportunities to make our medical education system stronger and more appropriate than current pedagogy for supporting the development of complex medical expertise.

Acknowledgments

This work was supported by a Radiological Society of North America (RSNA) Research and Education Foundation Education Scholar Grant. The authors would like to acknowledge the expertise of Devon Klompmaker (programmer) and Mike Beall (3D artist) at the Learning Games Network, Madison, Wisconsin.

Biographies

Lonie R. Salkowski is a radiologist with a doctorate in education who is interested in the cognitive reasoning and perceptual skills of medical image interpretation. She explores these with specially developed simulation systems.

Rosemary Russ has her doctorate in physics and postdoctoral studies in learning sciences. Her research is grounded in the assumption that people’s tacit understandings of knowledge and learning impact their reasoning and the knowledge they draw on during reasoning.

Appendix.

The overall divisions of the qualitative action codes and description of each action code and representative examples from the transcripts are shown in Table 6.

Table 6.

The overall divisions of the qualitative action codes. A description of each action code and representative examples from the transcripts are provided.

| Divisions | Number | Action code | Description of code | Examples |

|---|---|---|---|---|

| Anatomical identification | AC1 | Identify imaging plane | Describes the imaging plain of the presented image | “So this means another axial image”; “so we’re sagittal” |

| AC2 | Identify anatomical structures | Describe what they are seeing in the image at the time of the initial assessment; names the organs that they see in the image | “stomach, spleen,” “small bowel, and bottom tip of the liver”; | |

| “spine, vertebrae, kidneys”; | ||||

| “left renal vein” | ||||

| AC3 | Nonverbalization | No dialogue while doing task | Silence; often noted in transcripts as pauses or lapsed time | |

| AC4 | Emphasizes substructures of organs | The identification of these substructures is important to their understanding of the image location | “I can see the rugae in the stomach”; “medial limb of the right adrenal gland”; | |

| “third segment of the duodenum crossing over.” | ||||

| AC5 | Physical contact with the mannequin | This action simulates action similar to a physical examination | Palpation of the mannequin to localize or define the location of the anatomy, similar to a physical examination | |

| AC6 | Uses an external landmark to describe location | Uses landmarks on the external surface of the body to describe the anatomic location | “It’s above the umbilicus”; | |

| “I want to be near the epigastric region”; “midclavicular”; “use bottom rib to signify where liver edge is located | ||||

| AC7 | Shape, size or amount of organ | Describes the size or shape of the organ or structure. It becomes of some importance to identifying the organ location | “so the liver is quite a bit larger”; “larger slice of liver”; | |

| “liver took up more of the screen” | ||||

| AC8 | Expresses the importance of adjacent anatomy | Uses the adjacent anatomy to describe the location of the anatomy (presence or absence of anatomy/organs). This relationship of the anatomy is important in their localization. | “So level of like the lowest ribs with—you can still see part of the liver”; “And so, they’re just, like, easy to see how they related to the anatomy around them | |

| AC9 | Location within an organ | Describes a nonanatomic terminology location within an organ to localize where the image is in the organ | “biggest part of the liver”; “upper like third-ish or like the lower third (when describing a kidney)”; “superior/inferior pole of the kidney”; “lower part of the liver” | |

| AC10 | Use of incorrect terminology | Describes anatomic findings using nonmedical or nonanatomic terms | “stomach bubble”; “air fields” (when describing lungs); “Belly button”; “bunch of bowel”; “big lung window”; “gastric bubble”; north and south rather than superior and inferior; describing structures by color, black and white, contrast rather than the organ. | |

| AC11 | Use of descriptive cues (nonanatomic) | Describes a structure by its color | “And then there are these three little dots in the duodenum, and so I matched up those”; “I was seeing if…this little, black blob—if that’s similar to that one”; “liver shadowing”; “This time, I think it’s more contrast in color.” | |

| Localization and reasoning | AC12 | Identify location within the body (initial) | Describes the location of where organs/structures are in the body | “this is pretty high, I see the stomach”; “this is on the right-hand side”; “Well I knew it was right, because his liver was there”; “it’s above the umbilicus” |

| AC13 | Reasoning why used certain organs/structures to localize (initially) | Using key organs or structures to identify the location of the image | “So I’m well medial to the iliac crest. So again that would bring it in here rather than being out here”; “And the pancreas is up pretty high, so I would say, like, I don’t know—there-ish?”; “The relationship, yeah, because knowing the psoas I know that it has to be a little more medial because if I go too far lateral, it’s going to be the quadratus lumborum because I’ve come out of the psoas.” | |

| AC14 | Compared with expected cued target | Compares where they placed the probe with the target goal | “I’m too high”; “I’m too low”; “I’m too far to the right” | |

| Strategies for matching | AC15 | Elements or reasoning for fine tuning using small structures (matching up image) | Using structures that have narrow zones (vessels, loop of bowel) of change to adjust the location and match images | “Well, it’s thinner in the superior/inferior dimension. So it’s hard to—things that are thicker are harder to fine tune like that”; “Because it was the tiniest structure that I could use that was precise, like as opposed to like a triangular piece of the liver or, you know, kidneys you can’t really, you know, they’re kind of look the same for a period of time, and so I was using the renal vein”; “Yeah, you want—vascular structures are going to be helpful. You see that that’s what I’ve been going after, because they’re relatively unchanging”; “I was just looking at the way the crescents (of the lungs) looked.”; “so I was looking at the loop of small bowel that goes across the middle of the image”; “looking at the shape of the bone here and seeing what, you know, sort of the shape of the iliac crest” |

| AC16 | Elements for reasoning remains at organ level (matching up images) | Using the size and shape of a larger organ (liver, spleen, kidney) to adjust location | “I was focusing in on like the smaller organ and the spleen because I felt like I could match that up better than using the liver which is larger. So it’s the size of the spleen is what I was using on that one.” | |

| AC17 | Reasoning for matching up that is not anatomic | Using nonanatomic references to match up images; matches colors or patterns | “The air and the fluid level in the stomach is what I was matching it up on”; “looking for patterns, like seeing this kind of swirl part of the kidney or looking for that dark spot there that looks similar to the test image or the initial image and looking for the bowel gas patterns” | |

| AC18 | Trial and error for matching and refining the matching of anatomy | Makes movements in search of specific anatomy | “going to keep moving down until I see the kidneys”; “And then I was, like, getting positive reinforcement from the image as I was moving down that it was adjusting more to the correct one.” | |

| Experience and confidence cues | AC19 | Uncertainty in anatomy | Uncertain what structure they are seeing in the image | “looks like a large vessel. I don’t know if that’s the aorta or not, but pretty close to midline. Puts me on the left side. Slightly in the left side. But it could be the IVC, in which case I would be on the right and I really don’t know.”; “I’m not sure what that black is (in reference to the lungs)”; “I think that’s the pancreas” |

| AC20 | Misinterpretation of anatomy | Calls the presented anatomy the wrong structure; does not know what the structure is; does not know where the anatomy is on the mannequin (internal or external) | Referring to anatomy that is not present, e.g., “I think that’s the pancreas” (there is no pancreas in the image); referring to the duodenum as the pancreas; referring to anatomy that was not present in the image (e.g., the gallbladder’s presence when it was not present); “He doesn’t have a belly button” (but the mannequin does have one) | |

| AC21 | Compare at case level/prior cases | Compares the current image with a prior case—either appearing similar or in relationship to another case within the simulation session | “I’m just a little higher than the last one”; “So I’m going to go a little bit lower than I did the last time”; “this is similar to the first one”; “this is like where I had initially put my probe because I saw too much of the liver”; “So I used the basis of what I had to move the last time”; “I know it’s higher that the last one”; “Kind of looks like the first one you showed me” | |

| AC22 | Localizes by the absence of a structure | The absence of a structure becomes an important way of localization | “I know that I’m on the right side because I don’t see the heart”; “so we’re not seeing much spine”; “I don’t see the kidneys yet”; “I’m not midline because I don’t see the vertebrae” | |

| AC23 | Certainty of knowledge (positive) | Statements about their confidence in what they are doing (affirmative statements; sure about their decision) | “It’s experience now, I mean—”; “I am not sure how I know, but I just know”; “I’m correct” | |

| AC24 | Uncertainty of knowledge (negative) | Statements about their lack of confidence in what they are doing (uncertainty; not completely sure) | “Then I guess here”; “I’m not used to really trying to determine where on the body the pancreas is”; “I guess”; “I’m not very familiar with this one”; “I really don’t know” | |

| AC25 | Direction to anatomy | Vocalization of direction to move to locate desired anatomy (correction to initial localization) | “we’re down at the very edge of the liver, kidneys so I got to go farther inferior”; “So I need to go move toward where there’s more volume of the liver.”; “The liver was too big, so I knew it was too high.” | |

| AC26 | Incorporation of past experience | Relates how they approach the case using reference from their own past experience outside of this simulation event | “It’s a pattern I have in my head. So I suppose it is a concept that I’ve developed over years, you know”; “from experience looking at lots, thousands of CTs of the belly”; “No. I’m afraid now I think CT. I always, even in ultrasound, start with the axial plane because I think CT”; “Like this reminds me of when like you scroll down on the CT chest too low and you start seeing the liver.” | |

| AC27 | Define spatial anatomy for themselves | Their explanation of how they are able to localize the image in space | “I have a very good 3-D picture in my head of where it’s supposed to be”; “So I visualize where it should be physically in space and where I have to interact with the body’s surface to get there”; “Yeah, I’m just picturing the anatomy of the organs in the abdomen. So, yeah”; “I don’t have a good sense of the 3-D how the three pelvis would change, so I’m like having to do little testing for that.” | |

| AC28 | Unreliable anatomy | Explains why some anatomy is less reliable to use for localizing | “between studies, they vary so much because you have peristalsis going on all the time. So bowel is really…bowel is too variable.” | |

| AC29 | Verbalizes difficulty | When localization of the area or plane of imaging is more difficult to assess | “Sagittal is always more difficult”; “Yep. And actually midline is the hardest anyways”; “I don’t have a good sense of the 3D how the three pelvis would change, so I’m like having to do little testing for that.” | |

| AC30 | Realization of anatomic cues that they missed | Retrospective realization that there was key anatomy that could have helped guide their anatomic location | “I guess and the belly button—you see exactly the belly button. [CHUCKLING] That one should have helped me”; “Well, no. I should have seen that. I didn’t look at the umbilicus. That was stupid”; “Again, you got to look at all pictures of the image.” |

Disclosures

No conflicts of interest, financial or otherwise, are declared by the authors.

References

- 1. Pathiraja F., Little D., Denison A. R., “Are radiologists the contemporary anatomists?” Clin. Radiol. 69(5), 458–461 (2014). https://doi.org/10.1016/j.crad.2014.01.014

- 2. Cutler D. M., McClellan M., “Is technological change in medicine worth it?” Health Aff. 20(5), 11–29 (2001). https://doi.org/10.1377/hlthaff.20.5.11

- 3. Duszak R., Jr., “Medical imaging: is the growth boom over,” Neiman Report, Harvey L. Neiman Health Policy Institute, Reston, Virginia (2012).

- 4. Rubin A., “Contributions of cognitive science and educational technology to training in radiology,” Invest. Radiol. 24, 729–732 (1989). https://doi.org/10.1097/00004424-198909000-00017

- 5. Nodine C. F., Krupinski E. A., “Perceptual skill, radiology expertise, and visual test performance with NINA and WALDO,” Acad. Radiol. 5, 603–612 (1998). https://doi.org/10.1016/S1076-6332(98)80295-X

- 6. Bransford J. D., Brown A. L., Cocking R. R., How People Learn: Brain, Mind, Experience and School, Expanded Edition, National Academy Press, Washington, D.C. (2000).

- 7. Chi M. T. H., “Laboratory methods for assessing experts’ and novices’ knowledge,” in The Cambridge Handbook of Expertise and Expert Performance, Ericsson K. A., et al., Eds., pp. 167–184, Cambridge University Press, New York (2006).

- 8. Chi M. T. H., “Theoretical perspectives, methodological approaches, and trends in the study of expertise,” in Expertise in Mathematical Instruction, Li Y., Kaiser G., Eds., pp. 17–39, Springer US, Boston, Massachusetts (2011).

- 9. Dreyfus S. E., Dreyfus H. L., “A five-stage model of the mental activities involved in directed skill acquisition,” No. ORC-80-2, University of California Operations Research Center, Berkeley (1980).

- 10. Lesgold A. M., Acquiring Expertise, Technical Report No. PDS-5, pp. 1–51, University of Pittsburgh Learning Research and Development Center, Office of Naval Research (1983).

- 11. Causer J., Barach P., Williams A. M., “Expertise in medicine: using the expert performance approach to improve simulation training,” Med. Educ. 48(2), 115–123 (2014). https://doi.org/10.1111/medu.12306

- 12. Khan K., Pattison T., Sherwood M., “Simulation in medical education,” Med. Teach. 33(1), 1–3 (2011). https://doi.org/10.3109/0142159X.2010.519412

- 13. Schuwirth L. W. T., van der Vleuten C. P. M., “The use of clinical simulations in assessment,” Med. Educ. 37, 65–71 (2003). https://doi.org/10.1046/j.1365-2923.37.s1.8.x

- 14. Steadman R., et al., “Simulation-based training is superior to problem-based learning for the acquisition of critical assessment and management skills,” Crit. Care Med. 34(1), 151–157 (2006). https://doi.org/10.1097/01.CCM.0000190619.42013.94

- 15. Tschan F., et al., “Explicit reasoning, confirmation bias, and illusory transactive memory: a simulation study of group medical decision making,” Small Group Res. 40(3), 271–300 (2009). https://doi.org/10.1177/1046496409332928

- 16. Ward P., Williams A. M., Hancock P. A., “Simulation for performance and training,” in The Cambridge Handbook of Expertise and Expert Performance, Ericsson K. A., et al., Eds., pp. 243–262, Cambridge University Press, New York (2006).

- 17. Faruque J., et al., “Modeling perceptual similarity measures in CT images of focal liver lesions,” J. Digital Imaging 26(4), 714–720 (2013). https://doi.org/10.1007/s10278-012-9557-4

- 18. Kok E. M., et al., “Looking in the same manner but seeing it differently: bottom-up and expertise effects in radiology,” Appl. Cognit. Psychol. 26(6), 854–862 (2012). https://doi.org/10.1002/acp.2886

- 19. Kundel H. L., “History of research in medical image perception,” J. Am. Coll. Radiol. 3(6), 402–408 (2006). https://doi.org/10.1016/j.jacr.2006.02.023

- 20. Kundel H. L., La Follette P. S., “Visual search patterns and experience with radiological images,” Radiology 103(3), 523–528 (1972). https://doi.org/10.1148/103.3.523

- 21. Kundel H. L., Nodine C. F., Krupinski E. A., “Searching for lung nodules–visual dwell indicates locations of false-positive and false-negative decisions,” Invest. Radiol. 24(6), 472–478 (1989). https://doi.org/10.1097/00004424-198906000-00012

- 22. Krupinski E. A., “Visual scanning patterns of radiologists searching mammograms,” Acad. Radiol. 3(2), 137–144 (1996). https://doi.org/10.1016/S1076-6332(05)80381-2

- 23. Krupinski E. A., “The importance of perception research in medical imaging,” Radiat. Med. 18(6), 329–334 (2000).

- 24. Krupinski E. A., et al., “Searching for nodules: what features attract attention and influence detection?” Acad. Radiol. 10(8), 861–868 (2003). https://doi.org/10.1016/S1076-6332(03)00055-2

- 25. Kumazawa S., et al., “An investigation of radiologists’ perception of lesion similarity,” Acad. Radiol. 15(7), 887–894 (2008). https://doi.org/10.1016/j.acra.2008.01.012