Abstract

Purpose

The primary purpose of this study was to derive spatial release from masking (SRM) performance-azimuth functions for bilateral cochlear implant (CI) users to provide a thorough description of SRM as a function of target/distracter spatial configuration. The secondary purpose of this study was to investigate the effect of the microphone location for SRM in a within-subject study design.

Method

Speech recognition was measured in 12 adults with bilateral CIs for 11 distracter azimuths (0° and ±10° to ±90° in 20° steps) using an adaptive block design. Five of the 12 participants were tested with both the behind-the-ear (BTE) microphones and a T-mic configuration to further investigate the effect of microphone location on SRM.

Results

SRM can be significantly affected by the hemifield origin of the distracter stimulus, particularly for listeners with interaural asymmetry in speech understanding. The greatest SRM was observed with a distracter positioned 50° away from the target. There was no effect of microphone location on SRM for the current experimental design.

Conclusion

Our results demonstrate that the traditional assessment of SRM with a distracter positioned at 90° azimuth may underestimate maximum performance for individuals with bilateral CIs.

Cochlear implants (CIs) have proven to be effective in restoring audibility to individuals with severe-to-profound hearing loss. For individuals with bilateral severe-to-profound sensorineural hearing loss, bilateral CIs are considered the standard of care (Balkany et al., 2008). The benefits of bilateral implantation compared with unilateral implantation include significant improvements in speech understanding in noise, spatial hearing abilities, and subjective reports of communication abilities. These benefits are commonly attributed to access to binaural summation and head shadow cues and, to a lesser extent, binaural squelch (Buss et al., 2008; Grantham, Ashmead, Ricketts, Labadie, & Haynes, 2007; Litovsky, Parkinson, Arcaroli, & Sammeth, 2006; Potts & Litovsky, 2014; Pyschny et al., 2014; Senn, Kompis, Vischer, & Haeusler, 2005; van Hoesel, 2012). Despite the benefit of adding a second implant, most implant users struggle to understand speech in the presence of spatially separated distracters, compared with listeners with normal hearing (Loizou et al., 2009; Tyler et al., 2002; van Hoesel, 2015).

In a cocktail party environment, spatial separation of talkers can produce a substantial release from masking in adults with normal hearing (Allen, Carlile, & Alais, 2008; Arbogast, Mason, & Kidd, 2002; Cherry, 1953; Hawley, Litovsky, & Culling, 2004). The benefit of spatially separated talkers comes from a listener's ability to differentiate between a target and distracter talker(s). The cues available to differentiate between a spatially separated target and distracter depend on a number of factors, including whether the listener is using one or two ears and the number and locations of the distracters. When only a single distracter is presented, a listener could potentially make use of both monaural head shadow cues and interaural time and level differences to better understand a speech target that is spatially separated from a distracter. Head shadow, the basis of better ear listening, is typically a high-frequency cue produced by a difference in the signal-to-noise ratio (SNR) between the two ears at a given frequency. It can also be thought of as a monaural cue, because a listener needs only one ear to take advantage of an SNR boost when the noise is presented on the side opposite that ear. Listeners with normal hearing are remarkably sensitive to both monaural head shadow and binaural cues. As a result, spatial separations as small as 2° can result in significant spatial release from masking (SRM) and high levels of speech understanding when the target is presented at 0° azimuth (Brungart & Simpson, 2005; Kidd, Mason, Best, & Marrone, 2010; Srinivasan et al., 2016).
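
As a rough illustration of why head shadow is usable with one ear, the Python sketch below models head shadow as a single broadband attenuation of the distracter at the far ear. The 60-dB levels and the 6-dB shadow are illustrative assumptions, not measurements from this or any cited study; real head-shadow attenuation varies with frequency and is largest at high frequencies.

```python
"""Toy better-ear (head shadow) calculation.

Assumption: head shadow is modeled as one broadband attenuation of the
distracter at the ear farther from it; the target at 0 degrees reaches
both ears at the same level. All values are illustrative only.
"""


def ear_snrs(target_db: float, distracter_db: float, shadow_db: float) -> tuple[float, float]:
    """Return (near-ear SNR, far-ear SNR) in dB for a lateral distracter."""
    near = target_db - distracter_db               # distracter at full level
    far = target_db - (distracter_db - shadow_db)  # distracter attenuated by the head
    return near, far


def better_ear_snr(target_db: float, distracter_db: float, shadow_db: float) -> float:
    """A listener attending to the better ear gets the larger of the two SNRs."""
    return max(ear_snrs(target_db, distracter_db, shadow_db))


# Colocated: no head shadow, so both ears see 0 dB SNR.
colocated = better_ear_snr(60.0, 60.0, shadow_db=0.0)
# Distracter moved to one side with a hypothetical 6-dB shadow at the far ear.
separated = better_ear_snr(60.0, 60.0, shadow_db=6.0)
print(f"colocated {colocated:+.1f} dB, separated {separated:+.1f} dB")
```

Only the far-ear SNR improves, so a listener attending to that ear alone captures the whole benefit; no comparison between the ears is required.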

Despite the fact that individuals typically perform better with bilateral CIs than a single CI (Buss et al., 2008; Gifford, Dorman, Sheffield, Teece, & Olund, 2014; Litovsky, Parkinson, & Arcaroli, 2009), the observed degree of SRM in this population is substantially worse than what is observed for listeners with normal hearing. This difference can be explained, in large part, by two factors: (a) the distortion of binaural cues caused by implant processing and (b) the high target-to-masker ratio (TMR) needed to perform this task.

In the case of interaural time differences (ITDs), CI processors filter the incoming signal and extract the envelope in each of 12–22 bandpass filters. Envelope extraction involves low-pass filtering the signal, which discards higher frequency fine-structure information. Thus, presumably only the ongoing envelope-based ITD is available to bilateral CI users, and sensitivity to that cue has been shown to be more variable than sensitivity to interaural level differences (ILDs) and consistently much poorer than that of listeners with normal hearing (Grantham, Ashmead, Ricketts, Haynes, & Labadie, 2008; Laback & Majdak, 2008; Laback, Pok, Baumgartner, Deutsch, & Schmid, 2004). Listeners with normal hearing are sensitive to, and rely heavily on, fine-structure ITD cues for localization of complex stimuli (Brughera, Dunai, & Hartmann, 2013; Wightman & Kistler, 1992). Another contributor to poor ITD sensitivity is that bilateral CI processors are not synchronized, so the timing of individual pulses is not coordinated between ears. Without synchrony between processors, envelope ITD cues are likely to fluctuate over time and, thus, be relatively unreliable. Additionally, with the exception of MED-EL FSP and FS4 or FS4p signal processing, current implant signal processing strategies present pulses at fixed intervals at rates often exceeding 1,000 pulses per second (pps), possibly with different rates across ears. Given that neurons have a refractory period and most can fire only up to 300–500 action potentials per second, precise ITD sensitivity is simply not realistic with current implant signal-coding strategies, channel stimulation rates, and lack of processor synchronization. Even in the case of FS4 signal processing, there is little evidence that fine-structure ITD sensitivity is improved for most listeners (Zirn, Arndt, Aschendorff, Lazig, & Wesarg, 2016).
In addition to hardware limitations, individuals with CIs may be subject to physiological limitations related to deterioration of ganglion cells in the peripheral auditory system due to prolonged auditory deprivation (Coco et al., 2007; Leake, Hradek, & Snyder, 1999; Litovsky, Jones, Agrawal, & van Hoesel, 2010) and/or insertion trauma from cochlear implantation (Leake & Rebscher, 2004). Not surprisingly, ITD thresholds of bilateral CI users are commonly much poorer than those of individuals with normal hearing, and at times, thresholds cannot be obtained (Grantham et al., 2008).
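
The envelope-extraction argument above can be sketched in Python with a single channel: full-wave rectification followed by a first-order low-pass filter. This is a simplified stand-in chosen for illustration, not any manufacturer's processing; the sample rate, 1000-Hz carrier, 4-Hz modulation, 250-µs fine-structure delay, and 20-Hz smoothing cutoff are all assumed values.

```python
"""Single-channel envelope extraction: rectify, then low-pass smooth.

Simplified illustration only (assumption): real CI processors use a
filterbank and manufacturer-specific envelope detectors.
"""
import math

FS = 16_000          # sample rate (Hz), assumed
CARRIER_HZ = 1_000   # fine-structure frequency (Hz), assumed
MOD_HZ = 4           # slow envelope modulation (Hz), assumed
ITD_S = 0.00025      # 250-microsecond fine-structure delay, assumed


def one_pole_lowpass(x, cutoff_hz, fs=FS):
    """First-order IIR low-pass: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / fs)
    y, out = 0.0, []
    for sample in x:
        y += a * (sample - y)
        out.append(y)
    return out


def envelope(x, cutoff_hz=20.0):
    """Full-wave rectify, then smooth; the carrier fine structure is discarded."""
    return one_pole_lowpass([abs(s) for s in x], cutoff_hz)


t = [n / FS for n in range(FS // 2)]  # 0.5 s of signal
mod = [1.0 + 0.5 * math.sin(2 * math.pi * MOD_HZ * ti) for ti in t]
# Same envelope in both ears; only the carrier (fine structure) is delayed.
left = [m * math.sin(2 * math.pi * CARRIER_HZ * ti) for m, ti in zip(mod, t)]
right = [m * math.sin(2 * math.pi * CARRIER_HZ * (ti - ITD_S)) for m, ti in zip(mod, t)]

env_l, env_r = envelope(left), envelope(right)
skip = FS // 20  # ignore the first 50 ms while the filter settles
raw_diff = max(abs(p - q) for p, q in zip(left, right))
env_diff = max(abs(p - q) for p, q in zip(env_l[skip:], env_r[skip:]))
print(f"max waveform difference {raw_diff:.3f}, max envelope difference {env_diff:.4f}")
```

The two ear signals differ substantially sample by sample, yet their extracted envelopes are nearly identical, which is the sense in which fine-structure ITD information is lost after envelope extraction.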

ILD cues are also heavily distorted by CI signal processors. All commercial CI signal processing strategies include an automatic gain control (AGC) circuit. The AGC circuit differentially amplifies incoming signals to fit the programmed electrical dynamic range. Dorman et al. (2014) applied a simulation of MED-EL's signal processor, with and without the input and output AGC, to three different noise signals. They found that ILDs were significantly reduced with the AGC activated, particularly in the high frequencies. In some cases, low-frequency ILDs actually became negative (i.e., higher in the far ear) as a consequence of independent AGCs in each ear. Furthermore, they demonstrated that localization accuracy for bilateral CI users was best for broadband and high-pass noise and, therefore, related to the availability of ILD cues, irrespective of AGC. Similarly, Grantham et al. (2008) reported mean ILD thresholds of bilateral CI users of 3.8 dB with AGC on and 1.9 dB with AGC off. These values are close to those of listeners with normal hearing and are in agreement with other studies showing that ILD sensitivity in bilateral CI users can, surprisingly, approach that of adults with normal hearing. Grantham et al. also showed that horizontal-plane localization performance was highly correlated with ILD thresholds but not ITD thresholds. Thus, these findings suggest that individuals with bilateral CIs rely heavily on ILDs for spatial hearing.
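
The ILD compression described by Dorman et al. (2014) can be illustrated with a static compressor applied independently to each ear. The knee point and 3:1 ratio below are hypothetical, and real AGCs have attack and release dynamics that this sketch ignores, so it cannot reproduce effects such as the negative low-frequency ILDs they reported.

```python
"""Toy static compressor applied independently in each ear.

Assumptions (hypothetical values, not any manufacturer's AGC): a fixed
knee point and compression ratio, with no attack/release dynamics.
"""

KNEE_DB = 50.0   # input level above which compression starts (assumed)
RATIO = 3.0      # compression ratio above the knee (assumed)


def compress(level_db: float, knee_db: float = KNEE_DB, ratio: float = RATIO) -> float:
    """Output level for a static compressor: linear below the knee."""
    if level_db <= knee_db:
        return level_db
    return knee_db + (level_db - knee_db) / ratio


def output_ild(near_db: float, far_db: float) -> float:
    """ILD after each ear's compressor acts on its own input level."""
    return compress(near_db) - compress(far_db)


print(output_ild(70.0, 60.0))   # a 10-dB acoustic ILD shrinks to about 3.3 dB
print(output_ild(45.0, 35.0))   # both ears below the knee: ILD preserved (10 dB)
```

When both ears are above the knee, the output ILD equals the input ILD divided by the compression ratio; when they straddle the knee, the reduction is intermediate.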

Several reports in the literature indicate that the amount of SRM observed can be significantly affected by the TMR used in the measurement. Arbogast, Mason, and Kidd (2005) measured SRM in individuals with normal hearing and individuals with hearing impairment. They found that individuals with hearing impairment demonstrated about 5 dB less SRM than individuals with normal hearing. The authors suggested that this difference may be due to the lower sensation level of the masker. Freyman, Balakrishnan, and Helfer (2008) conducted an SRM experiment with 10 adults with normal hearing using vocoded stimuli. In one experiment, both the target and maskers were vocoded sentences. For listeners to complete this experiment, very high TMRs were necessary (+24 dB). There was no evidence of informational masking or SRM in this experiment. In a follow-up experiment, the task was changed from repeating sentences to identifying single words in a closed-set design. With this simpler task, TMRs could be lowered substantially (down to −5 dB), and SRM was observed as expected. Like Arbogast et al. (2005), Best, Marrone, Mason, and Kidd (2012) measured SRM in adults with normal hearing and with hearing impairment. Best et al. (2012) also manipulated the TMR by varying the difficulty of the task with noise-vocoded stimuli. As in the other two examples, they observed smaller amounts of SRM and less evidence of informational masking when TMRs were higher. In sum, there is considerable evidence that, when high TMRs are required to conduct a task, there is less potential for informational masking and, thus, for release from informational masking via spatial cues.

Although binaural cues are affected by implant signal processing, individuals with bilateral implants are often able to derive some SRM. Litovsky et al. (2009) evaluated the SRM of 15 bilateral CI recipients using the Bamford Kowal Bench Speech in Noise test (BKB-SIN; Etymotic Research, Inc., 2005). They showed that 86% of subjects received a significant bilateral benefit (defined as a ≥ 3.1-dB improvement) when the babble was spatially separated by 90° from the target speech. This advantage increased as participants gained experience with their devices (evaluated at 3 and 6 months in this study). Other studies have also demonstrated significant SRM with various speech materials in adults with bilateral CIs. Gifford et al. (2014) tested a relatively large group of bilateral CI recipients (n = 30) and reported an average SRM of 5.1 dB on BKB-SIN sentences in the bilateral condition and a 20.4-percentage-point benefit on AzBio sentences (Spahr et al., 2012) at +5 dB SNR. Loizou et al. (2009) reported an average spatial release of 2–5 dB with Institute of Electrical and Electronics Engineers (IEEE) sentences with 30° or more of separation between the target and one or more distracters. Unlike Gifford et al. (2014) and Litovsky et al. (2009), Loizou et al. used the SPEAR3 research processor (van Hoesel & Tyler, 2003) via direct connect and simulated head-related transfer functions (HRTFs) to create a “spatial” listening environment. Together, these studies demonstrate that significant spatial hearing benefits are possible in adults with bilateral CIs. As noted earlier, in a single-masker paradigm such as those used in these studies, listeners need only take advantage of monaural better ear listening to perform the task.

The conventional method for assessing SRM is to compare speech recognition thresholds for a colocated target and distracter with those for a spatially separated target and distracter. Most commonly, the spatially separated distracter is presented from ±90°, whereas the target is presented from 0° in both the colocated and separated conditions (Gifford et al., 2014; Litovsky et al., 2009). This setup is used in part because it creates an “ideal” situation in which the largest onset ITD cue is created, maximizing that binaural cue and creating a near-maximal ILD cue. In this sense, SRM measured in this way may approximate a “best-case scenario” for a given listener, much in the same way that the binaural masking level difference is typically measured with the signal phase completely inverted between the ears. However, the extent to which the typical experimental design for assessing SRM in bilateral CI recipients is ideal is unclear, as discussed below.

There are at least three limitations to the typical method for assessing SRM. First, presenting noise directed toward the side of the head can create a negative SNR due to the location and sensitivity of the behind-the-ear (BTE) microphone of a typical implant processor or hearing aid (Festen & Plomp, 1986; Kolberg, Sheffield, Davis, Sunderhaus, & Gifford, 2015; Pumford, Seewald, Scollie, & Jenstad, 2000). Second, this type of experimental design informs us about performance in only a single listening situation. Although understanding the best-case scenario is very informative, a more applicable method may be to derive a performance-azimuth function by incrementally separating a masking talker from a target and tracking performance. Such studies have been completed with listeners with normal hearing (Kidd et al., 2010; Marrone, Mason, & Kidd, 2008; Srinivasan et al., 2016) using two symmetrically spaced distracters. As mentioned, listeners with normal hearing need very little spatial separation to derive substantial release from masking. For both Marrone et al. (2008) and Kidd et al. (2010), a spatial separation of 15° was sufficient for significant SRM, and Srinivasan et al. (2016) observed significant SRM in young adults with normal hearing with as little as 2° of separation. To date, there have not been any investigations of SRM at many incremental spatial separations for individuals with CIs. Such an investigation could give clinicians useful information about how much spatial separation an individual needs to derive benefit in a cocktail party environment. This information could also be useful in the programming of directional microphone technology and/or may indicate the need for remote microphone technology (e.g., frequency modulation or digital modulation systems).

Finally, investigation of SRM at incremental azimuths is also important because ITD and ILD cues are not maximized at the same azimuth. Because ITD cues are maximized at azimuths of ±90° but ILD cues are maximized at azimuths ranging from 40° to 70° for frequencies of 1000–4000 Hz (Macaulay, Hartmann, & Rakerd, 2010), the conventional setup for testing SRM is biased toward ITD sensitivity. As CI users are typically much less sensitive to ITD cues than to ILD cues, many previous studies may have underestimated SRM. Because CI users are forced to rely on ILD cues and monaural head shadow, peak SRM may in fact occur at distracter azimuths less than 90°.
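
For the ITD side of this argument, Woodworth's spherical-head approximation gives a simple closed form that increases monotonically up to 90° azimuth. The head radius and speed of sound below are typical assumed values. ILDs have no comparably simple formula, so the 40° to 70° ILD maximum cited above comes from measurements rather than from a model like this one.

```python
"""Approximate ITD versus azimuth with Woodworth's spherical-head formula.

ITD(theta) = (r / c) * (theta + sin(theta)), for 0 <= theta <= 90 degrees.
Assumed parameters: head radius 8.75 cm, speed of sound 343 m/s.
"""
import math

HEAD_RADIUS_M = 0.0875
SPEED_OF_SOUND_M_S = 343.0


def woodworth_itd_us(azimuth_deg: float) -> float:
    """ITD in microseconds for a far-field source at the given azimuth."""
    theta = math.radians(abs(azimuth_deg))
    if theta > math.pi / 2:
        raise ValueError("formula as written covers 0-90 degrees only")
    return 1e6 * HEAD_RADIUS_M / SPEED_OF_SOUND_M_S * (theta + math.sin(theta))


for az in (10, 30, 50, 70, 90):
    print(f"{az:>2} deg: {woodworth_itd_us(az):6.1f} us")
```

Under these assumptions the ITD grows from roughly 89 µs at 10° to about 656 µs at 90°, so the conventional ±90° distracter maximizes the cue that CI users are least able to use.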

The current literature has several potential weaknesses regarding measurement of SRM in adults with bilateral CIs. Restricting masker azimuths to 0° and 90° may not provide an optimal assessment of SRM. The possible bias toward presenting optimal ITD and nonoptimal ILD cues, deleterious microphone effects on SNR, and a lack of understanding of how much spatial separation is needed to improve performance over a colocated target and masker condition are the primary motivators for the current study.

With these limitations in mind, the current study had three primary objectives: (a) derive a performance-azimuth function for each hemifield, (b) compare SRM thresholds for a masker located on the left versus the right, and (c) compare performance with a BTE microphone and with a microphone placed at the entrance to the ear canal (T-mic) for eligible participants. It was expected that most subjects would experience some SRM. For those who did, our primary hypothesis was that peak SRM would occur for maskers located between 30° and 90° azimuth, a range that includes the azimuths at which ILD cues are maximized. Our secondary hypothesis was that performance would be better for distracters positioned near the “poorer” ear in cases of interaural asymmetry. Finally, we hypothesized that the use of a T-mic would increase SRM over the more conventional BTE mic, because T-mic placement has been shown to be less susceptible to negative SNRs when distracters are presented from the side (Festen & Plomp, 1986; Kolberg et al., 2015).

Addressing these three objectives can provide insight into the mechanisms underlying spatial hearing abilities of adults with CIs. Previous work has only quantified SRM at limited azimuths. Mapping SRM across a wide range of azimuths will allow us to determine how much spatial separation is needed for an individual with bilateral CIs to obtain a benefit in speech recognition. This information can then act as a baseline comparison for future studies of individuals with various hearing configurations.

Method

Participants

Twelve postlingually deafened adults with bilateral CIs participated in this study. All participants had at least 6 months of experience with each implant prior to enrollment. Additional demographic information is provided in Table 1. Briefly, ages ranged from 32 to 83 years (M = 56.6), and experience with the newest implant for each person ranged from 1 to 12 years (M = 4.5).

Table 1.

Demographic information for 12 adult subjects enrolled in the current study.

| Subject ID | Gender | Age (years) | Internal device | Processor | Strategy | Stim rate L (pps) | Stim rate R (pps) | Experience (years) | CNC words L (%) | CNC words R (%) | CNC words B (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| S1 | M | 33 | HR90K 1j | Harmony | Optima S | 1727 | 2395 | 12 | 80 | 52* | 82 |
| S2 | F | 59 | CI24RCA (L), CI512 (R) | N5 | ACE | 900 | 900 | 6 | 72* | 86 | 88 |
| S3 | M | 67 | HR90K 1j | Naida Q70 | Optima P | 3712 | 3712 | 2 | 24 | 40* | 44 |
| S4 | F | 70 | Concert Standard (L), Sonata Standard (R) | Opus II | FSP | 1542 | 1542 | 4 | 56 | 68* | 94 |
| S5 | F | 64 | CI24RE(CA) (L), CI24M (R) | N5 (CP810) | ACE (L), CIS (R) | 900 | 900 | 3 | 38 | 44* | 54 |
| S6 | F | 52 | CI24RE(CA) | Freedom | ACE | 1200 | 1200 | 7 | 94 | 94 | 92 |
| S7 | M | 63 | Concert Standard | Sonnet | FS4 | 1600 | 1299 | 5 | 64 | 48* | 48 |
| S8 | F | 48 | HR90K MS | Naida Q70 | Optima S | 1428 | 1428 | 2 | 90 | 84 | 72* |
| S9 | M | 83 | Sonata Standard | Sonnet | FS4p | 1690 | 1440 | 6 | 48 | 72 | 74* |
| S10 | M | 40 | HR90K MS | Naida Q70 | Optima S | 2475 | 3535 | 1 | 64 | 84* | 86 |
| S11 | M | 69 | HR90K MS | Naida Q90 | Optima S | 1350 | 2121 | 1 | 71 | 71* | 80 |
| S12 | F | 32 | HR90K 1j | Naida Q90 | Optima S | 1401 | 2560 | 1 | 56 | 36* | 62 |

Note. Stimulation rates (Stim rates) are shown in pulses per second, experience is shown for each subject's more recently implanted ear (if not simultaneously implanted), and CNC (consonant–nucleus–consonant) word scores are shown as percent correct. Subjects with bold IDs participated in both BTE and T-mic testing. Subjects without an asterisk were implanted simultaneously. L = left; R = right; B = both; M = male; F = female.

Asterisks (*) mark CNC scores for the participant's first implanted ear.

Processors

Omnidirectional microphones were used in the MED-EL and Advanced Bionics devices, whereas a directional (cardioid) microphone was used in the Cochlear devices because all microphone settings in Cochlear processors incorporate at least a modest amount of directionality. That said, with the “Zoom” feature disabled, the polar plot is fairly uniform for azimuths ranging from 0° to 90°.

Prior to testing, only minor modifications were made to the clinical programs of participants with Advanced Bionics devices. Each participant's programs were checked to verify that they contained a minimally directional program, which was used for all testing. For Advanced Bionics users, the appropriate microphone configuration (i.e., BTE mic or T-mic) was selected in the programming software prior to testing. Five of the six Advanced Bionics recipients were tested in both the BTE-mic and T-mic conditions. The only modification made for these subjects was the microphone configuration. Two of the five subjects completed testing with the BTE microphones and then repeated testing with the T-mics, and the other three completed testing in the opposite order. Although more substantial programming changes could have been made to optimize loudness balance between ears, such adjustments were beyond the scope of the current study, which aimed to assess how bilateral CI users perform with their sound processors as clinically programmed for everyday use. We did not explicitly balance loudness across ears, but we asked each participant prior to testing whether they perceived any obvious loudness mismatch between ears. No such imbalances were reported by any of our participants.

Test Environment

Participants were seated in an anechoic chamber, centered in a 360° array of 64 stationary loudspeakers. The array measures 155 in. (approximately 3.94 m) in diameter, with adjacent loudspeakers spaced approximately 5.6° apart. Listeners were positioned such that the loudspeakers were at ear level. The participants' heads were not restrained, but they were instructed to face a single loudspeaker directly in front of them during stimulus presentation. Head position was continually monitored via a live video feed from inside the anechoic chamber. Testing time ranged from about 3 hr for individuals who participated only in the BTE-mic portion of the study to approximately 5–6 hr for the Advanced Bionics users who participated in both microphone portions. All subjects provided informed consent prior to study enrollment, and the study was approved by the Vanderbilt University Institutional Review Board.
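
The array dimensions stated above imply the following angular spacing, distance between adjacent loudspeakers, and source distance from the listener. This is plain arithmetic on the reported values (64 loudspeakers, 155-in. diameter), assuming the listener's head is at the center of the ring.

```python
"""Geometry of the loudspeaker array from the reported dimensions."""
import math

N_SPEAKERS = 64
DIAMETER_IN = 155.0
INCH_TO_M = 0.0254

spacing_deg = 360.0 / N_SPEAKERS                 # angular spacing between neighbors
radius_in = DIAMETER_IN / 2.0                    # listener-to-loudspeaker distance
arc_in = radius_in * math.radians(spacing_deg)   # distance along the ring

print(f"spacing {spacing_deg:.3f} deg")
print(f"adjacent-speaker arc {arc_in:.2f} in ({arc_in * INCH_TO_M * 100:.1f} cm)")
print(f"diameter {DIAMETER_IN * INCH_TO_M:.2f} m, radius {radius_in * INCH_TO_M:.2f} m")
```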

Stimuli

SRM was assessed with the coordinate response measure (CRM) corpus (Bolia, Nelson, Ericson, & Simpson, 2000). The corpus contains sentences spoken by eight different talkers (four male). Only the male talkers were used in the study to reduce cues other than spatial separation, such as talker-gender differences, that could confound the results. Using the same talker for both target and distracter would have reduced the number of additional cues further, but different talkers were ultimately chosen to increase the ecological validity of the study design. All sentences follow the structure “Ready [Call sign], go to [Color] [Number] now.” Combinations of eight call signs (“Arrow,” “Baron,” “Charlie,” “Eagle,” “Hopper,” “Laker,” “Ringo,” and “Tiger”), four colors (red, white, blue, and green), and eight numbers (1–8) create 256 unique sentences for each talker. The distracter consisted of one different male talker presented simultaneously with the target; the target talker was never the same as the distracter talker. No two talkers uttered the same call sign, color, or number on any given trial. The target talker was always designated with the call sign “Baron.” Thus, the distracter always began with “Ready [non-target call sign].” Participants were instructed to respond with the color and number spoken by the target talker using a keyboard, on which four buttons were marked with colored squares corresponding to the four possible color choices. Feedback was not provided. The target talker was always presented at 60 dB SPL, whereas the distracter level was adaptively varied according to participant performance.
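
The trial constraints above can be expressed as a short Python sketch. The talker labels M1 to M4 are hypothetical placeholders for the four male CRM talkers, and the study's actual randomization software is not described, so this is only one way to satisfy the stated rules.

```python
"""Sketch of CRM trial construction under the constraints described above.

Talker labels are hypothetical placeholders; the study's own trial
randomization code is not described in the text.
"""
import itertools
import random

CALL_SIGNS = ["Arrow", "Baron", "Charlie", "Eagle", "Hopper", "Laker", "Ringo", "Tiger"]
COLORS = ["red", "white", "blue", "green"]
NUMBERS = list(range(1, 9))
MALE_TALKERS = ["M1", "M2", "M3", "M4"]  # hypothetical labels
TARGET_CALL_SIGN = "Baron"

# Every call sign x color x number combination is one sentence per talker.
SENTENCES = list(itertools.product(CALL_SIGNS, COLORS, NUMBERS))


def make_trial(rng: random.Random) -> dict:
    """Draw a target/distracter pair sharing no talker, call sign, color, or number."""
    target_talker, distracter_talker = rng.sample(MALE_TALKERS, 2)
    target_color, distracter_color = rng.sample(COLORS, 2)
    target_number, distracter_number = rng.sample(NUMBERS, 2)
    distracter_sign = rng.choice([c for c in CALL_SIGNS if c != TARGET_CALL_SIGN])
    return {
        "target": (target_talker, TARGET_CALL_SIGN, target_color, target_number),
        "distracter": (distracter_talker, distracter_sign, distracter_color, distracter_number),
    }


rng = random.Random(1)
print(len(SENTENCES))   # 256 sentences per talker
print(make_trial(rng))
```

Because a response was scored correct only when both color and number matched, pure guessing succeeds on 1 of 4 × 8 = 32 color-number combinations, or about 3% of trials.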

Procedure

Blocks of trials were grouped by spatial separation and hemifield. That is, performance was measured at each of a predetermined set of spatial separations using an adaptive SNR task. The target was always presented from the listener's front (0° azimuth), and the distracter was presented from one of the following azimuths: 0° (colocated), ±10°, ±30°, ±50°, ±70°, and ±90°. Only a single masker was presented at a time, so the masker-right and masker-left conditions were presented in separate blocks of trials. Within a block of trials, only a single spatial separation was used. A block of trials consisted of up to 70 presentations and was terminated after eight reversals. Reversals followed a two-down, one-up procedure tracking a performance level of 70.7% correct (Levitt, 1971). A response was considered correct only if both the correct color and number of the target were provided. The SNR for a given block of trials was defined as the average SNR of the last six reversals. The step size for the first two reversals was double that for the final six and varied on a participant-by-participant basis. An individual's score for each azimuth was calculated as the average SNR across two to three blocks of trials. Only two blocks of trials were run for a given distracter azimuth if the results of those two blocks were within 2 dB of each other. Otherwise, a third block was run, and the SNR was calculated as the average of all three blocks.
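
One adaptive block as described above can be sketched in Python. The two-down, one-up rule, 70-trial limit, eight-reversal termination, last-six-reversal average, doubled initial step, and +15 dB SNR stop rule from the Method are taken from the text; the +10 dB starting SNR and 2-dB final step are assumptions (the text says step size varied by participant), as is the handling of a block that runs out of trials before six reversals.

```python
"""Sketch of one adaptive block: 2-down/1-up staircase (Levitt, 1971)."""
from statistics import mean
from typing import Callable, Optional


def run_block(respond: Callable[[float], bool], start_snr: float = 10.0,
              small_step: float = 2.0, max_trials: int = 70,
              n_reversals: int = 8, stop_snr: float = 15.0) -> Optional[float]:
    """Return the block threshold in dB SNR, or None if the block is discarded.

    respond(snr) -> True when both color and number were reported correctly.
    """
    snr, run, last_dir, reversals = start_snr, 0, 0, []
    for _ in range(max_trials):
        if respond(snr):
            run += 1
            if run < 2:          # need two correct in a row before stepping down
                continue
            run, direction = 0, -1
        else:
            run, direction = 0, +1
        if last_dir and direction != last_dir:
            reversals.append(snr)            # SNR at which the track changed direction
            if len(reversals) == n_reversals:
                break
        last_dir = direction
        # Doubled step until the second reversal, then the final step size.
        step = 2 * small_step if len(reversals) < 2 else small_step
        snr += direction * step
        if snr > stop_snr:       # stop rule: block terminated and not analyzed
            return None
    # Assumption: a block ending with fewer than 6 reversals is not scored.
    return mean(reversals[-6:]) if len(reversals) >= 6 else None


# Deterministic toy listener who is correct whenever SNR >= +2 dB.
print(run_block(lambda snr: snr >= 2.0))
```

Because the target was fixed at 60 dB SPL, each SNR step corresponds to an equal and opposite change in distracter level (distracter level = 60 dB SPL minus SNR).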

A stop rule was put in place to account for the possibility that some subjects would struggle greatly with understanding speech in the presence of any background noise. Because the dynamic range of speech extends approximately 12 dB to 15 dB above its root-mean-square value, an SNR exceeding +15 dB would hold little relevance, as the target would be fully audible and the distracter would be approaching a subject's threshold of audibility. If a subject responded incorrectly often enough for the adaptive track to exceed +15 dB SNR during any block of trials, that block was terminated and not included in the analysis. SRM was calculated for each hemifield as the difference between the SNR in the colocated condition and the SNR at each distracter azimuth, so that positive values indicate a benefit of spatial separation.
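
The per-azimuth scoring rule and the SRM calculation can be sketched together in Python. The 2-dB agreement rule for two versus three blocks comes from the Method; the threshold values are made up for illustration, and the sign convention (colocated minus separated, so positive SRM means separation helped) is stated here as an assumption consistent with how negative SRM is described in the Results.

```python
"""Per-azimuth score from 2-3 block thresholds, then SRM for one hemifield."""
from statistics import mean


def needs_third_block(first: float, second: float, tolerance_db: float = 2.0) -> bool:
    """A third block is required when the first two differ by more than 2 dB."""
    return abs(first - second) > tolerance_db


def azimuth_score(blocks: list[float]) -> float:
    """Average SNR (dB) across the 2 or 3 blocks run at one azimuth."""
    if len(blocks) == 2 and needs_third_block(*blocks):
        raise ValueError("blocks differ by more than 2 dB; run a third block")
    if len(blocks) not in (2, 3):
        raise ValueError("expected 2 or 3 block thresholds")
    return mean(blocks)


def srm_by_azimuth(colocated_db: float, separated: dict[int, float]) -> dict[int, float]:
    """SRM (dB) at each distracter azimuth relative to the colocated score."""
    return {az: colocated_db - snr for az, snr in separated.items()}


# Hypothetical thresholds (dB SNR) for one listener and one hemifield.
colocated = azimuth_score([6.0, 7.0])
separated = {10: azimuth_score([5.0, 6.0]),
             50: azimuth_score([3.0, 6.0, 4.5]),   # first two differed by 3 dB
             90: azimuth_score([7.5, 8.5])}
print(srm_by_azimuth(colocated, separated))  # {10: 1.0, 50: 2.0, 90: -1.5}
```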

Four Advanced Bionics recipients completed the entire protocol once using the BTE microphone and once with the T-mic configuration. Two subjects were first tested in the BTE-mic condition and two others in the T-mic condition. A fifth subject completed testing in both microphone conditions, but only for the better hemifield, and had to discontinue testing due to fatigue. That participant was also among the poorer performers and was on the border of our stop rule of performance at +15 dB SNR.

Results

BTE-mic

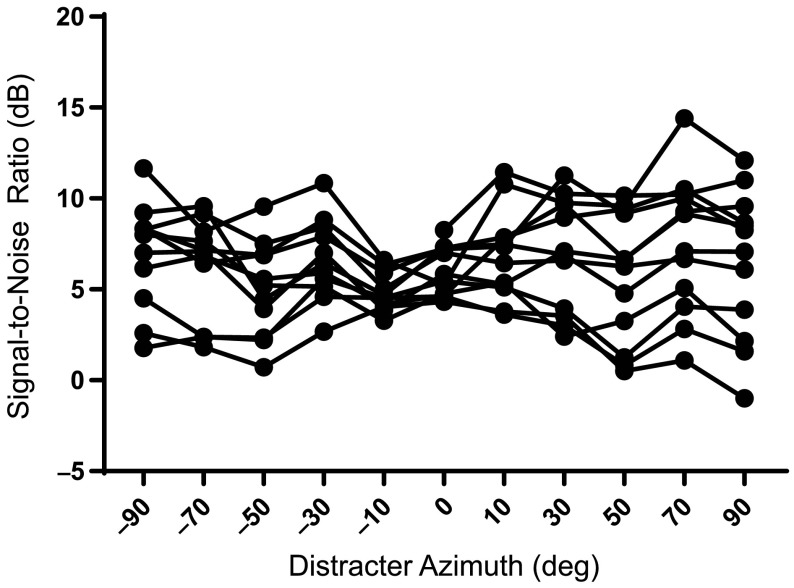

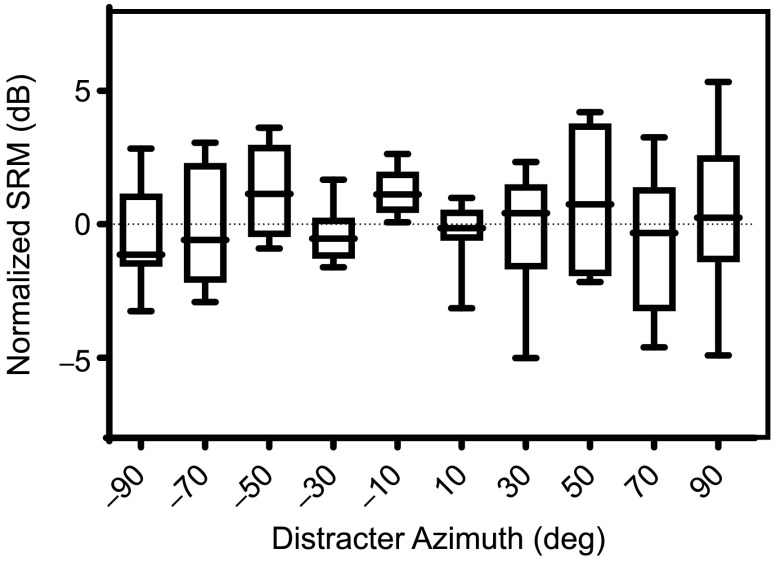

A one-way repeated-measures analysis of variance with Greenhouse–Geisser correction revealed a significant effect of distracter azimuth at the group level, F(3.198, 31.98) = 3.323, p = .029. When averaged across subjects and azimuths, mean SRM was slightly negative (−0.42 dB, range = −1.54 dB to 0.70 dB). Figure 1 displays the raw SNR thresholds for all 12 participants at each azimuth. With few exceptions, the SNR needed to achieve 70.7% correct speech understanding (the level tracked by the adaptive procedure) ranged from 0 dB to 12 dB. Figure 2 displays SRM for each azimuth normalized to the colocated condition. Averaged over the two hemifields, the most negative SRM was observed with 70° of spatial separation and the best mean performance with 50° of separation. In addition, average performance was 1.6 dB better when the distracter was located on the participant's poorer ear side. This difference was significant using a Wilcoxon signed-ranks test (p = .01). Given this difference between hemifields, we considered the data in terms of each participant's “better” and “poorer” ear, as judged by overall average performance on the SRM task and consonant–nucleus–consonant (CNC; Peterson & Lehiste, 1962) monosyllabic word scores.

Figure 1.

Raw data for all 12 participants and all distracter azimuths in the BTE-mic condition.

Figure 2.

Normalized SRM for all 12 participants in the BTE-mic condition. Horizontal bars indicate median values, boxes represent 25th and 75th percentiles, and whiskers denote 5th and 95th percentiles. SRM = spatial release from masking.

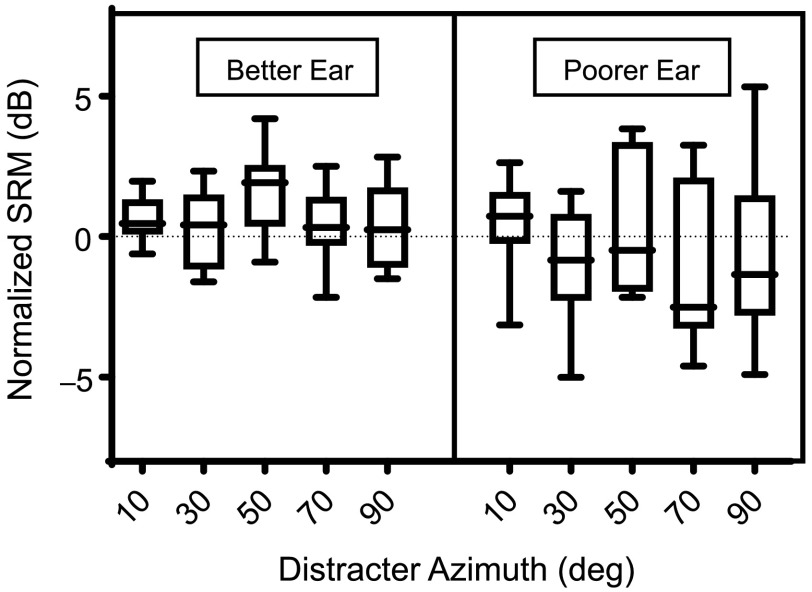

When considering performance of each participant's better hemifield only, mean SRM increased to 0.67 dB (range = 0.19 dB to 1.69 dB), which was not significantly different from 0 using a one-sample t test (t = 0.576, df = 11, p = .57). Listeners showed negative mean SRM at all but one azimuth in their poorer hemifield, the exception being 50° (mean SRM = 0.13 dB). SRM for the better ear versus the poorer ear is shown in Figure 3. Participant S11's data are not shown in this figure because the pattern of performance was markedly different from that of all other participants. All other participants in this study exhibited some positive SRM, whereas S11 did not demonstrate positive SRM at any distracter azimuth (M = −5.0 dB). This participant also required the highest SNR (+11 dB). Given that this participant's pattern of performance was so different from all the others, we removed it from the analysis of better ear versus poorer ear performance. The t test was rerun without S11 to consider whether the better hemifield SRM was significantly different from 0, and that result was statistically significant (t = 2.44, df = 10, p = .035). SRM at 50° in each subject's better hemifield was significantly positive (M = 1.69 dB) using a one-sample t test (p = .004). Significant SRM was also observed with 10° of separation in the better hemifield (p = .027), with a mean SNR benefit of 0.64 dB. No significant SRM was observed at other azimuths in either hemifield.

Figure 3.

SRM for the better and poorer ear at each distracter azimuth in the BTE-mic condition. The same plotting convention is used here as in Figure 1. SRM = spatial release from masking.

T-mic

We first compared raw speech understanding performance at each individual azimuth for both microphone conditions. Mean performance was slightly better (0.66 dB) when participants used the BTE microphones. Comparing SRM for both microphone conditions, essentially equivalent spatial benefit was observed, with SRM only 0.3 dB greater on average with the T-mic. This difference was not statistically significant (p = .34). For the condition that yielded the greatest spatial benefit (50° of separation with the distracter on the poorer ear side), mean SRM was 0.95 dB in the T-mic condition compared with 1.68 dB in the BTE-mic condition.

Although subjects were not recruited on the basis of having asymmetric performance between ears on any measure, we did observe that group performance was significantly better at some azimuths in one hemifield than the other. To determine the extent to which this observation may be related to better speech understanding in one ear, a Pearson correlational analysis was conducted using CNC word understanding scores measured in quiet and average normalized SRM asymmetry (better–poorer ear score at each distracter azimuth). That analysis did not reveal a significant relationship (r = .26, p = .44). SRM scores for this analysis were taken only from the BTE-mic configuration because those values were available for all 12 participants. Average CNC score asymmetry and normalized SRM asymmetry were 13.5 percentage points (range = 0–28 percentage points) and 1.41 dB (range = 0.19–2.86 dB), respectively. As previously mentioned, normalized SRM asymmetry was statistically significant, as was CNC asymmetry (p = .033).
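
The CNC asymmetry summary can be recomputed from Table 1. Assuming asymmetry is the absolute left-minus-right difference in percent correct (the text does not define it explicitly), the Table 1 values reproduce the reported mean of 13.5 percentage points and range of 0 to 28.

```python
"""Interaural CNC asymmetry recomputed from Table 1 (left, right % correct)."""
from statistics import mean

CNC_LEFT_RIGHT = {
    "S1": (80, 52), "S2": (72, 86), "S3": (24, 40), "S4": (56, 68),
    "S5": (38, 44), "S6": (94, 94), "S7": (64, 48), "S8": (90, 84),
    "S9": (48, 72), "S10": (64, 84), "S11": (71, 71), "S12": (56, 36),
}

# Assumption: asymmetry = |left - right| in percentage points.
asymmetry = {sid: abs(left - right) for sid, (left, right) in CNC_LEFT_RIGHT.items()}
print(f"mean {mean(asymmetry.values()):.1f} pp, "
      f"range {min(asymmetry.values())}-{max(asymmetry.values())} pp")  # mean 13.5 pp, range 0-28 pp
```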

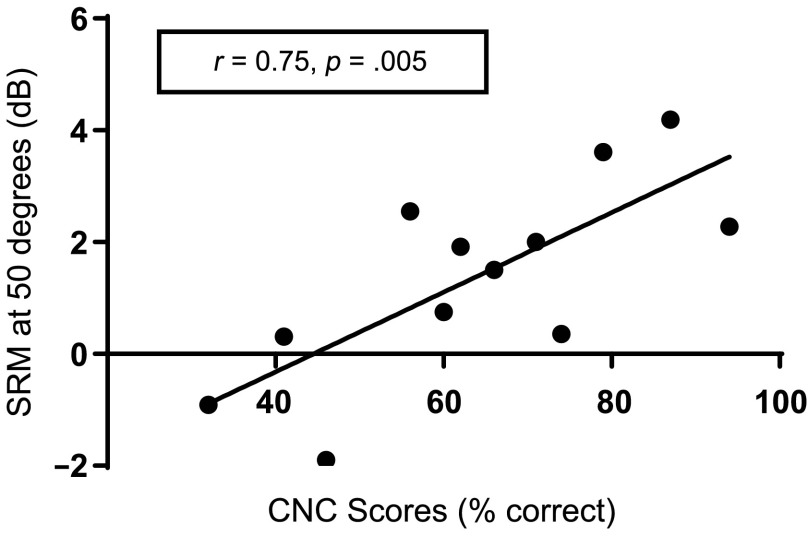

This correlation may not have reached significance because the SRM component was a difference score composed of an average SNR across azimuths. Because most azimuths did not produce significant SRM for most subjects, additional analysis was warranted. We therefore compared mean CNC word scores with SRM only at 50° (the spatial separation showing the best mean performance) to assess the extent to which overall word understanding may be related to observed SRM. This analysis revealed a significant positive correlation (r = .75, p = .005), shown in Figure 4. This relationship is not surprising given that the ability to derive SRM depends, in part, on one's ability to understand speech.
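
As a consistency check on the reported statistics, a Pearson r can be converted to a t statistic with n − 2 degrees of freedom and a two-tailed p value. The Python sketch below integrates the Student t density with Simpson's rule to stay dependency free; n = 12 is assumed for the 50° analysis, since the text does not state it explicitly.

```python
"""Convert a Pearson r to a t statistic and a two-tailed p value.

t = r * sqrt(n - 2) / sqrt(1 - r**2), with df = n - 2. The p value comes
from numerically integrating the Student t density (Simpson's rule).
"""
import math


def t_pdf(x: float, df: int) -> float:
    """Student t probability density."""
    c = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(df * math.pi)
    return c * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t: float, df: int, steps: int = 2000) -> float:
    """P(|T| >= |t|) = 1 - 2 * integral of the density from 0 to |t|."""
    b = abs(t)
    h = b / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 1.0 - 2.0 * total * h / 3.0


def t_from_r(r: float, n: int) -> float:
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


n = 12                       # assumed sample size for the 50-degree analysis
t = t_from_r(0.75, n)
print(f"t({n - 2}) = {t:.2f}, p = {two_tailed_p(t, n - 2):.3f}")
```

With r = .75 and n = 12, this gives t(10) ≈ 3.59 and p ≈ .005, in line with the reported value; the same two_tailed_p function gives p ≈ .035 for the t(10) = 2.44 reported in the BTE-mic results.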

Figure 4.

Scatter plot of SRM values (in dB) with 50° of spatial separation and the distracter positioned near each participant's “poorer” ear as a function of mean CNC word recognition (in percent correct). The solid line represents the linear regression. SRM = spatial release from masking; CNC = consonant–nucleus–consonant.
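The solid lines in Figures 4 and 5 are described only as linear regressions. Assuming ordinary least squares, such a line can be obtained as sketched below; the function name is illustrative, and the three data points are hypothetical values chosen so that the fit is exact.

```python
def linear_fit(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    if sxx == 0:
        raise ValueError("x values have no variance")
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx
    intercept = my - slope * mx
    return slope, intercept


# Hypothetical points: mean CNC score (% correct) vs. SRM at 50 degrees (dB).
cnc_percent = [40, 60, 80]
srm_db = [0.0, 1.0, 2.0]
slope, intercept = linear_fit(cnc_percent, srm_db)
print(f"SRM = {intercept:.2f} + {slope:.3f} * CNC")
```

The slope is expressed in dB of SRM per percentage point of CNC score. In Python 3.10 or later, `statistics.linear_regression` computes the same OLS slope and intercept.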

Discussion

SRM has previously been demonstrated in bilateral CI listeners. Here, we sought to determine the pattern of SRM as talker separation increased from 10° to 90° in each hemifield for 12 participants listening with their own processors and clinically programmed settings.

Overall, all participants but one demonstrated some SRM for at least one distracter azimuth, with the greatest group mean SRM observed with a distracter located at 50° azimuth. Participants tended to demonstrate greater SRM in one hemifield than the other, and most of them also reported feeling that they had a better and a poorer ear. This report was generally reflected in their CNC word recognition, with scores somewhat higher in one ear than the other; this interaural difference reached statistical significance at the group level. Not surprisingly, participants tended to perform better and demonstrate more SRM when the distracter was located in the hemifield corresponding to their poorer ear. This phenomenon is often referred to as better-ear listening; it arises when the distracter is positioned on one side of the head such that it produces a poorer SNR at the near ear than at the far ear. When the distracter is on the poorer side, the listener can take advantage of the higher SNR at the better hearing ear. In contrast, when the distracter originates from the better hearing side, the higher SNR falls at the poorer hearing ear, degrading overall performance. In sum, these findings suggest that individuals with bilateral CIs are able to demonstrate SRM, but generally only when the distracter is located on the poorer side.
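The better-ear argument above can be made concrete with a deliberately simplified model. The sketch assumes a target straight ahead (equal level at both ears), a distracter on one side whose level is reduced at the far ear by a single broadband head-shadow value, and an ear-specific SNR requirement for a hypothetical listener whose right ear is the better ear. The 6-dB shadow and the per-ear requirements are placeholders rather than measurements, and real head shadow varies with frequency and azimuth.

```python
def ear_snrs(overall_snr_db, distracter_side, head_shadow_db):
    """Per-ear SNR for a frontal target and a lateral distracter.

    Simplified model: the target reaches both ears at the same level, and
    the distracter is attenuated by head_shadow_db only at the ear opposite
    its side, which raises the SNR at that far ear by the same amount.
    """
    if distracter_side not in ("left", "right"):
        raise ValueError("distracter_side must be 'left' or 'right'")
    near = overall_snr_db
    far = overall_snr_db + head_shadow_db
    if distracter_side == "left":
        return {"left": near, "right": far}
    return {"left": far, "right": near}


def best_margin(snrs, ear_srt_db):
    """Largest amount by which any ear's SNR exceeds that ear's own requirement."""
    return max(snrs[ear] - ear_srt_db[ear] for ear in snrs)


# Hypothetical listener: the right ear needs a lower SNR (better ear).
ear_srt = {"left": 6.0, "right": 2.0}
shadow_db = 6.0  # hypothetical broadband head-shadow value

for side in ("left", "right"):
    snrs = ear_snrs(0.0, side, shadow_db)
    print(f"distracter on {side}: {snrs}, best margin {best_margin(snrs, ear_srt):+.1f} dB")
```

With the distracter on the poorer (left) side, the better right ear sits at +6 dB SNR against a 2-dB requirement, a 4-dB margin; moving the distracter to the better side leaves no ear with a positive margin.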

The amount of SRM observed in this study is markedly smaller than in several previously published reports (Gifford et al., 2014; Loizou et al., 2009), and the greatest SRM here occurred at 50° azimuth, with only negligible SRM at 90°. There are several potential reasons for this difference. First, it may be partially attributable to the different sound processors worn by the participants. Using the SPEAR3 research sound processor, Loizou et al. (2009) demonstrated SRM in the range of 2 dB to 5 dB. In the current study, we observed a group mean SRM of 1.6 dB with clinically programmed processors that, unlike the research processors, used neither direct-connect input nor HRTFs. The direct-connect input used by Loizou et al. (2009) prevented those participants from being exposed to potentially negative microphone effects at some azimuths. As noted in that study, the use of HRTFs obtained from an acoustic manikin may have “slightly overestimated the performance of CI users wearing behind-the-ear microphones.”

Second, the different pattern of SRM observed in this study (greatest at 50° and minimal at 90°) may be related to the stimuli used. The CRM corpus has been shown to produce substantial SRM (Arbogast et al., 2002; Kidd et al., 2010; Marrone et al., 2008). Arbogast et al. (2002) demonstrated that the majority of spatial release was attributable to a release from informational masking. That is, presenting sound sources from different locations created a perceptual difference sufficient for telling them apart and thus improved identification and understanding of the target talker. Such a benefit depends on the listener's ability to use those spatial cues to perceptually separate the talkers. Pyschny et al. (2014) demonstrated that, despite poor transmission of F0 information (which is thought to underlie CI users' susceptibility to informational masking), bilateral CI recipients demonstrate significant informational masking and release from informational masking. The current study used only male talkers from the CRM, so there was relatively little difference in the sound quality of the talkers, which increases the potential for informational masking compared with different-gender target and distracter talkers. Because the CRM corpus creates a large amount of informational masking with its rigid sentence structure, and individuals with bilateral CIs generally have poor spatial hearing abilities, it is possible that these listeners were unable to perceptually distinguish the target and distracter talkers (Stickney, Assmann, Chang, & Zeng, 2007; Stickney, Zeng, Litovsky, & Assmann, 2004), even when they were spatially separated, and were thus always burdened with high levels of informational masking.

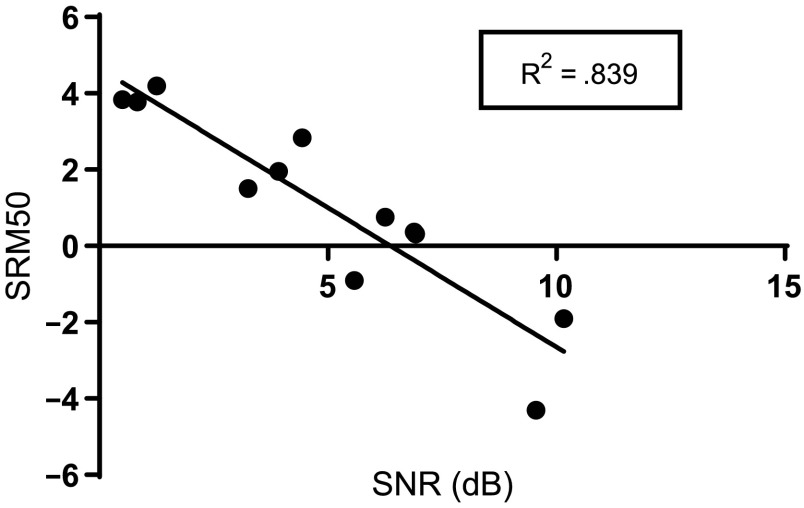

In line with this, previous studies have found limited potential for SRM when relatively high SNRs are required to complete the task, as is often the case for individuals with hearing impairment (Arbogast et al., 2005; Best et al., 2012; Freyman et al., 2008). Figure 5 shows a scatter plot of each individual's SRM with the masker positioned at 50° azimuth toward the poorer ear as a function of the SNR needed to achieve that score. Because the largest SRM in this study was observed with a distracter azimuth of 50°, this SNR generally represents the SNR needed to achieve optimal spatial benefit. A highly significant negative correlation was observed for this comparison (p < .001), in agreement with previous reports that listeners demonstrate greater SRM when lower SNRs can be used.

Figure 5.

Scatter plot of SRM values (in dB) with 50° of spatial separation and the distracter positioned near each participant's “poorer” ear as a function of the SNR needed to obtain 50% correct speech understanding. Solid line is a linear regression. SRM = spatial release from masking; SNR = signal-to-noise ratio.
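This section does not specify the adaptive rule used to find the SNR for 50% correct. One standard option, assumed here purely for illustration, is the simple 1-down/1-up staircase described by Levitt (1971), which converges on the 50% point of the psychometric function. The function name and parameter defaults are hypothetical, and the simulated listener is deterministic, so the estimate lands exactly midway between the two SNRs it oscillates across.

```python
def run_staircase(respond, start_snr_db=10.0, step_db=2.0,
                  n_reversals=8, discard=2, max_trials=200):
    """Simple 1-down/1-up staircase that tracks the 50%-correct SNR.

    respond(snr_db) -> bool reports whether the trial at that SNR was correct.
    The SNR drops one step after a correct trial and rises one step after an
    error. The estimate is the mean SNR at the reversals that remain after
    the first `discard` reversals are dropped.
    """
    if step_db <= 0 or discard >= n_reversals:
        raise ValueError("need a positive step and fewer discarded than total reversals")
    snr = start_snr_db
    last_direction = None
    reversals = []
    for _ in range(max_trials):
        direction = -1 if respond(snr) else +1
        if last_direction is not None and direction != last_direction:
            reversals.append(snr)  # the track changed direction at this SNR
            if len(reversals) == n_reversals:
                break
        last_direction = direction
        snr += direction * step_db
    if len(reversals) <= discard:
        raise RuntimeError("too few reversals within max_trials")
    kept = reversals[discard:]
    return sum(kept) / len(kept)


# Hypothetical deterministic listener: correct whenever SNR >= 3 dB.
estimate = run_staircase(lambda snr_db: snr_db >= 3.0)
print(f"estimated 50%-correct SNR: {estimate:.1f} dB")
```

Real listeners respond probabilistically, so in practice the reversal average scatters around the 50% point rather than landing on it exactly.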

Lastly, channel stimulation rates may differ across ears, which could affect ITDs. This is particularly likely for Advanced Bionics and MED-EL devices, for which pulse width is automatically varied to keep the system operating within the limits of voltage compliance. Half of the participants in this study had different stimulation rates between ears, with an average interaural difference of 350 pps (range = 301 to 1,159 pps). For these participants, all rates were above 1,200 pps and thus are not likely to have differentially affected performance (Shannon, Cruz, & Galvin, 2011; Verschuur, 2005). Furthermore, because fine structure information is largely discarded in envelope-based stimulation strategies, and asynchronous stimulation rates are not expected to alter envelope ITDs, this variable likely did not affect the current results.
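To see why automatic pulse-width changes can produce different rates across ears, consider a simplified sequential-stimulation model in which each of N channels delivers one biphasic pulse per frame, with no overlap and no extra dead time. Under those assumptions (which do not reproduce any manufacturer's actual rate rule), the maximum per-channel rate is 1 / (N × (2 × phase duration + interphase gap)), so doubling the phase duration halves the attainable rate. The function name, channel count, and phase durations below are hypothetical.

```python
def max_per_channel_rate_pps(phase_duration_us, n_channels, interphase_gap_us=0.0):
    """Upper bound on per-channel rate (pulses/s) for sequential biphasic stimulation.

    Simplified model: every channel fires one biphasic pulse (two phases plus
    an interphase gap) per frame, channels never overlap in time, and there
    is no additional inter-pulse dead time.
    """
    if phase_duration_us <= 0 or n_channels < 1 or interphase_gap_us < 0:
        raise ValueError("invalid pulse or channel parameters")
    pulse_us = 2 * phase_duration_us + interphase_gap_us
    frame_s = n_channels * pulse_us * 1e-6
    return 1.0 / frame_s


for phase_us in (18.0, 36.0):  # hypothetical phase durations (microseconds)
    rate = max_per_channel_rate_pps(phase_us, n_channels=16)
    print(f"{phase_us:.0f} us per phase, 16 channels -> at most {rate:.0f} pps per channel")
```

With 16 channels, widening the phase from 18 to 36 µs lowers the ceiling from roughly 1,736 to 868 pps, so a compliance-driven change in one ear alone would leave the two ears at different rates.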

The small difference observed between microphone conditions in this study was somewhat unexpected. Previous work has demonstrated the potential negative influence of listening to speech in noise via BTE microphones, particularly when the noise is presented to the side of the microphone, as in the ±90° distracter locations used in this study (Kolberg et al., 2015). We did not observe any substantial decrement in performance from 70° to 90° in the BTE-mic conditions, although performance did decline from its peak at 50°. One possible reason for the lack of a difference is that the processors used here differed from those in the study that previously reported the negative SNR effect of the BTE mic: Kolberg et al. (2015) reported this effect for 11 adults with Harmony processors, whereas in the current study all but one Advanced Bionics participant used newer Naida CI processors, which have different omnidirectional microphones. Aronoff et al. (2011) measured speech recognition thresholds for listeners with normal hearing using HRTFs based on various CI microphone locations and reported that the T-mic HRTF yielded a 2-dB improvement in SNR compared with the BTE-mic-derived HRTF. A similar 2- to 3-dB advantage has been reported in other studies comparing BTE microphone placement with placement at the entrance of the ear canal, as with a T-mic or an in-the-ear hearing aid (Festen & Plomp, 1986; Mantokoudis et al., 2011; Pumford et al., 2000).

The current results support the first two hypotheses: (a) participants with bilateral CIs would demonstrate SRM, and (b) the greatest SRM would occur at azimuths in the 40° to 70° range. Indeed, SRM was greatest with a distracter located at 50° azimuth, but it was also present at 10° azimuth on the better ear side. This pattern supports the view that bilateral CI recipients rely on ILD cues and/or monaural head shadow rather than ITD cues for spatial hearing, which is well established in the literature (Grantham et al., 2008; van Hoesel & Tyler, 2003), and that they achieve spatial hearing benefits at talker positions that produce the most robust ILD cues. The findings of this study also highlight the significant effect of better ear versus poorer ear performance. Most participants demonstrated spatial hearing benefits only when the distracter was located on their poorer ear side, allowing them to take full advantage of the improved SNR at the better hearing ear.

The finding of statistically significant SRM with the masker positioned at 10° azimuth toward the better ear (i.e., in the poorer hemifield) is more difficult to interpret. Given that all other masker positions in that hemifield resulted in negative SRM, this finding should be interpreted cautiously. It is more reasonable to conclude that individuals with bilateral CIs require 50° of separation between talkers, with the masker positioned on the poorer ear side, to achieve spatial benefit. Among the separations tested here, 50° could therefore be interpreted as the minimum separation needed for this population to obtain a spatial benefit.

Together, these findings highlight the vulnerability of spatial hearing abilities for individuals with bilateral CIs. While some significant spatial hearing benefits are shown here and elsewhere, these abilities are fragile and easily attenuated in more complex listening environments, such as those with more diffuse noise, reverberation, and informational and energetic masking, as well as in the absence of visual cues. Indeed, much effort is still needed to advance spatial hearing abilities for all CI recipients. That research processors have been shown to improve spatial hearing abilities provides inspiration for future studies of this nature. We also look forward to expanding this research into other CI listening configurations to see how ITD and ILD cues are differentially influential for listeners with potential access to both cues, such as in the case of hearing preservation and bilateral acoustic hearing. Spatial hearing is important for listener safety and satisfaction and contributes to better subjective quality-of-life ratings. We strive to continue this work to better our patients' hearing abilities.

Acknowledgments

This research was supported by Grant R01 DC009404 from the National Institute on Deafness and Other Communication Disorders, awarded to Dr. René Gifford. Portions of this data set were presented at the 2016 meeting of the American Auditory Society in Scottsdale, AZ, and the 14th International Conference on CIs in Toronto, Canada. Institutional review board approval was by Vanderbilt University (No. 101509). The authors would like to thank Chris Stecker for his contributions to an earlier version of this article. René Gifford is on the audiology advisory board for Advanced Bionics and Cochlear Americas and the scientific advisory board for Frequency Therapeutics.

References

- Allen K., Carlile S., & Alais D. (2008). Contributions of talker characteristics and spatial location to auditory streaming. The Journal of the Acoustical Society of America, 123(3), 1562–1570.

- Arbogast T. L., Mason C. R., & Kidd G. (2002). The effect of spatial separation on informational and energetic masking of speech. The Journal of the Acoustical Society of America, 112(5), 2086–2098.

- Arbogast T. L., Mason C. R., & Kidd G. Jr. (2005). The effect of spatial separation on informational masking of speech in normal-hearing and hearing-impaired listeners. The Journal of the Acoustical Society of America, 117, 2169–2180.

- Aronoff J. M., Freed D. J., Fisher L. M., Pal I., & Soli S. D. (2011). The effect of different cochlear implant microphones on acoustic hearing individuals' binaural benefits for speech perception in noise. Ear and Hearing, 32(4), 468–484.

- Balkany T., Hodges A., Telischi F., Hoffman R., Madell J., Parisier S., … Litovsky R. (2008). William House Cochlear Implant Study Group: Position statement on bilateral cochlear implantation. Otology and Neurotology, 29(2), 107–108.

- Best V., Marrone N., Mason C. R., & Kidd G. Jr. (2012). The influence of non-spatial factors on measures of spatial release from masking. The Journal of the Acoustical Society of America, 131, 3103–3110.

- Bolia R. S., Nelson W. T., Ericson M. A., & Simpson B. D. (2000). A speech corpus for multitalker communications research. The Journal of the Acoustical Society of America, 107(2), 1065–1066.

- Brughera A., Dunai L., & Hartmann W. M. (2013). Human interaural time difference thresholds for sine tones: The high-frequency limit. The Journal of the Acoustical Society of America, 133(5), 2839–2855.

- Brungart D. S., & Simpson B. D. (2005). Optimizing the spatial configuration of a seven-talker speech display. ACM Transactions on Applied Perception, 2(4), 430–436.

- Buss E., Pillsbury H. C., Buchman C. A., Pillsbury C. H., Clark M. S., Haynes D. S., … Barco A. L. (2008). Multicenter U.S. bilateral MED-EL cochlear implantation study: Speech perception over the first year of use. Ear and Hearing, 29(1), 20–32.

- Cherry E. C. (1953). Some experiments on the recognition of speech, with one and two ears. The Journal of the Acoustical Society of America, 25(5), 975–979.

- Coco A., Epp S. B., Fallon J. B., Xu J., Millard R. E., & Shepherd R. K. (2007). Does cochlear implantation and electrical stimulation affect residual hair cells and spiral ganglion neurons? Hearing Research, 225, 60–70.

- Dorman M. F., Loiselle L., Stohl J., Yost W. A., Spahr A., Brown C., & Cook S. (2014). Interaural level differences and sound source localization for bilateral cochlear implant patients. Ear and Hearing, 35(6), 633–640.

- Etymotic Research Inc. (2005). BKB-SIN speech-in-noise test, version 1.03. Elk Grove Village, IL. Retrieved from http://www.etymotic.com/auditory-research/speech-in-noise-tests/bkb-sin.html

- Festen J. M., & Plomp R. (1986). Speech-reception threshold in noise with one and two hearing aids. The Journal of the Acoustical Society of America, 79(2), 465–471.

- Freyman R. L., Balakrishnan U., & Helfer K. S. (2008). Spatial release from masking with noise-vocoded speech. The Journal of the Acoustical Society of America, 124, 1627–1637.

- Gifford R. H., Dorman M. F., Sheffield S. W., Teece K., & Olund A. P. (2014). Availability of binaural cues for bilateral implant recipients and bimodal listeners with and without preserved hearing in the implanted ear. Audiology and Neurotology, 19(1), 57–71.

- Grantham D. W., Ashmead D. H., Ricketts T. A., Haynes D. S., & Labadie R. F. (2008). Interaural time and level difference thresholds for acoustically presented signals in post-lingually deafened adults fitted with bilateral cochlear implants using CIS+ processing. Ear and Hearing, 29(1), 33–44.

- Grantham D. W., Ashmead D. H., Ricketts T. A., Labadie R. F., & Haynes D. S. (2007). Horizontal-plane localization of noise and speech signals by postlingually deafened adults fitted with bilateral cochlear implants. Ear and Hearing, 28(4), 524–541.

- Hawley M. L., Litovsky R. Y., & Culling J. F. (2004). The benefit of binaural hearing in a cocktail party: Effect of location and type of interferer. The Journal of the Acoustical Society of America, 115(2), 833–843.

- Kidd G., Mason C. R., Best V., & Marrone N. (2010). Stimulus factors influencing spatial release from speech-on-speech masking. The Journal of the Acoustical Society of America, 128(4), 1965–1978.

- Kolberg E. R., Sheffield S. W., Davis T. J., Sunderhaus L. W., & Gifford R. H. (2015). Cochlear implant microphone location affects speech recognition in diffuse noise. Journal of the American Academy of Audiology, 26(1), 51–58.

- Laback B., & Majdak P. (2008). Binaural jitter improves interaural time-difference sensitivity of cochlear implantees at high pulse rates. Proceedings of the National Academy of Sciences, 105(2), 814–817.

- Laback B., Pok S. M., Baumgartner W. D., Deutsch W. A., & Schmid K. (2004). Sensitivity to interaural level and envelope time differences of two bilateral cochlear implant listeners using clinical sound processors. Ear and Hearing, 25(5), 488–500.

- Leake P. A., Hradek G. T., & Snyder R. L. (1999). Chronic electrical stimulation by a cochlear implant promotes survival of spiral ganglion neurons after neonatal deafness. The Journal of Comparative Neurology, 412(4), 543–562.

- Leake P. A., & Rebscher S. J. (2004). Anatomical considerations and long-term effects of electrical stimulation. In Zeng F. G., Popper A. N., & Fay R. R. (Eds.), Cochlear implants: Auditory prostheses and electric hearing (pp. 101–148). New York, NY: Springer.

- Levitt H. (1971). Transformed up-down methods in psychoacoustics. The Journal of the Acoustical Society of America, 49(2), 467–477.

- Litovsky R. Y., Jones G. L., Agrawal S., & van Hoesel R. (2010). Effect of age at onset of deafness on binaural sensitivity in electric hearing in humans. The Journal of the Acoustical Society of America, 127(1), 400–414.

- Litovsky R. Y., Parkinson A., & Arcaroli J. (2009). Spatial hearing and speech intelligibility in bilateral cochlear implant users. Ear and Hearing, 30(4), 419–431.

- Litovsky R. Y., Parkinson A., Arcaroli J., & Sammeth C. (2006). Simultaneous bilateral cochlear implantation in adults: A multicenter clinical study. Ear and Hearing, 27(6), 714–731.

- Loizou P. C., Hu Y., Litovsky R. Y., Yu G., Peters R., Lake J., & Roland P. (2009). Speech recognition by bilateral cochlear implant users in a cocktail-party setting. The Journal of the Acoustical Society of America, 125(1), 372–383.

- Macaulay E. J., Hartmann W. M., & Rakerd B. (2010). The acoustical bright spot and mislocalization of tones by human listeners. The Journal of the Acoustical Society of America, 127(3), 1440–1449.

- Mantokoudis G., Kompis M., Vischer M., Häusler R., Caversaccio M., & Senn P. (2011). In-the-canal versus behind-the-ear microphones improve spatial discrimination on the side of the head in bilateral cochlear implant users. Otology and Neurotology, 32(1), 1–6.

- Marrone N., Mason C. R., & Kidd G. (2008). Tuning in the spatial dimension: Evidence from a masked speech identification task. The Journal of the Acoustical Society of America, 124(2), 1146–1158.

- Peterson G. E., & Lehiste I. (1962). Revised CNC lists for auditory tests. Journal of Speech and Hearing Disorders, 27(1), 62–70.

- Potts L. G., & Litovsky R. Y. (2014). Transitioning from bimodal to bilateral cochlear implant listening: Speech recognition and localization in four individuals. American Journal of Audiology, 23(1), 79–92.

- Pumford J. M., Seewald R. C., Scollie S. D., & Jenstad L. M. (2000). Speech recognition with in-the-ear and behind-the-ear dual-microphone hearing instruments. Journal of the American Academy of Audiology, 11(1), 23–35.

- Pyschny V., Landwehr M., Hahn M., Lang-Roth R., Walger M., & Meister H. (2014). Head shadow, squelch, and summation effects with an energetic or informational masker in bilateral or bimodal CI users. Journal of Speech, Language, and Hearing Research, 57(5), 1942–1960.

- Senn P., Kompis M., Vischer M., & Haeusler R. (2005). Minimum audible angle, just noticeable interaural differences and speech intelligibility with bilateral cochlear implants using clinical speech processors. Audiology and Neurotology, 10(6), 342–352.

- Shannon R. V., Cruz R. J., & Galvin J. J. 3rd (2011). Effect of stimulation rate on cochlear implant users' phoneme, word and sentence recognition in quiet and in noise. Audiology and Neurotology, 16(2), 113–123.

- Spahr A. J., Dorman M. F., Litvak L. M., Van Wie S., Gifford R. H., Loizou P. C., … Cook S. (2012). Development and validation of the AzBio sentence lists. Ear and Hearing, 33(1), 112–117.

- Srinivasan N. K., Gallun F. J., Kampel S. D., Jakien K. M., Gordon S., & Stansell M. (2016). Release from masking for small spatial separations: Effects of age and hearing loss. The Journal of the Acoustical Society of America, 140, EL73–EL78.

- Stickney G. S., Assmann P. F., Chang J., & Zeng F.-G. (2007). Effects of cochlear implant processing and fundamental frequency on the intelligibility of competing sentences. The Journal of the Acoustical Society of America, 122, 1069–1078.

- Stickney G. S., Zeng F.-G., Litovsky R., & Assmann P. (2004). Cochlear implant speech recognition with speech maskers. The Journal of the Acoustical Society of America, 116, 1081–1091.

- Tyler R. S., Gantz B., Rubenstein J. T., Wilson B. S., Parkinson A. J., Wolaver A., … Lowder M. (2002). Three-month results with bilateral cochlear implants. Ear and Hearing, 23(1S), 80S–89S.

- van Hoesel R. J. M. (2012). Contrasting benefits from contralateral implants and hearing aids in cochlear implant users. Hearing Research, 288, 100–113.

- van Hoesel R. J. M. (2015). Audio-visual speech intelligibility benefits with bilateral cochlear implants when talker location varies. Journal of the Association for Research in Otolaryngology, 16(2), 309–315.

- van Hoesel R. J. M., & Tyler R. S. (2003). Speech perception, localization, and lateralization with bilateral cochlear implants. The Journal of the Acoustical Society of America, 113(3), 1617–1630.

- Verschuur C. A. (2005). Effect of stimulation rate on speech perception in adult users of the Med-El CIS speech processing strategy. International Journal of Audiology, 44(1), 58–63.

- Wightman F. L., & Kistler D. J. (1992). The dominant role of low-frequency interaural time differences in sound localization. The Journal of the Acoustical Society of America, 91(3), 1648–1661.

- Zirn S., Arndt S., Aschendorff A., Lazig R., & Wesarg T. (2016). Perception of interaural phase differences with envelope and fine structure coding strategies in bilateral cochlear implant users. Trends in Hearing, 20, 1–20.